ABSTRACT

Nowadays, environmental sound classification (ESC) is one of the most widely studied research areas. Computer-aided systems and machine learning methods can classify sound signals that the human auditory system cannot distinguish, and ESC has therefore been used in signal processing and sound forensics applications. This paper presents a novel ESC type named vehicle interior sound classification (VISC). VISC is defined as a sub-branch of ESC, and it can be utilized as sound-based biometrics for vehicles. A hand-crafted feature-based VISC method is presented. The proposed method consists of three phases: multileveled feature generation using maximum pooling and the proposed local quintet magnitude pattern (LQMP), feature selection with iterative neighborhood component analysis (INCA), and classification. A novel VISC dataset was collected from YouTube, and the proposed LQMP and INCA based method was applied to the collected sounds. Using a support vector machine classifier, the proposed LQMP and INCA based VISC method attained an accuracy, F1-score, and geometric mean of 98.38%, 98.23%, and 98.21%, respectively. The contribution of the proposed VISC method is to show that vehicles can be classified by using their sounds.

Introduction

Environmental sound classification (ESC) has been used for different purposes in recent years, and it therefore attracts the attention of researchers. Studies in this area have addressed vehicle interior and exterior sounds (Hashimoto and Takao 1990; Västfjäll et al. 2002; Wang et al. 2013), internal organ sounds (Mishra, Menon, and Mukherjee 2018; Patidar and Pachori 2014; Randhawa and Singh 2015; Wang et al. 2013), fault detection from device sounds (Gramatikov et al. 2016; Scanlon, Kavanagh, and Boland 2012), air conditioner and refrigerator noise (Jeon et al. 2011), aircraft and helicopter sounds (Akhtar, Elshafei-Abmed, and Ahmed 2001; More and Davies 2010), ambient sounds (Huang et al. 2019; Lurz et al. 2017), animal sounds (Ko et al. 2019), underwater monitoring (Mayer, Magno, and Benini 2019), music genre classification (Chang, Chen, and Lee 2021; Liu et al. 2021), and sound classification using acoustic properties (Nanni et al. 2017; Yang and Zhao 2021). Environmental sounds carry limited information in terms of their time and frequency properties. In addition, deterministic methods cannot achieve good performance, since environmental sounds often differ according to the environment. Therefore, signal processing and machine learning techniques have been used in recent years.

Today, people mostly use their own vehicles for transportation ("ACEA Report Vehicles in Use Europe 2019" 2019), and they therefore spend a lot of time in vehicles (Boucher and Noyer 2012). Manufacturers produce many types of land vehicles for different purposes, and these vehicle types have different characteristics. The design, engine sound, cabin interior sound, door closing sound, exhaust sound, and headlights of vehicles can be considered biometric data of vehicles (Manders et al. 2000). Vehicle interior noise affects the preferences of users (Lemaitre and Susini 2019), so minimizing it is a vital engineering process for manufacturers. Studies have also shown that vehicle interior noise causes psychological effects during the trip (Takada et al. 2019).

Vehicles produce many different sounds while being driven, and these sounds contain much descriptive information. The brand and model of the vehicle, engine failures, insulation level, cabin comfort level, material effects, and exhaust measurements can be analyzed using sound signals. All of these sounds are considered environmental sounds. Ambient recognition, or ESC, has also been widely used for detecting criminal events; for example, ESC has been used to detect the location of a crime scene (Al-Ali, Senadji, and Naik 2017; Khan et al. 2018). Vehicle sounds form one of the sub-classes of ESC. However, no ESC method dedicated to vehicles exists; studies have generally addressed ESC as a whole. Related studies on cybercrime and vehicles are summarized as follows. Baucas and Spachos (2020) proposed a model for differentiating ambient sounds and urban sounds using Mel-frequency cepstral coefficient and short-time Fourier transform feature extraction, evaluated on the UrbanSound8K dataset (Salamon, Jacoby, and Bello 2014). Khan et al. (2018) presented a method for detecting sounds from multimedia devices in IT crimes. The primary purpose of their study was to determine the microphone, the acoustic environment, and the scene; their method detected the recording, the codec, and the artifacts caused by the ambient environment. Mazarakis and Avaritsiotis (2007) classified vehicles using data obtained from a Fiber Bragg Grating sensor system; on the dataset of Duarte and Hu (2004), their model, tested on AlexNet, achieved a classification accuracy of 87.1%. Wei et al. (2019) proposed a dedicated model to classify vehicle engines remotely using a Laser Doppler Vibrometer (LDV), with artificial neural networks and convolutional neural networks as classifiers. They used tone-pitch indexes and obtained a high accuracy rate on the LDV dataset (Wei et al. 2014). Kang et al. (2020) proposed thermal infrared image-based classification of four vehicle classes (buses, trucks, vans, and cars) and achieved 97% accuracy using convolutional neural networks and SqueezeNet.

In this study, we propose a model that detects the vehicle type from vehicle interior sounds using artificial intelligence methods.

Motivation

Vehicle interior sound classification (VISC) can be used for many purposes, including robotics, information security, cybercrime investigation, traffic accident detection, and vehicle type classification. Computer-aided sound classification systems are capable of extracting many features from audio signals: whereas the human auditory system detects sounds only within a limited frequency range, computer-aided automatic sound classification systems can use the entire frequency content of a signal. Therefore, highly accurate automated sound classification methods have been proposed using computer-aided systems. Our primary motivation is to define a novel sound classification study area based on vehicles. Humans spend a long time in vehicles, and vehicles can be involved in crimes; therefore, vehicle type classification is important for sound forensics and machine learning. Detecting the vehicle type from interior vehicle sounds can support digital forensic examinations. Sound files are frequently encountered in digital materials obtained from criminals, and these files are usually analyzed manually. Obtaining information about a wanted criminal from such sound files is important, and the proposed method can detect from the sound files which type of vehicle the criminal is using. The proposed VISC model can also be used to detect noise and to estimate sound quality inside the vehicle. To classify vehicle types, a VISC dataset is collected, and a novel VISC model is presented in this work. Our second motivation is to propose a sound classification based biometric identification method for vehicles. Using this method, sound classification based vehicle sound quality systems can also be developed.

Our Method

In this study, a local quintet magnitude pattern (LQMP) and INCA based VISC method is proposed to classify the ambient sounds recorded in the cabins of eight different vehicle types driven on paved roads. The study consists of four main stages. In the first stage, vehicle interior sounds are collected for different vehicle types. In the second stage, maximum pooling and the proposed LQMP method are used to generate low-, medium-, and high-level features. In the third stage, informative features are selected with INCA. In the last stage, the selected features are classified using four conventional classifiers.

Contributions

The main contributions are as follows:

This study defines a novel ESC sub-branch, called VISC. A novel hand-crafted sound classification method is presented to classify vehicles, and it is tested on the collected VISC dataset. The proposed VISC method is highly accurate.

A novel feature extractor, called LQMP, is proposed in this study. Local feature extractors have generally used signum and ternary functions for binary feature generation, whereas the LQMP based feature extraction method uses Euclidean and city block distances to generate features. The proposed LQMP is an effective feature extractor for the VISC problem.

Dataset

The dataset was collected from point of view (PoV) driving videos of different vehicle types on YouTube (Baucas and Spachos 2020). It contains only vehicle interior sounds, with no driver or other human voice. In total, 5980 sounds belonging to 8 classes were recorded. The vehicles were driven on asphalt roads in the open air; we did not collect interior vehicle sounds on unpaved roads or in rainy weather. For each class, the sound samples were collected from 30 different vehicle videos. The collected data are stored in WAV format. The length of the sound samples ranges from 3 to 5 seconds, and the sampling frequency is 48 kHz. The chosen vehicle types are bus, minibus, pickup, sport car, jeep, truck, crossover, and car (automobile). The collected dataset can be downloaded from https://zenodo.org/record/5606504#.YXpNrBpByUk. The attributes of the collected vehicle interior sound (VIS) dataset are summarized in Table 1.

Table 1. Collected VISC dataset.

As Table 1 shows, the collected dataset is heterogeneous and contains 8 classes; this dataset is therefore called the VISC8 dataset.

The Proposed VISC Method

Our main goal is to propose a highly accurate and cognitive VISC method. Therefore, we proposed a multileveled VISC method. The main components of the proposed VISC method are given below.

Feature extraction with LQMP

Feature selection using iterative NCA (INCA) (Raghu and Sriraam 2018).

Classification

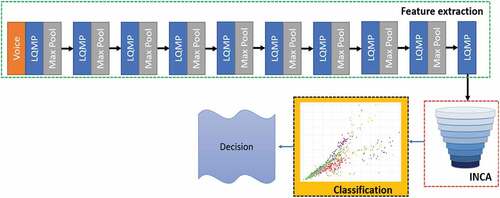

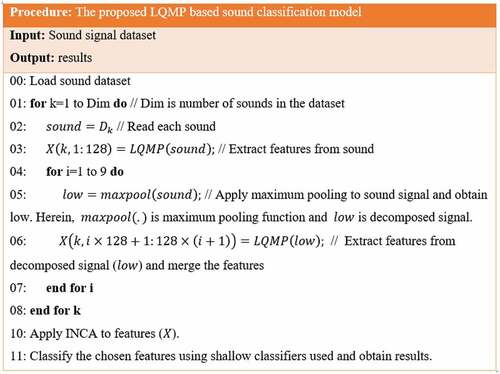

In the proposed LQMP and INCA based method, the maximum pooling operator is applied to the raw vehicle interior sound 9 times. The proposed LQMP extracts 128 features from the raw vehicle interior sound and from each of its 9 maximum pooled signals. These features are fused, and 1280 features are generated in total. To select the most informative features, INCA is applied to the extracted 1280 features, and the 140 most informative features are selected. The k-nearest neighbors (kNN) (Kar, Sharma, and Maitra 2015; Liao and Vemuri 2002), linear discriminant (LD) (Chen and Yang 2004), support vector machine (SVM) (Keerthi et al. 2000), and bagged tree (BT) (Hothorn and Lausen 2005) classifiers are used to classify these 140 most discriminative features. A graphical summary of the proposed LQMP and INCA based VISC method is shown in the accompanying figure.

Moreover, the pseudocode of the proposed LQMP and INCA based VISC method is given in the accompanying algorithm listing.

Details of the proposed VISC method are given step by step below.

Step 1: Apply maximum pooling (Demir et al. 2020; Jain and Bhandare 2011) to the vehicle interior sound 9 times.

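$$p^{1}(i)=\max\big(S(j),\,S(j+1)\big),\qquad j=2i-1,\quad i=1,2,\dots,\left\lfloor L/2\right\rfloor$$

$$p^{t}(i)=\max\big(p^{t-1}(j),\,p^{t-1}(j+1)\big),\qquad j=2i-1,\quad i=1,2,\dots,\left\lfloor L/2^{t}\right\rfloor,\quad t=2,3,\dots,9$$

where $S$ is the input vehicle interior sound signal, $p^{t}$ is the $t$th level maximum pooled signal, $L$ represents the length of the signal, $i$ and $j$ define index values, $\max(\cdot)$ denotes the maximum function, and the two samples of each window ($S(j)$ and $S(j+1)$ at the first level, $p^{t-1}(j)$ and $p^{t-1}(j+1)$ at the higher levels) are the input parameters of the maximum function.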

As Step 1 shows, the interior sound signals are divided into non-overlapping windows of size 2, and the maximum function is applied to each window.
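The pooling in Step 1 can be sketched in a few lines of Python. This is a minimal sketch, not the authors' MATLAB implementation: the function names `max_pool_1d` and `multilevel_max_pool` are ours, and we assume (the text does not say) that a trailing odd sample is dropped when a signal has odd length.

```python
from typing import List, Sequence


def max_pool_1d(signal: Sequence[float]) -> List[float]:
    """Maximum of each non-overlapping size-2 window; an odd trailing sample is dropped (assumed)."""
    return [max(signal[j], signal[j + 1]) for j in range(0, len(signal) - 1, 2)]


def multilevel_max_pool(signal: Sequence[float], levels: int = 9) -> List[List[float]]:
    """Return [raw signal, level-1 pooled, ..., level-`levels` pooled]."""
    outputs = [list(signal)]
    for _ in range(levels):
        # each level pools the previous level's output, halving its length
        outputs.append(max_pool_1d(outputs[-1]))
    return outputs


# Example: three pooling levels on an 8-sample toy signal
print(multilevel_max_pool([1, 3, 2, 5, 4, 0, 7, 6], levels=3))
# [[1, 3, 2, 5, 4, 0, 7, 6], [3, 5, 4, 7], [5, 7], [7]]
```

With the default `levels=9`, the function returns the raw signal plus its 9 pooled versions, i.e. the 10 inputs to which the feature extractor of Step 2 is applied.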

Step 2: Generate features from the raw and maximum pooled sounds.

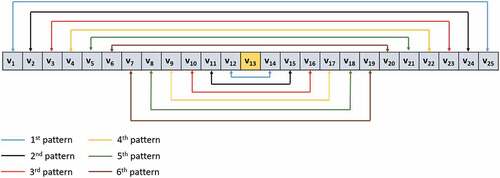

Step 2 defines the feature extraction function, for which LQMP is utilized. The details of the proposed LQMP are given as sub-steps. The main objective of LQMP is to generate features using 25-sized overlapping windows and the Euclidean and city block distance metrics. The model is built on a 5 × 5 block; since one-dimensional signals are used in this work, this block corresponds to 25-sized overlapping windows. LQMP is a center symmetric local feature extractor. The proposed pattern is demonstrated in the accompanying figure.

Step 2.1: Divide the input signal into overlapping windows of size 25.

Step 2.2: Extract the Euclidean bits using the LQMP pattern and the Euclidean distance function.

where the first and second Euclidean values are calculated from the values of the current overlapping window, the Euclidean bit is generated from these two values, and an index value enumerates the generated bits.

Step 2.3: Generate the city block bits by using Equations (10)–(12).

where the first and second city block values are used to generate the city block bit.

Step 2.4: Calculate the decimal Euclidean value and the decimal city block value from the Euclidean bits and the city block bits, respectively.

Step 2.5: Extract the histograms of the decimal Euclidean and city block values. In this step, two histograms of size 64 are extracted: the Euclidean histogram and the city block histogram. Equations (15) and (16) define these histograms and initialize their values to zero, and Equations (17) and (18) update the histogram values.

Step 2.6: Concatenate these two histograms to obtain a feature vector of size 128.

The steps above define the proposed LQMP. As these steps show, LQMP is a local binary pattern-like feature extractor: it uses 25-sized windows center symmetrically, and its kernel functions are the Euclidean and city block distances instead of the signum or ternary function. LQMP aims to extract informative features.
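The overall shape of an LQMP-style extractor (25-sample windows viewed as 5 × 5 blocks, distance-comparison bits, decimal codes, and two 64-bin histograms) can be sketched as below. Because the defining equations of Steps 2.2–2.4 are not reproduced here, the choice of which rows, columns, and diagonals are compared in `_comparisons` is entirely our hypothetical stand-in, as are the stride of 1 between windows and the function name `lqmp_like_features`. The sketch only illustrates the window, bits, code, histogram pipeline and should not be read as the exact LQMP kernel. Six bits per code follow from the stated histogram size of 64 (2^6).

```python
import math
from typing import List, Sequence, Tuple

WINDOW = 25  # overlapping window length, viewed as a 5 x 5 block
BITS = 6     # bits per code; 2**6 = 64 bins, matching the size-64 histograms

Vector = List[float]


def euclidean(u: Sequence[float], v: Sequence[float]) -> float:
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def city_block(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(abs(a - b) for a, b in zip(u, v))


def _comparisons(block: List[Vector]) -> List[Tuple[Vector, Vector, Vector]]:
    """HYPOTHETICAL center-symmetric (first, second, reference) triples; not the paper's definition."""
    cols = [list(c) for c in zip(*block)]
    diag = [block[i][i] for i in range(5)]
    anti = [block[i][4 - i] for i in range(5)]
    return [
        (block[0], block[4], block[2]),  # outer rows vs. center row
        (block[1], block[3], block[2]),  # inner rows vs. center row
        (cols[0], cols[4], cols[2]),     # outer columns vs. center column
        (cols[1], cols[3], cols[2]),     # inner columns vs. center column
        (diag, anti, block[2]),          # diagonals vs. center row
        (diag, anti, cols[2]),           # diagonals vs. center column
    ]


def lqmp_like_features(signal: Sequence[float]) -> List[int]:
    """Two 64-bin histograms (Euclidean, city block) of 6-bit codes, concatenated to 128 values."""
    hist_e = [0] * 2 ** BITS
    hist_c = [0] * 2 ** BITS
    for start in range(len(signal) - WINDOW + 1):  # stride-1 overlapping windows (assumed)
        w = list(signal[start:start + WINDOW])
        block = [w[5 * r:5 * r + 5] for r in range(5)]  # reshape the window row by row
        code_e = code_c = 0
        for first, second, ref in _comparisons(block):
            # one bit per comparison: 1 if the first vector is at least as far from the reference
            code_e = (code_e << 1) | int(euclidean(first, ref) >= euclidean(second, ref))
            code_c = (code_c << 1) | int(city_block(first, ref) >= city_block(second, ref))
        hist_e[code_e] += 1  # decimal Euclidean code in [0, 63]
        hist_c[code_c] += 1  # decimal city block code in [0, 63]
    return hist_e + hist_c
```

Applied to the raw signal and to each of its 9 pooled versions, such an extractor yields 10 vectors of 128 values, which is the structure that Step 3 concatenates.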

Step 3: Concatenate the features of each level to obtain the final feature vector of size 1280. Equation (21) denotes the feature concatenation.

where X is the final feature vector.
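The size of X follows from the raw signal plus its 9 pooled signals, each contributing the two 64-bin histograms of Step 2.5. As a further check, assuming each pooling level keeps ⌊length/2⌋ samples and using the 48 kHz sampling rate and the 3 s minimum sample length from the Dataset section, even the shortest sample still provides enough samples at the ninth level for 25-sample windows:

$$\begin{aligned}
\dim(X) &= (1+9)\times(64+64) = 10\times 128 = 1280,\\
L_{\min} &= 3\ \text{s}\times 48\,000\ \text{samples/s} = 144\,000\ \text{samples},\\
\left\lfloor L_{\min}/2^{9}\right\rfloor &= \left\lfloor 281.25\right\rfloor = 281 \ \ge\ 25 .
\end{aligned}$$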

Step 4: Select the most informative features with INCA.

In Equation (22), the informative features are obtained by applying the iterative NCA feature selector to X. NCA (Raghu and Sriraam 2018) is one of the commonly preferred distance-based feature selectors; it uses an optimization function (stochastic gradient descent) to calculate the weights of the features. It uses a city block distance based fitness function to calculate the correlation between the features and the target, and it assigns a weight to each feature using this correlation value. Since the NCA weights are positive, it is challenging to eliminate features. Another critical problem of NCA is selecting the optimal number of features automatically; trial and error or parametric feature selection has generally been used with NCA to select features. In this work, we present an iterative feature selection model called INCA. INCA uses a classifier to calculate error values, and the number of features that yields the minimum error is selected as optimal. The steps of INCA are given below.

Step 4.1: Normalize each column of X.

Step 4.2: Calculate the NCA weights and the indices of the most informative features using the NCA function.

where the weight vector contains the NCA weights.

Step 4.3: Select features iteratively using the index vector.

where the ith iteration yields the ith set of selected features.

Step 4.4: Calculate the error value of each selected feature set using kNN, which is utilized here as the error calculator. Its parameters are as follows: k is set to 1, and the distance metric is city block. kNN has a low computational cost; therefore, we selected it as the error calculator.

Step 4.5: Select the optimal feature set, i.e., the one with the minimum error.

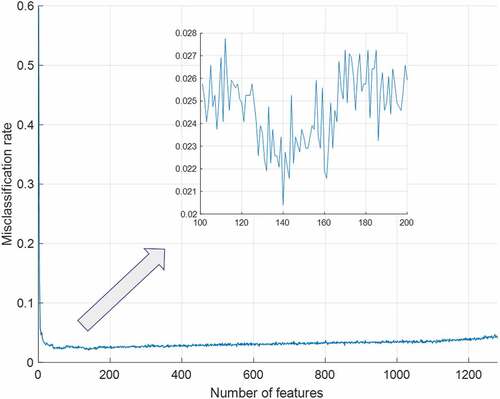

where the index value of the minimum error determines the optimal number of features. We restricted the number of selected features to a range from 100 to 1000. The calculated error rates versus the number of features are plotted in the accompanying figure.

As this plot shows, the selected final feature vector has 140 features.
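Steps 4.3–4.5, together with the 1-NN city block error calculator of Step 4.4, can be sketched in Python as follows. This is a minimal sketch under our own assumptions, not the authors' code: the ranking of features by descending NCA weight is computed elsewhere and passed in as `ranked_idx`; the ith candidate set is taken to be the i top-ranked features; and, since the text does not state how the kNN error is validated, the hypothetical `one_nn_loo_error` uses leave-one-out. All function names are ours.

```python
from typing import Callable, List, Sequence, Tuple

Matrix = List[List[float]]


def city_block(u: Sequence[float], v: Sequence[float]) -> float:
    """City block (L1) distance between two feature vectors."""
    return sum(abs(a - b) for a, b in zip(u, v))


def one_nn_loo_error(X: Matrix, y: Sequence[int]) -> float:
    """Leave-one-out misclassification rate of 1-NN with city block distance (assumed scheme)."""
    n = len(X)
    wrong = 0
    for i in range(n):
        # nearest other sample; ties resolve to the lowest index
        j = min((k for k in range(n) if k != i), key=lambda k: city_block(X[i], X[k]))
        wrong += y[j] != y[i]
    return wrong / n


def inca_select(
    X: Matrix,
    y: Sequence[int],
    ranked_idx: Sequence[int],
    error_fn: Callable[[Matrix, Sequence[int]], float] = one_nn_loo_error,
    start: int = 100,
    stop: int = 1000,
) -> Tuple[List[int], float, List[float]]:
    """Evaluate the top-i ranked features for i = start..stop and keep the lowest-error set."""
    stop = min(stop, len(ranked_idx))
    errors: List[float] = []
    best_i, best_err = start, float("inf")
    for i in range(start, stop + 1):
        cols = list(ranked_idx[:i])
        subset = [[row[c] for c in cols] for row in X]
        err = error_fn(subset, y)
        errors.append(err)
        if err < best_err:  # strict '<' keeps the smallest i among equal errors
            best_i, best_err = i, err
    return list(ranked_idx[:best_i]), best_err, errors
```

With the default `start=100` and `stop=1000`, `inca_select` scans the search range stated in Step 4.5, and the returned `errors` list corresponds to the error-versus-feature-count curve. Leave-one-out 1-NN is quadratic in the number of samples, so on a full dataset a vectorized or fold-based error calculator would be more practical.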

Step 5: Use the selected most informative features as the input of the classifiers. In this step, the LD, kNN, SVM, and BT classifiers are utilized, and 10-fold cross-validation (CV) is used to test the results. The hyperparameters of the classifiers are given below.

LD: The gamma value is set to 0 (Chen and Yang 2004).

kNN: k is 1, and the distance metric is city block (Kar, Sharma, and Maitra 2015; Liao and Vemuri 2002).

SVM: The kernel function is polynomial with an order of 3, the kernel scale is automatic, the coding is one-vs.-all, and the box constraint (C) is set to 1 (Keerthi et al. 2000).

BT: The bag ensemble method is chosen, with 30 learning cycles, a learning rate of 0.1, and a maximum of 799 splits (Hothorn and Lausen 2005).

Results

The experimental setup and results of the proposed LQMP and INCA based VISC method are explained in this section.

Experimental Setup

The VISC8 database was collected from YouTube PoV drive videos, and the interior sounds were stored in m4a format. The model was implemented on these sounds in the MATLAB 2018a programming environment, on a desktop computer with 16 GB of main memory and an Intel i7-7700 microprocessor. The operating system is Windows 10.1 Ultimate.

Experimental Result

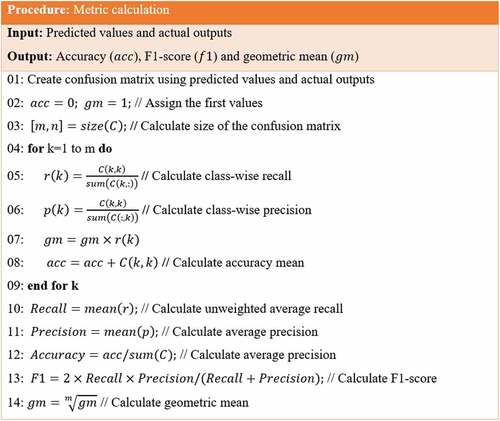

Commonly used classifier performance metrics were used to evaluate the proposed method: classification accuracy, F1-score, and geometric mean. Their general definitions are given below.

$$\mathrm{Accuracy}=\frac{TP+TN}{TP+TN+FP+FN}\tag{29}$$

$$\mathrm{F1\text{-}score}=\frac{2TP}{2TP+FP+FN}\tag{30}$$

$$\mathrm{Geometric\ mean}=\sqrt{\frac{TP}{TP+FN}\times\frac{TN}{TN+FP}}\tag{31}$$

where TP, TN, FP, and FN are the numbers of true positives, true negatives, false positives, and false negatives, respectively. Equations (29)–(31) are defined for two-class classification problems. However, there are 8 classes in our VISC problem; therefore, we used Algorithm 1 to calculate these metrics for the multiclass problem.

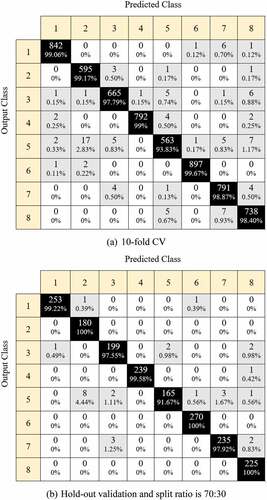
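Because Algorithm 1 is available only as an image, the following Python sketch shows one common way of turning a multiclass confusion matrix into the three metrics; it is not necessarily what Algorithm 1 does. We assume overall accuracy (correct predictions over all samples), a macro-averaged F1-score computed from one-vs-rest counts, and, for the geometric mean, the geometric mean of the per-class recalls; `multiclass_metrics` is a hypothetical helper name.

```python
import math
from typing import Dict, Sequence


def multiclass_metrics(cm: Sequence[Sequence[int]]) -> Dict[str, float]:
    """cm[i][j] = number of samples of true class i predicted as class j."""
    c = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(c)) / total
    f1s, recalls = [], []
    for k in range(c):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # class-k samples predicted as another class
        fp = sum(cm[i][k] for i in range(c)) - tp  # other-class samples predicted as class k
        f1s.append(2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    return {
        "accuracy": accuracy,
        "macro_f1": sum(f1s) / c,
        "geometric_mean": math.prod(recalls) ** (1.0 / c),  # geometric mean of per-class recalls
    }
```

For an 8-class problem such as VISC8, `cm` would be an 8 × 8 matrix, such as those underlying the confusion matrices reported below.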

Two validation techniques were used in this section: 10-fold cross-validation and hold-out validation. In the hold-out validation, the split ratio is 70:30. The performance metrics calculated with these validation techniques are tabulated in Table 2.

Table 2. The calculated performance rates (%) according to the classifier and validation techniques.

Table 2 shows that the SVM classifier attained over 98% classification accuracy with both validation techniques, making it the best classifier for this problem. The confusion matrices of the SVM for both validations are depicted in the corresponding figure: (a) 10-fold CV; (b) hold-out validation with a 70:30 split ratio.

According to these confusion matrices, the most accurately classified class under 10-fold CV is the 6th class, with 99.67% classification accuracy. When the proposed LQMP and INCA based VISC method is tested with a 70:30 split ratio, it reaches 100% (perfect) accuracy for the 2nd, 6th, and 8th classes.
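The two validation protocols used in this section can be sketched as follows. The text states only 10-fold CV and a 70:30 hold-out split; stratifying by class, the fixed random seed, and the helper names `stratified_kfold` and `stratified_holdout` are our assumptions.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def _group_by_class(labels: Sequence[int]) -> Dict[int, List[int]]:
    groups: Dict[int, List[int]] = defaultdict(list)
    for idx, lab in enumerate(labels):
        groups[lab].append(idx)
    return groups


def stratified_kfold(labels: Sequence[int], k: int = 10, seed: int = 0) -> List[int]:
    """Assign each sample a fold id in [0, k), dealing every class round-robin so folds stay balanced."""
    rng = random.Random(seed)
    fold = [0] * len(labels)
    offset = 0
    for lab, idxs in sorted(_group_by_class(labels).items()):
        rng.shuffle(idxs)
        for pos, idx in enumerate(idxs):
            fold[idx] = (pos + offset) % k
        offset += len(idxs)  # continue the round-robin where the previous class stopped
    return fold


def stratified_holdout(
    labels: Sequence[int], train_ratio: float = 0.7, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Split sample indices into train/test, keeping about train_ratio of every class for training."""
    rng = random.Random(seed)
    train: List[int] = []
    test: List[int] = []
    for lab, idxs in sorted(_group_by_class(labels).items()):
        rng.shuffle(idxs)
        cut = round(train_ratio * len(idxs))
        train += idxs[:cut]
        test += idxs[cut:]
    return sorted(train), sorted(test)
```

In 10-fold CV, each fold id in turn defines the test set while the remaining nine folds form the training set, and the reported metrics are computed over all test predictions.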

Discussions

In this work, we presented a novel sub-study area of ESC, called VISC. VISC is an important study area because it can be used both in ESC and in the biometric identification of vehicles. Since we presented the first study of this area, we collected a dataset by recording the sounds of YouTube PoV drive videos. We presented a multilevel hand-crafted feature extraction model inspired by deep networks such as AlexNet (Krizhevsky, Sutskever, and Hinton 2012), GoogLeNet (Szegedy et al. 2015), and ResNet (He et al. 2016). A new feature generation function, LQMP, was presented to achieve high accuracy. The main point of LQMP is to extract informative features using city block and Euclidean distance based binary feature extraction functions, and it generates 128 features. The computational cost of the proposed LQMP and maximum pooling based feature generation method depends on the signal length, because the maximum pooling operator decomposes the signal at each level and the time cost of LQMP depends directly on the length of the signal. An optimal number of features was selected using the INCA feature selector: the feature generation method extracts 1280 features, and INCA selects the 140 most informative of them. kNN was utilized as the error value calculator, and we determined a range for the number of features, because this range determines the number of kNN executions. kNN was used as the loss calculator in INCA because of its low computational complexity. Four conventional classifiers were used to show the efficiency of these 140 most informative features. With 10-fold cross-validation, classification accuracies of 86.64%, 97.88%, 98.38%, and 96.89% were calculated for the LD, BT, SVM, and kNN classifiers, respectively. With hold-out validation, the model achieved classification accuracies of 87.85%, 96.60%, 98.44%, and 97.10% with the LD, BT, SVM, and kNN classifiers, respectively. The benefits of the proposed LQMP and INCA based VISC method are as follows:

VISC is a new study area that is relevant to the automotive, machine learning, and crime investigation fields.

A novel feature extraction method (LQMP and maximum pooling) was presented.

An automated feature selector (INCA) was presented to overcome the optimal feature selection problem of the NCA.

Using these methods (LQMP based feature extraction and INCA feature selection), a highly accurate VISC method was presented, which reached 98.44% classification accuracy on the collected VISC8 dataset.

The results of the proposed LQMP and INCA based method were obtained with 10-fold cross-validation, which supports its robustness.

Results of four classifiers were presented to evaluate the proposed LQMP and INCA based VISC method comprehensively.
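The cost argument made in the discussion above can be made explicit under one assumption of ours: that LQMP does a constant amount of work per 25-sample window, so its cost on a signal of length $n$ is at most proportional to $n$. Because each pooling level halves the length, the total number of samples processed over the raw signal and its 9 pooled versions is bounded by a geometric series:

$$\sum_{t=0}^{9}\frac{n}{2^{t}} = n\,\frac{1-2^{-10}}{1-2^{-1}} = \left(2-2^{-9}\right)n < 2n .$$

Under this assumption, the multilevel feature generation scales linearly with the signal length $n$.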

Conclusions

We propose a novel sound classification study area in this paper. Our main aim is to classify the type of vehicles with high classification accuracy. To this end, a novel dataset, called the VISC8 dataset, was collected, and a new method based on hand-crafted feature generation and iterative feature selection is proposed and tested on this dataset. Maximum pooling is used to create levels, and LQMP extracts 128 features from each level. The 140 most informative features were selected with INCA and forwarded to the LD, kNN, SVM, and BT classifiers. All classifiers achieved accuracies higher than 86%, and the best classification rate, 98.44%, was achieved using SVM with a 70:30 split ratio. Our findings show that vehicles can be classified by their interior sounds.

In future work, other researchers can build on our method, and novel large and parametric datasets can be collected. During sound collection, parameters such as weather and road type can be varied, and novel learning methods can be presented to classify these datasets. The proposed LQMP and INCA based methods can also be used to create new learning methods.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- ACEA Report Vehicles in Use Europe 2019. 2019.

- Akhtar, S., M. Elshafei-Abmed, and M. S. Ahmed. 2001. Detection of helicopters using neural nets. IEEE Transactions on Instrumentation and Measurement 50 (3):749–3242. doi:10.1109/19.930449.

- Al-Ali, A. K. H., B. Senadji, and G. R. Naik. 2017. Enhanced forensic speaker verification using multi-run ICA in the presence of environmental noise and reverberation conditions. Paper Presented at the 2017 IEEE International Conference on Signal and Image Processing Applications (ICSIPA) 12-14 September 2017 Kuching, Malaysia.

- Baucas, M. J., and P. Spachos. 2020. Using cloud and fog computing for large scale IoT-based urban sound classification. Simulation Modelling Practice and Theory 101:102013. doi:10.1016/j.simpat.2019.102013.

- Boucher, C., and J.-C. Noyer. 2012. Automatic detection of topological changes for digital road map updating. IEEE Transactions on Instrumentation and Measurement 61 (11):3094–102. doi:10.1109/TIM.2012.2203873.

- Chang, P.-C., Y.-S. Chen, and C.-H. Lee. 2021. MS-SincResnet: Joint learning of 1D and 2D kernels using multi-scale SincNet and ResNet for music genre classification. ICMR '21: Proceedings of the 2021 International Conference on Multimedia Retrieval 21-24 August 2021 Taipei Taiwan.

- Chen, S., and X. Yang. 2004. Alternative linear discriminant classifier. Pattern Recognition 37 (7):1545–47. doi:10.1016/j.patcog.2003.11.008.

- Demir, S., S. Key, T. Tuncer, and S. Dogan. 2020. An exemplar pyramid feature extraction based humerus fracture classification method. Medical Hypotheses 109663. doi:10.1016/j.mehy.2020.109663.

- Duarte, M. F., and Y. H. Hu. 2004. Vehicle classification in distributed sensor networks. Journal of Parallel and Distributed Computing 64 (7):826–38. doi:10.1016/j.jpdc.2004.03.020.

- Gramatikov, B. I., S. Rangarajan, K. Irsch, and D. L. Guyton. 2016. Attention attraction in an ophthalmic diagnostic device using sound-modulated fixation targets. Medical Engineering & Physics 38 (8):818–21. doi:10.1016/j.medengphy.2016.05.004.

- Hashimoto, T., and H. Takao. 1990. Subjective estimation of running car interior noise, part 2: The relation between subjective and physical values of noise. Transactions of the Automotive Engineers of Japan (43):129–33.

- He, K., X. Zhang, S. Ren, and J. Sun. 2016. Deep residual learning for image recognition. Paper Presented at the Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 27-30 June 2016 Las Vegas, NV, USA.

- Hothorn, T., and B. Lausen. 2005. Bundling classifiers by bagging trees. Computational Statistics & Data Analysis 49 (4):1068–78. doi:10.1016/j.csda.2004.06.019.

- Huang, J., C. Chen, G. Guo, Z. Zhang, and Z. Li. 2019. A calculation model for typical data center cooling system. Journal of Physics: Conference Series, Volume 1304, The 2nd International Conference on Energy, Electrical and Power Engineering 25–28 June 2019 Berkley, USA.

- Jain, Y. K., and S. K. Bhandare. 2011. Min max normalization based data perturbation method for privacy protection. International Journal of Computer & Communication Technology 2 (8):45–50.

- Jeon, J. Y., J. You, C. I. Jeong, S. Y. Kim, and M. J. Jho. 2011. Varying the spectral envelope of air-conditioning sounds to enhance indoor acoustic comfort. Building and Environment 46 (3):739–46. doi:10.1016/j.buildenv.2010.10.005.

- Kang, Q., H. Zhao, D. Yang, H. S. Ahmed, and J. Ma. 2020. Lightweight convolutional neural network for vehicle recognition in thermal infrared images. Infrared Physics & Technology 104:103120. doi:10.1016/j.infrared.2019.103120.

- Kar, S., K. D. Sharma, and M. Maitra. 2015. Gene selection from microarray gene expression data for classification of cancer subgroups employing PSO and adaptive K-nearest neighborhood technique. Expert Systems with Applications 42 (1):612–27. doi:10.1016/j.eswa.2014.08.014.

- Keerthi, S. S., S. K. Shevade, C. Bhattacharyya, and K. R. Murthy. 2000. A fast iterative nearest point algorithm for support vector machine classifier design. IEEE Transactions on Neural Networks 11 (1):124–36. doi:10.1109/72.822516.

- Khan, M. K., M. Zakariah, H. Malik, and K.-K. R. Choo. 2018. A novel audio forensic data-set for digital multimedia forensics. The Australian Journal of Forensic Sciences 50 (5):525–42. doi:10.1080/00450618.2017.1296186.

- Ko, K., J. Park, D. K. Han, and H. Ko. 2019. Channel and frequency attention module for diverse animal sound classification. IEICE Transactions on Information and Systems 102 (12):2615–18. doi:10.1587/transinf.2019EDL8128.

- Krizhevsky, A., I. Sutskever, and G. E. Hinton. 2012. Imagenet classification with deep convolutional neural networks. NIPS'12: Proceedings of the 25th International Conference on Neural Information Processing Systems - Volume 1 December 2012 Lake Tahoe Nevada.

- Lemaitre, G., and P. Susini. 2019. Timbre, sound quality, and sound design. In Timbre: Acoustics, perception, and cognition, ed. K. Siedenburg, C. Saitis, S. McAdams, A. N. Popper, and R. R. Fay, 245–72. Springer Nature Switzerland AG: Springer. ISBN 978-3-030-14831-7. doi:10.1007/978-3-030-14832-4.

- Liao, Y., and V. R. Vemuri. 2002. Use of k-nearest neighbor classifier for intrusion detection. Computers & Security 21 (5):439–48. doi:10.1016/S0167-4048(02)00514-X.

- Liu, C., L. Feng, G. Liu, H. Wang, and S. Liu. 2021. Bottom-up broadcast neural network for music genre classification. Multimedia Tools and Applications 80 (5):7313–31. doi:10.1007/s11042-020-09643-6.

- Lurz, F., S. Lindner, S. Linz, S. Mann, R. Weigel, and A. Koelpin. 2017. High-speed resonant surface acoustic wave instrumentation based on instantaneous frequency measurement. IEEE Transactions on Instrumentation and Measurement 66 (5):974–84. doi:10.1109/TIM.2016.2642618.

- Manders, E.-J., G. Biswas, P. J. Mosterman, L. A. Barford, and R. J. Barnett. 2000. Signal interpretation for monitoring and diagnosis, a cooling system testbed. IEEE Transactions on Instrumentation and Measurement 49 (3):503–08. doi:10.1109/19.850384.

- Mayer, P., M. Magno, and L. Benini. 2019. Self-sustaining acoustic sensor with programmable pattern recognition for underwater monitoring. IEEE Transactions on Instrumentation and Measurement 68 (7):2346–55. doi:10.1109/TIM.2018.2890187.

- Mazarakis, G. P., and J. N. Avaritsiotis. 2007. Vehicle classification in sensor networks using time-domain signal processing and neural networks. Microprocessors and Microsystems 31 (6):381–92. doi:10.1016/j.micpro.2007.02.005.

- Mishra, M., H. Menon, and A. Mukherjee. 2018. Characterization of $ S_1 $ and $ S_2 $ heart sounds using stacked autoencoder and convolutional neural network. IEEE Transactions on Instrumentation and Measurement 68 (9):3211–20. doi:10.1109/TIM.2018.2872387.

- More, S., and P. Davies. 2010. Human responses to the tonalness of aircraft noise. Noise Control Engineering Journal 58 (4):420–40. doi:10.3397/1.3475528.

- Nanni, L., Y. M. Costa, D. R. Lucio, C. N. Silla, Jr, and S. Brahnam. 2017. Combining visual and acoustic features for audio classification tasks. Pattern Recognition Letters 88:49–56. doi:10.1016/j.patrec.2017.01.013.

- Patidar, S., and R. B. Pachori. 2014. Classification of cardiac sound signals using constrained tunable-Q wavelet transform. Expert Systems with Applications 41 (16):7161–70. doi:10.1016/j.eswa.2014.05.052.

- Raghu, S., and N. Sriraam. 2018. Classification of focal and non-focal EEG signals using neighborhood component analysis and machine learning algorithms. Expert Systems with Applications 113:18–32. doi:10.1016/j.eswa.2018.06.031.

- Randhawa, S. K., and M. Singh. 2015. Classification of heart sound signals using multi-modal features. Procedia Computer Science 58:165–71. doi:10.1016/j.procs.2015.08.045.

- Salamon, J., C. Jacoby, and J. P. Bello. 2014. A dataset and taxonomy for urban sound research. MM '14: Proceedings of the 22nd ACM international conference on Multimedia 3-7 November 2014 New York, United States.

- Scanlon, P., D. F. Kavanagh, and F. M. Boland. 2012. Residual life prediction of rotating machines using acoustic noise signals. IEEE Transactions on Instrumentation and Measurement 62 (1):95–108. doi:10.1109/TIM.2012.2212508.

- Szegedy, C., W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, and A. Rabinovich. 2015. Going deeper with convolutions. 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 15 October 2015 Boston, MA.

- Takada, M., H. Mori, S. Sakamoto, and S.-I. Iwamiya. 2019. Structural analysis of the value evaluation of vehicle door-closing sounds. Applied Acoustics 156:306–18. doi:10.1016/j.apacoust.2019.07.025.

- Västfjäll, D., M.-A. Gulbol, M. Kleiner, and T. Gärling. 2002. Affective evaluations of and reactions to exterior and interior vehicle auditory quality. Journal of Sound and Vibration 255 (3):501–18. doi:10.1006/jsvi.2001.4166.

- Wang, Y., G. Shen, H. Guo, X. Tang, and T. Hamade. 2013. Roughness modelling based on human auditory perception for sound quality evaluation of vehicle interior noise. Journal of Sound and Vibration 332 (16):3893–904. doi:10.1016/j.jsv.2013.02.030.

- Wei, J., C.-H. Liu, Z. Zhu, L. R. Cain, and V. J. Velten. 2019. Vehicle engine classification using normalized tone-pitch indexing and neural computing on short remote vibration sensing data. Expert Systems with Applications 115:276–86. doi:10.1016/j.eswa.2018.07.073.

- Wei, J., N. Li, X. Xia, X. Chen, F. Peng, G. E. Besner, and J. Feng. 2014. Effects of lipopolysaccharide-induced inflammation on the interstitial cells of cajal. Cell and Tissue Research 356 (1):29–37. doi:10.1007/s00441-013-1775-7.

- Yang, L., and H. Zhao. 2021. Sound classification based on multihead attention and support vector machine. Mathematical Problems in Engineering 2021.