ABSTRACT

The world has experienced a health crisis with the outbreak of the COVID-19 virus. Wearing a mask has been identified as the most effective way to prevent the spread of the virus. This has created a need for a face mask recognition tool that not only detects the presence of a mask but also reports a score indicating how confidently the mask is detected. In addition, the face mask should be recognized from all angles. This project aims to create a new and improved real-time face mask recognition tool using image processing and computer vision approaches. A dataset of images of people with and without masks was used, together with a pre-trained MobileNetV2 convolutional neural network, and the performance of the resulting model was evaluated. The model detects face masks with an accuracy of 99.21%, and it can also detect masks on faces seen from the side, which makes it more useful in practice. A custom training loop and optimization function are also used.

Introduction

Coronavirus (COVID-19) is a recently emerged virus that spread across the world within a few months. It causes a type of pneumonia that was first identified in early December 2019 in Wuhan, Hubei Province, China, and the World Health Organization (WHO) declared it a global pandemic on March 11, 2020 (Fong, Dey, and Chaki Citation2021). According to WHO statistics, as of February 24, 2021, more than 111 million people had been infected with the virus and about 2.46 million deaths had been registered (Canete Citation2021). The most common symptoms are fever, dry cough, and fatigue, among others. The virus spreads mainly through close contact between people and through the respiratory droplets an infected person produces when coughing, sneezing, or exhaling. Because these droplets are too heavy to travel long distances through the air, they quickly fall onto floors or surfaces, so the virus also spreads when people touch contaminated surfaces and then touch their face (e.g., eyes, nose, and mouth) (Vaishya et al. Citation2020). The WHO declared a worldwide emergency and issued precautions to limit the spread of the virus: washing hands regularly with soap and water for 20 seconds, using disinfectants, keeping physical distance, disinfecting surfaces regularly, using disposable tissues when coughing or sneezing, and, above all, wearing masks in public places (Bhagat et al. Citation2020; Xiao and Estee Torok Citation2020). Just as community-wide masking helped control the spread of SARS during the 2003 outbreak (Peng et al. Citation2003), it has also been very effective in controlling the spread of the coronavirus (Cheng et al. Citation2020; Matuschek et al. Citation2020). Because masks effectively block respiratory droplets, wearing them has become an essential element of the response to COVID-19 (Koklu, Cinar, and Selim Taspinar Citation2022). 
For example, N95 and surgical masks block virus transmission (by blocking respiratory droplets) with an effectiveness of 91% and 68%, respectively (Tom et al. Citation2020). Face masks can effectively block viruses and airborne particles, so that fewer pollutants enter another person's respiratory system (Qin and Li Citation2020). The coronavirus outbreak has greatly strengthened global scientific cooperation in the search for new tools and methods to fight the virus. One such technology is artificial intelligence (AI) (Bhagat et al. Citation2020). AI can quickly track the spread of the virus, identify high-risk patients, and help control the epidemic in real time (Gayathri, Kumar, and Kumar Gunjan Citation2022). It is also useful for early prediction of infection by analyzing previous patient data, which in turn can reduce the risk of dying from the virus (Vaishya et al. Citation2020).

As discussed, wearing masks is the most effective measure against transmission of the coronavirus, but ensuring that masks are worn in public places is a challenge for governments and relevant authorities. Fortunately, AI applications (using machine learning (ML) or deep learning (DL) algorithms) can help enforce mask wearing in public places by detecting masks in real time through an existing camera network (a network of surveillance cameras or any other cameras). This offers an easy way to monitor people locally, maintain social distancing, and make sure everyone wears a mask (Alrammahi and Radif Citation2019).

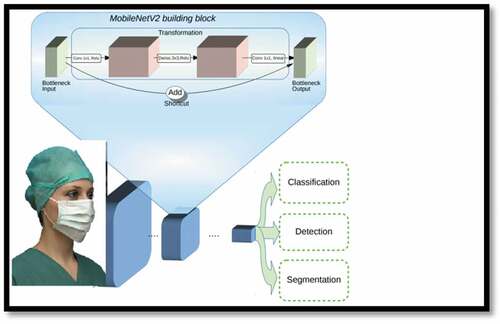

The MobileNet architecture is notable because it requires very little computational power. This makes it a good fit for mobile devices, embedded systems, and computers without GPUs. MobileNetV1 is the first version of the MobileNet models (Koklu, Cinar, and Selim Taspinar Citation2022); it has more parameters than MobileNetV2. MobileNetV2 is the second version of the MobileNet model (Mohd et al. Citation2022). It has significantly fewer parameters, which makes the network lighter to run, and because of this it is ideal for embedded systems and mobile devices. As an updated version of MobileNetV1 (Ahmed et al. Citation2022), MobileNetV2 is more efficient and effective. Given the importance of face mask detection in public places, this study demonstrates the use of one popular DL-based architecture, MobileNetV2, for effective face mask detection (Wang et al. Citation2020).
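Much of MobileNet's efficiency comes from depthwise separable convolutions, which both MobileNetV1 and MobileNetV2 use (a detail not stated in the text above). The sketch below compares the number of weights in one standard 3 × 3 convolution with its depthwise separable replacement; the layer sizes are illustrative assumptions, and biases are ignored.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise k x k convolution followed by a 1 x 1 pointwise one."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    k, c_in, c_out = 3, 32, 64  # illustrative layer size
    std = standard_conv_params(k, c_in, c_out)
    sep = depthwise_separable_params(k, c_in, c_out)
    print(f"standard: {std}, separable: {sep}, ratio: {std / sep:.1f}x")
    # standard: 18432, separable: 2336, ratio: 7.9x
```

For a 3 × 3 kernel the saving approaches roughly 9× as the number of output channels grows, which is why these networks run well without a GPU.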

Related Work

Over the past few years, object recognition algorithms based on deep learning models have proven more capable than shallow models at tackling complicated tasks (Yadav Citation2020). One example is a real-time system that detects whether or not people are wearing masks in public areas.

Militante and Dionisio (Militante and Dionisio Citation2020) developed an automatic system that detects whether or not a person is wearing a mask and generates an alarm if the person is not. To develop the system, the authors used the VGG-16 CNN architecture. The system achieved an overall detection accuracy of 96%. As future work, the authors planned a system that not only detects whether a person is wearing a mask but also measures the physical distance between people and triggers an alert if that distance is not properly observed.

Sanjaya and Rakhmawan (Sanjaya and Adi Rakhmawan Citation2020) introduced a DL model to determine whether or not a person wears a mask in public places. To do this, they used MobileNetV2, a pre-trained image classification model. The authors used two datasets: (1) RMFD, obtained from Kaggle, and (2) a dataset collected from 25 cities in Indonesia using CCTV, traffic-light, and shop cameras. Both datasets were used to train the model, which achieved detection accuracies of 96% and 85% on the test sets of the two datasets, respectively.

Gupta, Saxena, Sharma, and Tripathi proposed an approach in which extracted facial features, rather than raw pixel values, are provided as input. The facial features were extracted using Haar cascades and are retained in place of the pixel values. Reducing redundant input features lowers the complexity of the neural network needed for recognition. The approach also makes the process smoother and faster by using a deep neural network (DNN) instead of a convolutional network. The method does not compromise accuracy: the average accuracy obtained was 97.05% (Gupta et al. Citation2018).

Din et al. (Din et al. Citation2020) developed a GAN-based system that removes detected face masks and synthesizes the missing facial components, reconstructing the covered area in fine detail. The proposed GAN used two discriminators: the first captured the overall structure of the face, while the second focused on the region covered by the face mask. Two synthetic datasets were used to train the model.

Loey et al. (Loey et al. Citation2021) introduced a face mask detection model that combines deep transfer learning with classical ML classifiers (ML algorithms that operate on features manually extracted and designed from the input data). A residual neural network (ResNet-50) was used to extract features, which were then used to train three classical ML algorithms: a support vector machine (SVM), a decision tree (DT), and an ensemble learning (EL) classifier. Three face mask datasets were used: (i) the Real-World Masked Face Dataset (RMFD), (ii) the Simulated Masked Face Dataset (SMFD), and (iii) Labeled Faces in the Wild (LFW). Finally, the trained classifiers were tested for face mask detection. In testing, the SVM classifier achieved the highest detection accuracy of the three: 99.64% on RMFD, 99.49% on SMFD, and 100% on LFW.

Bhuiyan, Akter Khushbu, and Sanzidul Islam (Citation2020) proposed a system that identifies masked people by detecting faces with the YOLOv3 architecture. YOLO (You Only Look Once) is built on a convolutional neural network (CNN) and can detect and localize objects in an image in a single pass. The implementation begins by feeding 30 unique images from the dataset into the model and combining the results to derive predictions. The authors report excellent imaging and detection results. The model was also applied to live video to measure its frame rate and its ability to detect two classes, masked and unmasked; on video it averaged 17 fps. The authors report that the system is more efficient and faster than other methods that use their own datasets (Bhuiyan, Akter Khushbu, and Sanzidul Islam Citation2020).

Venkateswarlu, Kakarla, and Prakash (Citation2020) recommended using a pre-trained MobileNet with a global pooling block for face mask detection (Fadhil and Abbas Marhoon Citation2021). The pre-trained MobileNet generates a multidimensional feature map from a color image. According to the authors, the model does not suffer from overfitting because it uses a global pooling block.

Materials and Methods

Dataset

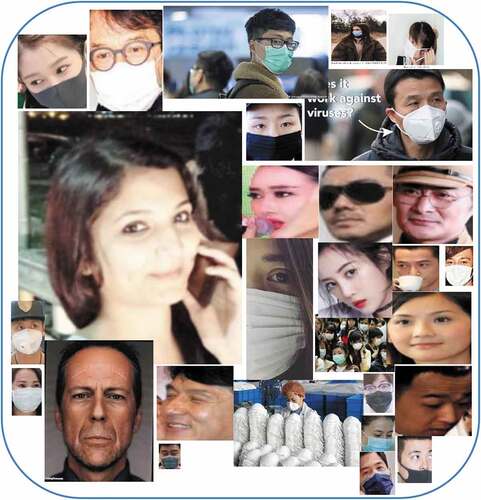

The dataset used in this project is the face mask dataset (with/without mask) from Kaggle.com. It consists of 3,832 images divided into two classes:

• Images with a mask: 1,914

• Images without a mask: 1,918

The images vary in size and resolution.

Methodology of the Proposed Study

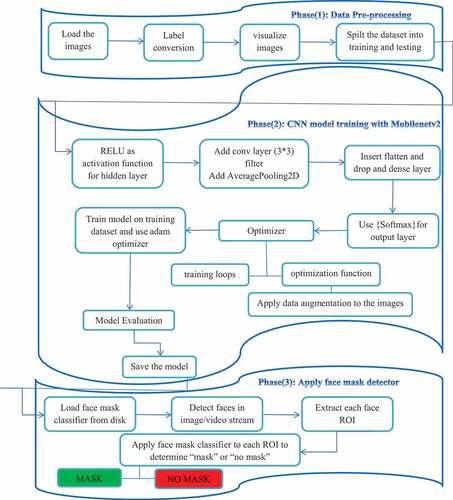

To predict whether a person is wearing a mask, the model must learn from a well-curated dataset, as discussed later in this section. The model uses a convolutional neural network (CNN) as its backbone architecture, and libraries such as OpenCV, Keras, TensorFlow, and scikit-learn are also used. The proposed model is built in three phases, data pre-processing, CNN model training, and applying the face mask detector, as shown in Figure 3.

Figure 3. Phases and individual steps for building a COVID-19 face mask detector with computer vision and deep learning using Python, OpenCV, and TensorFlow/Keras.

Data Pre-Processing

The accuracy of the model depends on the quality of the dataset. The original data is first cleaned to remove defective images. The images are then resized to a fixed size of 224 × 224 pixels, the default input size of MobileNetV2, and labeled as with or without a mask. The image array is converted to a NumPy array for fast computation, and the preprocess_input function provided by MobileNetV2 is applied. Data augmentation is then used to enlarge the training set and improve its quality: the ImageDataGenerator function creates multiple versions of each image by applying rotation, zooming, and horizontal or vertical shifting with appropriate values. Augmenting the training samples helps avoid overfitting and improves the generalization and robustness of the trained model. Finally, the dataset is divided into training and test sets in an 80:20 ratio by randomly selecting images, and the stratify parameter is used to keep the class proportions of the original dataset in both sets.
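The scaling and stratified split described above can be sketched in plain NumPy. This assumes that MobileNetV2's `preprocess_input` in Keras maps pixels from [0, 255] to [-1, 1], and that the split mirrors scikit-learn's `train_test_split(..., test_size=0.2, stratify=labels)`; the function names and the seed below are hypothetical, and the sketch reimplements the idea rather than calling those libraries.

```python
import numpy as np


def mobilenet_v2_scale(images: np.ndarray) -> np.ndarray:
    """Map uint8 pixels in [0, 255] to [-1, 1], the range MobileNetV2 expects."""
    return images.astype(np.float32) / 127.5 - 1.0


def stratified_split(labels: np.ndarray, test_fraction: float = 0.2, seed: int = 42):
    """Return (train_idx, test_idx), keeping each class's proportion in both parts."""
    rng = np.random.default_rng(seed)
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)   # positions of this class
        rng.shuffle(idx)                      # random selection within the class
        n_test = int(round(len(idx) * test_fraction))
        test_idx.extend(idx[:n_test])
        train_idx.extend(idx[n_test:])
    return np.array(sorted(train_idx)), np.array(sorted(test_idx))


# Class counts from the dataset description: 1914 with mask (1), 1918 without (0).
labels = np.array([1] * 1914 + [0] * 1918)
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
print(round(float(labels[train_idx].mean()), 3), round(float(labels[test_idx].mean()), 3))
```

Splitting each class separately is what keeps the mask/no-mask ratio (almost exactly 50:50 here) the same in the training and test sets.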

MobileNetV2

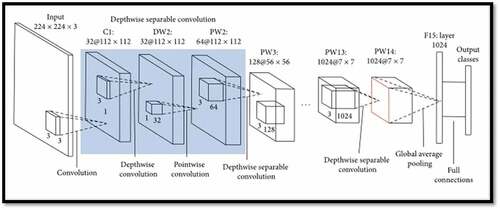

We used the MobileNetV2 module from TensorFlow to preprocess our images for the MobileNetV2 architecture. MobileNetV2 is a pre-trained model for image classification. Pre-trained models are deep neural networks that have already been trained on a large image dataset, so developers need not build or train the network from scratch, which saves development time.

Figure 4. The architecture and layers of the pre-trained MobileNetV2 model (Wang et al. Citation2020).

CNN Model Training

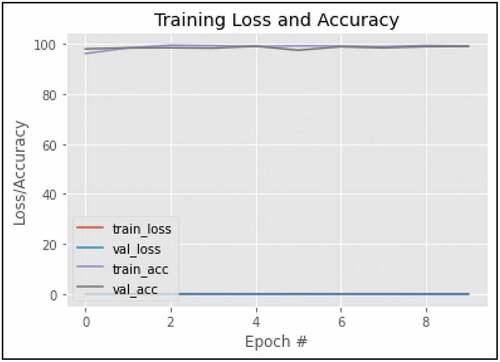

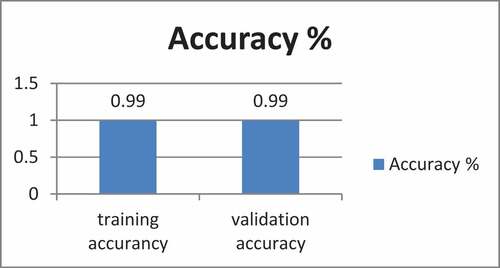

Extensive experiments were conducted on the image dataset to evaluate the performance and effectiveness of the proposed model. The training and validation curves of the MobileNetV2 model are shown in the figures above: over 10 epochs, both the training and validation accuracy of MobileNetV2 reach 99%, so the model achieves nearly equal training and validation accuracy. We used the existing MobileNetV2 architecture from Keras, removed its original top layer, and replaced it with our own softmax layer. The model is trained with gradient descent, an optimization algorithm commonly used to train machine learning models. It iteratively adjusts the model's parameters to drive a given cost function toward a local minimum, which is also the global minimum when the function is convex.

We start by defining initial parameter values and then use the gradient to adjust them iteratively so that the cost function is minimized.
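The update rule can be illustrated on a toy problem. The sketch below is not the paper's training code: it fits a hypothetical line y = 2x + 1 by minimizing mean squared error, a convex cost, so gradient descent reaches the global minimum. The learning rate and step count are assumptions chosen for this example.

```python
import numpy as np


def gradient_descent(x, y, lr=0.5, steps=1000):
    """Fit y ~ w*x + b by minimizing mean squared error with plain gradient descent."""
    w, b = 0.0, 0.0                              # initial parameter values
    n = len(x)
    for _ in range(steps):
        error = (w * x + b) - y                  # prediction residuals
        grad_w = (2.0 / n) * np.dot(error, x)    # d(MSE)/dw
        grad_b = (2.0 / n) * error.sum()         # d(MSE)/db
        w -= lr * grad_w                         # step against the gradient
        b -= lr * grad_b
    return w, b


x = np.linspace(0.0, 1.0, 50)
y = 2.0 * x + 1.0                                # hypothetical noise-free data
w, b = gradient_descent(x, y)
print(round(float(w), 3), round(float(b), 3))
```

A deep network's loss is not convex, so in practice the same rule only finds a local minimum, as noted above.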

To improve training, data augmentation was used. Data augmentation techniques increase the amount of data by adding slightly modified copies of existing data or new synthetic data derived from it. Augmentation acts as a regularizer and helps reduce overfitting when training a machine learning model (Shorten and Khoshgoftaar Citation2019); it is closely related to oversampling in data analysis. The model is trained on augmented batches produced by ImageDataGenerator() from the previously preprocessed image dataset.

Average pooling is also used. It computes the average of each patch of the feature map, so each 7 × 7 patch of the feature map is downsampled to its average value.
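Both ideas can be sketched in NumPy. The exact ImageDataGenerator settings are not given in the text, so the random horizontal flip and small shift below are hypothetical stand-ins; `average_pool` performs the non-overlapping averaging that a Keras `AveragePooling2D(pool_size=(7, 7))` layer applies to MobileNetV2's 7 × 7 × 1280 output for a 224 × 224 input.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator, max_shift: int = 2) -> np.ndarray:
    """Return a randomly flipped and shifted copy of an H x W x C image."""
    out = image[:, ::-1] if rng.random() < 0.5 else image   # horizontal flip
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    # np.roll wraps pixels around the border; real pipelines usually pad instead.
    return np.roll(out, shift=(dy, dx), axis=(0, 1))


def average_pool(fmap: np.ndarray, pool: int = 7) -> np.ndarray:
    """Non-overlapping average pooling of an H x W x C feature map."""
    h, w, c = fmap.shape
    assert h % pool == 0 and w % pool == 0, "H and W must be multiples of pool"
    blocks = fmap.reshape(h // pool, pool, w // pool, pool, c)
    return blocks.mean(axis=(1, 3))            # mean over each pool x pool patch


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(224, 224, 3), dtype=np.uint8)
print(augment(image, rng).shape)               # (224, 224, 3)

fmap = rng.random((7, 7, 1280))                # shape of MobileNetV2's last feature map
print(average_pool(fmap).shape)                # (1, 1, 1280)
```

On a 7 × 7 map, a 7 × 7 pool reduces each channel to a single number, giving a 1280-dimensional vector for the softmax head.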

The custom training loop used in this model makes it easy to adjust how gradients and the loss are computed, to accelerate training with various techniques (e.g., teacher forcing), and to tune hyperparameters more finely (e.g., using a cyclical learning rate). In every epoch, the loop iterates over the entire training set in batches. For every batch, it performs a forward pass while recording every operation on a tape, computes the loss with respect to the true labels, uses the recorded operations to backpropagate and compute the gradients, and lets the optimizer update the layer weights by applying those gradients. Once the pass over the training set finishes, the loop performs a forward pass over the entire validation set in batches, with the model in inference mode, computes the validation loss for each batch, and accumulates these losses to obtain the validation loss of the current epoch.
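The loop structure described above can be sketched without TensorFlow. In this hedged example, hand-derived softmax cross-entropy gradients and plain SGD stand in for the tape and the optimizer, and a two-class softmax layer is trained on synthetic 16-dimensional vectors that play the role of pooled MobileNetV2 features. All data, sizes, and hyperparameters are hypothetical; teacher forcing and learning-rate schedules are not shown.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)           # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def cross_entropy(probs, y):
    return -np.log(np.clip(probs[np.arange(len(y)), y], 1e-12, 1.0)).mean()


def train(x_tr, y_tr, x_val, y_val, n_classes=2, epochs=10, batch=32, lr=0.1, seed=0):
    rng = np.random.default_rng(seed)
    W = np.zeros((x_tr.shape[1], n_classes))
    b = np.zeros(n_classes)
    history = []
    for _ in range(epochs):
        order = rng.permutation(len(x_tr))
        for start in range(0, len(x_tr), batch):   # iterate over training batches
            idx = order[start:start + batch]
            xb, yb = x_tr[idx], y_tr[idx]
            probs = softmax(xb @ W + b)            # forward pass
            # Gradient of the mean cross-entropy w.r.t. logits: (probs - one_hot) / n
            d_logits = probs.copy()
            d_logits[np.arange(len(yb)), yb] -= 1.0
            d_logits /= len(yb)
            W -= lr * (xb.T @ d_logits)            # optimizer step (plain SGD)
            b -= lr * d_logits.sum(axis=0)
        # Validation pass: forward only, no weight updates (inference mode).
        total = 0.0
        for start in range(0, len(x_val), batch):
            xb, yb = x_val[start:start + batch], y_val[start:start + batch]
            total += cross_entropy(softmax(xb @ W + b), yb) * len(yb)
        history.append(total / len(x_val))         # accumulated validation loss
    return W, b, history


rng = np.random.default_rng(1)
x = np.vstack([rng.normal(-2.0, 1.0, (200, 16)), rng.normal(2.0, 1.0, (200, 16))])
y = np.array([0] * 200 + [1] * 200)
perm = rng.permutation(400)
x, y = x[perm], y[perm]
W, b, history = train(x[:320], y[:320], x[320:], y[320:])
print([round(float(h), 4) for h in history])
```

Keeping the validation pass free of weight updates is what makes the per-epoch validation loss an honest estimate of generalization.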

In the last step, the CNN model is integrated into a hosted web application so that it can easily be shared: users upload an image or a live video feed, the model recognizes face masks, and the predicted result is returned. Streamlit, an open-source Python library, is used to build a simple web app that lets users submit a picture with a single click and receive the outcome within seconds.

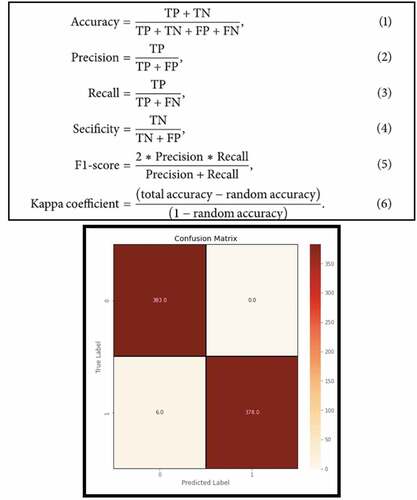

Evaluation Metrics

The classification models were evaluated on the test data using accuracy (equation (1)), precision (equation (2)), recall (equation (3)), specificity (equation (4)), F1 score (equation (5)), and the kappa coefficient (equation (6)). The F1 score is the harmonic mean of precision and recall. These metrics are calculated from the true positive (TP), true negative (TN), false positive (FP), and false negative (FN) counts, which are obtained from the confusion matrix (İlkayçınar Citation2022).
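Equations (1)–(6) are not reproduced in this version of the text, so the sketch below uses the standard definitions of these metrics computed from confusion-matrix counts. It assumes that the sixth metric is Cohen's kappa; the counts in the example are hypothetical, not the paper's results.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Standard binary metrics from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)                      # also called sensitivity
    specificity = tn / (tn + fp)
    f1 = 2 * precision * recall / (precision + recall)
    # Cohen's kappa (assumed): observed agreement corrected for chance agreement.
    p_o = accuracy
    p_e = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / total ** 2
    kappa = (p_o - p_e) / (1 - p_e)
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "specificity": specificity, "f1": f1, "kappa": kappa}


# Hypothetical counts for illustration only.
print(classification_metrics(tp=90, tn=85, fp=15, fn=10))
```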

Experimental Results and Analysis

The final results, shown in Table 1, were obtained after multiple experiments with various hyperparameter values such as the learning rate (Taspinar et al. Citation2022), number of epochs, and batch size.

Table 1. Classification report.

Model Testing

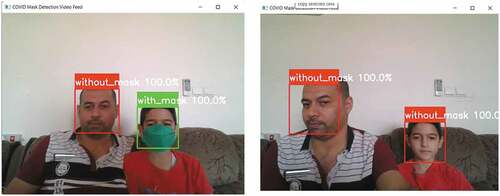

The model was tested on a variety of images, some of which are shown in Fig. 10. A green rectangle indicates a person wearing a mask, with the prediction score displayed at the top, whereas a red rectangle indicates that the person is not wearing a mask. In summary, the model learns from the training dataset to label and then predict (Unal et al. Citation2022).
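The annotation step can be sketched as a small mapping from the model's two-class softmax output to a caption and a box colour. The class order, label strings, and the `annotate` helper are hypothetical; colours are given in BGR order because that is what OpenCV drawing functions such as `cv2.rectangle` and `cv2.putText` expect.

```python
def annotate(probs, labels=("Mask", "No Mask")):
    """Turn a two-class softmax output into a caption and a BGR box colour."""
    idx = max(range(len(probs)), key=lambda i: probs[i])   # most likely class
    label = labels[idx]
    color = (0, 255, 0) if label == "Mask" else (0, 0, 255)  # green / red in BGR
    caption = f"{label}: {probs[idx] * 100:.2f}%"
    return caption, color


print(annotate([0.97, 0.03]))   # ('Mask: 97.00%', (0, 255, 0))
print(annotate([0.12, 0.88]))   # ('No Mask: 88.00%', (0, 0, 255))
```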

Comparison with Other Models

Apart from the custom CNN architecture implemented in this research, other architectures exist, such as MobileNetV2 (Kaur et al. Citation2021). The proposed model was compared with several models by training them on the same dataset.

MobileNetV2 is a CNN model with 53 layers and 19 blocks (Kaur et al. Citation2021). Comparing several models with the proposed model in terms of accuracy, size, and training speed, we find that MobileNetV2 performs marginally worse and is substantially slower to train, but has a smaller memory footprint. In contrast, our proposed model performs slightly better than the model of Kaur et al. (Citation2021), trains faster, and is considerably smaller.

Conclusion

In conclusion, this project presents a machine learning model for face mask detection. After training, validation, and testing, the model can accurately measure the proportion of people wearing masks in certain cities. COVID-19 is one of the fastest-spreading viruses and poses a threat to human health, global trade, and the economy. Its mutations and rapid spread have made the situation difficult to control. Preventive measures reduce the spread of the virus, and one of the most important is wearing masks in public places.

Therefore, in this project, a deep learning-based approach was applied to detect face masks automatically. The learning models, a convolutional neural network (CNN) and MobileNetV2, were evaluated on a dataset of 3,832 images of individuals with and without masks. The comparative results show that MobileNetV2 achieved 99.21% classification accuracy. The system could also be linked to entrance gates so that only people wearing masks are allowed to enter, and it could be used in shopping malls and universities.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Ahmed, S. M., S. Rushitha, P. Neeraj, Swapna, and V. Kumar Gunjan. 2022. Safety and prevention measure to reduce the spread of corona virus at places of mass human navigation: A precautious way to protect from covid-19. In Modern approaches in machine learning & cognitive science: A walkthrough, ed. V. K. Gunjan and J. M. Zurada, 327–3487. Cham: Springer International Publishing. doi:10.1007/978-3-030-96634-8_30.

- Alrammahi, A. H. I., and M. J. Radif. 2019. Neural networks in business applications. Journal of Physics: Conference Series 1294 (4):042007. doi:10.1088/1742-6596/1294/4/042007.

- Bhagat, S., N. Yadav, J. Shah, H. Dave, S. Swaraj, S. Tripathi, and S. Singh. 2020. Novel corona virus (COVID-19) pandemic: Current status and possible strategies for detection and treatment of the disease. Expert Review of Anti-Infective Therapy 20 (10):1–24. doi:10.1080/14787210.2021.1835469.

- Bhuiyan, M. R., S. Akter Khushbu, and M. Sanzidul Islam. 2020. “A deep learning based assistive system to classify COVID-19 face mask for human safety with YOLOv3.” In 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), 1–5. IEEE.

- Canete, J. J. O. 2021. When expressions of faith in the Philippines becomes a potential COVID-19 ‘superspreader’. Journal of Public Health 43 (2):e366–67. doi:10.1093/pubmed/fdab082.

- Cheng, V. C. C., S.-C. Wong, V. Wai-Man Chuang, S. Yung-Chun So, J. Hon-Kwan Chen, S. Sridhar, K. Kai-Wang To, J. Fuk-Woo Chan, I. Fan-Ngai Hung, H. Pak-Leung, et al. 2020. The role of community-wide wearing of face mask for control of coronavirus disease 2019 (COVID-19) epidemic due to SARS-CoV-2. The Journal of Infection 81 (1):107–14. doi:10.1016/j.jinf.2020.04.024.

- Din, N. U., K. Javed, S. Bae, and J. Yi. 2020. A novel GAN-based network for unmasking of masked face. IEEE Access 8:44276–87. doi:10.1109/ACCESS.2020.2977386.

- Fadhil, S. A., and I. Abbas Marhoon. 2021. The efficiency of alcoholic extract of green algae Chlorella sp. on Leishmania major infection (in vivo). Annals of the Romanian Society for Cell Biology 25 (6):8408–20.

- Fong, S. J., N. Dey, and J. Chaki. 2021. An Introduction to COVID-19. In Artificial Intelligence for Coronavirus Outbreak, 1–22. Springer.

- Gayathri, B., T. Kumar, and V. Kumar Gunjan. 2022. Raspberry Pi based crowd and facemask detection with email and message alert. In Modern approaches in machine learning & cognitive science: A walkthrough, ed. V. K. Gunjan and J. M. Zurada, 369–75. Cham: Springer International Publishing. doi:10.1007/978-3-030-96634-8_35.

- Gupta, P., N. Saxena, M. Sharma, and J. Tripathi. 2018. Deep neural network for human face recognition. International Journal of Engineering and Manufacturing (IJEM) 8 (1):63–71. doi:10.5815/ijem.2018.01.06.

- İlkayçınar, M. 2022. Identification of rice varieties using machine learning algorithms. Journal 28 (2):307–25.

- Kaur, G., R. Sinha, P. Kumar Tiwari, S. Kumar Yadav, P. Pandey, R. Raj, A. Vashisth, and M. Rakhra. 2021. Face mask recognition system using CNN model. Neuroscience Informatics 2 (3):100035. doi:10.1016/j.neuri.2021.100035.

- Koklu, M., I. Cinar, and Y. Selim Taspinar. 2022. CNN-based bi-directional and directional long-short term memory network for determination of face mask. Biomedical Signal Processing and Control 71:103216. doi:10.1016/j.bspc.2021.103216.

- Loey, M., G. Manogaran, M. Hamed N Taha, and N. Eldeen M Khalifa. 2021. A hybrid deep transfer learning model with machine learning methods for face mask detection in the Era of the COVID-19 Pandemic. Measurement 167:108288. doi:10.1016/j.measurement.2020.108288.

- Matuschek, C., F. Moll, H. Fangerau, J. C. Fischer, K. Zänker, M. van Griensven, M. Schneider, D. Kindgen-Milles, W. Trudo Knoefel, A. Lichtenberg, et al. 2020. Face masks: Benefits and risks during the COVID-19 crisis. European Journal of Medical Research 25 (1):1–8. doi:10.1186/s40001-020-00430-5.

- Militante, S. V., and N. V. Dionisio. 2020. “Real-time facemask recognition with alarm system using deep learning.” In 2020 11th IEEE Control and System Graduate Research Colloquium (ICSGRC), 106–10. IEEE.

- Mohd, M., M. U. Q. Abdul, K. Sasidhar, and P. Sai Krishna. 2022. Deep learning-based prediction of NCOVID-19 disease using chest X-ray images (CXRIs). In Contactless healthcare facilitation and commodity delivery management during COVID 19 pandemic, ed. M. A. Chaurasia and S. Mozar, 15–25. Singapore: Springer Singapore. doi:10.1007/978-981-16-5411-4_3.

- “MobileNetV2: The next generation of on-device computer vision networks.” 2018. Google AI Blog. https://ai.googleblog.com/2018/04/mobilenetv2-next-generation-of-on.html.

- Peng, P. W. H., D. T. Wong, D. Bevan, and M. Gardam. 2003. Infection control and anesthesia: Lessons learned from the Toronto SARS outbreak. Canadian Journal of Anesthesia 50 (10):989–97. doi:10.1007/BF03018361.

- Qin, B., and D. Li. 2020. Identifying facemask-wearing condition using image super-resolution with classification network to prevent COVID-19. Sensors 20 (18):5236. doi:10.3390/s20185236.

- Sanjaya, S. A., and S. Adi Rakhmawan. 2020. Face mask detection using MobileNetV2 in the era of COVID-19 pandemic. In 2020 International Conference on Data Analytics for Business and Industry: Way Towards a Sustainable Economy (ICDABI), 1–5.

- Shorten, C., and T. M. Khoshgoftaar. 2019. A survey on image data augmentation for deep learning. Journal of Big Data 6 (1):1–48. doi:10.1186/s40537-019-0197-0.

- Taspinar, Y. S., M. Dogan, I. Cinar, R. Kursun, I. Ali Ozkan, and M. Koklu. 2022. Computer vision classification of dry beans (Phaseolus Vulgaris L.) based on deep transfer learning techniques. European Food Research and Technology 248 (11):2707–25. doi:10.1007/s00217-022-04080-1.

- Tom, L., Y. Liu, M. Li, X. Qian, and S. Y. Dai. 2020. Mask or no mask for COVID-19: A public health and market study. PloS One 15 (8):e0237691. doi:10.1371/journal.pone.0237691.

- Unal, Y., Y. Selim Taspinar, I. Cinar, R. Kursun, and M. Koklu. 2022. Application of pre-trained deep convolutional neural networks for coffee beans species detection. Food Analytical Methods 15 (12):3232–43. doi:10.1007/s12161-022-02362-8.

- Vaishya, R., M. Javaid, I. Haleem Khan, and A. Haleem. 2020. Artificial Intelligence (AI) applications for COVID-19 Pandemic. Diabetes & Metabolic Syndrome: Clinical Research & Reviews 14 (4):337–39. doi:10.1016/j.dsx.2020.04.012.

- Venkateswarlu, I. B., J. Kakarla, and S. Prakash. 2020. “Face mask detection using mobilenet and global pooling block.” In 2020 IEEE 4th Conference on Information & Communication Technology (CICT), 1–5. doi:10.1109/CICT51604.2020.9312083.

- Wang, W., Y. Li, T. Zou, X. Wang, J. You, and Y. Luo. 2020. A novel image classification approach via dense-mobilenet models. Mobile Information Systems 2020:1–8. doi:10.1155/2020/7602384.

- Xiao, Y., and M. Estee Torok. 2020. Taking the right measures to control COVID-19. The Lancet Infectious Diseases 20 (5):523–24. doi:10.1016/S1473-3099(20)30152-3.

- Yadav, S. 2020. Deep learning based safe social distancing and face mask detection in public areas for covid-19 safety guidelines adherence. International Journal for Research in Applied Science and Engineering Technology 8 (7):1368–75. doi:10.22214/ijraset.2020.30560.