ABSTRACT

This paper presents a machine-learning-based method for evaluating the internal value of talent in any organization and for evaluating the salary criteria. The study undertakes the design and development of a salary predictor, based on artificial intelligence technologies, to help determine the internal value of employees and guarantee internal equity in the organization. The aim of the study is to achieve internal equity, which is a critical element that directly affects employees’ motivation. We implemented and validated the method with 130 employees and more than 70 talent acquisition cases in a Basque technology research organization during 2021 and 2022. The proposed method is based on statistical data assessment and machine-learning-based regression. We found that while most organizations have established variables for job evaluation as well as salary increments for staff according to their contribution to the organization, only a few employ tools to support equitable internal compensation. This study presents a successful real case of artificial intelligence applications where machine learning techniques help managers make the most equitable and least biased salary decisions possible, based on data.

Introduction

In current times, knowledge-intensive organizations that base their business on highly qualified professionals and digital profiles need to have a highly productive human resources (HR) department, given the lack of talent in the market and high staff turnover. In this regard, these departments need to introduce technology into their processes and automate their organizational tasks to increase agility in processes such as recruitment, career development, performance evaluations, training, and employee compensation management (Sipahi and Artantaş 2022).

Artificial intelligence (AI) has aspects applicable to different disciplines and sectors, including HR processes (Somayya, Holmukhe, and Kumar Jaiswal 2019), and provides tools to help in decision making. Important challenges, such as evaluating the value of talent in an organization and achieving internal equity in the salary aspect, are achievable thanks to AI and machine-learning technologies. That is, crucial aspects for the organization can be addressed using AI approaches to help employees and the organization achieve better performance (Sowa and Przegalinska 2020).

Pay equity is a critical issue for organizations. This is confirmed by existing literature that aims to quantify and create fair tools to assess human resources. Given the latest European Commission legislation regarding gender equality (European Commission 2014, 2021), equity is a critical element that directly affects the motivation of the staff (Acker 2006; Ng and Sears 2017; Ugarte and Rubery 2021; Zhu et al. 2022). However, it is difficult to achieve equity when the decision-making parties do not have adequate tools to facilitate equitable decisions over multivariate data.

Furthermore, employee motivation and its effect on superiors may not be based on objective variables of performance but instead on vital or current needs, or even on biased comparisons between coworkers (Bobadilla and Gilbert 2017; Litano and Major 2016). As such, those in charge of making salary decisions and the organizational staff face cognitive bias and variance on issues related to internal equity.

This study presents a common organizational problem and proposes a solution that employs data science and machine learning tools. It consists of a methodology and a tool co-designed and validated by data scientists and HR practitioners (Vassilopoulou et al. 2022) to help standardize the salary proposal for new talent, as well as the annual salary increments of current employees. It does so by analyzing existing data and using a machine learning method as a salary predictor to deal with multivariate information and to decrease human cognitive bias and machine bias, thereby reducing the discrepancies associated with subjective variables.

The contributions of this study are as follows: it develops a predictor that mitigates human clinical prediction errors using statistical prediction methods as identified by Kahneman and Meehl (Kahneman 2013; Meehl 2013), it validates determinant variables used in salaries for hundreds of employees, and it presents evidence of the use and consequences of AI in HR practice demonstrating a successful real case. Consequently, the article contributes to the realization of the optimistic vision of the future, where AI improves the efficiency and fairness of HR management (Charlwood and Guenole 2022).

Background

To mitigate the risks posed by human predictive errors (Daniel, Sibony, and Sunstein 2021) and machine bias (Hutchinson and Mitchell 2019), this study builds on the existing literature on pay equity, on the main data science methods involved, and on the problem statement explained in the previous section.

Salary Decision Systems

The concern of employers and employees about salary decision systems has been around for many years. This concern was exacerbated by pay disparities found in several salary studies relating to the gender pay gap, which led to the formulation of equality laws and regulations in various countries. Most recent studies match pay equity with employee performance evaluation and focus on employee enhancement and productivity based on employee performance, which improves their salaries (Aghdaie, Ansari, and Amini Filabadi 2020; Chikwariro, Bussin, and De Braine 2021; Loyarte-López et al. 2020; Reddy 2020). These studies do not treat gender issues as their cornerstone, since their purpose is to analyze how employee performance can positively affect salary and motivation and what kind of extrinsic or intrinsic motivation impacts performance. In the existing literature, there are articles that expressly study gender differences in salaries in certain sectors such as medicine (Kapoor et al. 2017; Mensah et al. 2020; Popovici et al. 2021; Wiler et al. 2021), surgery (Sanfey et al. 2017), services industry (Kronberg 2020), industry (Goraus, Tyrowicz, and van der Velde 2017), physician collective (Dan et al. 2021; Hayes, Noseworthy, and Farrugia 2020), higher education (Taylor et al. 2020), and banking sector (Tianyi, Jiang, and Yuan 2020). These kinds of studies attempt to promote equal chances for women and, after exhaustive analysis, propose structural and individual solutions to achieve equality not only in terms of salary but also in terms of promotion.

Few studies include automation-based assessments. Most of the studies are based on statistical analyses and human resources practices. A recent article decomposes the gender wage gap using a LASSO estimator (Böheim and Stöllinger 2021). This estimator is suited to selecting among a large number of explanatory variables in wage regressions for a decomposition of the gender wage gap. After reviewing existing literature, we found that studies that validate machine-learning tools and consider real organizations and salary decision-making processes are scarce.

Existing studies provide different approaches to improving pay equity. Although there has been more activity recently in HR Management (HRM) through technological tools, this study contributes to the literature by providing a data science approach to developing a salary assessment methodology to validate salary determinants or factors. Moreover, the theories put forward by Meehl (2013) and Kahneman (2013) are a qualitative leap both in literature and organizational practice. As Meehl (2013) demonstrated in his analysis of clinical decision making, mechanical prediction achieved through decision rules that determine the valid criteria for decision making tends to be more accurate than the expert judgment of clinicians. Likewise, in his Nobel prize-winning research, Kahneman (2013) demonstrated that human beings are prone to big prediction errors that can be overcome by using algorithmic approaches.

Bias in HR Algorithms

Concern exists around the impact of AI in the field of HRM. Both positive and negative visions of the future are likely to coexist (Charlwood and Guenole 2022). Studies to prevent negative influences of AI in HR practices exist, such as Vassilopoulou et al. (2022). They examine more than 10,000 manuscripts on HRM, inequality, bias, diversity, discrimination, and algorithm keywords. Finally, after an exhaustive search, 60 papers focusing on AI bias in HR practices as the cornerstone theme were selected for this study. They conclude that there are five ways through which HR algorithms can influence inequalities in organizations: programmed for bias, proxies, algorithmic specification of fit, segregation for individuals, and technical design. To mitigate these biases, they also develop a bias proofing methodology for algorithmic hygiene for HR professionals to reinforce and consolidate HR practices.

According to the positive vision, AI (Data Science and Machine Learning) can help create methods and tools that complement human reasoning and improve decision-making in various fields. The management field is in dire need of these technologies because decisions made by managers are mostly complex and multidimensional, affecting the efficiency and efficacy of the organization, as well as its work environment. Current data science methodologies (Martinez, Viles, and Olaizola 2021) can help managers select the appropriate criteria and implement good-performing machine-learning tools to improve internal equity and transparency (Viroonluecha and Kaewkiriya 2018).

AI has started transforming the world of work (Heath 2019; Sipahi and Artantaş 2022; Spencer 2017) and will continue to do so. It can have a dramatically positive effect in terms of efficiency and fairness, or it can provoke unforeseen or negative consequences when it is finally implemented and used.

This study contributes to the literature in terms of exploring a method designed to mitigate conscious and unconscious biases (both human and machine biases) in the decision-making process and validation with actual results in a research technology organization. A technological tool (a salary predictor) co-designed by data scientists and HR Managers was developed. According to the contribution of practitioners, this study represents a successful real case of AI, where machine-learning techniques help managers make the most equitable and least biased salary decisions possible, based on real data and facts.

The predictor is used to negotiate salaries with new talent acquisitions and as a basis to decide salary increases.

Validating Organization

Data validation is executed on a research technology organization located in the Basque Country of Spain. Its main business activity is R&D aimed at enhancing the innovative performance of industry and society. The organization is a nonprofit foundation with different research outputs, ranging from basic research to experimental prototype development (technology readiness levels 3–7).

Research Methodology

The exploration carried out in this study consists of a contextual analysis (Fantaw et al. 2020). In this case, the incidence of each variable mentioned in Table 2 in the final result (salary) of each researcher is analyzed. This analysis makes the salary predictor much more precise than the classic job evaluation methodology because its talent is quoted.

The methodological sequence applied and the flow of steps are as follows:

Data collection

Validation method

Salary predictor design requirements

Prediction model: training process

Salary policy assessment

Results

Data Collection

The personnel of the organization are researchers, 40% of whom hold doctoral degrees, working in a variety of technology domains. This research technology organization increased its employees by 75.43% in the last 5 years, including the departure of 86 employees (see Table 1). In this sense, staff movement and salary reviews are very frequent and require a considerable amount of time.

Table 1. Employee turnover and increase during 5 years.

The performance-measurement variables for researchers are based on objective criteria such as scientific publications, projects managed, patents achieved, and degrees (see Table 2) (Loyarte-López et al. 2020, 2020). Consequently, the validation and examples developed in this study are based on this organization. However, this method is replicable in other types of organizations with different variables and criteria and a data-driven HRM culture (Lin et al. 2022).

There are four researcher categories according to “seniority”: principal researcher, senior researcher, staff researcher, and junior researcher. Each category has its minimum requirements and promotion merits. These variables are included in Table 2. In conclusion, the salary determinant variables and the variables that influence the performance evaluation and career development of each researcher fully match, as they are the same variables.

The data was collected in January 2021. At that moment the organization had 131 researchers: 5 principal researchers, 23 senior researchers, 46 staff researchers, and 56 junior researchers. Of these 131 researchers, 76 had assessment data available because their performance was evaluated in the previous year (these 76 researchers constituted the sample used as the training dataset). The data collected was enough for the research, given that all the researchers were distributed in four different categories with the same parameters. They are all very similar.

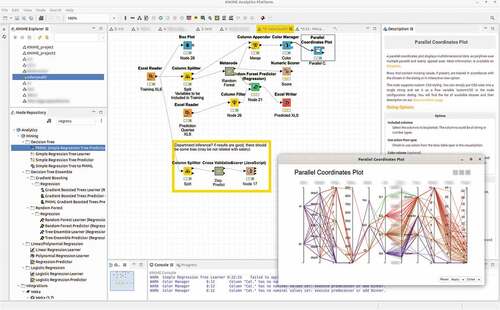

Data processing was carried out through the KNIME program. While most machine learning tools require programming skills, some tools such as KNIME (Berthold et al. 2006) allow users to visually prepare (ETL: extract, transform, load), train, validate, and plot machine learning models and prediction results. Therefore, the methods described in this paper were implemented on KNIME (see ).

Method

The proposed method is structured in two main phases (). The first one consists of the assessment of data. Salary information is analyzed to assess whether the value and equity criteria established by the organization are reflected in the data. Several statistical and machine-learning tests can be used for this validation. Basic statistical measurements such as mean/variance and median, or Student’s t-test, are hard to apply because multiple input variable values differ across targeted groups such as gender, nationality, or department. However, visual analytics techniques together with dimensionality-reduction techniques can help observe coherence in data and identify exceptions or anomalies as outliers. Moreover, machine-learning methods can be used to validate or refute hypotheses (e.g., the gender bias hypothesis).

Once data quality and coherence (against bad policies and biases) are ensured by preprocessing and evaluating input data, we apply a method supported by a machine-learning-based predictor for the process of hiring talent in any organization (). Personnel suitable for the position are selected based on the variables used for performance evaluation and the talent criteria used to verify these variables. The objective analysis is combined with subjective information obtained during the interview, where subjective and negotiable variables are considered. At this point, a regressor is introduced to obtain a reference value free of any human bias that is used as the baseline for the final decision. This way, the machine-learning-based regressor acts as a decision support system.

Moreover, the same method can be used for salary assessment by using the predictor to identify the coherence inherent in the salary-related data and fix potential deviations.

Salary Predictor Design Requirements

The current plethora of prediction methods requires clear design criteria and a method that helps mitigate human and machine bias. The most suitable criteria must be selected for these employees (researchers). As the prediction target in our case is a numeric value (salary), we selected methods oriented to regression tasks. Furthermore, as input data can include numerical and categorical data and the training dataset does not contain a large number of samples (around 130 samples), the selected methods must converge rapidly. In our case, the dataset is composed of 11 variables, and 10-fold cross-validation is used to validate that the prediction model converges to an accurate bias-variance balance.
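The cross-validation step described above can be illustrated with a minimal, standard-library-only sketch. It is not the KNIME workflow used in the study: the per-category mean model, the helper names, and the toy salaries are all hypothetical and serve only to show how 10 held-out folds yield 10 error estimates whose spread hints at the variance of the predictor.

```python
import random
from statistics import mean

def k_fold_indices(n_samples, k=10, seed=0):
    """Shuffle sample indices and split them into k nearly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent."""
    return 100.0 * mean(abs(t - p) / abs(t) for t, p in zip(y_true, y_pred))

def cross_validate(X, y, fit, predict, k=10, seed=0):
    """Return the MAPE obtained on each of the k held-out folds."""
    scores = []
    for fold in k_fold_indices(len(y), k, seed):
        held_out = set(fold)
        train = [i for i in range(len(y)) if i not in held_out]
        model = fit([X[i] for i in train], [y[i] for i in train])
        preds = [predict(model, X[i]) for i in fold]
        scores.append(mape([y[i] for i in fold], preds))
    return scores

# Hypothetical stand-in model: predict the mean training salary per category.
def fit_category_mean(X, y):
    groups = {}
    for row, salary in zip(X, y):
        groups.setdefault(row["category"], []).append(salary)
    return {"by_category": {c: mean(v) for c, v in groups.items()},
            "overall": mean(y)}

def predict_category_mean(model, row):
    return model["by_category"].get(row["category"], model["overall"])

# Synthetic toy data (NOT the organization's data): 40 samples, 4 categories.
rng = random.Random(42)
base = {"junior": 30000, "staff": 38000, "senior": 47000, "principal": 58000}
X = [{"category": c} for c in base for _ in range(10)]
y = [base[row["category"]] * rng.uniform(0.95, 1.05) for row in X]

fold_scores = cross_validate(X, y, fit_category_mean, predict_category_mean, k=10)
print(f"mean MAPE: {mean(fold_scores):.2f}%")
print(f"fold spread: {max(fold_scores) - min(fold_scores):.2f} points")
```

A small spread between folds suggests that the prediction is not overly sensitive to which samples were used for training, which is the property the design requirements emphasize.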

Regarding the bias/variance tradeoff (Belkin et al. 2019), in this case, low variance is a more relevant aspect as the target (salary) should not show high sensitivity to small fluctuations in input data.
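For reference, the tradeoff can be written as the standard decomposition of the expected squared error. The notation is an assumption introduced here, not taken from the study: the salary is modeled as $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and $\hat{f}$ is the predictor trained on a random sample.

```latex
\mathbb{E}\!\left[\big(y - \hat{f}(x)\big)^2\right]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\!\left[\big(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\big)^2\right]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

Prioritizing the variance term corresponds to preferring predictors whose output changes little when the training sample or the inputs fluctuate slightly.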

The predictor should be co-designed and co-created by HR practitioners and data scientists so that it combines both types of knowledge effectively, with the aim of safeguarding ethical values regarding the importance of human dignity and justice (Raisch and Krakowski 2021; van den Broek, Sergeeva, and Huysman Vrije 2021). In this process, the assessment of the predictor is also very relevant to determine whether historical data contains any biases.

According to Vassilopoulou et al. (2022), bias proofing for algorithmic hygiene for HR professionals should also be done to ensure compliance with the laws and social justice requirements and to achieve a machine-bias-free predictor. Our predictor should comply with the AI Act of the European Parliament (European Parliament and the Council 2021).

The last requirement is that, to understand the influence of each factor and to assess the fairness of the model, the method used should be interpretable. This is especially relevant to provide valuable information about how each individual case should improve and check the behavior of the model. We could verify whether the variables identified by the model as the most relevant are the ones that the organization wants to foster.

Prediction Model

Different regression models were tested, including linear regression, ridge regression, Lasso regression, SVM, gradient boosting, random forest, neural networks, Bayesian ridge, AdaBoost, and KNN. In the case of the recruitment dataset, random forest (RF) (Breiman 2001) is the only model that has consistently provided a mean absolute percentage error below 4% () after performing random 10-fold cross-validation experiments, while the annual salary review dataset is better predicted using a gradient boosting regressor (GBR) (Friedman 2001).
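For readers unfamiliar with the metric, the mean absolute percentage error over $n$ samples is commonly defined as follows, where $y_i$ is the actual salary and $\hat{y}_i$ the predicted one (symbols assumed here for illustration):

```latex
\mathrm{MAPE} = \frac{100}{n} \sum_{i=1}^{n} \left| \frac{y_i - \hat{y}_i}{y_i} \right|
```

As a purely hypothetical illustration, a prediction of 41,000 for an actual salary of 40,000 contributes $|40000 - 41000| / 40000 = 2.5\%$, which would sit within a 4% average error.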

Table 2. Training and assessment variables.

Table 3. Training Performance.

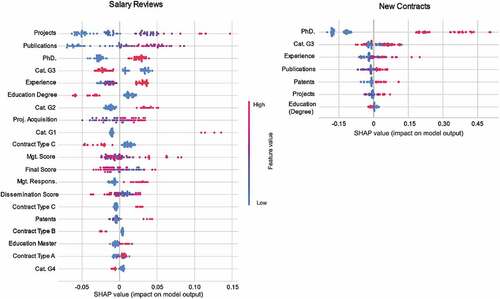

All the requirements established in the previous section and the good performance scores led us to select RF as the regression method for recruitment and GBR for salary review. Both methods offer relatively good explainability (the corresponding figure shows the variable importance according to the predictors), tend to keep low variance, and can handle different input data types (numeric and categorical). However, it is important to note that a similar systematic comparative benchmark of regression methods should be applied to any new dataset.

Training Process

We used the salary database of the organization to build two predictors: one to calculate the employee salary in the recruitment process and the other to calculate annual salary increases, considering performance evaluations from recent years (three periods, for instance). We used the variables included in the performance measurement system designed and developed by the research technology organization.

The accuracy results of the two models (annual salary review where the performance index is calculated based on internal KPIs and recruitment dataset, where the performance index cannot be obtained and therefore is removed from the training) are shown in , and training performance statistical data is presented in .

The training dataset can be improved by increasing the internal coherence of data. In this sense, outliers and samples that are inconsistent with the internal policies must be removed. This task was performed during the assessment process. Moreover, feature space can be enriched by creating synthetic data according to the rules and criteria of the organization.

Salary Policy Assessment

The goal of the salary assessment process is to ensure that salary decisions are taken based on general policies that promote the goals of the organization (fairness, equity, performance, etc.). To mitigate human bias, we propose the use of machine-learning methods that extend the more classical statistical methods (A/B tests, Student’s t-test, null hypothesis, etc.). Our proposed approach includes three main strategies:

Visual analysis by dimensionality-reduction techniques

Explainability analysis of the prediction model

Hypothesis testing by changing the input variables of the prediction model

Visual Analysis by Dimensionality Reduction

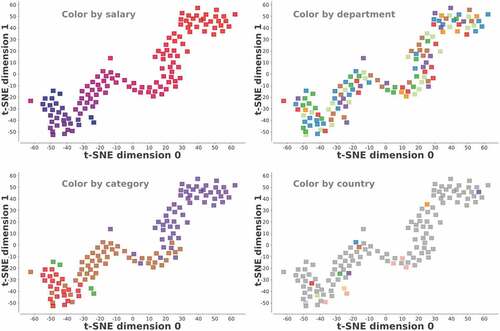

Visual cluster analysis can show how data are spread in feature space. We experimented with the t-SNE method (Van Der Maaten and Hinton 2008) on our dataset and found a continuous path along which categories and salaries evolve. Other features such as sex, origin, and department were randomly distributed. The corresponding figure shows some examples of t-SNE representations where different criteria are shown as color codes to verify whether clusters or patterns apply.

As observed in the t-SNE representations, while salary and category follow a clear pattern where even clusters can be visually distinguished, other aspects such as department or country of origin are randomly distributed. In other words, each cluster is represented by one color, and therefore, it can be visualized how salary and category follow a color order while department and country are fuzzy.

Explainability

Even if models such as random forest can provide variable importance inherent to the model used, other explainability methods such as SHAP provide a deeper insight regarding the way these variables influence prediction. The corresponding figure shows the SHAP values, where Projects and Publications appear as the main factors for salary reviews. Even if the impact of Category is also relevant, this variable has a strong dependence on Education and Performance Scores. In the case of the recruitment dataset, PhD is a highly discriminant variable, and then Experience, Publications, and Projects show a similar impact. As with the salary reviews dataset, Category reflects a direct strong effect on salary but depends on the rest of the variables. Aspects such as Country of Origin, Gender, or Department are below the threshold. Performance index scores have less relevance because they have been conditioned by the variables that already show a big influence on the final prediction.
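To make the idea of variable relevance concrete, the sketch below uses permutation importance, a simpler model-agnostic technique that is swapped in here for illustration and is not the SHAP analysis reported in the study. The model `toy_predict`, the feature names, and the data are hypothetical; the point is only that a feature the model ignores (here `department`) receives zero importance.

```python
import random
from statistics import mean

def mean_abs_error(predict, X, y):
    """Mean absolute error of a prediction function over a dataset."""
    return mean(abs(predict(row) - target) for row, target in zip(X, y))

def permutation_importance(predict, X, y, features, n_repeats=20, seed=0):
    """Average increase in MAE when one feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_abs_error(predict, X, y)
    importances = {}
    for feat in features:
        increases = []
        for _ in range(n_repeats):
            column = [row[feat] for row in X]
            rng.shuffle(column)  # break the link between this feature and y
            shuffled = [{**row, feat: v} for row, v in zip(X, column)]
            increases.append(mean_abs_error(predict, shuffled, y) - baseline)
        importances[feat] = mean(increases)
    return importances

# Hypothetical fitted model: salary depends on projects and publications only.
def toy_predict(row):
    return 30000 + 1500 * row["projects"] + 400 * row["publications"]

# Synthetic toy data (NOT the organization's data).
rng = random.Random(1)
X = [{"projects": rng.randint(0, 8),
      "publications": rng.randint(0, 20),
      "department": rng.randint(0, 6)} for _ in range(60)]
y = [toy_predict(row) for row in X]

scores = permutation_importance(
    toy_predict, X, y, ["projects", "publications", "department"])
for feat, value in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{feat:<13} {value:10.1f}")
```

Unlike SHAP, this measure gives one global score per feature and does not show the direction of each effect, so it is best read as a coarse sanity check.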

Hypothesis Testing

Finally, we propose the use of hypothesis testing as the third assessment method. In this case, the salary is used as input data and variables such as gender, department, or country of origin (which are supposed to be unrelated to salary-related decisions) are transformed into the target of the prediction method. If the accuracy of the predictor is not clearly better than the random baseline, it can be assumed that this feature is not relevant to salary-related decisions.
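The reversed prediction described above can be sketched with the standard library only. The classifier (leave-one-out 1-nearest-neighbour on salary), the function names, and the synthetic data are assumptions made for illustration and do not reproduce the classifiers used in the study.

```python
import random

def loo_nearest_neighbour_accuracy(salaries, labels):
    """Leave-one-out accuracy of a 1-nearest-neighbour classifier on salary."""
    hits = 0
    for i, (salary, label) in enumerate(zip(salaries, labels)):
        # Nearest *other* sample by absolute salary difference.
        j = min((k for k in range(len(salaries)) if k != i),
                key=lambda k: abs(salaries[k] - salary))
        hits += labels[j] == label
    return hits / len(salaries)

def chance_baseline(labels):
    """Accuracy of uniform random guessing over the observed classes."""
    return 1.0 / len(set(labels))

# Synthetic toy data (NOT the organization's data): salary is tied to
# category by construction and unrelated to gender.
rng = random.Random(0)
base = {"junior": 30000, "staff": 38000, "senior": 47000, "principal": 58000}
categories = [c for c in base for _ in range(15)]
salaries = [base[c] + rng.uniform(-1500, 1500) for c in categories]
genders = [rng.choice(["F", "M"]) for _ in categories]

category_accuracy = loo_nearest_neighbour_accuracy(salaries, categories)
gender_accuracy = loo_nearest_neighbour_accuracy(salaries, genders)

print(f"category: accuracy={category_accuracy:.2f} "
      f"baseline={chance_baseline(categories):.2f}")
print(f"gender:   accuracy={gender_accuracy:.2f} "
      f"baseline={chance_baseline(genders):.2f}")
```

In this toy setting the category is recovered from salary well above chance, whereas gender accuracy is expected to stay near the baseline; with real data the same comparison would be run inside a proper validation scheme.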

In both the recruitment and salary review datasets, gender class is strongly unbalanced toward men, with the consequent risk of bias. The behavior of tested classifiers in both cases tends to lean toward the majority class. The application of dataset balancing methods (upscaling, downscaling, SMOTE, etc.) can mitigate this effect. Upscaling was used in this case. The predictors are not able to predict women in the datasets (precision is below 0.5 in the salary review dataset and 0.67 in the recruitment dataset, while a random predictor should have a baseline accuracy of 0.5). Similarly, a department predictor has given an accuracy of 0.227 (recruitment) and 0.33 (salary review), while the random baseline would be 0.143.
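As a minimal sketch of the balancing step, and assuming that "upscaling" refers to random oversampling of the minority class, the following duplicates minority rows until every class matches the largest one. The function name, field names, and rows are hypothetical.

```python
import random
from collections import Counter

def oversample_minority(rows, label_key, seed=0):
    """Randomly duplicate rows of smaller classes until all classes
    have as many rows as the largest class."""
    rng = random.Random(seed)
    by_class = {}
    for row in rows:
        by_class.setdefault(row[label_key], []).append(row)
    target = max(len(group) for group in by_class.values())
    balanced = []
    for group in by_class.values():
        balanced.extend(group)
        # Draw with replacement to fill the gap to the majority size.
        balanced.extend(rng.choice(group) for _ in range(target - len(group)))
    return balanced

# Synthetic toy data (NOT the organization's data): 8 men, 2 women.
rows = ([{"gender": "M", "salary": 40000 + i} for i in range(8)]
        + [{"gender": "F", "salary": 41000 + i} for i in range(2)])

balanced = oversample_minority(rows, "gender")
print(Counter(row["gender"] for row in balanced))
```

Oversampling is normally applied to the training portion only, so that duplicated rows do not leak into the data used to measure accuracy.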

If the same approach is applied with a variable that has been identified as relevant (e.g., category) accuracy rises to 0.882 (salary review dataset) and 0.93 (recruitment dataset) while the random baseline would be 0.25 (4 categories), showing that all these results are consistent with the variable relevance information.
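The accuracies quoted above can be compared with their random baselines in a few lines. The class counts are inferred from the stated baselines (0.143 is approximately 1/7 and 0.25 is 1/4) and are therefore an assumption, as is the helper name `lift_over_chance`.

```python
# Accuracies reported in the text; class counts are inferred from the stated
# baselines (0.143 is roughly 1/7, 0.25 is 1/4) and are an assumption.
reported = {
    ("department", "recruitment"): (0.227, 7),
    ("department", "salary review"): (0.33, 7),
    ("category", "salary review"): (0.882, 4),
    ("category", "recruitment"): (0.93, 4),
}

def lift_over_chance(accuracy, n_classes):
    """Ratio between observed accuracy and uniform random guessing."""
    return accuracy / (1.0 / n_classes)

for (target, dataset), (accuracy, n_classes) in reported.items():
    print(f"{target:<10} {dataset:<13} "
          f"baseline={1 / n_classes:.3f} "
          f"lift={lift_over_chance(accuracy, n_classes):.2f}")
```

The ratio is only a descriptive summary; deciding whether a given lift is "clearly better" than chance would still require a significance test or repeated validation runs.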

Results

The main result is that the salary predictor worked. The three salary assessment strategies show that the predictor is sensitive to the salary determinant variables indicated in Table 2. If one of these variables changes, it affects salary. HR practitioners of the organization carry out double checks to verify that the results produced by the predictor are correct (guaranteeing internal equity).

Discussion

The salary predictor developed through data science explained in this paper was used as a tool that provides a salary reference in 130 employee cases, 70 acquisitions in 2021, and another 200 cases of salary increases in 2022. Even if the dataset size might seem quite limited, the created feature space satisfies the needs of the employed methods for prediction and assessment in terms of predictability, consistency, and explainability. In this section, we structure the reporting of the empirical results from a statistical, theoretical, and practical perspective.

Statistically, the following findings have been obtained during the process:

Consistency in 70 talent acquisition processes: We found that salary estimates by the tool are appropriate not only to offer a salary and start a negotiation but also to know the range within which the organization can move in each negotiation. The data provided by the predictor is scrutinized by managers to assess whether the salary information is consistent with that of similar profiles.

Consistency in salary review/assessment in 130 cases: We analyzed all cases to visualize salary and merits. This analysis has made it possible to carry out an individualized study of all the cases and make decisions to fix those cases in which a deviation was identified by the assessment process (deviations are identified as outliers in the dimensionality reduction visual representation and tend to have the highest errors when compared with the random forest regressor predictions).
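The deviation check mentioned in the last finding can be sketched as a simple ranking of cases by relative error between actual and predicted salary. The 4% threshold, the record fields, and the figures are hypothetical choices for illustration, not values used by the organization.

```python
def flag_deviations(records, threshold_pct=4.0):
    """Return records whose actual salary deviates from the prediction by
    more than threshold_pct percent, largest absolute deviation first."""
    flagged = []
    for rec in records:
        error_pct = 100.0 * (rec["actual"] - rec["predicted"]) / rec["predicted"]
        if abs(error_pct) > threshold_pct:
            flagged.append({**rec, "error_pct": round(error_pct, 2)})
    return sorted(flagged, key=lambda r: abs(r["error_pct"]), reverse=True)

# Synthetic toy cases (NOT the organization's data).
records = [
    {"id": "E01", "actual": 40000, "predicted": 40500},
    {"id": "E02", "actual": 35000, "predicted": 38000},
    {"id": "E03", "actual": 52000, "predicted": 48000},
]

for rec in flag_deviations(records):
    print(rec["id"], rec["error_pct"])
# prints:
# E03 8.33
# E02 -7.89
```

Flagged cases are candidates for individual review by HR practitioners rather than automatic corrections, in line with the decision-support role of the predictor.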

Theoretically, this research contributes to the following:

The predictor can mitigate conscious and unconscious bias as it is based on objective data. Consequently, the resulting salary is more acceptable at the first instance to the employee than it is when determinant variables are subjective.

This methodology is valid not only to mitigate the gender gap but also to mitigate other diversity gap factors (including internal variables such as different departments).

As Charlwood and Guenole (2022) conclude in their study, misconceptions about AI also exist. There are typical objections like “HR data is bad data,” “AI reproduces discriminatory behaviors produced by human biases,” or “AI are only functionally black boxes” that do not hold in the case explained in this article. Here, HR data is good data because it is objective-based data, not based on opinions or subjective judgments, and an assessment has been implemented so as not to reproduce any previous mistake (every outlier was exhaustively studied).

This is a real AI case, not a hypothetical scenario.

Finally, practically, the main lessons learned are the following:

It is very important to develop key determinant factors or variables for salaries in an organization, and they need to be transparent and acceptable for employees. Employees can compare themselves with each other and therefore, organizations must frame such comparisons in terms of key determinant variables. The predictor works properly in terms of consistency between the factors “results per employee” and “salary amount.”

A data-driven HRM culture is crucial for the organization to start working on AI in the HR field and to achieve acceptance by employees.

Employees are more than a number of achievements and indicators, as it could happen that some intangible assets or variables are ignored by the predictor. Consequently, the predictor should be a tool to help in decision making rather than making decisions by itself. The predictor should be reviewed every year to evaluate whether it is working properly and to improve it (preventive maintenance).

Predictor designers and creation teams are crucial to achieving a successful tool. Their knowledge, collaboration, and good understanding as well as their commitment to developing a fair predictor are very important.

There are some limitations in the research validation of this study:

It has been validated through casuistry by employees of an organization with previous literature in the objective standardization of the professional career and performance of its researchers. Its generalization to other organizations or companies might require adaptations in the variable selection and assessment process.

This study could offer a more exhaustive state-of-the-art prediction model, but it has been focused on practical and replicable work. The aim of this article is to encourage and help other organizations to develop salary predictors to comply not only with current laws but also with the commitment to internal equity. Moreover, it contributes a new solution to frequently studied salary audit gaps and bias to the scientific community.

We consider that our methodology can be successfully extended to other organizations. The presented method can lead organizations to more objective decision making and higher accomplishments of established salary policies. This method might be especially useful when efforts and merits are difficult to measure, as is the case of R&D organizations and in medium-large organizations when salary decision-makers cannot know the performance of each employee.

For future work, we intend to review salary determinants to include other specific items to improve the model and test the acceptance of the model in other organizations. AI is a reality; therefore, thinking about how AI could improve our work is the first step toward addressing emerging problems in which AI can bring people with different skills, such as scientists and practitioners, to work together for mutual benefit.

Conclusion

This study demonstrates how to develop a method based on artificial intelligence for deciding the internal value of talent in an organization and for evaluating the salary criteria. The presented method helps to minimize the subjectivity of decision-making bodies, ensures consistency in internal equity throughout the organization and over time, and improves the objectiveness and fairness of organizations in talent management.

As Thomas Aquinas stated, “There will be the same equality between persons and between things in such a way that, as things are related to one another, so are persons. If they are not equal they will not have equal shares, and from this source quarrels and complaints will arise, when either persons who are equal do not receive equal shares in distribution, or persons who are not equal do receive equal shares.” (Thomas 1993). This study contributes to making decisions that determine the salary of employees based on their merits and abilities, as well as on the organizational requirements.

To sum up, the method, and therefore the predictor, must be faithful to the variables and policies to which it responds. Meanwhile, decision-making bodies should respect the results of the predictor that responds to the designed system. When technology and humans follow the frameworks and systems designed and implemented, subjectivity is mitigated and effectiveness and productivity increase.

Acknowledgments

We are grateful to the Basque Government for its funding through the Emaitek Programs.

Disclosure Statement

No potential conflict of interest was reported by the author(s).

Data Availability Statement

The data that support the findings of this study are held by the validating organization.

References

- Acker, J. 2006. Inequality regimes. Gender & Society 20 (4):441–64. doi:10.1177/0891243206289499.

- Aghdaie, S. F. A., A. Ansari, and M. Amini Filabadi. 2020. Comparing the effects of brand equity, job security and salary and wages on the efficiency of human resources from the perspective of the staff. International Journal of Procurement Management 13 (3):397–418.

- Belkin, M., D. Hsu, S. Ma, and S. Mandal. 2019. Reconciling modern machine-learning practice and the classical bias–variance trade-off. Proceedings of the National Academy of Sciences of the United States of America 116 (32):15849–54. doi:10.1073/pnas.1903070116.

- Berthold, M. R., N. Cebron, F. Dill, G. Di Fatta, T. R. Gabriel, F. Georg, T. Meinl, P. Ohl, C. Sieb, and B. Wiswedel. 2006. KNIME: The Konstanz information miner. 4th International Industrial Simulation Conference 2006, ISC 2006, 58–61. EUROSIS. doi:10.1145/1656274.1656280.

- Bobadilla, N., and P. Gilbert. 2017. Managing scientific and technical experts in R&D: Beyond tensions, conflicting logics and orders of worth. R&D Management 47 (2):223–35. doi:10.1111/radm.12189.

- Böheim, R., and P. Stöllinger. 2021. Decomposition of the gender wage gap using the LASSO estimator. Applied Economics Letters 28 (10):817–28. doi:10.1080/13504851.2020.1782332.

- Charlwood, A., and N. Guenole. 2022. Can HR adapt to the paradoxes of artificial intelligence? Human Resource Management Journal (January). doi:10.1111/1748-8583.12433.

- Chikwariro, S., M. Bussin, and R. De Braine. 2021. Reframing performance management praxis at the Harare city council. SA Journal of Human Resource Management 19 (January). doi:10.4102/sajhrm.v19i0.1438.

- Kahneman, D., O. Sibony, and C. R. Sunstein. 2021. Noise: A flaw in human judgment. London: Little, Brown Spark.

- Dan, Z., M. Liao, P. Wang, and H.-C. Chiu. 2021. Development and validation of an instrument in job evaluation factors of physicians in public hospitals in Beijing, China. Edited by Ritesh G. Menezes. PLOS ONE 16 (1):e0244584. doi:10.1371/journal.pone.0244584.

- European Commission. 2014. Commission recommendation of 7 March 2014 on strengthening the principle of equal pay between men and women through transparency. Brussels.

- European Commission, Directorate-General for Research and Innovation. 2021. Horizon Europe Guidance on Gender Equality Plans. doi:10.2777/876509.

- European Parliament and the Council. 2021. Laying down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts. https://eur-lex.europa.eu/legal-content/EN/TXT/DOC/?uri=CELEX:52021PC0206&from=ES.

- Fantaw, A., S. K. Gupta, P. Reznik Nadia, E. Sipahi, and S. D. F. Teston. 2020. Analysis of the effect of compensation on twitter based on job satisfaction on sustainable development of employees using data mining methods. Talent Development & Excellence 12 (3):35.

- Friedman, J. H. 2001. Greedy function approximation: A gradient boosting machine. Annals of Statistics 29 (5):1189–232. doi:10.1214/aos/1013203451.

- Goraus, K., J. Tyrowicz, and L. van der Velde. 2017. Which gender wage gap estimates to trust? A comparative analysis. Review of Income and Wealth 63 (1):118–46. doi:10.1111/roiw.12209.

- Hayes, S. N., J. H. Noseworthy, and G. Farrugia. 2020. A structured compensation plan results in equitable physician compensation. Mayo Clinic Proceedings 95 (1):35–43. doi:10.1016/j.mayocp.2019.09.022.

- Heath, D. R. 2019. Prediction machines: The simple economics of artificial intelligence. Journal of Information Technology Case and Application Research 21 (3–4):163–66. doi:10.1080/15228053.2019.1673511.

- Hutchinson, B., and M. Mitchell. 2019. 50 years of test (un)fairness. Proceedings of the Conference on Fairness, Accountability, and Transparency, 49–58. New York, NY, USA: ACM. doi:10.1145/3287560.3287600.

- Kahneman, D. 2013. Thinking, fast and slow. https://en.wikipedia.org/wiki/Thinking,_Fast_and_Slow.

- Kapoor, N., D. M. Blumenthal, S. E. Smith, I. K. Ip, and R. Khorasani. 2017. Sex differences in radiologist salary in U.S. public medical schools. American Journal of Roentgenology 209 (5):953–58. doi:10.2214/AJR.17.18256.

- Kronberg, A.-K. 2020. Workplace gender pay gaps: Does gender matter less the longer employees stay? Work and Occupations 47 (1):3–43. doi:10.1177/0730888419868748.

- Lin, S., E. Sipahi Döngül, S. Vural Uygun, M. Başaran Öztürk, D. Tran Ngoc Huy, and P. Van Tuan. 2022. Exploring the relationship between abusive management, self-efficacy and organizational performance in the context of human–machine interaction technology and artificial intelligence with the effect of ergonomics. Sustainability 14 (4):1949. doi:10.3390/su14041949.

- Litano, M. L., and D. A. Major. 2016. Facilitating a whole-life approach to career development. Journal of Career Development 43 (1):52–65. doi:10.1177/0894845315569303.

- Loyarte-López, E., I. García-Olaizola, J. Posada, I. Azúa, and J. Flórez. 2020. Sustainable career development for R&D professionals: Applying a career development system in Basque country. International Journal of Innovation Studies 4 (2):40–50. doi:10.1016/j.ijis.2020.03.002.

- Loyarte-López, E., I. García-Olaizola, J. Posada, I. Azúa, and J. Flórez-Esnal. 2020. Enhancing researchers’ performance by building commitment to organizational results. Research-Technology Management 63 (2):46–54. doi:10.1080/08956308.2020.1707010.

- Maaten, L. V. D., and G. Hinton. 2008. Visualizing data using t-SNE. Journal of Machine Learning Research 9. http://jmlr.org/papers/v9/vandermaaten08a.html.

- Martinez, I., E. Viles, and I. G. Olaizola. 2021. Data science methodologies: Current challenges and future approaches. Big Data Research 24 (May):100183. doi:10.1016/j.bdr.2020.100183.

- Meehl, P. E. 2013. Clinical versus statistical prediction: A theoretical analysis and a review of the evidence. Echo Point Books.

- Mensah, M., W. Beeler, L. Rotenstein, R. Jagsi, J. Spetz, E. Linos, and C. Mangurian. 2020. Sex differences in salaries of department chairs at public medical schools. JAMA Internal Medicine 180 (5):789. doi:10.1001/jamainternmed.2019.7540.

- Ng, E. S., and G. J. Sears. 2017. The glass ceiling in context: The influence of CEO gender, recruitment practices and firm internationalisation on the representation of women in management. Human Resource Management Journal 27 (1):133–51. doi:10.1111/1748-8583.12135.

- Popovici, I., M. J. Carvajal, P. Peeples, and S. E. Rabionet. 2021. Disparities in the wage-and-salary earnings, determinants, and distribution of health economics, outcomes research, and market access professionals: An exploratory study. PharmacoEconomics - Open 5 (2):319–29. doi:10.1007/s41669-020-00247-2.

- Raisch, S., and S. Krakowski. 2021. Artificial intelligence and management: The automation–augmentation paradox. Academy of Management Review 46 (1):192–210. doi:10.5465/amr.2018.0072.

- Reddy, V. S. 2020. Impact of compensation on employee performance. IOSR Journal of Humanities and Social Science 25 (9):17–22. www.iosrjournals.org.

- Sanfey, H., M. Crandall, E. Shaughnessy, S. L. Stein, A. Cochran, S. Parangi, and C. Laronga. 2017. Strategies for identifying and closing the gender salary gap in surgery. Journal of the American College of Surgeons 225 (2):333–38. doi:10.1016/j.jamcollsurg.2017.03.018.

- Sipahi, E., and E. Artantaş. 2022. Artificial intelligence in HRM. 1–18. doi:10.4018/978-1-7998-8497-2.ch001.

- Somayya, M., R. M. Holmukhe, and D. Kumar Jaiswal. 2019. The future digital work force: Robotic process automation (RPA). Journal of Information Systems and Technology Management 16 (January):1–17. doi:10.4301/S1807-1775201916001.

- Sowa, K., and A. Przegalinska. 2020. Digital coworker: Human-AI collaboration in work environment, on the example of virtual assistants for management professions. Collaborative innovation networks conference of Digital Transformation of Collaboration, 179–201. Cham: Springer. doi:10.1007/978-3-030-48993-9_13.

- Spencer, D. 2017. Work in and beyond the second machine age: The politics of production and digital technologies. Work, Employment and Society 31 (1):142–52. doi:10.1177/0950017016645716.

- Taylor, L. L., J. N. Lahey, M. I. Beck, and J. E. Froyd. 2020. How to do a salary equity study: With an illustrative example from higher education. Public Personnel Management 49 (1):57–82. doi:10.1177/0091026019845119.

- Thomas, A. 1993. Commentary on Aristotle’s Nicomachean Ethics. Translated by C. I. Litzinger. Notre Dame: Dumb Ox Books. https://www.amazon.com/Commentary-Aristotles-Nicomachean-Ethics-Aristotelian/dp/1883357519.

- Tianyi, M., M. Jiang, and X. Yuan. 2020. Cash salary, inside equity, or inside debt?—The determinants and optimal value of compensation structure in a long-term incentive model of banks. Sustainability 12 (2):666. doi:10.3390/su12020666.

- Ugarte, S. M., and J. Rubery. 2021. Gender pay equity: Exploring the impact of formal, consistent and transparent human resource management practices and information. Human Resource Management Journal 31 (1):242–58. doi:10.1111/1748-8583.12296.

- van den Broek, E., A. Sergeeva, and M. Huysman. 2021. When the machine meets the expert: An ethnography of developing AI for hiring. MIS Quarterly 45 (3):1557–80. doi:10.25300/MISQ/2021/16559.

- Vassilopoulou, J., O. Kyriakidou, M. F. Özbilgin, and D. Groutsis. 2022. Scientism as illusio in HR algorithms: Towards a framework for algorithmic hygiene for bias proofing. Human Resource Management Journal (January). doi:10.1111/1748-8583.12430.

- Viroonluecha, P., and T. Kaewkiriya. 2018. Salary predictor system for Thailand labour workforce using deep learning. 2018 18th International Symposium on Communications and Information Technologies (ISCIT), 473–78. Bangkok, Thailand: IEEE. doi:10.1109/ISCIT.2018.8587998.

- Wiler, J. L., S. K. Wendel, K. Rounds, B. McGowan, and J. Baird. 2021. Salary disparities based on gender in academic emergency medicine leadership. Academic Emergency Medicine 29 (3):286–93. doi:10.1111/acem.14404.

- Zhu, X., F. Lee Cooke, L. Chen, and C. Sun. 2022. How inclusive is workplace gender equality research in the Chinese context? Taking stock and looking ahead. The International Journal of Human Resource Management 33 (1):99–141. doi:10.1080/09585192.2021.1988680.