ABSTRACT

In this paper, we introduce a differential perturbation operator into the grey wolf optimization (GWO) algorithm. The operator uses three randomly selected omega wolves to assist the three leader wolves of the original GWO algorithm, diversifying the solution quality among the feasible omega wolves. Additionally, we use similar values of the GWO control parameters (A and C) for each leader wolf while updating the position of a single omega wolf. This diversification among the omega wolves introduces an element of exploration into the exploitation phase and hence further improves the optimization capability of the GWO algorithm. For comparative performance analysis, the results obtained from the proposed algorithm are compared with those of ten recently proposed meta-heuristic algorithms, namely IAOA, RSA, mGWOA, VWGWO, mGWO, GWO, SCA, JAYA, ALO and WOA, in optimizing 23 unimodal, multimodal and fixed-dimension mathematical benchmark functions. The performance of the proposed algorithm is also tested on 12 data clustering problems using four performance measures: accuracy, precision, F-score and MCC. The superiority of the proposed algorithm in optimizing benchmark functions and in data clustering is statistically verified using the pairwise Wilcoxon signed-rank test and the Friedman and Nemenyi hypothesis tests.

Introduction

Global optimization is a challenging task in mathematics and computer science because of its large number of interdependent applications. Without loss of generality, an optimization problem can be represented as given in Eq. (1):

$$\min_{x \in S} f(x), \quad S \subseteq \mathbb{R}^n \tag{1}$$

Here, $f$ is an objective function defined over the search space $S$, where $S$ covers an n-dimensional space. To be specific, an optimization algorithm can be presented as given in Eq. (2):

$$x_{k+1} = x_k + \lambda_k d_k \tag{2}$$

where $\lambda_k$ is a scalar quantity and $d_k$ is a vector. Eq. (2) reveals that optimization algorithms always search from the current position $x_k$ to the destination $x_{k+1}$ by moving along the direction $d_k$ with a step size given by $\lambda_k$.
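The iterative scheme of Eq. (2), in which each step moves from the current position along a direction scaled by a scalar step size, can be sketched in Python as follows. The quadratic objective, the fixed step size of 0.1 and the helper names (sphere, grad_sphere, iterate) are illustrative assumptions and do not come from the paper; the negative gradient is used as the direction, as in gradient descent.

```python
def sphere(x):
    """Illustrative objective: sum of squares, with its minimum at the origin."""
    return sum(v * v for v in x)


def grad_sphere(x):
    """Gradient of the sphere function."""
    return [2.0 * v for v in x]


def iterate(x0, step_size=0.1, n_steps=50):
    """Repeat x_next = x + step_size * direction, with direction = -gradient."""
    x = list(x0)
    for _ in range(n_steps):
        direction = [-g for g in grad_sphere(x)]
        x = [xi + step_size * di for xi, di in zip(x, direction)]
    return x


x_final = iterate([3.0, -4.0])
print(sphere(x_final))  # far below the starting value sphere([3.0, -4.0]) = 25.0
```

On this convex function the scheme converges from any starting point; the difficulties discussed in the text arise when the objective is multimodal or non-differentiable.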

Considering the above properties, different optimization algorithms use different procedures to select the step size and the search direction. Correspondingly, the performance of each algorithm depends on these two parameters. For example, the Newton-Raphson algorithm uses Newton's steps to select the step size and the direction, whereas the gradient descent algorithm uses negative gradients to select these values in the hope of finding optimal solutions. However, these conventional algorithms suffer from difficulties such as stagnation, dependence on the initial solution and convergence to local optima. To tackle such issues, real-world problems need to be simplified so that they satisfy specific mathematical properties such as continuity, differentiability and convexity. Forcing real-world problems to satisfy such properties is itself a hard problem, as they are often non-differentiable, discontinuous, multimodal and multidimensional (Jamil and Yang 2013). Given these difficulties, the traditional algorithms are often unsuitable for obtaining satisfactory results.

These limitations motivate the search for new optimization algorithms, such as meta-heuristic algorithms, that intelligently and adaptively integrate various procedures for solving complex problems. Although it is often not possible to obtain optimal solutions, it is possible to obtain near-optimal solutions within a satisfactory amount of time. Meta-heuristic algorithms do not require convexity of the objective function and are hence easier to apply to a wide range of optimization problems.

After several years of research in the field of optimization, many meta-heuristic optimization algorithms have been developed, such as Genetic Algorithm (GA) (Abualigah and Alkhrabsheh 2022; Alba and Dorronsoro 2005; Holland 1992; Lamos-Sweeney 2012; Liu, Xindong, and Shen 2011; Maulik and Bandyopadhyay 2000; Saida, Nadjet, and Omar 2014; Xiao et al. 2010; Zeebaree et al. 2017; Zhou, Miao, and Hongjiang 2018), Particle Swarm Optimization (PSO) (Aydilek 2018; Bratton and Kennedy 2007; Das, Abraham, and Konar 2008; X. Hung and Purnawan 2008; Kennedy and Eberhart 1995; der Merwe and Engelbrecht 2003, 2003; Mirjalili, Zaiton Mohd Hashim, and Moradian Sardroudi 2012; Olorunda and Engelbrecht 2008; Rana, Jasola, and Kumar 2010; Van Der Wang, Geng, and Qiao 2014; Zhang et al. 2021), Grey Wolf Optimization (GWO) (Akbari, Rahimnejad, and Andrew Gadsden 2021; Al-Tashi et al. 2019; Al-Tashi, Rais, and Jadid 2018; S. Faris et al. 2018; Gupta and Deep 2019; Kamboj, Bath, and Dhillon 2016; Kapoor et al. 2017; X. Katarya and Prakash Verma 2018; X. Khairuzzaman and Chaudhury 2017; Kumar, Kumar Chhabra, and Kumar 2017; Mirjalili 2015b; Mirjalili, Mohammad Mirjalili, and Lewis 2014a; Singh and Chand Bansal 2022; Teng, Jin-Ling, and Guo 2019; Zhang and Zhou 2015; Zhang et al. 2018, 2021), Sine Cosine Algorithm (SCA) (Mirjalili 2016b), Jaya Algorithm (JAYA) (Rao 2016), Teaching Learning based Optimization (TLBO) (R. V. Rao, Savsani, and Vakharia 2011), Cuckoo Search (CS) (Yang and Deb 2010), Ant Lion Optimization (ALO) (Azizi et al. 2020; Mirjalili 2015a), Whale Optimization Algorithm (WOA) (Chen et al. 2019; Mirjalili and Lewis 2016; Obadina et al. 2022), Bat Algorithm (BA) (Yang 2013; Yang and Hossein Gandomi 2012), Ant Colony Optimization (ACO) (Dorigo 2007), Artificial Bee Colony Optimization (ABC) (Karaboga 2010), Differential Evolution (DE) (Draa, Bouzoubia, and Boukhalfa 2015; Nadimi-Shahraki, Taghian, and Mirjalili 2021; Qin, Ling Huang, and Suganthan 2008), Gravitational Search Algorithm (GSA) (Bansal, Kumar Joshi, and Nagar 2018; Hooda and Prakash Verma 2022; Rashedi, Nezamabadi-Pour, and Saryazdi 2009; Venkateswaran et al. 2022), Moth Flame Optimization (MFO) (Mirjalili 2015c), Multi-Verse Optimizer (MVO) (Seyedali Mirjalili, Mirjalili, and Hatamlou 2016; Abualigah and Alkhrabsheh 2022), Dragonfly Algorithm (DA) (Elkorany et al. 2022; Mirjalili 2016a), Black-hole based Optimization (BBO) (Hatamlou 2013), Grasshopper Optimization algorithm (GOA) (S. Z. Abualigah and Diabat 2020; Mirjalili et al. 2018), Tabu search (TS) (Al-Sultan 1995; Alotaibi 2022; Ghany et al. 2022), Arithmetic Optimization Algorithm (AOA) (Abualigah et al. 2021), Improved Arithmetic Optimization Algorithm (IAOA) (Kaveh and Biabani Hamedani 2022), Reptile Search Algorithm (RSA) (Abualigah et al. 2022), Sand Cat Swarm Optimization (SCSO) (Seyyedabbasi and Kiani 2022), Modified Grey Wolf Optimization Algorithm (mGWOA) (Kar et al. 2022), Modified Grey Wolf Optimization (mGWO) (Mittal, Singh, and Singh Sohi 2016), Variable Weight Grey Wolf Optimization (VWGWO) (Gao and Zhao 2019), Incremental GWO (I-GWO) and Expanded GWO (Ex-GWO) (Seyyedabbasi and Kiani 2021), Improved GWO (Hou et al. 2022), I-GWO (Nadimi-Shahraki, Taghian, and Mirjalili 2021), Multi-Verse Optimizer (MVO) (Mirjalili et al. 2016) and Simulated Annealing (SA) (Kirkpatrick, Daniel Gelatt, and Vecchi 1983; Lee and Perkins 2021; Rutenbar 1989; Selim and Alsultan 1991). These meta-heuristic optimization algorithms are becoming popular among researchers because they (i) require no gradient information, (ii) are conceptually simple and easy to implement, (iii) have high potential to overcome local convergence and (iv) are independent of the problem domain. They are among the most effective optimization algorithms and can often find near-optimal solutions for complex problems. Therefore, many complex optimization problems are solved using meta-heuristic algorithms. In addition, because of the flexibility of their algorithmic steps, researchers are able to improve their performance with minor or major modifications.

These nature-inspired meta-heuristic algorithms can be categorized into (i) swarm intelligence-based (Parpinelli and Lopes 2011), (ii) evolutionary (Thiele et al. 2009), (iii) physics-based (Biswas et al. 2013) and (iv) human-based algorithms (R. V. Rao, Savsani, and Vakharia 2011). Among these, evolutionary algorithms, which are inspired by natural phenomena such as the theory of evolution, are quite popular. These algorithms generate a random population in the initial phase and evolve it through a number of generations to improve the quality of the solutions. Their key strength is that the fittest chromosomes in the population are allowed to combine to form the chromosomes of the next generation, which helps the population to optimize the candidate solutions over the course of iterations. However, because they ignore search space information over subsequent iterations, these algorithms often fail to avoid local convergence. Swarm intelligence-based approaches, which mimic the social behavior of animals, tackle this problem. Over the last two decades, swarm intelligence-based optimization algorithms have become more common among researchers than evolutionary approaches, owing to the introduction of a larger number of competitive nature-inspired algorithms that use population information for evolving through new generations. The most advantageous features of swarm-based optimization algorithms are the preservation of population information over subsequent iterations and the need for fewer operators than evolutionary algorithms. Therefore, this class of meta-heuristics is comparatively easier to implement. Owing to these features, many real-life optimization problems are solved using swarm intelligence algorithms, such as robot path planning (Kiani et al. 2022), FOPID controller design for power system stability (Kar et al. 2022), medical data analysis (Shial, Sahoo, and Panigrahi 2022a, 2022b), time series forecasting (Panigrahi and Sekhar Behera 2019) and the Internet of Things (Kiani and Seyyedabbasi 2022).

Regardless of their nature, all meta-heuristic optimization algorithms share a common search process, which is divided into two phases: exploration and exploitation (Alba and Dorronsoro 2005; Bansal and Singh 2021; Lin and Gen 2009; Olorunda and Engelbrecht 2008). In the exploration phase, the degree of randomness among the search agents should be as high as possible so that feasible solutions are selected from the most diversified solutions in the search space. In the exploitation phase, a comparatively small degree of randomness should be used to search the local region around the previously selected feasible solutions. Therefore, for an optimization algorithm to find better solutions, more exploration is maintained in the early stage of the search process, whereas more exploitation is maintained in the later stage. Maintaining a proper balance between exploitation and exploration is a crucial as well as challenging task because of the stochastic nature of most nature-inspired meta-heuristic optimization algorithms. Research on stochastic optimization algorithms shows that a gradual change from exploration to exploitation toward convergence gives the most efficient results for an optimization problem (Mirjalili, Mohammad Mirjalili, and Lewis 2014a).

GWO is an efficient meta-heuristic algorithm proposed by Mirjalili et al. (Mirjalili, Mohammad Mirjalili, and Lewis 2014b) in 2014. The algorithm is inspired by the leadership hierarchy of grey wolves (alpha, beta and delta wolves), which helps to find the search direction throughout the iterations and ensures fast convergence. In the literature, this algorithm has been used for feature selection (Al-Tashi et al. 2019), the economic dispatch problem (Pradhan, Kumar Roy, and Pal 2016), control systems (Obadina et al. 2022), the power dispatch problem (Jayakumar et al. 2016), data clustering (Ahmadi, Ekbatanifard, and Bayat 2021), classification (Al-Tashi, Rais, and Jadid 2018), etc. Although the performance of GWO is very promising compared to other well-known algorithms, for some complex optimization problems it gets trapped at local optima and suffers from an ineffective balance between exploitation and exploration. The distinctive mechanism of GWO is its guided search scheme based on leader wolves and multiple solutions, which is intended to balance exploration and exploitation. Therefore, several attempts have been made in the literature to improve the performance of GWO by modifying its search mechanism. For example, Gao and Zhao (Zhao and Ming Gao 2020) proposed a variable weight GWO whose governing equations assign unequal weights to the leading wolves while calculating the position of each omega wolf during its explorative search, with a higher weight for the alpha wolf (α) than for the beta wolf (β) and, similarly, a higher weight for β than for the delta wolf (δ). Their paper also suggests changing the weights gradually over the iterations so that each leader wolf receives equal weight in the exploitation phase, which generally arises toward the end of the iterations. Mittal et al. (Singh and Chand Bansal 2022) proposed a nonlinear control parameter to improve the performance of GWO. It allows the exploration and exploitation behavior of the algorithm to be controlled and balanced. The experimental results suggest that the algorithm achieves better performance but is still unable to perform well on multimodal problems. Further, to improve the explorative skill of GWO, Long et al. (Long et al. 2018) proposed an enhanced GWO (EEGWO). This algorithm uses a modified position update equation to improve its exploration capability, together with a nonlinear control parameter for balancing diversity and speed of convergence. Similarly, Bansal and Singh (Bansal and Singh 2021) proposed IGWO, which uses opposition-based learning to enhance the exploration capability and improve the convergence speed. However, that algorithm is beneficial if the optimal result is far from the current solutions. Yu, Xu and Li (Xiaobing, WangYing, and ChenLiang 2021) combined opposition-based learning (OBL) with GWO to obtain OGWO and also proposed a nonlinear control parameter for enhancing the performance of the original GWO. The algorithm improves its search capability on most benchmark problems but does not search effectively on most of the multimodal problems. Fan et al. (Fan et al. 2021) proposed a modified GWO algorithm that integrates a beetle antenna strategy with the existing GWO algorithm to reduce unnecessary searches and to improve the exploration capability. This variant enhances the exploration skill of the algorithm but is unable to perform well on unimodal benchmark functions, which indicates poor exploitation capability. Similarly, focusing on the improvement of exploration skill, Bansal and Singh (Bansal and Singh 2021) incorporated OBL into the explorative equation of GWO. Overall, it is observed that the classical GWO algorithm suffers from trapping at local optima and from limited explorative capability.

The key contributions of the paper are summarized as follows:

Developed an enhanced GWO algorithm by incorporating a differential perturbation operator, together with randomly chosen omega wolves, to obtain modified representations of the α, β and δ wolves that maintain a better trade-off between exploration and exploitation.

Introduced similar values of the parameters A and C for each leader wolf while updating the position of a single omega wolf, to incorporate an element of exploration into the exploitation phase.

Applied the proposed GWO algorithm to optimize 23 unimodal, multimodal and fixed-dimension multimodal mathematical benchmark functions.

Applied the proposed algorithm to 12 data clustering problems from the UCI machine learning repository to further test its superiority.

Applied the Wilcoxon signed-rank test to the results to compare the algorithms pairwise on the benchmark problems and the 12 data clustering problems.

Applied the Friedman and Nemenyi non-parametric hypothesis tests to the obtained results to statistically rank the meta-heuristic algorithms (1 proposed + 10 from the recent literature) in optimizing benchmark functions and clustering data.

The rest of the paper is organized as follows: Section 2 briefly introduces the background of the GWO algorithm and its related literature. Section 3 describes the proposed enhanced GWO algorithm. Section 4 describes the application of the proposed algorithm to the data clustering problem. Section 5 describes the performance evaluation of the proposed algorithm using benchmark functions. Section 6 describes the performance evaluation of the proposed algorithm on benchmark clustering datasets. Finally, Section 7 concludes the paper with academic implications and future research directions.

Grey Wolf Optimization

Grey wolf optimization (GWO) is a well-known meta-heuristic optimization algorithm introduced by Mirjalili et al. (Mirjalili, Mohammad Mirjalili, and Lewis 2014a) that mimics the prey searching and attacking behavior of grey wolves. GWO is used in different fields of optimization such as software testing, medical diagnosis and engineering applications. Its most advantageous features are a simple concept, fast convergence, few adjustable parameters, good exploitation ability and suitability for both linear and complex optimization problems. The algorithm uses a four-layer pyramid to model the hierarchy of grey wolves in the hunting and encircling processes. The hierarchy comprises the alpha, beta, delta and omega groups of wolves in the first, second, third and fourth layers respectively, in decreasing order of dominance. Most importantly, the top three leader wolves guide the omega wolves in the hunting process in order to achieve near-optimal solutions. Because of this collaboration among the wolves in the hierarchy, the chance of the omega wolves falling into local optima decreases. The hunting process of grey wolves is simulated in three steps: (a) encircling, (b) hunting and (c) attacking the prey.

The encircling of the prey by a grey wolf is modeled mathematically as given in Eq. (3) and Eq. (4):

$$\vec{D} = \left| \vec{C} \cdot \vec{X}_p(t) - \vec{X}(t) \right| \tag{3}$$

$$\vec{X}(t+1) = \vec{X}_p(t) - \vec{A} \cdot \vec{D} \tag{4}$$

where $t$ denotes the current iteration, $\vec{X}_p(t)$ represents the location of the prey at the $t$-th iteration, $\vec{X}(t)$ is the current position of a grey wolf at the $t$-th iteration and $\vec{X}(t+1)$ is the updated position of the grey wolf. The coefficients of the algorithm, $\vec{C}$ and $\vec{A}$, are calculated as given in Eq. (5) and Eq. (7):

$$\vec{C} = 2 \vec{r}_2 \tag{5}$$

$$a = 2 - \frac{2t}{T} \tag{6}$$

$$\vec{A} = 2a \vec{r}_1 - a \tag{7}$$

where $r_1$ and $r_2$ are two random values in the interval [0, 1], $T$ is the maximum number of iterations and $a$ is an acceleration coefficient that decreases linearly from 2 to 0 over the course of iterations. The linear change in the value of $a$, given in Eq. (6), helps to make a transition from exploration to exploitation.
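As a rough illustration of how these coefficients behave, the sketch below draws them for a few iterations. It assumes the standard GWO forms (A = 2·a·r1 − a, C = 2·r2, and a decreasing linearly from 2 to 0); the function names and the seed are hypothetical choices made only for this example.

```python
import random


def linear_a(t, max_iter):
    """Acceleration coefficient that decreases linearly from 2 to 0."""
    return 2.0 - 2.0 * t / max_iter


def coefficients(a, dim, rng):
    """Draw A in [-a, a] and C in [0, 2], one value per dimension."""
    A = [2.0 * a * rng.random() - a for _ in range(dim)]
    C = [2.0 * rng.random() for _ in range(dim)]
    return A, C


rng = random.Random(0)
for t in (0, 50, 100):
    a = linear_a(t, 100)
    A, C = coefficients(a, 3, rng)
    print(t, a, A, C)
```

Early in the run A can take values of magnitude greater than 1, which favors exploration; as a approaches 0 the values of A shrink toward 0 and the search becomes exploitative.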

Hunting: The leader wolves are assumed to have better knowledge about the location of the prey; therefore, these three leader wolves guide the other wolves in the overall hunting process.

Considering this assumption together with the leadership hierarchy of grey wolves, the hunting behavior of each omega wolf is modeled mathematically as given in Eq. (8), Eq. (9) and Eq. (10):

$$\vec{D}_\alpha = \left| \vec{C}_1 \cdot \vec{X}_\alpha - \vec{X} \right|, \quad \vec{X}_1 = \vec{X}_\alpha - \vec{A}_1 \cdot \vec{D}_\alpha \tag{8}$$

$$\vec{D}_\beta = \left| \vec{C}_2 \cdot \vec{X}_\beta - \vec{X} \right|, \quad \vec{X}_2 = \vec{X}_\beta - \vec{A}_2 \cdot \vec{D}_\beta \tag{9}$$

$$\vec{D}_\delta = \left| \vec{C}_3 \cdot \vec{X}_\delta - \vec{X} \right|, \quad \vec{X}_3 = \vec{X}_\delta - \vec{A}_3 \cdot \vec{D}_\delta \tag{10}$$

where $\vec{X}_1$, $\vec{X}_2$ and $\vec{X}_3$ are three different suggestions by the leader wolves, i.e. alpha (α), beta (β) and delta (δ) respectively, to help update the position of a single omega wolf (ω) at iteration $t$. $\vec{C}_1$, $\vec{C}_2$ and $\vec{C}_3$ are three random numbers generated within the range [0, 2] as given in Eq. (5). Similarly, $\vec{A}_1$, $\vec{A}_2$ and $\vec{A}_3$ are three random parameters that help in making a linear transition from exploration to exploitation. Mathematically, the linearity and randomness are incorporated into the search strategy using Eq. (5) and Eq. (7). Most importantly, the values of the control parameters $\vec{A}$ and $\vec{C}$ control the global and local search behavior of the algorithm. Finally, the combined effort of the three leader wolves helps to update the position of the omega wolves using Eq. (11):

$$\vec{X}(t+1) = \frac{\vec{X}_1 + \vec{X}_2 + \vec{X}_3}{3} \tag{11}$$
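The hunting step described above (three leader suggestions averaged into one new position) can be sketched as a compact, self-contained loop. This is a minimal sketch of a standard GWO under stated assumptions, not the authors' implementation: the sphere objective, the pack size, the bound clipping, the seed and the best-so-far bookkeeping are all illustrative choices.

```python
import random


def sphere(x):
    """Illustrative objective with its minimum (zero) at the origin."""
    return sum(v * v for v in x)


def gwo(objective, dim, lower, upper, n_wolves=20, max_iter=200, seed=0):
    rng = random.Random(seed)
    wolves = [[rng.uniform(lower, upper) for _ in range(dim)]
              for _ in range(n_wolves)]
    best = min(wolves, key=objective)
    for t in range(max_iter):
        # The three best wolves of the current pack act as alpha, beta, delta.
        leaders = [list(w) for w in sorted(wolves, key=objective)[:3]]
        a = 2.0 - 2.0 * t / max_iter  # decreases linearly from 2 toward 0
        updated = []
        for x in wolves:
            new_x = []
            for j in range(dim):
                suggestions = []
                for leader in leaders:
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    D = abs(C * leader[j] - x[j])
                    suggestions.append(leader[j] - A * D)
                # Average the three suggestions and clip to the bounds.
                value = sum(suggestions) / 3.0
                new_x.append(min(max(value, lower), upper))
            updated.append(new_x)
        wolves = updated
        candidate = min(wolves, key=objective)
        if objective(candidate) < objective(best):
            best = candidate
    return best, objective(best)


best, best_value = gwo(sphere, dim=5, lower=-10.0, upper=10.0)
print(best_value)
```

In this sketch A and C are drawn independently for every leader and every dimension, which is the behavior of the classical algorithm.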

Owing to its few control parameters, ease of implementation and simplicity, GWO has been applied to a wide variety of problems such as the economic dispatch problem (Jayabarathi et al. 2016; Pradhan, Kumar Roy, and Pal 2016), parameter estimation (X. Song et al. 2015), recommender systems (Katarya and Prakash Verma 2018), the unit commitment problem (Kamboj 2016), wind speed forecasting (Song, Wang, and Haiyan 2018), optimal power flow (Sulaiman et al. 2015) and feature selection (Qiang, Chen, and Liu 2019).

Moreover, a lot of research has been carried out to improve the performance of GWO by modifying its search mechanism, producing new variants of GWO that avoid local convergence and improve the speed of the algorithm. Recently, Zhao and Ming Gao (2020) proposed a variable weight update strategy to update the positions of omega wolves instead of combining the efforts of the leader wolves with equal importance. Their strategy initially gives more importance to the α wolf than to the β and δ wolves. Later, as the iterations proceed, the weights are updated linearly to give equal importance to each leader wolf, converting the hunting phase into the attacking phase. To achieve this, three weight factors ($w_1$, $w_2$ and $w_3$) are multiplied with the α, β and δ wolves respectively as given in Eq. (12):

$$\vec{X}(t+1) = w_1 \vec{X}_1 + w_2 \vec{X}_2 + w_3 \vec{X}_3 \tag{12}$$

where $\vec{X}_1$, $\vec{X}_2$ and $\vec{X}_3$ are the position suggestions of the α, β and δ wolves. Mathematically, the weight of α is reduced from 1 to 1/3 while, at the same time, the weights of β and δ are increased from 0 to 1/3 with the change in iterations. Subsequently, theta (θ) and phi (φ) are obtained in each iteration to update the above-mentioned weight factors as given in Eq. (13) and Eq. (14). Finally, the weight factors $w_1$, $w_2$ and $w_3$ are obtained using Eq. (15), Eq. (16) and Eq. (17).

Yu et al. (Xiaobing, WangYing, and ChenLiang 2021) proposed an opposition-based learning strategy to improve population diversity, protect the algorithm from early convergence and avoid local optima. In this algorithm, a group of opposite solutions is generated using opposition-based learning (OBL) from the upper and lower bounds of each solution in every phase of the search process. Selecting the best search agents from the two groups, i.e. the original solutions and their opposites, helps to minimize the computational overhead and maximize the convergence speed.
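The idea of generating an opposite solution from the bounds and keeping the better of the pair can be illustrated with a short sketch. It assumes the common OBL definition, in which the opposite of x is lower + upper − x in each dimension; the exact variant used by Yu et al. may differ, and the function names and the shifted sphere objective are hypothetical.

```python
def opposite(x, lower, upper):
    """Opposite point of x within per-dimension bounds: lower + upper - x."""
    return [lo + hi - xi for xi, lo, hi in zip(x, lower, upper)]


def keep_better(x, lower, upper, objective):
    """Return whichever of x and its opposite has the lower objective value."""
    x_opp = opposite(x, lower, upper)
    return x if objective(x) <= objective(x_opp) else x_opp


def shifted_sphere(x):
    """Illustrative objective with its minimum at (2, 2, ...)."""
    return sum((v - 2.0) ** 2 for v in x)


lower, upper = [-5.0, -5.0], [5.0, 5.0]
candidate = [-3.0, -1.0]
print(opposite(candidate, lower, upper))                     # [3.0, 1.0]
print(keep_better(candidate, lower, upper, shifted_sphere))  # [3.0, 1.0]
```

Here the candidate lies on the wrong side of the search space, so its opposite is closer to the optimum and is the one retained.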

Additionally, Nadimi-Shahraki et al. (Nadimi-Shahraki, Taghian, and Mirjalili 2021) proposed an improved GWO for solving engineering problems. The algorithm uses a dimension learning-based hunting (DLH) strategy that constructs a neighborhood of radius R around each wolf so that neighboring information can be shared between wolves. This helps the wolf pack to maintain diversity and a proper balance between exploration and exploitation.

In most cases, the GWO algorithm suffers from trapping at local optima, and avoiding such local optima may not work well because the wolves hunt in regions that are close to each other. Hence, an expanded GWO algorithm (Ex-GWO) (Seyyedabbasi and Kiani 2021) and an incremental GWO algorithm (I-GWO) have been proposed to address global optimization problems. The Ex-GWO method suggests that α, β and δ have better knowledge about the position of the prey and hence each omega wolf updates its position with the help of the newly updated positions of the three best leader wolves (as given in Eq. (8), Eq. (9) and Eq. (10)) and of the wolves preceding it in the pack, as given in Eq. (18). The symbols in Eq. (18) denote the population size, the index of the wolf in the pack and the generation counter, together with the updated positions of the α, β and δ wolves at the beginning of every iteration.

Similarly, the I-GWO algorithm suggests that each wolf updates its position with the help of all previously selected wolves. The authors claim that I-GWO has a better chance of finding solutions in fewer iterations, but it is not guaranteed to find good solutions. According to the algorithm, the control parameter plays a major role in selecting the position of the best wolf (α), which alone directs the search process. If this wolf is near the prey, the algorithm converges faster; if it is far away from the prey, the algorithm needs more iterations to reach a solution. Hence, the authors suggest an additional improvement of the control parameter (as given in Eq. (19)) to make the algorithm more efficient. Mathematically, the position update of each wolf is as given in Eq. (20). In Eq. (20), two of the parameters decide the direction of each wolf and a third decides the range of motion toward the promising regions. The parameter of Eq. (19) is used to increase the number of iterations for controlling the explorative capability of the algorithm. The remaining symbols denote the population size, the index of the wolf in the pack and the generation counter.

The GWO algorithm has also been applied to analyze and design FOPID-based damping controllers to enhance power system stability (Kar et al. 2022). That paper proposes a modified GWO algorithm (mGWOA) to tune the control parameters of the fractional-order PID controller and reports superior performance of mGWOA in optimizing benchmark functions and in damping low-frequency oscillations. To improve the performance of mGWOA, the authors use a modified update equation for the control parameter (as in Eq. (21)), which depends on the current iteration number and the maximum number of iterations of the simulation.

In this approach, to give more importance to the leader wolves and the leadership hierarchy, the weights of α, β and δ are taken as 50%, 33.33% and 16.66% respectively. Mathematically, Eq. (11) is modified as given in Eq. (22):

$$\vec{X}_i(t+1) = \frac{3\vec{X}_1 + 2\vec{X}_2 + \vec{X}_3}{6} \tag{22}$$

where $\vec{X}_i(t+1)$ is the $i$-th omega wolf at the $(t+1)$-th iteration and $\vec{X}_1$, $\vec{X}_2$ and $\vec{X}_3$ are the position suggestions of the α, β and δ wolves.
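The effect of weighting the leaders unequally can be seen by comparing the equal-weight average with a 50% / 33.33% / 16.66% combination on the same three suggestions. The sketch below assumes the weights are applied directly to the three leader suggestions; the function names and the sample vectors are hypothetical.

```python
def equal_position(x1, x2, x3):
    """Classical update: plain average of the three leader suggestions."""
    return [(a + b + c) / 3.0 for a, b, c in zip(x1, x2, x3)]


def weighted_position(x1, x2, x3, weights=(0.5, 1.0 / 3.0, 1.0 / 6.0)):
    """Hierarchy-weighted update: alpha counts most, delta least."""
    w1, w2, w3 = weights
    return [w1 * a + w2 * b + w3 * c for a, b, c in zip(x1, x2, x3)]


x1, x2, x3 = [0.0, 0.0], [3.0, 3.0], [6.0, 6.0]
print(equal_position(x1, x2, x3))     # [3.0, 3.0]
print(weighted_position(x1, x2, x3))  # close to [2.0, 2.0], pulled toward alpha
```

With unequal weights the new position is drawn toward the alpha suggestion, which strengthens exploitation around the current best solution.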

According to Eq. (6) of the GWO algorithm, because of the linear decrease, half of the iterations are dedicated to exploration and the other half are devoted to exploitation. To alter this balance in the classical GWO algorithm, Mittal et al. (Mittal, Singh, and Singh Sohi 2016) proposed mGWO, which decreases the value of the acceleration coefficient from 2 to 0 exponentially. The proposed exponential function, given in Eq. (23), decreases the value of this coefficient exponentially over the course of iterations.

This exponential decay equation makes the transition from exploration to exploitation between the initial and final iterations with a ratio of 70% to 30%, respectively. It shows that mGWO enjoys higher exploration than the classical GWO. The paper suggests that the algorithm is very effective because of the high exploration in the initial phase, and hence it has sufficient capability to avoid trapping at local optima. The paper also discusses the faster convergence behavior and superior performance of mGWO due to this decay function.
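The 50/50 and 70/30 splits can be checked numerically by counting the iterations in which the acceleration coefficient is still above 1, so that |A| > 1 (exploration) remains possible. The sketch below assumes a decay of the form a = 2(1 − t²/T²) for mGWO, which is an assumption chosen because it reproduces the stated 70% figure; the function names are hypothetical.

```python
def linear_a(t, max_iter):
    """Classical GWO schedule: linear decrease from 2 to 0."""
    return 2.0 * (1.0 - t / max_iter)


def quadratic_a(t, max_iter):
    """Assumed mGWO-style schedule: a = 2 * (1 - t^2 / T^2)."""
    return 2.0 * (1.0 - (t * t) / (max_iter * max_iter))


def exploration_fraction(schedule, max_iter=1000):
    """Fraction of iterations with a > 1, where |A| > 1 is still possible."""
    count = sum(1 for t in range(max_iter) if schedule(t, max_iter) > 1.0)
    return count / max_iter


print(exploration_fraction(linear_a))     # 0.5
print(exploration_fraction(quadratic_a))  # about 0.71
```

Under this assumed schedule roughly 70% of the iterations keep a above 1, compared with 50% for the linear schedule.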

Furthermore, for most multimodal optimization problems, the GWO algorithm achieves only a small degree of exploration, as it has no separate equation for exploration. Moreover, the control parameters make a slow transition from exploration to exploitation toward convergence. The algorithm benefits from the combined effort of the leader wolves in the hierarchy, which gives faster convergence with better exploitation. However, because of this fast convergence, it sometimes gets trapped at local optima. Therefore, to improve the quality of the solutions and to maintain a proper balance between exploration and exploitation, we introduce a novel differential perturbation equation into the search process of GWO with the help of three randomly chosen search agents. It modifies the algorithmic steps so that the algorithm achieves better exploration. Real-life problems are commonly multimodal in nature, and in the hope of solving such problems efficiently, we propose an enhanced GWO algorithm with improved explorative search capability that still maintains a good trade-off between exploration and exploitation.

Proposed Enhanced GWO Algorithm

In GWO, it is assumed that the leader wolves have better knowledge about the potential location of the prey, and hence the positions of all omega wolves are updated using Eq. (3) and Eq. (4). The searching behavior of GWO is controlled by the parameters $\vec{A}$ and $\vec{C}$: the algorithm tries to escape from local optima using the stochastic parameter $\vec{C}$, while the value of $\vec{A}$ helps in transitioning the algorithm from exploration to exploitation. When $|\vec{A}| > 1$, it improves diversification among the possible solutions, and it minimizes diversification when $|\vec{A}| < 1$. In this way, the algorithm attempts to balance exploration and exploitation. However, because of the strong dependence on the leader wolves, newly generated solutions tend to get stuck at local optima surrounded by the leader wolves, so the balance between exploration and exploitation is improper. To overcome this issue and improve the performance, we introduce a differential perturbation equation that maintains a trade-off between exploration and exploitation by reducing the bias toward the leader wolves during the search process. This equation is applied for each leader wolf separately, which helps each omega wolf to find a better position with the help of the leader wolves and the additional effort of three randomly selected omega wolves. The resulting algorithm enjoys a high degree of diversification among the population members in the explorative phase, which enhances its robustness by maintaining a good balance between exploration and exploitation. Mathematically, the proposed modifications are given in Eq. (24), Eq. (25), Eq. (26) and Eq. (27).

where the three modified terms are the corresponding representations of the alpha, beta and delta wolves for the current omega wolf. The three randomly selected omega wolves assist the leader wolves in searching for more diversified solutions around the most promising region of the search space, which improves the explorative skill of the algorithm. Here the original step of the classical GWO algorithm given in Eq. (11) is replaced by Eq. (27) in our proposed algorithm. The three numerator terms of Eq. (27) are obtained using Eq. (24), Eq. (25) and Eq. (26), respectively, and the leader-guided terms they depend on are obtained from Eq. (8), Eq. (9) and Eq. (10), respectively. To incorporate an element of exploration in the exploitation phase, we use similar values of the parameters $A$ and $C$ for each leader wolf while calculating these leader-guided terms for a single omega wolf; the values change from one omega wolf to the next in the population.

Additionally, the strategy adds a self-adaptive behavior to the population members, enhancing population diversity with the help of the leader wolves and the randomly selected population members. This perturbation strategy therefore strengthens the exploration capability of the GWO algorithm. The coefficient in the proposed strategy is a scaling factor that improves the exploration capability of the search agents. The second part of each of Eq. (24), Eq. (25) and Eq. (26) is a difference vector, calculated from the position updated by the corresponding leader wolf and one of the three randomly selected omega wolves. If the difference is large, the perturbation equation with a high scaling factor produces more exploration and therefore maintains more diversity among the population members. This incorporates a global search ability into the search process of GWO. At the same time, this diversification helps avoid the local convergence issue of GWO in most multimodal problems.

Additionally, the leader-guided terms that appear in Eq. (24), Eq. (25) and Eq. (26) are obtained from Eq. (8), Eq. (9) and Eq. (10), respectively.

Finally, Eq. (27) adds exploitation to the three-leader-wolf strategy. In comparison to Eq. (11), the updated equation holds three diversified operands in its numerator, which enables the proposed algorithm to overcome local convergence and achieve near-optimal results.
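The update of a single omega wolf described above can be sketched as follows. This is only an illustration under stated assumptions, not the exact form of Eq. (24) to Eq. (27): the perturbation is assumed to add a scaled difference between each leader-guided position and one random omega wolf, "similar values" of $A$ and $C$ is interpreted as one shared draw per omega wolf, and the names `perturbed_update`, `helpers` and `scale` are hypothetical.

```python
import random


def perturbed_update(wolf, leaders, helpers, a, scale=0.7, rng=random):
    """Return a candidate position for one omega wolf.

    wolf    : current position of the omega wolf (list of floats)
    leaders : [alpha, beta, delta] positions
    helpers : positions of three randomly selected omega wolves
    a       : classical GWO parameter that decreases from 2 to 0
    scale   : ASSUMED scaling factor of the perturbation term
    """
    dim = len(wolf)
    # One A and one C vector are drawn per omega wolf and shared by all three
    # leaders (our reading of "similar values"; an assumption).
    A = [2.0 * a * rng.random() - a for _ in range(dim)]
    C = [2.0 * rng.random() for _ in range(dim)]
    candidate = [0.0] * dim
    for leader, helper in zip(leaders, helpers):
        for j in range(dim):
            # Classical leader-guided position for this leader.
            distance = abs(C[j] * leader[j] - wolf[j])
            x_k = leader[j] - A[j] * distance
            # ASSUMED perturbation: scaled difference between the
            # leader-guided position and a random omega wolf.
            candidate[j] += x_k + scale * (x_k - helper[j])
    # Average of the three perturbed terms, mirroring the classical
    # three-leader average.
    return [value / 3.0 for value in candidate]
```

When `a` is 0 and each helper coincides with its leader, the perturbation vanishes and the candidate reduces to the plain average of the three leaders, which is a quick sanity check on the sketch.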

The stability of the algorithm is tested by varying its control parameter from 0 to 1 in steps of 0.1 in order to select the value that gives the best result. Experiments suggest that the algorithm provides the best result when this parameter is set to 0.7.

The primary steps of our proposed algorithm are illustrated in the flowchart of the algorithm. Additionally, a boundary-checking condition is applied in each iteration. The remaining steps are similar to those of the classical GWO algorithm, which selects the leader wolves with the best fitness at the beginning of each iteration.

The flowchart of our proposed enhanced GWO algorithm operates in three steps: the initialization step, the iterative step and the final step. In the initialization step, the algorithm parameters are initialized: the maximum number of iterations, the algorithm-specific control parameter (0.7), the number of variables, the population size, the lower and upper bounds of the search space, and the initial population. In the iterative step, each omega wolf is updated with the combined effort of the leader wolves and three randomly selected omega wolves using the differential perturbation equation. The omega wolves then update their positions with a greedy selection approach. The iterative step is repeated until the termination criterion is satisfied. In the final step, the α wolf is chosen as the best solution for the optimization problem.
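The three steps of the flowchart can be sketched as a small, self-contained loop. This is a minimal illustration rather than the authors' implementation: the perturbation form, the shared draw of $A$ and $C$ per omega wolf, the linear decrease of `a`, the toy `sphere` objective and all function and parameter names are assumptions made for the example.

```python
import random


def sphere(x):
    """Toy objective used only for illustration."""
    return sum(v * v for v in x)


def enhanced_gwo(objective, dim, lower, upper, pop_size=20, max_iter=200,
                 scale=0.7, seed=0):
    if pop_size < 7:
        raise ValueError("need 3 leaders plus at least 4 omega wolves")
    rng = random.Random(seed)
    # Initialization step: random pack inside the bounds.
    pack = [[rng.uniform(lower, upper) for _ in range(dim)]
            for _ in range(pop_size)]
    fitness = [objective(w) for w in pack]

    # Iterative step.
    for t in range(max_iter):
        # Leaders are the three fittest wolves at the start of the iteration.
        order = sorted(range(pop_size), key=fitness.__getitem__)
        leaders = [pack[i][:] for i in order[:3]]
        omegas = order[3:]
        a = 2.0 * (1.0 - t / max_iter)  # classical linear decrease 2 -> 0

        for i in omegas:
            pool = [k for k in omegas if k != i]
            helpers = [pack[k] for k in rng.sample(pool, 3)]
            # A and C are shared by the three leaders (assumption).
            A = [2.0 * a * rng.random() - a for _ in range(dim)]
            C = [2.0 * rng.random() for _ in range(dim)]
            cand = [0.0] * dim
            for leader, helper in zip(leaders, helpers):
                for j in range(dim):
                    x_k = leader[j] - A[j] * abs(C[j] * leader[j] - pack[i][j])
                    # ASSUMED perturbation term, averaged over the leaders.
                    cand[j] += (x_k + scale * (x_k - helper[j])) / 3.0
            # Boundary check: clip the candidate to the search space.
            cand = [min(max(v, lower), upper) for v in cand]
            f = objective(cand)
            # Greedy selection: keep the candidate only if it is better.
            if f < fitness[i]:
                pack[i], fitness[i] = cand, f

    # Final step: the fittest wolf (alpha) is returned.
    best = min(range(pop_size), key=fitness.__getitem__)
    return pack[best], fitness[best]
```

Because of the greedy selection, the best fitness in the pack can never get worse from one iteration to the next, so a call such as `enhanced_gwo(sphere, 5, -10, 10, max_iter=50)` returns a value no larger than the best of the initial population.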

Application of Our Proposed Enhanced GWO Algorithm for Data Clustering

To evaluate the performance of our proposed GWO algorithm, we applied it to 12 clustering problems. The main objective is to select optimal cluster centers among the given data points in a search space so as to minimize the intra-cluster distance and form compact clusters. To maximize compactness and minimize the clustering error, the most similar data points need to belong to the same cluster. Therefore, we use the Euclidean distance measure given in Eq. (28) to evaluate the compactness of each cluster.

$$ \operatorname{dist}(x, c) = \sqrt{\sum_{k=1}^{d} (x_k - c_k)^2} \tag{28} $$

where $x$ and $c$ are a cluster member and a cluster center, respectively, each with dimension $d$.

Given a dataset of $N$ instances, each with dimension $d$, the aim of the clustering task is to form $K$ non-overlapping compact clusters among these data points.

To address the above problem and to assign a membership value to each data instance, we consider the minimization equation of the partitional clustering algorithm (Shial, Sahoo, and Panigrahi 2022a; Ikotun et al. 2022; Shial, Sahoo, and Panigrahi 2022b). To calculate the exactness of each cluster, we assess the sum of squared errors (SSE) within each cluster. The primary focus is therefore to minimize the objective function given in Eq. (29), subject to its membership constraints, where $x_i$ denotes the $i$-th data instance, $c_j$ denotes the $j$-th cluster center, $w_{ij}$ is the membership value of the $i$-th instance to the $j$-th cluster, and $d$ denotes the dimension of the data. The value of $w_{ij}$ is 0 for non-membership and 1 for membership of data instance $x_i$ in cluster $c_j$.
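As a small illustration of the SSE objective with binary membership values, the sketch below sums the squared Euclidean distance between every instance and the center of the cluster it belongs to. The function name `sse` and the check that each instance belongs to exactly one cluster are assumptions that follow from the requirement of non-overlapping clusters; the exact constraint form of Eq. (29) is not reproduced here.

```python
def sse(data, centers, membership):
    """Sum of squared errors for a hard partition.

    data       : list of instances, each a list of d floats
    centers    : list of K cluster centers, each a list of d floats
    membership : membership[i][j] is 1 if instance i is in cluster j, else 0
    """
    total = 0.0
    for i, x in enumerate(data):
        # Assumed constraint: each instance belongs to exactly one cluster.
        if sum(membership[i]) != 1:
            raise ValueError("instance %d must belong to exactly one cluster" % i)
        for j, c in enumerate(centers):
            if membership[i][j]:
                total += sum((xk - ck) ** 2 for xk, ck in zip(x, c))
    return total
```

For example, three points on a line at 0, 2 and 10 with centers at 1 and 10 give an SSE of 2 when the first two points share the first cluster.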

Algorithm 1 presents the steps followed to perform data clustering using our proposed enhanced GWO algorithm. The algorithm operates in three steps: the initialization step, the iterative step and the final step. In the initialization step, the algorithm initializes parameters such as the number of clusters $K$, the number of generations gen and the maximum number of iterative steps (1000). In this step, each population member (a wolf in the pack), i.e. each solution, has $K \times d$ features, where $d$ denotes the number of features of a single data instance and $K$ is the number of clusters (assuming $K$ is known a priori). In the iterative step, all clustering solutions except the leader wolves are modified with the combined effort of the leader wolves and three randomly selected omega wolves, using the classical searching and encircling approach of GWO together with our novel differential perturbation equation. In this step, the greedy selection approach is followed to replace weak wolves in the population and to keep them from being trapped at locally optimal solutions. In the final step, the best-fit solution (α) is selected as the near-optimal solution for the clustering problem.
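The solution encoding described above can be sketched as follows: a wolf is a flat vector holding the coordinates of all cluster centers, which is decoded into centers before the fitness is evaluated. The helper names `decode` and `clustering_fitness`, and the nearest-center assignment rule used to derive memberships, are assumptions made for this illustration.

```python
def decode(position, k, d):
    """Split a flat position vector of length k * d into k centers."""
    return [position[j * d:(j + 1) * d] for j in range(k)]


def clustering_fitness(position, data, k):
    """Return (SSE, labels) for the centers encoded in `position`.

    Each instance is assigned to its nearest center (assumed rule), and the
    squared Euclidean distances to the assigned centers are summed.
    """
    d = len(data[0])
    centers = decode(position, k, d)
    total = 0.0
    labels = []
    for x in data:
        sq_dists = [sum((a - b) ** 2 for a, b in zip(x, c)) for c in centers]
        nearest = min(range(k), key=sq_dists.__getitem__)
        labels.append(nearest)
        total += sq_dists[nearest]
    return total, labels
```

A wolf with a lower returned SSE encodes more compact clusters, so this value can serve directly as the fitness minimized by the optimizer.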


Performance Evaluation of Proposed Enhanced GWO Algorithm Using Benchmark Functions

To measure the performance of our proposed enhanced GWO algorithm, we considered 23 well-known mathematical benchmark functions, denoted by f1, f2, f3 … f23. This group of benchmark functions comprises unimodal functions (Table 1), flexible-dimension multimodal functions (Table 2) and fixed-dimension multimodal functions (Table 3). In each table, dim represents the dimension of the function and range represents the lower and upper bounds of the decision variables; the optimal fitness value of each function is also listed. Researchers from various fields commonly use these benchmark functions to measure the performance of their proposed algorithms (Bansal, Kumar Joshi, and Nagar 2018; Heidari et al. 2019; Mirjalili 2015a, 2015b). To evaluate the performance of the proposed enhanced GWO algorithm, a statistical analysis is carried out against ten recently proposed promising meta-heuristic algorithms: Improved Arithmetic Optimization Algorithm (IAOA) (Kaveh and Biabani Hamedani 2022), Reptile Search Algorithm (RSA) (Abualigah et al. 2022), Modified Grey Wolf Optimization Algorithm (mGWOA) (Kar et al. 2022), Variable Weight Grey Wolf Optimization (VWGWO) (Gao and Zhao 2019), Modified Grey Wolf Optimization (mGWO) (Mittal, Singh, and Singh Sohi 2016), Grey Wolf Optimization (GWO) (Teng, Jin-Ling, and Guo 2019), Sine Cosine Algorithm (SCA) (Mirjalili 2016b), Jaya Algorithm (JAYA) (Rao 2016), Ant Lion Optimization (ALO) (Azizi et al. 2020; Mirjalili 2015a) and Whale Optimization Algorithm (WOA) (Chen et al. 2019; Mirjalili and Lewis 2016; Obadina et al. 2022). For a fair comparison, the swarm size and the maximum number of iterations are fixed for all algorithms. Additionally, Table 4 presents the values of the different parameters, such as the number of search agents, the maximum number of iterations, the number of independent executions and the algorithm-specific control parameter.

Table 1. Unimodal Benchmark Functions.

Table 2. Flexible Multimodal Benchmark Functions.

Table 3. Fixed-Dimension Benchmark Functions.

Table 4. Parameter Settings of Meta-heuristic Algorithms.

To assess the performance of all the considered meta-heuristic algorithms, each algorithm is executed 30 times independently. The mean results of all algorithms are recorded and statistically compared with those of the proposed algorithm using the Wilcoxon signed-rank test. The mean and standard deviation results are tabulated in Table 5, Table 6 and Table 7 for the unimodal, multimodal and fixed-dimension functions, respectively. In each table, mean denotes the average of the fitness values obtained in 30 independent executions and std. dev. denotes their standard deviation. The best result among the considered algorithms for each function is highlighted in boldface. One can see that our proposed algorithm achieves the best mean results for functions f9, f10, f11, f12, f14, f16, f17, f18, f19 and f22.

Table 5. Mean and standard deviation of fitness values obtained in 30 independent simulations by meta-heuristic algorithms on unimodal functions.

Table 6. Mean and standard deviation of fitness values obtained in 30 independent simulations by meta-heuristic algorithms on multimodal functions.

Table 7. Mean and standard deviation of fitness values obtained in 30 independent simulations by meta-heuristic algorithms on fixed dimensional functions.

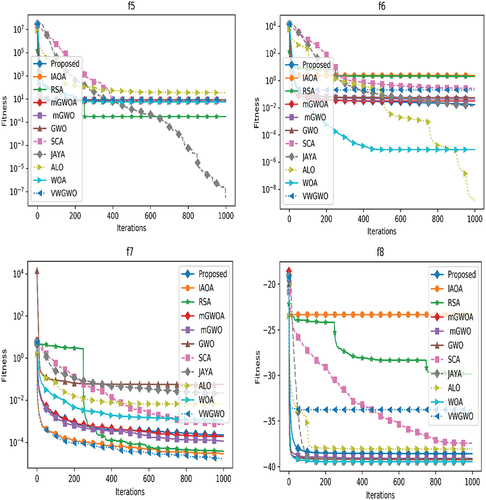

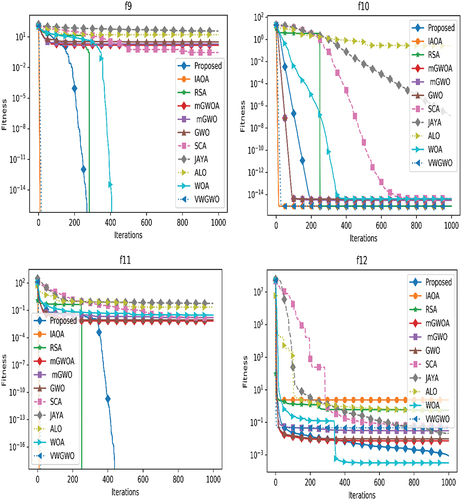

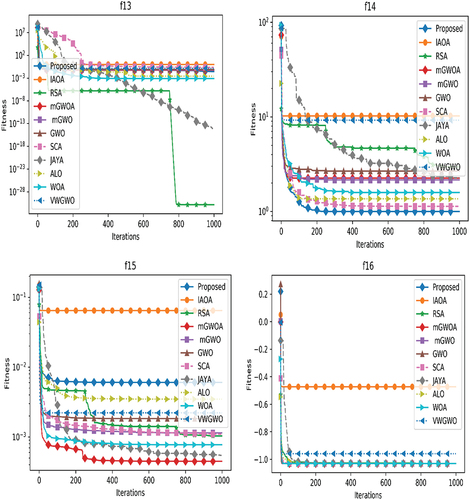

To determine the exploration and exploitation capability and the convergence speed of the stochastic algorithms, a set of unimodal benchmark functions is used, as given in Table 1. The mean and standard deviation results of each algorithm are presented for the seven unimodal functions. Additionally, to test explorative behavior, the algorithms are tested on 16 multimodal test functions (f8-f23). These problems are divided into two subgroups: scalable multimodal functions (f8-f13) and fixed-dimension multimodal functions (f14-f23). The results, presented in Tables 6 and 7, show that our proposed algorithm achieves the best performance in 3 of the 6 scalable multimodal functions (f9, f10 and f11). Similarly, it achieves the best performance in six fixed-dimension multimodal functions (f14, f16, f17, f18, f19 and f22). The mean and standard deviation results obtained over the 23 benchmark functions suggest that the proposed algorithm has comparatively better explorative skill than the state-of-the-art meta-heuristic algorithms. Owing to this explorative skill, the proposed algorithm is able to avoid the local convergence issue of multimodal problems.

In order to compare the proposed algorithm with the other algorithms, we applied the non-parametric Wilcoxon signed-rank test at the 95% confidence level on a pairwise basis. The outcomes of the test are shown by the symbols “+”, “–” and “≈” in Table 8. The best and worst results with respect to our proposed algorithm are shown by “+” and “–”, respectively, and an equivalent result is shown by “≈”. One can see from the table that the proposed algorithm achieves statistically better results than the other algorithms.

Table 8. Wilcoxon signed-rank test results on unimodal, scalable-dimension multimodal and fixed-dimension multimodal functions, with symbols indicating a superior (+), inferior (–) or statistically equivalent (≈) algorithm in comparison to our proposed algorithm.
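For readers unfamiliar with the pairwise test, the signed-rank statistic for two paired samples can be computed as in the sketch below. It only illustrates the ranking step: zero differences are discarded, tied absolute differences share their average rank, and the significance decision at the 95% confidence level would still require the critical value or p-value from statistical tables or a statistics library. The function name is hypothetical.

```python
def signed_rank_statistic(x, y):
    """Return (W_plus, W_minus) for paired samples x and y."""
    # Zero differences carry no sign information and are discarded.
    diffs = [a - b for a, b in zip(x, y) if a != b]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        # Find the run of tied absolute differences starting at position i.
        j = i
        while (j + 1 < len(order)
               and abs(diffs[order[j + 1]]) == abs(diffs[order[i]])):
            j += 1
        average_rank = (i + j) / 2.0 + 1.0  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_plus = sum(r for r, dv in zip(ranks, diffs) if dv > 0)
    w_minus = sum(r for r, dv in zip(ranks, diffs) if dv < 0)
    return w_plus, w_minus
```

The smaller of the two sums is the statistic that is usually compared with the critical value.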

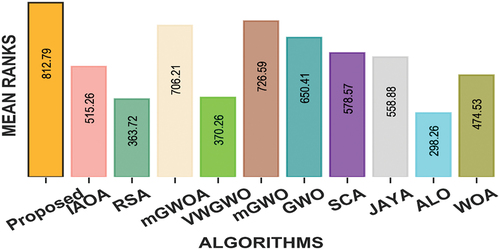

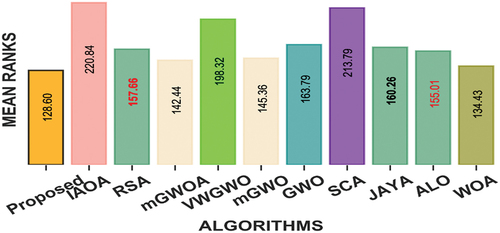

To make sure that the mean and standard deviation results are not obtained just by chance, a non-parametric analysis is also conducted on the results using the Friedman and Nemenyi hypothesis test. The mean ranks obtained from the test are presented in Figure 2. The results obtained for minimizing these functions are analyzed at p-value = .000 and critical distance (CD) = 3.1. It can be observed from the figure that the proposed algorithm shows the lowest mean rank (128.60) among all algorithms while minimizing the 23 benchmark test functions. Hence, the proposed algorithm is the best among the algorithms considered in this study. It can also be observed that mGWOA and mGWO are statistically equivalent, since their mean rank difference is not greater than the CD. Similarly, RSA, JAYA and ALO are statistically equivalent.

Figure 2. Mean rank of meta-heuristic algorithms for 23 benchmark functions with P-value = .000 and Critical Distance = 3.1.
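The ranking step behind such a comparison can be sketched as follows. The code computes per-problem ranks with average ranks for ties and the standard Nemenyi critical distance, CD = q_alpha * sqrt(k(k+1) / (6N)) for k algorithms and N problems. The function names are hypothetical, `q_alpha` must be supplied from a table of critical values, and the way runs were pooled to obtain the rank values reported in this paper is not reproduced here.

```python
import math


def mean_ranks(scores):
    """scores[p][a] is the result of algorithm a on problem p (lower is better)."""
    n_alg = len(scores[0])
    totals = [0.0] * n_alg
    for row in scores:
        order = sorted(range(n_alg), key=row.__getitem__)
        i = 0
        while i < n_alg:
            # Tied results on a problem share their average rank.
            j = i
            while j + 1 < n_alg and row[order[j + 1]] == row[order[i]]:
                j += 1
            average_rank = (i + j) / 2.0 + 1.0
            for k in range(i, j + 1):
                totals[order[k]] += average_rank
            i = j + 1
    return [t / len(scores) for t in totals]


def nemenyi_cd(q_alpha, n_alg, n_problems):
    """Critical distance of the Nemenyi post-hoc test."""
    return q_alpha * math.sqrt(n_alg * (n_alg + 1) / (6.0 * n_problems))
```

Two algorithms are treated as statistically equivalent when the difference between their mean ranks does not exceed the critical distance.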

In comparison to unimodal functions, multimodal functions have a larger number of local optima, and this number increases exponentially with the number of design variables. Test problems of this kind are therefore very useful for evaluating the exploration capability of an optimization algorithm. The results obtained over functions f8-f23 (i.e. the scalable-dimension multimodal and fixed-dimension multimodal test problems) indicate that our proposed enhanced GWO algorithm has very good explorative capability and avoids most of the locally optimal solutions. In fact, our proposed algorithm is the most efficient one on most of the multimodal problems. This is due to the integration of the differential perturbation operator and the use of randomly chosen omega wolves in the steps of the existing GWO algorithm.

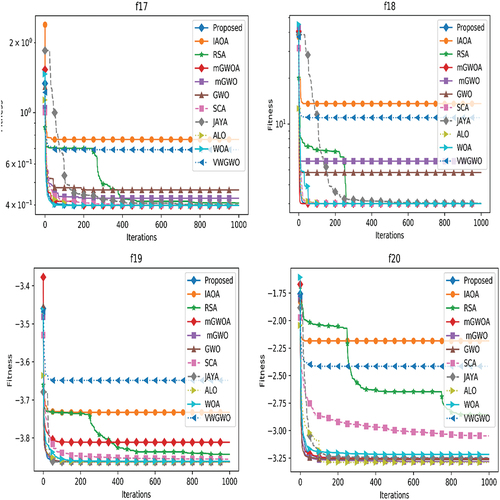

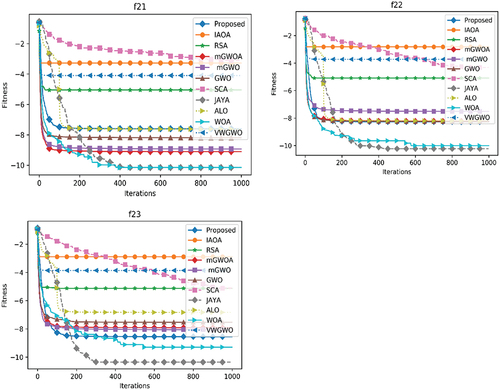

For a better visual understanding of the comparative performance of the meta-heuristic algorithms, we have plotted their convergence curves. As the convergence curve changes from one simulation to another, we take the mean convergence curve of 30 independent simulations for each algorithm on each benchmark function and plot these mean curves.
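Averaging convergence curves over independent runs can be sketched as below. The helper names are hypothetical, and it is assumed that every run records one fitness value per iteration and that all runs have the same length.

```python
def best_so_far(history):
    """Turn per-iteration fitness values into a non-increasing curve."""
    best = float("inf")
    curve = []
    for value in history:
        best = min(best, value)
        curve.append(best)
    return curve


def mean_curve(runs):
    """Average several equally long convergence curves iteration by iteration."""
    n_runs = len(runs)
    return [sum(values) / n_runs for values in zip(*runs)]
```

With 30 runs per algorithm, `mean_curve([best_so_far(h) for h in histories])` gives the single curve that would be plotted for that algorithm on one benchmark function.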

Performance Analysis of Our Proposed Enhanced GWO Algorithm on Benchmark Clustering Datasets

Experiment Setup

Dataset Description and Resource Characteristics

The experiment is conducted using 12 clustering problem datasets accessed from the UCI machine learning repository (Dua and Graff 2017). The detailed descriptions of the datasets are presented in Table 9. The resource characteristics and the simulation parameter settings are presented in Table 10 and Table 11, respectively.

Table 9. Dataset Descriptions.

Table 10. Resource Characteristics.

Table 11. Simulation Parameter Settings of Meta-heuristic Algorithms for Clustering Problems.

Evaluation Metrics

To assess the performance of each algorithm, we use four performance metrics: accuracy, precision, F-score and MCC. Each metric and its importance are described as follows.

Accuracy

The accuracy measure has been predominantly used in the literature to measure the performance of classification and clustering algorithms. It is the ratio between the number of correctly classified instances and the total number of instances. Mathematically, accuracy is calculated as given in Eq. (30).

$$ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{30} $$

Here, TP denotes true positive values, TN denotes true negative values, FP denotes false positive values and FN denotes false negative values.

Precision

With respect to information retrieval, the positive and negative samples are termed relevant and irrelevant instances, respectively. Here, precision can be seen as the fraction of retrieved documents that are relevant. In measuring classifier performance, the pair precision and recall is more informative than the pair sensitivity and specificity, where recall is the fraction of relevant samples that are correctly retrieved. Mathematically, precision can be obtained from the confusion matrix as given in Eq. (31).

$$ \text{Precision} = \frac{TP}{TP + FP} \tag{31} $$

F-Score

In the context of information retrieval, recall is the ratio between the number of relevant retrieved items and the total number of relevant items in the corpus, whereas precision is the ratio between the number of relevant retrieved documents and the total number of retrieved documents. The harmonic mean of precision and recall is termed the F-score. Nowadays the F1-score is used in machine learning for both binary and multiclass scenarios. The F-score has a major drawback compared with MCC: it gives a different score in the case of class swapping (if the positive class is renamed negative or vice versa). However, the F-score is as invariant as MCC to class swapping if the micro- or macro-averaged F1 is used. A second problem is that the F-score is independent of the number of negative samples that are correctly classified as negative. Despite these flaws, the F-score remains the most widely used performance metric among researchers. According to Cao et al., F-score and MCC provide more realistic estimates of the performance of classification models (Cao, Chicco, and Hoffman 2020). Mathematically, the F-score performance measure is calculated as given in Eq. (32).

$$ \text{F-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}, \qquad \text{Recall} = \frac{TP}{TP + FN} \tag{32} $$

MCC

In order to tackle the class imbalance problem, the Matthews Correlation Coefficient (MCC) is used as an alternative measure. Since some of the datasets in this paper are imbalanced, we consider MCC to measure the performance of the models. MCC generates a high score only if the binary classifier produces a high number of true positive instances and a high number of true negative instances (Chicco and Jurman 2020). The value of MCC lies in the range [−1, +1], where +1 represents perfect classification, −1 represents perfect misclassification and MCC = 0 corresponds to a coin-tossing classifier. Mathematically, the MCC for a classification problem can be obtained using Eq. (33).

$$ \text{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{33} $$
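The four measures can be computed from the entries of a binary confusion matrix as in the following sketch. The function name `binary_metrics` and the convention of returning 0 when a denominator is zero are assumptions made for the example; how cluster labels are matched to class labels before counting TP, TN, FP and FN is outside its scope.

```python
import math


def binary_metrics(tp, tn, fp, fn):
    """Accuracy, precision, F-score and MCC from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    # Assumed convention: a metric with a zero denominator is reported as 0.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f_score = 2.0 * precision * recall / (precision + recall)
    else:
        f_score = 0.0
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denominator if denominator else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "f_score": f_score, "mcc": mcc}
```

For a balanced example with 40 true positives, 40 true negatives, 10 false positives and 10 false negatives, accuracy, precision and F-score are all 0.8 while MCC is 0.6, which shows that MCC is the stricter of the four measures.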

Result Analysis on Clustering Datasets

To assess the performance of our proposed algorithm in clustering different datasets, the mean of 100 simulation results is obtained for every meta-heuristic algorithm. Table 12 shows the mean and standard deviation (Std. Dev.) of all the considered meta-heuristic algorithms for data clustering. The table contains the results for the 12 datasets separately, using four performance measures: accuracy, precision, F-score and MCC. One can see that, considering the accuracy performance measure, our proposed algorithm achieves the best mean results in 10 datasets: Bupa, Haberman’s Survival, Hepatitis, Indian Liver Patient, Iris, Liver, Mammographic Mass, Seeds, WDBC and Zoo. Similarly, considering the precision performance measure, our proposed algorithm shows the best mean results for 6 datasets: Haberman’s Survival, Iris, Liver, Mammographic Mass, Seeds and WDBC. Considering the F-score performance measure, our algorithm shows the best mean result for 7 datasets: Haberman’s Survival, Indian Liver Patient, Iris, Mammographic Mass, Seeds, WDBC and Zoo. Considering the MCC performance measure, our proposed algorithm shows the best mean results for 7 datasets: Bupa, Iris, Liver, Mammographic Mass, Seeds, WDBC and Zoo.

Table 12. Mean and standard deviation of Accuracy, Precision, F-Score and MCC for data clustering employing Meta-Heuristic Algorithms.

To statistically verify the performance difference between the state-of-the-art meta-heuristic algorithms and our proposed algorithm, we applied the Wilcoxon signed-rank test and the Friedman and Nemenyi hypothesis test to the results obtained on the 12 clustering problems. The non-parametric Wilcoxon signed-rank test is conducted at the 95% confidence level on a pairwise basis. The test results are presented in Tables 13, 14, 15 and 16 for the accuracy, precision, F-score and MCC performance measures, respectively. One can observe from the tables that the proposed algorithm shows statistically superior or equivalent performance compared with the other algorithms with respect to all performance measures.

Table 13. Wilcoxon signed-rank test results on clustering 12 benchmark datasets considering the accuracy performance measure, with symbols indicating a superior (+), inferior (–) or statistically equivalent (≈) algorithm in comparison to our proposed algorithm.

Table 14. Wilcoxon signed-rank test results on clustering 12 benchmark datasets considering the precision performance measure, with symbols indicating a superior (+), inferior (–) or statistically equivalent (≈) algorithm in comparison to our proposed algorithm.

Table 15. Wilcoxon signed-rank test results on clustering 12 benchmark datasets considering the F-score performance measure, with symbols indicating a superior (+), inferior (–) or statistically equivalent (≈) algorithm in comparison to our proposed algorithm.

Table 16. Wilcoxon signed-rank test results on clustering 12 benchmark datasets considering the MCC performance measure, with symbols indicating a superior (+), inferior (–) or statistically equivalent (≈) algorithm in comparison to our proposed algorithm.

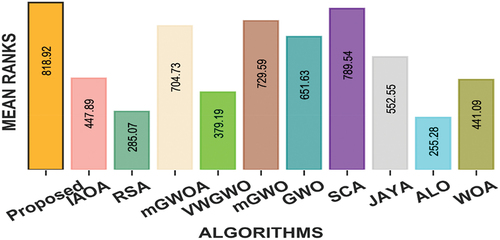

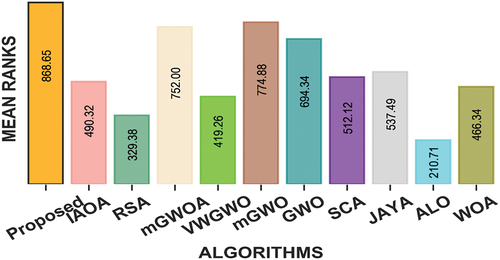

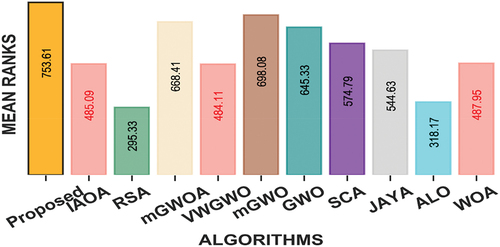

To rank the meta-heuristic algorithms, we applied the Friedman and Nemenyi hypothesis test to the results obtained over the 12 benchmark clustering problems. Since the performance measures are independent of each other, we conducted the test on the results obtained over all datasets for each performance measure separately. The mean rank results of all the state-of-the-art meta-heuristic algorithms are presented in separate figures for the accuracy, precision, F-score and MCC performance measures. The obtained p-value = .000 and the critical distance (CD) = 4.4. From the figures, one can see that our proposed enhanced GWO algorithm shows the highest mean ranks (since these are maximization problems) in all performance measures, and that its mean ranks differ from those of all the other meta-heuristic algorithms by at least the critical distance. Therefore, the proposed algorithm is considered the best algorithm for addressing the clustering problems. Furthermore, it can be observed from Figure 10 that, among the comparative algorithms, IAOA, VWGWO and WOA are statistically equivalent to each other in the precision performance measure (since their mean rank differences are not greater than the critical distance).

Figure 9. Mean rank of meta-heuristic algorithms for clustering using 12 benchmark datasets on Accuracy performance measure with P-value = .000 and Critical Distance = 4.4.

Figure 10. Mean rank of meta-heuristic algorithms for clustering using 12 benchmark datasets on Precision performance measure with P-value = .000 and Critical Distance = 4.4.

Conclusion

In this paper, we have proposed a novel differential perturbation operator that uses three randomly selected omega wolves to improve the search capability of the existing GWO algorithm. Additionally, we have introduced similar values of the parameters $A$ and $C$ for each leader wolf while updating the position of a single omega wolf, in order to incorporate an element of exploration in the exploitation phase. This improves the explorative capability while maintaining a proper trade-off between the exploration and exploitation behavior of the algorithm, and it greatly helps keep the algorithm from being trapped at locally optimal solutions. To evaluate the performance of our proposed algorithm, a comparative performance analysis has been carried out using 3 variants of the GWO algorithm and 7 other promising meta-heuristic algorithms from the recent literature. The statistical analysis of the simulation results shows the superiority of our proposed algorithm over the state-of-the-art meta-heuristic algorithms on 23 benchmark functions and 12 data clustering problems. The Wilcoxon signed-rank test conducted on the obtained results confirms the superiority of our proposed algorithm in both the mathematical benchmark functions and the data clustering problems. The Friedman and Nemenyi hypothesis test on the benchmark function results (minimization problems) shows that our algorithm achieves the lowest mean rank (128.60), which differs from those of the other algorithms by at least the critical distance (CD), and rejects the null hypothesis at p-value = .000 and CD = 3.1. Additionally, the results obtained on the benchmark clustering problems (maximization problems) show that our proposed algorithm achieves the highest mean results in 10 clustering problems, namely the Bupa, Haberman’s Survival, Hepatitis, Indian Liver Patient, Iris, Liver, Mammographic Mass, Seeds, WDBC and Zoo datasets, considering the accuracy performance measure. In the precision performance measure, our algorithm achieves the highest mean results in 6 benchmark clustering problems, namely the Haberman’s Survival, Iris, Liver, Mammographic Mass, Seeds and WDBC datasets.

Similarly, considering the F-score performance measure, our algorithm performs best in 7 clustering problems, namely the Haberman’s Survival, Indian Liver Patient, Iris, Mammographic Mass, Seeds, WDBC and Zoo datasets. Considering the MCC performance measure, our proposed algorithm achieves the highest mean results in 7 clustering problems, namely the Bupa, Iris, Liver, Mammographic Mass, Seeds, WDBC and Zoo datasets. The Friedman and Nemenyi hypothesis test also shows that our proposed algorithm achieves the highest mean ranks of 868.65, 735.61, 812.79 and 818.92 for the accuracy, precision, F-score and MCC performance measures, respectively. These mean ranks differ from those of all the other meta-heuristic algorithms by at least the critical distance, with p-value = .000 and CD = 4.4. Hence, on the evidence of the statistical results, the proposed GWO algorithm is statistically superior to the other meta-heuristic algorithms considered in this study. One can observe from the benchmark results that our proposed algorithm is able to overcome the local convergence issue for most multimodal problems. The results also reveal that, for some unimodal problems, it shows comparatively inferior performance to some of the meta-heuristic algorithms. However, the superiority of our proposed algorithm is statistically verified for the results obtained on both the mathematical benchmark functions and the clustering problems. Therefore, it is concluded that the proposed algorithm is highly competitive in avoiding local convergence issues and can be applied to solve different multimodal optimization problems. The following are some of the future research directions and academic implications of our proposed algorithm.

The GWO with the differential perturbation operator enhances the diversity among the solutions and hence improves the balance between exploration and exploitation. Hence, it can be applied to avoid the local convergence issues of multimodal problems.

The proposed enhanced GWO algorithm can be applied to address engineering design problems.

Moreover, the proposed algorithm with better trade-off between exploration and exploitation can be modified to tackle the large-scale global optimization (LSGO) problems.

The proposed GWO algorithm can be applied for parameter optimization and feature selection for complex real world problems.

The proposed GWO algorithm can be applied to address multi-objective optimization problems such as path planning of multi-robots, vehicle ad-hoc networks, clustering for wireless sensor networks and task scheduling for heterogeneous cloud environments.

The proposed GWO algorithm can be used to define optimized fitness function for calculating weight values in artificial neural network.

The proposed GWO algorithm can be integrated with gradient descent optimizer or independently applied for optimizing parameters and hyper-parameters of deep learning models.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Abualigah, L., M. Abd Elaziz, P. Sumari, Z. Woo Geem, and A. H. Gandomi. 2022. Reptile Search Algorithm (RSA): A Nature-Inspired Meta-Heuristic Optimizer. Expert Systems with Applications 191:116158. doi:10.1016/j.eswa.2021.116158.

- Abualigah, L., and M. Alkhrabsheh. 2022. Amended Hybrid Multi-Verse Optimizer with Genetic Algorithm for Solving Task Scheduling Problem in Cloud Computing. The Journal of Supercomputing 78 (1):740–383. doi:10.1007/s11227-021-03915-0.

- Abualigah, L., and A. Diabat. 2020. A Comprehensive Survey of the Grasshopper Optimization Algorithm: Results, Variants, and Applications. Neural Computing & Applications 32 (19):15533–56. doi:10.1007/s00521-020-04789-8.

- Abualigah, L., A. Diabat, S. Mirjalili, M. Abd Elaziz, and A. H. Gandomi. 2021. The Arithmetic Optimization Algorithm. Computer Methods in Applied Mechanics and Engineering 376:113609. doi:10.1016/j.cma.2020.113609.

- Ahmadi, R., G. Ekbatanifard, and P. Bayat. 2021. A Modified Grey Wolf Optimizer Based Data Clustering Algorithm. Applied Artificial Intelligence 35 (1):63–79. doi:10.1080/08839514.2020.1842109.

- Akbari, E., A. Rahimnejad, and S. Andrew Gadsden. 2021. A Greedy Non-Hierarchical Grey Wolf Optimizer for Real-World Optimization. Electronics letters 57 (13):499–501. doi:10.1049/ell2.12176.

- Alba, E., and B. Dorronsoro. 2005. The Exploration/Exploitation Tradeoff in Dynamic Cellular Genetic Algorithms. IEEE Transactions on Evolutionary Computation 9 (2):126–42. doi:10.1109/TEVC.2005.843751.

- Alotaibi, Y. 2022. A New Meta-Heuristics Data Clustering Algorithm Based on Tabu Search and Adaptive Search Memory. Symmetry 14 (3):623. doi:10.3390/sym14030623.

- Al-Sultan, K. S. 1995. A Tabu Search Approach to the Clustering Problem. Pattern recognition 28 (9):1443–51. doi:10.1016/0031-3203(95)00022-R.

- Al-Tashi, Q., S. Jadid Abdul Kadir, H. Md Rais, S. Mirjalili, and H. Alhussian. 2019. Binary Optimization Using Hybrid Grey Wolf Optimization for Feature Selection. IEEE Access 7:39496–508. doi:10.1109/ACCESS.2019.2906757.

- Al-Tashi, Q., H. Rais, and S. Jadid. 2018. “Feature Selection Method Based on Grey Wolf Optimization for Coronary Artery Disease Classification.” In International Conference of Reliable Information and Communication Technology, Hotel Bangi-Putrajaya, Kuala Lumpur, Malaysia, 257–66.

- Aydilek, I. B. 2018. A Hybrid Firefly and Particle Swarm Optimization Algorithm for Computationally Expensive Numerical Problems. Applied Soft Computing 66:232–49. doi:10.1016/j.asoc.2018.02.025.

- Azizi, M., S. Arash Mousavi Ghasemi, R. Goli Ejlali, and S. Talatahari. 2020. Optimum Design of Fuzzy Controller Using Hybrid Ant Lion Optimizer and Jaya Algorithm. Artificial Intelligence Review 53 (3):1553–84. doi:10.1007/s10462-019-09713-8.

- Bansal, J. C., S. Kumar Joshi, and A. K. Nagar. 2018. Fitness Varying Gravitational Constant in GSA. Applied Intelligence 48 (10):3446–61. doi:10.1007/s10489-018-1148-8.

- Bansal, J. C., and S. Singh. 2021. A Better Exploration Strategy in Grey Wolf Optimizer. Journal of Ambient Intelligence and Humanized Computing 12 (1):1099–118. doi:10.1007/s12652-020-02153-1.

- Biswas, A., K. K. Mishra, S. Tiwari, and A. K. Misra. 2013. Physics-Inspired Optimization Algorithms: A Survey. Journal of Optimization 2013:1–16. doi:10.1155/2013/438152.

- Bratton, D., and J. Kennedy. 2007. “Defining a Standard for Particle Swarm Optimization.” In 2007 IEEE Swarm Intelligence Symposium, Honolulu, Hawaii, 120–27.

- Cao, C., D. Chicco, and M. M. Hoffman. 2020. The MCC-F1 Curve: A Performance Evaluation Technique for Binary Classification. arXiv preprint arXiv:2006.11278. https://arxiv.org/abs/2006.1127

- Chen, H., X. Yueting, M. Wang, and X. Zhao. 2019. A Balanced Whale Optimization Algorithm for Constrained Engineering Design Problems. Applied Mathematical Modelling 71:45–59. doi:10.1016/j.apm.2019.02.004.

- Chicco, D., and G. Jurman. 2020. The Advantages of the Matthews Correlation Coefficient (MCC) over F1 Score and Accuracy in Binary Classification Evaluation. BMC Genomics 21 (1):1–13. doi:10.1186/s12864-019-6413-7.

- Das, S., A. Abraham, and A. Konar. 2008. Automatic Kernel Clustering with a Multi-Elitist Particle Swarm Optimization Algorithm. Pattern Recognition Letters 29 (5):688–99. doi:10.1016/j.patrec.2007.12.002.

- Dorigo, M. 2007. Ant Colony Optimization. Scholarpedia 2 (3):1461. doi:10.4249/scholarpedia.1461.

- Draa, A., S. Bouzoubia, and I. Boukhalfa. 2015. A Sinusoidal Differential Evolution Algorithm for Numerical Optimisation. Applied Soft Computing 27:99–126. doi:10.1016/j.asoc.2014.11.003.

- Dua, D., and C. Graff. 2017. “UCI Machine Learning Repository.” http://archive.ics.uci.edu/ml.

- Elkorany, A. S., M. Marey, K. M. Almustafa, and Z. F. Elsharkawy. 2022. Breast Cancer Diagnosis Using Support Vector Machines Optimized by Whale Optimization and Dragonfly Algorithms. IEEE Access 10. doi:10.1109/ACCESS.2022.3186021.

- Fan, Q., H. Huang, Y. Li, Z. Han, Y. Hu, and D. Huang. 2021. Beetle Antenna Strategy Based Grey Wolf Optimization. Expert Systems with Applications 165:113882. doi:10.1016/j.eswa.2020.113882.

- Faris, H., I. Aljarah, M. Azmi Al-Betar, and S. Mirjalili. 2018. Grey Wolf Optimizer: A Review of Recent Variants and Applications. Neural Computing & Applications 30 (2):413–35. doi:10.1007/s00521-017-3272-5.

- Gao, Z. M., and J. Zhao. 2019. An Improved Grey Wolf Optimization Algorithm with Variable Weights. Computational Intelligence and Neuroscience 2019:1–13. doi:10.1155/2019/2981282.

- Ghany, K. K. A., A. Mohamed AbdelAziz, T. Hassan A. Soliman, and A. Abu El-Magd Sewisy. 2022. A Hybrid Modified Step Whale Optimization Algorithm with Tabu Search for Data Clustering. Journal of King Saud University-Computer and Information Sciences 34 (3):832–39.

- Gupta, S., and K. Deep. 2019. Hybrid Grey Wolf Optimizer with Mutation Operator. In Soft Computing for Problem Solving, edited by J. C. Bansal, K. N. Das, A. Nagar, K. Deep, and A. K. Ojha, 961–68. Singapore: Springer.

- Hatamlou, A. 2013. Black Hole: A New Heuristic Optimization Approach for Data Clustering. Information Sciences 222:175–84. doi:10.1016/j.ins.2012.08.023.

- Heidari, A. A., S. Mirjalili, H. Faris, I. Aljarah, M. Mafarja, and H. Chen. 2019. Harris Hawks Optimization: Algorithm and Applications. Future Generation Computer Systems 97:849–72. doi:10.1016/j.future.2019.02.028.

- Holland, J. H. 1992. Genetic Algorithms. Scientific American 267 (1):66–73. doi:10.1038/scientificamerican0792-66.

- Hooda, H., and O. Prakash Verma. 2022. Fuzzy Clustering Using Gravitational Search Algorithm for Brain Image Segmentation. Multimedia Tools and Applications 81 (20):1–20. doi:10.1007/s11042-022-12336-x.

- Hou, Y., H. Gao, Z. Wang, and D. Chuansheng. 2022. Improved Grey Wolf Optimization Algorithm and Application. Sensors 22 (10):3810. doi:10.3390/s22103810.

- Hung, C. C., and H. Purnawan. 2008. “A Hybrid Rough K-Means Algorithm and Particle Swarm Optimization for Image Classification.” In Mexican International Conference on Artificial Intelligence, Berlin Heidelberg, 585–93.

- Ikotun, A. M., A. E. Ezugwu, L. Abualigah, B. Abuhaija, and J. Heming. 2022. K-Means Clustering Algorithms: A Comprehensive Review, Variants Analysis, and Advances in the Era of Big Data. Information Sciences 622:178–210. doi:10.1016/j.ins.2022.11.139.

- Jamil, M., and X. S. Yang. 2013. A Literature Survey of Benchmark Functions for Global Optimization Problems. arXiv preprint arXiv:1308.4008 4 (2):150–94.

- Jayabarathi, T., T. Raghunathan, B. R. Adarsh, and P. Nagaratnam Suganthan. 2016. Economic Dispatch Using Hybrid Grey Wolf Optimizer. Energy 111:630–41. doi:10.1016/j.energy.2016.05.105.

- Jayakumar, N., S. Subramanian, S. Ganesan, and E. B. Elanchezhian. 2016. Grey Wolf Optimization for Combined Heat and Power Dispatch with Cogeneration Systems. International Journal of Electrical Power & Energy Systems 74:252–64. doi:10.1016/j.ijepes.2015.07.031.

- Kamboj, V. K. 2016. A Novel Hybrid PSO–GWO Approach for Unit Commitment Problem. Neural Computing & Applications 27 (6):1643–55. doi:10.1007/s00521-015-1962-4.

- Kamboj, V. K., S. K. Bath, and J. S. Dhillon. 2016. Solution of Non-Convex Economic Load Dispatch Problem Using Grey Wolf Optimizer. Neural Computing & Applications 27 (5):1301–16. doi:10.1007/s00521-015-1934-8.

- Kapoor, S., I. Zeya, C. Singhal, and S. Jagannath Nanda. 2017. A Grey Wolf Optimizer Based Automatic Clustering Algorithm for Satellite Image Segmentation. Procedia Computer Science 115:415–22. doi:10.1016/j.procs.2017.09.100.

- Karaboga, D. 2010. Artificial Bee Colony Algorithm. Scholarpedia 5 (3):6915. doi:10.4249/scholarpedia.6915.

- Kar, M. K., S. Kumar, A. K. Kumar Singh, S. Panigrahi, M. Cherukuri, and P. Sharma. 2022. Design and Analysis of FOPID-Based Damping Controllers Using a Modified Grey Wolf Optimization Algorithm. International Transactions on Electrical Energy Systems 2022:1–31. doi:10.1155/2022/5339630.

- Katarya, R., and O. Prakash Verma. 2018. Recommender System with Grey Wolf Optimizer and FCM. Neural Computing & Applications 30 (5):1679–87. doi:10.1007/s00521-016-2817-3.

- Kaveh, A., and K. Biabani Hamedani. 2022. Improved Arithmetic Optimization Algorithm and Its Application to Discrete Structural Optimization. Structures 35:748–64. doi:10.1016/j.istruc.2021.11.012.

- Kennedy, J., and R. Eberhart. 1995. “Particle Swarm Optimization.” In Proceedings of ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 4:1942–48.

- Khairuzzaman, A. K. M., and S. Chaudhury. 2017. Multilevel Thresholding Using Grey Wolf Optimizer for Image Segmentation. Expert Systems with Applications 86:64–76. doi:10.1016/j.eswa.2017.04.029.

- Kiani, F., and A. Seyyedabbasi. 2022. Metaheuristic Algorithms in IoT: Optimized Edge Node Localization. In Engineering Applications of Modern Metaheuristics, 19–39. Cham: Springer.

- Kiani, F., A. Seyyedabbasi, S. Nematzadeh, F. Candan, T. Çevik, F. Aysin Anka, G. Randazzo, S. Lanza, and A. Muzirafuti. 2022. Adaptive Metaheuristic-Based Methods for Autonomous Robot Path Planning: Sustainable Agricultural Applications. Applied Sciences 12 (3):943. doi:10.3390/app12030943.

- Kirkpatrick, S., C. Daniel Gelatt, and M. P. Vecchi. 1983. Optimization by Simulated Annealing. Science 220 (4598):671–80. doi:10.1126/science.220.4598.671.

- Kumar, V., J. Kumar Chhabra, and D. Kumar. 2017. Grey Wolf Algorithm-Based Clustering Technique. Journal of Intelligent Systems 26 (1):153–68. doi:10.1515/jisys-2014-0137.

- Lamos-Sweeney, J. D. 2012. Deep Learning Using Genetic Algorithms. Rochester Institute of Technology.

- Lee, J., and D. Perkins. 2021. A Simulated Annealing Algorithm with a Dual Perturbation Method for Clustering. Pattern Recognition 112:107713. doi:10.1016/j.patcog.2020.107713.

- Lin, L., and M. Gen. 2009. Auto-Tuning Strategy for Evolutionary Algorithms: Balancing between Exploration and Exploitation. Soft Computing 13 (2):157–68. doi:10.1007/s00500-008-0303-2.

- Liu, Y., W. Xindong, and Y. Shen. 2011. Automatic Clustering Using Genetic Algorithms. Applied Mathematics and Computation 218 (4):1267–79. doi:10.1016/j.amc.2011.06.007.

- Long, W., J. Jiao, X. Liang, and M. Tang. 2018. An Exploration-Enhanced Grey Wolf Optimizer to Solve High-Dimensional Numerical Optimization. Engineering Applications of Artificial Intelligence 68:63–80. doi:10.1016/j.engappai.2017.10.024.

- Maulik, U., and S. Bandyopadhyay. 2000. Genetic Algorithm-Based Clustering Technique. Pattern Recognition 33 (9):1455–65. doi:10.1016/S0031-3203(99)00137-5.

- Merwe, D. W. V. D., and A. P. Engelbrecht. 2003. “Data Clustering Using Particle Swarm Optimization.” 2003 Congress on Evolutionary Computation, CEC 2003 - Proceedings 1:215–20. doi:10.1109/CEC.2003.1299577.

- Merwe, D. W. D., and A. Petrus Engelbrecht. 2003. Data Clustering Using Particle Swarm Optimization. The 2003 Congress on Evolutionary Computation (CEC’03) 1:215–20.

- Mirjalili, S. 2015a. The Ant Lion Optimizer. Advances in Engineering Software 83:80–98. doi:10.1016/j.advengsoft.2015.01.010.

- Mirjalili, S. 2015b. How Effective is the Grey Wolf Optimizer in Training Multi-Layer Perceptrons. Applied Intelligence 43 (1):150–61. doi:10.1007/s10489-014-0645-7.

- Mirjalili, S. 2015c. Moth-Flame Optimization Algorithm: A Novel Nature-Inspired Heuristic Paradigm. Knowledge-Based Systems 89:228–49. doi:10.1016/j.knosys.2015.07.006.

- Mirjalili, S. 2016a. Dragonfly Algorithm: A New Meta-Heuristic Optimization Technique for Solving Single-Objective, Discrete, and Multi-Objective Problems. Neural Computing & Applications 27 (4):1053–73. doi:10.1007/s00521-015-1920-1.

- Mirjalili, S. 2016b. SCA: A Sine Cosine Algorithm for Solving Optimization Problems. Knowledge-Based Systems 96:120–33. doi:10.1016/j.knosys.2015.12.022.

- Mirjalili, S., and A. Lewis. 2016. The Whale Optimization Algorithm. Advances in Engineering Software 95:51–67. doi:10.1016/j.advengsoft.2016.01.008.

- Mirjalili, S. Z., S. Mirjalili, S. Saremi, H. Faris, and I. Aljarah. 2018. Grasshopper Optimization Algorithm for Multi-Objective Optimization Problems. Applied Intelligence 48 (4):805–20. doi:10.1007/s10489-017-1019-8.

- Mirjalili, S., S. Mohammad Mirjalili, and A. Hatamlou. 2016. Multi-Verse Optimizer: A Nature-Inspired Algorithm for Global Optimization. Neural Computing & Applications 27 (2):495–513. doi:10.1007/s00521-015-1870-7.

- Mirjalili, S., S. Mohammad Mirjalili, and A. Lewis. 2014a. Grey Wolf Optimizer. Advances in Engineering Software 69:46–61. doi:10.1016/j.advengsoft.2013.12.007.

- Mirjalili, S., S. Mohammad Mirjalili, and A. Lewis. 2014b. Grey Wolf Optimizer. Advances in Engineering Software 69 (March):46–61. doi:10.1016/j.advengsoft.2013.12.007.

- Mirjalili, S., S. Zaiton Mohd Hashim, and H. Moradian Sardroudi. 2012. Training Feedforward Neural Networks Using Hybrid Particle Swarm Optimization and Gravitational Search Algorithm. Applied Mathematics and Computation 218 (22):11125–37. doi:10.1016/j.amc.2012.04.069.

- Mittal, N., U. Singh, and B. Singh Sohi. 2016. Modified Grey Wolf Optimizer for Global Engineering Optimization. Applied Computational Intelligence and Soft Computing 2016:1–16. doi:10.1155/2016/7950348.

- Nadimi-Shahraki, M. H., S. Taghian, and S. Mirjalili. 2021. An Improved Grey Wolf Optimizer for Solving Engineering Problems. Expert Systems with Applications 166:113917. doi:10.1016/j.eswa.2020.113917.

- Obadina, O. O., M. A. Thaha, Z. Mohamed, and M. Hasan Shaheed. 2022. Grey-Box Modelling and Fuzzy Logic Control of a Leader–Follower Robot Manipulator System: A Hybrid Grey Wolf–Whale Optimisation Approach. ISA Transactions 129:572–93. doi:10.1016/j.isatra.2022.02.023.

- Olorunda, O., and A. P. Engelbrecht. 2008. “Measuring Exploration/Exploitation in Particle Swarms Using Swarm Diversity.” In 2008 IEEE Congress on Evolutionary Computation (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1128–34.

- Panigrahi, S., and H. Sekhar Behera. 2019. Nonlinear Time Series Forecasting Using a Novel Self-Adaptive TLBO-MFLANN Model. International Journal of Computational Intelligence Studies 8 (1–2):4–26. doi:10.1504/IJCISTUDIES.2019.098013.