ABSTRACT

In this paper, we present an approach that improves the effectiveness of automatic music genre classification by integrating emotion with intelligent algorithms. We propose an automatic recognition and classification algorithm for music spectra that exploits emotional cues extracted from the music to improve classification accuracy. To this end, we introduce weight coefficients that are continuously adjusted according to the convergence behavior of the previous iteration. The size of each weight coefficient is adaptively controlled to reduce the number of iterations of the reconstruction process, which lowers the algorithm's computational complexity and speeds up its convergence. We conducted several experiments to evaluate the proposed method. The results show that integrating emotion and intelligent algorithms significantly improves the accuracy of automatic music genre classification while reducing computational complexity and accelerating convergence. The proposed approach therefore offers a promising, emotion-aware solution for automatic music genre classification that is applicable in a variety of scenarios.

Introduction

European and American rock music is ubiquitous today. Changing times, the culture embodied in music, the expression of personal emotion, and a focus on the music itself have gradually made popular music a worldwide trend, and these factors also formed the foundation of mainland popular music (Amendola et al. 2017). Half of pop music is made in the music factory; the other half is made by the behavior of audiences in the market. Although spokespersons for pop music acknowledge that it is a tool of American hegemony and that the global music industry is controlled by Anglo-American interest groups, they still criticize the political culture of some of the countries that pop music affects. The Frankfurt School relies more on dogmatic theory than on empirical methods and tends to exaggerate the homogenizing effects of commercial culture (Anaya Amarillas 2021).

Criticism of cultural imperialism oversimplifies the driving forces of cultural production and the power of consumption. As with movies and TV shows, one cannot conclude that audiences passively absorb Anglo-American culture just because the whole world is listening to Anglo-American pop songs (Cano et al. 2018). Critics in the Frankfurt School tradition (with the exception of some prominent scholars) underestimate the ability of consumers in different countries to filter foreign information through their own cultural experience and to interpret its meaning. These critics also assume that American cultural imperialism is the product of economic domination, especially the ownership of capital (Costa-Giomi and Benetti 2017). Communication and popularity interact with each other. In particular, modern mass media provide new production technologies and forms of consumption for pop music, expand the "perceivable information" of pop music in audio-visual form, and even directly shape the content and connotation of pop music itself (Dickens, Greenhalgh, and Koleva 2018). In this way, pop music is closely tied to economic development: the economy shapes musical trends, and pop music in turn promotes economic development. Operating according to the laws of economic development, pop music keeps changing, and at times degenerating, in its struggle for survival, and it is in constant friction with its own artistic aspirations.

Pop music is typically defined as a genre of popular music that originated in the 1950s and is characterized by catchy melodies, simple chord progressions, and relatable lyrics. The genre has evolved over time, incorporating elements of other genres such as rock, R&B, and hip hop, and continues to be a dominant force in the music industry today.

One of the defining features of pop music is its ability to reflect and comment on social issues. Pop songs often address topics such as love, relationships, and personal struggles, but they can also tackle larger societal issues such as politics, inequality, and social justice. In this sense, pop music can serve as a medium for artists to express their views on current events and connect with their audience on a deeper level.

Another aspect of pop music is its focus on creating music that appeals to the masses. Pop songs are often designed to be easily recognizable and memorable, with simple melodies and catchy hooks that can be quickly learned and sung along to. This emphasis on accessibility has helped pop music to reach a wide audience, making it one of the most commercially successful genres of music.

However, while pop music may be designed to please the masses, this does not necessarily mean that it is devoid of artistic merit. Many pop artists are skilled musicians and songwriters who are able to create music that is both commercially successful and artistically meaningful. Moreover, pop music continues to evolve and incorporate new sounds and styles, reflecting the changing tastes and interests of its audience.

In summary, pop music remains an influential and popular genre that is both reflective of and responsive to social issues. While its emphasis on accessibility and mass appeal may be seen as limiting by some, pop music continues to evolve and push the boundaries of what is possible within the genre.

The relationship between "communication" and "popularity" is both mutually dependent and interactive (Gonçalves and Schiavoni 2020). While the mass media maximize the connection between pop music and its audience, they also go beyond the role of an intermediary, so that pop music, through the filter of cultural consumption, penetrates directly into the daily life of the audience. However, once an avant-garde idea is accepted and assimilated by the public, it ceases to be avant-garde (Gorbunova 2019). Rock music is popular today because of its uniqueness. Its unconventional personality seems fresh to society, and no matter how unrestrained or strange it is, as long as it is in sync with the social psychology of the time, it forms the basis for commercialization. When commerce labels anger, pain, and laughter, and each piece comes off the industrialized assembly line to be sold to the public, the sentiment it carries loses its shape. The relationship between commerciality and artistry is bound to break down after repeated conflicts and compromises. At that point, commerce will attach itself to the next emerging culture and begin the next round of conflict and compromise (Gorbunova and Petrova 2019). This implies that the relationship between commerciality and artistry is inherently conflict-prone and unstable, and that the tension between them inevitably leads to a breakdown. The breakdown occurs when the two values can no longer coexist peacefully and a choice must be made between them.

When this happens, the business world will be forced to adapt to the emergence of a new culture that challenges existing values and assumptions. This will likely involve a new round of conflict and compromise as the business world seeks to reconcile its own interests with those of the emerging culture. It is important to note that the relationship between commerciality and artistry is not fixed or immutable; it evolves constantly as new values and cultural norms emerge. As such, the conflict and compromise between these two values form an ongoing process.

Ultimately, the relationship between commerciality and artistry is a complex and multifaceted one that cannot be reduced to a simple binary opposition. While there will always be tension and conflict between these two values, it is also possible for them to coexist in a way that is mutually beneficial and sustainable. Achieving this balance requires a nuanced understanding of the relationship between these two values and a willingness to engage in ongoing dialogue and compromise.

The most formidable power of the Internet is that it directly makes "the world flat." Once music is digitized, it no longer needs the assembly line of a disc-pressing plant, time-consuming and expensive transportation, a place on the limited shelves of a record store, or broadcast TV with its scarce channels. What it needs is network storage space and bandwidth (Khulusi et al. 2020). These two resources, which are becoming ever cheaper and more freely available, provide a convenient path and favorable conditions for independent music in digital form, making the dissemination and sale of music something in which everyone can participate. This mode of dissemination is a new creation and a new starting point for music technology in the field of communication, and it will have a profound impact on the musical life of people today and in the future (Magnusson 2021). Network technology has made the scale and speed of music dissemination unprecedented and explosive. At this stage, more music, rather than more capital, has truly become the core driving force of the music industry. People's conception of music has also changed fundamentally. Changes in musical concepts have significant knock-on effects on musical behavior, social music production, musical phenomena, and the development of music in society, and the globalization and integration of music have followed (Michalakos 2021).

Although every pop musician strives for commercial success, the value of an individual artist or a specific work cannot be judged solely by commercial sales. For the most part, popularity has little to do with the quality of a piece's melody, harmony, rhythm, and structure, because popular music is not just music in itself but to a large extent a sociocultural behavior and activity (Scavone and Smith 2021).

As a category of popular music, pop music has a particular mode of existence. Many musical factors combine to form its style, and it also carries particular significance as a musical genre (Stensæth 2018). But these are properties of the music itself. As the word "pop" suggests, pop music also needs a social environment that fosters popularity. Popular music is a musical product that emerged from the accumulation of various social and musical factors. These factors formed the social and cultural foundation for its development, and the maturing of this foundation carried popular music into its current, distinctive historical era (Tabuena 2020).

Pop music is an important cultural concept within American popular music and reveals distinctive cultural characteristics of American society. Its formation allowed Americans to move beyond the racial conflicts of their history and showed the world that people of different races and skin colors can communicate and live in harmony through music. The spread of popular music across the United States and the rest of the world brought about a revolution in music, beliefs, behavior, customs, and culture, leading to profound changes in some aspects of society (Turchet and Barthet 2019). It is undeniable that no other music in the world has shaken the whole world as strongly as American pop music, or had such a strong impact on the culture and daily life of people in the United States and around the world (Turchet, West, and Wanderley 2021).

The task of identifying and classifying music genres involves several steps that are essential for accurate classification. These steps include preprocessing of the music spectrum, extraction of music features, and discrimination of music genres.

The first step in this process is preprocessing, which is critical in music spectrum processing. Its primary purpose is to prepare the music spectrum for the next stage of feature extraction. Preprocessing techniques may involve filtering, smoothing, or segmenting the spectrum to improve the quality of the data and remove any unwanted noise.

The second step is feature extraction, which extracts relevant information from the preprocessed music spectrum. The extracted feature parameters are a compact re-expression of the music spectrum; this step is needed because the spectrum contains a great deal of redundancy. If the time-domain audio spectrum were fed directly into the classification system, the computational cost would be too high. Feature extraction reduces the dimensionality of the data, making it easier to classify the music into different genres.

The final step is discrimination, where the extracted feature parameters are input into the classifier. The classifier models the features by adjusting its parameters, which are obtained through training. The classifier assigns the music to a particular genre based on the extracted features and its model parameters.

In summary, identifying and classifying music genres involves preprocessing the music spectrum to prepare it for feature extraction. The features are then extracted from the spectrum to reduce its dimensionality. Finally, the extracted features are used to train a classifier that can accurately discriminate between different music genres. These steps are crucial in achieving accurate and efficient classification of music genres.
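The three-stage pipeline above (preprocessing, feature extraction, discrimination) can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: Hann-windowed framing as preprocessing, log band energies as features, and a nearest-centroid classifier are hypothetical stand-ins chosen for brevity, as are all function names and the synthetic "genres".

```python
import numpy as np

# Hypothetical stand-ins for the three stages; not the paper's actual components.

def preprocess(signal, frame_len=256, hop=128):
    """Remove the DC offset and cut the signal into overlapping Hann-windowed frames."""
    signal = signal - np.mean(signal)
    n_frames = 1 + (len(signal) - frame_len) // hop
    window = np.hanning(frame_len)
    return np.stack([signal[i * hop:i * hop + frame_len] * window
                     for i in range(n_frames)])

def extract_features(frames, n_bands=8):
    """Log band energies of each frame's magnitude spectrum, averaged over frames."""
    mag = np.abs(np.fft.rfft(frames, axis=1))        # (n_frames, frame_len // 2 + 1)
    bands = np.array_split(mag, n_bands, axis=1)     # contiguous frequency bands
    energies = np.stack([band.sum(axis=1) for band in bands], axis=1)
    return np.log1p(energies).mean(axis=0)           # one n_bands vector per clip

class NearestCentroid:
    """Each genre is modelled by the mean of its training feature vectors."""

    def fit(self, features, labels):
        self.labels = sorted(set(labels))
        self.centroids = np.stack([
            np.mean([x for x, lab in zip(features, labels) if lab == genre], axis=0)
            for genre in self.labels])
        return self

    def predict(self, x):
        distances = np.linalg.norm(self.centroids - x, axis=1)
        return self.labels[int(np.argmin(distances))]

# Toy usage: two synthetic "genres" made of low- and high-frequency tones.
sr = 8000
t = np.arange(sr) / sr
rng = np.random.default_rng(0)

def clip(freq):
    return np.sin(2 * np.pi * freq * t) + 0.05 * rng.standard_normal(t.size)

train = [(clip(f), "low") for f in (200, 300)] + [(clip(f), "high") for f in (3000, 3200)]
X = [extract_features(preprocess(s)) for s, _ in train]
clf = NearestCentroid().fit(X, [genre for _, genre in train])
print(clf.predict(extract_features(preprocess(clip(250)))))   # low
```

Replacing `NearestCentroid` with a trained model changes only the last stage; the preprocessing and feature extraction stay the same.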

The trained model then determines the genre to which a test music sample belongs (Vereshchahina-Biliavska et al. 2021). In the feature extraction module, traditional methods are used to extract music features, and the extraction process is complicated. With the rapid development of deep learning, researchers in music information retrieval have gradually begun to exploit the self-learning capability of deep learning algorithms to extract more abstract features that characterize the essential attributes of music (Way 2021).

The paper presents an innovative approach to improve the automatic classification of music genres. The proposed method integrates emotion and intelligent algorithms into the process of music genre classification. The method uses emotional cues that can be extracted from music to improve the accuracy of classification. The main innovation lies in the use of adaptive weighting coefficients that are continuously adjusted during the convergence process of the algorithm. The size of each weighting coefficient is adaptively controlled to reduce the number of iterations required for the reconstruction process, thereby reducing the algorithm’s computational complexity and speeding up its convergence.

The proposed approach is evaluated through several experiments, which demonstrate its effectiveness in improving the accuracy of automatic music genre classification. The integration of emotion and intelligent algorithms has been shown to significantly improve classification accuracy while also reducing the algorithm's computational complexity. The experimental results indicate that the proposed approach provides a promising solution for automatic music genre classification that takes emotional cues into account.

The significance of this work is that it provides a new approach to music genre classification that considers emotional cues. This approach has the potential to be applied in various scenarios, such as music recommendation systems, music information retrieval, and music analysis. The proposed method has the potential to improve the accuracy of these systems and reduce the computational complexity required to achieve this accuracy.

In summary, the paper presents an innovative approach to music genre classification that integrates emotion and intelligent algorithms. The proposed method is shown to significantly improve the accuracy of classification while reducing the algorithm’s computational complexity. This work has the potential to be applied in various scenarios and provides a promising solution for automatic music genre classification that takes into account emotional cues.

Automatic Recognition and Classification Algorithm of Music Spectrum

The methodology consists of three main steps:

In the first step, “Sparse reconstruction algorithm of frequency hopping music spectrum,” the researchers conduct a literature review and investigate existing methods for reconstructing sparse signals from frequency hopping music spectra.

In the second step, “Sparse reconstruction,” the researchers apply a sparse reconstruction algorithm to the frequency hopping music spectra. This involves identifying the sparse coefficients that correspond to the significant components of the signal and reconstructing the original signal from these coefficients.

Finally, in the third step, "Gradient projection reconstruction algorithm based on weight and step correction," the researchers use a gradient projection reconstruction algorithm that incorporates weight and step correction. This algorithm further refines the reconstructed signal and improves its accuracy, as presented in the corresponding figure.

Overall, the methodology involves a combination of research, signal processing techniques, and algorithmic refinement to reconstruct sparse signals from frequency hopping music spectra.

Sparse Reconstruction Algorithm of Frequency Hopping Music Spectrum

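For the sparse music spectrum $x \in \mathbb{R}^N$, the sparse representation is $x = \Psi f$ and the perception matrix is $A = \Phi\Psi$; the RIP criterion is then expressed as:

$$(1-\delta_K)\,\|f\|_2^2 \le \|Af\|_2^2 \le (1+\delta_K)\,\|f\|_2^2 \quad \text{for every K-sparse } f$$

where $\delta_K \in (0,1)$ is the K-order RIP constant.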

$A$ is the product of the measurement matrix $\Phi \in \mathbb{R}^{M \times N}$ and the sparse basis $\Psi \in \mathbb{R}^{N \times N}$ and is called the perception matrix. $f$ is the sparse coefficient vector of the music spectrum under the sparse basis $\Psi$. The sparse coefficient vector is also N-dimensional, but only a few (K) of its components are significantly larger than zero or than the remaining components. Generally, only these K components are retained and the others are set to zero, so the vector has only K non-zero components; that is, $f$ is K-sparse.

The purpose of this restriction on the measurement matrix is to ensure that two different K-sparse music spectra are never projected onto the same observation value, so that each observation corresponds to a unique sparse spectrum. In particular, the matrix formed by any M column vectors of the measurement (perception) matrix must be non-singular, which guarantees that $Af_1 \neq Af_2$ for any two distinct K-sparse coefficient vectors $f_1 \neq f_2$ whenever $2K \le M$.

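The correlation (coherence) between the sparse basis and the measurement matrix is defined as:

$$\mu(\Phi,\Psi) = \sqrt{N}\,\max_{1 \le i \le M,\ 1 \le j \le N} \left|\langle \phi_i, \psi_j \rangle\right|$$

where $\phi_i$ are the unit-norm rows of $\Phi$ and $\psi_j$ the unit-norm columns of $\Psi$. The coherence lies in the interval $[1, \sqrt{N}]$, and $\Phi$ and $\Psi$ are incoherent when it is small: the row vector $\phi_i$ of the matrix $\Phi$ cannot be sparsely represented by the column vectors $\psi_j$ of the sparse basis matrix $\Psi$, and the column vector $\psi_j$ of the sparse basis $\Psi$ cannot be sparsely represented by the row vectors $\phi_i$ of the measurement matrix.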
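As a numerical check of the coherence $\mu(\Phi,\Psi) = \sqrt{N}\max_{i,j}|\langle \phi_i, \psi_j\rangle|$ (unit-norm rows of $\Phi$, unit-norm columns of $\Psi$), the sketch below evaluates two textbook pairs: point sampling against the Fourier basis, which is maximally incoherent ($\mu = 1$), and point sampling against itself ($\mu = \sqrt{N}$). The function name is hypothetical and the pairs are illustrative, not the paper's matrices.

```python
import numpy as np

def mutual_coherence(Phi, Psi):
    """sqrt(N) * max |<phi_i, psi_j>| after normalising rows of Phi and columns of Psi."""
    Phi = Phi / np.linalg.norm(Phi, axis=1, keepdims=True)   # unit-norm measurement rows
    Psi = Psi / np.linalg.norm(Psi, axis=0, keepdims=True)   # unit-norm basis columns
    N = Psi.shape[0]
    return np.sqrt(N) * np.max(np.abs(Phi.conj() @ Psi))

N = 64
spikes = np.eye(N)                               # sampling individual points
fourier = np.fft.fft(np.eye(N)) / np.sqrt(N)     # orthonormal DFT basis (columns)
print(round(mutual_coherence(spikes, fourier), 6))   # 1.0 (maximally incoherent)
print(round(mutual_coherence(spikes, spikes), 6))    # 8.0 (= sqrt(64), fully coherent)
```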

To accurately restore the original music spectrum from its compressed samples, the coherence must satisfy the following condition: for a K-sparse music spectrum $x$, the dimension M of the compressed music spectrum $y$ must satisfy

$$M \ge c\,\mu^2(\Phi,\Psi)\,K\log N$$

where c is a known positive constant. Under this constraint, the original music spectrum can be reconstructed with high probability.
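The bound $M \ge c\,\mu^2 K \log N$ can be evaluated directly. Because the text does not give the value of c, the sketch below treats it as a hypothetical parameter (default 1) and uses the natural logarithm; the numbers show how M scales with $\mu$ and K, not the paper's actual settings.

```python
import math

def min_measurements(K, N, mu=1.0, c=1.0):
    """Smallest integer M with M >= c * mu^2 * K * ln(N); c is a hypothetical placeholder."""
    return math.ceil(c * mu ** 2 * K * math.log(N))

print(min_measurements(K=10, N=400))           # 60
print(min_measurements(K=10, N=400, mu=2.0))   # 240
```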

In a Gaussian random matrix, the elements are independent and identically distributed, each following a normal distribution with mean 0 and variance $1/M$. Such a matrix satisfies the RIP with high probability, so in this paper a Gaussian random matrix is used as the projection matrix for compressive sampling of the frequency hopping music spectrum. Combined with the sparse representation model, the sampling process can be expressed as:

$$y = \Phi x = \Phi\Psi f = Af$$

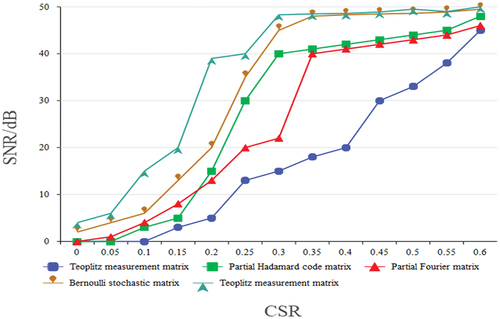

The music spectrum is then reconstructed from the compressed samples with the OMP algorithm. To average out random errors, the simulation was repeated 100 times. The resulting reconstruction performance curve is shown in the figure; its vertical axis is the signal-to-noise ratio (SNR) in dB.

The figure presents the reconstruction performance curve, which measures the quality of the reconstructed signal relative to the original signal. The vertical axis is the SNR, expressed in decibels (dB), a logarithmic unit for comparing the power of two signals, here the original signal and the reconstruction error. A higher SNR indicates a better reconstruction, with less noise and distortion relative to the original signal. The curve is obtained by varying the weight coefficients and evaluating the corresponding SNR values, so it shows the reconstruction performance of the algorithm at different weight coefficients and allows the optimal weight coefficients to be selected for the best reconstruction quality.
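The SNR on the vertical axis can be computed as below, assuming the usual definition as the energy ratio between the original spectrum and the reconstruction error; the function name is hypothetical. For the 100-trial simulation, the per-trial values would then be averaged.

```python
import numpy as np

def reconstruction_snr_db(x, x_hat):
    """SNR = 10 * log10(||x||^2 / ||x - x_hat||^2) in dB; higher means a closer reconstruction."""
    x = np.asarray(x, dtype=float)
    x_hat = np.asarray(x_hat, dtype=float)
    err = np.sum((x - x_hat) ** 2)
    if err == 0.0:
        return float("inf")            # exact reconstruction
    return float(10.0 * np.log10(np.sum(x ** 2) / err))

x = np.ones(100)
print(round(reconstruction_snr_db(x, x + 0.1), 6))   # 20.0
```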

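To satisfy the reconstruction condition, the sparsity K must therefore satisfy:

$$K \le \frac{M}{c\,\mu^2(\Phi,\Psi)\log N}$$

where c and $\mu(\Phi,\Psi)$ are the constant and coherence defined above.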

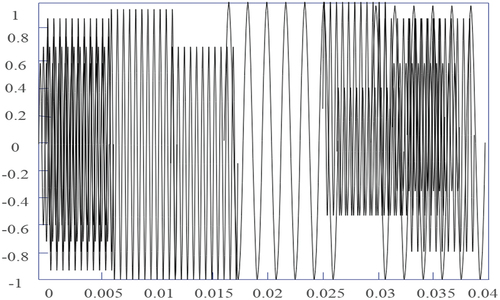

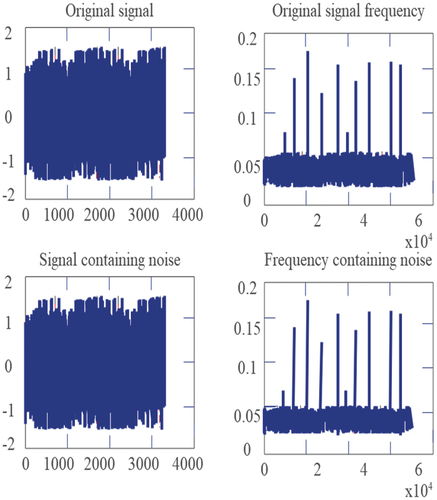

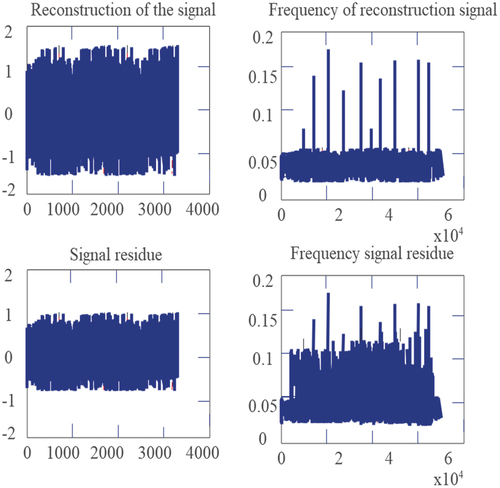

Because of the size of the observation matrix and the computational cost of compressive sampling, 400 points are used in the simulation, so the music spectrum has length 400. The compressed measurements are obtained by measuring the spectrum with the Gaussian random matrix. The original time-domain music spectrum and the measured music spectrum are shown in the corresponding figures.

Based on the Gaussian random matrix, this paper combines a sparse redundant dictionary to sparsely sample the frequency hopping music spectrum, which yields the time-domain plot of the compressively sampled music spectrum shown in the figure.

The measured values obtained with the Gaussian random matrix are shown in the figure: the original music spectrum of length 400 is multiplied by the Gaussian matrix to obtain the measurements.
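The compressive sampling step can be sketched with NumPy. Only N = 400 comes from the text; the number of measurements M = 120, the sparsity K = 10, the identity sparse basis (in place of the paper's sparse redundant dictionary), and the $1/M$ variance of the Gaussian entries are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
N, M, K = 400, 120, 10          # N from the text; M and K are illustrative assumptions

# K-sparse test spectrum (identity sparse basis assumed for simplicity)
x = np.zeros(N)
support = rng.choice(N, size=K, replace=False)
x[support] = rng.standard_normal(K)

# Gaussian measurement matrix with i.i.d. N(0, 1/M) entries (assumed variance)
Phi = rng.normal(0.0, 1.0 / np.sqrt(M), size=(M, N))
y = Phi @ x                      # compressed measurements, length M
print(x.shape, y.shape)          # (400,) (120,)
```

With variance $1/M$, each column of $\Phi$ has unit expected squared norm, so the measurement energy is on the same scale as the signal energy.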

Sparse Reconstruction

The process of reconstructing the original music spectrum from the compressively sampled music spectrum amounts to solving the underdetermined equation $y = Af$, where $A$ is the product of the measurement matrix and the sparse basis and $f$ is the sparse coefficient vector.

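To facilitate the theoretical description of the reconstruction that follows, we first define the $\ell_p$-norm. For the sparse music spectrum $x \in \mathbb{R}^N$, its $\ell_p$-norm is expressed as:

$$\|x\|_p = \left(\sum_{i=1}^{N} |x_i|^p\right)^{1/p}$$

where $p > 0$, and $\|x\|_p$ is called the $\ell_p$-norm of the vector x (strictly a quasi-norm when $0 < p < 1$). For $p = 0$, $\|x\|_0$ denotes the number of non-zero components of x.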

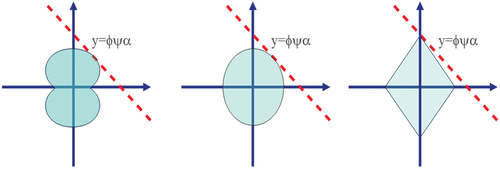

The geometric interpretation of the model is shown in the figure; from left to right, the panels correspond to $0 < p < 1$, $p = 1$, and $p = 2$.

In the figure, the intersection of the straight line (the constraint set) with the shaded norm ball represents the solution $\hat{x}$. The solution under the $\ell_2$-norm, that is, the intersection of the line with the $\ell_2$-ball, does not lie on a coordinate axis, so it is not sparse. The intersection of the line with the $\ell_1$-ball, by contrast, lies on the y-axis, so under certain conditions the $\ell_1$ problem has a sparse solution. The $\ell_p$-ball with $0 < p < 1$ on the left of the figure also yields a sparse solution. Therefore, in sparse reconstruction, the $\ell_0$-norm problem (a combinatorial optimization problem) has the same solution as the $\ell_1$-norm problem under certain conditions.
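The geometric argument can be reproduced with a single linear constraint $a^\top x = b$ in two dimensions, which plays the role of the straight line in the figure. The sketch below, with hypothetical names and values, compares the minimum $\ell_2$-norm point on the line (closed form $a\,b/\|a\|_2^2$) with the minimum $\ell_1$-norm point (all weight on the coordinate with the largest $|a_i|$).

```python
import numpy as np

def lp_norm(x, p):
    """||x||_p for p > 0; for p == 0, the number of non-zero entries."""
    x = np.asarray(x, dtype=float)
    if p == 0:
        return np.count_nonzero(x)
    return np.sum(np.abs(x) ** p) ** (1.0 / p)

# One linear constraint a^T x = b in R^2 (the "straight line" of the figure)
a = np.array([1.0, 2.0])
b = 2.0

# Minimum l2-norm point on the line: not on a coordinate axis
x_l2 = a * b / (a @ a)

# Minimum l1-norm point: a vertex of the l1-ball, i.e. on a coordinate axis
i = int(np.argmax(np.abs(a)))
x_l1 = np.zeros_like(a)
x_l1[i] = b / a[i]

print(x_l2, lp_norm(x_l2, 0))   # [0.4 0.8] 2
print(x_l1, lp_norm(x_l1, 0))   # [0. 1.] 1
```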

Combined with the compressive measurement model described above, we have $y = Af$. Reconstructing the original music spectrum from its compressed samples is essentially a regression problem. The most direct approach is to solve for the coefficient vector with the minimum $\ell_0$-norm. Because different levels of noise are present in practice, the equality constraint is relaxed and a constant parameter $\varepsilon$ is introduced to represent the noise level, giving:

$$\min_{f}\ \|f\|_0 \quad \text{s.t.} \quad \|y - Af\|_2 \le \varepsilon$$

Because the dimension M of the compressed measurements is much smaller than the dimension N of the coefficients, this is an underdetermined system. Solving the minimum $\ell_0$-norm problem requires traversing all $\binom{N}{K}$ possible positions of the non-zero values in the coefficient vector $f$ and then selecting the sparsest solution. The problem is therefore NP-hard.
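To make the combinatorial cost concrete, the sketch below solves the noiseless $\ell_0$ problem by brute force, trying supports of increasing size and fitting each one by least squares. It is feasible only for tiny N; the sizes, the seed, and the function name are hypothetical.

```python
import itertools
import math
import numpy as np

def l0_exhaustive(A, y, K, tol=1e-9):
    """Sparsest f with A f = y among supports of size <= K, found by trying every support."""
    N = A.shape[1]
    tried = 0
    for k in range(1, K + 1):
        for support in itertools.combinations(range(N), k):
            tried += 1
            cols = list(support)
            coef, *_ = np.linalg.lstsq(A[:, cols], y, rcond=None)
            if np.linalg.norm(A[:, cols] @ coef - y) <= tol * max(1.0, np.linalg.norm(y)):
                f = np.zeros(N)
                f[cols] = coef
                return f, tried
    return None, tried

rng = np.random.default_rng(0)
A = rng.standard_normal((6, 12))
f_true = np.zeros(12)
f_true[[3, 7]] = [1.5, -2.0]
f_hat, tried = l0_exhaustive(A, A @ f_true, K=2)
print(tried)                                          # 46 supports examined
print(f"{math.comb(400, 10):.2e} supports of size 10 when N = 400")
```

Even this toy case examines 46 supports; at the simulation size of N = 400 the count for K = 10 alone is astronomically large, which is why convex relaxations and greedy methods are used instead.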

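Because the coefficient vector $f$ is sparse, the non-convex $\ell_0$-norm optimization problem can be transformed into the convex minimum $\ell_1$-norm optimization problem:

$$\min_{f}\ \|f\|_1 \quad \text{s.t.} \quad \|y - Af\|_2 \le \varepsilon$$

An equivalent representation, for a suitable choice of $t \ge 0$, is:

$$\min_{f}\ \|y - Af\|_2^2 \quad \text{s.t.} \quad \|f\|_1 \le t$$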

For the above $\ell_1$-norm problem, methods based on the basis pursuit (BP) idea convert the constrained convex problem into an unconstrained convex optimization problem:

$$\min_{f}\ \frac{1}{2}\|y - Af\|_2^2 + \tau\|f\|_1$$

where $\tau > 0$ is the penalty (regularization) coefficient.

The GPSR (gradient projection for sparse reconstruction) algorithm consists of two parts: projection and backtracking line search. The backtracking line search ensures that the objective function decreases sufficiently, so that each iterative update satisfies the Armijo criterion.

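The algorithm works as follows. For the N-dimensional sparse music spectrum, variable splitting decomposes the sparse coefficient vector $f$ to be estimated into two components, $f = u - v$, where $u_i = (f_i)_+$, $v_i = (-f_i)_+$, and $(a)_+ = \max\{a, 0\}$. That is, the positive and negative parts of $f$ are separated, so that $u \ge 0$, $v \ge 0$, and the $\ell_1$-norm becomes the sum of two linear terms, $\|f\|_1 = \mathbf{1}_N^\top u + \mathbf{1}_N^\top v$, where $\mathbf{1}_N$ is the N-dimensional vector whose elements are all 1. The unconstrained problem can therefore be written as:

$$\min_{u,v}\ \frac{1}{2}\|y - A(u - v)\|_2^2 + \tau\mathbf{1}_N^\top u + \tau\mathbf{1}_N^\top v \quad \text{s.t.} \quad u \ge 0,\ v \ge 0$$

Up to the constant $\frac{1}{2}y^\top y$, this is equivalent to the following bound-constrained quadratic program:

$$\min_{z}\ F(z) = c^\top z + \frac{1}{2}z^\top B z \quad \text{s.t.} \quad z \ge 0$$

where

$$z = \begin{bmatrix} u \\ v \end{bmatrix},\quad b = A^\top y,\quad c = \tau\mathbf{1}_{2N} + \begin{bmatrix} -b \\ b \end{bmatrix},\quad B = \begin{bmatrix} A^\top A & -A^\top A \\ -A^\top A & A^\top A \end{bmatrix}$$

The gradient of this objective is:

$$\nabla F(z) = c + Bz$$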
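The construction of $c$, $B$, and the gradient can be checked numerically: for $u = (f)_+$ and $v = (-f)_+$, the quadratic program's objective plus the constant $\frac{1}{2}y^\top y$ should equal the original $\frac{1}{2}\|y - Af\|_2^2 + \tau\|f\|_1$. The random data and the function name are illustrative only.

```python
import numpy as np

def split_qp(A, y, tau):
    """Return (c, B) of  min_z c^T z + 0.5 z^T B z  s.t. z >= 0, with z = [u; v] and f = u - v."""
    N = A.shape[1]
    b = A.T @ y
    AtA = A.T @ A
    c = tau * np.ones(2 * N) + np.concatenate([-b, b])
    B = np.block([[AtA, -AtA], [-AtA, AtA]])
    return c, B

rng = np.random.default_rng(2)
A = rng.standard_normal((6, 10))
y = rng.standard_normal(6)
f = rng.standard_normal(10)
tau = 0.3

c, B = split_qp(A, y, tau)
z = np.concatenate([np.maximum(f, 0), np.maximum(-f, 0)])   # u = (f)_+, v = (-f)_+
qp_value = c @ z + 0.5 * z @ B @ z
original = 0.5 * np.sum((y - A @ f) ** 2) + tau * np.sum(np.abs(f))
print(np.isclose(qp_value + 0.5 * y @ y, original))   # True: they differ by 0.5 * y^T y
grad = c + B @ z                                       # gradient of the QP objective
```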

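Here $A$ denotes the perception matrix, and $A^\top A$ is taken to be a Toeplitz matrix. With step size $\alpha^{(k)} > 0$, z is updated through this scalar parameter as

$$z^{(k+1)} = \left(z^{(k)} - \alpha^{(k)}\nabla F(z^{(k)})\right)_+$$

that is, each iteration searches along the negative gradient direction, projects onto the non-negative orthant, and performs a backtracking line search until the stopping condition is met. The projected gradient $g^{(k)}$ is defined as follows:

$$g_i^{(k)} = \begin{cases} \left(\nabla F(z^{(k)})\right)_i, & \text{if } z_i^{(k)} > 0 \text{ or } \left(\nabla F(z^{(k)})\right)_i < 0 \\ 0, & \text{otherwise} \end{cases}$$

The initial step size is obtained by solving $\alpha_0 = \arg\min_{\alpha} F\left(z^{(k)} - \alpha g^{(k)}\right)$, which has the closed form $\alpha_0 = \dfrac{(g^{(k)})^\top g^{(k)}}{(g^{(k)})^\top B\, g^{(k)}}$. The core of the GPSR algorithm is thus to obtain a sequence of scalar step sizes $\{\alpha^{(k)}\}$ and to compute the iterates $z^{(k+1)}$ from them.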
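A compact projected-gradient loop in the style of GPSR-Basic is sketched below: the projected gradient $g$, the exact initial step $\alpha_0 = g^\top g / g^\top B g$, projection onto $z \ge 0$, and Armijo backtracking. The parameter values (backtracking factor 0.5, Armijo constant 0.1, tolerance) and the function name are assumptions, and the safeguard bounds on $\alpha_0$ used in the published GPSR method are omitted for brevity.

```python
import numpy as np

def gpsr_basic(A, y, tau, max_iter=500, beta=0.5, mu=0.1, tol=1e-8):
    """Projected gradient with Armijo backtracking on the split QP (GPSR-Basic style); returns f = u - v."""
    N = A.shape[1]
    b = A.T @ y
    AtA = A.T @ A
    c = tau * np.ones(2 * N) + np.concatenate([-b, b])
    B = np.block([[AtA, -AtA], [-AtA, AtA]])

    def F(z):
        return c @ z + 0.5 * z @ B @ z

    z = np.zeros(2 * N)
    for _it in range(max_iter):
        grad = c + B @ z
        # projected gradient: components that are free to move along -grad
        g = np.where((z > 0) | (grad < 0), grad, 0.0)
        gBg = g @ B @ g
        alpha = (g @ g) / gBg if gBg > 0 else 1.0    # exact minimiser of F(z - alpha * g)
        F_z = F(z)
        for _bt in range(50):                        # backtracking until Armijo holds
            z_new = np.maximum(z - alpha * grad, 0.0)
            if F(z_new) <= F_z - mu * grad @ (z - z_new):
                break
            alpha *= beta
        step = np.linalg.norm(z_new - z)
        z = z_new
        if step <= tol * max(1.0, np.linalg.norm(z)):
            break
    return z[:N] - z[N:]
```

A small τ relative to the coefficient magnitudes keeps the shrinkage bias of the $\ell_1$ penalty small.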

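The GPSR-BB algorithm solves the same problem, and in each iteration it computes the step

$$\delta^{(k)} = \left(z^{(k)} - \left(H^{(k)}\right)^{-1}\nabla F(z^{(k)})\right)_+ - z^{(k)}$$

where $H^{(k)}$ is an approximation of the Hessian matrix of F at $z^{(k)}$. In the algorithm it is approximated by $H^{(k)} = \eta^{(k)} I$, where $\eta^{(k)}$ is chosen so that this approximation mimics the behavior of the Hessian along the most recent step, namely:

$$\eta^{(k)}\left(z^{(k)} - z^{(k-1)}\right) \approx \nabla F(z^{(k)}) - \nabla F(z^{(k-1)})$$

Solving this relation in the least-squares sense, and using $\nabla F(z^{(k)}) - \nabla F(z^{(k-1)}) = B\left(z^{(k)} - z^{(k-1)}\right)$ for the quadratic F, gives the step size:

$$\alpha^{(k)} = \frac{1}{\eta^{(k)}} = \frac{\left(\delta^{(k-1)}\right)^\top \delta^{(k-1)}}{\left(\delta^{(k-1)}\right)^\top B\, \delta^{(k-1)}}$$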
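The Barzilai-Borwein step rule can be sketched as follows, together with a check that the least-squares fit of $\eta s \approx r$ (with $r = Bs$ for the quadratic F) gives exactly $\alpha = 1/\eta = s^\top s / s^\top B s$. The safeguard interval $[10^{-30}, 10^{30}]$ is an assumed default, not a value from the text, and the function name is hypothetical.

```python
import numpy as np

def bb_step(delta, B, alpha_min=1e-30, alpha_max=1e30):
    """GPSR-BB step alpha = (delta^T delta) / (delta^T B delta), clipped to [alpha_min, alpha_max]."""
    curv = delta @ B @ delta
    if curv <= 0:
        return alpha_max                  # no positive curvature along delta
    return float(np.clip((delta @ delta) / curv, alpha_min, alpha_max))

# Least-squares fit of eta * s ~= r with r = B s gives eta = s^T r / s^T s,
# so alpha = 1 / eta reproduces bb_step for a quadratic F.
rng = np.random.default_rng(0)
C = rng.standard_normal((6, 6))
B = C.T @ C                               # symmetric positive definite test Hessian
s = rng.standard_normal(6)                # s = z^(k) - z^(k-1)
r = B @ s                                 # gradient difference for a quadratic F
eta = np.linalg.lstsq(s[:, None], r, rcond=None)[0][0]
print(np.isclose(1.0 / eta, bb_step(s, B)))   # True
```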

Sparse reconstruction based on the $\ell_1$-norm is essentially a linear regression method: it minimizes the residual as the objective to solve for the sparse coefficients and then reconstructs the original music spectral components from them. A typical algorithm for this regression problem is the Least Absolute Shrinkage and Selection Operator (LASSO), whose objective is expressed as:

$$\min_{f}\ \|y - Af\|_2^2 \quad \text{s.t.} \quad \|f\|_1 \le t$$

LASSO achieves sparse approximation by converting the problem into a convex optimization problem and constraining the regression coefficients; that is, it estimates the sparse coefficients while minimizing the reconstruction error $\|y - Af\|_2^2$. Solving it by quadratic programming increases the computational complexity and makes reconstruction very time-consuming, so the least angle regression (LARS) algorithm is used to solve the LASSO problem instead.

When the least-squares problem is solved with the LARS algorithm, the coefficient solution can be expressed in the following form:

where $I$ is the identity matrix.

The OMP (orthogonal matching pursuit) algorithm is a forward feature selection method based on a greedy strategy: at each step it selects the element most highly correlated with the current residual. It is similar to the simpler matching pursuit (MP) but reconstructs more efficiently, because at each iteration the residual is recomputed by orthogonal projection onto the span of all previously selected dictionary elements. Unlike the convex $\ell_1$-norm formulation, OMP is a pursuit (greedy) algorithm. Following the greedy iterative idea of the $\ell_0$-norm, the reconstruction problem can be expressed as:

$$\hat{f} = \arg\min_{f}\ \|f\|_0 \quad \text{s.t.} \quad y = Af$$

where $\hat{f}$ is the sparse coefficient vector of the music spectrum to be reconstructed, from which the spectrum is recovered as $\hat{x} = \Psi\hat{f}$. The key step in solving this problem is to select, in each iteration, the atom of the perception matrix $A$ that best matches the initial measurement or the current residual.
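The greedy selection and orthogonal projection steps can be sketched as follows. This is a textbook OMP under assumptions (noiseless measurements, known sparsity K, a column-normalized matching score); the sizes, seed, and function name in the example are hypothetical.

```python
import numpy as np

def omp(A, y, K, tol=1e-10):
    """Orthogonal matching pursuit: greedily pick up to K atoms (columns of A), refit by least squares."""
    N = A.shape[1]
    residual = y.astype(float).copy()
    support = []
    coef = np.zeros(0)
    for _ in range(K):
        # atom best matched to the current residual (columns normalised for a fair comparison)
        scores = np.abs(A.T @ residual) / np.linalg.norm(A, axis=0)
        scores[support] = 0.0                         # never reselect an atom
        support.append(int(np.argmax(scores)))
        # orthogonal projection of y onto the span of all selected atoms
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
        if np.linalg.norm(residual) <= tol * np.linalg.norm(y):
            break
    f = np.zeros(N)
    f[support] = coef
    return f

rng = np.random.default_rng(0)
N, M, K = 200, 80, 5
A = rng.normal(0.0, 1.0 / np.sqrt(M), size=(M, N))
f_true = np.zeros(N)
idx = rng.choice(N, size=K, replace=False)
f_true[idx] = rng.choice([-1.0, 1.0], size=K) * (1.0 + rng.random(K))
f_hat = omp(A, A @ f_true, K)
print(np.max(np.abs(f_hat - f_true)))
```

Because the residual is re-orthogonalized against every selected atom, an atom is never useful twice, which is why OMP needs at most K iterations for a K-sparse signal when recovery succeeds.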

Gradient Projection Reconstruction Algorithm Based on Weight and Step Correction

The GPSR and OMP algorithms are similar in how they select atoms: in each iteration, both favor the atom most similar to the residual. The difference is that the GPSR algorithm always searches along the negative gradient direction until the optimal solution is obtained.

To offset the imbalance in the classical GPSR reconstruction algorithm, this paper adds a weight coefficient to each component of the sparse vector to control its penalty, which is expressed as:

$$\min_{f}\ \frac{1}{2}\|y - Af\|_2^2 + \sum_{i=1}^{N}\tau_i\,|f_i|$$

The weight of component $f_i$ in the reconstruction process is controlled by $\tau_i$, which replaces the original fixed penalty coefficient $\tau$; the $\ell_1$ penalty term is thus directly controlled by the new adaptive parameters $\tau_i$. The aim is to set an appropriate regularization factor for each sparse component $f_i$ so as to strike a balance between the noise suppression ability and the sparsity of the model. In addition, applying different regularization factors to different components reduces the influence of noise on the reconstruction and, to a certain extent, improves the reconstruction accuracy of the algorithm.

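For the gradient-update algorithm, the reconstruction model is written, after variable splitting, as:

$$\min_{u,v}\ \frac{1}{2}\|y - A(u - v)\|_2^2 + \tau_w^\top u + \tau_w^\top v \quad \text{s.t.} \quad u \ge 0,\ v \ge 0$$

where $\tau_w = (\tau_1, \dots, \tau_N)^\top$.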

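Ignoring the non-negativity constraints, the quasi-Lagrangian function of this model is:

$$L(u, v) = \frac{1}{2}\|y - A(u - v)\|_2^2 + \tau_w^\top u + \tau_w^\top v$$

Expanding the quadratic term with $z = [u^\top, v^\top]^\top$ and $b = A^\top y$ gives the simplified form:

$$L(z) = \frac{1}{2}y^\top y + c^\top z + \frac{1}{2}z^\top B z,\qquad c = \begin{bmatrix} \tau_w \\ \tau_w \end{bmatrix} + \begin{bmatrix} -b \\ b \end{bmatrix},\quad B = \begin{bmatrix} A^\top A & -A^\top A \\ -A^\top A & A^\top A \end{bmatrix}$$

Dropping the constant term, the equivalent quadratic programming model of this model (the proof is given in the appendix) is:

$$\min_{z}\ F(z) = c^\top z + \frac{1}{2}z^\top B z \quad \text{s.t.} \quad z \ge 0$$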

The step size is denoted $\alpha^{(k)}$, where the variable vector is $z = [u^\top, v^\top]^\top$, the gradient vector is $\nabla F(z) = c + Bz$, and $b = A^\top y$.

(1) Design of step size d

The algorithm updates the iterate along the negative gradient direction. The step size parameter is greater than 0, so each step moves opposite to the gradient. The quadratic programming model can therefore be expressed as a function of the gradient update:

Here, the matrix is the Hessian of the objective at the current iterate. The step size is then obtained by minimizing this model with respect to the step size:

To avoid computing the Hessian matrix directly, it is replaced by an approximation:

Substituting this approximation into the model gives a new step size, which replaces the previous one:

Because the step is taken along the direction determined by the gradient, the step size can be written directly in terms of that search direction.
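For a quadratic objective, the step-size subproblem has a closed form. This also shows why the Hessian can be approximated by a scaled identity. The derivation below assumes the standard GPSR form F(z) = cᵀz + ½zᵀBz with gradient g_k = c + Bz_k and s_{k−1} = z_k − z_{k−1}, and ignores the non-negativity projection. It illustrates the usual Barzilai–Borwein reasoning and is not a transcription of the paper's equations.

```latex
\begin{aligned}
\phi(\alpha) &= F(z_k - \alpha g_k)
  = F(z_k) - \alpha\, g_k^{\mathsf T} g_k + \tfrac{\alpha^2}{2}\, g_k^{\mathsf T} B g_k, \\
\phi'(\alpha) = 0 \;&\Longrightarrow\;
  \alpha_k = \frac{g_k^{\mathsf T} g_k}{g_k^{\mathsf T} B g_k}, \\
B \approx \eta_k I,\quad
  \eta_k = \frac{s_{k-1}^{\mathsf T} (g_k - g_{k-1})}{s_{k-1}^{\mathsf T} s_{k-1}}
  \;&\Longrightarrow\;
  \alpha_k = \frac{1}{\eta_k}
  = \frac{s_{k-1}^{\mathsf T} s_{k-1}}{s_{k-1}^{\mathsf T} (g_k - g_{k-1})}.
\end{aligned}
```

Here η_k is the least-squares fit of ηs_{k−1} ≈ g_k − g_{k−1}, so the Hessian is never formed explicitly.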

Then, the step size is expressed as:

To compute the search direction, the delayed-gradient method proposed by Friedlander is used to accelerate convergence. Applying this delay strategy to the step size yields a new step-size expression:

Based on the delayed gradient algorithm, a parameter matrix is introduced and updated as follows:

This update satisfies the BFGS (secant) correction criterion, which is stated in terms of the change in the gradient between successive iterates.
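The link between the delayed-gradient idea and the Barzilai–Borwein (BB) step can be checked numerically. On a quadratic, the BB step at iteration k equals the exact (Cauchy) step computed from the gradient of iteration k − 1, which is a gradient method with a one-step delay. The sketch below assumes a small hypothetical quadratic F(z) = ½zᵀAz − bᵀz without the non-negativity constraint. `A`, `b` and all helper names are illustrative.

```python
def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def grad(A, b, z):
    # Gradient of F(z) = 0.5 * z^T A z - b^T z (A symmetric positive definite)
    return [ai - bi for ai, bi in zip(matvec(A, z), b)]


def cauchy_step(A, g):
    # Exact minimizer of F(z - alpha * g) over alpha
    return dot(g, g) / dot(g, matvec(A, g))


def bb_step(s, y):
    # Barzilai-Borwein (BB1) step s^T s / s^T y
    return dot(s, s) / dot(s, y)


def check_delay_equivalence(A, b, z0, iters=5):
    """Run BB steepest descent; return (bb_steps, delayed_cauchy_steps)."""
    z = list(z0)
    g = grad(A, b, z)
    alpha = cauchy_step(A, g)  # no history on the first step: use the exact step
    bb_steps, delayed = [], []
    for _ in range(iters):
        z_new = [zi - alpha * gi for zi, gi in zip(z, g)]
        g_new = grad(A, b, z_new)
        s = [p - q for p, q in zip(z_new, z)]
        y = [p - q for p, q in zip(g_new, g)]
        if dot(s, y) <= 0.0:
            break  # converged exactly (s = 0); nothing left to compare
        bb_steps.append(bb_step(s, y))
        delayed.append(cauchy_step(A, g))  # exact step from the previous gradient
        z, g, alpha = z_new, g_new, bb_steps[-1]
    return bb_steps, delayed
```

Because s = −αg and y = As on a quadratic, sᵀs / sᵀy collapses to gᵀg / gᵀAg for the previous gradient g. The two lists therefore agree up to rounding.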

Based on this, the step size is rewritten as:

Following the GPSR-BB algorithm, constraints are also placed on the step size. To keep the step size well defined and prevent it from becoming too large, it is limited to a bounded range, which gives the new constraint condition and the final step size:

Using this value, the optimal value is then derived as:

Thus, the optimal step size is obtained.
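Putting the pieces together, a GPSR-BB style iteration with a clipped step size can be sketched as follows. This is a minimal pure-Python sketch under stated assumptions, not the paper's code. It follows the standard monotone GPSR-BB scheme (projected gradient step on z = [u; v] ≥ 0, exact line search on [0, 1], BB step clipped to [alpha_min, alpha_max]). It assumes the adaptive weights enter only through the linear term c, and it takes them as a given vector `w`. The function name, initial step and default bounds are hypothetical.

```python
def gpsr_bb(Phi, y, w, alpha_min=1e-30, alpha_max=1e30, max_iter=500, tol=1e-10):
    """Weighted-l1 GPSR-BB sketch: min 0.5*||y - Phi x||^2 + sum_i w[i]*|x[i]|.

    Uses the split x = u - v, z = [u; v] >= 0. Phi is a list of m rows of
    length n; w is a list of n positive weights.
    """
    m, n = len(Phi), len(Phi[0])

    def mul(x):  # Phi @ x
        return [sum(Phi[i][j] * x[j] for j in range(n)) for i in range(m)]

    def mul_t(r):  # Phi^T @ r
        return [sum(Phi[i][j] * r[i] for i in range(m)) for j in range(n)]

    b = mul_t(y)
    # Linear term c = [w - b; w + b]: the weights replace a single fixed tau.
    c = [wj - bj for wj, bj in zip(w, b)] + [wj + bj for wj, bj in zip(w, b)]
    z = [0.0] * (2 * n)
    alpha = 1.0  # hypothetical initial step size
    for _ in range(max_iter):
        t = mul_t(mul([z[j] - z[n + j] for j in range(n)]))
        grad = [ci + bz for ci, bz in zip(c, t + [-tj for tj in t])]  # c + B z
        # Projected gradient step onto z >= 0, written as a direction delta.
        delta = [max(zi - alpha * gi, 0.0) - zi for zi, gi in zip(z, grad)]
        if max(abs(d) for d in delta) < tol:
            break
        phi_d = mul([delta[j] - delta[n + j] for j in range(n)])
        dBd = sum(v * v for v in phi_d)  # delta^T B delta without forming B
        gd = sum(gi * di for gi, di in zip(grad, delta))
        # Exact minimizer of the quadratic along delta, restricted to [0, 1].
        lam = 1.0 if dBd <= 0.0 else min(max(-gd / dBd, 0.0), 1.0)
        z = [zi + lam * di for zi, di in zip(z, delta)]
        # Barzilai-Borwein step for the next iteration, clipped to its bounds.
        dd = sum(d * d for d in delta)
        alpha = alpha_max if dBd <= 0.0 else min(max(dd / dBd, alpha_min), alpha_max)
    return [z[j] - z[n + j] for j in range(n)]
```

With an identity sensing matrix the result is the familiar soft-thresholding of y by w, which makes a convenient sanity check.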

(2) Design of weight coefficient

To make the weight coefficient adaptive, this paper lets it vary with the magnitude of the current estimate vector. Suppose that at position i the true musical spectrum component is 0, while the current estimate at that position is nonzero or merely close to 0. The weight coefficient should then be large enough that a heavier penalty in subsequent iterations drives this estimate to zero. In the opposite case, the weight should be small. The second term of the weight coefficient represents the denoising ability. When the estimated value is equal to 0 or larger, indicating that the estimate is correct, the weight should be small so that it does not affect the next iteration. The adaptive coefficient is therefore set as:

Here, the support set is the set of nonzero components. For the compressed measurements, the weight coefficients on the support set are updated as:

The parameter defined here becomes smaller as the sparsity decreases. A larger weight coefficient, on the other hand, strengthens control over the support set, which helps obtain a sparse solution. For the components outside the support set, the update is:

At the same time, the fixed weight coefficient in the parameter c of the quadratic programming reconstruction model is replaced by the adaptive weights:

With the weight coefficient and step size designed as above, the algorithm can reconstruct the music spectrum without prior knowledge of its sparsity. The refined step size makes the computation more efficient, and the adaptive weight coefficient gives a better balance between algorithm complexity and reconstruction accuracy.
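The paper's exact weight formula is not reproduced here. As a hedged illustration of the behavior described above (large weights where an estimate should be driven to zero, small weights on the support set), the sketch below swaps in the well-known reweighted-ℓ1 rule w_i = τ / (|x_i| + ε) of Candès, Wakin, and Boyd. The parameters `tau`, `eps` and `support_tol` and the function name are hypothetical.

```python
def update_weights(x, tau=1.0, eps=1e-2, support_tol=1e-6):
    """Stand-in adaptive weights (reweighted l1), not the paper's formula.

    Returns (weights, support). Components on the support set (|x_i| above
    support_tol) get tau / (|x_i| + eps): the larger the estimate, the smaller
    the penalty. Components off the support get the maximal weight tau / eps,
    which strongly pushes them toward zero in the next iteration.
    """
    support = {i for i, xi in enumerate(x) if abs(xi) > support_tol}
    weights = [tau / (abs(xi) + eps) if i in support else tau / eps
               for i, xi in enumerate(x)]
    return weights, support
```

In a full solver, the returned weights would replace the fixed coefficient in the linear term c before the next outer iteration.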

Algorithm Performance Simulation and Analysis

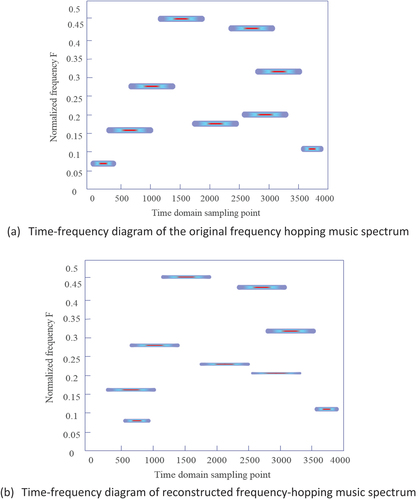

The figure above shows the time-domain and frequency-domain plots of the original frequency-hopping music spectrum. For the 8-hop frequency-hopping spectrum, 3,500 points are randomly extracted to better reflect real-world conditions.

The paper proposes an algorithm for reconstructing a frequency-hopping music spectrum. To evaluate the algorithm’s feasibility, we conducted simulations using a Gaussian random matrix and added noise to the signal. The time-domain and frequency-domain diagrams of the reconstructed spectrum were then obtained and compared to the original frequency-hopping music spectrum.
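The simulation setup (a Gaussian random sensing matrix plus additive noise) can be sketched with the standard library. The paper does not state the matrix dimensions, normalization or noise level. The N(0, 1/m) scaling, the seeds and the function names below are assumptions.

```python
import random


def gaussian_matrix(m, n, seed=0):
    """m x n sensing matrix with i.i.d. N(0, 1/m) entries, as a list of rows."""
    rng = random.Random(seed)
    sigma = 1.0 / m ** 0.5
    return [[rng.gauss(0.0, sigma) for _ in range(n)] for _ in range(m)]


def measure(Phi, x, noise_std=0.0, seed=1):
    """Noisy compressed measurements y = Phi x + e, with e ~ N(0, noise_std^2)."""
    rng = random.Random(seed)
    return [sum(p * xi for p, xi in zip(row, x)) + rng.gauss(0.0, noise_std)
            for row in Phi]
```

Fixing the seeds makes each simulated run reproducible, which matters when reconstructions from different settings are compared.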

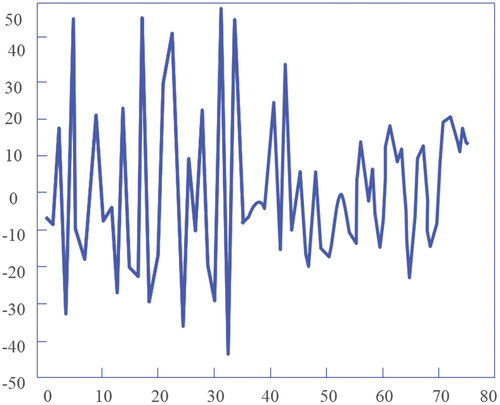

Mean square error (MSE) is a common metric for the similarity between two signals, and a smaller value indicates a better reconstruction. The figure above compares the original and reconstructed spectra. The MSE between the reconstructed music spectrum and the original frequency-hopping music spectrum was calculated.
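The MSE is the average squared difference between corresponding samples, MSE = (1/N) Σ (x_n − x̂_n)². A minimal helper, with a hypothetical name, is:

```python
def mse(original, reconstructed):
    """Mean squared error between two equal-length sequences of samples."""
    if not original or len(original) != len(reconstructed):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)
```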

The proposed algorithm achieves a more accurate reconstruction. The Gaussian random matrix serves as the compressed-sensing measurement matrix, a standard choice because such matrices satisfy the restricted isometry property with high probability when enough measurements are taken. The results indicate that the algorithm reconstructs the frequency-hopping music spectrum effectively even in the presence of noise.

A joint time-frequency analysis, namely the short-time Fourier transform (STFT), is then applied to both the original and the reconstructed frequency-hopping music spectra. The resulting time-frequency diagrams are shown in the corresponding figure.

The reconstructed time-frequency diagram shows that the proposed algorithm reconstructs the original frequency-hopping music spectrum with a certain degree of accuracy in a noisy environment (Mrozek 2018).
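The STFT slides a window along the signal and takes a Fourier transform of each windowed frame. The frame magnitudes, stacked over time, form the time-frequency diagram, so a hop shows up as a jump of the spectral peak from one frequency bin to another. The sketch below uses a direct DFT (slow but dependency-free). The Hann window, frame length and hop size are assumptions, since the paper does not state them.

```python
import cmath
import math


def hann(N):
    """Periodic Hann window of length N."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * n / N) for n in range(N)]


def stft_magnitude(x, frame_len=64, hop=32):
    """Magnitude STFT of a real signal using a direct DFT per frame.

    Returns a list of frames; each frame holds frame_len // 2 + 1
    non-negative-frequency bin magnitudes.
    """
    win = hann(frame_len)
    frames = []
    for start in range(0, len(x) - frame_len + 1, hop):
        seg = [x[start + n] * win[n] for n in range(frame_len)]
        frame = []
        for k in range(frame_len // 2 + 1):
            acc = sum(seg[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n in range(frame_len))
            frame.append(abs(acc))
        frames.append(frame)
    return frames
```

For a two-hop test tone (bin 8, then bin 20), the peak bin of the early frames is 8 and that of the late frames is 20. Comparing such peak tracks between the original and reconstructed signals is one simple way to read the diagrams.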

Building on this, the proposed algorithm is combined with emotion recognition (Jiang et al. 2020; Joshi, Jain, and Nair 2021) in an experimental study of automatic music genre classification. The resulting classification accuracy is reported in Table 1.

Table 1. Validation of the effect of the automatic music genre classification method integrating emotion and intelligent algorithms.

Automatic music genre classification uses machine learning techniques to categorize music tracks by their audio features, such as tempo, rhythm, melody, and timbre (Bolotova, Druki, and Spitsyn 2014; Turchet, West, and Wanderley 2021). Emotion recognition, in turn, identifies the emotions expressed in the music, such as happiness, sadness, anger, and fear. Research suggests that integrating emotion recognition with automatic genre classification can yield more accurate results. Intelligent algorithms can also make genre classification more efficient by learning from data and making predictions from patterns in the audio features. Combining emotion recognition with intelligent algorithms lets the system classify music not only by its audio features but also by its emotional content. This can produce more nuanced and accurate genre classifications, which are useful in applications such as music recommendation systems, search engines, and music therapy.
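One simple way to let emotional cues inform genre classification is early (feature-level) fusion, which appends an emotion descriptor to the audio feature vector before classification. The sketch below is purely illustrative and is not the paper's classifier. It uses a nearest-centroid rule and a hypothetical weighting factor `beta` for the emotion features. It computes accuracy as the fraction of correctly classified tracks, the quantity reported in Table 1. All function names are hypothetical.

```python
import math


def fuse(audio_feats, emotion_feats, beta=1.0):
    """Concatenate audio features with emotion features scaled by beta."""
    return list(audio_feats) + [beta * e for e in emotion_feats]


def fit_centroids(X, labels):
    """Mean feature vector per class label."""
    sums, counts = {}, {}
    for x, label in zip(X, labels):
        acc = sums.setdefault(label, [0.0] * len(x))
        for i, xi in enumerate(x):
            acc[i] += xi
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, x):
    """Label of the nearest centroid (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], x))


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if not y_true:
        raise ValueError("no samples")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
```

The factor `beta` controls how strongly the emotional cues pull the decision. When two genres overlap in audio features, the emotion dimensions are what separate their centroids.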

In summary, integrating emotion recognition with intelligent algorithms can improve the efficiency and accuracy of the automatic classification of music genres, making it a valuable tool in various applications.

Conclusion

Although called a school, modernism is not a school or group in the traditional sense. It is all-encompassing and covers almost every area of cultural life, not just art and literature. In a sense, modernism is synonymous with new historical trends. The most direct goal of modernist artists is to emphasize difference and express individuality, and their artworks are a direct manifestation of these goals. Moreover, modernism implies not only a shift in creative direction and a certain indifference toward tradition, but also the inheritance and development of tradition. To improve the automatic classification of music genres, this paper combines emotion and intelligent algorithms to build an automatic music genre classification model. The results show that the proposed method, which integrates emotion and intelligent algorithms, can play an important role in the automatic classification of music genres.

Comparing the proposed method with other well-known methods is a worthwhile direction for future work. Such a comparison would provide additional evidence of the method's effectiveness and help researchers better understand its strengths and limitations.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data Availability Statement

The dataset used to support this study is available from the corresponding author upon request.

Additional information

Funding

References

- Amendola, A., G. Gabbriellini, P. Dell’Aversana, and A. J. Marini. 2017. Seismic facies analysis through musical attributes. Geophysical Prospecting 65 (S1):49–1399. doi:10.1111/1365-2478.12504.

- Anaya Amarillas, J. A. 2021. Marketing musical: música, industria y promoción en la era digital. INTERdisciplina 9 (25): 333–35.

- Bolotova, Y. A., A. A. Druki, and V. G. Spitsyn. 2014. License plate recognition with hierarchical temporal memory model. In 2014 9th International Forum on Strategic Technology (IFOST), 136–39. doi:10.1109/IFOST.2014.6991089.

- Cano, E., D. FitzGerald, A. Liutkus, M. D. Plumbley, and F. R. Stöter. 2018. Musical source separation: An introduction. IEEE Signal Processing Magazine 36 (1):31–40. doi:10.1109/MSP.2018.2874719.

- Costa-Giomi, E., and L. Benetti. 2017. Through a baby’s ears: Musical interactions in a family community. International Journal of Community Music 10 (3):289–303. doi:10.1386/ijcm.10.3.289_1.

- Dickens, A., C. Greenhalgh, and B. Koleva. 2018. Facilitating accessibility in performance: Participatory design for digital musical instruments. Journal of the Audio Engineering Society 66 (4):211–19. doi:10.17743/jaes.2018.0010.

- Gonçalves, L. L., and F. L. Schiavoni. 2020. Creating digital musical instruments with libmosaic-sound and mosaicode. Revista de Informática Teórica e Aplicada 27 (4):95–107. doi:10.22456/2175-2745.104342.

- Gorbunova, I. B. 2019. Music computer technologies in the perspective of digital humanities, arts, and researches. Opcion 35 (Special Edition 24):360–75.

- Gorbunova, I. B., and N. N. Petrova. 2019. Music computer technologies, supply chain strategy and transformation processes in socio-cultural paradigm of performing art: Using digital button accordion. International Journal of Supply Chain Management 8 (6):436–45.

- Jiang, Y., W. Xiao, R. Wang, and A. Barnawi. 2020. Smart urban living: Enabling emotion-guided interaction with next generation sensing fabric. IEEE Access 8:28395–402. doi:10.1109/ACCESS.2019.2961957.

- Joshi, S., T. Jain, and N. Nair. 2021. Emotion based music recommendation system using LSTM - CNN architecture. In 2021 12th International Conference on Computing Communication and Networking Technologies (ICCCNT), 1–6. doi:10.1109/ICCCNT51525.2021.9579813.

- Khulusi, R., J. Kusnick, C. Meinecke, C. Gillmann, J. Focht, and S. Jänicke. 2020. A survey on visualizations for musical data. Computer Graphics Forum 39 (6):82–110. doi:10.1111/cgf.13905.

- Magnusson, T. 2021. The migration of musical instruments: on the socio-technological conditions of musical evolution. Journal of New Music Research 50 (2):175–83. doi:10.1080/09298215.2021.1907420.

- Michalakos, C. 2021. Designing musical games for electroacoustic improvisation. Organised Sound 26 (1):78–88. doi:10.1017/S1355771821000078.

- Mrozek, T. 2018. Simultaneous monitoring of chromatic dispersion and optical signal to noise ratio in optical network using asynchronous delay tap sampling and convolutional neural network (deep learning). In 2018 20th International Conference on Transparent Optical Networks (ICTON), 1–4. doi:10.1109/ICTON.2018.8473703.

- Scavone, G., and J. O. Smith. 2021. A landmark article on nonlinear time-domain modeling in musical acoustics. The Journal of the Acoustical Society of America 150 (2):3–4. doi:10.1121/10.0005725.

- Stensæth, K. 2018. Music therapy and interactive musical media in the future: Reflections on the subject-object interaction. Nordic Journal of Music Therapy 27 (4):312–27. doi:10.1080/08098131.2018.1439085.

- Tabuena, A. C. 2020. Chord-interval, direct-familiarization, musical instrument digital interface, circle of fifths, and functions as basic piano accompaniment transposition techniques. International Journal of Research Publications 66 (1):1–11. doi:10.47119/IJRP1006611220201595.

- Turchet, L., and M. Barthet. 2019. An ubiquitous smart guitar system for collaborative musical practice. Journal of New Music Research 48 (4):352–65. doi:10.1080/09298215.2019.1637439.

- Turchet, L., T. West, and M. M. Wanderley. 2021. Touching the audience: Musical haptic wearables for augmented and participatory live music performances. Personal and Ubiquitous Computing 25 (4):749–69. doi:10.1007/s00779-020-01395-2.

- Vereshchahina-Biliavska, O. Y., O. V. Cherkashyna, Y. O. Moskvichova, O. M. Yakymchuk, and O. V. Lys. 2021. Anthropological view on the history of musical art. Linguistics and Culture Review 5 (S2):108–20. doi:10.21744/lingcure.v5nS2.1334.

- Way, L. C. 2021. Populism in musical mash ups: Recontextualising Brexit. Social Semiotics 31 (3):489–506. doi:10.1080/10350330.2021.1930857.