ABSTRACT

Scholars often recommend incorporating context into the design of an explainable artificial intelligence (XAI) model in order to ensure successful real-world adoption. However, contemporary literature has so far failed to delve into the detail of what constitutes context. This paper addresses that gap, first by providing normative and XAI-specific definitions of key concepts, thereby establishing common ground upon which further discourse can be built. Second, far from pulling apart the body of literature to argue that one element of context is more important than another, this paper advocates a more holistic perspective which unites the recent discourse. Using a thematic review, this paper establishes that the four concepts of setting, audience, goals and ethics (SAGE) are widely recognized as key tools in the design of operational XAI solutions. Moreover, when brought together they can be employed as a scaffold to create a user-centric, real-world XAI solution.

Introduction

The benefits of Artificial Intelligence (AI) in our society are well documented. Its contribution to modern medicine is immeasurable, with reductions in clinical errors (Kaushal, Shojania, and Bates 2003), improved prognostic scoring (Christopher Bouch and Thompson 2008; Nikolaou et al. 2021) and better diagnostic decision-making (Miller 1994; Nikolaou et al. 2022). It also made a significant contribution to manufacturing and supply chains during the COVID pandemic (Wuest et al. 2020). It is used to identify production faults in manufacturing (Panda 2018), improve product design (Nozaki et al. 2017), improve product quality (Li et al. 2017) and create more efficient business processes (Prokopenko et al. 2020). Moreover, it has completely transformed the marketing industry, with better personalization leading to more efficient and effective marketing strategies (Eriksson, Bigi, and Bonera 2020).

In a 2017 report commissioned by the UK Government (Hall and Pesenti 2017), it was estimated that AI could add £630 billion to the UK economy by 2035, increasing the annual growth rate of gross value added (GVA) from 2.5% to 3.9%. Faced with the ubiquity of these agents, readers would be forgiven for believing that society had already embraced AI tout court. However, both public and private sectors continue to struggle with its adoption.

A recent collaboration between the Carnegie UK Trust and the Data Justice Lab (Redden et al. Citation2022) found 61 instances of automated decision making systems being canceled by public bodies across Europe, UK, North America, Australia and New Zealand. The Redden et al. (Citation2022) report highlights the following examples:

Child protection services, where an algorithm designed to identify at-risk children has been abandoned.

Policing, where algorithms used to identify high crime hotspots and speed up the police’s response to gunfire incidents have been discarded.

Welfare and Benefits, where an algorithm to detect fraudulent welfare claims has similarly been rejected.

Yet oftentimes it is only through learning from negative and unintended consequences that practical improvements can be made. Mikalef et al. (Citation2022) argue this perspective by applying a dark side metaphor to the field of AI. The authors’ analysis reveals that safer and more effective technologies can be developed through the adoption of responsible AI principles, a key component of which is algorithmic transparency.

Transparency played a significant role in the decisions of these public bodies to move away from reliance on algorithms (Redden et al. 2022). This finding complements the conclusion of a previous report from the Centre for Data Ethics and Innovation (2020), which identified the potential for algorithmic bias and a lack of explainability of AI systems as key risks across all five sectors: criminal justice; financial services; health and social care; digital and social media; and energy and utilities.

These challenges have formed the backdrop for a drive to “peek inside the black box” (Adadi and Berrada 2018) and develop solutions to combat the lack of transparency and trust, and the increasing complexity and opacity of these AI models. One such solution, Explainable Artificial Intelligence (XAI), has emerged as a promising field of study.

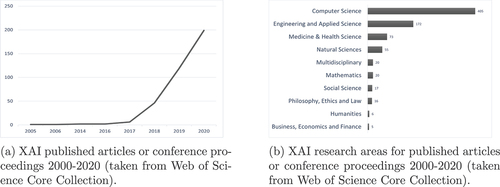

Many scholars have embraced the challenge of researching this nascent field. A search of the Web of Science Core Collection reveals significant growth in XAI-related published articles or conference proceedings over the past 20 years (see the figure above). In particular, the past 5 years have witnessed the majority of growth, with the beginnings of an exponential curve hinting at more contributions yet to come.

Moreover, whilst artificial intelligence is known to permeate all aspects of society, further analysis of this journal population provides similar evidence of the interdisciplinary appeal of XAI. Recent XAI papers have covered humanities subjects such as art and music, together with social sciences like tourism and hospitality, alongside the more intuitive research areas of computer science, medicine and engineering.

This fast growth and interdisciplinary appeal of XAI has caused some scholars to voice concerns about the quality and practicality of XAI models being proposed (Doshi-Velez and Kim Citation2017; Lipton Citation2018; Miller, Howe, and Sonenberg Citation2017). Notably, Miller et al.’s “light-touch” survey provided evidence that researchers were producing XAI models for themselves rather than for an intended audience. In doing so, they run the risk of alienating their audience by producing models which serve no real-world purpose.

Instead, scholars recommend incorporating context in the model design to deliver the successful integration of an explainable agent (Langer et al. Citation2021). However, few in the XAI field have expanded upon the meaning of context, or provided clarification as to what they consider its constituent parts. Some authors discuss context in relation to the setting, for example the triggers for requiring an explanation (Doshi-Velez and Kim Citation2017; Hoffman, Klein, and Mueller Citation2018; Lipton Citation2018) or the boundary conditions under which an effective explanation operates (Mittelstadt, Russell, and Wachter Citation2019; Saleema et al. Citation2015; Tulio Ribeiro, Singh, and Guestrin Citation2016). Others focus on the needs of the audience, citing the importance of a dialogue (Carenini and Moore Citation1993; Hoffman, Klein, and Mueller Citation2018; Moore and Swartout Citation1989), the growth of knowledge (Lombrozo and Carey Citation2006; Samek et al. Citation2019) and the audience’s mental model (Byrne Citation1991; Kulesza et al. Citation2013). Still others discuss context with reference to goals – either of the audience (Lombrozo Citation2006; Miller Citation2019) or of the explainable agent itself (Lombrozo and Carey Citation2006; Watson and Floridi Citation2021; Wick and Thompson Citation1992). More recently, authors also emphasize reflection on legal and ethical factors (Chesterman Citation2021; Wachter, Mittelstadt, and Floridi Citation2017).

The intention of this paper is not to argue that any one of these aspects is more important than the other, but rather to provide a scaffold which binds these considerations together. A thematic review of the extant literature reveals an interaction between the contextual elements of Setting, Audience, Goals and Ethics (SAGE), as illustrated in the SAGE framework figure. This paper proposes SAGE as a conceptual framework that enables researchers to build context-sensitive XAI, thereby strengthening the prospects for successful adoption of an XAI solution.

This paper has three major research contributions. First, this is one of the few papers that delves into the detail of context in relation to the delivery of an XAI system. In particular, it is unique in the way in which it argues for a holistic understanding of context, rather than suggesting the hegemony of one aspect of context over another. Second, this paper suggests normative and XAI-specific definitions for context, setting, audience, goals and ethics. Establishing common terms and definitions provides scholars with a basis for common understanding, upon which further discourse can be built. Notably, the use of common definitions supports communication, assists transparency and limits confusion. Finally, it proposes the SAGE framework for ensuring both context-sensitive and human-centric XAI. It is the expectation of these authors that the application of this framework will serve as a guide for collecting and organizing all relevant contextual inputs to an explanation, and thereby assist in the development of more readily accepted XAI systems.

Positioning, Scope and Terminology

First and foremost, this paper positions itself as a design aid for those embarking on the construction of an XAI solution. It is therefore directed toward researchers and practitioners engaged in application-specific development of XAI to achieve clarity on model outcomes. The arguments presented support user-acceptance of XAI by encouraging the researcher to consider the audience experience as paramount.

Second, this paper does not engage in the terminological debate between interpretable and explainable artificial intelligence, preferring instead to remain neutral on that topic and using the term Explainable Artificial Intelligence (XAI) as an umbrella term to incorporate both interpretable and explainable models (Gilpin et al. Citation2018).

The remainder of this paper is structured as follows. Section 2 presents related work on the development of frameworks in the field of XAI. Section 3 first addresses the main notion of context, from both a philosophical and a physical perspective, and presents a normative definition for context in the domain of explainable AI. Following that discourse, Section 4 goes on to define and describe each of the terms setting, audience, goals and ethics in turn. Section 5 then provides a discussion on the theoretical and practical implications of this work. Finally, Section 6 concludes with a discussion, limitations and opportunities for further work.

Related Work

Literature in XAI is dominated by the presentation, augmentation or application of explainable models. See Guidotti et al. (Citation2018) or Ras, van Gerven, and Haselager (Citation2018) for comprehensive reviews on state of the art explanation methods. However, authors increasingly look toward the development of frameworks in order to capitalize on the opportunities for more rigor, structure and normalization in this burgeoning field.

Several frameworks complement this one by identifying similar themes, albeit through a range of different lenses. Sokol and Flach (2020) approached the practical considerations through the development of an XAI evaluation method. Their “explainability fact sheets” were designed for researchers to evaluate existing explainability methods by considering functional, operational, usability, safety and validation dimensions. Whilst their identification of salient themes is consistent with that of these authors, their framework is orthogonal to this paper. That is to say, they assess the capabilities of the XAI from an algorithmic evaluation perspective rather than an audience-centric design perspective. This leads to an evaluation result which is largely audience-agnostic, rather than being driven by audience desiderata.

Hall, Gill, and Schmidt (Citation2019) is another paper that takes a pragmatic approach to XAI design. They contributed to the field by proposing a five-step methodology for requirements gathering, in order to ensure user desiderata are established at the design stage of an explainable AI system. Their approach complements this paper by emphasizing the centrality of the audience desiderata to a successful XAI system design. Notably, stages one to three in their five stage process focus on the identification of the audience roles and the documentation of audience requirements. Moreover, their recommendation to document constraints (privacy, resources, timeliness and information collection effort) satisfies some of the concerns these authors have with those who do not consider the setting as an integral part of the XAI design process.

The importance of understanding the end user perspective is also a core principle of Bahalul Haque, Najmul Islam, and Mikalef (Citation2023)’s systematic literature review. In their analysis, the authors explore the literature through the four quality dimensions of “format,” “completeness,” “accuracy” and “currency” (Wixom and Todd Citation2005) and propose a direct relationship between those dimensions and the anticipated consequences of technology use, arguing that consideration of these quality dimensions will positively influence AI use and adoption.

In contrast, Mohseni, Zarei, and Ragan (2021) provided a comprehensive survey of literature looking at both users’ goals and researchers’ methods of explanation evaluation. Their work identified novice users, data experts and AI experts as key user groups, with novice users more interested in goals designed for gaining trust in the system, data experts focused on model intricacies and model tuning, and AI experts concerned with model debugging and interpretability. Alongside these design goals they describe five evaluation measures aimed at verifying explanation effectiveness. The measures again focus on the ability of the explanation system to satisfy users’ desiderata such as usefulness, trust and reliance, as well as its ability to understand the user’s mental model and improve human-AI task performance.

This paper shares the motivations of Mohseni, Zarei, and Ragan (2021) to consolidate the broad spectrum of research on XAI and present an interdisciplinary consensus on best practice XAI design. However, their identification of the audience and their needs fails to take the context of the application into account. For example, they identify audience groups according to their technical proficiency levels rather than according to the role that requires them to interact with the XAI system. This leads to the articulation of goals as XAI system goals, rather than a focus on satisfaction of audience desiderata.

Finally, James Murdoch et al. (2019) introduce the PDR (predictive accuracy, descriptive accuracy and relevancy) framework to evaluate the effectiveness of an explanation. The authors highlight the importance of the role of human audiences when discussing interpretability. For example, the identification of relevancy as a salient measure of effectiveness underlines the importance of understanding the needs of a specific audience in a specific domain. However, there is no discourse pertaining to either the usability of the explanation or the incorporation of ethical factors in the development of the explanation. In contrast, this paper addresses relevancy in the context of the audience, their goals, the setting and the ethical design.

Explaining Context

The overarching consensus for explainable AI is that explanations are contextual (Miller, Howe, and Sonenberg Citation2017). That is, in order for an agent to deliver a successful explanation, the context of the question must first be determined, and then addressed within the explanation itself. Given the consensus, this article will assume the need for context is an anodyne point and instead address what “context” means from an XAI perspective, a scant focus point in the XAI literature.

Philosophical Context

First, this paper introduces context from a philosophical point of view. Dourish (2004) provides an insightful perspective in his discourse regarding the dual philosophical aspects of context (Table 1). He describes the positivist approach as being a problem of representation: how to encode and utilize relevant information in order to develop a context-sensitive model. However, this is in conflict, he advises, with the phenomenological approach of using interpretation to determine contextual relevancy, which is dynamic.

Table 1. Dual philosophical aspects of context, adapted from Dourish (Citation2004).

Dourish’s interest lies in context-aware systems, where agents repeatedly assess and adapt to a changing environment. Hence it is perhaps no surprise that he argues in favor of the phenomenological viewpoint. Others might propose circumstances where a positivist approach is more fitting.

Consider, for example, an expert system such as a fraud management system. A model designed to explain why a transaction is fraudulent might traditionally take a rule-based approach. The developer would use pre-determined rules to encode relevant information into the model. The rules may periodically be updated, but substantially would stay the same. The rules and the transactions remain separate entities. Hence, the approach clearly satisfies the positivist requirements.

The positivist mind-set even continues to dominate the extant XAI literature. Once a machine learning model has determined the relevancy of a piece of information, it becomes encoded and stable within those circumstances. Examples include using saliency masks to unravel deep neural networks for determining neurodegenerative disease (Zintgraf et al. 2017), and using feature importance to uncover the intricacies of a support vector machine (Poulin et al. 2006).

The phenomenological viewpoint is only addressed once the dynamism that comes from a truly audience-centric approach is taken into account; when the explanation becomes a dialogue and the agent is able to adapt its response to the audience’s changing mental model. In fact, Ashraf et al. (Citation2018) conclude their extensive literature survey with a recommendation for researchers to focus on interactive explanations rather than the status quo of static models (Tulio Ribeiro, Singh, and Guestrin Citation2016). The XAI domain has some way to go before a point such as that can be reached.

Physical Context

Returning to context as a representation of the physical world, Dey (2001) provided a critique of popular definitions. He first criticized some authors for providing definitions that were too precise. This was largely a result of the definitions being example-based. Schilit and Theimer (1994), for example, referred to context as the “location, identities of nearby people and objects and changes to those objects.” Similarly, Ryan, Pascoe, and Morse (1998) documented it as “the user’s location, environment, identity and time.” Example-based definitions such as these are easily undermined through the presentation of counter-examples which do not meet these precise terms.

His second criticism was that others’ definitions were too broad or ambiguous, evidenced by the use of synonyms. For example Hull, Neaves, and Bedford-Roberts (Citation1997)’s description of context as “aspects of the current situation” or Brown (Citation1995)’s definition “elements of the user’s environment that the computer knows about” both leave the reader in need of more clarification. Dey then went on to provide his own definition of context, in which he blended both synonyms and example-based text, with the intention of establishing a normative middle-ground. “Context is any information that can be used to characterise the situation of an entity. An entity is a person, place, or object that is considered relevant to the interaction between a user and an application, including the user and applications themselves.”

Whilst Dey’s definition is widely cited in the field of context-aware computing, this paper argues that it is difficult to translate into the field of XAI. In particular, it approaches the determination of context from the developer’s, rather than from the audience’s perspective. In doing so, it adopts Dourish’s positivist viewpoint by suggesting that context is pre-determined and stable. In contrast, explanations are known to be dynamic – they guide further enquiry and are often considered a starting point for further dialogue, rather than an end-point (Hoffman, Klein, and Mueller Citation2018). Hence, in the absence of a normative definition of context for XAI, this paper proposes a definition that places the audience at the center of the context and additionally provides more flexibility for ongoing dialogue.

Context [in explainable AI] is any information needed by the explanation system to satisfy the explanation goals, usability requirements and ethical expectations of the audience.

With this definition, the researcher is immediately directed to ensuring that the audience’s perspective is central to the explanation. Moreover, as the audience’s mental model and goals change, perhaps as a result of engagement with the technology, the context will equally change to support further understanding.

The SAGE Framework

The SAGE Framework provides a meeting point between commonly identified contextual elements which authors suggest make up an effective XAI solution. A structured and iterative methodology was used to identify relevant literature and categorize common themes. Specifically, the review began with thirteen core papers, identified through leading computer science journals and conferences, which addressed the design or evaluation of XAI models. Common themes were noted, and a subsequent keyword search was conducted using Google Scholar in order to broaden the literature population. Additional papers were also identified through careful review of citations. An iterative coding process was then used to tease out the key influencing factors which were driving the identification of those themes.

Despite the thematic similarities, it was evident that terminology was being used in an inconsistent manner. As a result, this framework begins with clarification as to the meaning of context (Section 3) and continues with each subsequent sub-section having a definition or description of the appropriate terms, within the context of XAI. The framework itself centers around the identification of who the audience is. This point is pivotal, since it is the audience who determines whether an explanation is a good one or not (Doshi-Velez and Kim 2017). The perspective of the audience is also the driving force in the explanation design, influencing the factors to consider as part of the setting, goals and ethics of the system.

The aim of establishing the setting is to ensure that the audience is able to use the explanation (Section 4.2). This means meeting both practical requirements and comprehension considerations. Meanwhile, correct identification of the audience’s goals (Section 4.3) ensures that the explanation provides information that is relevant to the audience’s desiderata. Finally, employing appropriate measures to ensure that the audience can trust the explanation provides an ethical perspective (Section 4.4) that completes the SAGE Framework.
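As an illustration only, the four elements described above could be collected in a simple record while a design is being drafted. The sketch below is a hypothetical aid and not part of the framework itself: the class name `SageContext`, its field names and the example values are all assumptions introduced here.

```python
from dataclasses import dataclass, field


@dataclass
class SageContext:
    """Hypothetical container for the four SAGE elements of one XAI design."""
    audience: str = ""                                 # immediate recipients of the explanation
    setting: list[str] = field(default_factory=list)   # usability and comprehension factors
    goals: list[str] = field(default_factory=list)     # what the audience wants to learn
    ethics: list[str] = field(default_factory=list)    # measures that support trust

    def missing_elements(self) -> list[str]:
        """Return the SAGE elements that have not been filled in yet."""
        values = {
            "setting": self.setting,
            "audience": self.audience,
            "goals": self.goals,
            "ethics": self.ethics,
        }
        return [name for name, value in values.items() if not value]


# Illustrative values only; they are not taken from the reviewed literature.
draft = SageContext(
    audience="loan officer",
    setting=["explanation needed at decision time"],
    goals=["understand why an application was declined"],
)
print(draft.missing_elements())  # ['ethics']
```

A record of this kind simply makes it visible when one element of context, here the ethical measures, has not yet been considered.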

Audience – “Who I Am”

Whilst many authors advocate the “audience” being central to explanation (Atakishiyev et al. Citation2020; Miller Citation2019; Ribera and Lapedriza Citation2019; Rüping et al. Citation2006), few clarify what the term means. This paper defines audience as follows:

Audience: The immediate recipients of the system-delivered explanation.

The use of the term immediate underlines the importance of engaging with those that interact directly with the explanation system. In contrast, we use the term “stakeholders” to refer to persons who are impacted by the explanation but do not interact directly with the explanation system.

Table 2 provides an analysis of literature which explores the audience of an XAI model. Common themes for authors to examine are audience taxonomies, the audience mental model and audience communication through dialogue.

Table 2. Literature focusing on the audience of an XAI model.

Audience Taxonomies

Authors who emphasize the centrality of the audience have traditionally created or adopted a taxonomy of audience types to simplify the discourse. The creation of an audience taxonomy eases the identification of audience desiderata, which in turn enables the researcher to pre-determine appropriate explanation goals. Referring back to Dourish (Citation2004), this is a strongly positivist viewpoint which assumes audience desiderata are both delineable and stable. Critics might question this approach as lacking flexibility yet it is useful in a number of circumstances such as regulated industries, where segregation of duties is often mandated and the role of each audience group is typically quiescent.

Tomsett et al. (2018) introduced the reader to six audience roles which they argue are useful in the determination of user goals. Interestingly, they suggest that only two of the roles interact directly with the explanation system: the Creator, a person or group of people responsible for creation of the system, and the Operator, who provides the system with inputs and directly receives the system outputs. The remaining four roles (Executor, Decision-Subject, Data-Subject, Examiner) leverage information provided by the system, but do not interact directly with it.
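The distinction between roles that interact directly with the system and those that do not can be sketched in code. The example below is a hypothetical illustration: it records the six roles with a flag for direct interaction, then applies this paper's definitions of audience and stakeholders to them. The mapping of roles onto those two terms is an assumption made here for illustration, and the names `Role` and `split_roles` are invented.

```python
from enum import Enum


class Role(Enum):
    """The six roles named by Tomsett et al. (2018), with a direct-interaction flag."""
    CREATOR = ("Creator", True)
    OPERATOR = ("Operator", True)
    EXECUTOR = ("Executor", False)
    DECISION_SUBJECT = ("Decision-Subject", False)
    DATA_SUBJECT = ("Data-Subject", False)
    EXAMINER = ("Examiner", False)

    def __init__(self, label: str, interacts_directly: bool):
        self.label = label
        self.interacts_directly = interacts_directly


def split_roles(roles):
    """Separate roles into audience (direct interaction) and stakeholders (indirect)."""
    roles = list(roles)
    audience = [r.label for r in roles if r.interacts_directly]
    stakeholders = [r.label for r in roles if not r.interacts_directly]
    return audience, stakeholders


audience, stakeholders = split_roles(Role)
print(audience)      # ['Creator', 'Operator']
print(stakeholders)  # ['Executor', 'Decision-Subject', 'Data-Subject', 'Examiner']
```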

These roles built on earlier work by Ras, van Gerven, and Haselager (Citation2018) who presented two high level categories of “expert user” and “lay user,” each being reduced down into smaller groups of sub-users. On the other hand, Preece et al. (Citation2018) differentiated their work by introducing the concepts of “Theorist” and “Ethicist” into their representation of user groups. The authors argued that Theorists looked for explanations which helped further the development of AI from an academic standpoint as opposed to Developers who were concerned with practical implementation. Moreover, their “Ethicist” categorization recognized the need for an audience whose focus was on fairness, accountability and transparency.

More recently, Meske et al. (2022) presented a taxonomy of five distinct audience types along with generalized objectives for each of them. The paper suggests, for example, that “AI Users” need an explanation in order to validate that the AI reasoning is aligned with their own reasoning, whereas “AI Developers” on the other hand are more interested in explanations for debugging and improvement purposes. The three remaining types presented by the paper are “AI Managers,” “AI Regulators” and “Individuals affected by AI decisions.”

Finally, Arrieta et al. (Citation2020) also argued that the identification of user profiles is important to establishing an effective explanation. However, their approach provided exemplars of users (e.g. executive board members and product owners) rather than categorizations, which can make application difficult if those roles are not evident in the real-world scenario.

Audience Mental Model and Dialogue

Miller (Citation2019) is widely credited with re-igniting the discourse on the contribution of the social sciences to XAI. The philosophical and cognitive theories of human understanding present a unique opportunity to improve user acceptance of explanations by tailoring them to a specific audience. The audience approach explanations with their own preconceived ideas and levels of expert knowledge (Lombrozo Citation2006). Hence whilst generic theories of understanding such as the known limitations of working memory are useful, it is simultaneously important to deliver explanations which build on these audience-specific conditions. To ensure this is achieved, researchers have taken a variety of evaluation approaches.

Wang et al. (2020) took an ethnographic and participatory design approach in their medical study. Their collaboration with clinicians provided novel insights such as the importance of making raw and source data available. This enabled the clinicians to reconcile their own expert knowledge with the given explanation, thereby improving their own mental model of the application. Other approaches include asking participants to give oral feedback on their understanding (Ibáñez Molinero and Antonio García-Madruga 2011) and diagramming (Hoffman et al. 2018), whereby participants are asked to illustrate their understanding. For further review, Hoffman et al. (2018) provide a comprehensive assessment of mental model evaluation approaches.

However, a recent study of non-technical XAI users provides an interesting perspective on how an audience’s mental model can change (Chromik et al. Citation2021). In this paper, the authors investigate the accuracy with which an audience can demonstrate understanding of a global model, given a handful of local explanations. The results, in both moderated and non-moderated participatory groups, demonstrate how the audience’s perception of understanding decreases when they are given the opportunity to examine their own responses.

An audience’s mental model is the subject of constant change as new knowledge blends with old knowledge. This process becomes synonymous with explanation as a dialogue, or in other words an incremental process (Biran and Cotton 2017; Miller 2019) wherein the audience repeatedly questions the explanation agent until a point of understanding is reached (Hoffman et al. 2018) or to “fill a gap” (Doshi-Velez and Kim 2017). However, building knowledge in this way only allows for the audience to learn from the explanation agent. In contrast, there are also likely to be instances where experts have more knowledge than the explainer, resulting in them outperforming the system-generated explanation (Klein et al. 2017).

Dialogue should therefore be a two-way concept. Whilst we look to XAI to communicate unknown patterns and influences extracted from the prescribed data, the expert audience adds breadth, supplementing the prescribed data with their own peripheral knowledge and undocumented experiences. Hence, designing explanation with an interactive dialogue in mind allows for the development of a “learning loop,” which ultimately enhances the performance of both the XAI agent and the audience (Klein et al. Citation2017).

Setting – “I Can Use It”

The purpose of understanding the setting is to ensure that the XAI solution has efficacy in a real world environment. As such, factors within the setting represent pre-determined immutable constraints which must be respected. Their existence traditionally reflects the physical domain or environment within which the explanation operates. However, in line with Miller (2019) and Spinner et al. (2019), this paper argues the setting should also include limitations imposed by the mental model of the audience, i.e. the ability of the user to comprehend the explanation, since without a level of comprehension even the most pragmatic explanation will be rendered useless. Considering these factors, this paper therefore defines the term Setting as follows:

Setting: Factors affecting the usability and comprehension of the explanation.

The setting plays a foundational role in the design of an explanation. Similar to the overarching concept of context, the setting is domain, instance and audience specific. Table 3 summarizes papers addressing the setting of an explanation. The following cases are not exhaustive, but contribute toward highlighting the factors to consider in relation to the setting of an XAI agent.

Table 3. Literature addressing the setting of an explanation.

Explanation Frequency

The setting will dictate the frequency with which an explanation is required. Authors commonly cite the requirement that explanations are only needed when there is deviation from expected behavior (Adadi and Berrada Citation2018; Hoffman, Klein, and Mueller Citation2018). Some circumstances align with that perspective, such as the evaluation of loan applications (Arya et al. Citation2019), medical prognosis calculation (Nielsen et al. Citation2019), and mechanical fault diagnosis (Lee et al. Citation2019). Nevertheless, other circumstances dictate the need for an explanation irrespective of the outcome, such as recommender agents (Bobadilla et al. Citation2013).

Other scholars highlight the need for a balance between the time and effort required to produce the explanation and the perceived benefit (Bunt, Lount, and Lauzon Citation2012). Alternatively, Edwards and Veale (Citation2017) use the example of Google Spain’s experience with the Court of Justice of the European Union (CJEU) to offer the argument that explanations are unnecessary when a data subject just needs an action to be taken. Wachter, Mittelstadt, and Floridi (Citation2017) similarly challenge their readers to consider how meaningful an explanation might be before embarking on the explanation design.

Explanation Timing

Explanations may be required either in real time or after the event. Real-time explanations are required where speed is of the essence, such as in traffic management (Daly, Lecue, and Bicer Citation2013) or predictive maintenance of vehicles or machinery (Lee et al. Citation2019). Other circumstances allow for explanations to be provided at a later point in time, such as the audit of black box decision-making or the interrogation of a previously made decision (Vaccaro Citation2019).

Explanation Soundness and Completeness

Setting will also incorporate the trade-off between how sound or complete an explanation should be. This paper adopts definitions from Kulesza et al. (Citation2013), who define those terms as follows:

Completeness (“The whole truth”): The extent to which all of the underlying system is described by the explanation.

Soundness (“Nothing but the truth”): The extent to which each component of an explanation’s content is truthful in describing the underlying system.

Their study found that users chose to have both complete and sound explanations where available, but preferred complete over sound explanations when faced with a trade-off. However, the context of their study was a music recommendation engine, which poses no danger to the data subject should an incorrect recommendation be made. In comparison, explanations provided in high-stakes settings will need near 100% (if not a full 100%) fidelity to the underlying model. For example, a medical diagnostic tool will need to optimize soundness for an explanation as to why it diagnoses a medical condition (Kononenko Citation2001) or prescribes a certain medication (Lamy, Sedki, and Tsopra Citation2020). Otherwise, an approximation could have dangerous consequences.
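As a rough illustration of what fidelity to the underlying model could mean in practice, the sketch below measures how often a simplified explanation agrees with a black-box model over a set of inputs. The functions `black_box` and `surrogate`, their thresholds and the input grid are hypothetical and are not drawn from any of the cited studies; the agreement rate is only one possible proxy for soundness.

```python
from typing import Callable, Iterable, Tuple

Point = Tuple[float, float]


def black_box(x: Point) -> int:
    """Hypothetical opaque model: flags a case when a nonlinear score exceeds 1."""
    a, b = x
    return 1 if a * a + b > 1.0 else 0


def surrogate(x: Point) -> int:
    """Hypothetical simplified explanation: a single threshold rule on the first input."""
    a, _ = x
    return 1 if a > 0.8 else 0


def fidelity(model: Callable[[Point], int],
             explanation: Callable[[Point], int],
             inputs: Iterable[Point]) -> float:
    """Share of inputs on which the explanation reproduces the model's output."""
    cases = list(inputs)
    agree = sum(1 for x in cases if model(x) == explanation(x))
    return agree / len(cases)


# Evaluate both functions on an illustrative grid over [0, 1] x [0, 1].
grid = [(i / 10, j / 10) for i in range(11) for j in range(11)]
score = fidelity(black_box, surrogate, grid)
print(f"fidelity of the simplified rule: {score:.2f}")
```

Under these assumptions, a score below 1.0 indicates that the simplified rule misdescribes the model for some inputs, which is the kind of approximation a high-stakes setting may be unable to tolerate.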

In line with other authors (Kulesza et al. Citation2013; Ribeiro, Singh, and Guestrin Citation2016), completeness was found to improve the user’s trust in the model through their ability to learn, and therefore understand, how the model derived its results. This becomes a necessary quality for those in high-stakes domains, as trust improves user acceptance of the system (Biran and Cotton Citation2017; Freitas Citation2014; Ribeiro, Singh, and Guestrin Citation2016). This preference for both soundness and completeness implies that researchers in high-stakes fields should favor models which are explainable by design, since post-hoc explanations lose soundness and completeness by providing simplified estimates of the original model (Rudin Citation2019).

Explanation Comprehension

Miller (Citation2019) makes the case for ensuring developers design explainable models with an appreciation of human cognition in mind. The author builds upon an earlier paper (Miller, Howe, and Sonenberg Citation2017) which articulates the importance of comprehension in order to ensure the explanation is useful to the intended user in a practical setting. This view is widely held (Biran and Cotton Citation2017; Doshi-Velez and Kim Citation2017; Freitas Citation2014; Hoffman, Klein, and Mueller Citation2018; Huysmans et al. Citation2011; Sokol and Flach Citation2020).

Other considerations for general comprehension include theories such as the known limitations of working memory. In this respect, resource theorists argue that only six or seven items can be held in the working memory at any one time (Van den Berg, Awh, and Ji Ma Citation2014). This capacity is reduced even further when participants are asked to simultaneously process additional pieces of information. Theories such as these support the argument for simplified explanations, ensuring that the audience is not over-burdened with an excessive volume of information.

Yet simplicity comes at the cost of accuracy (Adadi and Berrada Citation2018), and so a balance must be reached. In Gilpin et al. (Citation2018) the authors voice concerns about the dangers of simplification. They argue that simplicity creates an opportunity to mislead the user, resulting in “dangerous or unfounded conclusions.” Instead they suggest a personalisable experience, allowing the user to explore the trade-off between comprehension and accuracy for themselves. This perspective derives from the belief that comprehension is itself a subjective notion, dependent upon extraneous factors such as the user’s prior experience, mental model and their own cognitive biases.

To quantify comprehension, most authors look toward a measure of complexity (or analogously simplicity) by documenting the size of the model output (Pazzani Citation2000). For example, see Lombrozo (Citation2007) who quantifies simplicity as the number of causes invoked in an explanation. Similarly, other authors advise their peers to consider simplicity as one of the desiderata which contributes toward an effective explanation (Miller, Howe, and Sonenberg Citation2017; Watson and Floridi Citation2021).
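The trade-off between simplicity and accuracy can be sketched with a toy example. Assuming, purely for illustration, that an explanation takes the form of additive feature attributions, the code below counts the causes invoked (the simplicity measure described above) and reports how much of the total attribution survives when only the top-k causes are shown. The feature names, weights and the `retained_share` measure are hypothetical and do not come from the cited papers.

```python
from typing import Dict


def simplify(attributions: Dict[str, float], k: int) -> Dict[str, float]:
    """Keep only the k causes with the largest absolute weight."""
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return dict(ranked[:k])


def complexity(explanation: Dict[str, float]) -> int:
    """Simplicity proxy: the number of causes invoked by the explanation."""
    return len(explanation)


def retained_share(full: Dict[str, float], simplified: Dict[str, float]) -> float:
    """Fraction of total absolute attribution kept by the simplified explanation."""
    total = sum(abs(w) for w in full.values())
    kept = sum(abs(w) for w in simplified.values())
    return kept / total if total else 1.0


# Hypothetical attributions for a single loan decision.
full = {"income": 0.40, "debt_ratio": -0.30, "age": 0.15,
        "tenure": 0.10, "postcode": -0.05}

for k in range(1, len(full) + 1):
    shorter = simplify(full, k)
    print(k, complexity(shorter), round(retained_share(full, shorter), 2))
```

A designer could use a table of this kind to choose how many causes to present, or expose `k` to the user so that they can explore the trade-off for themselves.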

Goals – “It Tells Me What I Need to Know”

This paper suggests the goal of any explanation is to satisfy the questions of a specific audience. Given that definition, the discourse then pivots around what the term satisfy means, and what types of questions that audience might ask.

Table 4 highlights key papers which investigate the goal of an explanation. The two common themes are identification of what the goal of an explanation is, and what it means for an explanation goal to be satisfied.

Table 4. Literature discussing explanation goals.

Goal Identification

Addressing the question of goal identification first, authors have proposed many and varied taxonomies for why an explanation may be required. In the domain of expert systems, Swartout (Citation1983) was one of the first authors to move away from the original concept of providing explanations which simply “translate the code” into understandable terms, and to instead think of an explanation in terms of a justification of an outcome – answering why an outcome was reached as opposed to how it was reached.

Wick (Citation1992) similarly focused on expert systems, building on Swartout (Citation1983)’s concept of justification by suggesting two additional audience desiderata: transferring knowledge to the user (which Wick referred to as duplication, but which is analogous to other authors’ categorizations of learning) and increasing the user’s confidence in the system (ratification). Many other authors have agreed with Wick and Swartout’s concept of justification (Biran and Cotton Citation2017; Clancey Citation1986; Hoffman, Klein, and Mueller Citation2018), but Adadi and Berrada (Citation2018) expanded on it to include their own taxonomy of control, improve and discover.

A common approach for the presentation of explanation frameworks is to bring together the role of the user and the goals of the user. Haynes, Cohen, and Ritter (Citation2009) presented a study of the types of questions users typically ask of explanation systems. Their analysis revealed three distinct types of goal-oriented questions: ontological (what is this?), mechanistic (how does it work?) and operational (how do I use it?). Similarly, Sørmo, Cassens, and Aamodt (Citation2005) combine user roles with goals to deliver a framework for explanation. Their five explanation goals of transparency, justification, relevance, conceptualization and learning align with the types of questions asked by a consortium of user groups.

Other authors focus on domains where the outcomes of these artificial agents involve safety-critical or highly impactful decisions. In that case, they recommend prioritizing fairness (Doshi-Velez and Kim Citation2017) and validation (Guidotti, Monreale, and Pedreschi Citation2019). Moreover, Wachter, Mittelstadt, and Floridi (Citation2017) write from a regulatory perspective and suggest three distinct aims:

To inform and help the subject understand why a specific decision was reached.

To provide grounds to contest adverse decisions.

To understand what could be changed to receive a desired result in the future, based on the current decision-making model.

This final point echoes the views of Lombrozo and Carey (Citation2006) who suggest that the aim of explanation is to “subserve future intervention and prediction.” By this they mean that in revealing the past, explanations help to predict and control the future through generalization to new cases.

Finally, a more recent paper (Arya et al. Citation2019) addresses the topic of explanation goals by providing a comprehensive taxonomy of question types using the format of a decision tree. The authors’ toolkit is intended as a design tool to enable a developer to identify which explainable methods should be employed depending upon the audience’s question and the desired nature of the outcome (i.e. tabular, textual or image-based explanation).

It may be a futile effort to attempt to categorize all possible questions which an explanation could satisfy, yet the importance of understanding the explanation goal is not diminished. Furthermore, it is evident that the goals of the explanation agent align with the intent of the audience; they are inextricably linked. Hence identifying the primary questions of the audience becomes key to achieving the ambition of a successful explanation (Miller Citation2019).

Goal Satisfaction

Returning to this paper’s definition of a goal being to satisfy the questions of a specific audience, the second part of this discourse addresses what the term “satisfy” means. In this respect, one approach is to distinguish between full and partial explanations. Consider, as an example, an explanation for how a house might have been burgled:

The burglar jumped over the wall, broke the kitchen window and climbed inside the house.

The above sentence may be accepted as an explanation by a lay person, but a crime scene investigator is not likely to be satisfied. They may ask further questions such as “which wall did the burglar jump over?,” “how did the burglar jump over the wall?,” “what tool was used to break the kitchen window?” … etc. Far from being a complete description of the events leading up to the burglary, it provides only a high-level summary of the burglar’s actions. This is what is meant by a partial explanation and, in Ruben (Citation2012)’s view, is the substance behind what others call “ordinary explanation.”

In contrast, the concept of a full explanation is more contentious. Hempelian disciples argue in favor of the purest interpretation, whereby a full explanation represents the “ideal” equivalent of “metamathematical standards of proof theory” (Ruben Citation2012). On the other hand, pragmatists contend that the notion of a genuinely full explanation, where the explainer provides such a thorough and sufficient account of the cause of an event that it leaves no room for further questioning, is not achievable. This paper supports the pragmatic approach, and aligns its views with the perspective of Putnam (Citation1978), who argues that the completeness of an explanation should be judged by its relevance to the level of interest of the audience. To satisfy the questions of an audience, an explanation must therefore be a full explanation, i.e. one which provides the audience with sufficient details to satisfy their curiosity, without further clarification or questioning. Anything else is simply a partial explanation which requires further dialogue before a satisfactory answer can be reached.

But how does one determine whether or not an explanation is satisfactory? Authors propose various techniques for evaluating the quality of an explanation. In fact, one notable paper (Mohseni, Zarei, and Ragan Citation2021) provided a comprehensive survey of literature looking at both users’ goals and researchers’ methods of explanation evaluation. The authors identified novice users, data experts and AI experts as key user groups, with novice users more interested in goals designed for gaining trust in the system, data experts focused on model intricacies and model tuning, and AI experts concerned with model debugging and interpretability. Alongside these design goals, the authors describe five evaluation measures aimed at verifying explanation effectiveness. The measures again focus on the ability of the explanation system to satisfy users’ desiderata such as usefulness, trust and reliance, as well as its ability to understand the user’s mental model and improve on human-AI task performance.

Also linking users to their respective goals, Sanneman and Shah (Citation2020) propose a framework to ensure the XAI system communicates the right information to the right user. Heavily influenced by work in the domain of situational awareness, they suggest measurement is achieved by questioning users regarding their perception of the information (whether or not they understand the inputs and outputs), comprehension (whether they understand the model) and projection (whether they understand what would need to change in order to change the output). However, this framework suffers from being model-centric rather than user-centric, since it neglects the key concept of information relevancy (James Murdoch et al. Citation2019), i.e. it fails to recognize the possibility that a user understands the inputs, outputs, workings and influencing factors of a model but still does not receive the information they are looking for.

Finally, Watson and Floridi (Citation2021) paid tribute to Turing’s seminal “Imitation Game” paper with their own “Explanation Game” approach. The authors introduce the partnership of Alice and Bob as they strive to understand the workings of a black box algorithmic predictor. In their paper, the authors conclude that accuracy, simplicity and relevance are key measures to support Alice and Bob on their quest.

Ethics – “I Trust It”

This paper takes a broader view of ethics than contemporary papers, arguing that it represents not just the expectations of truthfulness and fairness, but also the additional responsibility of sustainability – ensuring that explanations are developed and delivered in a way that supports wider social and environmental concerns.

Truthfulness and Fairness

Ensuring truthfulness from an explanatory agent may at first glance seem an axiomatic concept. After all, what use would an explanatory agent be if it told lies? However, news stories such as the Volkswagen emissions scandal (Hotten Citation2015) remind us that manipulation does happen, and unfortunately assumptions of honesty are not always justified. In a 2020 article, Lakkaraju and Bastani demonstrated how to fool users by providing misleading explanations. Their assessment reconstructed protected categories such as race or gender through the use of correlated attributes (such as zip code) thereby introducing bias into the model without the explanation revealing its presence.

Moreover, deception is not always deliberate since predictions, by their very nature, are never certain. Zhang, Song, and Sun (Citation2019) demonstrated this uncertainty in their investigation of Ribeiro’s LIME model when they stress-tested LIME across two well-known datasets and a third synthetic dataset. Their explanations revealed inconsistencies in the feature importance when different sampling parameters were applied.

Within the domain of XAI, transparency has become synonymous with ensuring truthfulness (Miller Citation2019) and fairness (Doshi-Velez and Kim Citation2017; Gilpin et al. Citation2018; Guidotti, Monreale, and Pedreschi Citation2019). Transparency has been shown to reduce misconceptions (Weller Citation2019) and increase confidence in explanations (Pearl and Li Citation2007; Sinha and Swearingen Citation2002; Wang and Benbasat Citation2007), thereby enabling trust (Kizilcec Citation2016). Trust remains a significant aim of most modern technologies as it impacts the audience’s desire to engage with the technology (Jens Riegelsberger, McCarthy, and McCarthy Citation2005). Furthermore, trust enables processes to be more efficient (Chami, Cosimano, and Fullenkamp Citation2002), by eliminating the need to second-guess or double-check a result. Hence providing a coherent explanation of results improves trust and thus supports more efficient processes.

However, trust is not a point-in-time measurement; it fluctuates (Holliday, Wilson, and Stumpf Citation2016). Mistakes can be costly, with false positives not only eroding customer loyalty (Buchanan Citation2019) but also increasing an organization’s workload (Ryman-Tubb, Krause, and Garn Citation2018). Organizations are acutely aware of the detrimental impact that accusations of unfair treatment of customers can have on both revenue and reputation. Whilst the auditing of algorithms is not currently mandated, organizations are investigating how to self-regulate their AI to avoid negative repercussions (Jobin, Ienca, and Vayena Citation2019).

The role of transparency is not to demonstrate the complete set of decision-making rationale that is used in the generation of a model outcome, but rather to relay the rationale that is pertinent to the audience’s goal. Whilst this often involves, for example, the justification of an outcome, transparency can also be employed to help embed and maintain trust by elucidating the boundary conditions of either the algorithmic output or the explanation itself (or both). Providing this additional information has been shown to benefit trust by avoiding “silly mistakes” (Ribeiro, Singh, and Guestrin Citation2016).

Sustainability

In the field of AI, sustainability issues are frequently overlooked. Yet AI algorithms have been shown to possess substantial carbon footprints (Schwartz et al. Citation2020; van Wynsberghe Citation2021). The field of XAI is no different, since explanations often employ Natural Language Processing (NLP) techniques in the generation of textual explanations. These techniques have been shown to have considerable computational and environmental cost. For example, when assessing BERT, a state-of-the-art NLP model developed by researchers at Google AI Language, Strubell, Ganesh, and McCallum (Citation2019) found that the carbon footprint of training it is roughly equivalent to that of a trans-American flight. Moreover, their investigations also revealed that the CO2 emissions emanating from the training of the NAS (Neural Architecture Search) algorithm were equivalent to the lifetime CO2 emissions of five average-sized cars.

Authors have traditionally argued that there is a trade-off between an algorithm’s accuracy and its carbon footprint. Yet an emerging corpus of literature suggests that accuracy need not be sacrificed if the size of the carbon footprint can be minimized through alternative resolution strategies. For example, in Mill, Garn, and Ryman-Tubb (Citation2022), the authors introduce the reader to a body of literature which attempts to address the carbon footprint of algorithms through the development of reproducibility checklists (Fursin Citation2020), energy reporting (Schwartz et al. Citation2020), energy prediction (Wolff Anthony, Kanding, and Selvan Citation2020), model redesign (Cai et al. Citation2019) and certification measures (Matus and Veale Citation2022). Whilst this is a nascent field for AI, there is also a notable lack of research investigating the carbon footprint of XAI models, alongside solutions for their mitigation. It is the hope of these authors that scholars are encouraged to explore opportunities in this domain.

Implications

Research Implications

This paper has three major research contributions. First, this is one of the few papers that delves into the detail of context in relation to the delivery of an XAI system. In particular, it is unique in the way in which it argues for a holistic understanding of context, rather than suggesting the hegemony of one aspect of context over another. Hence, this paper contributes to the already established corpus of literature reviews (Haque et al. Citation2023; James Murdoch et al. Citation2019; Mohseni, Zarei, and Ragan Citation2021; Sokol and Flach Citation2020), particularly by uniting rather than dividing the discourse.

Second, this paper suggests normative and XAI-specific definitions for context, setting, audience, goals and ethics. Establishing common terms and definitions provides scholars with a basis for common understanding, upon which further discourse can be built. Notably, the use of common definitions supports communication, assists transparency and limits confusion.

Finally, this paper proposes a novel human-centric framework by establishing connections between the audience of the explanation, and the setting, goals and ethics of the explanation (see ). The association of the audience to the setting ensures that the explanation is practically useful in a real-world environment; the association of the audience to the goals ensures that the explanation provides relevant information and is easily understood; and the inclusion of ethics ensures that the explanation can be trusted. It is the expectation of these authors that the application of this framework will enable researchers to demonstrate a comprehensive understanding of the audience’s desiderata for an explanation and thereby assist in the development of more readily accepted XAI systems.

Practical Implications

Research has repeatedly shown that the most effective explanations are those that have been built using audience input and collaboration (Bahalul Haque, Najmul Islam, and Mikalef Citation2023). Therefore, from a practical perspective, this framework can serve as a guide for collecting and organizing audience desiderata. The ubiquity of AI, coupled with the growing need for human-centric explanations, may encourage researchers to adopt this framework as a tangible and accessible part of the XAI design process.
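One way a practitioner might record audience desiderata against the four SAGE concepts is sketched below. The `SageProfile` class, its field names and the example entries are hypothetical and are not prescribed by this paper; the sketch simply shows how the concepts could be captured per audience and checked for gaps.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SageProfile:
    """Hypothetical record of one audience's desiderata under the SAGE headings."""
    audience: str
    setting: List[str] = field(default_factory=list)  # usability and comprehension constraints
    goals: List[str] = field(default_factory=list)    # questions the explanation must satisfy
    ethics: List[str] = field(default_factory=list)   # truthfulness, fairness, sustainability

    def missing(self) -> List[str]:
        """Return the SAGE concepts for which nothing has been recorded yet."""
        return [name for name in ("setting", "goals", "ethics")
                if not getattr(self, name)]


# Illustrative entries only; a real profile would come from audience collaboration.
profile = SageProfile(
    audience="loan applicant",
    setting=["explanation shown only after an adverse outcome", "plain-language text"],
    goals=["Why was the application declined?", "What could change the outcome?"],
)

print(profile.missing())  # ['ethics']
```

Here the `missing` check flags that no ethical considerations have yet been documented for this audience, prompting further discussion before design begins.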

This framework is designed to be industry-agnostic, thereby supporting widespread adoption. However, explanations are considered to be particularly relevant in high-risk industries where decisions may impact people’s day-to-day lives. Consequently, both regulators and practitioners alike have been concerned about adopting “black box” decision-making models without an explanation of the resulting output (Mill et al. Citation2023). In particular, the European Union’s General Data Protection Regulation (GDPR) introduces the need to have explanations in place where black-box models perform automated decision-making (Chesterman Citation2021). In this case, the application and documentation of the SAGE framework will support the argument that understanding the needs of the audience has been pivotal in the design of the explanation.

Conclusion

This paper has addressed the concept of context within the domain of XAI. Recent progress to adopt AI solutions has been slow and impeded, not least due to an inability to understand the intricate workings of the complex algorithms. In order to leverage the opportunities that AI has to offer, government, society and industry need to demonstrate that the algorithms provide relevant information to the right audience in an ethical manner. Explainable AI is one field attempting to address that need.

Studies on the concept of explanation date back to Aristotle, yet normative terminology and fundamental concepts for XAI are still the subjects of energetic debate. Whilst the notion of context being central to an explanation is widely accepted, contemporary literature has failed to delve into the detail of what constitutes context. Far from pulling apart the body of literature to argue that one element of context is more important than another, this paper advocates a more holistic perspective which unites the recent discourse. Using a thematic review, this paper has established that the four concepts of setting, audience, goals and ethics (SAGE) are widely recognized as key tools in the design of operational XAI solutions. Moreover, when brought together they can be employed as a scaffold to create a user-centric XAI solution.

illustrates this in a succinct way. First, researchers should be clear on whom they consider their audience to be, since the audience’s perspective plays a pivotal role in determining the setting, goals and ethics of the explanation. It is not unusual for authors to create or adopt a taxonomy of audience types to simplify the discourse. Assuming audience desiderata are both delineable and stable, each audience type is then associated with appropriate explanation goals in order to ascertain information relevancy.

For the setting, researchers should look to ensure that the audience finds the explanation to be usable, i.e. it has efficacy from the perspectives of both practicality and audience comprehension. This means not only understanding the physical constraints for the explanation system, but also understanding the circumstances within which the explanations are needed, such as how frequently an explanation might be required or whether the timing of the explanation is fundamental to its success. Critically, the setting will also incorporate the trade-off between the completeness and the soundness of an explanation. These qualities have been shown to influence audience trust and consequently audience acceptance of an explanation. Other aspects of comprehension involve a determination of how simple an explanation should be, often measured by the size of the model’s output. Yet care must also be taken not to over-simplify the explanation, since some authors argue that over-simplification has the ability to erode user trust.

Meanwhile, a clear understanding of the audience’s goals will ensure that the explanation provides information that is relevant. Previous authors have suggested a wealth of potential explanation goals, including justification, validation, and learning. Others have suggested frameworks to align explanation goals with the pre-determined roles of the audience, in an effort to provide additional structure and overcome unnecessary ambiguity. Moreover, the researcher should understand what constitutes a satisfactory answer for the audience. If there is any uncertainty as to what constitutes a full explanation then the ability to interrogate the initial explanation or create a dialogue is a key contributor to success.

Finally, ethical considerations should ensure the audience trusts both the explanation and the underlying model. Authors consider transparency to be a conduit for truthfulness and fairness, since it has been shown to reduce misconceptions and improve confidence, thereby enabling the key objective of trust. Yet truthfulness should not be taken for granted, as illustrated in recent years by both academics and industry professionals who have demonstrated an ability to manipulate explanations and therefore mislead the audience.

In addition, this paper takes a broader view of ethics than contemporary papers, arguing that it represents not just the expectations of truthfulness and fairness, but also the responsibility of sustainability – ensuring that explanations are developed and delivered in a way that supports wider social and environmental concerns. The sustainability of XAI models, insofar as it applies to their carbon footprints, has largely been ignored in contemporary literature. It is the hope of these authors that scholars are encouraged to explore further opportunities in this domain.

Limitations and Future Work

This is a comprehensive framework which attempts to identify all relevant aspects of context within the design of an XAI solution. However, collaboration with industry partners is notoriously difficult to establish and yet without an industry partner researchers will struggle to gain ready access to the information needed by this framework. Consequently, detailing the usability requirements of the explanation system, the goals of the audience and the ethical considerations of the explanation may require assumptions to be made and documented.

Whilst this thematic literature review has focused on the domain of XAI, it has also, when needed, reached out to other domains for additional insight. Studies on leadership, for example, have much to offer in respect of ethics, particularly insofar as it pertains to a real-world application. Some researchers may look to explore this area further to identify synergies with XAI. Moreover, much of today’s literature on cognitive science and philosophical aspects of explanation was discussed in respect of expert systems 30 years ago. Even though technology has moved forward immeasurably since then, the ways humans understand and engage with explanations have not. These authors, therefore, encourage fellow researchers not to neglect the past (Ashraf et al. Citation2018) and instead seek out those established perspectives in order to expedite more understanding in this domain. Additionally, there is a notable opportunity for scholars to investigate and explore the environmental and social aspects of XAI with a view to ensuring solutions are produced in the most ethical way possible.

Finally, the validation of the SAGE conceptual framework in a working environment will be incorporated within future work. Nevertheless, fellow researchers are encouraged to test its efficacy in other real-world settings for themselves. It is the hope and expectation of these authors that this framework is sufficiently pragmatic to be useful in a wide range of industry scenarios.

Acknowledgements

This research received no specific grant from any funding agency in the public, commercial or not-for-profit sectors. The authors would like to thank the anonymous reviewers for their valuable feedback.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The data that support the findings of this study are available from the corresponding author, upon reasonable request.

Notes

1. Gross Value Added. The value generated by any unit engaged in the production of goods and services (Office for National Statistics).

References

- Adadi, A., and M. Berrada. 2018. Peeking inside the black-box: A survey on explainable artificial intelligence (xai). IEEE Access 6:52138–32. doi:10.1109/ACCESS.2018.2870052.

- Arrieta, A. B., N. Díaz-Rodríguez, J. Del Ser, A. Bennetot, S. Tabik, A. Barbado, S. García, S. Gil-López, D. Molina, R. Benjamins, et al. 2020. Explainable artificial intelligence (xai): Concepts, taxonomies, opportunities and challenges toward responsible ai. Information Fusion 58:82–115. doi:10.1016/j.inffus.2019.12.012.

- Arya, V., R. K. Bellamy, P.-Y. Chen, A. Dhurandhar, M. Hind, S. C. Hoffman, S. Houde, Q. V. Liao, R. Luss, A. Mojsilović, et al. 2019. One explanation does not fit all: A toolkit and taxonomy of ai explainability techniques. arXiv preprint arXiv:1909.03012.

- Ashraf, A., J. Vermeulen, D. Wang, B. Y. Lim, and M. Kankanhalli. 2018. Trends and trajectories for explainable, accountable and intelligible systems: An hci research agenda. In Proceedings of the 2018 CHI Conference on human factors in computing systems, Montreal, Canada. 1–18.

- Atakishiyev, S., H. Babiker, N. Farruque, R. Goebel, M.-Y. Kim, M. H. Motallebi, J. Rabelo, T. Syed, and O. R. Zaïane. 2020. A multi-component framework for the analysis and design of explainable artificial intelligence. arXiv preprint arXiv:2005.01908.

- Bahalul Haque, A. K. M., A. K. M. Najmul Islam, and P. Mikalef. 2023. Explainable artificial intelligence (XAI) from a user perspective: A synthesis of prior literature and problematizing avenues for future research. Technological Forecasting and Social Change 186:122120. doi:10.1016/j.techfore.2022.122120.

- Biran, O., and C. Cotton. 2017. Explanation and justification in machine learning: A survey. In IJCAI-17 Workshop on Explainable AI (XAI), Melbourne, Australia, 8, 8–13.

- Bobadilla, J., F. Ortega, A. Hernando, and A. Gutiérrez. 2013. Recommender systems survey. Knowledge-Based Systems 46:109–32. doi:10.1016/j.knosys.2013.03.012.

- Brown, P. J. 1995. The stick-e document: A framework for creating context-aware applications. Electronic Publishing-Chichester- 8:259–72.

- Buchanan, B. 2019. Artificial Intelligence in Finance. London, UK: The Alan Turing Institute. doi:10.5281/zenodo.2612537.

- Bunt, A., M. Lount, and C. Lauzon. 2012. Are explanations always important? A study of deployed, low-cost intelligent interactive systems. In Proceedings of the 2012 ACM international conference on intelligent user interfaces, Lisboa, Portugal, 169–78.

- Byrne, R. M. 1991. The construction of explanations. In AI and Cognitive Science '90: University of Ulster, Jordanstown, 337–51. London: Springer.

- Cai, H., C. Gan, T. Wang, Z. Zhang, and S. Han. 2019. Once-for-all: Train one network and specialize it for efficient deployment. arXiv preprint arXiv:1908.09791.

- Carenini, G., and J. D. Moore. 1993. Generating explanations in context. In Proceedings of the 1st international conference on intelligent user interfaces, Orlando, Florida, USA, 175–82.

- Centre for Data Ethics and Innovation. 2020. Review into bias in algorithmic decision-making. https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/957259/Review_into_bias_in_algorithmic_decision-making.pdf.

- Chami, R., T. F. Cosimano, and C. Fullenkamp. 2002. Managing ethical risk: How investing in ethics adds value. Journal of Banking & Finance 26 (9):1697–718. doi:10.1016/S0378-4266(02)00188-7.

- Chesterman, S. 2021. Through a glass, darkly: Artificial intelligence and the problem of opacity. The American Journal of Comparative Law 69 (2):271–94. doi:10.1093/ajcl/avab012.

- Christopher Bouch, D., and J. P. Thompson. 2008. Severity scoring systems in the critically ill. Continuing Education in Anaesthesia Critical Care & Pain 8 (5):181–85. doi:10.1093/bjaceaccp/mkn033.

- Chromik, M., M. Eiband, F. Buchner, A. Krüger, and A. Butz. 2021. I think I get your point, AI! The illusion of explanatory depth in explainable AI. In 26th International conference on intelligent user interfaces, College Station, Texas, USA, 307–17.

- Clancey, W. J. 1986. From GUIDON to NEOMYCIN and HERACLES in twenty short lessons. AI Magazine 7 (3):40–40.

- Daly, E. M., F. Lecue, and V. Bicer. 2013. Westland Row why so slow? Fusing social media and linked data sources for understanding real-time traffic conditions. In Proceedings of the 2013 international conference on intelligent user interfaces, Santa Monica, California, USA, 203–12.

- Dey, A. K. 2001. Understanding and using context. Personal and Ubiquitous Computing 5 (1):4–7. doi:10.1007/s007790170019.

- Doshi-Velez, F., and B. Kim. 2017. Towards a rigorous science of interpretable machine learning. arXiv preprint arXiv:1702.08608.

- Dourish, P. 2004. What we talk about when we talk about context. Personal and Ubiquitous Computing 8 (1):19–30. doi:10.1007/s00779-003-0253-8.

- Edwards, L., and M. Veale. 2017. Slave to the algorithm: Why a right to an explanation is probably not the remedy you are looking for. Duke Law & Technology Review 16:18.

- Eriksson, T., A. Bigi, and M. Bonera. 2020. Think with me, or think for me? On the future role of artificial intelligence in marketing strategy formulation. The TQM Journal 32 (4):795–814. doi:10.1108/TQM-12-2019-0303.

- Freitas, A. A. 2014. Comprehensible classification models: A position paper. ACM SIGKDD Explorations Newsletter 15 (1):1–10. doi:10.1145/2594473.2594475.

- Fursin, G. 2020. Enabling reproducible ML and systems research: The good, the bad, and the ugly. August. https://doi.org/10.5281/zenodo.4005773.

- Gilpin, L. H., D. Bau, B. Z. Yuan, A. Bajwa, M. Specter, and L. Kagal. 2018. Explaining explanations: An overview of interpretability of machine learning. In 2018 IEEE 5th international conference on data science and advanced analytics (DSAA), Turin, Italy, 80–89. IEEE.

- Guidotti, R., A. Monreale, and D. Pedreschi. 2019. The AI black box explanation problem. ERCIM News 116:12–13.

- Guidotti, R., A. Monreale, S. Ruggieri, F. Turini, F. Giannotti, and D. Pedreschi. 2018. A survey of methods for explaining black box models. ACM Computing Surveys (CSUR) 51 (5):1–42. doi:10.1145/3236009.

- Hall, P., N. Gill, and N. Schmidt. 2019. Proposed guidelines for the responsible use of explainable machine learning. arXiv preprint arXiv:1906.03533.

- Hall, W., and J. Pesenti. 2017. Growing the artificial intelligence industry in the UK. UK: Department for Science, Innovation and Technology. UK Government. https://assets.publishing.service.gov.uk/media/5a824465e5274a2e87dc2079/Growing_the_artificial_intelligence_industry_in_the_UK.pdf.

- Haque, A. B., A. N. Islam, and P. Mikalef. 2023. Explainable artificial intelligence (XAI) from a user perspective: A synthesis of prior literature and problematizing avenues for future research. Technological Forecasting and Social Change 186:122120. doi:10.1016/j.techfore.2022.122120.

- Haynes, S. R., M. A. Cohen, and F. E. Ritter. 2009. Designs for explaining intelligent agents. International Journal of Human-Computer Studies 67 (1):90–110. doi:10.1016/j.ijhcs.2008.09.008.

- Hoffman, R. R., G. Klein, and S. T. Mueller. 2018. Explaining explanation for “explainable AI”. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 62, 197–201. Los Angeles, CA: SAGE Publications.

- Hoffman, R. R., S. T. Mueller, G. Klein, and J. Litman. 2018. Metrics for explainable AI: Challenges and prospects. arXiv preprint arXiv:1812.04608.

- Holliday, D., S. Wilson, and S. Stumpf. 2016. User trust in intelligent systems: A journey over time. In Proceedings of the 21st international conference on intelligent user interfaces, California, USA, 164–68.

- Hotten, R. 2015. Volkswagen: The scandal explained. BBC News 10:12.

- Hull, R., P. Neaves, and J. Bedford-Roberts. 1997. Towards situated computing. In Digest of Papers. First International Symposium on Wearable Computers, Cambridge, Massachusetts, USA, 146–53. IEEE.

- Huysmans, J., K. Dejaeger, C. Mues, J. Vanthienen, and B. Baesens. 2011. An empirical evaluation of the comprehensibility of decision table, tree and rule based predictive models. Decision Support Systems 51 (1):141–54. doi:10.1016/j.dss.2010.12.003.

- Ibáñez Molinero, R., and J. Antonio García-Madruga. 2011. Knowledge and question asking. Psicothema 23 (1):26–30.

- James Murdoch, W., C. Singh, K. Kumbier, R. Abbasi-Asl, and B. Yu. 2019. Definitions, methods, and applications in interpretable machine learning. Proceedings of the National Academy of Sciences 116 (44):22071–80. doi:10.1073/pnas.1900654116.

- Riegelsberger, J., M. A. Sasse, and J. D. McCarthy. 2005. The mechanics of trust: A framework for research and design. International Journal of Human-Computer Studies 62 (3):381–422. doi:10.1016/j.ijhcs.2005.01.001.

- Jobin, A., M. Ienca, and E. Vayena. 2019. The global landscape of AI ethics guidelines. Nature Machine Intelligence 1 (9):389–99. doi:10.1038/s42256-019-0088-2.

- Kaushal, R., K. G. Shojania, and D. W. Bates. 2003. Effects of computerized physician order entry and clinical decision support systems on medication safety: A systematic review. Archives of Internal Medicine 163 (12):1409–16. doi:10.1001/archinte.163.12.1409.

- Kizilcec, R. F. 2016. How much information? Effects of transparency on trust in an algorithmic interface. In Proceedings of the 2016 CHI conference on human factors in computing systems, San Jose, California, USA, 2390–95.

- Klein, G., B. Shneiderman, R. R. Hoffman, and K. M. Ford. 2017. Why expertise matters: A response to the challenges. IEEE Intelligent Systems 32 (6):67–73. doi:10.1109/MIS.2017.4531230.

- Kononenko, I. 2001. Machine learning for medical diagnosis: History, state of the art and perspective. Artificial Intelligence in Medicine 23 (1):89–109. doi:10.1016/S0933-3657(01)00077-X.

- Kulesza, T., S. Stumpf, M. Burnett, S. Yang, I. Kwan, and W.-K. Wong. 2013. Too much, too little, or just right? Ways explanations impact end users’ mental models. In 2013 IEEE symposium on visual languages and human centric computing, San Jose, California, USA, 3–10. IEEE.

- Lamy, J.-B., K. Sedki, and R. Tsopra. 2020. Explainable decision support through the learning and visualization of preferences from a formal ontology of antibiotic treatments. Journal of Biomedical Informatics 104:103407. doi:10.1016/j.jbi.2020.103407.

- Langer, M., D. Oster, T. Speith, H. Hermanns, L. Kästner, E. Schmidt, A. Sesing, and K. Baum. 2021. What do we want from explainable artificial intelligence (XAI)?–A stakeholder perspective on XAI and a conceptual model guiding interdisciplinary XAI research. Artificial Intelligence 296:103473. doi:10.1016/j.artint.2021.103473.

- Lee, W. J., H. Wu, H. Yun, H. Kim, M. B. Jun, and J. W. Sutherland. 2019. Predictive maintenance of machine tool systems using artificial intelligence techniques applied to machine condition data. Procedia CIRP 80:506–11. doi:10.1016/j.procir.2018.12.019.

- Li, B.-H., B.-C. Hou, W.-T. Yu, X.-B. Lu, and C.-W. Yang. 2017. Applications of artificial intelligence in intelligent manufacturing: A review. Frontiers of Information Technology & Electronic Engineering 18 (1):86–96. doi:10.1631/FITEE.1601885.

- Lipton, Z. C. 2018. The mythos of model interpretability: In machine learning, the concept of interpretability is both important and slippery. Queue 16 (3):31–57. doi:10.1145/3236386.3241340.

- Lombrozo, T. 2006. The structure and function of explanations. Trends in Cognitive Sciences 10 (10):464–70. doi:10.1016/j.tics.2006.08.004.

- Lombrozo, T. 2007. Simplicity and probability in causal explanation. Cognitive Psychology 55 (3):232–57. doi:10.1016/j.cogpsych.2006.09.006.

- Lombrozo, T., and S. Carey. 2006. Functional explanation and the function of explanation. Cognition 99 (2):167–204. doi:10.1016/j.cognition.2004.12.009.

- Matus, K. J., and M. Veale. 2022. Certification systems for machine learning: Lessons from sustainability. Regulation & Governance 16 (1):177–96. doi:10.1111/rego.12417.

- Meske, C., E. Bunde, J. Schneider, and M. Gersch. 2022. Explainable artificial intelligence: Objectives, stakeholders, and future research opportunities. Information Systems Management 39 (1):53–63. doi:10.1080/10580530.2020.1849465.

- Mikalef, P., K. Conboy, J. Eriksson Lundström, and A. Popovič. 2022. Thinking responsibly about responsible AI and ‘the dark side’ of AI. European Journal of Information Systems 31 (3):257–68. doi:10.1080/0960085X.2022.2026621.

- Miller, R. A. 1994. Medical diagnostic decision support systems–past, present, and future: A threaded bibliography and brief commentary. Journal of the American Medical Informatics Association 1 (1):8–27. doi:10.1136/jamia.1994.95236141.

- Miller, T. 2019. Explanation in artificial intelligence: Insights from the social sciences. Artificial Intelligence 267:1–38. doi:10.1016/j.artint.2018.07.007.

- Miller, T., P. Howe, and L. Sonenberg. 2017. Explainable AI: Beware of inmates running the asylum or: How I learnt to stop worrying and love the social and behavioural sciences. arXiv preprint arXiv:1712.00547.

- Mill, E., W. Garn, and N. Ryman-Tubb. 2022. Managing sustainability tensions in artificial intelligence: Insights from paradox theory. In Proceedings of the 2022 AAAI/ACM Conference on AI, Ethics, and Society, Oxford, England, UK, 491–98.

- Mill, E., W. Garn, N. Ryman-Tubb, and C. Turner. 2023. Opportunities in real time fraud detection: An explainable artificial intelligence (XAI) research agenda. International Journal of Advanced Computer Science & Applications 14 (5). doi:10.14569/IJACSA.2023.01405121.

- Mittelstadt, B., C. Russell, and S. Wachter. 2019. Explaining explanations in AI. In Proceedings of the conference on fairness, accountability, and transparency, Atlanta, Georgia, USA, 279–88.

- Mohseni, S., N. Zarei, and E. D. Ragan. 2021. A multidisciplinary survey and framework for design and evaluation of explainable AI systems. ACM Transactions on Interactive Intelligent Systems (TiiS) 11 (3–4):1–45. doi:10.1145/3387166.

- Moore, J. D., and W. R. Swartout. 1989. A reactive approach to explanation. In 11th International Joint Conference on Artificial Intelligence, Detroit, Michigan, USA, 1504–10.

- Nielsen, A. B., H.-C. Thorsen-Meyer, K. Belling, A. P. Nielsen, C. E. Thomas, P. J. Chmura, M. Lademann, P. L. Moseley, M. Heimann, L. Dybdahl, et al. 2019. Survival prediction in intensive-care units based on aggregation of long-term disease history and acute physiology: A retrospective study of the Danish National Patient Registry and electronic patient records. Lancet Digital Health 1 (2):e78–e89. doi:10.1016/S2589-7500(19)30024-X.

- Nikolaou, V., S. Massaro, M. Fakhimi, and W. Garn. 2022. Using machine learning to detect theranostic biomarkers predicting respiratory treatment response. Life 12 (6):775. doi:10.3390/life12060775.

- Nikolaou, V., S. Massaro, M. Fakhimi, L. Stergioulas, and W. Garn. 2021. COVID-19 diagnosis from chest X-rays: Developing a simple, fast, and accurate neural network. Health Information Science and Systems 9 (1):1–11. doi:10.1007/s13755-021-00166-4.

- Nozaki, N., E. Konno, M. Sato, M. Sakairi, T. Shibuya, Y. Kanazawa, and S. Georgescu. 2017. Application of artificial intelligence technology in product design. Fujitsu Scientific & Technical Journal 53 (4):43–51.

- Panda, S. 2018. Impact of AI in manufacturing industries. International Research Journal of Engineering & Technology (IRJET) 5 (11):1765–67.

- Pazzani, M. J. 2000. Knowledge discovery from data? IEEE Intelligent Systems and Their Applications 15 (2):10–12. doi:10.1109/5254.850821.