?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

In recent years, the integration of intelligent industrial process monitoring, quality prediction, and predictive maintenance solutions has garnered significant attention, driven by rapid advancements in digitalization, data analytics, and machine learning. As traditional production systems evolve into self-aware and self-learning configurations, capable of autonomously adapting to dynamic environmental and production conditions, the significance of reinforcement learning becomes increasingly apparent. This paper provides an overview of reinforcement learning developments and applications in the manufacturing industry. Various sectors within manufacturing, including robot automation, welding processes, the semiconductor industry, injection molding, metal forming, milling processes, and the power industry, are explored for instances of reinforcement learning application. The analysis focuses on application types, problem modeling, training algorithms, validation methods, and deployment statuses. Key benefits of reinforcement learning in these applications are identified. Particular emphasis is placed on elucidating the primary obstacles impeding the adoption and implementation of reinforcement learning technology in industrial settings, such as model complexity, accessibility to simulation environments, safety deployment constraints, and model interpretability. The paper concludes by proposing potential alternatives and avenues for future research to address these challenges, including improving sample efficiency and bridging the simulation-to-reality gap.

Introduction

In the context of the Fourth Industrial Revolution, commonly known as Industry 4.0, manufacturing processes are undergoing a transformation toward increasingly digitalized and automated ecosystems. This shift is driven by the development of various technologies, including Cyber-Physical Systems, the Industrial Internet of Things, Cloud Computing, Big Data, and Artificial Intelligence (AI). The revolution is based on the integration of these technologies in industrial processes, enabling the creation of flexible, smart, and autonomous factories, thus offering new opportunities for efficient, advanced, and sustainable production (Lasi et al. Citation2014).

Specifically, the continuous advances in AI and emerging applications in the industry present enormous opportunities to integrate state-of-the-art technologies and address challenges driven by the digital transformation in these environments (Peres et al. Citation2020).

As a sub-field of AI, Machine Learning (ML) algorithms are key elements in Industry 4.0 (Bécue, Praça, and Gama Citation2021). ML models, which are data-driven approaches, provide a set of techniques and methodologies to model and learn patterns from data in machines and computer systems. This smart exploitation of data in the industry has the potential to significantly impact Key Performance Indicators (KPIs) such as productivity, efficiency, quality, environmental footprint, and availability, which are of utmost importance in production systems.

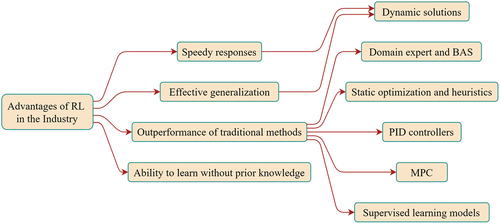

Within the realm of ML, supervised and unsupervised learning methods primarily analyze data patterns for prediction and clustering applications. In contrast, reinforcement learning (RL) excels in decision-making, offering support and control in real-time production settings. RL operates on the principle of goal-directed and interactive learning, guided by a numerical reward signal (Sutton and Barto Citation2018). The learner is not told which actions to take, but instead must discover which actions yield the most reward by trying them.

RL has demonstrated remarkable success across various domains, including complex games (Silver et al. Citation2018), video games (Lample and Singh Chaplot Citation2017), natural language processing (Uc-Cetina et al. Citation2023), healthcare (Yu et al. Citation2021), finance (Hambly, Xu, and Yang Citation2023), and autonomous driving (Kiran et al. Citation2021), showcasing its significant potential. However, despite these successes, implementing RL in manufacturing processes presents substantial challenges. Consequently, the adoption of RL technologies in industrial settings remains in its early stages.

Despite advances in digitalization, many industrial environments still rely on manual or traditional systems for process and system control and planning. Although extensive research has been conducted to improve these traditional systems (Guzman, Andres, and Poler Citation2022; Tavazoei Citation2012), these models often lack the adaptability and flexibility to respond to environmental changes. RL, however, has the potential to overcome many of these limitations. Given RL’s capabilities, it is essential to analyze the benefits it can provide, identify the primary challenges limiting its industrial applicability, and propose solutions for its controlled and safe implementation.

Relevant research has explored the use of RL in specific fields and tasks, such as robotics (Singh, Kumar, and Pratap Singh Citation2022), power systems (Cao et al. Citation2020), and the semiconductor industry (Stricker et al. Citation2018). Moreover, Nian, Liu, and Huang (Citation2020) conducted a study on the application of RL in industrial control focusing on a specific example of an industrial pumping system, comparing RL with traditional algorithms. However, these studies do not provide a comprehensive perspective across all industry domains.

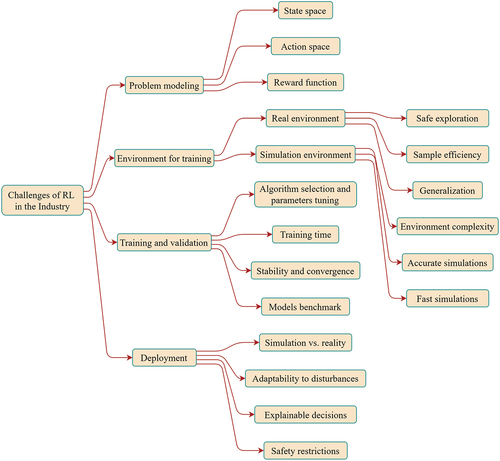

Our analysis explores various critical industrial applications, such as robotic manipulation, scheduling and routing design, operational performance, production efficiency, and fault diagnosis. To enhance clarity and facilitate comprehension, we categorize these applications according to industry type. Our main focus lies in exploring the benefits of RL and its challenges related to training and implementation, specifically targeting the simulation-to-reality (sim-to-real) gap, safety concerns, and deployment limitations. While acknowledging the existing body of literature, previous studies have not extensively addressed these areas. Through the examination of these challenges, our objective is to offer insights that can enrich the effectiveness of RL applications across various industrial contexts with shared characteristics. Hence, the contributions of this article are outlined as follows:

Analysis of diverse RL applications: Examine the current applications and objectives of RL within industrial domains, including modeling strategies, types of RL technologies utilized, and the level of validation and deployment achieved across various industries.

Identification of advantages and challenges: Identify the primary benefits and challenges associated with implementing RL in industrial settings. Explore potential alternatives to assess RL’s scalability and effectiveness in real-world industrial environments.

We initiate our exploration by elucidating the foundational concepts of RL and surveying the principal algorithms documented in the literature, presenting a taxonomy of these algorithms in Section 2. Proceeding to Section 3, we conduct an extensive review of RL applications within manufacturing, spanning diverse areas such as robot automation, welding processes, the semiconductor industry, injection molding, metal forming, milling processes, and the power industry. Additionally, we emphasize the variety of applications and offer an analysis of the environmental conditions employed in training, along with the current deployment status of each application. In Sections 4 and 5, we delve into a discussion regarding the potential and challenges associated with implementing RL in industrial domains, while also delineating possible avenues for future research. Finally, Section 6 encapsulates the conclusions drawn from our analysis and potential directions for further research.

Reinforcement Learning

This section provides an explanation of the field of RL and presents an overview of the main algorithms that have been applied in the presented articles.

RL provides an efficient framework for solving optimal control and decision-making tasks in stochastic and sequential environments modeled as Markov Decision Processes (MDPs) (Littman, Moore, and Moore Citation1996). Formally, an MDP can be defined by a 6-tuple , where:

is the set of states.

is the set of actions.

is the reward function, mapping each state-action pair to a real number representing the immediate reward obtained by taking that action in that state.

is the state transition probability distribution, specifying the probability of transitioning from one state to another given a specific action.

is the initial state distribution, which assigns probabilities to each state indicating the likelihood of starting the MDP in that state.

is the discount factor, a scalar value in the range

that balances the importance of immediate rewards against future rewards in the MDP;

discounts delayed rewards, while

values all future rewards equally.

A state, embodying all necessary information to predict system transitions, must adhere to the Markov property in an MDP (Equation 1) (Sutton and Barto Citation2018). This property asserts that, given the present state and action, the subsequent state’s probability distribution depends only on these factors, not on earlier states or actions, indicating that the current state sufficiently predicts future system behavior.

The conventional MDP framework, operating under the Markov property assumption, inherently assumes a fully observable environment. In real-world industrial scenarios, achieving full observability can be challenging, given that a portion of the state remains concealed from the agent (Zhao and Smidts Citation2022). This inherent limitation introduces an additional layer of complexity to the decision-making process. When the Markov property is not met, a pragmatic alternative is the consideration of a Partially Observable Markov Decision Process (POMDP), offering a solution for decision-making in environments where the agent’s perception is restricted to a limited portion of the environment or is subject to noise (Spaan Citation2012).

In the formal definition of a POMDP, encapsulated by the tuple , two pivotal additional elements come into play. Firstly,

comprises the set of observations that the agent can receive, contingent on the transition state

and potentially influenced by the previously executed action

in the environment’s prior state

. Secondly, the probability function

, which, given a pair

, determines the probability

assigned to each observation

, considering the state

and action

. Additionally, the initial observation is sampled from a probability function

. The key distinction of a POMDP from a fully observable MDP lies in the fact that the agent now perceives an observation

, as opposed to directly observing

.

In certain scenarios, external variables can influence the transition model (Ebrie et al. Citation2023). These variables, which are not inherently part of the state, may limit the model’s ability to generalize when directly incorporated (Hallak, Di Castro, and Mannor Citation2015). To address this challenge, the Contextual Markov Decision Process (CMDP) extends the capabilities of a traditional MDP. It achieves this by introducing a context space and a mapping function

. This function associates each context

with a specific set of MDP parameters (Modi et al. Citation2018). Consequently, a CMDP is represented as a tuple

, where

maps any context

to an MDP

. This MDP is defined by the tuple

, wherein

,

,

, and

are specific to context

. In alignment with the stated focus and objectives of this paper, our analysis does not extend to exploring potential applications within the industry domain for this particular extension.

In any of the frameworks, the process is modeled as an agent iteratively interacting with an environment. The agent takes actions in the environment, and subsequently, the environment provides the agent with the system state (or observations in the case of a POMDP) and the reward associated with the previous action and the current state. The agent aims to learn a function that maps states (or observations) to actions, referred to as policy , with the goal of maximizing the expected cumulative future reward. This iterative process within the framework of an MDP is illustrated in .

Algorithm 1 introduces a unified iterative reinforcement learning process that seamlessly integrates standard MDPs (and POMDPs) within a finite episode consisting of sequential interaction steps, each lasting for steps. To achieve effective training, it’s essential to engage in numerous interactions with the environment, which in turn necessitates a significant number of episodes. Furthermore, employing specific techniques to explore the state space (Ladosz et al. Citation2022), ensuring efficient sampling (Yu Citation2018), and maintaining training stability are crucial (Nikishin et al. Citation2018). Section 2.1 provides further elaboration on insights into policy updating.

Table

In RL, agents navigate environments with scalar feedback (rewards) guiding them toward optimal actions. These actions, encapsulated within the policy function, are pivotal for shaping control strategies and behavioral policies. A fundamental concept in RL is the deterministic greedy policy, , a strategy that consistently selects actions to maximize the expected cumulative future reward. Presented below is an introductory overview of the fundamental principles and primary functions in RL. All equations presented in this section are based on the formulation from Sutton and Barto (Citation2018).

At any given time step , the expected cumulative future reward is denoted by

, as defined in Equation 2. This value represents the discounted sum of rewards along an episode trajectory, guided by policy

and governed by the discount factor

. Mathematically,

can be expressed recursively: it starts with the immediate reward from the state-action pair

and adds the discounted expected cumulative future reward from time step

, denoted by

.

In assessing the efficacy of a policy, value functions are essential. Specifically, the value function provides an estimation of the discounted expected cumulative future reward

when starting from each state

under the guidance of policy

. This estimation is detailed in Equation 3.

Similarly, the state-action value function, or Q-values, , estimates the value associated with each state-action pair, as defined in Equation 4. This function summarizes the discounted expected future cumulative reward, starting from the state-action pair

, and follows policy

until the end of the episode.

Bellman equations play a crucial role in articulating the recursive relationships within RL. Along with their immediate derivations, they constitute a key mechanism for solving problems in the control theory domain (Sutton and Barto Citation2018). These equations establish a connection between the value of a state or a state-action pair and the values of its successor states and state-action pairs.

Equations 5 and 6 illustrate the Bellman equations for the previously defined value functions, incorporating the recursive nature of as presented in Equation 2. Equation 5 represents the Bellman equation for the state value function

, illustrating how the value of a state is recursively related to the values of its successor states under policy

. Similarly, Equation 6 outlines the Bellman equation for the state-action value function

, showcasing the recursive connection between the value of a state-action pair and the values of its successor state-action pairs under policy

.

Since the objective of RL aims to identify an optimal policy , the goal is to maximize expected rewards, thereby finding the policy that maximizes future trajectory values. Equations 7 and 8 define the Bellman optimality equations characterizing the optimal value functions

and

respectively. These equations introduce the maximization aspect by replacing

with

and

with

.

Solving the Bellman optimality equations to derive the optimal policy stands as a central objective in RL algorithms, aiming to maximize long-term rewards within the environment. Consequently, the optimal policy

is determined by actions that maximize Q-values in each state, as illustrated in Equation 9.

Initially, an agent’s decision policy may be random or based on prior expert knowledge, such as through transfer learning (Nian, Liu, and Huang Citation2020). In decision-making scenarios, where the agent is familiar with the environment dynamics, it leverages this knowledge to enhance its policy through dynamic programming algorithms (Liu et al. Citation2021). However, in pure RL scenarios, where the agent lacks knowledge about the initial environment’s transition probability distribution , exploration strategies come into play during training. Non-deterministic policies are employed to explore unknown states, refining estimates of the value function and learned policies. The data collection process carefully balances random actions (under a random policy) and known actions (under a greedy policy). The RL training process involves iterative refinement, where the agent evaluates actions in the environment to maximize the expected cumulative reward and utilizes these estimations to improve the policy (generalized policy iteration) (Sutton and Barto Citation2018).

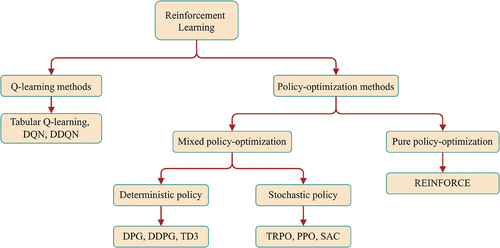

Classical Reinforcement Learning Methods

Classical RL methods in the literature can be classified into two major groups: Q-learning, and policy-optimization methods. Most RL algorithms involve learning value functions that estimate how good it is to be in a given state (EquationEquation 3(3)

(3) ), or how good it is to perform a given action in a given state (EquationEquation 4

(4)

(4) ). illustrates this classification into method types along with some relevant examples of specific algorithms found in the reviewed studies.

Q-Learning Methods

In Q-learning methods, the RL agent learns a Q-value function, representing the values of state-action pairs used to select actions with higher expected returns. The primary update step in Algorithm 1 for such methods involves adjusting the value of each state-action pair using a learning rate , which integrates the received reward and the estimated value of the subsequent state. This update process is formalized in Algorithm 2 (Watkins and Dayan Citation1992). Consequently, the policy is derived from the learned Q-value function.

Table

In tabular Q-learning methods, the expected future value for each state-action pair is estimated using a tabular format. However, relying on a lookup table may lead to slow convergence, particularly when dealing with a large number of states.

Real-world applications often require learning agents to navigate MDPs featuring large or continuous state and action spaces. The limitations of tabular methods, driven by the curse of dimensionality – where the number of states (or actions) increases exponentially with the growth of the state (or action) space’s dimensions – become apparent in such scenarios. Representing all possible state-action pairs in a lookup table becomes impractical, especially in large state and/or action spaces (Powell Citation2007).

To address this, function approximation becomes essential, allowing the generalization of learned policies from visited states to unknown ones (Xu, Zuo, and Huang Citation2014). These methods facilitate a more efficient computation and are adept at handling problems with high-dimensional spaces. RL employs various function approximators, including linear combinations of features, decision trees, nearest neighbors, neural networks, among others. Deep Reinforcement Learning (DRL) emerges from integrating Deep Neural Networks (DNN) with RL, evolving into a powerful tool for operating and managing large, high-dimensional, and complex continuous systems.

Deep Q-learning (DQN) (Mnih et al. Citation2015) learns the value function using DNNs as a function approximation to enable generalization. A replay buffer is a memory structure used to collect transitions and sample them randomly in batches for training the agent. The replay buffer allows more efficient training with better convergence behavior and stability; and increases sample efficiency, since it efficiently reuses past experiences and helps to decorrelate the training data.

In DQN, two networks with the same architecture are used to represent the value function. The main network updates its weights at every training step, while the target network’s weights are frozen and periodically copied from the main network. In Double Deep Q-learning (DDQN) (Van Hasselt, Guez, and Silver Citation2016), both networks are used when computing target values. The main function is used to select the action, while the target function estimates the value. DDQN addresses the maximization bias from the value function overestimation in Q-learning and DQN, allowing for more stable and faster learning.

Q-learning methods are typically applied to discrete action spaces. Therefore, in continuous domains, it is common to discretize the action space when using Q-learning approaches.

Policy-Optimization Methods

In policy-optimization methods, we can distinguish between pure policy-optimization methods and mixed policy-optimization methods.

Pure policy-optimization methods do not learn value functions; instead, they directly learn the policy. These methods can handle continuous action spaces and learn stochastic policies. In DRL, the weights of a DNN are updated repeatedly for batches of transitions to obtain an estimate of a deterministic or stochastic greedy policy.

For instance, REINFORCE is a Monte Carlo variant within the category of policy gradient methods (Sutton et al. Citation1999). The REINFORCE policy update is outlined in Algorithm 3. Here, governs the policy

undergoing continual learning. The policy parameters, denoted by

, are updated via gradient ascent on

. The update process occurs iteratively for each episode utilizing a learning rate

.

Table

Mixed policy-optimization methods are a hybrid between Q-learning and pure policy-optimization methods. They rely on two functions that work together to accelerate learning, introduce more stability, and improve performance: i) the critic, which estimates the value function, and ii) the actor, which updates the probability distribution of the parametric policy guided by the critic. As the critic network learns which states are better or worse, the actor uses the value estimations to guide the training and learn more efficiently, avoiding undesirable states (Grondman et al. Citation2012). Algorithm 4 delineates the fundamental updates for both the actor and critic in such policy-optimization methods, corresponding to and

updates, respectively. Here,

denotes the actor learning rate, and

represents the critic learning rate.

Table

Some methods consider deterministic policies, such as Deterministic Policy Gradient (DPG) (Silver et al. Citation2014), Deep Deterministic Policy Gradient (DDPG), which combines DPG and DQN, and Twin-delayed deep deterministic policy-gradient (TD3) (Fujimoto, Hoof, and Meger Citation2018), which is an extension of DDPG.

In contrast, others consider stochastic policies, like the Trust Region Policy Optimization (TRPO) method (Schulman et al. Citation2015), which incorporates a regularization term when updating the policy function. This introduces a constraint to minimize changes from the previous policy, avoiding possible divergences or unfavorable modifications. Proximal Policy Optimization (PPO) (Schulman et al. Citation2017) aims to simplify TRPO. It considers a clipped surrogate objective, making it easier to implement while maintaining similar performance. It also incorporates multiple epochs of stochastic gradient ascent at each policy update.

Soft Actor-Critic (SAC) (Haarnoja et al. Citation2018) is another example of a stochastic mixed policy-optimization method. It introduces randomness in the evaluation through an entropy measure that encourages exploration. Thus, there’s a trade-off between the entropy measure and the expected returns, which can be balanced. SAC accelerates learning and prevents the policy from converging too quickly to poor local optima.

Other Reinforcement Learning Methods

The methods discussed in the preceding section fall under the category of online model-free RL methods. In online model-free learning, the RL model or agent engages with an environment, gathering data through its own experiences and refining its understanding through trial and error. This environment can take the form of a real-world setting or virtual simulators. Notably, learning within virtual environments, such as digital twins, has demonstrated significant efficacy (Matulis and Harvey Citation2021). However, there might be a substantial gap between the virtual and the physical environment, which necessitates careful consideration of the transferability of learned behaviors and policies from simulations to real-world applications (Ranaweera and Mahmoud Citation2023). Conversely, learning within physical environments avoids the need for a simulation and the sim-to-real gap but presents other challenges and associated risks, such as the potential for hardware damage, safety concerns, slower learning, and higher costs related to trial-and-error learning in a real-world setting (Dulac-Arnold et al. Citation2021).

Within the literature, two primary types of online learning in RL are distinguished: model-free and model-based. Model-based RL involves the agent learning the dynamics of the environment through interactions and subsequently leveraging these learned dynamics to simulate interactions with the real environment for planning (Moerland et al. Citation2023). Periodically, new real-world experiences are incorporated, contributing to refining the dynamics in the simulated environment and correcting potential deviations. Model-based approaches prove particularly beneficial in environments where interactions are limited or costly, or when there’s a need to expedite the learning process.

On the other hand, offline learning involves the RL model or agent learning from a predefined dataset of accumulated experiences, guided by a behavior policy. This behavior policy can be a combination of various policies. The goal in offline RL is to acquire a robust policy from the predefined dataset that outperforms the behavior policy using data collected from it, without further exploration in the environment (Levine et al. Citation2020). To achieve this, the data collection policy must be sufficiently exploratory and somewhat effective in showcasing desirable states and favorable consequences of action-taking in different states. Moreover, this methodology integrates techniques to address issues related to distribution shift and overestimation challenges in offline RL, arising due to constraints in validating beliefs (Kumar et al. Citation2019).

In this review, our primary focus has centered on model-free online RL applications, representing the predominant approach across various industries. Many of these applications cover training scenarios within virtual environments (Zhong, Zhang, and Ban Citation2023), while some also involve the utilization of real-world systems for training or retraining purposes (Ahn, Na, and Song Citation2023).

Reinforcement Learning Applications

This section reviews selected representative application fields of RL in the manufacturing industry, including robot automation, welding processes, semiconductor industry, injection molding industry, metal forming industry, milling processes, and power industry. These industrial sectors are considered innovative fields with significant potential for RL applications. Moreover, most of the reviewed applications in this work can be easily extended and transferable to different domains because they represent the core manufacturing process for several industries (such as automotive and home appliances). Many productions utilize the same industrial procedures; for instance, welding or part stamping processes are used in the production of car products and mobile phones. Furthermore, an RL algorithm does not require previous information about “how to control a process;” but the possible control points in the system (action space). An RL algorithm can be adaptable and useful for a wide range of tasks as it can learn from experience and adapt to various contexts without relying on particular assumptions. This fact favors adaptation to other similar productions. However, the control agents need previous training before deploying them in a real-world environment. An agent cannot effectively operate within Industry 4.0 without prior expertise, as it may have a detrimental impact on industrial KPIs.

Each field is summarized in a table that provides the reference of each reviewed research, the specific application in the field, the RL algorithm implemented, its classical classification presented in section 2.1, and finally, the type of problem that it is trying to solve. In the tables, QL refers to Q-learning methods, and PO refers to policy-optimization approaches.

The criteria used in the discussion of various applications are based on four principal aspects to guide the development of future similar applications. The considered aspects are:

Objective: This includes the type of task being addressed, the specific industrial application, and categorization into control, planning, or maintenance solutions.

Modeling: This involves the state space, action space, and the modelization of the reward function for each application.

Training strategy: Analysis focuses on identifying relevant algorithms based on specific applications and problem modeling characteristics.

Validation and deployment: Attention is given to evaluation criteria and the extent of deployment for the provided solution.

Robot Automation

In the manufacturing context, the movement of a robotic arm requires a well-designed trajectory for efficient operation. Traditionally, this task has been carried out by researchers or engineers with expertise in the domain. However, recent research has focused on leveraging ML techniques to reduce the reliance on human experts and achieve promising results. Robotic arms are typically used in complex and non-linear environments with uncertainties stemming from disturbances and sensor noise, which require frequent reconfiguration (Pane et al. Citation2019). To address these challenges, RL technology has been explored to increase robustness and provide adaptive and immediate responses. While planning and control processes can be time-consuming, robotic arms must identify an efficient solution for a given task and also adapt in real-time to changing situations.

In this field, Tejer, Szczepanski, and Tarczewski (Citation2024) introduced a Q-learning algorithm tailored for controlling a robotic arm. Its objective is to adeptly choose products from three sources by considering factors such as availability at pickup points, historical data, and future projections. Within this framework, the RL agent garnered positive rewards for executing suitable movements, negative rewards for unsuitable ones, and neutral rewards for opting to wait. Both training and validation were conducted within a simulated environment. Therefore, it’s important to note that this research is in the developmental phase and has yet to be transitioned to real-world implementation.

Meyes et al. (Citation2017) developed a Q-learning algorithm for a six-axis industrial robot. The RL agent was trained to play the wire loop game, using only two dimensions for simplicity. The action space consisted of 6 movement actions to guide the metal loop, while the state space was a three-dimensional matrix containing local information about the robot’s tool center point, the loop’s position relative to the wire and the goal. The algorithm provided positive rewards when the goal was reached and negative ones when any non-desired situation occurred. An experimental setup was used to test the robot, which was able to plan complex motions along continuous trajectories and generalize to new scenarios not seen during the training phase, with successful outcomes.

Similarly, Pane et al. (Citation2019) considered the Actor-Critic algorithm introduced in Bayiz and Babuska (Citation2014) to improve the performance of a controller in tracking an industrial robotic manipulator arm. Two additive compensation methods were employed for this task, using RL algorithms to enable precise movement along a square reference path, a circular reference path, and a trajectory on a three-dimensional surface. The controlled variable was the velocity of the arm, based on its position and current velocity, in order to achieve maximum precision in the reference paths. The reward system was based on the precision joint error in the trajectories. In experimental tests, the proposed methods were successfully benchmarked against other industrial control techniques, including proportional derivative, Model Predictive Control (MPC), and iterative learning control.

Additional methods for controlling industrial robotic manipulator arms have been proposed in the literature. For instance, in Zeng et al. (Citation2020) and in Atae and Gruber (Citation2021), the effectiveness of the DDPG algorithm as a control technique is validated. Furthermore, a SAC approach is utilized in Matulis and Harvey (Citation2021).

Liu et al. (Citation2020) also applied a DDPG approach to a humanoid robotic-arm controller, which was multitasking-oriented and trained using a simulator environment to achieve rapid and stable motion planning. The RL control agent learned to minimize trajectory errors by moving the arm based on given coordinates.

In addition, Beltran-Hernandez et al. (Citation2020) employed a SAC method to instruct robotic agents in precise assembly skills. More specifically, a robotic agent underwent training to perform peg-in-hole tasks with uncertain hole positions. The RL agent was tasked with selecting controller parameters enabling it to reach the goal with minimal contact force. The approach was successfully validated in experimental tests involving a range of concrete tasks, yielding highly promising results.

In the same domain, Ahn, Na, and Song (Citation2023) explored the application of a SAC algorithm for dynamic peg-in-hole tasks within the field of robotics. The proposed assembly approach, integrating both visual and force inputs, endowed the robot with the capability to adapt to diverse assembly piece shapes and hole locations. Pretraining encompassed both force-based and image-based trajectory generators, utilizing human demonstration data and virtual environment training, respectively. Subsequent training took place in the real environment. Experimental validation underscored the efficacy of the strategy in handling a range of peg-in-hole challenges. Their method not only facilitated precise assembly but also demonstrated exceptional performance in the face of substantial orientation or initial position difficulties.

Nguyen et al. (Nguyen et al. Citation2024) proposed harnessing the inherent symmetry of the peg-in-hole task to efficiently train a memory-based SAC agent. Specifically, they demonstrated that various initial configurations of the peg and hole were equivalent due to the symmetry of a round hole, enabling data augmentation. By transforming trajectories initiated in one configuration to generate valid trajectories in others, they streamlined the search space for more sample-efficient learning. The problem was formulated as a POMDP, with the state comprising the relative pose from the peg to the hole coordinate. However, the agent received partial observations, including 3D position, torques, and forces. Actions corresponded to displacements of the arm’s tip. The reward structure was sparse, granted only upon successful peg insertion into the hole. Training was conducted using a simulator, yet leveraging data augmentation and regularization through auxiliary losses to exploit symmetries, enabling efficient training with real robots in minimal time.

The main applications of RL in the field of robot automation involve the control and planning of robotic manipulator arms in two and three-dimensional space, summarized in . The robotic arm plays a crucial role in the field of industrial automation, however, to get high-cost efficiency and provide good quality manufactured products, each task of the industrial robot must be well calibrated. Real-time adaptive supervision is necessary to ensure high accuracy and precision in complex, nonlinear, and uncertain environments. Recent approaches use algorithms to enable robots to deal with environmental variations and autonomously adapt to them. RL satisfies these requirements, but designing the state information passed to the agent for action selection and modeling the reward function, which is central to the learning objective, are complex aspects of these RL solutions.

Table 1. Robot automation RL applications and algorithms.

Policy-optimization methods are the most widely considered algorithms in this field, as they can handle continuous state and action spaces. However, Q-learning methods such as DQN can manage continuous state spaces but not continuous action spaces. In some of the reviewed works, the algorithm has to select from continuous ranges of velocities and movements in a multi-dimensional space. Policy-optimization algorithms respond very well to the needs of the industry by providing high-quality performance on the proposed goals. Several applications have been tested to demonstrate the effectiveness of the learned policies. Although these attempts demonstrate the conceptual usability of the methods, some research still relies on simplified case studies that are hardly scalable to more complex, large-scale, and real-world problems. Since robotics deals with real-world scenarios, unlike other traditional RL applications such as recommendation systems and gaming that deal with simulated ones, computing resource requirements and training time difficulties are more pronounced. The main drawbacks of interacting with real-world environments are:

These environments are frequently complex and dynamic in continuous state and action spaces.

In physical robotic environments, rewards are often sparse and delayed. Although RL algorithms are designed to handle delayed rewards, coping with very sparse rewards remains a challenge in learning efficient policies.

Policies must maximize the future cumulative reward but also avoid risky situations.

Welding Processes

Welding processes are highly complex and nonlinear, with the quality of results dependent on a multitude of variables and environmental conditions. These aspects make an effective system control quite challenging. While traditional controllers, such as proportional-integral (PI) or proportional-integral-derivative (PID) controllers, are commonly used in this industry, they do not solve all the challenges. These controllers typically work within a limited range of parameters and do not respond well enough to the dynamics and uncertainties of the process, since making precise predictions in this domain is difficult. Hence, more adaptive methods are required to achieve the desired welding quality under any dynamic and uncertain environment and to avoid defects in the welds such as porosity, cracking, or poor mechanical properties.

RL methods have been used for control purposes in conjunction with traditional PID controllers, as seen in Jin, Li, and Gao (Citation2019). In this research, an adaptive PID controller based on an Actor-Critic approach was employed to regulate the weld pool width. The RL agent was trained using a simulator to choose an input parameter for the PID controller, in order to minimize the difference between the actual and the desired weld pool width.

RL techniques have found application in the laser welding industry, where laser welding’s advantages, such as speed, high-quality welds, and reduced tool wear, have led to increased popularity. However, ensuring replicability in the produced parts remains a challenge due to dynamic conditions.

Gunther et al. (Günther et al. Citation2014, Citation2016) implemented an Actor-Critic algorithm to control the applied laser power, aiming to achieve the desired weld depth. Their proposed control system architecture integrated three key technologies:

Utilization of a DNN to extract significant variables from sensors, representing the environment’s state.

Application of a Temporal Difference (TD)-Learning algorithm with linear function approximation for real-time nexting (Modayil, White, and Sutton Citation2014) to assess system performance. Incorporated into the reward function, this guided control training toward achieving the target weld depth. TD-Learning predicted relevant features of the system’s sensor data for action selection in the control model.

Implementation of the Actor-Critic algorithm (Degris, Pilarski, and Sutton Citation2012) for laser power control.

Subsequently, a laser welding simulator provided the sensor data necessary for training and testing the control system architecture.

Masinelli et al. (Masinelli et al. Citation2020) proposed a similar control system architecture with the objective of adjusting laser power to control welding quality. The state of the system was captured using acoustic and optical emission sensors. The reward function incorporated welding depth and porosity. The learned control policy underwent testing in a laboratory environment, providing valuable algorithm validation. The control system consisted of:

An encoder based on a convolutional neural network (CNN) for translating sensor values to the environment state, reducing dimensionality.

A CNN classifier to compute the reward for the agent.

Development of two control approaches: a Fitted Q-iteration (FQI) algorithm (Antos, Szepesvári, and Munos Citation2007) and a REINFORCE algorithm.

In Quang et al. (Citation2022), the FQI approach from Masinelli et al. (Citation2020) was tested in two environments at different scales: in a laboratory environment and in a more unpredictable industrial environment. The authors highlighted differences in training time between both environments.

Mattera, Caggiano, and Nele (Citation2024) introduced a novel Stochastic Policy Optimization (SPO) algorithm, a variant of the REINFORCE algorithm, tailored for optimizing gas metal arc welding processes. The primary goal was twofold: achieving a desired penetration depth while minimizing the resulting bead area. The action space was defined by a combination of process parameters that are updated based on their current values, ensuring adaptability to changing conditions. The reward function was designed to leverage the disparity between the model output and the desired reference point. Moreover, penalties were incorporated, correlating with the deviation from the reference point and the bead area. Training of the algorithm was conducted within a simulated environment, showcasing superior computational efficiency and solution performance compared to a genetic algorithm across various scenarios. This work is in an early stage, only considering simulation scenarios.

The applications summarized in this field are detailed in , specifying the algorithm utilized for each.

Table 2. Welding processes RL applications and algorithms.

Significant progress has been made in advancing the control of welding processes. However, implementing these advancements in industrial plants remains challenging. Moreover, exploring additional control parameters, such as velocity and distance, and creating more complex scenarios to train RL agents that accurately reflect the intricacies of current industrial systems are crucial areas to focus on.

While policy-optimization methods are prevalent in the field, especially for control applications, a key challenge lies in translating sensor data into meaningful state and reward functions to properly input into the MDP. This translation is particularly necessary in processes where a large number of sensors capture data. The captured data from the process needs to be translated into simple and concise information required for the RL system. DNN and other RL approaches can be used for this translation task, learning a mapping from the sensor values to the MDP state representation and reward values.

Semiconductor Industry

In the realm of the semiconductor industry, researchers have delved into the application of RL for scheduling tasks. Within the intricate landscape of job shops, conventional production scheduling planning demonstrates inefficiency in addressing uncertainty and lacks the capability to respond promptly to unforeseen changes in scenarios (Yeap Citation2013). An exemplary study by Waschneck et al. (Citation2018) focused on enhancing production scheduling with the objective of improving uptime utilization.

The methodology involved the RL agent’s meticulous selection of each lot position, taking into account the capabilities, availabilities, and setups of the machines, along with the specific properties of the jobs slated for scheduling. The formulation of the reward function was grounded in the uptime utilization within the work centers and the overall factory, incorporating penalties associated with the problem constraints. Validation of the proposed approach was conducted through simulations in a small-scale semiconductor wafer processing factory. The results were then benchmarked against various dispatching heuristics to assess the effectiveness and efficiency of the developed RL-based production scheduling strategy.

Similarly, Stricker et al. (Citation2018) implemented an autonomous and adaptive Q-learning with a DNN system for order dispatching in the semiconductor industry. The RL agent was given information about the waiting and finished batches, the target of the next orders, and the position of the worker. Then, the agent selected what to do with a batch. The reward system was designed to maximize the utilization of all manufacturing equipment and minimize lead time. A TRPO algorithm was implemented for an equivalent task with the same aim in Kuhnle et al. (Citation2019), where the simulation environment represented a real use case in the semiconductor industry. Actions were defined to be the movement of resources based on their locations and relevant information about the order and machines. Moreover, an analogous TRPO approach was presented in Kuhnle, Röhrig, and Lanza (Citation2019) for order dispatching in a complex job shop and exemplified in the semiconductor industry. Later, the methodology was applied for a more complex and robust control system design in the semiconductor industry and evaluated with real-world scenarios (Kuhnle et al. Citation2021). Evaluations in all cases were done by comparison with heuristics in the simulation environments. The RL approach proved to be the best.

In the supply chain field, Tariq et al. (Citation2020) provided a DQN solution for optimal replenishment in a semiconductor complex supply chain collaboration model to mitigate the bullwhip effect. This research was done using a simulator with real use case data from a company. Results were compared with the company operating behavior. The action space was discrete where actions represented different replenishment amounts. The observations were based on the demand and the anticipation of target stock levels. The objective was to minimize penalties related to the positions deviating from the mean value of stock levels.

Similarly, in the semiconductor industry, a DQN was employed to enhance efficiency by optimizing production plan scheduling within an uncertain and dynamic environment (Lee and Lee Citation2022).

The state was defined based on four features per idle machine: the actual setup type, the number of waiting wafers, the relationship between planned and current production quantity, and the degree of production plan fulfillment at that moment.

An action represented the selection of a wafer layer by the agent from among the layers waiting to be processed on each machine.

The reward function gauged how closely the agent’s action selection aligned with the production plan.

Ma and Pan (Citation2024) employed RL algorithms to adjust weights in a run-to-run controller for real-time manufacturing processes, based on observable system states when facing unknown disturbances. The control objective was to ensure quality amidst environmental distortions. They trained DDPG and TD3 agents in a simulated environment and compared them with the control system without real-time weight adjustment. No tests were conducted in real environments due to the sim-to-real gap, which was not addressed in the study.

Previous investigations have shown remarkable results on the application of RL, outperforming traditionally rule-based systems, heuristics, and companies’ decision rules. Both Q-learning and policy-optimization methods with DNN provide valuable solutions for complex and dynamic tasks such as production scheduling, order dispatching, and supply chain management (). These methods help to minimize deviations in expected results, maximize machine utility, reduce production times, and avoid unfeasible or undesired planning. Despite these advantages, training is computationally expensive, and DNNs are considered black-box models, making it difficult to predict how the DRL agents will behave in unknown situations. In the literature, when an ML model is not transparent or interpretable to the user is often referred to as a black-box. This lack of transparency can be problematic if the user wants to justify or authenticate the behavior of the model because is unable to understand how the model derives its results. Further research is required to analyze and understand the performance and, in particular, the learned strategies under more scenarios. While some architectures have been tested with real use cases, they still rely on simulations. Therefore, the models validated by simulations must be transferred and tested in physical environments to confirm their efficacy.

Table 3. Semiconductor industry RL applications and algorithms.

Injection Molding Industry

Injection molding is a highly precise manufacturing process used extensively in the production of plastic products due to its efficiency and ability to produce complex shapes. The consistency of the final product quality largely depends on process variables such as temperature and pressure. Consequently, significant research efforts have been directed toward controlling and stabilizing these variables to achieve the desired quality. Optimal values for these process parameters, such as temperature and pressure, can improve the injection molding process by achieving the desired precision with minimal cost, time, and emissions. Traditionally, domain experts have relied on trial and error methods to determine the optimal process parameters. In order to avoid human errors, domain expert dependency, and streamline decisions, more advanced control and decision-making methods have been applied. However, most of the applied techniques are static with difficulties to react to changes or unforeseen events, anticipating situations, or responding in real-time. Some recent studies have applied RL to overcome the challenges related to the dynamism of the environments and the necessity of real-time responses.

Guo et al. (Citation2019) have developed an Actor-Critic framework for process parameter selection in an injection molding process. The parameters researched are the mold and melt temperatures, packing pressure, and packing time. The state of the MDP was defined by the parameters’ values and part thickness. Finally, the reward aggregated the time spent and the error in the quality indexes. They used a prediction model for training and an online practical environment for validating the results against static optimization.

Similarly, a temperature compensation control strategy under dynamic conditions based on DQN was proposed in Ruan, Gao, and Li (Citation2019). The aim was to achieve temperature stability by increasing, decreasing, or maintaining voltage values. The state was given by the current-voltage value, the sampling temperature, and the temperature error. The RL agent was penalized if the temperature error was too high. To validate the agent an experimental test was done with a modified injection molding machine.

Batch productions have also been considered in this research field. Non-efficient decision-making strategies for the setpoints in the process may cause unsatisfactory quality indexes. To avoid this, Qin, Zhao, and Gao (Citation2018) proposed an Actor-Critic approach to control the product quality in injection molding batch productions. Their objective was to minimize the error between the target quality and the predicted quality using a simulation environment. The controlled variables adjustments were based on the process variables at each moment in time. Similar successful research about RL in injection molding batch production control was presented in Wen et al. (Citation2021), where a Q-learning approach was validated in a simulation environment.

Finally, another Q-learning approach was presented in Li et al. (Citation2022). This approach was developed for fault-tolerant injection speed control to monitor accurately its value and whether the actuator was malfunctioning. It was successfully validated in an injection molding process by comparison with traditional methods. In this article, the algorithm was trained and validated in a simulation environment considering problem simplifications.

The summarized applications within this field are concisely presented in .

Table 4. Injection molding industry RL applications and algorithms.

The quality of results in a plastic injection process hinges significantly on two factors: i) the configuration parameters of the process, and ii) the control system’s capacity to adapt to environmental variations. Furthermore, the overall quality of production batches must be approached comprehensively, taking into account the interdependence of one cycle on the previous one. This intricate interplay of factors underscores the complexity inherent in optimizing industrial processes. It is within this intricate landscape that Dynamic Algorithm Configuration (DAC) becomes particularly pertinent. Adriaensen et al. (Adriaensen et al. Citation2022) introduced DAC as a paradigm within the optimization community, leveraging RL for the fundamental task of parameter selection. In light of this, integrating DAC into parameter selection becomes a pathway to address the dynamic challenges encountered in industrial settings.

In addition to quality optimization, the industry also seeks to minimize carbon emissions while meeting production controls and planning objectives. RL algorithms present an efficient means to achieve these dual objectives. The studies in this field exemplify the convergence and efficacy of these methods through straightforward approaches.

Metal Forming Industry

In the field of metal forming, heavy plate rolling is a very popular sheet deformation process in many industries, including the automotive and maritime industries (Meyes et al. Citation2018). Designing the rolling process is a complex task, as it involves multiple passes that depend on one another to achieve the desired product properties and shape. Traditionally, domain experts have planned the rolling schedule. However, this task involves many objectives, such as minimizing time, adapting velocities, reducing energy consumption, and optimizing machine deterioration, making it challenging to operate efficiently. To address these challenges, more sophisticated techniques have been extensively investigated.

In particular, Meyes et al. (Citation2018) proposed DQN to design a pass schedule for a heavy plate rolling use case to plan the height and grain size of the part per pass. Experiments were successfully validated in a simulation environment.

Similarly, Scheiderer et al. (Citation2020), applied a SAC algorithm to achieve the height and grain size goals of the parts and minimize energy consumption. The action space was based on the next height for the part and the pause time. The state provided to the RL agent was the current height and the grain size of the part at the end of the current pass, the part’s temperature, and the current pass schedule. Finally, the reward function was computed with the deviations from the goals and the energy accumulated. The trained agent performance was benchmarked in a simulation environment against a human domain expert’s behavior.

Concurrently, free-form metal sheet stamping technology integrated DQN to autonomously acquire the optimal stamping path and forming parameters, thereby enhancing control process precision (Liu et al. Citation2020). The DQN agent was specifically utilized to optimize the stamping process with a hammer, defining actions for the hammer, states that include coordinates and calculated stresses, and a reward function based on the desired shape of the next state.

Forging is another widely applied deformation metal process with longer life, higher deformation degree, and higher cost than the rolling one. Therefore, the final geometry of a workpiece is essential, but the final cost of the process is also important to consider. In this context, Reinisch et al. (Citation2021) applied a DDQN approach to design pass schedules in open-die forging processes. The main objectives for the planning were the achievement of the desired geometry in as few passes as possible and taking care of the machines as much as possible. The variables considered in the state space were heights, temperatures, and strain in order to set the height reduction and the bite ratio from discretized values. Finally, a forging experiment was carried out to validate the solution proposed.

Nievas et al. (Citation2022) designed a Q-learning approach for parameter selection in a hot stamping process to minimize the production time of a batch with the desired quality. The product quality was measured with the final temperature of the part. The controlled parameter was the die closing time per each sheet. The action space was based on three actions: increase, decrease, or maintain the previous closing time. The state space considered for action selection was the die temperature, the previous closing time, and the remaining parts of the batch. The training was done with surrogate models of the process and successfully validated against the business-as-usual strategy.

The study conducted by Xian et al. (Citation2024) is centered on alloy compositional design employing DQN. In contrast to many other RL applications, they integrated experimental data to train surrogate models, which are then utilized to simulate rewards during training. This configuration enables the trained agent to collect real-world data, subsequently employed to refine the surrogate model, thus addressing the sim-to-real gap. Within this RL framework, the agent determined the composition of a new component to enhance an alloy, with each state representing a specific alloy composition. The primary objective was to maximize the transformation enthalpy of the alloys. Finally, the study compares the performance of their RL approach with Bayesian global optimization and genetic algorithms.

Flexibility and adaptability to unexpected changes in the environment, along with robustness and economic efficiency, are among the main challenges in the metal forming industry. In this context, RL has shown potential in several subareas: i) pass schedule design to meet quality requirements, ii) free-form metal sheet stamping technology, iii) process variable selection for efficient production while ensuring the material properties of the parts, and iv) determining the combination of elements in an alloy to improve its properties or performance characteristics. The RL applications reviewed in this area, summarized in , have demonstrated their potential for complex tasks through proof-of-concept studies and small experiments. These findings provide a starting point for future research aimed at developing more scalable solutions for larger and more complex environments.

Table 5. Metal forming industry RL applications and algorithms.

Milling Processes

In milling processes, the selection of process parameters and the stabilization of the system has been extensively studied. Moreover, hybrid architectures of RL with other ML technologies have been developed to improve the control of these processes. However, an important concern in milling processes is the occurrence of chatter vibrations, which can negatively affect the final product quality. The occurrence of chatter depends on the process parameters and the dynamics of the system, and it is crucial to detect it as soon as possible.

To maximize productivity and prevent chatter vibration in milling processes, Friedrich, Torzewski, and Verl (Citation2018) applied reinforced k-nearest neighbors (RkNN) for the parameters’ selection during the execution. The algorithm generated the stability lobe diagrams based on the measured data from the process. Validation was done with an environment simulation with analytical benchmark functions.

In addition, Shi et al. (Citation2020) applied an RkNN algorithm for fault diagnosis with the aim of detecting chatter vibrations in these industrial processes. This approach was tested under different cutting conditions with other ML approaches.

Another importantly highlighted particularity of industrial products, and specifically in milling processes, is the demand for increasingly personalized products. Typically, this involves manually adapting production to each customer, increasing final production costs. In this context, to maximize manufacturing KPIs, real-time decisions and adjustments regarding process planning and scheduling must be taken. Moreover, since the environments are unpredictable and dynamic, solutions must be adaptive and flexible.

Mueller-Zhang, Oliveira Antonino, and Kuhn (Citation2021) presented a DQN approach to efficiently schedule and plan productions in milling processes to address changes in the system and the requirement for personalization. The state information for the action selection was the runtime status of products and resources. The agent had to select a service to execute. The evaluation of the proposed solution was done in a virtual aluminum cold milling environment. Future research should consider more complex MDP definitions, modifying the action space definition, to represent a close-to-reality situation. Moreover, benchmarks with current popular control and decision-making approaches could demonstrate the improvements and benefits of applying RL techniques in the field.

Wang et al. (Citation2022) developed two DDQN approaches to control the milling process parameters in order to maximize system efficiency and the quality of the results. They solved a single-objective optimization problem and a multi-objective optimization problem. The first one was used to optimize three internal parameters of a support vector regression considered for surface roughness evaluation based on prediction accuracy. The second one was used to optimize the following machine parameters selection: spindle speed, feed rate, the width of cut, and depth of cut based on the surface roughness evaluation as a quality indicator and the material removal rate as an efficiency indicator. Both models considered the state space as the current parameters’ values and the action space was based on three actions per parameter: increase, decrease, or maintain its setting value between the defined boundaries. The training was done with experimental data and validation with the comparison with other algorithms achieving significantly better results with the DQN approaches. However, the models can be improved in terms of quality, training time, and explainability.

In the sustainable manufacturing field, Lu et al. (Citation2023) proposed a SAC algorithm for improving energy efficiency under changing deformation limits at each pass in a milling process. The environment considered for training was a surrogate model based on an ANN. The definition of the action space was the selection of parameters for each cutting state. Rewards were given considering the energy consumption and penalties associated with machining efficiency. The algorithm proposed was validated against the following alternative solutions: i) by changing the environment (from the surrogate model to an empirical model) and ii) by modifying the selected RL algorithm (from SAC to DDPG). Results showed that the SAC algorithm with the surrogate model environment converged faster and performed better.

Another SAC approach was introduced by Samsonov et al. (Citation2023), aimed at determining the workpiece position and orientation within the workspace of a milling machine. The primary objective was to minimize axis collisions, traveled distances, and accelerations to improve milling efficiency and safety. The proposed solution underwent training and validation in a simulated environment, benchmarked against traditional metaheuristic methods. However, the SAC approach exhibited some limitations compared to metaheuristics in certain scenarios. Notably, the evaluation solely occurs in a simulated environment, lacking real-world validation.

RL approaches, particularly Q-learning methods, are applied in milling processes for planning, scheduling, fault diagnosis, and improving system efficiency (). The works presented in this section have shown promising results. However, the extension of these techniques to more sophisticated environments and problem considerations, such as a more complex action space, represents a significant challenge.

Table 6. Milling processes RL applications and algorithms.

Power Industry

Decision-making and control problems, including energy management, demand response, electricity market, and operational control are crucial research problems in the power system (Zhang, Zhang, and Qiu Citation2019). Achieving each of these objectives contributes to improving the Power Grid’s profitability, which should be maximized while ensuring safety and reliability. Therefore, it is a very powerful field of research that requires efficient and complex decision-making frameworks to overcome uncertainties and challenges in operational conditions. Additionally, this sector is in constant growth due to the increasing energy demand every year. The complexity, uncertainty, and nonlinearity of the power system make it difficult to achieve high-quality and efficient control.

A wide range of methods has been proposed to solve energy and cost minimization problems, including linear programming and dynamic programming, heuristic methods such as Particle Swarm Optimization (PSO), or fuzzy methods that fail to consider online solutions for large-scale, real problems (Mocanu et al. Citation2019). RL has been applied to planning, control, and management under uncertainty with the aim of obtaining a more scalable solution that can ensure power quality, safety, and reliability.

In particular, Rocchetta et al. (Citation2019) have developed a Q-learning approach with DNN to manage the operation and maintenance of Power Grids under uncertainty. The objective of the implementation was to select operational and maintenance actions to maximize revenues and minimize costs. Thus, the cost and incomes related to the DQN actions selection were computed in the reward function. Moreover, other costs, such as the cost of not serving all the demanded energy to some customer, were computed as penalizations in the reward function. Decisions were made based on the degradation mechanism and the setting variables of power sources. Finally, the training and validation was made on simulations.

Purohit et al. (Citation2021) proposed a Q-learning approach with a DNN to control the duty cycle values in a buck power converter to manage the non-linearity. The buck converter was formulated as an MDP where the state space was a discretization of the values for the inductor current and the capacitor voltage. The action space had also been discretized in certain duty cycle values. Finally, the reward function was based on the difference between the desired average output voltage and the achieved one. The system was trained in a simulation environment and validated in an experimental setup. It was benchmarked against a PID controller and a value iteration approach.

Yin, Yu, and Zhou (Citation2018) developed a DQN approach to improve the performance of controllers in a large-scale Power System. In the same domain, the efficiency of Q-learning had been proven before. They proposed a DRL approach to overcome the weaknesses of tabular methods. The reward function considered the performance standard index, the system frequency deviation, and the control errors. Moreover, the performance standard index and the area control errors were provided in the state of the environment to the controller to set the power flow command of the generator. The power flow command of the generation had been discretized for the DQN approach. Training and evaluation were done using a simulator. The proposed approach was compared with PID, Q-learning, and Q()-Learning (Yu et al. Citation2015).

Al-Saffar and Musilek (Citation2019) applied an RL-Monte Carlo Tree Search (MCTS) for the distributed optimal power flow calculation in the electric power industry. The RL approach was used for the selection of distributed energy resource price bids considering the generation output cost that needs to be minimized while ensuring the satisfaction of all the constraints. The power transfer distribution factor was also considered in the state information for the action selection. The control policy trained and evaluated with a simulator was scalable and easily integrated into software for execution.

Predictive maintenance often has the problem of a lack of data in situations of failure or risk. Most of the collected data in the processes are from non-failure operations. Because of this imbalance in the collected data, it is often difficult to train predictive maintenance and fault diagnosis models using supervised learning models. Zhong, Zhang, and Ban (Citation2023) proposed an RL approach based on a DDQN algorithm for fault diagnosis of equipment in nuclear power plants in the cases of imbalanced class data. They used datasets as the MDP environment for training. Given a sample from a dataset, the agent had to select an action. If the selected action corresponded to the diagnosis label from the sample in the dataset, the received reward was positive, and negative otherwise. Higher values were given to minority classes. The DDQN maintenance model was compared with a supervised learning model in different scenarios demonstrating that the RL approach obtained better results in most cases.

Finally, there are several applications in the field of sustainable energy systems. Since renewable energy sources are dependent on weather conditions, their use for electricity generation is variable and unpredictable. The replacement of fossil fuel energy sources with renewable and distributed energies introduces great uncertainty and complexity to the sector to achieve energy efficiency. This requires an understanding of consumption with a deeper vision in order to optimize the allocation of resources in buildings. With this aim, Mocanu et al. (Citation2019) proposed and compared two different RL approaches for smart buildings able to minimize energy consumption and the total cost. A DPG algorithm provided better peak reductions and cost minimizations than DQN algorithms. The training and evaluations were made in a data-driven environment based on a large database. The action space was referred to as the selection of turning on or off an electrical device. This action selection was based on the building’s energy consumption and the price given at every moment in time. To guide the agent’s learning the reward function aggregated the three main components of this problem: the total energy consumption, the total cost, and the electrical device constraints.

Ongoing with the challenge of energy resources management from various renewable sources, Wang et al. (Citation2019) studied a similar problem. They proposed a DQN algorithm to handle the routing energy design under users’ uncertainties and fluctuations in energy generation. The action space was based on the planning of electrical power from different sources. The state space considered for action selection was based on the difference between its supply and its demand. The reward function was defined by aggregating the operating cost, the environmental cost, and the security operation. Finally, the RL approach was validated with simulations.

Renewable energy volatility affects the renewable energy consumption rate. Han et al. (Citation2023) proposed LM-SAC and IL-SAC algorithms based on the Lagrange multiplier method and imitation learning, respectively, to improve the renewable energy consumption rate for the power industry. They used a simulated environment to train the RL agents. The control actions defined were the active power and voltage of generators guided by the operation costs, the load rate, the consumption rate of renewable energy, and constraints to achieve the power balance and prevent undesired situations. They compared the results with other RL algorithms: the traditional SAC, the PPO, and the DDQN. The proposed methods outperformed the others in terms of better robustness and performance.

In the power industry sector, RL has been extensively researched. The papers analyzed are presented in . Planning and control problems have been simulated to prove the efficiency of these methods and their improvements by comparison with more traditional technologies. Valuable results are obtained in this field in addressing uncertainties associated with renewable energy and user demand fluctuations. Furthermore, power network planning becomes exceedingly challenging in complex systems with many buses and generators. In these scenarios, the number of states in the system is very large. Therefore, RL solutions offer an effective means to address these challenges. Although Q-learning methods are the most commonly used algorithms in this field, recent studies (Han et al. Citation2023; Mocanu et al. Citation2019) have shown that Actor-Critic methods outperform Q-learning approaches in sustainable energy consumption planning problems. However, despite much research being conducted in this field, developing a scalable solution for real, nonlinear, complex, and dynamic environments remains a challenge.

Table 7. Power industry RL applications and algorithms.

Application Overview and Analysis

In this section, we present a classification of industry applications based on their types and delve into the specifics of the training environments utilized. Our objective is twofold: first, to identify tasks within industrial environments with the potential for RL application, and second, to assess their level of applicability and transferability to real industrial settings.

The classification of application types and tasks is outlined as follows:

Robotic manipulation: The interaction of a robot with its physical environment for the performance of specific tasks and the manipulation of objects.

Scheduling/routing design: The systematic allocation of resources over time and strategic planning to optimize paths within a system.

Operational performance: The achievement of objectives in a process, considering aspects such as precision, quality, and other indicators of success in task execution.

Production efficiency: The achievement of goals with the least amount of resources possible.

Fault diagnosis: The identification of problems, errors, or malfunctions in a system.

While we have categorized types of applications in RL, it is important to note that multi-objective problems are considered, and as a result, some RL problems may address more than one type of application. For instance, applications falling into both efficiency and performance categories aim to maximize quality while simultaneously optimizing system efficiency (Wang et al. Citation2022). Despite our efforts to classify studies based on their primary objectives, the categorization may not always be absolute.

In the industrial context, an extra challenge lies in developing RL algorithms that not only prove effective in simulated environments during training but also demonstrate robust and efficient generalization to diverse, dynamic, and unpredictable real-world situations. A significant hurdle encountered in dealing with physical environments is the sim-to-real gap (Ranaweera and Mahmoud Citation2023). Zero-shot generalization (ZSG) in DRL becomes particularly relevant when applied to industrial environments facing the sim-to-real gap (Kirk et al. Citation2023) Incorporating ZSG in industrial RL applications has the potential to narrow the sim-to-real gap, enabling models to generalize more effectively across various conditions without an excessive need for real-world data during training.

We indicate whether each reviewed article considers a virtual or simulation environment, if the RL agent is trained and/or validated in a physical environment, and whether solutions are proposed to address the sim-to-real gap.