?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

This review describes recent advances by the authors and others on the topic of incorporating experimental data into molecular simulations through maximum entropy methods. Methods which incorporate experimental data improve accuracy in molecular simulation by minimally modifying the thermodynamic ensemble. This is especially important where force fields are approximate, such as when employing coarse-grain models, or where high accuracy is required, such as when attempting to mimic a multiscale self-assembly process. The authors review here the experiment directed simulation (EDS) and experiment directed metadynamics (EDM) methods that allow matching averages and distributions in simulations, respectively. Important system-specific considerations are discussed such as using enhanced sampling simultaneously, the role of pressure, treating uncertainty, and implementations of these methods. Recent examples of EDS and EDM are reviewed including applications to ab initio molecular dynamics of water, incorporating environmental fluctuations inside of a macromolecular protein complex, improving RNA force fields, and the combination of enhanced sampling with minimal biasing to model peptides

KEYWORDS:

1. Introduction

A common task in molecular simulation is ensuring that observables of the simulation match experimentally measured values. For example, in simulations of protein structure [Citation1] or liquids [Citation2], quantitative agreement with experiments is the standard for assessing correctness of a model. When there is no quantitative agreement, changing the potential energy function or adding additional components to the simulation are possible ways to improve the fit. Making such changes can be an ambiguous and challenging process, especially if the potential energy function has multiple terms that can be modified. Minimal biasing techniques are a class of methods that modify a potential energy function to improve quantitative agreement with experimental values while minimizing the the change in the potential energy function. The definition of ‘minimal’ and the way the potential energy function is modified vary from method to method.

Recent reviews of minimal biasing methods can be found in [Citation3–5]. Table 1 in [Citation4] provides an overview of 28 minimal biasing methods, categorizing them by whether they maximize entropy, maximize parsimony, or use Bayesian inference. These three categories correspond broadly to the criteria used to ensure that the biasing function introduces a minimal change to the potential energy function. This review focuses on two techniques developed by the authors that are categorized as maximum entropy methods: experiment directed simulation [Citation6] (EDS) and experiment directed metadynamics [Citation7] (EDM). EDS is for matching ensemble average scalars and EDM is for matching free energy surfaces (probability distributions of observables).

EDS, like other minimal biasing methods, modifies a potential energy function to change ensemble averages of observables to match a specific value. These observables are typically equivalent to collective variables, but are intended to be only those that area experimentally verifiable quantities. What separates EDS from other methods is that it does not use replicas and can be used to construct a continuous NVE trajectory. For example, the Bayesian landscape tilting method of [Citation8] relies on post-processing so that there cannot be a continuous NVE trajectory. Another example is the replica method of [Citation9] which relies on replica-exchange of biasing forces and thus cannot result in a continuous trajectory. This does not mean EDS is ‘better’; indeed these two methods appear to provide better sampling and scaling than EDS. However, the ability to compute an NVE trajectory allows dynamic observables like hydrogen-bonding lifetimes to be computed. The key results from EDS have been to improve thermodynamic observables and indirectly improve dynamic observables. For example, EDS was recently used to create a state-of-the-art DFT water model that gives near perfect agreement with X-ray scattering results, water diffusivity, and proton-hopping behavior by improving only the water oxygen–oxygen coordination number [Citation10].

EDM is a maximum entropy method that matches ensemble probability distributions, or equivalently free energy surfaces, using prescribed functions. So far, EDM has proved most useful for matching radial distribution functions (RDFs), e.g. to then do coarse-grained modeling. EDM is less often used because it is rare to have experimental data that gives a probability distribution, other than a normal distribution (which is better treated with methods like in [Citation11]). Other groups have since arrived at the same approach as EDM and typically it is now called ‘targeted metadynamics’ because it uses the method of metadynamics to arrive at a target free energy surface [Citation12,Citation13]. EDM is also equivalent to variationally enhanced sampling metadynamics [Citation14], although there the target is a tool to improve sampling, and the biased simulation is not the goal. The name EDM was chosen by the authors because at the time there were new metadynamics methods that could target a collective variable domain [Citation15]. Now the term ‘targeted’ seems to apply exclusively to target distributions, and EDM is probably best described as falling under the umbrella of targeted metadynamics methods.

Here, we review the maximum entropy derivations that underpin both EDS and EDM, describe some of the recent research benefiting from these methods, and discuss implementation details such as understanding the effect of EDS and EDM on the virial and accounting for uncertainty in the experimental data.

2. Theory

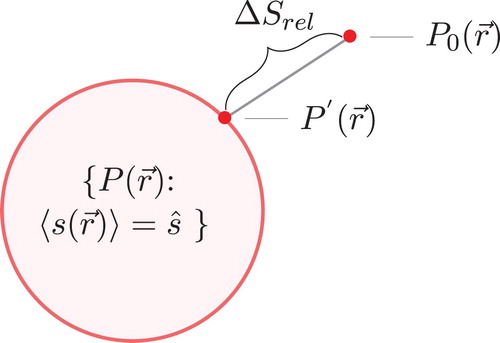

EDS minimally modifies an ensemble so that the ensemble average of some scalar matches a desired value, such as a value obtained from an experiment. The EDS bias is minimal because the resulting biased ensemble maximizes entropy [Citation16,Citation17]. Maximum entropy and minimum relative entropy are equivalent approaches to derive these minimal biasing equations [Citation18]. We will use the minimum relative entropy approach because it has an intuitive interpretation as a distance metric. Figure illustrates how the biased ensemble is one of many choices that matches our constraints, but is as close as possible to the unbiased ensemble as measured via relative entropy.

Figure 1. A schematic of the minimum relative entropy derivation. is the unbiased probability distribution from the unbiased potential energy. We are finding a biased probability distribution,

, that is consistent with Equation (Equation2

(2)

(2) ). There is a hypersurface of possible such probability distributions. With the condition that we minimize relative entropy, or ‘distance’ in this schematic, we find a unique point in the hypersurface

.

Consider an unbiased potential energy function, , which has a probability distribution of

under the NVT ensemble, following the Boltzmann distribution:

.

is the set of coordinate vectors of the N particles of the ensemble and

. k is the Boltzmann constant and T is absolute temperature. We would like to find a biased ensemble

which is as similar as possible to the unbiased ensemble. Similarity can be defined via the relative entropy:

(1)

(1) where

is the biased ensemble probability distribution, and the integral is taken over all coordinates. Having a lower

means that the biased and unbiased ensembles are more similar. The biased ensemble should also have an observable average that matches a target value, which could be obtained from an experiment. This constraint is represented as

(2)

(2) where

is an instantaneous value for our observable (collective variable) which we are matching to

, the desired scalar value. This is sometimes called a forward model and depends only on positions. We will relax this assumption below.

These equations represent (1) a constraint (to have our average simulation value match the target ), and (2) a scalar to minimize (the relative entropy). When these conditions are present, we can find the optimal value via the method of Lagrange multipliers. The Lagrange funtion is

(3)

(3)

Equation (Equation3(3)

(3) ) can be minimized by taking the functional derivative equal to zero:

= 0. Solving for

gives this expression for the biased ensemble:

(4)

(4) where

is a normalization constant. This gives an expression for the biased potential energy as

. This result shows that if the bias is linear in the instantaneous observable, the ensemble is minimally biased. This derivation can be repeated for multiple dimensions [Citation16] and for functions instead of scalars [Citation7]. The general result is

(5)

(5) where i is the index of ensemble averages that are matched to a desired value and j is the index of free energy surfaces that are matched to desired functions.

is a bias added that depends on

, another instantaneous observable, and causes the biased ensemble to match a specific distribution in the free energy surfaces

. Specifically,

(6)

(6) where δ is the Dirac delta function and

is a desired probability distribution for

(i.e.

).

could be obtained for example from a scattering or FRET experiment [Citation19].

The derivation above gives the form of the biased potential energy that is minimally biased, but it does not enable calculation of the Lagrange multipliers. That is the purpose of the EDS and EDM methods. EDS and EDM are time-dependent methods that change the potential energy of a simulation to arrive at the bias in Equation (Equation5(5)

(5) ). EDS is intended to be used in a two-step process: finding the Lagrange multiplier (adaptive) and then running a standard MD simulation using the modified force field given by Equation (Equation5

(5)

(5) ).

During the adaptive phase of EDS, the Lagrange multipliers are called coupling constants to distinguish from the time-independent Lagrange multipliers. These are indicated as where τ is a discrete step index. The Lagrange multipliers are set to be the average of

.

is defined as

(7)

(7)

(8)

(8) where w is an arbitrary constant used to ensure unit homogeneity,

is the ensemble average between step

and τ, and

is

(9)

(9) where A is a user-defined constant that controls the size of the first step. The point of

is to reduce the size of the steps over time. Note that because

is in the sum,

. Typically, the gradients should be clipped for stability and A is a natural upper/lower bound for clipping it. Thus, a user of this method must choose A and the time between updates. This update procedure is derived in [Citation6] and is based on per-coordinate infinite horizon stochastic gradient descent [Citation20]. Hocky et al. [Citation21] recently found that Equation (Equation8

(8)

(8) ) can be modified to use covariance in multiple dimensions to improve convergence and that replacing Equation (Equation7

(7)

(7) ) with Levenberg–Marquardt optimization further improves convergence.

The EDM method finds the bias function in Equation (Equation5

(5)

(5) ). For compactness, we will now omit the dependence on

. Unlike EDS, EDM consists of a single phase where

changes less over time. The update equation is

(10)

(10) where

is the value of

at time τ, G is a kernel function (e.g. Gaussian),

is the probability at position

from the target probability distribution and

is a function which controls convergence. Following the above update rule causes the simulation to converge to the following distributions [Citation7,Citation22], depending on the choice of θ:

(11)

(11) where

is the ‘tempering factor’ [Citation7],

is the marginal unbiased distribution for

, and

is the total or average of all previous

. The first condition,

, is similar to normal metadynamics; it reaches a distribution similar to

but may oscillate due to a lack of dampening in the update size. Condition two, called globally tempered, converges correctly to

. The last condition, locally tempered, converges to an adjustable mixture of the unbiased and target distribution. EDM is traditionally implemented as globally tempered, so that the final distribution is indeed the target. The first condition is good for tuning parameters, since it makes progress more quickly. The final condition, locally tempered, allows a pseudo-Bayesian tuning of prior belief in the unbiased ensemble. It is pseudo-Bayesian because the ratio of influence from the prior belief to evidence is computed, not set, in Bayesian modeling.

An important consideration of both EDS and EDM is that they add potential energy during the update step which quickly becomes kinetic energy. Thus, it is important that the thermostat used to maintain constant temperature in the NVT can dissipate energy faster than it is added by the update steps. This is necessary during the adaptive phase of EDS and EDM prior to convergence.

2.1. Treating uncertainty in experimental data

One complication of minimal biasing methods is uncertainty in the experimental data. For example, the experimental data could be the radius of gyration of a polymer with a reported uncertainty in the mean of 5 nm. Should the radius of gyration be matched exactly, or only to within 5 nm? It is possible to stop the adaptive phase of EDS early, for example when the average is within the uncertainty of the experimental data. An early stop is ad-hoc and not part of the maximum entropy derivation unlike other methods which are built to address uncertainty [Citation11,Citation23].

Cesari et al. [Citation24] proposed a modification to the maximum entropy derivation above to address experimental uncertainty. Instead of the constraint in Equation (Equation2(2)

(2) ), this constraint is used

(12)

(12) where ε is an auxiliary variable that allows deviations in the average of

and

is the probability distribution that will be solved after maximizing entropy. ε is a random variable from a prior distribution

that describes the uncertainty in the experimental data. For example,

could be a normal distribution or a Laplace distribution. Cesari et al. [Citation24] show that

. This leads to a different update step of

(13)

(13) where

is the analytic posterior of ε:

(14)

(14) Returning to the radius of gyration example above, if the uncertainty,

, is assumed to be a normal distribution normal with

nm, then

. This new update-step can be used to rigorously include experimental uncertainty in to the EDS method.

EDM is able to tune the relative importance of the target distribution and the unbiased ensemble with the locally tempered variant in Equation (Equation11(11)

(11) ). This could be used to match intuition. For example, if you believe that the target distribution which comes from experimental data is twice as accurate as the molecular dynamics simulation, you could choose

giving about twice as much weight to the target distribution.

3. Applications of EDS and EDM

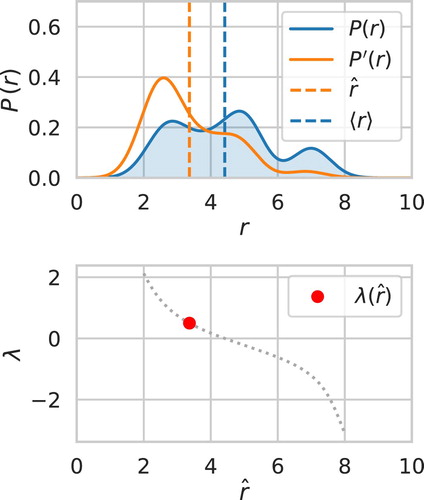

A model 1-D system is shown in Figure as a probability distribution function, . EDS is being used to modify the average value of r to match a new set point,

. EDS adds a linear bias, whose strength is indicated with the red dot, to create the biased PDF

according to Equation (Equation5

(5)

(5) ). Notice how the features of

are mostly maintained in

. The bottom plot shows how each value of

corresponds to a unique biasing strength.

Figure 2. A 1-D EDS calculation where the mean of a probability distribution function (PDF) is being biased to match the dash vertical orange line. The top plot shows the unbiased and biased PDFs. The biased PDF shows as much of the shape of the unbiased PDF as possible while matching the new biased mean. The bottom shows the Lagrange multipliers that give all possible biased means. The red dot indicates the current Lagrange multiplier for the biased PDF. There is a unique λ for all possible biased means, as discussed in the Theory section.

A more sophisticated system which demonstrates the capabilities of improving dynamic observables is the recent work on EDS ab initio molecular dynamics (AIMD) simulations of water [Citation10]. DFT water with the BLYP exchange functional poorly represents water structure as seen in Figure (black line). It is over-structured and has water self-diffusion coefficients that are too high (0.005–0.005 Å2 / ps) [Citation25] compared with the experimental value of 0.23 Å2/ ps [Citation26]. EDS was used to improve the coordination number of the oxygen–oxygen (–

) water molecules and resulted in good agreement with experimental radial distribution function (Figure ). A number of other observables improved as well, including RDFs and the water self-diffusion coefficient, which increased to 0.06

Å2/ps. White et al. [Citation10] further demonstrated that the EDS bias could be transferred to excess proton-water simulations and that when combined with DFT dispersion corrections [Citation28], the agreement further improves. The accuracy improvement with the dispersion corrections shows that EDS is general in its applicability to DFT methods.

Figure 3. Water oxygen–oxygen radial distribution function from ab inito molecular dynamics at 300 K NVT and experiments from [Citation27]. BLYP and BLYP-D3 are from DFT with and without dispersion corrections with the BLYP exchange functional. Note that BLYP-D3 is typically done at 330 K, which gives much better agreement [Citation25]. See [Citation10] for complete system details. The BYLP-EDS line is DFT with EDS bias added to the water oxygen–oxygen coordination number. EDS shows near quantitative agreement with experiment. Reproduced from White AD, Knight C, Hocky GM, et al. Communication: improved ab initio molecular dynamics by minimally biasing with experimental data. J Chem Phys. 2017;146(4), ISSN 00219606., with the permission of AIP Publishing.

![Figure 3. Water oxygen–oxygen radial distribution function from ab inito molecular dynamics at 300 K NVT and experiments from [Citation27]. BLYP and BLYP-D3 are from DFT with and without dispersion corrections with the BLYP exchange functional. Note that BLYP-D3 is typically done at 330 K, which gives much better agreement [Citation25]. See [Citation10] for complete system details. The BYLP-EDS line is DFT with EDS bias added to the water oxygen–oxygen coordination number. EDS shows near quantitative agreement with experiment. Reproduced from White AD, Knight C, Hocky GM, et al. Communication: improved ab initio molecular dynamics by minimally biasing with experimental data. J Chem Phys. 2017;146(4), ISSN 00219606., with the permission of AIP Publishing.](/cms/asset/e0b77c73-41f0-4999-8e8b-7f10035970ad/gmos_a_1608988_f0003_oc.jpg)

Cortina et al. [Citation29] used EDS to study the KPC-2 carbapenemase enzyme, which is responsible for drug resistance in the majority of carbapenem-resistant Gram-negative bacteria [Citation30]. They used EDS to modify protein-protein distances while doing a committor analysis [Citation31] to identify transition states in the carbapenem-enzyme complex. Cesari et al. [Citation24] used a method similar to EDS (modified update step) to improve agreement with experimental NMR3J coupling data for RNA oligonucleotides. After biasing, they used the simulation results to improve the underlying Amber force field and thus create a transferrable model. Cesari et al. [Citation24] also developed a novel approach to account for experimental data uncertainty by adding auxiliary variables to the EDS update step.

EDM has been used less than EDS due to the rarity of experimental data giving an exact probability distribution. One example explored in the original EDM paper was to construct a mean field bias that mimics an alanine dipeptide being in the backbone of a protein [Citation7]. This was done by computing a potential of mean force (PMF) for dihedral angles from PDB crystallography data for the alanine-alanine sequence in protein structures. This corresponds to a probability distribution and a molecular dynamics simulation of alanine dipeptide was done with its

dihedral angles biased to match the desired values. The result was a molecular dynamics simulation where the dipeptide was biased to behave as part of a longer protein structure. A similar approach was later used by [Citation21] with EDS to built a pseudo-mean field model for actin filaments.

Another example of EDM can be found in [Citation13] who used it (called targeted metadynamics) to improve agreement of RNA oligonucleotides' dihedral angles with PDB crystallography data. They similarly built dihedral angle PMFs using the crystallography data and biased molecular dynamics simulations of RNA to adopt the dihedral angle probability distribution functions. Gil-Ley et al. [Citation13] then took the converged EDM bias and transferred it to a different simulation of an RNA tetramer and found little to no improvement of agreement with the crystallography data. This may have been due to the underlying assumption that a PMF derived from crystallographic data is not representative of the room temperature ensemble. Nevertheless, EDM is a promising technique for incorporating experimental data as a type of auxiliary potential energy function in simulations.

3.1. Coarse-grained modeling

EDS and EDM are well-suited for coarse-grain (CG) modeling because they guarantee that a CG model matches experimental data. Dannenhoffer-Lafage et al. [Citation32] showed that EDS can be applied before force-matching (a CG method) and the improvements of agreement with experimental data is maintained. Dannenhoffer-Lafage et al. [Citation32] began with all atom molecular dynamics simulations of Ethylene carbonate with the force field from [Citation33], which is known to not match center-of-mass coordination numbers from more accurate DFT calculations from [Citation34]. Dannenhoffer-Lafage et al. [Citation32] biased the all-atom simulations with EDS to match these known coordination numbers. They then used force-matching to create multiple CG models with one, two, and three site CG beads, using either biased all-atom or unbiased all-atom simulation data. The biased all-atom simulations showed better agreement with coordination numbers, indicating that the improvement in all-atom simulations translates to improvement in the CG model with EDS. Of course, EDS could be used directly on the CG model but that can lead to complications in how observables are calculated on CG models [Citation35].

EDS has been applied to CG simulations of the G- and F-actin proteins as monomers and trimers [Citation21]. Actin proteins are globular proteins that can polymerize into long semi-flexible filaments. Their hypothesis was that they could model a subsystem from within the polymerized actin structure by incorporating information about structural fluctuations from simulations of the larger system via EDS. By biasing the first and second moment of two important collective variables in actin monomer structure, they were able to observe filament-like conformations, and importantly, the fluctuations of these observables for an actin monomer, even in the system which contained only a single monomer solvated in water. Hocky et al. [Citation21] also studied a number of questions about the EDS method in their system and found the following conclusions: (1) the linear term of EDS better matches target values and maintains system fluctuations than harmonic biases; (2) replacing the variance term in Equation (Equation8(8)

(8) ) with a covariance matrix for all biased dimensions has faster convergence; (3) Levenberg–Marquardt [Citation36] converges faster than stochastic gradient descent. This paper also derived a simple equation that can be used to guess the value of λ without doing a stochastic minimization (whose accuracy depends on the distance of the target observables from the unbiased observables), and hence can serve as a good initial guess for starting an EDS simulation.

3.2. Enhanced sampling

EDM may require enhanced sampling if there are slow degrees of freedom orthogonal to the biased collective variable. This is most conveniently treated via the extensive literature on enhanced sampling with metadynamics, since EDM is a type of metadynamics and typically implemented within a metadynamics code. EDS is not as simple because it requires that the term in Equation (Equation8

(8)

(8) ) be taken over an NVT ensemble. This limits the enhanced sampling techniques to those that still give correct NVT ensemble averages. One example is parallel-tempering replica-exchange. It is possible to use metadynamics if an appropriate estimator [Citation37] is done to compute the averages but this has not been explored in practice.

Amirkulova and White [Citation38] demonstrated the use of enhanced sampling and EDS with the parallel-tempering well-tempered ensemble (PT-WTE) [Citation39]. The PT-WTE method is a enhancement of enhanced parallel-tempering replica-exchange that improves exchange rates and reduces the required number of replicas [Citation40]. PT-WTE satisfies the observable that the ensemble averages, , can be computed during the course of the simulation because PT-WTE only changes the magnitude of potential energy fluctuations, not their expectations. One apparent drawback is that the method loses the one-replica observable of EDS that allows computation of dynamic observables. However, this only applies to the adaptive phase. During the fixed-bias second phase of EDS, one replica can again be used to allow analysis of dynamic observables.

Amirkulova and White [Citation38] studied the GYG peptide with the EDS plus enhanced sampling approach. Eight simulations were conducted, each with 1, 8, or 16 replicas. The simulations had EDS, PT-WTE, and/or parallel-tempering. EDS was used to bias proton chemical shifts to improve agreement with experimental NMR data. The simulation results showed that PT-WTE improves sampling in the EDS method and does not change agreement with experimental data. PT-WTE also converged the EDS bias with fewer replicas than the PT method. One consideration in all EDS simulations is the effect on unbiased observables. The Ramachandran plots of the simulations with and without EDS bias are compared in Figure . When EDS and enhanced sampling are used (Figure (a)), the simulation explores a larger region of configurational space relative to the control simulation (Figure (b)). Also, the global minimum changes when using EDS in Figure , bringing it closer to what was found in [Citation41] for the GYG sequence.

Figure 4. Free energy surface of GYG peptide along dihedral angles (Ramachandran plot) from [Citation38]. (a) EDS and enhanced sampling with PT-WTE and (b) no EDS and no enhanced sampling. The difference between panels (a) and (b) shows that EDS changes the global free energy minimum, which better matches data from [Citation41], and PT-WTE improves sampling based on explored regions. Republished with permission of World Scientific Publishing Co., Inc., from Combining enhanced sampling with experiment-directed simulation of the GYG peptide, Amirkulova DA and White AD, 17, 3 and 2018; permission conveyed through Copyright Clearance Center, Inc.

![Figure 4. Free energy surface of GYG peptide along dihedral angles (Ramachandran plot) from [Citation38]. (a) EDS and enhanced sampling with PT-WTE and (b) no EDS and no enhanced sampling. The difference between panels (a) and (b) shows that EDS changes the global free energy minimum, which better matches data from [Citation41], and PT-WTE improves sampling based on explored regions. Republished with permission of World Scientific Publishing Co., Inc., from Combining enhanced sampling with experiment-directed simulation of the GYG peptide, Amirkulova DA and White AD, 17, 3 and 2018; permission conveyed through Copyright Clearance Center, Inc.](/cms/asset/dd544e26-9572-4893-8137-71737efe6efd/gmos_a_1608988_f0004_oc.jpg)

4. The role of pressure

Thus far, we have discussed NVT and NVE ensembles. EDM and EDS add new forces to the simulation and affect the system virial and pressure. This can lead to undesirable density changes when the bias is subsequently used in an NPT simulation. It may be possible to rectify this change in pressure, and thus density, by adding a further constraint that the contribution to the pressure from potential energy be unchanged while still maximizing entropy. As shown in the Appendix, this leads to an unsolvable equation or requires changing changing density to constrain pressure. One intuitive reason for this is that the Clausius virial theorem requires the average virial be proportional to average temperature: . Therefore, if we tried to bias an ensemble with fixed density so that its average virial is constrained to a new value, then the average temperature would change, violating our constrained average temperature.

One way around this challenge lies in the correlation between biased observables in EDS. When biasing multiple observables which are correlated, there is in fact no longer a unique set of Lagrange multipliers [Citation16]. These extra degrees of freedom in the choice of Lagrange multipliers that maximize Equation (Equation3(3)

(3) ) enable us to choose the Lagrange multipliers that minimally change the virial. Equation (Equation8

(8)

(8) ) can be modified so that our coupling constants minimize the additional pressure which they exert [Citation42]:

(15)

(15)

(16)

(16) where ν is a parameter that controls the importance of minimizing the virial,

is the change in the system virial pressure due to the EDS bias,

is the partial derivative of the observable with respect to one component of the triclinic box matrix, and

can be computed from the adjugate of the triclinic box matrix. When biasing multiple observables,

should be the total over all observables.

is in units of

per energy, so ν must be in units of volume squared times

per energy squared. Practically, we can choose a unitless

, which is defined from

(17)

(17)

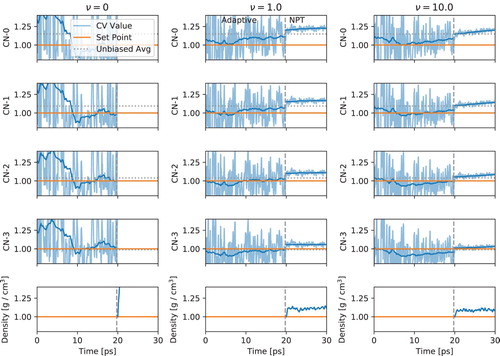

Equation (Equation15(15)

(15) ) was used in a molecular dynamics simulation of 128 modified SPC/E water molecules. This modified SPC/E water has increased charges (

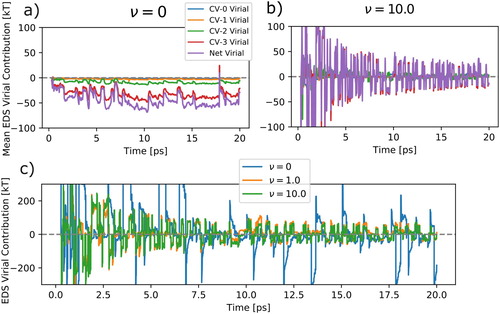

) to distort its coordination number. EDS was then applied to correct the coordination number using experimentally derived coordination numbers from [Citation27] with varying strengths of the virial correction. The coordination number moment definition may be found in [Citation6]. Figure shows that coordination number and its moments are still correctly biased with the virial term. The EDS parameters were a range (A) of 50 kj/mol, a period of 25 fs, and the Levenberg–Marquardt optimization procedure. A Nose-Hoover thermostat with a time constant of 25 fs and a timestep of 0.5 fs were used for the molecular dynamics in the LAMMPS simulation engine [Citation43]. The NPT barostat was Parrinello-Rahman.

Figure 5. EDS applied to water coordination number moments 0 through 3 in molecular dynamics simulation of over-structured SCP/E water model. The columns show increasing strength of virial minimization. Each plot is collective variable scaled by its set-point. The vertical dashed line spearates the NVT adaptive simulation and a fixed bias NPT phase. The results show that increasing the strength of the virial minimization actually improves convergence by reducing large-magnitude changes in biasing force. Without virial minimization, EDS can produce nonphysical densities when the NVT bias is transferred to NPT. The change in density moves the CVs. For , this meant CV values beyond the y-limits of the plot.

Figure shows the effect of the virial penalty term on the EDS virial contribution. The virial contribution plotted and computed here is the mean per-particle virial energy contribution. That is which removes the effect of particle number and energy scale. Panel (a) shows the cumulative mean virial contribution of each collective variable with

(no virial minimization). The net average virial contribution is 39.2 kT. Panel (b) shows

and there is a much lower contribution of 5.02 kT. Panel (c) compares the instantaneous net virial contribution of three different

values. The lack of virial minimization shows large swings in the virial contribution, even after EDS has converged longer timesteps.

Figure 6. The EDS contribution to virial as EDS is applied to water coordination number moments 0 through 3 in molecular dynamics simulation of over-structured SCP/E water model. Panels (a) and (b) compare the cumulative mean virial contribution in a normal EDS and EDS with virial minimization strength of broken out by individual CV. There is lower per collective variable mean virial contribution with virial minimization. Panel (c) shows the effect of different

values on the instantaneous net virial contribution from EDS.

Figure shows the impact on the convergence of the biased coordination number and its moments with the virial minimizing terms. Interestingly, adding a virial minimization term actually improves convergence. This is due to the well-known effect of regularization, which improves convergence in optimization [Citation44]. The virial minimization term is proportional to , so it brings down the magnitude of

. This prevents the large swings seen in the

system. Thus, adding the virial minimization term not only reduces the virial contribution but can improve convergence by minimizing magnitudes of the coupling constants. The true test can be seen in the NPT portion past 20ps where the bias is fixed but the box dimensions are free. EDS with

gives poor densities in NPT with a bias computed in NVT. The virial minimization reduces the change in density with a trade-off in match to the bias set-points. Virial minimization is in general recommended due to its enhanced convergence and better representation of virial forces.

5. Implementations

There are three implementations of EDS available: Colvars [Citation45], Plumed1.3 [Citation46], and Plumed2 [Citation47]. The Colvars and Plumed1.3 implementations match the original EDS manuscript. The Plumed2 implementation is actively maintained as a plugin [Citation47] and has features from more recent EDS articles [Citation21]. For example, the covariance term in Equation (Equation8(8)

(8) ) can be computed using the full sample covariance matrix or the sample variance. The update steps can also be computed using the Levenberg–Marquardt method [Citation21,Citation36], instead of Equation (Equation7

(7)

(7) ). The Plumed2 implementation is recommended.

EDM is implemented in Plumed2 as well, under the ‘targeted metadynamics’ keyword within the metadynamics biasing class. One of the common use-cases for EDM is to bias RDFs, which is not exactly a collective variable. RDFs are ‘function collective variables’ in the sense that there is a distribution at each timestep. Thus the bias update equation, shown in Equation (Equation10(10)

(10) ), applies to each observed point in the RDF at each step (see [Citation7] for equations). This can lead to thousands of updates per timestep on even small systems. To improve performance and scaling, we have created an implementation in LAMMPS that is more tightly integrated with the simulation engine than Plumed2 [Citation7]. Note that the addition of hills is parallelized here, which is unusual and enables the scaling as a function of particle size. Hill addition is 90% of the CPU utilization. This was the implementation used in [Citation7] for an ethylene carbonate electrolyte simulation and Lennard-Jones benchmark systems.

6. Conclusions

Minimal biasing techniques are an emerging area that improve the accuracy of molecular simulation and better utilize comparable experimental data. There are many proposed methods for minimal biasing [Citation3–5]. EDS and EDM are two maximum entropy techniques developed by the authors that minimally bias an ensemble so that average observables or probability distributions of observables match set values. EDS and EDM both converge the potential energy of a simulation to Equation (Equation5(5)

(5) ), allowing a subsequent NVE simulation with fixed potential energy in a single replica so that dynamic observables can be computed. EDS and EDM can bias multiple observables simultaneously and be combined with enhanced sampling methods. It is possible to treat uncertainty in experimental data using methods from [Citation24]. Explicitly setting pressure is not possible in general for maximum entropy biasing as shown in this work. However, it is possible to minimize the change to pressure when biasing multiple collective variables in EDS. This minimization can actually improve convergence of EDS by acting as a regularization. A variety of example systems have been presented here and there are implementations available in most simulation engines for both EDS and EDM.

Acknowledgments

The authors thank Prof. Pengfei Huo for reviewing the appendix mathematics, Rainier Barrett for help preparing the manuscript and Prof. Glen Hocky for assistance in surveying the literature and preparing the manuscript.

Disclosure statement

No potential conflict of interest was reported by the authors..

Additional information

Funding

References

- Lindorff-Larsen K, Piana S, Palmo K, et al. Improved side-chain torsion potentials for the amber ff99SB protein force field. Proteins. 2010;78(8):1950–1958. ISSN 08873585.

- Shivakumar D, Williams J, Wu Y, et al. Prediction of absolute solvation free energies using molecular dynamics free energy perturbation and the opls force field. J Chem Theory Comput. 2010;6(5):1509–1519. ISSN 15499626. doi: 10.1021/ct900587b

- Sormanni P, Piovesan D, Heller GT, et al. Simultaneous quantification of protein order and disorder. Nat Chem Biol. 2017;13(4):339–342. ISSN 1552-4450. doi: 10.1038/nchembio.2331

- Bonomi M, THeller G, Camilloni C, et al. Principles of protein structural ensemble determination. Curr Opin Struct Biol. 2017;42:106–116. ISSN 1879033X. doi: 10.1016/j.sbi.2016.12.004

- Olsson S, Frellsen J, Boomsma W, et al. Inference of structure ensembles of flexible biomolecules from sparse, averaged data. PLoS ONE. 2013;8(11):e79439. ISSN 1932-6203. doi: 10.1371/journal.pone.0079439

- White AD, Voth GA. Efficient and minimal method to bias molecular simulations with experimental data. J Chem Theory Comput. 2014;10(8):3023–3030. doi: 10.1021/ct500320c

- White A, Dama J, Voth GA. Designing free energy surfaces that match experimental data with metadynamics. J Chem Theory Comput. 2015112451–2460. ISSN 1549-9618. doi: 10.1021/acs.jctc.5b00178

- Beauchamp KA, Pande VS, Das R. Bayesian energy landscape tilting: towards concordant models of molecular ensembles. Biophys J. 2014;106(6):1381–1390. ISSN 0006-3495. doi: 10.1016/j.bpj.2014.02.009

- Lindorff-Larsen K, Best RB, DePristo MA, et al. Simultaneous determination of protein structure and dynamics. Nature. 2005;433(7022):128–132. ISSN 0028-0836. doi: 10.1038/nature03199

- White AD, Knight C, Hocky GM, et al. Communication: improved ab initio molecular dynamics by minimally biasing with experimental data. J Chem Phys. 2017;146(4), ISSN 00219606.

- Hummer G, K öfinger J. Bayesian ensemble refinement by replica simulations and reweighting. J Chem Phys. 2015;143(24):243150. ISSN 00219606. doi: 10.1063/1.4937786

- Marinelli F, Faraldo-Gómez J. Ensemble-biased metadynamics: a molecular simulation method to sample experimental distributions. Biophys J. 2015;108(12):2779–2782. ISSN 15420086. doi: 10.1016/j.bpj.2015.05.024

- Gil-Ley A, Bottaro S, Bussi G. Empirical corrections to the amber RNA force field with target metadynamics. J Chem Theory Comput. 2016;12(6):2790–2798. ISSN 15499626. doi: 10.1021/acs.jctc.6b00299

- Valsson O, Parrinello M. Variational approach to enhanced sampling and free energy calculations. Phys Rev Lett. 2014;113(9):090601. doi: 10.1103/PhysRevLett.113.090601

- Dama JF, Hocky GM, Sun R, et al. Exploring valleys without climbing every peak: more efficient and forgiving metabasin metadynamics via robust on-the-fly bias domain restriction. J Chem Theory Comput. 2015;11(12):5638–5650. ISSN 15499626. doi: 10.1021/acs.jctc.5b00907

- Pitera JW, Chodera JD. On the use of experimental observations to bias simulated ensembles. J Chem Theory Comput. 2012;8(10):3445–3451. ISSN 1549-9618. doi: 10.1021/ct300112v

- Jaynes ET. Information theory and statistical mechanics. Phys Rev. 1957;106(4):620–630. doi: 10.1103/PhysRev.106.620

- Roux B, Weare J. On the statistical equivalence of restrained-ensemble simulations with the maximum entropy method. J Chem Phys. 2013;138(8):084107 ISSN 00219606. doi: 10.1063/1.4792208

- Boura E, Rozycki B, Herrick DZ, et al. Solution structure of the ESCRT-I complex by small-angle X-ray scattering, EPR, and FRET spectroscopy. Proc Natl Acad Sci. 2011;108(23):9437–9442. ISSN 0027-8424. doi: 10.1073/pnas.1101763108

- McMahan HB, Streeter M. Adaptive bound optimization for online convex optimization. arXiv. 2010; 1–19. ISSN 09505849.

- Hocky GM, Dannenhoffer-Lafage T, Voth GA. Coarse-grained directed simulation. J Chem Theory Comput. 2017;13(9):4593–4603. ISSN 15499626. doi: 10.1021/acs.jctc.7b00690

- Dama JF, Parrinello M, Voth GA. Well-tempered metadynamics converges asymptotically. Phys Rev Lett. 2014;112(24):240602. ISSN 0031-9007. doi: 10.1103/PhysRevLett.112.240602

- Brookes DH, Head-Gordon T. Experimental inferential structure determination of ensembles for intrinsically disordered proteins. J Am Chem Soc. 2016;138(13):4530–4538. ISSN 15205126. doi: 10.1021/jacs.6b00351

- Cesari A, Gil-Ley A, Bussi G. Combining simulations and solution experiments as a paradigm for RNA force field refinement. J Chem Theory Comput. 2016;12(12):6192–6200. ISSN 15499626. doi: 10.1021/acs.jctc.6b00944

- Steve Tse YL, Knight C, Voth GA. An analysis of hydrated proton diffusion in ab initio molecular dynamics. J Chem Phys. 2015;142(1, ISSN 00219606.

- Krynicki K, Green CD, Sawyer DW. Pressure and temperature dependence of self-diffusion in water. Faraday Discuss Chem Soc. 1978;66(0):199–208. doi: 10.1039/dc9786600199

- Skinner LB, Huang C, Schlesinger D, et al. Benchmark oxygen–oxygen pair-distribution function of ambient water from X-ray diffraction measurements with a wide Q-range. J Chem Phys. 2013;138(7), ISSN 00219606. doi: 10.1063/1.4790861

- Sitarz M, Wirth-Dzieciolowska E, Demant P. Loss of heterozygosity on chromosome 5 in vicinity of the telomere in γ-radiation-induced thymic lymphomas in mice. Neoplasma. 2000;47(3):148–150. ISSN 00282685.

- Cortina GA, Hays JM, Kasson PM. Conformational intermediate that controls KPC-2 catalysis and beta-Lactam drug resistance. ACS Catal. 2018;8(4):2741–2747. ISSN 21555435. doi: 10.1021/acscatal.7b03832

- Pemberton OA, Zhang X, Chen Y. Molecular basis of substrate recognition and product release by the klebsiella pneumoniae carbapenemase (KPC-2). J Med Chem. 2017;60(8):3525–3530. doi: 10.1021/acs.jmedchem.7b00158

- Du R, Pande VS, Grosberg AY, et al. On the transition coordinate for protein folding. J Chem Phys. 1998;108(1):334–350. doi: 10.1063/1.475393

- Dannenhoffer-Lafage T, White AD, Voth GA. A direct method for incorporating experimental data into multiscale coarse-grained models. J Chem Theory Comput. 2016;12(5):2144–2153. ISSN 1549-9626. doi: 10.1021/acs.jctc.6b00043

- Masia M, Probst M, Rey R. Ethylene carbonate-Li+: a theoretical study of structural and vibrational properties in gas and liquid phases. J Phys Chem B. 2004;108(6):2016–2027. ISSN 1520-6106. doi: 10.1021/jp036673w

- Borodin O, Smith GD. Quantum chemistry and molecular dynamics simulation study of dimethyl carbonate: ethylene carbonate electrolytes doped with liPF6. J Phys Chem B. 2009;113(6):1763–1776. ISSN 1520-6106. doi: 10.1021/jp809614h

- Wagner JW, Dama JF, Durumeric AEP, et al. On the representability problem and the physical meaning of coarse-grained models. J Chem Phys. 2016;145(4, ISSN 00219606. doi: 10.1063/1.4959168

- Stinis P. A maximum likelihood algorithm for the estimation and renormalization of exponential densities. J Comput Phys. 2005;208(2):691–703. ISSN 00219991. doi: 10.1016/j.jcp.2005.03.001

- Tiwary P, Parrinello M. A time-independent free energy estimator for metadynamics. J Phys Chem B. 2015;119(3):736–742. ISSN 1520-6106. doi: 10.1021/jp504920s

- Amirkulova DB, White AD. Combining enhanced sampling with experiment-directed simulation of the GYG peptide. J Theor Comput Chem. 2018;17(03):1840007. ISSN 0219-6336. doi: 10.1142/S0219633618400072

- Bonomi M, Parrinello M. Enhanced sampling in the well-tempered ensemble. Phys Rev Lett. 2010;104(19):1–4. ISSN 00319007. doi: 10.1103/PhysRevLett.104.190601

- Deighan M, Bonomi M, Pfaendtner J. Efficient simulation of explicitly solvated proteins in the well-tempered ensemble. J Chem Theory Comput. 2012;8(7):2189–2192. ISSN 15499618. doi: 10.1021/ct300297t

- Ting D, Wang G, Shapovalov M, et al. Neighbor-dependent Ramachandran probability distributions of amino acids developed from a hierarchical dirichlet process model. PLOS Comput Biol. 2010;6(4). ISSN 1553734X. doi: 10.1371/journal.pcbi.1000763

- Louwerse MJ, Baerends EJ. Calculation of pressure in case of periodic boundary conditions. Chem Phys Lett. 2006;421(1–3):138–141. ISSN 00092614. doi: 10.1016/j.cplett.2006.01.087

- Plimpton S. Fast parallel algorithms for short-range molecular dynamics. J Comput Phys. 1995;117(1):1–19. ISSN 0021-9991. doi: 10.1006/jcph.1995.1039

- Scholkopf B, Smola AJ. Learning with kernels: support vector machines, regularization, optimization, and beyond. Cambridge (MA): MIT Press; 2001.

- Fiorin G, Klein ML, Hénin J. Using collective variables to drive molecular dynamics simulations. Mol Phys. 2013;111(22–23):3345–3362. doi: 10.1080/00268976.2013.813594

- Bonomi M, Branduardi D, Bussi G, et al. A portable plugin for free-energy calculations with molecular dynamics. Comput Phys Commun. 2009;180(10):1961–1972. ISSN 00104655. doi: 10.1016/j.cpc.2009.05.011

- Tribello GA, Bonomi M, Branduardi D, et al. New feathers for an old bird. Comput Phys Commun. 2014;185(2):604–613. ISSN 0010-4655. doi: 10.1016/j.cpc.2013.09.018

Appendix Minimal biasing pressure derivation

We would like to constrain the average contribution to virial pressure from potential energy while minimizing relative entropy in a biased ensemble. The goal is to modify the potential energy while keeping temperature, number of particles and volume the same. We define the virial contribution from potential energy to be where V is volume and

is the potential energy of our biased ensemble. We can rewrite this as

(A1)

(A1) where V is volume and

. The constraints for this ensemble are

(A2)

(A2) which enforces normalization and to set the average

to be equal to the scalar

:

(A3)

(A3) where we have taken the

term, which describes change in potential energy with fixed atom positions, to be zero. The quantity to be minimized is relative entropy of the biased probability distribution with respect to the unbiased probability distribution:

(A4)

(A4)

To solve this system, we compute the functional derivative of the Lagrange function with respect to the biased probability distribution . This requires a functional derivative of Equation (EquationA3

(A3)

(A3) ), which is

(A5)

(A5)

Some intuition for Equation (EquationA5(A5)

(A5) ) can be gained from the definition of the functional derivative. Equation (EquationA5

(A5)

(A5) ) says that adding an arbitrary test function

to the probability distribution scaled by ε, where ε is small has the following result:

(A6)

(A6)

The right-hand side is independent of the probability distribution, so changing the probability distribution decreases the virial by N regardless of where the change occurs. With the functional derivative of the virial being constant, the functional derivative of the Lagrange function is(A7)

(A7)

(A8)

(A8) which has no solution if N and V are not variables because

and

are colinear. Thus, there is no way constrain the potential energy contribution to pressure. This argument does not apply to constraining pressure by changing volume or density, which is the definition of an NPT ensemble or a grand canonical ensemble.