Abstract

The neural substrates of tactile roughness perception have been investigated by many neuroimaging studies, whereas relatively little effort has been devoted to the neural representations of visually perceived roughness. In this human fMRI study, we looked for neural activity patterns that could be attributed to five different roughness intensity levels when the stimuli were perceived visually, i.e., in the absence of any tactile sensation. During functional image acquisition, participants viewed video clips displaying a right index fingertip actively exploring the sandpapers that had been used in a preceding behavioural experiment. A whole-brain multivariate pattern analysis found four brain regions in which visual roughness intensities could be decoded: the bilateral posterior parietal cortex (PPC), the primary somatosensory cortex (S1) extending to the primary motor cortex (M1) in the right hemisphere, and the inferior occipital gyrus (IOG). In a follow-up analysis, we tested for correlations between the decoding accuracies and the tactile roughness discriminability obtained from the preceding behavioural experiment. We found no such correlation, even though participants were asked during scanning to recall the tactilely perceived roughness of the sandpapers. We presume that a better paradigm is needed to reveal any potential visuo-tactile convergence. Nevertheless, the present study identified brain regions that may subserve the discrimination of different intensities of visual roughness. This finding may contribute to elucidating the neural mechanisms of visual roughness perception in the human brain.

Introduction

Tactile perception is crucial in perceiving the material characteristics of a physical surface. A number of neuroimaging studies have investigated the neural mechanisms of tactile discrimination in the human brain (Kitada et al. 2005; Kim, Muller, et al. 2015), whereas only a few studies have investigated visually evoked neural representations of haptic exploration (Meyer et al. 2011). For example, Meyer and colleagues examined neural activity patterns in response to video clips showing a hand exploring an everyday object (Meyer et al. 2011). Their results demonstrate that visual stimuli containing tactile and proprioceptive information can activate the primary somatosensory cortex (S1). However, to the best of our knowledge, no study so far has explicitly explored whether activity patterns in the brain encode roughness intensity when roughness stimuli are presented visually.

In the present study, we investigated neural encoding of roughness intensity evoked by observing tactile exploration of various rough surfaces. A multivoxel pattern analysis (MVPA) was used to statistically assess neural activity patterns in response to the touch observations. We considered the MVPA to be more appropriate to our study than the classical general linear model (GLM) analysis, because our data analysis focussed on identifying brain regions encoding multiple levels of roughness intensity. In particular, a searchlight MVPA was employed to search for the regions exhibiting neural activity patterns associated with visual roughness perception. Then, we investigated whether a visuo-tactile convergence could be detected within the identified regions. To this end, we carried out a correlation analysis between decoding accuracies for each identified brain region and individual behavioural performance obtained from a roughness discrimination task performed before the MR scanning session.

Materials and methods

Participants and stimuli

Fifteen healthy volunteers (9 females, age: 26.7 ± 3.6 years) with no contraindications to MR examination and no history of neurological disorders participated in the experiment. All participants were right-handed and had no deficits in tactile or visual processing. Experimental procedures were approved by the ethical committee of the University Clinics Tübingen (649/2016BO2) and the study was conducted in accordance with the Declaration of Helsinki. All participants were informed about the experimental procedure and gave written consent.

Sandpapers with five roughness levels (aluminium-oxide abrasive paper, 3M Center, St. Paul, MN; particle sizes of 0.3, 12, 40, 60, and 100 μm; coloured white, yellow, blue, grey, and brown, respectively) were cut to a size of 5 × 3 cm and attached to a black plastic plate measuring 5 × 8 cm. These sandpapers had been used and validated in a previous texture perception study (Miyaoka et al. 1999). In addition, five short video clips (3 s each), each displaying a right index fingertip exploring one of the sandpapers, were recorded for presentation during the fMRI experiment. In the video clips, the sandpaper surface was located at the centre of the screen. For 3 s, a right index finger explored the surface using horizontal movements (from side to side). The finger was shown from a viewpoint close to that experienced by the participants in the behavioural experiment.

Experimental design

Prior to the fMRI experiment, all participants performed a tactile discrimination task outside of the MR room. Participants completed five blocks, each consisting of 20 trials (counterbalanced pairwise combination of five sandpapers). In each trial, participants explored two sandpapers sequentially with the right index fingertip and reported which one felt rougher. Participants were not blindfolded. The duration of a single trial was 15 s and the inter-trial interval was set at 5 s. A 1-min break was provided between the blocks and the entire behavioural experiment took ∼30 min.
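The block length of 20 trials follows from the pairing scheme. The sketch below enumerates the trials of one block under the assumption that "counterbalanced" means each pair of distinct sandpapers is presented once in each order; the source does not spell this out, and the names `PARTICLE_SIZES_UM` and `make_block` are illustrative only.

```python
from itertools import permutations

# Particle sizes (in micrometres) of the five sandpapers listed in the stimulus description.
PARTICLE_SIZES_UM = [0.3, 12, 40, 60, 100]

def make_block(stimuli):
    """Return every ordered pair of distinct stimuli.

    Assumption: counterbalancing means each pair appears in both presentation orders.
    """
    return list(permutations(stimuli, 2))

block = make_block(PARTICLE_SIZES_UM)
print(len(block))  # 20 trials: 5 * 4 ordered pairs
```

Under this reading, each of the 10 unordered pairs is compared twice per block and 10 times over the five blocks.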

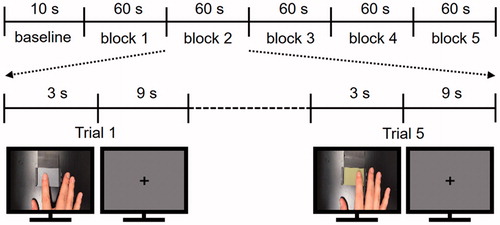

During the fMRI experiment, participants lay in a supine position and wore earplugs to prevent auditory disturbances from the surroundings. They watched a screen with a projector resolution of 1280 × 1024 pixels at a refresh rate of 60 Hz through an angled surface-mirror. Participants performed 3 fMRI runs of 25 trials (5 repetitions × 5 roughness intensities) and each run started with a 10 s baseline period (Figure 1). The videos displaying the five levels of roughness intensity were presented in randomized order in each block. Each trial consisted of two consecutive periods: a stimulation period of 3 s followed by a fixation resting period of 9 s. During the presentation of each video clip, participants were asked to recall the tactile roughness intensity of the sandpaper as they had experienced it in the behavioural experiment. There was no tactile stimulation at any time during the fMRI experiment and participants were not asked to enter any roughness ratings.
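The timing of one run can be laid out as follows. This is a minimal sketch that assumes the 25 trials of a run are shuffled as a whole and that the run ends with the last fixation period; the source states only that the order was randomized, and the function name `make_run` is hypothetical.

```python
import random

BASELINE_S = 10  # baseline period at the start of each run
STIM_S = 3       # duration of each video clip
REST_S = 9       # fixation period following each clip
N_LEVELS = 5     # roughness intensities
N_REPS = 5       # repetitions of each intensity per run

def make_run(rng):
    """Return (onset in seconds, roughness level) for each trial of one run."""
    levels = [lvl for lvl in range(1, N_LEVELS + 1) for _ in range(N_REPS)]
    rng.shuffle(levels)  # assumption: full randomization within the run
    trial_s = STIM_S + REST_S
    return [(BASELINE_S + i * trial_s, lvl) for i, lvl in enumerate(levels)]

run = make_run(random.Random(1))
run_duration_s = BASELINE_S + len(run) * (STIM_S + REST_S)
print(len(run), run_duration_s)  # 25 310
```

With these numbers, a run lasts 10 + 25 × 12 = 310 s, provided no additional period is appended at the end.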

Data acquisition and pre-processing

Neuroimaging data were acquired on a 3 Tesla Siemens Prisma system with a 64-channel head coil (Siemens Medical Systems, Erlangen, Germany). Anatomical images were obtained using a T1-weighted sequence (ADNI, 192 slices) with the following parameters: repetition time (TR) = 2000 ms, echo time (TE) = 3.06 ms, flip angle = 9°, field of view (FOV) = 256 mm, and voxel size = 1 mm³. Functional images were acquired using a slice-accelerated multiband gradient-echo-based echo planar imaging (EPI) sequence using T2*-weighted blood oxygenation level dependent (BOLD) contrast (multiband acceleration factor: 2): 46 slices, TR = 1520 ms, TE = 30 ms, flip angle = 68°, FOV = 192 mm, slice thickness = 3 mm, and in-plane resolution = 3 × 3 mm². The functional images covered the whole cerebrum. Standard preprocessing of the fMRI data was performed using SPM8 (Wellcome Department of Imaging Neuroscience, UCL, London, UK) and a high-pass filter of 128 s was used to remove low-frequency noise. The EPI data were corrected for slice-timing differences, realigned for motion correction, co-registered to the individual T1-weighted images, normalized to the Montreal Neurological Institute (MNI) space, and spatially smoothed by a 2-mm full-width-half-maximum (FWHM) Gaussian kernel.

Data analyses

To search for brain regions exhibiting visually evoked roughness intensity information, we carried out a whole-brain searchlight MVPA using the Searchmight Toolbox (Pereira and Botvinick 2011). Specifically, the searchlight analysis was performed on parameter estimates (i.e., beta values) obtained from a general linear model (GLM). To extract the parameter estimates, standard predictors were generated by convolving the stimulation periods with the hemodynamic response function (HRF) provided by SPM8. We fitted a GLM independently for each trial to increase the number of exemplars. As such, a total of 75 regressors (25 trials × 3 fMRI runs) were built for each voxel. The obtained parameter estimates were used as input features for the searchlight analysis. We constructed a searchlight consisting of a centre voxel and its neighbourhood within a 3 × 3 × 3 voxel cube. In each searchlight, a Gaussian Naïve Bayes (GNB) classifier discriminated the five roughness intensities from the spatial patterns of the parameter estimates for the voxels. Each experimental run was considered as one fold and a 3-fold cross-validation procedure was performed. The resulting decoding accuracy was stored in the centre voxel of the searchlight cube. Chance-level accuracy (0.2 in our case; recall that the classifier predicted one out of five different roughness levels) was subtracted from the accuracy value stored in each voxel to yield deviations from chance. A random-effects group analysis was then performed on these individual accuracy maps to identify commonalities among individual neural activity patterns. To correct the fMRI cluster results for multiple comparisons, we estimated an empirical cluster size threshold that would be obtained by chance using a bootstrap procedure described in a previous study (Oosterhof et al. 2010). The maximum cluster size under the null hypothesis was calculated for each iteration and this procedure was repeated 1000 times, yielding an empirical null distribution of cluster sizes. Then, we compared the cluster sizes from the group analysis to this null distribution and reported the clusters falling in the upper 5% tail, i.e., p < .05 corrected for multiple comparisons through cluster size.
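The per-searchlight classification step can be illustrated in a few lines. The following is a minimal sketch rather than the Searchmight implementation: it fits a Gaussian Naïve Bayes classifier with leave-one-run-out cross-validation on simulated values standing in for the single-trial parameter estimates of one 27-voxel searchlight. The simulated effect size, the small variance floor, and all function names are assumptions made for illustration.

```python
import math
import random
from collections import defaultdict

def fit_gnb(X, y):
    """Estimate per-class feature means, variances, and log prior."""
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)
    model = {}
    for label, rows in by_class.items():
        n = len(rows)
        means = [sum(col) / n for col in zip(*rows)]
        # A small floor keeps the variance strictly positive (an assumption of this sketch).
        variances = [sum((v - m) ** 2 for v in col) / n + 1e-6
                     for col, m in zip(zip(*rows), means)]
        model[label] = (means, variances, math.log(n / len(X)))
    return model

def predict_gnb(model, row):
    """Return the class with the highest Gaussian log-posterior."""
    def log_post(params):
        means, variances, log_prior = params
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * var) - (x - m) ** 2 / (2 * var)
            for x, m, var in zip(row, means, variances))
    return max(model, key=lambda label: log_post(model[label]))

def leave_one_run_out_accuracy(X, y, runs):
    """Train on all runs but one, test on the held-out run, average over folds."""
    fold_acc = []
    for held_out in sorted(set(runs)):
        train = [i for i, r in enumerate(runs) if r != held_out]
        test = [i for i, r in enumerate(runs) if r == held_out]
        model = fit_gnb([X[i] for i in train], [y[i] for i in train])
        hits = sum(predict_gnb(model, X[i]) == y[i] for i in test)
        fold_acc.append(hits / len(test))
    return sum(fold_acc) / len(fold_acc)

# Simulated stand-in for one searchlight: 3 runs x 25 trials, 27 voxels (3 x 3 x 3 cube).
rng = random.Random(0)
X, y, runs = [], [], []
for run in range(3):
    for level in range(5):
        for _ in range(5):
            # Weak level-dependent shift plus unit Gaussian noise; not real data.
            X.append([0.3 * level + rng.gauss(0, 1) for _ in range(27)])
            y.append(level)
            runs.append(run)

accuracy = leave_one_run_out_accuracy(X, y, runs)
above_chance = accuracy - 0.2  # deviation from chance, as stored at the centre voxel
print(round(accuracy, 3), round(above_chance, 3))
```

In a full searchlight analysis this computation would be repeated with every voxel as the centre, producing one map of deviations from chance per participant.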

Once we found the brain regions showing distinct neural activity patterns across intensity levels, we further examined whether the decoding accuracies within these regions were correlated with individual discriminability assessed behaviourally. The procedure for estimating behavioural discriminability of roughness intensities was identical to that of our previous study (Kim, Chung, et al. 2015). Just noticeable difference (JND) values for each participant were measured from the fitted psychometric curves and these values were correlated with the decoding accuracies within each of the identified brain regions using linear regression.

Results

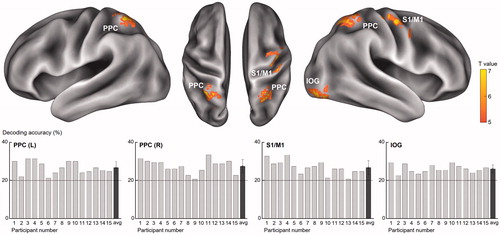

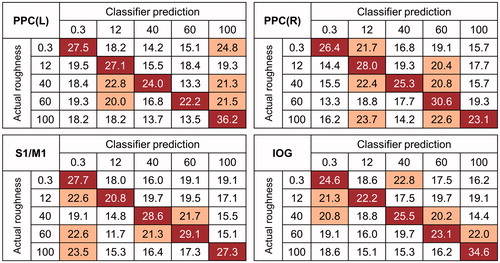

A random-effects group analysis revealed four clusters showing significantly high decoding accuracies (p < .001 uncorrected, size >50) (Figure 2 and Table 1). These clusters were located in the bilateral posterior parietal cortices (PPC), the primary somatosensory cortex (S1) extending to the primary motor cortex (M1) in the right hemisphere, and the inferior occipital gyrus (IOG) including V1. Notably, the significant cluster in S1 was found at the hand knob area of the somatomotor cortex. Decoding accuracies for each identified cluster were as follows (presented as mean ± standard deviation, followed by the highest and lowest accuracies for each cluster): 26.7 ± 3.3%, 31.3%, 21.3% for the left PPC; 27.4 ± 3.5%, 33.3%, 20.7% for the right PPC; 26.8 ± 3.6%, 33.3%, 20.7% for the S1; and 26.0 ± 2.1%, 29.3%, 22.7% for the IOG. Neural discriminability for the five roughness intensities was higher than chance (20%) for all identified brain regions (all ps < 0.01; left PPC: t14 = 7.8; right PPC: t14 = 8.2; S1: t14 = 7.2; IOG: t14 = 11.1). An analysis of variance (ANOVA) showed that there was no significant difference in decoding performance between the regions. Figure 3 shows the confusion matrices for each cluster. Note that the value in row i and column j of each matrix represents the probability that a presentation of roughness intensity i was predicted as roughness intensity j. An ideal confusion matrix would have a 100% probability on the diagonal and 0% in the off-diagonal entries.
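The test statistics above can be approximately recovered from the reported summary values. The sketch below computes a one-sample t statistic against the 20% chance level, assuming that the reported standard deviations are sample standard deviations across the 15 participants; the function name `one_sample_t` is illustrative.

```python
import math

def one_sample_t(mean, sd, n, mu0):
    """t statistic for testing a sample mean against mu0 (df = n - 1)."""
    return (mean - mu0) / (sd / math.sqrt(n))

# Mean and SD of decoding accuracy (%) per cluster, as reported in the text.
reported = {
    "left PPC": (26.7, 3.3),
    "right PPC": (27.4, 3.5),
    "S1": (26.8, 3.6),
    "IOG": (26.0, 2.1),
}

for region, (mean, sd) in reported.items():
    t = one_sample_t(mean, sd, n=15, mu0=20.0)
    print(f"{region}: t(14) = {t:.2f}")
```

The results land close to the reported statistics; differences of about 0.1 are to be expected because the published means and standard deviations are rounded.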

Figure 2. Results of the whole brain searchlight MVPA. The analysis identified four brain regions showing significant performance in discriminating visually evoked roughness intensities. The bottom panels show the decoding accuracies for each of the 15 participants and the rightmost bar indicates the average accuracy across the participants. Chance level is marked by the dashed line (20%).

Figure 3. Confusion matrices for predictions of the classifier for each identified cluster. The rows of the matrix indicate the actual visual roughness intensity provided to the participants and the columns indicate the intensity predictions by a neural decoder. The cells on the diagonal entries (correct predictions) are highlighted in red and the cells where the misclassification rates exceeded chance level (20%) are highlighted in orange.
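For readers who wish to reproduce this kind of matrix, the sketch below builds a row-normalized confusion matrix from lists of actual and predicted labels. The labels are toy values for illustration only and are not data from the study; the function name is hypothetical.

```python
def confusion_matrix(actual, predicted, n_classes):
    """Row-normalized confusion matrix: entry [i][j] estimates P(predicted j | actual i)."""
    counts = [[0] * n_classes for _ in range(n_classes)]
    for a, p in zip(actual, predicted):
        counts[a][p] += 1
    return [[c / sum(row) if sum(row) else 0.0 for c in row] for row in counts]

# Toy labels (roughness levels coded 0-4), two trials per level.
actual = [0, 0, 1, 1, 2, 2, 3, 3, 4, 4]
predicted = [0, 1, 1, 1, 2, 3, 3, 3, 4, 0]

cm = confusion_matrix(actual, predicted, 5)
accuracy = sum(cm[i][i] for i in range(5)) / 5  # mean of the diagonal
print(cm[0], accuracy)  # [0.5, 0.5, 0.0, 0.0, 0.0] 0.7
```

The mean of the diagonal equals the overall decoding accuracy only when every intensity level is presented equally often, as is the case in the balanced design described here.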

Table 1. Identified clusters showing significantly higher decoding accuracies than chance level (p<.001 uncorrected, size >50).

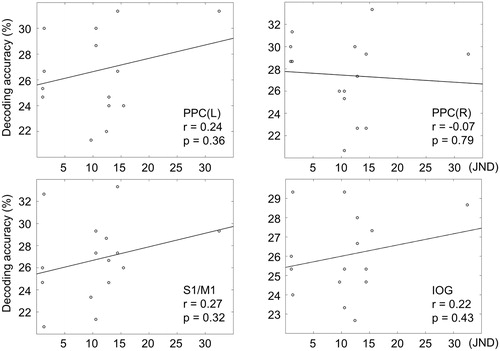

We further explored whether classification accuracies for individual participants varied with their tactile roughness discriminability (i.e., JNDs) (Table 2). We could not find any significant correlation between JND values and decoding accuracies (all rs < 0.27, ps > 0.32) (Figure 4).
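To see why correlations of this size are not significant with 15 participants, a correlation coefficient can be converted to a t statistic with n − 2 degrees of freedom. The sketch below is illustrative only: it applies the standard conversion to the reported upper bound r = 0.27, and for a single predictor this test is equivalent to testing the slope of the linear regression. The function names are hypothetical.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def t_from_r(r, n):
    """t statistic (df = n - 2) for testing the null hypothesis r = 0."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))

t_bound = t_from_r(0.27, 15)
print(round(t_bound, 2))  # 1.01
```

A value of about 1.01 lies well below the two-tailed critical value of roughly 2.16 for 13 degrees of freedom at α = .05, in line with the non-significant result reported above.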

Figure 4. Correlations between behavioural and neural decoding accuracies for visual roughness intensities. The neural decoding accuracies for the 15 participants are plotted over their behavioural JND values.

Table 2. Perceptual and neural roughness discriminability for each participant.

Discussion

In this study, using a searchlight MVPA, we have demonstrated that visual roughness intensity can be decoded from fMRI signals. In particular, the bilateral PPC, the right S1, and the IOG carry information about visual roughness. In a related study, Meyer and colleagues asked participants to watch video clips displaying active haptic exploration of a common object (Meyer et al. 2011). Their MVPA results showed that S1 activity patterns allowed discrimination between the objects that the participants had seen. In line with that study, the present study provides additional evidence that visually evoked surface roughness can also elicit distinct activity patterns in S1 depending on the roughness intensity of the displayed sandpapers. Moreover, our results show significant decoding performance in the bilateral PPC. Even though numerous neuroimaging studies have reported the involvement of the PPC in tactile discrimination (Francis et al. 2000; Kim, Muller, et al. 2015), its role in visual texture perception has yet to be fully characterized. One recent fMRI study with a large number of participants revealed that observation of passive touch activated the PPC (Chan and Baker 2015). The identification of a roughness representation in the IOG is also an interesting observation. Several previous studies have demonstrated that visual texture information is processed in the IOG (Cant and Goodale 2007; Cant et al. 2009). Together with these previous findings, our results provide additional clues as to which brain areas are essential for the discrimination of visual roughness information.

In the presented video clips, all sandpapers had the same shape, but they differed in colour depending on their level of roughness. Therefore, we cannot rule out the possibility that the classifier discriminated the colour rather than the roughness information. To address this issue, we performed an additional decoding analysis. We used a GNB classifier to discriminate the two roughest surfaces from the two smoothest surfaces for each participant. This means that activity patterns evoked by sandpapers of different colours were analyzed together. If the sandpapers' colour had been a determining factor for the evoked activity patterns in the previous analysis, we would expect no significant discrimination between the activity patterns in this new analysis, whereas if the activity patterns were evoked primarily by the sandpapers' roughness, decoding accuracy should persist. Our results show that decoding accuracies in each region were significantly higher than chance level (left PPC: t14 = 18.8, p < .01; right PPC: t14 = 10.1, p < .01; S1/M1: t14 = 21.6, p < .01; IOG: t14 = 15.4, p < .01). These results suggest that the confounding effect of colour is small.

Importantly, no significant correlation between behavioural discriminability and neural decoding accuracy was found. In other words, when roughness information was perceived solely from visual cues without any intrinsic tactile content, no brain region was found to encode individual perceptual sensitivity to visual roughness. In contrast, in our previous study (Kim, Chung, et al. 2015), when roughness information was perceived solely tactilely in the scanning session, decoding accuracy in the supplementary motor area (SMA) showed a significant correlation with the behavioural results. How, then, do we explain this discrepancy? There are at least two possible explanations. First, it may be attributed to roughness intensity being perceived through different sensory pathways. Instead of the perception originating from the mechanoreceptors in the skin, in the present study participants perceived the roughness of the stimuli through the visual pathways originating from the photoreceptors in the retina. A previous fMRI study demonstrated that visual texture information was processed in areas along the ventral and dorsal streams in the visual cortices (Kastner et al. 2000) and these areas were different from the areas involved in tactile texture perception in the somatosensory pathways (e.g., S1, parietal operculum) (Servos et al. 2001; Stilla and Sathian 2008). In line with these previous findings, in our studies, we found that visually and tactilely evoked roughness information elicited distinct activation patterns in different brain regions and we suggest that this may have led to the inconsistent results between the two studies. Second, the difference in results may be explained by insufficient information transfer between visual (e.g., lingual gyrus, middle occipital gyrus) and somatosensory cortical regions (e.g., S1, superior parietal lobule) (Hadjikhani and Roland 1998; Kawashima et al. 2002). Considering that participants were asked to recall perceived roughness intensities while they viewed the video clips, it is plausible that visual roughness intensity encoding was mediated by the remembered tactile information. We thus speculate that tactile information transfer, which might be beneficial to visual roughness encoding, was not sufficient to elicit distinct neural activation patterns. Consequently, we could not find neural correlates of individual differences in visual roughness perception.

Patterns of misclassification as described by the confusion matrices are also noteworthy. Our previous study found the highest classification accuracy always on the diagonal entries for every identified cluster (Kim, Chung, et al. 2015). Moreover, frequent confusions, i.e., those for which the misclassification rate surpassed chance level, were found only twice per cluster on average. In the present study, confusions were observed more often and decoding accuracies were lower than those from our previous tactile study. In line with our observation, a recent 7 T fMRI study investigating visually and tactilely evoked perception of surface roughness showed that the topography of visual maps is weaker than the topography of tactile maps (Kuehn et al. 2018). Further investigations are needed, but our finding suggests that visually evoked brain activity related to surface properties is characterized by less distinctive patterns than that evoked by the tactile sense.

There is a clear limitation in our paradigm, as the two experiments differ on several points. First, the tactile behavioural task was performed outside of the scanner; therefore, we can only correlate the results of a behavioural task based on tactile input with fMRI activity resulting from purely visual input. Second, during the fMRI scan, the task of recalling the perceived roughness intensity of the sandpaper displayed in the video clip was unsupervised; therefore, we do not have any behavioural data on how well participants performed this task. These differences between the two experiments reduced the chance of observing any evidence of visuo-tactile convergence. A more suitable design is needed to reveal such convergence between vision and touch for roughness perception.

In conclusion, our searchlight MVPA revealed that the bilateral PPC, the right S1, and the IOG exhibit distinct neural activity patterns specific to each of the visually evoked roughness intensities. Our results suggest that visual roughness is likely encoded in one of these areas, but more extensive investigations are needed to decipher their roles. We envision that future work will uncover the detailed neural mechanisms by which these areas support multisensory perception.

Disclosure statement

No potential conflict of interest was reported by the authors.

References

- Cant JS, Arnott SR, Goodale MA. 2009. fMR-adaptation reveals separate processing regions for the perception of form and texture in the human ventral stream. Exp Brain Res. 192:391–405.

- Cant JS, Goodale MA. 2007. Attention to form or surface properties modulates different regions of human occipitotemporal cortex. Cereb Cortex. 17:713–731.

- Chan AW-Y, Baker CI. 2015. Seeing is not feeling: posterior parietal but not somatosensory cortex engagement during touch observation. J Neurosci. 35:1468–1480.

- Francis ST, Kelly EF, Bowtell R, Dunseath WJR, Folger SE, McGlone F. 2000. fMRI of the responses to vibratory stimulation of digit tips. Neuroimage. 11:188–202.

- Hadjikhani N, Roland PE. 1998. Cross-modal transfer of information between the tactile and the visual representations in the human brain: a positron emission tomographic study. J Neurosci. 18:1072–1084.

- Kastner S, De Weerd P, Ungerleider LG. 2000. Texture segregation in the human visual cortex: a functional MRI study. J Neurophysiol. 83:2453–2457.

- Kawashima R, Watanabe J, Kato T, Nakamura A, Hatano K, Schormann T, Sato K, Fukuda H, Ito K, Zilles K. 2002. Direction of cross-modal information transfer affects human brain activation: a PET study. Eur J Neurosci. 16:137–144.

- Kim J, Chung YG, Park JY, Chung SC, Wallraven C, Bulthoff HH, Kim SP. 2015. Decoding accuracy in supplementary motor cortex correlates with perceptual sensitivity to tactile roughness. PLoS One. 10:e0129777.

- Kim J, Muller KR, Chung YG, Chung SC, Park JY, Bulthoff HH, Kim SP. 2015. Distributed functions of detection and discrimination of vibrotactile stimuli in the hierarchical human somatosensory system. Front Hum Neurosci. 8:1070.

- Kitada R, Hashimoto T, Kochiyama T, Kito T, Okada T, Matsumura M, Lederman SJ, Sadato N. 2005. Tactile estimation of the roughness of gratings yields a graded response in the human brain: an fMRI study. Neuroimage. 25:90–100.

- Kuehn E, Haggard P, Villringer A, Pleger B, Sereno MI. 2018. Visually-driven maps in area 3b. J Neurosci. 38:1295–1310.

- Meyer K, Kaplan JT, Essex R, Damasio H, Damasio A. 2011. Seeing touch is correlated with content-specific activity in primary somatosensory cortex. Cereb Cortex. 21:2113–2121.

- Miyaoka T, Mano T, Ohka M. 1999. Mechanisms of fine-surface-texture discrimination in human tactile sensation. J Acoust Soc Am. 105:2485–2492.

- Oosterhof NN, Wiggett AJ, Diedrichsen J, Tipper SP, Downing PE. 2010. Surface-based information mapping reveals crossmodal vision-action representations in human parietal and occipitotemporal cortex. J Neurophysiol. 104:1077–1089.

- Pereira F, Botvinick M. 2011. Information mapping with pattern classifiers: a comparative study. Neuroimage. 56:476–496.

- Servos P, Lederman S, Wilson D, Gati J. 2001. fMRI-derived cortical maps for haptic shape, texture, and hardness. Brain Res Cogn Brain Res. 12:307–313.

- Stilla R, Sathian K. 2008. Selective visuo-haptic processing of shape and texture. Hum Brain Mapp. 29:1123–1138.