ABSTRACT

Background and Context

Objective

This study explores postgraduate students’ perceptions of the modified team-based learning (TBL) instructional approach used to teach a Bootcamp-style course, Programming and Systems Development (ProgSD), and the extent to which the course improves their practical skills.

Method

At the start of the course, participants (n = 185) were asked to rate their practical experience in the taught topics. At the end of ProgSD in Semester 1 (S1), and again in Semester 2 (S2) during the Covid-19 lockdown, respondents (n = 150 and n = 43, respectively) were surveyed on their perceptions of ProgSD. Data were analysed using the Wilcoxon, Mann-Whitney U and Kruskal-Wallis tests, and students’ comments were analysed using thematic analysis.

Findings

Results showed that students’ practical experience increased significantly by the end of ProgSD (p < 0.05). Median ratings of the modified TBL activities and of teamwork were positive overall, and many students (66.5%) felt more confident about taking their S2 courses. Qualitative results supported these findings.

Implications

The findings have implications for practitioners facing the challenges identified here. Given the current pandemic, the authors suggest approaches to redesigning face-to-face team application activities (tAPPs) for online teaching and learning.

Introduction

Team-based learning

Research has highlighted that active learning methods foster deeper conceptual learning than traditional teacher-centred methods (Biggs & Tang, 2011). Team-based learning (TBL) is an active learning method devised by Larry Michaelsen; it is used extensively in medicine, mainly in small-group teaching (Michaelsen et al., 2004). It is a highly structured learning design that incorporates individual formative feedback, peer interaction and active peer management of team interactions. It is a student-centred approach, guided by the teacher, that promotes active learning and brings many empirically supported best practices of student-centred active learning together in a single strategy (Leupen, 2020). Four principles govern TBL (Michaelsen et al., 2004, 2008):

a) Team formation: teams are small, permanent, and strategically formed and managed from week one;

b) Accountability: each student is responsible for their own learning and contributes to the team in order to achieve a higher level of cognitive learning (Vasan et al., 2009);

c) Team interaction: in-class team activities must foster interaction and promote team learning and development;

d) Immediate feedback: feedback is particularly critical to reinforce student learning and enable team development. With TBL, tests are no longer “end-of-unit exercises”; instead, they play a preparatory role.

The TBL sequence starts with student preparation (Stage 1), which requires individual pre-reading and study outside class. Stage 2 (Readiness Assurance) tests students’ knowledge of the pre-studied material, first individually through individual readiness assurance tests (iRATs) and then in class through team readiness assurance tests (tRATs). During this stage, teams can appeal if they believe the expected answer to a question is incorrect. The instructor then provides feedback tailored to the iRAT and tRAT results and clarifies any remaining misunderstandings. In Stage 3, students apply what they have learned through discussion of high-level concepts and problem-solving activities.

TBL has also been used in other disciplines, including engineering, business and computing science (CS) (Christensen et al., 2019; Diniz et al., 2019; Elnagar & Ali, 2013; Ghadiri et al., 2013; Lasserre & Szostak, 2011; Matalonga et al., 2017). A growing body of evidence suggests that students achieve more academically with TBL than with traditional approaches to instruction (Reimschisel et al., 2017). A review by Swanson et al. (2019) of the effect of TBL on content knowledge and comprehension found that it improves students’ end-of-course grades, test performance and classroom engagement. TBL can also improve attendance (Inuwa et al., 2012) and raise final exam scores more than a traditional lecture environment (El-Banna et al., 2020). It stimulates deep learning, fosters collaboration and teamwork, and enhances student engagement and communication skills (Vlachopoulos et al., 2020). As a form of interactive small-group learning, it also fosters critical thinking. Furthermore, even when compared with moderately structured active learning, TBL still provides greater learning (Ng & Newpher, 2020).

In CS in particular, this student-centred instructional approach has been found to offer many advantages, including high levels of engagement, better academic performance for weaker students, enhanced programming skills and greater confidence in the ability to program (Elnagar & Ali, 2013; Kirkpatrick & Prins, 2015; Lasserre & Szostak, 2011; Rankin et al., 2007). This study examines whether some of these advantages are observed in a new postgraduate course we created: Programming and Systems Development (ProgSD).

Our context in creating ProgSD

Increasing enrolments in Computing Science have created significant demands and challenges (Camp et al., 2017). In our school, postgraduate students come from a variety of backgrounds with different skill sets, which our lecturers have found difficult to address. To better serve students with such diverse skill sets, we created a new course, Programming and Systems Development (ProgSD), which all students take together so that their foundational knowledge is standardised. This makes it easier for lecturers of subsequent courses to know which skills they can assume students have, which had been the lecturers’ main complaint. We expect that, when lecturers can rely on specific skills, their courses will work better for students and student performance will increase.

The course is offered to all our master’s (CS+) programmes: MSc Data Science (DS), MSc Computing Science (CS) and MSc Information Security (IS). All students on these programmes are assumed to have an undergraduate degree in CS or software development, or at least to have done some programming during their undergraduate studies. They are expected to understand programming concepts and to apply them to solve problems. The course is also offered to MSc Data Analytics (DA) students, who can take some of our CS courses in Semester 2; these students have a mathematics background and less programming experience than the other groups, or none at all.

ProgSD is a highly practice-focused, Bootcamp-style course that aims to standardise the foundation students need for most of our master’s courses. Universities increasingly use Bootcamp courses (Tu et al., 2018) because they can help develop students’ programming, interpersonal and communication skills. Bootcamps are also used in a variety of areas to strengthen students’ understanding of the fundamentals of the chosen topics; for example, Thalluri (2016) describes an intensive short programme aimed at students with little or no background in biology, chemistry or physics. A typical university Bootcamp lasts 12 to 16 weeks or longer (Wilson, 2018) and focuses on one programming language. In contrast, ProgSD, which is embedded in the curriculum, runs for six weeks and covers several subjects, specifically those where lecturers had reported difficulties: Java, Python, Databases, Data Manipulation, Data Visualisation and Unix.

In brief, our Bootcamp is a mandatory, full-time, 20-credit, learner-centred intensive short course delivered at the start of the students’ master’s programme and designed to provide a standardised foundation for the curriculum, mainly through practical tasks. Table 1 presents a snapshot of a typical ProgSD weekly class contact timetable. From week 1, students must also take two other full-time, standard-length (12-week) courses, which makes their timetable in the first six weeks very tight.

Table 1. Typical ProgSD Bootcamp weekly timetable.

ProgSD aims to increase postgraduates’ practical skills and their performance in the CS courses that they take in S2. We were unable to evaluate student performance in those S2 courses directly because the Covid-19 pandemic led to a change in exam format. Instead, this paper evaluates students’ practical skills before and after ProgSD, as well as their perceptions of the teaching approach. Both issues are equally important for this course. In Computing Science, particularly in introductory programming courses, there have been reports of high attrition and low pass rates, mainly due to poorly designed courses, a lack of practice and timely feedback, and the difficulty of learning programming (Beaubouef & Mason, 2005; Watson & Li, 2014). One suggested solution is a pedagogical approach that promotes active student engagement and collaboration through an evidence-based collaborative learning and teaching strategy such as TBL (Walker, 2017). Furthermore, fostering collaboration from week one is very important because studying abroad for the first time can be very challenging for our mainly overseas student cohort; team interaction and engagement need to be fostered from an early stage, and students’ confidence needs to be increased. The literature shows a growing call for TBL as an effective collaborative learning methodology in higher education (Vlachopoulos et al., 2020). TBL is particularly suitable for this course because Bootcamps, which tend to be learner-centred, align well with TBL’s student-centred approach. Other studies have also combined Bootcamps and TBL. For example, Mastel-Smith et al. (2019) ran a 16-hour team-based learning Dementia Care Bootcamp and found that it had a significant positive effect on participants’ attitudes, knowledge and confidence in dementia care.

Lastly, the main reason for choosing modified TBL as the method of instruction for ProgSD is that, as already explained, each lecture slot (tAPP time, one hour per session) has far too many topics to cover using a traditional lecture approach in the same time. The weekly timetable has no spare hour to extend the tAPPs because students are also taking two other courses.

The following section gives an overview of modified team-based learning and presents the research questions. Section 3 describes the materials and methods, including details of how modified TBL was implemented in ProgSD. Section 4 presents the results and Section 5 the discussion. Section 6 concludes the paper.

Modified team-based learning

Modified TBL is increasingly used. In the literature, instructors have selectively adopted one or more TBL phases or introduced other modifications (Burgess et al., 2018; Elnagar & Ali, 2013; Inuwa et al., 2012). For instance, in an 8-week Cardiovascular Systems Block, Burgess et al. ran two iterations of modified TBL lasting 1.5 hours each. Their version included 1) compulsory pre-reading material; 2) an Individual Readiness Assurance Test (iRAT) at the beginning of each class and a Team Readiness Assurance Test (tRAT) in the form of multiple-choice questions; 3) immediate feedback; and 4) team problem-solving activities. However, they did not include peer evaluation. Elnagar and Ali adopted a TBL variant called Lectures and Team-Based Learning (LTBL) in a 16-week Introduction to Information Technology course, in which each class mixed a conventional lecture with TBL activities: the instructor described the subject, groups discussed it, students were assessed with a quiz, and immediate feedback was provided. They also used the peer evaluation component of TBL, albeit only once or twice during the semester. Inuwa et al.’s format included 1) pre-reading, 2) an in-class iRAT, 3) a group readiness assurance test (gRAT), 4) immediate feedback and 5) wrap-up sessions. They did not use application exercises, and peer evaluation was performed at the end of the 11-week course.

It has been suggested that, when modifying the traditional TBL structure, instructors must consider the value of each TBL component they wish to retain. For instance, Leupen (2020), who reviewed evidence on TBL in STEM and Health Science, stated that “it is a poor choice to use only RATs and not team applications”, as the applications are at the centre of TBL. However, without any prior preparation, application and problem-solving activities will fail.

Research questions

A modified form of team-based learning was chosen as the method of instruction. The purpose of the study is to explore master’s students’ perceptions of the modified team-based learning instructional approach in a Bootcamp-style course and the extent to which the Bootcamp course increases their practical skills. For this study, students were asked to rate the different modified TBL activities, teamwork and their practical experience levels. The study was conducted with the approval of the University’s research ethics committee. Participants received a document containing all the necessary information about the study and their participation. All students participated voluntarily, and it was made clear that not participating would not affect them in any way.

The following questions frame the study:

To what extent does the ProgSD Bootcamp course increase student practical experience in the taught topics?

Is there any significant difference in the level of practical experience at the end of the course and across the different master’s student groups?

Is there any significant difference in the perceptions of modified TBL activities and teamwork scores across the different master’s student groups?

Is there any significant difference in the perceptions of modified TBL activities and teamwork scores at the end of the ProgSD course and after Semester 2 courses?

Materials and methods

ProgSD course design

Students attend two one-hour lectures and two 2-hour labs per week. They also have a 2-hour in-class (lab) session per week reserved for team project supervision, with tutors available (see Table 1). The course grade comprises three components: three assessed exercises on Python and Java (weeks 2, 3 and 5) plus one Linux coursework (set in week 5, due in week 6), together worth 30%; a practical lab exam on Python and Java in week 6 (50%); and a team project (20%), distributed at the start of the course and submitted in week 7 to relieve pressure on students during the final week.
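As an illustration of how the three components combine, the sketch below computes a course mark as a weighted average. It assumes each component is marked out of 100 and treats the 30% continuous-assessment share as one combined mark, because the text does not say how that 30% is split between the exercises and the Linux coursework; the component names are hypothetical.

```python
# Hypothetical sketch: a ProgSD course mark as a weighted average of component marks.
# Assumes each component is marked out of 100; the 30% share is one combined mark
# because its internal split is not specified.
WEIGHTS = {
    "exercises_and_linux": 0.30,  # three Python/Java exercises + Linux coursework
    "lab_exam": 0.50,             # practical lab exam (Python, Java), week 6
    "team_project": 0.20,         # team project, submitted in week 7
}


def course_mark(marks: dict[str, float]) -> float:
    """Return the weighted course mark (0-100) from per-component marks."""
    missing = WEIGHTS.keys() - marks.keys()
    if missing:
        raise ValueError(f"missing component marks: {sorted(missing)}")
    return sum(weight * marks[name] for name, weight in WEIGHTS.items())


example = {"exercises_and_linux": 70, "lab_exam": 60, "team_project": 80}
print(course_mark(example))  # 0.3*70 + 0.5*60 + 0.2*80
```

Keeping the weights in one table makes it easy to check that they sum to 100% and to change them if the assessment scheme changes.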

Implementation of modified TBL for ProgSD

ProgSD’s implementation of TBL consists of team formation, the readiness assurance process (RAP), module application activities, individual programming tasks, and team and individual exams. Teams were assigned during the first week, and each consisted of 5 or 6 students grouped by ability. On day one, students took a quiz with two or three questions on each topic covered in the course. The quiz scores were ranked, and teams were created by splitting the ranked list into consecutive groups of 5 or 6 students. The goal was to place students with similar capabilities together.
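The ranking-and-chunking procedure can be sketched in Python as below. This is a minimal sketch under stated assumptions: the quiz scores and student identifiers are hypothetical, ties keep their original order, and the ranked list is cut into teams whose sizes differ by at most one, which yields teams of 5 or 6 whenever the cohort has at least 20 students. The paper does not specify how the split between 5- and 6-member teams was decided.

```python
import math
import random


def form_teams(scores: dict[str, float], min_size: int = 5, max_size: int = 6) -> list[list[str]]:
    """Rank students by quiz score and cut the ranked list into consecutive teams.

    Team sizes differ by at most one; raises ValueError if they cannot all
    fall within [min_size, max_size].
    """
    n = len(scores)
    n_teams = math.ceil(n / max_size)  # fewest teams that respect max_size
    if n_teams == 0:
        return []
    base, extra = divmod(n, n_teams)
    if base < min_size:
        raise ValueError(f"{n} students cannot be split into teams of {min_size}-{max_size}")
    # Highest scorers first; sorted() is stable, so ties keep their input order.
    ranked = sorted(scores, key=scores.get, reverse=True)
    teams, start = [], 0
    for i in range(n_teams):
        size = base + 1 if i < extra else base  # the first `extra` teams take one more
        teams.append(ranked[start:start + size])
        start += size
    return teams


# Hypothetical cohort of 185 students with made-up quiz scores.
rng = random.Random(0)
quiz = {f"student_{i:03d}": rng.randint(0, 18) for i in range(185)}
teams = form_teams(quiz)
print(len(teams), sorted({len(t) for t in teams}))  # 31 [5, 6]
```

Because each team is a contiguous slice of the ranked list, team members have similar quiz scores, which matches the stated goal of grouping students with similar capabilities.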

As required in TBL, students needed to read the material before attending classes. The modified TBL teaching approach was explained on the Moodle page, which enrolled students could access well before their first class, and the message was reiterated at the beginning of the first class. Teaching materials, mainly PowerPoint slides, were made available on Moodle at least two weeks before each class; this minimum lead time was deemed necessary because of the large amount of material per subject that had to be covered in each class. To maximise the benefits of the readiness assurance stage given the limited time, students were asked to complete their iRATs outside class. Carbrey et al. (2015), for example, explored whether the iRAT could be completed at home instead of in class; they found no significant difference and concluded that, for simplicity, the iRAT could be done at home. Kirkpatrick (2017) also recommended using pre-class online quizzes for the iRAT. In this study, we used class time for team application exercises (tAPPs) and did not include the tRAT, because programming tasks require more time (Lasserre, 2009) and the programme timetable could not accommodate additional sessions.

The course requires students to change their learning habits by spending a substantial amount of time on prior preparation and assessments. We use class time to reinforce the knowledge that students have acquired during that preparation. The course is very practice-focused; for example, during team application exercises (tAPPs, held in the lecture room), teams a) debug code, b) hand-execute code, c) solve Parsons puzzles (Parsons & Haden, 2006), d) write code to solve a problem and e) do basic NumPy maths. These exercises are done with pencil and paper only; no computers are allowed, and hands-on practice on computers takes place only in the lab sessions. The idea of using just pencil and paper comes from studies that highlight students’ lack of sound conceptual understanding of programming (Cutts et al., 2019; Sudol-DeLyser et al., 2012).
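For readers unfamiliar with Parsons puzzles, the short Python sketch below illustrates the format: the lines of a correct program are shuffled, and the solver must restore their order. The puzzle content (`sum_evens`) and the helper functions are hypothetical illustrations, not actual ProgSD exercises, which students solved on paper. Checking for one exact order is a simplification; puzzles whose lines can be validly reordered would need a more lenient check.

```python
import random
from typing import Optional

# Hypothetical puzzle: a correct solution whose lines are shown to students shuffled.
SOLUTION = [
    "def sum_evens(numbers):",
    "    total = 0",
    "    for n in numbers:",
    "        if n % 2 == 0:",
    "            total += n",
    "    return total",
]


def make_puzzle(lines: list[str], seed: Optional[int] = None) -> list[str]:
    """Return a shuffled copy of the solution lines (the original list is untouched)."""
    shuffled = list(lines)
    random.Random(seed).shuffle(shuffled)
    return shuffled


def check_order(attempt: list[str], solution: list[str] = SOLUTION) -> bool:
    """Accept an attempt only if it reproduces the solution's exact line order."""
    return attempt == solution


puzzle = make_puzzle(SOLUTION, seed=42)
print("\n".join(puzzle))      # what the students would see
print(check_order(SOLUTION))  # True: the intended order
```

Keeping indentation in the shuffled lines, as here, gives solvers a structural hint; some Parsons puzzle variants strip it so that solvers must also work out the nesting.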

The tAPP tasks required students to apply what they had learned and were created from the pre-reading material (PowerPoint slides) and the iRAT outcomes. Students worked on the same significant problems and reported their answers simultaneously. A typical tAPP contained the following instructions: “Using a pencil and paper, work on problem 1 on your own for 5 minutes. Then discuss with your team members and present one solution as a team (5 minutes). Swap your solutions with another team for peer evaluation (10 minutes).” The time spent working alone and in teams depended on the number of problems. Once each team had passed its solution to another team, the correct answer was displayed on the screen. After the reviewing team had marked or commented on them, the solutions were returned to their original team; students typically sought further clarification from the lecturer and sometimes discussed with the other team why their answer had been marked in a particular way or asked them to elaborate on their comments. In the lab sessions, although students were supposed to work alone on their practical programming exercises, team members chose to sit together and help each other. Application-focused team programming assignments, or team projects, were used to foster social interaction in which team members engaged in supportive communication practices, including asking for help, sharing information and mediating conflict (Rankin et al., 2007).

Participants

ProgSD ran during the first six weeks of Semester 1 (from September 2019). Participants (n = 185) were master’s students enrolled in the Programming and Systems Development course. Attendance was very good: 99% for team project activities and 97% for tAPPs, except in the last week (64.8%).

Data collection and analysis

Data were collected in three stages. At the beginning of the course, participants (n = 185) were asked to rate their practical experience levels in the taught topics. At the end of the six-week course, and again in Semester 2 during the Covid-19 lockdown, they were surveyed on their practical experience level, their perceptions of the modified TBL activities and their perceptions of working in teams. The purpose of the last survey was to find out whether students maintained the same attitudes towards and perceptions of ProgSD, and whether it had helped them prepare for their Semester 2 courses. The questions, including some adapted from previous research (Vasan et al., 2009), mostly formed scales covering a) perceptions of the modified TBL activities, b) perceptions of teamwork and c) practical experience in the six taught subjects. Internal consistency of the two perception scales was high (Cronbach’s alpha = 0.92 for the TBL activities scale and 0.93 for the teamwork scale). Likert items were rated on a scale of 1 to 10 (1 = Very inexperienced, 10 = Very experienced). The questionnaire also included two open-ended questions on what students liked and disliked most about the novel approach to teaching.
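Cronbach’s alpha for a scale of $k$ items is $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i \sigma^2_i}{\sigma^2_X}\right)$, where $\sigma^2_i$ is the variance of item $i$ and $\sigma^2_X$ the variance of respondents’ total scores. A minimal Python sketch follows; the study’s analysis was done in R, so this is an illustrative re-implementation rather than the authors’ code, and it assumes a complete respondents-by-items matrix (no missing ratings). The example ratings are made up.

```python
import numpy as np


def cronbach_alpha(ratings) -> float:
    """Cronbach's alpha for a respondents x items matrix with no missing values."""
    x = np.asarray(ratings, dtype=float)
    k = x.shape[1]
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_variances = x.var(axis=0, ddof=1)      # sample variance of each item
    total_variance = x.sum(axis=1).var(ddof=1)  # sample variance of summed scores
    return k / (k - 1) * (1 - item_variances.sum() / total_variance)


# Made-up 1-10 ratings from five respondents on three items.
example = [[8, 7, 9], [5, 6, 5], [9, 9, 8], [3, 4, 2], [7, 6, 7]]
print(round(cronbach_alpha(example), 3))
```

Alpha approaches 1 when items vary together (the total’s variance dominates the sum of item variances) and can even be negative when items are inversely related.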

Quantitative data were treated anonymously and analysed in the R statistical package using descriptive and inferential statistics. The inferential analyses used the Wilcoxon, Mann-Whitney U and Kruskal-Wallis tests, non-parametric tests that are useful for comparing groups when data are ordinal or not normally distributed (Pallant, 2020).
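The three tests suit different designs: two related measurements of the same students (Wilcoxon signed-rank), two independent groups (Mann-Whitney U) and three or more independent groups (Kruskal-Wallis). The sketch below shows SciPy equivalents on made-up numbers; it is not the authors’ R code. In R, `wilcox.test()` runs the signed-rank test when `paired = TRUE` and otherwise runs the Wilcoxon rank-sum test, which is equivalent to the Mann-Whitney U test; its default continuity correction (`correct = TRUE`) corresponds to SciPy’s `use_continuity=True` default in `mannwhitneyu`.

```python
from scipy import stats

# Made-up summated scores for illustration only (not the study's data).
before = [18, 22, 25, 20, 30, 15, 21, 24]
after = [21, 27, 32, 29, 41, 19, 27, 32]  # same eight students, measured again
ds = [30, 34, 29, 41, 36]                 # three independent groups
cs = [33, 38, 35, 40, 37]
da = [20, 25, 22, 28, 19]

# Paired measurements of the same students: Wilcoxon signed-rank test.
signed_rank = stats.wilcoxon(before, after)
# Two independent groups: Mann-Whitney U test.
rank_sum = stats.mannwhitneyu(ds, da, alternative="two-sided")
# Three or more independent groups: Kruskal-Wallis H test.
kw = stats.kruskal(ds, cs, da)

for name, result in [("Wilcoxon", signed_rank), ("Mann-Whitney U", rank_sum), ("Kruskal-Wallis", kw)]:
    print(f"{name}: statistic={result.statistic:.3f}, p={result.pvalue:.4f}")
```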

Qualitative data from students’ comments were analysed using thematic analysis (TA). TA, one of the most commonly used qualitative data analysis approaches in psychology (Braun & Clarke, 2006), is increasingly being used in education (Xu & Zammit, 2020). It was chosen because it is a theoretically flexible method (Braun & Clarke, 2019) that is independent of any particular epistemological or ontological basis (Terry et al., 2017). It is also flexible with regard to data collection method, sample size and analysis. Following Braun and Clarke’s six-phase analytic process, step 1 involved familiarisation through “repeated engagement with the data”, by reading the comments several times; step 2, the “iterative and flexible” generation of initial codes across the entire dataset; step 3, searching for themes through interpretive analysis of these initial codes; step 4, reviewing the themes; step 5, defining and naming them; and step 6, writing up. Illustrative quotations for the key themes are included in the text.

Results

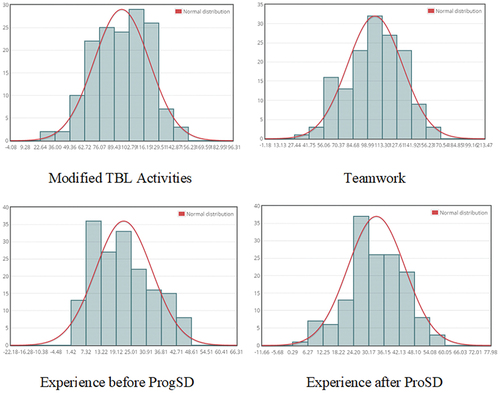

Of the 185 eligible participants, 181 (98%) completed the first questionnaire and 150 (81%) completed the post-course questionnaire after ProgSD, but only 43 (23.2%) completed the last questionnaire, mainly because of Covid-19 disruption. Of the respondents to the second questionnaire, 61 were female (40.9%), 83 male (55.7%) and 5 preferred not to say (3.4%); gender data were missing for 1 respondent (percentages exclude this missing response). Ordinal Likert items were summed to produce the total score for each Likert scale. Figure 1 presents the histograms and normal distributions of the Likert scales. Ninety-one students answered the open-ended questions in the second questionnaire on what they liked and disliked most about the new teaching method.
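Summing item ratings into a scale total is straightforward; the pandas sketch below shows one way to do it. The item column names are hypothetical, and treating a respondent with any skipped item as having no total is an assumption, since the text does not say how missing items were handled.

```python
import pandas as pd

# Hypothetical item columns; the real questionnaire items are listed in Tables 2 and 3.
SCALES = {
    "teamwork": ["tw1", "tw2", "tw3"],
    "tbl_activities": ["tbl1", "tbl2"],
}


def scale_totals(responses: pd.DataFrame, scales: dict[str, list[str]] = SCALES) -> pd.DataFrame:
    """Sum each respondent's 1-10 item ratings into one total per scale.

    min_count=len(items) leaves the total missing (NaN) if any item was skipped,
    rather than silently treating the skipped item as 0.
    """
    return pd.DataFrame({
        name: responses[items].sum(axis=1, min_count=len(items))
        for name, items in scales.items()
    })


responses = pd.DataFrame({
    "tw1": [8, 6, 9], "tw2": [7, 5, None], "tw3": [9, 6, 8],
    "tbl1": [8, 4, 7], "tbl2": [6, 5, 9],
})
print(scale_totals(responses))
```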

Descriptive analysis of individual scale items

Overall, the median scores for student perceptions of teamwork were positive, with all items having a median of 6 or above (Table 2). The item with both the highest percentage of ratings above 5 (on the 1 to 10 scale) and the highest median was “The ability to collaborate with other students is necessary if I want to be successful as a student” (Md = 8, 82%), which CS students rated highest. The lowest-rated item on the teamwork scale was “Discussing and solving problems with my team members in the lecture room provides a deeper understanding of the topic” (Md = 6.00, 54%). On the three lowest-rated items, Data Analytics students had the lowest median scores of all master’s groups (Md = 5 for each).
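The item-level statistics reported here (median and percentage of ratings above 5) can be computed as in the pandas sketch below. The item names and ratings are made up, and excluding unanswered items from the percentage, rather than counting them as ratings of 5 or below, is an assumption about how missing responses were treated.

```python
import pandas as pd


def item_summary(ratings: pd.DataFrame) -> pd.DataFrame:
    """Median and percentage of ratings above 5 for each 1-10 Likert item.

    Missing ratings are excluded from both statistics rather than counted as <= 5.
    """
    answered = ratings.notna()
    above_5 = ratings.gt(5).astype(float).where(answered)  # 1.0/0.0, NaN where unanswered
    return pd.DataFrame({
        "median": ratings.median(),
        "pct_above_5": (above_5.mean() * 100).round(1),
    })


# Made-up ratings for two hypothetical items (one unanswered rating in item_b).
ratings = pd.DataFrame({"item_a": [8, 9, 7, 4, 10], "item_b": [6, 5, 3, 7, None]})
print(item_summary(ratings))
```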

Table 2. Perceptions of teamwork scale ratings for all students (survey 2).

Of the 15 items that formed the modified TBL activities scale (Table 3), all but one had a median of 6 or above. The two items sharing the highest median and the highest percentage of ratings above 5 (Md = 8.00; 83%) were “Individual readiness assurance tests (iRAT) – (quizzes before the lectures) were useful learning activities” and “Taking individual readiness assurance tests (iRAT) – (quizzes before the lectures) is an effective way to practice what I have learned”. However, DA students rated these items lower. The item with the lowest median on this scale was “TBL helped me increase my understanding of the course material” (Md = 5.5, 50%), closely followed by “TBL helped me prepare for the assessments” (Md = 6, 52%). DA students’ lowest median score overall was for the statement “I feel more engaged in TBL activities than I usually am in traditional lecture presentations” (Md = 5). After the S2 courses, most students agreed with the statement “Programming and Systems Development has helped me prepare for my semester two courses”, with 68.2% rating it 6 or above and a mean score of 8.03 out of 10.

Table 3. Perception of modified TBL activities scale ratings for all students (survey 3).

Student perception of modified TBL

To answer the research question “Is there a difference in the perception of TBL activities and teamwork scores across the master’s student groups?”, Kruskal-Wallis tests were performed. They indicated no statistically significant difference between the MSc groups in either the modified TBL activities scores (n = 150, p = 0.62) or the teamwork scores (n = 150, p = 0.19). Table 4 presents a descriptive analysis of the perceptions of teamwork and TBL activities across all postgraduate groups. Wilcoxon tests with continuity correction also showed no significant differences between the end of the ProgSD course and after the S2 courses in modified TBL activities scores (p = .177) or teamwork scores (p = .187). Table 6 presents the descriptive analysis of students’ perceptions of the modified TBL activities and teamwork at the end of ProgSD and after the S2 courses.

Table 4. Descriptive analysis of perception of teamwork and TBL activities across all postgraduate groups.

Students’ evaluation of their self-reported practical experience

Before ProgSD, students self-reported between 0 months and 5 years of experience in Java and Linux, between 0 months and 4 years in Python, and between 0 months and 15 years in databases, data manipulation and visualisation. Table 5 presents students’ self-reported levels of practical experience in individual subjects before and after the Bootcamp course on a scale of 1 to 10 (1 = Very inexperienced, 10 = Very experienced).

Table 5. Practical experience in individual subjects before and after the course. Before ProgSD, higher percentages of ratings between 1 and 5 and lower percentages between 6 and 10 indicate that many students self-reported having little or no practical experience in the subjects. After ProgSD, lower percentages of ratings between 1 and 5 and higher percentages between 6 and 10 indicate that many students self-reported having gained more practical experience.

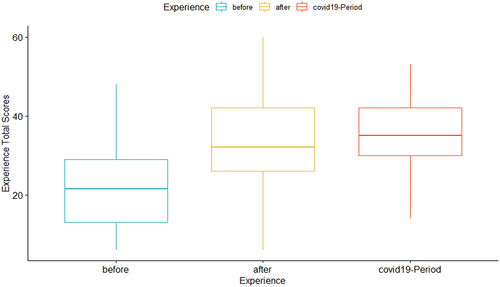

A Wilcoxon test with continuity correction showed that students’ self-reported practical experience level after ProgSD (n = 148, Md = 32) was significantly higher than before the course (n = 170, Md = 21.5), p < 0.001 (Figure 2). Students’ self-reported practical experience scores in Semester 2 were not significantly different from those at the end of ProgSD in Semester 1 (p = .282). Table 6 presents the self-reported practical experience levels at the end of ProgSD and after the S2 courses.

Table 6. Descriptive analysis of the perceptions of modified TBL Activities and teamwork and the self-reported practical experience level at the end of ProgSD and after S2 courses.

Figure 2. Student self-reported practical experience level before and after the ProgSD Bootcamp course and during the Covid19 period.

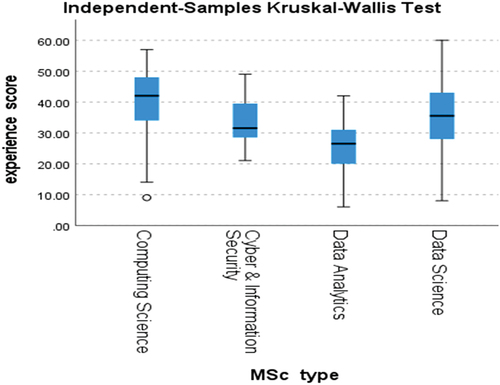

A Kruskal-Wallis test revealed a statistically significant difference in self-reported experience levels across the master’s groups (p < 0.001; Figure 3). The effect size, calculated using the eta squared formula $\eta^2_H = (H - k + 1)/(n - k)$, where $H$ is the value obtained in the Kruskal-Wallis test, $k$ the number of groups and $n$ the total number of observations, was large (0.16). As a post-hoc test, Dunn’s (1964) multiple-comparison procedure for Kruskal-Wallis was applied, with p-values adjusted using the Bonferroni method. The pairwise comparisons indicated that the median scores of Data Analytics students differed significantly from those of both Computing Science and Data Science students (p < 0.001). Table 7 presents the descriptive analysis of the postgraduate groups’ self-reported practical experience levels after the course.
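The effect size and post-hoc procedure described above can be sketched in Python as follows. This is an illustrative re-implementation on made-up scores, not the authors’ R analysis: `eta_squared_h` applies $\eta^2_H = (H - k + 1)/(n - k)$, and `dunn_bonferroni` computes Dunn’s z statistic for each pair of groups from the mean ranks of the pooled data (with the usual tie correction), then multiplies each two-sided p-value by the number of comparisons (Bonferroni), capping at 1. The group labels reuse the programme abbreviations, but the scores are hypothetical.

```python
from itertools import combinations

import numpy as np
from scipy import stats


def eta_squared_h(h: float, k: int, n: int) -> float:
    """Eta squared for a Kruskal-Wallis H statistic: (H - k + 1) / (n - k)."""
    return (h - k + 1) / (n - k)


def dunn_bonferroni(groups: dict[str, list[float]]) -> dict[tuple[str, str], float]:
    """Dunn's pairwise z-tests on pooled ranks, Bonferroni-adjusted (two-sided)."""
    names = list(groups)
    data = np.concatenate([np.asarray(groups[g], dtype=float) for g in names])
    ranks = stats.rankdata(data)  # average ranks for ties
    n = len(data)
    # Tie correction: sum of (t^3 - t) over blocks of tied values.
    _, counts = np.unique(data, return_counts=True)
    tie_term = np.sum(counts**3 - counts) / (12 * (n - 1))
    mean_rank, size, start = {}, {}, 0
    for g in names:  # same order as the concatenation above
        m = len(groups[g])
        mean_rank[g] = ranks[start:start + m].mean()
        size[g] = m
        start += m
    pairs = list(combinations(names, 2))
    adjusted = {}
    for a, b in pairs:
        se = np.sqrt((n * (n + 1) / 12 - tie_term) * (1 / size[a] + 1 / size[b]))
        z = (mean_rank[a] - mean_rank[b]) / se
        p = 2 * stats.norm.sf(abs(z))
        adjusted[(a, b)] = min(1.0, p * len(pairs))
    return adjusted


# Made-up summated experience scores for three hypothetical groups.
groups = {"DA": [20, 25, 22, 28, 19], "DS": [30, 34, 29, 41, 36], "CS": [33, 38, 35, 40, 37]}
h, p = stats.kruskal(*groups.values())
n_obs = sum(len(v) for v in groups.values())
print(f"H={h:.2f}, p={p:.4f}, eta^2_H={eta_squared_h(h, len(groups), n_obs):.3f}")
for pair, p_adj in dunn_bonferroni(groups).items():
    print(pair, round(p_adj, 4))
```

Bonferroni is the simplest adjustment and is conservative; with only a few groups, as here, the loss of power is modest.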

Figure 3. Student self-reported practical experience level after the ProgSD Bootcamp course across master’s groups.

Table 7. Descriptive analysis of postgraduate groups self-reported practical experience levels after the course.

What students like/dislike the most about the modified team-based learning approach to teaching

Analysis of the open-ended responses generated four themes suggesting that the modified team-based learning approach to teaching 1) fostered collaborative learning with positive team dynamics, 2) improved student learning through social interaction and 3) kept students motivated and engaged; however, 4) there was some resistance to the new teaching methods.

Collaborative learning and team dynamics

One of the components of team-based learning is placing students in teams from day one to foster collaboration. Many respondents valued this collaborative aspect, which helped them build team skills through discussion and communication with their peers during problem-solving tasks and the team project. The following comments summarise the general feeling: “That’s really a good way to improve ability of cooperating with others, and learn from others while solving problem together” (student 18); another student noted, “This helped me develop teamwork and communication skills” (student 49). Students also liked that “teams were assigned in such a way that the level of knowledge among the team members was calibrated” (student 22).

However, a small number of students did not share this positive experience of working with others, highlighting the importance of positive team dynamics in collaboration. Imbalance of knowledge was the primary source of frustration, as some felt they carried an unfair load because of some teammates’ limited programming skills, as the following quotes illustrate:

“I don’t like the team project because we have the imbalance knowledge and cannot coordinate well” (student 74).

“Other members in my team know nothing about it [project]. So, I have to spend a lot of time doing more coding as well as teaching them what they don’t know” (student 20).

“Team members have different backgrounds; sometimes in order to finish team project in a high quality, some people have to take most of the work” (student 73).

This apparent lack of interaction, which one student also attributed to the language barrier (“there were language gaps that made working in a team difficult”, student 63), limited the time teams could spend on discussion during the team application exercises (tAPPs). Because of the number of exercises per tAPP, many students could spend only a little time on each problem, prompting further comments about limited discussion time, even though some students found the easier questions “kind of time-wasting” (student 17). The physical setting of the modified TBL activities also affected team dynamics during tAPPs, as “the layout of the classroom wasn’t very good for group work because you couldn’t discuss with the entire team at once” (student 33). It was “very inconvenient as lecture hall was always clustered and teams struggled to communicate” (student 47).

Social interaction

This theme revealed that the modified TBL encouraged social interaction, as it gave students the opportunity to embrace diversity: to “Meet new students, whom I would not talk to otherwise. Lovely people that I would not know them if it was not for this team-based learning format”, to “make friends”, “getting to know other people”, “got a chance to meet some classmates”, “Helped me interact with my classmates”, and to “practise English” (comments from several students). Although the impact of the social environment on learning is well recognised and placed at the very centre of learning, simply being with more knowledgeable people is not enough; what matters is interacting with them. Indeed, according to Vygotsky, social interaction is important in cognitive development. His theory of the Zone of Proximal Development (ZPD) describes the dynamic relationship between learning and development: it reveals the learner’s potential and shows that a learner can achieve higher performance with help from peers. This aspect of social interaction leads to a sense of community in which students take part in a community of practice, which Lave and Wenger (1990) defined as a community where people learn from each other. Many students felt this co-construction of learning, mentioning “knowledge exchange”, “sharing knowledge” and “learning from others”, which indicates that the modified TBL instructional approach helped create shared learning experiences. It also strengthened deeper cognitive skills, with “Programming skills strengthened” (student 88) through “exposure to different opinions and views” (student 90). The modified TBL activities fostered student-centred learning and interaction, leading to the successful completion of activities, and students acknowledged that they would have struggled without that interaction and support from team members.

It kept me motivated to work

Student engagement, a sociological and psychological concept (Kahu, 2013), can capture student behaviours, including student satisfaction. Its components include a sense of belonging (team members have close and positive relationships), interaction and enthusiasm (Kahu, 2013). This theme revealed an interplay between student engagement components and student satisfaction, indicating that teaching and learning activities that foster engagement through (social) interaction can lead to higher student satisfaction and influence student participation in those activities. Students felt that the modified TBL activities kept them motivated and engaged, as some expressed: “It [modified TBL] kept me motivated to work” (student 52), “It was fun in lectures” (student 66), the course was “fun and useful” (student 33) and “engaging” (student 93), “It was easier to pay attention to every lecture because we were solving problems” (student 65) and “I won’t fall asleep” (student 53).

Resistance to change or additional skills needs?

Although they liked some aspects of the modified TBL, a very small number of respondents disliked “That it [tAPP] replaced the actual lecture”. They felt that not having normal lectures and being asked to take the iRATs meant they were teaching themselves. iRATs require students to read the material before attending the class; to scaffold this preparation, an ungraded practice quiz is provided for each weekly topic. This less positive view came mainly from DA students, who felt that the subjects were challenging to read before the tAPP because they did not have a CS background.

Another source of concern was the type of problem-solving exercises. Some students felt that doing exercises such as hand-coding, Parsons puzzles or debugging on paper was not good value for their time. This is summarised in these comments: “I don’t think having to handwrite code in lectures is as useful for learning to code than practising in labs etc” (student 65), “Sometimes the exercises are not useful, for example, in my opinion, arranging code pieces in the right order” (student 22), and “I don’t think handwriting answers is an effective way to learn a practical course such as this. It’s difficult to know if your code is correct if you can’t run it” (student 46).

Discussion

ProgSD, a Bootcamp course developed to standardise the different skill sets of master’s students, was taught using an instructional approach that was unfamiliar to many students. Using a modified form of Team-Based Learning allowed many topics to be taught in a very short period, within the limitations of the academic timetable, while fostering team interaction and engagement. This study explored postgraduate students’ perceptions of the modified TBL activities and teamwork, and whether the Bootcamp course could increase students’ practical experience in the taught topics. The study also sought to find out whether a modified TBL could bring the same benefits as traditional Team-Based Learning. Students’ comments reinforced the quantitative results.

Positive perceptions of modified TBL activities and teamwork across the different master’s student groups

Overall, most students had positive perceptions of teamwork and the modified TBL activities, with no statistically significant difference across the different master’s groups. The results suggest that, like the original TBL, modified Team-Based Learning can be an effective collaborative learning methodology in higher education (Vlachopoulos et al., 2020). In this study, individual readiness assurance tests (iRATs; quizzes taken before the lectures) were useful learning activities and an effective way to practise what students had learned. Elnagar and Ali (2013) similarly found that the individual readiness assurance test played an important role in students’ confidence and readiness for their exams. We also concur with other literature that team application exercises (tAPPs) have an impact on student learning (Coyne et al., 2018; Michaelsen et al., 2008). Nonetheless, our findings suggest that while a modified team-based learning approach may have a positive impact on the student learning experience because of the collaborative nature of activities such as tAPPs, sustaining positive team dynamics, with skills and experience evenly distributed across team members (Leupen, 2020), is crucial. Furthermore, in agreement with a study of students’ perceptions of the value of social interaction, which found that social interaction improved student learning by enhancing students’ knowledge (Hurst et al., 2013), we believe that our modified TBL activities fostered student-centred learning and interaction with peers, which helped students learn and led to the successful completion of activities. Many students acknowledged that without that interaction and support from their team members, they would have struggled.
This supports the notion that co-construction of learning is a crucial aspect of team-based learning (Swanson et al., 2019): as students interact with each other, they consciously or subconsciously increase their learning (Gray & DiLoreto, 2016). Students’ comments also suggest that their positive perceptions may have been influenced by taking part in non-traditional lectures built around active learning activities such as those in the modified TBL approach described in this study. A psychosocial construct that emerged was student motivation, which played a critical role in student engagement in the modified TBL activities. Indeed, student engagement has been linked to learning and positive emotions (Jeno et al., 2017) and can mediate the effect of learner interaction on perceived learning (Gray & DiLoreto, 2016).
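Leupen (2020) stresses that skills and experience should be evenly distributed across team members. As a minimal sketch of one common way to do this, assuming each student has a single numeric prior-experience score (for example, the self-rated practical experience collected at the start of the course), a “snake” draft ranks students by score and deals them out to teams in alternating directions. The `Student` type and the function names are hypothetical, and this is not necessarily how the ProgSD teams were formed.

```python
from dataclasses import dataclass


@dataclass
class Student:
    # Hypothetical record: a student identifier and one prior-experience score.
    name: str
    experience: float


def snake_draft(students: list[Student], n_teams: int) -> list[list[Student]]:
    """Deal students into n_teams so that each team gets a mix of skill levels.

    Students are ranked from most to least experienced and dealt in a 'snake'
    order (teams 0..n-1, then n-1..0, and so on). Team sizes differ by at most one.
    """
    if n_teams < 1:
        raise ValueError("n_teams must be at least 1")
    ranked = sorted(students, key=lambda s: s.experience, reverse=True)
    teams: list[list[Student]] = [[] for _ in range(n_teams)]
    for i, student in enumerate(ranked):
        round_no, pos = divmod(i, n_teams)
        # Even rounds deal left to right, odd rounds right to left.
        team_idx = pos if round_no % 2 == 0 else n_teams - 1 - pos
        teams[team_idx].append(student)
    return teams


def team_means(teams: list[list[Student]]) -> list[float]:
    """Mean experience score of each non-empty team, to check the balance."""
    return [sum(s.experience for s in team) / len(team) for team in teams if team]


if __name__ == "__main__":
    # Hypothetical cohort of eight students with self-rated scores.
    cohort = [Student(f"s{i}", x) for i, x in enumerate([5, 4, 4, 3, 2, 2, 1, 1])]
    teams = snake_draft(cohort, 2)
    print([[s.name for s in t] for t in teams])  # [['s0', 's3', 's4', 's7'], ['s1', 's2', 's5', 's6']]
    print(team_means(teams))  # [2.75, 2.75]
```

Ranking on a single score is a simplification; with several skills (for example, per-topic ratings) one would balance a weighted sum or check each skill separately.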

Discussions during team problem-solving exercises

Some students felt that “discussing and solving problems with my team members in the lecture” was not an effective way to practise what they had learned, did not provide a deeper understanding of the topic, and was not a valuable use of time. Similar observations have been made in the literature. For example, in their modified Team-Based Learning approach to introducing first-year students to computer engineering and problem-solving (programming), Selim and Bender (2020) observed that the change in skills reported for group/peer learning was lower after the intervention. Another study reported that some students did not feel that being in a team helped them learn course material more effectively than studying alone or improved their grades (Siah et al., 2019).

In this study, however, the lower ratings for the team discussion items may have had other causes. During tAPP, students had to solve two to four problems per one-hour class and could spend only a very short time discussing each problem. Students’ teamwork experience and discussion were also affected by the layout of the lecture room: students sat in rows rather than around a table, and in some cases had to stand to communicate with their team members. The conditions were not ideal, but there was no way to remedy them at the time. In a typical TBL environment, teams sit around tables with screens that make the content visible wherever they sit. Finally, this negative experience of team discussions may also reflect that we, as instructors, did not encourage students to question one another or promote student accountability (Leupen et al., 2020).

Improving students’ practical skills through Bootcamp courses

At the end of the Bootcamp, students felt more confident about their upcoming Semester 2 courses after taking ProgSD. In Semester 2, most students agreed that ProgSD had helped them prepare for the S2 courses they were taking, as they were able to apply the skills gained through the Bootcamp in those courses. The literature reports similar observations: Bootcamps help improve skills and self-confidence in applying knowledge and skills important to students’ majors (Alavi et al., 2020; Fronza et al., 2020; Kenny et al., 2018; Lerner et al., 2018). Such increases in confidence can last for at least six months (Selden et al., 2013), which, together with the S2 results, supports the idea that the ProgSD Bootcamp had a positive effect on the retention of student knowledge and skills. Knowledge retention may also have been supported by the modified TBL instructional approach used in this study, as Team-Based Learning has been positively linked to knowledge retention (Ozgonul & Alimoglu, 2019).

Teaching a mixed-ability class

Compared to the other master’s groups, the results from the DA students, though not negative, were the least positive. Individual item scores, supported by DA students’ qualitative comments, suggested a preference for traditional lectures over the modified TBL activities and working in teams to solve problems. This agrees with other studies that have highlighted that resistance to a new teaching style is common (Clerici-Arias, 2021; Lasserre, 2009). At the same time, teaching a mixed-ability class is challenging in itself (Bennedsen & Caspersen, 2007; Mohamed, 2019). DA students may have felt this way because they have a Maths background and tend to have no or minimal prior practical experience in the CS subjects covered. They generally struggled with the ProgSD content much more than the others, which likely coloured their assessment of the instructional approach used in this study. Strategies used to help overcome the challenges of a mixed-ability class include active learning activities and getting students to work with their peers (Mohamed, 2019). Our modified TBL approach encompassed these activities; however, these students may not have felt the full benefits because of its intensity. In addition to ProgSD, they took two other courses during the same period, and ProgSD covers six subjects and six assessment elements in six weeks. Had the intervention focused on a single subject, as in a typical Bootcamp (Wilson, 2018), their experience might have been as positive as that of the other master’s groups. Addressing these issues will require considering the specific background and needs of the DA cohort at the start of the course, rather than treating all students with the same set of initial assumptions (Lasserre, 2009). One example would be providing extra programming support.

Types of team application exercises (tAPPs)

ProgSD is a highly practice-focused course, so the team application exercises, a critical component of team-based learning, had to be adapted to fit it. Some students complained about the types of tAPP exercises, which, as one student remarked, were not “as useful for learning to code than practising in labs”, even though each tAPP session was already accompanied by a dedicated 2-hour lab practice session. The tAPP exercises included hand-coding, Parsons puzzles, code debugging, and problem-solving from scratch, using only pencil and paper. Code comprehension, practised through exercises such as these, is an important developmental step in programming that enhances computational thinking skills (Cutts et al., 2019). Computational thinking is a foundational competency for problem-solving in STEM education (Grover & Pea, 2018). However, one issue identified in the CS education literature is that goal-directed problem-solving labs can mislead novices into believing that the most important achievement in programming is getting programs to work (Cutts et al., 2019). Cutts et al. found that novices were able to develop their skills using pencil-and-paper exercises away from computers. In our study, however, the comments came from both experienced and novice programmers, and both groups may have been unfamiliar with these types of exercises that foster computational thinking, or with the notion of computational thinking itself. This is not surprising: although the notion of computational thinking has existed for a long time, only in recent years has there been increasing attention to infusing it into various spheres of education, including schools (Herro et al., 2021).
We recognise that our students’ comments may also reflect our failure, as instructors, to reiterate why these types of exercises were necessary for their learning and to emphasise the notion of computational thinking.
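For readers unfamiliar with the format, a Parsons puzzle (Parsons & Haden, 2006) gives students the lines of a correct program in shuffled order and asks them to rearrange them. The sketch below is illustrative only: it assumes a puzzle is stored as its correctly ordered lines, and the helper names and the sample `total` function are hypothetical. In ProgSD these exercises were done on paper, so the checker merely stands in for the instructor's marking.

```python
import random
from typing import Optional


def make_parsons_puzzle(solution: list[str], seed: int = 0) -> list[str]:
    """Return a shuffled copy of the solution's lines (the puzzle given to students)."""
    lines = list(solution)
    random.Random(seed).shuffle(lines)
    return lines


def first_misplaced_line(solution: list[str], attempt: list[str]) -> Optional[int]:
    """Index of the first line out of place in the attempt, or None if fully correct."""
    if sorted(attempt) != sorted(solution):
        raise ValueError("the attempt must use exactly the puzzle's lines")
    for i, (expected, given) in enumerate(zip(solution, attempt)):
        if expected != given:
            return i
    return None


# Hypothetical puzzle: a function that sums a list.
SOLUTION = [
    "def total(xs):",
    "    s = 0",
    "    for x in xs:",
    "        s += x",
    "    return s",
]

if __name__ == "__main__":
    print("\n".join(make_parsons_puzzle(SOLUTION, seed=1)))
    attempt = [SOLUTION[0], SOLUTION[2], SOLUTION[1], SOLUTION[3], SOLUTION[4]]
    print(first_misplaced_line(SOLUTION, attempt))  # 1
```

Passing a fixed seed makes the shuffled order reproducible, so every team would receive the same puzzle.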

Additionally, the conceptions that some experienced students had formed from the way they learned programming may have conflicted with how these concepts were taught in ProgSD. We should have fostered “situational interest” (Thomas & Kirby, 2020) in these activities to help students reconstruct or integrate their knowledge. Situational interest refers to the “levels of interest elicited by characteristics of an immediate learning task”. In their study of the factors that contribute to meaningful conceptual change, Thomas and Kirby (2020) found that situational interest is essential for changing learners’ conceptual knowledge.

Conclusions

This study presents a successful implementation of modified Team-Based Learning in a six-week, multi-subject, Bootcamp-style CS course (ProgSD). Overall, despite the fast pace and intensity of the ProgSD Bootcamp course, modified TBL, the instructional approach used to teach it, was perceived favourably by students, who also believed that the Bootcamp course itself helped improve their technical skills. Our study indicates that our modified TBL strategy can produce the same benefits as strictly structured TBL. Students valued interacting with others, the opportunity to learn from each other, gaining multiple perspectives from their peers and solving problems together. The findings also showed that a well-formed team, an appropriate setting for activities and a clear explanation of the choice of tAPP exercises are important for maintaining student engagement. Some issues related to teaching a mixed-ability class were also highlighted. We intend to take these findings into account in the next implementation of modified TBL in the same course next academic year, with some face-to-face activities adapted for online delivery.

Limitations and future research

The main limitation is that the study involved participants from a single university and a single course. One possible bias is that those who chose to complete the self-reported questionnaires may have favoured the modified TBL format and the Bootcamp-style course. The results may not reflect a wider population, and the qualitative comments may not be generalisable. However, we hope that the findings may inform the future development of student skill sets beyond this context and encourage future research into the affordances of active learning methods such as modified TBL in postgraduate education. The study has shown that a modified team-based learning approach can be as successful and as beneficial to students as traditional TBL. Further studies could investigate which TBL components are most suitable for CS education or other specific STEM subjects. Additionally, little research has explored postgraduate education, and our results showed that handling the diverse skill sets of incoming students is not easy. Future research should explore the extent of students’ skill sets and how these can be standardised to ensure students’ readiness for their master’s programme.

Practical implications

Computing Science courses tend to be highly practice-focused and may be unsuitable for traditional TBL. Implementing a modified version of TBL gives practitioners the flexibility to redesign their courses and adopt the TBL elements that best suit their needs while retaining the essence and benefits of TBL. The instructional approach chosen for the course must foster a sense of belonging by enabling student interaction and fostering active student engagement, collaboration and teamwork. Students are more engaged and motivated to complete tasks and learn from each other when they are in a well-formed team created to nurture engagement and a sense of belonging. Using modified TBL in a Bootcamp-style course might be of value to other practitioners who face the challenges posed by a diverse student body and are dealing with the following issues: students from different backgrounds with a variety of skill sets; large classes; a large volume of teaching material and a lack of time to cover all the topics, or a tight curriculum; and the need to increase student performance. Finally, although this course was meant for master’s students with undergraduate degrees, most of our students had the same issue as students taking introductory programming courses in Year 1, namely no or minimal programming skills in one or more of the areas addressed. This can result in a high drop-out rate (Beaubouef & Mason, 2005; Walker, 2017; Watson & Li, 2014). A modified TBL approach to teaching might help improve retention in those courses (Walker, 2017).

Our proposed modified TBL format in response to Covid-19

Given the dramatic change in practice because of Covid-19, some elements of the modified TBL will change. While pre-reading material is already available online, the difficulty lies in delivering the tAPP, which requires face-to-face interaction with team members sitting together to discuss and solve problems, so the emphasis on teacher-facilitated peer-to-peer learning could be disrupted. One approach, implemented by Jumat et al. (2020), is to have students remain on video calls, using a chat feature if needed during the session, while choosing their own platform for communicating with their teammates. This enables students to remain connected with their peers, even during class-wide discussions. Teams answer questions on a shared document and present their answers through a video conferencing tool. Jumat et al. based this approach on Bandura’s social cognitive theory, which argues that individual consciousness can only be achieved through communicative interactions. However, they recognised that their approach was more suitable for teaching theoretical knowledge. Moreover, they had only 80 students, formed into 13 teams.

Our course had 185 students last year, and the projection for this academic year (January 2020) is 250 students in more than 40 teams of 5 to 6. Furthermore, only two 1-hour sessions per week are reserved for face-to-face classroom interaction. This means that teams could work on their tAPP before attending the one-hour video conferencing session and use the live session to present their answers to the class. However, the main limitation is that there will be less time for discussion, which is not an issue for courses with a) more time in hand, b) a small number of students, or c) theory-focused content.
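The projected team numbers can be checked with a little arithmetic: n teams of 5 to 6 members can hold 250 students only if 5n ≤ 250 ≤ 6n, that is, 42 ≤ n ≤ 50, which is consistent with “more than 40 teams”. A minimal sketch of that calculation, with hypothetical function names, also shows the most even split for a chosen number of teams; for 42 teams it gives 40 teams of six and 2 teams of five.

```python
def feasible_team_counts(n_students: int, min_size: int, max_size: int) -> range:
    """All team counts n for which every team can have min_size..max_size members.

    n teams can hold n_students only if n * min_size <= n_students <= n * max_size.
    The range is empty when no team count works.
    """
    if not 1 <= min_size <= max_size:
        raise ValueError("need 1 <= min_size <= max_size")
    lowest = -(-n_students // max_size)  # ceiling of n_students / max_size
    highest = n_students // min_size     # floor of n_students / min_size
    return range(lowest, highest + 1)


def even_team_sizes(n_students: int, n_teams: int) -> dict[int, int]:
    """Map team size -> number of teams when students are split as evenly as possible."""
    if not 1 <= n_teams <= n_students:
        raise ValueError("need 1 <= n_teams <= n_students")
    base, extra = divmod(n_students, n_teams)
    sizes = {base: n_teams - extra}
    if extra:
        sizes[base + 1] = extra  # 'extra' teams take one additional member
    return sizes


if __name__ == "__main__":
    print(list(feasible_team_counts(250, 5, 6)))  # [42, 43, 44, 45, 46, 47, 48, 49, 50]
    print(even_team_sizes(250, 42))               # {5: 2, 6: 40}
```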

For our practice-intensive course, we will have teams peer-review other teams’ code or tAPP answers before the session and use the live video conference session to present solutions, highlight key issues and discuss them. This option should also help address the lack of time for tAPP and foster discussion. Finally, Jumat et al. suggest using an all-encompassing video conferencing platform that enables all TBL elements to be implemented without the possible disruption of switching from one platform to another.
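One simple way to allocate such peer reviews, sketched here under the assumption that teams are numbered 0 to n - 1 and each team reviews k others, is a rotating offset: team i reviews teams i + 1 to i + k (modulo n). The function name and the offset scheme are illustrative assumptions, not part of the course design described above.

```python
def review_assignments(n_teams: int, reviews_per_team: int = 1) -> dict[int, list[int]]:
    """Map each reviewing team to the teams whose tAPP work it reviews.

    Team i reviews teams (i + 1) % n through (i + k) % n. Since every offset lies
    in 1..n-1, no team reviews itself, no reviewer gets the same team twice, and
    every team is reviewed exactly k times.
    """
    if not 1 <= reviews_per_team < n_teams:
        raise ValueError("need 1 <= reviews_per_team < n_teams")
    return {
        team: [(team + offset) % n_teams for offset in range(1, reviews_per_team + 1)]
        for team in range(n_teams)
    }


if __name__ == "__main__":
    for reviewer, reviewed in review_assignments(4, reviews_per_team=2).items():
        print(f"team {reviewer} reviews teams {reviewed}")
```

With a fixed offset, each team always reviews the next k teams in the numbering; shuffling the team numbers first (for example, with `random.shuffle`) would vary who reviews whom from week to week.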

An extension of this work could investigate students’ perceptions of this new format and whether it achieves the same educational objectives as the face-to-face approach. It could also investigate the perceptions of the lecturers teaching the courses for which the Bootcamp course prepares students.

Ethics approval

Ethical approval was obtained from the University’s Research Ethics Committee; application number: 300190051.

Availability of data and material

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Correction Statement

This article has been republished with minor changes. These changes do not impact the academic content of the article.

Additional information

Funding

References

- Alavi, Z., Meehan, K., Buffardi, K., Johnson, W. R., & Greene, J. (2020, June). Assessing a summer engineering math and projects Bootcamp to improve retention and graduation rates in engineering and computer science. Paper presented at 2020 ASEE Virtual Annual Conference Content Access, Virtual Online, 1–17. https://peer.asee.org/assessing-a-summer-engineering-math-and-projects-bootcamp-to-improve-retention-and-graduation-rates-in-engineering-and-computer-science.pdf

- Beaubouef, T., & Mason, J. (2005). Why the high attrition rate for computer science students: Some thoughts and observations. SIGCSE Bulletin, 37(2), 103–106. https://doi.org/10.1145/1083431.1083474

- Bennedsen, J., & Caspersen, M. E. (2007). Failure rates in introductory programming. SIGCSE Bulletin, 39(2), 32–36. https://doi.org/10.1145/1272848.1272879

- Biggs, J., & Tang, C. (2011). Teaching for quality learning at university (4th ed.). McGraw Hill, Society for Research into Higher Education & Open University Press.

- Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

- Braun, V., & Clarke, V. (2019). Reflecting on reflexive thematic analysis. Qualitative Research in Sport, Exercise and Health, 11(4), 589–597. https://doi.org/10.1080/2159676X.2019.1628806

- Burgess, A., Roberts, C., Ayton, T., & Mellis, C. (2018). Implementation of modified team-based learning within a problem based learning medical curriculum: A focus group study. BMC Medical Education, 18(74), 1–7. https://doi.org/10.1186/s12909-018-1172-8

- Camp, T., Adrion, W. R., Bizot, B., Davidson, S., Hall, M., Hambrusch, S., Walker, E., & Zweben, S. (2017). Generation CS: The challenges of and responses to the enrollment surge. ACM Inroads, 8(4), 59–65. https://doi.org/10.1145/3141773

- Carbrey, J. M., Grochowski, C. O., Cawley, J., & Engle, D. L. (2015). A comparison of the effectiveness of the team-based learning readiness assessments completed at home to those completed in class. Journal of Educational Evaluation for Health Professions, 12(34), 1–5. https://doi.org/10.3352/jeehp.2015.12.34

- Christensen, J., Harrison, J. L., Hollindale, J., & Wood, K. (2019). Implementing team-based learning (TBL) in accounting courses. Accounting Education, 28(2), 195–219. https://doi.org/10.1080/09639284.2018.1535986

- Clerici-Arias, M. (2021). Transitioning to a team-based learning principles course. The Journal of Economic Education, 1–8. Advance online publication. https://doi.org/10.1080/00220485.2021.1925184

- Coyne, L., Takemoto, J. K., Parmentier, B. L., Merritt, T., & Sharpton, R. A. (2018). Exploring virtual reality as a platform for distance team-based learning. Currents in Pharmacy Teaching & Learning, 10(10), 1384–1390. https://doi.org/10.1016/j.cptl.2018.07.005

- Cutts, Q., Barr, M., Bikanga Ada, M., Donaldson, P., Draper, S., Parkinson, J., Singer, J., & Sundin, L. (2019). Experience report: Thinkathon - countering an “I got it working” mentality with pencil-and-paper exercises. ACM Inroads, 10(4), 66–73. https://doi.org/10.1145/3368563

- Diniz, P., Veiga, A., & Rocha, G. (2019). Adapting team-based learning for application in telecommunications engineering using software-defined radio. The International Journal of Electrical Engineering & Education, 56(3), 238–250. https://doi.org/10.1177/0020720918799524

- El-Banna, M. M., Whitlow, M., & McNelis, A. M. (2020). Improving pharmacology standardized test and final examination scores through team-based learning. Nurse Educator, 45(1), 47–50. https://doi.org/10.1097/NNE.0000000000000671

- Elnagar, A., & Ali, M. S. (2013). Survey of student perceptions of a modified team based learning approach on an information technology course. 2013 Palestinian International Conference on Information and Communication Technology, Gaza, 2013. Institute of Electrical and Electronics Engineers, 22–27. https://doi.org/10.1109/PICICT.2013.14

- Fronza, I., Corral, L., Pahl, C., & Iaccarino, G. (2020). Evaluating the effectiveness of a coding camp through the analysis of a follow-up project. In Proceedings of the 21st Annual Conference on Information Technology Education (SIGITE ’20). Association for Computing Machinery, New York, NY, USA, 248–253. https://doi.org/10.1145/3368308.3415391

- Ghadiri, K., Qayoumi, M. H., Junn, E., Hsu, P., & Sujitparapitaya, S. (2013). The transformative potential of blended learning using MIT edX’s 6.002x online MOOC content combined with student team‐based learning in class. MIT Open Learning. Retrieved October 23, 2020, from https://www.edx.org/sites/default/files/upload/ed-tech-paper.pdf

- Gray, J. A., & DiLoreto, M. (2016). The effects of student engagement, student satisfaction, and perceived learning in online learning environments. International Journal of Educational Leadership Preparation, 11(1), 1–20. Retrieved May 19, 2021 from http://files.eric.ed.gov/fulltext/EJ1103654.pdf

- Grover, S., & Pea, R. (2018). Computational thinking: A competency whose time has come. In S. Sentance, E. Barendsen, & C. Schulte (Eds.), Computer science education: Perspectives on teaching and learning in school (pp. 19–38). Bloomsbury.

- Herro, D., Quigley, C., Plank, H., & Abimbade, O. (2021). Understanding students’ social interactions during making activities designed to promote computational thinking. The Journal of Educational Research, 114(2), 183–195. https://doi.org/10.1080/00220671.2021.1884824

- Hurst, B., Wallace, R., & Nixon, S. B. (2013). The impact of social interaction on student learning. Reading Horizons: A Journal of Literacy and Language Arts, 52(4), 375–398. Retrieved May 19, 2021 from https://scholarworks.wmich.edu/reading_horizons/vol52/iss4/5

- Inuwa, I. M., Al-Rawahy, M., Roychoudhry, S., & Taranikanti, V. (2012). Implementing a modified team-based learning strategy in the first phase of an outcome-based curriculum–challenges and prospects. Medical Teacher, 34(7), e492–e499. https://doi.org/10.3109/0142159X.2012.668633

- Jeno, L. M., Raaheim, A., Kristensen, S. M., Kristensen, K. D., Hole, T. N., Haugland, M. J., & Mæland, S. (2017). The relative effect of team-based learning on motivation and learning: A self-determination theory perspective. CBE Life Sciences Education, 16(4), ar59. https://doi.org/10.1187/cbe.17-03-0055

- Jumat, M. R., Wong, P., Foo, K. X., Lee, I., Goh, S., Ganapathy, S., Tan, T. Y., Loh, A., Yeo, Y. C., Chao, Y., Cheng, L. T., Lai, S. H., Goh, S. H., Compton, S., & Hwang, N. C. (2020). From trial to implementation, bringing team-based learning online: Duke-NUS Medical School’s response to the COVID-19 pandemic. Medical Science Educator, 1–6. Advance online publication. https://doi.org/10.1007/s40670-020-01039-3

- Kahu, E. R. (2013). Framing student engagement in higher education. Studies in Higher Education, 38(5), 758–773. https://doi.org/10.1080/03075079.2011.598505

- Kenny, L., Booth, K., Freystaetter, K., Wood, G., Reynolds, G., Rathinam, S., & Moorjani, N. (2018). Training cardiothoracic surgeons of the future: The UK experience. The Journal of Thoracic and Cardiovascular Surgery, 155(6), 2526–2538. https://doi.org/10.1016/j.jtcvs.2018.01.088

- Kirkpatrick, M. S. (2017). Student perspectives of team-based learning in a CS course: Summary of qualitative findings. In Proceedings of the 2017 ACM SIGCSE Technical Symposium on Computer Science Education (SIGCSE ’17), Seattle, Washington, USA. Association for Computing Machinery, New York, NY, USA, 327–332. https://doi.org/10.1145/3017680.3017699

- Kirkpatrick, M. S., & Prins, S. (2015). Using the readiness assurance process and metacognition in an operating systems course. In Proceedings of the 2015 ACM Conference on Innovation and Technology in Computer Science Education (ITiCSE ’15). Association for Computing Machinery, New York, NY, USA, 183–188. https://doi.org/10.1145/2729094.2742594

- Lasserre, P. (2009). Adaptation of team-based learning on a first term programming class. SIGCSE Bulletin, 41(3), 186–190. https://doi.org/10.1145/1595496.1562937

- Lasserre, P., & Szostak, C. (2011). Effects of team-based learning on a CS1 course. In Proceedings of the 16th annual joint conference on Innovation and technology in computer science education (ITiCSE ’11), Darmstadt, Germany. Association for Computing Machinery, New York, NY, USA, 133–137. https://doi.org/10.1145/1999747.1999787

- Lave, J., & Wenger, E. (1990). Situated learning: Legitimate peripheral participation. Cambridge University Press.

- Lerner, V., Higgins, E. E., & Winkel, A. (2018). Re-boot: Simulation elective for medical students as preparation Bootcamp for obstetrics and gynecology residency. Cureus, 10(6), e2811. https://doi.org/10.7759/cureus.2811

- Leupen, S. (2020). Team-based learning in STEM and the health sciences. In J. Mintzes & E. Walter (Eds.), Active learning in college science (pp. 219–232). Springer. https://doi.org/10.1007/978-3-030-33600-4_15

- Leupen, S. M., Kephart, K. L., & Hodges, L. C. (2020). Factors influencing quality of team discussion: Discourse analysis in an undergraduate team-based learning biology course. CBE Life Sciences Education, 19(1), ar7. https://doi.org/10.1187/cbe.19-06-0112

- Mastel-Smith, B., Kimzey, M., Garner, J., Shoair, O. A., Stocks, E., & Wallace, T. (2019). Dementia care boot camp: Interprofessional education for healthcare students. Journal of Interprofessional Care, 34(6), 799–811. https://doi.org/10.1080/13561820.2019.1696287

- Matalonga, S., Mousqués, G., & Bia, A. (2017). Deploying team-based learning at undergraduate software engineering courses. In Proceedings of the 1st International Workshop on Software Engineering Curricula for Millennials (SECM ’17), Buenos Aires, Argentina. IEEE Press, 9–15. https://doi.org/10.1109/SECM.2017.2

- Michaelsen, L. K., Knight, A. B., & Fink, L. D. (2004). Team-based learning: A transformative use of small groups in college teaching. Stylus.

- Michaelsen, L. K., Parmelee, D. X., & McMahon, K. K. (Eds.). (2008). Team-based learning for health professions education: A guide to using small groups for improving learning. Stylus.

- Mohamed, A. (2019). Designing a CS1 programming course for a mixed-ability class. In Proceedings of the Western Canadian Conference on Computing Education (WCCCE ’19), Calgary, AB, Canada. Association for Computing Machinery, New York, NY, USA, 1–6. https://doi.org/10.1145/3314994.3325084

- Ng, M., & Newpher, T. M. (2020). Comparing active learning to team-based learning in undergraduate neuroscience. Journal of Undergraduate Neuroscience Education: JUNE: A Publication of FUN, Faculty for Undergraduate Neuroscience, 18(2), A102–A111. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7438168/

- Ozgonul, L., & Alimoglu, M. K. (2019). Comparison of lecture and team-based learning in medical ethics education. Nursing Ethics, 26(3), 903–913. https://doi.org/10.1177/0969733017731916

- Pallant, J. (2020). SPSS survival manual: A step by step guide to data analysis using IBM SPSS (7th ed.). McGraw Hill.

- Parsons, D., & Haden, P. (2006). Parson’s programming puzzles: A fun and effective learning tool for first programming courses. In Proceedings of the 8th Australasian Conference on Computing Education, Hobart, Australia. Australian Computer Society, Inc, AUS, 52, 157–163.

- Rankin, Y. A., Lechner, T., & Gooch, B. (2007). Team-based pedagogy for CS102 using game design. In ACM SIGGRAPH 2007 educators program (SIGGRAPH ’07), San Diego, California, USA. Association for Computing Machinery, New York, NY, USA, 20–es. https://doi.org/10.1145/1282040.1282061

- Reimschisel, T., Herring, A. L., Huang, J., & Minor, T. J. (2017). A systematic review of the published literature on team-based learning in health professions education. Medical Teacher, 39(12), 1227–1237. https://doi.org/10.1080/0142159X.2017.1340636

- Selden, N. R., Anderson, V. C., McCartney, S., Origitano, T. C., Burchiel, K. J., & Barbaro, N. M. (2013). Society of neurological surgeons boot camp courses: Knowledge retention and relevance of hands-on learning after 6 months of postgraduate year 1 training. Journal of Neurosurgery, 119(3), 796–802. https://doi.org/10.3171/2013.3.JNS122114

- Selim, M. Y., & Bender, H. (2020). Flipping student ability differences from a liability to an advantage: A team-based learning approach to introduce computer engineering and problem solving (programming) to freshmen students. Electrical and Computer Engineering Conference Papers, Posters and Presentations, 98. Retrieved October 28, 2020, from https://lib.dr.iastate.edu/ece_conf/98

- Siah, C.-J., Lim, F.-P., Lim, A.-E., Lau, S.-T., & Tam, W. (2019). Efficacy of team-based learning in knowledge integration and attitudes among year-one nursing students: A pre- and post-test study. Collegian, 26(5), 556–561. https://doi.org/10.1016/j.colegn.2019.05.003

- Sudol-DeLyser, L. A., Stehlik, M., & Carver, S. (2012). Code comprehension problems as learning events. In Proceedings of the 17th ACM annual conference on Innovation and technology in computer science education (ITiCSE ’12). Association for Computing Machinery, New York, NY, USA, 81–86. https://doi.org/10.1145/2325296.2325319

- Swanson, E., McCulley, L. V., Osman, D. J., Lewis, N. S., & Solis, M. (2019). The effect of team-based learning on content knowledge: A meta-analysis. Active Learning in Higher Education, 20(1), 39–50. https://doi.org/10.1177/1469787417731201

- Terry, G., Hayfield, N., Clarke, V., & Braun, V. (2017). Thematic analysis. In C. Willig & W. Rogers (Eds.), The SAGE handbook of qualitative research in psychology (pp. 17–36). SAGE Publications Ltd. https://doi.org/10.4135/9781526405555

- Thalluri, J. (2016). Bridging the gap to first year health science: Early engagement enhances student satisfaction and success. Student Success, 7(1), 37–48. https://doi.org/10.5204/ssj.v7i1.305

- Thomas, C. L., & Kirby, L. A. J. (2020). Situational interest helps correct misconceptions: An investigation of conceptual change in university students. Instructional Science, 48(3), 223–241. https://doi.org/10.1007/s11251-020-09509-2

- Tu, Y., Dobbie, G., Warren, I., Meads, A., & Grout, C. (2018). An experience report on a Boot-Camp style programming course. In Proceedings of the 49th ACM Technical Symposium on Computer Science Education (SIGCSE ’18), Baltimore, Maryland, USA. Association for Computing Machinery, New York, NY, USA, 509–514. https://doi.org/10.1145/3159450.3159541

- Vasan, N. S., DeFouw, D. O., & Compton, S. (2009). A survey of student perceptions of team-based learning in anatomy curriculum: Favorable views unrelated to grades. Anatomical Sciences Education, 2(4), 150–155. https://doi.org/10.1002/ase.91

- Vlachopoulos, P., Jan, S. K., & Buckton, R. (2020). A case for team-based learning as an effective collaborative learning methodology in higher education. College Teaching, 69(2), 69–77. https://doi.org/10.1080/87567555.2020.1816889

- Walker, H. M. (2017, October). ACM Retention Committee: Retention of students in introductory computing courses: Curricular issues and approaches. ACM Inroads, 8(4), 14–16. https://doi.org/10.1145/3151936

- Watson, C., & Li, F. W. B. (2014). Failure rates in introductory programming revisited. In Proceedings of the 2014 conference on Innovation & technology in computer science education (ITiCSE ’14), Uppsala, Sweden. Association for Computing Machinery, New York, NY, USA, 39–44. https://doi.org/10.1145/2591708.2591749

- Wilson, G. A. (2018). Could a coding bootcamp experience prepare you for industry? IT Professional, 20(2), 83–87. https://doi.org/10.1109/MITP.2018.021921655

- Xu, W., & Zammit, K. (2020). Applying thematic analysis to education: A hybrid approach to interpreting data in practitioner research. International Journal of Qualitative Methods, 19, 1–9. https://doi.org/10.1177/1609406920918810