ABSTRACT

This study investigates differential effectiveness of teacher behaviour in relation to classroom composition by examining the extent to which teachers exhibit the same teaching skills when they teach mathematics in different classrooms within a school year. Twenty-six teachers who taught mathematics in more than one classroom of the same age group of students participated in this study. Quality of teaching was measured through external observations and student questionnaires. Student achievement in mathematics was measured at the beginning and at the end of the school year. Data about each teacher factor of the dynamic model, except orientation and dealing with student misbehaviour, were generalizable at the teacher level. Multilevel structural equation modelling analysis revealed that all teacher factors had an effect on student achievement. Prior achievement (aggregated at classroom level) had an effect on the functioning of two factors (orientation and dealing with misbehaviour). Implications of findings are drawn.

Introduction: research on differential teacher effectiveness

Educational effectiveness research (EER) conducted in many countries around the world reveals that the classroom level can explain more variance in student learning outcomes than the school level (Muijs et al., Citation2014; Scheerens, Citation2013). It was also found that most variance of student achievement situated at the classroom level can be explained by what teachers actually do in their classroom (Kyriakides et al., Citation2021; Rivkin et al., Citation2005; S. P. Wright et al., Citation1997). Consequently, instructional practice has become integrated into theoretical models of educational effectiveness which attempt to identify teacher factors associated with student learning outcomes, such as the dynamic model of educational effectiveness (Creemers & Kyriakides, Citation2008). By comparing the main theoretical frameworks of effective teaching developed within the field of EER, Panayiotou et al. (Citation2021) claimed that most frameworks not only refer to some common factors (e.g., classroom management, supportive learning environment, and questioning skills) but also treat teacher factors as generic in nature. Teacher factors are expected to affect student learning irrespective of the age of students, ethnicity, gender, and other contextual factors (Muijs et al., Citation2014).

Most effectiveness studies which have been conducted over the last 3 decades have attempted to uncover generic characteristics of effective teacher behaviour in the classroom (e.g., Brophy & Good, Citation1986; Creemers, Citation1994). As Campbell and colleagues (Citation2003) argue, effective teaching was constructed as “a Platonic ideal, free of contextual realities” (p. 352). As a consequence, EER has been criticized for the homogenized approach in studying teacher effectiveness, and a call was made, from the beginning of the new century, for increased attention to differential teacher effectiveness (e.g., Campbell et al., Citation2003; Kyriakides, Citation2007; Muijs et al., Citation2005; Scherer & Nilsen, Citation2019). Five possible dimensions of differentiation in teacher effectiveness have been reported in the literature. These refer to: (a) differences in subjects and/or components of subjects, (b) differences in students’ personal characteristics, (c) differences in students’ background variables, (d) differences in teacher roles, and (e) differences in cultural and organizational context (see Campbell et al., Citation2004). Until now, several studies have been carried out to examine the extent to which differential teacher effectiveness exists with regard to: (a) subjects and curriculum area, (b) promoting the cognitive progress of different groups of students according to background variables such as student socioeconomic status (SES) and ability, and (c) promoting the learning of students according to their personal characteristics (e.g., Campbell et al., Citation2004; Charalambous et al., Citation2019; Cohen et al., Citation2018; Kyriakides et al., Citation2019; Scharenberg et al., Citation2019). However, the evidence is somewhat mixed. In some domains, the evidence for differential effectiveness is quite strong and in others very weak (Muijs et al., Citation2014). 
For example, several studies investigating differential effectiveness reveal that teachers matter most for underprivileged and/or initially low-achieving students (e.g., Kyriakides et al., Citation2019; Scheerens & Bosker, Citation1997). However, other studies reveal that teachers tend to be equally effective in promoting different types of learning outcomes of different subjects (e.g., Charalambous et al., Citation2019; Kyriakides & Creemers, Citation2008). In addition, very little research appears to exist on whether the effectiveness of teachers depends on the context in which they have to work. Different syntheses of teacher effectiveness studies reveal that studies investigating the validity of different theoretical frameworks of EER attempted to identify effects of teacher factors on achievement of different age groups of students in different school subjects and types of learning outcomes, but almost none of these studies search for the extent to which teachers exhibit the same teaching skills when they are expected to teach in different classrooms during a school year.

In this context, the study reported here is concerned with differential teacher effectiveness, and, more specifically, it aims to identify the extent to which the classroom context has an impact on teacher effectiveness through the influence that the classroom composition may have on teachers’ ability to demonstrate their teaching skills. Specifically, the study collects data on the behaviour of secondary teachers who are asked to teach mathematics to the same age group of students but in more than one classroom of a single school. Some authors (e.g., Pacheco, 2009; Smylie et al., 2008) argue that classroom context may influence teachers’ practices. For instance, Whitehurst et al. (2014) discuss the case of a teacher who gets an unfair share of students who are challenging to teach because they are less well prepared academically, are not fluent in English, or have behavioural problems. As they argue, this teacher is going to have a tougher time performing well, for example, on questioning techniques, than the teacher in the gifted and talented classroom. In addition, Ladson-Billings (2009) questions whether “being an excellent teacher in a suburban school serving high-income students means that you will also be an excellent teacher in an urban school serving students who are low income, recent immigrants, and/or English language learners” (p. 220). Nevertheless, there is no study investigating the extent to which teachers are not equally effective when they are asked to teach in different classrooms of the same school within the same period (e.g., during 1 school year). Thus, to measure quality of teaching, the study reported here made use of the dynamic model of educational effectiveness, which refers to generic teacher factors and attempts to measure both quantitative and qualitative characteristics of effective teaching (Kyriakides et al., 2021).
Since this study is one of the few studies investigating the validity of the dynamic model in secondary education, we not only search for the impact of teacher factors on student achievement in mathematics but also investigate the extent to which teachers exhibit the same generic teaching skills (based on the teacher factors of the dynamic model) when they teach mathematics in different classrooms (of the same age group of students) within a school year. In this way, the assumption that teacher factors are generic in nature is tested and implications for research on teacher effectiveness and improvement are drawn.

Before proceeding to the importance of examining the classroom context effect on teaching skills, it is necessary to refer to the term “classroom context” in an attempt to identify characteristics of context. It seems that a common definition for classroom context among scholars and researchers does not exist. In particular, as Turner and Meyer (2000) observe in their literature review, there are nearly as many definitions as studies on the effect of the classroom context. Definitions vary extensively depending on the perspective (i.e., educational, sociological, psychological, or anthropological) that is used in examining the impact of the classroom context. This study makes use of the definition provided by Steinberg and Garrett (2016), who saw classroom context as “the settings in which teachers work and the students that they teach” (p. 293). This definition refers not only to the settings in which teachers work but also to the students to whom teachers are assigned, and it, therefore, takes into account the main dimensions through which differential teacher effectiveness is examined. It should also be acknowledged that a large number of contextual variables that may contribute to the variation in teachers’ in-class behaviour have been reported in the literature.
Some of these variables that are relevant for the definition of classroom context provided above are: class size (e.g., Blatchford et al., Citation2011; Smylie et al., Citation2008); number of adults and students in the classroom and the time of the day (e.g., very beginning or end of the school day), week, and year (e.g., Bell et al., Citation2012; Curby et al., Citation2011); composition/student characteristics (e.g., academic and language-cultural heterogeneity, the percentage of low-income students, students with special needs, or relatively older students as compared to the grade-level average; e.g., Grossman et al., Citation2014; Mihaly & McCaffrey, Citation2014; Smylie et al., Citation2008; Steinberg & Garrett, Citation2016; Whitehurst et al., Citation2014); and grade (e.g., Goe et al., Citation2008; Mihaly & McCaffrey, Citation2014). These contextual variables can be combined in many ways to define a particular classroom context in detail. In addition, as noted by Pacheco (Citation2009), classrooms are not isolated from the larger context of schools and the broader context of community. Both contexts may introduce new variables that may affect the teacher–student interaction. Although EER generated evidence about the effect that school and community factors may have on quality in education (e.g., Kyriakides et al., Citation2021; Reynolds et al., Citation2014), the extent to which within a school the context of the classroom can also have an effect on quality of teaching has not been investigated. Thus, this paper focuses on whether teachers exhibit the same teaching skills when they teach mathematics to the same age group of students but in more than one classroom of a single school. This question is more relevant when generic factors are used to evaluate teaching skills since such studies may also help us further develop the theoretical frameworks of EER by considering the effects of contextual variables on the functioning of effectiveness factors.

Investigating the classroom context effect on teaching skills: implications for EER and for teacher evaluation

In this section, we acknowledge that each study can take into account only a few aspects of the classroom context. It is also possible that the influence of one contextual variable on teaching may depend on which other variables are present as well. Therefore, studying classroom context requires a careful design of research. It is also acknowledged that several studies searched for the effect of classroom context on student achievement (e.g., Belfi et al., 2012; Opdenakker et al., 2002) and relevant theories have been developed (e.g., Televantou et al., 2021), but a review of the literature on this topic is beyond the scope of this paper, which is concerned with the impact of classroom context variables on teacher behaviour. Even though studying classroom context is complex and difficult, in this paper we search for the effect of specific classroom context variables on teacher behaviour in order to develop a deeper understanding of teaching in all its complexity (Kyriakides et al., 2021). In addition, the study reported here may assist the design of a teacher evaluation framework that may be both more responsive to the realities of teaching and more useful in the improvement of teachers’ teaching skills (Pacheco, 2009).

Although many variables may contribute to the variation in teachers’ in-class behaviour, one of the aims of this study is to explore the influence of students’ background factors (i.e., gender and ethnicity) and students’ prior achievement (aptitude) on teacher in-class behaviour, as this is measured by classroom observation and/or student questionnaire. As discussed in the next section, several studies demonstrate that these classroom context variables are related to teachers’ ability to use effective teaching practices (e.g., Göllner et al., 2020; Grossman et al., 2014; Lazarev & Newman, 2015). However, these studies did not examine whether teachers tend to exhibit the same teaching skills when they teach in more than one classroom of the same age group of students and there is variation in the composition of these classrooms in terms of students’ gender, ethnicity, and prior achievement. Even though, according to the national policy of the country where this study was conducted, students are expected to be assigned randomly to their classrooms, in reality the classroom placement of students is far from random, as parents often influence the class to which their children are assigned (Braun, 2005; Kyriakides, 2005). The non-random assignment of students to classrooms due to the influence of parents may lead to more homogeneous groups and, more specifically, to classrooms with more students with higher prior achievement and/or native students compared to other classrooms. We, therefore, assume that some teachers may face more challenges in relation to certain teaching skills (e.g., dealing with misbehaviour or providing appropriate orientation or modelling tasks) in some classrooms than in others.
For example, in classrooms with a high percentage of lower achieving students, teachers may find it more difficult to engage students in orientation tasks especially since this group of students is likely to be less motivated and may also not like to remain on task during the lesson.

It is important to clarify here that teachers are expected to differentiate their instruction according to the specific needs of the students of each class (Nurmi et al., Citation2012; Ritzema et al., Citation2016). The adaptation to the specific needs of each student or group of students is expected to increase the successful implementation of a teaching factor and eventually maximize its effect on student learning outcomes (Creemers & Kyriakides, Citation2008; Tomlinson, Citation1999). Nevertheless, our study is concerned with the skills of teachers and examines whether teachers are able to demonstrate the same generic teaching skills, such as providing students with application opportunities (but not the same application tasks), in all classes that they have to teach. This research question has implications for research on understanding effectiveness and reveals whether it is feasible to draw from generic theoretical models of teaching in developing the theoretical frameworks of EER. The generic character of teacher factors included in different models of EER is usually examined by looking at the extent to which these skills are associated with student achievement gains in different subjects and age group of students (e.g., Kyriakides & Creemers, Citation2008; Seidel & Shavelson, Citation2007). However, if teacher behaviour in different classrooms varies, then the generic nature of these skills could be questioned. Thus, studies investigating the consistency of teacher behaviour across different classrooms may have implications for differential teacher effectiveness research (Campbell et al., Citation2004) and for developing theoretical models that consider the impact of the context on the functioning of teacher factors.

Apart from testing the generic nature of the teacher factors included in the theoretical frameworks of EER, understanding the extent to which teachers exhibit the same teaching skills when they teach different groups of students is also crucial for policy reasons, especially when classroom observations are used to evaluate teachers. If teachers are not in a position to demonstrate their skills in specific contexts, then the decontextualized use of observation scores can be questioned. When measures of student achievement are used for teacher evaluation purposes, attempts are made to control for contextual variables related to student characteristics, especially those found to be associated with student test performance (e.g., through using value-added models which control for the effect of the socioeconomic status and prior achievement levels of students; McCaffrey et al., 2004). However, when classroom observation scores are used for teacher evaluation purposes, contextual variables are usually not taken into account (Whitehurst et al., 2014). This may be attributed to the fact that very little is known about the influence of contextual variables on quality of teaching and, more specifically, on the consistency of teacher in-class behaviour in different classrooms.

A review of studies examining the classroom context effect on teaching skills

Recently, some studies have attempted to investigate the extent to which measuring teaching skills by classroom observations and/or student questionnaire is influenced by classroom context variables, such as student achievement and student socioeconomic status. These studies found significant correlations between teachers’ observation scores and characteristics of the students of the classes they teach. Specifically, by using data from the administrative databases of four urban districts of the USA of moderate size (the names of the districts are not given), Whitehurst et al. (2014) found a strong statistical association between the prior achievement level of students and teacher ranking based on observation scores. In other words, they found that teachers with students with higher prior achievement receive observation scores that are higher on average compared to those received by teachers whose incoming students are at lower achievement levels. However, an overall score for measuring quality of teaching is used, and no information on the teaching skills which were considered in measuring quality of teaching is provided. It is, therefore, not possible to find out the aspects of teacher behaviour with which the classroom context variables were found to be related.

Similar results emerged from the study of Lazarev and Newman (Citation2015), who found consistent and pervasive correlations between class-average incoming achievement level and teacher observation scores (from two generic observation tools, Framework for Teaching [FFT] and Classroom Assessment Scoring System [CLASS]) by using data from the Measures of Effective Teaching (MET) project. However, Steinberg and Garrett (Citation2016), also by using data from the MET project (and specifically from the FFT observation tool), found that the incoming achievement of students matters differently for teachers in different classroom settings (English Language Arts [ELA] teachers compared to math teachers and subject-matter specialists compared to their generalist counterparts). By using data from the same project but focusing on a subject-specific observational tool (Protocol for Language Arts Teaching Observations [PLATO]), Grossman et al. (Citation2014) found that the composition of students in the class (i.e., race, income, English-language learning status and special education classification) is associated with teacher observation scores. Another study (Chaplin et al., Citation2014) showed that the ratings from both a generic observation tool (Research-Based Inclusive System of Evaluation [RISE]) and a student questionnaire (7Cs) are negatively associated with the percentage of low-income and racial/ethnic minority students. Thus, the studies presented here seem to support the hypothesis that the classroom context in which teachers work may play a role in determining teachers’ performance measured through classroom observations and/or student questionnaires.

However, most studies in this area suffered from some serious methodological limitations, as data have been obtained from a single class per teacher per year. Even when data from different school years were used (e.g., Whitehurst et al., Citation2014), researchers were not in a position to examine whether teachers’ in-class behaviour may vary over time due to their participation in effective professional development programmes. Moreover, researchers were not in a position to determine whether differences in observational ratings are related to student characteristics or to the systematically non-random sorting of teachers to classes of students. As mentioned above, in most districts parents often influence to which class and teacher their children are assigned (Braun, Citation2005). In addition, data from recent studies showed that schools tend to assign less experienced teachers to classrooms with lower achieving, minority, and poor students and the more experienced or effective teachers to higher achieving students (Kalogrides & Loeb, Citation2013; Kalogrides et al., Citation2013). As Steinberg and Garrett (Citation2016) found in their study, the non-random process by which teachers are often assigned to classes of students has a significant influence on measured performance based on classroom observation scores.

Therefore, the studies mentioned above can only demonstrate correlations between teaching quality and classroom context characteristics rather than causal effects of the classroom context on teaching quality. These correlational studies reveal that observation scores representing a certain teaching skill were more/less observed depending on the classroom context. However, in some contexts teachers may decide to offer fewer tasks associated with a skill and more tasks associated with another skill due to the specific learning needs of the students of this class. This limitation can be attributed to the interest of observation tools in only quantitative characteristics of the functioning of each factor. To avoid this limitation, the observation tools of this study are based on the dynamic model, and not only quantitative but also qualitative characteristics (including differentiation) of each factor are examined. In this way we can identify the extent to which observation scores (per factor and dimension) change according to the different classrooms that each teacher has to teach.

Given that several methods and instruments for measuring quality of teaching exist, whether the findings are differentiated according to the instrument that is used should also be investigated. One could claim that some instruments and/or teacher factors may be more sensitive to contextual variables than others. For example, some instruments may put emphasis only on measuring how frequently specific behaviours are observed (e.g., how frequently structuring tasks are provided) and not on their qualitative characteristics (e.g., the focus of the structuring tasks provided to students), which are also important in describing the complex nature of effective teaching (Kyriakides & Creemers, Citation2008). Thus, in this study we made use of all the instruments measuring the teacher factors included in the dynamic model and searched for the effects of classroom context variables on each factor of the dynamic model.

The dynamic model of educational effectiveness: the theoretical framework of this study

The establishment of the dynamic model was based on a critical analysis of the integrated models of educational effectiveness (e.g., Creemers, 1994; Scheerens, 1992) and on a synthesis of studies testing the validity of these models. The dynamic model is considered one of the most influential theoretical models of EER (e.g., Heck & Moriyama, 2010; Sammons, 2009; Scheerens, 2013). This model not only takes into account the dynamic nature of education but is also multilevel in nature since it refers to factors found to be associated with student achievement gains that operate at four levels: student, classroom (teacher), school, and system. At the classroom/teacher level, the dynamic model refers to the following eight factors that describe teachers’ instructional role: (a) orientation, (b) structuring, (c) teaching modelling, (d) application, (e) questioning, (f) assessment, (g) management of time, and (h) classroom as a learning environment. Kyriakides et al. (2021) argue that an integrated approach to define effective teaching is used since the model refers both to factors associated with the direct and active teaching approach (e.g., application and structuring) and to factors that are in line with the constructivist approach (e.g., modelling and orientation). An overview of the main elements of these factors is provided in Table 1.

Table 1. The main characteristics of each teacher factor included in the dynamic model.

In addition, a specific framework for measuring the functioning of each factor is proposed (Creemers & Kyriakides, Citation2008). Each factor is defined and measured by using five dimensions (i.e., frequency, focus, stage, quality, and differentiation) which describe not only quantitative but also qualitative characteristics of the functioning of each factor. Sixteen empirical studies and one meta-analysis have been conducted to examine the main assumptions of the dynamic model at classroom level (for a review of these studies, see Kyriakides et al., Citation2021). These studies revealed that the teaching factors and their dimensions are associated with student achievement gains. Cognitive learning outcomes in different subjects (i.e., mathematics, language, science, religious education, and physical education) as well as non-cognitive outcomes, such as student attitudes towards mathematics, were used to measure the impact of teacher factors. Therefore, some support for the assumption that these factors are associated with student achievement gains in different learning outcomes has been provided. The generic nature of these factors is also partly supported since these studies revealed that the effects of the factors of the dynamic model on different student learning outcomes were similar (i.e., Cohen’s d values were around 0.20). However, these studies took place mainly in primary schools, and the extent to which the classroom context affects teacher behaviour was not examined, especially since teachers who participated in these studies taught in a single class each school year.

Thus, the study reported here aims to test further the generic nature of teacher factors by not only searching for their effects on student achievement in secondary schools but also investigating whether teachers exhibit the same generic teaching skills (based on the teacher factors of the dynamic model) when they teach mathematics in different classrooms (of the same age group of students) within a school year. In this way, the effects of classroom context variables on teacher behaviour are investigated and possibilities of expanding the theoretical framework of EER are explored.

Methods

Participants

A stage sampling procedure was used. At the first stage, 14 lower secondary public schools (Gymnasiums) out of the 32 lower secondary public schools situated in the districts of Nicosia and Larnaca were randomly selected, and 12 schools agreed to participate. Then, all the teachers of the school sample (N = 37) who taught mathematics in at least two classes of the same age group of students (i.e., either Grade 7 or Grade 8) were invited to participate in this study, and 26 teachers (19 female and 7 male) agreed to participate. Most of the participating teachers (84%) taught in only two classes of the same age group of students. In total, data were collected from 57 classes (30 classes of Grade 7 and 27 classes of Grade 8) of the participating teachers. Appendix 1 provides descriptive data about the composition of participating classes in terms of prior achievement of students and student background characteristics. One can see that the values of the standard deviations of prior achievement for each class are relatively small, and this reveals that these classrooms are homogeneous in terms of their students’ prior achievement. By comparing the mean scores of the classes that each teacher had to teach, relatively large differences can be detected in most cases. Differences can also be detected by comparing the classes that each teacher had to teach in terms of the percentage of native students and of students who speak Greek at home. One could therefore argue that the classes that each teacher had to teach were different with regard to prior achievement and ethnicity, and the effects of these classroom context variables on teacher behaviour could be detected.
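The within-teacher comparison of class means described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' procedure: the record layout, the teacher and class identifiers, and every score below are invented for the example and are not taken from Appendix 1.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (teacher_id, class_id, prior-achievement score).
# All values are invented for illustration; they are NOT the study's data.
records = [
    ("T01", "A", -0.40), ("T01", "A", -0.10), ("T01", "A", -0.25),
    ("T01", "B", 0.60), ("T01", "B", 0.85), ("T01", "B", 0.50),
    ("T02", "C", 0.10), ("T02", "C", 0.20),
    ("T02", "D", 0.15), ("T02", "D", 0.05),
]


def class_means(rows):
    """Mean prior achievement for every (teacher, class) pair."""
    scores = defaultdict(list)
    for teacher, klass, score in rows:
        scores[(teacher, klass)].append(score)
    return {key: mean(values) for key, values in scores.items()}


def within_teacher_gap(rows):
    """Largest difference between class means among one teacher's classes."""
    by_teacher = defaultdict(list)
    for (teacher, _klass), class_mean in class_means(rows).items():
        by_teacher[teacher].append(class_mean)
    return {t: max(ms) - min(ms) for t, ms in by_teacher.items()}


gaps = within_teacher_gap(records)
for teacher, gap in sorted(gaps.items()):
    print(teacher, round(gap, 2))
```

A large gap for a teacher indicates that the classes assigned to that teacher differ in composition, which is the condition under which an effect of classroom context on that teacher's behaviour could be detected.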

All the participating teachers were subject-matter specialists who teach only mathematics. Although the teacher sample is not a nationally representative sample, the chi-square test did not reveal any statistically significant difference at the .05 level between the research sample and the population of lower secondary mathematics teachers of Cyprus in terms of gender (χ² = 0.02, df = 1, p = 0.88). Moreover, the t test did not reveal any statistically significant difference between the research sample and the teacher population in terms of years of experience (t = −1.56, df = 25, p = 0.13). Therefore, the teacher sample did not differ significantly from the national teacher population in terms of gender and years of experience.

All students (N = 915) from all the 57 classrooms of the teacher sample participated in this study. Full student data from 840 out of the 915 students of our sample (i.e., 91.8%) were available, and no imputation method was used. Missing student assessment data were most likely caused by the absence of a student at one or both time points, whereas an individual self-selection bias was fairly unlikely due to the non-high-stakes character of the tests used to measure prior and final achievement in mathematics. Finally, it is important to note that the chi-square test revealed that the student sample was representative of the student population of Cyprus in terms of gender (χ² = 1.334, df = 1, p = 0.25). In addition, the t test revealed that the student sample was representative of the student population of Cyprus in terms of the size of the class (t = 1.205, df = 56, p = 0.24). Regarding the size of the classes of our sample, it should be acknowledged that all classes were more or less of the same size due to the fact that the educational system in Cyprus is centralized and there are specific regulations about the class size. Moreover, 73% of the student sample spoke Greek at home and 67% were natives. However, there are no national data on these two student background characteristics, and it was, therefore, not possible to examine whether the student sample was representative of the student population of Cyprus in terms of these two variables.
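As a rough illustration of the representativeness checks reported above, the following Python sketch computes a chi-square goodness-of-fit statistic and the upper-tail p value for one degree of freedom, using the identity P(Z² > x) = erfc(√(x/2)). The function names are ours, and the population share of female teachers used in the last lines is a hypothetical placeholder, since the population figures are not given in the text. Only the teacher counts (19 female, 7 male) and the reported statistics (0.02 and 1.334) come from the study.

```python
import math


def chi_square_gof(observed, expected_props):
    """Chi-square goodness-of-fit statistic for observed counts
    against expected population proportions."""
    total = sum(observed)
    return sum(
        (obs - total * prop) ** 2 / (total * prop)
        for obs, prop in zip(observed, expected_props)
    )


def p_value_df1(chi2):
    """Upper-tail p value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2.0))


# p values implied by the statistics reported in the text (df = 1).
print(round(p_value_df1(0.02), 3))   # teacher sample, gender
print(round(p_value_df1(1.334), 3))  # student sample, gender

# Teacher counts from the study; the population share is HYPOTHETICAL.
assumed_female_share = 0.72  # placeholder, not a figure from the paper
stat = chi_square_gof([19, 7], [assumed_female_share, 1 - assumed_female_share])
print(round(stat, 3), round(p_value_df1(stat), 3))
```

The p values obtained from the reported statistics agree with the reported p values up to the rounding of the statistics themselves. A non-significant result of this kind indicates only that no difference was detected, which is a weaker claim than equivalence, particularly for a sample of 26 teachers.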

Data collection and research variables

Student achievement in mathematics

Data on student achievement in mathematics were collected at the beginning and at the end of the school year 2014–2015 by using external forms of assessment. The written tests were developed and validated in a pilot study conducted during the school year 2013–2014 (see Kokkinou, 2019). The construction of the tests was based on a review of the national curriculum of Cyprus, the national student textbooks, and instruments measuring mathematics skills of Grades 6–8. For each test, scoring rubrics were developed in order to differentiate between up to three levels of proficiency in each task, leading to the collection of ordinal data about the extent to which each student had obtained each skill of mathematics. In order to equate the tests, common items with content representative of what was to be measured were used (i.e., more than 15%; Kolen & Brennan, 2004). The data of the main study were analysed by using the extended logistic model of Rasch (Andrich, 1988), which revealed that each scale had satisfactory psychometric properties. In particular, two scales which refer to student achievement in mathematics at the beginning and at the end of the school year were created. Reliability was calculated by using the Item Separation Index and the Person (i.e., student) Separation Index. For each scale, the person and item separation indices were found to be higher than 0.83, indicating that the separability of each scale was satisfactory (B. D. Wright, 1985). The mean infit mean squares and the mean outfit mean squares of each scale were in all cases very close to the Rasch-model expectation of one, and the values of the infit t scores and the outfit t scores were approximately zero. Furthermore, each analysis revealed that all items had item infit within the range 0.84 to 1.19. Therefore, each analysis revealed that there was a good fit to the model (Keeves & Alagumalai, 1999).
Thus, for each student, two different scores for their achievement in mathematics were calculated using the relevant Rasch person estimate in each scale.
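
As an illustration of how ordinal task scores relate to a Rasch person estimate, the following minimal sketch computes category probabilities for one polytomously scored task under a threshold-based Rasch formulation. It is not the software or the exact parameterization used in the study: the function name, the person location, and the threshold values are hypothetical, and a task with three proficiency levels is assumed to be scored 0, 1, or 2.

```python
import math

def category_probabilities(theta, thresholds):
    """Category probabilities for one polytomous Rasch item.

    theta: person location in logits (hypothetical value below).
    thresholds: item threshold locations in logits (hypothetical values below).
    Returns a list of probabilities for scores 0..len(thresholds).
    """
    # Score k has exponent sum_{j<=k} (theta - threshold_j); score 0 has exponent 0.
    exponents = [0.0]
    for tau in thresholds:
        exponents.append(exponents[-1] + (theta - tau))
    weights = [math.exp(e) for e in exponents]
    total = sum(weights)
    return [w / total for w in weights]

# Hypothetical task with three proficiency levels (scores 0, 1, 2).
probs = category_probabilities(theta=0.5, thresholds=[-1.0, 1.0])
print([round(p, 3) for p in probs])
```

With a single threshold the function reduces to the dichotomous Rasch model, in which a student located exactly at the item threshold has a probability of 0.5 of succeeding.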

Student background characteristics

Information on gender (388 boys and 452 girls) and ethnicity was collected through a short student questionnaire. Specifically, it was possible to identify the country of birth of students and their parents/guardians (67% native children). Students were also asked to indicate whether they speak Greek and/or any other language at home. Information about the composition of each classroom and of the whole sample in terms of these variables can be found in Appendix 1.

Quality of teaching

The eight factors dealing with teacher behaviour in the classroom were measured by both an independent observer and students. Taking into account the way the five dimensions of each effectiveness factor are defined, one high-inference and two low-inference observation instruments were developed. The eight factors and their dimensions were also measured by administering a questionnaire to students as a strategy to enhance internal validity and minimize bias. These instruments were used in 12 effectiveness studies investigating the impact of teacher factors on student learning outcomes (for a review of these studies, see Kyriakides et al., Citation2021). The construct validity of these instruments was systematically tested by using confirmatory factor analysis (e.g., Azigwe et al., Citation2016; Kyriakides & Creemers, Citation2008). Creemers and Kyriakides (Citation2012) present the observation instruments and guidelines on how the instruments can be used for teacher and school improvement purposes. Thus, a brief description of these instruments is provided here.

In regard to the observation instruments, the two low-inference observation instruments generate data for all teacher factors but assessment and their dimensions. Specifically, one of the low-inference observation instruments is based on Flanders’ system of interaction analysis (Flanders, Citation1970). However, a classification system of teacher behaviour was developed which was based on the way the management of time and the classroom as a learning environment factors are defined in the dynamic model. For example, in order to measure the quality dimension of teacher behaviour in dealing with disorder, which is an element of the classroom as a learning environment factor, the observers are asked to identify any of the following types of teacher behaviour in the classroom: (a) the teacher is not using any strategy at all to deal with a classroom disorder problem, (b) the teacher is using a strategy but the problem is only temporarily solved, and (c) the teacher is using a strategy that has a long-lasting effect. The distinction between temporarily solved (i.e., Category b) and long-lasting effect (i.e., Category c) is based on observations on what is happening during the lesson after the action of the teacher. Moreover, a classification system of student behaviour was developed, and the observer is expected not only to classify student behaviour when it appears but also to identify the students who are involved in each type of behaviour. Thus, the use of this instrument enables us to generate data about teacher–student and student–student interactions, which are two very important aspects of the classroom as a learning environment factor. The second low-inference observation instrument refers to five factors of the model (i.e., orientation, structuring, teaching modelling, questioning, and application). 
Observers are expected to report not only on how frequently tasks associated with these factors take place but also on the period that each task takes place and its focus. An emphasis on measuring specific aspects of the quality dimension of each factor (e.g., the type of questions raised and the type of feedback provided to students’ answers) can also be identified. Finally, the high-inference observation instrument covers the five dimensions of all factors of the model but assessment, and observers are expected to complete a Likert scale in order to indicate how often each teacher behaviour was observed.

In this study the high-inference observation instrument was used twice in each class in which each participating teacher had to teach mathematics. Observations lasted for 45 min (i.e., a typical math lesson), and all the observations were conducted during the same time period in all the classes taught by the same teacher. For practicality reasons, teachers were not required to teach the same topic in each class. In addition, participating teachers were not aware of the observation instruments and the factors which were considered in measuring quality of teaching. Since observations were carried out by only one researcher, the inter-rater reliability of the data from the high- and low-inference observation instruments cannot be tested. However, in order to reduce the possibility of bias, a well-trained observer was used, who attended a series of seminars on how to use the three observation instruments. This observer was part of a team that used these instruments for the purposes of two earlier projects. In these studies, the inter-rater reliability of the team was tested and found to be higher than 0.80 (see Charalambous et al., Citation2019; Dimosthenous et al., Citation2020). Moreover, not only classroom observations but also a student questionnaire was used to evaluate the generic teaching skills of the participating teachers, and, in this way, the internal validity of data on quality of teaching was systematically tested.

As mentioned above, a questionnaire was administered to the students of all classes of Grades 7 and 8 of the participating teachers at the end of the school year in order to gather data on their teacher’s instructional behaviour in relation to the eight factors and their dimensions. Specifically, students were asked to indicate the extent to which their teacher behaves in a certain way in their classroom, and a Likert scale was used to collect data. For example, an item concerned with the stage dimension of the structuring factor asked students to indicate whether at the beginning of the lesson the teacher explains how the new lesson is related to previous ones, whereas another item asked whether at the end of each lesson they spend some time reviewing the main ideas of the lesson. The student questionnaire was used in one international and several national effectiveness studies, which generated empirical support for its construct validity (see Panayiotou et al., Citation2014).

For the purposes of this study, separate confirmatory factor analyses (CFA) for each effectiveness factor were conducted in order to identify the extent to which data from different methods can be used to measure each factor in relation to the five dimensions of the dynamic model. The main results which emerged from using CFA approaches to analyse the multi-trait multi-method matrix (MTMM) concerned with each classroom-level factor of the dynamic model provided support for the construct validity of the observation instruments (see Kokkinou, Citation2019). Convergent validity for most measures was also demonstrated by the relatively high (i.e., higher than 0.62) standardized trait loadings, in comparison to the relatively lower (i.e., lower than 0.36) standardized method loadings. These findings provide support for the use of multi-method techniques for increasing measurement validity and construct validity, and, thus, stronger support for the validity of subsequent results.

Analysis of data

Investigating the consistency of teacher behaviour

The main aim of this study concerns the extent to which teachers exhibit the same teaching skills when they teach in different classrooms. For this reason, a generalizability study was conducted (Marcoulides & Kyriakides, Citation2010) by considering the type of data collected on quality of teaching through each observation instrument and the student questionnaire. According to Shavelson et al. (Citation1989), generalizability theory asks how accurately observed scores allow us to generalize about persons’ behaviour in a defined universe of situations. If a dimension of a teacher factor, as measured by an observation instrument or the student questionnaire, is found to be generalizable at the teacher level, one may argue that teacher behaviour regarding this dimension of the teacher factor tends to be consistent from classroom to classroom. In this section, we refer to the strategy that was used to analyse the data of each instrument separately.

For the two low-inference observation instruments, one-way analysis of variance (ANOVA) was conducted in order to identify whether the data that emerged from each low-inference observation instrument are generalizable at the level of the teacher. Our decision to use this technique is attributed to the fact that each instrument was used only once in generating scores from observing the participating teachers in each class. One-way ANOVA allows us to find out whether there is homogeneity in the observation scores obtained from different classrooms which were taught by the same teacher. Thus, ANOVA helps us to find out whether the data that emerged from the low-inference observation instruments are generalizable at the level of the teacher. It is important to note that each teacher factor (and its dimensions) was measured by only one of the two low-inference observation instruments. For this reason, a separate analysis of the data from each low-inference observation instrument was conducted per factor.
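
The logic of this check can be sketched in a few lines of Python. The example below is only an illustration under stated assumptions: the scores are hypothetical, each group stands for the classrooms taught by one teacher, and the function name is ours. A large F value means that scores differ much more between teachers than between the classrooms of the same teacher.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of score groups.

    Each group is assumed to hold the observation scores of the
    classrooms taught by one teacher (hypothetical layout).
    """
    all_scores = [x for g in groups for x in g]
    grand_mean = sum(all_scores) / len(all_scores)
    # Variation of teacher means around the grand mean.
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    # Variation of classroom scores around their own teacher's mean.
    ss_within = sum((x - sum(g) / len(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical factor scores: one score per classroom, grouped by teacher.
scores_by_teacher = [[3.1, 3.3], [4.0, 4.2, 4.1], [2.0, 2.4]]
f_stat, df_b, df_w = one_way_anova_f(scores_by_teacher)
print(round(f_stat, 2), df_b, df_w)
```

The F value would then be compared with the F distribution on the two reported degrees of freedom to obtain a significance level.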

The high-inference observation instrument was used twice in each class of each participating teacher. Thus, multilevel regression modelling techniques (Snijders & Bosker, Citation2012) were used to test if the data are generalizable at the level of the class and/or teacher. Specifically, for each factor score that emerged from using the high-inference observation instrument, we ran three alternative empty models to find out whether the data fit better to a three-level model (i.e., observations within classes within teachers) or to any of the two-level models that ignore either the teacher or the class level. The same approach was used to examine the generalizability of the data that emerged from the student questionnaire. Separate multilevel analyses for each questionnaire item and for each factor score that emerged from the CFA of student questionnaire data were conducted. Specifically, we compared the three alternative empty models to find out whether the data for each item and each factor score fit better to a three-level model (students within classes within teachers) or to any of the two-level models that ignore either the teacher or the class level.
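
A comparison of this kind can be illustrated with a deviance-difference check. The sketch below is an assumption-laden illustration, not the procedure reported in the study: the deviance values are hypothetical, the two models are assumed to be nested (e.g., a three-level model against a two-level model that drops one level), and the difference is referred to a chi-square distribution with one degree of freedom. Because a variance component is tested on the boundary of its parameter space, the resulting p value tends to be conservative.

```python
import math

def deviance_difference_p(deviance_simpler, deviance_fuller):
    """Return (difference, p value) for two nested empty models.

    Assumes the models differ by one variance component, so the
    difference is referred to a chi-square distribution with 1 df.
    """
    diff = deviance_simpler - deviance_fuller
    if diff <= 0:
        # The fuller model does not fit better at all.
        return diff, 1.0
    # Survival function of the chi-square distribution with 1 df.
    return diff, math.erfc(math.sqrt(diff / 2.0))

# Hypothetical deviances (-2 log-likelihood) of two empty models.
diff, p_value = deviance_difference_p(deviance_simpler=512.4, deviance_fuller=505.1)
print(round(diff, 2), p_value < 0.01)
```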

Using multilevel structural equation modelling (MSEM) techniques to search for effects of teacher factors and classroom contextual factors on final achievement

The other two aims of this study are concerned with whether each of the teacher factors of the dynamic model can explain variation in student achievement in mathematics and whether the aggregated scores of student background factors (including prior achievement) at the classroom level have any impact on the functioning of any teacher factor. Direct and/or indirect effects of student background factors on student achievement are, therefore, examined. For this reason, multilevel structural equation modelling (MSEM) was used, which allows for exploring direct and/or indirect effects of the classroom context factors on student outcomes while at the same time accounting for the nested nature of the data (e.g., Goldstein & McDonald, Citation1988; B. O. Muthén & Satorra, Citation1989). Since this study searches for the effects of teaching factors on student achievement and the effects of classroom context (especially average prior achievement) on teaching factors and through that on student achievement, the main variables of this study are situated at the student and classroom levels. Thus, a two-level model (students within classrooms) was employed by using the Mplus 7 software (L. K. Muthén & Muthén, Citation2001). Admittedly, the data of this study are based on four levels (students within classrooms within teachers within schools), and ignoring the school and teacher levels may distort the class- and student-level variance component and may bias standard errors downwards (Martínez, Citation2012; Snijders & Bosker, Citation2012). The school level was, however, not taken into account since the study does not measure any school-level variable and the number of schools participating in the study is relatively small. In regard to the teacher level, the study reported here refers to the impact of classroom context factors on teaching quality, which means that the classroom rather than the teacher level should have been considered. 
In addition, not all teacher factors were found to be generalizable at the teacher level, and aggregating their scores at the teacher level is therefore not permitted. On the other hand, all teacher factors were found to be generalizable at the classroom level. Thus, for reasons of practicality and parsimony, a two-level model was used (Preacher, Citation2011).

The two-level model used to analyse the data of this study investigates the assumption that classroom context factors (including the average prior achievement) have not only a direct effect on final achievement but also an indirect effect on student achievement through influencing the functioning of teacher factors. Specifically, at the student level, student background factors (i.e., gender, ethnicity) and prior achievement are expected to have direct effects on students’ final achievement in mathematics. Student background factors may also affect prior achievement in mathematics. At the class level, each teacher factor is expected to have an effect on final student achievement. Each teacher factor is treated as a latent variable which consists of the scores that emerged from the observation instruments and the student questionnaire and were aggregated at the class level to measure the functioning of each factor in each class separately. At the class level, we also assume that student prior achievement has both direct and indirect effects on achievement (through influencing the functioning of each teacher factor). Results from the MSEM analysis, including the model that was found to fit the data, are presented in the next section.

The full information maximum likelihood (FIML) estimation approach using the standard estimator MLR was used to search for indirect and/or direct effects of prior achievement on final achievement in mathematics. To calculate the indirect effect of prior achievement, we used the multivariate delta method (see Olkin & Finn, Citation1995), which attempts to find the large sample standard error of the difference between a simple correlation and the same correlation partialled for a third variable. MacKinnon et al. (Citation2002) compared 14 methods to test the statistical significance of the indirect effect of a variable. It was shown that methods based on a two-step approach lead to low Type I error rates and low statistical power. On the other hand, methods based on the distribution of the product and on the difference-in-coefficients had a more accurate Type I error and greater statistical power.
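
To make the idea of a delta-method standard error concrete, the sketch below uses the familiar product-of-coefficients form of an indirect effect rather than the difference between correlations described by Olkin and Finn; this substitution, the function name, and all numerical values are ours and are not taken from the study. The two path estimates are assumed to be uncorrelated, so their covariance is set to zero.

```python
import math

def indirect_effect(a, se_a, b, se_b):
    """First-order delta-method estimate, standard error, and z for a * b.

    a: path from the predictor to the mediator (e.g., class prior
       achievement to a teacher factor); b: path from the mediator to the
       outcome. The covariance between a and b is assumed to be zero.
    """
    estimate = a * b
    # Gradient of a*b is (b, a), giving var = b^2 * var(a) + a^2 * var(b).
    se = math.sqrt(a ** 2 * se_b ** 2 + b ** 2 * se_a ** 2)
    return estimate, se, estimate / se

# Hypothetical path coefficients and standard errors.
estimate, se, z = indirect_effect(a=0.5, se_a=0.1, b=0.4, se_b=0.1)
print(round(estimate, 3), round(se, 4), round(z, 2))
```

The z value would be compared with the standard normal distribution, which is a large-sample approximation.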

Results

Investigating the consistency of teacher behaviour

In order to facilitate the understanding of the results of the generalizability analyses, Table 2 was constructed. In this table, one can see the level at which data about each factor that emerged from each instrument were found to be generalizable. As mentioned in the data analysis section, data from the high-inference observation instrument and the student questionnaire were analysed by using multilevel analysis. Specifically, separate analyses for each factor were conducted. For example, the following three empty models were used to analyse the data from the high-inference observation instrument on the structuring factor: (a) observations within classrooms within teachers, (b) observations within classrooms, and (c) observations within teachers. By comparing the likelihood statistics of each model, it was found that the empty model consisting of observations within teachers represented the best solution. Table 2 shows that the difference in the likelihood statistic was 12.67 in favour of the observations within teachers model. This result reveals that data from the high-inference observation instrument measuring the structuring factor are generalizable at the level of the teacher (see Snijders & Bosker, Citation2012). The second column of Table 2 shows that this two-level model was found to fit better than any other model when data measuring each of the other factors but orientation were analysed. In the case of orientation, the comparison of the likelihood statistics of the three models revealed that the observations within classrooms model represented the best solution (with a difference in likelihood statistics from the observations within teachers model equal to 5.97).

Table 2. Summarized results of all the instruments used in the study in regard to the level (i.e., teacher or classroom) to which each teacher factor of the dynamic model was found to be generalizable by considering the instrument that was used to measure the factor.

The following three empty models were used to analyse data from the student questionnaire measuring each factor separately: (a) students within classrooms within teachers, (b) students within classrooms, and (c) students within teachers. By comparing the likelihood statistics of these three models, it was found that for each factor but orientation and dealing with student misbehaviour (which is an aspect of the classroom as a learning environment factor), the empty model consisting of students within teachers represented the best solution. In the case of orientation and dealing with student misbehaviour, the students within classrooms model represented the best solution. Table 2 also shows that in each case the differences in the likelihood statistics were not only significant but also relatively large. These findings reveal that data on each factor but orientation and dealing with student misbehaviour are generalizable at the level of the teacher.

One-way ANOVA was used to analyse the data from the low-inference observation instruments. For example, one can see that in regard to the structuring factor the value of the F statistic is statistically significant at the .001 level (see Table 2). It is also important to note that the between-group variance was found to be much higher than the within-group variance. Similar results emerged from analysing the data of all factors but orientation and dealing with student misbehaviour. Table 2 shows that no statistically significant results at the .05 level emerged from analysing the data of these two factors. One could therefore claim that for all factors but orientation and one aspect of the classroom as a learning environment factor (i.e., dealing with misbehaviour), the data are generalizable at the level of the teacher.

In summary, it is apparent from Table 2 that for five factors (i.e., application, modelling, management of time, structuring, and questioning techniques), as well as for two main elements of the classroom as a learning environment (i.e., teacher–student interaction and student–student interaction), teacher behaviour was found to be consistent from classroom to classroom irrespective of the instrument used to measure the functioning of the factor. Therefore, for all these factors data were found to be generalizable at the level of the teacher. On the other hand, data from each instrument measuring the orientation factor were not found to be generalizable at the level of the teacher. For dealing with misbehaviour (which is an element of the classroom as a learning environment factor), the findings were differentiated according to the instrument that was used to collect data. Only data from the high-inference observation instrument were found to be generalizable at the level of the teacher. Finally, it is important to note that the factor of assessment was measured only by administering the student questionnaire and was found to be generalizable at the level of the teacher.

The impact of prior achievement and teacher factors on final achievement in mathematics

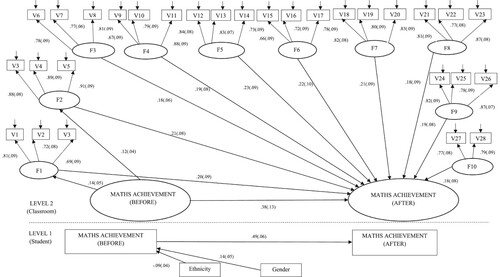

This section presents the results of the MSEM analysis, which was conducted in order to search for the effects of teacher factors on student achievement. MSEM was also used to identify any effects that contextual factors may have on the functioning of teacher factors. The model that is based on the assumption that prior achievement can have not only direct but also indirect effects on achievement (through affecting the functioning of teacher factors) was found to fit the data very well (i.e., χ² = 449.4, df = 425, p = 0.199; comparative fit index [CFI] = 0.99, Tucker–Lewis index [TLI] = 0.98; root-mean-square error of approximation [RMSEA] = 0.03; standardized root-mean-square residual for the between model [SRMR(B)] = 0.09, standardized root-mean-square residual for the within model [SRMR(W)] = 0.02). Specifically, the p value for the chi-square test of the model shown in Figure 1 was found to be higher than 0.05. Moreover, both the CFI and the TLI were higher than 0.95. As far as the value of the RMSEA is concerned, it was lower than 0.05. These results reveal that the model presented in Figure 1 fitted the data well (see Hu & Bentler, Citation1999). The SRMR(W) and the SRMR(B), available in Mplus, were also estimated. According to Hu and Bentler (Citation1999), a value of SRMR less than .08 is considered to indicate good model fit and a value of .10 to indicate moderate fit. The values of SRMR indicate that the model fits well at the lowest level but less well at the class level. The relatively high value of SRMR(B) could also be attributed to the relatively small number of classrooms participating in this study (see Hox et al., Citation2014).

Figure 1. Direct and indirect effects of teacher and classroom contextual factors on student achievement in mathematics.

Notes: The Rasch person (i.e., student) estimates were used for the variables concerned with achievement in mathematics. Although these variables are not directly measured, they are shown in rectangles since they cannot be considered as latent variables. Latent variables in structural equation modelling are variables with a factor analytic measurement model. On the other hand, achievement variables at Level 2 represent random intercepts and are treated as latent variables and are, thereby, shown in ellipses. To generate the latent variables measuring the teacher factors, the scores from each instrument used to measure the functioning of each factor were considered. Each score from the observation instruments and the student questionnaire is shown in rectangles. The name of each teacher factor is: F1: Orientation; F2: Dealing with misbehaviour (first aspect of the classroom as a learning environment factor); F3: Modelling; F4: Management of time; F5: Application; F6: Questioning techniques; F7: Structuring; F8: Teacher–student interaction (second aspect of the classroom as a learning environment factor); F9: Student–student interaction (third aspect of the classroom as a learning environment factor); F10: Assessment.

Figure 1 illustrates the estimated standardized parameters (standard errors are given in parentheses) which were found to be statistically significant at the .05 level. Paths that were not found to be statistically significant at the .05 level are not shown in this figure. At the lowest level, both gender and ethnicity were found to be associated with prior achievement in mathematics, but only prior achievement was found to have a direct effect on final achievement. When the background variables were aggregated at the class level, none of them was found to have a statistically significant effect either on prior or on final achievement. Moreover, none of the background variables aggregated at the classroom level was found to have an effect on the functioning of any teacher factor. As a consequence, gender and ethnicity are not presented in the classroom level of Figure 1. On the other hand, prior achievement was found to be a good predictor of final achievement, both at the student and the class level.

At the class level, all teacher factors were found to have an effect on student achievement in mathematics. To estimate the relative strength of the effect of each factor, a measure of the effect size was computed as proposed by Marsh et al. (Citation2009) that is comparable to Cohen’s d (Cohen, Citation1988): ES = (2 × b × SD_predictor) / SD_outcome, where b is the unstandardized regression coefficient, SD_predictor is the standard deviation of the predictor (i.e., factor), and SD_outcome is the Level 1 standard deviation of the outcome (i.e., student achievement in mathematics). Table 3 presents the effect sizes of the teaching factors included in the dynamic model on student achievement in mathematics. The following observations arise from this table. First, the effect sizes presented in this table are considered small, especially since all of them are below 0.25. It should, however, be acknowledged that all of them are similar in magnitude to those recorded in previous studies (e.g., Dimosthenous et al., Citation2020; Kyriakides & Creemers, Citation2008; Panayiotou et al., Citation2014) and meta-analyses (Kyriakides et al., Citation2013; Scheerens, Citation2013) investigating the effect of teaching factors on cognitive achievement in primary mathematics. Second, the structuring factor has a relatively stronger effect than all the other teaching factors. It should, nevertheless, be acknowledged that the effect sizes of the teacher factors vary little, since their values are between 0.16 and 0.23. Thus, the findings of the MSEM seem to provide further empirical support for the importance of all teacher factors for promoting student learning outcomes in mathematics in secondary education.
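
The effect size formula given above can be applied as in the following minimal sketch. The function name and the input values are hypothetical and are not estimates from this study; they only show how an unstandardized coefficient is converted into a measure comparable to Cohen's d.

```python
def effect_size(b, sd_predictor, sd_outcome):
    """ES = (2 * b * SD_predictor) / SD_outcome.

    b: unstandardized regression coefficient of the teacher factor.
    sd_predictor: standard deviation of the factor scores.
    sd_outcome: Level 1 (student-level) standard deviation of achievement.
    """
    return 2 * b * sd_predictor / sd_outcome

# Hypothetical values for one teacher factor.
es = effect_size(b=0.12, sd_predictor=0.8, sd_outcome=1.0)
print(round(es, 3))
```

The factor of two reflects that the formula compares classrooms one standard deviation above the mean of the predictor with classrooms one standard deviation below it.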

Table 3. Effects of each teacher factor on student achievement in mathematics (as expressed by Cohen’s d).

The results of the MSEM analysis also show that for most factors, none of the classroom context factors examined (i.e., gender, ethnicity, and prior achievement) had any statistically significant effect. However, teachers’ ability to offer orientation tasks and deal with misbehaviour was found to be influenced by student prior achievement aggregated at the class level. The effect sizes concerned with the impact of prior achievement on the functioning of these two factors were found to be relatively small (i.e., 0.17 and 0.19, respectively). However, in each case, positive statistically significant effects were identified, which reveal that teachers can more easily deal with student misbehaviour and provide orientation tasks in those classrooms in which students had higher scores in mathematics at the beginning of the school year.

Discussion

In this section, implications of findings are drawn. First, the study presented here seems to provide further empirical support for the dynamic model of educational effectiveness. The MSEM analysis revealed that each teacher factor included in the model had an effect on final student achievement in mathematics. This finding seems to be in line with the results of more than 15 studies conducted in different countries and continents which reveal the importance of the eight teacher factors for promoting student learning outcomes in primary schools (for a review of these studies, see Kyriakides et al., Citation2021). It is, however, important to note that most of the studies testing the validity of the dynamic model at the classroom level were concerned with the achievement of primary students in different subjects including mathematics. The study reported here is the first study searching for the impact of teacher factors on promoting the learning outcomes of secondary students. Therefore, some support for the assumption that teacher factors can be treated as generic seems to be generated, since the study reported here reveals that these factors could be considered in teaching not only primary students but also secondary students. We therefore argue for the need for studies investigating the impact of teacher factors on student learning outcomes in different subjects at secondary school level.

Second, this is the first study investigating the extent to which teachers who are expected to teach in different classrooms exhibit the same teaching skills in regard to the factors included in the dynamic model. This research question could be raised since the study took place in secondary schools where teachers are subject specialists and are expected to teach the same subject (in the case of this study, mathematics) in classrooms with different composition characteristics (see Appendix 1). By investigating the generalizability of the data that emerged from measuring teacher behaviour in different classrooms, it was found that for most factors the data are generalizable at the level of the teacher. This finding seems to be in line with the fact that MSEM analysis revealed that none of the factors found to be generalizable at the level of the teacher was influenced by any classroom context variable measuring student background characteristics (i.e., gender and ethnicity) including prior achievement. One could therefore argue not only that teacher factors are important for promoting achievement in mathematics but also that teachers can make use of these factors during their teaching irrespective of the characteristics of their students. Thus, the results of both types of analyses of the data of this study seem to reveal that teachers can demonstrate their skills (in regard to most factors of the dynamic model) in different classrooms irrespective of the background characteristics of the students that they have to teach. It should, however, be acknowledged that the dynamic model treats differentiation as a dimension of measuring the functioning of each factor, which implies that teacher factors are expected to work differently for different types of students and in different types of classrooms. 
Thus, these findings should not be misinterpreted as implying that teachers should use the same teaching tasks in teaching different groups of students and in different types of classrooms, especially since differentiation is one of the most important measurement dimensions of each factor in explaining variation in student achievement gains (see Kyriakides et al., Citation2021).

Third, the results of the generalizability analysis revealed that data measuring the orientation factor which emerged from observations and student questionnaires are not generalizable at the level of the teacher. This finding seems to be in line with the fact that MSEM also revealed that prior achievement (aggregated at the classroom level) has a positive effect on the functioning of this factor. One could attribute the fact that teachers offer more appropriate orientation tasks in some classrooms than in others to the effect that student prior achievement was found to have (through the MSEM analysis) on the functioning of this factor. This finding needs to be considered in providing teacher professional development courses aiming to support teachers to improve their teaching skills. Teachers should be aware that all students (irrespective of their attainment level) should be provided with orientation tasks. It seems to be easier for teachers to provide orientation tasks to those students who have higher prior achievement and usually have more incentives to work in mathematics, especially since research reveals a reciprocal relationship between student motivation and student achievement (Bamburg, Citation1994; Marsh & Craven, Citation2006; Marsh & Parker, Citation1984). However, teachers should search for alternative ways to engage low-achieving students in orientation tasks which can help students identify the reasons why they are taught specific concepts in mathematics.

This finding also has some significant implications for research on teacher evaluation. When teacher evaluation is conducted for summative reasons (e.g., for teacher promotion purposes) and orientation is treated as an evaluation criterion, the impact of the classroom context (and especially of student prior achievement) on the functioning of this factor should be considered in order to establish a fair teacher evaluation mechanism. When teacher evaluation is conducted for formative purposes, teachers should be observed teaching in different classrooms in order to find out whether they are able to provide appropriate orientation tasks not only in classrooms where students' prior achievement is high but also in classrooms where students have low prior achievement scores.

Fourth, data on teachers’ skills to deal with misbehaviour were found to be generalizable at the level of the teacher only when their skills were measured through a high-inference observation instrument. The other two instruments revealed significant variation in the behaviour of teachers in different classrooms. The latter finding is in line with the results of the MSEM, which reveal a positive effect of student prior achievement on the functioning of the factor dealing with student misbehaviour. This positive effect seems to reveal that variation in the functioning of this factor in different classrooms can be attributed to a classroom contextual factor, namely, the prior achievement of students. One could argue that teachers are more likely to deal with student misbehaviour problems in a more efficient way in those classrooms where students have higher prior achievement. Since prior achievement can be treated as a proxy measure of aptitude, one could claim that in classrooms where students have lower prior achievement scores, misbehaviour problems are more likely to occur, and therefore teachers have more challenges to face in order to deal with misbehaviour incidents (Muijs & Reynolds, Citation2001). We argue here that the impact of prior achievement on the functioning of orientation and dealing with misbehaviour can be attributed to the fact that both factors aim to promote learning through improving student-level factors (i.e., motivation and time on task) which are closely related to prior achievement (see Creemers & Kyriakides, Citation2008). As a consequence, teachers have more challenges to face in providing appropriate orientation tasks and in ensuring that all students are on task in those classrooms where prior achievement is lower. It is therefore possible to detect variation in teacher behaviour in providing orientation tasks and in dealing with student misbehaviour when teachers are observed teaching in more challenging classrooms.

Fifth, in regard to the question of whether the classroom context affects teacher behaviour, the findings of this study seem to reveal the complexity of this question. In this study, three classroom context factors were taken into account (i.e., gender, ethnicity, and prior achievement), and one of them (i.e., student prior achievement aggregated at the class level) was found to have statistically significant effects on the functioning of two teacher factors of the dynamic model (i.e., orientation and dealing with misbehaviour). One could therefore claim that the answer to this question can partly be attributed not only to the aspects of classroom context that are taken into account but also to the factors that are used to conceptualize and measure quality of teaching. In this study, a specific framework of effective teaching was used which gives emphasis to observable behaviour of teachers in the classroom and to those types of behaviour that were found to be consistently related to student achievement gains. It is also important to note that factors included in the dynamic model are considered as generic in nature. Despite the fact that a single framework was used, no effect on most factors was identified. At the same time, the generalizability analysis revealed that there is no significant variation in the functioning of these factors when teachers are asked to teach in different classrooms. However, the orientation factor and one aspect of the classroom as a learning environment factor were found to be influenced by the classroom context and more specifically by student prior achievement aggregated at the classroom level. A possible explanation for this effect might have to do with the fact that prior achievement is a proxy measure of aptitude. Therefore, this effect can be attributed to the special challenges that teachers may have to face in providing orientation tasks and dealing with student misbehaviour when students do not have sufficient prior background to achieve their aims. This group of students is likely to be less motivated to learn mathematics and to be on task during the lesson. By considering that these two factors of the dynamic model promote learning through addressing specific student factors (i.e., motivation and time on task, respectively), one could also explain why classroom context effects on orientation and dealing with student misbehaviour were detected. It is also important to note here that the importance of taking into account student prior achievement when measuring teaching skills and when investigating the effects of teaching strategies on student achievement is supported by previous research studies, which were based on a different theoretical framework (e.g., Caro et al., Citation2018; Lazarev & Newman, Citation2015), and the factors which were considered had similar characteristics to orientation and dealing with misbehaviour.

In the final part of this section, we refer to the main limitations of this study and provide suggestions for further research. First, the study reported here searched for the effect of three classroom context factors (i.e., gender, ethnicity, and prior achievement) on teacher behaviour. Since different answers about the effect of classroom context on quality of teaching are provided when different aspects of classroom context are considered, further research is needed to explore the effect of various aspects of the classroom context beyond gender, ethnicity, and prior achievement, which were considered in the current study. Second, the study reported in this paper took into account the teacher factors of the dynamic model in measuring quality of teaching. Studies investigating the impact of classroom context on factors included in other theoretical frameworks should also be conducted. In this way, we could search for the extent to which classroom context factors have bigger effects on the functioning of factors included in different frameworks of effective teaching. Third, this study was concerned with classroom context effects on teaching a specific subject (i.e., mathematics) to specific age groups of students (i.e., seventh- and/or eighth-grade students) in Cyprus. Further research is needed to test the generalizability of the findings of this study by collecting data on the teacher factors of the dynamic model when teachers are expected to teach different subjects to various age groups of secondary students and in different educational contexts beyond Cyprus. Steinberg and Garrett (Citation2016) argue that prior achievement matters differently in the classroom behaviour of teachers working in different classroom settings (e.g., ELA teachers compared to math teachers).

It is finally acknowledged that classrooms are not isolated from the larger context of schools (Pacheco, Citation2009; Scheerens, Citation2016), and for this reason the dynamic model refers not only to student- and teacher-level factors but also to factors operating at the school and the system levels (see Creemers & Kyriakides, Citation2008). Individual teachers may respond to different school contexts differently and may not show consistency in their teaching behaviour when they are expected to teach in different schools. In this study, participating teachers had to teach in different classrooms of the same school. In this way, the school effect was controlled and the net effect of classroom context on teacher behaviour was examined. By including in the sample teachers teaching in more than one school, more variation in the composition of classrooms could have been obtained, resulting in more statistical power to detect effects. Further studies are therefore needed to collect data from both teachers who teach mathematics in different classrooms of the same school and teachers who teach mathematics in different classrooms of different schools. Such studies may examine effects on teacher behaviour of not only classroom but also school contextual factors including the school policy of teaching and the school learning environment, since these two factors are expected to have an effect on the actions of different stakeholders including teachers (see Kyriakides et al., Citation2015). Studies investigating the effects of the classroom and school context on the functioning of teacher factors proposed here may help the research community not only to identify what matters and why but also to explain under which conditions and for whom teacher factors can promote quality in education.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Notes on contributors

Elena Kokkinou

Elena Kokkinou is a postdoctoral researcher at the Department of Education of the University of Cyprus. She has participated in several international projects on educational effectiveness and school improvement. Her research interests lie in the area of educational effectiveness. She is interested in examining the classroom context effect on teaching skills.

Leonidas Kyriakides

Leonidas Kyriakides is Professor of Educational Research and Evaluation at the Department of Education of the University of Cyprus. His main research interests are in the area of school effectiveness and school improvement and especially in modelling the dynamic nature of educational effectiveness and in using research to promote quality and equity in education. Leonidas acted as chair of the EARLI SIG on Educational Effectiveness and as chair of the AERA SIG on School Effectiveness and Improvement.

References

- Andrich, D. (1988). A general form of Rasch’s extended logistic model for partial credit scoring. Applied Measurement in Education, 1(4), 363–378. https://doi.org/10.1207/s15324818ame0104_7

- Azigwe, J. B., Kyriakides, L., Panayiotou, A., & Creemers, B. P. M. (2016). The impact of effective teaching characteristics in promoting student achievement in Ghana. International Journal of Educational Development, 51, 51–61. https://doi.org/10.1016/j.ijedudev.2016.07.004

- Bamburg, J. D. (1994). Raising expectations to improve student learning. North Central Regional Educational Lab.

- Belfi, B., Goos, M., De Fraine, B., & Van Damme, J. (2012). The effect of class composition by gender and ability on secondary school students’ school well-being and academic self-concept: A literature review. Educational Research Review, 7(1), 62–74. https://doi.org/10.1016/j.edurev.2011.09.002

- Bell, C. A., Gitomer, D. H., McCaffrey, D. F., Hamre, B. K., Pianta, R. C., & Qi, Y. (2012). An argument approach to observation protocol validity. Educational Assessment, 17(2–3), 62–87. https://doi.org/10.1080/10627197.2012.715014

- Blatchford, P., Bassett, P., & Brown, P. (2011). Examining the effect of class size on classroom engagement and teacher–pupil interaction: Differences in relation to pupil prior attainment and primary vs. secondary schools. Learning and Instruction, 21(6), 715–730. https://doi.org/10.1016/j.learninstruc.2011.04.001

- Braun, H. I. (2005). Using student progress to evaluate teachers: A primer on value-added models. Educational Testing Service.

- Brophy, J., & Good, T. L. (1986). Teacher behavior and student achievement. In M. C. Wittrock (Ed.), Handbook of research on teaching (3rd ed., pp. 328–375). Macmillan.

- Campbell, R. J., Kyriakides, L., Muijs, R. D., & Robinson, W. (2003). Differential teacher effectiveness: Towards a model for research and teacher appraisal. Oxford Review of Education, 29(3), 347–362. https://doi.org/10.1080/03054980307440

- Campbell, R. J., Kyriakides, L., Muijs, R. D., & Robinson, W. (2004). Assessing teacher effectiveness: Developing a differentiated model. RoutledgeFalmer.

- Caro, D. H., Kyriakides, L., & Televantou, I. (2018). Addressing omitted prior achievement bias in international assessments: An applied example using PIRLS-NPD matched data. Assessment in Education: Principles, Policy & Practice, 25(1), 5–27. https://doi.org/10.1080/0969594X.2017.1353950

- Chaplin, D., Gill, B., Thompkins, A., & Miller, H. (2014). Professional practice, student surveys, and value-added: Multiple measures of teacher effectiveness in the Pittsburgh Public Schools (REL 2014–024) (ED545232). ERIC. https://files.eric.ed.gov/fulltext/ED545232.pdf