Abstract

Guitarists struggle to play their instruments while simultaneously using additional computing devices (i.e., encumbered interaction). We explored designs with guitarists through co-design and somaesthetic design workshops, learning that they (unsurprisingly) preferred to focus on playing their guitars and keeping their instruments’ material integrity intact. Subsequently, we devised an interactive guitar strap controller, which guitarists found promising for tackling encumbered interaction during instrumental transcription, learning and practice. Our design process highlights three strategies: considering postural interaction, applying somaesthetic design to interactive music technology development, and augmenting guitar accessories.

1. Introduction

Digital technologies can support musicians in diverse ways, from directly enhancing their ability to generate musical sounds, to helping them record their music, to supporting them in using scores and videos to learn and prepare new material. Thus, musicians often find themselves mediating their embodied musical practices with complex artefact ecologies (Masu et al., 2019), which involve dedicated accessories and devices to support their multifaceted musical activities and performance with their instruments (e.g. straps, footstools, music stands, audio effects units and recording equipment), alongside general-purpose computers, which support the use of a plethora of music software tools for recording, composing, producing, training, practicing, sound design and synthesis. In this paper we focus on a particular musical activity in which many guitarists engage: performance preparation with digital media using computing devices, specifically, instrumental transcription (also known as ‘learning by ear’) with the aid of Internet videos.

During instrumental practice with digital media, a guitarist may engage in various activities (Avila et al., 2019a), such as browsing for resources online, transcribing music with their guitar (Green, 2017), or simply playing (and sometimes additionally singing) through their repertoire or set list as a rehearsal (Avila et al., 2019a, 2019b). Here we specifically address the process of instrumental transcription with the support of online videos. During this process, guitarists may find themselves surrounded by an ecology (Vyas, 2007) of physical and digital resources in addition to their instrument. These include printed resources and handwritten notes such as set lists, sheet music and chord sheets, as well as personal computers or mobile devices that may display digital or interactive versions of these resources (e.g. interactive scores or tablatures provided by online services such as SoundSlice, Ultimate Guitar or Guitar Pro) and other digital media such as instructional videos or sound files. Moreover, other artefacts may be present during practice, such as specialist accessories like tuners, metronomes, guitar straps, and plectra, as well as specialist audio processing devices such as guitar pedals or audio interfaces. In this sense, guitarists’ interactions with technology during performance preparation are becoming increasingly complex and interleaved (between the instrument and other artefacts in their ecology) as they manage a multifaceted array of devices that serve a diverse range of functions, while simultaneously engaging in the highly skilled, dexterous, and demanding task of playing their instruments. This raises the challenge of encumbered interaction, in which humans interact with digital technologies while performing other complex manual tasks (Ng et al., 2014; Oulasvirta & Bergstrom-Lehtovirta, 2011).

In a previous paper, Avila et al. (2019a) reported on findings from an ethnographic study in which they observed guitarists preparing to perform by using digital media and paper-based resources. This study revealed how engaging with these resources during instrumental transcription demands fine-grained manual operation of computer interfaces (e.g. using a mouse and keyboard or touch screen to navigate videos and other digital learning resources), which, combined with having a chordophone (e.g. guitar or bass) at hand, results in regular interruption of the very activity that the digital media is supposed to support: learning and practicing music with the instrument. Some of the musicians observed had developed remarkable strategies, such as attempting to play the instrument one-handed by tapping out their parts on the fretboard with their fretting hand while manipulating mouse and keyboard with their plucking hand. These strategies testify to the significance of encumbered interaction as an ongoing distraction from their musical practice, and led Avila et al. (2019a) to seek alternative interfaces. Here we report on lessons learned from an extended design process to tackle the challenge of encumbered interaction that Avila et al. characterised, focusing on guitarists’ instrumental transcription with digital media that involves active use of transport controls (e.g. media players for video or music). Thus, in our design process we focus on proficient guitarists who are learning to play and/or rehearsing new performance material with the support of such digital media resources (e.g. videos and music from online services such as YouTube or Ultimate-Guitar).

Firstly, we ran a series of four design workshops, each with a group of four to six guitarists (n = 20), in which participants were exposed to various interaction modalities for controlling external media (i.e. touch screen controls, foot switches, voice commands, musical notes as input, and gesture controls). These established the key concerns of guitarists while also revealing a broad array of potential solutions. An opportunity then arose to engage in a ‘soma design’ workshop, led by experienced soma designers, which encouraged us to focus on the somaesthetic (Höök, 2018; Shusterman, 2012) experience of playing the guitar (Avila et al., 2020). This employed defamiliarization activities to perturb our design perspective, re-framing our understanding of the musician’s physical and emotional relationship with the guitar and associated artefacts and directly inspiring the concept of a ‘Stretchy Strap’, an elastic guitar strap that can be used to control digital media through bodily movement of the guitar and facilitate instrumental transcription through micro-gestures (Olwal et al., 2020; Wolf et al., 2016). Finally, we produced a functional prototype of the Stretchy Strap, employing e-textiles to enable it to stretch and function as a digital media transport controller while maintaining its original function as a support, and evaluated it in use with 10 guitarists.

Reflection across this design process contributes to the understanding of designing for encumbered musical interaction, encouraging designers to consider that most encumbrances and interruptions in the context of practice emerge when guitarists are required to switch from guitar playing to interacting with a digital system. This involves both mitigating the awkwardness of controlling media while playing and exploring new possibilities for more performative and aesthetic interactions that might arise from using a guitar to control media. Thus, our process of designing for encumbered interaction combines problem solving with seeking out novel opportunities. We note that augmenting guitars is something of a ‘wicked problem’ with many potential solutions that exhibit complex trade-offs. We propose that our Stretchy Strap occupies a distinctive position within this design space by enabling control of external media without overloading existing modalities while also resonating with the aesthetic experience of playing. We argue that this is because the strap embodies three wider design strategies: postural interaction that responds to overall bodily movement as a natural, aesthetic and yet under-considered modality; addressing the player’s somaesthetic experience of playing rather than focusing directly on the instrument itself; and augmenting an accessory that fits into the guitarist’s wider ecology of devices.

2. Related work

We review four areas of related work: encumbered interaction with guitars, approaches to augmenting guitars, user-centred design practices with musicians, and an introduction to soma design.

2.1. Encumbered interaction

Physical encumbrances have often been studied in the context of interactions with computing devices whilst the hands are occupied with other physical tools or objects (Ng et al., 2014; Oulasvirta & Bergstrom-Lehtovirta, 2011), when people are preoccupied with other tasks (Wolf et al., 2011), or when they are in motion (Marshall & Tennent, 2013). Frequently, encumbrances are considered as situational impairments which hamper or impede the interaction with a device (Wobbrock, 2019). Furthermore, multitasking and interruptions have been addressed in terms of how much they divert attention from a primary task (Borst et al., 2015; Janssen et al., 2015). Generally, interruptions are characterised as occurrences within a task or between a main task and a secondary task, and problematised in terms of how disruptive they are for productivity, or harmful in attention-sensitive settings, such as healthcare and aviation (Janssen et al., 2015). Such disruptions are thus evaluated in terms of the introduction of errors and delays in the efficient performance of a task and in turn inform the design of interactive systems aimed at supporting people who engage in such tasks (Janssen et al., 2015). When encumbrances, motion and multitasking intersect, e.g. when having to engage with a smartphone while cycling (Marshall & Tennent, 2013; Wolf et al., 2016) or driving (Wolf et al., 2011), a useful approach is to design for micro-gesture support, i.e. for small movements of the hand which can be performed without interrupting the primary activity (Wolf et al., 2011).

As noted in the introduction, Avila et al.’s previous research on encumbered interaction in the domain of musical performance and learning involved an ethnographic study of how skilled guitarists engaged with digital media such as videos during both individual practice sessions and band rehearsals as they learned new material (Avila et al., 2019a, 2019b). They observed numerous examples of musicians struggling with the manual control of media on a secondary computing device (e.g. trying to find, play and loop around segments of videos as they also tried to play along on their instruments to learn their parts), as well as developing one-handed control strategies to simultaneously manipulate both instrument and computer peripherals (tapping the space bar on a computer keyboard or clicking on a mouse), highlighting the impact of encumbrances on their music practices.

2.2. Augmenting guitars

There is already a rich history of digitally augmenting guitars. Some authors have referred to the guitar as a ‘laboratory for experimentation’ (San Juan, 2020) in allusion to how different sound producing aspects of the guitar can be modified through electronic augmentation (Hopkin & Landman, 2012) or physical intervention (e.g. prepared guitars). Throughout the years, the guitar has also evolved from being solely an acoustic instrument to an electronic device that can connect to myriad other devices, from amplifiers and pedals (many of which are now digital) to digital devices including phones and digital audio workstations. Recently, the guitar has also been thought about as an artefact that could be augmented with a digital layer (Benford et al., 2016) and as part of the so-called ‘Internet of Musical Things’ (Turchet et al., 2017; Turchet & Barthet, 2019).

Within the field of new interfaces for musical expression (NIME) research, it is widespread practice to augment musical instruments with sensors and actuators to interface with secondary computing devices. In the case of the guitar and other hand-held string instruments (e.g. violin or mandolin), instrument designers have explored different approaches to incorporate live electronics into their instrumental practices, such as by mounting additional inputs on the surface of the instrument for proximal control (Ko & Oehlberg, 2020; MacConnell et al., 2013; Turchet, 2017), or by incorporating the inputs into existing proxy artefacts associated with the instrument, for example the guitar plectrum (Morreale et al., 2019; Vets et al., 2017) and vibrato bar (Kristoffersen & Engum, 2018) or the violin bow (Young, 2002). Overall, a common design goal in these projects is to allow the musician to control aspects of the performance without compromising their established embodied practices with the instrument (Kristoffersen & Engum, 2018; MacConnell et al., 2013; Morreale et al., 2019; Vets et al., 2017; Young, 2002).

Although previous research has revealed numerous ways of augmenting guitars to support their playability and sound producing aspects, our distinctive focus here lies in the less explored objective of supporting interaction with external media while playing the guitar, especially as part of learning and practice when instrumental transcription is involved. Within human–computer interaction (HCI) studies, encumbrance and interruptions emerging from digital media navigation have been noted as a problem that affects embodied guitar practice (Cai et al., 2021; Wang et al., 2021), as well as when following directions from instructional videos of manual practices and practical tasks such as cooking, applying makeup, or fixing a bicycle (Chang et al., 2019; Tuncer et al., 2021). Within the commercial sphere of music technology, gamified approaches like Yousician or Rocksmith have provided ways of learning music through interactive scores that respond and provide feedback to guitarists when they make mistakes. However, the aim here is not to provide a prescriptive ‘solution’ to encumbered interactions during instrumental transcription, but to remediate the existing performance preparation practices and the existing artefact ecologies of working musicians through technological interventions, which might involve augmenting the instrument itself, but which might also be open to other design possibilities as we explore below.

2.3. User-centred design with musicians

We now turn to the design methods that might help us tackle this challenge. User-centred design, in which users are closely involved in the design of interactive technologies from the earliest stages of ideation through iterative design cycles, is widely recognised in HCI and practiced in industrial user-experience design. The aim is to integrally involve the users in the design process and harness their valuable domain knowledge to inform the design work that addresses them; thus, facilitating their participation is crucial for them to contribute productively to the process (Lucero et al., 2012). User-centred design has been applied to the design of interactive musical instruments. For example, Tanaka et al. co-designed a haptic-based audio editing device (the Haptic Wave) with a group of visually impaired audio producers by collaborating with them over the course of two and a half years. To inform the initial stages of the design process the researchers prepared a haptic interface to enable the participants to ‘feel’ and subsequently discuss qualities of sound which are often presented in a visual medium (Tanaka & Parkinson, 2016). Newton and Marshall developed the Augmentalist system to facilitate the process of prototyping and tinkering with sensor technology with musicians (Newton & Marshall, 2011). The system simplified the process of mapping sensor input to audio output to enable a group of musicians without any experience of sensor technology (including guitarists and bassists, among others) to technologically intervene in their own instruments and allow the researchers to examine their design approaches (Newton & Marshall, 2011). Co-design workshops have proved to be a powerful specific method within user-centred design for engaging researchers and musicians through making and low-fi prototyping. Inspired by elements from the Magic Machines workshops outlined by Andersen and Wakkary (2019), Lepri and McPherson ran a series of workshops with musicians in which they were prompted to engage in embodied making processes to design and craft fictional musical instruments, with the aim of eliciting value-based design implications (Friedman, 1996) emerging from the instrumental concerns and musical values embodied in their low-fidelity hand-crafted instruments (Lepri & McPherson, 2019). Overall, our research followed an iterative user-centred design process that included initial co-design workshops to elicit ideas and opinions from guitarists through low-fi prototyping and the subsequent implementation and testing of a functional prototype.

2.4. Soma design

There is a longstanding recognition of the importance of embodied experience both in our interaction with computers and in making music. Dourish has drawn on phenomenology to argue that human–computer interaction should focus on how people create and communicate meaning through their embodied experience of systems in the world (Dourish, 2004). Focusing on music, Leman et al. (2017) drew attention to embodied music interaction as a dynamical perspective that addresses how sensorimotor principles can shape musical experiences and meanings. Against this broad recognition of the importance of embodied interaction, somaesthetic design has emerged as a method that directly targets designing interactive technologies for the body and for embodied experience.

However, more recent approaches like somaesthetic interaction design, albeit not diametrically opposed to existing views of embodiment in HCI, address the seldom explored somaesthetic appreciation of felt subjective bodily experiences (such as the one described above with musical instruments) as a resource for design (Höök, 2018; Loke & Schiphorst, 2018; Shusterman, 2012). One of these design approaches is soma design (Höök, 2018), which is grounded in the pragmatist philosophy of somaesthetics (Shusterman, 2008) that is primarily concerned with the practice and development of aesthetic appreciation through the soma (i.e. the amalgamation of body, mind and emotions) as a way of improving the human life experience. In this sense, soma design focuses on training the somaesthetic appreciation of designers and honing their first-person perspective of felt experiences to inform the design process (Höök et al., 2018). This approach has recently gained traction in the field of HCI and has proven to be a fruitful approach to design (Alfaras et al., 2020; Avila et al., 2020; Delfa et al., 2020; Höök et al., 2021; Loke & Schiphorst, 2018; Tennent et al., 2021).

To hone their somaesthetic appreciation, soma designers engage in bodily practices such as Feldenkrais exercises (Feldenkrais, 2011) that train them to turn their attention to the subjective appreciation of specific areas of the body. To build on these introspective activities, designers can also engage in active processes of bodily exploration which are supported by encounters with the tactile and kinaesthetic properties of various materials (Höök et al., 2018) and with mediating technological toolkits such as soma bits, a collection of deformable and elastic soft materials that can be actuated through inflation, vibration, and heat and applied to different parts of the body during interaction to help inspire future body-focused interactive devices (Windlin et al., 2019).

Soma design encounters are enhanced by grounded embodied ideation design practices, such as estrangement (Wilde et al., 2017) (to disrupt the familiar, e.g. by setting physical constraints or misaligning perception), bodystorming (Schleicher et al., 2010) (physically situated brainstorming), slowstorming (Höök, 2018) (a slower, more reflective, material-oriented and embodied version of brainstorming) and embodied sketching (Márquez Segura et al., 2016) (a type of bodystorming focused on capturing the emerging ‘sketches’ resulting from embodied ideation), which in the context of soma design allow for questioning and deconstructing habitual movements and encompassing practices to reveal new perspectives which may inform the embodied design process. The end goal of soma design practice is to make use of the tacit somaesthetic knowledge that emerges from the exploration of different somatic activities and to use it to enable interaction gestalts (Lim et al., 2007), i.e. intimate correspondences between the artefacts we design and their users (Höök, 2018).

The intimate embodied relationship between musicians and musical instruments has been deeply examined in fields such as phenomenology (Sudnow & Dreyfus, 2001) and musicology (Nijs, 2017), reporting on proficient performers’ experiences of their instrument being felt as an extension of their body when performing (Ingold, 2019; Nijs, 2017; Sudnow & Dreyfus, 2001). These views have in turn influenced arguments that digital musical instruments (DMIs) purportedly lack the acoustic ‘feel’ of traditional musical instruments, thus potentially affecting the embodiment relationship between musician and instrument (Magnusson, 2009). In response, some DMI designers have aimed to simulate this sensation in DMIs by providing vibrotactile feedback (Marshall & Wanderley, 2011). However, these design responses mainly focus on the instrument rather than the internal subjective experience of the musician whilst playing it.

Conversely, in a co-design process with a cellist, Andersen and Gibson (2017) drew upon the musician’s experience of playing the instrument, as well as electroacoustic composition, to augment his cello through a series of embodied ideation (Wilde et al., 2017) and prototyping exercises. The aim of this particular design process was to enable sustained periods of flow and concentration while playing the augmented cello (Wilde et al., 2017). Considering the lived experience of playing guitar, which is honed through years of developing embodied practice, musicality, expression, and performance with the instrument, we have also employed our somaesthetic appreciation of playing guitar (as guitarists) to inform our design process with the Stretchy Strap, as described in the next section. In turn, our exploratory work in soma design for DMIs (Avila et al., 2020) has inspired a series of remarkable works that have also employed a somaesthetic interaction design approach to make DMIs and augmented instruments, such as by harnessing the performer’s experience of their body whilst singing to inform the design and development of an interface for capturing the physiological changes that occur when doing so (Cotton et al., 2021; Tapparo & Zappi, 2022), as well as movement-based interactions in general (Bigoni & Erkut, 2020; Mainsbridge, 2022).

3. Design work

In what follows we report how we engaged guitarists in an overall iterative user-centred design process to explore the possibilities of better supporting encumbered interaction with digital media when playing their instruments. This unfolded in four stages: (i) a series of four co-design workshops involving 20 guitarists that employed low-fi prototyping methods to explore the broad design space of potential solutions; (ii) a soma design workshop led by external soma design experts that focused on the embodied experience of playing the guitar from which the concept of the stretchy strap emerged; (iii) development of a functional prototype; and (iv) evaluating the prototype with 10 proficient players.

3.1. Initial co-design workshops

The aim of the initial workshops was to gather insights and inspirations by evaluating and refining low- and high-fidelity prototypes with guitarists. We recruited 20 active players who reported learning musical pieces with interactive media on a regular basis. We recruited initial participants via our local networks of musicians and then employed the snowballing technique to reach further participants through them. We targeted working musicians, i.e. players who regularly expand their repertoire and perform in front of live audiences, and were mindful throughout to try to recruit a balance of players in terms of musical style, age, and gender. Our final 20 participants comprised mostly guitarists, a few bassists, and other instrumentalists who played both instruments and other chordophones (e.g. ukulele, mandolin).

The workshops took place in a laboratory space and were structured in three stages: (1) an introduction, in which the researcher introduced himself to the participants and presented the aims of the workshop and the topics to be discussed; (2) a series of co-design rounds, in which the participants tested the prototypes, reflected on them and refined them through a series of design activities; and (3) a concluding discussion to wrap up the workshop, facilitated by a series of reflective questions led by the researcher. When testing the prototypes, participants were given the task of interacting with computer-based media during guitar practice, which included attending to a user interface (laptop screen) and operating media transport controls to manipulate playback (e.g. play, pause and rewind). To ensure a broad exploration of ideas, we identified in advance of the workshops five interaction modalities for controlling external media that we used to seed discussions:

(1) Mounting additional controls (e.g. dials, switches, and touchpads) on the surface of the guitar itself (an approach that is evident in several contemporary ‘smart guitars’ that use embedded displays to control onboard digital effects, such as the LAVA ME 3 Guitar and Lâg’s HyVibe Smart Guitar).

(2) Introducing a pedal to enable external media control via the feet.

(3) Employing voice user interfaces (VUI) for control via speech.

(4) Gestural interaction, in which players would bodystorm (Schleicher et al., 2010) gestures with the guitar (e.g. moving the neck of the guitar), gestures near the guitar (i.e. mid-air gestures, e.g. Leap Motion), touch gestures on the body of the instrument (e.g. slide, tap, flick), and gestures with their body (e.g. moving their head or eyes). Using cardboard mock-up guitars enabled participants to focus on bodily movements without the ‘distraction’ of having to play and make sound and allowed for easy annotation, both by drawing and sticking on imagined controls.

(5) A music recognition technique called Muzicodes, in which successfully playing musical ‘codes’ (pre-scored sequences of pitches and rhythms) that are embedded into the music itself triggers digital interactions (Greenhalgh et al., 2017).

To our minds, the first four represented obvious design strategies to consider, while the last one, building on a thread of our own previous research, introduced something more unusual into the mix to widen the discussion beyond the obvious. We assembled various prototypes by using off-the-shelf technologies (i.e. MIDI foot controllers, mobile phones, voice-based dictation features on Mac OS, and Muzicodes software) for participants to evaluate and discuss the interaction modalities. We also provided them with acrylic and cardboard guitar cut-outs to hold, mime with, demonstrate envisaged gestures, and draw on (e.g. sketching controls), akin to established methods such as bodystorming (Schleicher et al., 2010) and embodied sketching (Márquez Segura et al., 2016).
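The general idea behind the fifth modality, in which a pre-scored sequence embedded in the playing triggers an action, can be illustrated with a minimal sketch. This is not the Muzicodes implementation: it assumes that played notes arrive as a stream of MIDI note numbers, it ignores rhythm entirely, and the function name `detect_code` is hypothetical.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Sequence, Tuple


def detect_code(notes: Iterable[int], code: Sequence[int]) -> Iterator[int]:
    """Yield the index of each played note that completes the pitch code.

    Assumption: notes are MIDI note numbers; timing/rhythm is not considered.
    """
    if not code:
        return
    target: Tuple[int, ...] = tuple(code)
    # Sliding window holding only the most recent len(code) pitches.
    window: Deque[int] = deque(maxlen=len(target))
    for index, pitch in enumerate(notes):
        window.append(pitch)
        if tuple(window) == target:
            yield index


# Illustrative use: the code E4-G4-C5 is completed by the sixth note played.
played = [60, 64, 67, 64, 67, 72]
matches = list(detect_code(played, [64, 67, 72]))
```

A practical system would also need to tolerate timing variation and pitch-detection errors, which this exact-match sketch does not attempt.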

Participants considered each of our five seed modalities in turn, enacting possible solutions and discussing these as a group, guided by questions such as whether they would use these technologies in their everyday practice, or whether they envisioned alternative applications for the specific interaction modalities beyond the setting of everyday practice. Finally, we explored their attitudes regarding the intervention of our prototypes into their ecosystem (i.e. instruments and accessories), specifically the benefits and constraints of physically modifying or augmenting existing artefacts versus creating additional supplementary accessories. Below, we briefly summarise the most salient impressions of and critical reflections on each interaction modality from our 20 participants.

3.1.1. Instrument-mounted controls

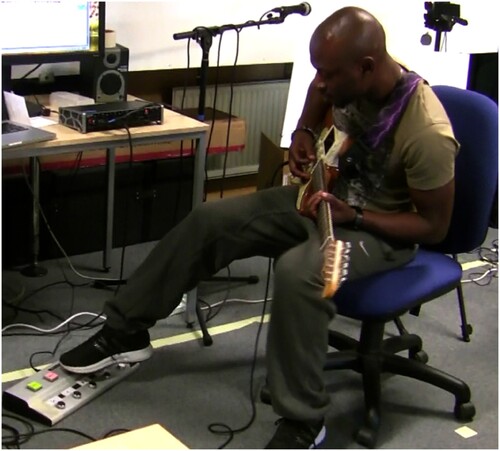

Participants generally pointed out at first that proximal instrument-mounted touch controls were convenient for keeping their focus centred on the guitar. On closer inspection, however, 12 participants highlighted this as a disruptive interaction, as they reported needing to visually attend to the numerous controls in the touch screen prototype, which consisted of a mobile phone attached to the body of the guitar running a GUI-based MIDI controller that sent control messages to a laptop. During the subsequent design activities, participants responded by exploring designs with a reduced number of inputs in the form of physical buttons with tactile cues. Likewise, participants proposed assigning more actions to single inputs to simplify the interface (Figure 1).

Figure 1. (Above) Participant using the touch controls mounted on the guitar. (Below) Sketch of instrument-mounted controls on acrylic guitar.

Half the participants raised concerns as to whether such an approach would be too physically invasive with respect to the guitar, for instance by scratching it, leaving adhesive residues behind or changing its resonating properties. Similarly, they discussed the size and positioning of the device on the guitar in relation to its body shape and the physicality of different playing styles. For example, one participant mentioned that playing livelier music would require more expressive movement of the strumming hand, which might be obstructed by the device. In response to these issues, 18 of the participants proposed compact and detachable (‘clip-on’) interventions which could be flexibly positioned on any part of the guitar. We also presented the idea of a bespoke guitar with integrated technologies for media control (e.g. browsing, navigation, control, and feedback). Many deemed this concept impractical, specifically noting that acquiring a guitar solely to support their practice preparation would be neither cost effective nor aesthetically appealing. Furthermore, guitarists often use the same guitar and equipment for practice as they do for performance, further inhibiting acceptance of a bespoke ‘practice’ instrument.

3.1.2. Pedal

For many participants, a pedal was the most obvious, familiar, and reliable approach for hands-free control of media resources, especially for those who use effect pedals as part of their ecosystem. Participants pointed out that such devices are well established (e.g. commercially available foot-controllable page turners for tablets) and easy to operate. However, 11 of the participants raised concerns similar to those about the instrument-mounted controls, specifically that a pedalboard would also draw their attention to a secondary device, albeit one that would enable them to stay hands-on with the guitar.

Similarly, participants raised the issue that having to constantly stomp on pedals to navigate media could be physically tedious and time-consuming. In response, half of the participants suggested that the pedalboard device could either provide peripherally visible cues, such as lights on each footswitch to make them more easily locatable, or reduce the number of footswitches to simplify the interaction with the stomping mechanism (Figure ).

3.1.3. Voice commands

Most participants were familiar with this technology from other contexts, such as when driving, and for the most part voice commands were perceived as a potentially convenient technology to enable hands-free interaction. However, 9 of the participants suggested that this modality would be better suited for older people or those with relevant disabilities, rather than their own context of practice. Most pointed out that voice commands would need to function reliably to avoid user frustration, especially as in this context commands would need to be issued repeatedly to pause, stop, rewind, and play media. Furthermore, 8 participants mentioned that even a robust voice user interface (VUI) would rapidly become impractical and inconvenient when practicing guitar playing and singing, as single-word commands such as ‘stop’ could be accidentally triggered if contained in the lyrics sung. Hence, several participants suggested that two-word commands might be required to sidestep the issue (i.e. deafen the system). When asked to discuss how they might design their own voice commands, several participants deemed this a potentially prohibitively time-consuming configuration task that would require prior specialist knowledge of system configuration.

3.1.4. Physical gestures

12 participants noted that if video-game technologies like the Microsoft Kinect (i.e. motion capture) or the Nintendo Wii (motion sensors) were to be used to detect the movements of the guitar and enact gestures, there would invariably be instances of false positives and false negatives, resulting in a frustrating experience (similarly noted in the previous interaction modalities of voice and musical commands). Interestingly, 12 participants proposed strategies that resembled proximal controls. For example, they proposed a set of discrete gestures involving the body of the guitar, such as tapping or sliding their finger across the body of the guitar to trigger media actions.

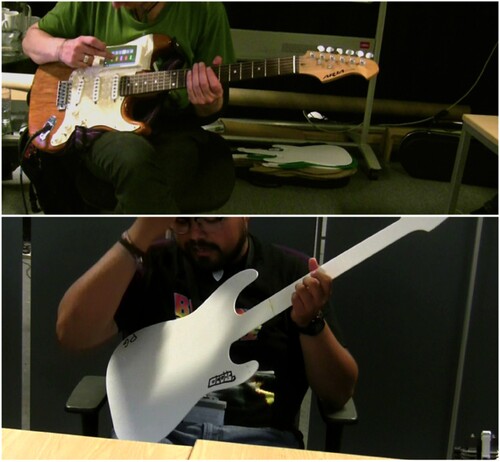

3.1.5. Musical codes

In this instance 11 participants appeared quite amused by the game-like qualities of interacting with media and triggering bespoke actions by playing customisable musical phrases on their instrument, and were thus excited by the prospects of this technology. However, given that the interaction with the system implied playing distinct musical phrases (Figure 3), 14 participants raised similar concerns to those raised with the VUI prototype, specifically the impracticalities of configuring the system to recognise distinct musical phrases, and the potential for false negatives and false positives. Hence, in this case they also proposed it would be necessary to selectively ‘deafen’ the system.

Figure 3. Musical notes as inputs for media control.

In summary, initial scoping workshops revealed participants to be adamant about conserving the acoustics and aesthetics of their instruments, with a majority preference for non-invasive, temporary interventions that can be removed and transferred across different guitars. Moreover, although receptive to technological interventions in this space, they disfavoured any interaction modalities prone to overload or confuse other modalities already in use during performance or that were susceptible to the Midas Touch problem, i.e. requiring unique and discernible input to avoid being accidentally triggered (Kjeldsen & Hartman, 2001). In general, participants showed a desire for reliable interaction modalities that would simply allow them to focus more on the activity of playing, suggesting that designing for encumbered interaction requires looking beyond immediate questions of where controls go (on an instrument or in pedals) to also consider how they recede into the background to become part of the embodied playing experience.

3.2. Soma design workshop

Our engagement with soma design came about by a fortuitous accident when we were invited to participate in a soma design workshop staged jointly between our research team at the University of Nottingham and researchers from the Royal Institute of Technology, Stockholm, who were championing the approach within HCI. Three of the authors of this paper attended the workshop, bringing along the problem of encumbered interaction with guitars as a challenge for soma design.

The workshop was driven by the overall theme of balance, bringing together various ongoing projects for which balance was seen as being an important or potentially fruitful angle on design, including dancing with prosthetic limbs, walking balance beams, operating flying harnesses and, in our case, playing the guitar while standing and moving; see Höök et al. (2021) for a full account of the workshop. Throughout, we focused on the somaesthetic appreciation of guitar playing, and considered interventions in this through the lens of balance, exploring how our bodies assumed various postures when conducting different guitar-related activities, such as performance and practice preparation.

The workshop began with a series of Feldenkrais exercises to attune our senses to the aesthetic experience of balance, leading us to relax and focus on various parts of our body during various balance exercises, initially solo and then with a partner. Following this, we undertook a series of bodily explorations using the soma bits toolkit (Windlin et al., 2019), which comprises various soft materials (e.g. cushions and straps) and actuators to control these (including vibration and inflation) that can shape material encounters with the body (Höök et al., 2018). Working in a small team of two guitarists and two soma designers, we tried positioning these artefacts at distinct parts of the body that mediate contact between the guitarist and the guitar, or that are engaged when performing standing up, such as the feet, torso, back muscles, and the arms. We also deliberately intervened in the players’ sense of balance when playing the instrument. In one example, we stacked a soft cushion on vibration actuators, on a board, on a pivot, to mock up an unusual wobbly pedal board that the guitarist could stand on and rock back-and-forth as a form of control while receiving feedback through vibrations in their feet. In a second workshop we placed inflatable cushions between the guitar and their body to explore whether they might be able to squeeze against the instrument as a form of control while receiving feedback through increasing or decreasing resistance (i.e. inflation and deflation).

As we continued to experiment with varied materials, we repurposed an elastic yoga band as a guitar strap. The kinaesthetic properties of the elastic material allowed us to engage in motions that are unfamiliar with conventional guitar straps, which are sturdy and do not stretch once set, but that nonetheless felt aesthetically pleasing both to perform and to watch. Defamiliarizing (Wilde et al., 2017) the habitual function of the strap (which is to simply hold the guitar in place) provided an interesting design resource to explore the bodily motions and sensations that emerge from the interplay between guitar and body. With this stretchy strap we explored how it felt to slowly sway the upper parts of the body while holding the guitar or to tug the neck of the guitar downwards or pull it forwards by stretching the elastic material. The sensation of temporarily pushing the guitar away from one’s body in order to control some digital media or effect and then have it quickly return to place felt natural and pleasing, and mirrored gestures that some guitarists already perform, such as moving and bending the neck to create vibrato.

Towards the end of the workshop, we improvised a Wizard of Oz performance to show how our Stretchy Strap, as it was now called, could be used to modulate audio effects. One of the guitarists put on the strap and started playing guitar over an amplifier whilst balancing and swaying his body back and forth on the wobbly pedal and stretching the elastic strap. At the same time another guitarist simulated various interactions by observing their movements and correspondingly modulating the sounds coming out of the amplifier by using audio software on a laptop (Figure 4).

Figure 4. Wizard of Oz performance with Stretchy Strap and Wobbly Pedals.

Our final plenary discussion with wider workshop participants beyond the immediate ‘guitar team’ allowed us to reflect on these powerful and aesthetically pleasing embodied sensations of interacting with a guitar through balancing, squeezing, rocking, and stretching. We realised that this focus on balance and use of the soma bits had directed our attention to the ways in which the body engages the guitar beyond the traditional focus of the fingers and hands, and largely beyond the feet too. We realised that other bodily interactions might be used to control digital media without overloading the hands and feet and that moreover this might even enhance the feeling of playing. The ideas that we had experimented with remained with us after the workshop and led to two subsequent activities. One was a follow-up workshop to further explore positioning squeezable and inflatable cushions around the guitar, which led to the unusual design concept of breathing instruments as reported in Avila et al. (2020). However, while this was certainly an intriguing idea, we felt that its realisation as a practical proposition for everyday guitarists was unlikely in the near term. Instead, our attention kept returning to the Stretchy Strap concept as a potentially feasible intervention and so we decided to progress to making and testing a functional prototype.

3.3. Design of the Stretchy Strap functional prototype

The notion of augmenting the guitar strap to become a media transport controller addressed findings from both our co-design and soma workshops. While the idea emerged from the Soma Design workshop as resonating with the bodily experience of playing the guitar, it also addressed key requirements from the co-design workshop, namely: augmenting the existing ecology of guitar accessories; not being invasive to the instrument itself; being readily transferrable between guitars; and avoiding overloading an already heavily used modality. The guitar strap is a ubiquitous guitar accessory which is used to suspend the guitar around the body of the player so that they do not need to hold it themselves. Although the strap is not a sound producing device, guitarists often exploit the fact that the guitar is safely suspended from their body to explore ancillary motions with the guitar, an aspect of performance that has been explored in other guitar augmentation projects such as augmented plectra (Morreale et al., 2019).

The elastic affordances of the Stretchy Strap concept oriented our design exploration towards e-textiles, as recent works in this field of design have explored stretch-based interaction techniques applied to the navigation of media (Olwal et al., 2020; Vogl et al., 2017). Furthermore, it has been reported that textile-based sensors can be smoothly integrated into everyday objects to imbue them with interactive qualities (Vogl et al., 2017), as is our aim in augmenting the guitar strap, and that stretch-based inputs are especially suitable to support micro-gestures (Olwal et al., 2020; Wolf et al., 2011), i.e. interactions that require less than four seconds to initiate and complete, and are usually intended to minimise visual, manual and mental attention in contexts where imprecise interactions are prone to happen, such as in guitar practices with digital media.

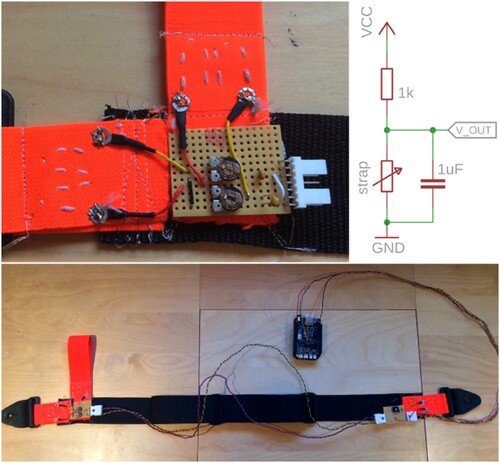

In the case of the Stretchy Strap, we crafted a set of three stretch sensors by cutting segments of a 2-way stretch elastic ribbing and hand-stitching them with stainless steel conductive yarn (80% polyester and 20% stainless steel). The stitch pattern is a running stitch which zigzags across the width of the elastic fabric. This allows the conductive fibres of the yarn to be compressed when the fabric is stretched. To increase the compression of the fibres and the tension of the elastic fabric material, a 10 cm piece of elastic fabric is looped around the plastic ends of the strap and then machine-sewn to the ends of a guitar strap (Figure 5). This ensures that the stretch sensor is strong enough to hold the weight of an electric guitar, yet can still be stretched when downward tension is applied and returns to its original position when let go. To connect the stretch sensors to electronic components, the ends of the yarn are overstitched with silver-plated nylon thread. The silver threads are then looped around solder tags and tinned with solder. The conductive yarn forms one half of a voltage divider circuit (Figure 5). The fixed resistor is chosen to balance the nominal resistance of the thread, and the capacitor provides lowpass filtering for noise reduction. The output of the voltage divider is sampled by a 16-bit analogue-to-digital converter on a Bela embedded computer (McPherson & Zappi, 2015), which runs a script mapping sensor readings to actions.
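The role of the fixed resistor and the capacitor in this circuit can be illustrated numerically. The following is a minimal sketch and not the circuit as built: the supply voltage, the resistances, the capacitance, and the placement of the sensor as the lower leg of the divider are all assumed values chosen for illustration, and every function name is hypothetical. It shows why matching the fixed resistor to the sensor's nominal resistance is a sensible choice (the output changes most per ohm of sensor change at that point) and how the capacitor sets a low-pass corner frequency.

```python
import math

# All component values below are ASSUMPTIONS for illustration only.
V_IN = 3.3             # supply voltage across the divider, volts
R_REST = 10_000.0      # sensor resistance at rest, ohms
R_STRETCHED = 7_000.0  # sensor resistance when stretched, ohms
R_FIXED = 10_000.0     # fixed resistor, matched to the resting sensor
C_FILTER = 1e-6        # filter capacitor from divider midpoint to ground, farads


def divider_output(v_in: float, r_sensor: float, r_fixed: float) -> float:
    """Midpoint voltage, assuming the sensor is the lower leg of the divider."""
    return v_in * r_sensor / (r_sensor + r_fixed)


def sensitivity(v_in: float, r_sensor: float, r_fixed: float) -> float:
    """Derivative of the output with respect to sensor resistance (V per ohm)."""
    return v_in * r_fixed / (r_sensor + r_fixed) ** 2


def rc_cutoff_hz(r_sensor: float, r_fixed: float, c_farads: float) -> float:
    """-3 dB corner of the RC low-pass formed by the capacitor and the
    divider's source resistance (the two resistors in parallel)."""
    r_parallel = r_sensor * r_fixed / (r_sensor + r_fixed)
    return 1.0 / (2.0 * math.pi * r_parallel * c_farads)


def adc_code(v_out: float, v_ref: float, bits: int = 16) -> int:
    """Ideal converter code for a voltage clamped to the range [0, v_ref]."""
    full_scale = (1 << bits) - 1
    clamped = min(max(v_out, 0.0), v_ref)
    return round(clamped / v_ref * full_scale)


if __name__ == "__main__":
    for label, r in (("rest", R_REST), ("stretched", R_STRETCHED)):
        v = divider_output(V_IN, r, R_FIXED)
        print(f"{label}: {v:.3f} V, ADC code {adc_code(v, V_IN)}")
    print(f"corner frequency: {rc_cutoff_hz(R_REST, R_FIXED, C_FILTER):.1f} Hz")
```

With these assumed values the resting output sits at half the supply voltage and the filter corner falls at roughly 32 Hz, which would likely pass a deliberate tug on the strap while attenuating faster electrical noise.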

Figure 5. The Stretchy Strap with a close-up view of its e-textile circuit and schematic.

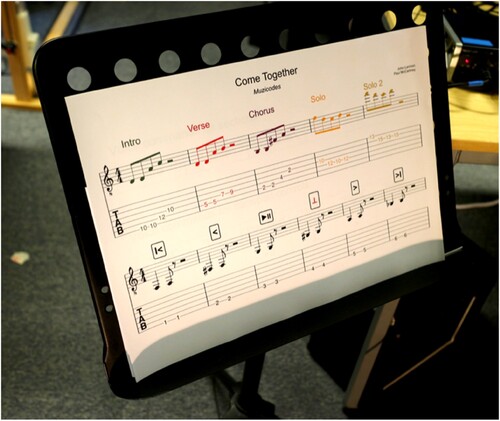

To use the Stretchy Strap as a media controller we employed a combination of technologies. To read and process the sensor data we use a Bela Mini running a script that sends MIDI CC (continuous controller) messages whenever the stretch sensors in the strap are stretched. These MIDI messages are sent to the host machine over USB. To host and run the media we used the SoundSlice platform (Footnote 1), which synchronises YouTube videos with interactive music notation in the web browser. By embedding the SoundSlice player in a static webpage and using their API and the Web MIDI API, we were able to map incoming MIDI messages from the Stretchy Strap to media controls to navigate a music video and the notation (Footnote 2).
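A MIDI Control Change message is three bytes long: a status byte (0xB0 plus the channel number), a controller number, and a 7-bit value. The sketch below, written in Python for illustration rather than in the language of the Bela script or the web page, shows how a normalised sensor reading might be packed into such a message and how a receiver could turn the resulting stream into one discrete event per stretch. The controller number, the thresholds, and all function and class names are assumptions; the source does not specify them.

```python
CONTROL_CHANGE = 0xB0  # status nibble for Control Change in MIDI 1.0


def to_cc_value(reading: float) -> int:
    """Scale a normalised sensor reading (0.0 to 1.0) to a 7-bit MIDI value."""
    clamped = min(max(reading, 0.0), 1.0)
    return int(clamped * 127 + 0.5)


def encode_cc(channel: int, controller: int, value: int) -> bytes:
    """Build a three-byte Control Change message."""
    if not (0 <= channel <= 15 and 0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("channel must be 0-15; controller and value 0-127")
    return bytes([CONTROL_CHANGE | channel, controller, value])


def decode_cc(message: bytes):
    """Return (channel, controller, value), or None if not a CC message."""
    if len(message) != 3 or (message[0] & 0xF0) != CONTROL_CHANGE:
        return None
    return message[0] & 0x0F, message[1], message[2]


class StretchTrigger:
    """Fire once per stretch using two thresholds (hysteresis).

    The threshold values are hypothetical and would need tuning per sensor.
    """

    def __init__(self, on_threshold: int = 90, off_threshold: int = 50):
        self.on_threshold = on_threshold
        self.off_threshold = off_threshold
        self.armed = True

    def update(self, value: int) -> bool:
        """Feed one CC value; return True only when a new stretch begins."""
        if self.armed and value >= self.on_threshold:
            self.armed = False
            return True
        if not self.armed and value <= self.off_threshold:
            self.armed = True  # sensor has relaxed; allow the next stretch
        return False


if __name__ == "__main__":
    NECK_SENSOR_CC = 20  # hypothetical controller number
    trigger = StretchTrigger()
    for reading in (0.1, 0.5, 0.8, 0.75, 0.3, 0.9):
        message = encode_cc(0, NECK_SENSOR_CC, to_cc_value(reading))
        _, _, value = decode_cc(message)
        print(list(message), "fires" if trigger.update(value) else "-")
```

Using two thresholds means that a stretch held near the trigger level fires once rather than repeatedly, which is one way to limit the accidental triggering that workshop participants were concerned about.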

These media control actions are triggered by engaging in subtle but firm bodily movements with the guitar which do not demand too much pull force to stretch the textile sensors. For example, tugging the neck of the guitar downwards toggles between play and pause, pushing down on the body of the guitar with the forearm rewinds the playback, and pulling on the rip cord on the side sets loops (Figure 6). The stretches which are more ready at hand (i.e. pulling down the neck and pushing down the body of the guitar) are mapped to more critical and immediate actions like play, pause and rewind, whereas the rip cord is mapped to a secondary action which can be used when the guitarist stops playing, such as setting playback loops.
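The mapping described above can be summarised as a small lookup from gesture to transport action. This is a hypothetical model for illustration only: the `Transport` class, the gesture names, the five-second rewind step, and the treatment of the rip cord as a simple loop toggle are all assumptions, and the code does not use the SoundSlice API.

```python
from dataclasses import dataclass


@dataclass
class Transport:
    """Toy stand-in for a media player's transport state (hypothetical)."""

    playing: bool = False
    position_s: float = 0.0
    looping: bool = False

    def toggle_play(self) -> None:
        self.playing = not self.playing

    def rewind(self, step_s: float = 5.0) -> None:
        # Step back by an assumed amount, never before the start of the track.
        self.position_s = max(0.0, self.position_s - step_s)

    def toggle_loop(self) -> None:
        self.looping = not self.looping


# Gestures that can be made mid-performance map to the immediate actions;
# the rip cord, which needs a free hand, maps to the secondary action.
GESTURE_TO_ACTION = {
    "neck_down": Transport.toggle_play,
    "body_push": Transport.rewind,
    "rip_cord": Transport.toggle_loop,
}


def handle_gesture(transport: Transport, gesture: str) -> bool:
    """Apply the action mapped to a gesture; return False if unrecognised."""
    action = GESTURE_TO_ACTION.get(gesture)
    if action is None:
        return False
    action(transport)
    return True


if __name__ == "__main__":
    player = Transport(position_s=12.0)
    for gesture in ("neck_down", "body_push", "rip_cord", "neck_down"):
        handle_gesture(player, gesture)
        print(gesture, player)
```

Keeping the table to one action per sensor reflects the single-sensor, single-action arrangement the prototype uses.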

Figure 6. Stretchy Strap Control Actions.

3.4. Evaluating the Stretchy Strap with guitarists

We deployed our Stretchy Strap prototype in a user study with 10 proficient guitarists—who either played guitar as working musicians, or regularly learned new songs—to assess their initial experience with the artefact. Recruited participants used their own guitars in the study and were informed they would be asked to practise a song during the study using the SoundSlice media platform running on a laptop using an interactive strap to control the SoundSlice media. Participants were not required to learn the song beforehand and were not expected to perform the song perfectly during the study, but rather to engage with the media as they typically would during practice and to focus on the experience of using the Stretchy Strap. The study involved four stages:

The study commenced with a brief background interview in which participants were asked about their instrumental practice and personal experience with the guitar and their everyday use of media resources to support their performance preparation. The aim of this interview was to survey the participants’ experiences with encumbered interaction in the practice setting.

Following the interview, participants were introduced to the Stretchy Strap and were asked to strap it on to their guitar. Before beginning to practise the song with the strap, participants received a brief tutorial regarding the strap’s mappings and engaged in a brief orientation period, using it to trigger the SoundSlice transport controls.

After familiarising themselves with the strap for a few minutes, participants were then instructed to practise along with the song and navigate the media using the strap. They were free to use the Stretchy Strap for as long as they wanted over the course of this stage of the study.

The study concluded with a semi-structured interview in which we captured participants’ experiences of using the Stretchy Strap. Questions explored issues such as the responsiveness of the stretch inputs and the bodily experience of using the strap to control the media and whether the overarching experience felt comfortable for them. We also asked them to compare the Stretchy Strap with the artefacts they would normally use to support their practice (e.g. mouse, or laptop trackpad), and comment on whether the strap allowed them to focus more on guitar playing (Figure 7).

Figure 7. Guitarist using the Stretchy Strap with SoundSlice.

We now report on the interview data collected from those who tested the strap prototype and distil some salient examples. The background interview highlighted and confirmed the challenge of encumbrance, in that participants interacting with digital media resources whilst playing their instrument would often experience a break in flow when needing to attend to the digital media. Many participants noted they would frequently repeat specific sections of a media track by manually pausing and rewinding to locate their segment of interest. More specifically, some participants mentioned that they wanted to focus their practice time more on playing the instrument rather than configuring equipment or media resources to support the rehearsal.

The third stage of the study, the extended engagement with the strap, revealed several themes. Most participants found the strap intuitive, responsive, and simple to use. The strap can soon feel familiar, ‘It's just a kind of everyday part of playing the guitar’ (PT). In use, participant CG stated it: ‘is quite intuitive, tactile, and physical. You can quickly get the feel for how to do it’. Participant GM observes that this simplicity and absence of a configuration process enabled them to just get on with the desired activity, stating: ‘I have limited time to sit down I don't want to be configuring equipment, setting up pedals and stuff like that.’ (GM).

Most of the participants reported that the strap controller felt like it was part of the instrument: ‘It feels like it is more part of holding the guitar, more like guitar playing’ (CG). Participant PT also observed how it was ‘closer to being part of the movements, the actions, the activities, the process of playing’, going on to reflect that: ‘[whereas] the computer does not feel like part of the instrument’ (PT).

Furthermore, the participants indicated that the strap’s closeness to the guitar enabled them to maintain a better focus and flow with the instrument, in contrast to their experience of using other modalities such as foot pedals. For example, ‘When you are using pedals you are in a different realm of control. When I’m practicing, I want to get into grips with what's happening on the fret board’ (GM), and PT observes that ‘[pedals are] kind of peripheral to your guitar, and the strap feels like it's closer to your guitar’, going on to acknowledge, ‘whereas this feels more like you could sort of like flow into the action.’ (PT). Participant CG identified a similar experience, observing, ‘You haven't got to lean forward to stop and tap things and upset your balance with the guitar. You can keep the guitar in the right kind of pose and yeah it just helps you to carry on with it without breaking up that experience’ (CG). In addition to the guitar, maintaining a focus on the SoundSlice UI while controlling it was also highlighted, ‘after a little while with using the strap I didn't have to take my eyes off the screen at all, you know, I could just like stop, start again and carry on, so it was actually helpful with focusing on what you're mentally doing’ (CG).

The final discussion stage focused on talking through the strap interactions as configured for the study and discussing possible adaptations. Participants were struck by the proximity of the strap to their body and the instrument and were therefore keen to prioritise assigning the real-time media transport actions to the strap. GM notes, ‘The advantage of having it on the strap is obviously the proximity. There are things that you need that real time control for’. Participant AP expands on this, stating that play and pause were the critical real-time functions to prioritise, ‘In the computer I think that the play and pause are the ones that cause the most interruptions, so maybe it’s important to have them in close to the body.’ Participant PB observed that assigning play and pause to the neck down control action enabled them, ‘to have [their] hands on the neck of the guitar, not doing something else and then suddenly it's time to play’.

There was a collective desire to ‘keep it simple’ (AP), a thought also echoed by PB, ‘I probably only would use play, pause and perhaps rewind on it, and not have lots of controls that I wouldn't be sure quite what was really going on’, suggesting that a simple interaction (in this case simply mapping a single action via a single sensor rather than relying on complex mappings and/or multiple sensors) can sometimes be an effective one. Discrete control such as play and pause appeared appropriate to the nature of the strap’s control interactions, but when fine-grained or continuous control was required, other modalities may serve better. For example, PB goes on to suggest, ‘If I wanted to make something fine-grained, I'm just going to get on with the mouse I think’. The rip cord interaction was not considered a real-time control action, as it required the player to take a hand away from performing on the instrument, ‘I could never do that while playing because I’d have to take the hand off to pull it’ (PT). Nonetheless, PT goes on to note that ‘It has a very different feel as an action to the other two, it's more deliberate. It's not an action that you do in flow’, thus this control action is more suited to, ‘some form of break period […] like moving to the next song, or back to the beginning of this one’ (PT).

Participants also highlighted some other limitations with this version of the prototype. Having to push on the body of the guitar to rewind was deemed a tedious action, and participants suggested that a preferred mapping for this control input would be resetting the media play head to the beginning of the track. Conversely, some participants speculated about other potential applications for the Stretchy Strap, such as using it during performance to control audio effects or other things on the stage.

In general, participants described a positive bodily experience of using the Stretchy Strap, as it enabled them to keep the flow of guitar playing a priority and the controller felt like part of the instrument and the performance. The proximity of the controller to the body and the instrument, along with the subtle movement of the neck of the guitar (to stretch the strap), removed the need to lunge forward to attend to other peripheral controls, such as a computer or a pedalboard. In relation to this latter point it must be noted that all the participants used the prototype whilst sitting down. The controls of the strap were considered most suited to real-time controls of play and pause, and the rip cord to transport actions that take place in breaks between performances, whereas ‘fine-grained’ or continuous control might be better suited to other peripheral controllers.

4. Design recommendations

We now reflect across our extended design process to draw out recommendations for designing unencumbered interaction with guitars and potentially with other instruments or even non-musical objects. We begin with some general reflections on the wide variety of design possibilities that arose during our process before highlighting three design strategies that are embodied by our Stretchy Strap: (i) designing for postural interaction; (ii) designing for the soma; and (iii) designing for the wider ecology of the instrument.

4.1. Diverse design possibilities

It is evident from our design process that there are potentially many ways of augmenting a guitar to better support encumbered interaction and that choosing among these might be influenced by an equally wide variety of factors. We also see how supporting encumbered interaction involves not only mitigating challenges such as manipulating digital media while playing an instrument but also exploring new aesthetic possibilities. The ability of electric guitarists to manipulate tremolo bars, volume knobs, pickup switches and other controls mounted on their instrument shows that it is possible to develop an eyes-free relationship to controls mounted in slightly odd places near the strings of a guitar. This suggests that most encumbrances and interruptions in the context of practice emerge when the guitarist is required to switch from thinking about guitar playing towards thinking about what another digital system is doing, and then switch back again. Given this, although instrument-mounted controls can physically reduce the space and time to move between instrument and media controls, they still require a change of thought and attention, and so may not be significantly better than conventional control methods (e.g. using a keyboard and mouse), especially if the controls still demand dexterous or complex operation. Moreover, there is a risk of overloading and hence disrupting a modality that is already reserved for making sound with the instrument. Finally, we observed a resistance to modifying guitars and the need for a solution that can be applied to multiple guitars from a player’s collection.

Pedals are very commonly used by guitarists (and other instrumentalists) in performance to trigger and control effects and media with their feet whilst having their hands free to perform with the instrument; however, they also impose physical and cognitive demands. While the feet allow for relatively precise timing of simple actions, they lack the articulation and fine-motor capabilities of the hands, and so the inputs in pedalboards are restricted to footswitches or rocking pedals that need only gross motor control. And although the placement and affordances of pedals compensate for this, they still demand some prior physical preparation (coordination and body-weight distribution) from performers, which is attained over time by regularly performing with these devices, in order to achieve skilful operation (Emmerson, 2016; Furniss & Dudas, 2014). The greater the number of inputs on a pedal, the more coordination and visual attention it will demand, and unless the guitarist is sitting down, the interaction is limited to one foot. And again, pedals are already widely used for controlling sound, so there is a risk of overloading what is already a busy modality.

Although most commercial VUIs enable hands-free interactions, media navigation with these devices is often restricted to remote control actions, such as play and pause. Although these controls are convenient for passive interactions with media, Chang et al. (2019) have pointed out that navigating interactive media actively (such as in how-to videos to learn musical pieces) with VUIs required their participants to introduce delays in order to sequence multiple commands (e.g. pause, then rewind) to achieve the same task that a single click would achieve (e.g. hover the mouse to point earlier in the timeline and click), and that they need to anticipate that the system would need to take some time to process their voice command in addition to the time it takes them to produce the utterance. These authors also identify technical challenges such as audio separation when users are already engaging in a noisy task (e.g. playing an instrument) that could undermine interactions with VUIs. Our workshop participants also pointed out how using VUIs could conflict with singing.

The concept of musical codes or commands that are embedded within the music itself presents a similar issue, as mapping musical notes performed with the instrument to trigger media controls could conflict with the performance rehearsal. Although controlling media via instrumental performance offers the advantage of exploiting existing skills of the guitarist, restricting notes from the playable range of the guitar to be used as inputs would be limiting in the context of preparation. It also suffers from similar problems to VUIs in the pacing of sequencing musical commands.

We must be sensitive to the fact that instrumental practices with the guitar require extensive manual engagement and are often accompanied by singing, and we should avoid overloading interaction modalities (McPherson et al., 2013), especially where they are already employed for the primary task of playing music and involve highly skilled and intense interactions that could easily be disrupted with (musically) catastrophic consequences. Looking beyond music, in any potential application domain, when we are considering encumbered interaction design, we need to critically inspect the specific practices of the activity that we are designing for. For example, what are the unique things that people do and ways they work within that field? What are they good at? Where are the challenges and constraints?

At first glance, these initial reflections are bewildering. What is a designer to do when faced with this complex array of choices and trade-offs? Is there a best option that trades off ease of media control with potential conflict with existing practice? Or is it a matter of personal choice for each player?

While we recognise these possibilities, a specific and distinctive design did emerge from our process in the form of the Stretchy Strap. We do not claim that the Stretchy Strap will ultimately prove to be better than other approaches, certainly not for all players under all circumstances, but we do argue that it is a particularly interesting solution to the challenge of encumbered interaction because it embodies three important design strategies.

4.2. Design for postural interaction

The primary purpose of straps (and harnesses of various kinds) is to help us manage the challenge of encumbrance, connecting objects to our bodies in ways that allow us to continue to stand and move while using them. It is perhaps not surprising, then, that straps might support the design of encumbered interaction.

In moving beyond being a regular strap to becoming an interactive device, the Stretchy Strap harnessed a particular mode of interaction, postural interaction, that draws on those bodily movements associated with maintaining postural orientation and equilibrium while encumbered, in this case while playing the guitar. The possibilities of postural interaction have previously been considered in HCI through research that aims to monitor and give feedback about posture as part of both physical and emotional wellbeing (Asplund & Jonsson, 2018; Bianchi-Berthouze et al., 2006; Morrison et al., 2016; Van Schaik et al., 2002) or that recognises postures, especially hand postures, as a part of gestural control (Brown et al., 2016; Sagayam & Hemanth, 2017). Our approach is different. The Stretchy Strap does not try to recognise a set of postures, but instead simply maps a key postural movement (the flexing of the body in relation to the guitar) as a form of direct input. While simple, we argue that this direct postural approach offers two potentially powerful features as a design strategy:

It avoids overloading other modes, most notably the use of the fingers and hands that are already fully occupied when playing the guitar, the feet which may already be occupied with various pedals, and the voice which may of course be employed for singing and talking. As far as the guitar is concerned, body posture is an unexploited modality.

It also has a distinctive, and we argue potentially powerful, aesthetic quality, both feeling and looking good. As we experienced ourselves during the soma design workshop, it can feel pleasurable to shift posture when playing, for example to ‘dig in’ when playing a sustained note or chord, and such movement could also appear suitably performative to an audience.

On the other hand, this kind of postural interaction has some limitations when compared to other modes. It offers limited degrees of freedom and sensitivity of control when compared to, for example, using the fingers. While players might learn a finer sensitivity of control over time, it is likely that for reasons of both physiology and existing training, interaction via the whole body will remain coarser than that with the dexterous and trained fingers and hands. This suggests that real-time interactions via the strap might be limited to just a few basic actions (play, loop back, stop in our case) and that some configuration work may be required to prepare these before playing. It is also interesting to speculate on other kinds of strap designs that extend the range of movement and degrees of freedom. Harnesses that involve multiple straps, for example, might capture lateral movements around the body as well as the broadly up and down movement of our current Stretchy Strap.
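To illustrate what such a coarse mapping could look like, the following is a minimal sketch, not a description of our prototype's implementation. It assumes a stretch reading normalised to the range 0.0 (relaxed) to 1.0 (fully stretched); the class name, the threshold values and the choice of assigning a light stretch to play/stop and a strong stretch to loop back are all hypothetical. The thresholds stand in for the configuration work that would be prepared before playing.

```python
from typing import Iterable, List, Optional


class StretchMapper:
    """Hypothetical mapping from normalised stretch readings to coarse media actions."""

    def __init__(self, light: float = 0.35, strong: float = 0.75, release: float = 0.20):
        # All three thresholds are assumed values that a player would configure.
        self.light = light      # reading at which a stretch gesture begins
        self.strong = strong    # peak reading that counts as a strong stretch
        self.release = release  # reading at or below which the gesture has ended
        self.peak = 0.0
        self.in_gesture = False
        self.playing = False

    def update(self, reading: float) -> Optional[str]:
        """Consume one reading; return an action name when a gesture completes."""
        if not self.in_gesture:
            if reading >= self.light:
                self.in_gesture = True
                self.peak = reading
            return None
        # Inside a gesture: remember the strongest stretch reached so far.
        self.peak = max(self.peak, reading)
        if reading > self.release:
            return None
        # The strap has relaxed, so the gesture is complete.
        self.in_gesture = False
        if self.peak >= self.strong:
            self.playing = True  # assumption: looping back resumes playback
            return "loop_back"
        self.playing = not self.playing
        return "play" if self.playing else "stop"


def actions_for(readings: Iterable[float]) -> List[str]:
    """Run a sequence of readings through a fresh mapper and collect the actions."""
    mapper = StretchMapper()
    return [a for a in (mapper.update(r) for r in readings) if a is not None]
```

Waiting for the strap to relax before emitting an action, and using a release threshold below the onset threshold, is one way to stop small postural shifts during playing from triggering repeated actions; it also reflects the coarse control discussed above, since only two gesture strengths are distinguished.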

4.3. Design for the Soma

One of the principal aims of soma design is to inform how we can flow with experiences, our body, and the artefacts we design (Höök, 2018). This led us to focus on the experience of our whole bodies when playing the guitar, not just on our hands. Our attention as designers was inexorably drawn towards aspects of bodily experience that are not always in focus for guitarists, including the experience of holding and carrying the guitar and one’s posture and movement when playing it. Our attention shifted away from the fingers, hands and arms towards the torso, shoulders, and legs as potential sites for interactive interventions.

By engaging in activities like estrangement (Wilde et al., 2017) and slowstorming (Höök, 2018), we respectively defamiliarised the experience of playing the guitar, opening ourselves to unfamiliar design possibilities, and reflected on the new sensations we were experiencing during the process. Feldenkrais exercises also helped refocus our design sensitivity to embodied experience while the Soma Bits toolkit, with its focus on soft and deformable materials, led us away from only considering the possibilities of conventional ‘hard’ materials such as switches, dials, foot pedals and of course the guitar itself. It was the playful exploration of the elastic materials in the Soma Bits toolkit that directly inspired the idea of having a stretchy guitar strap. Moving with a guitar connected to the body via elastic straps subsequently proved to be a surprisingly natural and expressive experience and stimulated thinking about how this might address encumbered interaction with external media.

It is notable that the concept of the Stretchy Strap emerged from our soma design workshop, not from the co-design workshops with guitarists. We reflect on several reasons why this was the case. Encumbrance is very much a body-focused issue and so calls out for design methods that address bodily issues. However, playing the guitar is also very much an aesthetic matter in the sense that it needs to both feel good to the player and often look good to the audience too. While the traditional focus on ergonomic design as part of human factors can help address body-centric issues such as motor performance, stress and fatigue, soma design deliberately encourages an aesthetic appreciation of bodily experience and so was an ideal fit for our brief. It is no coincidence that, according to those who tested it, the Stretchy Strap feels good to move with and can also encourage performative movements. However, it should be noted that although media control with the strap was regarded as ‘closer to being part of the movements’ involved in guitar playing (in contrast to peripheral devices like keyboards, mice, and pedals), media navigation with this device is still closer to the logistical aspects of performance preparation than to the embodied flow of instrumental performance.

The soma workshops were also notable for involving people who were experts in bodily experience but who were not experts in playing the guitar. These participants did not arrive with the familiar baggage of expectations and assumptions about guitars and instead drove us to focus more on the body. This contrasts with the co-design workshops, where participants were experienced guitarists whose responses expressed a degree of conservatism. Experienced musicians who are knowledgeable about, if not obsessed by, guitars may see the instrument in terms of its familiar controls, while also being concerned about overloading these or damaging their instruments. Soma practitioners on the other hand were frankly much less interested in guitars than they were in human bodies, which led us in a new direction. However, this raises the challenge of how to give the soma designers in the team who are not instrumentalists a sense of what it is like to play an instrument. One possibility might be to run performance exercises with the instrument alongside general body-awareness activities such as Feldenkrais early in the design process to attune a team’s sensibilities to the character of a given instrument. This might build on the techniques reported by Avila et al. (2020) that encouraged non-players to engage with the guitar, including strumming electric guitars unplugged to make them less intimidating and minimise discordant sounds; playing in open tunings which tend to make pleasant sounds when just strummed; or even playing in pairs, with expert players fingering chords while ‘general designers’ strum strings.

4.4. Design for the wider ecology of the instrument

We must consider that guitars are often regarded as valuable artefacts by guitarists, as they may be imbued with sentimental, material, and aesthetic values which derive from years of ownership and from the provenance of the instrument (Benford et al., 2016). Consequently, our participants were reluctant to accept any kind of intervention that might compromise the material and aesthetic integrity of their guitars, such as instrument-mounted devices.

From this perspective, we reflect on the nature of the strap as a locus of intervention and its implications. Guitar straps are typically mundane and unexceptional accessories, but almost ubiquitous for guitar players (excepting classical guitarists). They can be readily moved from one guitar to another, will not damage the instrument and do not significantly affect the way that it is played. With hindsight this seems like an ideal point at which to innovate without deeply modifying the core technology (the instrument) or usual practice. Users can try out a strap and then adopt it if it suits.

Indeed, the guitar world operates in this way: there is a very wide range of accessories available to guitarists, spanning hundreds of effects pedals, cables, tuners, plectrums and of course straps, which complement, and often connect to, but do not replace the instrument. Some (effects pedals) directly affect the sound that is produced whereas others perform ancillary functions such as tuning or, in the case of straps, managing encumbrance. There are also standards for connecting and controlling them so that they interoperate as part of the extended ecology of the instrument (extending as far as guitar amplifiers and public address systems). Many guitarists assemble collections of such accessories, and these typically change more often than the instrument. Interestingly, innovations that start off in accessories may then find their way into instruments (on-board tuners in acoustic guitars are a good example). In short, the guitarist’s world is highly accessorised, as even a quick glimpse inside a guitar shop or skim of a guitar magazine will testify.

These accessories might also be referred to as ‘gadgets’, especially when they are digital; while that may suggest that they are frivolous, for many guitarists specific gadgets or accessories become fundamental to their sound, style, or practice, for example a favourite effects pedal or a clip-on tuner. We propose that the Stretchy Strap is an appropriate response to the challenge of encumbered interaction precisely because it is a gadget: something that can be easily experimented with, without changing the core instrument or indeed its wider ecology, and that can be transferred between instruments. One consequence of this line of thought is that the strap should be extended to be compatible with the wider ecology of guitar accessories and functions, for example by acting as a digital MIDI controller or analogue expression pedal.
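As a minimal sketch of the MIDI controller possibility, and assuming a stretch reading normalised to the range 0.0 to 1.0, a reading could be converted into a standard three-byte MIDI Control Change message. The function name and the normalisation are hypothetical, and we have not implemented this in the prototype; controller number 11 is used as the default here because the MIDI specification assigns it to expression.

```python
def stretch_to_control_change(reading: float, controller: int = 11, channel: int = 0) -> bytes:
    """Encode a normalised stretch reading as a MIDI Control Change message.

    reading    -- assumed to be normalised: 0.0 = relaxed strap, 1.0 = fully stretched
    controller -- MIDI controller number (0-127); 11 is the expression controller
    channel    -- zero-based MIDI channel (0-15)
    """
    if not 0 <= controller <= 127:
        raise ValueError("controller must be in 0..127")
    if not 0 <= channel <= 15:
        raise ValueError("channel must be in 0..15")
    # Clamp out-of-range sensor readings, then scale to the 7-bit MIDI value range.
    clamped = min(max(reading, 0.0), 1.0)
    value = int(clamped * 127 + 0.5)
    # Control Change status byte is 0xB0 combined with the channel number.
    return bytes([0xB0 | channel, controller, value])
```

The resulting bytes could then be sent through whichever MIDI output a given setup provides, which is left open here. The seven-bit value range gives at most 128 steps, which is more than sufficient for the coarse control that whole-body movement is likely to afford.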

However, we should ask if this is a unique feature of the guitar world. Other musical instruments, such as traditional classical ones, seem less ‘gadgety’, although we note that there are still standard accessories (e.g. cases, rosin, oil, reeds, bows, depending on instrument) and that many have straps so that they can be played while standing or moving. Considering other kinds of products, some would seem to be very accessory or gadget friendly, for example home computers and cars. So, we recommend that researchers and designers consider the whole ecosystem of devices and resources at play, and be open to intervening through accessories and gadgets, especially in domains where the core technology or device may be more resistant to direct change or replacement.

5. Conclusions and future directions

This paper presents a response to the challenge of encumbered interaction that had been raised by previous ethnographic studies of guitarists’ everyday musical practices, especially how they learned, prepared, and practiced new material. The essence of the challenge is how to better support them in interacting with external media (videos, sheet music, tabs and so forth displayed on phones, tablets, and PCs) while holding and playing their instruments. Our response involved an extended human-centred design process in which we engaged guitarists in co-design workshops to explore the many potential ways in which their guitars might be digitally augmented, before prototyping and testing a novel solution in the form of the Stretchy Strap, an elastic guitar strap connected to stretch sensors that can be stretched and relaxed to trigger various actions such as replaying and looping external media. This design first emerged during a soma design workshop which served to focus our attention on guitarists’ general bodily interactions with their instruments while also defamiliarising their guitars, most notably using various soft materials from the Soma Bits toolkit.

We offer some general conclusions from our experience. Augmenting guitars in this way is something of a ‘wicked problem’ with many potential solutions that exhibit complex trade-offs which may well be subject to individual preferences. This said, the Stretchy Strap occupies a new and distinctive point within the overall design space, potentially offering a form of interaction that does not overload existing modalities and that resonates with the aesthetic experience of playing the guitar. We argue that this is because it embodies three wider design strategies:

Postural interaction – it responds to posture and overall bodily movement in relation to the guitar as a natural aspect of playing the instrument.

Ecology – it focuses on creating an accessory that might easily be introduced into the wider ecology of the guitar, sitting alongside the instrument and other devices.

Soma – the design emerged from a focus on the player’s aesthetic embodied experience rather than on the instrument itself.

Whether our Stretchy Strap design is ultimately successful remains to be seen. There are indeed other interaction modalities that we could have explored and alternative ways in which we could have designed the range of prototypes evaluated by our participants during the co-design workshops, e.g. mounting tactile switches instead of using a touch screen, or mid-air gesture interactions with a Leap Motion controller. However, feedback from our participants provided consistent insights across different groups of guitarists regarding instrument-mounted controls (irrespective of the transducer used for input), pedal-based controls (irrespective of the form factor of the footswitches or the shape of the floorboard’s surface), gesture-based interactions (addressing different gesture modalities, such as mid-air gestures with the hands, touch gestures, body-tracking gestures and instrument-based gestures), as well as other less conventional modalities in this space, such as voice-based interactions and using musical notes as input.