ABSTRACT

This paper presents an Augmented Reality (AR) software suite that supports the operator’s interaction with flexible mobile robot workers. The developed AR suite, integrated with the shopfloor’s Digital Twin, provides the operators with a) virtual interfaces to easily program the mobile robots, b) information on the process and the production status, c) safety awareness by superimposing the active safety zones around the robots and d) instructions for recovering the system from unexpected events. The application’s purpose is to simplify and accelerate the industrial manufacturing process by introducing an easy and intuitive way of interacting with the hardware, without requiring special programming skills or long training from the human operators. The proposed software is developed for the Microsoft HoloLens 2 Mixed Reality headset, integrated with the Robot Operating System (ROS), and has been tested in a case study inspired by the automotive industry.

1. Introduction

In recent years, Human–Robot Collaboration (HRC) has been a popular concept in the industry (Makris 2021). Fully automated production systems have been used to address the requirement for mass production. Nevertheless, the current transition to mass customization of products dictates the need for flexible and easily reconfigurable factories (Chryssolouris 2006; Rabah et al. 2018). This need cannot be met by the traditional, highly automated and fixed production systems. In the context of the HRC approach, robot and human skills can be combined to achieve a more efficient, flexible, and productive manufacturing system, where robots are in charge of the repetitive and most ergonomically stressful tasks, while human operators undertake the tasks that require high dexterity and cognition (Michalos et al. 2014).

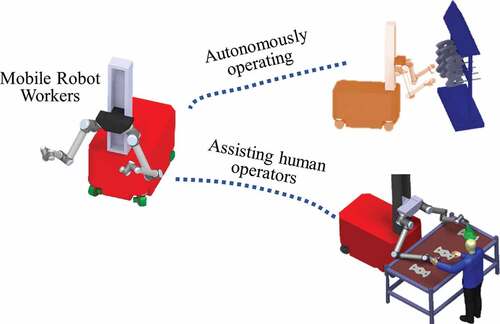

In this direction, the latest trends in European manufacturing foster the employment of mobile robot workers (Green et al. 2008) to support human operators in various manufacturing processes (). These robot workers are flexible enough to autonomously navigate between different workstations, undertaking multiple operations such as part handling, screwing and drilling. Their mobility enables faster and easier dynamic reconfiguration of the factory when changes need to take place in the system’s structure and/or operations (Kousi et al. 2018).

The facilitation of such a reconfigurable production paradigm requires the deployment and integration of a set of novel robotic technologies: a) online environment and process perception for the robot workers, b) safety strategies for enabling human-robot safe co-existence in a fenceless environment, c) monitoring and control mechanisms for HRC execution orchestration, d) digital twinning techniques for online tasks re-planning and e) intuitive human side interfaces enabling seamless integration and communication between the human operators and robot workers.

This paper focuses on the deployment of smart human side interfaces towards enabling efficient Human Robot Interaction (HRI) schemes in the context of the reconfigurable production paradigm (). In particular, an Augmented Reality (AR) based software suite is presented aiming at providing interfaces to support human operators in different phases of the HRC as follows:

Support for easy programming of mobile robot platforms and robot manipulators by non-robot experts,

Support in assembly process execution by providing a) assembly instructions and b) input interface for the operators to provide feedback on the execution status,

Support in safety awareness by visualizing safety working volumes and emergency alerts,

Support in execution recovery by providing resilience instructions when unexpected events or failures occur, minimizing the production stoppages.

The paper is divided into the following sections. Section 2 conducts a thorough literature review of existing HRI approaches and their use of Augmented Reality, identifying existing limits and the given system’s progress beyond the state of the art. Section 3 describes the AR software suite’s methodology and supporting features. Section 4 outlines the actions required to develop the proposed solution. Section 5 shows the technology in action in an automobile use case. Section 6 describes the findings of this deployment’s performance evaluation, emphasizing the application’s benefits and user acceptance. Finally, Section 7 summarizes the study’s findings and highlights the authors’ future research goals.

2. Literature review

To bring the screen alive for the spectator, a cinematographer named Morton Heilig created the idea of Augmented Reality in 1950. Heilig created the first AR machine, Sensorama, in 1962. Over the last decades, AR technology has been introduced in different sectors such as medical (Blum et al. 2012; Wen et al. 2014; Sutherland et al. 2019), military (Julier et al. 2002; Livingston et al. 2002), manufacturing (Mourtzis, Vlachou, and Zogopoulos 2017) and education (Wu et al. 2013; Mourtzis, Zogopoulos, and Vlachou 2018). The term Augmented Reality (AR) refers to the presentation of virtual and physical objects simultaneously in a real-world environment, allowing the user to interact with both elements (Azuma 1997).

2.1 Augmented reality and industry 4.0

Over the last two decades, AR has been widely used in the industrial and academic sectors. Augmented reality applications are able to enhance the operator’s perception of the real world. Esengün and İnce (2018) highlighted the added value of using AR technology in manufacturing, maintenance, assembly, training and collaborative operations in the Industry 4.0 era. AR approaches for the real-time maintenance of manufacturing machines have been presented by Zhu, Ong, and Nee (2015) and Mourtzis, Siatras, and Angelopoulos (2020). The visualization of hidden areas and objects outside the operators’ field of view (FOV) was investigated by Fraga-Lamas et al. (2018). Additionally, AR may be used to support operators during the assembly of objects (Atici-Ulusu et al. 2021). Wang, Ong, and Nee (2016) introduced a multi-modal assembly guidance application that assists operators by visualizing assembly instructions and the specifications of the manipulated components inside the user’s FOV.

2.2 Augmented reality integration in robotic solutions

Through AR technology, human operators are able to communicate directly with machines and robots. Andersson et al. (2016) presented an AR solution for the visualization of a simulated robot’s motions using ROS (Quigley et al. 2009) protocol communication. Kyjanek et al. (2019) developed an application for visualizing the percentage of progress of a robot motion. The speed of the robot’s end effector, the robot’s joint values and the trajectory are visualized to the human operator through AR glasses. The reachability and manipulability of a robot manipulator have been investigated by Ong et al. (2020).

2.3 Augmented reality as an HRI method

Nowadays, human and robot resources cooperate in shared working areas, increasing the flexibility of manufacturing systems (Makris 2021). Using AR technology, operators and robot resources are able to collaborate more safely and interactively inside industrial shopfloors (Dianatfar, Latokartano, and Lanz 2021).

Robot controlling

Fang, Ong, and Nee (2012) introduced an AR solution for teaching new poses to a robot manipulator through a desktop monitor. The user was able to validate the created trajectory path after the simulated execution of the motion in the AR environment. An AR solution for controlling the joints of robot manipulators through the screen of a smartphone has been presented by Maly, Sedlacek, and Leitao (2016). Although such programming solutions have been successfully tested in several robotic environments, more direct control approaches for robotic manipulators have been investigated in recent years.

Several AR applications integrated with human gesture-based algorithms for robot control have been presented (Wen et al. 2014; Wang, Ong, and Nee 2016). Maly, Sedlacek, and Leitao (2016) investigated the control of a simulated robot manipulator using a Leap Motion Controller. The operators were able to manipulate a robot arm using hand gesture recognition techniques. A hybrid concept based on both human gesture and speech recognition algorithms, including the editing of a virtual robot’s trajectory paths through pinch gestures, has been introduced in the work of Quintero (2018). These applications are characterized by limited re-configurability because they lack a common software platform for communicating with the robot.

For this reason, Ostanin and Klimchik (2018) introduced a point-to-point (PTP) AR-based solution for programming robot manipulators based on ROS. Another ROS-based application for the planning and execution of robot motions has been developed by Kyjanek et al. (2019). Lotsaris et al. (2021b) introduced a hologram-based solution for defining the goal poses of a static robot’s motions through the user’s interaction with the robot’s hologram inside the virtual world of the AR application. Nevertheless, a combined approach for programming both stationary and mobile robots through a unified AR application is still lacking.

Safety

In recent years, several AR applications focusing on the safe collaboration of human and robot resources have been added to the literature. A hybrid AR solution including smartwatch and AR glass devices has been presented by Gkournelos et al. (2018), in which the operator was informed through visual and audio alerts when the robot was moving inside their common workspace. In parallel, the robot’s working area was visualized inside the operator’s FOV. Makris et al. (2016) presented an AR application providing visual instructions to human operators for an assembly process. Through their FOV, the operator was informed about the status of the robot resource (Robot in motion, Robot stopped) and the working area of the robot. The visualization of a static robot’s dynamic safety zone in the virtual world, based on the robot’s pose, has been investigated by Cogurcu and Maddock (2021). Additionally, Hietanen et al. (2020) introduced an AR solution for the visualization of a static robot’s margins inside the operator’s FOV using a depth sensor. Although several applications focusing on safety in HRC environments have been presented, few of them support mobile robots and system recovery strategies in parallel.

Mobile robots

An AR application for the collaboration of mobile robots and operators has been presented by Green et al. (2008). Through the AR glasses, the user was informed about the robot’s status (battery level, motor speed, etc.) and was able to send new navigation goals to the mobile unit. Using the tablet-based application developed by Chandan, Li, and Zhang (2019), operators were able to perceive the mobile robots’ location and navigation path in the investigated shopfloor and help them complete their navigation tasks if required. A touchscreen-based approach was introduced for the remote manipulation of a mobile robot by Hashimoto et al. (2011). Dinh Quang Huy, Vietcheslav, and Seet Gim Lee (2017) developed a handheld device and an AR application for the remote control of mobile units. A human-gesture-based AR application for controlling surgical mobile robots was investigated by Wen et al. (2014). The navigation and teleoperation of mobile robots using air tap and virtual button tapping techniques were investigated by Kousi et al. (2019b).

2.4 Smart interfaces VS augmented reality

Apart from AR glasses, other smart interfaces such as smartwatches, tablets, projectors and smartphones have been implemented in HRC environments to assist operators. An AR application based on a smartwatch device presented by Gkournelos et al. (2018) gave an active role to the operator during the execution of an assembly process. The operator was able to stop or resume the robot’s motion, report a human task’s completion and change the running mode of the robot (manual guidance, normal mode). Through the application developed by Dimitropoulos et al. (2021), the operator was able to change the functionalities of a robot manipulator and be informed in case of unexpected safety issues inside the shopfloor. Additionally, using a projector device, assembly instructions were provided to the human operator. Another projector-based solution for educating young children in a more interactive way was introduced by Kerawalla et al. (2006). Maly, Sedlacek, and Leitao (2016) presented a smartphone-based application in which the simulated model of a robot manipulator was visualized on a smartphone’s display and the user was able to send motion commands to the robot model using the smartphone. An AR application for visualizing a robot’s motion using a simulated robot model was developed by Lambrecht and Kruger (2012). The visualization of the location of products and tools in large-scale shopfloors through a tablet has been investigated by Fraga-Lamas et al. (2018). Another tablet-based application was presented by Frank, Moorhead, and Kapila (2016), assisting the user in defining the object to be manipulated by the robot. Segura et al. (2020) introduced an AR application including one tablet and one projector, assisting operators during the wiring of electric cabinets.

2.5 Augmented reality applications in industrial shopfloors

Lotsaris et al. (2021b) validated their AR application through the assembly process of the left front damper of a vehicle. The assembly operation of a motorbike alternator was used for the validation of the application developed by Wang, Ong, and Nee (2016). An AR application assisting operators during a diesel motor’s assembly process, including the provision of textual instructions and the visualization of robot margins, was introduced by Hietanen et al. (2020). Additionally, Gkournelos et al. (2018) validated their application in a use case from the automotive industry and another one from the white-goods industry. Another application developed by Mourtzis, Vlachou, and Zogopoulos (2017) has been validated in a case study derived from the white-goods industry, focusing on the provision of maintenance services to the user.

The assembly process of an elevator’s cabin and the assembly process of large aluminum panels have been chosen as two industrial use cases by Dimitropoulos et al. (2021). The AR framework presented by Mourtzis, Siatras, and Angelopoulos (2020) was tested and validated in an industrial scenario involving the machine tools used in a machine shop for the maintenance of different machines. In the use case selected by Segura et al. (2020), machine operation tasks were enhanced by AR technology through projection mapping techniques. A use case from the aerospace industry focusing on the production of carbon-fiber-reinforced polymer (CFRP) was selected by Quintero et al. (2018). Rabah et al. (2018) validated their proposed AR solution in a use case from the building manufacturing sector focusing on the maintenance of regulators. Fraga-Lamas et al. (2018) validated their developed application in a use case focusing on the maintenance of ships by presenting failures in hidden areas that are invisible to the operator. An AR application for the inspection of cracks and the ground test process of aircraft production was presented by Eschen et al. (2018).

2.6 Digital twin and augmented reality integration

Digital manufacturing focuses on bringing the design and operation phases of a product closer together (Tomiyama et al. 2019). The integration of a Digital Twin (DT) model enables the utilization and visualization of real-time sensor data inside AR applications (Schroeder et al. 2016) but also the dynamic programming of robot resources (Cai, Wang, and Burnett 2020). Several definitions of the term “Digital Twin” are available in the literature (Barricelli, Casiraghi, and Fogli 2019). The connection between a DT and an AR application has been validated in a use case from the manufacturing-building sector, without including robot resources, by Rabah et al. (2018). The integration of a DT and an AR application in construction machinery was investigated by Hasan et al. (2021) for the monitoring and remote control of machines. Kyjanek et al. (2019) investigated the integration of AR technology with a DT model based on ROS for real-time data collection and usage in timber fabrication. The proposed DT model included real-time data about the robot’s status. Current approaches need to be enhanced to combine multiple real-time sensor and production data under the DTs, enriching the information that can be provided to the human operators. End-to-end integration of the AR devices and software tools into the digital and physical world may enable the deployment of multiple AR features in a unified system.

2.7 Discussion

The AR-based software suite introduced in this paper focuses on the efficient introduction of mobile robots into existing manufacturing shop floors where human operators and robots work together in the same areas for different tasks’ execution. The mobile robots’ ability to autonomously re-locate themselves in different workstations in the factory, undertaking various operations can significantly increase the flexibility of the production system to respond to changes. The proposed AR application aims to allow operators to seamlessly interact with these robot workers, during the programming phase but also during the execution phase, without the need for them to have any expertise in robotics.

Although a lot of research has been done on how to exploit AR in HRC environments, there is a lack of integrated solutions to facilitate the seamless introduction and interaction of mobile robots with human operators in the manufacturing environment. Existing prototypes cover only individual phases of the manufacturing system lifecycle, such as resource programming or support during operation, while only a few of them handle the existence of mobile robots. Most existing applications neglect actual industrial requirements, since they have not yet been tested in real production systems. The lack of interfaces to support human operators in cases of unexpected events related to the robot workers is also an important limitation in the existing literature. Table 1 provides an overview of the developed AR-based features already available in the literature.

Table 1. Comparative table of AR applications in HRC environments.

The proposed AR software suite aims to exceed the existing limitations by:

Providing a set of AR-based User Interfaces covering the identified support needs to enable intuitive interaction between mobile robots and human operators,

Integrating those interfaces under a unified AR-based application,

Deploying integration services for the real-time connection of the AR system with a) the production Digital Twin including smart digital models of the resources, the sensor data and the process information and b) the mobile robots control level,

Exploiting the capabilities provided by ROS to build a scalable integration platform that enables interoperability among different software components and hardware configurations, such as varying sensor models, robot platforms, AR devices and so forth.

The scientific contribution of this paper is inspired by the overall objective of the dynamic reconfigurable factory. The developed AR application addresses the requirements raised for facilitating such a reconfigurable system from a technological perspective providing the following progress beyond the state-of-the-art:

Provision of interfaces that allow human operators to dynamically teach new tasks to the mobile robots and reconfigure the production system when needed, as per the production demand.

Digitalization of the factory by employing smart digital models of the shopfloor containing information of the actual environment, including human operators, robots, parts and processes. A synthesis of multiple 2D-3D sensor data is used for the dynamic update of this digital world model based on the real-time shopfloor status.

Connection of the AR application with the digital twin, including the resources’ digital models, the sensor data and the process information.

The presented system builds upon the authors’ previous work (Lotsaris et al. 2021a, 2021b) by enhancing the supported functionalities, optimizing the User Interfaces, extending the integration services and validating the resulting system in terms of performance and user acceptance.

3. Approach

Following the previous analysis, the novelty of the proposed approach relies on developing an AR-based integrated solution that will enable the following:

Shopfloor reconfiguration by introducing mobile dual-arm workers, the so-called Mobile Robot Platforms (MRPs), able to navigate in different working areas executing assembly tasks.

Seamless collaboration of robots and human operators using state-of-the-art AR technologies for human-robot interaction without requiring physical contact.

Support of human operators working in complex and unpredictable systems through assembly instructions, easy robot programming and system recovery solutions.

Increased awareness of human operators through real-time communication with the robot and sensor controllers, as well as connection with the shopfloor’s Digital Twin, so that the application has up-to-date information about the status of the resources and the execution of their assigned tasks and can provide this information to operators through the virtual environment of the AR application.

Easy removal or addition of sensor and robot components in the AR environment, thanks to the AR software suite’s connection with ROS and the large library of publicly available ROS controllers.

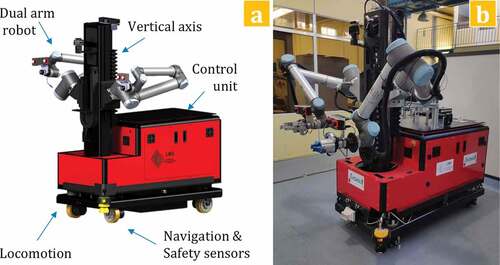

The introduced mobile dual-arm workers consist of two robot manipulators placed on a torso that moves along two axes, one vertical and one rotational. The torso is placed on an omnidirectional mobile platform providing mobility to the robot resource as shown in . The MRPs can navigate autonomously between different workstations of the factory. They use embedded cognition to position and localize themselves in the required workstation in order to successfully execute various manufacturing tasks using the available tooling (Bavelos et al. 2021). A set of 2D and 3D sensors is placed on the robots so that they can perceive their environment in real time and adjust their behavior in order to collaborate efficiently with the human operators. These characteristics allow the system to adapt flexibly to production changes by efficiently dispatching the available resources where they are needed.

In this production paradigm, the MRPs work side by side with human operators in a fenceless environment. This raises the need to support human operators, especially considering that the majority of them do not have any prior knowledge of robot behavior. More specifically, the suggested AR-based system aims to assist the human operator’s work and increase the overall efficiency of the system through the following functionalities:

Provision of information on the operator’s task at each cycle.

Operators’ feedback provision to the system about the outcome of their tasks.

Provision of real-time safety information, including real-time visualization of the safe working areas around the mobile robot.

Provision of easy-to-use AR-based interfaces that give the operators the ability to give direct instructions to the mobile platform and the robot arm. The user can directly move the robot by pointing to the desired location or by simply interacting with the 3D hologram of the MRP.

Provision of resilience instructions to the operators, on how to overcome certain failures or exceptions that may occur during tasks’ execution.

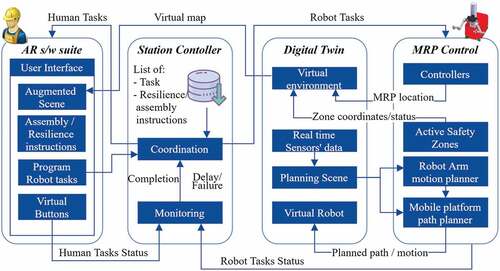

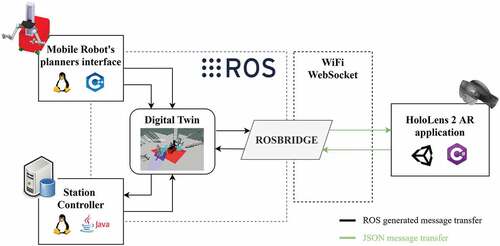

The novelty of the system relies on the end-to-end integration and real-time connection of the AR software suite with a) the Station Controller that monitors and orchestrates the collaborative process execution, b) the DT of the factory that provides the real-time status in the shopfloor, and c) the MRP controllers that provide the online mobile workers state. The data flow of the overall system and the previously presented components are visualized in .

3.1 Station controller

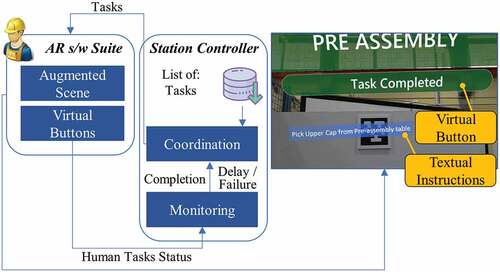

The Station Controller is a software platform responsible for coordinating and monitoring the execution of human-robot collaborative tasks by dispatching them to the different resources available in the shopfloor and tracking their status. It is the main communication platform of the shopfloor: it receives data from the different software and hardware components, analyses them and assigns the execution of future tasks to the available resources. Using AR technology, operators are informed by the Station Controller about their assigned tasks and the system’s recovery actions. On top of that, human operators are included in the execution loop, reporting the status of the execution to the Station Controller through virtual buttons embedded in the AR application.
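The dispatch-and-report loop described above can be illustrated with a minimal Python sketch. It is not the authors’ implementation: the class names, the resource identifiers (`"mrp"`, `"operator"`) and the strictly sequential task order are all assumptions made for illustration only.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    RUNNING = "running"
    DONE = "done"
    FAILED = "failed"


@dataclass
class Task:
    name: str
    resource: str  # hypothetical resource id, e.g. "operator" or "mrp"
    status: Status = Status.PENDING


@dataclass
class StationController:
    # Ordered task sequence; strict sequential execution is an assumption.
    tasks: list = field(default_factory=list)

    def next_task(self, resource):
        """Dispatch the next pending task if it belongs to `resource`."""
        for task in self.tasks:
            if task.status in (Status.RUNNING, Status.FAILED):
                return None  # sequence is blocked by the current task
            if task.status is Status.PENDING:
                if task.resource != resource:
                    return None  # next task is assigned to another resource
                task.status = Status.RUNNING
                return task
        return None

    def report(self, name, success):
        """Record an outcome, e.g. when the operator presses a virtual button."""
        for task in self.tasks:
            if task.name == name and task.status is Status.RUNNING:
                task.status = Status.DONE if success else Status.FAILED
                return task.status
        raise KeyError(name)
```

In this sketch a failed task blocks the sequence, which mirrors the idea that the system stays in a failure state until a recovery action is taken.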

3.2 Digital twin

The Digital Twin combines the real-time sensor data to maintain up-to-date information about the actual production process and the status of the resources. Additionally, it includes the computer-aided design (CAD) files of the layout components to semantically describe and reconstruct the real-time augmented scene. The AR application uses the real-time data of the DT to inform human operators about the mobile robots’ safety zones, helping them avoid violating these zones, as well as about the robots’ upcoming tasks. The reduction of safety zone violation events will increase the production rate of the manufacturing system.

3.3 MRP control

The MRP control system provides access to the MRP’s motion and path planners, which generate collision-free robot arm motions and navigation paths. By having access to the control system of the robot, human operators are able to quickly teach new robot motion and navigation goals, increasing the flexibility and the re-configurability of the production line.

3.4 User interface

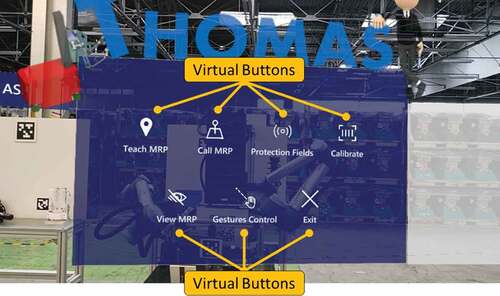

Through the UI of the developed application, human operators gain access to the different features of the AR application. The proposed application features are used for a) Assembly guidance provision, b) Resilience from unexpected events, c) Easy robot programming and d) Awareness of MRP behavior.

3.4.1 Assembly guidance provision

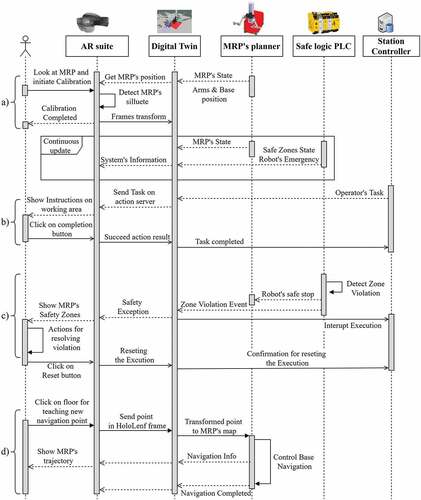

In order to help inexperienced operators during the execution of the required tasks, assembly guidance is provided through the AR application in the form of textual instructions visualized inside the operator’s FOV. The textual information is provided by the Station Controller based on the assembly task assigned to the human operator. Additionally, the AR application is connected with the shopfloor’s working areas and uses green labels to indicate the area that the human operator should walk to in order to execute each task. The data flow of the overall system during this feature’s usage is presented in .

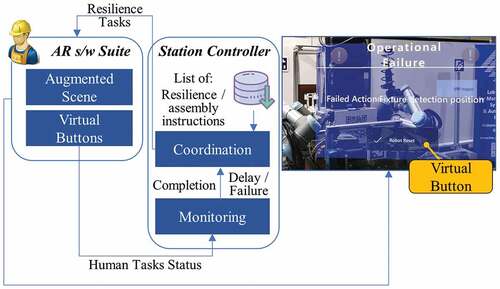

3.4.2 Resilience from unexpected events

Using the proposed AR application, human operators are able to quickly recover the system in case of unexpected events using virtual interactive buttons. The Station Controller characterizes as a system failure in executing a robot task either the inability of the MRP’s controller to plan the corresponding robot trajectory or the inability of the robot manipulator to execute a planned trajectory due to robot singularities. In order to overcome these kinds of failures and resume the assembly process when necessary, the Station Controller assigns resilience tasks to human operators, providing instructions on how to reset the system in the form of textual information. The data flow of the overall system during this feature’s usage by the operator is visualized in .

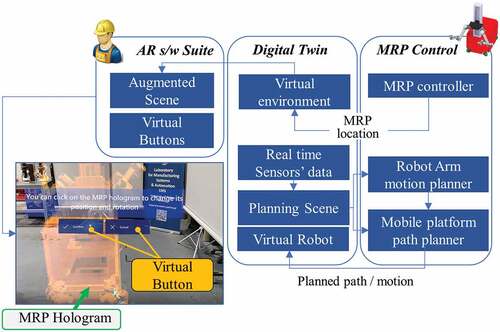

3.4.3 Easy robot programming

Hologram-based easy robot programming techniques are included in the proposed AR software suite. The operators are able to interact with the MRP’s hologram in the virtual environment and define new motion goals for the robot arm and navigation goals for the platform. The easy robot programming is based on the connection of the AR application with the controllers of the MRP. Virtual interactive buttons are used to validate the future poses of the MRP and send planning and execution commands to the robot arm motion and mobile robot path planners. The data flow of the overall system during the easy robot programming feature’s usage is presented in .
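The sequence of placing the hologram, validating the pose and then sending planning and execution commands can be sketched as a small state machine. This is an illustrative Python sketch under stated assumptions: `TeachingSession`, its state names and the `planner` and `executor` callables are hypothetical stand-ins for the AR buttons and the MRP planners, not the actual interfaces of the suite.

```python
class TeachingSession:
    """Hypothetical goal-teaching flow driven by hologram moves and button presses."""

    def __init__(self, planner, executor):
        self.planner = planner    # assumed callable: goal pose -> plan, or None on failure
        self.executor = executor  # assumed callable: plan -> True on success
        self.goal = None
        self.plan = None
        self.state = "idle"

    def move_hologram(self, pose):
        # Moving the hologram defines a new goal and invalidates any old plan.
        self.goal = pose
        self.plan = None
        self.state = "goal_set"

    def press_plan(self):
        # The "plan" button is only meaningful once a goal has been set.
        if self.state != "goal_set":
            return False
        self.plan = self.planner(self.goal)
        self.state = "planned" if self.plan is not None else "plan_failed"
        return self.plan is not None

    def press_execute(self):
        # Execution is refused unless a valid plan exists for the current goal.
        if self.state != "planned":
            return False
        ok = self.executor(self.plan)
        self.state = "executed" if ok else "execution_failed"
        return ok
```

The `plan_failed` and `execution_failed` states correspond loosely to the two failure types named in the resilience section, where a recovery instruction would be shown to the operator.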

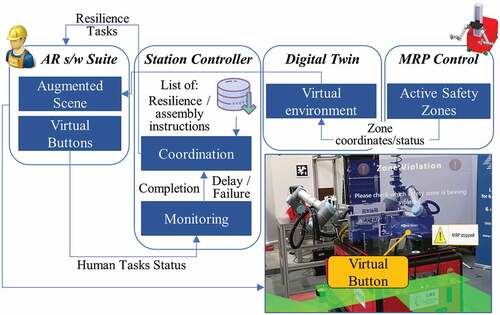

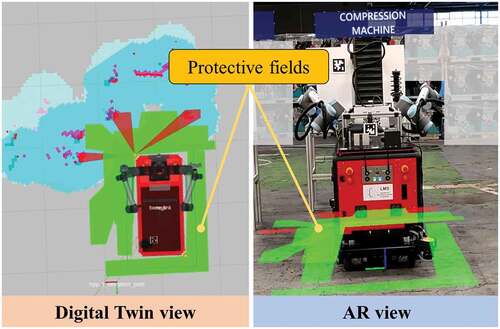

3.4.4 Safety zones’ violation

Through the UI of the proposed AR application, human operators are able to enable and disable the visualization of the safety zones around the MRP in the virtual environment. During the execution of HRC tasks, operators can check the robot’s safety zones and execute their tasks without violating them, since a violation causes a system failure and reduces the production rate of the shopfloor. In the case of a safety zone violation event, the Station Controller sets the system to failure status and provides textual information to human operators about how to recover the system and resume the task’s execution. presents the data flow of the overall system during safety zones violation feature’s usage by the operator.
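As a rough illustration of the violation check, the following Python sketch treats the safety zone as a circle on the floor plane centred on the MRP. The circular shape, the function names and the recovery message are assumptions for illustration; the section does not specify the zone geometry.

```python
import math


def zone_violated(operator_xy, robot_xy, radius_m):
    """Return True if the operator is strictly inside the circular zone."""
    dx = operator_xy[0] - robot_xy[0]
    dy = operator_xy[1] - robot_xy[1]
    return math.hypot(dx, dy) < radius_m


def station_status(operator_xy, robot_xy, radius_m):
    """Map a violation to a failure status plus a hypothetical recovery hint."""
    if zone_violated(operator_xy, robot_xy, radius_m):
        return "failure", "Leave the safety zone and confirm to resume the task."
    return "running", ""
```

For example, an operator at (3, 4) is 5 m away from a robot at the origin, so a 5.5 m zone is violated while a 5 m zone is not.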

4. Implementation

4.1 User interface

The proposed AR application has been created with the Unity AR & Virtual Reality (VR) development platform (Unity 2021), using C# as the main scripting language. The Mixed Reality Toolkit (2021) components were used for the development of the AR application’s features and for enabling cross-platform deployment. The presented application could be deployed on a wide range of platforms such as Windows Mixed Reality, Oculus and OpenVR. An early version of this suite (Kousi et al. 2019b) with fewer features had been tested on the first version of the Microsoft HoloLens headset, which has limited functionalities compared to the latest one. To support the new and updated features of the proposed AR suite, the Microsoft HoloLens 2 device has been selected. The migration from one device to the other was seamless due to the cross-platform design of the application.

The upgraded version of HoloLens offers several additional capabilities that improve the user experience and acceptance, such as a bigger FOV for visualizing holograms, improved speech recognition in noisy environments and advanced manipulation functionalities for touching, grasping, and moving holograms. Multimodal interaction methods are included in the presented suite, in order for the user to use simple and instinctual interactions. The interaction modalities can be classified into three categories: a) gaze and commit, b) hands manipulation and c) hands-free.

Gaze and commit is a basic input mechanism that is roughly comparable to how a mouse is used to interact with a computer (point and click). The head direction vector is determined by the HoloLens headset and used as a close estimate of where the user is looking. A cursor is drawn at the point where the gaze ray intersects virtual or real-world objects. The commit step is the action through which the user interacts with the targeted element. In our suite, the commit action is the air tap gesture performed with the hand held upright. The gaze and commit modality is mainly used for interacting with augmented content that is out of reach.
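The cursor placement described above amounts to a ray cast from the head position along the head direction vector. The following is a minimal sketch of that idea, assuming the targeted surface is an infinite plane; on the device, the intersection with spatial-mapping meshes and holograms is handled by the headset and toolkit, and the function and parameter names used here are hypothetical.

```python
def dot(a, b):
    """Dot product of two 3D vectors given as sequences."""
    return sum(x * y for x, y in zip(a, b))


def gaze_hit(origin, direction, plane_point, plane_normal, eps=1e-9):
    """Return the point where the gaze ray meets the plane, or None.

    origin, direction: head position and head direction vector.
    plane_point, plane_normal: any point on the surface and its normal.
    """
    denom = dot(direction, plane_normal)
    if abs(denom) < eps:
        return None  # gaze is parallel to the surface
    offset = [p - o for p, o in zip(plane_point, origin)]
    t = dot(offset, plane_normal) / denom
    if t < 0:
        return None  # surface lies behind the user
    return tuple(o + t * d for o, d in zip(origin, direction))
```

For instance, a user whose head is 1.6 m above the floor and who looks diagonally downwards would get a cursor on the floor plane in front of them; a gaze directed upwards returns `None` for the floor.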

The hands manipulation modality gives users the ability to touch and manipulate holograms with their hands. It is the simplest interaction method, since users interact with virtual elements in the same way they interact with real-world objects. To realize this modality, the information from the HoloLens hand detection method is combined with the spatial mapping functionality. The accurate tracking of the user’s hand makes it possible to ‘grasp’, ‘pull’, ‘press’ and ‘rotate’ an augmented element, while the surface detection of spatial mapping ensures that these elements are placed and visualized properly and do not collide with actual items. The augmented elements that users can actively interact with in the proposed AR application are the virtual buttons, the MRP’s hologram and the gripper holograms. The floor of the working area, the tables and the fixtures are detected by spatial mapping. Each movable hologram has constraints describing the surfaces to which it can be attached, as well as the axes along which it can be moved or about which it can be rotated. All the constraints defined in the proposed AR suite are presented in Table 2.

Table 2. Augmented element constraints.

The hands-free modality is based on voice input. Using voice to command and control an interface is a simple way to operate without hands and to skip several interaction steps. In assembly use cases where operators are working with their hands, performing extra actions to select and click virtual buttons is extremely inconvenient. From this point of view, the hands-free modality reduces operator confusion and requires less interaction time. The HoloLens speech recognition component is used to identify a set of predefined voice commands. Table 3 presents the voice commands available in the AR application.

Table 3. AR hand-free modality – voice commands.

The AR suite functionalities are served by combining the three aforementioned modalities and their interaction methods. Table 4 lists the available functionalities alongside the interaction methods that enable each of them. The proposed suite includes a main menu that displays the AR application’s functionalities to the user. This menu is a virtual panel that appears in the user’s FOV, as shown in the corresponding figure. The voice command functionalities of HoloLens are used for showing or hiding this menu panel.

Table 4. AR suite functionalities and interaction methods.

Overloading the user with augmented information was a critical concern, as it affects the user experience of the AR suite. For this reason, users can select which virtual panels and holograms are presented in their FOV. In addition, some information is available only when the user is looking at the area it relates to. For example, a working area’s label, a virtual panel showing the name of the area and located above it, is rendered only when the operator looks at that area, in the same way that real signs and labels are seen only when someone looks at them.
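The gaze-dependent rendering of labels can be illustrated with a simple angular test between the head direction vector and the direction towards the label. This is only a sketch under assumptions: the 15 degree threshold and all names are hypothetical, and the actual application may rely on the toolkit’s own focus or ray-casting mechanisms instead.

```python
import math


def is_looking_at(head_pos, gaze_dir, target_pos, max_angle_deg=15.0):
    """Return True if the target lies within max_angle_deg of the gaze direction."""
    to_target = [t - h for t, h in zip(target_pos, head_pos)]
    norm_target = math.sqrt(sum(c * c for c in to_target))
    norm_gaze = math.sqrt(sum(c * c for c in gaze_dir))
    if norm_target == 0.0 or norm_gaze == 0.0:
        return False  # degenerate input: no meaningful direction
    cos_angle = sum(g * t for g, t in zip(gaze_dir, to_target)) / (norm_gaze * norm_target)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding errors
    return math.degrees(math.acos(cos_angle)) <= max_angle_deg


def visible_labels(head_pos, gaze_dir, labels):
    """Filter a {name: position} mapping down to the labels that should be rendered."""
    return [name for name, pos in labels.items() if is_looking_at(head_pos, gaze_dir, pos)]
```

With such a filter, a label placed straight ahead of the operator is rendered, while a label located to the side stays hidden until the operator turns towards it.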

4.2 Integration with the system

The proposed AR application requires a direct connection with the hybrid production system. Because the robots must remain mobile, communication with the back-end server is established over a wireless network connection. The corresponding figure presents the overall architecture of the system and the network topology of the connected components. Two major components have been used for enabling the complete integration of the AR application with the overall system:

- The DT of the manufacturing environment (Kousi et al. 2019a), which includes the virtual reconstruction of the layout according to the CAD models of the involved components, together with the real-time data of the sensors placed on the shop floor or on the mobile robots.

- The Station Controller, which is responsible for dispatching the scheduled tasks among the available resources and monitoring their execution through human and robot side interfaces (Gkournelos et al. 2020).

4.2.1 Unity – ROS bridge

Given the above architecture, a primary issue for end-to-end system integration was the diversity of the subsystems in terms of software programming and communication compatibility. To address this complexity, the discussed solution exploits the ROS middleware suite. The DT runs on the Linux operating system and is developed as a ROS-based program, which provides a communication infrastructure between the different programs.

Although ROS is ideal for robot-based applications, another layer is required to link other types of devices and applications. For this reason, a bridging protocol has been selected that provides a JSON API to ROS functionalities for non-ROS programs. For each message, service, and action exchanged between the DT and the AR application, a corresponding C# class has been developed in Unity to handle the serialization and deserialization of the corresponding JSON message.

The back-end server is provided by the open-source package “rosbridge_server” (2021). This package implements a WebSocket server, which creates a full-duplex communication channel over a single TCP socket. The ROS# library has been used to implement the WebSocket client in the AR application. With the JSON message serialization API and the WebSocket protocol as the transport layer, stable and easily extensible communication is achieved between the application deployed on the HoloLens and multiple programs on the ROS network.
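To make the JSON layer more concrete, the sketch below builds and parses the kind of frames exchanged over the rosbridge WebSocket, using the `op`, `topic`, `type` and `msg` fields of the rosbridge protocol. In the presented suite this role is played by C# classes and the ROS# library; Python is used here only for brevity, the WebSocket transport itself is omitted, and the topic names are hypothetical.

```python
import json


def subscribe_request(topic, msg_type):
    """Frame asking the rosbridge server to forward messages of a topic."""
    return json.dumps({"op": "subscribe", "topic": topic, "type": msg_type})


def publish_request(topic, msg):
    """Frame publishing a message (given as a dict) on a ROS topic."""
    return json.dumps({"op": "publish", "topic": topic, "msg": msg})


def parse_incoming(raw):
    """Return (topic, msg) for a 'publish' frame received from the server, else None."""
    frame = json.loads(raw)
    if frame.get("op") != "publish":
        return None
    return frame["topic"], frame["msg"]


# Hypothetical topic used only for illustration.
request = subscribe_request("/ar/operator_status", "std_msgs/String")
incoming = publish_request("/ar/operator_status", {"data": "task completed"})
topic, msg = parse_incoming(incoming)
```

Each ROS message type then maps to a small serializable structure on the client side, which is what keeps the client independent of a native ROS installation.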

4.2.2 Digital twin

The DT provides all the environment information based on the CAD models of the layout and of the different components of the system. It is also responsible for gathering and structuring the real-time data derived from the different sensors of the system, which are used by the planning and control modules as well as for visualization purposes.

4.2.2.1 Virtual world calibration method

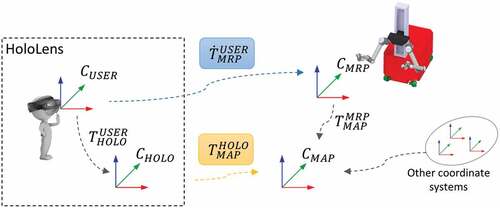

In the presented hybrid production paradigm, which includes various mobile resources, products, and working areas, multiple coordinate systems exist and accurate transformations between them are required. For the accurate visualization of the objects in the augmented space and the efficient human interaction with the MRP, a calibration method for the system needs to be defined. The corresponding figure depicts the different coordinate systems, as well as the transformations between them.

The core coordinate frame of the DT world is the map frame, which is defined during the MRP’s mapping process. All other frames are linked to the map frame either with static transformations for fixed frames, such as the working areas, or with dynamically updated transformations for moving resources. The transformation between the MRP’s base frame and the map frame changes constantly as the MRP navigates inside the shopfloor.

Regarding the AR application’s virtual world, the HoloLens spatial mapping feature provides an accurate understanding of the real space. Spatial mapping has a main coordinate frame, which is initialized at the point where the user is standing at the start-up of the device. The user’s frame is the coordinate system that describes the position of objects with respect to the user’s FOV. As the user moves around the environment, the HoloLens localization component calculates the changing transformation between the user’s frame and the HoloLens main frame using the headset’s inertial measurement sensors.

A method for calibrating the HoloLens virtual environment with the MRP’s world has been developed. This method aims to identify the transformation between the HoloLens main frame and the map frame. These coordinate systems were selected because they remain constant. The calibration method calculates this transformation as a composition of the other transformations (Equation (1)).

One of the transformations in Equation (1) is not known in advance. To identify it, a vision detection system running on the HoloLens is used. The vision system detects the silhouette of the MRP and, as a result, provides a reference point from which this transformation is obtained. For the development of the detection system, the functionalities of Vuforia Engine (2021) have been utilized. The calibration method must be performed at the initial start-up of the AR suite, as phase a) of the sequence diagram in Figure 13 depicts. The calibration process may also be executed at runtime if the user identifies mismatches between the augmented and virtual world.
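The idea behind the calibration, chaining known transformations to obtain the fixed one between the HoloLens main frame and the map frame, can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors’ formulation: poses are planar (x, y, yaw), the chain is assumed to pass through the user’s frame and the MRP’s base frame, and all function and variable names are hypothetical.

```python
import math


def compose(a, b):
    """Chain two planar transforms: a maps frame A to B, b maps frame B to C."""
    ax, ay, ayaw = a
    bx, by, byaw = b
    return (ax + math.cos(ayaw) * bx - math.sin(ayaw) * by,
            ay + math.sin(ayaw) * bx + math.cos(ayaw) * by,
            ayaw + byaw)


def invert(t):
    """Inverse of a planar transform (x, y, yaw)."""
    x, y, yaw = t
    c, s = math.cos(yaw), math.sin(yaw)
    return (-(c * x + s * y), s * x - c * y, -yaw)


def calibrate(holo_T_user, user_T_base, map_T_base):
    """Pose of the map frame expressed in the HoloLens main frame.

    holo_T_user: user's frame in the HoloLens main frame (headset localization).
    user_T_base: MRP base in the user's frame (assumed output of the vision detection).
    map_T_base:  MRP base in the map frame (robot localization, served by the DT).
    """
    holo_T_base = compose(holo_T_user, user_T_base)
    return compose(holo_T_base, invert(map_T_base))
```

Because both end frames are fixed, the result only needs to be recomputed when a mismatch is noticed, which is consistent with running the calibration at start-up and on demand.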

4.2.2.2 Consumed data from digital twin

Once the AR suite is calibrated, the required information can be properly visualized in the virtual world with respect to the global frame, which is common with the map frame of the robotic system. The DT is the main source of the system’s digital information. This interface with the DT makes the AR suite robust to frequent changes of the production station and reduces the work required to keep multiple applications up to date. When something changes in the system, only the DT needs to be updated, and the AR suite automatically inherits the modification.

The MRP’s virtual model, which represents the state of the robot arms and the robot’s location on the map, is served by the DT to the AR suite. This information enables the visualization of the MRP’s hologram. The hologram is loaded from a Unified Robot Description Format (URDF) file hosted on a file server that is provided by the DT. If the robot model description is modified, the AR suite is updated automatically. Moreover, the location of each working area and its labels are provided by the DT.

Another important function of the AR application that uses the connection with the DT is the visualization of the safety fields of the MRP’s laser scanners, as presented in the corresponding figure. The MRP is equipped with two SICK microScan3 (2021) safety laser scanners, one on the front side and one on the back side of the mobile unit. These two scanners are connected to and controlled by a central Safe Logic PLC. Phase c) of the sequence diagram (Figure 13) presents the communication flow between the Safe Logic PLC, the DT, and the HoloLens device.

Figure 13. Sequence diagram of AR – system communication a) calibration phase b) human task execution c) zone violation d) teach MRP new navigation point.

The information exchanged between the DT and the AR suite is summarized in Table 5, together with the ROS message type used to describe each data item. For primitive and geometric data types, the standard ROS message libraries std_msgs and geometry_msgs have been used. Custom messages have been developed where none of the existing types fulfilled the authors’ needs. For the representation of safety zones in the DT and the AR suite, a custom message has been implemented. This message includes the list of points that compose an arbitrary zone, as designed by a safety expert, and each zone’s status (infringed or not).

Table 5. Online data exchanged with the digital twin.
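The custom safety zone message described above can be pictured as a small record holding the zone outline and its status. The sketch below is an assumption about its shape, not the authors’ message definition: the field names `points`, `x`, `y` and `infringed` are hypothetical, and the infringement status is taken as given, since it originates from the safety hardware rather than from the AR application.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class SafetyZone:
    """Client-side view of one safety zone: outline points and infringement status."""
    points: List[Tuple[float, float]]
    infringed: bool


def zone_from_msg(msg: Dict) -> SafetyZone:
    """Build a SafetyZone from a deserialized JSON message (hypothetical field names)."""
    outline = [(float(p["x"]), float(p["y"])) for p in msg["points"]]
    return SafetyZone(points=outline, infringed=bool(msg["infringed"]))


def any_infringed(zones: List[SafetyZone]) -> bool:
    """True if at least one zone is currently infringed."""
    return any(zone.infringed for zone in zones)


front = zone_from_msg({
    "points": [{"x": 0.0, "y": -0.5}, {"x": 1.5, "y": -0.5},
               {"x": 1.5, "y": 0.5}, {"x": 0.0, "y": 0.5}],
    "infringed": True,
})
rear = zone_from_msg({"points": [{"x": -1.0, "y": 0.0}], "infringed": False})
```

The AR side can then draw each outline as a hologram on the floor and change its colour whenever the status flag of the zone changes.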

4.2.3 Station controller

The Station Controller is in charge of orchestrating the various modules and resources. It divides the planned tasks into particular actions and assigns them to the available resources. It is also responsible for monitoring each task’s execution and for providing recovery plans and instructions in case of an unexpected event or failure. The digital work instructions, both for the operator’s tasks and for the recovery steps, are stored in the system’s database. The ROS action protocol has been used to develop the communication interface between the AR suite and the Station Controller. Two action servers are deployed on the AR suite: the first is used for the operator’s assembly tasks and the second for the provision of recovery instructions.

4.2.4 Robot planning interfaces

The robot planning interfaces required for the MRP can be broken down into two main categories, namely the MRP base navigation and the MRP arms control interfaces. Starting with the MRP arms control interface, a MoveIt package for the UR10 robot arms is required, together with a ROS node that employs the functionality of MoveIt, an open-source motion planning framework (Chitta, Sucan, and Cousins 2012), and handles the communication from and to the ROS system. The AR suite’s connection with the DT allows the creation of collision-free trajectories for the robot arms. One ROS node has been developed for the AR application’s connection with MoveIt; it manages the necessary messages and sends them to the appropriate ROS topics. In more detail, it is responsible for:

- Taking the goals, for planning or execution, from the AR application and forwarding them to MoveIt.

- Forwarding the outcome of the motion planning from MoveIt to the AR application.

- Refactoring the trajectory plan calculated by MoveIt and sending it to the HoloLens for display.

For the navigation of the MRP’s base inside the shopfloor, the open-source ROS navigation stack (Marder-Eppstein et al. 2010) is used. This library provides ROS services and topics, on which the interface with the AR suite is built. In this way, the AR suite can control the MRP’s base and send a new navigation goal, while in parallel tracking the path that the MRP will follow during navigation. The communication channel between the AR suite and the MRP’s base planner is presented in phase d) of the sequence diagram (Figure 13).
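As an illustration of how a navigation goal could travel from the AR application to the navigation stack, the sketch below wraps a planar pose into a `geometry_msgs/PoseStamped` structure and a rosbridge publish frame. It is a sketch under assumptions: the topic `/move_base_simple/goal` is a common convention of the ROS navigation stack but is not confirmed for this system, the `map` frame name and the function name are hypothetical, and omitted header fields are assumed to be filled with defaults on the server side.

```python
import json
import math


def navigation_goal(x, y, yaw, frame_id="map", topic="/move_base_simple/goal"):
    """Build a rosbridge 'publish' frame carrying a PoseStamped navigation goal.

    x, y are in metres and yaw in radians, expressed in frame_id.
    """
    pose_stamped = {
        "header": {"frame_id": frame_id},
        "pose": {
            "position": {"x": x, "y": y, "z": 0.0},
            # Rotation about the vertical axis expressed as a unit quaternion.
            "orientation": {
                "x": 0.0,
                "y": 0.0,
                "z": math.sin(yaw / 2.0),
                "w": math.cos(yaw / 2.0),
            },
        },
    }
    return json.dumps({"op": "publish", "topic": topic, "msg": pose_stamped})


# Example: goal 2 m ahead and 1 m to the left, facing along the map's y axis.
frame = navigation_goal(2.0, 1.0, math.pi / 2)
```

The pose of the mobile robot hologram, once expressed in the map frame through the calibration, provides the `x`, `y` and `yaw` values for such a goal.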

5. Industrial use case

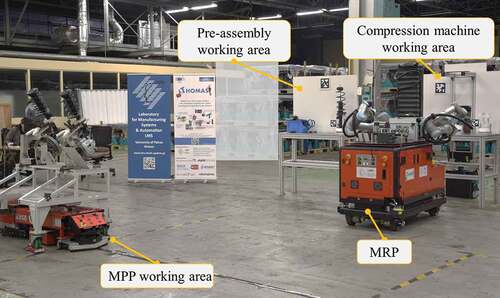

The proposed AR suite has been tested and validated in a case study from the automotive industry, involving the assembly of a passenger vehicle’s front left suspension (damper). A replica of the selected industrial setup was used for the validation of the proposed application, as presented in the corresponding figure. The selected assembly process consists of a set of assembly tasks assigned to a human operator and a mobile dual-arm robot. These tasks are performed in three different working areas, namely: a) the pre-assembly working area, b) the compression machine working area and c) the Mobile Product Platform (MPP) working area. In these three working areas, dampers are assembled by putting together all the required parts, compressing the spring, connecting the compressed damper to the disk and inserting the required cables.

The ability of the MRP to navigate autonomously between the different working areas of the shopfloor enabled an even distribution of assembly tasks among the available resources in terms of human ergonomics and reduction of the resources’ idle time. The distribution of the required tasks among the available resources, and the working area in which each task takes place, are presented in Table 6.

Table 6. Damper assembly process’s tasks.

The layout of the automotive use case, as seen through the operator’s FOV, is presented in the corresponding figure. Blue labels are displayed above each working area to introduce the layout to the operator.

The proposed AR suite has been deployed to assist the operator during the investigated assembly process in four different cases, namely:

- Assembly instruction provision.

- System recovery after robot failure.

- Easy robot programming.

- Safety zone violation.

5.1 Assembly guidance provision

During the investigated assembly process, tasks are assigned either to the operator or to the MRP. When an assembly task is assigned to the operator, textual instructions are visualized inside the operator’s FOV during the execution of the task. The Station Controller, as presented in the previous section, is responsible for sending the textual instructions from the database to the AR application, based on the task assigned to the operator. At the same time, the label of the corresponding working area turns green, indicating the working area to which the operator should walk to execute the task. In addition, the textual instructions appear in the operator’s FOV along with a virtual green button, which is used to inform the Station Controller when the task is completed. As an example, the corresponding figure shows the FOV of the operator when the task ‘Insert cap and align damper in compression machine’ is sent to him/her for execution.

5.2 Resilience from unexpected events

During the execution of the damper’s assembly process, the robot arms’ controllers may occasionally fail to execute a motion. These failures may occur either because the robot’s controller is unable to plan the corresponding trajectory, or because the robot manipulator is unable to execute the planned trajectory due to singularities. To overcome these kinds of failures, a recovery solution is included in the proposed AR software suite. As presented in the corresponding figure, in case of an operational failure of the robot, an alert is sent to the operator, informing him/her about the status and the title of the robot’s failed task. The operator approaches the MRP and manually guides the robot arm to the desired position so that the assembly process can continue. After the manual guidance of the robot arm, the operator informs the Station Controller to continue the assembly process using the virtual button ‘Robot Reset’.

5.3 Easy robot programming

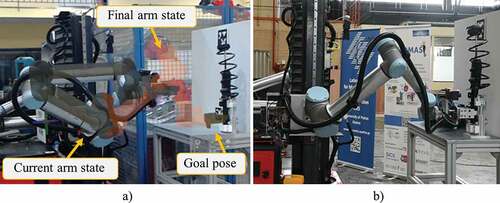

Through the developed AR application, the operator can program the robot without prior knowledge of robot systems. Using a hologram of the robot manipulator, the operator interacts with the virtual robot arm of the MRP and creates new robot goal poses. The robot arm’s controller then solves the planning problem and the manipulator moves to the desired goal pose. The corresponding figure presents the programming of a robot pose for grasping the pre-assembled damper in the pre-assembly working area using this functionality.

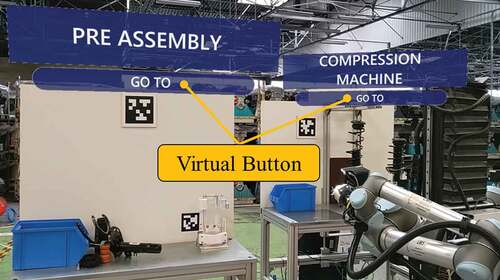

Additionally, a hologram-based approach for programming new navigation goals for the mobile robot is included in the proposed AR application. As visualized in the corresponding figure, the user can interact with a hologram of the mobile robot and move it inside the application’s virtual environment. The operator moves the hologram to a new position and sends a navigation command to the robot platform controller, which computes and executes the corresponding navigation path.

One navigation goal per working area of the use case layout has been initially defined in the application’s database. Through the AR application, the operator can send navigation commands to the mobile platform so that it navigates to each working area of the layout. For this purpose, one virtual button is available at the bottom of each working area’s virtual label. The corresponding figure presents the ‘GO TO’ virtual buttons for the pre-assembly and the compression machine working areas.

5.4 Safety zones’ violation

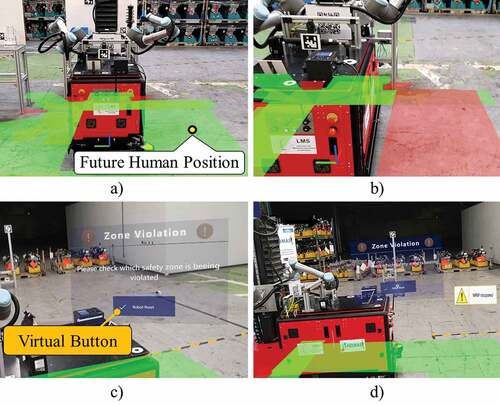

Another functionality of the proposed AR software suite is the visualization of the safety zones around the mobile platform, as shown in the corresponding figure. As soon as a human operator enters a safety zone, the zone turns red and the robot enters safety zone violation mode. Textual information is visualized inside the operator’s FOV to assist him/her in restoring the system. To restore the system from this type of violation event, the operator should move away from the red zone and press the virtual button ‘Robot Reset’. The Station Controller then continues the assembly process by assigning assembly tasks to the available resources.

6. Results

The proposed AR application has been designed and developed to increase the flexibility and the production rate of a manufacturing system by assisting human operators during assembly task execution, and to increase operators’ safety awareness through the visualization of safety working volumes and emergency alerts. A group of 10 researchers tested the developed application and measured its effectiveness on the assembly process presented in the industrial use case section. Their feedback was that the application was very useful during assembly task execution, and that time-consuming actions such as robot programming or system recovery from unexpected events became easier with the AR application’s features.

The case study presented in the previous section has been used for validating the benefits of the proposed system in terms of three aspects: a) recovery of the production system in case of unexpected events, b) usability and time reduction during the robot’s re-programming phase and finally c) user acceptance and application’s usage ease.

Regarding aspects a) and b), a set of key performance indicators (KPIs) has been defined in order to quantify the effect of the deployed AR system. These KPIs are listed in Table 7.

Table 7. AR application performance evaluation KPIs.

System recovery

In current industrial practice, in case of a disturbance or machine failure, the operator has to inform the production manager, who in turn calls the maintenance department to undertake the recovery of the system. The recovery process for an operational failure differs from the recovery process for a safety zone violation event. For this reason, two different KPIs have been considered. The AR application enables the direct recovery of the system by the operator from any location in the layout. In current industrial practice, each time the system enters operation failure or safety zone violation mode, the operator needs approximately 70 or 36 seconds, respectively, to pause the execution of his/her assigned task, approach the main computer of the assembly line, recover the system and return to the layout to complete the previously assigned task. In contrast, using the AR application the operator needs an average of 21 or 6 seconds to recover the system from an operation failure or a safety zone violation event, respectively. The time required for the system’s recovery is thus decreased by 70% in case of an operation failure and by 83% for a safety zone violation event.
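The reported reductions follow directly from the measured times. As a worked check, with the symbols $t_{\text{current}}$ and $t_{\text{AR}}$ introduced here only for illustration:

```latex
\[
\text{reduction} = \frac{t_{\text{current}} - t_{\text{AR}}}{t_{\text{current}}} \times 100\%
\]
\[
\text{Operation failure: } \frac{70 - 21}{70} \times 100\% = 70\%,
\qquad
\text{Safety zone violation: } \frac{36 - 6}{36} \times 100\% \approx 83\%
\]
```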

Programming

This group consists of the ‘Program new robotic arm pose’ and the ‘Program new navigation point’ KPIs. The first KPI compares the time needed to program a new robotic arm pose using ROS and the MoveIt motion planning library with the time needed using the corresponding functionality of the AR application. For the same robot arm motion, the robot programmer of the assembly line was measured to need 21 seconds, whereas the operator needed 8 seconds using the AR application. The second KPI of this group investigates the time reduction for programming a new navigation action through the AR application instead of the traditional programming approach. In the current state of the assembly line, the robot programmer needs 21 seconds to program a new navigation action, whereas the operator can program the same navigation action in 7 seconds using the proposed AR application. The measured times and the results for these two KPIs are summarized in Table 9.

Table 8. Recovery from robot failure KPIs.

Table 9. Easy robot programming KPIs.
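The time reductions for the two programming KPIs can be reproduced from the measured times quoted above (21 s versus 8 s for a new arm pose, 21 s versus 7 s for a new navigation goal). The helper below is an illustrative sketch with a hypothetical function name.

```python
def time_reduction_percent(baseline_s, with_ar_s):
    """Relative time saving of the AR workflow against the baseline, in percent."""
    if baseline_s <= 0:
        raise ValueError("baseline time must be positive")
    return 100.0 * (baseline_s - with_ar_s) / baseline_s


arm_pose = time_reduction_percent(21, 8)
navigation_goal_kpi = time_reduction_percent(21, 7)

print(f"Program new robotic arm pose: {arm_pose:.0f}%")               # prints 62%
print(f"Program new navigation point: {navigation_goal_kpi:.0f}%")    # prints 67%
```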

An important aspect to consider was the ergonomics and the end users’ acceptance of the application when used by human operators. These aspects were critical for ensuring that the designed application is easy to use as well as beneficial for improving the job quality of the operators. As previously mentioned, the AR application was tested by a group of researchers with varying technical backgrounds to validate these aspects. The researchers’ profiles are presented in Table 10. Based on their experience with the proposed AR software suite, the users’ feedback was collected through a questionnaire including the questions of Table 11.

Table 10. Researchers’ profile.

Table 11. Questions for collecting AR application’s evaluation data from the researchers.

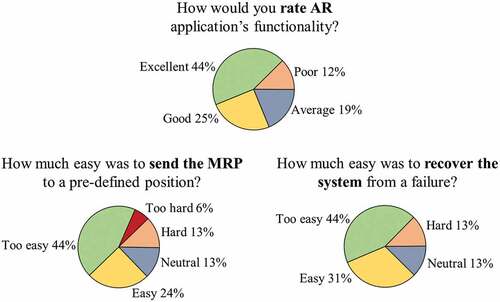

The researchers’ questionnaire included three questions regarding the functionalities of the AR suite. The first question addressed the overall functionality of the proposed application, while the other two focused on how difficult it was for the researchers to teach a new navigation position to the MRP and to recover the system in case of unexpected failures. The outcome per question is presented below.

How would you rate AR application’s functionality?

About 44% of the researchers who tested the AR application rated the proposed solution as excellent, 25% characterized it as ‘Good’, and only 12% rated it as poor. The rest of the researchers had an average opinion of the AR application.

How easy was the robot guidance to a working area with and without the AR application?

During testing, the researchers were asked to try the easy mobile robot programming feature of the system. 68% of the researchers who participated in the testing phase reported that it was easy to teach a new navigation goal for the MRP, and only 19% characterized the teaching functionality of the AR application as difficult.

How easy was the system recovery process with and without the AR application?

In the case of a robot operation failure or a safety zone violation event during the testing phase, the researchers were able to recover the system using the corresponding functionality of the AR software suite. About 75% of the researchers who tested the AR software suite reported that the system recovery functionality was easy to use, 13% characterized it as hard to use, and the rest were neutral about the proposed recovery solution. The outcome of the analysis of these questions is visualized in the corresponding figure.

The overall feedback from the researchers’ testing was that the application was easy to use and that its features helped the users during the assembly process. In more detail:

- Users were able to interact with the system and the robots remotely by using the virtual environment’s objects. The reduction of the time needed to recover the system from a failure increased the production rate, since operators were able to reset the system using the virtual objects without needing access to the main computer of the system.

- Non-expert operators were able to easily program new robot motions using the programming features of the AR application.

- Operators felt safer and more confident sharing the same working areas with robots, as they were able to see the robots’ safety zones and became familiar with state-of-the-art AR technologies for safety zone visualization.

7. Conclusion and future work

An AR software suite that assists human operators during the execution of assembly tasks in an HRC environment is presented in this paper. A user-friendly application is introduced to help human operators without prior knowledge of robot systems perform the required assembly tasks, program new robot motions and recover the system in case of unexpected failures. The hardware-independent nature of ROS and the coordinating and monitoring abilities of the Station Controller enable easy reconfiguration of the proposed AR application. The development and the implementation of this software suite in an industrial use case from the automotive sector are presented in detail.

The presented AR application was evaluated through testing by several researchers and research engineers of the University of Patras. The evaluation was based on a dataset including performance times and information collected through questionnaires. The questionnaires showed that the researchers found the robot programming and system recovery features beneficial for the operator of the assembly process. After analysing the performance times recorded during multiple executions of the assembly process, the authors observed a) a 70% time reduction for system recovery in case of operational failure, b) an 83% time reduction for system recovery in case of safety zone violation, c) a 62% time reduction for programming a new robot arm goal, and d) a 67% time reduction for programming a new navigation goal for the platform.

However, despite the clear benefit of improving the flexibility of a collaborative production system, augmented reality technology, and more particularly the available AR devices, continues to have limitations. The most critical point raised by every participant in the survey is the ergonomic issue caused by the headset’s weight. Almost every headset on the market weighs approximately 400 g, which makes them unsuitable for a regular eight-hour shift.

A second constraint is the user’s interaction with the available interfaces (holograms, virtual buttons, etc.). The lighting conditions and occlusions from actual objects have an effect on the fidelity of the virtual depiction, which complicates the user’s interaction with the augmented objects. Further examination of ergonomics and user acceptance in real-world production systems in comparison to various augmented reality devices will highlight critical shortcomings of existing technology.

Further study should be directed at integrating and validating additional ‘smart’ interface mechanisms aimed at improving the human operator’s experience and comfort. The coupling of the presented suite with AI techniques that predict the operator’s interaction intention should be investigated. The next step would be to validate the acceptance of the AR suite by actual human operators of the selected use case. Finally, the objective is to extend its use to other industrial settings where HRC may be advantageous.

Acknowledgments

This work has been partially funded by the EC research project “THOMAS – Mobile dual arm robotic workers with embedded cognition for hybrid and dynamically reconfigurable manufacturing systems” (Grant Agreement: 723616) (www.thomas-project.eu/); “TRINITY – Digital Technologies, Advanced Robotics and increased Cyber-security for Agile Production in Future European Manufacturing Ecosystems” (Grant Agreement: 825196) (https://trinityrobotics.eu/). The authors would like to thank PEUGEOT CITROEN AUTOMOBILES S.A.(PSA) for providing important information about the current status and the challenges of the front suspension’s assembly line.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Andersson, N., A. Argyrou, F. Nägele, F. Ubis, U. E. Campos, M. O. de Zarate, and R. Wilterdink. 2016. “AR-Enhanced Human-Robot-Interaction – Methodologies, Algorithms, Tools.” Procedia CIRP 44: 193–198. doi:10.1016/j.procir.2016.03.022.

- Atici-Ulusu, H., Y. D. Ikiz, O. Taskapilioglu, and T. Gunduz. 2021. “Effects of Augmented Reality Glasses on the Cognitive Load of Assembly Operators in the Automotive Industry.” International Journal of Computer Integrated Manufacturing 34 (5): 487–499. doi:10.1080/0951192X.2021.1901314.

- Azuma, R. T. 1997. “A Survey of Augmented Reality.” Presence: Teleoperators and Virtual Environments 6 (4): 355–385. doi:10.1162/pres.1997.6.4.355.

- Barricelli, B. R., E. Casiraghi, and D. Fogli. 2019. “A Survey on Digital Twin: Definitions, Characteristics, Applications, and Design Implications.” IEEE Access 7: 167653–167671. doi:10.1109/ACCESS.2019.2953499.

- Bavelos, A. C., N. Kousi, C. Gkournelos, K. Lotsaris, S. Aivaliotis, G. Michalos, and S. Makris. 2021. “Enabling Flexibility in Manufacturing by Integrating Shopfloor and Process Perception for Mobile Robot Workers.” Applied Sciences 11 (9): 3985. doi:10.3390/app11093985.

- Blankemeyer, S., R. Wiemann, L. Posniak, C. Pregizer, and A. Raatz. 2018. “Intuitive Robot Programming Using Augmented Reality.” Procedia CIRP 76: 155–160. doi:10.1016/j.procir.2018.02.028.

- Blum, T., R. Stauder, E. Euler, and N. Navab, 2012. “Superman-like X-ray Vision: Towards brain-computer Interfaces for Medical Augmented Reality.” Presented at the 2012 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, GA, USA: IEEE, 271–272.

- Cai, Y., Y. Wang, and M. Burnett. 2020. “Using Augmented Reality to Build Digital Twin for Reconfigurable Additive Manufacturing System.” Journal of Manufacturing Systems 56: 598–604. doi:10.1016/j.jmsy.2020.04.005.

- Chandan, K., X. Li, and S. Zhang, 2019. “Negotiation-based Human-Robot Collaboration via Augmented Reality.” ArXiv, abs/1909.11227.

- Chitta, S., I. Sucan, and S. Cousins. 2012. “MoveIt!” IEEE Robotics & Automation Magazine 19 (1): 18–19. doi:10.1109/MRA.2011.2181749.

- Chryssolouris, G. 2006. Manufacturing Systems: Theory and Practice. 2nd ed. New York: Springer.

- Cogurcu, Y. E., and S. Maddock. 2021. “An Augmented Reality System for Safe human-robot Collaboration.” 4th UK-RAS Conference for PhD Students & Early-Career Researchers on ?Robotics at Home? Online Conference. doi:10.31256/Ft3Ex7U

- Danielsson, O., A. Syberfeldt, R. Brewster, and L. Wang. 2017. “Assessing Instructions in Augmented Reality for Human-robot Collaborative Assembly by Using Demonstrators.” Procedia CIRP 63: 89–94. doi:10.1016/j.procir.2017.02.038.

- Dianatfar, M., J. Latokartano, and M. Lanz. 2021. “Review on Existing VR/AR Solutions in Human–Robot Collaboration.” Procedia CIRP 97: 407–411. doi:10.1016/j.procir.2020.05.259.

- Dimitropoulos, N., T. Togias, G. Michalos, and S. Makris. 2021. “Operator Support in Human–Robot Collaborative Environments Using AI Enhanced Wearable Devices.” Procedia CIRP 97: 464–469. doi:10.1016/j.procir.2020.07.006.

- Eschen, H., T. Kötter, R. Rodeck, M. Harnisch, and T. Schüppstuhl. 2018. “Augmented and Virtual Reality for Inspection and Maintenance Processes in the Aviation Industry.” Procedia Manufacturing 19: 156–163. doi:10.1016/j.promfg.2018.01.022.

- Esengün, M., and G. İnce. 2018. The Role of Augmented Reality in the Age of Industry 4.0. In Duc Truong Pham (Ed.), Industry 4.0: Managing The Digital Transformation, Cham: Springer , 201–215.

- Fang, H. C., S. K. Ong, and A. Y. C. Nee. 2012. “Interactive Robot Trajectory Planning and Simulation Using Augmented Reality.” Robotics and Computer-Integrated Manufacturing 28 (2): 227–237. doi:10.1016/j.rcim.2011.09.003.

- Fraga-Lamas, P., T. M. Fernandez-Carames, O. Blanco-Novoa, and M. A. Vilar-Montesinos. 2018. “A Review on Industrial Augmented Reality Systems for the Industry 4.0 Shipyard.” IEEE Access 6: 13358–13375. doi:10.1109/ACCESS.2018.2808326.

- Frank, J. A., M. Moorhead, and V. Kapila. 2016. “Realizing Mixed-reality Environments with Tablets for Intuitive Human-robot Collaboration for Object Manipulation Tasks.” Presented at the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA: IEEE, 302–307.

- Gkournelos, C., P. Karagiannis, N. Kousi, G. Michalos, S. Koukas, and S. Makris. 2018. “Application of Wearable Devices for Supporting Operators in Human-Robot Cooperative Assembly Tasks.” Procedia CIRP 76: 177–182. doi:10.1016/j.procir.2018.01.019.

- Gkournelos, C., N. Kousi, A. C. Bavelos, S. Aivaliotis, C. Giannoulis, G. Michalos, and S. Makris. 2020. “Model Based Reconfiguration of Flexible Production Systems.” Procedia CIRP 86: 80–85. doi:10.1016/j.procir.2020.01.042.

- Green, S. A., X. Q. Chen, M. Billinghurst, and J. G. Chase. 2008. “Collaborating with a Mobile Robot: An Augmented Reality Multimodal Interface.” IFAC Proceedings Volumes 41 (2): 15595–15600. doi:10.3182/20080706-5-KR-1001.02637.

- Hasan, S. M., K. Lee, D. Moon, S. Kwon, S. Jinwoo, and S. Lee. 2021. “Augmented Reality and Digital Twin System for Interaction with Construction Machinery.” Journal of Asian Architecture and Building Engineering 21 (2): 564–574.

- Hashimoto, S., A. Ishida, M. Inami, and T. Igarashi. 2011. “TouchMe : An Augmented Reality Based Remote Robot Manipulation.“ Proceedings of ICAT2011, 2: 28–30 .

- Hietanen, A., R. Pieters, M. Lanz, J. Latokartano, and J.-K. Kämäräinen. 2020. “AR-based Interaction for Human-Robot Collaborative Manufacturing.” Robotics and Computer-Integrated Manufacturing 63: 101891. doi:10.1016/j.rcim.2019.101891.

- Huy, D. Q., I. Vietcheslav, and G. Seet Gim Lee. 2017. “See-through and Spatial Augmented Reality – A Novel Framework for Human-Robot Interaction.” Presented at the 2017 3rd International Conference on Control, Automation and Robotics (ICCAR), Nagoya, Japan: IEEE, 719–726.

- Julier, S., Y. Baillot, D. Brown, and M. Lanzagorta. 2002. “Information Filtering for Mobile Augmented Reality.” IEEE Computer Graphics and Applications 22 (5): 12–15. doi:10.1109/MCG.2002.1028721.

- Kerawalla, L., R. Luckin, S. Seljeflot, and A. Woolard. 2006. ““Making It Real”: Exploring the Potential of Augmented Reality for Teaching Primary School Science.” Virtual Reality 10 (3–4): 163–174. doi:10.1007/s10055-006-0036-4.

- Kousi, N., G. Michalos, S. Aivaliotis, and S. Makris. 2018. “An Outlook on Future Assembly Systems Introducing Robotic Mobile Dual Arm Workers.” Procedia CIRP 72: 33–38. doi:10.1016/j.procir.2018.03.130.

- Kousi, N., C. Gkournelos, S. Aivaliotis, C. Giannoulis, G. Michalos, and S. Makris. 2019a. “Digital Twin for Adaptation of Robots’ Behavior in Flexible Robotic Assembly Lines.” Procedia Manufacturing 28: 121–126. doi:10.1016/j.promfg.2018.12.020.

- Kousi, N., C. Stoubos, C. Gkournelos, G. Michalos, and S. Makris, 2019b. “Enabling Human Robot Interaction in Flexible Robotic Assembly Lines: An Augmented Reality Based Software Suite.” Procedia CIRP, Ljubljana, Slovenia. Elsevier B.V., 1429–1434.

- Kyjanek, O., B. Al Bahar, L. Vasey, B. Wannemacher, and A. Menges. 2019. “Implementation of an Augmented Reality AR Workflow for Human Robot Collaboration in Timber Prefabrication.” Presented at the 36th International Symposium on Automation and Robotics in Construction, Banff, AB, Canada.

- Lambrecht, J., and J. Kruger. 2012. “Spatial Programming for Industrial Robots Based on Gestures and Augmented Reality.” Presented at the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2012), Vilamoura-Algarve, Portugal: IEEE, 466–472.

- Livingston, M. A., L. J. Rosenblum, S. J. Julier, D. Brown, Y. Baillot, J. E. Swan, J. L. Gabbard, and D. Hix. 2002. An Augmented Reality System for Military Operations in Urban Terrain Proceedings of Interservice / Industry Training, Simulation & Education Conference (I/ITSEC) Orlando, Florida, USA , 868–875.

- Lotsaris, K., N. Fousekis, S. Koukas, S. Aivaliotis, N. Kousi, G. Michalos, and S. Makris. 2021a. “Augmented Reality (AR) Based Framework for Supporting Human Workers in Flexible Manufacturing.” Procedia CIRP 96: 301–306. doi:10.1016/j.procir.2021.01.091.

- Lotsaris, K., C. Gkournelos, N. Fousekis, N. Kousi, and S. Makris. 2021b. “AR Based Robot Programming Using Teaching by Demonstration Techniques.” Procedia CIRP 97: 459–463. doi:10.1016/j.procir.2020.09.186.

- Makris, S., P. Karagiannis, S. Koukas, and A.-S. Matthaiakis. 2016. “Augmented Reality System for Operator Support in Human–Robot Collaborative Assembly.” CIRP Annals 65 (1): 61–64. doi:10.1016/j.cirp.2016.04.038.

- Makris, S. 2021. Cooperating Robots for Flexible Manufacturing. Cham: Springer.

- Maly, I., D. Sedlacek, and P. Leitao. 2016. “Augmented Reality Experiments with Industrial Robot in Industry 4.0 Environment.” Presented at the 2016 IEEE 14th International Conference on Industrial Informatics (INDIN), Poitiers, France: IEEE, 176–181.

- Marder-Eppstein, Eitan, Berger, Eric, Foote, Tully, Gerkey, Brian, and Konolige, Kurt 2010 The Office Marathon: Robust Navigation in an Indoor Office Environment IEEE international conference on robotics and automation Anchorage, AK, USA 03-07 May 2010 (IEEE)300–307 doi:10.1109/ROBOT.2010.5509725

- Michalos, G., S. Makris, J. Spiliotopoulos, I. Misios, P. Tsarouchi, and G. Chryssolouris. 2014. “ROBO-PARTNER: Seamless Human-Robot Cooperation for Intelligent, Flexible and Safe Operations in the Assembly Factories of the Future.” Procedia CIRP 23: 71–76. doi:10.1016/j.procir.2014.10.079.

- Mixed Reality Toolkit (MRTK) [online]. 2021. Accessed 30 Jun 2021. https://github.com/microsoft/MixedRealityToolkit-Unity

- Mourtzis, D., A. Vlachou, and V. Zogopoulos. 2017. “Cloud-Based Augmented Reality Remote Maintenance Through Shop-Floor Monitoring: A Product-Service System Approach.” Journal of Manufacturing Science and Engineering 139 (6): 061011. doi:10.1115/1.4035721.

- Mourtzis, D., V. Zogopoulos, and E. Vlachou. 2018. “Augmented Reality Supported Product Design Towards Industry 4.0: A Teaching Factory Paradigm.” Procedia Manufacturing 23: 207–212. doi:10.1016/j.promfg.2018.04.018.

- Mourtzis, D., V. Siatras, and J. Angelopoulos. 2020. “Real-Time Remote Maintenance Support Based on Augmented Reality (AR).” Applied Sciences 10 (5): 1855. doi:10.3390/app10051855.

- Ong, S. K., A. W. W. Yew, N. K. Thanigaivel, and A. Y. C. Nee. 2020. “Augmented reality-assisted Robot Programming System for Industrial Applications.” Robotics and Computer-Integrated Manufacturing 61: 101820. doi:10.1016/j.rcim.2019.101820.

- Ostanin, M., and A. Klimchik. 2018. “Interactive Robot Programming Using Mixed Reality.” IFAC-PapersOnLine 51 (22): 50–55. doi:10.1016/j.ifacol.2018.11.517.

- Quigley, M., B. Gerkey, K. Conley, J. Faust, T. Foote, J. Leibs, E. Berger, R. Wheeler, and A. Ng. 2009. “ROS: An Open-source Robot Operating System.” International Conference on Robotics and Automation, Kobe, Japan.

- Quintero, C. P., S. Li, M. K. Pan, W. P. Chan, H. F. Machiel Van der Loos, and E. Croft. 2018. “Robot Programming through Augmented Trajectories in Augmented Reality.” Presented at the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid: IEEE, 1838–1844.

- Rabah, S., A. Assila, E. Khouri, F. Maier, F. Ababsa, V. Bourny, P. Maier, and F. Mérienne. 2018. “Towards Improving the Future of Manufacturing through Digital Twin and Augmented Reality Technologies.” Procedia Manufacturing 17: 460–467. doi:10.1016/j.promfg.2018.10.070.

- rosbridge_server [online]. 2021. Accessed 30 Jun 2021. http://wiki.ros.org/rosbridge_server

- Ruffaldi, E., F. Brizzi, F. Tecchia, and S. Bacinelli. 2016. “Third Point of View Augmented Reality for Robot Intentions Visualization.” In Augmented Reality, Virtual Reality, and Computer Graphics, edited by L. T. De Paolis and A. Mongelli, 471–478. Cham: Springer International Publishing.

- Safety Laser Scanners microScan3 SICK [online]. 2021. Accessed 21 Jul 2021. https://www.sick.com/ca/en/opto-electronic-protective-devices/safety-laser-scanners/microscan3/c/g295657

- Schroeder, G., C. Steinmetz, C. E. Pereira, I. Muller, N. Garcia, D. Espindola, and R. Rodrigues. 2016. “Visualising the Digital Twin Using Web Services and Augmented Reality.” Presented at the 2016 IEEE 14th International Conference on Industrial Informatics (INDIN), Poitiers, France: IEEE, 522–527.

- Segura, Á., H. V. Diez, I. Barandiaran, A. Arbelaiz, H. Álvarez, B. Simões, J. Posada, A. García-Alonso, and R. Ugarte. 2020. “Visual Computing Technologies to Support the Operator 4.0.” Computers & Industrial Engineering 139: 105550. doi:10.1016/j.cie.2018.11.060.

- Sutherland, J., J. Belec, A. Sheikh, L. Chepelev, W. Althobaity, B. J. W. Chow, D. Mitsouras, A. Christensen, F. J. Rybicki, and D. J. La Russa. 2019. “Applying Modern Virtual and Augmented Reality Technologies to Medical Images and Models.” Journal of Digital Imaging 32 (1): 38–53. doi:10.1007/s10278-018-0122-7.

- Tomiyama, T., E. Lutters, R. Stark, and M. Abramovici. 2019. “Development Capabilities for Smart Products.” CIRP Annals 68 (2): 727–750. doi:10.1016/j.cirp.2019.05.010.

- Unity [online]. 2021. Accessed 25 Jun 2021. https://unity.com/

- Vuforia Engine [online]. 2021. Accessed 6 Jul 2021. https://www.ptc.com/en/products/vuforia/vuforia-engine

- Wang, X., S. K. Ong, and A. Y. C. Nee. 2016. “Multi-modal Augmented-reality Assembly Guidance Based on Bare-hand Interface.” Advanced Engineering Informatics 30 (3): 406–421. doi:10.1016/j.aei.2016.05.004.

- Wang, X. V., L. Wang, M. Lei, and Y. Zhao. 2020. “Closed-loop Augmented Reality Towards Accurate Human-Robot Collaboration.” CIRP Annals 69 (1): 425–428. doi:10.1016/j.cirp.2020.03.014.

- Wen, R., W.-L. Tay, B. P. Nguyen, C.-B. Chng, and C.-K. Chui. 2014. “Hand Gesture Guided Robot-assisted Surgery Based on a Direct Augmented Reality Interface.” Computer Methods and Programs in Biomedicine 116 (2): 68–80. doi:10.1016/j.cmpb.2013.12.018.

- Wu, H.-K., S. W.-Y. Lee, H.-Y. Chang, and J.-C. Liang. 2013. “Current Status, Opportunities and Challenges of Augmented Reality in Education.” Computers & Education 62: 41–49. doi:10.1016/j.compedu.2012.10.024.

- Zhu, J., S. K. Ong, and A. Y. C. Nee. 2015. “A Context-aware Augmented Reality Assisted Maintenance System.” International Journal of Computer Integrated Manufacturing 28 (2): 213–225. doi:10.1080/0951192X.2013.874589.