ABSTRACT

Despite the increasing degree of automation in industry, manual or semi-automated processes are common and often unavoidable for complex assembly tasks. The transformation to smart manufacturing processes leads to a wider deployment of data-driven approaches to support the worker. Emerging technologies in this context are often based on gesture recognition, gesture monitoring or gesture control. This contribution systematically reviews gesture and motion capturing technologies and the use of gesture data in ergonomic assessment, in gesture-based robot control strategies and in the identification of COVID-19 symptoms. Subsequently, two applications are presented in detail. The first is a holistic, human-centric optimization method for line balancing that uses a novel indicator, ErgoTakt, derived from motion capturing. ErgoTakt improves on the conventional takt-time and helps to find an optimum between the ergonomic evaluation of an assembly station and takt-time balancing. An optimization algorithm is developed to find the best-fitting solution by minimizing a function of the ergonomic RULA score and the cycle time of each assembly workstation with respect to the workers' abilities. The second application is gesture-based robot control: a cloud-based approach is shown that embeds a generally accessible hand-tracking model in a low-code IoT programming environment.

1. Introduction

Within the fourth industrial revolution, the so-called Industry 4.0, more and more smart technologies, such as connected intelligent devices (sensors and actuators) or augmented reality applications, find their way into production systems. Nevertheless, production systems still are, and will most likely remain, sociotechnical systems. Hence, employees have to interact with these smart technical systems, e.g. machinery or robots. The realization of Smart Factories requires new ways of monitoring, control and interaction between humans and technical systems to increase efficiency (Ansari et al. 2018). These ‘multimodal’ Human-Machine-Interaction mechanisms are becoming a crucial factor in the factories of the future. The term ‘multimodal’ summarizes research that leverages speech, touch, vision and gesture (Turk 2014). Gesture-based methods in particular offer great potential in industry, as they can be used actively to control technical devices such as robots and passively to monitor and analyse the movements of employees, e.g. to assess the ergonomic situation. In this context, several approaches to track human gestures have been presented, which then use, e.g., the arm trajectory to control technical devices. This contribution reviews the different strategies of motion capturing and relates them to applications in industrial environments. The focus is on ergonomic assessment, gesture recognition and analysis, and gesture-based control of (industrial) robots.

Ergonomic evaluation in working scenarios requires a deep analysis of postures, loads, and frequency of movements. In the industrial field, experts commonly carry it out with observational methods that are not supported by automated, instrumental measurements and are affected by subjective bias (Roman-Liu 2014). This approach is costly and time-consuming; therefore, it is usually carried out retrospectively, after an accident, and only for a sample of the most critical stations/processes and workers. Hence, long-term and systemic ergonomic evaluations are difficult due to the limitations of current tools. These are the main reasons why ergonomic assessment is not integrated into line balancing as part of a holistic view of the process. The authors aim to leverage the fourth industrial revolution and its integrated technologies to support the worker of the future, the so-called ‘Operator 4.0’ (Romero et al. 2016a). Here, bio-data monitoring (i.e. postures and physiological workload data), smart solutions (i.e. wearable trackers and sensors) and advanced Human-Machine-Interaction can contribute to improving the wellbeing, inclusivity, and safety of workplaces without affecting productivity (Romero et al. 2016b).

As part of HMI (Human-Machine Interaction), Human-Robot Interaction (HRI) is a growing field of research, especially regarding intuitive programming and control of robots (Tsarouchi, Makris, and Chryssolouris 2016b).

Gesture control of industrial robots has great potential, as it reduces teaching requirements and suits various industrial settings that may have restricted accessibility (Tang and Webb 2018). Furthermore, the ongoing trend of ‘mass customization’ in industrial manufacturing requires flexible production equipment and processes (Schmitt et al. 2021). Pedersen et al. identify robot programming as a general bottleneck for adaptation and reconfiguration in production (Pedersen et al. 2016). Hence, gesture-based programming and control of robots provide a more intuitive and thus time-efficient way to enhance flexibility in industrial value chains. Other possibilities for eliminating bottlenecks in human-robot production, such as optimized layout planning (Tsarouchi et al. 2017) or improved human-robot collaboration in individual activities (Dimitropoulos et al. 2021), are not considered in this work.

Both issues, automated ergonomic assessment and gesture-based control of robots, are covered in this contribution. After a literature review of gesture capturing technologies, ergonomic assessment, COVID-19 gesture identification and robot control, two application scenarios that use gesture data in industry are presented: first, the Ergo-Takt approach, which combines ergonomic assessment and assembly line balancing, and second, gesture-based robot control. The contribution closes with a summary and an outlook on further research activities.

2. State of research in gesture-based monitoring and control

The following section gives an overview of different motion capturing technologies, which form the basis for gesture-based monitoring and control of technical systems. In the field of gesture-based monitoring, ergonomic assessment methods use human gestures to evaluate, e.g., the exposure of employees to physiological stress in working environments; the captured human movements are related to different ergonomic measures. In the research field of gesture-based control, approaches to Human-Robot-Interaction are reviewed and categorized by their control strategy.

2.1. Gesture capturing technologies

Various technologies to capture human movements or gestures exist. Table 1 summarizes the most relevant ‘wearable-free’ ones, classified according to the ergonomic scoring method (described in the following section), the captured body area and the capturing technology. The term ‘wearable-free’ in this context means that the capturing devices are not attached to the human body, as this can be a constraint in industrial processes. For a successful application in a production environment, a high acceptance of the capturing system is crucial. Hence, capturing technologies that potentially have no disturbing influence on the operator in assembly are reviewed. Additionally, the scoring methods for ergonomic assessment are shown in Table 1; they are presented and discussed in detail in the next section.

Table 1. Capturing methods and ergonomic assessment measure.

Table 1 indicates that two capturing techniques are common: depth cameras and inertial measurement units (IMUs). Nearly all listed approaches use a Microsoft Kinect as the depth camera. The Kinect camera was originally designed for gaming, but due to its low cost and high performance it became an outstanding tool for researchers in many fields, from medicine (Webster and Celik 2014; Boenzi et al. 2016) to virtual and augmented reality (Cruz, Lucio, and Velho 2012; Facchini et al. 2016; Manghisi et al. 2018). Other methods using equipment applied to the body (wearables) are presented in Lu et al. (2014) and Moin et al. (2021) and are not discussed in this contribution. For the first gesture-based application example, i.e. the ergonomic assessment, certain scores must be derived from the captured movements.

2.2. Motion capturing for ergonomic assessment

Ergonomic assessment methods and tools have been extensively studied and presented in the scientific literature, in medical knowledge and in industrial workplace regulations. One of the first approaches is the Ovako Working Posture Analysing System (OWAS), which divides movements into four body parts (trunk, arms, lower body and neck). It uses a series of instantaneous observations to compile a score of the harmfulness of the activity from defined tables (Karhu, Kansi, and Kuorinka 1977). The basic concept of OWAS inspired more complex methods such as RULA (Rapid Upper Limb Assessment), REBA (Rapid Entire Body Assessment), EAWS (European Assembly Worksheet), NIOSH-Eq (Revised National Institute for Occupational Safety and Health Equation), the Strain Index (SI) and OCRA (McAtamney and Corlett 1993; Hignett and McAtamney 2000; Schaub et al. 2013; Waters et al. 1993; Moore and Garg 1995; Occhipinti 1998; Colombini 1998). OCRA presents its results as a quotient relative to the theoretically best possible execution of the activity (Occhipinti 1998; Colombini 1998). The JSI-L (Job Severity Index – Lifting) method also provides a quotient, in this case of the possible performance of an employee and the foreseen activity. This method is only indirectly suitable for evaluating activities, as the suitability of the employee for a certain activity is evaluated first (Liles et al. 1984). In contrast, the Metabolic Energy Expenditure Rate (EnerExp) method considers the energy consumption of an activity (Garg, Chaffin, and Herrin 1978). An energy consumption value is assigned to each type of activity, and the sum of the physical energy used is finally compared to the maximum harmless energetic performance of a worker. The presented methods share a practical limitation.
To evaluate an activity, an observation must take place, either through direct annotation or video recording, followed by an assessment usually carried out by specialized personnel. To overcome human error and bias, the literature presents different approaches to automating ergonomic measurement. Table 1 shows that RULA is the metric most commonly used in the literature for automated assessment. RULA was originally developed for quasi-static manual evaluation. However, the ErgoSentinel tool allows real-time skeleton tracking and RULA processing (Manghisi et al. 2017).

2.3. COVID-19 gesture identification

During the COVID-19 pandemic, gesture-based methods have also been studied to help fight the spread of the virus. Here, sensor systems and data analysis strategies to identify coughing and other COVID-19-related gestures have been investigated. Chuma and Iano (2021) present a method that uses a K-band Doppler radar to detect coughs. The data are analysed by a Convolutional Neural Network (CNN) with an accuracy of 80–88%, depending on the distance between the subject and the sensor. Rehman et al. (2021) propose a platform to identify COVID-19 symptoms by detecting hand movement, coughing, and breathing. The platform uses the Channel Frequency Response (CFR) to record the minute changes in Orthogonal Frequency Division Multiplexing (OFDM) subcarriers caused by any human motion over a wireless channel (Rehman et al. 2021). In Khan et al. (2020), the same research group reviews non-contact, WiFi-based sensing principles for COVID-19 symptoms. Manghisi et al. (2020) present a body-tracking solution to detect hand-face contact gestures as a potential pathway of contagion. The system uses a Microsoft Kinect camera to track, in particular, the head area. The developed HealthShield module compares each newly acquired frame with the previous one in real time. If the contact label for a certain head region (e.g. mouth, nose, left/right eye) changes from no-contact to contact, a signal is sent to the interface module indicating a contact event and the contact area. With this approach, the research group achieves an overall accuracy of 79.83% across seven classification regions of the face.
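The frame-to-frame comparison described for HealthShield amounts to edge detection on per-region contact labels. The following is a minimal Python sketch, not the authors' implementation: it assumes the body tracker already yields, for every frame, a boolean contact label per face region, and the function name `detect_contact_events` and the region names are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical frame format: face region name -> True if a hand touches it.
Frame = Dict[str, bool]


def detect_contact_events(frames: List[Frame]) -> List[Tuple[int, str]]:
    """Return (frame_index, region) for every no-contact -> contact transition.

    Each frame is compared only with the previous one, so a hand resting on
    the same region for several frames produces a single event.
    """
    events: List[Tuple[int, str]] = []
    previous: Frame = {}
    for index, current in enumerate(frames):
        for region, touching in current.items():
            # A region missing from the previous frame counts as "no contact".
            if touching and not previous.get(region, False):
                events.append((index, region))
        previous = current
    return events


frames = [
    {"mouth": False, "nose": False},
    {"mouth": True, "nose": False},   # contact starts at the mouth
    {"mouth": True, "nose": False},   # still touching: no new event
    {"mouth": False, "nose": True},   # contact moves to the nose
]
print(detect_contact_events(frames))  # → [(1, 'mouth'), (3, 'nose')]
```

Signalling only on the transition, rather than on every frame with contact, keeps the interface module from being flooded with repeated events for a single touch.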

Based on this literature review, it can be stated that gesture identification, monitoring and control offer great potential in industry for various purposes. On the one hand, smart ways of using human movements to control devices can increase efficiency in production. On the other hand, recommendations can be derived to plan or (in real time) adapt workstations in a more individual and ergonomic manner. In the following, we present the Ergo-Takt approach, which combines gesture-based ergonomic assessment with production planning and control to distribute the workload in a human-centred way.

3. The Ergo-Takt approach

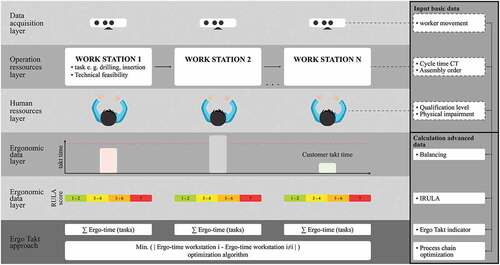

Although industrial processes are moving towards extensive use of flexible automation, manual assembly is still inevitable due to, e.g., the complexity of mounting cables, hoses or electronics, hard-to-reach places, sensitive surfaces, difficult operations, etc. (Heilala and Voho 2001). The design and levelling of a predominantly manual assembly line has been an issue since the beginning of industrial mass production, but it is becoming even more critical nowadays. To compete in the global market, companies need to increase effectiveness and efficiency, especially in high-wage countries. In addition, global policies lead to an increased focus on workers' wellness and safety. According to the Sixth European Working Conditions Survey (Eurofound 2019), exposure to repetitive arm movements and tiring positions leads to the development of work-related musculoskeletal disorders (WMSDs), with heavy costs for national welfare systems. WMSDs include ‘all musculoskeletal disorders that are induced or aggravated by work and the circumstances of its performance’ (WHO 2003). WMSDs extend to almost all occupations and sectors, with critical physical and economic consequences for those affected: workers, families, businesses, and governments. These ailments are considered the most common work-related health problem among workers in the European Union. Furthermore, these issues are amplified by the aging of the workforce: by 2080, about one-third of the European population will be 65 or older (Eurostat 2019). This creates the need to preserve the operators' wellbeing in line with their active aging in the production environment. Therefore, the aging society, the lack of specialists and product quality requirements inevitably lead to high ergonomic requirements for manual assembly processes (Peruzzini and Pellicciari 2017). The literature offers a wide range of approaches for taking ergonomic factors into account in production and its planning.
For example, ergonomic factors can already be taken into account in the early design phase of processes (Sun et al. 2018) or by using simulations. For this purpose, entire work environments and processes can be virtualised (Golabchi et al. 2015), or real processes can be carried out in virtual environments (Michalos et al. 2018; Peruzzini et al. 2021). Another starting point is to achieve ergonomic work planning mathematically. One possibility is to use ergonomic factors for an adapted job rotation (Michalos et al. 2010). Another is to take ergonomic aspects into consideration when optimising work processes. The time-levelling of an assembly line is mostly referred to in the literature as ‘assembly line balancing’ (ALB). ALB is normally designed and evaluated by means of the takt-time. In fact, the takt-time levelling of an assembly line and worker ergonomics are not directly related. This means that a well-levelled assembly line may not be ergonomic and, conversely, a series of ergonomic workstations does not necessarily result in a well-balanced assembly line. Ergonomic evaluation of the work shift requires a deep analysis of postures, loads, and frequency of movements. In the industrial field, it is commonly carried out by experts with observational methods that are not supported by instrumental measurements and are affected by subjective bias (Roman-Liu 2014). This approach is costly and time-consuming; therefore, it is usually carried out retrospectively, after an accident, and only for a sample of the most critical stations/processes and workers. Hence, long-term and systemic ergonomic evaluations are difficult due to the limitations of current tools. These are the main reasons why ergonomic assessment is not integrated into ALB as part of a holistic view of the process. In this contribution, a novel approach called ‘ErgoTakt’ is proposed, which improves the standard takt-time-centred balancing approach by adding an automatic ergonomic evaluation.
This is achieved by integrating the ErgoSentinel system (Manghisi et al. 2017) with an optimization algorithm that finds the best combination of takt-time and ergonomic score for an entire chain of assembly stations. The approach also considers possible constraints, such as the qualification of the employees for the respective tasks, their physical impairment, etc. Figure 1 illustrates the ErgoTakt approach for an entire assembly process chain with its six layers: data acquisition, operating resources, human resources, economic data, ergonomic data, and the ErgoTakt layer.

The data acquisition layer provides ergonomic data captured by a Microsoft Kinect camera and processed by the ErgoSentinel software, which derives a real-time RULA score from the operator's movements as input data. For this reason, every workstation should be equipped with a camera. The operating resource layer contains the technical description of the workstation, i.e. the tasks to be conducted at the specific workstation, e.g. drilling, gluing, screwing and/or manual assembly. The required input data are the cycle time of each task and the qualification needed for each task at the workstation. The human resource layer characterizes the operators by their qualification level, which is linked in a database to the workstation description. Furthermore, physical impairment is considered as a value in the range [0 … 1]. This value gives, e.g., a disabled or older person more time to fulfil a specified task. Both the qualification and the impairment are used as input data for the underlying calculation of derived data. The economic data layer and the ergonomic data layer import and aggregate data from the three layers named above. The balancing chart on the one hand and the ergonomic data on the other hand are derived with respect to a work cycle. The ErgoTakt layer optimizes the ErgoTakt indicator: all input data and calculated values are aggregated and fed into an optimization algorithm. The aim is to minimize the ergonomic stress of the worker and the cycle time per workstation with respect to the workers' qualification and physical impairment as well as the mandatory assembly order in the process chain. The ErgoSentinel software used here is described in detail by the authors in Manghisi et al. (2017, 2020).

3.1. Optimization of ergonomics and line balancing

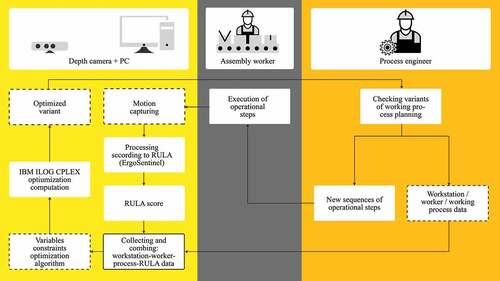

The scheme in Figure 2 illustrates how the necessary data are provided and used to optimize the assembly process chain with respect to the operators' workload. Starting with the ‘process engineer’, the workstation, worker and process data are collected, checked and brought into a feasible work sequence before they are provided to the data-processing system. Then, the ‘assembly worker’ carries out the assembly work. The movements are recorded with a depth camera and converted into a RULA score by the ErgoSentinel tool. Under the constraints of the mandatory process order, the assembly technology, the worker qualification, and the physical impairment, the algorithm builds variants of the optimized working processes.

These constraints and possible resulting variants are made available to the algorithm through matrices. The following parameters are used for this purpose and for optimization:

The assembly preparer provides most of these parameters as input data. Using the data, two matrices ‘tasks-workstations’ and ‘workers-tasks’ are formed. These represent which employee may/can do which task and which task can be executed on which station. Binary variables are used to show which combinations are feasible.

The data are also used to form a three-dimensional matrix of all theoretical combinations. Each combination is a binary variable indexed by the assigned worker, task and station. This matrix is used as the starting point for the algorithm.
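Read literally, the three-dimensional matrix combines the two binary matrices elementwise: a worker-task-station triple is a candidate only if the worker may perform the task and the task can be executed at the station. The Python sketch below works under that assumption; the index order (worker n, task k, station m), the function name `combination_tensor` and the small example matrices are hypothetical.

```python
from itertools import product
from typing import List

Matrix = List[List[int]]


def combination_tensor(workers_tasks: Matrix, tasks_stations: Matrix) -> List[List[List[int]]]:
    """Return combo[n][k][m] = 1 if worker n may do task k and task k fits station m."""
    n_workers = len(workers_tasks)
    n_tasks = len(tasks_stations)
    n_stations = len(tasks_stations[0])
    combo = [[[0] * n_stations for _ in range(n_tasks)] for _ in range(n_workers)]
    for n, k, m in product(range(n_workers), range(n_tasks), range(n_stations)):
        # Binary AND of the two feasibility matrices.
        combo[n][k][m] = workers_tasks[n][k] * tasks_stations[k][m]
    return combo


# Two workers, three tasks, two stations (illustrative values only).
workers_tasks = [[1, 1, 0],
                 [0, 1, 1]]
tasks_stations = [[1, 0],
                  [1, 1],
                  [0, 1]]
combo = combination_tensor(workers_tasks, tasks_stations)
print(combo[1][2])  # → [0, 1]: worker 1 can do task 2 only at station 1 (0-based)
```

The tensor still contains every theoretical triple; the remaining conditions (each task exactly once, one worker per station, takt-time) act on combinations of these entries rather than on single entries.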

The resulting worker-workstation-task variables are checked against the following conditions:

1. Is the workstation suitable for the assigned task(s), and vice versa?

2. Is the worker able to fulfil the task with respect to his/her qualification level and any physical impairment?

3. Is each task assigned exactly once?

4. Is at most one worker assigned to each workstation, and vice versa?

5. Is the sum of the cycle times of all tasks at the workstation less than the customer takt-time?
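The five conditions can be checked mechanically for a candidate assignment. The following Python sketch is hypothetical: it represents an assignment as a mapping from task to (worker, station), checks qualification only and leaves physical impairment aside (the paper accounts for it through additional time), and uses made-up matrices and cycle times.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Assignment = Dict[int, Tuple[int, int]]  # task k -> (worker n, station m)


def violated_conditions(assignment: Assignment, workers_tasks: List[List[int]],
                        tasks_stations: List[List[int]], cycle_times: List[float],
                        takt_time: float) -> List[str]:
    """Return a description of every violated condition (empty list = feasible)."""
    problems: List[str] = []
    # Condition 3: dict keys are unique, so only missing tasks need checking.
    missing = sorted(set(range(len(cycle_times))) - set(assignment))
    if missing:
        problems.append(f"unassigned tasks: {missing}")
    station_worker: Dict[int, int] = {}
    worker_station: Dict[int, int] = {}
    load: Dict[int, float] = defaultdict(float)
    for task, (worker, station) in assignment.items():
        if not tasks_stations[task][station]:  # condition 1
            problems.append(f"task {task} not feasible at station {station}")
        if not workers_tasks[worker][task]:  # condition 2 (qualification only)
            problems.append(f"worker {worker} not qualified for task {task}")
        # Condition 4: one worker per station and one station per worker.
        if station_worker.setdefault(station, worker) != worker:
            problems.append(f"station {station} has more than one worker")
        if worker_station.setdefault(worker, station) != station:
            problems.append(f"worker {worker} works at more than one station")
        load[station] += cycle_times[task]
    for station, total in load.items():  # condition 5: strictly below takt-time
        if total >= takt_time:
            problems.append(f"station {station} reaches the takt-time ({total} s)")
    return problems


workers_tasks = [[1, 1, 0], [0, 1, 1]]
tasks_stations = [[1, 0], [1, 1], [0, 1]]
cycle_times = [100.0, 120.0, 90.0]
print(violated_conditions({0: (0, 0), 1: (0, 0), 2: (1, 1)},
                          workers_tasks, tasks_stations, cycle_times, 250.0))  # → []
```

Returning a list of reasons instead of a single boolean makes it easier for a process engineer to see why a proposed variant was rejected.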

The optimization algorithm uses the ErgoTakt as the indicator for its objective function

which becomes the determining factor for the objective function.

The objective function minimizes the sum of the absolute values of all differences between the individual ErgoTakt variants.

with ErgoTakt of workstation m with worker n. The factor

is necessary to relate the variable to the indicator mathematically. The factor describes the parameters to be used in the calculation.

The optimization algorithm evaluates all possible task-workstation-worker combinations with respect to the conditions listed above and the predefined assembly order. Based on these results, the tasks are assigned to the workstations according to the minimum ErgoTakt indicator.
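The paper's exact ErgoTakt formula cannot be recovered from this text, so the following Python sketch uses a clearly hypothetical stand-in: the station cycle time is stretched by the worker's resilience (a value in (0, 1]) and weighted by the station's mean RULA score. The search itself follows the prose above. Tasks keep the fixed assembly order (contiguous groups per station), each station gets exactly one worker, qualification and takt-time are checked, and the objective sums the absolute differences between all pairs of station ErgoTakt values. Station-specific technology constraints (condition 1) are omitted for brevity, and all names are assumptions.

```python
from itertools import combinations, permutations
from typing import Iterator, List, Optional, Sequence, Tuple

Result = Tuple[float, List[List[int]], Tuple[int, ...]]


def ergotakt(cycle_time: float, mean_rula: float, resilience: float) -> float:
    # HYPOTHETICAL stand-in for the ErgoTakt indicator (not the paper's formula).
    return cycle_time / resilience * mean_rula


def ordered_splits(n_tasks: int, n_stations: int) -> Iterator[List[List[int]]]:
    """Split tasks 0..n_tasks-1 into contiguous, non-empty station groups."""
    for cuts in combinations(range(1, n_tasks), n_stations - 1):
        bounds = (0, *cuts, n_tasks)
        yield [list(range(a, b)) for a, b in zip(bounds, bounds[1:])]


def balance(cycle_times: Sequence[float], rula: Sequence[float],
            resilience: Sequence[float], qualified: Sequence[Sequence[int]],
            takt_time: float) -> Optional[Result]:
    """Brute-force search; assumes one worker per station (len(resilience) stations)."""
    n_stations = len(resilience)
    best: Optional[Result] = None
    for groups in ordered_splits(len(cycle_times), n_stations):
        for workers in permutations(range(n_stations)):
            values: List[float] = []
            for tasks, w in zip(groups, workers):
                if not all(qualified[w][k] for k in tasks):
                    break  # worker w lacks a qualification for this station
                ct = sum(cycle_times[k] for k in tasks)
                if ct / resilience[w] >= takt_time:
                    break  # station would not stay below the takt-time
                mean_rula = sum(rula[k] for k in tasks) / len(tasks)
                values.append(ergotakt(ct, mean_rula, resilience[w]))
            else:  # no break: every station is feasible
                cost = sum(abs(a - b) for a, b in combinations(values, 2))
                if best is None or cost < best[0]:
                    best = (cost, groups, workers)
    return best


best = balance(cycle_times=[60, 40, 50], rula=[3, 2, 4], resilience=[1.0, 0.5],
               qualified=[[1, 1, 1], [1, 1, 1]], takt_time=200)
print(best)  # → (90.0, [[0], [1, 2]], (1, 0))
```

For a line with nine tasks, five stations and five workers, this enumerates C(8, 4) = 70 ordered task splits times 5! = 120 worker orders, i.e. 8,400 candidates, which is trivial for brute force. Larger lines would call for an integer-programming formulation of the same constraints.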

3.2. Ergonomic and line balancing use-case

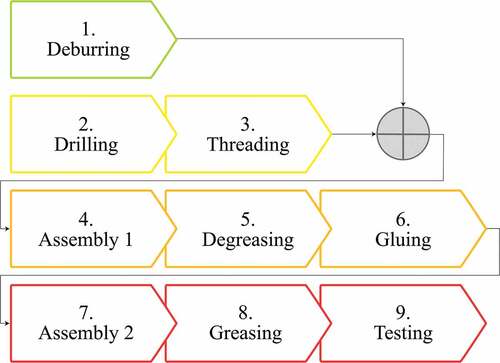

To illustrate the ErgoTakt approach and its results regarding an assembly scenario, a synthetic process chain is designed as a virtual simulation of nine tasks configured as shown in .

The tasks can be performed by five simulated workers according to their qualifications and are distributed over five workstations. The assembly/task order is fixed. The cycle time (CT) of each task and the average RULA score are presented in Table 2. The worker data contain the worker ID (A to E) and his/her physical resilience, which is represented by a percentage value (see also Table 2). In this case, workers B and D are assumed to have reduced physical resources, e.g. due to their age or a disability. These employees get more time to fulfil a task according to their impairment. Furthermore, the qualification for the specified tasks (1–9) is noted as a binary variable.

Table 2. Input data for the ErgoTakt algorithm.

Table 3. Categorized literature review gesture-controlled robotics.

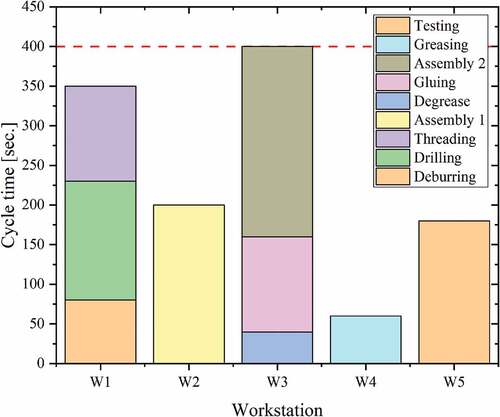

The optimization is initialized with an arbitrary but technically feasible allocation of tasks to workstations (Wi), illustrated in . As an additional starting condition, gluing (Task 6 in ) is defined as feasible only at workstation 4, and worker D is the only worker qualified for this task. The sum of the task cycle times per workstation defines its maximum cycle time. It can be seen that the resulting levelling is less than ideal.

Based on these input data, the optimization is carried out by the algorithm described previously. To show how including the ergonomic assessment influences the optimization results, the assembly scenario has been optimized in two phases:

3.2.1. Phase 1

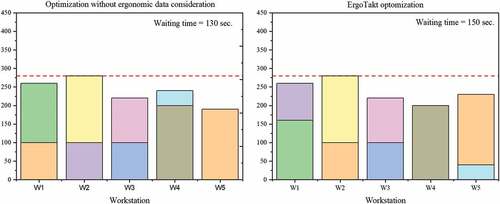

In phase 1, no ergonomic data are considered for the line balancing optimization. Hence, the physical impairment value does not influence the algorithm, even though the workers might be burdened by stress or time pressure in real assembly scenarios. The aim here is to optimize the balancing within the entire process chain, i.e. to lower the maximum cycle time of the ‘slowest’ workstation in the process chain. The initial scenario gives a start value of 400 s at W3 (see ).

The result of this optimization phase shows a reassignment of the tasks to the workstations compared to the initial scenario and thus a ‘homogenization’ of the cycle times. The maximum cycle time of the process chain is lowered to 280 s; the total waiting time between the workstations is 130 s (see , right).

3.2.2. Phase 2

In phase 2, the RULA score is considered (see , right). The new target of the optimization algorithm is to minimize the ErgoTakt indicator. As a result, the tasks are again reorganized within the process chain under the given order from W1 to W5, and a technologically feasible order is still maintained. The maximum cycle time of the process chain remains at 280 s, while the waiting time rises slightly to 150 s (see , left). The mean cycle time decreases from 258 s (phase 1) to 238 s in phase 2. The standard deviation σ also decreases, from ±57.4 s to ±31.2 s, at the expense of the waiting time (WT) between all workstations (130 s → 150 s).
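The balancing indicators compared between the two phases can be computed from the per-station cycle times. The Python sketch below assumes common textbook definitions: the waiting time sums each station's idle time relative to the bottleneck station, and σ is the population standard deviation. The authors may define these quantities differently, so the sketch is not meant to reproduce the reported values, and the station times in the example are made up.

```python
from statistics import mean, pstdev
from typing import Dict, Sequence


def balance_metrics(station_times: Sequence[float]) -> Dict[str, float]:
    """Line-balancing indicators for one cycle of a serial line."""
    bottleneck = max(station_times)
    return {
        "max_cycle_time": bottleneck,
        "mean_cycle_time": mean(station_times),
        "std_dev": pstdev(station_times),  # population standard deviation
        # Each station idles until the slowest station finishes its cycle.
        "waiting_time": sum(bottleneck - t for t in station_times),
    }


print(balance_metrics([200.0, 280.0, 240.0]))  # max 280.0, mean 240.0, waiting 120.0
```

Under these definitions, a lower σ at an unchanged bottleneck signals more even station loads, which is the kind of homogenization discussed for phase 2.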

These indicators show that integrating the ergonomic score and resilience into the optimization leads to more WT between the workstations. The mean cycle time and the standard deviation σ differ between the two optimization phases, and both indicate a homogenization of the assembly line, especially σ. Furthermore, the average RULA scores, which are known for each task, can be related to both phases even though they are not considered in the optimization in phase 1. The task-workstation assignments then lead to a RULA score for each of W1–W5; the resulting alignment is shown in . The physical exposure of the worker at W2 is brought down from RULA 3.5 to RULA 3, but at the expense of W4 (2.0 → 2.5). For this assembly scenario, the ErgoTakt approach levels the RULA score better than phase 1.

The results indicate the applicability and the advantages of the approach in a simulated assembly process chain. In the virtual scenario simulation, both the line balancing and the ergonomic scores are improved. The RULA score at the most critical station, W2, decreases by 30%. The increase of the RULA score at W4 lies in a rather uncritical range (2 → 2.5). The benefits of ergonomic acquisition with the ErgoSentinel software are evident: long-term evaluation, continuous RULA scoring and low-cost equipment are just some of them. Combining automated RULA evaluation with production line balancing makes it possible to optimize both dimensions, economic line balancing and ergonomic posture.

3.3. Discussion and limitations of the ErgoTakt approach

Critically, many other factors influence how tasks are assigned and organized in practice, above all in existing assembly process chains. For instance, the factory layout, the technical flexibility of shifting tasks between workstations, or technologically incompatible combinations of workstations and tasks might be a challenge for implementing the ErgoTakt approach. Therefore, ErgoTakt should first be used in the planning phase of an assembly process chain, to evaluate the RULA scores with ErgoSentinel, e.g. using cardboard mock-ups. The technical equipment of the workstations and the arrangement of the tasks can then be analysed in advance with respect to ergonomics and line balancing. Nevertheless, the ErgoTakt approach is applicable to an existing assembly line when the following conditions are largely fulfilled:

Workstations must be compatible with existing skeleton capturing technologies (e.g. postures, tools and safety devices, occlusions, etc.)

Modular assembly lines with reconfigurable tasks and task technologies

Re-configurability of the assembly line layout

Similarity of (assembly) technologies of the workstations

Low degree of automated workstations within the process chain in comparison to manual assembly tasks

These conditions are usually met in normal working environments; thus, they do not limit the use of this technology.

The ErgoTakt use case demonstrates the use of gesture-based monitoring to derive ergonomic values from the workers' movements (Wilhelm et al. 2021).

The next section reviews gesture-based robot control technologies and shows a straightforward cloud-based approach to gesture-based robot control, demonstrating both gesture-based monitoring and control.

4. Gesture-based Human-Machine-Interaction

Captured movements can additionally be used for interaction between humans and machines, providing an intuitive process through gesture control. This allows existing production systems to be extended with new, smart interaction mechanisms. An obvious application for gesture commands is the control of robots. Industrial robots are used especially to assist humans in working environments, e.g. under dangerous environmental conditions or high physical loads. Since a robot is supposed to replace the movements of an employee, programming it through corresponding movements is an intuitive method. Table 3 presents a review of gesture-based robot control, classifying the technologies used, the type of robot and the kind of control gestures. The technologies are divided into four categories: wearables, camera, infrared camera (Leap Motion) and depth camera (Microsoft Kinect). The robot types are classified into professional industrial robots and non-professional/commercial robots whose functionalities are similar to those of industrial robots. Other areas with strong research activity in gesture control, such as humanoid robots, are left out. A distinction is made between static and dynamic gestures. Static gestures are firmly assigned gestures that each trigger exactly one robot movement. Dynamic gestures can be the indirect transmission of motion sequences, e.g. the movements of a human hand to a robot arm. Mirroring, in our context, means transmitting the (scaled) spatial coordinates of the arm movement and thus determining the position of the end effector.
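Mirroring as defined above can be sketched as a scaled mapping from the tracked hand position to an end-effector target. The Python sketch is illustrative only. It assumes the camera and robot frames are already aligned (no rotation), uses a single uniform scale factor, and clamps the result to a box-shaped workspace; the function name `mirror_to_robot` and all numbers are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def mirror_to_robot(hand: Vec3, hand_ref: Vec3, robot_ref: Vec3, scale: float,
                    workspace: Tuple[Vec3, Vec3]) -> Vec3:
    """Map a tracked hand position to an end-effector target position."""
    low, high = workspace
    x, y, z = (
        # Scaled displacement from the reference pose, clamped to the workspace.
        min(max(r + scale * (h - h0), lo), hi)
        for h, h0, r, lo, hi in zip(hand, hand_ref, robot_ref, low, high)
    )
    return (x, y, z)


target = mirror_to_robot(hand=(0.1, 0.2, 0.0), hand_ref=(0.0, 0.0, 0.0),
                         robot_ref=(0.5, 0.0, 0.3), scale=2.0,
                         workspace=((0.0, -0.5, 0.0), (0.8, 0.5, 0.6)))
print(target)  # approximately (0.7, 0.4, 0.3)
```

A scale factor above 1 lets small hand motions cover a large robot workspace at the cost of amplifying tracking noise, which relates to the inaccuracy of small movements reported in several of the works reviewed here.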

Marinho et al. (Citation2012) show the use of a depth camera (Microsoft Kinect) in combination with an industrial robot. The movements of the right arm are mirrored. The left arm solves predefined tasks through defined gestures, e.g. gripping objects. Only one arm can be used at a time. The transmission of the movements of the arm must be stopped by a defined gesture, if the other one should be used. Moe and Schjølberg (Citation2013) show a similar setup with a depth camera (Microsoft Kinect) and an industrial robot with the aim of performing a pick-and-place task. An acceleration sensor (smartphone) to be able to transmit the orientation extends the depth camera. They notice an inaccuracy of the end-point and a delay, especially with fast movements. In addition, smaller movements are poorly recognized. Shirwalkar et al. (Citation2013) use a similar setup (Microsoft Kinect + industrial robot) to perform pick-and-place and liquid-handling tasks. The focus of their work deals with different workspace sizes.The paper does provide a direct coordinate transformation of the length of the arms’ movements or a variable factor to convert the length to the robots’ workspace. In their work, the movements are continued by the robot at a constant speed until the gesture to stop is given. Other static gestures are used, especially for commands to the gripper. Yang et al. (Citation2015) use movement control in conjunction with a collaborative work process. Using a depth camera (Microsoft Kinect v2), a defined object is recognized in one of the worker’s hands. With the other arm, commands are given in the form of static gestures. The spatial position of the object is detected and the industrial robot picks up the object from the operator or releases the grip after receiving corresponding instructions through gestures. Fuad (Citation2015) also uses a depth camera (Kinect v2) and an industrial robot. 
Here, the focus is on directly transmitting velocities to the robot axes, obtained by measuring the angle between the adjacent bones at the corresponding joint of the human arm. Chen et al. (2015) present an approach using an infrared camera (Leap Motion) and industrial robots: the IR camera detects only the hand, whose coordinates are then transformed into movements of the robot arm. Tsarouchi et al. (2016b) use an IR camera (Leap Motion) in addition to a depth camera (Kinect) to send static commands to an industrial robot via ROS. The depth camera recognizes arm postures as commands, while the close-range IR camera recognizes finger positions that are mapped to the same commands. Cueva, Torres, and Kern (2017) use a depth camera (Kinect v2) and an Arduino-controllable robot. The user is shown several boxes on a screen, each representing one axis or the robot gripper. Moving the hand from one side of a box to the other moves the corresponding axis. The gripper is operated with the other hand and can therefore be controlled independently of the axes. In addition, an open and a closed hand are distinguished to start or stop the movement. Hong et al. (2017) use a depth camera (Kinect v2) and a self-built robotic arm that works as similarly as possible to a human arm. To achieve a smooth movement of the robot arm, a smoothing filter is applied to the control commands, and erroneous values, e.g. due to measurement errors of the depth camera, are removed. Coban and Gelen (2018) use an EMG wristband (MYO wristband) to control an industrial robot. In addition to defined gestures associated with pre-programmed movement commands, the wristband's gyroscope, accelerometer and magnetometer are used: the roll, pitch and yaw values from these sensors provide the spatial orientation. Kandalaft et al. (2018) apply speech control to a non-professional robotic arm in addition to an accelerometer attached to the operator's hand. The accelerometer provides x, y and z values; defined hand positions are calibrated beforehand and assigned to specific commands for the robot arm. Alternatively, commands can be given by voice: a smartphone translates the spoken words into text, which is compared with predefined text for specific movements. Atre et al. (2018) use a USB camera and computer vision for gesture control: the hand is extracted from the captured images of the user, and the recognized gesture is used for control. Auquilla et al. (2019) use a box-based system similar to that of Cueva, Torres, and Kern (2017): boxes are displayed on the user's screen, and the robot is controlled by gestures within the different boxes. Using this control, a pick-and-place task is performed. In addition, an automated process is tested in which defined process steps are started by the user's gestures. Fan, Yang, and Wu (2019) combine EMG (MYO wristband) and an IR camera (Leap Motion) for control. The control is activated as soon as the IR camera detects a closed hand; when the hand is moved within the field of view of the IR camera, its coordinates are transformed and transmitted. The transmission ends as soon as the hand is opened. The wristband serves as a safety feature that detects unforeseen movements (e.g. shaking of the arm) and briefly interrupts the data transmission. Li (2020) presents an approach to gesture control using a depth and RGB camera (Kinect v2). Static gestures, dynamic gestures and movements are recognized by a trained neural network, with simulated picking and screwing given as application examples. Kaczmarek et al. (2020) use a depth camera (Kinect v2) and voice commands to control an industrial robot.
The developed software can control different industrial robots by means of pre-programmed gestures. The authors also show that each command is executed with a delay.
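
The joint-angle measurement described for Fuad (2015) can be illustrated with a short sketch. The angle at a human joint (here the elbow) follows from the two bone vectors pointing to the adjacent joints, and a joint velocity can be approximated by a finite difference between two frames. The joint coordinates, the frame interval and how the velocity is then scaled to a robot axis are assumptions for illustration, not details of the original work.

```python
import math


def joint_angle(a, b, c):
    """Angle at joint b in radians between the bones b->a and b->c,
    e.g. shoulder-elbow-wrist for the elbow angle."""
    u = [ai - bi for ai, bi in zip(a, b)]
    v = [ci - bi for ci, bi in zip(c, b)]
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0.0:
        raise ValueError("coincident joint positions")
    # Clamp to [-1, 1] to guard against rounding errors before acos.
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def joint_velocity(angle_prev: float, angle_now: float, dt: float) -> float:
    """Finite-difference estimate of the joint velocity (rad/s) between two frames."""
    return (angle_now - angle_prev) / dt
```

For example, a shoulder at (0, 1, 0), an elbow at the origin and a wrist at (1, 0, 0) give a right angle. At an assumed frame rate of 30 Hz, an angle change of 0.1 rad between two frames corresponds to about 3 rad/s.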

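Hong et al. (2017) smooth the control commands and remove erroneous depth-camera values, but the filter itself is not described here. The sketch below shows one common way to do both. It assumes a jump threshold for rejecting implausible samples and an exponential moving average for smoothing; both the technique and the parameter values are assumptions, not the authors' implementation.

```python
class CommandFilter:
    """Outlier rejection followed by exponential smoothing for one control signal.

    Illustrative stand-in only, not the filter used by Hong et al. (2017).
    """

    def __init__(self, alpha: float = 0.3, max_jump: float = 0.5, max_rejects: int = 3):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha              # weight of the newest sample (1.0 = no smoothing)
        self.max_jump = max_jump        # largest plausible change between consecutive samples
        self.max_rejects = max_rejects  # consecutive outliers tolerated before accepting a jump
        self.state = None               # last filtered value (None until the first sample)
        self.rejects = 0

    def update(self, sample: float) -> float:
        """Feed one raw sample and return the filtered command value."""
        if self.state is None:
            self.state = float(sample)
            return self.state
        jump = abs(sample - self.state) > self.max_jump
        if jump and self.rejects < self.max_rejects:
            # Isolated spike: treat it as a measurement error and hold the last value.
            self.rejects += 1
            return self.state
        if jump:
            # The jump persisted, so it is probably a real movement: re-initialize.
            self.state = float(sample)
        else:
            self.state = self.alpha * sample + (1.0 - self.alpha) * self.state
        self.rejects = 0
        return self.state
```

The `max_rejects` counter prevents the filter from locking onto an old value when the hand really does move quickly; without it, any genuine change larger than `max_jump` would be rejected forever.
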
Islam et al. (2020) present a gesture-controlled non-professional robotic arm for IoT applications that is controlled by an IR camera (Leap Motion). Compared with a joystick controller, gesture control achieves similar results: movement sequences can be performed with similar accuracy. However, errors may occur in the recognition of certain commands, such as grab or rotate. Lin et al. (2020) use a depth camera (Kinect v2) to recognize both hands. One hand transmits real-time movements to the non-professional robot, while the other hand performs static gestures that start and stop the recording of these movements. Another gesture makes the robot arm replay the recorded movements. Salamea et al. (2020) use a depth camera (Kinect v2) to control a non-professional SCARA robot by static gestures, focusing on how the commands are transferred for teleoperation. Overall, the reviewed works show that depth cameras (Microsoft Kinect) are the most commonly used detection technology, while infrared cameras (Leap Motion) are used where higher precision is required. For control, static and dynamic gestures are most commonly used in combination.
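
The two-hand scheme of Lin et al. (2020) can be sketched as a small state machine. In this scheme, one hand streams real-time movements while static gestures of the other hand start and stop a recording and trigger its replay. The gesture labels and the `move_to` callback are hypothetical; the original implementation is not described here.

```python
from typing import Callable

Pose = tuple[float, float, float]


class RecordReplayController:
    """One hand streams poses; static gestures of the other hand control recording."""

    def __init__(self, move_to: Callable[[Pose], None]):
        self.move_to = move_to      # hypothetical callback that commands the robot
        self.recording = False
        self.trajectory: list[Pose] = []

    def on_gesture(self, gesture: str) -> None:
        """Handle a static gesture of the command hand (labels are hypothetical)."""
        if gesture == "start_recording":
            self.recording = True
            self.trajectory = []
        elif gesture == "stop_recording":
            self.recording = False
        elif gesture == "replay" and not self.recording:
            for pose in self.trajectory:
                self.move_to(pose)

    def on_hand_pose(self, pose: Pose) -> None:
        """Mirror the moving hand in real time and store the pose while recording."""
        self.move_to(pose)
        if self.recording:
            self.trajectory.append(pose)
```

Replay is only allowed when no recording is active, so a recording cannot accidentally be extended with its own replayed poses.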

The review indicates that gesture-based robot control appears feasible even for industrial applications. Since software as a service is increasingly used to outsource computationally intensive processes, the next section introduces a cloud-based approach to gesture-based robot control.

4.1. Cloud-based approach to gesture-based robot control

Industry 4.0 and smart manufacturing are two key themes of this contribution. In this context, cloud-based services are increasingly finding their way into production. Hence, a straightforward approach to gesture-based robot control is presented that combines existing cloud-based AI models with graphical IoT programming.

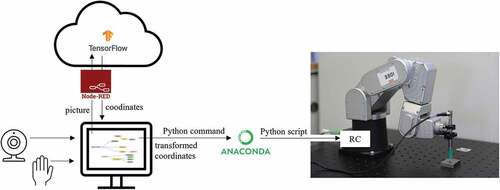

Our approach relies on the TensorFlow model for hand-pose recognition (MediaPipe Handpose 2022), integrated into a Node-RED flow. Node-RED is an open-source graphical development tool based on JavaScript for integrating IoT devices, APIs (application programming interfaces) and online services by means of so-called flows (Gardasevic et al. 2017).

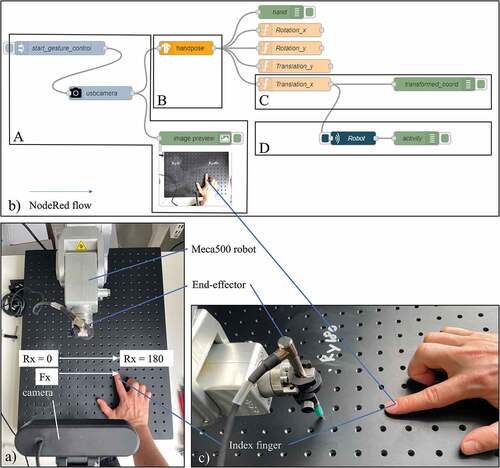

To realize the interface to the robot, the pythonshell node is used to send commands to the robot controller. A MECA 500 industrial 6-axis serial robot is used, which can be controlled via Python commands. For this purpose, the open-source Python distribution Anaconda is employed. The chosen architecture is shown in -.

For the test bench, the MECA 500 robot is equipped with a measurement probe as end effector. A camera is mounted statically above the entire setup. The aim of our use case is for the robot to adopt the x-coordinate (Rx) of its program according to the x-position of the index finger (Fx), with both axes pointing in the same direction. The Node-RED flow and the resulting gesture-based robot control application are structured as follows (see also ):

Capturing a picture: An inject node starts the image capture via a USB camera, and an image preview node shows the captured picture of the operator's hand.

Getting the x-coordinate of the index finger (Fx): The image is passed to the handpose node, which provides a cloud-based hand-tracking service via a neural network (Zhang et al. 2020). The output of this node is a JSON object containing the x- and y-coordinates of each finger.

Transforming Fx to the robot coordinate (Rx): To scale the index-finger coordinate to the world reference frame of the robot, a function node maps Fx linearly to Rx.

Transferring the transformed coordinate to the Python robot script: The resulting coordinate is then passed to the robot program by a pythonshell node.
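
The second and third steps can be sketched in Python; in the actual flow, the mapping runs in a JavaScript function node. The sketch assumes a handpose-style prediction in which `annotations["indexFinger"]` holds four [x, y, z] points ordered from the finger base to the fingertip, as in the TensorFlow.js handpose model. The exact JSON emitted by the Node-RED handpose node may differ. The linear map Rx = a·Fx + b is fitted from two hypothetical calibration pairs of pixel and robot coordinates.

```python
def index_tip_x(prediction: dict) -> float:
    """x pixel coordinate of the index fingertip (assumed to be the last finger point)."""
    return float(prediction["annotations"]["indexFinger"][-1][0])


def fit_linear_map(f1: float, r1: float, f2: float, r2: float) -> tuple[float, float]:
    """Fit Rx = a * Fx + b through two calibration pairs (pixel, robot mm)."""
    if f1 == f2:
        raise ValueError("calibration points must differ in Fx")
    a = (r2 - r1) / (f2 - f1)
    b = r1 - a * f1
    return a, b


def to_robot_x(fx: float, a: float, b: float, rx_min: float, rx_max: float) -> float:
    """Map Fx to Rx and clamp it to the allowed robot range."""
    return min(max(a * fx + b, rx_min), rx_max)
```

For example, calibrating pixel 0 to 150 mm and pixel 640 to 250 mm gives a = 0.15625 mm/pixel and b = 150 mm, so a fingertip at pixel 320 maps to Rx = 200 mm. A positive slope reflects that Fx and Rx point in the same direction, and the clamp keeps the probe inside the assumed safe range.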

Figure 7. (a) Top view of the test bench with the MECA 500 robot and the camera; (b) Node-RED flow and its direction; (c) resulting robot position after pointing to a position with the index finger.
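
On the Python side, the robot script only needs to parse the coordinate handed over by the pythonshell node and turn it into a motion command. The sketch below makes several assumptions: the coordinate arrives as a command-line argument, the remaining pose components stay fixed at hypothetical values, and the script only formats a `MovePose(...)` command string modelled on Mecademic's text-based robot commands. The exact command syntax and the transport to the MECA 500 should be checked against the Mecademic documentation; no robot connection is made here.

```python
import sys

# Hypothetical fixed pose components (mm, degrees) for the measurement probe.
FIXED_Y, FIXED_Z = 0.0, 150.0
FIXED_ORIENTATION = (0.0, 90.0, 0.0)
RX_LIMITS = (150.0, 250.0)  # assumed safe range for Rx in mm


def build_move_command(rx: float) -> str:
    """Return a MovePose command string; rx is clamped to RX_LIMITS."""
    lo, hi = RX_LIMITS
    rx = min(max(rx, lo), hi)
    values = (rx, FIXED_Y, FIXED_Z, *FIXED_ORIENTATION)
    return "MovePose(" + ",".join(f"{v:.3f}" for v in values) + ")"


if __name__ == "__main__":
    # Each argument is assumed to be one Rx value in mm passed by the pythonshell node.
    # A real script would send the command to the robot controller instead of printing it.
    for arg in sys.argv[1:]:
        print(build_move_command(float(arg)))
```

Keeping the y-, z- and orientation components fixed mirrors the test bench, where only the x-coordinate follows the index finger.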

With the presented approach, straightforward gesture-based robot control can be realized at low cost and with little coding effort, enabled by the visual elements of Node-RED. A crucial drawback is the high latency (>1 s), as the model is not yet computed locally.

4.2. Discussion and limitations of the cloud-based approach to gesture-based robot control

The industrial application of the described human-robot interaction approach depends on several factors, such as latency, robustness and the accuracy of the human trajectory compared with that of the robot. The control loop time of industrial PLCs (programmable logic controllers) is less than 5 ms. Hence, a broadband infrastructure with low latency is essential. Here, industrial 5G technology can provide a wireless standard that is applicable to cloud-based and time-critical scenarios. Robustness can be associated with repeatability and independence from the user, as industrial applications normally run 24/7 with high availability. The required accuracy depends on the actual application, i.e. whether the robot must be positioned in the sub-millimetre range or only roughly. Hence, the scaling from human to robot movements needs to be adaptable.
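
A rough calculation illustrates why latency matters. While a command is in transit the hand keeps moving, so the robot lags behind by roughly the scaled hand speed multiplied by the latency, and many PLC cycles elapse per gesture update. The sketch uses the latency of more than 1 s and the 5 ms cycle time stated above. The hand speed of 200 mm/s and the tolerance value are assumed example figures, and robot dynamics are ignored.

```python
def lag_distance_mm(hand_speed_mm_s: float, latency_ms: float, scale: float = 1.0) -> float:
    """Approximate robot position lag in mm: scaled hand speed times latency."""
    return hand_speed_mm_s * scale * latency_ms / 1000.0


def plc_cycles(latency_ms: int, cycle_ms: int = 5) -> int:
    """Number of complete PLC cycles that elapse during one gesture update."""
    return latency_ms // cycle_ms


def max_latency_ms(tolerance_mm: float, hand_speed_mm_s: float, scale: float = 1.0) -> float:
    """Largest latency that keeps the lag below tolerance_mm at the given hand speed."""
    return 1000.0 * tolerance_mm / (hand_speed_mm_s * scale)
```

With 1000 ms latency and a hand speed of 200 mm/s, the robot lags by about 200 mm (100 mm with a scale factor of 0.5), while 200 PLC cycles of 5 ms pass. Keeping the lag below 0.5 mm at the same speed would require a latency of at most 2.5 ms. This is why sub-millimetre tasks call for either much lower latency or a smaller scale factor.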

5. Conclusion and further research

The recognition of gestures and movements in manufacturing offers a wide range of possible applications. It can be used for the preventive health care of employees as well as for various value-adding scenarios such as line balancing. Low-cost technologies in the form of depth cameras are available for digitising and supporting human-system interaction. With their help, various applications have already been realized, combining different devices with other data-driven approaches such as artificial intelligence (neural networks) or augmented reality (AR). Further fields of application can be opened up.

The ErgoTakt approach exploits this inexpensive option of recording human movements with a depth camera in order to balance the performance and health of the employee against economic interests. For this purpose, the RULA score is recorded using the ErgoSentinel software and combined with an optimization algorithm for manual assembly lines. In the developed use case, two scenarios are compared: one optimization with and one without ergonomic values. The results show an improvement of about 30% in the RULA score of the most critical workstation, at the expense of a 14% increase in waiting time.
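
ErgoTakt's actual objective is defined in Wilhelm et al. (2021) and is not reproduced here. The following hypothetical sketch illustrates the general trade-off between cycle time and ergonomics. It splits an ordered task list into contiguous stations and minimizes the worst station score. Each station score is a weighted sum of the station time over the takt time and the station's worst RULA score normalized by the maximum grand score of 7. The weighting, the use of the maximum task RULA score per station and the brute-force search are assumptions, and workers' abilities are not modeled.

```python
from itertools import combinations


def station_score(tasks, takt: float, weight: float) -> float:
    """Hypothetical station score: weighted load ratio plus normalized worst RULA score."""
    time = sum(t for t, _ in tasks)
    rula = max(r for _, r in tasks)
    return weight * time / takt + (1.0 - weight) * rula / 7.0


def balance(tasks, stations: int, takt: float, weight: float = 0.5):
    """Split an ordered list of (time_s, rula) tasks into contiguous stations,
    minimizing the worst station score by trying every set of cut points."""
    n = len(tasks)
    if not 1 <= stations <= n:
        raise ValueError("need 1 <= stations <= number of tasks")
    best = None
    for cuts in combinations(range(1, n), stations - 1):
        bounds = (0, *cuts, n)
        groups = [tasks[a:b] for a, b in zip(bounds, bounds[1:])]
        score = max(station_score(g, takt, weight) for g in groups)
        if best is None or score < best[0]:
            best = (score, groups)
    return best
```

Consider tasks of (20 s, RULA 7), (40 s, RULA 3) and (40 s, RULA 3), two stations and a takt time of 80 s. A weight of 1.0 (time only) groups the first two tasks together. A weight of 0.5 instead isolates the RULA-7 task on a lightly loaded station. The brute-force search is only practical for small lines.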

In addition, the acquisition of gestures to control robots, a crucial automation element of manufacturing systems, is presented. The technique is a low-cost, low-code solution using a single USB camera and open-source IoT environments. The camera captures the hand, its movements are tracked by image recognition, and the derived finger coordinate is transformed into the robot coordinate frame for positioning tasks. The robot program is adapted within the control loop. This cloud-based teleoperation approach again opens up several possible applications: experienced users can remotely assist even difficult and precise tasks, and operations in hazardous environments can be executed at a safe distance with the required precision.

Both approaches illustrate applications of gesture-based technologies in digitized industry and beyond. Further research is needed to adapt current sensing and processing techniques for use in real industrial environments. Moreover, the optimization algorithm should be extended so that it can integrate automated or even teleoperated processes. Gesture-based control must also achieve lower latencies and be tested for reliability in acquisition and processing. The possibilities and effects of integrating teleoperation into existing (industrial) processes must be investigated as well. Finally, human aspects must be considered: examining whether and how employees change their behavior when their activities are recorded and processed by technical systems is crucial for the acceptance of such systems in working environments.

Acknowledgments

The authors gratefully thank the Hans-Wilhelm Renkhoff Foundation for supporting the ROKOMESS project as well as the Bayerische Forschungsallianz for funding the BayIntAn project (Grant no. BayIntAn_FHWS_2019_159) to strengthen the international research relations between the Politecnico di Bari and the University of Applied Sciences Würzburg-Schweinfurt.

The ErgoSentinel software is a result of the implementing agreement between the Apulia agency of the National Institute for Insurance against Accidents at Work (INAIL) and the Politecnico di Bari: “Sviluppo e Diffusione di un tool software low cost basato su Kinect™ v2, per il monitoraggio automatico in real time del rischio ergonomico” (Development and dissemination of a low-cost software tool based on Kinect™ v2 for the automatic real-time monitoring of ergonomic risk).

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Ansari, F., P. Hold, W. Mayrhofer, S. Schlund, and W. Sihn. 2018. “AUTODIDACT: Introducing the Concept of Mutual Learning into a Smart Factory Industry 4.0.” International Association for Development of the Information Society. ISBN 978-989-8533-81-4. http://www.iadisportal.org/digital-library/autodidact-introducing-the-concept-of-mutual-learning-into-a-smart-factory-industry-40

- Atre, P., S. Bhagat, N. Pooniwala, and P. Shah. 2018. “Efficient and Feasible Gesture Controlled Robotic Arm.” In 2018 Second International Conference on Intelligent Computing and Control Systems (ICICCS), 14.06.2018 - 15.06.2018, 1–6. Madurai, India: IEEE. doi:10.1109/ICCONS.2018.8662943.

- Auquilla, A. R., H. T. Salamea, O. Alvarado-Cando, J. K. Molina, and P. A. S. Cedillo. 2019. “Implementation of a Telerobotic System Based on the Kinect Sensor for the Inclusion of People with Physical Disabilities in the Industrial Sector.” In 2019 IEEE 4th Colombian Conference on Automatic Control (CCAC), 15.10.2019 - 18.10.2019, 1–6. Medellín, Colombia: IEEE. doi:10.1109/CCAC.2019.8921359.

- Battini, D., A. Persona, and F. Sgarbossa. 2014. “Innovative Real-Time System to Integrate Ergonomic Evaluations into Warehouse Design and Management.” Computers & Industrial Engineering 77: 1–10. doi:10.1016/j.cie.2014.08.018.

- Boenzi, F., S. Digiesi, F. Facchini, and G. Mummolo. 2016. “Ergonomic Improvement Through Job Rotations in Repetitive Manual Tasks in Case of Limited Specialization and Differentiated Ergonomic Requirements.” IFAC-PapersOnLine 49 (12): 1667–1672. doi:10.1016/j.ifacol.2016.07.820.

- Bortolini, M., M. Faccio, M. Gamberi, and F. Pilati. 2018a. “Motion Analysis System (MAS) for Production and Ergonomics Assessment in the Manufacturing Processes.” Computers & Industrial Engineering 139. doi:10.1016/j.cie.2018.10.046.

- Bortolini, M., M. Gamberi, F. Pilati, and A. Regattieri. 2018b. “Automatic Assessment of the Ergonomic Risk for Manual Manufacturing and Assembly Activities Through Optical Motion Capture Technology.” Procedia CIRP 72: 81–86. doi:10.1016/j.procir.2018.03.198.

- Chen, S., H. Ma, C. Yang, and M. Fu. 2015. “Hand Gesture Based Robot Control System Using Leap Motion.” In Intelligent Robotics and Applications, Lecture Notes in Computer Science, edited by H. Liu, N. Kubota, X. Zhu, R. Dillmann, and D. Zhou, 581–591. Vol. 9244. Cham: Springer International Publishing. doi:10.1007/978-3-319-22879-2_53.

- Chuma, E. L., and Y. Iano. 2021. “A Movement Detection System Using Continuous-Wave Doppler Radar Sensor and Convolutional Neural Network to Detect Cough and Other Gestures.” IEEE Sensors Journal 21 (3, February 1): 2921–2928. doi:10.1109/JSEN.2020.3028494.

- Coban, M., and G. Gelen. 2018. “Wireless Teleoperation of an Industrial Robot by Using Myo Arm Band.” In 2018 International Conference on Artificial Intelligence and Data Processing (IDAP), 28.09.2018 - 30.09.2018, 1–6. Malatya, Turkey: IEEE. doi:10.1109/IDAP.2018.8620789.

- Colombini, D. 1998. “An Observational Method for Classifying Exposure to Repetitive Movements of the Upper Limbs.” Ergonomics 41 (9): 1261–1289. doi:10.1080/001401398186306.

- Cruz, L., D. Lucio, and L. Velho. 2012. “Kinect and RGBD Images: Challenges and Applications.” In Proceedings of the 2012 25th SIBGRAPI Conference on Graphics, Patterns and Images Tutorials. IEEE. doi:10.1109/SIBGRAPI-T.2012.13.

- Cueva, C. W. F., S. H. M. Torres, and M. J. Kern. 2017. “7 DOF Industrial Robot Controlled by Hand Gestures Using Microsoft Kinect V2.” In 2017 IEEE 3rd Colombian Conference on Automatic Control (CCAC), 18.10.2017 - 20.10.2017, 1–6. Cartagena: IEEE. doi:10.1109/CCAC.2017.8276455.

- Dimitropoulos, N., T. Togias, N. Zacharaki, G. Michalos, and S. Makris. 2021. “Seamless Human–robot Collaborative Assembly Using Artificial Intelligence and Wearable Devices.” Applied Sciences 11 (12): 5699. doi:10.3390/app11125699.

- Eurofund (European Foundation for the Improvement of Living and Working Conditions). 2019. “Sixth European Working Conditions Survey: Working Conditions and Workers’ Health”.

- Eurostat (European Statistical Office). 2019. “Population Structure and Ageing.”

- Facchini, F., G. Mummolo, G. Mossa, S. Digiesi, F. Boenzi, and R. Verriello. 2016. “Minimizing the Carbon Footprint of Material Handling Equipment: Comparison of Electric and LPG Forklifts.” Journal of Industrial Engineering and Management 9 (5): 1035–1046. doi:10.3926/jiem.2082.

- Fan, Y., C. Yang, and X. Wu. 2019. “Improved Teleoperation of an Industrial Robot Arm System Using Leap Motion and MYO Armband.” In 2019 IEEE International Conference on Robotics and Biomimetics (ROBIO), 06.12.2019 - 08.12.2019, 1670–1675. Dali, China: IEEE. doi:10.1109/ROBIO49542.2019.8961758.

- Fuad, M. 2015. “Skeleton Based Gesture to Control Manipulator.” In 2015 International Conference on Advanced Mechatronics, Intelligent Manufacture, and Industrial Automation (ICAMIMIA), 15.10.2015 - 17.10.2015, 96–101. Surabaya, Indonesia: IEEE. doi:10.1109/ICAMIMIA.2015.7508010.

- Gardasevic, G., H. Fotouhi, I. Tomasic, M. Vahabi, M. Bjorkman, and M. Linden. 2017. “A Heterogeneous IoT-Based Architecture for Remote Monitoring of Physiological and Environmental Parameters.” In 4th EAI International Conference on IoT Technologies for HealthCare, Angers, France, October 24–25. doi:10.1007/978-3-319-76213-5_7.

- Garg, A., D. B. Chaffin, and G. D. Herrin. 1978. “Prediction of Metabolic Rates for Manual Materials Handling Jobs.” American Industrial Hygiene Association Journal 39 (8): 661–674. doi:10.1080/0002889778507831.

- Golabchi, A., S. Han, J. Seo, S. Han, S. Lee, and M. Al-Hussein. 2015. “An Automated Biomechanical Simulation Approach to Ergonomic Job Analysis for Workplace Design.” Journal of Construction Engineering and Management 141 (8): 4015020. doi:10.1061/(ASCE)CO.1943-7862.0000998.

- Haggag, H., M. Hossny, S. Nahavandi, and D. Creighton. 2013. “Real Time Ergonomic Assessment for Assembly Operations Using Kinect.” In Proceedings of the 2013 UKSim 15th International Conference on Computer Modelling and Simulation, 495–500. IEEE. doi:10.1109/UKSim.2013.105.

- Heilala, J., and P. Voho. 2001. “Modular Reconfigurable Flexible Final Assembly Systems.” Assembly Automation 21 (1): 20–30. doi:10.1108/01445150110381646.

- Hignett, S., and L. McAtamney. 2000. “Rapid Entire Body Assessment (REBA).” Applied Ergonomics 31 (2): 201–205. doi:10.1016/S0003-6870(99)00039-3.

- Hong, C., Z. Chen, J. Zhu, and X. Zhang. 2017. “Interactive Humanoid Robot Arm Imitation System Using Human Upper Limb Motion Tracking.” In 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO), 05.12.2017 - 08.12.2017, 2746–2751. Macau: IEEE. doi:10.1109/ROBIO.2017.8324706.

- Islam, J., A. Ghosh, M. I. Iqbal, S. Meem, and N. Ahmad. 2020. “Integration of Home Assistance with a Gesture Controlled Robotic Arm.” In 2020 IEEE Region 10 Symposium (TENSYMP), 05.06.2020 - 07.06.2020, 266–270. Dhaka, Bangladesh: IEEE. doi:10.1109/TENSYMP50017.2020.9230893.

- Kaczmarek, W., J. Panasiuk, S. Borys, and P. Banach. 2020. “Industrial Robot Control by Means of Gestures and Voice Commands in Off-Line and On-Line Mode.” Sensors (Basel, Switzerland) 20 (21): 6358. doi:10.3390/s20216358.

- Kandalaft, N., P. S. Kalidindi, S. Narra, and H. N. Saha. 2018. “Robotic Arm Using Voice and Gesture Recognition.” In 2018 IEEE 9th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), 01.11.2018 - 03.11.2018, 1060–1064. Vancouver, BC: IEEE. doi:10.1109/IEMCON.2018.8615055.

- Karhu, O., P. Kansi, and I. Kuorinka. 1977. “Correcting Working Postures in Industry: A Practical Method for Analysis.” Applied Ergonomics 8 (4): 199–201. doi:10.1016/0003-6870(77)90164-8.

- Khan, M. B., Z. Zhang, L. Li, W. Zhao, M. A. M. A. Hababi, X. Yang, and Q. H. Abbasi. 2020. “A Systematic Review of Non-Contact Sensing for Developing a Platform to Contain COVID-19.” Micromachines 11 (10): 912. doi:10.3390/mi11100912.

- Li, X. 2020. “Human–robot Interaction Based on Gesture and Movement Recognition.” Signal Processing: Image Communication 81: 115686. doi:10.1016/j.image.2019.115686.

- Liles, D. H., S. Deivanayagam, M. M. Ayoub, and P. Mahajan. 1984. “A Job Severity Index for the Evaluation and Control of Lifting Injury.” Human Factors: The Journal of the Human Factors and Ergonomics Society 26 (6): 683–693. doi:10.1177/001872088402600608.

- Lin, C.-S., P.-C. Chen, Y.-C. Pan, C.-M. Chang, and K.-L. Huang. 2020. “The Manipulation of Real-Time Kinect-Based Robotic Arm Using Double-Hand Gestures.” Journal of Sensors 2020: 1–9. doi:10.1155/2020/9270829.

- Lu, Z., X. Chen, Q. Li, X. Zhang, and P. Zhou. 2014. “A Hand Gesture Recognition Framework and Wearable Gesture-Based Interaction Prototype for Mobile Devices.” IEEE Transactions on Human-Machine Systems 44 (2): 293–299. doi:10.1109/THMS.2014.2302794.

- Manghisi, V. M., M. Fiorentino, A. Boccaccio, M. Gattullo, G. L. Cascella, N. Toschi, A. E. Pietroiusti, and A. E. Uva. 2020. “A Body Tracking-Based Low-Cost Solution for Monitoring Workers’ Hygiene Best Practices During Pandemics.” Sensors 20 (21): 6149. doi:10.3390/s20216149.

- Manghisi, V. M., A. E. Uva, M. Fiorentino, V. Bevilacqua, G. F. Trotta, and G. Monno. 2017. “Real Time RULA Assessment Using Kinect V2 Sensor.” Applied Ergonomics 65: 481–491. doi:10.1016/j.apergo.2017.02.015.

- Manghisi, V. M., A. E. Uva, M. Fiorentino, M. Gattullo, A. Boccaccio, and G. Monno. 2018. “Enhancing User Engagement Through the User Centric Design of a Mid-Air Gesture-Based Interface for the Navigation of Virtual-Tours in Cultural Heritage Expositions.” Journal of Cultural Heritage 32: 186–197. doi:10.1016/j.culher.2018.02.014.

- Marinho, M. M., A. A. Geraldes, A. P. L. Bo, and G. A. Borges. 2012. “Manipulator Control Based on the Dual Quaternion Framework for Intuitive Teleoperation Using Kinect.” In 2012 Brazilian Robotics Symposium and Latin American Robotics Symposium, 16.10.2012 - 19.10.2012, 319–324. Fortaleza, Brazil: IEEE. doi:10.1109/SBR-LARS.2012.59.

- Martin, C. C., D. C. Burkert, K. R. Choi, N. B. Wieczorek, P. M. McGregor, R. A. Herrmann, and P. A. Beling. 2012. “A Real-Time Ergonomic Monitoring System Using the Microsoft Kinect.” In Proceedings of the 2012 IEEE Systems and Information Engineering Design Symposium, 50–55. IEEE. doi:10.1109/SIEDS.2012.6215130.

- McAtamney, L., and E. N. Corlett. 1993. “RULA: A Survey Method for the Investigation of Work-Related Upper Limb Disorders.” Applied Ergonomics 24 (2): 91–99. doi:10.1016/0003-6870(93)90080-S.

- MediaPipe Handpose. 2022. Version 0.0.7. Accessed June 5, 2022. https://www.npmjs.com/package/@tensorflow-models/handpose

- Michalos, G., A. Karvouniari, N. Dimitropoulos, T. Togias, and S. Makris. 2018. “Workplace Analysis and Design Using Virtual Reality Techniques.” CIRP Annals 67 (1): 141–144. doi:10.1016/j.cirp.2018.04.120.

- Michalos, G., S. Makris, L. Rentzos, and G. Chryssolouris. 2010. “Dynamic Job Rotation for Workload Balancing in Human Based Assembly Systems.” CIRP Journal of Manufacturing Science and Technology 2 (3): 153–160. doi:10.1016/j.cirpj.2010.03.009.

- Moe, S., and I. Schjølberg. 2013. “Real-Time Hand Guiding of Industrial Manipulator in 5 DOF Using Microsoft Kinect and Accelerometer.” In 2013 IEEE RO-MAN, 26.08.2013 - 29.08.2013, 644–649. Gyeongju: IEEE. doi:10.1109/ROMAN.2013.6628421.

- Moin, A., A. Zhou, A. Rahimi, A. Menon, S. Benatti, G. Alexandrov, S. Tamakloe, J. Ting, N. Yamamoto, Y. Khan, F. Burghardt, L. Benini, A. C. Arias, and J. M. Rabaey. 2021. “A Wearable Biosensing System with In-Sensor Adaptive Machine Learning for Hand Gesture Recognition.” Nature Electronics 4 (1): 54–63. doi:10.1038/s41928-020-00510-8.

- Moore, J. S., and A. Garg. 1995. “The Strain Index: A Proposed Method to Analyze Jobs for Risk of Distal Upper Extremity Disorders.” American Industrial Hygiene Association Journal 56 (5): 443–458. doi:10.1080/15428119591016863.

- Nguyen, T. D., M. Kleinsorge, A. Postawa, K. Wolf, R. Scheumann, J. Krüger, and G. Seliger. 2013. “Human Centric Automation: Using Marker-Less Motion Capturing for Ergonomics Analysis and Work Assistance in Manufacturing Processes.” In Proceedings of the 11th Global Conference on Sustainable Manufacturing, 586–592. Berlin, Germany. https://www.gcsm.eu/Papers/132/18.3_34.pdf

- Occhipinti, E. 1998. “OCRA: A Concise Index for the Assessment of Exposure to Repetitive Movements of the Upper Limbs.” Ergonomics 41 (9): 1290–1311. doi:10.1080/001401398186315.

- Paliyawan, P., C. Nukoolkit, and P. Mongkolnam. 2014. “Office Workers Syndrome Monitoring Using Kinect.” In Proceedings of the 20th Asia-Pacific Conference on Communication (APCC2014), 58–63. IEEE. doi:10.1109/APCC.2014.7091605.

- Pedersen, M. R., L. Nalpantidis, R. S. Andersen, C. Schou, S. Bøgh, V. Krüger, and O. Madsen. 2016. “Robot Skills for Manufacturing: From Concept to Industrial Deployment.” Robotics and Computer-Integrated Manufacturing 37: 282–291. doi:10.1016/j.rcim.2015.04.002.

- Peppoloni, L., A. Filippeschi, E. Ruffaldi, and C. A. Avizzano. 2016. “A Novel Wearable System for the Online Assessment of Risk for Biomechanical Load in Repetitive Efforts.” International Journal of Industrial Ergonomics 52: 1–11. doi:10.1016/j.ergon.2015.07.002.

- Peruzzini, M., F. Grandi, S. Cavallaro, and M. Pellicciari. 2021. “Using Virtual Manufacturing to Design Human-Centric Factories: An Industrial Case.” International Journal of Advanced Manufacturing Technology 115 (3): 873–887. doi:10.1007/s00170-020-06229-2.

- Peruzzini, M., and M. Pellicciari. 2017. “A Framework to Design a Human-Centred Adaptive Manufacturing System for Aging Workers.” Advanced Engineering Informatics 33: 330–349. doi:10.1016/j.aei.2017.02.003.

- Plantard, P., H. P. Shum, A. S. Le Pierres, and F. Multon. 2017. “Validation of an Ergonomic Assessment Method Using Kinect Data in Real Workplace Conditions.” Applied Ergonomics 65: 562–569. doi:10.1016/j.apergo.2016.10.015.

- Rehman, M., R. A. Shah, M. B. Khan, N. A. A. Ali, A. A. Alotaibi, T. Althobaiti, N. Ramzan, S. A. Shaha, X. Yang, A. Alomainy, M. A. Imran, and Q. H. Abbasi. 2021. “Contactless Small-Scale Movement Monitoring System Using Software Defined Radio for Early Diagnosis of COVID-19.” IEEE Sensors Journal 1. doi:10.1109/JSEN.2021.3077530.

- Roman-Liu, D. 2014. “Comparison of Concepts in Easy-To-Use Methods for MSD Risk Assessment.” Applied Ergonomics 45 (3): 420–427. doi:10.1016/j.apergo.2013.05.010.

- Romero, D., P. Bernus, O. Noran, J. Stahre, and Å. Fast-Berglund. 2016b. “The Operator 4.0: Human Cyber-Physical Systems & Adaptive Automation Towards Human-Automation Symbiosis Work Systems.” In Proceedings of IFIP International Conference on Advances in Production Management Systems, 677–686. doi:10.1007/978-3-319-51133-7_80.

- Romero, D., J. Stahre, T. Wuest, O. Noran, P. Bernus, A. Fast-Berglund, and D. Gorecky. 2016a. “Towards an Operator 4.0 Typology: A Human-Centric Perspective on the Fourth Industrial Revolution Technologies.” In Proceedings of International Conference on Computers & Industrial Engineering (CIE46), 1–11. Tianjin, China.

- Salamea, H. T., A. R. Auquilla, O. Alvarado-Cando, and C. U. Onate. 2020. “Control of a Telerobotic System Using Wi-Fi and Kinect Sensor for Disabled People with an Industrial Process.” In 2020 IEEE ANDESCON, 13.10.2020 - 16.10.2020, 1–6. Quito, Ecuador: IEEE. doi:10.1109/ANDESCON50619.2020.9272069.

- Schaub, K., G. Caragnano, B. Britzke, and R. Bruder. 2013. “The European Assembly Worksheet.” Theoretical Issues in Ergonomics Science 14 (6): 616–639. doi:10.1080/1463922X.2012.678283.

- Schmitt, J., A. Hillenbrand, P. Kranz, and T. Kaupp. 2021. “Assisted Human-Robot-Interaction for Industrial Assembly: Application of Spatial Augmented Reality (SAR) for Collaborative Assembly Tasks.” In Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, 52–56. doi:10.1145/3434074.3447127.

- Shirwalkar, S., A. Singh, K. Sharma, and N. Singh. 2013. “Telemanipulation of an Industrial Robotic Arm Using Gesture Recognition with Kinect.” In 2013 International Conference on Control, Automation, Robotics and Embedded Systems (CARE), 16.12.2013 - 18.12.2013, 1–6. Jabalpur, India: IEEE. doi:10.1109/CARE.2013.6733747.

- Sun, X., R. Houssin, J. Renaud, and M. Gardoni. 2018. “Towards a Human Factors and Ergonomics Integration Framework in the Early Product Design Phase: Function-Task-Behaviour.” International Journal of Production Research 56 (14): 4941–4953. doi:10.1080/00207543.2018.1437287.

- Tang, G., and P. Webb. 2018. “The Design and Evaluation of an Ergonomic Contactless Gesture Control System for Industrial Robots.” Journal of Robotics 2018: 1–10. doi:10.1155/2018/9791286.

- Tsarouchi, P., A. Athanasatos, S. Makris, X. Chatzigeorgiou, and G. Chryssolouris. 2016a. “High Level Robot Programming Using Body and Hand Gestures.” Procedia CIRP 55: 1–5. doi:10.1016/j.procir.2016.09.020.

- Tsarouchi, P., S. Makris, and G. Chryssolouris. 2016b. “Human – Robot Interaction Review and Challenges on Task Planning and Programming.” International Journal of Computer Integrated Manufacturing 29 (8): 916–931. doi:10.1080/0951192X.2015.1130251.

- Tsarouchi, P., G. Michalos, S. Makris, T. Athanasatos, K. Dimoulas, and G. Chryssolouris. 2017. “On a Human–robot Workplace Design and Task Allocation System.” International Journal of Computer Integrated Manufacturing 30 (12): 1272–1279. doi:10.1080/0951192X.2017.1307524.

- Turk, M. 2014. “Multimodal Interaction: A Review.” Pattern Recognition Letters 36: 189–195. doi:10.1016/j.patrec.2013.07.003.

- Vignais, N., M. Miezal, G. Bleser, K. Mura, D. Gorecky, and F. Marin. 2013. “Innovative System for Real-Time Ergonomic Feedback in Industrial Manufacturing.” Applied Ergonomics 44 (4): 566–574. doi:10.1016/j.apergo.2012.11.008.

- Waters, T. R., V. Putz-Anderson, A. Garg, and L. J. Fine. 1993. “Revised NIOSH Equation for the Design and Evaluation of Manual Lifting Tasks.” Ergonomics 36 (7): 749–776. doi:10.1080/00140139308967940.

- Webster, D., and O. Celik. 2014. “Systematic Review of Kinect Applications in Elderly Care and Stroke Rehabilitation.” Journal of Neuroengineering and Rehabilitation 11 (1): 108. doi:10.1186/1743-0003-11-108.

- W. H. O. (World Health Organization). 2003. Preventing Musculoskeletal Disorders in the Workplace. Protecting Workers’ Health Series No. 5.

- Wilhelm, M., V. M. Manghisi, A. Uva, M. Fiorentino, V. Bräutigam, B. Engelmann, and J. Schmitt. 2021. “ErgoTakt: A Novel Approach of Human-Centered Balancing of Manual Assembly Lines.” Procedia CIRP 97: 354–360. doi:10.1016/j.procir.2020.05.250.

- Yang, Y., H. Yan, M. Dehghan, and M. H. Ang. 2015. “Real-Time Human-Robot Interaction in Complex Environment Using Kinect V2 Image Recognition.” In 2015 IEEE 7th International Conference on Cybernetics and Intelligent Systems (CIS) and IEEE Conference on Robotics, Automation and Mechatronics (RAM), 15.07.2015 - 17.07.2015, 112–117. Siem Reap, Cambodia: IEEE. doi:10.1109/ICCIS.2015.7274606.

- Zhang, F., V. Bazarevsky, A. Vakunov, A. Tkachenka, G. Sung, C. L. Chang, and M. Grundmann. 2020. “MediaPipe Hands: On-Device Real-Time Hand Tracking.” arXiv preprint arXiv:2006.10214.