Abstract

We report on the development, testing and implementation of a bespoke benchmarking framework and assess its influence on the open innovation performance of new healthcare infrastructure. The research was developed from a series of 10 workshops, which took place after a four-month public consultation. This investigation borrows a pragmatic paradigm and is inductive and qualitative by nature, but uses quantitative scoring within the benchmarking assessment. The established framework comprises 39 criteria across four dimensions: Infrastructure Design & Management; Service Provision & Deployment; Operational Systems; and Decision-Making Enablers. The assessment of the focal healthcare infrastructure is provided, along with three specific external cases: two from the UK (P and W) and one from the USA (SH), the latter considered as best practice. Importantly, the study also reports on the experts’ reflective analysis, to examine in depth how benchmarking became the catalyst and knowledge management platform triggering open innovation.

1. Introduction

Innovation is defined as the process of leading firms to generate and implement novel ideas, practices and concepts into a given system (organization, product, process, service or infrastructure), positively affecting its operational and financial performance (Hartmann 2006; Kavadias and Ulrich 2020; Ruiz-Moreno, Tamayo-Torres, and García-Morales 2015; Shaw and Burgess 2013). Open innovation leads organizations to advance their innovation culture, processes and capabilities by developing deep collaborations with external firms and purposefully managing knowledge within firms’ boundaries (Bogers et al. 2019; Jasimuddin and Naqshbandi 2019; Roldán Bravo, Lloréns Montes, and Ruiz Moreno 2017; Severo et al. 2020). However, open innovation processes and practices, which have been described as imperative, require more exploration, and researchers have called for further investigations (Bogers et al. 2019; Tassabehji, Mishra, and Dominguez-Péry 2019; Thakur, Hsu, and Fontenot 2012). Different attributes enable firms to innovate successfully; for instance, Iacobucci and Hoeffler (2016) shed light on the role of social networks and platforms as a source for open innovations, and Shaw and Burgess (2013) reported that organizational knowledge, culture and capacity are critical factors. From this perspective, platforms, systems and tools are necessary to support firms in their innovation process (Bogers et al. 2019; Jasimuddin and Naqshbandi 2019). In this article, we rely on a broad definition of a platform, adapted from Muffatto (1999) and Scholten and Scholten (2012). A platform for innovation is an artefact providing a coherent set of common interconnected components to create a stable model, designed to achieve different specific results and provide control mechanisms.
In healthcare, platforms are considered major sources of service innovation and value co-creation tools (Akter et al. 2022).

Open innovation must be carefully implemented to be strategic and impactful (Bogers et al. 2019; Keskinocak and Savva 2020; Lee and Schmidt 2017), and firms may want to focus their innovations on superior resources (i.e. on their infrastructure) to maximize the impact and return on investment. Having said that, innovation is complex to achieve for many firms in different sectors (Cantarelli 2022; Roels and Perakis 2006), and healthcare executives and practitioners have expressed some concerns regarding the sector’s innovation (Thakur, Hsu, and Fontenot 2012). It has been argued that both the healthcare and the built environment industries have been suffering from a lack of open innovation (Bygballe and Ingemansson 2014; Cantarelli 2022; Dubois and Gadde 2002; Omachonu and Einspruch 2010; Thakur, Hsu, and Fontenot 2012). In the UK, the public healthcare sector experiences inefficiencies, and it can be perceived as uncoordinated and as carrying a high volume of non-value-added activities, all of which results in higher costs and a relatively lower perception of innovation compared to other industries (Akter et al. 2022; Keskinocak and Savva 2020; Wiler et al. 2017).

This article posits that healthcare infrastructures are strategic or superior assets, allowing healthcare organizations to provide and deliver health and social care effectively, and that they should therefore be developed innovatively to be fit for purpose and sustainable over time (Viergutz and Apple 2022). Traditionally, infrastructures are the interface through which patients receive healthcare services, and they have a substantial impact on the organization’s service quality and productivity (Akmal et al. 2022; Bamford et al. 2015; Liyanage and Egbu 2005; Kagioglou and Tzortzopoulos 2010; Omachonu and Einspruch 2010). The design and architecture of healthcare infrastructures affect the health outcomes and the organization’s results in terms of efficiency, quality and costs (Dehe and Bamford 2017). Additionally, infrastructure development is not a straightforward process, and its complexity creates uncertainty and variation, making it unfavourable to innovation (Dehe and Bamford 2015; Cantarelli 2022; Ekanayake, Shen, and Kumaraswamy 2021; Pellicer et al. 2014). The lack of overall performance management and innovation is a recognized problem, preventing (i) a holistic value chain perspective and (ii) the understanding of the entire development process (Shohet and Lavy 2010). First Francis and Glanville (2002), and then Akter et al. (2022), emphasized that frameworks for iterative exchange of information between multiple stakeholders need to be put in place to explore and trigger innovation in the healthcare sector. Therefore, this study aims to address these issues by investigating how a bespoke benchmarking framework may trigger, initiate and enhance open innovation in the context of new healthcare infrastructure development (Feibert, Andersen, and Jacobsen 2019; Strang 2016; Tezel, Koskela, and Tzortzopoulos 2021).

Benchmarking has been implemented in many sectors to improve performance (Bhattacharya and David 2018; Böhme et al. 2013; Maravelakis et al. 2006) with varying reported degrees of success. Generally, firms apply benchmarking to identify success factors and best practices to replicate and improve their performances, stimulate continuous improvement, promote changes and deliver improvements in quality, productivity and efficiency (Cooper et al. 2004; Feibert, Andersen, and Jacobsen 2019; Kay 2007; Rauter et al. 2019; Strang 2016). In this study, the case of healthcare infrastructure development is used to explore the relationship between benchmarking and open innovation. This is achieved by (i) developing a bespoke benchmarking framework, (ii) undertaking four benchmarking assessments and (iii) reflecting on its innovation impact. To focus and structure this study, the following research question is posited: How can a bespoke benchmarking framework be developed as a platform to enhance the performance and open innovation of new healthcare infrastructure projects? Borrowing a pragmatic worldview, our paper attempts a contribution that is rigorous and relevant (Hodgkinson and Rousseau 2009) by considering both a theoretical and a practical perspective when formulating the problematization and positioning the study (Nicholson et al. 2018). This research responds to the recognized lack of open innovation mechanisms within the healthcare built environment (Hinks and McNay 1999; Kagioglou and Tzortzopoulos 2010; Pellicer et al. 2014; Shohet and Lavy 2010). Moreover, the developed artefact can be applied and adapted as a blueprint for diverse healthcare organizations to enhance their infrastructure development performance and open innovation culture, which constitutes the practical contribution.

2. Literature review

2.1. A classical view of innovation

Adams, Bessant, and Phelps (2006), Hidalgo and Albors (2008), Kavadias and Ulrich (2020), Kalkanci, Rahmani, and Toktay (2019), Oke (2007), and Ortt and van der Duin (2008) provided definitions of innovation, suggesting that innovation is generated by a combination of productive resources, leading to the introduction of new products, the development of new methods, the exploration of new markets or the design of new organizational structures and supply network configurations. Over the past 20 years, the concept of innovation has evolved from a specific outcome generated by individual actions to more interactive processes created by relationships and collaborations within and between firms (Huizingh 2011); in other words, innovation is shifting from being closed to open (Chesbrough and Di Minin 2014). The interactive process of learning and exchanging information between structures and firms is a source of innovation (Jasimuddin and Naqshbandi 2019). Moreover, the process of innovation may formally involve the final consumer in order to be effective and adopted rapidly (Roldán Bravo, Lloréns Montes, and Ruiz Moreno 2017). Innovation is often described and framed around contrasting aspects: (i) macro or micro, (ii) incremental or radical and (iii) open or closed innovation.

Macro innovations directly include and impact other institutions, such as governments, agencies and industry networks (Herzlinger 2006), whereas micro innovations remain at the firm or internal supply chain level. Industrial clusters can be a relevant source of knowledge and innovation, as firms can establish links with each other as well as codify and exploit formal and informal exchanges (Turkina, Assche, and Kali 2016). Iacobucci and Hoeffler (2016) suggested that access to platforms was key to generating collaborative insights within a network. These can be important mechanisms used by firms to access external knowledge and resources (Almus and Czarnitzki 2003).

Innovation is categorized as incremental or radical (Chandrasekaran, Linderman, and Schroeder 2015; Damanpour and Gopalakrishnan 2001; Siguaw, Simpson, and Enz 2006). Incremental innovations are associated with minor changes following an organic progression in knowledge (Lawless and Anderson 1996; Siguaw, Simpson, and Enz 2006). On the other hand, radical innovations are related to redefining a market or reinventing a system. They can cause disruptive changes within a firm as they often incorporate a large degree of new knowledge and complexity (Dewar and Dutton 1986).

Open innovation relates to the use of purposive inflows and outflows of knowledge to accelerate internal innovation (Cantarelli and Genovese 2021; Chesbrough, Vanhaverbeke, and West 2006; Huizingh 2011). Open innovation is defined as the process of distributed ideas based on an intentional and managed exchange of information beyond the boundaries of the firm (Chesbrough 2003). By contrast, closed innovation relates to innovation developed exclusively within an organization (Chesbrough 2003). Platforms, systems and tools are necessary to integrate external knowledge and are instrumental to open innovation capabilities (Bogers et al. 2019; Jasimuddin and Naqshbandi 2019; Roldán Bravo, Lloréns Montes, and Ruiz Moreno 2017).

2.2. Platforms, systems and tools of open innovations

Open innovation comes from collaborations and integrations, which require a set of interfaces to manage the learning and the shared experience (Igartua, Garrigós, and Hervas-Oliver 2010). Platforms and systems are required for the effective management of the open innovation process; these artefacts support the collaborative efforts and capture and share information between departments and organizations (Hidalgo and Albors 2008). There are several practices, platforms, systems and tools used by firms to support and structure open innovation, such as team building and brainstorming workshops, road mapping, scenario analysis, technology watch, networking and virtual enterprise (Igartua, Garrigós, and Hervas-Oliver 2010). Quality function deployment (QFD) has led firms to be more innovative by capturing the voice of the customer, sharing information, identifying problems early, seeking new opportunities and aligning strategies (Dehe and Bamford 2017; Vinayak and Kodali 2013). Benchmarking has also been associated with innovation (Bhattacharya and David 2018). For instance, Salama et al. (2009) proposed and tested a new audit methodology for operations and supply chain improvement projects. They demonstrated how the involvement of key people is necessary to generate the learning that enables companies to innovate. Böhme et al. (2014) reported on how a firm can acquire in-depth knowledge using methodologies and platforms to enhance its knowledge transfer and innovation capabilities. Maravelakis et al. (2006) demonstrated how benchmarking can improve the iterative process of innovation but acknowledged that it remains relatively under-utilized as an innovation tool. As firms’ benchmarking and innovation practices and capabilities remain latent, especially in megaprojects, the built environment and the healthcare sector, further research is meaningful and valuable (Cantarelli and Genovese 2021).
This study contributes to this body of knowledge by investigating how a bespoke benchmarking framework can be a major vehicle to manage open innovation, using the healthcare infrastructure context.

2.3. Benchmarking definitions, applications and process

Benchmarking consists of investigating practices, establishing metrics, setting up performance levels and comparing them against a specific process (Buckmaster and Mouritsen 2017; Camp 1989; Forker and Mendez 2001; Strang 2016). Adebanjo, Abbas, and Mann (2010) and Zhang et al. (2012) explained that benchmarking has been one of the most popular and widely adopted management techniques since the 1990s, after its diffusion from Japan as a means of managing quality within the Total Quality Management (TQM) logic (Dale et al. 2016). It started gaining popularity in the West when organizations such as Xerox, GE and Motorola demonstrated market share improvement driven by changes generated from their benchmarking initiatives (Gans, Koole, and Mandelbaum 2003; Talluri and Sarkis 2001).

Adebanjo, Abbas, and Mann (2010) argued that there are three types of benchmarking: internal, external and best practice. Marwa and Zairi (2008) explained that a benchmarking activity is undertaken by collecting data, both primary and secondary, to develop a deep understanding of a process. Therefore, it is critical that the team works within a bespoke framework to collect and analyse the relevant information. Voss, Åhlström, and Blackmon (1997) demonstrated, both theoretically and empirically, the relationships between undertaking robust and coherent benchmarking activities and the improvement in performances.

Benchmarking has been widely implemented (Sweeney 1994; Voss, Chiesa, and Coughlan 1994), for instance, in the automotive industry (Delbridge, Lowe, and Oliver 1995), the food industry (Adebanjo and Mann 2000), finance and banking (Cook et al. 2004; Vermeulen 2003), logistics (Bhattacharya and David 2018), healthcare (Böhme et al. 2013; Buckmaster and Mouritsen 2017; Fowler and Campbell 2001; Reponen et al. 2021; Willmington et al. 2022) and construction (Kamali, Hewage, and Sadiq 2022; Love, Smith, and Li 1999; Sommerville and Robertson 2000). Several models have been developed with varied process steps (Dale et al. 2016; Delbridge, Lowe, and Oliver 1995; Zairi and Youssef 1995). However, the common stages are (i) identification of the partner organization, (ii) data collection and analysis, (iii) study of best practices and (iv) recommendations (Marwa and Zairi 2008). Zairi and Youssef (1996) explained that the benchmarking process is divided into four stages: (i) plan, (ii) analyse, (iii) communicate or integrate and (iv) review or act and reflect. A great amount of effort and thinking must be invested in the planning phase, prior to the benchmarking visit (Marwa and Zairi 2008).

Despite its popularity and wide applications, benchmarking practices remain ill-defined, as Adebanjo, Abbas, and Mann (2010), Kamali, Hewage, and Sadiq (2022) and Talluri and Sarkis (2001) explained, and researchers have called for further in-depth implementations (Buckmaster and Mouritsen 2017; Feibert, Andersen, and Jacobsen 2019; Nandi and Banwet 2000; Maravelakis et al. 2006; Reponen et al. 2021; Sharma, Caldas, and Mulva 2021).

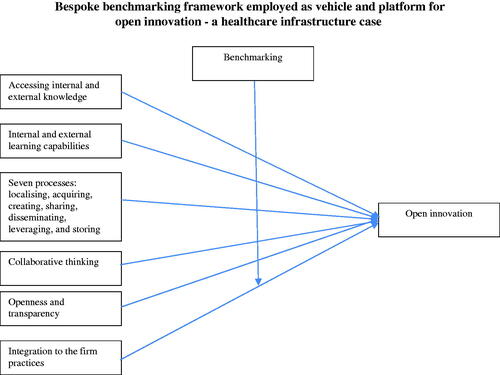

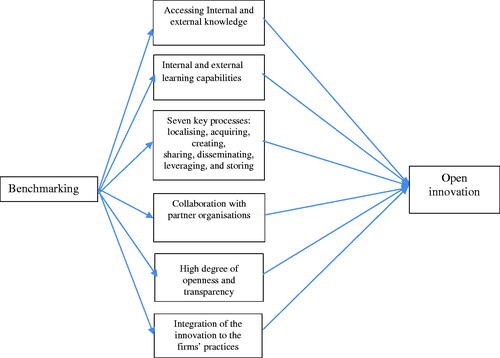

2.4. Gap analysis and conceptual model: the links between knowledge management, open innovation and benchmarking

To address rapidly changing environments, a firm’s ability to integrate, build and reconfigure internal and external knowledge is needed to innovate (Rothaermel and Hess 2007; Teece, Pisano, and Shuen 1997). Antecedents to innovation require locally embedded knowledge and skills amongst intellectual human capital at the individual and the firm level. This reinforces the importance of the internal and external learning necessary for a firm to innovate (Rothaermel and Hess 2007). Knowledge that is difficult to generate, combine, transform and transfer will lead to a sustainable differentiation (Nonaka 1994; Wiklund and Shepherd 2003). Therefore, to investigate how innovation is generated, we borrowed theoretical concepts from the knowledge management body of understanding. Managing knowledge and learning involves seven key processes: localizing, acquiring, creating, sharing, disseminating, leveraging and storing (Probst, Raub, and Romhardt 2002). It is suggested that the internal and external processes and mechanisms should be linked and aligned to create new knowledge effectively, and that firms’ executives and managers should know how to locate, acquire and use the information. Moreover, all employees play a significant role in the knowledge management process (e.g. creating, sharing, disseminating, leveraging), which requires an open, collaborative and trusting organizational culture and structure (Laursen and Salter 2006). Knowledge management ideas encourage firms to search, acquire and apply external knowledge to enhance innovation. Laursen and Salter (2014) suggested that firms should search and capture external knowledge to generate open innovation through collaborating with different external actors in an iterative process. Furthermore, Dahlander et al. (2016) evidenced that in-depth external information searches significantly influence innovation.
This process relies extensively on mutual coordination and commitment (Dyer and Singh 1998) and on a high degree of openness and transparency to enhance the knowledge exchange. However, the external knowledge gained should be transferred and disseminated within the organization’s boundaries (Carlile 2004) and integrated into the firm’s practices (Ter Wal, Criscuolo, and Salter 2017). To this end, Massa and Testa (2004) argued that recursive benchmarking projects can promote innovative behaviours, mainly by encouraging the acquisition of external knowledge, comparing levels of performance and analysing knowledge gaps. McAlearney (2006) also believed that benchmarking is particularly salient to address open innovation challenges in healthcare, although difficulties and complexities exist. In this study, we will go a step further by demonstrating how benchmarking can be the platform to enhance and trigger open innovation through its ability to capture, manage and transfer critical knowledge in the context of the healthcare built environment. The conceptual model summarizes the six main components and characteristics required to achieve open innovation, as per our interpretation of the literature: (i) accessing internal and external knowledge; (ii) internal and external learning capabilities; (iii) seven key processes; (iv) collaboration with partner organizations; (v) a high degree of openness and transparency and (vi) integration into the firm’s practices.

2.5. Healthcare built environment and the lack of overall innovation

The healthcare built environment is composed of different interconnected firms: the healthcare organization, an architecture company, and some engineering and construction firms (Hamzeh, Ballard, and Tommelein 2009). These networks of organizations have different roles and responsibilities within the whole life cycle of the infrastructure development process, from planning and design through to production, construction, use and management (Bamford et al. 2015; Myers 2008; Winch 2006). One of the key characteristics, and probable consequences, of this complex network of public and private organizations in the UK is its lack of formal partnership practices, which makes the healthcare built environment industry fragmented (Vidalakis, Tookey, and Sommerville 2013), naturally leading to the fundamental problem of weak productivity and innovation levels, as pointed out in many academic, practitioner and governmental publications (Bamford et al. 2015; Cain 2004; Egan 1998; Myers 2008; Pellicer et al. 2014; Santorella 2011). The lack of overall performance management and innovation is a significant issue in this disjointed network, which remains organized in silos, inhibiting the holistic understanding of the value chain (Lawlor-Wright and Kagioglou 2010; Shohet and Lavy 2010; Williams 2000). Innovation in the public healthcare built environment has been missing due to limited accumulated knowledge and a lack of guidance on how to evaluate and manage innovative ideas (Hartmann 2006). Gambatese and Hallowell (2011) identified factors affecting innovation in the built environment.
They highlighted that at the project level, innovation is influenced by the owner or client vision, the presence of innovation champions, the ability to capture the lessons learned, the effectiveness of the knowledge management system, as well as the upper management support and the extent of the R&D involvement.

In 1999, Hinks and McNay demonstrated the need to establish a key performance indicators system for the built environment in order to promote innovation. Kagioglou, Cooper, and Aouad (2001) pointed out that performance measurement systems should monitor two aspects: (i) the product as the building and (ii) the process of creating the building. While the literature demonstrates relevant evidence of measuring the performance of the infrastructure, there is little evidence showing process innovation. For instance, in their study, Hinks and McNay (1999) identified a set of appropriate indicators, which could be used by healthcare infrastructure development organizations to realistically evaluate their performance. They developed a comprehensive list of 23 characteristics, as shown in Table 1.

Table 1. List of 23 KPIs (adapted from Hinks and McNay 1999).

While this list may be adapted to modern healthcare infrastructure development, it would need further development to promote open innovation, for example by capturing processes and decision-making information as well as service and sustainability features (García-Sanz-Calcedo, de Sousa Neves, and Fernandes 2021). It would also require a quantification mechanism to facilitate comparison across infrastructures or organizations (Willmington et al. 2022). Public healthcare infrastructure developments differ from more mainstream projects due to their enhanced level of complexity, the public-private funding mechanisms, their overall governance and the interest and participation of the public and local communities in the consultation. Moreover, the salience of external search and benchmarking activities is recognized in the built environment literature, yet there are limited studies demonstrating comprehensive and holistic applications and results (Gawin and Marcinkowski 2017; Gilleard and Wong 2004; Loosemore and Hsin 2001; Roka-Madarasz, Malyusz, and Tuczai 2016; Yun et al. 2016). Therefore, in this study a bespoke framework has been developed and implemented to address the postulated research question, while acknowledging the work from the Department of Health in the UK and its AEDET toolkit, to determine and manage design requirements for a new healthcare infrastructure (Lawlor-Wright and Kagioglou 2010).

This study was designed in this way in consideration of (i) the significance of the healthcare sector, which in 2018 accounted for 9.8% of GDP in the UK and 17% in the USA (OECD 2020); (ii) the relevance of the under-researched phenomenon of open innovation within the healthcare built environment (Dehe and Bamford 2015; Dehe and Bamford 2017; Herzlinger 2006; Kagioglou and Tzortzopoulos 2010) and (iii) the limited volume of academic research exploring benchmarking as an open innovation platform (Feibert, Andersen, and Jacobsen 2019; Massa and Testa 2004; Strang 2016).

3. Methodology

To explore and address the problem set out in this study, an embedded case study was designed as part of a wider two-year action research programme (Dehe and Bamford 2017). A pragmatic paradigm is borrowed to address the established research question. Sliwa and Wilcox (2008, 100) explained that the foundations of pragmatism are associated with Charles Sanders Peirce

who developed pragmatism as a theory of meaning, based on information, arguing for the existence of an intrinsic connection between meaning, information, and action, and proposing that the meaning of ideas is best discovered by subjecting them to an experimental test and then observing the consequences (Murphy and Murphy 1990).

Moreover, William James and C. I. Lewis, two other main contributors to this paradigm, argued that pragmatism is a philosophy oriented towards practice, action and relative principles, through experiments, experiences and perceptions grounded within empirical research, as opposed to abstractions and absolutes (James 2013; Sliwa and Wilcox 2008).

Overall, this is experimental qualitative research that explores and investigates the role of benchmarking, as the focal healthcare organization aimed to generate innovation and improvement within its infrastructure development processes. First, a bespoke benchmarking framework was developed, and then the assessment and scoring of four infrastructures were undertaken, similarly to Bibby and Dehe (2018), who developed a framework to assess and compare the level of implementation of Industry 4.0 technologies of 13 companies in the defence sector. Finally, a reflective qualitative data analysis was performed to investigate the impact of benchmarking on innovation. This methodological approach, relying on key triangulation mechanisms, has several similarities with Böhme et al. (2013), who applied an audit benchmarking framework (i.e. QSAM) to eight Australian healthcare value streams. However, this article differs from Böhme et al. (2013) by designing a bespoke framework to assess its impact on open innovation, as opposed to examining the transferability of a well-established model from the private sector to the public sector to offer valuable insights.

3.1. The benchmarking development process

The benchmarking process followed and described in this study is in line with Dale et al. (2016). To develop the bespoke framework composed of 4 dimensions (Infrastructure Design & Management, Service Provision & Deployment, Operational Systems and Decision-Making Enablers) and 39 criteria, and to undertake the benchmarking assessment, a series of 10 output-driven workshops, facilitated by a group of 10 experts, was organized, as detailed in Table 2. The team of experts was composed of the 10 primary senior managers and decision-makers directly involved in the focal firm’s new infrastructure development project: 3 from Estates and Facilities, 3 from Primary Care, 2 service providers, 1 clinician and 1 planner, as in Dehe and Bamford (2017). The same group of experts was involved in the assessment as well as in the reflective interviews to explore the impact on innovation, similarly to Böhme et al. (2013).

Table 2. List of the events and workshops.

First, an initial framework was generated and designed taking into consideration the ‘voice of the patient and customer’. This was achieved by using results from the public consultation, which is the process a healthcare organization goes through to collect large datasets by engaging and consulting members of the public and the local communities. As part of this four-month public consultation, a patient needs questionnaire for new infrastructure development was distributed (N = 3055). In addition, several focus group activities were organized to collect rich data, as in Dehe and Bamford (2015) and Dehe and Bamford (2017). From the thematic analysis performed on the public consultation qualitative data, the focal firm was able to identify the needs and requirements of patients, service users and providers regarding future healthcare infrastructures. Key identified features were: ‘building with a human scale’, ‘interior space being optimised’, ‘aesthetical and signposted building’, ‘excellent facilities provided’, ‘single point of access’, ‘good accessibility’, ‘modern building’ and ‘visibility in the decision-making’. Then, a series of meetings and focus groups were set up by the researcher to design, in an inductive manner, the structured framework, in line with the voice of the experts, the voice of the customers, the voice of the providers, as well as the academic and practitioner literature. The team agreed that four major dimensions needed to be considered: (i) the hard facility, (ii) the service provided, (iii) the management of the operations and (iv) the processes to develop the infrastructure. To reach a consensus amongst the 10 experts, five organic iterations of the model were required as part of workshop 2. The outcome of this workshop was the agreed framework.
Once the four dimensions (Infrastructure Design & Management, Service Provision & Deployment, Operational Systems and Decision-Making Enablers) and the associated 39 criteria were agreed upon and approved by the experts, the framework was externally validated. In total, four external healthcare senior managers and four Operations Management scholars were consulted to validate the framework’s criteria and descriptors. This process was equivalent to a Q-sorting exercise (Moore and Benbasat 1991; Bamford et al. 2018). The external panel was asked to assign the criteria and their associated descriptors to the four dimensions. The panel members were also asked about any modifications they thought would be relevant. This led to some minor changes in criteria and descriptor labels; for example, C3 changed from ‘staff integration’ to ‘HR systems and staff integration’, and was moved from Dimension 2: Service Provision & Deployment, to Dimension 3: Operational Systems. However, the fundamental structure of the framework was not altered and was therefore validated. This validation process provided the research team with confidence and reassurance regarding reliability. Subsequently, the team of experts went on to pilot the framework by applying it to two small and relatively modern internal healthcare infrastructure projects. This established confidence in the protocol and enabled the framework to be tested. Consequently, the team performed the internal assessment to establish the internal benchmark and calibrate the scorings.
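The panel categorization step can be illustrated with a short sketch. It assumes that each panel member returns a mapping from criterion to dimension and that agreement is summarized as the share of placements matching the intended dimension. The function name `placement_agreement`, the data layout, the 0.5 review threshold and all values below are hypothetical and are not taken from the study.

```python
from collections import defaultdict


def placement_agreement(intended, placements):
    """Return (overall, per_criterion) shares of placements that match
    the dimension the framework designers intended."""
    hits = defaultdict(int)
    counts = defaultdict(int)
    for sort in placements:  # one dict per panel member
        for criterion, dimension in sort.items():
            counts[criterion] += 1
            if intended[criterion] == dimension:
                hits[criterion] += 1
    per_criterion = {c: hits[c] / counts[c] for c in counts}
    overall = sum(hits.values()) / sum(counts.values())
    return overall, per_criterion


# Invented example: two criteria, three panel members.
intended = {"C1": "D1", "C3": "D2"}
panel = [
    {"C1": "D1", "C3": "D3"},
    {"C1": "D1", "C3": "D3"},
    {"C1": "D1", "C3": "D2"},
]

overall, per_criterion = placement_agreement(intended, panel)

# Criteria placed as intended by fewer than half of the panel are
# flagged for discussion (the threshold is an assumption).
flagged = [c for c, share in per_criterion.items() if share < 0.5]
print(overall, per_criterion, flagged)
```

In this invented case the second criterion would be flagged, which mirrors the kind of outcome reported above, where a criterion was relabelled and moved to another dimension after the panel's feedback.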

Table 3. Benchmarking framework scores.

3.2. Benchmarking assessment and the scoring element

Three external healthcare infrastructures were identified as relevant benchmark partners, according to their characteristics and the objectives of the focal organization. Two infrastructures in the North West of England (referred to as P and W) were selected as the external competitive benchmarking partners, and one in California, USA (referred to as SH), was selected as an external ‘best in class’ partner. The UK-based team of experts made contact with the infrastructure managers of the external benchmark partners (P, W and SH) and organized the site visits. At least a full day of on-site access was granted to the team during the assessment. The UK benchmarking evaluations were reasonably straightforward to organize. However, as one can imagine, the US-based evaluation was more challenging logistically; therefore, only one of the researchers was able to travel and undertake the site visit on behalf of the team. The experts had the opportunity to hold discussions with different employees, clinicians and managers throughout the day in order to make the assessment.

Finally, when all the datasets had been compiled and combined, the experts met to triangulate their interpretations and develop the scoring. This led to (i) establishing the gap analysis, (ii) determining the goals for the future and (iii) developing an action plan, all of which were then clearly communicated to the benchmarking project’s stakeholders.

To allow for the quantification process, the framework was specially designed to accommodate a weighting and a scoring mechanism. The weighting refers to the criterion’s importance, from 1 to 5 (1 = not important to 5 = extremely important). The scoring refers to the performance on a particular criterion, from 1 to 9 (1 = very poor to 9 = excellent). This mechanism allows the projects to be compared, the gaps to be identified and improvement targets to be set.
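The weighting and scoring scales described above can be illustrated with a minimal Python sketch. The article does not state how weights and scores are combined, so the rule used here (criterion points = weight × score, dimension points = mean of the criterion points) is an assumption made for illustration only, and the function names and example ratings are hypothetical.

```python
from statistics import mean

WEIGHTS = range(1, 6)   # importance: 1 = not important ... 5 = extremely important
SCORES = range(1, 10)   # performance: 1 = very poor ... 9 = excellent


def criterion_points(weight: int, score: int) -> int:
    """Points for one criterion; weight x score is an ASSUMED combination rule."""
    if weight not in WEIGHTS:
        raise ValueError(f"weight must be between 1 and 5, got {weight}")
    if score not in SCORES:
        raise ValueError(f"score must be between 1 and 9, got {score}")
    return weight * score


def dimension_points(ratings: list[tuple[int, int]]) -> float:
    """Mean points over a dimension's (weight, score) pairs (ASSUMED aggregation)."""
    return mean(criterion_points(w, s) for w, s in ratings)


# Hypothetical ratings for three criteria of a single dimension.
example_ratings = [(5, 2), (4, 3), (2, 4)]
print(dimension_points(example_ratings))
```

Under this assumed rule, a criterion can contribute between 1 and 45 points, which keeps every infrastructure on the same measurement scale regardless of who performs the assessment.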

The team, made up of 10 experts, undertook the assessment, following the framework in a structured and objective manner. For each criterion, the assessment consisted of capturing and recording the relevant pieces of information, assigning a score and providing further qualitative information regarding the specific indicator. Based on the secondary and primary data collected, the team was able to complete a concrete assessment of the visited infrastructures (Böhme et al. Citation2013).

3.3. The reflective qualitative data analysis

As part of the last workshop (workshop 10), the 10 experts were interviewed for about 30 minutes, to reflect on the impact of the benchmarking process and results and its effect on innovation. The experts were asked to share their reflective views. The following overarching questions were asked: ‘Can you speak about your experience of undertaking the benchmarking?’; ‘What did you learn from the process?’; ‘What did you learn from the results?’; ‘How has benchmarking changed the way you approached new infrastructure development projects?’; ‘How has benchmarking led to innovation?’; ‘What have been the main benefits?’; ‘What have been the main issues?’; ‘What should we do differently next time?’.

The analysis of the semi-structured interviews was originally organized around the six themes that emerged from the literature review: (i) accessing internal and external knowledge; (ii) internal and external learning capabilities; (iii) seven key processes; (iv) collaboration with partner organizations; (v) high degree of openness and transparency and (vi) integration into the firm’s practices. To initially structure the data analysis, the conceptual framework developed in the literature review was used around these six themes. However, to add rigour to the analysis, once the data were collected, two iterations of coding were undertaken, as can be seen in . The first iteration led to 21 nodes, which were then refined into 4 final codes, as per the Burnard et al. (Citation2008) protocol. The four codes are: (i) deep and valuable knowledge and learning are gained from the benchmarking process; (ii) deep and valuable knowledge and learning are gained from the benchmarking results; (iii) benchmarking is source of open innovation and (iv) benchmarking acts as a knowledge management platform for open innovation, as detailed in .

Table 4. Qualitative data analysis and coding.

4. Results

4.1. The benchmarking framework

The framework developed was composed of four dimensions: (i) ‘Infrastructure Design & Management’, which represents the hard facility; (ii) ‘Service Provision & Deployment’, which provides an indication of the strategy selected to deliver and design services; (iii) ‘Operational Systems’, which allows the assessment of the efficiency and effectiveness within the infrastructure and (iv) ‘Decision-Making Enablers’, which records and assesses the criteria referring to the decision-making processes and practices within the planning and design activities. Each dimension is further detailed and subdivided into criteria. In total, 39 criteria were defined, demonstrating the multi-faceted nature of this framework, as shown in .

4.1.1. Infrastructure design & management

This first dimension looked at the key estates’ attributes, as detailed in . These have been defined further by descriptors: statements to demonstrate precisely what was assessed. The descriptors were based on the different departments’ strategies and targets. The descriptors allow the focus to be on the major points and have proved very beneficial, overcoming several issues, in terms of communication and lack of synchronization. presents the 11 criteria that have been identified and their matching agreed descriptors.

4.1.2. Service provision & deployment

This second dimension looks at what services are provided within the infrastructure. The organization’s strategy is to develop infrastructures in which both health and social services are provided, in such a way that synergies are developed through service integration and co-location. Therefore, the benchmarking framework needed to reflect this aspect, as demonstrated by the eight criteria composing this theme, summarized in .

4.1.3. Operational systems

This dimension establishes the way in which the infrastructure is run at an operational and strategic level. Eleven criteria were identified to ensure the successful delivery of the services, as shown in . These sets of data are relevant in order to link the previous two themes and gain a better understanding of implementation issues.

4.1.4. Decision-making enablers

Finally, it was important to capture the way in which the healthcare organizations had managed the planning and design of their new infrastructures and the decision-making processes, an aspect that was lacking in other frameworks or models. While the previous dimensions assess output criteria, this dimension focuses on the processes. It comprises nine criteria, as per .
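The structure of the framework described in Sections 4.1.1 to 4.1.4 can be summarized as a small data structure. The sketch below, in Python, uses the criterion counts reported above (11, 8, 11 and 9); the letter prefixes A to D are inferred from the criterion identifiers used elsewhere in the article (e.g. A1, B2, C3, D9), and the helper names are hypothetical.

```python
# Dimension prefix -> (dimension name, number of criteria reported in the text).
# The A-D prefixes are inferred from identifiers such as A1, B2, C3 and D9.
FRAMEWORK = {
    "A": ("Infrastructure Design & Management", 11),
    "B": ("Service Provision & Deployment", 8),
    "C": ("Operational Systems", 11),
    "D": ("Decision-Making Enablers", 9),
}


def criterion_ids() -> list[str]:
    """Enumerate every criterion identifier implied by the counts above."""
    return [
        f"{prefix}{number}"
        for prefix, (_, count) in FRAMEWORK.items()
        for number in range(1, count + 1)
    ]


def is_valid_criterion(criterion_id: str) -> bool:
    """Check that an identifier such as 'C9' exists in the framework."""
    return criterion_id in criterion_ids()


total = sum(count for _, count in FRAMEWORK.values())
print(total)                      # total number of criteria across the four dimensions
print(is_valid_criterion("D9"))   # D has nine criteria, so D9 exists
print(is_valid_criterion("B9"))   # B has only eight criteria
```

The four counts sum to the 39 criteria stated for the framework as a whole, and every identifier cited in the discussion falls within the range of its dimension.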

4.2. The benchmarking partners

In this study, the results show the assessment of three external benchmarking partners, two UK-based cases (P and W) and one US-based (SH).

4.2.1. Benchmarking case 1: the P infrastructure

This healthcare infrastructure opened in November 2009. Its building was the largest of the region’s regeneration phase and was a joint venture by a healthcare organization and a city council. The infrastructure offers a broad range of health services, together with community and council services. The centre, developed by a LIFT Company, cost £17 million, and, within its 6000 m², accommodates two GP practices, a specialist children’s facility and a library. This infrastructure won an award in the North West Region in 2010. ‘The Awards recognise the best in the built environment, from architecture to planning, townscape to infrastructure, and reward projects that make a difference to local people and their communities’ as reported by some internal documentation.

4.2.2. Benchmarking case 2: the W infrastructure

As part of the UK government’s 10-year plan to modernize and reform the NHS in this area, this infrastructure was developed and then opened in September 2008. This infrastructure was the first of three similar buildings planned in this area. This £12 million project was aimed at bringing key facilities, such as community health care services, council and library services, into one building of 3000 m², making them as accessible as possible to the local population and offering new services, which previously were hospital-based, such as X-rays. This healthcare infrastructure offers many services, including physiotherapy and occupational therapy, GP and dental services, community health services, including podiatry, community paediatrics and district nursing, among others, plus an advice and information area next to the library.

4.2.3. Benchmarking case 3: the SH infrastructure

A US-based organization was identified that demonstrated advanced techniques in planning and designing healthcare infrastructures. This not-for-profit healthcare organization owns 27 hospitals and healthcare centres in Northern California. In 2004, the organization embarked on a long and challenging journey, the goal of which was to apply lean thinking principles to the design and construction of its infrastructures, so as to develop state-of-the-art hospitals and other healthcare centres (Lichtig Citation2010). The drivers were to cope with changing demand in a dynamic healthcare environment and to improve performance and innovation by building flexible facilities.

4.3. Benchmarking outputs

The similarity of the two UK healthcare infrastructures, P and W, was clearly reflected in the framework results, with total scores of 10.52 and 11.10 points, respectively. The same pattern of results was established for both projects, with ‘service provision & deployment’ (13.00 and 13.13) as the strongest dimension, then ‘operational systems’ (11.23 and 12.59), then ‘infrastructure design & management’ (9.36 and 10.18), and, finally, ‘decision-making enablers’ (8.50 and 8.50). As the bespoke performance framework is designed to holistically assess the effectiveness and efficiency of the infrastructure, these results were relevant so that the focal organization could compare itself with similar local organizations, set targets based on the gap analysis, engage in a continuous improvement journey and support its innovation strategy. Based on the collected data, the assessment confirmed that SH was among the exemplars of best practice encountered in healthcare so far. It achieved the highest score compiled, performing better than any other infrastructure assessed internally or externally, with an aggregate score of 14.92 points. The points system was established to assist the experts in comparing and benchmarking the different infrastructure performances, based on the same measurement system, and to help them to focus on innovation and to drive improvements. The internal benchmarking assessment scored 6.28, which demonstrates the substantial room for improvement. As mentioned previously provides the detail of the results.
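The dimension and total scores reported for P and W are numerically consistent with each total being the unweighted mean of the four dimension scores. The article does not state this aggregation rule explicitly, so the short Python check below should be read as an observation on the published figures rather than as the authors’ documented method; the variable and function names are hypothetical.

```python
# Dimension scores for P and W as reported in Section 4.3.
dimension_scores = {
    "P": {
        "Service Provision & Deployment": 13.00,
        "Operational Systems": 11.23,
        "Infrastructure Design & Management": 9.36,
        "Decision-Making Enablers": 8.50,
    },
    "W": {
        "Service Provision & Deployment": 13.13,
        "Operational Systems": 12.59,
        "Infrastructure Design & Management": 10.18,
        "Decision-Making Enablers": 8.50,
    },
}
reported_totals = {"P": 10.52, "W": 11.10}


def mean_of_dimensions(scores: dict[str, float]) -> float:
    """Unweighted mean of the dimension scores (ASSUMED aggregation rule)."""
    return sum(scores.values()) / len(scores)


for partner, scores in dimension_scores.items():
    computed = mean_of_dimensions(scores)
    # Allow for rounding of the published figures to two decimal places.
    consistent = abs(computed - reported_totals[partner]) < 0.005
    print(partner, round(computed, 2), reported_totals[partner], consistent)
```

The same check cannot be run for SH or for the internal assessment from the text alone, because their dimension-level scores are not quoted in this section.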

4.4. The reflective interviews data analysis

presents the qualitative data analysis post-implementation. The interview data and quotes were categorized following the six themes that emerged from the literature review (c.f. ). Then key ideas regarding the impact of benchmarking were compiled around 21 initial nodes following Burnard et al.’s (Citation2008) methods. For instance, benchmarking ‘enables to solve complex problems’, ‘captures different perspectives’, ‘aligns strategies’, ‘strengthens relationships’, ‘enables cross-functional learning’, ‘enables to innovate’, ‘deepens collaborations’, ‘leads to new ideas and behaviours’. Finally, the authors extracted four final codes: ‘deep and valuable knowledge and learning are gained from the benchmarking process’, ‘deep and valuable knowledge and learning are gained from the benchmarking results’, ‘benchmarking is source of open innovation’ and ‘benchmarking acts as a knowledge management platform for open innovation’.

5. Discussion

5.1. Deep and valuable knowledge and learning are gained from the benchmarking process

From the process perspective, developing the framework was extremely useful as a starting point for challenging the current practices and status quo, as well as helping the experts explore different models for planning, designing and managing healthcare infrastructure. The development of the benchmarking framework allowed the different functions of the organization (i.e. Primary Care, Estates, Finance, Service Design and Strategy) to be further aligned, through developing consensus and agreement on the key criteria, their associated descriptors, as well as on their importance. One of the Primary Care managers in the team particularly appreciated the process: ‘going through the iterations enabled us to have concrete discussions, to solve problems early and appreciate the different functions’ perspectives and objectives, especially between us and estates […] It helped also aligning our policies, strategies and communication’. The process of developing the artefact was innovative in itself and led to ‘new cross-functional practices’ and ‘different ways of working with external partners’ (i.e. with contractors, architects, other NHS organizations and the local university). This shows that the benchmarking process influenced and shaped (i) internal learning, such as teamworking, policies, cross-functionality and culture, as well as (ii) external learning, such as the inter-firm collaboration and industry cluster and network. The benchmarking process allowed the need analysis (D2) to be performed in a more consistent and robust manner, enabling the organization to reflect on the most critical key performance indicators that should be set and measured when developing new infrastructure. It also enabled the focal organization to clearly (i) identify that design (A1) should be prioritized over size (A2) in any key decision-making process (e.g. incremental innovation), which was not the case traditionally and (ii) focus on services’ co-location and service integration (B2), which is a key radical innovation.

We observed that by focussing on the benchmarking framework development, the experts were able to ask specific questions and, as a result, were able to communicate more effectively before making critical decisions, for instance when deciding on the site location (D4) or identifying key design features such as the availability and opening hours (C1), the consumer pathways and flows (C2) and the engineering systems (C9). The benchmarking allowed these complex decision-making processes to be streamlined and their transparency to be enhanced (Dehe and Bamford Citation2017). This led to incremental innovations, for example, by using multiple criteria decision analysis (MCDA) to optimize site locations (Dehe and Bamford Citation2015) or when developing a clear service concept and culture. It also enabled radical innovations, for instance, when integrating health and social services and applying lean construction methods (Tezel, Koskela, and Tzortzopoulos Citation2021). The focal organization, consequently, changed and introduced new features, new partnerships and new configurations of processes (Hidalgo and Albors Citation2008). Our findings support Carlile’s (Citation2004) idea that innovation is generated by working and sharing knowledge across firms’ boundaries and domains.

5.2. Deep and valuable knowledge and learning are gained from the benchmarking results

From the result perspective, the assessments allowed the experts to think in an innovative manner and push the boundaries of their practices. For instance, in terms of the infrastructures’ design and layout (A1 and A9), the focal organization now focuses on modular design based on patient flows and on prioritizing multi-functional space. Also, the organization now recognizes the criticality of infrastructure management. As a result, it was decided that all facilities had to have an infrastructure manager acting as the cornerstone between the clinicians, the admin teams and the technical services. These managers are responsible for implementing a leadership system (C4) and for managing problem solving and conflict resolution (C8). Specifically, technical tools such as PDCA (Plan-Do-Check-Act) and DMAIC (Define-Measure-Analyse-Improve-Control) are heavily promoted to approach problems consistently, develop a culture of continuous improvement and capture knowledge. This directly increased the innovation level and allowed good practices to be recorded and replicated. Another notable innovation was the acceptance and the promotion of Multiple Criteria Decision Analysis (MCDA) across complex decision-making processes after having learnt how Choosing by Advantage (CBA) was used to optimize the decision-making at SH (Dehe and Bamford Citation2015). Furthermore, the benchmarking outcomes have helped to introduce a more consistent approach to infrastructure management, making it possible to compare and contrast the performances of the main infrastructures against each other and to generate focussed continuous improvement activities. For instance, the focal organization decided to redesign its public consultation processes (D6) to collect the voice of the customer much more systematically and to involve members of the public further in key decision-making by implementing quality function deployment (QFD) (Dehe and Bamford Citation2017).

Additionally, the benchmarking results showed quantitatively the potential room for improvement in terms of innovation (Bhattacharya and David Citation2018). Across the four dimensions, the internal infrastructure scored 6.28, compared to the external benchmarked partners, which were assessed as 10.52, 11.10 and 14.92, respectively. Having said that, the knowledge captured and transferred within the organization enables it to create breakthrough innovation improvements in terms of processes and products, at the micro-level first and at the macro-level in the future. The assessment has evidenced that the focal firm had lagged behind other healthcare organizations in terms of infrastructure development but was now willing to change its practices and culture regarding (i) the eco-friendliness aspect of the building (A8) by using renewable materials and solar panels; (ii) the level of health and social care service integration (B2), by co-locating and incorporating social and wellbeing providers, therapists and other children’s services as well as a library; (iii) increasing the user involvement (C7) by creating engagement and consultation panels and (iv) optimizing the construction process (D9) by using modern technologies, techniques and practices such as pre-fabrication, standardization and lean construction. This shows, from a knowledge management perspective, how deep knowledge was gained from the benchmarking results (Laursen and Salter Citation2014; Maravelakis et al. Citation2006).
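The size of the gap between the internal benchmark and each external partner follows directly from the four aggregate scores quoted above. The Python sketch below computes these gaps; the dictionary layout and the function name are hypothetical, and only the four scores come from the article.

```python
# Aggregate scores reported in the article.
aggregate_scores = {"Internal": 6.28, "P": 10.52, "W": 11.10, "SH": 14.92}


def gaps_to_baseline(scores: dict[str, float], baseline: str = "Internal") -> dict[str, float]:
    """Points separating each benchmark partner from the baseline assessment."""
    base = scores[baseline]
    return {
        name: round(value - base, 2)
        for name, value in scores.items()
        if name != baseline
    }


for partner, gap in gaps_to_baseline(aggregate_scores).items():
    print(f"{partner}: {gap:.2f} points above the internal benchmark")
```

The two UK partners sit between four and five points above the internal benchmark, whereas the gap to SH is more than eight points, which is consistent with its selection as the ‘best in class’ case.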

5.3. Benchmarking is source of open innovation

One source of open innovation relates to the transfer of knowledge among groups or networks (Alexander and Childe Citation2013). The benchmarking process was an eye-opener for the team of experts in terms of room for improvement and innovation potential. One of the Estate Managers reflected early on: ‘it is so interesting to understand how other NHS Trusts are managing their estates and new infrastructure, we seem to have such different practices’. Both the P and W infrastructures were extremely relevant benchmarks to the focal organization, due to their similarities in terms of scope, size, budget, strategy and vision. The team of experts acknowledged that the information gathered and the knowledge gained from the benchmarking exercise undoubtedly influence the current and future development projects. A Senior Manager admitted that ‘we have to think outside the box, if we want to deliver fit for purpose, ‘one-stop shop’ healthcare facilities to the local communities […] seeing and analysing what others are doing is helping us to visualise what else can be done’. The SH case was selected so that data could be collected from what was considered to be best in class. The experts agreed that this US-based infrastructure was an inspiration in terms of areas for improvement and innovation, and one from which the organization will aspire to learn in the future. The team planner explained that

even if SH’s scale and scope and resources are not entirely comparable, we need to aspire to develop much stronger collaboration with partners and universities as they do. Also, we should apply lean principles from the design phase […] This is something we are keen to adopt in the future to transform the way we develop new projects.

The benchmarking process led new ideas to emerge and be implemented within the focal organization. It promoted innovative behaviours and culture, which directly influenced the methods and practices of planning, designing and building healthcare infrastructures. For instance, as a result of the benchmarking, the focal organization’s experts were convinced by the health and social care integration model (Glasby and Dickinson Citation2009). ‘This model is an extremely innovative solution for the NHS where synergies, economies of scale and quality improvement can be gained’, argued one of the experts. The focal firm is now considering this integration as part of all of its new developments, in which the design of these integrated operations and pathways can be synchronized as early as the concept and planning processes. Specifically, this means defining the boundaries between health and social care services, designing integrated information systems, developing joint budgets and co-managing the resources. This demonstrates an enhanced orientation towards open innovation that emerged from the external search results (Dahlander et al. Citation2016; Hult, Hurley, and Knight Citation2004).

5.4. Benchmarking acts as a knowledge management platform for open innovation

Benchmarking is generally described as ‘an external focus on internal activities, functions and operations in order to achieve internal continuous improvement’ (Leibfried and McNair Citation1992; Moriarty and Smallman Citation2009; Strang Citation2016); however, our findings demonstrated that it impacts open innovation directly (Bogers et al. Citation2019). In this study, the benchmarking framework that was designed and implemented created a platform allowing the focal organization to learn from best practices and generate a mechanism for collaboration and for managing knowledge flows between and within organizations (Akter et al. Citation2022). Our findings showed that benchmarking acted as a powerful artefact for managing the internal and external knowledge and learning, the foundation for open innovation. First, it enabled the organization to consciously identify and seek out the information needed, then to compile and analyse it consistently before transferring and communicating it. Of course, gaining access to relevant information depends on the partners’ willingness to share information and knowledge transparently (Dyer and Singh Citation1998). This was managed by building the benchmarking project on the principles of reciprocity and trust: the focal firm would share its findings and analysis with the partners (P, W and SH) and would be happy to showcase its newly developed innovative practices. Therefore, cooperative (as opposed to opportunistic) behaviours, and willingness to share knowledge with other organizations, as demonstrated in this study, are key prerequisites (Hartmann Citation2006). The benchmarking team required space and time to learn and visit other organizations, which significantly contributed to the team’s creativity and open innovation process (Adebanjo, Abbas, and Mann Citation2010).

The results suggest that the focal firm has adopted an open innovation mindset and culture via the benchmarking processes. The framework acted as the platform to manage new infrastructure development knowledge, enhancing the organization’s learning mechanism (Oliver Citation2009) and augmenting the organizational learning capabilities. This is in line with Skerlavaj, Song, and Lee’s (Citation2010) explanation that the organizational learning culture is critical to improving innovation capacities. As a consequence, we are proposing the following conceptual model, as per . The model shows that benchmarking is the trigger and main vehicle to drive open innovation (process, product, incremental and radical) by being the catalyst and directly influencing the determinants of open innovation.

6. Conclusion

In this article, we have demonstrated how benchmarking has enabled the focal firm, a healthcare organization in the UK, to trigger and drive open innovation in its complex infrastructure development. We have (i) reported on the design and implementation of a bespoke framework composed of four dimensions and 39 criteria; (ii) presented the analysis that has emerged from four assessments: the internal benchmark (internal scoring = 6.28), two external ‘competitive’ benchmarks (P = 10.52 and W = 11.10) and one ‘best in class’ benchmark (SH = 14.92) and (iii) analysed the experts’ reflections on the implementation.

6.1. How can a bespoke benchmarking framework be developed as a platform to enhance the performance and open innovation of new healthcare infrastructure projects?

The interactive process associated with the benchmarking allowed cycles of knowledge and cycles of learning that were not established previously, through the iterative process of developing the framework, collecting different external information, agreeing on the assessment and analysing the gaps. The benchmarking created a productive platform for the exchange of information, used by the different functions and stakeholder groups to communicate and enhance their alignment. This platform and system helped the organization to develop its abilities to identify, assimilate and exploit knowledge effectively and efficiently (Akter et al. Citation2022). Therefore, we believe it generates significant open innovation improvement and enhances the focal firm’s capability to innovate and exploit its infrastructure development know-how (Cohen and Levinthal Citation1989; Mitchell and Zmud Citation1999; Vartanova and Kolomytseva Citation2019). We conclude that benchmarking was powerful in directly supporting the focal organization in managing its open innovation, learning and knowledge capabilities. This is consistent with the findings of Perez-Araos et al. (Citation2007), who argued that a formal knowledge management approach to sharing ideas, experiences, improvement and best practices helps organizations to increase their overall innovation.

6.2. Contribution to practice and to knowledge

In this study, there is an incremental and revelatory contribution (Nicholson et al. Citation2018) around three key outputs. First, this benchmarking study has enhanced the focal organization’s learning capability, enabling the team of experts to overcome specific traditional barriers and challenge the status quo (Böhme et al. Citation2013; Böhme et al. Citation2014; Goh and Richards Citation1997) as far as the way healthcare infrastructures are planned, designed, built and managed is concerned. Open innovation requires learning capabilities, and the established artefact was more than an enabler; it was the trigger and the main vehicle as depicted in . Therefore, from a theoretical perspective, we argue and demonstrate that benchmarking is a key knowledge management platform and vehicle to drive open innovation. Second, a plethora of firms have successfully implemented tools and techniques to enhance innovation; however, few have been able to create an open innovation mindset in the healthcare built environment. This bespoke benchmarking framework () allowed the focal organization to identify and pursue partnerships and best practices to enhance its open innovation mechanisms, while integrating the strategy of the business and the voice of the customer, which forms the practical contribution aspect of this study. Finally, by empirically developing and testing this benchmarking framework (), and by analysing the outcomes of the three external cases, this research also contributes by responding to the recognized lack of overall performance and innovation models within the built environment, especially within the development of new healthcare infrastructure (García-Sanz-Calcedo, de Sousa Neves, and Fernandes Citation2021; Hinks and McNay Citation1999; Kagioglou and Tzortzopoulos Citation2010; Pellicer et al. Citation2014; Sharma, Caldas, and Mulva Citation2021; Shohet and Lavy Citation2010). We believe the artefact can be replicated and adapted as a blueprint for other healthcare organizations to enhance their infrastructure development and innovation performance.

6.3. Limitations and future studies

While we appreciate the contributions of this study, we also acknowledge a set of limitations and suggest directions for future research. First, the framework composed of four dimensions and 39 criteria was developed mainly from the focal firm perspective; therefore, some of the items and descriptors might not be as relevant to all healthcare firms. However, to control for this limitation we ensured that (i) the framework was first externally validated by eight independent experts (four healthcare professionals and four OM scholars) and (ii) the framework was meaningful to the external benchmark partners (P, W and SH), which operated under different models. Regarding this aspect, it is worth reflecting that there are some contextual differences between the UK and USA models: (i) the UK healthcare organizations in which the focal firm, P and W, operate are publicly and government funded under the UK NHS; (ii) the USA organization, SH, is a private not-for-profit healthcare organization and part of a larger healthcare network; this is common across the USA and representative. The assessment presented within this paper indicates that the framework is adaptable to other healthcare organizations. We would however encourage other researchers to build on this framework by further testing its fitness for purpose within other healthcare organizations. Second, this study relied on three external benchmarks to draw the findings and assess the open innovation effect. It would be useful in the future to replicate this benchmarking process and analyse additional healthcare infrastructures to identify other best and innovative practices. Developing several assessments would enable emerging patterns to be analysed and some correlation analyses to be performed. Third, this research adopted a pragmatic worldview and was qualitative and exploratory by nature, so future research could test quantitatively the conceptual model established in . A survey could be designed to explain further the relationships between the constructs and quantify the significance that benchmarking has on open innovation. Finally, this research investigated the healthcare built environment mainly within the UK. This phenomenon is context-dependent, and we believe it would add value to investigate it through in-depth case studies from developing countries, where healthcare and construction practices, productivity and innovation are different.

Acknowledgements

We are grateful to the healthcare organizations that collaborated on this project and provided access to data and their infrastructures. We would also like to acknowledge the journal’s reviewers and editors for their insightful feedback and comments.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Benjamin Dehe

Benjamin Dehe is an Associate Professor in Supply Chain Management at Auckland University of Technology. He focuses his research on the application of supply chain and operations excellence and innovation concepts in manufacturing, the built environment and sport. More recently, he has worked on aspects of Industry 4.0 and its technologies. His work, which has been published in national and international journals such as the International Journal of Operations and Production Management, Expert Systems with Applications, the Journal of Business Research and Production Planning & Control, revolves predominantly around decision-making processes.

David Bamford

David Bamford is an experienced industrialist and academic who has published over 100 articles, book chapters and reports, and has presented widely on his topics to both academic and practitioner audiences. His publications include two co-authored books: (i) Managing Quality (2016) and (ii) Essential Guide to Operations Management (2010). He has worked with multiple Higher Education institutions: University of Manchester; Lancaster University; Harvard University; University of Birmingham; Queens University; The Open University.

Suntichai Kotcharin

Suntichai Kotcharin is an Associate Professor in International Business, Logistics and Transport at Thammasat Business School, Thammasat University, Thailand. He is a member of the centre of excellence in operations management and information management at Thammasat University. His research interests are in the areas of transportation, logistics, operations and supply chain management. His research has been published in leading academic journals including the Journal of Business Research, Transportation Research Part E, International Journal of Logistics Research and Applications and Journal of Air Transport Management.

References

- Adams, R., J. Bessant, and R. Phelps. 2006. “Innovation Management Measurement: A Review.” International Journal of Management Reviews 8 (1): 21–47. doi:10.1111/j.1468-2370.2006.00119.x.

- Adebanjo, D., A. Abbas, and R. Mann. 2010. “An Investigation of the Adoption and Implementation of Benchmarking.” International Journal of Operations & Production Management 30 (11): 1140–1169. doi:10.1108/01443571011087369.

- Adebanjo, D., and R. Mann. 2000. “Identifying Problems in Forecasting Consumer Demand in the Fast Moving Consumer Goods Sector.” Benchmarking: An International Journal 7 (3): 223–230. doi:10.1108/14635770010331397.

- Akmal, A., J. Foote, N. Podgorodnichenko, R. Greatbanks, and R. Gauld. 2022. “Understanding Resistance in Lean Implementation in Healthcare Environments: An Institutional Logics Perspective.” Production Planning & Control 33 (4): 356–370. doi:10.1080/09537287.2020.1823510.

- Akter, S., M. M. Babu, M. A. Hossain, and U. Hani. 2022. “Value Co-creation on a Shared Healthcare Platform: Impact on Service Innovation, Perceived Value and Patient Welfare.” Journal of Business Research 140: 95–106. doi:10.1016/j.jbusres.2021.11.077.

- Alexander, A. T., and S. J. Childe. 2013. “Innovation: A Knowledge Transfer Perspective.” Production Planning & Control 24 (2–3): 208–225. doi:10.1080/09537287.2011.647875.

- Almus, M., and D. Czarnitzki. 2003. “The Effects of Public R&D Subsidies on Firms’ Innovation Activities: The Case of Eastern Germany.” Journal of Business & Economic Statistics 21 (2): 226–236. doi:10.1198/073500103288618918.

- Bamford, D., P. Forrester, B. Dehe, and R. G. Leese. 2015. “Partial and Iterative Lean Implementation: Two Case Studies.” International Journal of Operations & Production Management 35 (5): 702–727. doi:10.1108/IJOPM-07-2013-0329.

- Bamford, D., C. Hannibal, K. Kauppi, and B. Dehe. 2018. “Sports Operations Management: Examining the Relationship between Environmental Uncertainty and Quality Management Orientation.” European Sport Management Quarterly 18 (5): 563–582. doi:10.1080/16184742.2018.1442486.

- Bhattacharya, A., and D. A. David. 2018. “An Empirical Assessment of the Operational Performance through Internal Benchmarking: A Case of a Global Logistics Firm.” Production Planning & Control 29 (7): 614–631. doi:10.1080/09537287.2018.1457809.

- Bibby, L., and B. Dehe. 2018. “Defining and Assessing Industry 4.0 Maturity Levels–Case of the Defence Sector.” Production Planning & Control 29 (12): 1030–1043. doi:10.1080/09537287.2018.1503355.

- Bogers, M., H. Chesbrough, S. Heaton, and D. J. Teece. 2019. “Strategic Management of Open Innovation: A Dynamic Capabilities Perspective.” California Management Review 62 (1): 77–94. doi:10.1177/0008125619885150.

- Böhme, T., E. Deakins, M. Pepper, and D. Towill. 2014. “Systems Engineering Effective Supply Chain Innovations.” International Journal of Production Research 52 (21): 6518–6537. doi:10.1080/00207543.2014.952790.

- Böhme, T., S. J. Williams, P. Childerhouse, E. Deakins, and D. Towill. 2013. “Methodology Challenges Associated with Benchmarking Healthcare Supply Chains.” Production Planning & Control 24 (10–11): 1002–1014. doi:10.1080/09537287.2012.666918.

- Buckmaster, N., and J. Mouritsen. 2017. “Benchmarking and Learning in Public Healthcare: Properties and Effects.” Australian Accounting Review 27 (3): 232–247. doi:10.1111/auar.12134.

- Burnard, P., P. Gill, K. Stewart, E. Treasure, and B. Chadwick. 2008. “Analysing and Presenting Qualitative Data.” British Dental Journal 204 (8): 429–432. doi:10.1038/sj.bdj.2008.292.

- Bygballe, L. E., and M. Ingemansson. 2014. “The Logic of Innovation in Construction.” Industrial Marketing Management 43 (3): 512–524. doi:10.1016/j.indmarman.2013.12.019.

- Cain, C. T. 2004. Profitable Partnering for Lean Construction. Oxford: Blackwell Publishing.

- Camp, R. C. 1989. Benchmarking: The Search for Industry Best Practices That Lead to Superior Performance. New York: ASQC Quality Press.

- Cantarelli, C. C. 2022. “Innovation in Megaprojects and the Role of Project Complexity.” Production Planning & Control 33 (9–10): 943–956.

- Cantarelli, C. C., and A. Genovese. 2021. “Innovation Potential of Megaprojects: A Systematic Literature Review.” Production Planning & Control: 1–21. doi:10.1080/09537287.2021.2011462.

- Carlile, P. R. 2004. “Transferring, Translating, and Transforming: An Integrative Framework for Managing Knowledge across Boundaries.” Organization Science 15 (5): 555–568. doi:10.1287/orsc.1040.0094.

- Chandrasekaran, A., K. Linderman, and R. Schroeder. 2015. “The Role of Project and Organizational Context in Managing High‐tech R&D Projects.” Production and Operations Management 24 (4): 560–586. doi:10.1111/poms.12253.

- Chesbrough, H. 2003. Open Innovation: The New Imperative for Creating and Profiting from Technology. Boston: Harvard Business School Press.

- Chesbrough, H., and A. Di Minin. 2014. “Open Social Innovation.” In Open Innovation: New Frontiers and Applications, edited by H. Chesbrough, W. Vanhaverbeke, and J. West, 301–315. Oxford: Oxford University Press.

- Chesbrough, H., W. Vanhaverbeke, and J. West, eds. 2006. Open Innovation: Researching a New Paradigm. London: Oxford University Press.

- Cohen, W. M., and D. A. Levinthal. 1989. “Innovation and Learning: The Two Faces of R & D.” The Economic Journal 99 (397): 569–596. doi:10.2307/2233763.

- Cook, W. D., L. M. Seiford, and J. Zhu. 2004. “Models for Performance Benchmarking: Measuring the Effect of e-Business Activities on Banking Performance.” OMEGA 32 (4): 313–322. doi:10.1016/j.omega.2004.01.001.

- Cooper, R. G., S. J. Edgett, and E. J. Kleinschmidt. 2004. “Benchmarking Best NPD Practices – II.” Research-Technology Management 47 (3): 50–59. doi:10.1080/08956308.2004.11671630.

- Dahlander, L., S. O'Mahony, and D. M. Gann. 2016. “One Foot in, One Foot out: How Does Individuals’ External Search Breadth Affect Innovation Outcomes?” Strategic Management Journal 37 (2): 280–302. doi:10.1002/smj.2342.

- Dale, B. G., B. Dehe, and D. Bamford. 2016. “Quality Management Techniques.” In Managing Quality, edited by B. G. Dale, D. Bamford, and T. van der Wiele, 215–265. 6th ed. Oxford: Wiley.

- Damanpour, F., and S. Gopalakrishnan. 2001. “The Dynamics of the Adoption of Product and Process Innovations in Organizations.” Journal of Management Studies 38 (1): 45–65. doi:10.1111/1467-6486.00227.

- Dehe, B., and D. Bamford. 2015. “Development, Test and Comparison of Two Multiple Criteria Decision Analysis (MCDA) Models: A Case of Healthcare Infrastructure Location.” Expert Systems with Applications 42 (19): 6717–6727. doi:10.1016/j.eswa.2015.04.059.

- Dehe, B., and D. Bamford. 2017. “Quality Function Deployment and Operational Design Decisions – A Healthcare Infrastructure Development Case Study.” Production Planning & Control 28 (14): 1177–1192. doi:10.1080/09537287.2017.1350767.

- Delbridge, R., J. Lowe, and N. Oliver. 1995. “The Process of Benchmarking: A Study from the Automotive Industry.” International Journal of Operations & Production Management 15 (4): 50–62. doi:10.1108/01443579510083604.

- Dewar, R. D., and J. E. Dutton. 1986. “The Adoption of Radical and Incremental Innovations: An Empirical Analysis.” Management Science 32 (11): 1422–1433. doi:10.1287/mnsc.32.11.1422.

- Dubois, A., and L. E. Gadde. 2002. “The Construction Industry as a Loosely Coupled System: Implications for Productivity and Innovation.” Construction Management and Economics 20 (7): 621–631. doi:10.1080/01446190210163543.

- Dyer, J. H., and H. Singh. 1998. “The Relational View: Cooperative Strategy and Sources of Interorganizational Competitive Advantage.” Academy of Management Review 23 (4): 660–679. doi:10.5465/amr.1998.1255632.

- Egan, J. 1998. Rethinking Construction: The Report of the Construction Task Force. Report. London: Department of the Environment, Transport and the Regions. Accessed 12 March 2020. https://constructingexcellence.org.uk/wp-content/uploads/2014/10/rethinking_construction_report.pdf

- Ekanayake, E. M. A. C., G. Q. Shen, and M. M. Kumaraswamy. 2021. “Identifying Supply Chain Capabilities of Construction Firms in Industrialized Construction.” Production Planning & Control 32 (4): 303–321. doi:10.1080/09537287.2020.1732494.

- Feibert, D. C., B. Andersen, and P. Jacobsen. 2019. “Benchmarking Healthcare Logistics Processes – A Comparative Case Study of Danish and US Hospitals.” Total Quality Management & Business Excellence 30 (1–2): 108–134. doi:10.1080/14783363.2017.1299570.

- Forker, L. B., and D. Mendez. 2001. “An Analytical Method for Benchmarking Best Peer Suppliers.” International Journal of Operations & Production Management 21 (1/2): 195–209. doi:10.1108/01443570110358530.

- Fowler, A., and D. Campbell. 2001. “Benchmarking and Performance Management in Clinical Pharmacy.” International Journal of Operations & Production Management 21 (3): 327–350. doi:10.1108/01443570110364678.

- Francis, S., and R. Glanville. 2002. Building 2020 Vision: Our Future Healthcare Environments. Great Britain: The Stationery Office.

- Gambatese, J. A., and M. Hallowell. 2011. “Enabling and Measuring Innovation in the Construction Industry.” Construction Management and Economics 29 (6): 553–567. doi:10.1080/01446193.2011.570357.

- Gans, N., G. Koole, and A. Mandelbaum. 2003. “Call Center Measurements, Data Models and Data Analysis.” Manufacturing & Service Operations Management 5 (2): 79–141. doi:10.1287/msom.5.2.79.16071.

- García-Sanz-Calcedo, J., N. de Sousa Neves, and J. P. A. Fernandes. 2021. “Measurement of Embodied Carbon and Energy of HVAC Facilities in Healthcare Centers.” Journal of Cleaner Production 289: 125151. doi:10.1016/j.jclepro.2020.125151.

- Gawin, B., and B. Marcinkowski. 2017. “Business Intelligence in Facility Management: Determinants and Benchmarking Scenarios for Improving Energy Efficiency.” Information Systems Management 34 (4): 347–358. doi:10.1080/10580530.2017.1366219.

- Gilleard, J. D., and P. Wong. 2004. “Benchmarking Facility Management: Applying Analytic Hierarchy Process.” Facilities 22 (1/2): 19–25. doi:10.1108/02632770410517915.

- Glasby, J., and H. Dickinson, eds. 2009. International Perspectives on Health and Social Care: Partnership Working in Action. Chichester, WS: John Wiley & Sons.

- Goh, S., and G. Richards. 1997. “Benchmarking the Learning Capability of Organizations.” European Management Journal 15 (5): 575–583. doi:10.1016/S0263-2373(97)00036-4.

- Hamzeh, F. R., G. Ballard, and I. D. Tommelein. 2009. “Is the Last Planner System Applicable to Design? - A Case Study.” Paper presented at the 17th Annual Conference of the International Group for Lean Construction (IGLC 17), Taipei, Taiwan, July 15–17.

- Hartmann, A. 2006. “The Context of Innovation Management in Construction Firms.” Construction Management and Economics 24 (6): 567–578. doi:10.1080/01446190600790629.

- Herzlinger, R. 2006. “Why Innovation in Health Care Is so Hard.” Harvard Business Review 84 (5): 58–66.

- Hidalgo, A., and J. Albors. 2008. “Innovation Management Techniques and Tools: A Review from Theory and Practice.” R&D Management 38 (2): 113–127. doi:10.1111/j.1467-9310.2008.00503.x.

- Hinks, J., and P. McNay. 1999. “The Creation of a Management-by-Variance Tool for Facilities Management Performance Assessment.” Facilities 17 (1/2): 31–53. doi:10.1108/02632779910248893.

- Hodgkinson, G., and D. Rousseau. 2009. “Bridging the Rigour–Relevance Gap in Management Research: It’s Already Happening!” Journal of Management Studies 46 (3): 534–546. doi:10.1111/j.1467-6486.2009.00832.x.

- Huizingh, E. K. 2011. “Open Innovation: State of the Art and Future Perspectives.” Technovation 31 (1): 2–9. doi:10.1016/j.technovation.2010.10.002.

- Hult, G. T. M., R. F. Hurley, and G. A. Knight. 2004. “Innovativeness: Its Antecedents and Impact on Business Performance.” Industrial Marketing Management 33 (5): 429–438. doi:10.1016/j.indmarman.2003.08.015.

- Iacobucci, D., and S. Hoeffler. 2016. “Leveraging Social Networks to Develop Radically New Products.” Journal of Product Innovation Management 33 (2): 217–223. doi:10.1111/jpim.12290.