Abstract

Many neuroscientists view prediction as one of the core brain functions. However, there is little consensus as to the exact nature of predictive information and processes, or the neural mechanisms that realise them. This paper reviews a host of neural models believed to underlie the learning and deployment of predictive knowledge in a variety of brain regions: neocortex, hippocampus, thalamus, basal ganglia and cerebellum. These are compared and contrasted in order to codify a few basic aspects of neural circuitry and dynamics that appear to lie at the heart of prediction.

1. Introduction

Keen predictive abilities have long been recognised as special talents. Those who can consistently determine what the future brings often enjoy high salaries and elevated social standing. In early human civilisations, the well-being of an entire tribe was contingent upon the ability to foresee a rough winter or an impending enemy attack, whereas today, in a world governed by a perplexing interplay between complex systems such as climate, politics and international markets, predictive prowess is at an absolute premium.

Yet despite its well-respected role in society, prediction often goes unappreciated as a fundamental component of intelligence. Recently, several prominent scientists (Llinas 2001; Hawkins 2004) have championed the primacy of prediction in cognition. In On Intelligence, computer scientist and founder of the Redwood Neuroscience Institute, Jeffrey Hawkins, argues for a more prediction-centred view of intelligence:

Intelligence and understanding started as a memory system that fed predictions into the sensory stream. These predictions are the essence of understanding. To know something means that you can make predictions about it…We can now see where Alan Turing went wrong. Prediction, not behavior, is the proof of intelligence. (Hawkins 2004, pp. 104–105)

In I of the Vortex, the renowned neuroscientist Rodolfo Llinas states:

The capacity to predict the outcome of future events–critical to successful movement–is, most likely, the ultimate and most common of all global brain functions. (Llinas 2001, p. 21)

As discussed previously in Downing (2005, 2007), these predictive facilities may underlie our common-sense understanding of the world and may provide support for cognitive incrementalism (Clark 2001), the view that cognition arises directly from sensorimotor activity, which, in turn, is a motivating philosophy of situated and embodied artificial intelligence (SEAI). However, those papers also point to the pronounced differences between procedural and declarative knowledge (Squire and Zola 1996), and between the brain areas that appear to facilitate them, differences that leave considerable doubt as to whether a single corpus of predictive information could support both sensorimotor activity and higher-level cognition.

This paper continues our quest to better understand the role of prediction in the brain. Many neural subsystems and associated computational models are examined in order to distill a set of basic anatomical and physiological factors that support predictive behaviour. Although Hawkins (2004) focuses on the cortex and Llinas (2001) on the cerebellum, experimental and computational neuroscientists have proposed interesting predictive architectures in five different systems: the cerebellum, basal ganglia, hippocampus, neocortex and thalamocortical loop. The first two embody procedural predictions, whereas the last three have a more declarative nature.

The key difference between the procedural and declarative predictive forms resides in the explicit awareness of the connections between spatiotemporal states that embody predictive knowledge. For example, a basketball player is explicitly aware of the fact that a strong rebound and quick outlet pass often predict a fast break, but may not know what shooting movements can predict a successful shot, even though he/she can feel whether a shot will hit or miss the instant it leaves his/her fingertips.

As will be seen, these two types of prediction, explicit and implicit, require different architectures. However, the architecture for each type seems to recur across separate regions of the brain. This apparent duplication of prediction-supporting machinery supports claims that prediction is a fundamental brain process, at both the conscious and the subconscious level.

We begin by defining prediction for our neuroscientific purposes. Procedural prediction in the cerebellum and basal ganglia is then explored, with the detailed anatomy and physiology of both regions presented and analysed. We then move on to declarative prediction, where the hippocampus, neocortex and thalamocortical system are dissected, along with a collection of computational models by different researchers. We discovered a very interesting commonality across these models, which served as the prime motivation for this article. This common abstraction is summarised in a Generic Declarative Prediction Network (GDPN) prior to the presentation of the individual models. Next, the five predictive systems are compared to find key similarities between those supporting the same type of prediction, and key differences between those underlying different predictive modes. Finally, the paper concludes with general remarks on the role of prediction in brain science.

2. Defining prediction

From a psychological or social perspective, prediction denotes a wide range of abilities, many of which involve the capacity to learn temporal correlations among events or world states. One can predict the consequences of actions by using acquired associations between those acts and the world states that have, in the past, immediately succeeded them. One can predict the world state that normally follows another world state, where, presumably, the earlier state includes some hints as to the key processes governing the state change. When viewing a snapshot of a baseball player running full speed across the warning track, we can predict that one of the following states involves the same player crashing into the outfield wall. We connect the two states via the action, hard running, so clearly evident in the first state.

The dictionary (Merriam-Webster; www.merriam-webster.com/dictionary/predict) gives two primary definitions of predict:

1. To declare or indicate in advance.
2. To foretell on the basis of observation.

To declare is to make known formally, officially or explicitly, whereas to indicate is to be a sign, symptom or index of (Merriam-Webster). In short, the declarative form of prediction is more concrete and direct, whereas the indicative form is more indirect and implicit. As a simple example, one may declaratively predict an upcoming sunny day by explicitly stating, ‘Tomorrow will be a sunny day’. Conversely, one can indicate that prediction by various preparatory acts such as purchasing sunblock, retrieving the lawnmower from the deep recesses of the garage, etc.

Interestingly, these two forms of prediction map quite well to distinct neural structures. The explicit variant seems to coincide with cortical and hippocampal activity, whereas the implicit type maps to commonly purported cerebellar and basal ganglia functions, which are often described as procedural or non-declarative (Squire and Kandel 1999). Consequently, the terms declarative and procedural will be used to denote the explicit and indicative forms of prediction, respectively.

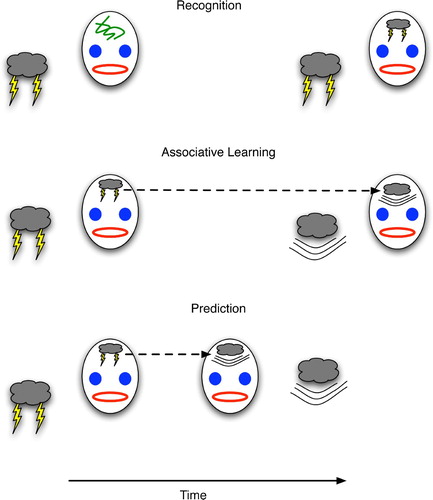

Figure 1 illustrates the basic conception of declarative prediction from a neural perspective. First, recognition is defined as attaining a brain state, S, that has previously exhibited a strong correlation with the (now familiar) experience (e.g. an object, event or state of the agent itself). Informally, S is the brain state that is both most likely to arise under the given experience (e.g. lightning) and unlikely to arise under other conditions. Along these lines, declarative predictive knowledge involves two such correlations between brain states and experiences, plus a link between the two brain states such that one can trigger the other prior to (or even in the complete absence of) the latter's associated experience. This link need not be bidirectional, so the experience of lightning may lead to the brain state corresponding to the experience of thunder, but not necessarily vice versa.

Figure 1. (Top) Recognition depicted as the formation of a brain state (drawn as lightning on the forehead) that becomes correlated with a physical event (lightning). (Middle) Learning the association between one event (lightning) and its successor (thunder) by linking the brain states that correlate with each. (Bottom) Declarative prediction entails recognising one event (lightning) and forming the succeeding brain state for thunder prior to (or even in the absence of) the real-world event with which it correlates.

In the framework of Figure 1, predictive learning is essentially a special case of associative learning in which the related items represent events with at least a small temporal offset, such that the start of event A precedes that of event B. Then, during the interval between the two onsets, prediction proves its worth by indicating B (and thus enabling the animal to prepare for B) prior to B's occurrence. This preparatory window gives prediction a survival advantage above and beyond that of non-temporal association. With non-temporal association alone, an antelope can link the sight of a tiger to fear (and its consequences, such as hiding or fleeing), but without the ability to associate events over time, the antelope could not link the rustling of bushes at time t with the appearance of a tiger at time t+d, much to the antelope's detriment.
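The directional, time-lagged association described above can be sketched in a few lines of Python. This is a minimal illustration, not a neural model: it assumes events arrive as (label, onset time) pairs and uses a hypothetical window `max_lag` within which an earlier onset counts as predicting a later one. Because links are recorded only from earlier to later onsets, lightning can come to predict thunder without the reverse holding.

```python
from collections import defaultdict


def learn_predictive_links(events, max_lag):
    """Count directed links A -> B whenever A's onset precedes B's by at most max_lag.

    events: list of (label, onset_time) pairs; max_lag is a hypothetical window.
    """
    links = defaultdict(int)
    ordered = sorted(events, key=lambda e: e[1])
    for i, (a, t_a) in enumerate(ordered):
        for b, t_b in ordered[i + 1:]:
            lag = t_b - t_a
            if lag > max_lag:
                break  # events are time-sorted, so every later one is further away
            if lag > 0 and a != b:
                links[(a, b)] += 1  # only earlier -> later: the link is one-way
    return dict(links)


def predict(links, cue):
    """Return the event(s) most often observed to follow `cue` (empty if none)."""
    candidates = {b: n for (a, b), n in links.items() if a == cue}
    if not candidates:
        return []
    best = max(candidates.values())
    return sorted(b for b, n in candidates.items() if n == best)
```

For a history in which thunder follows lightning twice within the window, `predict(links, "lightning")` returns `["thunder"]`, whereas `predict(links, "thunder")` returns an empty list.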

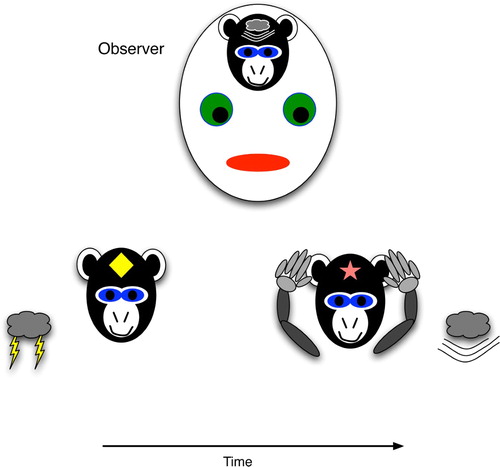

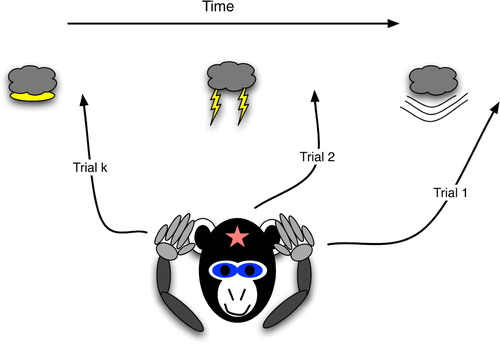

Figure 2 depicts procedural prediction. Here, the agent (a monkey) has acquired a link between a brain state that weakly correlates with lightning (a diamond) and one that weakly maps to thunder (a star). These are weak in the sense that they may not be completely specific to these events, such that any flashing light would trigger the diamond state, and any loud noise would trigger the star state. Thus, it is difficult to claim that the monkey declaratively predicts thunder. In contrast to a declarative representation, the general, weakly correlated state would not stimulate other conscious thunderstorm thoughts, such as the association with dark rain clouds, the potential dangers, examples of destructive effects, etc. However, an observer may easily interpret the monkey's procedural act of covering its ears as an explicit prediction of thunder. When the agent's actions, but not its brain state, appear to foretell a specific event, the prediction is procedural.

Figure 2. Procedural prediction, wherein the agent's actions indicate specific knowledge of a future world state, even though the agent (monkey) has no explicit brain state that strongly correlates with the world state. The agent's ear-covering behaviour can easily lead an observer to infer that the agent has the strongly correlated brain state, i.e. explicit knowledge of the upcoming thunder.

The difference between the two predictive forms is probably most easily discernible in humans, because our communicated descriptions of future states often indicate a declarative component, whereas many physical situations require us to act quickly and appropriately, but without forming clear neural correlates of the next situation. For example, in watching the slow-motion replay of a tennis serve, a coach may predictively describe where and how the ball will land, but in playing the return shot him/herself, he/she would simply run to the appropriate spot and adjust his/her body to the speed, angles and spin expected of the incoming serve. Only a naïve outside observer would infer that he/she had explicit knowledge of those parameters.

In the sections that follow, five neural systems, all of which have been posited as centres of predictive activity by several authors, are examined both in general and with respect to prediction. In many cases, the focus is on computational models of those systems, as these typically provide more thorough – albeit unproven – mechanistic explanations than do the more traditional neuroscientific findings. These systems are the cerebellum and basal ganglia, both viewed as procedurally predictive engines, and the hippocampus, neocortex and thalamocortical loop, each of which shows strong declarative tendencies.

3. Procedural prediction in the cerebellum

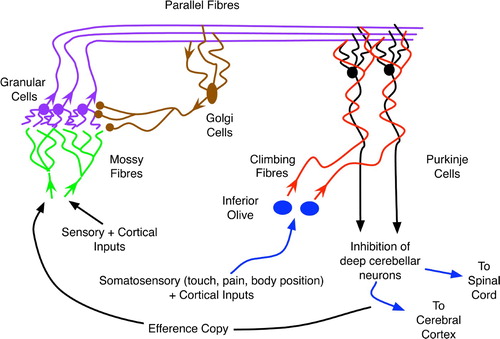

The cerebellum has a well-established role in the learning and control of complex motions (Kandel, Schwartz and Jessell 2000; Bear, Conners and Paradiso 2001), and many believe that this involves the use of predictive models (Wolpert et al. 1998; Barto, Fagg and Houk 1999). A brief anatomical overview of the cerebellum (based on Bear et al. (2001)) appears in Figure 3, which indicates the highly ordered structure of this region.

Figure 3. The basic organisation of the cerebellum, an abstraction and combination of more complex diagrams in Bear et al. (2001), originally appearing in Downing (2007).

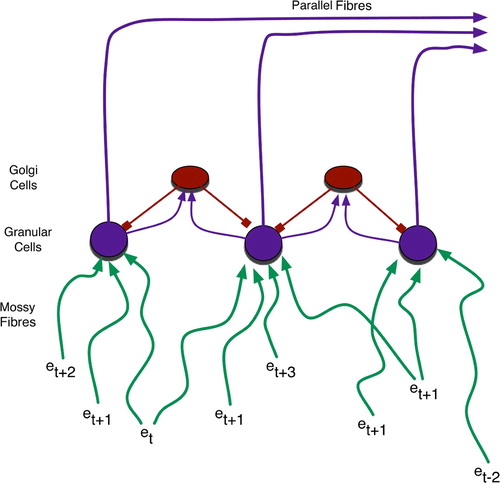

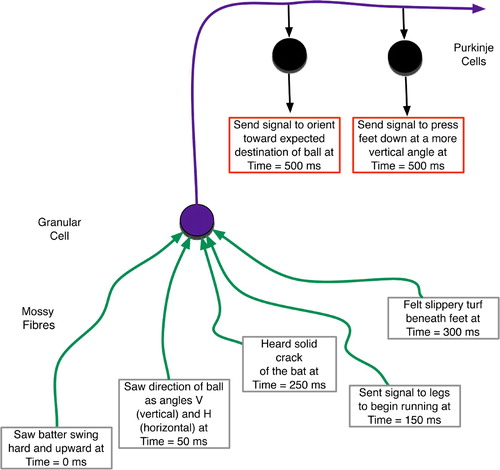

As shown in Figures 3 and 4, the granular cells, which form the cerebellar input layer, receive a variety of peripheral sensory and cortical signals via mossy fibres stemming from the spinal cord and brainstem. These signals experience differential delays before converging upon the granular cells, with an average of four such inputs per cell (Rolls and Treves 1998). The large number of such cells, approximately 10^11 in humans (Kandel et al. 2000), combined with their tendency to inhibit one another laterally via the interspersed Golgi cells, indicates that the granular cells serve as sparse-coding detectors of relatively simple (i.e. involving just a few integrated stimuli) contexts (Rolls and Treves 1998). As delay times vary along the mossy fibres, each context has both temporal and spatial extent.
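As a rough illustration of sparse coding over temporally blended contexts, the sketch below gives each granular cell four randomly chosen (mossy-fibre channel, delay) inputs, matching the average fan-in cited above, and approximates Golgi-mediated lateral inhibition with a k-winners-take-all rule. The random wiring, the integer time steps and the parameter `k` are assumptions made for illustration; the function names are hypothetical.

```python
import random


def make_granule_layer(n_cells, n_channels, max_delay, fan_in=4, seed=0):
    """Give each granular cell `fan_in` random (channel, delay) mossy-fibre inputs.

    fan_in=4 follows the average quoted in the text; the random wiring is illustrative.
    """
    rng = random.Random(seed)
    return [[(rng.randrange(n_channels), rng.randrange(max_delay + 1))
             for _ in range(fan_in)]
            for _ in range(n_cells)]


def granule_activity(layer, history, t, k):
    """Binary granular-layer activity at time step t.

    history[s][c] is 1 if mossy-fibre channel c was active at step s.
    A cell's drive counts how many of its delayed inputs are active now;
    lateral inhibition is approximated by keeping only the k most-driven cells.
    """
    drive = []
    for cell in layer:
        total = 0
        for channel, delay in cell:
            if t - delay >= 0:
                total += history[t - delay][channel]  # input reflects an earlier event
        drive.append(total)
    ranked = sorted(range(len(layer)), key=lambda i: drive[i], reverse=True)
    winners = {i for i in ranked[:k] if drive[i] > 0}
    return [1 if i in winners else 0 for i in range(len(layer))]
```

Because each cell reads its inputs at different delays, the winning set at time t encodes a snippet of recent history rather than a single instant, and at most `k` cells are active at once.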

Figure 4. Granular cells realise sparse coding for temporally blended contexts. Events (e) of various temporal origin (denoted by subscripts) simultaneously activate granular cells due to differential delays – roughly depicted by line length, with longer lines denoting events that occurred further in the past – along mossy fibres.

One parallel fibre (PF) emanates from each granular cell and synapses onto the dendrites of many Purkinje cells (PCs), each of which may receive input from 10^5 to 10^6 parallel fibres (Kandel et al. 2000). As the Purkinje outputs are the cerebellum's ultimate contribution to the control of motor (and possibly cognitive) activity, the plethora of granular inputs to each Purkinje cell would appear to embody a complex set of preconditions for the generation of any such output. As the PF–PC synapses are modifiable (Rolls and Treves 1998; Kandel et al. 2000), these preconditions are subject to learning and adaptation.

As shown in Figure 3, climbing fibres from the inferior olive send signals to the PF–PC synapses. The climbing fibres transfer pain signals from the muscles and joints controlled by those fibres' corresponding Purkinje cells, and these signals effect long-term depression (LTD) of the neighbouring PF–PC synapses (Rolls and Treves 1998; Kandel et al. 2000). Thus, the climbing fibres provide a primitive form of supervised learning (Doya 1999) in which the combination of parallel fibres that caused a Purkinje cell to fire (and thus promoted a muscular movement resulting in discomfort) becomes less likely to excite the same PC in the future. In short, the feedback from the inferior olive and climbing fibres helps to filter out inappropriate contexts (embodied in the parallel fibres) for particular muscle activations.

Plasticity at the PF–PC synapse relies on post-synaptic LTD. When a climbing fibre (CF) forces a PC to fire strongly, those PC dendrites that had recently been activated by parallel fibres undergo chemical changes that reduce their sensitivity to glutamate (the neurotransmitter used by PFs). Hence, the influence of those PFs on the PC declines (Bear et al. Citation2001).
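The climbing-fibre rule described in the last two paragraphs can be caricatured as follows: when the climbing fibre fires, the weights of the parallel fibres that were just active are depressed, and the weights of silent fibres are left alone. The multiplicative form and the learning rate `rate` are assumptions; the text specifies only that recently active PF–PC synapses undergo LTD.

```python
def pc_drive(weights, pf_active):
    """Summed input a Purkinje cell receives from its currently active parallel fibres."""
    return sum(w for w, active in zip(weights, pf_active) if active)


def climbing_fibre_ltd(weights, pf_active, cf_fired, rate=0.2):
    """Return updated PF->PC weights after one trial (a simplified, hypothetical rule).

    If the climbing fibre fired (an error or discomfort signal), every synapse whose
    parallel fibre was active is depressed by the fraction `rate`; synapses from
    inactive fibres keep their weights. Without climbing-fibre input nothing changes.
    """
    if not cf_fired:
        return list(weights)
    return [w * (1.0 - rate) if active else w
            for w, active in zip(weights, pf_active)]


# Repeatedly pairing one context with climbing-fibre activity weakens only that context.
weights = [1.0, 1.0, 1.0, 1.0]
bad_context, other_context = [1, 1, 0, 0], [0, 0, 1, 1]
for _ in range(5):
    weights = climbing_fibre_ltd(weights, bad_context, cf_fired=True)
# pc_drive(weights, bad_context) is now well below pc_drive(weights, other_context).
```

The untouched synapses illustrate the filtering role in the text: contexts that never co-occur with discomfort keep their full influence on the Purkinje cell.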

Rather counterintuitively, the simplest behaviours often require the most complex neural activity patterns. For example, it takes a much more intricate combination of excitatory and (particularly) inhibitory signals to wiggle a single finger (or toe) than to move all five. Hence, the tuning of PCs to achieve the appropriate inhibitory mix is a critical factor in basic skill learning.

Figure 5 gives a hypothetical example of a behavioural rule implemented by a cerebellar tract. A baseball outfielder receives a variety of sensory inputs with different temporal delays, shown here as converging on the same granular cell. The granular output then affects several Purkinje cells, including those whose ultimate effect is to adjust the player's orientation and leg angle in the attempt to accelerate rapidly towards the projected destination of the ball.

Figure 5. Temporally mixed sensory and proprioceptive experiences of a baseball outfielder. These form a context for increasing vertical foot plant while accelerating to catch a fly ball.

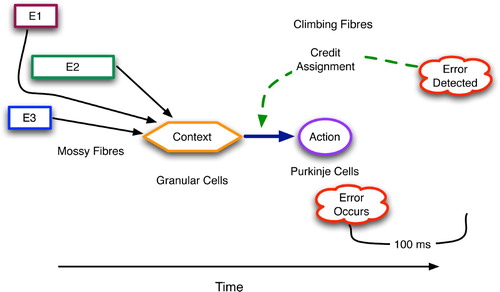

The predictive nature of this and similar rules involves the integration of sensory stimuli, whose temporal relationships are highly salient, to determine proper actions. Thus, cross-sections of the past determine present decisions about future behaviours. As depicted in Figure 6, the detection of any salient consequences or errors comes even later, due to sensory processing delays. That error signal should then provide feedback regarding the decisions made earlier.

Figure 6. The temporal scope of cerebellar decision-making. Context from the past affects present action choices whose actions are realised in the future and whose consequences are perceived even further in the future.

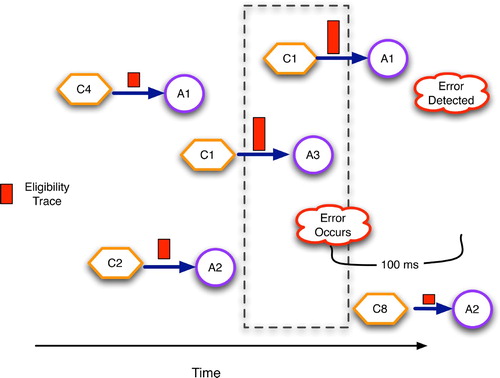

To maintain an approximate record of which channels were active, and when, and thus which synapses are most eligible for modification, the cerebellum and many other brain areas utilise a complex biochemical process that essentially makes a synapse most receptive to long-term potentiation (LTP) or LTD about 100 ms after high transmission activity (as discussed in Houk, Adams and Barto (1995) and Kettner et al. (1997)). This eligibility trace, in the parlance of reinforcement learning theory (Sutton and Barto 1998), helps compensate for the time delays of sensory processing and motor activation. Eligibility dynamics have probably coevolved with the sensory, motor and proprioceptive apparatus to support optimal learning. Figure 7 shows the eligibility traces associated with several context–action pairs, with those occurring within a narrow time window prior to error detection having the highest values.
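One way to picture the eligibility window is a trace that is zero at the moment of transmission, peaks about 100 ms later and then decays. The sketch below assumes an alpha-function shape for the trace (the text fixes only the approximate peak time) and uses it to score which context–action pairs are most eligible when an error is detected; `eligibility` and `credit` are hypothetical names.

```python
import math


def eligibility(dt_ms, peak_ms=100.0):
    """Alpha-function eligibility trace (assumed shape).

    Zero at or before transmission, rises to a maximum of 1.0 at dt_ms == peak_ms,
    then decays. peak_ms=100 follows the ~100 ms figure in the text.
    """
    if dt_ms <= 0:
        return 0.0
    x = dt_ms / peak_ms
    return x * math.exp(1.0 - x)


def credit(activity_times_ms, error_time_ms, peak_ms=100.0):
    """Eligibility of each context-action pair at the moment an error is detected."""
    return {name: eligibility(error_time_ms - t, peak_ms)
            for name, t in activity_times_ms.items()}


# Four context-action pairs fired at different times; the error arrives at 300 ms.
scores = credit({"C1": 0, "C2": 150, "C3": 200, "C4": 290}, error_time_ms=300)
```

With an error at 300 ms, the pair active at 200 ms (C3) receives the most credit, while the pair active only 10 ms earlier (C4) and the one active 300 ms earlier (C1) receive little, mirroring the tallest rectangle in Figure 7.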

Figure 7. Cerebellar eligibility traces, drawn as rectangles on the condition–action arcs, with taller rectangles denoting higher eligibility. Synapses are most eligible for modification approximately 100 ms after they transmit an action potential.

Considering that the human cerebellum consists of billions of parallel fibres, each of which embodies a context–action association, physical skill learning may consist of the gradual tuning and pruning of this immense rule set. Links of high utility should endure, whereas others will fade via LTD. Importantly, because contexts reflect states of the world prior to action choice and action performance – again, due to inherent sensory processing delays – the actions that they recommend should be those most appropriate for states of the body and world at some future time (relative to the contexts). Recommendations that lack this predictive nature will produce inferior behaviour and be weakened via LTD. By trial and error, the cerebellum learns to support the most salient predictions, which are those that properly account for the inherent delays in sensory processing and motor realisation.

From the viewpoint of an outside observer, the cerebellum's actions would appear to involve explicit knowledge of future states, such as L, the location of the baseball 3 s after contact with the bat. However, the cerebellar rule need only embody the behaviour that will eventually move the player to that spot, without an explicit representation of the spot itself. With respect to our definition of declarative prediction (as drawn in Figure 1), there is not necessarily a brain state that correlates with L, and even if there is, it need not be stimulated by the cerebellar activity that helps move the player to L.

For example, in the eye-tracking simulations and primate trials of Kettner et al. (1997), both monkeys and computer models anticipate future points along complex visual trajectories by shifting gaze to the appropriate locations. In describing these systems as predictive, the authors refer to overt behaviours that indicate, to the outside observer, explicit knowledge of future locations. However, neither system is claimed to explicitly house representations (i.e. correlated brain states) for those sites. The predictive knowledge is purely procedural. Knowing how and when to look at a location is quite different from explicitly knowing about that spot.

4. Regressive procedural prediction in the basal ganglia

In the basal ganglia, prediction arises in the course of reinforcement learning (RL) (Sutton and Barto 1998), which many researchers view as a central capability of this region (Houk et al. 1995a; Doya 1999; O'Reilly and Munakata 2000). RL systems learn associations between environmental (and bodily) states and various rewards or punishments (i.e. reinforcements) that those states may incur, either immediately or at some time in the future. Thus, the system learns to predict the reinforcement from the state. Naturally, RL provides a survival advantage, because it enables organisms to behave proactively instead of merely reactively.

The extent of prediction in RL, however, is somewhat suspect: animals are not necessarily foretelling future states in any great detail. Instead, they may possess only basic intuitions about impending pleasure or pain. As shown in Figure 8, the selective advantage stems from recognising these reinforcements based on earlier, and often more subtle, clues. Thus, the predictive ability regresses in time. For example, a monkey that anticipates thunder at the first sight of atmospheric light can more consistently protect its ears than one dependent on the sight of a lightning bolt, which, when close to the observer, strikes almost simultaneously with the thunder blast.¹

Figure 8. Regressive prediction: the agent recognises earlier and earlier indicators of the emotive event.

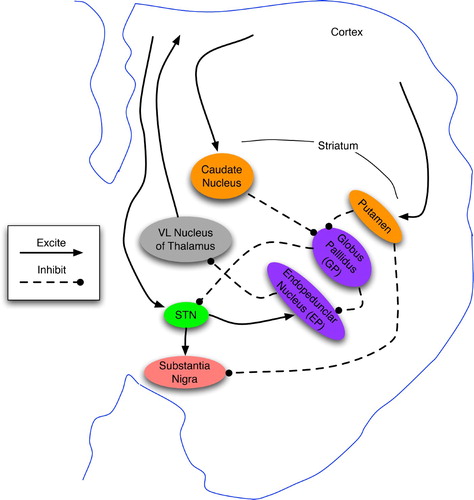

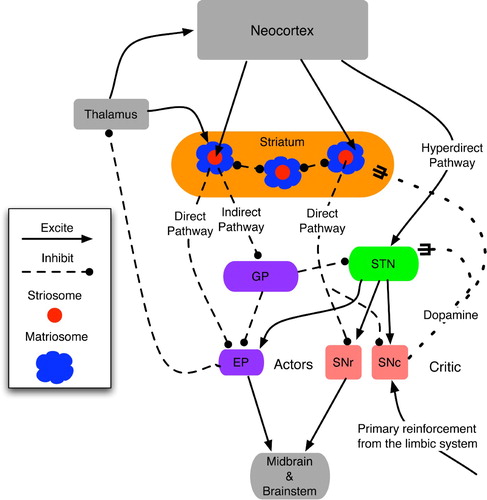

Sketched in Figures 9 and 10, the basal ganglia (BG) are large subcortical structures that receive convergent inputs from many cortical areas onto the striatum (consisting of the caudate nucleus and putamen) and the subthalamic nucleus (STN). The striatal cells appear to function as a layer of competitive context detectors (Houk 1995), because (a) each neuron receives inputs from about 10,000 cortical neurons; (b) their electrochemical properties are such that they fire only if many of those inputs are active; and (c) they have intra-layer inhibitory connections.

Figure 9. Basic anatomy of the basal ganglia in one hemisphere, shown as a coronal cross-section. Based on diagrams in Bear et al. (2001) and Prescott, Gurney and Redgrave (2003).

Figure 10. Functional topology of the basal ganglia and their main inputs, derived from the text and diagrams in Houk (1995), Houk et al. (1995a), Prescott et al. (2003) and Graybiel and Saka (2004). The actor denotes the direct outputs of the BG (EP and SNr), whereas the critic consists of the diffuse neuromodulatory output from SNc. Matriosomes are primarily gateways to the actor circuit, whereas striosomes have direct-pathway links to both actors and critics.

Strong evidence (Strick 2004; Graybiel and Saka 2004) indicates that the BG are arranged in parallel loops in which a striatal cell's inputs come from a particular cortical region, such as the motor cortex (MC). The striatal outputs to the substantia nigra pars compacta (SNc), substantia nigra pars reticulata (SNr) and entopeduncular nucleus (EP) are eventually channelled back to the MC in the form of both action potentials (via the thalamus) and the neuromodulator dopamine. A great majority of these loops appear to involve the prefrontal cortex (PFC) (Houk 1995; Kandel et al. 2000; Strick 2004), suggesting that the BG contribute to attention, possibly as the mechanism for gating new patterns into working memory (O'Reilly 1996; Graybiel and Saka 2004).

Accounts of BG functional topology vary considerably (Houk 1995; Houk et al. 1995a; Prescott et al. 2003; Graybiel and Saka 2004; Granger 2006), but several similarities do exist. First, the striatum appears to consist of two main neuron types, striosomes and matriosomes, where the former are surrounded by the latter. Several prominent researchers (Barto 1995; Houk et al. 1995a) characterise the BG as a combination of actor and critic, with the matriosomes and pallidal neurons (EP and SNr) as the actor's input and output ports, respectively, whereas the striosomes and SNc comprise the critic. Although this characterisation is not completely consistent with other sources, such as Joel, Niv and Ruppin (2002), the matriosomes and striosomes are often characterised as respectively supporting action selection and state assessment (via dopamine signalling from SNc). See Houk et al. (1995b) and Graybiel and Saka (2004) for overviews of the empirical data and theoretical models.

From an abstract perspective, the BG map contexts to actions. When a context-detecting matriosome fires, it inhibits a few downstream pallidal (GP and EP) neurons. In stark contrast to the striatum, the EP consists of low-fan-in neurons, most of which fire constantly and thereby inhibit their downstream counterparts in the thalamus (Houk 1995). When a striatal cell inhibits a pallidal neuron, this momentarily disinhibits the corresponding thalamic neuron, which then excites a cortical neuron, often in the PFC. The cortical excitation links back to the thalamus, creating a positive feedback loop that sustains the activity of both neurons, even though pallidal disinhibition may have ceased. Thus, the striatal–pallidal actor circuit momentarily gates in a response whose trace may reside in the working memory of the PFC for many seconds or minutes (Houk 1995; O'Reilly and Munakata 2000).
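The gating sequence above reduces to a few Boolean rules, sketched below as a hypothetical discrete-time caricature: the pallidum is active unless the striatum fires, the thalamus is active when disinhibited or when driven by cortical feedback, and the cortex follows the thalamus. Treating cortical feedback as strong enough to override renewed pallidal inhibition is an assumption that encodes the sustained loop described in the text; `simulate_gate` is an illustrative name.

```python
def simulate_gate(striatal_pulse, steps=10):
    """Boolean sketch of the striatum -> pallidum -> thalamus <-> cortex loop.

    striatal_pulse: collection of time steps at which the matriosome fires.
    Returns the cortical (PFC) activity at each step.
    """
    cortex = False
    trace = []
    for t in range(steps):
        striatum = t in striatal_pulse
        pallidum = not striatum                # tonically active unless inhibited
        thalamus = (not pallidum) or cortex    # disinhibited, or held on by cortical feedback
        cortex = thalamus                      # thalamus excites cortex, which feeds back next step
        trace.append(cortex)
    return trace
```

A single striatal pulse at step 3 latches the cortical unit on for the rest of the run, which is the working-memory-like persistence described above; this caricature has no mechanism for releasing the latch.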

As the PFC is the highest level of motor control (Fuster 2003), its firing patterns often influence activity in the premotor (PMC) and motor (MC) cortices, whereas the MC sends signals to the muscles via the spinal cord. In addition, the sustained PFC activity provides further context for the next round(s) of striatal firing and pallidal inhibition that embody context detection and action selection, respectively. Via this recurrent looping, the basal ganglia execute high-level action sequences. The situation–action rules housed within the BG may comprise significant portions of our common-sense understanding of body–environment interactions, whether consciously or only subconsciously accessible.

The BG learn salient contexts via dopamine (DA) signals from the SNc, which influence the synaptic plasticity of the regions onto which they impinge (Kandel et al. 2000). DA acts as a second messenger that strengthens and prolongs the response elicited by the primary messenger. For example, when a striatal neuron, S, is fired via converging inputs from the cortex, the primary messenger is the neurotransmitter from the axons of the cortical neurons (C) that recently fired. The immediate response of S's dendrites (D) connected to the active axons is to transmit an action potential (AP) towards S's cell body. The summation of these D inputs will lead to S's production of a new AP. If dopamine enters these dendrites shortly after AP transmission, a series of chemical (and sometimes physical) changes occur that make those dendrites more likely to generate an AP (and a stronger one) the next time their upstream axon(s) release neurotransmitter. As the chemicals involved in this strengthening process are conserved, those dendrites that did not receive neurotransmitter may become less likely to fire an AP later on, even when neurotransmitter reaches them. Thus, in the future, when the C neurons fire, the likelihood of S firing will have increased, whereas other cortical firing patterns will have less chance of stimulating S. In short, S has become a detector for the context represented by C. Without the dopamine infusion, S does not develop a bias towards C and may later fire on many diverse cortical patterns.
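The dopamine-gated strengthening just described can be sketched as a learning rule that only acts when dopamine is present. The sketch assumes S has just fired, strengthens the synapses whose cortical inputs were active, and stands in for the conserved chemicals with a hypothetical normalisation that keeps the total synaptic weight fixed, so strengthening the active synapses necessarily weakens the inactive ones. The rate and the normalisation scheme are assumptions, and the function names are illustrative.

```python
def striatal_drive(weights, pattern):
    """Total input S receives when the cortical neurons in `pattern` (0/1 list) fire."""
    return sum(w * p for w, p in zip(weights, pattern))


def dopamine_gated_update(weights, active, dopamine, rate=0.5):
    """Return new cortico-striatal weights for a neuron S that has just fired.

    Without dopamine, nothing changes. With dopamine, synapses whose inputs were
    active are boosted in proportion to the dopamine level, then all weights are
    rescaled so their total is unchanged (a stand-in for conserved resources).
    """
    if dopamine <= 0:
        return list(weights)
    total = sum(weights)
    boosted = [w * (1.0 + rate * dopamine) if a else w
               for w, a in zip(weights, active)]
    scale = total / sum(boosted)
    return [w * scale for w in boosted]


context_c = [1, 1, 0, 0]
weights = dopamine_gated_update([1.0, 1.0, 1.0, 1.0], context_c, dopamine=1.0)
# S now responds more strongly to context C than to the complementary pattern.
```

After one dopamine-paired episode the weights become roughly `[1.2, 1.2, 0.8, 0.8]`, so S is biased towards C; calling the update with `dopamine=0.0` leaves the weights untouched, matching the final sentence of the paragraph above.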

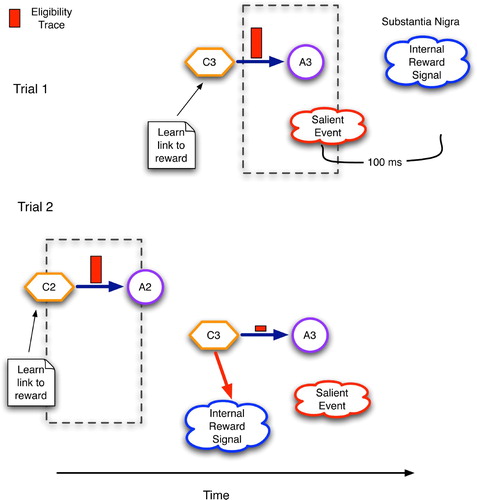

In unfamiliar situations, the SNc fires upon receiving stimulation from various limbic structures, such as the amygdala (the seat of emotions; LeDoux 2002), which triggers on painful or pleasurable experiences. The ensuing dopamine signal encourages the striatum to remember the context that elicited those emotions: the stronger the emotion, the greater the learning bias. Owing to the biochemical temporal dynamics (Houk et al. 1995a), the striatal neurons that become biased (i.e. learn a context) are those that fired approximately 100 ms prior to the emotional response. Hence, the BG learn a context (C) that predicts the reinforcing situation (R).

As dopamine signalling is diffuse, the matriosomes and striosomes in a striatal module are both stimulated to learn. Hence, the critic not only learns to predict important states, but also assists in the learning of proper situation–action pairs by the actor circuit.

Figure 11 portrays the changes in a single context–action link in the basal ganglia as initiated by a reinforcement signal and modulated by an eligibility trace. The key difference between this and the situation in the cerebellum (Figure 7) involves the connections from the STN to the SNc. By strengthening these links, the basal ganglia allow contexts to directly predict rewards, as shown by the arrow from C3 to the internal reward signal at the bottom of Figure 11. This, in turn, allows earlier contexts (C2) to predict the same reward during later trials. Hypothetically speaking, a similar functionality in the cerebellum would require tuneable direct links from granular cells (the context detectors) to the inferior olive (the source of feedback signals).

Figure 11. Temporal regression of predictive competence using eligibility traces and reinforcement signals in the basal ganglia. C2 and C3 are contexts detected by the striatum and STN, whereas A2 and A3 are accepted/chosen versions of C2 and C3, respectively.

Again, descriptions of the critical topological elements differ (see Joel et al. (2002) for a review), but many experts name two paths from the cortex to SNc (Prescott et al. 2003; Graybiel and Saka 2004). The first, often called the hyperdirect pathway, bypasses the striatum and directly excites the STN, which, in turn, excites SNc. The second, termed the direct pathway, involves a strong inhibitory link from striatum to SNc. The hyperdirect pathway is quick but excites SNc for only a short period. Conversely, the direct pathway is slower, but it inhibits SNc for a much longer period.

This timing difference between excitation and inhibition enables these predictions (of reinforcement based on context) to regress backwards in time, such that very early clues can prepare an organism for impending pleasure or pain. As pointed out by Joel et al. (2002), physiological evidence indicates that the excitatory and inhibitory signals to SNc cannot both come from the striatum; more likely, they come from the prefrontal cortex (via the hyperdirect pathway) and the striatum, respectively.

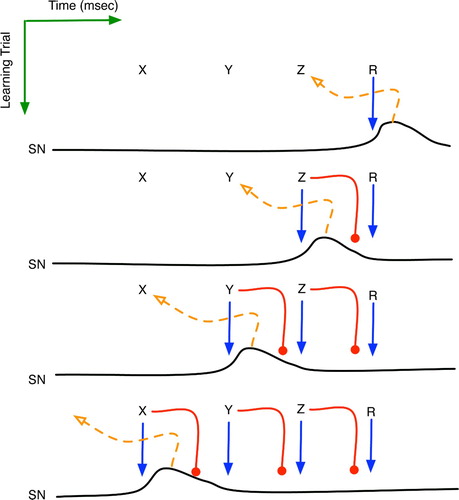

Consider the simplified scenario of Figure 12, in which an animal experiences a temporal series of sensorimotor contexts X, Y and Z before attaining the reinforcing state R. When this sequence first occurs, the attainment of R will be the first indicator of success, and the limbic reward signal will excite SNc and STN, causing dopamine-induced learning of context Z in both. The BG has thus learned a predictive rule: Z eventually leads to R.

Figure 12. The implicit reinforcement learning of sequence X→Y→Z→R. Horizontal plots are the time series activation levels for the substantia nigra pars compacta (SNc). Solid arrows denote excitatory effects upon the SNc, whereas round heads represent the delayed inhibition. Dashed arrows portray the learning of a new context governed by the SNc's dopamine signal.

On a later trial, the occurrence of Z will initially stimulate SNc, and the ensuing dopamine will assist learning of a salient context immediately prior to Z, which is Y. Thus, another striosome is recruited to recognise a new context and notify SNc upon its detection. The system has thus learned that Y leads to Z, but only implicitly, in the sense that from Y the agent knows the actions needed to attain Z without necessarily knowing of Z and its relationship to Y. An outside observer might infer declarative knowledge of the sequence, but its true nature need only be procedural.

When R is attained, the limbic system still signals SNc, but by that time Z’s inhibitory signal has reached SNc, thereby preventing further dopamine dissemination. Neuroscientists (Houk et al. 1995b; Kandel et al. 2000) have long known that dopamine signals occur only when a reinforcement is not expected, i.e. not predicted by a prior context. The temporal aspects of the biochemistry and the critic-circuit topology provide a clear explanation: when a context predicts a reward, its latent inhibition of SNc blocks subsequent attempts to stimulate it. Finally, on a still later trial, the occurrence of Y will stimulate SNc, causing X to be encoded by a striosome and thus implicitly learned.
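The trial-by-trial regression of the dopamine burst from R back to X can be reproduced with a deliberately coarse discrete-time sketch. It assumes one time step per context, treats a learned context as exciting SNc at its own step (standing in for the fast hyperdirect route) and inhibiting SNc throughout the following step (standing in for the slower, longer-lasting direct route), and lets each unblocked burst recruit a striosome for the immediately preceding context. The function name `dopamine_bursts` and the one-step granularity are illustrative assumptions, not parameters of the cited models.

```python
def dopamine_bursts(sequence, reward_state, n_trials):
    """Per trial, list the states at which SNc emits an unblocked dopamine burst."""
    learned = set()  # contexts recognised by a striosome that now excite SNc
    history = []
    for _ in range(n_trials):
        bursts = []
        for t, state in enumerate(sequence):
            # Excitation: the limbic reward signal, or a previously learned context
            excited = state == reward_state or state in learned
            # Delayed striatal inhibition from a learned context one step earlier
            inhibited = t > 0 and sequence[t - 1] in learned
            if excited and not inhibited:
                bursts.append(state)
                if t > 0:
                    # Dopamine recruits a striosome for the context just before the burst
                    learned.add(sequence[t - 1])
        history.append(bursts)
    return history


if __name__ == "__main__":
    for trial, bursts in enumerate(dopamine_bursts(["X", "Y", "Z", "R"], "R", 5), start=1):
        print(trial, bursts)
```

Over five trials of X→Y→Z→R the burst occurs at R, then Z, then Y, then X, after which it stays at the earliest cue, because every later excitation is cancelled by the inhibition of the context that predicted it.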

In the end, what predictive information does this model of the BG produce? An outside observer might infer that, indeed, the sequence is now explicit knowledge of the system. However, this model indicates that only the following associations have been acquired, in approximately the order shown:

1.

2. When in Z, perform action a_z to attain the reward state R.

3.

4. When in Y, perform action a_y, which just happens to put the system in state Z, although the system itself has no direct knowledge of this.

5.

6. When in X, perform action a_x, which just happens to put the system in state Y.

Sequence learning is often posited as a key faculty of the basal ganglia (Houk et al. 1995b; Prescott et al. 2003; Graybiel and Saka 2004), but the above description implies that the BG only learns how to get from one element of a sequence to the next, just as the outfielder's cerebellum helps him get to the ball. Actual knowledge of the links between sequence elements need not be explicitly represented anywhere in the system.

5. Predictive topologies

Despite their many anatomical and (apparent) functional differences, the neural networks of the cerebellum and basal ganglia share several important features:

1. The entry points to each (granule and striatal cells, respectively) have high fan-in, strongly inhibit one another, and appear to serve as detectors of contexts with significant temporal extent.

2. The downstream pathways from these context cells are parallel tracts, with little integration.

3. Outputs have direct effects upon physical actions (cerebellum) or planning states that prepare the agent for action (basal ganglia).

4. Synaptic biochemistry embodies eligibility traces with maximum values appearing along pathways that were active just prior (approximately 100 ms) to the supervisory/reinforcement signal.

As described earlier, the tuning of this plethora of context-sensitive rules is driven by error or reward signals and modulated by eligibility traces. It yields procedurally predictive controllers that are adapted to the inherent sensory and motor delays of the organism.

The contextual input neurons for procedural prediction appear to detect complex multi-modal patterns, but intra-layer competition among these neurons and the parallel tracts of their efferents seem to preclude the actual representation of complex contexts in any manner that would support explicit reasoning about them. This permits contexts to serve as atomic triggers for action, but little else.

Declarative prediction requires different machinery, i.e. machinery that can associate two patterns, both of which correlate strongly with external states. The review of several neural models of declarative prediction in the following three sections reveals a common connection scheme, which forms the basis of the author's Generic Declarative Prediction Network (GDPN).

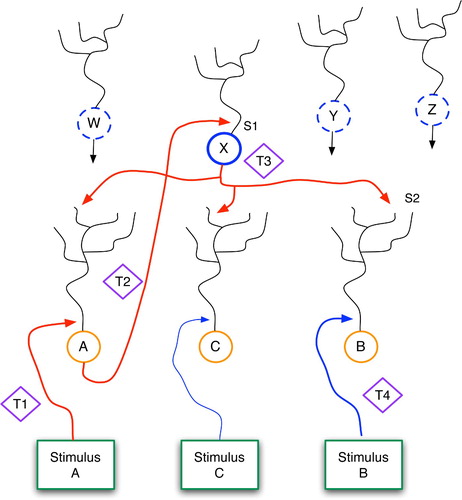

Figure 13 sketches the basic GDPN framework, in which a set of sensory inputs map directly to a set of low-level detector neurons (A, B and C). Above these lies a second (higher) level of neurons (W, X, Y and Z). This topology provides one relatively simple mechanism for learning temporal correlations among events, such as the fact that stimulus A is normally followed by stimulus B. In this diagram, it is important to note that low-level inputs to higher levels arrive proximally, i.e. close to the soma, whereas top-down signals, such as those from X to A, B and C, enter via distal dendrites. In general, this means that low-level signals can more easily drive the activity of their high-level neighbours, whereas high-level signals have a much weaker effect on lower levels.

Figure 13. The Generic Declarative Prediction Network (GDPN). Neurons A, B and C serve as low-level detectors for stimuli A, B and C, and W–Z represent neurons at a higher level. Only the axonal projections from X are shown, although W, Y and Z have similar links to the lower level. The T1–T4 diamonds represent time steps, and S1 and S2 denote important synapses, as discussed further in the text.

Consider a situation in which stimulus A precedes stimulus B. The following series of events explains how the network learns to predict B when A occurs in future situations. First, at time t1, stimulus A has a strong effect upon neuron A, via its proximal synapse. Neuron A then fires and sends bottom-up signals to W, X, Y and Z. At this level, as in all levels of the brain, neurons fire randomly, with probabilities depending upon their electrochemical properties and those of their surroundings. Assume that neuron X happens to fire during, or just after (i.e. within 100 ms of), the firing of neuron A. If synapse S1 is modifiable, the A–X firing coincidence will strengthen S1 via standard Hebbian learning. In reality, several such high-level neurons may coactivate with A and have their proximal synapses modified as well.

When X fires, it sends signals horizontally and to both higher and lower levels. The top-down signals have a high fan-out, impinging upon the distal dendrites of neurons A, B and C. Because they enter distally, via unrefined synapses, these signals have only weak effects upon their respective somata, so at time t3 neurons B and C receive only mild stimulation. At this stage, we can metaphorically say that: (a) X is waiting for B and C (and thousands or millions of other low-level neurons) to fire; and (b) X hedges its bets by investing equally and weakly in each potential outcome.

At time t4, when event B occurs, neuron B will fire hard due to the proximal stimulation from below. This will cause further bottom-up signalling, as when A fired, but the critical event for current purposes involves the LTP that occurs at synapse S2. Previously, stimulation from X alone was not sufficient to fire neuron B. But if synapse S2 houses NMDA receptors, as do many dendrites throughout the brain, then the coincidence of B firing and S2 being (even mildly) active in the 100-ms time window prior to t4 will lead to strengthening of S2 (Kandel, Schwartz and Jessell 2000). Thus, in the future, the firing of X will send stronger signals across S2, possibly powerful enough to fire neuron B without help from stimulus B.

Through one or several A-then-B stimulation sequences, S1 and S2 can be modified to the point that an occurrence of stimulus A will fire neuron A, as before, but this will then directly cause X to fire, which in turn will fire neuron B. Thus, stimulus A will predict stimulus B. Over time, neuron X ceases to hedge its bets and achieves a significant bias towards neuron B. This stems from both the strengthening of S2 and the weakening, via LTD, of X's synapses upon other low-level neurons, as explained below. Thus, X simply becomes a dedicated link between A and B. In a larger system, X and other neurons at its level would become links between a pattern of activation in the lower level, P1, and a subsequent pattern, P2.
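The A-then-B protocol can be sketched as a toy two-level network. The class name `GDPN`, the threshold, the learning rate and the initial weights are all hypothetical; X is simply assumed to be the high-level neuron that happens to co-fire with A (the random coincidence in the text), and learning is reduced to fixed-size LTP/LTD steps on S1-type (proximal) and S2-type (distal) synapses.

```python
class GDPN:
    """Toy two-level Generic Declarative Prediction Network (hypothetical parameters)."""

    def __init__(self, low=("A", "B", "C"), high=("W", "X", "Y", "Z"),
                 threshold=0.5, lr=0.2, up_init=0.2, down_init=0.05):
        self.low, self.high = list(low), list(high)
        self.threshold, self.lr = threshold, lr
        # Proximal bottom-up synapses, e.g. S1 = A -> X
        self.up = {lo: {hi: up_init for hi in self.high} for lo in self.low}
        # Weak distal top-down synapses, e.g. S2 = X -> B: the initial bet hedging
        self.down = {hi: {lo: down_init for lo in self.low} for hi in self.high}

    def train(self, first, second, coactive):
        """One first-then-second episode in which `coactive` happens to fire with `first`."""
        # t1: `first` drives its detector and `coactive` co-fires -> Hebbian LTP at S1
        self.up[first][coactive] = min(1.0, self.up[first][coactive] + self.lr)
        # t3-t4: `coactive` weakly stimulates every low-level neuron; only `second` fires
        for lo in self.low:
            w = self.down[coactive][lo]
            if lo == second:
                self.down[coactive][lo] = min(1.0, w + self.lr)  # coincidence -> LTP at S2
            else:
                self.down[coactive][lo] = max(0.0, w - self.lr)  # unfulfilled bet -> LTD

    def recall(self, stimulus):
        """Present `stimulus` alone; return firing high-level neurons and predicted low-level ones."""
        fired_high = [hi for hi in self.high if self.up[stimulus][hi] >= self.threshold]
        predicted = {lo for hi in fired_high for lo in self.low
                     if self.down[hi][lo] >= self.threshold}
        return fired_high, predicted


if __name__ == "__main__":
    net = GDPN()
    print(net.recall("A"))  # ([], set()): nothing is predicted yet
    for _ in range(3):
        net.train("A", "B", "X")
    print(net.recall("A"))  # (['X'], {'B'}): A fires X, which fires B
```

With these numbers, three A-then-B episodes suffice: A then fires X, X fires B, and X's distal links to A and C have been depressed to zero, so X has become a dedicated A-to-B link.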

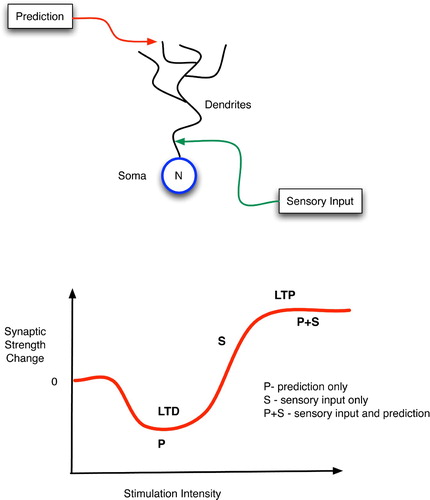

From the viewpoint of synaptic electrophysiology, the acquisition of declarative predictive models within this hierarchical network has a very plausible explanation based on bimodal thresholding. As illustrated in Figure 14, Artola, Brocher and Singer (1990) have shown that weak stimulation of neurons (in the visual cortex) leads to LTD of the synapses that were active during this stimulation, whereas stronger stimulation incurs LTP of the active synapses.

Figure 14. (Top) Top-down, predictive, distal and bottom-up, sensory, proximal inputs to a neuron. (Bottom) Changes in synaptic strength as a function of post-synaptic stimulation intensity.

Three learning cases are worth considering with respect to: (a) a particular neuron, N; (b) its low-level sensory inputs, S, with proximal synapses onto N; and (c) its high-level predictive inputs, P, with distal synapses onto N. First, if S is active but P is not, then the effects of S on N will produce a high enough firing rate in N to incite LTP of the S-to-N proximal synapse. Hence, N will learn to recognise certain low-level sensory patterns.

Second, if both P and S provide active inputs to N, then an even higher firing rate of N can be expected, so LTP of both the S-to-N and P-to-N synapses should ensue. In essence, the predictive and sensory patterns create a meeting point at N by tuning the synapses there to respond to the P-and-S conjunction. In fact, after repeated co-occurrences of S and P, the synapses in N may strengthen to the point of responding to the P-or-S disjunction as well, in effect trusting the prediction P even in the absence of immediate sensory confirmation.

In the third case, when only P is active, the distal contacts of the P axons may suffice only to stimulate N weakly, thus leading to LTD: a weakening of the P-to-N synapses. Hence, future signals from P will not suffice to fire N, and thus P's predictions will not propagate through N in the absence of verification from S. In short, the system learns that P is not a good predictor of S.

These three scenarios provide a very simple mechanism for the synaptic tuning that gradually converts a blanket of bet-hedging anticipatory links into a few dedicated connections between associated neural patterns.
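The three cases can be checked with a small sketch of the two-threshold plasticity curve in Figure 14. The thresholds and synaptic drives below are hypothetical numbers, chosen only so that a proximal input alone crosses the upper threshold and a distal input alone lands between the two; the measured curve is graded, whereas this sketch returns only the sign of the change.

```python
THETA_MINUS = 0.2  # assumed lower threshold: below it, no plasticity
THETA_PLUS = 0.6   # assumed upper threshold: above it LTP, between the two LTD
W_PROXIMAL = 0.8   # assumed drive of the proximal sensory synapse S -> N
W_DISTAL = 0.3     # assumed drive of the distal predictive synapse P -> N


def plasticity_sign(post_activity):
    """Sign of the change at active synapses for a given post-synaptic activation."""
    if post_activity < THETA_MINUS:
        return 0
    if post_activity < THETA_PLUS:
        return -1  # weak depolarisation -> LTD
    return +1      # strong depolarisation -> LTP


def learning_case(s_active, p_active):
    """Return the sign of change for S -> N and P -> N; inactive synapses do not change."""
    post = W_PROXIMAL * s_active + W_DISTAL * p_active
    sign = plasticity_sign(post)
    return {"S->N": sign * int(s_active), "P->N": sign * int(p_active)}


if __name__ == "__main__":
    # Case 1: S alone; case 2: S and P together; case 3: P alone
    for s, p in [(True, False), (True, True), (False, True)]:
        print(s, p, learning_case(s, p))
```

The first case strengthens only S-to-N, the second strengthens both synapses, and the third lands in the LTD band, so the unconfirmed prediction P-to-N is weakened.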

In the following three sections, the neocortex, hippocampus and thalamocortical system are analysed with respect to prediction, with each showing clear evidence of the bet hedging and refinement so characteristic of the GDPN.

6. Declarative prediction in the neocortex

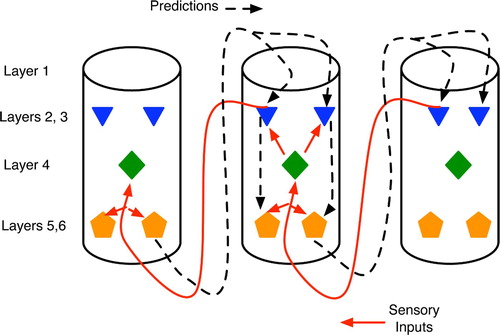

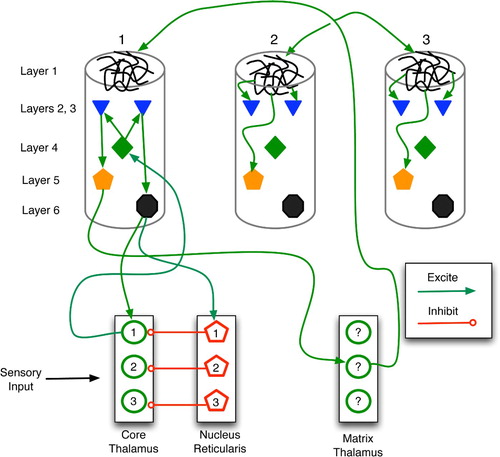

The neocortex provides a very straightforward instantiation of the GDPN, with individual neurons replaced by cortical columns. The neocortex is the thin outer surface of the cerebrum, only a few millimetres thick and composed of six cell layers. These cells appear to be grouped into vertical columns (Mountcastle 1998), which are often viewed as processing modules (Fuster 2003; Hawkins 2004). In mammals, it is convenient, and reasonably accurate, to view cortical columns near the back of the head as processors of primitive sensory information, particularly visual, whereas the more anterior columns process and represent higher-level concepts (Kandel et al. 2000; Fuster 2003). Under this abstraction, bottom-up sensory-driven interpretation processes involve cascades of activation patterns moving from the back to the front of the neocortex. Conversely, top-down, memory-biased processing moves from front to back, as shown in Figure 15.

Figure 15. Abstract view of cortical columns and top-down versus bottom-up information flow. Bottom-up flow (solid lines) goes from layers 2 and 3 of the sending column to layer 4 of the higher-level column, but with additional synapses on to the large pyramidals in layers 5 and 6 (pentagons). In the top-down pathway (dotted line), large pyramidals output to layer 1 of lower-level columns, with the signal eventually reaching layers 5 and 6 via either the layer 2–3 relays or directly via long dendrites from the large pyramidals.

Within each cortical column, the neurons capable of emitting the strongest and most influential signals to other columns are large pyramidals with cell bodies residing in the lower layers, 5 and 6 (Mountcastle 1998). Axons emanating from these two layers tend to synapse on lower-level cortical columns (Rolls and Treves 1998; Hawkins 2004), particularly motor neurons, and on the thalamus, a subcortical structure known as a key relay station for sensory signals and believed to play a central role in integrating information (Sherman and Guillery 2006). The dendrites of these large pyramidal cells extend up to layer 1, which is essentially a dendritic mat receiving inputs from both higher-level cortical columns and subcortical structures such as the thalamus, hippocampus and basal ganglia. Signals from layer 1 reach the large pyramidals either directly, via the latter's dendrites, or indirectly via small neurons in layers 2 and 3.

Incoming axons from other columns can synapse with the large pyramidals at just about any point along their dendrites, from layer 4 up to layer 1. Proximal synapses (i.e. those close to the cell body, such as in layer 4) typically have a stronger effect upon the pyramidal's firing activity than do distal synapses at layer 1 or relay pathways through layers 2 and 3 (Mountcastle 1998).

Of critical importance to understanding predictive-model learning in the neocortex is the fact that the axonal inputs from lower-level (i.e. posterior) cortical columns tend to enter higher-level cortical columns in layer 4, with some synapses also forming at layer 6 (Mountcastle 1998; Kandel et al. 2000; Hawkins 2004). Thus, the low-level inputs form synapses near the cell bodies of the large pyramidals, whereas the inputs from higher-level (i.e. anterior) columns normally connect via layer 1. The immediate implication is that low-level sensory signals, which essentially represent the organism's current sensation of reality, have a stronger influence upon a cortical column than do the high-level thoughts (i.e. predictions) that often bias perception.

Hawkins (2004) emphasises the branching factors of bottom-up versus top-down pathways in the neocortex. In general, the number of cortical columns decreases in moving up the processing hierarchy. Hence, bottom-up pathways appear convergent, in that many primitive sensory neurons feed into the same higher-level neurons. Conversely, top-down pathways appear divergent, with one associative neuron signalling many lower-level columns. Hence, a predictive high-level neuron, P, may initially supply many lower-level neurons, in effect encoding a bet-hedging expectation that many different sensory patterns will be active when P fires. Through experience, many of these divergent connections will be pruned as their synapses weaken due to unfulfilled expectations and the resulting LTD.

Learning of a temporal correlation between stimulus states A and B follows the basic GDPN protocol in the cortex. Low-level cortical columns serve as detectors for specific features and (in moving up the hierarchy) combinations of features. Assuming column C_A detects stimulus A, its layer 2–3 neurons will fire, sending signals to the proximal dendrites of layer 5 neurons in higher-level columns. Any of those neurons that randomly fire in that same time frame will thus have their synapses from C_A strengthened. Assume X is one such layer 5 pyramidal. It will send divergent axons to layer 1 of many lower-level columns, thus hedging all bets and waiting for the next stimulus detector to fire. When stimulus B arrives, its detector column, C_B, activates (i.e. its layer 5 pyramidals fire) and the links between X and layer 1 of C_B are enhanced. After one or several occurrences of A-then-B, C_A will fire X, which will then fire C_B, even in the absence of stimulus B.

Another key aspect of this model concerns temporal relationships. Assume an initial sensory scenario, S_1, at time t_1. This will propagate up the cortical hierarchy but will also evoke top-down predictive signals in layers that house expectations associated with S_1. For these expectations to propagate further down the hierarchy, they will need to match new sensory data (thereby firing the layer 5 pyramidals that send signals to lower levels). Owing to the inherent time lag in neural signalling pathways, the predictions related to S_1 will need to match up with ascending sensory data from situation S_2, which occurred at a slightly later time, t_2. Hence, the natural time delays in the system will ensure the learning of predictions between states at times t_1 and t_2, neatly corresponding to our general conception of causal knowledge.
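The claim that signalling delays pair a state with its successor can be illustrated with a deliberately abstract sketch. It assumes that a state's top-down prediction reaches the lower levels exactly when the bottom-up data from a fixed number of steps later (`lag`) does, and it replaces synaptic learning with a simple co-occurrence count; the function `learn_lagged_predictions` and the toy episodes are hypothetical.

```python
from collections import Counter, defaultdict


def learn_lagged_predictions(episodes, lag=1):
    """Map each state to the state that most often arrived `lag` steps after it.

    Assumes a state's top-down prediction meets the bottom-up data from `lag`
    steps later, so only those pairs co-occur and get strengthened."""
    counts = defaultdict(Counter)
    for states in episodes:
        for i in range(len(states) - lag):
            counts[states[i]][states[i + lag]] += 1  # Hebbian-style co-occurrence count
    return {s: c.most_common(1)[0][0] for s, c in counts.items()}


if __name__ == "__main__":
    episodes = [["A", "B", "C"], ["A", "B", "D"], ["A", "C"]]
    print(learn_lagged_predictions(episodes)["A"])  # B: A was followed by B most often
```

Because only states one delay apart ever meet, what each state comes to predict is its usual successor rather than an arbitrary co-occurring state.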

Over time, a network of cortical columns with the topology and learning mechanisms described above will adapt its synaptic strengths to form a system that can both interpret sensory data and use top-down expectations to complete partial sensory states and predict future states. In fact, under the general view of a context as an amalgamation of related information with some degree of temporal scope, the completion of a partial context could essentially involve the recall of future states associated with states closer to the present. Hence, many acts of pattern completion embody prediction as well, and the cortex seems particularly adept at this task.

One final aspect of Hawkins's theory of cortical function deserves mention. He postulates that sensory inputs will propagate up the cortical hierarchy until they reach a level at which expectations/predictions (presumably based on previous inputs and/or brain states) match the reality embodied in the current inputs. The correspondence between prediction and reality will then block further upward progress. In short, surprise travels upwards until it ceases to be unexpected. If it confounds all predictions and reaches the higher associational areas, it then feeds into the hippocampus and (assuming significant emotional content) spurs learning, which helps ensure that it will not be such a surprise on its next occurrence. The discussion of the thalamocortical loop (in a later section) sheds further light on these ideas.

7. Declarative prediction in the hippocampus

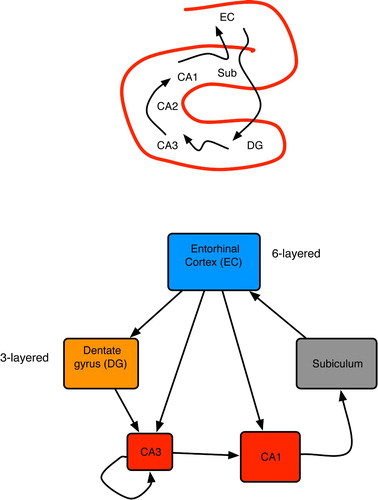

The hippocampus (HC) resides in the temporal lobe and is commonly viewed as the seat of long-term memory formation, but not necessarily of storage (Gluck and Myers 1989; McClelland, McNaughton and O'Reilly 1994; Squire and Kandel 1999). Anatomically, it resembles a horn (Andersen, Morris, Amaral, Bliss and O'Keefe 2007), as shown at the top of Figure 16. A wide variety of high-level associational areas send inputs to HC, most of which are funnelled through the entorhinal cortex (EC). As implied at the bottom of Figure 16, the HC and surrounding regions employ high convergence to compress information between the neocortex and area CA3, and a complementary expansion (via divergence) on the return path through CA1 and subiculum (Rolls and Treves 1998; Andersen et al. 2007). The topology of the HC proper is a main loop with several shortcuts from the EC to CA3 and CA1 (Andersen et al. 2007).

Figure 16. (Top) Basic anatomy of the mammalian hippocampus. (Bottom) Primary hippocampal areas and their connectivity. Box dimensions roughly illustrate relative sizes of neural populations in each area; all connections are excitatory. Based on pictures and diagrams in Burgess and O'Keefe (1994), Rolls and Treves (1998) and Andersen et al. (2007).

Only CA3 contains extensive recurrence, with each neuron connected to 1–4% of the others (Rolls and Treves 1998; Andersen et al. 2007). This suggests that CA3 performs associative learning by standard Hebbian means: neurons that fire together wire together (Hebb 1949). The high convergence from a diverse array of neocortical areas onto CA3 hints at the holistic nature of these patterns. In rats, individual neurons in CA3 and CA1 are known as place cells (Burgess and O'Keefe 2003), because they fire only when the animal is at a particular location, whereas in monkeys they are called view cells, because they fire when the primate merely looks at such a location (Rolls and Treves 1998). Discovery of these cells has motivated a plethora of artificial neural network (ANN) models of HC-based navigation, as summarised by Redish (1999) and Burgess and O'Keefe (2003). Many of these involve implicit predictive knowledge in CA3 and CA1, wherein place cells fire before the animal arrives at the corresponding location.

In one of the more popular models, Burgess and O'Keefe (1994) proposed a layer of goal cells (possibly in the subiculum) that receive inputs from many CA1 place cells. Goals represent very salient locations, often those involving reinforcements. As a simulated rat moves about, the goal cells fire at frequencies correlated with the rat's proximity to their target fields, so navigation is achieved by choosing movements that increase the firing of focal goal cells. Another interesting variant (Kali and Dayan 2000) posits CA3 as the site of predicted situations and CA1 as the site of real situations (via direct inputs from EC). Mismatches between the two drive learning in CA3 and thus improve the accuracy of future predictions.
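The goal-cell navigation rule can be illustrated with a toy sketch. Unlike Burgess and O'Keefe's model, where goal-cell firing is computed from CA1 place-cell inputs, this sketch models a single goal cell's firing rate directly as a Gaussian of the distance to its target field, and the simulated rat greedily takes whichever grid step most increases that rate. The grid, `sigma` and the function names are assumptions for illustration.

```python
import math

MOVES = [(1, 0), (-1, 0), (0, 1), (0, -1)]  # assumed 4-neighbour grid steps


def goal_cell_rate(pos, goal, sigma=2.0):
    """Hypothetical goal-cell firing rate: Gaussian in distance to the target field centre."""
    d2 = (pos[0] - goal[0]) ** 2 + (pos[1] - goal[1]) ** 2
    return math.exp(-d2 / (2.0 * sigma ** 2))


def navigate(start, goal, max_steps=100):
    """Greedily take the grid step that most increases the goal cell's firing."""
    pos, path = start, [start]
    for _ in range(max_steps):
        best = max(((pos[0] + dx, pos[1] + dy) for dx, dy in MOVES),
                   key=lambda p: goal_cell_rate(p, goal))
        if goal_cell_rate(best, goal) <= goal_cell_rate(pos, goal):
            break  # no step raises the firing rate: the rat is at the field's peak
        pos = best
        path.append(pos)
    return path


if __name__ == "__main__":
    print(navigate((0, 0), (3, 2)))
```

Starting at (0, 0) with the goal field centred on (3, 2), the rat reaches the goal in five steps and stops there, since every further move would lower the goal cell's firing.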

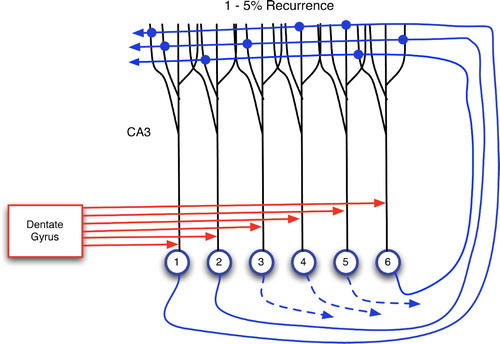

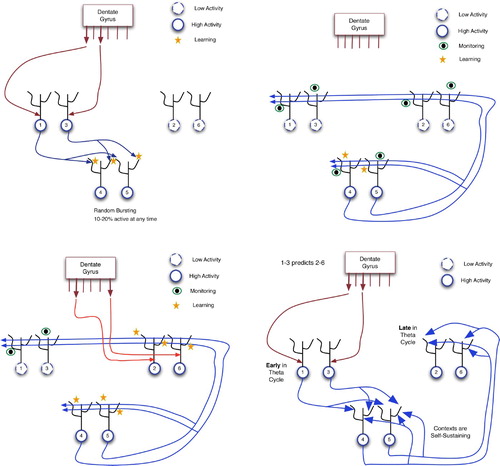

The model now considered in detail is that of Wallenstein, Eichenbaum and Hasselmo (1998). It provides an intricate mechanism for predictive learning that is centred largely in CA3, is able to connect events separated by significant temporal delays, and is quite similar to the author's GDPN. Wallenstein et al.’s model exploits the key differences between proximal and distal contacts to CA3 pyramidal cells. As shown in Figure 17, afferents from the dentate gyrus have proximal targets on CA3 neurons, whereas CA3’s recurrent collaterals have distal synapses. Hence, DG inputs, when active, tend to drive CA3 pyramidals, imposing external conditions (via EC and its cortical afferents) on them. However, the authors cite the well-known 4–10 Hz theta oscillations (Kandel et al. 2000; Buzsaki 2006) as a simple switching mechanism between afferent-driven and collateral-driven CA3 activation. Hence, CA3 can receive DG inputs during one phase of a theta wave and exploit internal computations (via recurrence) during the opposite phase.

Figure 17. Two main sources of input to CA3 neurons: proximal connections from the dentate gyrus and distal, recurrent inputs from CA3 itself. Each CA3 neuron has recurrent links to 1–5% of the others (Rolls and Treves 1998; Andersen et al. 2007).

The following detailed example, based on Wallenstein et al.’s model, reveals very clear GDPN dynamics within CA3, but without the need for a hierarchy of layers. Assume that the system will learn the association between two temporally distinct events, E1 and E2, where E1 involves two sensory features, 1 and 3, while E2 involves features 2 and 6. In the initial phase, shown at the top left of Figure 18, features 1 and 3 enter CA3 via DG. Each DG granule cell projects to a small number (around 15) of CA3 cells (Andersen et al. 2007); this is abstractly depicted as a one-to-one relationship in the figures, where the DG is drawn with six output ports.

Figure 18. Learning a predictive association in the hippocampus. In these diagrams: large arrows extending from DG indicate active output ports; and CA3 neurons are separated into three groups for explanatory purposes only. (Top left) Input of pattern 1–3 from the dentate gyrus via proximal synapses on to CA3 pyramidals. (Top right) Context neurons send distal monitoring signals to their recurrent connections and to themselves. This causes weak activation of distal dendrites throughout CA3 and incites autocatalytic learning within the 4–5 context. (Bottom left) Distal monitoring inputs from the 4–5 context coincide with DG-forced firing of neurons 2 and 6 (as a consequence of event E2). This leads to LTP at the distal synapses of neurons 2 and 6. (Bottom right) Using the learned association between events E1 and E2: when E1 occurs, 4–5 context neurons and then neurons 2 and 6 fire, thus predicting the future occurrence of E2.

When neurons 1 and 3 fire, they send signals to all neurons with which they have recurrent connections. In the hippocampus, this would be 1–5% of all CA3 neurons. Hence, many CA3 neurons receive weak, distal stimulation.

As is common in CA3 and many other brain areas, neurons burst spontaneously (i.e. produce action potentials) at all times, although normally at lower rates than neurons that receive many inputs. In the upper left of Figure 18, neurons 4 and 5 happen to be bursting within approximately the same 100 ms window as the arrival of stimuli 1 and 3. Hence, neurons 4 and 5 fire while receiving distal inputs from 1 and 3. The NMDA receptors on these distal dendrites will then detect this coincidence (of pre-synaptic input and post-synaptic firing) and strengthen the distal synapses, thereby strengthening the connection between 1–3 and 4–5.

Neurons 4 and 5 are referred to as context neurons. As shown below, they form the glue between E1 and E2, even when many seconds (or minutes) separate the two events. At this point, CA3 has learned the association between 1–3 and this context. It must now learn to connect the context to E2. It is therefore important that the context remains active while simultaneously sending out monitoring signals to other CA3 neurons, in anticipation of future inputs from DG. The upper right of Figure 18 shows this state, wherein the context sends distal signals to many neurons, including 4 and 5. Note that because 4 and 5 are firing hard during the arrival of these self-monitoring inputs, their distal synapses will strengthen, as shown on the bottom left of the figure, making the context an autocatalytic activation pattern, and thus one that can remain active for long periods of time. Neurons 1 and 3 are now inactive, because (a) event E1 is completed; and (b) the system is in the second half of a theta oscillation, the half in which internal dynamics dominate extrinsic influences.

After a delay of seconds or even minutes, event E2 occurs, sending signals for features 2 and 6 into CA3 via EC and DG. These proximal inputs force neurons 2 and 6 to fire, but, as shown on the bottom left of Figure 18, the key learning now occurs on the distal dendrites of 2 and 6, which were active during monitoring. This LTP strengthens the links between context neurons 4–5 and neurons 2 and 6. Although not discussed in Wallenstein et al. (1998), the distal links from the context to neurons 1 and 3 could weaken by LTD, because the monitoring inputs did not coincide with post-synaptic activity in those two neurons. Note that all of this assumes that the context and, more importantly, its monitoring signals remain active during the E1–E2 delay. Contexts are able to achieve this prolonged activation if they consist of enough neurons and can quickly strengthen autocatalytic connections.

As shown by the large arrows on the bottom right of Figure 18, the links from E1 detectors to the context and then to E2 detectors have all been enhanced. Thus, when E1 occurs, its distal contacts suffice to fire the context neurons, whose distal contacts can then keep the context active and excite neurons 2 and 6. This constitutes a prediction that E2 will occur.
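The three learning phases of this example can be condensed into a toy sketch. It assumes six CA3 neurons with uniform weak distal recurrent weights, a firing threshold that requires roughly two strengthened distal inputs, and fixed-size LTP/LTD steps; the spontaneous co-firing of neurons 4 and 5 is given rather than simulated, and theta-phase switching is reduced to the order of the learning steps. None of these numbers or function names comes from Wallenstein et al. (1998).

```python
from itertools import product

NEURONS = tuple(range(1, 7))  # six CA3 neurons, numbered as in the example
THRESHOLD = 1.0  # assumed: about two strengthened distal inputs are needed to fire
STEP = 0.5       # assumed size of one LTP or LTD increment


def fresh_weights(w0=0.1):
    """Weak, unrefined distal recurrent weights W[(pre, post)] among all neurons."""
    return {(i, j): w0 for i, j in product(NEURONS, NEURONS)}


def adjust(W, pre, post, delta):
    """Add `delta` to every pre -> post distal weight, clipped to [0, 1]."""
    for i, j in product(pre, post):
        W[(i, j)] = min(1.0, max(0.0, W[(i, j)] + delta))


def learn_episode(W, e1, context, e2):
    # Phase 1: DG forces e1 while the context neurons happen to burst -> LTP e1 -> context
    adjust(W, e1, context, +STEP)
    # Phase 2 (internally driven theta phase): the context monitors itself -> autocatalytic LTP
    adjust(W, context, context, +STEP)
    # Phase 3: DG forces e2 while the context still monitors -> LTP context -> e2 ...
    adjust(W, context, e2, +STEP)
    # ... and LTD onto monitored neurons that stayed silent (here, e1's neurons)
    silent = set(NEURONS) - set(context) - set(e2)
    adjust(W, context, silent, -STEP)


def recall(W, cue, steps=4):
    """Present `cue` for one step, then let distal recurrence run; return the final active set."""
    active = set(cue)
    for _ in range(steps):
        active = {j for j in NEURONS if sum(W[(i, j)] for i in active) >= THRESHOLD}
    return active


if __name__ == "__main__":
    W = fresh_weights()
    print(recall(W, (1, 3)))          # set(): untrained distal links are too weak
    learn_episode(W, (1, 3), (4, 5), (2, 6))
    print(sorted(recall(W, (1, 3))))  # [2, 4, 5, 6]: context persists and predicts E2
```

After a single episode, cueing with E1's neurons 1 and 3 recruits the 4–5 context, which then sustains itself and activates 2 and 6, mirroring the bottom-right panel of Figure 18.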

As inputs to HC come from high-level associational cortices, they need not have direct links to immediate sensory and proprioceptive activity. For example, sequences of activation states in these cortices may represent different steps in a reasoning process. Hence, the predictive learning in CA3 can also encompass associations between any mental states, but particularly when they have high emotional content, because emotions trigger neuromodulators that enhance activation and learning in areas such as CA3 (LeDoux 2002). For instance, when pondering the events of a mystery novel, thoughts of the butler may initiate a chain of inferences ending in the conclusion that he must be the murderer. The emotional content of this deduction may lead to a strong link in CA3 between butler and murderer, with many of the intervening (emotion-free) inferences eventually being forgotten.

8. Declarative prediction in the thalamocortical loop

Although neuroscientists previously viewed the thalamus as merely a transfer point for signals from the sensory periphery to the cortex, it is now known that only about 20% of thalamic inputs are ascending pathways (i.e. upward along the spinal cord) from the senses, while most of its remaining afferents descend from the cortex (Kandel et al. 2000; Sherman and Guillery 2006). Hence, the thalamus is now seen as a key component and integrator of several brain functions, including predictive/sequential learning (Rodriguez, Whitson and Granger 2004).

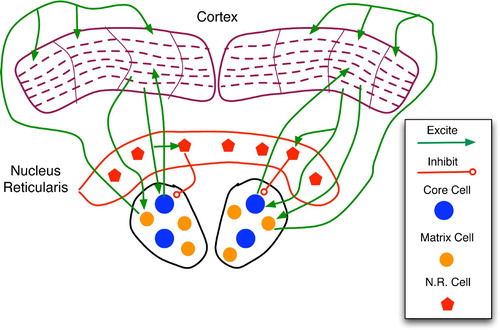

As shown in Figure 19, the thalamus is divided into several nuclei, each of which relays sensory signals to, and receives top-down feedback from, a specific cortical region, such as the auditory, visual or multi-modal cortices. Two key neuron types in each nucleus are the core and the matrix cells. The former are large and have sparse, topographic connections to layer 4 neurons in the corresponding cortical area, whereas the latter are smaller and send diffuse projections to the layer 1 dendritic mats of many cortical columns within a region.

Figure 19. Regions and connections of the thalamocortical loop germane to a discussion of predictive models, based on descriptions in Rodriguez, Whitson and Granger (2004). The cortex is divided into regions, vertical columns, and the six well-known horizontal layers. Each core cell maps to layer 4 of a specific cortical column and receives feedback from layer 6. Matrix cells receive cortical afferents from layer 5 and send signals to the layer 1 dendritic mats of many columns.

Inputs to core cells come from ascending sensory pathways, cortical feedback, and the nucleus reticularis, a strongly inhibitory module. Conversely, matrix cells are believed to receive the majority of their afferents from the cortex (particularly layer 5) (Rodriguez et al. 2004). This makes them important for thalamocortical feedback but not necessarily for the initial sensory relay.

The nucleus reticularis (NR) maps topographically to core cells, with excited NR cells strongly inhibiting their core counterparts. As shown in Figure 20, descending pathways from cortical layer 6 link to the corresponding NR and core cells. As NR neurons synapse proximally on core neurons, their inhibitory effect is very strong, tending to override sensory input from ascending pathways. As pointed out by Granger (2006), the chemistry of synaptic potentials is such that excitatory (glutamate) potentials persist for a mere 15–20 ms, whereas inhibitory (GABA) potentials last 80–150 ms. This, combined with the transmission pathways of Figure 20, implies that a sensory stimulus will briefly excite a core thalamus cell, causing further activation of the corresponding cortical column; but feedback from this column, via NR, will then silence the core neuron for a considerably longer period.
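The timing argument above can be made concrete with a small discrete-time sketch. It is not a biophysical model: the excitatory and inhibitory durations are taken from the ranges just quoted, while the 1-ms time step, the all-or-none firing rule and the 5-ms loop latency (`LOOP_DELAY_MS`, standing for core to column to layer 6 to NR and back) are assumptions chosen for illustration.

```python
# Illustrative timing sketch of the core-cell / NR loop, not a biophysical model.
# EPSP_MS and IPSP_MS come from the ranges quoted in the text;
# LOOP_DELAY_MS (core -> column -> layer 6 -> NR -> core) is an assumption.
EPSP_MS = 20        # glutamate-mediated excitation persists ~15-20 ms
IPSP_MS = 100       # GABA-mediated inhibition persists ~80-150 ms
LOOP_DELAY_MS = 5   # assumed latency of the cortical/NR feedback loop


def simulate_core_cell(stimulus, steps):
    """Return a 0/1 firing trace (1-ms bins) for one core thalamic neuron.

    stimulus(t) reports whether sensory input arrives at time t. Each arrival
    (re)starts an EPSP lasting EPSP_MS; every core spike makes NR inhibition
    arrive LOOP_DELAY_MS later and last IPSP_MS. The cell fires whenever it
    is excited and not inhibited.
    """
    excited_until = -1       # last ms with an active EPSP
    inhibited_until = -1     # last ms with an active NR-driven IPSP
    nr_arrivals = set()      # ms at which NR inhibition reaches the core cell
    trace = []
    for t in range(steps):
        if t in nr_arrivals:
            inhibited_until = max(inhibited_until, t + IPSP_MS - 1)
        if stimulus(t):
            excited_until = t + EPSP_MS - 1
        fires = excited_until >= t and inhibited_until < t
        if fires:
            nr_arrivals.add(t + LOOP_DELAY_MS)
        trace.append(int(fires))
    return trace


continuous = simulate_core_cell(lambda t: True, 200)  # stimulus held on
brief = simulate_core_cell(lambda t: t == 0, 200)     # single input at t = 0
print(sum(continuous[:5]), sum(continuous[5:109]), continuous[109])  # 5 0 1
print(sum(brief))  # 5
```

Even with the stimulus held on continuously, the cell fires only for the loop latency and is then silent for roughly the IPSP duration; under these assumptions the long GABA time course, not the stimulus, sets the rhythm.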

Figure 20. Sketch of thalamocortical loops for three columns of a cortical region. Core, matrix and nucleus reticularis (NR) cells are separated into three modules for illustrative purposes. The main types (but not all instances) of connections are drawn. Within a column, the key connections are: entry layer 4 links to layers 2 and 3; layer 2–3 stimulation of layers 5 and 6; and layer 1 excitation of layers 2, 3 and 5. In general, the column is considered active when layer 5 and 6 neurons fire at high frequency.

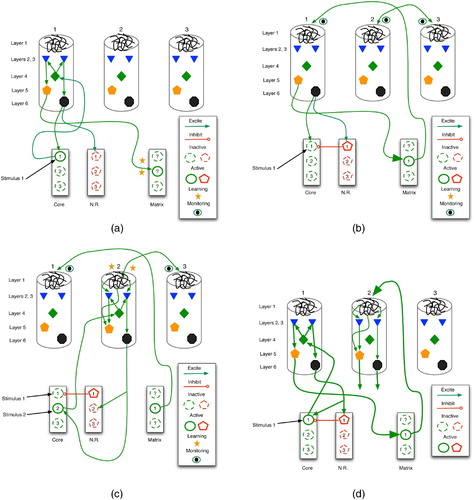

The models of Rodriguez et al. (2004) and Granger (2006) provide excellent insights into the emergence of predictive associations within thalamocortical loops. As sketched in Figure 21(a–c), two stimuli are linked via thalamic and cortical activation, monitoring and learning. Figure 21(d) then illustrates the recall process, wherein stimulus 1 leads to the expectation of stimulus 2.

Figure 21. Learning of a predictive sequence in the thalamocortical circuit. Only the main active connections are shown. (a) Entry of stimulus 1 stimulates the corresponding core thalamus neuron and cortical column, as well as a random matrix thalamus neuron. (b) Matrix stimulation leads to distal monitoring of diverse cortical columns. (c) Entry of stimulus 2 adds proximal stimulation to (already distally stimulated) cortical column 2, producing distal synaptic strengthening. (d) In future trials, stimulus 1 leads to excitation of column 1, then 2, thus embodying a prediction of stimulus 2.

Initially (Figure 21(a)), stimulus 1 enters the core thalamus via ascending pathways, firing core neuron 1, which then relays to layer 4 of column 1. This excites layers 2 and 3, which then stimulate layers 5 and 6. Layer 6 then sends positive feedback to both the original core neuron and its antagonistic NR cell, while layer 5 excites matrix thalamus neurons. Those matrix cells that happen to burst randomly within this time frame (drawn as solid circles) accrue stronger synapses via LTP.

Next (Figure 21(b)), the active matrix cell (now linked to column 1 via LTP) sends monitoring signals to the layer 1 dendritic mats of many cortical columns. In addition, the active NR cell (pentagon 1) inhibits its core thalamus counterpart (circle 1), despite the potentially continuous presence of stimulus 1. This gradually deactivates cortical column 1.

Stimulus 2 now activates the core thalamus (Figure 21(c)), which relays excitation to column 2. The coincident activation of deep-layer neurons and layer 1 dendrites leads to NMDA-driven LTP in column 2, thus forging a strong link between matrix neuron 1 and column 2. Inhibition of core neuron 1 remains in effect, so even if stimuli 1 and 2 are co-present, only stimulus 2 has an effect at this stage.

Figure 21(d) illustrates the recall of the newly formed predictive association. The occurrence of stimulus 1 fires core neuron 1, which stimulates cortical column 1. In turn, this excites matrix neuron 1, which then drives column 2 activity. The firing of layer 5 and 6 neurons in column 2 embodies recall of stimulus 2 as a consequence of stimulus 1.
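The four stages can be caricatured with two weight tables: layer 5 to matrix synapses, and diffuse matrix to layer 1 synapses. The sketch below is a hypothetical toy, not the Rodriguez et al. (2004) model itself; the number of columns and matrix cells, the baseline weight, the unit LTP step and the rule that the driving column is ignored during recall (standing in for its NR-mediated shutdown) are all assumptions.

```python
import random

N_COLUMNS = 4    # hypothetical: cortical columns 0..3, one per stimulus
N_MATRIX = 6     # hypothetical: pool of matrix thalamus neurons
BASELINE = 0.1   # weak diffuse matrix -> layer 1 synapses before learning
LTP_STEP = 1.0   # assumed size of one LTP increment


class ThalamocorticalToy:
    """Toy caricature of stages (a)-(d); every number here is an assumption."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        # layer 5 of column c -> matrix neuron m
        self.col_to_matrix = [[0.0] * N_MATRIX for _ in range(N_COLUMNS)]
        # matrix neuron m -> layer 1 dendritic mats of every column (diffuse)
        self.matrix_to_col = [[BASELINE] * N_COLUMNS for _ in range(N_MATRIX)]

    def learn_pair(self, first, second):
        # (a) stimulus `first` drives its column; one randomly bursting matrix
        #     cell fires in the same window, so its layer 5 synapse gets LTP.
        m = self.rng.randrange(N_MATRIX)
        self.col_to_matrix[first][m] += LTP_STEP
        # (b) that matrix cell now sends distal monitoring input to all columns.
        # (c) stimulus `second` adds proximal input to its column; only there do
        #     distal and proximal activity coincide, so only that synapse grows.
        self.matrix_to_col[m][second] += LTP_STEP

    def recall(self, stimulus):
        """(d) Return the column most strongly primed by `stimulus`, or None."""
        drive = self.col_to_matrix[stimulus]
        if max(drive) == 0.0:
            return None                     # no matrix cell recruited yet
        m = drive.index(max(drive))         # most strongly driven matrix cell
        distal = list(self.matrix_to_col[m])
        distal[stimulus] = float("-inf")    # driving column assumed silenced by NR
        return distal.index(max(distal))


toy = ThalamocorticalToy(seed=0)
toy.learn_pair(1, 2)
print(toy.recall(1))  # 2: stimulus 1 now predicts stimulus 2
print(toy.recall(0))  # None: nothing learned for stimulus 0
```

Which matrix cell gets recruited depends on the seed, but the prediction does not: whichever cell happened to burst becomes the bridge between the two columns.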

Although they serve our purpose well, these models were originally designed to show the role of thalamocortical activity in perceptual processing. In that setting, stimulus 1 represents the set of most salient features in a percept, typically known as the invariants: the features most common among instances of a perceptual class. For example, in the elephant category, these might include large size and the presence of a trunk. Once these features are recognised, their effects should be damped so that other, more specific attributes can be processed for fine-grained discrimination, when necessary. The negative feedback loop from cortical layer 6 to the nucleus reticularis to core thalamus performs this function. It allows stimulus 2 (which represents a set of secondary features) to dominate processing for a fraction of a second.

The link formed between stimulus 1 and stimulus 2 is indeed anticipatory, but in this classification task the prediction is from one stage of perceptual processing (represented by a neural activity pattern) to another, with no direct connection to temporally sequential events in the external world: the trunk and large body of the elephant do not actually enter our visual field before the other features; they are merely processed first.

Clearly, a sensorimotor system benefits from this incremental processing, because immediate reactions, when necessary, can be mobilised on the basis of only the most salient features, and thus with minimal delay. Evolution would therefore favour such an approach over an all-perception-before-action scheme reminiscent of early artificial intelligence and robotics (Rich 1983). More general predictive knowledge, involving temporally contiguous real-world events, could then capitalise on the same basic thalamocortical system. The only significant difference is in Figure 21(c), where only stimulus 2 would appear, not both 1 and 2. In short, our predictive capacities stem directly from our ability to classify incrementally, or vice versa.

Finally, the thalamocortical model helps explain Hawkins's theory that surprise propagates up the cortical hierarchy. As in Figure 21(d), assume that stimulus 1 has primed column 2, which now fully expects to see stimulus 2. If stimulus 2 arrives, it does so via the core thalamus and then layer 4 of column 2. This further stimulates layers 2–3 and then layers 5 and 6. Because layer 6 now fires very strongly, it sends strong signals to NR, which swiftly inhibits core neuron 2, thus removing the excitatory input to layer 4, which in turn removes a strong excitatory input to layers 2 and 3. As layers 2 and 3 are the main output ports for propagation up the cortical hierarchy, further ascent is blocked or significantly reduced.

Conversely, if stimulus 2 arrives in column 2 without simultaneous expectation-driven firing (beginning in layer 1), then layer 6 may not fire strongly enough to completely inhibit core neuron 2. Thus, column 2 would remain active, sending signals up and down the cortical hierarchy. This conflicts somewhat with our original description of stage 1 of thalamocortical predictive learning in Figure 21(a, b), in which the NR neurons are stimulated (and the corresponding core neurons blocked). However, the dynamics are probably more continuous than discrete, such that NR excitation reduces core and then cortical activity to varying degrees, depending upon NR firing levels.
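The graded version of this argument can be reduced to one function. This is a sketch under loudly stated assumptions: inputs are normalised to [0, 1], layer 6 sums proximal (core) and distal (layer 1) drive linearly, NR activation is a clipped linear function of layer 6 activity above a threshold, and layers 2/3 simply pass on whatever core input survives. None of these forms come from the cited models.

```python
def upward_signal(stimulus, expectation, nr_threshold=1.0, nr_gain=1.0):
    """Residual layer 2/3 output sent up the hierarchy (assumed linear model).

    stimulus: proximal drive from core thalamus to layer 4, in [0, 1].
    expectation: distal layer 1 priming from matrix cells, in [0, 1].
    """
    layer6 = stimulus + expectation                   # deep layers sum both inputs
    nr = min(1.0, max(0.0, nr_gain * (layer6 - nr_threshold)))  # graded NR firing
    core = stimulus * (1.0 - nr)                      # NR suppresses the core cell
    return core                                       # layers 2/3 follow layer 4


for prior in (0.0, 0.5, 1.0):
    print(prior, upward_signal(1.0, prior))
# 0.0 1.0   unexpected stimulus: full upward propagation
# 0.5 0.5   partially expected: partial propagation
# 1.0 0.0   fully expected: propagation blocked
```

The unexpected case and the fully predicted case emerge as the two ends of one continuum, which is the point of treating NR excitation as graded rather than all-or-none.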

This view hints at a learning model wherein, upon seeing an object for the first time, cortical columns corresponding to the most salient features fire, but at levels too weak to fully stimulate NR. Hence, secondary features go largely unnoticed during early trials, as primary features dominate cortical activity. However, with repeated presentation of the object, the salient columns begin to fire more strongly, due to synapses strengthened by simple Hebbian means during earlier trials. Thus, NR becomes a more active player, helping to shut down salient core neurons and columns, thereby allowing access to secondary and eventually tertiary stimuli. In short, we learn the most important features first and require repeated trials to fully absorb the details. Stated differently, learning a feature set S entails the ability to make predictions about S, and as these predictions become more accurate, less processing time is required (and used) for S, and more can be devoted to other features.
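This trial-by-trial account can be simulated in a few lines. The sketch assumes that a column's firing is its salience times a Hebbian weight, that the most salient column still below an NR threshold wins each trial and has its weight incremented, and that columns at or above the threshold are silenced via NR; the saliences, threshold and learning rate are hypothetical values picked so the arithmetic is exact.

```python
def first_noticed(saliences, nr_threshold=2.0, hebb_rate=0.5, max_trials=50):
    """Trial on which each feature first dominates cortical processing.

    saliences are ordered from most to least salient. On each trial the most
    salient feature whose column is still below the NR threshold wins and has
    its synapses strengthened (simple Hebbian growth); columns that fire at or
    above the threshold are silenced via NR, ceding the trial to later features.
    """
    weights = [1.0] * len(saliences)
    first_seen = [None] * len(saliences)
    for trial in range(max_trials):
        for i, salience in enumerate(saliences):
            if salience * weights[i] >= nr_threshold:
                continue                  # NR shuts down this column's core input
            if first_seen[i] is None:
                first_seen[i] = trial
            weights[i] += hebb_rate       # the processed feature is strengthened
            break                         # it dominates cortical activity this trial
    return first_seen


print(first_noticed([1.0, 0.75, 0.5]))  # [0, 2, 6]
```

The primary feature is processed at once, the secondary one only once the primary column fires hard enough to recruit NR, and the tertiary one later still: the less salient a feature, the more trials pass before it gets access.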

Along the same lines, a predictive sequence cannot be learned in its entirety at once; it is acquired piecemeal, with links between temporally later events forming only after earlier segments have become familiar.

9. General features of predictive circuitry

A high-level topological comparison indicates the differential predictive functionality of the procedural and declarative circuits. Under the reasonably standard view that our explicit representations (i.e. those that we can attend to, reason about, etc.) contain a good deal of perceptual information (i.e. information based on past or present sensations), it makes sense that a predictive association between two such representations involves connections within the more perceptually oriented areas of the brain. If these patterns represent similar world states, then, due to the topological organisation of much of the brain, they probably reside near one another and may even share many active neurons. A bird's-eye view of these two patterns and the active synapses that embody the predictive link would reveal a tight mesh of intra-layer and intra-region connections: high recurrence.

Conversely, the cerebellum and basal ganglia have little recurrence. They exhibit parallel tracts that map sensorimotor contexts to actions. The basal ganglia appear slightly more cognitive in that they may gate sensorimotor contexts, which embody perceptions plus intended actions, into prefrontal areas, where these contexts can then influence future motor acts and reasoning.

The procedurally predictive areas are therefore hard-pressed to link representation R1 for world-state 1 to R2, the representation for world-state 2. However, they can learn to map R1 to actions and action plans that are appropriate for world-state 2; in a fast-moving world, this is often all that is required, or permitted.

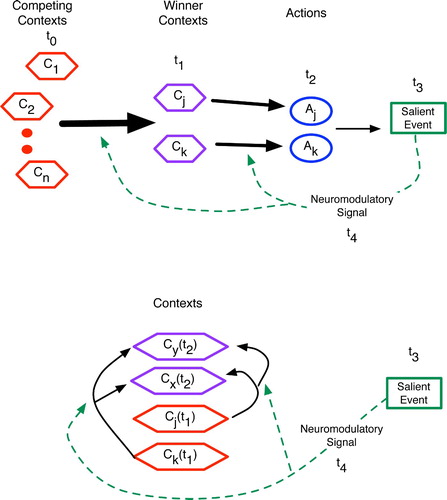

As shown in Figure 22, a key difference between the procedural and declarative predictive mechanisms involves space. In the procedural areas, activation patterns move along parallel tracks, and the learning initiated by a salient event (such as an error or reinforcement signal) targets synapses between one area and the next. For example, in the cerebellum, the competing contexts stem from diverse brain regions whose axons converge upon the granular cells, whereas the winning contexts are those whose granular cells can maintain activity in the face of inhibitory signals from other granular cells. By linking the granular cells to the Purkinje cells, the parallel fibres provide a distinct spatial location for the transfer from contexts to actions. The basal ganglia house similar parallel tracks, although the direct connection between any BG area and action is less obvious, as most BG outputs target high-level cortical areas.
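The procedural pathway described above (competing contexts, a winner-take-most granular layer, then plastic parallel-fibre synapses onto a Purkinje output) can be sketched as follows. Assumptions: the mossy-fibre to granular weights are hand-picked so that each of three one-hot contexts recruits its own pair of granular cells, k-winners-take-all stands in for inhibitory competition, and a plain delta rule stands in for climbing-fibre-driven plasticity. It is an illustration of "learning between one area and the next", not a cerebellar model.

```python
# Hypothetical mossy-fibre -> granular weights: 3 input channels, 6 granular cells.
MOSSY_TO_GRANULAR = [
    [1.0, 0.2, 0.1],
    [0.9, 0.1, 0.3],
    [0.1, 1.0, 0.2],
    [0.3, 0.8, 0.1],
    [0.2, 0.1, 1.0],
    [0.1, 0.3, 0.9],
]
K_WINNERS = 2  # granular cells that survive the (assumed) inhibitory competition


def winning_granules(context):
    """Competing contexts converge on granular cells; the k most driven survive."""
    drive = [sum(w * x for w, x in zip(row, context)) for row in MOSSY_TO_GRANULAR]
    ranked = sorted(range(len(drive)), key=lambda g: drive[g], reverse=True)
    return ranked[:K_WINNERS]


def purkinje_output(pf_weights, context):
    """Purkinje drive: summed parallel-fibre input from the winning granules."""
    return sum(pf_weights[g] for g in winning_granules(context))


def train_purkinje(examples, epochs=30, rate=0.25):
    """Adjust parallel-fibre -> Purkinje weights from an error signal (delta rule)."""
    pf_weights = [0.0] * len(MOSSY_TO_GRANULAR)
    for _ in range(epochs):
        for context, target in examples:
            error = target - purkinje_output(pf_weights, context)  # "climbing fibre"
            for g in winning_granules(context):  # only active synapses change
                pf_weights[g] += rate * error
    return pf_weights


examples = [([1, 0, 0], 0.8), ([0, 1, 0], -0.5), ([0, 0, 1], 0.2)]
weights = train_purkinje(examples)
for context, target in examples:
    print(context, round(purkinje_output(weights, context), 3), target)
```

All plasticity sits on the synapses between two distinct populations (granular and Purkinje); nothing in the granular layer learns to predict the next granular pattern.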

Figure 22. Abstract comparison of the procedural (top) and declarative (bottom) predictive mechanisms, with relative time points given as t0–t4 and vertical cell columns indicating brain regions. (Top) In procedural prediction, neural patterns corresponding to competing contexts, winning contexts and proposed actions have distinct spatial locations in the brain. Salient events and the ensuing feedback (via neuromodulators) then alter synapses between these regions, such as between granular cell contexts of the cerebellum and the action-regulating Purkinje cells. Similar spatial localisation occurs in the basal ganglia. (Bottom) In declarative areas such as the hippocampus, cortex and thalamocortical system, active patterns have considerably more spatial overlap such that neuromodulatory feedback affects recurrent (often distal) connections within a region. The competition among contexts thus occurs in place.

In learning declarative predictions, the brain must link contexts to contexts, and these often reside in the same brain region. Hence, learning involves a modification of recurrent arcs, as shown at the bottom of Figure 22, and as detailed by the previous models of the cortex, hippocampus and thalamocortical system.
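By contrast, declarative learning stores the context-to-context link inside a single population. A minimal sketch, assuming binary patterns written as sets of active unit indices, a plain asymmetric Hebbian rule (presynaptic activity at step t, postsynaptic at step t + 1) and k-winners-take-all recall; the overlapping patterns A, B and C are hypothetical.

```python
def learn_sequence(patterns, n_units, rate=1.0):
    """Asymmetric Hebbian rule on recurrent weights within one population.

    w[post][pre] grows when unit `pre` is active at step t and unit `post`
    is active at step t + 1.
    """
    w = [[0.0] * n_units for _ in range(n_units)]
    for prev, nxt in zip(patterns, patterns[1:]):
        for post in nxt:
            for pre in prev:
                w[post][pre] += rate
    return w


def recall_next(w, active, k):
    """Propagate `active` through the recurrent weights; keep the k most driven units."""
    drive = [sum(row[pre] for pre in active) for row in w]
    ranked = sorted(range(len(w)), key=lambda unit: drive[unit], reverse=True)
    return set(ranked[:k])


A, B, C = {0, 1, 2}, {2, 3, 4}, {4, 5, 6}   # overlapping patterns in 8 units
w = learn_sequence([A, B, C], n_units=8)
print(recall_next(w, A, 3) == B, recall_next(w, B, 3) == C)  # True True
```

Unlike the feedforward sketch of the procedural case, the weight matrix here is square: the same units are both the source and the target of the predictive link, and patterns that share units (unit 2 in A and B, unit 4 in B and C) still recall the correct successor.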

As reviewed by Dominey (2003), ANN experiments indicate that recurrence alone will not suffice to learn pattern sequences, because delayed neuromodulatory feedback faces a credit assignment problem: it is difficult to target the particular rounds of activation that accounted for an action. However, as Dominey, Lelekov, Ventre-Dominey, and Jeannerod (1998) show, an ANN that combines leaky integrator neurons having a diverse range of time constants with synaptic modification restricted to a small portion of the network can learn temporal sequences.
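Why diverse time constants help can be shown with a deliberately simplified stand-in for that kind of network: a bank of leaky integrators with no recurrent connections, where only a linear readout is trained. The readout is fitted by least squares with NumPy, which is our substitution rather than the learning rule of Dominey et al. (1998), and the repeating sequence `XABCYABD` is hypothetical: after `B`, the correct continuation depends on whether `X` or `Y` occurred three steps earlier.

```python
import numpy as np

SYMBOLS = "ABCDXY"
CYCLE = "XABCYABD"  # hypothetical: after "B", the next symbol depends on X vs Y


def run_integrators(seq, taus):
    """One leaky integrator per (symbol, tau); returns the state after each step."""
    taus = np.asarray(taus, dtype=float)
    state = np.zeros((len(SYMBOLS), len(taus)))
    states = []
    for ch in seq:
        u = np.zeros((len(SYMBOLS), 1))
        u[SYMBOLS.index(ch)] = 1.0
        state += (u - state) / taus                     # Euler step of tau*ds/dt = u - s
        states.append(np.append(state.ravel(), 1.0))    # plus a bias input
    return np.array(states)


def readout_accuracy(taus, n_cycles=20, burn_in=10):
    """Train only a linear readout (least squares) to predict the next symbol."""
    seq = CYCLE * n_cycles
    states = run_integrators(seq, taus)[:-1]                 # state at step t
    targets = np.array([SYMBOLS.index(c) for c in seq[1:]])  # symbol at t + 1
    onehot = np.eye(len(SYMBOLS))[targets]
    start = burn_in * len(CYCLE)                             # skip transients
    train = slice(start, start + len(CYCLE))                 # one full cycle
    test = slice(start + len(CYCLE), None)
    readout, *_ = np.linalg.lstsq(states[train], onehot[train], rcond=None)
    predicted = (states[test] @ readout).argmax(axis=1)
    return float((predicted == targets[test]).mean())


print(readout_accuracy([1, 2, 4, 8]))  # diverse time constants
print(readout_accuracy([1]))           # memoryless: cannot resolve C vs D after B
```

With only `tau = 1` each unit merely copies the current input, so the two `B` states are identical and no readout can separate their successors; the slower integrators retain a fading trace of `X` versus `Y` that a trained readout can exploit.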

The models in this article indicate other factors that may be critical for learning in recurrent regions, including eligibility traces (which seem to be a standard component of biologically plausible learning rules; Fregnac 2003), a wealth of available neurons to serve as links between two stimulus patterns, phasic toggling between external driving and recurrent signalling, and the general monitoring mechanism involving distal dendrites.
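Eligibility traces address the credit assignment problem described above: a pre/post coincidence leaves a decaying tag, and a later neuromodulatory signal converts whatever tag remains into a weight change. The following is a minimal three-factor sketch; the decay factor, learning rate, discrete time steps and synapse names are assumptions, not values from Fregnac (2003).

```python
def three_factor_update(coincidences, reward_time, reward=1.0, decay=0.8, rate=1.0):
    """Weight change per synapse under an eligibility-trace (three-factor) rule.

    coincidences maps a synapse name to the time steps at which its pre- and
    postsynaptic neurons were co-active. Each coincidence adds 1 to a trace
    that decays by `decay` per step; when the neuromodulatory signal arrives
    at `reward_time`, each weight changes by rate * reward * trace.
    """
    changes = {}
    for synapse, times in coincidences.items():
        trace = 0.0
        for t in range(reward_time + 1):
            trace *= decay
            if t in times:
                trace += 1.0
        changes[synapse] = rate * reward * trace
    return changes


dw = three_factor_update({"recent": [8], "old": [0], "never": []}, reward_time=10)
print({name: round(value, 3) for name, value in dw.items()})
# {'recent': 0.64, 'old': 0.107, 'never': 0.0}
```

The synapse whose coincidence occurred just before the delayed signal receives most of the credit, one active long before receives little, and an inactive one receives none, even though all three see the same global neuromodulatory signal.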

Evolution has clearly endowed the brains of higher mammals with considerable recurrence, and thus with more sophisticated declarative predictive abilities. The hippocampus was the first major step (Striedter 2005), with both high recurrence within CA3 and the loop organisation of the entire hippocampal region, in which most signals enter and leave via EC. The neocortex, with its massive intra- and inter-region feedback, elaborates that trend. As the cortex has expanded over evolution, it has also sent a greater density of axons to diverse neural regions, both higher and lower. Thus, the most advanced predictive machinery attains a higher degree of control in the mammalian, and particularly primate, brain.

A finer-grained analysis of the five systems above reveals several features that many of them share. These properties involve neurons, synapses and circuitry. Obviously, LTP and LTD are essential for strengthening relevant and weakening irrelevant connections between neurons. When Hebbian LTP governs synaptic change, random bursting also becomes a vital characteristic of predictive networks. In all of the models above, randomly bursting neurons form convergence points for the active neurons of a salient context. This is evident in granular cells of the cerebellum, striatal cells of the basal ganglia, CA3 cells of the hippocampus, and matrix cells of the thalamus. Also, in cortical columns, neurons of layers 4 and 5 exhibit higher degrees of bursting than those of other layers (Mountcastle 1998); as the primary input port for convergent lower-level signals, layer 4 also appears to perform a context-detecting role.
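The shared principle reduces to a few lines: during a salient context, only target neurons that happen to be bursting satisfy the Hebbian coincidence condition, so they, and only they, become convergence points (detectors) for that context. The burst probability, network sizes, seed and one-shot unit LTP step below are hypothetical.

```python
import random


def recruit_detectors(context, n_targets, burst_prob=0.3, rate=1.0, seed=0):
    """One salient episode of Hebbian LTP onto randomly bursting target cells.

    context: indices of active input neurons. Each target neuron bursts with
    probability burst_prob; a synapse grows only if its presynaptic neuron is
    in the context AND its target happens to be bursting.
    """
    rng = random.Random(seed)
    bursting = {t for t in range(n_targets) if rng.random() < burst_prob}
    weights = {}
    for pre in context:
        for t in range(n_targets):
            weights[(pre, t)] = rate if t in bursting else 0.0
    return bursting, weights


def response(weights, context, target):
    """Summed input a target neuron receives when the same context recurs."""
    return sum(weights[(pre, target)] for pre in context)


context = [2, 5, 7]
bursting, weights = recruit_detectors(context, n_targets=10)
detectors = [t for t in range(10) if response(weights, context, t) > 0]
print(sorted(bursting) == detectors)  # True
```

Which neurons become detectors is a matter of chance, but once recruited they respond selectively whenever the context recurs, which is the role the granular, striatal, CA3 and matrix cells play in the models above.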