ABSTRACT

This paper touches upon the philosophical concept of evil in the context of creativity in general, and computational creativity in particular. In this work, dark creativity is introduced and linked to two important pre-requisites of creativity (i.e. freedom and constraints). A hybrid computational system is then presented. It combines one swarm intelligence algorithm, Stochastic Diffusion Search, which mimics the foraging behaviour of one species of ant, Leptothorax acervorum, with one physiological mechanism, which imitates the behaviour of the human immunodeficiency virus. The aim is to outline an integration strategy deploying the search capabilities of the swarm intelligence algorithm and the destructive power of the digital virus. The swarm intelligence algorithm determines the colour attribute of the dynamic areas of interest within the input image, and the digital virus modifies the state of the input image, creating the projection of “evil” over time (evil is used here as the excessive use of underlying freedom). The paper concludes by exploring the significance of sensorimotor couplings and the impact of intentionality and genuine understanding in computational systems, in the light of the philosophical concept of weak and strong computational creativity.

1. Introduction

Computational creativity is a young field that has been attracting increasing numbers of researchers from various domains, including computer science, psychology, cognitive science, robotics and the arts. While computational creativity is based on a larger, more comprehensive body of work on (human) creativity carried out for hundreds of years (see, e.g. Royce, 1898; Prindle, 1906), like many other debated domains it lacks a single unifying definition that commands a clear consensus among active researchers in the field. Additionally, this relatively new field has been dealing with the “hostile” view that machines can never be creative, which rekindles an older battle in the field of artificial intelligence about whether machines can ever be intelligent (see Preston and Bishop, 2002, for more details).

This work does not aim to resolve the above, but tries to offer a perspective that, while not completely ruling out the possibility of a machine being creative one day, introduces and elaborates a concept (weak vs. strong computational creativity) that both addresses the issues above and fits in with the current state of the art in computational creativity. This concept is an extension of the well-established notions of weak and strong artificial intelligence.

Additionally, this paper discusses the role of evil in the field of creativity in general and computational creativity in particular. Following an introduction to the concept of dark creativity and a philosophical discussion arguing its relevance to the topic, a hybrid system, combining a swarm intelligence algorithm with a physiological mechanism, is introduced. The hybrid system can be seen as an “art” generating system in its own right; however, the focus here is on illustrating a few of the concepts presented. In other words, the images generated by the hybrid system in this paper serve as a platform for investigating whether or not the system is capable of exhibiting aspects of computational creativity. Thus, the visual examples are used to initiate a discussion of the role of destruction in computational creativity.

To summarise, this paper discusses the concept of freedom, in its various flavours, within the topic of creativity. It first introduces creativity and dark creativity and discusses the importance of freedom in creativity through the eyes of other thinkers and philosophers; in this part, one of the many definitions of evil, as one possible outcome of the excessive use of freedom, is introduced.

Using this definition, an introduction is given to the visualisation produced by the computational system: the swarm intelligence algorithm used is explained first, and the physiological mechanism of the virus is subsequently presented briefly in this context. Afterwards, details are provided on how the swarm intelligence algorithm and the virus mechanism are hybridised; the behaviour of the virus in the system is contextualised, and the process through which the hybrid system contributes to creating artistic works is explained. Next, a discussion of intentionality and creativity is presented, emphasising the concept of freedom and the possibility that creativity with excessive freedom can go beyond the boundaries of what society can tolerate, or even do harm to the general good. Such creativity is referred to in this paper as “evil”. This term is not used here in a judgemental or religious sense, but as a striking label for creativity with excess freedom; examples given in later sections include a bridge collapsing with great loss of life, a company facing financial ruin, and the generation of unease and anxiety in a society.

Following the above-mentioned topics, a link is made to the concept of weak and strong computational creativity, highlighting the significance of sensorimotor couplings in generating genuine understanding and intentionality; it is in this section that our argument for weak computational creativity is presented. Finally, suggestions for possible future research are given at the end, along with the conclusions.

2. Creativity and dark creativity

In this section, after providing a brief introduction to creativity in general, freedom and constraint (as two of the pre-requisites for creativity) are discussed in the context of artistic creativity as well as other fields. Then creativity's relation with freedom is further discussed. Subsequently, an introduction is provided to the dark side of creativity, which is then followed by a discussion on the related topic of the moral dimension of creativity.

2.1. Creativity

Although discussions of creativity go back at least to Plato and Aristotle, and also extend to the eastern world, creativity has been the subject of deliberate, intense, prolonged and structured research since the president of the American Psychological Association called for further investigation in 1949 (Guilford, 1950). Modern interest was driven by new concepts of intelligence, and was further stimulated by the so-called Sputnik Shock of 1957, which led to the conclusion that engineers in the western world had lost the space race to their counterparts in the eastern bloc because the westerners lacked creativity.Footnote1 Although this initial surge of interest in modern times was driven by a perceived failure of technological creativity, in the ensuing years creativity research has developed largely in the field of psychology, with interest spanning other fields such as engineering, business, marketing, education, and the arts.

The study of creativity is frequently hindered by the pervasive belief that to be creative it is necessary to have a special gift bestowed by a higher power. This view has been extended to encompass the idea that creativity is an inherent property of all living systems, which generate novelty simply because it is their biological nature to do so. This view was recently re-articulated by Rothman (2014), who saw creativity as a way of being and argued that to be creative it is only necessary “to live, observe, think, and feel”. The belief that creativity results from divine inspiration, is simply an epiphenomenon of biological existence, or is a uniquely human state of being seems incompatible with the very idea of computational creativity.

Thinking in the area is also hampered by a range of misconceptions related to what might be called the contents of creativity. Not the least of these is the widespread view that creativity is the exclusive preserve of the arts. McWilliam and Dawson (2008) observe that creativity has been “relegated to the borderlands of the visual and performing arts”. As stated by Baillie (2002), there is also a strong view that creativity can be neither taught nor measured. Again, these views seem to call into question the idea of computational creativity.

However, creativity has been well defined in modern psychological thinking, and the factors that facilitate or hinder its emergence have been studied extensively. Cropley and Cropley (2015) summarised the personality characteristics that research has shown to be strongly associated with the generation of novelty, and also reviewed research on the cognitive processes that favour the production of original ideas. In the same work, they summarised organisational studies focusing on the environment in which creativity takes place, and reviewed investigations that have established the characteristics that make a product, process or service creative. These factors have been combined in a definition that is emerging as the “standard”, as given in Runco and Jaeger (2012): creativity involves the generation of useful novelty in many fields, and the processes, personal properties, and environmental conditions needed for this.

Following the generic account provided on creativity, the next section discusses the importance of freedom and constraints in the generation of artistic creativity.

2.2. Freedom and constraint in artistic creativity

As has been pointed out, one of the main domains in which creativity is discussed and disputed is art, where the relationship between freedom and art has been at the heart of heated debates. The words of Ludwig Hevesi (1843–1910), feuilletonist and art critic of the Secession era, elegantly reflect this thought. The German motto placed at the entrance to the Secession Building in Vienna reads: “Der Zeit ihre Kunst, Der Kunst ihre Freiheit”, which translates as “To Time its Art, To Art its Freedom”.

Centuries earlier, Aristotle had focused on the significance of freedom (materialising itself as having “a tincture of madness”) in generating a creative act:

There was never a genius without a tincture of madness.

However, in one of her latest works, Boden (2010) highlights the fact that the relationship between freedom and creativity is not clear-cut. She states: “A style is a (culturally favoured) space of structural possibilities: not a painting, but a way of painting. Or a way of sculpting, or of composing fugues […] It's partly because of these [thinking] styles that creativity has an ambiguous relationship with freedom.”

This ambiguity arises from the fact that creativity depends not only upon freedom, but also upon the existence of constraints. Johnson-Laird (1988) also highlights the role of freedom and constraints in creativity: “… for to be creative is to be free to choose among alternatives […] for which is not constrained is not creative”. In another example, Hanif Kureishi, a renowned English playwright and novelist, encapsulates these two elements (Kureishi, 2004, p. 147): “In anything genuinely creative there has to be a balance between the push to finish it, and the necessity of allowing it to evolve as far it can go, for it to be as mad as it can be while remaining a communication.”

According to this position, freedom is envisaged as a form of “madness”, while constraints and rules are emphasised as necessary for “remaining a communication” (i.e. for satisfying the constraints of the external world to a sufficient degree for other people to be able to make sense of, and tolerate, the results of the exercise of freedom). An example of failure to keep the exercise of freedom within the limits imposed by the constraints is an artist who stole human body parts from fresh graves so that he could use them in collages. Although the novelty generated by his exercise of artistic freedom was lauded by some critics, he was mainly criticised for having gone too far (i.e. having offended against social constraints concerning respect for the dead), and, as far as the police were concerned, was merely a grave robber, and thus a criminal.

While the link between freedom, constraint and the emergence of artistic creativity is acknowledged, these concepts are not limited to this field. The next section touches upon their significance in other domains.

2.3. Freedom and constraint in other fields

The idea, just outlined, of creativity as involving a clash, or at least a compromise, between freedom and constraints is not confined to discussions of fine art, literature and the like. For instance, Cropley (2015) and Cropley and Cropley (2015) discuss this issue in detail in connection with both engineering creativity and commercial creativity. The example of the Westgate Bridge in the Australian city of Melbourne demonstrates the point. The structural boundaries referred to by Boden were literally enlarged by the introduction of novel ways of using structural steel by twisting it. However, while still under construction, the bridge collapsed with a whiplash action. The bridge's design was novel, but its failure to remain within the constraints imposed by the physical properties of steel (elasticity) led to Australia's worst civil engineering disaster, with considerable loss of life. Turning to the commercial world, the disaster of New Coke is instructive: in blind taste tests the new product was almost universally hailed as superior to its predecessor (Classic Coke), even by its opponents, but it was seen as attacking one of the fundamental pillars of the American way of life (“things go better with Coke”), and the change was rejected by consumers. Thus, not just in the aesthetic realm but in all domains, creativity is subject to the constraints of the world into which it is introduced, whether these constraints are physical (as in the case of the Westgate Bridge), social (as in the case of the negative emotional reaction to New Coke), or artistic/aesthetic (as in Kureishi's remarks above).

Having discussed the importance of both freedom and constraint in artistic creativity and other fields, the next section focuses more on the relationship between creativity and freedom, looking at the impact of excess freedom as well as the role of freedom in swarm intelligence techniques.

2.4. Creativity's ambiguous relationship with freedom

We have already referred to Boden's conclusion (Boden, 2010) that, although freedom is an obvious prerequisite for creativity (where change is impossible, no novelty can be generated), creativity has what she calls an “ambiguous relationship” with freedom. What, then, is the nature of the link between freedom and creativity? One way of looking at this link is to return to the issue of constraints versus freedom. The nature of this dialectic is most readily observable in non-aesthetic settings such as commercial environments, because in such cases the constraints involved are usually concrete and clearly observable (for example, the exercise of freedom must not lead to a company going bankrupt). Creativity turns out to be problematic when the extension of the structural boundaries, which Boden identifies as being at the heart of the creative process, goes too far. As Besemer (2006) put it using commercial terminology, “consumers don't like too many surprises” (p. 171); or, in the words of Cropley and Cropley (2015), commercial creativity is constrained by the “usefulness imperative” (p. 21): not everything goes.

In an aesthetic sense, to paraphrase Kureishi, the creative product has to capitalise on freedom by going as far as it can, but it must not become so mad that it ceases to communicate anything to other people. Otherwise, the creativity exhibits what Worsley (1996) called “the destructiveness of excess”. As Mueller, Melwani, and Goncalo (2012) pointed out, psychological research shows that extending the structural boundaries (creativity) leads to uncertainty. Human beings find low levels of uncertainty stimulating (i.e. they enjoy a bit of novelty), but dislike high levels of it, and display anxiety when confronted with such high levels. Limits to tolerance for uncertainty thus restrain the amount of novelty that can be successfully introduced into a social system. Paradoxically, then, freedom is necessary for creativity, but an excess of freedom goes too far.

This interplay of freedom and constraints has been interpreted in psychological theory as a two-step process of creativity, based on the exercise of freedom paired with adherence to constraints. As Cropley and Cropley (2015) explained, especially in commercial approaches to creativity, this process can be treated as involving a phase of generation of novelty, in which freedom dominates, and a phase of fitting this novelty to the requirements of the particular field, in which constraints dominate. As noted in Cropley and Cropley (2005), in cognitive terms creativity involves a pairing of divergent thinking, through which a field is explored with maximum freedom, and convergent thinking, through which the results of divergent thinking are attuned to the constraints of the surrounding environment.

In the swarm intelligence systems used later in this paper, exploration introduces freedom and exploitation controls the level of constraint: by pushing the swarms towards exploration, freedom is boosted, and by encouraging exploitation, constraint is emphasised. Finding a balance between exploration and exploitation has been an important theoretical challenge in swarm intelligence research, and over the years many hundreds of different approaches have been deployed by researchers in this field. Equally, finding a trade-off between freedom and constraint remains a crucial element of creativity.

Following the discussions in this section and the previous one on freedom, constraint, creativity and the relationship between them, the next section provides an alternative account of creativity, namely the dark side of creativity.

2.5. The dark side of creativity

The question that now arises is how to conceptualise creativity that fails to achieve an acceptable balance of freedom and constraint. This task is made more difficult by the strong tendency to regard creativity as always good. For example, Kampylis and Valtanen (2010) reviewed 42 modern definitions of creativity and no fewer than 120 terms typically associated with creativity (collocations), and concluded that the vast majority of discussions take no account whatsoever of negative aspects of creativity. Despite this, the basic idea that creativity is not always good has been considered since the time of Aristotle. McLaren (1993), for example, highlighted Plato's mistrust of creative people. A simple example of negative creativity would be a worker thinking up an effective new way to steal from a company. Such negative creativity need not involve actual destructive intent: indeed, the thief just mentioned might have a vested interest in seeing the company prosper so that he or she could continue to steal. Robert Oppenheimer and other scientists who developed the basis for nuclear weapons apparently believed, perhaps correctly at the time, that they were working for the benefit of humankind, but produced creative work that has had a profound negative impact on the world. Cropley (2010) concluded that such negative creativity encompasses effective novel products which do good for one party but harm to another, and referred to this negative aspect as the “dark side” of creativity. This term is now widely used to refer to creativity which leads to negative consequences.

Cropley (2010) made an important distinction in this context by pointing out that two conditions can lead to negative creativity: a positive intention on the part of the creative individual that goes wrong (for a variety of reasons which cannot be reviewed in detail here), and a deliberate intention to do harm. Cropley referred to the former as “failed benevolence” (p. 366), and to the latter as “conscious malevolence”. An extreme example of failed benevolence is seen in Edward Jenner's discoveries on disease-bearing micro-organisms eventually leading to germ warfare. An extreme example of conscious malevolence is seen in the successful innovativeness of the World Trade Center attacks of 11 September 2001. Cropley went further by considering not just the intention of the creator but also the nature of the surrounding environment; in the World Trade Center example, for instance, the environment was hostile to the implementation of the creativity, but it succeeded all the same, an example of “resilient malevolence”.Footnote2

The approach to dark creativity just outlined expands thinking about creativity beyond cognition, that is, beyond special forms of data processing such as linking remote associates, identifying unexpected connections, and defining concepts in a broad or fuzzy manner (the “cold” components of creativity; Cropley and Cropley, 2015). In fact, although creativity theory in the early modern era immediately following the Sputnik Shock was initially dominated by the idea of divergent versus convergent thinking (i.e. information processing, typically referred to by researchers in psychology and education as “process”), attention quickly turned to the creative person and to aspects of creativity such as personality and motivation (i.e. the “hot” components; Cropley and Cropley, 2015).

Cropley and Cropley (2015) argued that different variants of the generation of effective novelty can be placed along a continuum defined by the intent of the creator, ranging from low intent to high. In the case of innovation, the continuum would range from a pole involving novelty generated without any intention of developing a commercially salient product to a pole involving novelty generated with the absolute intention of doing so. Other domains of generation of novelty could be placed along the continuum; for example, everyday creativity (such as a cook inventing a new dish for the family's evening meal) involves some intention of producing a useful product, but no expectation that the product will be marketed for commercial gain. The writing of the present paper involves a strong intention of producing a useful product, but no intention of the product yielding commercial gain.

Applied to dark creativity, this approach suggests that a continuum of malevolent intention would help to make sense of the situation. At one pole would be complete absence of negative intention (malevolence), so that Jenner's failed benevolence would be placed at this pole. At the other pole would be the conscious malevolence of the September 11th attackers. Other combinations of intention and outcome such as failed malevolence (high level of malevolent intention but lack of success, such as in a failed assassination attempt) would occupy intermediate positions.

2.6. The moral dimension of creativity – evil

Cropley (2011) examined this issue in detail. Stated in moral terms, malicious creativity involves evil: the deliberate intent to do harm to others. In this article, evil is conceptualised as involving the excessive use of freedom. Worsley argues that evil is a consequence of human freedom; he states that human evil is a matter of choice, but involves a destructive choice: a “destructiveness of imperfection or excess” (Worsley, 1996, p. 144). This raises the question of the “creativity” of manifestations of evil. In “Anger, Madness, and the Daimonic: The Psychological Genesis of Violence, Evil, and Creativity” (Diamond, 1996), the link between evil and creativity is discussed in detail; furthermore, a link is made between repressing anger (defined as a form of evil) and repressing creativity:

Our culture requires that we repress most of our anger, and, therefore, we are repressing most of our creativity.

Empirical work in psychology has looked at negative traits associated with being creative, that is, at the personal aspect of the dark side. For example, studies have shown that creative people are more likely to manipulate test results, can tell more types of creative lies than less creative people, are more deceptive during conflict negotiation, and demonstrate less integrity (e.g. Walczyk, Runco, Tripp, and Smith, 2008). People who make greater use of creativity to inflict deliberate harm on others are more likely to be physically aggressive and to have lower emotional intelligence. Another approach to studying the dark side of creativity is to find out how potentially negative situational or personal factors enhance creativity. For example, as suggested in Mayer and Mussweiler (2011), there is evidence that when people are primed to be distrustful, creative cognitive ability increases if the person is being creative in private. Mayer and Mussweiler (2011) have also shown that threatening stimuli provoked more creative responses than non-threatening ones, apparently because creativity can help reduce the cognitive dissonance associated with the disequilibrium between being under threat and simultaneously wishing to be secure (Riley and Gabora, 2012).

Following this topic, the next section presents a computational “artwork” generating system with an embedded “evil” mechanism. The hybrid system takes as input an image, which is analysed by the swarm intelligence technique and gradually destroyed by the digital virus, generating the projection of “evil” as the artwork produced.

3. Visualisation

In this section, a brief introduction is given to both the swarm intelligence algorithm and the physiological mechanism used to generate the paintings. Then the visualisation process of the “evil” system is detailed, followed by a discussion of the performance of the hybrid system.

3.1. Swarm intelligence algorithm

This section introduces stochastic diffusion search (SDS), as described in al-Rifaie and Bishop (2013): a population-based global search and optimisation technique based on the simple interaction of agents.Footnote3 This algorithm is inspired by one species of ant, Leptothorax acervorum, in which a “tandem calling” mechanism (i.e. one-to-one communication) is used: the forager ant finds the food location and, upon its return to the nest, recruits a single ant, physically publicising the location of the food.Footnote4

SDS introduces a probabilistic approach to solving best-fit pattern recognition and matching problems. As a multi-agent, population-based global search and optimisation algorithm, SDS is a distributed mode of computation utilising interaction between simple agents. Unlike many nature-inspired search algorithms, SDS has a strong mathematical framework describing its behaviour, covering its resource allocation, convergence to the global optimum, robustness, minimal convergence criteria and linear time complexity.

The SDS algorithm commences a search or optimisation by initialising its population. In any SDS search, each agent maintains a hypothesis, h, defining a possible problem solution. After initialisation, two phases are followed (see Algorithm 1):

In the test phase, SDS checks whether each agent's hypothesis is successful by performing a partial hypothesis evaluation that returns a boolean value (i.e. active or inactive). Later in the iteration, contingent on the precise recruitment strategy employed, successful hypotheses diffuse across the population; in this way, information on potentially good solutions spreads throughout the entire population of agents.

In standard SDS (as used in this paper), passive recruitment mode is employed. In this mode, if an agent is inactive, a second agent is randomly selected for diffusion. If the second agent is active, its hypothesis is communicated (diffused) to the inactive one; otherwise there is no flow of information between the agents, and a completely new hypothesis is instead generated at random for the first, inactive agent (see Algorithm 2).

In Algorithm 2, “ag” stands for agent, “r_ag” refers to a random agent, and a hypothesis is a point in the search space that an agent points to. The concept of an agent's hypothesis is the same in Algorithms 1 and 2.
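The passive recruitment rule can be sketched in the same style. The version below mirrors the roles of `ag` and `r_ag` described for Algorithm 2, but it is an illustrative sketch under our own assumptions rather than the algorithm as printed: `diffusion_phase` and the `random_hypothesis` callback (which draws a fresh point of the search space) are hypothetical names.

```python
import random
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    hypothesis: int
    active: bool = False


def diffusion_phase(agents: list[Agent], random_hypothesis: Callable[[], int],
                    rng: random.Random) -> None:
    """Passive recruitment: only inactive agents (ag) consult a random agent (r_ag)."""
    for ag in agents:
        if ag.active:
            continue                             # active agents keep their hypothesis
        r_ag = rng.choice(agents)                # randomly selected agent
        if r_ag.active:
            ag.hypothesis = r_ag.hypothesis      # hypothesis diffuses to ag
        else:
            ag.hypothesis = random_hypothesis()  # no information flow: new random one


rng = random.Random(3)
agents = [Agent(5, True), Agent(0), Agent(7)]
diffusion_phase(agents, lambda: 99, rng)
print([ag.hypothesis for ag in agents])
```

Active agents are never modified here, so the statuses set in the test phase stay valid until the next test phase; an inactive agent may also select itself, in which case it simply receives a new random hypothesis.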

In the next section, a physiological mechanism is introduced which will be integrated with the already described swarm intelligence technique.

3.2. Physiological mechanism

This section introduces the human immunodeficiency virus (HIV), whose behaviour is used later in this paper as a digital virus. The digital virus is used alongside the swarm intelligence algorithm to alter the state of an input image.

The progression of HIV infection to acquired immune deficiency syndrome (AIDS) involves two distinct phases, an acute phase and a chronic phase, which eventually lead to infection by opportunistic diseases.

On entering the body, the virus causes an acute infection, mainly targeting the white blood cells responsible for defending the body (Levy, 1993). These cells mount an attack to destroy the virus on its entry; however, the virus conceals itself by inserting its genetic material into the cells and using their material to replicate inside them, eventually destroying them.

The process causes a severe depletion of T-cells over a period that can last up to 10–12 years (Katzenstein, 2003). This ultimately leads to ineffective cell-mediated and humoral responses to HIV, resulting in a chronic phase of infection characterised by persistent immune activation and progressive decline of the naïve and memory T-cells. The continuously active immune system loses its defending cells and becomes inefficient. In other words, during the chronic phase of the illness the immune system loses its ability to fight, and the patient becomes vulnerable to opportunistic diseases.Footnote5

3.3. The ‘Evil’ system

This section details the process through which the swarm intelligence algorithm is coupled with digital viruses to create a visual piece based on an input image.

As stated earlier, each SDS agent has two components: the status and the hypothesis. The status is a boolean value, and the hypothesis is the coordinate of a particular pixel within the search space (the input image), which indicates the colour attribute (i.e. RGB values) of that pixel.

The physiological mechanism of the virus is loosely borrowed in this work and it offers three distinctive features that are incorporated into the hybrid system:

identifying the white blood cells,

destroying the cells and

spreading the virus throughout the system.

These three features are integrated into the hybrid system as detailed below:

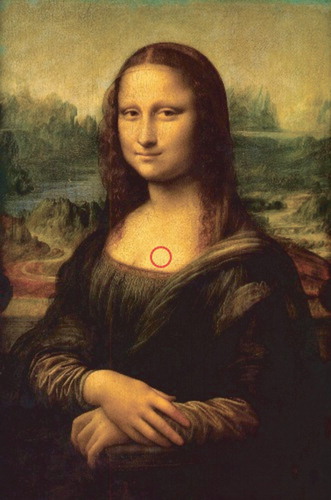

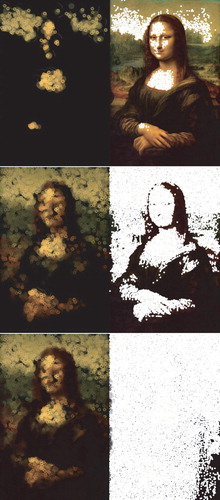

Following the analogy of the virus identifying the white blood cells, the brightest colour within the image is identified and the coordinate of the relevant pixel is recorded (see Figure ).

The pixel that has been located is set as the goal for SDS.

SDS agents are initialised throughout the search space.

SDS iterates n times through the test and diffusion phases (n=100).

During the test phase, the colour of the pixel where each agent resides is compared against that of the identified brightest pixel; if the RGB distance between the two colours is less than a distance threshold, the agent is set active; otherwise, it is set inactive.

In the diffusion phase, as in standard SDS, each inactive agent randomly picks another agent. If the randomly selected agent is active, the inactive agent adopts the coordinate of the active agent, displaced by a Gaussian random distance. However, if the selected agent is inactive, the selecting agent generates a random coordinate from the search space.
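The SDS steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the image is a list of rows of (r, g, b) tuples, "brightest" is taken to mean the largest r + g + b, the RGB distance is Euclidean, and the threshold (30) and Gaussian spread (sigma of 2 pixels) are hypothetical values, since the paper's own settings are not recoverable here. The names `brightest_pixel` and `sds_cycle` are illustrative.

```python
import math
import random

# Assumed representation (not the authors' code): an image is a list of rows,
# each row a list of (r, g, b) tuples with channel values 0-255.


def brightest_pixel(image):
    """(x, y) of the pixel with the largest r + g + b (an assumed brightness measure)."""
    coords = ((x, y) for y in range(len(image)) for x in range(len(image[0])))
    return max(coords, key=lambda p: sum(image[p[1]][p[0]]))


def sds_cycle(image, n_agents=10, iterations=100, threshold=30.0, sigma=2.0, rng=None):
    """One SDS cycle; returns the agents' hypotheses (x, y) and their statuses."""
    if n_agents < 2:
        raise ValueError("diffusion needs at least two agents")
    rng = rng if rng is not None else random.Random()
    h, w = len(image), len(image[0])
    gx, gy = brightest_pixel(image)
    goal = image[gy][gx]  # the goal colour the swarm searches for

    def random_coord():
        return (rng.randrange(w), rng.randrange(h))

    def clamp(v, hi):
        return min(max(v, 0), hi)

    def test(hyps):
        # Active if the pixel under the agent is within `threshold` of the goal colour.
        return [math.dist(image[y][x], goal) < threshold for x, y in hyps]

    hyps = [random_coord() for _ in range(n_agents)]  # initialise across the image
    for _ in range(iterations):
        active = test(hyps)                           # test phase
        new_hyps = list(hyps)
        for i in range(n_agents):                     # diffusion phase
            if active[i]:
                continue                              # active agents keep their hypothesis
            j = rng.randrange(n_agents - 1)
            if j >= i:
                j += 1                                # pick another agent, never itself
            if active[j]:
                x, y = hyps[j]                        # copy, displaced by a Gaussian offset
                new_hyps[i] = (clamp(round(x + rng.gauss(0, sigma)), w - 1),
                               clamp(round(y + rng.gauss(0, sigma)), h - 1))
            else:
                new_hyps[i] = random_coord()          # restart anywhere in the image
        hyps = new_hyps
    return hyps, test(hyps)  # re-test so statuses match the final hypotheses
```

Because active agents keep their hypotheses, once the swarm locates pixels close to the goal colour it tends to accumulate around them, while inactive agents continue to explore the rest of the image.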

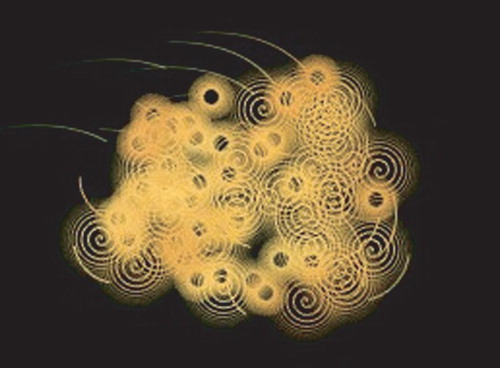

After n iterations of the test and diffusion phases, the average colour of the agents is calculated and then visualised in the form of a spiral⁶ on the output canvas (see the images in the left column of Figure ).

The pixel with the brightest colour and the pixels where the active agents are residing are removed from the input image (i.e. the digital virus destroys the identified “white” cells/pixels).
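The averaging and destruction steps can be sketched as follows. The function names are hypothetical, a destroyed pixel is represented as `None`, and agents sitting on already-destroyed pixels are simply skipped when the average colour is computed; these are our assumptions rather than details given in the text. Drawing the spiral on the output canvas is left out.

```python
def average_colour(image, hyps):
    """Mean (r, g, b) of the pixels under the agents; destroyed pixels (None) are skipped."""
    colours = [image[y][x] for x, y in hyps if image[y][x] is not None]
    if not colours:
        return None
    return tuple(sum(c[k] for c in colours) / len(colours) for k in range(3))


def destroy(image, brightest, hyps, active):
    """'Digital virus' step: delete the brightest pixel and every pixel hosting an active agent."""
    removed = {brightest} | {p for p, a in zip(hyps, active) if a}
    for x, y in removed:
        image[y][x] = None  # None marks a destroyed pixel
    return removed


# Usage on a 2x2 image: two active agents and one inactive agent.
img = [[(0, 0, 0), (200, 200, 200)], [(100, 100, 100), (255, 255, 255)]]
hyps, active = [(1, 0), (1, 1), (0, 0)], [True, True, False]
colour = average_colour(img, hyps)            # averaged before destruction
removed = destroy(img, (1, 1), hyps, active)  # pixels (1, 0) and (1, 1) are removed
```

Repeating the SDS cycle, averaging and destruction until no pixel remains gives the overall loop; a brightest-pixel search in that loop would also need to skip destroyed pixels.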

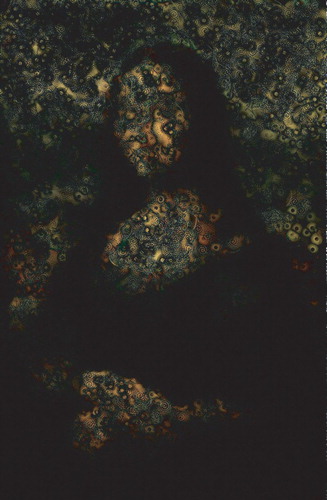

Figure 3. The hybrid swarm intelligence and evil system. The images are recorded after a few n full system iterations. The top set of images shows the first recorded image, and the bottom set shows the final image generated by the hybrid painting system.

This process is repeated until all the pixels of the input image have been removed and the output image is complete. In other words, the process destroys every pixel of the input image and creates a visualisation that, while not identical to the original image, is still representative of it.

The initial number of agents is 10; this number (representing the number of digital viruses) increases at a ratio of 0.1 after every 1000 full system iterations (an SDS cycle plus the physiological mechanism).
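The agent schedule can be written as a one-line function. The text does not say whether the 0.1 ratio compounds, so this sketch assumes that the agent count grows by 10% of its current value after every 1000 full system iterations and is rounded to a whole number of agents; `agent_count` is a hypothetical name. Under the alternative, additive reading (one extra agent, i.e. 0.1 × 10, per period) the first four values coincide and only later ones differ.

```python
def agent_count(iteration, initial=10, ratio=0.1, period=1000):
    """Hypothetical schedule for the number of agents (digital viruses).

    Assumes the count compounds by `ratio` at the end of every `period`
    full system iterations and is rounded to the nearest whole agent.
    """
    completed_periods = iteration // period
    return round(initial * (1 + ratio) ** completed_periods)


# Agent counts at the start and after 1000, 2000, 3000 and 4000 iterations.
print([agent_count(k) for k in range(0, 5000, 1000)])  # [10, 11, 12, 13, 15]
```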

Note that an agent size of 0 indicates the absence of any digital virus on the canvas (the canvas stays intact). As the number of digital viruses increases, the pace at which the canvas is “altered” increases as well, which prevents the viewer from seeing the gradual impact of the digital virus on the canvas.

Apart from the computational system presented in this paper, there have been several relevant attempts to create “creative” computer-generated artwork using artificial intelligence, artificial life and swarm intelligence. Irrespective of whether these systems are considered genuinely creative, their individualistic approach is not totally dissimilar to that of the “elephant artists” (Weesatchanam, 2006):

After I have handed the loaded paintbrush to [the elephants], they proceed to paint in their own distinctive style, with delicate strokes or broad ones, gently dabbing the bristles on the paper or with a sweeping flourish, vertical lines or arcs and loops, ponderously or rapidly and so on. No two artists have the same style.

Similarly, if the same input image (see Figure ) is repeatedly given to the hybrid system, the output images produced by the swarms are never the same. For instance, although the images in Figure use Figure as their input, they exhibit different visual outputs. Figure shows the absolute difference between the images in Figure . Using the absolute difference measure, the normalised difference between corresponding pixels of the two images is summarised per channel by $\bar{d}_r$, $\bar{d}_g$ and $\bar{d}_b$, the averaged absolute differences in red, green and blue, respectively. Each is calculated using the following formula:

$$\bar{d}_i = \frac{1}{w \times h} \sum_{j=1}^{w \times h} D_j \tag{1}$$

where $\bar{d}_i$ is the average distance for channel $i$ (i.e. red, green or blue); $w$ and $h$ are the width and height of the images, respectively; and $D$ is the vector of the absolute differences between the channel-$i$ values of corresponding pixels in the two images, the size of which is $w \times h$. In other words, even if the hybrid system processes the same input several times, it does not generate two identical images.
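Equation (1) can be computed directly, as in the sketch below. It assumes both images are lists of rows of 8-bit (r, g, b) tuples; the final division by `max_value` (255 by default), which maps each average into [0, 1], is our assumption about how the reported differences were normalised. Passing `max_value=1` returns the raw per-channel averages of Equation (1). The function name is hypothetical.

```python
def channel_mean_abs_diff(img_a, img_b, max_value=255):
    """Per-channel mean absolute difference between two equally sized RGB images.

    Implements Equation (1) for the red, green and blue channels, then divides
    by `max_value` (an assumed normalisation step; use 1 to skip it).
    """
    h, w = len(img_a), len(img_a[0])
    if len(img_b) != h or any(len(row) != w for row in img_b):
        raise ValueError("images must have the same size")
    totals = [0.0, 0.0, 0.0]
    for row_a, row_b in zip(img_a, img_b):
        for pa, pb in zip(row_a, row_b):
            for i in range(3):
                totals[i] += abs(pa[i] - pb[i])  # entries of D for channel i
    return tuple(t / (w * h) / max_value for t in totals)


a = [[(0, 0, 0), (255, 255, 255)]]
b = [[(255, 0, 0), (255, 255, 0)]]
print(channel_mean_abs_diff(a, b))  # (0.5, 0.0, 0.5)
```

A result of (0.0, 0.0, 0.0) would indicate two identical images; any non-zero channel shows that the outputs differ.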

In the example presented in this paper, increasing the destructive or “evil” power of the system (represented by the radius of the digital virus) allows the digital virus to have a greater impact on the input image; as a result, the generated image bears less resemblance to the original.

Having presented the “evil” system as an integration of a physiological mechanism and a swarm intelligence technique, the next section focuses on the role of the swarm in providing what we describe as swarm regulated freedom. This type of freedom is contrasted with unregulated (excessive) freedom.

4. Swarm regulated freedom versus unregulated (excessive) freedom

As the destructive power of the digital virus is gradually increased, the output images move progressively away from more recognisable representations of the input image (e.g. Figure – left) towards less recognisable ones (e.g. Figure – middle); with a further increase in the “dose of evil”, the output images display an increasing loss of fidelity to the original image (e.g. Figure – right).

Figure 6. Increasing the “dose of evil” and its impact on the system. From left to right, the digital virus is empowered and thus the impact on the output images shown below increases gradually.

When more destruction is applied, the hybrid painting system soon begins to deviate from the original image. Consequently, excessively increasing the destruction or “dose of evil” results in a “low fidelity” representation of the original image. In contrast, if the digital virus only “moderately” affects the input image as it spreads on the canvas, the generated image maintains a recognisable fidelity to the input.

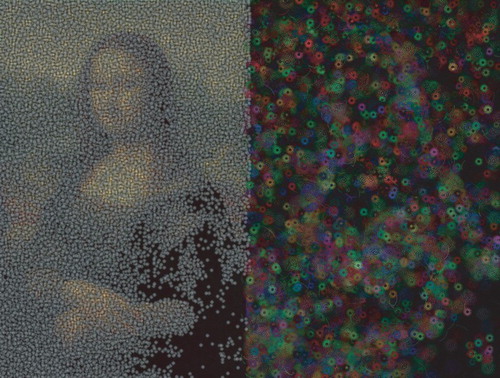

This section presents an experiment that contrasts the behaviour of the swarms with that of a group of random agents. In this experiment, the freedom of the swarm (i.e. Swarm Regulated Freedom) is maintained by the swarm intelligence algorithms used in the system, whereas the agents in the randomised algorithm exhibit what we call Unregulated (Excessive) Freedom. These definitions are used here to highlight the potential of swarms to exhibit computational creativity.

The generated images in Figure show two outputs from the algorithm with swarm regulated freedom, where the swarms have full access to the whole of the search space, but are constrained by some internal rules.

When the same level of freedom is granted to the algorithm with unregulated (excessive) freedom, the algorithm soon begins to deviate excessively from the original colour patterns. Such randomisation therefore results in a very poor interpretation of the original input image (see Figure ). In the randomised algorithm, in contrast to the swarm system, simply giving the agents more randomised behaviour (unregulated, excessive freedom) fails to produce more “creative” output.
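The contrast between the two kinds of freedom can be illustrated with a toy experiment. In the sketch below, which is our own construction rather than the authors' randomised algorithm, both variants search a synthetic image for a bright patch: the regulated variant uses SDS-style diffusion, while the unregulated variant lets every agent jump to a fresh random pixel on every iteration. The image, the threshold of 30, sigma of 1, the 50 agents and the function names are hypothetical choices; the function returns how many agents end up on the colour of interest.

```python
import math
import random


def make_test_image(w=20, h=20):
    """Hypothetical test image: dark background with one bright 3x3 patch."""
    img = [[(20, 20, 20)] * w for _ in range(h)]
    for y in range(8, 11):
        for x in range(8, 11):
            img[y][x] = (250, 250, 250)
    return img


def run_agents(image, goal, regulated, n_agents=50, iterations=100,
               threshold=30.0, sigma=1.0, seed=0):
    """Return how many agents end up on a pixel within `threshold` of `goal`."""
    rng = random.Random(seed)
    h, w = len(image), len(image[0])

    def random_coord():
        return (rng.randrange(w), rng.randrange(h))

    def test(hyps):
        return [math.dist(image[y][x], goal) < threshold for x, y in hyps]

    hyps = [random_coord() for _ in range(n_agents)]
    for _ in range(iterations):
        if not regulated:
            # Unregulated freedom: every agent jumps anywhere, every iteration.
            hyps = [random_coord() for _ in range(n_agents)]
            continue
        # Regulated freedom: SDS test and diffusion phases.
        active = test(hyps)
        new_hyps = list(hyps)
        for i in range(n_agents):
            if active[i]:
                continue
            j = rng.randrange(n_agents)  # j == i just leads to a random restart
            if active[j]:
                x, y = hyps[j]
                new_hyps[i] = (min(max(round(x + rng.gauss(0, sigma)), 0), w - 1),
                               min(max(round(y + rng.gauss(0, sigma)), 0), h - 1))
            else:
                new_hyps[i] = random_coord()
        hyps = new_hyps
    return sum(test(hyps))


img = make_test_image()
print(run_agents(img, (250, 250, 250), regulated=True),
      run_agents(img, (250, 250, 250), regulated=False))
```

Because active agents hold their position and recruit others, the regulated swarm typically ends with most agents on the patch, whereas the purely random agents land on it only by chance (9 of the 400 pixels).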

Figure 7. The output of the system using unregulated (excessive) freedom. The left-hand image shows the impact of the evil system on the input image, and the image on the right shows the output generated with the randomised algorithm.

The swarm regulated freedom or “controlled freedom” exhibited by the swarm algorithms (induced by the stochastic side of the algorithms) is crucial to the resultant work and is the reason why the same input does not lead the system to produce identical images. This freedom emerges from several influencing factors, including the stochasticity of the SDS algorithm in picking agents for communication and in choosing agents to diffuse information.

In other words, the reason why the swarm generated images are different from the simple randomised paintings is that the underlying converging component of the algorithm constantly endeavours to accurately trace the colour of interest in the input image while the SDS foraging component constantly endeavours to explore the wider canvas (i.e. together the two mechanisms ensure high-level fidelity to the input without making an exact low-level copy of the original input).

It can be seen that simply extending a basic physiological mechanism (i.e. inducing more destructive force), or injecting unregulated excessive freedom, fails to demonstrate that more “creative images” are produced. A question raised here is how to control the extent of freedom, which is perhaps where the “creativity” lies, and the area where the computer needs to be in control in order to demonstrate the sought-after computational “creativity”. What can be stated now is that controlling the dose of evil or excessive freedom exhibited by the hybrid system is crucial to the resulting work.

In the next section, the notorious question of whether a system is able to exhibit strong computational creativity is presented. In that discussion, the notion of weak computational creativity is described in order to distinguish between a system that is genuinely creative and a system that, while exhibiting elements of creativity, fails to be considered genuinely creative. This argument is supported by presenting the “Dancing with Pixies” (DwP) reductio as well as the Chinese Room Argument (CRA).

5. Weak and strong computational creativity

It is a commonly held view that “there is a crucial barrier between computer models of minds and real minds: the barrier of consciousness” and thus that information-processing and phenomenal conscious experience⁷ are conceptually distinct (Torrance, 2005). Indeed, Cartesian theories of cognition can be broken down into what Chalmers (1996) calls the “easy” problem of perception (i.e. the classification, identification and processing of sensory-neural states) and a corresponding “hard” problem, which is the realisation of the associated phenomenal experience. The difference between the easy and the hard problems, and the apparent lack of a link between theories of the former and an account of the latter, has been termed the “explanatory gap”.

Is consciousness a necessary prerequisite for the realisation of mental states and “genuine” cognition in any entity? Certainly John Searle thinks it is: “… the study of the mind is the study of consciousness, in much the same sense that biology is the study of life” (Searle, 1992), and this observation leads Searle to postulate the Connection Principle, whereby “… any mental state must be, at least in principle, capable of being brought to conscious awareness”. But is such experience also necessary for an entity to be considered “creative” and, furthermore, can a mere computing machine (qua computation) ever aspire to realise consciousness in all its raw and terrifying grandeur? Certainly Rothman's (2014) notion of creativity as a “way of being” reifies the creative act into a lived act that involves consciousness and phenomenal reflection: “If you're really creative, really imaginative, you don't have to make things. You just have to live, observe, think, and feel.” But can a machine ever genuinely feel purely in virtue of executing the appropriate computations?

Certainly, across the realms of science and science fiction, the hope is periodically reignited that a computational system will one day be conscious by virtue of its execution of an appropriate program; indeed, in 2004 the UK funding body EPSRC awarded an “Adventure Fund” grant [GR/S47946/01] of £493,290 to a team of roboteers and psychologists at Essex and Bristol led by Owen Holland, with the goal of instantiating machine consciousness in a humanoid-like robot called Cronos through appropriate computational “internal modelling”. But equally, the view that the mere execution of a computer program can bring forth consciousness has not gone unchallenged.

The next section describes the “Dancing with Pixies” reductio in order to address the aforementioned issues as well as the issue of machine consciousness.

5.1. Dancing with pixies (DwP)

One argument developed by Bishop that questions the very possibility of machine consciousness is the “Dancing with Pixies” reductio (Bishop, 2002, 2009). Its underlying thread derives from positions originally espoused by Putnam (1988), Maudlin (1989) and Searle (1990), with subsequent criticism from Chalmers (1996), Klein (2004) and Chrisley (2006), among others.⁸

In the DwP reductio, instead of seeking to secure Putnam's claim that “every open system implements every Finite State Automaton” (FSA) and hence that “psychological states of the brain cannot be functional states of a computer”, Bishop establishes the weaker result that, over a finite time window, every open physical system implements the execution trace of a Finite State Automaton Q on a given input vector (I).

That this result leads to panpsychism (the view that consciousness or mind is a universal feature of all things) is clear: if we equate FSA Q(I) with a finite computational system that is claimed to instantiate phenomenal states as it executes, and employ Putnam's state-mapping procedure to map its series of computational states onto any arbitrary non-cyclic sequence of states, we discover identical computational (and ex hypothesi phenomenal) states lurking in any open physical system (e.g. a rock); little pixies [raw conscious experiences] “dancing” everywhere.
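The state-mapping step can be made concrete with a toy example. The sketch below is our illustration, not a formalisation of the DwP argument: `run_fsa` produces the execution trace of a small automaton Q on an input vector I, and `putnam_mapping` pairs each state of an arbitrary non-repeating physical sequence (here, labelled ticks of a hypothetical "rock") with the automaton state at the same time step. Both function names and the parity automaton are hypothetical. The only requirement placed on the physical states is that none repeats within the window, which is what makes the pairing a well-defined function.

```python
def run_fsa(transitions, start, inputs):
    """Execution trace of a finite state automaton Q on input vector I."""
    trace = [start]
    for symbol in inputs:
        trace.append(transitions[(trace[-1], symbol)])
    return trace


def putnam_mapping(physical_states, trace):
    """Pair each physical state s_t with the automaton state q_t at the same time step."""
    if len(physical_states) != len(trace):
        raise ValueError("need exactly one physical state per time step")
    if len(set(physical_states)) != len(physical_states):
        raise ValueError("physical states must not repeat within the window")
    return dict(zip(physical_states, trace))


# A two-state parity automaton, and a 'rock' whose only relevant property
# is that it is in a different state at each tick.
parity = {("even", 0): "even", ("even", 1): "odd",
          ("odd", 0): "odd", ("odd", 1): "even"}
trace = run_fsa(parity, "even", [1, 0, 1, 1])
rock = [f"rock@t{t}" for t in range(len(trace))]
mapping = putnam_mapping(rock, trace)
print([mapping[s] for s in rock])  # ['even', 'odd', 'odd', 'even', 'odd']
```

The rock's own dynamics play no role beyond supplying distinct states; the mapping alone reproduces the trace, which is why, on the DwP view, such an "implementation" is available to any open physical system.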

Baldly speaking, DwP is a simple reductio ad absurdum argument to demonstrate that:

IF the assumed claim is true – that an appropriately programmed computer really does instantiate genuine phenomenal states;

THEN panpsychism is true.

However, if, against the backdrop of our immense scientific knowledge of the closed physical world and the corresponding widespread desire to explain everything ultimately in physical terms, we are led to reject panpsychism, then the DwP reductio suggests that computational processes cannot instantiate phenomenal consciousness and that computational accounts of cognitive processes must, at best, exhibit what John Searle termed “weak AI” (Searle, 1980).⁹

Furthermore, taken alongside the CRA,¹⁰ Searle's famous critique of strong AI and machine understanding (Searle, 1980), we suggest the DwP reductio places bounds on the successes of any mere computationally powered creativity project because, if we are correct, no purely computational engine can ever genuinely feel or understand anything of the world nor, indeed, anything of its own “creative response” to that world (nor the world's response to it).

Thus, echoing Searle's taxonomy of artificial intelligence, al-Rifaie and Bishop (2015) have suggested that, taken together, the CRA and DwP suggest a dual taxonomy of “computationally creative systems”: a weak notion, which does not go beyond exploring the simulation of “human” creative processes and emphasises that any creativity so exhibited springs forth from the interaction of human and machine (and fundamentally remains the responsibility of the human); and a strong notion, in which the expectation is that the underlying creative system is autonomous, autopoietic and conscious, with “genuine understanding” and other cognitive states.

That said, there of course always remains a trivial sense in which every time we run any computer program the machine is in some sense “computationally creative”, as symbols and patterns that did not previously exist together are put into the world; indeed, as Newell and Simon (1973) famously observed:

Computer science is an empirical discipline. We would have called it an experimental science, but like astronomy, economics and geology, some of its unique forms of observation and experience do not fit a narrow stereotype of the experimental method. None the less, they are experiments. Each new machine that is built is an experiment. Actually constructing the machine poses a question to nature; and we listen for the answer by observing the machine in operation and analyzing it by all analytical and measurement means available.

However, lacking autonomous teleology, contextualisation and intent, even this modest conception of a “computational” creative process is merely analogous to the ballistic throw of a dice,¹¹ invoking only the faintest echo of “creativity” as the word is more usually employed.

Thus, viewed under a modern conception of creativity – as a process positioned within a reflective historical lineage – such reflections inexorably lead us to question in what sense any computational system could ever seriously be described as strongly creative (and not simply as a tool, an accelerator, serving its programmer's own vivid imaginings). Indeed, in his recent address at A-EYE: An exhibition of art and nature-inspired computation, organised by al-Rifaie, Blackwell, and Puntil (2014) to open AISB50 (the 50th anniversary conference of the UK society for Artificial Intelligence and the Simulation of Behaviour), Harold Cohen, the British-born artist well known as the creator of AARON (a computer program often claimed to produce art “autonomously”), offered an alternative approach to this very shibboleth by electing to describe his own work merely in terms of interactive collaborations between human and machine.

5.2. The body in question

If we are to take the notion of “strong creativity” seriously, we believe that the “Dancing with Pixies” reductio and John Searle's CRA suggest that we need to move away from purely computational explanations of creative processes and instead reflect on how meaning, teleology and human creative processes are fundamentally grounded in the human body, society and the world; this obliges us, in turn, to take issues of embodiment – the body and our social embedding – much more seriously. And such a strong notion of embodiment most certainly cannot be realised by simply co-opting a putative computational creative system into a conventional tin can robot.¹²

As Nasuto and Bishop set out in their recent discussion of Biologically controlled animats¹³ and the so-called Zombie animals¹⁴ (two examples carefully chosen to lie at polar ends of the spectrum of possible engineered robotic/cyborg systems), because the induced behavioural couplings therein are not the effect of the intrinsic “nervous” system's constraints (metabolic or otherwise) at any level, a fortiori, merely instantiating appropriate sensorimotor coupling is not sufficient to instantiate any meaningful intentional states (Nasuto and Bishop, 2013).

On the contrary, in both Zombie animals and Biologically controlled animats the sensorimotor couplings are actually the cause of extrinsic metabolic demands (made via the experimenter's externally directed manipulations). But since the experimenter drives the sensorimotor couplings in a completely arbitrary way (from the perspective of the intrinsic metabolic needs of the animal or its cellular constituents), the actual causal relationship between the bodily milieu and the motor actions and sensory readings can never be genuinely and appropriately coupled. Hence Nasuto and Bishop (2013) concluded that only the right type and directionality of sensorimotor couplings can ultimately lead to genuine understanding and intentionality.

In the light of such concerns, and until the challenges of the CRA and DwP have been fully met and the role of embodiment more strongly engaged (such that neurons, brain and body fully interact with other bodies, world and society), we suggest a note of caution in labelling any artificial system as “strongly creative” in its own right; any creativity displayed therein being simply a projection of its engineer's intellect, aesthetic judgement and desire.

6. Conclusion

Discussion of creativity and of the conditions that make a particular work creative has generated heated debate among scientists and philosophers for years. Although this article does not aim to resolve any of these issues (or even to suggest that the work presented strongly fits and endorses the category of the “computationally creative realm”), it has presented an investigation of the performance of a novel hybrid swarm intelligence and physiological painting system. Works of this nature have previously been viewed through the philosophical lens of Deleuze, offering new insights into the putative creativity, autonomy and authorship of the resulting system (al-Rifaie, Bishop, and Caines, 2012).

The so-described evil computational artist presented in this paper is the result of merging a swarm intelligence algorithm and the physiological mechanism of the digital virus. The computational system was used as a tool to explore the impact of “evil” – as an extended form of freedom and a destructive force – in creating artworks. In connection with this, the role of destruction in creativity was briefly discussed. This work has also shown the influence of the “dose of evil” (or the impact of digital destruction) on the output of the collaborative system.

In this paper the concept of dark creativity has been introduced in the broader context of creativity and computational creativity. Freedom and constraint, two of the pre-requisites for creativity, were discussed, and the question of whether strong computational creativity is achievable without intention and genuine understanding was raised. Additionally, caution was urged in attributing genuine understanding and intentionality to a system that lacks the right type and directionality of sensorimotor couplings. These issues were discussed using the notions of weak and strong computational creativity as an extension of the existing concepts of weak and strong artificial intelligence.

Disclosure statement

No potential conflict of interest was reported by the authors.

ORCID

Mohammad Majid al-Rifaie http://orcid.org/0000-0002-1798-9615

Notes

1. For an overview, see Cropley and Cropley (2015).

2. The use of this example should not be taken as an expression of approval of the World Trade Center attacks.

3. In swarm intelligence literature, agents often refer to social animals or insects, e.g. ants, bees, etc.

4. Swarm intelligence algorithms have been used actively by researchers in the field of optimisation and, increasingly, visualisation. Recently an adapted swarm intelligence technique was applied to the restoration of spatial degradations that appear in images due to invariant or variant blurs and additive noise (Bilal, Mujtaba, and Jaffar, 2015).

5. Thanks to Dr Ahmed Aber, from the Department of Cardiovascular Sciences, University of Leicester Royal Infirmary, Leicester, UK for his contribution to this section of the paper.

6. The system uses a “Hundertwasser-like” approach where the algorithm uses curves instead of straight lines, as Hundertwasser considered straight lines not nature-like and avoided them in his works in order not to bias the style of the system's drawings (Restany, 2001). Three types of spiral were used, each defining one mode of evolution of the universe (Peirce, Hartshorne, and Weiss, 1932). See Figure for an enlarged part of an output image showing the spirals.

7. The term “consciousness” can imply many things to different people; in this essay we specifically mean that aspect of consciousness Ned Block terms “phenomenal consciousness” (Block, 1997), by which we refer to the first person, subjective phenomenal sensations: pains, visual experiences, smells and so on.

8. For early consideration of these themes see the special issue of “Minds and Machines”, 4: 4, “What is Computation?”, November 1994.

9. Strong AI takes seriously the idea that one day machines will be built that can think, be conscious, have genuine understanding and other cognitive states by virtue of their execution of a particular program; in contrast weak AI does not aim beyond engineering the mere simulation of [human] intelligent behaviour.

10. The CRA is John Searle's (in)famous critique of strong AI and machine understanding (Searle, 1980); if correct, Searle has demonstrated that “syntax is not sufficient for semantics” and hence that computational systems can never genuinely “understand” the symbols they so powerfully manipulate.

11. Tristan Tzara and William S. Burroughs both famously utilised random acts (drawing a series of words from a hat; cutting-up texts and randomly rearranging them, respectively). In the context of this essay it is argued that the creativity here lies more in the artist's decision to deploy a random process centrally within the creative act, rather than the Rorschach interpretations these processes eventually revealed.

12. Whereby an appropriate AI is simply bolted onto a classical robot body and the particular material of that “embodiment” is effectively unimportant.

13. Robots controlled by a cultured array of real biological neurons.

14. E.g. an animal whose behaviour is “remotely controlled” by an external experimenter, say by optogenetics; see also Gradinaru et al. (2007), who used optogenetic techniques to stimulate neurons selectively, inducing motor behaviour without requiring conditioning.

References

- al-Rifaie M. M., & Bishop M. (2013). Stochastic diffusion search review. Paladyn, Journal of Behavioral Robotics, 4(3), 155–173. doi: 10.2478/pjbr-2013-0021

- al-Rifaie M. M., & Bishop M. (2015). Weak and strong computational creativity. In T. R. Besold, M. Schorlemmer, & A. Smaill (Eds.), Computational creativity research: Towards creative machines (pp. 37–49). Berlin: Springer.

- al-Rifaie M. M., Bishop M., & Caines S. (2012). Creativity and autonomy in swarm intelligence systems. Cognitive Computation, 4, 320–331.

- al-Rifaie M. M., Blackwell T., & Puntil C. (2014). A-EYE: An exhibition of art and nature-inspired computation. London: AISB Society. ISBN: 978-1-908187-41-3.

- Baillie C. (2002). Enhancing creativity in engineering students. Engineering Science & Education Journal, 11(5), 185–192. doi: 10.1049/esej:20020503

- Besemer S. P. (2006). Creating products in the age of design: How to improve your new product ideas!. Stillwater: New Forums Press.

- Bilal M., Mujtaba H., & Jaffar M. (2015). Novel optimization framework to recover true image data. Cognitive Computation, 7, 1–13. doi: 10.1007/s12559-015-9339-7

- Bishop M. (2002). Dancing with pixies: Strong artificial intelligence and panpsychism. In J. Preston & M. Bishop (Eds.), Views into the Chinese room: New essays on Searle and artificial intelligence (pp. 360–378). Oxford: Oxford University Press.

- Bishop M. (2009). Why computers can't feel pain. Minds and Machines, 19(4), 507–516. doi: 10.1007/s11023-009-9173-3

- Block N. (1997). On a confusion about a function of consciousness. In N. Block, O. Flanagan, & G. Güzeldere (Eds.), The Nature of Consciousness (pp. 227–287). Cambridge MA: MIT Press.

- Boden M. (2010). Creativity and art: Three roads to surprise. Oxford, UK: Oxford University Press.

- Chalmers D. J. (1996). The conscious mind: In search of a fundamental theory. Oxford: Oxford University Press.

- Chrisley R. (2006). Counterfactual computational vehicles of consciousness. In S. Hameroff (Ed.), Toward a Science of Consciousness April 4–8 2006 (pp. 4–8). Tucson AZ: Tucson Convention Center.

- Cropley D. H. (2010). Summary – the dark side of creativity: A differentiated model. In D. H. Cropley, A. J. Cropley, J. C. Kaufman, & M. A. Runco (Eds.), The dark side of creativity (pp. 360–374). Cambridge: Cambridge University Press.

- Cropley A. J. (2011). Moral issues in creativity. Encyclopedia of Creativity, 2, 140–146. doi: 10.1016/B978-0-12-375038-9.00053-4

- Cropley D. H. (2015). Creativity in engineering: Novel solutions to complex problems. San Diego, CA: Academic Press.

- Cropley D. H., & Cropley A. J. (2005). Engineering creativity: A systems concept of functional creativity. In J. C. Kaufman & J. Baer (Eds.), Creativity across domains: Faces of the muse (pp. 169–185). New York, NY: Lawrence Erlbaum Associates.

- Cropley D. H., & Cropley A. J. (2015). The psychology of innovation in organisations. Cambridge: Cambridge University Press.

- Diamond S. A. (1996). Anger, madness, and the daimonic: The psychological genesis of violence, evil, and creativity. Albany, NY: SUNY Press.

- Gradinaru V., Thompson K. R., Zhang F., Mogri M., Kay K., Schneider M. B., & Deisseroth K. (2007). Targeting and readout strategies for fast optical neural control in vitro and in vivo. Journal of Neuroscience, 27(52), 14231–14238. doi: 10.1523/JNEUROSCI.3578-07.2007

- Guilford J. P. (1950). Creativity. American Psychologist, 5, 444–454. doi: 10.1037/h0063487

- Johnson-Laird P. N. (1988). Freedom and constraint in creativity. In R. J. Sternberg (Ed.), The nature of creativity: Contemporary psychological perspectives (pp. 202–219). Cambridge, UK: Cambridge University Press.

- Kampylis P. G., & Valtanen J. (2010). Redefining creativity-analyzing definitions, collocations, and consequences. The Journal of Creative Behavior, 44(3), 191–214. doi: 10.1002/j.2162-6057.2010.tb01333.x

- Katzenstein T. L. (2003). Molecular biological assessment methods and understanding the course of the HIV infection. APMIS. Supplementum, (114), 1.

- Klein C. (2004). Maudlin on computation. Working Paper, 12–18.

- Kureishi H. (2004). My ear at his heart. England: Faber and Faber.

- Levy J. A. (1993). Pathogenesis of human immunodeficiency virus infection. Microbiological Reviews, 57(1), 183.

- Maudlin T. (1989). Computation and consciousness. Journal of Philosophy, 86, 407–432. doi: 10.2307/2026650

- Mayer J., & Mussweiler T. (2011). Suspicious spirits, flexible minds: When distrust enhances creativity. Journal of Personality and Social Psychology, 101(6), 1262–1277. doi: 10.1037/a0024407

- McLaren R. B. (1993). The dark side of creativity. Creativity Research Journal, 6(1–2), 137–144. doi: 10.1080/10400419309534472

- McWilliam E., & Dawson S. (2008). Teaching for creativity: Towards sustainable and replicable pedagogical practice. Higher Education, 56(6), 633–643. doi: 10.1007/s10734-008-9115-7

- Mueller J. S., Melwani S., & Goncalo J. A. (2012). The bias against creativity: why people desire but reject creative ideas. Psychological Science, 23, 13–17. doi: 10.1177/0956797611421018

- Nasuto S., & Bishop J. (2013). Of (zombie) mice and animats. In V. C. Müller (Ed.), Philosophy and theory of artificial intelligence. Studies in applied philosophy, epistemology and rational ethics (5) (pp. 85–106). Berlin: Springer.

- Newell A., & Simon H. (1973). Computer science as empirical inquiry: Symbols and search. Communications of the ACM, 19(3), 113–126. doi: 10.1145/360018.360022

- Peirce C. S., Hartshorne C., & Weiss P. (1932). Collected papers of Charles Sanders Peirce. Vol. 1, Principles of philosophy. Cambridge, MA: Belknap Press of Harvard University Press.

- Preston J., & Bishop M. (2002). Views into the Chinese room: New essays on Searle and artificial intelligence. Oxford: Oxford University Press.

- Prindle E. J. (1906). The art of inventing. Transactions of the American Institute of Electrical Engineers (XXV), 519–541. doi: 10.1109/T-AIEE.1906.4764745

- Putnam H. (1988). Representation and reality. Cambridge, MA: Bradford Books.

- Restany P. (2001). Hundertwasser: The painter-king with the five skins: The power of art. Vienna: Taschen America Llc.

- Riley S. N., & Gabora L. (2012). Evidence that threatening situations enhance creativity. In N. Miyake, D. Peebles, & R. P. Cooper (Eds.), Proceedings of the 34th annual meeting of the cognitive science society (pp. 2234–2239). Austin TX: Cognitive Science Society.

- Rothman J. (2014). Creativity creep. The New Yorker. Published on September 2 2014, Retrieved from http://www.newyorker.com/books/joshua-rothman/creativity-creep.

- Royce J. (1898). The psychology of invention. Psychological Review, 5(2), 113–144. doi: 10.1037/h0074372

- Runco M. A., & Jaeger G. J. (2012). The standard definition of creativity. Creativity Research Journal, 24(1), 92–96. doi: 10.1080/10400419.2012.650092

- Searle J. R. (1980). Minds, brains, and programs. Behavioral and Brain Sciences, 3(3), 417–457. doi: 10.1017/S0140525X00005756

- Searle J. (1990). Is the brain a digital computer? In Proceedings of the American Philosophical Association, Vol. 64 (pp. 21–37). Newark: American Philosophical Association.

- Searle J. (1992). The rediscovery of the mind. Cambridge, MA: The MIT Press.

- Torrance S. (2005). Thin phenomenality and machine consciousness. In R. Chrisley, R. Clowes, & S. Torrance (Eds.), Proceedings of the 2005 AISB symposium on next generation approaches to machine consciousness: Imagination, development, intersubjectivity and embodiment, AISB 2005 Convention. Hertfordshire: University of Hertfordshire.

- Walczyk J. J., Runco M. A., Tripp S. M., & Smith C. E. (2008). The creativity of lying: Divergent thinking and ideational correlates of the resolution of social dilemmas. Creativity Research Journal, 20(3), 328–342. doi: 10.1080/10400410802355152

- Weesatchanam A.-M. (2006). Are paintings by elephants really art? The Elephant Art Gallery.

- Worsley R. (1996). Human freedom and the logic of evil. London: MacMillan.