?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

Water Wave Optimisation algorithm (WWO) is a new swarm-based metaheuristic inspired by shallow wave models for global optimisation. In this paper, an enhanced WWO, which combines with multiple assistant strategies (EWWO), is proposed. First, the random opposition-based learning (ROBL) mechanism is introduced to generate the initial population with high quality. Second, a new modified operation is designed and embedded into propagation operation to balance the global exploration and the local exploitation. Third, the covariance matrix self-adaptation evolution strategy (CMA-ES) is employed by the refraction operation to further strengthen the local exploitation. Furthermore, the diversity of the population is maintained in the evolution process by using a crossover operator. The experiment results based on CEC 2017 benchmarks indicate that the EWWO outperforms the state-of-the-art variant algorithms of the WWO and the standard WWO.

1. Introduction

Research on the evolutionary algorithms (EAs) has been raised as an attractive methodology to solve the complex combinatorial problems for several decades. Numerous evolutionary algorithms have been proposed to solve complex optimisation problems. Single-objective optimisation plays an important role in various research domains and application fields. Traditional optimisation algorithms and traditional mathematical methods, which are generally determined by fixed structure and parameters, are applied to solve the single-objective optimisation problems. However, the two methods have certain limitations and their performances are unsatisfactory in solving complex optimisation problems such as premature convergence and poor search ability. Due to these shortcomings, meta-heuristics are proposed to overcome the disadvantages of the traditional mathematical methods and the traditional optimisation algorithms. Meta-heuristics, inspired by the natural evolutionary process, were proposed by various researchers. As the mechanisms of the algorithms have the advantages on simple procedure of implementation, Meta-heuristics are becoming increasingly popular in research and engineering practitioners. Most importantly, meta-heuristics are applied to a wide range of problems in different disciplines.

The classical algorithms to large-scale combinatorial optimisation problems have to address the complexity related to the dimensions, such as Genetic Algorithm (GA) (Das, Das, & Ghosh, Citation2017; Yu, Qiang, & Benfei, Citation2019), Simulated annealing (SA) (Grobelny & Michalski, Citation2017), Differential Evolution (DE) (Awad, Ali, Mallipeddi, & Suganthan, Citation2018; Price, Citation1999), Particle Swarm Optimisation (PSO) (Bijan & Pillay, Citation2019; Kennedy & Eberhart, Citation1995), Harmony search (HS) (Geem, Kim, & Loganathan, Citation2001; Tian & Zhang, Citation2018 ). A series of advanced evolutionary algorithms have been emerged, such as Biogeography-based Optimisation Algorithm (BBO) (Simon, Citation2008), Cuckoo Search algorithm (CS) (Yang, Deb, & Mishra, Citation2018), Bat Algorithm (BA) (Cheng, Citation2010), self-adaptive harmony PSO search algorithm (SHPSO) (Zhao, Liu, Zhang, & Wang, Citation2015), Gravitational Search Algorithm (GSA) (Rashedi, Nezamabadi-pour, & Saryazdi, Citation2009), hybrid algorithm based on self-adaptive gravitational search algorithm (SGSADE) (Zhao, Xue, et al., Citation2018), two-stage differential biogeography-based optimisation algorithm (TDBBO) (Zhao, Qin, et al., Citation2019). These algorithms have a unique search mechanism and the ability to better balance global and local search studies. In addition, among the above algorithms the following are utilised for solving the flow shop scheduling problem, such as flexible flow shop (Abdollahpour & Rezaian, Citation2016), the chaotic local search bacterial foraging optimisation (CLS-BFO) (Zhao et al., Citation2016), P-EDA for the blocking flow-shop scheduling problem (Shao, Pi, & Shao, Citation2018a). The key of evolutionary algorithms depends on the balance of exploration and exploitation at different evolutionary stages. However, most evolutionary algorithms are either biased towards exploitation or exploration and fell into local optimum to solve optimisation problems.

Water Wave Optimisation Algorithm based on the shallow water wave model is a novel nature-inspired metaheuristic method proposed by Zheng (Citation2015). The framework of the WWO has three effective mechanisms: propagation, refraction and breaking, to balance the capabilities of local search and global search. A large number of experiments have been shown that the WWO shows efficient performance in numerical tests and practical applications. WWO has been testified to be an effective algorithm on various problems. For instance, WWO is utilised to deal with the travelling salesman problem (TSP) (Wu, Liao, & Wang, Citation2015). WWO is applied to the permutation flow shop scheduling problems (PFSP) (Yun, Feng, Lyu, Wang, & Liu, Citation2016). A discrete WWO (DWWO) (Zhao, Liu, et al., Citation2018) is used to solve the no-wait flow shop scheduling problem (NWFSP) (Allahverdi, Aydilek, & Aydilek, Citation2018). WWO with the single-wave mechanism (SWWO) (Zhao, Zhang, et al., Citation2019) is introduced for the NWFSP and a novel discrete WWO for blocking flow-shop scheduling problem (BFSP) (Shao, Pi, & Shao, Citation2018b). Although the exploitation level of WWO is excellent, the standard WWO has certain drawbacks such as convergence rate and calculation accuracy. To overcome the shortcoming of the WWO, various operations and improvements have been proposed to enhance the performance of search capacity by researchers. Four aspects are included in the improvement of WWO. (1) The theoretical research of WWO algorithm. (2) The performance of WWO algorithm is improved by modifying the main operations. (3) Hybrid WWO and other evolutionary algorithms are combined for the advantages of different algorithms. (4) WWO algorithm is applied to practical problems. The literatures are summarised as follows.

Various modified approaches have been introduced to strengthen the performance of WWO. A simplified version, named Sim-WWO, is proposed by Zheng and Bei (Citation2015). In Sim-WWO, the refraction operator is removed in the case of ignoring the wave height. Meanwhile, population size reduction strategy is introduced to balance exploration and exploitation as well as partially compensate the weeding-out effect of the refraction operator. An improved version with variable population size (VC-WWO) is proposed by Zhang, Zhang, Zhang, and Zheng (Citation2015). While the variable population size was introduced to VC-WWO, a comprehensive learning mechanism was used to increase the diversity of the solution in the refraction operator. A method of text feature selection based on WWO, named WWOTFS, is proposed by Chen, Hou, Luo, Hu, and Yan (Citation2018). WWO is utilised to excavate the text feature selection rules. WWO-SM proposed by Zhao et al. (Citation2017) is labelled as WWO to identify the unknown parameters for the IIR filter. An improved sine cosine WWO algorithm (SCWWO) that used elite opposition based is proposed by Zhang, Zhou, and Luo (Citation2018) and to solve optimisation functions and structure engineering design problems. The performance of WWO is constantly improved after the WWO is proposed. However, there are still various problems in initialising the population, balancing the capabilities of exploration and exploitation, improving the convergence rate and calculation accuracy. Therefore, a new hybrid algorithm assisted by multiple strategies, named EWWO, is presented to address the premature convergence problem and calculation accuracy problem. In EWWO, the original population initialised has been modified by the opposition-based learning (OBL) (Mahdavi, Rahnamayan, & Deb, Citation2018) to maintain the diversity and stability of population. The modified propagation operation according to , named MPO, is presented to improve the capability of global search and crossover operator is used to increase. In the MPO, the wave length

is replaced by the mutation factor

to control the different step size of the population. In the new breaking operator, the identical mutation strategy as MPO is applied to generate the current population and strengthen the diversity of population. CMA-ES (Hansen & Hansen, Citation2006 ) is a novel evolutionary optimisation strategy and the covariance matrix adaptation has a function of storing and learning from the historical optimal information. Therefore, the covariance matrix adaptation, as a local search strategy, is introduced into WWO and the refraction operation is replaced by updating the covariance matrix adaptation to learn from the information of historical water wave optimal and enhance local search while avoiding local optimisation. In addition, another purpose of the all operators is adopted to improve the convergence rate of the proposed algorithm. In general, EWWO has better performance than the state-of-the-art variants of WWO by testifying on the CEC 2017 benchmark functions with 10, 30, 50 and 100 dimensions. In this paper, the novel methodology and mechanisms are presented and the contributions are summarised as follows.

The random opposition-based learning (ROBL) mechanism is introduced to initialise the population, maintain the diversity and stability of population and increase the high probability of finding the optimal solution.

A modified propagation operation, which is referred to as

, named MPO, is proposed to enhance the capability of the global search. The difference step is controlled by the wave length

rather than the mutation factor

.

According to the disadvantages of WWO in convergence rate and calculation accuracy, the refraction operation is replaced by the covariance matrix adaptation to improve the local search.

The convergence of the proposed algorithm is analysed by the iterative methods.

The remainder of the paper is organised as follows. A brief background material is given in Section 2. The proposed algorithm is described in Section 3. In Section 4, the DOE, the operation analysis, the experimental results and comparisons are analysed. A conclusion is given in Section 5.

2. Background materials

The notation used in this paper is summarised as follows.

| = | population size | |

| = | the length of the | |

| = | wavelength | |

| = | a “wave” has a height (or amplitude) | |

| = | the wavelength reduction coefficient | |

| = | a very small positive number to avoid division-by-zero | |

| = | the best solution found so far | |

| = | a random number between 1 and a predefined number | |

| = | the breaking coefficient | |

| = | the maximum fitness evaluations of the algorithm. | |

| = | parameter for weighting between rank-one and rank- | |

| = | the variance effective selection mass | |

| = | step-size, | |

| = | an orthogonal matrix | |

| = | covariance matrix at generation | |

| = | diagonal elements of | |

| = | learning rate for cumulation for the rank-one update of the covariance matrix | |

| = | learning rate for the rank-one update of the covariance matrix update | |

| = | learning rate for the rank- | |

| = | learning rate for the cumulation for the step-size control | |

| = | a diagonal matrix | |

| = | damping parameter for step-size update | |

| = | expectation value | |

| = | generation counter, iteration number | |

| = | identity matrix, unity matrix | |

| = | mean value of the search distribution at generation | |

| = | evolution path | |

| = | where |

2.1. Water wave optimisation

The WWO has the characteristics of simple algorithm framework, fewer control parameters and smaller population size. In the WWO, the initialised population is simulated into water waves in a shallow water wave model, which is similar to the solution in the solution space. Each wave has a wavelength and a wave height

which is initialised to a constant

. Three operations – propagation, breaking and refraction – are executed by WWO at each generation to gradually approach the global optimum. In the propagation operator, a new wave

is produced by shifting each dimension of the original wave

, and then the wavelength

of water wave

is updated as follows.

(1)

(1)

(2)

(2) where

is a uniformly distributed random number fixed in [−1,1], and

is the length of the

dimension of the search space,

and

are the maximum and minimum fitness values, respectively, among the current population,

is the wavelength reduction coefficient.

is a tiny positive integer to avoid division-by-zero. To increase the diversity of population, the breaking operator performs a local search around the best solution

to further enhance its quality. To be specific,

dimensions are selected randomly (where

is a random number between 1 and constant

), and a solitary wave

is generated at each dimension as follows.

(3)

(3) where

is the breaking coefficient. When the wave height decreases to zero, the refraction operator is only performed by Equation (4) and the wavelength is set as

. Afterwards, the propagation, breaking and refraction are repeated until a termination criterion is satisfied.

(4)

(4) where

is the best solution found so far, and

is a Gaussian random with mean

and standard deviation

.

As shown above, a desirable trade-off between exploration and exploitation for WWO is established by the three operators. In Section 3, the new operators are designed to satisfy the characteristics and requirements of the considered problem based on the idea of the original WWO algorithm.

2.2. Covariance matrix adaptation evolutionary strategy

The adaptive covariance matrix evolution strategy (CMA-ES) algorithm is a novel evolutionary algorithm proposed by Hansen, Müller, and Koumoutsakos (Citation2014). CMA-ES is a stochastic evolutionary method that updates and samples population by covariance matrices and evolutionary paths to obtain optimal solutions. During each iteration, individuals are sampled in the Gaussian distribution, and a solution with a desirable fitness is selected to update individuals in the Gaussian distribution. CMA-ES has the characteristics of rotation invariance for solving non-separable and ill-conditioned optimisation problems. In addition, CMA-ES is performed well on complex optimisation problems.

The basic process of CMA-ES is divided into three parts: sampling and recombination, updating the step size control and covariance matrix adaptation. The offsprings of generation

are calculated by

, where

means the centre of mass of the selected individuals of generation

.

represents the covariance matrix.

is the global step size. Evaluating the solutions, sort them by quality and find the best solution. According to the weighted recombination of the

best solutions, the new mean is calculated by

. Afterwards, the evolution path

is updated and applied to update the step size

according to Equation (5).

(5)

(5) where

is the variance effective selection mass,

,

is a learning rate for the cumulation for the step-size control,

.

obeys the Gaussian distribution, so does

. Additionally,

is compared with

to calculate the step size

as follows.

(6)

(6) where the

is a damping parameter for step-size update,

,

is an expectation of the Euclidean norm of a

distributed random vector,

. Finally, the covariance matrix adaptation is updated according to Equation (7).

(7)

(7) where

is the learning rate for the rank-one update of the covariance matrix,

.

is the learning rate for the rank-

update of the covariance matrix,

.

is of minor relevance,

,

is another evolution path, which is updated according to Equation (8). It is worth noting that two methods named rank-

-update and rank-

-update are combined to enhance robust and rapid search in the updating of the covariance matrix adaptation.

(8)

(8) where

is a learning rate for cumulation for the rank-one update of the covariance matrix,

. The property of the covariance matrix is determined by

.

2.3. Differential evolution

Differential Evolution (DE) is proposed by Storn and Price (Citation1997) . DE, which is the same as other evolutionary algorithms, is a stochastic model to simulate biological evolution and individuals who adapt to the environment are preserved through repeated iterations. The DE is mainly used to solve the global optimisation problem of continuous variables and the main steps are basically mutation, crossover and selection. DE is to retain desirable individuals, eliminate inferior individuals and guide the search process to the global optimal solution through continuous iterative calculation. Researchers have proposed various different strategies to improve the mutation operation. Five different mutation schemes, including and

, are suggested by Swagatam Das, Mullick, and Suganthan (Citation2016).

(9)

(9)

(10)

(10)

The indexes ,

,

are mutually unequal integers which were randomly selected from the range

, the scaled factor

is the mutation operator and the difference step size of the population individual is determined by

.

with a small value will affect the difference among the individuals and make the algorithm fall into the local optimal.

with a large value enhances the global search ability of the algorithm, which is beneficial to the search of the optimal solution; however, the convergence rate of the algorithm is affected by

with a large value.

is the current population and

is the best individual with the best fitness in the population at generation

. In this paper, the propagation operator is assisted by the

to trade off the exploration and exploitation.

3. The proposed algorithm

3.1. Initialise population

In various evolutionary algorithms, the population is initialised using a stochastic strategy, and the population generated by the stochastic strategy is said to undesired in the quality of the final result and the convergence rate of the population. The populations are generated by the original WWO in the search space, which leads to the unpredictability of the convergence of the algorithm. The opposition-based learning (OBL) (Xu, Wang, Wang, Hei, & Zhao, Citation2014) has been proved an effective way to initialise population in optimisation problem. Given the solution for a set of problem evaluation, each individual in

has a reverse individual

, the probability that the individual and the reverse individual are closer to the optimal individual is 50%. The closer individual is selected as the initial individual of the solution

and the individual in the population is close to the optimal solution. It is assumed that the opposite solution for

obtained a desirable solution

, the distance between

and the best solution

is reduced. Namely, the opposite solution

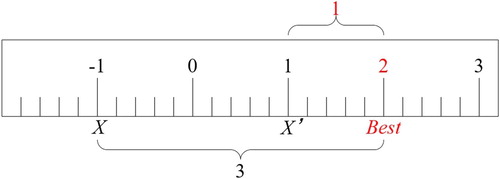

is closer to the best solution. An example is shown in Figure , 2 is the best solution, −1 is an individual and its reverse individual is 1, the distance between −1 and 2 is 3, while the distance from 1 to 2 is 1. Therefore, the reverse individual is closer to the optimal. The details of the OBL are shown in Algorithm 1.

Assuming is the solution in the search range, the opposite solution

is calculated as follows.

(11)

(11) where

is the lower bound

is the upper bound of the search range.

After initialising population, the LSHAED-SPACMA algorithm is introduced before the propagation operation to more effectively help the propagation operation find the global optimal solution in this range, and improve the exploration ability and performance of the proposed algorithm (The role of LSHAED-SPACMA algorithm (Mohamed, Hadi, Fattouh, & Jambi, Citation2017) is to improve the global search, please refer to the literature for details.).

Table

3.2. The modified propagation operator

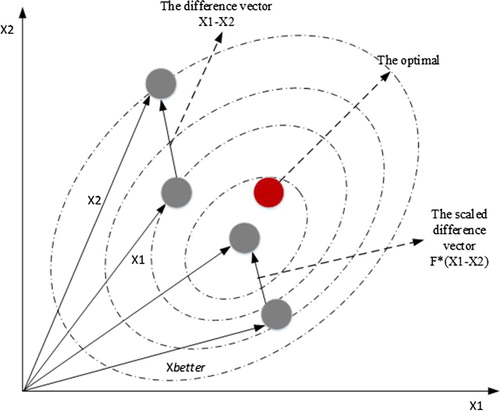

The propagation operation is executed after the population is initialised and a global search within the specified search range. The position of the new water wave is calculated according to Equation (1) to balance the global exploration and local exploitation. The disadvantage of WWO is that the undesirable search accuracy leads to the solution found is deviated from the global optimal solution. Therefore, the mutation strategy of DE is introduced to give the water wave a vector that propagates in a better direction to find the optimal solution. Several mutation strategies of DE are widely applied in evolutionary algorithms and each mutation strategy has its advantages and disadvantages. The

is frequently appeared in numerous literatures. The difference step size of the population is determined by the mutation operator

. However, the difference step size of the population is controlled by the wave length

in the proposed algorithm. The reason is that the greater the fitness value, the shorter the wavelength and the smaller the corresponding propagation range. Not only that the breaking is closely related to the wave length reduction. Thus, the wave length is applied to control the influence of the difference vector. Meanwhile, a random variable named

(“better” represents “better solutions in terms of probability”) (He & Zhou, Citation2017) is generated for each individual

by sampling the Gaussian distribution

. Furthermore,

is applied to guide the search direction of

to enhance the exploration. Combined with Equations (13) and (14), the modified propagation operation is applied to assist the proposed algorithm.

(12)

(12)

(13)

(13)

(14)

(14)

(15)

(15) where

is a mutation population,

is wavelength,

is the current better solution,

is the maximum and

is the minimum wavelength reduction coefficient,

,

.

The process of the mutation strategy is illustrated in Figure .

3.3. The modified breaking operator

In original WWO, the individual which is generated by propagation operator is to find global optimal. However, the global optimal solution found in this way is relatively random. Although the optimal solution is found in the population via the change of the wavelength to a certain extent, the direction of finding the optimal solution is uncertain. Therefore, this problem is solved by proposing the mutation-propagating individuals., Let the population find the position which is closer to the optimal solution through the variable wavelength based on the original propagation according to Equation (1). In each iteration, a mutation propagation individual is approached toward the optimal solution according to Equation (13). After the modified propagation operator, the population for the breaking operation is determined by a crossover operator. The breaking operation is performed by WWO to find the optimal solution

. In this paper, the identical mutation strategy, as the propagation operator, is applied in a modified breaking operator. The specific method is each solitary wave

of

is obtained by randomly selecting

dimensions (where

is a random number between 1 and a predefined number

) and adding variation (The variation is obtained by the mutation strategy mentioned in section 3.2) to the original position at each dimension

. A new solitary wave

is generated in the search space according to Equation (16).

(16)

(16)

(17)

(17) where

is the breaking coefficient, and

is a Gaussian random number with mean 0 and standard deviation 1,

is the mutation population.

3.4. Local search strategy

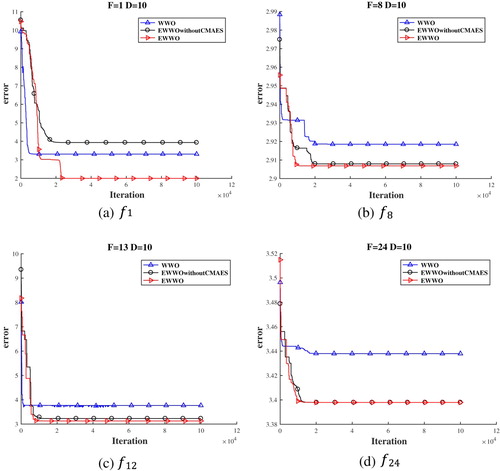

The refraction operator is to avoid search stagnation, escape the local optimum and enhance the diversity of the population. When the water wave height is reduced to 0, the WWO tends to fall into the local optimal solution. At this time, the algorithm has escaped the local optimum by the refraction operation. After the propagation operator and the breaking operator are performed, the fitness values of the population remain unchanged and the local search strategy is executed to help the algorithm escape the local optimum, the newly generated water wave continuously approaches the optimal water wave. However, the risk of premature convergence is increased by the refraction operator. Therefore, CMA-ES is employed to reduce the complexity of the algorithm and improve the search ability of the algorithm. The detailed process is as follows. Assume that the wave height is ignored. When the global optimal solution is updated after the breaking operator, the covariance matrix adaptation is applied as a local search strategy for adaptive adjustment and self-learning. As a well-performing evolutionary algorithm, the covariance matrix adaptation shows desirable results in medium-scale complex optimisation problems, such as non-separable, ill-conditioned or rugged and multi-modal. Meanwhile, CMA-ES has a desirable ability of convergence. In this paper, the covariance matrix adaptation, as a local search strategy, helps EWWO to escape the local optimum by storing the information of the historical optimal solution in the covariance matrix and continuously updating the covariance matrix adaptation to learn from the historical optimal solution. The optimal solution is found by the individuals in the population along the direction in which the covariance matrix is updated. In addition, in order to verify the impact of the covariance matrix adaptation on EWWO, in this section, the original WWO has been compared with the EWWO without a local search strategy CMA-ES and the proposed EWWO on 10D. The three algorithms are testified on the CEC2017 benchmark problem set (The detailed of the CEC2017 benchmark is described in Section 4). As seen in Figure , EWWO has better convergence speed and convergence accuracy than the original WWO and EWWO without CMA-ES. From Figure (a), EWWO falls into the local optimum after the new breaking operator is executed. Afterwards, EWWO is disturbed by the covariance matrix adaptation to escape the local optimum. Compared with EWWO without CMA-ES, the convergence speed and convergence accuracy of EWWO are improved in other three.

3.5. Pseudocode of EWWO

With the above-detailed description, the pseudocode of the EWWO is illustrated in Algorithm 2.

According to the pseudocode, it is clear that a new mutation strategy and the covariance matrix adaptation work together to balance the exploration and exploitation of the EWWO. However, it is not a mere mix of the two algorithms. After the initialisation of the population, the propagation operation is executed to control the global exploration, where the new individuals are generated by sampling a Gaussian distribution and a mutation strategy combined with the wavelength

, the global exploration is stressed. To ensure that the diversity of the population is not destroyed, the identical mutation strategy is applied into the breaking operation. At the moment, the algorithm is easy to fall into local exploitation and the covariance matrix adaptation is introduced to address the problem. Since the CMA-ES has the ability of self-learning and the covariance matrix is updated to control the current population and learn from the history optimal solution to enhance the local exploitation. Furthermore, by balancing the global exploration and the local exploitation, EWWO is testified on the CEC2017 benchmark test functions and expected to solve the practical problems.

Table

3.6. Complexity analysis

A set of fitness evaluations and iterations are given to generally calculate the time complexity of an EA (Ren, Zhang, Wen, & Feng, Citation2013) by an analysing the extra time in each generation. However, the time of function evaluations is not considered. In this paper, the population size and the dimension of problem are denoted as and

, respectively. In terms of initialisation population evolution, the time complexity is

, which are obtained by lines 2–10 in Algorithm 3. From Algorithm 4, it takes

to execute the LSHADE-SPACMA at each generation in line 6. During the update of the propagation and breaking operations,

(lines 7–17) is taken to generate the next generation. In addition, the time complexity of the CMA-ES, which is analysed by Hansen et al. (Citation2014), is reduced from

to

(line 18). Furthermore, the update of all parameters takes

. Therefore, the overall time complexity of EWWO is

.

3.7. Convergence analysis of EWWO

In general, the analysis of the convergence of evolutionary algorithms helps to understand the algorithm and guide the improvement of the algorithm. The convergence of evolutionary algorithms mainly refers to whether the algorithm finally searches for and retains the global optimal solution of the problem under the condition that the iteration time or algebra tends to infinity, or whether the individual in the whole population become global optimal according to Zhang and Muhlenbein (Citation2004) In this paper, the iterative method (Argyros, Behl, Machado, & Alshomrani, Citation2019) is introduced to analyse the convergence of EWWO. The itterative method adopted an initial hypothesis value to generate a sequence of approximate solutions for a set of problems, if the corresponding sequence converges for given initial approximations, the iterative method is a convergent process.

Theorem 3.1.

.

Theorem 3.2:

Suppose that . Then, the necessary and sufficient condition for

is the spectral radius of the matrix

,

.

Theorem 3:

Suppose that is a system of equation and its first-order linear stationary iteration method is

. For the arbitrarily selected initial vectors

, the necessary and sufficient condition for convergence of the iteration method is the spectral radius of the matrix

,

.

Proof:

(1) Sufficiency. Let the exact solution is , the error vector is

.

(18)

(18) Let

, according to Theorem 3.2,

, then,

for

, namely,

. (2) Necessity.

for

,

. It is obvious that the limit

is the solution of equation set and for

,

According to Theorem 3.1 and Theorem 3.2,

.

The convergence analysis of the EWWO is analysed by the iterative method. It has proved that any individual in WWO converges in two special cases by simplifying the target problem and parameter settings in the literature (Zheng & Zheng, Citation2016). (1) Propagation operator is only performed. (2) Refraction operator is only performed. However, refraction operator has been removed in this paper, only the MPO is performed and analysed. Based on the basic process of the WWO, the position of the water wave is affected by its wavelength

, and the change of wavelength

is related to the fitness of the water wave

. Let

denote the iteration number of EWWO,

,

is a random number. Equations (2) and (17) are expressed as follows.

(19)

(19)

(20)

(20) Equation (23) is described as follows.

(21)

(21)

(22)

(22) And so forth,

(23)

(23) Let

represent the exponential term

and

representsthe mean value of

Equation (23) is described as follows.

(24)

(24) At this time, the propagation formula is described as follows.

(25)

(25) where

.

Assuming in the iteration, if

,

. If

,

If

,

. Therefore,

. When

,

, namely, the position of the water wave no longer changes with time and the EWWO iteratively converges.

4. The experiment and comparisons

In this section, EWWO is evaluated on the CEC 2017 benchmark problem set and compared with the standard WWO algorithm, three state-of-the-art variant algorithms of the WWO and CMA-ES. The compared algorithms are listed as follows. WWO (Zheng, Citation2015), MWWO (Soltanian, Derakhshan, & Soleimanpour, Citation2018), SCWWO (Zhang et al., Citation2018), Sim-WWO (Zheng & Bei, Citation2015), CMA-ES (Zheng & Bei, Citation2015). All the parameter settings are shown in Table . The benchmark problem set is divided into four classes: Unimodal Functions (), Simple Multimodal Functions (

), Hybrid Functions (

) and Composition Functions (

). More details for a description of the benchmark functions are found in the literature (Awad, Ali, Liang, Qu, & Suganthan, Citation2016). The EWWO was coded using MATLAB. Section 4.1 describes the experimental condition when the simulation is run. Section 4.2 discusses the experimental results on 30 benchmark test functions in IEEE CEC2017. The experimental results of EWWO with some state-of-the-art proposed algorithms are described in Section 4.3. It is necessary to point out that according to the evaluation criteria of CEC2017,

has been excluded because it shows unstable behaviour especially for higher dimensions, and significant performance variations for the same algorithm implemented in MATLAB. The simulation experiments were implemented on a personal computer (PC) with Intel (R) Core (TM) i7-6700 CPU 3.4GHz and 8.00GB memory with a windows Server 2012 OS. The maximum number of fitness evaluations (

) is set to

for each algorithm on the problems to ensure a fair comparison. A brief description of compared algorithms is as follows.

MWWO is a modified variant of WWO, which is a new kind of exploration parameter to increase the exploration ability of algorithm and uses an exponential adaptive

strategy.

SCWWO is the sine cosine algorithm combined with WWO. Additionally, the elite opposition-based learning strategy is introduced into the refraction operation to improve the diversity of the population and enhance the exploration capability of WWO.

Sim-WWO is a simplified version of WWO by removing the refraction operation and introducing a population size reduction strategy to balance exploration and exploitation.

CMA-ES is an evolutionary method that updates and samples population by covariance matrix and evolutionary paths to obtain optimal solutions. The detailed parameter setting is referred to the literature according to Section 2.2.

Table 1. Parameter settings of competitive algorithms.

4.1. Parameters analysis

The parameters have important influence on the performance of stochastic algorithms, whereas it is difficult to exactly determine their values. Therefore, the goal of the parameter control is to trace desirable parameter values during the optimisation procedure. In this paper, the design of experiments method (DOE) (Montgomery, Citation2006) is adopted to calibrate the parameters of EWWO. There are five critical parameters to analyse according to the description of EWWO in Section 3. (the crossed factor),

(the wavelength reduction coefficient),

(the breaking reduction coefficient),

(which solutions is utilised in updating the population distribution is determined by

) and

(search accuracy is determined by

). In this paper, parameter adaptation is applied to adjust the two parameters

and

. A linear adaptive

strategy is updated as follows.

(26)

(26) where

is the maximum and

is the minimum wavelength reduction coefficient,

,

.

has a larger value to improve the performance of the global search in the exploration stage.

linearly decreases from 0.01 to –0.001 according to the standard WWO as follows.

(27)

(27) where

is the maximum and

is the minimum breaking reduction coefficient.

For the remaining three parameters, a full factorial design is considered where the different choices for the five parameters are considered. The choice of each parameter is as follows. ,

,

. All the possible combinations of the three parameters is in total of

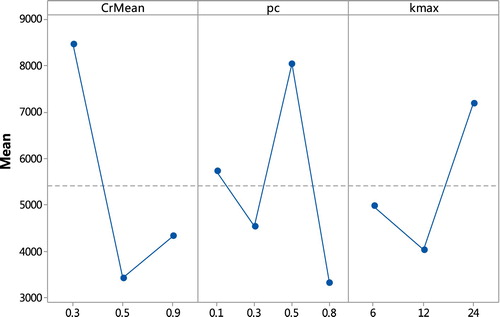

. The experimental results are analysed by means of a multifactor analysis of variance (ANOVA) according to the literatures (Shao, Pi, & Shao, Citation2018). The importance of the interaction between parameters is illustrated by the ANOVA. The

in ANOVA is an indicator of significance when the

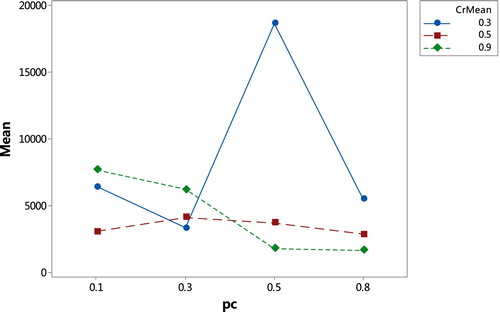

. The ANOVA results of parameter calibration are shown as Table .

Table 2. The experimental result of the ANOVA on parameter calibration of EWWO.

As shown in Table , the maximal corresponds to

. It suggests that the

has the most important effect over the average performance of EWWO among the considered factors. According to the main effect plot of parameters in Figure , the choice of

is better than other candidates. The diversity of population evolution and the convergence rate of population are affected by the value of

. From Figure , the diversity of population is enhanced, but the algorithm is easy to premature convergence when

. When

, the exploitation is improved, while the diversity of population is destroyed. However, the main effect plot is not comprehensive if there are significant interactions among the parameters. The interaction between

and

explains that

is the best choice on the basis of Figure .

The second biggest corresponds to

. It has demonstrated that the interactions between the crossed factor

and the updating rate

are significant. In addition,

interaction is significant as the

is less than 0.05.

and

lead to the best performance of EWWO according to Figure . However, in order to maintain the diversity of population and balance the exploration and exploitation, the role of

is important according to Figure .

To sum up, the values of the parameters are determined to apply in the EWWO. ,

and

.

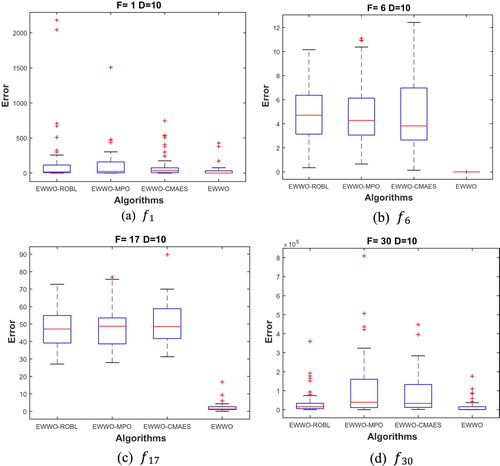

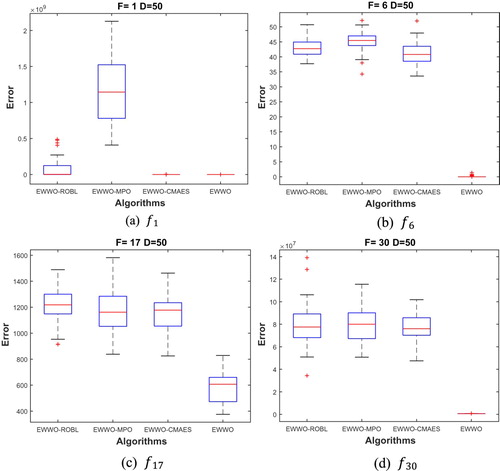

4.2. Operator analysis

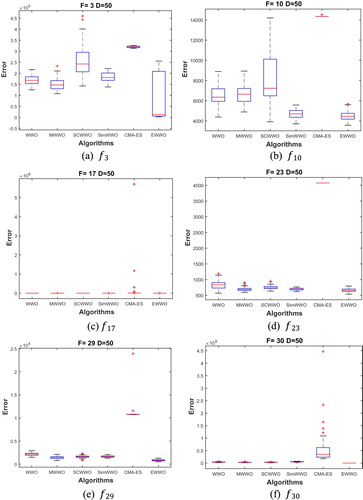

In this section, EWWO is compared with EWWO without random OBL (EWWO-ROBL), EWWO without the MPO (EWWO-MPO) and EWWO without the covariance matrix adaptation (EWWO-CMAES) to verify the impact of each strategy on the proposed algorithm. The average results of EWWO, EWWO-ROBL, EWWO-MPO and EWWO-CMAES on 10 and 50 dimensions are testified on the CEC2017 test suite (Awad et al., Citation2016) and the stability of each category is shown with boxplots. The effectiveness of each strategy is demonstrated by experimental results. The proposed algorithm is superior to EWWO-ROBL, EWWO-MPO and EWWO-CMAES in high- and low-dimensional functions. The performance of the multiple strategy algorithm is better than the single strategy algorithm owing to the difference of the landscape of each function and the diversity. The boxplots of certain typical benchmark functions for each strategy on 10 and 50 dimensions are shown in Figures and , respectively, the stability of EWWO is stronger than EWWO-ROBL, EWWO-MPO and EWWO-CMAES, namely, the combination of the three operations contributes to enhance the stability of EWWO.

4.3. Experimental analysis and result

In order to evaluate the performance of the proposed EWWO, four groups of the simulations are executed on 10, 30, 50 and 100 dimensions over the CEC2017 test suite, and five statistical metrics are calculated, that is Best, Worse, Median, Mean and Std (Awad et al., Citation2016). These metrics are used to analyse the performance of EWWO. The results of EWWO, including the mean error and standard deviation, for 100-dimensions are shown in Table . The results of 10, 30 and 50-dimensions are moved into the supplementary materials. The best value in each run is set to zero if the value is less than 10e-8 in this research. All experimental algorithms are run independently 51 times on each test problem, and Mean and Std metrics are calculated. The experimental results are depicted. The best result for each function is shown in boldface. For the evolution algorithms to solve CEC2017 benchmark functions is an immense challenge. With the dimensions increasing of functions, the optimisation problems become increasingly complicated. All the benchmark functions with are also executed to detect the stable of EWWO and the results of the five algorithms for

are shown in Table , which show that the performance of EWWO is better than that of other variants of WWO on the most functions. EWWO significantly outperforms other variants of WWO with

and the performance of EWWO increases with the dimension increased. The detailed analysis is as follows. As shown in Table , the EWWO performs desired results in simple multimodal functions from

,

,

and

for 100 dimensions. While for hybrid functions and composition functions from

,

,

and

, the proposed algorithm achieves the optimal solution. For the remaining composition functions, EWWO shows an advantage from

and

. In sum, the EWWO has obtained good results on the most of functions from low dimensions to high dimensions and EWWO outperforms other compared algorithms on the CEC 2017 benchmark especially on high-dimensional functions.

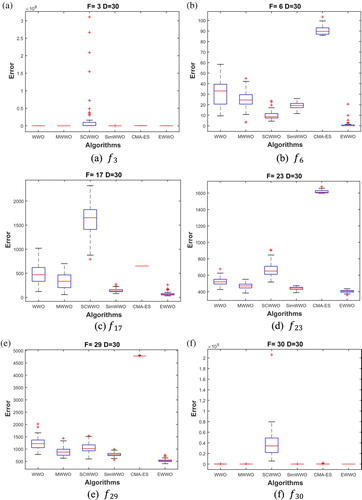

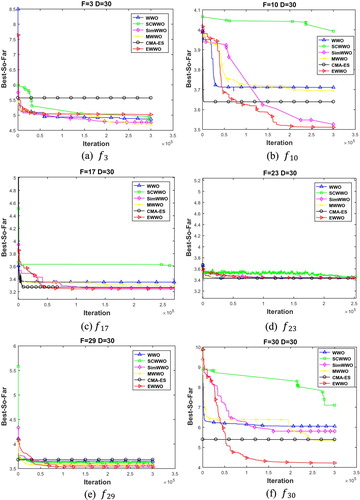

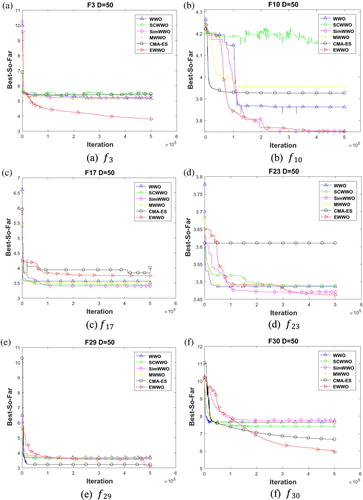

The statistical tests show that EWWO has a significant improvement than the compared algorithms to testify the performance of the EWWO. The Wilcoxon’s test (García, Molina, Lozano, & Herrera, Citation2009), which compares the algorithms in pairs, is introduced to detect the significant difference between EWWO and other algorithms. Tables – show the statistical analysis result of the Wilcoxon’s test between EWWO and other variants of WWO on the functions with different dimensions, considering EWWO as a control algorithm. If EWWO is significantly better than the compared algorithm with a confidence level, the test results are marked in boldface. In Tables –, is the sum of the rank that EWWO outperforms than another algorithm in the current row, and

is the sum of the levels that EWWO another algorithm in the current row outperforms than EWWO. A yes means that EWWO is significantly superior to other algorithms in the current row, no is the opposite mean. The stability of the result for the algorithms is shown in Figures and for 30 and 50 dimensions, respectively. Meanwhile, the convergence plots of the compared algorithms are described in Figures and on

,

,

,

,

,

which includes four categories of CEC2017 benchmark function. It is observed that the convergence rate of EWWO is better than the compared algorithms in 50 dimensions. The modified propagation and breaking operations play a desirable role to balance the global exploration and local exploitation. In addition, the convergence rate and the convergence speed are improved by the covariance matrix adaptation. However, no algorithms are better than the linear enumeration of the search space or the pure random search algorithm according to the no-free lunch theorem (NFL) (Wolpert, Citation1997).

Figure 10. Convergence plots of EWWO, WWO, SCWWO, Sim-WWO, CMA-ES and MWWO on some typical benchmark functions (30D).

Figure 11. Convergence plots of EWWO, WWO, SCWWO, Sim-WWO, CMA-ES and MWWO on some typical benchmark functions (50D).

Table 3. p-Value of Wilcoxon’s rank-sum test for 10D.

Table 4. p-Value of Wilcoxon’s rank-sum test for 30D.

Table 5. p-Value of Wilcoxon’s rank-sum test for 50D.

Table 6. p-Value of Wilcoxon’s rank-sum test for 100D.

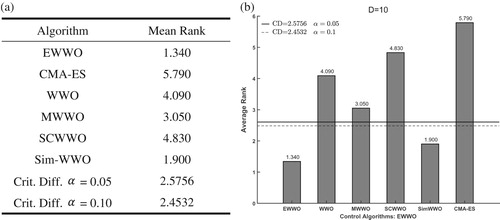

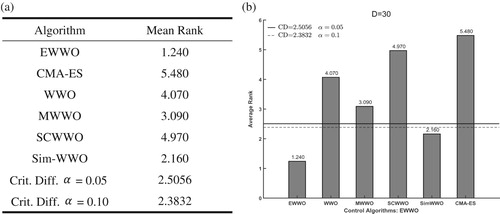

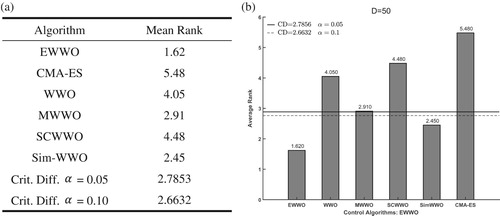

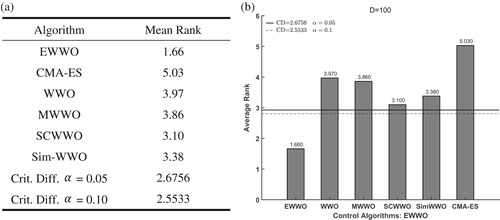

To further check the significant differences between EWWO and the five competitors, the Friedman’s test (García et al., Citation2009) is carried out to detect all compared algorithms. The rank of EWWO and compared algorithms on Friedman’s test is shown in Figures – for . All the figures about Friedman’s test illustrate that the proposed algorithm has the best ranking among all algorithms. To evaluate the significance level of all algorithms, an additional Bonferroni–Dunn’s procedure is applied as a post hoc procedure to calculate the critical difference (CD in Equation (28)) for comparing their differences with α=0.05 and

.

(28)

(28) In Equation (34) parameters

and

are the number of algorithms to be compared and the number of benchmarks, respectively. There are

and

in the experimental evaluations. When

,

is 2.576 and

,

is 2.327 from Table B.16 (two-tailed

) of (Ransom, Citation1974). Figures – sketch the results of Bonferroni–Dunn’s test which considers EWWO as a control algorithm. There is a significant difference between EWWO and the five compared algorithms with

and

for 30 and 50 dimensions, respectively. However, there is no significant difference between EWWO and Sim-WWO with

and

for 10 dimensions.

In sum, statistical analysis of the results obtained by comparing the algorithms in the study shows that the proposed EWWO is the best for the 30, 50 and 100 dimensions on the multimodal functions, hybrid functions and composition functions, respectively. Although EWWO is not able to achieve the best results on some test problems, it achieves suboptimal results compared to all experimental algorithms. Compared with other algorithms, EWWO achieves the best results on unimodal and multimodal functions of different dimensions. On the remaining test questions, the proposed algorithm still maintains the stable solution performance.

The experimental results of CMA-ES, WWO, SCWWO, Sim-WWO and EWWO are shown in Table for 100-dimensions as follows.

Table 7. The results of all algorithms for 100-dimensional benchmark functions.

5. Conclusions

In this paper, an enhanced water wave optimisation assisted by the random OBL, mutation strategies and CMA-ES, named EWWO, are proposed to enhance the convergence rate and computational accuracy of WWO. In EWWO, a new modified operation for propagation operation and breaking operation is designed to improve the ability of global search and to balance the exploration and exploitation. Crossover operation between propagation and breaking operations are embedded to maintain the diversity of population. Furthermore, the refraction operation is removed to improve the convergence rate of EWWO and CMA-ES, as a local search strategy, is added to improve the calculation accuracy of EWWO and avoid EWWO falling into the local optimal solution and taking place the phenomenon of premature convergence. In addition, the wavelength reduction coefficient and the breaking coefficient are linearly decreased in order to find the optimal solution in a variable state. The other parameters are testified on DOE. CEC 2017 benchmark functions are applied to verify the performance of EWWO and the experiential results demonstrate that the EWWO is superior to WWO, SCWWO, MWWO, Sim-WWO and CMA-ES. To sum up, EWWO is an efficiency and effectiveness algorithm.

For the future work, several issues are worthy to further research. It is a worthwhile research direction for applying EWWO to solve the combinatorial optimisation problem in practical application domains. For instance, the standard WWO has been applied to solve the energy consumption problem, the distribution flow shop scheduling problem (DFSP) for discrete optimisation problems. Furthermore, the proposed algorithm will be utilised in the other fields of combination to solve real optimisation problems.

Supplemental_material

Download MS Word (66 KB)Disclosure statement

No potential conflict of interest was reported by the authors.

ORCID

Fuqing Zhao http://orcid.org/0000-0002-7336-9699

Additional information

Funding

References

- Abdollahpour, S., & Rezaian, J. (2016). Two new meta-heuristics for no-wait flexible flow shop scheduling problem with capacitated machines, mixed make-to-order and make-to-stock policy. Soft Computing, 21(12), 1–19.

- Allahverdi, A., Aydilek, H., & Aydilek, A. (2018). No-wait flowshop scheduling problem with two criteria; total tardiness and makespan. European Journal of Operational Research, 269(2), 590–601.

- Argyros, I. K., Behl, R., Machado, J. A. T., & Alshomrani, A. S. (2019). Local convergence of iterative methods for solving equations and system of equations using weight function techniques. Applied Mathematics and Computation, 347, 891–902.

- Awad, N., Ali, M., Liang, J., Qu, B., & Suganthan, P. (2016). Problem definitions and evaluation criteria for the CEC 2017 special session and competition on single objective real-parameter numerical optimization. Technical Report, Nanyang Technological University, Singapore, November 2016.

- Awad, N. H., Ali, M. Z., Mallipeddi, R., & Suganthan, P. N. (2018). An improved differential evolution algorithm using efficient adapted surrogate model for numerical optimization. Information Sciences, 451, 326–347.

- Bijan, M. G., & Pillay, P. (2019). Efficiency estimation of the induction machine by particle swarm optimization using rapid test data with range constraints. IEEE Transactions on Industrial Electronics, 66(8), 5883–5894.

- Chen, H. W., Hou, Y. J., Luo, Q. X., Hu, Z., & Yan, L. Y. (2018). Text feature selection based on water wave optimization algorithm. In 10th International Conference on Advanced Computational Intelligence (ICACI), 546-551. New York: IEEE.

- Cheng, L. I. (2010). A new metaheuristic bat-inspired algorithm. Computer Knowledge & Technology, 284, 65–74.

- Das, A. K., Das, S., & Ghosh, A. (2017). Ensemble feature selection using bi-objective genetic algorithm. Knowledge-Based Systems, 123, 116–127.

- Das, S., Mullick, S. S., & Suganthan, P. N. (2016). Recent advances in differential evolution – an updated survey. Swarm & Evolutionary Computation, 27, 1–30.

- García, S., Molina, D., Lozano, M., & Herrera, F. (2009). A study on the use of non-parametric tests for analyzing the evolutionary algorithms’ behaviour: A case study on the CEC’2005 special session on real parameter optimization. Journal of Heuristics, 15(6), 617.

- Geem, Z. W., Kim, J. H., & Loganathan, G. V. (2001). A new heuristic optimization algorithm: Harmony search. Simulation, 76(2), 60–68.

- Grobelny, J., & Michalski, R. (2017). A novel version of simulated annealing based on linguistic patterns for solving facility layout problems. Knowledge-based Systems, 124, 55–69.

- Hansen, N., & Hansen, N. (2006). The CMA evolution strategy: A comparing review. Studies in Fuzziness & Soft Computing, 192, 75–102.

- Hansen, N., Müller, S. D., & Koumoutsakos, P. (2014). Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES). Evolutionary Computation, 11(1), 1–18.

- He, X., & Zhou, Y. (2017). Enhancing the performance of differential evolution with covariance matrix self-adaptation. Applied Soft Computing, 64, 227–243.

- Kennedy, J., & Eberhart, R. (1995, 27 Nov.-1 Dec. 1995). Particle swarm optimization. Proceedings of ICNN’95 – International Conference on Neural Networks.

- Mahdavi, S., Rahnamayan, S., & Deb, K. (2018). Opposition based learning: A literature review. Swarm & Evolutionary Computation, 39, 1–23.

- Mohamed, A. W., Hadi, A. A., Fattouh, A. M., & Jambi, K. M. (2017). LSHADE with semi-parameter adaptation hybrid with CMA-ES for solving CEC 2017 benchmark problems. Evolutionary Computation, 145–152. doi:10.1109/CEC.2017.7969307.

- Montgomery, D. C. (2006). Design and analysis of experiments. New York, NY: John Wiley & Sons.

- Price, K. V. (1999). An introduction to differential evolution. In C. David, D. Marco, G. Fred, D. Dipankar, M. Pablo, P. Riccardo, & V. P. Kenneth (Eds.), New ideas in optimization (pp. 79–108). London: McGraw-Hill.

- Ransom, J. (1974). Biostatistical analysis J. H. Zar. American Biology Teacher, 36(5), 316–316.

- Rashedi, E., Nezamabadi-pour, H., & Saryazdi, S. (2009). GSA: A gravitational search algorithm. Information Science, 179(13), 2232–2248.

- Ren, Z., Zhang, A., Wen, C., & Feng, Z. (2013). A scatter learning particle swarm optimization algorithm for multimodal problems. IEEE Transactions on Cybernetics, 44(7), 1127–1140.

- Shao, W., Pi, D., & Shao, Z. (2018). A hybrid discrete teaching-learning based meta-heuristic for solving no-idle flow shop scheduling problem with total tardiness criterion. Computers & Operations Research, 94, 89–105.

- Shao, Z., Pi, D., & Shao, W. (2018a). Estimation of distribution algorithm with path relinking for the blocking flow-shop scheduling problem. Engineering Optimization, 50(5), 894–916.

- Shao, Z., Pi, D., & Shao, W. (2018b). A novel discrete water wave optimization algorithm for blocking flow-shop scheduling problem with sequence-dependent setup times. Swarm and Evolutionary Computation, 40, 53–75.

- Simon, D. (2008). Biogeography-based optimization. IEEE Transactions on Evolutionary Computation, 12(6), 702–713.

- Soltanian, A., Derakhshan, F., & Soleimanpour, M. (2018). MWWO: Modified water wave optimization. In 2018 3rd Conference on Swarm Intelligence and Evolutionary Computation (CSIEC).

- Storn, R., & Price, K. (1997). Differential evolution – a simple and efficient heuristic for global optimization over continuous spaces. Journal of Global Optimization, 11(4), 341–359.

- Tian, Z., & Zhang, C. (2018). An improved harmony search algorithm and its application in function optimization. Journal of Information Processing Systems, 14(5), 1237–1253.

- Wolpert, D. (1997). Macready: No free lunch theorems for optimization. IEEE Transactions on Evolutionary Computation, 1(1), 67–82.

- Wu, X.-B., Liao, J., & Wang, Z.-C. (2015). Water wave optimization for the traveling salesman problem. In International Conference on Intelligent Computing (ICIC) 9225, 137–146.

- Xu, Q., Wang, L., Wang, N., Hei, X., & Zhao, L. (2014). A review of opposition-based learning from 2005 to 2012. Engineering Applications of Artificial Intelligence, 29, 1–12.

- Yang, X.-S., Deb, S., & Mishra, S. K. (2018). Multi-species cuckoo search algorithm for global optimization. Cognitive Computation, 10(6), 1085–1095.

- Yu, T., Qiang, Z., & Benfei, Z. (2019). A genetic algorithm based on spatiotemporal conflict between continuous berth-allocation and time-varying specific crane assignment. Engineering Optimization, 51(3), 390–411.

- Yun, X., Feng, X., Lyu, X., Wang, S., & Liu, B. (2016, July 24-29). A novel water wave optimization based memetic algorithm for flow-shop scheduling. 2016 IEEE Congress on Evolutionary Computation (CEC), 1971–1976. New York: IEEE.

- Zhang, Q., & Muhlenbein, H. (2004). On the convergence of a class of Estimation of distribution algorithms. IEEE Transactions on Evolutionary Computation, 8(2), 127–136.

- Zhang, B., Zhang, M. X., Zhang, J. F., & Zheng, Y. J. (2015). A water wave optimization algorithm with variable population size and comprehensive learning. International Conference on Intelligent Computing, 9225, 124–136.

- Zhang, J., Zhou, Y., & Luo, Q. (2018). An improved sine cosine water wave optimization algorithm for global optimization. Journal of Intelligent & Fuzzy Systems, 34(4), 2129–2141.

- Zhao, S., Li, Z., Xin, Y., Wang, K., Xin, L., & Bo, L. (2017). IIR filters designing by water wave optimization. IEEE International Conference on Control & Automation (347–352). New York, NY: IEEE.

- Zhao, F., Liu, Y., Shao, Z. S., Jiang, X., Zhang, C., & Wang, J. B. (2016). A chaotic local search based bacterial foraging algorithm and its application to a permutation flow-shop scheduling problem. International Journal of Computer Integrated Manufacturing, 29(9), 962–981.

- Zhao, F., Liu, H., Zhang, Y., Ma, W., & Zhang, C. (2018). A discrete water wave optimization algorithm for no-wait flow shop scheduling problem. Expert Systems with Applications, 91, 347–363.

- Zhao, F., Liu, Y., Zhang, C., & Wang, J. B. (2015). A self-adaptive harmony PSO search algorithm and its performance analysis. Expert Systems with Applications, 42(21), 7436–7455.

- Zhao, F., Qin, S., Zhang, Y., Ma, W. M., Zhang, C., & Song, H. B. (2019). A two-stage differential biogeography-based optimization algorithm and its performance analysis. Expert Systems with Applications, 115, 329–345.

- Zhao, F., Xue, F., Zhang, Y., Ma, W. M., Zhang, C., & Song, H. B. (2018). A hybrid algorithm based on self-adaptive gravitational search algorithm and differential evolution. Expert Systems with Applications, 113, 515–530.

- Zhao, F., Zhang, L., Liu, H., Zhang, Y., Ma, W., Zhang, C., & Song, H. (2019). An improved water wave optimization algorithm with the single wave mechanism for the no-wait flow-shop scheduling problem. Engineering Optimization, 51(10), 1727–1742.

- Zheng, Y. (2015). Water wave optimization: A new nature-inspired metaheuristic. Computers & Operations Research, 55, 1–11.

- Zheng, Y., & Bei, Z. (2015). A simplified water wave optimization algorithm. 2015 IEEE Congress on Evolutionary Computation (CEC).

- Zheng, B., & Zheng, Y. J. (2016). Convergence analysis of water wave optimization algorithm. Computer Science, 43, 41–44.