?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

The diversity of the population is a key factor for particle swarm optimisation (PSO) when dealing with most optimisation problems. The best previously visited positions of each particle are the exemplar in PSO to guide particle swarm to search, and the diversity of the population can be controlled by these best previously visited positions. Base on this idea of to control the diversity of population to improve the performance of PSO, this paper proposes a novel PSO with mutation breeding (MBPSO), which performs a mutation breeding operation periodically, to control the diversity of the population to improve the global optimisation ability. The mutation breeding operation can be divided into two steps: breeding and mutation. The breeding step is to replace all of best previously visited positions of each particle with the global best previously visited position, and the mutation step is to perform a mutation operation for those new generated best previously visited positions. In addition, we adopt a new updating mechanism of the global best previously position to avoid falling into local optimum. The experimental results on a suit of benchmark functions verifies that the proposed PSO is a competitive algorithm when compare with other PSO variants.

1. Introduction

In recent years, particle swarm optimisation (PSO), which was first introduced by Kennedy and Eberhart (Citation1995), has been widely used and researched by many scholars due to its simple algorithm description, easy to understand, and requires fewer parameters to be adjusted (Han & Liu, Citation2014; Pare, Kumar, Bajaj, & Singh, Citation2019; Tran, Xue, Zhang, & Nguyen, Citation2016). PSO has good extensibility to adapt various optimisation problems both in single-objective problems and multi-objective problems (Li, Wang, & Xu, Citation2017; Lv, Wang, Han, Zhao, & Wang, Citation2019), and it has been widely applied to many real optimisation problems (Wang et al., Citation2018), such as neural networks optimisation (Han & Zhu, Citation2011), flow-shop scheduling problem (Huang & Chen, Citation2009), mechanical engineering optimisation problem (Guedria, Citation2016), image segmentation problem (Pare et al., Citation2019; Tan, Chen, & Li, Citation2018), etc. (Cao, Jiang, & Wang, Citation2017; Guan, Yang, Gu, Jiang, & Lin, Citation2018; Jegan & Ravindran, Citation2017; Lin et al., Citation2017; Lu, Yan, Zhang, & Levy, Citation2014; Raja, Citation2014; Sivakoti, Basava, Balaga, & Sannidhi, Citation2017; Tan & Xia, Citation2018; Tran, Citation2018; Wang, Shi, Ding, & Gu, Citation2016).

However, PSO also has some disadvantages such as premature convergence and easiness getting trapped into local minima (Li, Zhan, Lin, Zhang, & Luo, Citation2015), especially in dealing with some high-dimensional problems (Han & Liu, Citation2015). Many scholars have done a lot of research on this issue, and many variants have been proposed to improve the performance of PSO algorithm. In general, these variants can be classified under four categories (Tanweer, Suresh, & Sundararajan, Citation2015):

The first category is based on algorithm parameter settings. In order to improve the convergence performance, a method of linearly varying inertial weight (PSO-LVIW) was proposed in Shi and Eberhart (Citation1998), and this strategy was adopted by many PSO variants. In Nagra, Han, and Ling (Citation2019), an improved hybrid self-inertia weight adaptive PSO with gradient-based local search was proposed. In Taherkhani and Safabakhsh (Citation2016), this paper proposed a strategy of dynamically adjusting the inertial weight or acceleration coefficients according to the state of the algorithm, which can reduce the risk of premature convergence. In Eberhart and Shi (Citation2000), a new constriction coefficient was employed to control the convergence of the particles, which can improve the convergence speed. Ecological particle swarm optimisation algorithm was proposed in Kang, Wang, and Wu (Citation2008), which defines a ecological hierarchy and competition model based on population density, and assigns the different inertia weight factors for different ecological hierarchies. In Tanweer et al. (Citation2015), a self regulating particle swarm optimisation algorithm was proposed, which uses a self-regulating inertia weight strategy and a self-perception of global search direction strategy for intelligent exploitation of the solution space. An adaptive PSO with supervised learning and control was introduced in Dong and Zhou (Citation2017), through the method that treats PSO with optimisation problem as a system to be controlled, to choose parameters adaptively to improve its exploration competence.

The second category is based on neighbourhood topology. Different kinds of neighbourhood topology such as fully connected, wheel connected, Von-Neumann connected were researched and compared in Kennedy and Mendes (Citation2002). A novel method of using a weighted sum of all the neighbouring particles to update the position of a given particle which named fully informed particle swarm was proposed in Mendes, Kennedy, and Neves (Citation2004). Club-based PSO and adaptive default membership club based PSO was proposed in Emara (Citation2009), which split the particle swam into some small groups, and dynamically change the group members. In Liang, Li, Zhang, and Zhou (Citation2015), a variation called adaptive PSO based on clustering was introduced, which via a K-means clustering operation to divide the swarm dynamically and use a ring neighbourhood topology for information sharing among these clusters. In Liang, Qin, Suganthan, and Baskar (Citation2006), a new variant PSO named Comprehensive Learning PSO (CLPSO)was proposed, in this paper a comprehensive learning strategy was adopted which uses all other particles' historical best information to update a particle's velocity. In Cheng and Jin (Citation2015), a social learning particle swarm optimisation was introduced in this paper, which uses the strategy of the particles learning from any better particles in the current swarm.

The third category is to improve the search strategy of PSO. Attractive and Repulsive PSO (ARPSO)was proposed in Riget and Vesterstrom (Citation2002), which uses a diversity measure to control particle's motion strategy, to overcome the problem of premature convergence. Spatial Extension PSO (SEPSO) (Krink, Vesterstrom, & Riget, Citation2002) endowing each particle with a radius, then causing particles to bounce off of one another, to prevent particles overcrowding in a small range. Bare-Bones PSO (BBPSO) (Kennedy, Citation2003) through Gaussian normal distribution to update the particles instead of using acceleration coefficient and velocity. A CLPSO embedded with local search was proposed in Cao et al. (Citation2019), this paper proposes an adaptive local search starting strategy by utilising the quasi-entropy index to improve the optimisation performance both in global search capability and fast convergence ability. An adaptive two-layer particle swarm optimisation algorithm with elitist learning strategy was proposed in Lim and Isa (Citation2014), this algorithm performs evolution on both the current swarm and the memory swarm, self-adaptively dividing the swarms into exploration and exploitation sections, and uses an elitist learning strategy to enhance the search efficiency and to mitigate premature convergence.

The last category is to combine PSO with other heuristic algorithms. In Gong et al. (Citation2016), a genetic learning particle swarm optimisation algorithm was proposed in this paper, which composed of two cascading layers of GA and PSO respectively, and using genetic evolution to breed promising exemplars for PSO. In Nie and Yue (Citation2008), a GA and PSO hybrid algorithm was introduced, which create individuals in a new generation both by crossover and mutation operations and the mechanisms of PSO. In Yang, Niu, and Cai (Citation2018), the simulated annealing and chaos mechanism was introduced in the process of optimal information to increase the population diversity. A new ACO-PSO combined Ant Colony Algorithm and PSO was proposed in Pal, Verma, Gautam, and Indait (Citation2016), which defines that the selection of parameter does not depend on artificial experience, but relies on the robust search on the particles in the PSO. In Bao and Han (Citation2017), a hybrid multi-swarm PSO based on shuffled frog leaping algorithm was proposed, which group the particle swarms by fitness, and use shuffled frog leaping algorithm to update the particles.

In addition, many PSO variants with mutation operation have been presented beside the PSO and GA hybrid algorithms. A particle swarm optimiser with two differential mutation (PSOTD) was proposed in Chen et al. (Citation2017). This paper design a novel structure with two swarms and two layers, the top layer and the bottom layer, which consists all the personal best particles and all the particles respectively, and uses two differential mutation operators with different characteristics to generate promising particles in the top layers. Through this method, the bottom particles can achieve a good trade-off between exploration and exploitation. Particle swarm algorithm with hybrid mutation strategy (HPSO) was proposed in Gao and Xu (Citation2011), which uses a global Henon mutation operator to enhance the global searching ability of particles, and use a local Henon mutation operator to improve the convergence speed, where the global henon mutation operator mutate the velocity and the local henon mutation operator mutate the global best previously visited position. In Dong, Kang, and Zhang (Citation2017), an opposition-based particle swarm optimisation with adaptive mutation strategy (AMOPSO) was proposed. This algorithm uses an adaptive mutation selection strategy and an adaptive nonlinear inertia weight strategy, where the adaptive mutation selection strategy is to disturb the position of the current global optimal particle, and gains a new mutation position adaptively, which base on the distance between the best previously visited positions of each particle and the global best previously visited position.

The diversity of the population is a key factor for particle swarm optimisation. A great diversity of the population usually means that the positions of the population contain too much useless information, which may distract the particle swarm and result in difficult to convergence. However if the population lack diversity, which usually leads to a premature convergence. In PSO, the sets of each particle's best previously visited positions, which is represented as p in this paper, and the global best previously visited position, which is represented as in this paper, are the exemplars to guide the particle swarm to search. Thus, the diversity of the population can be controlled by the p in PSO. In terms of this, inspired by the mutation breeding technique, we present a novel PSO with mutation breeding (MBPSO), to improve the ability of global optimisation. The main contributions of this work can be highlighted as follows:

The mutation breeding operation is proposed to generate a population with a limited diversity. The mutation breeding operation is consisted of two steps: breeding and mutation. In the breeding step, only select the

and discard all of positions in p, then copy the selected position to replace those discarded positions in p. Through the breeding step, we can obtain a p which the diversity is 0. In the mutation step, the new generated positions sets p will be mutated randomly, i.e. randomly select some dimensions from some randomly selected positions base on the mutation probability, then initial the value of these dimensions, to generate a population with a limited diversity. The diversity can be controlled easily by the probability of mutation.

The mutation breeding operation is performed periodically in MBPSO to obtain a limited diversity of p, to control the diversity of the population. It's worth noting that these positions in p which were generated and mutated by the mutation breeding operation need not evaluate the fitness, and these positions in p will be updated with the current positions in the population at the next iteration.

A new updating mechanism of the global best previously position is adopted to enhance the ability of jump out from the local optimum. This new updating mechanism is that whenever the mutation breeding operation has been performed, the

will be replaced with the best position in the current population at the next iteration.

The rest of this paper is organised as follows. Section 2 reviews the classical PSO and its weaknesses. The MBPSO was presented in Section 3 including its computational complexity and search behaviour. Section 4 experimentally compares MBPSO and other PSO variants through a series of benchmark functions. Section 5 discusses the parameter settings for MBPSO, and the conclusion is drawn in Section 6.

2. Classical PSO

PSO algorithm is a population intelligence-based random search algorithm and it was widely used to solve various optimisation problems. In the PSO algorithm, the particle swarm's position sets is denoted as x, and the positions of these particles can be denoted as ,

,…,

, where n is the number of particles, corresponding to a candidate solution of the objective function. Similarly, for the best previously visited positions sets p, each best previously visited position in it can be denoted as

,

,…,

, corresponding to the positions of each particle respectively. The process of searching for the best result of the objective function in PSO is the process of updating the particle swarm's positions, and the positions sets x of the particle swarm is updated by Equations (Equation1

(1)

(1) ) and (Equation2

(2)

(2) ):

(1)

(1)

(2)

(2) where v is the velocity of the particle, i is the ith particle of the swarm, t is time, w is inertia weight of the particle,

,

are the acceleration constants with positive values,

,

are two uniformly distributed random number within range from 0 to 1,

is the best previously visited position of the ith particle, and

is the global best previously visited position of the particle swarm. The best previously visited positions sets p and the global best previously visited position

in PSO are updated by Equations (Equation3

(3)

(3) ) and (Equation4

(4)

(4) ):

(3)

(3)

(4)

(4)

where f is the evaluation function, is the fitness of the ith position in p which evaluated by f at the time t, and

is the fitness of

which evaluated by f at the time t. Due to the position updating formula of PSO, a scattered p may throw particles into chaos and result in a low convergence speed, however a low diversity of p may stagnate these particles near to the position of

and unable continuing to search. If

is a local minimum, which usually result in premature convergence (Monson & Seppi, Citation2006). In addition, because the

and

can only be replaced with a better position, a local optimum will usually prevent the particle swarm to jump out of it because in this situation the

and

are hard to change.

3. The proposed MBPSO algorithm

3.1. Mutation breeding operation

Mutation breeding is an agriculture technique which was widely used in breeding new varieties of plants, and more than 3088 mutagenic plant varieties were released by the end of 2009 (Bradshaw, Citation2016). Mutation breeding is the process of using radiation, chemicals, T-DNA or transposons to treat seeds to generate mutants, and to select mutants with desirable traits to be bred. Inspired by mutation breeding, we suggest this mutation breeding operation to control the diversity of the population to improve the global optimisation ability of the PSO algorithm. Just as the mutation breeding, the mutation breeding operation in MBPSO can be divided into two steps: breeding and mutation. In the first step, only select the global best position and breed it to replace the best previously visited positions of each particle; in the second step, mutate these new generated best previously visited positions. Set the best previously visited positions sets of each particle to p, the global best previously visited position to , the number of particles to n, the number of dimensions to d, the mutation probability to pm, this operation can be described as Algorithm 1:

3.2. The MBPSO algorithm

The MBPSO algorithm is a variant PSO based on the classical PSO, and the difference is that in MBPSO, a mutation breeding operation is periodically performed to update the p, and the will be replaced with the best current position of the particle swarm at the next iteration. In order to explain this operation, set the current positions sets of particle swarm to x, the best previously visited positions sets of each particle to p, the global best previously visited position to

, the number of particles to n, the maximum optimisation iteration number to m, the number of dimensions to d, the cycle of mutation breeding operation to cm, the pseudocode of MBPSO algorithm is described in Algorithm 2.

The mutation breeding operation has some similarities to the Genetic Algorithm (GA), both of them use the mutation operation to try to find potential offsprings. However, there is much difference between mutation breeding operation and GA. In GA, the order to generate offsprings is crossover, mutation and selection. Unlike the GA, the the order in mutation breeding operation is breeding and mutation. Mutation breeding operation is much simpler than GA, on one hand, it does not have the crossover operation, which means the computation complexity is much lower than GA, on the other hand, the selection strategy in the breeding step is very simple, just ”winner-takes-all”, i.e. only the global best previously visited position can be preserved to breed offsprings. The reason of why we use mutation breeding operation and not GA to generate p in MBPSO is that the diversity of offsprings can be easily controlled through mutation breeding operation by the probability of mutation, however, because of the selection operation is the last step in GA, the diversity of these generated offsprings is hard to control.

3.3. Complexity analysis of MBPSO

The computational costs of the classical PSO algorithm include the particle swarm initialisation (), the evaluation of each particle's position (

), and update the positions of each particle (

). Assume the dimensions of the search space is D, the MaxFEs is the maximum number of fitness evaluation, the time complexity of PSO can be estimated as Equation (Equation5

(5)

(5) )

(5)

(5) Therefore,

is the time complexity of the classical PSO (Gong et al., Citation2016).

The major difference between classical PSO and MBPSO is that MBPSO performs a mutation breeding operation periodically during the optimisation process. Assume that the cycle of mutation breeding operation is cm, the computational costs of the breeding step () is

, and the mutation step (

) is

. The time complexity of MBPSO can be estimated as Equation (Equation6

(6)

(6) )

(6)

(6) Therefore,

is the time complexity of the MBPSO, just as same as the classical PSO.

3.4. Analysis of MBPSO's search behaviour

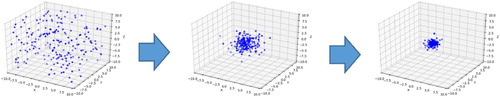

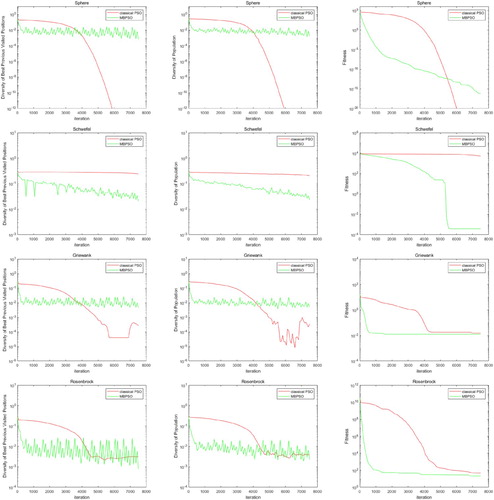

The mutation breeding operation is periodically performed in MBPSO, which will take some different search behaviour from the classical PSO. After a mutation breeding operation, the updated sets of each particle's best previously visited positions p will retain a limited diversity. The different changes of the p between the classical PSO and the MBPSO can be observed from Figures and .

Through the mutation breeding operation to control the diversity of population is the main difference between MBPSO and the classical PSO. The diversity of positions can be calculated by Equation (Equation7(7)

(7) ) according to Han and Zhu (Citation2011)

(7)

(7) where p is the positions sets of the particle swarm,

is the diversity of the positions sets p,

is the population size,

is the length of the longest radius of the search space, D is the dimensions of the problem,

is the value of the ith position in p in the jth dimension, and

is the average value of all the positions in p in the jth dimension.

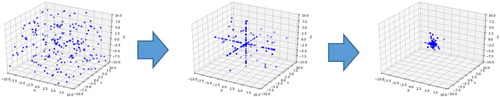

In order to illustrate the effect of mutation breeding operation, Four test functions, Sphere function, Schwefel's function, Griewank's function and Rosenbrock's function are chosen to investigate the difference of diversity change between classical PSO and MBPSO. Figure shows the diversity curve of the particle's best previously visited positions p, the diversity curve of the population and the fitness curve from left to right, respectively, where the first line is on Sphere function, the second line is on Schwefel function, the third line is on Griewank function and the fourth line is on Rosenbrock function.

Figure 3. The diversity curve of p, the diversity curve of x and the fitness curve on Sphere, Schwefel, Griewank and Rosenbrock.

By observing Figure , we can find that the p and the population in MBPSO maintains a stable diversity along with the iteration, meanwhile in classical PSO the diversity has a big value at the early stage of iteration and has a small value at the latter iteration on Sphere, Griewank and Rosenbrock. Different from those three functions, the diversity on Schwefel's function of classical PSO is always too large, which causes the particle swarm hardly to convergence. Generally, for a multimodal problem, if the diversity of population is too large, which usually means the population lack of direction and fall into disorder, and will result hardly to convergence. However, if the diversity of population is too small, which usually means the particle swarm lack of motivation and hard to jump out from current positions. MBPSO keeps a reasonable diversity for the population to avoid this problem, and has a better global optimisation ability when compared with the classical PSO.

4. Numerical simulation and analysis

4.1. Experimental setup

In this section, the performance of the proposed MBPSO algorithm is compared with the PSO-LVIW (linearly varying inertial weight PSO), BBPSO (Bare-Bones PSO), CLPSO (Comprehensive Learning PSO), SRPSO (Self Regulating PSO) and GLPSO (Genetic Learning PSO). To thoroughly evaluate the performance of the proposed MBPSO, ten basic benchmark functions, , and CEC'15 Learning-Based Benchmark Suite,

, which are more complex than the basic functions, are chosen to test the performance of each algorithm. The basic benchmark test functions contain two unimodal functions,

, six multimodal functions,

, and two expanded functions,

, which are shown in Table . The CEC'15 Learning-Based Benchmark Suite please refer to Liang, Qu, Suganthan, and Chen (Citation2014), which contain two unimodal functions,

, three simple multimodal functions,

, three hybrid functions,

, and seven composition functions,

. The search ranges are set to

for all of test functions except the

. For

, the search range is set to

.

Table 1. Basic Test function used in experiment.

4.2. Parameter settings for the involved algorithms

All the algorithms are carried out in Matlab R2018b environment on an Intel Core(TM) i7-8750H CPU. The population size for all of algorithms are set to 40 in all experiments. Each algorithm performs 30 times independently to run over the 10 basic benchmark functions and the fifteen CEC'15 benchmark functions in 30, 50 and 100 dimensional respectively (García, Molina, Lozano, & Herrera, Citation2008), and the MaxFEs is set to . The parameter configurations of each algorithm are according to the corresponding reference (Gong et al., Citation2016; Liang et al., Citation2006; Shi & Eberhart, Citation1998; Tanweer et al., Citation2015), which are shown in Table .

Table 2. Parameter configurations.

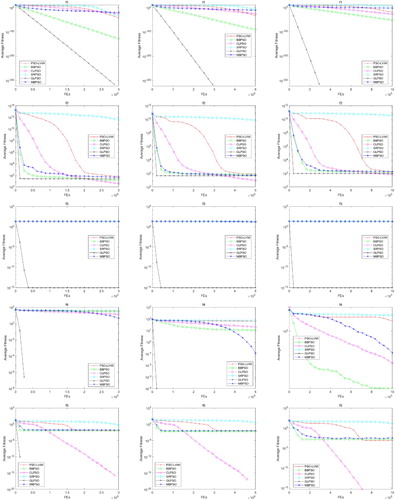

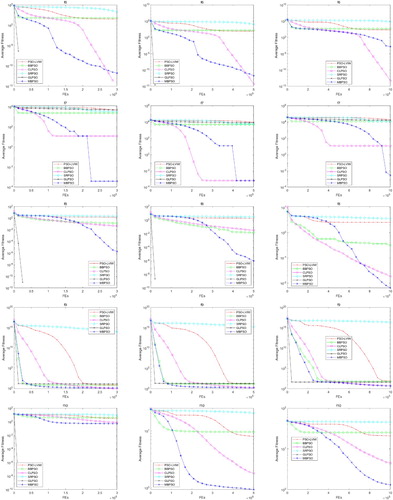

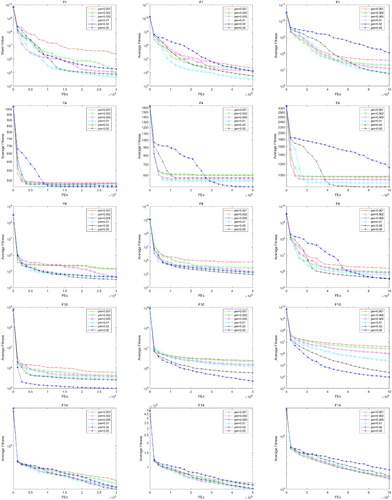

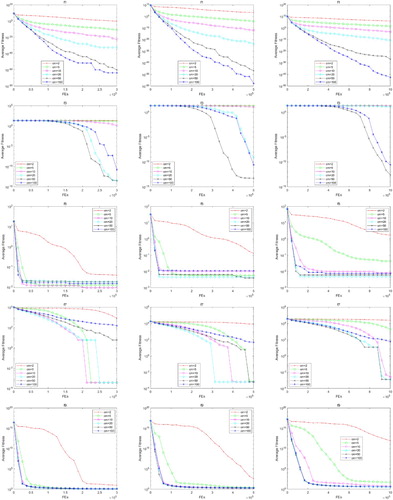

4.3. Experimental results and discussions

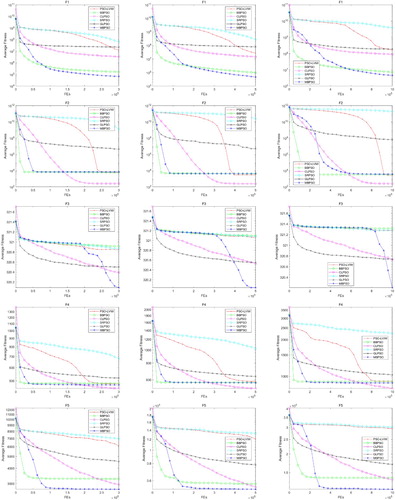

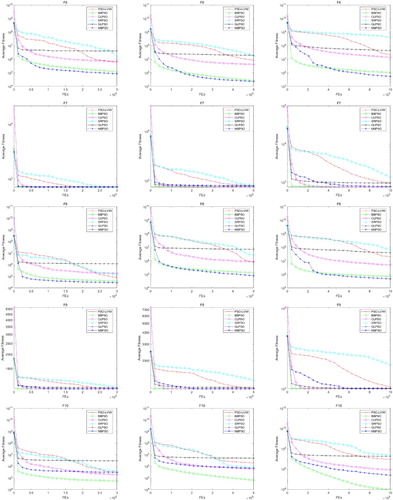

The test results are shown in follows. Table shows the results on the 10 basic test functions and Tables and show the results on the CEC'15 Learning-Based Test Suite. The best results among the six PSO variants are shown in bold and the second best are shown in italic. Figures and present the convergence curve of the six PSO variants on ,

from top to bottom, each test function with 30, 50 and 100 dimensional are shown in figures from left to right, respectively. Similar to the above, Figures – present the convergence curve of the six PSO variants on

,

,

, respectively.

Table 3. Results for basic test functions

.

.

Table 4. Results for CEC'15 Learning-Based Benchmark Suite

.

.

Table 5. Results for CEC'15 Learning-Based Benchmark Suite

.

.

4.3.1. Experimental results on the basic test functions

For the ten basic test functions , benefited from the GA operation, GLPSO shows its excellent accuracy and convergence speed on

,

,

,

,

,

and

whether in 30-dimensional, 50-dimensional or 100-dimensional, however it fails to converge on

. MBPSO shows a stable convergence ability on those basic test functions, and it is the only one that the result could close to the global optimum on

in 30, 50 and 100 dimensional. Meanwhile, MBPSO is the second best algorithm on

,

,

,

,

and

in 30-dimensional, on

,

,

,

and

in 50-dimensional, on

,

,

and

in 100-dimensional. From the Figures and , we can observe that although the convergence accuracy is not the strengths for MBPSO, it performs competitively on most functions when compared with PSO-LVIW, BBPSO, CLPSO and SRPSO.

4.3.2. Experimental results on the CEC'15 learning-based benchmark suite

It can be easily observed that MBPSO is a competitive PSO variant for the CEC'15 Learning-Based Benchmark Suite. When the dimensions are 30, MBPSO is ranked the first on ,

,

,

,

,

and

and ranked the second on

. When the dimensions are 50, MBPSO is ranked the first on

,

,

,

,

and

and ranked the second on

,

and

. When the dimensions are 100, MBPSO is ranked the first on

,

,

,

,

and

and ranked the second on

,

,

and

. GLPSO performs best on the basic test functions, however the performance is worse than MBPSO, CLPSO and BBPSO significantly when it be tested on the CEC'15 Learning-Based Benchmark Suite. The results on the CEC'15 Learning-Based Benchmark Suite verifies that MBPSO has a good adaptive capability, especially for some complex problems.

4.4. Statistical comparison

In order to further compare MBPSO with other PSO variants, the comparisons of PSO-LVIW, BBPSO, CLPSO, SRPSO, GLPSO and MBPSO for benchmark functions and

on 30-dimensional, 50-dimensional and 100-dimensional are shown in Tables –, respectively, where

and

denote that the t-test results of compared algorithm are significantly better and worse than MBPSO (p<0.05), and

stands for the differences between the results are not significant.

Table 6. Comparisons between MBPSO and other PSO variants for the average fitness (30-dimensional).

Table 7. Comparisons between MBPSO and other PSO variants for the average fitness (50-dimensional).

Table 8. Comparisons between MBPSO and other PSO variants for the average fitness (100-dimensional).

From Tables – we can obverse that although MBPSO is not the best algorithm among the six algorithms, it is a competitive algorithm that significantly better than PSO-LVIW, BBPSO, SRPSO and GLPSO. Compare with CLPSO, the ratio of significantly better and significantly worse on 30-dimensional, 50-dimensional and 100 dimensional are 10:10, 11:8 and 11:9 respectively, which reports that there are little difference between the two algorithms for the optimisation, and each of them has its own strengths and weaknesses when dealing with different problems.

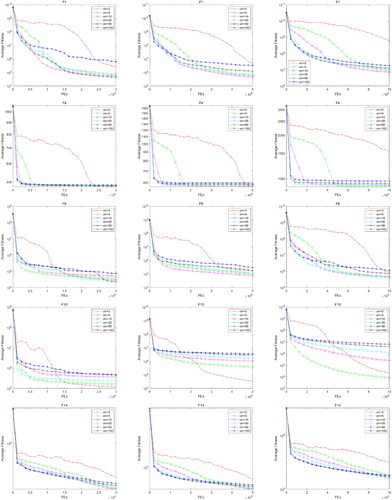

5. Discussion of parameter settings for MBPSO

In MBPSO, two new parameters were introduced. One is the mutation probability pm, and the other is the cycle of mutation breeding operation cm. In order to thoroughly investigate the sensitivity of MBPSO to the pm and cm, we choose ten representative functions among the 25 benchmark functions, to evaluate the effect of the two parameters in 30, 50 and 100 dimensional. Five basic test functions, ,

,

,

and

, and five CEC'15 test functions,

,

,

,

and

, are chosen for the experiment. The population size is set to 40, and the maximum optimisation iteration number is set to

.

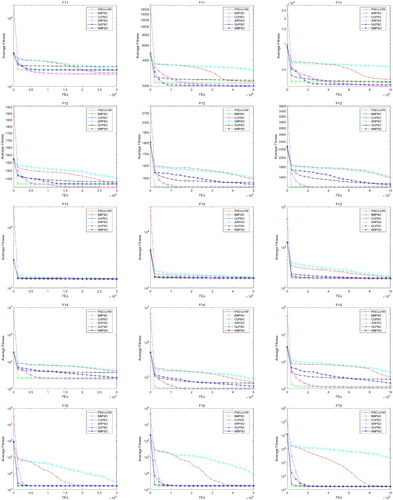

First, the mutation probability pm is investigated with 0.001, 0.002, 0.005, 0.01, 0.02 and 0.05, respectively. The parameters of the cycle of mutation breeding operation cm is fixed to 10. The test results on basic test functions

,

,

,

and

have been shown in Figure from top to bottom. The test results on CEC'15 test functions

,

,

,

and

have been shown in Figure from top to bottom.

By observing the Figure , we can find that for , the pm is smaller the better. For

, pm = 0.001 or 0.002 is better than other options. For

, except pm = 0.05 is a bad choice in every conditions, there are no significant differences between other options. For

,

, 0.005 and 0.01 can obtain a stable convergence. For

, the convergence result is not sensitive to the pm when pm<0.05.

Let us observe the Figure . For , pm = 0.01 or 0.02 is better than others, whether a too small or too big value will reduce the performance. For

, a relatively large value of pm may help the algorithm to jump out from local minimum more easily. For

, pm = 0.02 or 0.05 is relatively better than other options. For

, pm is the larger the better, just contrary to the curves of

. For

, the convergence is not sensitive to the pm, pm = 0.01 is relatively better than others.

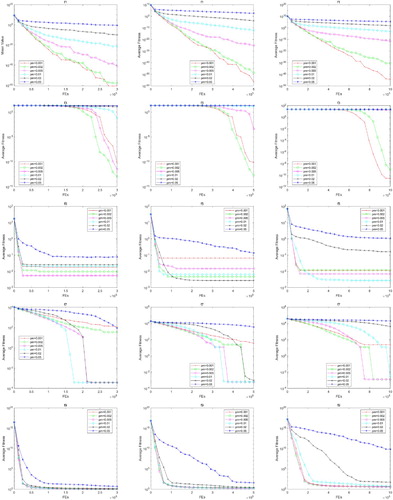

Second, the cycle of mutation breeding operation cm is investigated with cm = 2, 5, 10, 20, 50 and 100, respectively. The parameters of the probability of mutation breeding pm is fixed to 0.01. The test result have been shown in Figures and .

Figure shows that a large cm will help to improve the convergence accuracy for . For

, cm = 50 is the best option. For

, a small cm may cause difficult to convergence. For

, in the condition of

, 20, or 50 the algorithm can reach a low error to the global minimum, and whether the cm is too small or too large will generate a poor result. For

, the pm<5 will slow down the convergence speed obviously.

Figure reports that for , cm = 10 is the best option when the dimensions are 50 or 100. For

, the convergence speed will be reduced when the pm is small, while the results have no significant difference. For

, the results are not sensitive to the different cm. For

,

or 5 is better than other options, and the convergence accuracy will be reduced with the increasing cm. For

, the best value of pm is 10 or 20.

From the above, MBPSO is a parameter sensitive algorithm, for different problems, the most appropriate parameter settings are also different. In generally, for a simple problem, a small pm and a large cm can improve the convergence speed, on the contrary, a big pm and a small cm will provide a higher probability to jump out from the local optimum, which is more appropriate for some complex problem. In this paper, we set the pm to 0.01 and the cm to 10, which is a compromise for most problems to get a relatively good results.

6. Conclusions

This paper presents a novel PSO algorithm inspired by mutation breeding. The proposed algorithm through a method that to perform a mutation breeding operation periodically to control the diversity of population, which can overcome the shortcoming of classical PSO like premature convergence or difficult to convergence for some problems. The mutation breeding operation can be divided into two steps, first, breed the global best previously visited position to replace all of the best previously visited positions of each particle, second, mutate those new generated best previously visited positions randomly. After a mutation breeding operation, the global best previously visited position will be replaced with the best position in the current population at the following iteration. The experiments results illustrate that the MBPSO algorithm has a competitive performance when compared with other PSO variants. However, the discussion of parameter settings shows that MBPSO is a parameter sensitive algorithm, and to further improve the optimisation ability of MBPSO, the strategy of parameter adaption should be borrowed. In the future, we will study the MBPSO with parameter adaption to improve the optimisation ability of the proposed algorithm.

Disclosure statement

No potential conflict of interest was reported by the authors.

Additional information

Funding

References

- Bao, H., & Han, F. (2017). A hybrid multi-swarm PSO algorithm based on shuffled frog leaping algorithm. In Lecture Notes in Computer Science (pp. 101–112). Springer International Publishing.

- Bradshaw, John. E. (2016). Plant Breeding: Past, Present and Future (pp. 529–560). Cham: Springer International Publishing.

- Cao, J., Jiang, Z., & Wang, K. (2017). Customer demand prediction of service-oriented manufacturing using the least square support vector machine optimized by particle swarm optimization algorithm. Engineering Optimization, 49, 1197–1210. doi: 10.1080/0305215X.2016.1245729

- Cao, Y., Zhang, H., Li, W., Zhou, M., Zhang, Y., & Chaovalitwongse, W. A. (2019). Comprehensive learning particle swarm optimization algorithm with local search for multimodal functions. IEEE Transactions on Evolutionary Computation, 23(4), 718–731. doi: 10.1109/TEVC.2018.2885075

- Chen, Y., Li, L., Peng, H., Xiao, J., Yang, Y., & Shi, Y. (2017). Particle swarm optimizer with two differential mutation. Applied Soft Computing, 61, 314–330. doi: 10.1016/j.asoc.2017.07.020

- Cheng, R., & Jin, Y. (2015). A social learning particle swarm optimization algorithm for scalable optimization. Information Sciences, 291, 43–60. doi: 10.1016/j.ins.2014.08.039

- Dong, W., Kang, L., & Zhang, W. (2017). Opposition-based particle swarm optimization with adaptive mutation strategy. Soft Computing, 21(17), 5081. doi: 10.1007/s00500-016-2102-5

- Dong, W., & Zhou, M. (2017). A supervised learning and control method to improve particle swarm optimization algorithms. Systems IEEE Transactions on Systems, Man, and Cybernetics: Systems, 47(7), 1135–1148. doi: 10.1109/TSMC.2016.2560128

- Eberhart, R. C., & Shi, Y. (2000). Comparing inertia weights and constriction factors in particle swarm optimization. In Proceedings of Congress Evolutionary Computation. CEC00 (Cat. No.00TH8512) (Vol. 1. pp. 84–88).

- Emara, H. M. (2009). Adaptive clubs-based particle swarm optimization. In Proceedings of the American Control Conf (pp. 5628–5634).

- Gao, H., & Xu, W. (2011). Particle swarm algorithm with hybrid mutation strategy. Applied Soft Computing, 11(8), 5129–5142. doi: 10.1016/j.asoc.2011.05.046

- García, S., Molina, D., Lozano, M., & Herrera, F. (2008). A study on the use of non-parametric tests for analyzing the evolutionary algorithms' behaviour: A case study on the CEC'2005 special session on real parameter optimization. Journal of Heuristics, 15(6), 617–644. doi: 10.1007/s10732-008-9080-4

- Gong, Y., Li, J., Zhou, Y., Li, Y., Chung, H. S., Shi, Y., & Zhang, J. (2016). Genetic learning particle swarm optimization. IEEE Transactions on Cybernetics, 46(10), 2277–2290. doi: 10.1109/TCYB.2015.2475174

- Guan, G., Yang, Q., Gu, W., Jiang, W., & Lin, Y. (2018). Ship inner shell optimization based on the improved particle swarm optimization algorithm. Advances in Engineering Software, 123, 104–116. doi: 10.1016/j.advengsoft.2018.06.012

- Guedria, N. B. (2016). Improved accelerated PSO algorithm for mechanical engineering optimization problems. Applied Soft Computing, 40, 455–467. doi: 10.1016/j.asoc.2015.10.048

- Han, F., & Liu, Q. (2014). A diversity-guided hybrid particle swarm optimization based on gradient search. Neurocomputing, 137, 234–240. doi: 10.1016/j.neucom.2013.03.074

- Han, F., & Liu, Q. (2015). An improved hybrid PSO based on ARPSO and the quasi-newton method. In Advances in Swarm and Computational Intelligence (pp. 460–467). Springer International Publishing.

- Han, F., & Zhu, J. (2011). An improved arpso for feedforward neural networks. In Proceedings of the Seventh International Conference Natural Computation (Vol. 2, pp. 1146–1150).

- Huang, S.-Y., & Chen, C.-L. (2009). A hybrid particle swarm optimization algorithm with diversity for flow-shop scheduling problem. In Proceedings of the Information and Control (ICICIC) 2009 Fourth International Conference Innovative Computing (pp. 864–867).

- Jegan, T. M. C., & Ravindran, D. (2017). Electrochemical machining process parameter optimization using particle swarm optimization. Computational Intelligence, 33(4), 1019–1037. doi: 10.1111/coin.12139

- Kang, Q., Wang, L., & Wu, Q. (2008). A novel ecological particle swarm optimization algorithm and its population dynamics analysis. Applied Mathematics and Computation, 205(1), 61–72. doi: 10.1016/j.amc.2008.05.067

- Kennedy, J. (2003). Bare bones particle swarms. In Proc. IEEE Swarm Intelligence Symp. SIS'03 (Cat. No.03EX706) (pp. 80–87).

- Kennedy, J., & Eberhart, R. (1995). Particle swarm optimization. In Proc. ICNN'95 -- Int. Conf. Neural Networks (Vol. 4. pp. 1942–1948.

- Kennedy, J., & Mendes, R. (2002). Population structure and particle swarm performance. In Proc. Congress Evolutionary Computation. CEC'02 (Cat. No.02TH8600) (Vol. 2. pp. 1671–1676).

- Krink, T., Vesterstrom, J. S., & Riget, J. (2002). Particle swarm optimisation with spatial particle extension. In Proc. Congress Evolutionary Computation. CEC'02 (Cat. No.02TH8600) (Vol. 2. pp. 1474–1479).

- Li, L., Wang, W., & Xu, X. (2017). Multi-objective particle swarm optimization based on global margin ranking. Information Sciences, 375, 30–47. doi: 10.1016/j.ins.2016.08.043

- Li, Y., Zhan, Z.-H., Lin, S., Zhang, J., & Luo, X. (2015). Competitive and cooperative particle swarm optimization with information sharing mechanism for global optimization problems. Information Ssiences, 293, 370–382. doi: 10.1016/j.ins.2014.09.030

- Liang, X., Li, W., Zhang, Y., & Zhou, M. (2015). An adaptive particle swarm optimization method based on clustering. Soft Computing, 19(2), 431–448. doi: 10.1007/s00500-014-1262-4

- Liang, J. J., Qin, A. K., Suganthan, P. N., & Baskar, S. (2006). Comprehensive learning particle swarm optimizer for global optimization of multimodal functions. IEEE Transactions on Evolutionary Computation, 10(3), 281–295. doi: 10.1109/TEVC.2005.857610

- Liang, J. J., Qu, B. Y., Suganthan, P. N., & Chen, Q. (2014). Problem definitions and evaluation criteria for the CEC 2015 competition on learning-based real-parameter single objective optimization. CEC 2015 Competition on Learning-based Real-Parameter Single Objective Optimization.

- Lim, W. H., & Isa, N. A. M. (2014). An adaptive two-layer particle swarm optimization with elitist learning strategy. Informantion Sciences, 273, 49–72. doi: 10.1016/j.ins.2014.03.031

- Lin, W.-C., Yin, Y., Cheng, S.-R., Edwin Cheng, T. C., Wu, C.-H., & Wu, C.-C. (2017). Particle swarm optimization and opposite-based particle swarm optimization for two-agent multi-facility customer order scheduling with ready times. Applied Soft Computing, 52, 877–884. doi: 10.1016/j.asoc.2016.09.038

- Lu, Y., Yan, D., Zhang, J., & Levy, D. (2014). Direct back propagation neural dynamic programming-based particle swarm optimisation. Connection Science, 26, 367–388. doi: 10.1080/09540091.2014.931355

- Lv, Z., Wang, L., Han, Z., Zhao, J., & Wang, W. (2019). Surrogate-assisted particle swarm optimization algorithm with pareto active learning for expensive multi-objective optimization. IEEE/CAA Journal of Automatica Sinica, 6(3), 838–849. doi: 10.1109/JAS.2019.1911450

- Mendes, R., Kennedy, J., & Neves, J. (2004). The fully informed particle swarm: Simpler, maybe better. IEEE Transactions on Evolutionary Computation, 8(3), 204–210. doi: 10.1109/TEVC.2004.826074

- Monson, C. K., & Seppi, K. D. (2006). Adaptive diversity in PSO. In M. Cattolico, (Eds.), Genetic and Evolutionary Computation Conference, (pp. 59–66).

- Nagra, A. A., Han, F., & Ling, Q. H. (2019). An improved hybrid self-inertia weight adaptive particle swarm optimization algorithm with local search. Engineering Optimization, 51, 1115–1132. doi: 10.1080/0305215X.2018.1525709

- Nie, R., & Yue, J. (2008). A GA and Particle Swarm Optimization based hybrid algorithm. In Proc. IEEE Congress Evolutionary Computation (IEEE World Congress Computational Intelligence) (pp. 1047–1050).

- Pal, D., Verma, P., Gautam, D., & Indait, P. (2016). Improved optimization technique using hybrid ACO-PSO. In Proceedings of the 2nd International Conference on Next Generation Computing Technologies (NGCT) (pp. 277–282).

- Pare, S., Kumar, A., Bajaj, V., & Singh, G. K. (2019). A context sensitive multilevel thresholding using swarm based algorithms. IEEE/CAA Journal of Automatica Sinica, 6, 1471–1486.

- Raja, M. A. Z. (2014). Solution of the one-dimensional bratu equation arising in the fuel ignition model using ANN optimised with PSO and SQP. Connection Science, 26, 195–214. doi: 10.1080/09540091.2014.907555

- Riget, J., & Vesterstrom, J. (2002). A diversity-guided particle swarm optimizer -- the ARPSO. Technical report, EVALife, Department of Computer Science, University of Aarhus.

- Shi, Y., & Eberhart, R. (1998). A modified particle swarm optimizer. In Proceedings of the IEEE World Congress Computational Intelligence (Cat. No.98TH8360), 1998 IEEE International Conference Evolutionary Computation (pp. 69–73).

- Sivakoti, K. K., Basava, M., Balaga, R. V., & Sannidhi, B. M. (2017). Design optimization of radar absorbing materials using particle swarm optimization. International Journal of Applied Metaheuristic Computing, 8(4), 113–132. doi: 10.4018/IJAMC.2017100107

- Taherkhani, M., & Safabakhsh, R. (2016). A novel stability-based adaptive inertia weight for particle swarm optimization. Applied Soft Computing, 38, 281–295. doi: 10.1016/j.asoc.2015.10.004

- Tan, L., Chen, Y., & Li, C. (2018). Research on image segmentation optimization algorithm based on chaotic particle swarm optimization and fuzzy clustering. In Proceedings of the 7th International Conference on Software and Computer Applications (pp. 178–182).

- Tan, F., & Xia, B. (2018). Chemical reaction intermediate state kinetic optimization by particle swarm optimization. In Advances in Swarm Intelligence -- 9th International Conference (Vol. 10941. pp. 132–142).

- Tanweer, M. R., Suresh, S., & Sundararajan, N. (2015). Self regulating particle swarm optimization algorithm. Information Sciences, 294, 182–202. doi: 10.1016/j.ins.2014.09.053

- Tran, T. A. (2018). The optimization of marine diesel engine rotational speed control process by fuzzy logic control based on particle swarm optimization algorithm. Future Internet, 10(10), 99. doi: 10.3390/fi10100099

- Tran, B., Xue, B., Zhang, M., & Nguyen, S. (2016). Investigation on particle swarm optimisation for feature selection on high-dimensional data: local search and selection bias. Connection Science, 28, 270–294. doi: 10.1080/09540091.2016.1185392

- Wang, X., Shi, Y., Ding, D., & Gu, X. (2016). Double global optimum genetic algorithm–particle swarm optimization-based welding robot path planning. Engineering Optimization, 48, 299–316. doi: 10.1080/0305215X.2015.1005084

- Wang, F., Zhang, H., Li, K., Lin, Z., Yang, J., & Shen, X.-L. (2018). A hybrid particle swarm optimization algorithm using adaptive learning strategy. Information Sciences, 436–437, 162–177. doi: 10.1016/j.ins.2018.01.027

- Yang, X., Niu, J., & Cai, Z. (2018). Chaotic simulated annealing particle swarm optimization algorithm. In Proceedings of the 2nd IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC) (pp. 11–19).