ABSTRACT

In 2017, the Health Resources and Services Administration’s HIV/AIDS Bureau funded an Evaluation Center (EC) and a Coordinating Center for Technical Assistance (CCTA) to oversee the rapid implementation of 11 evidence-informed interventions at 26 HIV care and treatment providers across the U.S. This initiative aims to address persistent gaps in HIV-related health outcomes emerging from social determinants of health that negatively impact access to and retention in care. The EC adapted the Conceptual Model of Implementation Research to develop a Hybrid Type III, multi-site mixed-methods evaluation, described in this paper. The results of the evaluation will describe strategies associated with uptake, implementation outcomes, as well as HIV-related health outcomes for clients engaged in the evidence-informed interventions. This approach will allow us to understand in detail the processes that sites undergo to implement these important intervention strategies for high priority populations.

Introduction

State of the HIV epidemic

Advances in HIV medicine, coupled with innovative interventions to successfully engage people with HIV in care, are important tools to end the HIV epidemic. However, gaps in intervention implementation have resulted in persistent health disparities, including differential rates of HIV diagnosis, linkage to and retention in care, and viral suppression (Centers for Disease Control and Prevention, 2019b). These disparities particularly affect Black men who have sex with men (BMSM), transgender women, individuals who have co-occurring mental health and/or substance use issues, and those with a history of trauma. To close these gaps in HIV care, strategies are needed to accelerate the dissemination, uptake, and evaluation of effective interventions.

In 2017, the Health Resources and Services Administration’s (HRSA) HIV/AIDS Bureau launched the “Using Evidence Informed Interventions to Improve Health Outcomes for People Living with HIV” Initiative (E2i). E2i aims to address the disparities in access to and retention in effective care and treatment for people with HIV served by HRSA’s Ryan White HIV/AIDS Program (RWHAP) by funding the implementation of evidence-informed interventions specifically for underserved groups. This paper describes the evaluation of E2i, based on an established framework, the Conceptual Model of Implementation Research (also known as “The Proctor Model”) as it is currently being conducted by the Evaluation Center (EC), located at the University of California, San Francisco’s Center for AIDS Prevention Studies (CAPS).

Background

Implementation Science and the RWHAP

The RWHAP provides direct medical and support services to more than half of all people with diagnosed HIV in the U.S. by funding HIV service providers as the payor of last resort. The RWHAP funds: eligible metropolitan areas (Part A), states and territories (Part B), early intervention services and capacity development at the local level (Part C), and specialty medical care for families, women, infants, children and youth with HIV (Part D). RWHAP Part F funds clinical training, technical assistance and demonstration projects focused on evaluating the adaptation, implementation, utilization, cost, and health-related outcomes of innovative treatment models while promoting the dissemination and replication of successful interventions in real-world settings, such as the clinics and hospitals receiving Ryan White Parts A-D funding (Health Resources & Services Administration, 2019). Currently, the disproportionate burden of HIV on Black MSM and transgender women, and the lack of effective interventions for them, are central challenges to improving outcomes (Melendez & Pinto, 2009; Mizuno et al., 2015; Rosenberg et al., 2014; Singh et al., 2017). Furthermore, many people with HIV have a greater need for behavioral health services, and for services that address prevalent experiences of traumatization (Health Resources & Services Administration, 2015; Mugavero et al., 2006). Thus, HRSA funded the E2i Initiative to support the adaptation, implementation, and evaluation of evidence-informed interventions for these high priority populations.

Transgender women

Transgender women are disproportionately vulnerable to HIV (Nemoto et al., 2004). A recent Centers for Disease Control and Prevention (CDC) analysis demonstrated that transgender people had the highest rates of HIV diagnoses among all groups tested (Centers for Disease Control and Prevention, 2015). Current efforts to provide effective access, care, and treatment to transgender women with HIV are not as successful as with other populations. Transgender women with HIV are less likely to be receiving antiretroviral therapy (ART) compared to cisgender men and women (Melendez & Pinto, 2009), and transgender women on ART face greater challenges to adherence and have fewer positive interactions with healthcare professionals (Sevelius et al., 2010). Addressing these challenges is critical to altering the pattern of HIV-related disparities that lead to disproportionately poorer health outcomes.

Black men who have sex with men

In 2017, MSM accounted for 70% of all HIV diagnoses in the U.S., and Black MSM have higher HIV prevalence rates and lower viral suppression than all other racial/ethnic groups (Centers for Disease Control and Prevention, 2019a). Compared with other MSM, BMSM have higher rates of undiagnosed HIV infection (Millett et al., 2011), are less likely to be linked to care within 90 days (DeGroote et al., 2016), and are less likely to be on and adhere to ART (Rubin et al., 2010). To reduce disparities, evidence-informed interventions are needed to address multiple barriers preventing Black MSM from successfully engaging in HIV healthcare (Maulsby et al., 2013).

Behavioral health integration into primary medical care

People with HIV have high rates of mental health issues; approximately 50% of adults treated for HIV also have symptoms of a psychiatric disorder and 13% have co-occurring mental illness and substance-use disorder (Higa et al., 2012). People with HIV who experience depression are more likely to have higher viral loads, more anxiety, and a problem with substance use (Bengtson et al., 2019; Garey et al., 2015; O’Cleirigh et al., 2015). These rates of mental illness present a serious challenge in implementing behavioral health interventions; many patients with HIV also have complex mental health issues (Gaynes et al., 2008). Current mental illness, especially depression and substance abuse, predicts lower ART adherence, a greater likelihood of failing ART, and increased mortality (Substance Abuse and Mental Health Services Administration and Health Resources and Services Administration, 2016). Successfully engaging people with mental health issues in HIV care requires evidence-informed approaches to fully integrate behavioral health interventions into primary medical care.

Identifying and addressing trauma

According to the HRSA Center for Integrated Health Solutions, people with HIV are as much as twenty times more likely to have experienced trauma than the general population (Substance Abuse and Mental Health Services Administration and Health Resources and Services Administration, 2016). Being part of a marginalized community may increase the risk of exposure to potentially traumatic events. In order to improve healthcare engagement among people with HIV who have experienced trauma, evidence-informed interventions must be implemented that address the mental health sequelae of trauma, diminished ART adherence, more frequent opportunistic infections, and higher risk of HIV-related mortality (Schafer et al., 2012).

E2i initiative description and evaluation

E2i is a four-year initiative funding 26 RWHAP sites implementing 11 evidence-informed interventions in the four focus areas highlighted above (Keuroghlian et al., 2018). Sites differ in the services they offer (clinical and non-clinical), the larger context in which those services are provided (university hospitals, federally qualified health centers, community-based organizations), and where they are located throughout the U.S. (rural and urban settings). The Fenway Institute serves as E2i’s Coordinating Center for Technical Assistance (CCTA), in partnership with AIDS United, to provide intervention training, toolkit development, technical assistance, and intervention funding to the sites. UCSF CAPS is the initiative’s EC and is developing and executing a Hybrid Type III implementation study (Curran et al., 2012) and multi-site evaluation. In this paper, we describe the EC’s comprehensive evaluation, based on the Proctor Model.

Methods

Comprehensive evaluation plan

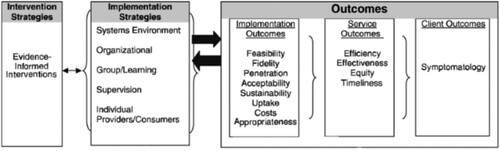

The Proctor Model (Proctor et al., 2013) provides a framework for understanding the process and success of intervention implementation and how specific strategies of that process impact outcomes. The model offers a framework for the simultaneous evaluation of intervention strategies, implementation strategies, and three categories of outcomes. For the E2i Initiative, the EC designed a multi-method approach to assess each of Proctor’s primary concepts, including intervention and implementation strategies and all three levels of outcomes. Below, we describe the procedures, measures, and planned analyses for the E2i comprehensive evaluation and show how they correspond to key components of the Proctor Model.

Procedures and measures

As shown in Figure 1, Intervention Strategies correspond to the core elements of the 11 evidence-informed interventions implemented by the E2i sites (Table 1). Implementation Strategies are the methods used to enhance the adoption, implementation, and sustainability of an intervention; Implementation Outcomes are the effects of deliberate and purposive actions to implement a new intervention; Service Outcomes are the ideal qualities of health care; and Client Outcomes are the direct impacts on the participants (Proctor et al., 2013). We designed the evaluation to utilize multiple data sources, measured at different levels of the organization and at multiple time-points throughout the implementation process. Data sources include the E2i site leadership, the intervention frontline staff, intervention participant characteristics, and reflections of the CCTA and EC staff on the intervention implementation process. The evaluation was designed to minimize data collection burden on intervention staff and requires no collection directly from participants (see Table 2 for sources and timing by Proctor concept).

Table 1. Evidence-Informed Interventions.

Table 2. Proctor Model Concept by Data Source.

Secondary document review

We are using secondary document review to describe Intervention Strategies. We first reviewed the program documents generated prior to intervention implementation. The CCTA consulted with the original intervention developers to prepare one logic model per intervention and to confirm each intervention’s core elements. The CCTA then refined the logic models in discussions with the developers and the individual sites so that each site had a tailored logic model. The CCTA and the developers then worked with the sites to formalize comprehensive implementation plans. The results of this baseline document review allow the EC to describe the sites’ intervention strategies and serve as the rubric for assessing implementation strategies and implementation outcomes.

For assessment of the implementation strategies and outcomes, we are using additional Secondary Document Review to describe sites’ implementation progress, intervention uptake, and mid-course changes. Throughout the implementation period the EC regularly collects secondary documents for analysis including: training materials, monthly site monitoring call notes, quarterly reports, action plans, site visit reports, and any other project-related documents. In this secondary document review, we will be assessing the sites’ larger contexts and describing the content and reasons for intervention adaptations.

Document review measures

Using a template, we extract relevant information from each source document on an on-going basis. Every five months, the EC conducts a within-site content analysis of data abstracted and produces an implementation snapshot. We will use the three implementation snapshots (Months 8, 13, 18) to facilitate a longitudinal analysis of implementation progress. We will identify common implementation themes across sites within a focus area and will identify notable similarities and differences across focus areas.

Learning session observations

Sites engage in bi-annual Learning Sessions (LS), attended by E2i leadership and front-line staff of all sites, to support intervention implementation. With origins in continuous quality improvement efforts (Headrick et al., 1996), these LS bring together key implementers to participate in a two-day collaborative learning process, at times grouped by focus area and at other times by commonalities across sites (intervention, setting, etc.). LS provide the EC an opportunity to observe how site representatives interact with, learn from, and teach one another. The formal aspects of the LS observations center on assessing implementation progress, challenges, and processes. We specifically attend to information concerning how the sites use the LS process to change their implementation plans and shift their implementation strategies. Observations conducted during the LS will be structured to also capture site perspectives on the eight implementation outcomes.

Learning session observation measures

Using our own observation tool, members of the EC team take notes during the LS, which are then used to generate field notes. These field notes are systematically analyzed following typical conventions of coding (Ryan, 2006), theme identification, and interpretation, all focused on understanding implementation experiences.

Organizational assessment

The EC asks each site’s Project Director to complete an Organizational Assessment online containing items to assess implementation strategies as well as each of the eight implementation outcomes. The baseline assessment took place during the formative phase, prior to implementation, and administration continues every six months thereafter through the funding period.

Organizational assessment measures

The assessment asks site directors about the culture of the organization, staffing patterns, placement of the intervention relative to other services, anticipated changes to workflows, and other elements relevant for implementation capacity. Items are modified on subsequent administrations to capture change since the prior administration. The assessment also contains the Checklist for Assessing Readiness for Implementation for Evidence-Based Practices (Barwick, 2011). This assessment will allow a comparison of the site’s capacity and readiness to implement the intervention to the specific strategies and desired outcomes, measuring all eight implementation outcomes.

Intervention exposure

To describe participants and to understand their receipt of intervention services, sites complete a single enrollment form for each intervention participant. Sites record demographic information and assign a Unique E2i Identifier Number to each participant. This identifier is used on all patient/client forms to link enrollment, intervention exposure, and medical records. Intervention exposure is recorded at each encounter with E2i participants and contributes to our assessment of the degree to which the program was implemented (uptake) as intended (fidelity), as well as any adaptations to intervention delivery (frequency, dosage, duration, delivery method). We will be able to determine the time elapsed between enrollment and first exposure to the intervention (timeliness), and to determine whether the intervention reaches those whom it would best serve (effectiveness) without differences in delivery (equity) based on participant characteristics.

Intervention exposure measures

A single enrollment form documents the participant’s year of birth, sex assigned at birth, current gender identity, racial/ethnic identity, date of entry into care, and date of E2i intervention enrollment. Intervention Exposure data are collected contemporaneously with participation in the intervention. These include the date of participation, category of staff administering the intervention, interaction modality, types of activities administered, and any activity outcomes. Using the documents and results from the baseline Secondary Document Review, we developed a grid of the Core Elements and noted common and unique activities and outcomes of each of the 11 interventions. These form the response options for this measure.
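The timeliness measure described above (time from enrollment to first intervention exposure, linked through the participant identifier) could be computed along the following lines. This is a minimal sketch, not the EC's actual procedure: the function name, the identifier format, and the shape of the input data are assumptions made for illustration.

```python
from datetime import date
from typing import Dict, List, Optional, Tuple


def days_to_first_exposure(
    enrollments: Dict[str, date],
    exposures: List[Tuple[str, date]],
) -> Dict[str, Optional[int]]:
    """Days from enrollment to first recorded exposure, per participant.

    `enrollments` maps a (hypothetical) identifier to the enrollment date.
    `exposures` is a list of (identifier, encounter date) pairs.
    Participants with no recorded exposure get None.
    """
    first_exposure: Dict[str, date] = {}
    for participant_id, encounter_date in exposures:
        # Ignore exposure rows that cannot be linked to an enrollment form.
        if participant_id not in enrollments:
            continue
        earliest = first_exposure.get(participant_id)
        if earliest is None or encounter_date < earliest:
            first_exposure[participant_id] = encounter_date

    return {
        participant_id: (
            (first_exposure[participant_id] - enrolled).days
            if participant_id in first_exposure
            else None
        )
        for participant_id, enrolled in enrollments.items()
    }
```

Returning `None` for participants without any exposure keeps "never exposed" distinct from "exposed on the day of enrollment" (zero days), which matters when uptake and timeliness are summarized separately.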

Medical record data

We collect information about participants’ HIV healthcare utilization for the 12-month periods before and after enrollment. Sites use a combination of Ryan White Service Report data or abstracted medical record data to measure linkage to and retention in care, ART prescriptions, and viral suppression. Before submitting these health data to the EC, all records are fully de-identified, using only the participants’ Unique E2i Identifier Numbers. These clinical indicators will assess Proctor’s outcome symptomatology.

Medical record measures

Medical record data include HIV care visit dates, CD4 counts and viral loads, and dates of ART prescriptions. These data permit us to measure linkage (primary care visit), treatment (ART prescription), retention (at least one visit in each of two 6-month periods, >60 days apart), and viral suppression (viral load < 200 copies/mL).
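To make the retention and viral suppression definitions concrete, the sketch below applies them to one participant's post-enrollment records. It rests on several assumptions that are not stated in the text: a 6-month period is approximated as 182 days, the two periods are counted from the enrollment date, and suppression is judged on the most recent viral load. The function names are hypothetical.

```python
from datetime import date, timedelta
from typing import List, Optional, Tuple

HALF_YEAR = timedelta(days=182)   # assumption: 6 months approximated as 182 days
MIN_GAP = timedelta(days=60)      # visits must be more than 60 days apart
SUPPRESSION_THRESHOLD = 200       # copies/mL


def is_retained(enrollment: date, visits: List[date]) -> bool:
    """True if there is a visit in each 6-month period, >60 days apart."""
    midpoint = enrollment + HALF_YEAR
    end = midpoint + HALF_YEAR
    first_period = [v for v in visits if enrollment <= v < midpoint]
    second_period = [v for v in visits if midpoint <= v < end]
    # Any qualifying pair (one visit per period) satisfies the definition.
    return any(b - a > MIN_GAP for a in first_period for b in second_period)


def is_virally_suppressed(
    viral_loads: List[Tuple[date, float]],
) -> Optional[bool]:
    """True if the most recent viral load is below the threshold.

    Returns None when no viral load result is available.
    """
    if not viral_loads:
        return None
    _, latest_value = max(viral_loads, key=lambda result: result[0])
    return latest_value < SUPPRESSION_THRESHOLD
```

Other operationalizations are possible, for example using calendar months rather than a fixed number of days, so the constants are kept at the top where they can be changed in one place.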

Costing data

The EC will conduct annual cost assessments (cost) to measure the financial and human capital resources directed toward intervention implementation, including total monetary expenses, in-kind donations, and personnel hours for intervention adaptation and implementation. This analysis will also allow us to assess the cost per person of implementing the intervention (efficiency).

Costing data measures

The goal is to describe the true intervention implementation costs regardless of funding source. Examples of expenses included in these assessments are those related to: personnel effort to develop intervention protocols; electronic medical record modifications necessary to implement the intervention; provider and staff trainings; routine performance monitoring and feedback that are integral to an intervention model (e.g., reviewing clinic record data to perform panel management); and personnel and care-related expenses that are above and beyond levels required before intervention implementation. The costs of intervention implementation do not include expenses and personnel dedicated to the development and implementation of evaluation and routine costs of care that exist independently of the intervention.
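As an illustration of the efficiency measure (cost per person), the sketch below combines the three resource categories named above into a single total and divides by the number of participants. Valuing personnel hours at a single hourly rate is a simplifying assumption, and the function and parameter names are hypothetical rather than taken from the EC's costing tools.

```python
def cost_per_participant(
    monetary_expenses: float,
    in_kind_value: float,
    personnel_hours: float,
    hourly_rate: float,
    participants: int,
) -> float:
    """Total implementation cost divided by the number of participants.

    Personnel time is valued at one flat hourly rate (an assumption);
    evaluation costs and routine costs of care are expected to have been
    excluded from the inputs already.
    """
    if participants <= 0:
        raise ValueError("participants must be a positive integer")
    total_cost = monetary_expenses + in_kind_value + personnel_hours * hourly_rate
    return total_cost / participants
```

For example, 50,000 in expenses, 5,000 in in-kind donations, and 400 personnel hours valued at 50 per hour across 150 participants gives 75,000 / 150 = 500 per participant.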

Analyses

In this mixed-methods study, we are using qualitative methods to document intervention strategies, quantitative methods to assess service and client outcomes, and mixed methods for a comprehensive understanding of implementation strategies and outcomes.

Analyses of qualitative data, including all documents and LS field notes, consist of organization and examination following the principles of thematic and Framework Analysis (Ritchie & Spencer, 1993). These techniques are useful for analysis of qualitative data when some a priori domains are defined based on the research questions of interest; in this case, the Proctor domains (Powell et al., 2015). Once a priori domains are defined, initial coding of the data consists of reviewing source documents and identifying sections of the text that correspond to the a priori domains and developing new domains as needed. To organize and sort data, all materials are entered into Dedoose (Version 5.0.11), a web-based qualitative data analysis platform. Excerpts associated with key codes are summarized and tabled for comparison and theme identification to inform the evaluation of intervention and implementation strategies, in addition to implementation outcomes.

As noted above, preliminary analyses of quantitative data occur on an ongoing basis. The quality of the quantitative data is monitored throughout the initiative. A combination of monthly and specialized data quality reports, identifying missing and potentially inaccurate data values, is generated and provided to the sites. Using these reports, we work with the site data teams to correct errors and prevent them from recurring. Regular technical assistance, training, and documentation are provided to keep sites informed on best practices for data collection.
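A data quality report of the kind described above can be built from simple per-record checks for missing and implausible values. The sketch below is illustrative only: the field names, the required-field list, and the plausibility rules are assumptions, not the EC's actual checks.

```python
from datetime import date
from typing import Any, Dict, List

# Hypothetical field names for an enrollment record.
REQUIRED_FIELDS = ("participant_id", "year_of_birth", "enrollment_date")


def quality_issues(record: Dict[str, Any], today: date) -> List[str]:
    """Return a list of human-readable issues found in one record."""
    # Missing values: field absent, None, or an empty string.
    issues = [
        f"missing {field}"
        for field in REQUIRED_FIELDS
        if record.get(field) in (None, "")
    ]

    # Potentially inaccurate values: simple plausibility rules (assumed).
    year_of_birth = record.get("year_of_birth")
    if isinstance(year_of_birth, int) and not (1900 <= year_of_birth <= today.year):
        issues.append("implausible year_of_birth")

    enrollment_date = record.get("enrollment_date")
    if isinstance(enrollment_date, date) and enrollment_date > today:
        issues.append("enrollment_date in the future")

    return issues
```

Running this over every submitted record and grouping the results by site would yield the kind of list that can be returned to site data teams for correction.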

We conduct analyses of the Organizational Assessment at the end of each assessment period and disseminate information on enrollment and intervention exposure for each intervention and site monthly. Additionally, we disseminate results from medical chart abstraction every six months and information on the costs of each intervention annually. Analyses of our quantitative data will employ linear and non-linear mixed models to evaluate the quantitative relationship between components of the Proctor Model measured at the organizational and individual levels while controlling for repeated measures. For each intervention, our primary analyses will identify implementation strategies, implementation outcomes, and service outcomes that predict our primary client outcome, viral suppression.
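One way to write down a non-linear mixed model of the kind described above, for the binary outcome of viral suppression, is a logistic model with random intercepts. The specification below is only an illustrative sketch; the symbols and the choice of random effects are assumptions and do not represent the final analysis model.

```latex
\operatorname{logit}\,\Pr(Y_{ijt} = 1)
  = \beta_0
  + \boldsymbol{\beta}_1^{\top}\mathbf{S}_{j}
  + \boldsymbol{\beta}_2^{\top}\mathbf{O}_{jt}
  + \boldsymbol{\beta}_3^{\top}\mathbf{X}_{ij}
  + u_j + v_{ij},
\qquad
u_j \sim \mathcal{N}(0, \sigma_u^2), \quad
v_{ij} \sim \mathcal{N}(0, \sigma_v^2)
```

Here $Y_{ijt}$ indicates viral suppression for participant $i$ at site $j$ at measurement occasion $t$; $\mathbf{S}_j$ denotes site-level implementation strategies; $\mathbf{O}_{jt}$ denotes implementation and service outcomes; $\mathbf{X}_{ij}$ denotes participant characteristics; $u_j$ is a site-level random intercept; and $v_{ij}$ is a participant-level random intercept that accounts for repeated measures on the same person.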

Overall interpretation of our results will consist of an integration of qualitative and quantitative findings, using Convergence Model Triangulation (Creswell & Plano Clark, 2011). Each method is given equal weight in terms of importance and contribution. In both the qualitative and quantitative approaches, our multi-level data collection is on-going and examines consistency and differences across methods. This approach will permit us to compare the results of both methods for assessing implementation strategies and implementation outcomes, as well as connect the results from the assessment of implementation strategies to the service and client outcome data.

Discussion

This implementation science approach to evaluation, using the Proctor Model, will allow us to understand in depth how sites are implementing these important evidence-informed interventions for high priority populations. Because we are focusing explicitly on implementation strategies, we hope our results will help accelerate progress in closing gaps between knowing what works and applying it broadly in HIV care settings. Other organizations considering future adoption of these, or similar, evidence-informed interventions can learn from the implementation experiences of the sites in this Initiative. The EC’s findings may help guide future organizations to select appropriate intervention strategies, avoid common implementation pitfalls, and maximize their opportunity for implementation success, thereby improving outcomes for people with HIV.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Altice, F. L., Bruce, R. D., Lucas, G. M., Lum, P. J., Korthuis, P. T., Flanigan, T. P., Cunningham, C. O., Sullivan, L. E., Vergara-Rodriguez, P., Fiellin, D. A., Cajina, A., Botsko, M., Nandi, V., Gourevitch, M. N., & Finkelstein, R. (2011). HIV treatment outcomes among HIV-infected, opioid-dependent patients receiving buprenorphine/naloxone treatment within HIV clinical care settings: Results from a multisite study. JAIDS Journal of Acquired Immune Deficiency Syndromes, 56(Suppl 1), S22–S32. https://doi.org/10.1097/QAI.0b013e318209751e

- Babor, T. F., McRee, B. G., Kassebaum, P. A., Grimaldi, P. L., Ahmed, K., & Bray, J. (2007). Screening, brief intervention, and Referral to treatment (SBIRT). Substance Abuse, 28(3), 7–30. https://doi.org/10.1300/J465v28n03_03

- Barwick, M. (2011). Checklist to assess organizational readiness (CARI) for EIP implementation. Hospital for Sick Children, Toronto.

- Bengtson, A. M., Pence, B. W., Mimiaga, M. J., Gaynes, B. N., Moore, R., Christopoulos, K., O’Cleirigh, C., Grelotti, D., Napravnik, S., Crane, H., & Mugavero, M. (2019). Depressive symptoms and engagement in human Immunodeficiency Virus care following antiretroviral therapy Initiation. Clinical Infectious Diseases, 68(3), 475–481. https://doi.org/10.1093/cid/ciy496

- Centers for Disease Control and Prevention. (2015). HIV Among Transgender People. http://www.cdc.gov/hiv/group/gender/transgender/

- Centers for Disease Control and Prevention. (2019a). HIV Infection Risk, Prevention, and Testing Behaviors Among Men Who Have Sex With Men—National HIV Behavioral Surveillance, 23 U.S. Cities, 2017. https://www.cdc.gov/hiv/pdf/library/reports/surveillance/cdc-hiv-surveillance-special-report-number-22.pdf

- Centers for Disease Control and Prevention. (2019b). Understanding the HIV care continuum. Fact Sheet. https://www.cdc.gov/hiv/pdf/library/factsheets/cdc-hiv-care-continuum.pdf

- Creswell, J. W., & Plano Clark, V. L. (2011). Designing and Conducting Mixed Methods Research (2nd ed.). Sage Publications.

- Curran, G. M., Bauer, M., Mittman, B., Pyne, J. M., & Stetler, C. (2012). Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Medical Care, 50(3), 217–226. https://doi.org/10.1097/MLR.0b013e3182408812

- Dawson-Rose, C., Draughon, J. E., Cuca, Y., Zepf, R., Huang, E., Cooper, B. A., & Lum, P. J. (2017). Changes in specific substance involvement scores among SBIRT recipients in an HIV primary care setting. Addiction Science & Clinical Practice, 12(1), 34. https://doi.org/10.1186/s13722-017-0101-1

- DeGroote, N. P., Korhonen, L. C., Shouse, R. L., Valleroy, L. A., & Bradley, H. (2016). Unmet Needs for ancillary services Among Men Who have Sex with Men and Who Are receiving HIV medical care - United States, 2013-2014. MMWR, 65(37), 1004–1007. https://doi.org/10.15585/mmwr.mm6537a4

- Empson, S., Cuca, Y. P., Cocohoba, J., Dawson-Rose, C., Davis, K., & Machtinger, E. L. (2017). Seeking Safety Group therapy for Co-occurring substance use disorder and PTSD among transgender women living with HIV: A Pilot study. Journal of Psychoactive Drugs, 49(4), 344–351. https://doi.org/10.1080/02791072.2017.1320733

- Garey, L., Bakhshaie, J., Sharp, C., Neighbors, C., Zvolensky, M. J., & Gonzalez, A. (2015). Anxiety, depression, and HIV symptoms among persons living with HIV/AIDS: The role of hazardous drinking. AIDS Care, 27(1), 80–85. https://doi.org/10.1080/09540121.2014.956042

- Garofalo, R., Kuhns, L. M., Hotton, A., Johnson, A., Muldoon, A., & Rice, D. (2016). A Randomized Controlled Trial of Personalized text Message Reminders to Promote medication adherence Among HIV-positive Adolescents and Young adults. AIDS and Behavior, 20(5), 1049–1059. https://doi.org/10.1007/s10461-015-1192-x

- Gaynes, B. N., Pence, B. W., Eron, Jr., J. J., & Miller, W. C. (2008). Prevalence and comorbidity of psychiatric diagnoses based on reference standard in an HIV+ patient population. Psychosomatic Medicine, 70(4), 505–511. https://doi.org/10.1097/PSY.0b013e31816aa0cc

- Headrick, L. A., Knapp, M., Neuhauser, D., Gelmon, S., Norman, L., Quinn, D., & Baker, R. (1996). Working from upstream to improve health care: The IHI Interdisciplinary Professional Education collaborative. Joint Commission Journal on Quality Improvement, 22(3), 149–164. https://doi.org/10.1016/S1070-3241(16)30217-6

- Health Resources & Services Administration. (2015). Health Care Action: Impact of Mental Health on People Living with HIV. http://hab.hrsa.gov/deliverhivaidscare/mentalhealth.pdf

- Health Resources & Services Administration. (2019). About the Ryan White HIV/AIDS Program. https://hab.hrsa.gov/about-ryan-white-hivaids-program/about-ryan-white-hivaids-program

- Higa, D. H., Marks, G., Crepaz, N., Liau, A., & Lyles, C. M. (2012). Interventions to improve retention in HIV primary care: A systematic review of U.S. Studies. Current HIV/AIDS Reports, 9(4), 313–325. https://doi.org/10.1007/s11904-012-0136-6

- Jain, K. M., Maulsby, C., Brantley, M., Kim, J. J., Zulliger, R., Riordan, M., Charles, V., & Holtgrave, D. R. (2016). Cost and cost threshold analyses for 12 innovative US HIV linkage and retention in care programs. AIDS Care, 28(9), 1199–1204. https://doi.org/10.1080/09540121.2016.1164294

- Kaner, E., Bland, M., Cassidy, P., Coulton, S., Deluca, P., Drummond, C., Gilvarry, E., Godfrey, C., Heather, N., Myles, J., Newbury-Birch, D., Oyefeso, A., Parrott, S., Perryman, K., Phillips, T., Shenker, D., & Shepherd, J. (2009). Screening and brief interventions for hazardous and harmful alcohol use in primary care: A cluster randomised controlled trial protocol. BMC Public Health, 9(1), 287. https://doi.org/10.1186/1471-2458-9-287

- Keuroghlian, A., Marc, L., Massaquoi, M., Cahill, S., Nortrup, E., Myers, J., … Shade, S. (2018, December 11-14). National implementation of evidence-informed interventions for people living with HIV across 26 sites. Paper presented at the National Ryan White Conference on HIV care and treatment, Washington DC.

- Maulsby, C., Millett, G., Lindsey, K., Kelley, R., Johnson, K., Montoya, D., & Holtgrave, D. (2013). A systematic review of HIV interventions for black men who have sex with men (MSM). BMC Public Health, 13(1), 625. https://doi.org/10.1186/1471-2458-13-625

- Melendez, R. M., & Pinto, R. M. (2009). HIV prevention and primary care for transgender women in a community-based clinic. Journal of the Association of Nurses in AIDS Care, 20(5), 387–397. https://doi.org/10.1016/j.jana.2009.06.002

- Millett, G. A., Ding, H., Marks, G., Jeffries, W. L., Bingham, T., Lauby, J., Murrill, C., Flores, S., & Stueve, A. (2011). Mistaken assumptions and missed opportunities: Correlates of undiagnosed HIV infection among black and Latino men who have sex with men. JAIDS Journal of Acquired Immune Deficiency Syndromes, 58(1), 64–71. https://doi.org/10.1097/QAI.0b013e31822542ad

- Mizuno, Y., Frazier, E., Huang, P., & Skarbinski, J. (2015). Characteristics of transgender women Living with HIV receiving medical care in the United States. LGBT Health, 2(3), 228–234. https://doi.org/10.1089/lgbt.2014.0099

- Mugavero, M. J. (2008). Improving engagement in HIV care: What can we do? Topics in HIV Medicine, 16(5), 156–161.

- Mugavero, M. J. (2016). Elements of the HIV care Continuum: Improving engagement and retention in care. Topics in Antiviral Medicine, 24(3), 115–119.

- Mugavero, M. J., Ostermann, J., Whetten, K., Leserman, J., Swartz, M., Stangl, D., & Thielman, N. (2006). Barriers to antiretroviral adherence: The importance of depression, abuse, and other traumatic events. AIDS Patient Care and STDs, 20(6), 418–428. https://doi.org/https://doi.org/10.1089/apc.2006.20.418

- Naar-King, S., Outlaw, A., Green-Jones, M., Wright, K., & Parsons, J. T. (2009). Motivational interviewing by peer outreach workers: A pilot randomized clinical trial to retain adolescents and young adults in HIV care. AIDS Care, 21(7), 868–873. https://doi.org/https://doi.org/10.1080/09540120802612824

- Naar-King, S., & Suarez, M. (2011). Motivational Interviewing with Adolescents and Young Adults. Guilford.

- Najavits, L. M., Weiss, R. D., Shaw, S. R., & Muenz, L. R. (1998). “Seeking safety”: Outcome of a new cognitive-behavioral psychotherapy for women with posttraumatic stress disorder and substance dependence. Journal of Traumatic Stress, 11(3), 437–456. https://doi.org/10.1023/A:1024496427434

- Nemoto, T., Operario, D., Keatley, J., Han, L., & Soma, T. (2004). HIV risk behaviors among male-to-female transgender persons of color in San Francisco. American Journal of Public Health, 94(7), 1193–1199. https://doi.org/10.2105/AJPH.94.7.1193

- O’Cleirigh, C., Magidson, J. F., Skeer, M. R., Mayer, K. H., & Safren, S. A. (2015). Prevalence of psychiatric and substance abuse symptomatology among HIV-infected gay and bisexual men in HIV primary care. Psychosomatics, 56(5), 470–478. https://doi.org/10.1016/j.psym.2014.08.004

- Powell, B. J., Waltz, T. J., Chinman, M. J., Damschroder, L. J., Smith, J. L., Matthieu, M. M., Proctor, E. K., & Kirchner, J. E. (2015). A refined compilation of implementation strategies: Results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science, 10(1), 21. https://doi.org/10.1186/s13012-015-0209-1

- Proctor, E. K., Powell, B. J., & McMillen, J. C. (2013). Implementation strategies: Recommendations for specifying and reporting. Implementation Science, 8(1), 139. https://doi.org/10.1186/1748-5908-8-139

- Pyne, J. M., Fortney, J. C., Curran, G. M., Tripathi, S., Atkinson, J. H., Kilbourne, A. M., … Gifford, A. L. (2011). Effectiveness of collaborative care for depression in human immunodeficiency virus clinics. JAMA Internal Medicine, 171(1), 23–31. https://doi.org/10.1001/archinternmed.2010.395

- Rebchook, G., Keatley, J., Contreras, R., Perloff, J., Molano, L. F., Reback, C. J., Ducheny, K., Nemoto, T., Lin, R., Birnbaum, J., Woods, T., & Xavier, J. (2017). The transgender women of color initiative: Implementing and evaluating innovative interventions to enhance engagement and retention in HIV care. American Journal of Public Health, 107(2), 224–229. https://doi.org/10.2105/AJPH.2016.303582

- Resick, P. A., Wachen, J. S., Dondanville, K. A., Pruiksma, K. E., Yarvis, J. S., Peterson, A. L., Mintz, J., Borah, E. V., Brundige, A., Hembree, E. A., Litz, B. T., Roache, J. D., & Young-McCaughan, S. (2017). Effect of group vs individual cognitive processing therapy in active-duty military seeking treatment for posttraumatic stress disorder: A randomized clinical trial. JAMA Psychiatry, 74(1), 28–36. https://doi.org/10.1001/jamapsychiatry.2016.2729

- Resick, P. A., Wachen, J. S., Mintz, J., Young-McCaughan, S., Roache, J. D., Borah, A. M., Borah, E. V., Dondanville, K. A., Hembree, E. A., Litz, B. T., & Peterson, A. L. (2015). A randomized clinical trial of group cognitive processing therapy compared with group present-centered therapy for PTSD among active duty military personnel. Journal of Consulting and Clinical Psychology, 83(6), 1058–1068. https://doi.org/10.1037/ccp0000016

- Ritchie, J., & Spencer, L. (1993). Qualitative data analysis for applied policy research. In A. Bryman & R. G. Burgess (Eds.), Analyzing qualitative data (pp. 173–194). Routledge.

- Rosenberg, E. S., Millett, G. A., Sullivan, P. S., del Rio, C., & Curran, J. W. (2014). Understanding the HIV disparities between black and white men who have sex with men in the USA using the HIV care continuum: A modeling study. The Lancet HIV, 1(3), e112–e118. https://doi.org/10.1016/S2352-3018(14)00011-3

- Rubin, M. S., Colen, C. G., & Link, B. G. (2010). Examination of inequalities in HIV/AIDS mortality in the United States from a fundamental cause perspective. American Journal of Public Health, 100(6), 1053–1059. https://doi.org/10.2105/AJPH.2009.170241

- Ryan, A. B. (2006). Methodology: Analysing qualitative data and writing up your findings. In Researching and writing your thesis: A guide for postgraduate students (pp. 92–108). Maynooth Adult and Community Education.

- Schafer, K. R., Brant, J., Gupta, S., Thorpe, J., Winstead-Derlega, C., Pinkerton, R., Laughon, K., Ingersoll, K., & Dillingham, R. (2012). Intimate partner violence: A predictor of worse HIV outcomes and engagement in care. AIDS Patient Care and STDs, 26(6), 356–365. https://doi.org/10.1089/apc.2011.0409

- Sevelius, J. M., Carrico, A., & Johnson, M. O. (2010). Antiretroviral therapy adherence among transgender women living with HIV. Journal of the Association of Nurses in AIDS Care, 21(3), 256–264. https://doi.org/10.1016/j.jana.2010.01.005

- Sevelius, J. M., Chakravarty, D., Neilands, T. B., Keatley, J., Shade, S. B., Johnson, M. O., … Group, H. S. T. W. o. C. S. (2019). Evidence for the model of gender affirmation: The role of gender affirmation and healthcare empowerment in viral suppression among transgender women of color living with HIV. AIDS and Behavior. https://doi.org/10.1007/s10461-019-02544-2

- Singh, S., Mitsch, A., & Wu, B. (2017). HIV care outcomes among men who have sex with men with diagnosed HIV infection — United States, 2015. MMWR. Morbidity and Mortality Weekly Report, 66(37), 969–974. https://doi.org/10.15585/mmwr.mm6637a2

- Substance Abuse and Mental Health Services Administration and Health Resources and Services Administration. (2016). The Case for Behavioral Health Screening in HIV Care Publication (SMA-16-4999). Rockville, MD: Substance Abuse and Mental Health Services Administration.

- Unützer, J., Katon, W., Callahan, C. M., Williams, Jr, J. W., Hunkeler, E., Harpole, L., Hoffing, M., Della Penna, R. D., Noël, P. H., Lin, E. H. B., Areán, P. A., Hegel, M. T., Tang, L., Belin, T. R., Oishi, S., Langston, C., & for the IMPACT Investigators. (2002). Collaborative care management of late-life depression in the primary care setting: A randomized controlled trial. JAMA, 288(22), 2836–2845. https://doi.org/10.1001/jama.288.22.2836

- Wilson, E. C., Garofalo, R., Harris, R. D., Herrick, A., Martinez, M., Martinez, J., Belzer, M., … The Adolescent Medicine Trials Network for HIV/AIDS Interventions. (2009). Transgender female youth and sex work: HIV risk and a comparison of life factors related to engagement in sex work. AIDS and Behavior, 13(5), 902–913. https://doi.org/10.1007/s10461-008-9508-8