ABSTRACT

This study uses a design science research methodology to develop and evaluate the Pi-Mind agent, an information technology artefact that acts as a responsible, resilient, ubiquitous cognitive clone – or a digital copy – and an autonomous representative of a human decision-maker. Pi-Mind agents can learn the decision-making capabilities of their “donors” in a specific training environment based on generative adversarial networks. A trained clone can be used by a decision-maker as an additional resource for one’s own cognitive enhancement, as an autonomous representative, or even as a replacement when appropriate. The assumption regarding this approach is as follows: when someone was forced to leave a critical process because of, for example, sickness, or wanted to take care of several simultaneously running processes, then they would be more confident knowing that their autonomous digital representatives were as capable and predictable as their exact personal “copy”. The Pi-Mind agent was evaluated in a Ukrainian higher education environment and a military logistics laboratory. In this paper, in addition to describing the artefact, its expected utility, and its design process within different contexts, we include the corresponding proof of concept, proof of value, and proof of use.

1. Introduction

The current vision of transformation across various industries and fields embraces the idea of human-centric, resilient, and sustainable processes (Breque et al., 2021). Despite tremendous advances in digitalisation and automation (Tuunanen et al., 2019), human employees play the dominant role, especially in creative, strategic, and emergent decision-making (Jarrahi, 2018). A human-centric perspective, however, leads to more subjective decisions and a high dependency on particular employees, creating preconditions for process bottlenecks and for information and work overload (Matthews et al., 2019; Phillips-Wren & Adya, 2020), and thus threatening organisational resilience and sustainability (Raetze et al., 2021). Recent emergencies caused by refugee crises, global hacker attacks, hybrid activities, wars, COVID-19, etc. (Sakurai & Chughtai, 2020) have provided many illustrative examples of the disruption of organisational decision-making due to the human factor. We call this challenge “overload vs. organisational resilience”.

One way to increase organisational resilience and sustainability for human-centricity is the digitalisation and cybernisation of real-world human-driven decision environments by digital twinning, which translates assets, processes, information systems, and devices into digital resources (Jones et al., 2020). This study focuses on the digital twinning of human resources (HR) to reduce overload and achieve process resilience. Human digital twins, or cognitive clones that digitise human cognitive capabilities (Somers et al., 2020), act as representatives of human actors in cyberspace. They can either (a) copy (simulate) both correct and incorrect (former and potential) decisions by the “donor” (the human owner), acting according to an “exact”, imperfect, and biased model of a particular “donor”, or (b) be capable of correcting potential decision-making mistakes of the “donor” using an automated machine model of a “perfect”, unbiased decision-maker. Option (b) has long been the goal of AI community studies, whereas Option (a) has not yet been studied enough. We intend to uncover the possibilities of a new class of applications of Option (a), leveraging the twinning of a decision maker’s mental model with all personal preferences, features, and biases. Shifting the focus of attention from the long-living Option (b) to the emerging Option (a) is a relatively new phenomenon. The shift is driven by the recently recognised need to bring humans back into the loop of controlling highly automated processes and by the newly emerged opportunities due to the evident success of deep learning models and tools in the cognitive computing domain.

Our research questions are as follows:

How can the digital cognitive clone of a donor be designed to inherit the personal preferences, responsibilities, decision features, and biases of a decision-maker?

How can the cognitive clone be applied for resilient, sustainable, and human-centric organisational decision-making?

To achieve this, we apply design science research (DSR) (Hevner et al., 2004; Peffers et al., 2007) to develop, demonstrate, and evaluate an information technology (IT) artefact (the Pi-Mind agent) capable of making decisions on behalf of its human donor concerning the personal preferences, decision features, and biases of a particular decision-maker.

Previous studies have shown how cognitive clones, which have been created with semantic modelling and deep learning, can digitalise decision systems in Industry 4.0 (Longo et al., 2017; Terziyan et al., 2018b). This study focuses on developing an applicable IT artefact suitable for various organisational and business processes.

The rest of the paper is organised as follows. We present how we applied DSR methodology (DSRM) in the research. Next, we study the context of digital transformation, digital twinning, and decision-making automation, formulate the design principles, and describe the design of the Pi-Mind agent. We then demonstrate and evaluate the developed artefact. Lastly, we discuss the findings and conclude.

2. Research methodology

To develop cognitive digital clones capable of acting as decision-makers, we apply the DSRM (see Figure 1). The DSRM (Peffers et al., 2007) comprises the following phases: identifying the problem and motivation, defining the objectives (for a solution), designing and developing artefact(s), demonstrating the solution to the problem, and evaluating the solution. We adopt the DSR evaluation approach suggested by Venable et al. (2012) and Nunamaker et al. (2015). Namely, we demonstrate the effectiveness of the artefact (proof of concept), evaluate its efficiency (proof of value) for achieving its stated purpose, and identify the side effects or undesirable consequences of its use for Ukrainian higher education (HE) and society at large (proof of use). This evaluation approach follows Tuunanen and Peffers (2018) and Nguyen et al. (2020).

Figure 1. The DSRM applied for the study, adapted from Peffers et al. (2007).

3. Related work

The relationship between digital and physical realities is changing dramatically. It is not just about the emergence of new scenarios in underlying process management (Filip et al., 2017) or digital transformation in organisational structures and management concepts (Kuziemski & Misuraca, 2020). An ontological shift towards a “digital-first” (Ågerfalk, 2020; Baskerville et al., 2019) has led to a new logic of organisational decision-making regarding the underlying models of human–computer interaction.

Traditional organisational decision-making deals with overload in the workplace with simplified models of human–computer interaction. The three basic approaches are straightforward and represent a certain compromise between the two extremes: (1) entirely human decision-making (HR management approach) and (2) completely automated decision-making (see Table 1).

Table 1. Existing solutions for decision-making in organisations.

The new decision-making logic requires clear interaction of the data-driven insights and the behavioural drivers behind human analysts and managers (Li & Tuunanen, 2022; Sharma et al., 2014). The new logic should rely on light touch processes that are “structured to be modifiable rather than rigidly fixed”, on infrastructural flexibility that enables the “flexibility and configurability of process data flow”, and on mindful actors who act “based on the prevailing circumstances of the context” (Baiyere et al., 2020, p. 3). All of these can be facilitated by the smart integration of human and machine intelligence, in which human workforce decisions are combined with those made by artificial mindful actors (Duan et al., 2019).

There are different views on how to model joint human and AI decision-making. Ultimately, all human-AI decision-making approaches can be combined into several potential collaborative decision-making scenarios: complete human-to-AI delegation, hybrid (human-to-AI and AI-to-human sequential decision-making), and aggregated human-AI decision-making that aggregates the decisions of a group of individuals (Shrestha et al., 2019). Human judgement and individual preferences are expected to remain vital (Agrawal et al., 2019), especially in agile environments (Drury-Grogan et al., 2017). Therefore, hybrid scenarios of organisational decision-making based on human–AI symbiosis are estimated to prevail (Jarrahi, 2018). Human–AI symbiosis is seen as intelligence augmentation in such hybrid models, demanding that AI extend human cognition when addressing complexity. By contrast, humans offer a holistic, intuitive approach to dealing with uncertainty and equivocality. As machine-prediction technology improves, complex decisions (besides easy-to-automate jobs) will be increasingly delegated to an artificial workforce (Bughin et al., 2018; Kolbjørnsrud et al., 2016).

Acharya et al. (2018) reported a hybrid model in which human operators evaluate automated advice based on their preferences and knowledge. Ruijten et al. (2018), Wang et al. (2019), and Golovianko et al. (2021) demonstrated that AI models can act as twins of human decision behaviour in various organisational processes.

Even though digital twins have already been implemented, the common vision of “digital representatives” is still under development (Rathore et al., 2021). The theoretical foundation for the creation of digital representatives for the human owner (Pi-Mind agent) was 1) the concept of digital twins (Grieves, 2019) as high-quality simulations or digital replicas of physical objects, and 2) cognitive/digital clones (Al Faruque et al., 2021; Terziyan et al., 2018b) as a “cloning” technology regarding the cognitive skills of humans.

Cognitive cloning is quickly becoming mainstream in today’s applied IT developments (Becue et al., 2020; Booyse et al., 2020; Hou et al., 2021). NTT Secure Platform Laboratories has defined a digital twin as an autonomous, agent-driven entity (Takahashi, 2020). Microsoft has patented the technology for creating digital clones (to the extent of conversational bots) for specific persons (Abramson & Johnson, 2020), and Truby and Brown (2021) discussed the ethical implications of “digital thought clones” for customer experience personalisation.

4. Design of the Pi-Mind agent

4.1. Design principles of the artefact

Attempts to simulate or automate decision-making always encounter some polarising opinions: what should one rely on when facing a decision problem: expertise, intuition, heuristics, biases, calculations, or algorithms (Kahneman & Klein, 2009)? Can human decision-making be improved by either helping a person (partial automation and augmentation) or replacing the decision-maker with advanced AI algorithms (full autonomy)? Where should we get the decision-making procedures? Should they come from the direct transfer of knowledge from humans to decision-making systems, or the automated discovery of knowledge based on observations and machine learning (ML)? What contexts should we foresee for decision-making? Should these be simple or complex, with full information or under uncertainty, with unlimited or limited resources (time, memory, etc.), and in business-as-usual situations or crisis management? To have a personal, autonomous, always available, as-smart-as-a-human, reliable, fast, and responsible digital substitute for oneself, it is necessary to abandon attempts to choose just one direction of research. After all, they are all present (one way or another) in the human mental model.

The main idea comprises a partial shift from human-driven to AI-driven decision-making supported by an IT artefact, the Pi-Mind agent, which enables the replacement (when needed and appropriate) of a human actor (donor) at decision points with a personal cognitive clone (aka proactive digital twin). This ensures ubiquity or the involvement of a particular person in many processes simultaneously without losing the characteristics of decision-making (responsibility) and cognitive tension (resilience).

Thus, we formulate design principles (DPs), prescriptive statements to constitute the basis of the design actions (Chatterjee et al., 2017), and approaches for the evaluation of the DPs’ implementation (see Table 2). The artefact should be designed as a responsible (DP1), resilient (DP2), and ubiquitous (DP3) cognitive copy (DP0) of its donor. The primary and fundamental design principle is the Turing principle (DP0), which is inspired by the historical principle of AI – the so-called Turing test that examines the ability of AI to exhibit intelligent behaviour equivalent to or indistinguishable from human behaviour (Turing, 1950).

Table 2. Pi-Mind agent design principles.

To assess the implementation of all the DPs, we measure the effectiveness of the artefact in terms of the correspondence between a clone and donor; we also measure efficiency in terms of the benefits gained from replacing the human decision-maker with AI.

Several metrics of the correspondence between a clone and the donor allow evaluation of the quality of the implementation of DP0-DP2. Considering the decision evaluation as a binary classification problem (with two possible output classes: “correct decision” and “incorrect decision”), we apply the F1-score as a metric of decision accuracy. The F1 score is a widely used metric for model performance in both AI and management, making it universal and applicable to all our cases.
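As a minimal sketch of the metric just described, the F1-score can be computed by treating the donor's judgements as reference labels and the clone's judgements as predictions. The function name, the label strings, and the sample data below are illustrative assumptions and are not taken from the study.

```python
def f1_score(donor_labels, clone_labels, positive="correct"):
    """F1-score of the clone's labels measured against the donor's labels."""
    pairs = list(zip(donor_labels, clone_labels))
    tp = sum(1 for d, c in pairs if d == positive and c == positive)
    fp = sum(1 for d, c in pairs if d != positive and c == positive)
    fn = sum(1 for d, c in pairs if d == positive and c != positive)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical evaluation data: six decisions judged by donor and clone.
donor = ["correct", "correct", "incorrect", "correct", "incorrect", "correct"]
clone = ["correct", "incorrect", "incorrect", "correct", "correct", "correct"]

f1 = f1_score(donor, clone)
print(f"F1 = {f1:.2f}")
```

With three true positives, one false positive, and one false negative, precision and recall are both 0.75, so the F1-score is 0.75 as well.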

Efficiency indicates user utilities; thus, it can be interpreted and measured using several metrics. We consider the challenge of overload versus organisational resilience to be the main benefit of utilising the Pi-Mind agent. The clone enables the participation of a human in various processes simultaneously (DP3). The donor saves time by delegating part of the work to the clone. Therefore, we use the following efficiency metrics: timesaving per transaction and the number of processes in which a clone participates simultaneously.

4.2. Twinning decision behaviour

The Pi-Mind agent is an intelligent model for ubiquitous decision-making based on the digital twinning of human decision behaviour. A specific instance of the Pi-Mind agent is a digitally shared proactive copy of a particular person’s decision system in terms of decision schemes and preferences that depend on specific tasks, domains, and contexts. Digital decision-specific knowledge is stored as interrelated semantic resources in a set of ontologies. Personal preferences are defined as a unique set of decision criteria formalised with various information models, such as mathematical models (e.g., neural networks and decision trees), detailed specifications (algorithms), or explicit scoring (judgement) systems based on customised sets of values.

To provide a new instance of the Pi-Mind agent with knowledge, we extract decision-specific knowledge from the human donor, annotate it in terms of decision ontologies, and store it in the corresponding knowledge base. Suppose that the decision behaviour can be explicitly explained. In that case, this task is reduced to configuring the most accurate mathematical decision-making model (identifying the correct type of model, the appropriate parameters, and the relationships between them). The general laws, principles, and rules are rigidly fixed in this case.

The basic model is shared among all decision-makers and functions as the basis for making decisions. However, the final decisions are unique because each decision-maker customises and personalises the model by setting up the preferences (consciously assigned values for the parameters) within it. With this approach, the digitised human is a carrier of a vector of values for the model parameters. Decisions made according to this method are explainable because the essence of each parameter and its comparative value can be understood. This approach is well established in DSS, formalising human (or group) multi-criteria decision behaviour in business-as-usual situations.
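The idea of a shared model personalised by a vector of parameter values can be illustrated with a weighted-sum scoring model, a common multi-criteria technique. This is a sketch under assumptions: the criteria names, the weights, and the weighted-sum form are hypothetical stand-ins, since the text does not prescribe a specific model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Preferences:
    """Hypothetical preference vector: one weight per decision criterion."""
    weights: dict


def score(alternative, prefs):
    """Shared basic model: weighted sum over the criteria."""
    return sum(prefs.weights[k] * alternative[k] for k in prefs.weights)


def decide(alternatives, prefs):
    """Pick the alternative with the highest personalised score."""
    return max(alternatives, key=lambda name: score(alternatives[name], prefs))


def explain(alternative, prefs):
    """Per-criterion contributions, which make the decision explainable."""
    return {k: prefs.weights[k] * alternative[k] for k in prefs.weights}


# Two alternatives rated on two illustrative criteria (higher is better).
alternatives = {
    "A": {"cost": 0.9, "quality": 0.4},
    "B": {"cost": 0.3, "quality": 0.95},
}

# Two donors share the model but carry different vectors of values.
cost_focused = Preferences({"cost": 0.8, "quality": 0.2})
quality_focused = Preferences({"cost": 0.2, "quality": 0.8})

print(decide(alternatives, cost_focused))     # A
print(decide(alternatives, quality_focused))  # B
print(explain(alternatives["A"], cost_focused))
```

The same model yields different final decisions for the two donors, and the `explain` helper shows why: each parameter's contribution to the score can be read off directly.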

However, this approach encounters problems if the situation ceases to be ordinary. When decision-makers leave their comfort zones because of fuzziness, uncertainty, or a lack of information, the previously fixed model becomes irrelevant. In this situation, humans are still capable of making decisions; however, they unconsciously use hidden personal biases, heuristics, and intuition. It is difficult for a person to explain their decisions in this situation. Therefore, a different technology for extracting knowledge is needed.

The alternative approach assumes the model (the architecture and parameters) to be self-configurable when observing the human donor’s decision behaviour. The outcomes of this model cannot be explained by analysing its parameters, because the values for these parameters are not provided by donors but by artificial (computational) intelligence when simulating human behaviour. In this approach, the information system can be trained to make personalised decisions in various situations, simulating concrete human decision-maker behaviour.

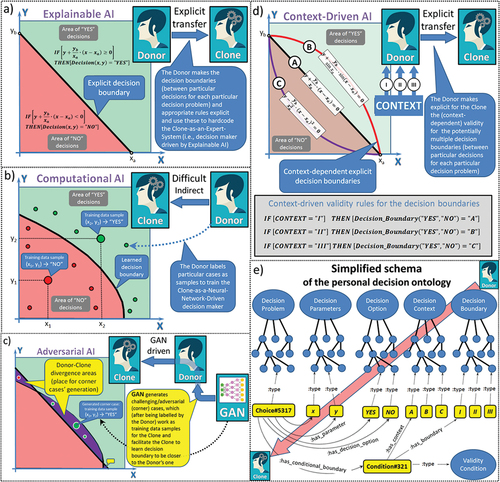

We use both fundamental AI approaches to extract expert knowledge (see Figure 2).

In the first facet shown in Figure 2, the top-down (“symbolic”) approach, which is based on semantic annotation, experts specify and explicitly conceptualise how they evaluate alternatives and choose a solution. In the second facet, the bottom-up approach, computational intelligence enables learning decision-specific knowledge from observation for more intuitive decision practices and weakly formalised problems. The third facet enables the autonomic behaviour of the Pi-Mind, exhibiting proactivity and the abilities of situation- and self-awareness and self-management. These are considered important components of future strong AI, artificial general intelligence, and super-intelligence and are mainly associated with the development of reinforcement learning techniques (Silver et al., 2021).

4.3. Creating cognitive clones as AI agents

Agent-based models drive the autonomic (the third AI facet in Figure 2) and proactive behaviour of the clones as ubiquitous activity within decision environments (DP3). During the technology’s life cycle, the Pi-Mind agent “lives” in two types of modes almost synchronously. In the “operational” mode, the clone addresses different decision-making tasks (jobs). In the “university” mode, the agent develops its cognitive capabilities for better performance, aiming to adapt its behaviour continuously to new challenges, contexts, and tasks. Within such an environment, the Pi-Mind agent learns the following:

Understanding and using the personal decision preferences and values of its human donors and choosing the most appropriate solution among alternatives in business-as-usual processes.

Applying known decision models to different but related problems with transfer learning.

Obtaining critical decision-making experience and preparing for new, difficult tasks, complex contexts, and disruptions from continuous retraining in adversarial settings.

Training the AI immunity (Castro et al., 2002) against cognitive attacks (Biggio & Roli, 2018). Such attacks threaten an agent’s intelligence capabilities by breaking ML models with specifically crafted adversarial inputs.

Unlike simulators with embedded typical behaviour patterns, the Pi-Mind agent acquires the ability to learn and proactively exhibits the unique decision-making behaviour of a particular person (donor). A simple formula connecting all three ideas with the concepts in Figure 2 is as follows:

Here, we use a pragmatic notion of “consciousness”, which supports the concept of the Pi-Mind agent. According to our definition, consciousness (for a human or for an AI agent) includes (a) self-awareness – understanding the boundary between everything within the accessible “me” and the accessible “the rest of the world” and (b) self-management, which is an autonomic, proactive activity for keeping the balance between these two. The “balance” means the following: (a) for a human, sustainable opportunity to complete the personal mission statement, and (b) for an AI agent, sustainable opportunity to complete its design objectives.

This extension of the digital twin concept also influences the distribution of responsibilities among the relevant players. The players are as follows: the donor (the object for twinning), the designer (the subject performing twinning), the clone (the outcome of twinning), and the user (the user of the designed clone). Consider the case when the clone is a simple digital twin of some donor. In this case, the user takes remote control of the clone. If some severe fault happens in use, the responsibility will be divided between the user (for possible incorrectness in control) and the designer (when the clone is not functioning according to the agreed-upon specification). Now consider the case when Pi-Mind drives the clone of a donor. In this case, the designer and donor are the same person; the clone works (behaves and decides) proactively, following the donor’s decision and self-management logic. Therefore, here, the user will not be responsible for the potential problems, and the responsibility will be entirely on the side of the clone – that is, the donor and/or designer. The difference between these two scenarios is another reason for using the term “responsible cognitive clones” when discussing Pi-Mind agents.

This property allows us to talk about “soft substitution” that preserves jobs. The clone does not push the donor out of the workplace but instead strengthens the donor’s capabilities, allowing simultaneous virtual participation in several processes (DP3). This creates an understudy for emergencies and a sparring partner for coevolution.

4.4. Clone’s cognitive development by ML

The training environment for Pi-Mind agents is built to ensure the implementation of the declared design principles (Table 2), namely DP0 (Turing principle or the minimal donor-clone deviation), DP1 (transfer of specific responsibilities to the agent), and DP2 (resiliency or ability to act both reactively and proactively):

A Pi-Mind agent learns to act as a smart cognitive system capable of creativity, cognition, and computing in real-world settings, where it is impossible to predict future tasks and problems. The agent should observe new realities and be capable of generating new alternatives and parameters. Therefore, an agent’s cognitive capabilities are developed primarily through adversarial learning using variations and enhancements of the generative adversarial network (GAN) architecture (Goodfellow et al., 2014).

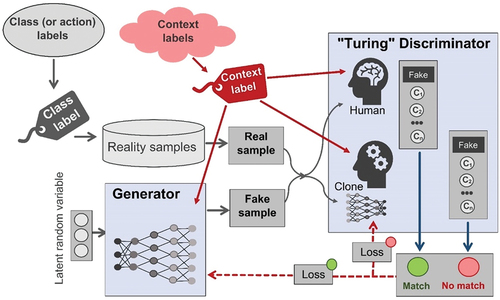

A Pi-Mind agent must react similarly to the target (human) donor. We suggest updating the basic GAN architecture by finding a place for the donor (human) component. We call this mix (agent + donor) the “Turing discriminator” (TD), and all the GAN architectures that are included in this mix will have the letter “T” in their acronym. A direct extension of the basic GAN is called the T-GAN.

A Pi-Mind agent is required not only to operate as a binary classifier (“real” or “fake”), as the basic GAN does, but also to address the complete spectrum of decision options (class labels and fake detection). To achieve this, we use some architectural features from SGAN (Odena, 2016), which is an extension of a generic GAN architecture with classification capability. Classification is a kind of decision-making problem that involves choosing a particular class label from those available. Behaviour is also a decision-making problem of choosing a specific action from the available ones.

A Pi-Mind agent is trained in different contexts to make decisions similar to those of its donor. This means that the agent will learn not only one but several decision models personalised in different contexts, including critical decision-making. We add the letter “C” to the acronym of such context-aware GAN architectures; for example, the direct extension of the basic GAN will be named C-GAN (not to be confused with CGAN, which is the conditional GAN).

The aggregation of these requirements forms a target adversarial architecture for training Pi-Mind agents (clones): T|C-SGAN (see Figure 3).

Figure 3. Pi-Mind technology-based T|C-SGAN adversarial architecture for transferring decision-making skills from a human to a digital clone.

T|C-SGAN includes a TD with different semantics than a traditional GAN discriminator. The TD is a small collective intelligence team consisting of a “human” (H) and a trainable digital “clone” (C). The TD gets the input samples from the reality samples set and is expected to guess the correct decision (class or action label). Additional inputs come in the form of a specific context label, which means that the correct decision derived by the TD must also be appropriate for the given context. H and C (independent of each other) suggest their decisions (correct labels) for the input. The decisions are compared, and the numeric evaluation of the mismatch is computed. This mismatch is used as feedback (loss function value) for the neural network model of C, and the network parameters are updated, aiming for better performance next time. Thus, this procedure comprises supervised context-aware (backpropagation-driven) learning by C, where the clone is trained to guess the decisions made by its donor in similar situations and contexts as precisely as possible.
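The supervised, context-aware part of this procedure can be sketched in a few lines. This is an illustration under strong assumptions: the donor H is replaced by a hypothetical hidden rule, the clone C is a per-context logistic model and not a deep network, and cross-entropy stands in for the unspecified mismatch measure. The generator is left out entirely.

```python
import math
import random


def donor_decision(x, y, context):
    """Hypothetical hidden rule of the human donor H (not from the paper)."""
    if context == "routine":
        return 1 if x + y > 1.0 else 0
    return 1 if x > 0.3 else 0  # "crisis" context uses a different boundary


class Clone:
    """Trainable clone C: one logistic model per context label."""

    def __init__(self, contexts):
        self.weights = {c: [0.0, 0.0, 0.0] for c in contexts}  # wx, wy, bias

    def prob_yes(self, x, y, context):
        wx, wy, b = self.weights[context]
        return 1.0 / (1.0 + math.exp(-(wx * x + wy * y + b)))

    def decide(self, x, y, context):
        return 1 if self.prob_yes(x, y, context) >= 0.5 else 0

    def update(self, x, y, context, donor_label, lr=0.5):
        """One gradient step on the cross-entropy mismatch between H and C."""
        p = min(max(self.prob_yes(x, y, context), 1e-12), 1.0 - 1e-12)
        mismatch = -math.log(p if donor_label == 1 else 1.0 - p)
        grad = p - donor_label  # derivative of the loss w.r.t. the logit
        w = self.weights[context]
        w[0] -= lr * grad * x
        w[1] -= lr * grad * y
        w[2] -= lr * grad
        return mismatch


def sample(rng):
    """Draw one input from a stand-in 'reality samples set' with a context."""
    return rng.random(), rng.random(), rng.choice(["routine", "crisis"])


rng = random.Random(0)
clone = Clone(["routine", "crisis"])
for _ in range(20000):
    x, y, ctx = sample(rng)
    # H and C decide independently; the mismatch is fed back to C only.
    clone.update(x, y, ctx, donor_decision(x, y, ctx))

test_cases = [sample(rng) for _ in range(2000)]
agreement = sum(
    clone.decide(x, y, c) == donor_decision(x, y, c) for x, y, c in test_cases
) / len(test_cases)
print(f"donor-clone agreement: {agreement:.3f}")
```

Keeping separate weights per context is the simplest way to let the same clone learn different decision boundaries for different context labels; a neural implementation would more likely feed the context label in as an additional input.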

4.5. Proactivity training with adversarial ML

A more challenging case occurs when the information system is trained in adversarial or conflicting interactions to copy human decision-making behaviour in complex emergencies. Observing the donor, the information system captures and configures the donor’s hidden critical decision-making model. We consider the concrete (but hidden) heuristics and biases people use to make personal judgements or choices in cases of ignorance or uncertainty (Tversky & Kahneman, 1974). Cognitive clones must capture personal specifics while learning from donors. This type of learning is based on adversarial ML (Bai et al., 2021; Kumar et al., 2020; Kurakin et al., 2016), in which situations with maximum ignorance and uncertainty are generated and addressed synchronously by humans and clones. Using this principle of (inexplicable) knowledge extraction, even in complex adversarial conditions, the information system copies an individual model of the donor’s behaviour without understanding and explaining its parameters.

The GAN philosophy requires that the training process be executed in adversarial conditions, assuming that a challenging training environment facilitates attaining the intended learning outcomes. Therefore, the “generator” (G) adds an adversary component to the architecture. G constantly challenges the TD (H + C team) by aiming to generate input samples similar to those from the reality samples set to confuse the TD. The goal of G is to maximise the mismatch or difference between the H and C reactions. The H + C team is expected to synchronously address the inputs (classify) and uncover fake inputs. G is also a trainable and neural network-driven component. If G’s content cannot confuse the TD, then G receives feedback (as the loss function value), and the parameters of G will be updated accordingly. With time, G improves its adversarial performance. This “game” (TD vs. G) drives the process of the coevolution of TD and G towards perfection in their competing objectives. Improvement of the TD implies that C learns how to make the same decisions as H (possibly even incorrect ones) in the same situation and the same context and while under pressure (if there is any). This training ensures the Turing principle of minimal donor-clone deviation for business-as-usual and critical decisions.
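The game described above can be summarised, as a rough sketch and not as the authors' stated objective, by a minimax expression. All symbols are assumptions introduced here for illustration: $H(x, c)$ is the donor's decision for input $x$ in context $c$, $C_\theta(x, c)$ is the clone's predicted decision distribution with parameters $\theta$, $G_\phi(z, c)$ is the generator's output for noise $z$ with parameters $\phi$, and $\ell$ is a mismatch loss such as cross-entropy. The fake-detection output of the TD and the samples drawn from the reality set are omitted for brevity.

$$
\min_{\theta} \; \max_{\phi} \; \mathbb{E}_{z, c} \Big[ \ell\big( H(G_\phi(z, c), c),\; C_\theta(G_\phi(z, c), c) \big) \Big]
$$

Under this reading, G searches for inputs on which the clone and the donor disagree, and C is updated to close exactly those gaps.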

The quality of the resulting artefacts regarding their fit with the declared DPs could be assessed according to the metrics used in ML, as shown in Table 2. However, one crucial advantage of ML is the possibility of applying quality assurance along with quality assessment. Design quality assurance requires correspondence between the artefact itself and the declared principles. It additionally requires that the design process itself yield an artefact that conforms to the declared principles, with any noticed deviation of the process from the intended one being self-corrected according to feedback from real-time process monitoring.

More specifically, the feedback provided by neural networks during the training process is a value of the so-called loss function. The process of self-correction based on feedback is called backpropagation (Amari, 1993). Neural network training environments and algorithms ensure self-design and self-evaluation with these instruments. It is important to note that the TD provides two feedback loops: one to the clone being trained and one to G, which plays the role of a challenger in the training process (see Figure 3). Regarding the first feedback loop, the TD in the architecture plays the role of a digital measuring device to monitor the fitness of the current design iteration to DP0 (minimal donor-clone deviation). At each iteration of the cloning process, the TD outputs the value of a loss function (quality assurance measure regarding DP0), which is a precise assessment of the deviation and is used as feedback for both TD and G via backpropagation. The cloning (particularly clone training) process stops when the loss function tends to zero, meaning that DP0 is satisfied.

Regarding the second feedback loop, the TD in the architecture plays the role of a digital measuring device to check the fitness of the current design iteration to the DP2 design principle (resiliency, or the capability of a clone to adapt or recover under the pressure of complex, challenging situations or adversarial attacks). The organisation of the corresponding GAN architecture guarantees that G improves its performance in generating challenging (adversarial) inputs to the TD at each iteration of the cloning process. Therefore, the feedback (loss function value) that G receives actually evaluates the quality of the challenge for the TD, and, because the clone learns synchronously to address the challenge, this feedback can be used to ensure the quality of DP2. This is because the TD is learning to adapt to potentially the most challenging inputs and, by doing this, performs as a resilient decision-maker.

Finally, the donor (the human being cloned) in the architecture also receives some feedback (a kind of reward or punishment) from the environment as a response to the decisions they have made, and this feedback reinforces the human’s ability to change (adapt or upgrade) the hidden model that drives their choices. This evolution indicates how seriously the person takes personal responsibility for their own decisions. Personal responsibility is challenging to measure directly from a human perspective. However, if we undertake lifelong retraining of the clone (Crowder et al., 2020) according to the architecture shown in Figure 3, the clone will coevolve with its donor and perform with the same level of responsibility. Therefore, the suggested GAN architecture will also guarantee the fitness of the design process to the principle of DP1 (responsibility). In addition, the clone (as a trained neural network) can be easily copied and used as an autonomic decision-maker within several processes where the same decision task is required. Consequently, the average number of synchronously running clones from the same donor could be used to measure ubiquity (DP3).
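One possible way to compute the ubiquity measure mentioned above is a time-weighted average of how many copies of the clone are active at once. The sketch below assumes that each running clone is logged as a hypothetical `(start, end)` interval in arbitrary time units; neither the log format nor the function name comes from the study.

```python
def average_concurrency(intervals, window_start, window_end):
    """Time-weighted average number of clones running within the window."""
    if window_end <= window_start:
        raise ValueError("window_end must be later than window_start")
    total_active_time = 0.0
    for start, end in intervals:
        # Only the part of each run that falls inside the window counts.
        overlap = min(end, window_end) - max(start, window_start)
        if overlap > 0:
            total_active_time += overlap
    return total_active_time / (window_end - window_start)


# Hypothetical log: three clone instances of one donor over an 8-hour window.
runs = [(0, 4), (2, 6), (5, 8)]
ubiquity = average_concurrency(runs, window_start=0, window_end=8)
print(ubiquity)  # 1.375
```

The three runs contribute 4, 4, and 3 hours of activity, so on average 11 / 8 = 1.375 clones were active at any moment of the window.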

4.6. An example of cognitive cloning

Let us assume that we want to train the clone to make the same decisions as the donor when facing the same decision problems within the same decision context in the future. ) illustrates a simple explicit donor–clone knowledge transfer case. Here, we have a two-dimensional decision space, meaning that each decision task is defined by two parameters (x, y). We also have two different decision options (“YES” or “NO”).

Figure 4. Challenges regarding cognitive cloning: a) explicit (donor to clone) knowledge transfer (e.g., as a set of decision rules); b) machine learning-driven training of the clone (donor labels the particular decision contexts, and the clone learns the boundaries between different decision options by discovering the hidden decision rules of the donor); c) adversarial learning (driven by GANs) helps facilitate the training process in b) by discovering the corner cases for challenging decisions, hence making the clone’s decision boundaries and rules closer to the donor’s; d) discovering and making explicit the contexts that influence the donor’s decision boundaries and rules and training the clone specifically for all such contexts; e) integrating both explicit and learned decision knowledge into different decision tasks and decision contexts under the umbrella of personal decision ontology, which will be used by the Pi-Mind agent when acting as a clone of a particular human donor.

In ), the donor is supposed to know the rules for addressing particular types of decision cases. Each rule is a kind of formal definition of the bounded subspaces within the decision space, corresponding to each decision option (the area of “YES” decisions and the area of “NO” decisions in the figure). Given the parameters (coordinates) of the decision task (a point within the multidimensional decision space), the donor applies the rules to locate the point within one of the decision subspaces (decision options) to make the corresponding decision. Even for popular decision problems, each donor may have specifics in their decision boundaries, meaning that different donors may make different decisions in some cases. Therefore, knowing the explicit definitions of the decision boundaries (decision rules) allows the donor to design the clone top-down via explicit personal decision skill transfer.
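A minimal sketch of such top-down, explicit knowledge transfer is given below. It assumes, purely for illustration, that each rule is an axis-aligned rectangle in the two-dimensional decision space; the `Rule` class, the `decide` function, and the sample rule are hypothetical and are not taken from the Pi-Mind implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A bounded subspace of the (x, y) decision space mapped to one option."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    option: str

    def covers(self, x, y):
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def decide(rules, x, y, default="NO"):
    """Locate the decision task (x, y) in a rule's subspace and return its option."""
    for rule in rules:
        if rule.covers(x, y):
            return rule.option
    return default


# Hypothetical explicit rule handed over by the donor.
DONOR_RULES = [Rule(0.0, 5.0, 0.0, 5.0, "YES")]

print(decide(DONOR_RULES, 2.0, 3.0))
print(decide(DONOR_RULES, 7.0, 1.0))
```

Two donors facing the same decision problem would simply hand over different rule lists, which is how personal specifics in the decision boundaries would show up in this sketch.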

Humans may not always know exactly how and why they make certain decisions. To capture hidden decision skills, one needs to “interview” the target donor on many decision cases and collect the chosen options for each case. The collected samples can then be used as training data for various computational intelligence techniques, drawing the decision boundaries and capturing the rules for designing the clone in a bottom-up (ML-driven) way. This option is illustrated in ). Here, based on several cases of “YES” and “NO” decisions, some ML algorithms draw the decision boundary (the separation curve between the “YES” and “NO” decision subspaces). After this, new decision-making cases can be addressed accordingly. This method of cloning entails a kind of supervised learning, wherein the donor labels the set of decision cases with the chosen decision option, and the clone learns based on this set (by guessing the hidden decision boundary that the donor uses when making decisions).
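The bottom-up option can be sketched as follows. The example uses a 1-nearest-neighbour classifier only because it is short and dependency-free; it is a stand-in for whichever ML algorithm is actually used, and the interview data and the names `train_clone` and `INTERVIEW` are invented for illustration.

```python
import math


def train_clone(labelled_cases):
    """Build a clone from donor-labelled cases using 1-nearest-neighbour lookup."""
    cases = list(labelled_cases)

    def clone(x, y):
        # Answer with the option the donor chose for the most similar known case.
        nearest = min(cases, key=lambda case: math.dist((x, y), case[0]))
        return nearest[1]

    return clone


# Hypothetical "interview": decision tasks (x, y) labelled by the donor.
INTERVIEW = [
    ((1.0, 1.0), "YES"),
    ((2.0, 1.5), "YES"),
    ((6.0, 6.0), "NO"),
    ((7.0, 5.0), "NO"),
]

clone = train_clone(INTERVIEW)
print(clone(1.5, 1.0))
print(clone(6.5, 6.5))
```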

Such supervised ML depends heavily on the training data. Let us assume that the actual (but hidden) decision boundary for a particular donor is the line shown in ). However, some ML algorithms (e.g., neural network backpropagation learning) draw the curve as the decision boundary based on the labelled data, as shown in ). The donor will continue to make further decisions with different boundaries from the clone. As shown in ), the difference between actual and guessed decision boundaries creates some divergence areas within the decision space; if they happen to belong to these areas, all the decision tasks will be addressed differently by the donor and clone. To minimise discrepancies between the donor’s and clone’s opinions, we have to provide the clone with better training data. We used GANs for this purpose because they are capable of discovering (within the real-time training process) divergence areas and generating new (corner) cases (to be labelled by the donor) from these areas. This type of (adversarial) training, as illustrated in ), facilitates the learning of precise decision boundaries and makes the clone capable of making almost the same decisions as the donor would.
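The idea of divergence areas and corner cases can be illustrated with a deliberately simplified sketch. It does not implement a GAN: an exhaustive search over a small grid stands in for the generator, a 1-nearest-neighbour model stands in for the clone, and the donor's hidden boundary (the line x + y = 10) is an assumption made for this example.

```python
import math


def donor(x, y):
    """Hypothetical hidden rule of the donor: YES below the line x + y = 10."""
    return "YES" if x + y < 10 else "NO"


def make_clone(cases):
    """1-nearest-neighbour clone over donor-labelled cases (stand-in model)."""
    def clone(x, y):
        return min(cases, key=lambda case: math.dist((x, y), case[0]))[1]
    return clone


# Small grid of decision tasks used to probe where donor and clone disagree.
GRID = [(float(x), float(y)) for x in range(11) for y in range(11)]


def divergence(clone):
    """Corner cases: grid points that donor and clone decide differently."""
    return [p for p in GRID if clone(*p) != donor(*p)]


# Sparse initial training data labelled by the donor.
cases = [((2.0, 2.0), donor(2.0, 2.0)), ((9.0, 9.0), donor(9.0, 9.0))]
clone = make_clone(cases)
corner_cases = divergence(clone)
initial_divergence = len(corner_cases)

rounds = 0
while corner_cases:
    # The donor labels the discovered corner cases; the clone is retrained.
    cases.extend((p, donor(*p)) for p in corner_cases)
    clone = make_clone(cases)
    corner_cases = divergence(clone)
    rounds += 1

final_divergence = len(corner_cases)
print(initial_divergence > 0, final_divergence, rounds)
```

In this toy setting the loop always terminates with no disagreement on the grid, because every labelled grid point is afterwards reproduced exactly by the clone. A GAN-based generator would aim to find such corner cases without exhaustive search.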

Humans often make decisions differently in different contexts. Consequently, if the clone learns the donor’s decision logic (appropriate decision boundaries and corresponding decision rules) in one context, this will not mean that the same logic would work in another context for the same set of decision tasks. ) illustrates the context-dependent decision boundaries and the appropriate (meta-) rules. When a particular decision context is known, the particular decision boundary (and hence the corresponding decision rules) becomes valid (according to explicitly defined meta-rules) and will be used for further decisions. In the most complicated cases, the cloning challenge would mean that both the hidden decision rules and hidden context meta-rules of the donor must be learned bottom-up using adversarial, context-aware, GAN-driven techniques, as we described earlier and illustrated in .
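Context-dependent boundaries governed by explicit meta-rules can be sketched as a lookup from context to decision rule. The context names, thresholds, and function names below are hypothetical and chosen only to show the mechanism.

```python
def boundary_business_as_usual(x, y):
    """Hypothetical decision boundary valid in the ordinary context."""
    return "YES" if x + y < 10 else "NO"


def boundary_crisis(x, y):
    """Hypothetical, stricter boundary valid in a crisis context."""
    return "YES" if x + y < 6 else "NO"


# Meta-rules: which decision boundary becomes valid in which context.
META_RULES = {
    "business_as_usual": boundary_business_as_usual,
    "crisis": boundary_crisis,
}


def decide(context, x, y):
    """Select the boundary for the known context, then decide the task (x, y)."""
    try:
        rule = META_RULES[context]
    except KeyError:
        raise ValueError(f"no meta-rule defined for context {context!r}")
    return rule(x, y)


# The same decision task is addressed differently in different contexts.
print(decide("business_as_usual", 4, 4))
print(decide("crisis", 4, 4))
```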

All these decision-making skills, which the intended clone either gets explicitly from the donor or learns by observing the donor’s decisions for different decision problems and contexts, must be integrated as an interconnected set of capabilities controlled by the Pi-Mind agent. This content (the taxonomies and semantic graphs of acquired or learned decision problems, parameters, options, contexts, boundaries, rules, and meta-rules that characterise the decision-making specifics of the donor) is constructed automatically under the umbrella of the personal decision ontology, as shown in ). Semantic (machine-processable) representation on the top of decision models allows for automated processing (by the Pi-Mind agent-driven clone), seamless integration of available decision-making knowledge and skills, openness to lifelong learning of new knowledge and skills (via continuous observation of the donor), and communication and coordination between intelligent agents.

5. Demonstration and evaluation of the Pi-Mind agent

5.1. The specifics of the Pi-Mind demonstration and evaluation

Appropriate conditions must be met to use a Pi-Mind agent (a virtual ecosystem with support for all Pi-Mind options). Therefore, launching a fully functional version without preliminary testing of the individual components and tools is too complicated, expensive, and risky. We approached the solution to this problem as follows: 1) through diversification with a pilot launch of the Pi-Mind technology in different procedures (we provide examples called the NATO case, NURE case, and HE case); 2) by leveraging the ecosystems of our previous projects; and 3) by using the minimum viable product (MVP) (Nguyen-Duc, Citation2020) approach, not only for development but also to demonstrate the value of the agents (different Pi-Mind options were selected as MVPs). We also used the opportunity to “play” in different contexts:

Complex contexts (e.g., in security systems) are of special interest because of their difficult and ambiguous tasks, but they also carry significant risks and require enormous resources. We used field testing at a local scale to achieve validation through repeatability (using the test-retest reliability as an indicator of the same-tests – same-results similarity).

Contexts with simple tasks without excessive risks are also interesting because they make it possible to validate the scalability of a concept.

The NATO case is in the security systems domain (a real laboratory with a real system, real users, and simulated problems). Complex implicit knowledge transfer based on continuous adversarial learning cannot be checked in a social environment. The requirement of large, well-formed, validated datasets for comprehensive training and badly formalised decision processes forced us to turn to the ongoing project funded by NATO Science for Peace and Security (NATO SPS; http://recode.bg/natog5511; Terziyan et al., Citation2018a). We aimed to prove that Pi-Mind agents could be trained to enhance civil and military security infrastructures and to take over control of certain operations on behalf of security officers. The agents “observed” the interroll cassette conveyor, which is an analogue of those used in airports for distributing and inspecting luggage. Their task was to prevent any potential danger caused by cassette loads on the conveyor by applying expert donors’ judgement and expertise. Two types of decision processing contexts based on image recognition were tested: in the business-as-usual environment and in adversarial conditions (DP2), which would cause disruptions to the critical infrastructure. A detailed description of the previous and current experiments is available in Golovianko et al.’s (Citation2021) study.

Although the human decision-makers showed better performance in threat recognition, the experiments’ results are promising because of the high accuracy of the artificial predictions and (even better) high human-clone correlation regarding the decisions. Experiments with different adversarial conditions showed that artificial and human workers tended to misclassify threatening objects in adversarial settings. However, the agents can be trained to develop a new capability – an artificial cognitive immunity – which can help improve the accuracy of human donors’ evaluations by giving artificial advice.

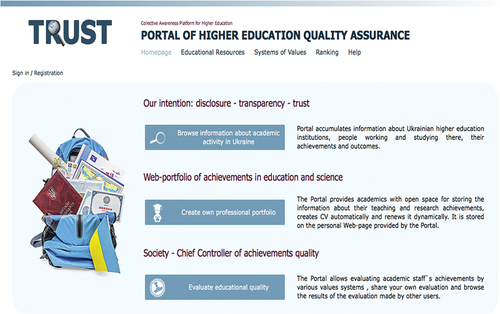

The NURE case is used in the real business processes of organisations, with real users, problems, and information systems. The education domain provides an opportunity to demonstrate the Pi-Mind agent in a social environment with many users in simple contexts. Some of the quality assurance (QA) processes at Ukrainian universities were executed through the TRUST Portal (http://portal.dovira.eu), an academic social media and process management platform (see, ) with ontology-based information storage and inference mechanisms on top (Terziyan et al., Citation2015).

Figure 5. TRUST Portal, http://portal.dovira.eu.

To introduce Pi-Mind agents into the portal, we transferred explicit knowledge from the donors to the Pi-Mind agents to implement a basic decision-making procedure when choices were made based on a comparative numeric evaluation (ranking) of the registered or available options. The core of this procedure is a personal system of values (PSV) consisting of parameters for the evaluation function in personalised decision-making. Combined with agent technologies and implemented in the semantic environment, a PSV can become a proactive entity capable of accessing digitised information, operating automatically as a personal clone, and participating in collective decision-making.
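The ranking procedure described above can be sketched as a weighted evaluation function. A linear weighted sum is assumed here for simplicity; the parameter names, weights, and candidate data are invented, and the actual form of the PSV evaluation function on the portal may differ.

```python
def rank_options(psv, options):
    """Rank options by a weighted sum of their attributes, best first.

    psv: personal system of values as {attribute: weight} (hypothetical form).
    options: {option_name: {attribute: value}}.
    """
    def score(attributes):
        return sum(psv.get(name, 0.0) * value for name, value in attributes.items())

    return sorted(options, key=lambda name: score(options[name]), reverse=True)


# Hypothetical PSV of one donor and three registered options.
PSV = {"publications": 0.5, "teaching": 0.3, "projects": 0.2}
OPTIONS = {
    "A": {"publications": 4, "teaching": 2, "projects": 1},
    "B": {"publications": 1, "teaching": 5, "projects": 2},
    "C": {"publications": 3, "teaching": 3, "projects": 3},
}

ranking = rank_options(PSV, OPTIONS)
print(ranking)
```

Because the weights are explicit, the resulting ranking can be inspected and reproduced, which is the property the text relies on when it describes such decisions as transparent.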

Over the past few years, the Pi-Mind agent has been used as a decision-making tool on the portal. As the most active user, Kharkiv National University of Radio Electronics (NURE) – the leading Ukrainian IT university – was the first to implement this technology in its business processes (see, ).

Table 3. Evaluation of the Pi-Mind agent at NURE.

Next, we present the evaluation of the Pi-Mind agent, showing that the use of the agent meets the following goals: 1) effectiveness, given its improved decision accuracy (proof of concept), and 2) efficiency, given the decreased time for decision-making (proof of value) and the digital transformations in decision-making in HE (proof of use).

5.2. Proof of concept: increasing the accuracy of decisions

In the “employee motivation” example in the NURE case (administrative decision-making, see, ), to distribute bonuses among academic staff, the rector – an authorised decision-maker – annually analyses the achievements of each employee for a certain period. Based on this analysis, the rector either increases the employee’s monetary bonus (for good results regarding certain objectives) or reduces the bonus (for decreased efficiency). The application of Pi-Mind agents significantly changed the parameters of this procedure (see, ).

Table 4. Results of the decisions regarding the distribution of bonuses among academic staff before using the Pi-Mind agents.

Table 5. Results of decisions for the distribution of bonuses among academic staff after using Pi-Mind agents.

The co-focusing of the bonus objectives (multidimensional vector) and actual employee development vector determines the utility of the decision. The effectiveness of the decision (or the accuracy) is assessed following the assessment practices used in a binary classification (Tharwat, Citation2021), and it is evaluated based on a confusion matrix and measured through the F1-score, a metric for model performance that combines precision and recall (see, ).
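For reference, the F1-score is the harmonic mean of precision and recall, both of which are read off the confusion matrix. The sketch below uses made-up counts, not the values reported in the paper's tables.

```python
def f1_score(tp, fp, fn):
    """F1 from confusion-matrix counts (true positives, false positives, false negatives)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts for bonus decisions judged against the bonus objectives.
score = f1_score(tp=40, fp=10, fn=10)
print(round(score, 3))
```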

Table 6. Assessment of the decisions for the distribution of bonuses among academic staff.

Thus, using the Pi-Mind agent also increased resilience in the rector’s workplace: accidental and deliberate errors arising from information overload, lack of time, or the malicious influence of outside forces on the decision-making process were excluded. Neither advocates nor opponents of the new procedure supported by the Pi-Mind agent could reproach the rector with the claim of biased or non-transparent distribution of the bonuses.

In the other example in the NURE case, “extreme resource reconfiguration” (see, ), the announcement of the COVID-19 quarantine required the university to make an urgent transition to crisis management mode and reconfigure resources for the on/off-line processes accordingly. Although the university rector was not available at that moment due to a business trip, the university administration quickly managed to get the rector’s opinion on the reallocation of resources from his digital clone (proactive PSV). Although this clone was created for other business processes, it reflected his administrative preferences. With the help of the Pi-Mind agent, the university managed to restructure and adapt its processes completely to a remote mode in all the activity areas and was able to do so within three working days. This case demonstrates that the ubiquitous Pi-Mind agent can involve HR, even when the employee is temporarily unavailable. This reduces the risk of interruptions in the workflow (especially in critical circumstances). The Pi-Mind agent protects employees from losing their jobs in the case of temporary unavailability.

The accuracy of the decisions supported by the Pi-Mind agent during the COVID-19 crisis was assessed by comparing the regulatory documents adopted (cancelled or changed) during the transition process (). Between March 12 and 16, 2020 (three working days), 20 orders were approved, and an additional 31 official instructions were released regarding the transfer of the university processes to a remote work mode. One order was later cancelled, and two orders were changed. Supplements were issued regarding one order and one instruction. Seven drafts remained unapproved.

Table 7. Assessment of the decisions for the redistribution of resources without the direct involvement of the decision-maker.

5.3. Proof of value: decreasing the time needed for decision-making

Our third example in the NURE case (“recruitment”; see, ) shows that using a Pi-Mind agent improves the system’s efficiency with the organisation’s resources. The decisive (voting) stage of the “job candidates’ selection” procedure includes a meeting of the hiring selection committee, wherein members of the academic council are familiarised with summaries of the processed documents for each candidate. A private vote then takes place at the academic council meeting. A Pi-Mind agent launched at this decision-making point duplicates the HR (i.e., the voter is only a professional expert) and is not subject to being influenced by the situation (like a vulnerable human voter). Such a decision becomes explainable and reviewable, and, accordingly, responsible. Another benefit is that such decision-making requires significantly less time. The former procedure required at least 10 minutes to review each candidate. Involvement of the Pi-Mind agents minimises the time spent searching for and becoming acquainted with the documents, allowing for the creation of individual ranking lists for candidates without a separate meeting. As a result, the time for approving each candidate was reduced to one minute. Nine minutes saved per transaction resulted in tangible savings throughout the university ().
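The arithmetic behind such workload savings can be sketched as follows. Only the 10-minute and 1-minute review times come from the text; the numbers of candidates and voters, and the function name `hours_saved`, are placeholders rather than the university's actual figures.

```python
def hours_saved(candidates, voters, minutes_before=10, minutes_after=1):
    """Yearly human workload saved, in hours, when each review gets shorter."""
    saved_minutes = (minutes_before - minutes_after) * candidates * voters
    return saved_minutes / 60


# Hypothetical yearly volume: 100 candidates, each reviewed by 30 council members.
print(hours_saved(candidates=100, voters=30))
```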

Table 8. Savings in human workload per year for the whole university.

5.4. Proof of use: The digitally accelerated transformation of Ukrainian HE decision-making and society at large

Proof of use of the Pi-Mind agent was performed in numerous case studies to justify the current artefact’s applicability in various domains, as promoted by Nunamaker et al. (Citation2015), and to identify the side effects or undesirable consequences of its use (Venable et al., Citation2012). The transformation from specific, one-time decisions to more complex, fuzzy decision models allowed us to reveal the real scope of the artefact’s implicit and explicit capabilities and, therefore, its potential to influence change management processes and collective decision-making.

The HE case impacts the national level with real users, problems, and information systems. The Pi-Mind agent was implemented as part of the digital infrastructure, contributing to accelerating national reforms and managing change. Social change happens as a set of transitional processes that qualitatively shape different systems and communities. Even developed countries often experience difficulties when reforming because of the complex, nonlinear, dynamic, and difficult-to-predict nature of the underlying transition processes. In post-Soviet developing countries, such as Ukraine, unfavourable “starting positions” make this challenge even greater. Our goal was to use digital cloning (both decision-makers and processes) to enhance the efficiency of transitional processes, decrease the level of corruption, and increase societal trust in the process participants. We studied the effect of the Pi-Mind agent on the educational domain in Ukraine in several phases.

First, the web-based platform TRUST (www.portal.dovira.eu) was launched in four universities (NURE, Yuriy Fedkovych Chernivtsi National University, Ukrainian Catholic University, and the National Academy of Managing Personnel of Culture and Art) with the support of the Ministry of Education and Science of Ukraine. A meta-procedure for QA procedures was developed and tested on five procedures at each of these universities: management of academic recruitment, academic staff assessment, and motivation; innovation management at the level of HE institutions; student feedback management; internationalisation; academic networking management; and management of academic staff’s lifelong learning. All the developed procedures were properly tested and documented (Semenets et al., Citation2021). The first phase of implementing the Pi-Mind agent showed that even a reasonably constructed QA system required the support and commitment of its players. This conclusion was confirmed by the Organization for Economic Cooperation and Development (Melchor, Citation2008). The transparent and systematic use of the Pi-Mind agent has become a support for the agents of change in universities, addressing the lack of academic consolidation, awareness, and acceptance of the reforms.

The second phase of evaluating the proof of use was shaped by deploying an entire ecosystem for accelerated cognitive development. This allowed cloning processes that could simulate new scenarios to predict the system’s behaviour, processes, and individual players within the new “rules of the game”, and then publish and compare the results. The Pi-Mind agent was first officially implemented at the national level during the election of private HE institutions’ representatives to the first national independent QA agency in Ukraine in 2015. The congress of private HE institutions used the tools and services of TRUST as digital support for the election process. The Pi-Mind agent (as a carrier of the collective vision) evaluated the candidates’ applications, choosing the most qualified members and publishing the final scores so that everybody could verify them and check the validity of the achievements. An attempt by the Ukrainian Congress to ignore the results and push previously chosen candidates behind closed doors was described in detail by the independent analytical platform VoxUkraine. The report on its analysis of the election results was later published with the title “Reform of HE: One Step Ahead and Two Steps Back”. The article illustrated (step by step with screenshots) that the best candidates (the Pi-Mind agent’s decisions) and the nominated candidates (decisions of the officials) were different. The Ministry of Education and Science (responsible for the elections), under pressure from the solid and transparent facts provided by the Pi-Mind agent, subsequently annulled these nominations. For more than seven years, the Pi-Mind agent has continued to be used in various processes, helping to adapt people’s mindsets, culture, attitudes, and practices to new environments.

The results of the functioning of the ecosystem (the TRUST Portal) with the support of Pi-Mind technology are as follows:

The ontological knowledge base stores more than 400,000 resources registered by users, and 23 million knowledge triples. Knowledge (semantic) triples (subject-predicate-object statement) are elementary units that define one connection between two entities (often called “resources”) within a shared ontological knowledge graph (often called a “semantic graph”).

More than 5,000 individual and corporate users (with advanced usage powers) were registered on the portal.

More than 8,000 academic achievements were registered on the portal.

More than 500 Pi-Mind agents, with their value systems, were created on the portal.

More than 1,700 procedures were launched by Pi-Mind agents and stored on the portal.
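The knowledge triples mentioned in the list above can be illustrated with a minimal in-memory sketch. The `ex:` identifiers and the `match` helper are invented for this example and do not reflect the portal's actual ontology or storage technology.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One subject-predicate-object statement connecting two resources."""
    subject: str
    predicate: str
    obj: str


# Hypothetical fragment of a semantic graph.
GRAPH = [
    Triple("ex:Donor1", "ex:hasClone", "ex:PiMindAgent1"),
    Triple("ex:PiMindAgent1", "ex:appliesValueSystem", "ex:PSV1"),
    Triple("ex:PiMindAgent1", "ex:launchedProcedure", "ex:Recruitment2020"),
]


def match(graph, subject=None, predicate=None, obj=None):
    """Return all triples that agree with the given pattern (None is a wildcard)."""
    return [
        t for t in graph
        if (subject is None or t.subject == subject)
        and (predicate is None or t.predicate == predicate)
        and (obj is None or t.obj == obj)
    ]


# Everything stated about one agent, then the clone of one donor.
print(match(GRAPH, subject="ex:PiMindAgent1"))
print(match(GRAPH, subject="ex:Donor1", predicate="ex:hasClone")[0].obj)
```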

The artefact was rigorously evaluated for its effectiveness and efficiency in business-as-usual and crisis management processes for the design principles (DP0–3) used for its design ().

Table 9. Evaluation of the effectiveness and efficiency of the Pi-Mind agent and the design principles DP0–DP3.

The performed test runs convinced us that not only personal decision expertise but also entire processes can be cloned. In cloning processes, by placing different Pi-Mind agents at the appropriate decision points, the organisation can ensure the continuity and quality of the processes based on collective intelligence and dynamic assessments. These findings led us to work on twinning and simulating transitional processes to test various expert approaches, which are extremely important in situations with limited human and time resources. More specifically, the Pi-Mind agents can be considered key providers of the processes’ sustainability in the context of hybrid threats in the environment created in the “Academic Response to Hybrid Threats” (WARN) Project (https://warn-erasmus.eu). We are currently simulating complex situations in which a cognitive clone (or an “artificial clone + human donor” team) learns to make decisions in real processes when challenged by complex, non-standard adversarial conditions.

6. Discussion

6.1. Use prospects for the artefact

Our study makes a novel artifactual contribution to the research (Ågerfalk & Karlsson, Citation2020) on improving organisational resilience in points of decision-making supported by information systems (Duchek, Citation2020; Heeks & Ospina, Citation2019; Hillmann & Guenther, Citation2021; Linnenluecke, Citation2017; Riolli & Savicki, Citation2003). Global crises provoked by “unknown unknowns” have made this task especially important because situations such as the COVID-19 pandemic have revealed the considerable vulnerability of decision-makers in critical situations. The search for appropriate solutions led to AI technologies, enabling a smooth transformation from human-driven to automated decision-making.

The IT artefact (Pi-Mind agent) is an AI solution designed and implemented to create digital clones of professional experts. With its basic concepts (cognitive clone and cloning environment), models, methods, techniques, interfaces, and tools, the agent will ensure responsible, resilient, and ubiquitous decision-making in business-as-usual and crisis management conditions.

The applicability and utility of the IT artefact were demonstrated and evaluated via several successful implementations and deployments. First, we presented the design and implementation of the top-down facet of the Pi-Mind agent within organisations in the university environment. The administrative and participative decision-making processes were reformed based on this implementation, but the critical decision-making processes also changed completely. The implementation assessments confirmed an increase in the accuracy of HR decisions and savings, with an increase in personnel’s general interest in the new technology. Second, the bottom-up facet of the Pi-Mind agent was implemented based on new GAN-oriented architectures. Third, the implementation of the Pi-Mind ecosystem in Ukrainian HE institutions has led to several societal and legislative changes:

Several initiatives for improving regulatory and legislative acts were successfully adopted at the level of the Cabinet of Ministers (Footnote 1), the Parliament Committee of Education and Science (Footnote 2), and the Ministry of Education and Science (Footnote 3).

Members of the project team participated in activities for the development of the new law on HE (September 2014).

Expert recommendations for the draft of the concept of reforms in the system of accreditation and licensing of HE institutions and a roadmap for establishing the National Independent QA Agency were presented (Footnote 4).

Therefore, we claim that our “last-mile DSR” has directly impacted Ukrainian society and how decisions are made in Ukrainian HE institutions. Consequently, our study has implications for practice and provides an example of how proof of use can be evaluated in a DSR project.

Furthermore, the developed DPs make a novel contribution to the development of both responsible AI (Arrieta et al., Citation2020; Gupta et al., Citation2021) and ethical AI (see, e.g., the Montreal Declaration, 2017: montrealdeclaration-responsibleai.com/the-declaration; the Asilomar AI principles: futureoflife.org/ai-principles; Berente et al., Citation2021; Floridi & Cowls, Citation2019; Henz, Citation2021). Regarding the “responsibility” concept, we consider three dimensions: “taking over a responsibility”, “being responsible”, and “deciding responsibly”. “Being responsible” involves the ethical dilemma of who will be responsible for incorrect decisions made by the clone (Royakkers et al., Citation2018). The DPs create the technical prerequisites for transparent distribution of responsibilities between the donor, developer, and user and enable a good balance between AI autonomy and high control of the donor or of those the Pi-Mind collaborates with. Putting humans at the centre of systems design thinking, the Pi-Mind agent validates Shneiderman’s human-centred AI framework (Shneiderman, Citation2020).

Our study also promotes the concept of “deciding responsibly” as a computational aspect of measurable responsibility for mistakes. We capture and measure personal bias and consider it an indication and a measure of responsibility as the extent to which the decision-maker is concerned regarding the potential consequences of their decisions. The study also contributes to solving several ethical issues in the workplace: 1) the Pi-Mind agent provides a guarantee of the virtual presence of a needed specialist without overloading them; 2) the opportunity to participate simultaneously in several critical processes increases an individual’s confidence regarding employment; 3) in cases where an employee has exceptionally unique, strong, and potentially reusable expertise, they can “patent” the clone and sell copies elsewhere; therefore, as a technology acronym, “Pi” (or “π” as another option) refers to “patented intelligence”.

6.2. Further development of the artefact

The IT artefact (Pi-Mind agent) is a testing ground for extensive research on “human-like” decision-making. For example, donors have different attitudes regarding how they behave in new situations, either choosing a rational (statistically more rewarding) option or bravely trying something new out of curiosity. Clones must also capture these attitudes during training. In Terziyan and Nikulin’s (Citation2021) study, the “gray zones” within the data (collected as experiences for future training) were defined as the voids within the decision space. Such zones indicate the boundaries for potential situations for which no decisions have been made. The grey zones can be handled and used for “curiosity-driven learning” when training and testing samples are intentionally generated deep inside the grey zones. This would force the intelligent algorithm (e.g., a potential cognitive clone) to learn faster about how to decide in cases of uncertainty and ignorance, such as the current COVID-19 crisis.

Our study also contributes to the literature on human-centric AI and the change from computational thinking (Wing, Citation2006) to AI thinking (Zeng, Citation2013), as well as to human-centric AI thinking (How et al., Citation2020), human-centric AI (Bryson & Theodorou, Citation2019), and trustworthy AI applications (García-Magariño et al., Citation2019; Kaur et al., Citation2020). More specifically, our study addresses how AI helps respond to emergencies and how human-aware AI (Kambhampati, Citation2019) should be designed to address these challenges by modelling the mental states of a human in the loop, recognising their desires and intentions, proactively addressing them, behaving safely and clearly by giving detailed explanations on demand, and so forth. We argue that this development will result in a form of collaborative AI, which starts with two former extremes: AI-agent-driven swarm intelligence (Schranz et al., Citation2020), which is based on nature-inspired collective behaviour models for self-organised multi-agent systems, and human-team-driven collective intelligence (Suran et al., Citation2020), which focuses on the search for a compromise among individual (human) decision-makers. Collaborative AI is a compromise of both, thus including human + AI-agent-driven decision-making (Paschen et al., Citation2020). This benefits from AI but keeps humans in the loop, as in the case of “human swarming” (Rosenberg, Citation2016), which combines the benefits of an efficient computational infrastructure with the unique values that humans bring to the decision process.

The foreseen development of collaborative AI has implications for many areas of society. One such example is the manufacturing industry’s digital transformation towards smart factories (“Industry 4.0”) and the use of cyber-physical systems to cybernize manufacturing processes (Tuunanen et al., Citation2019). Given the increasing role of AI in the digital transformation of industrial processes, one can assume that the supervisory role of humans in future industries will decrease (Rajnai & Kocsis, Citation2017). However, one way to keep humans in the loop would be to create cognitive clones of humans, as suggested here. The use of cognitive clones of real workers, operators, and decision-makers will preserve the human-centric nature of cybernized manufacturing processes, enabling highly personalised product manufacturing (Lu et al., Citation2020).

A more everyday area of society related to the current study is the use of self-driving vehicles, which has been seriously affected by various ethical dilemmas and responsibility distribution issues (Bennett et al., 2020; Lobschat et al., 2021; Myers, 2021). However, if the digital driver (as a clone of the customer) makes choices (among the legal ones) that are ethically similar to those the customer would make in similar critical situations (given enough time to think properly), then customer-to-vehicle trust would be much higher. Another important case for cognitive clones as “digital customers” is improving the (digital) customer experience by involving customers in the design and manufacturing processes via their digital clones. Such cyber-physical systems would make it possible to interconnect the processes related to a cybernized customer experience (Rekettye & Rekettye, 2020; Tuunanen et al., 2019) and supply chain innovation (Hahn, 2020).

Finally, the obvious intensification of global and local crises of various natures (manufactured disasters, terrorist attacks, refugee crises, global hacker attacks, hybrid and real wars, COVID-19, other pandemics, etc.) acts as a catalyst for the emergence of all these AI-related trends. Therefore, we believe that society today does not need faceless and ruthless AI analytics. On the contrary, people need AI with a “human face”, that is, a sustainable and trusted partner capable of helping humans overcome problems and feel safe in this challenging world. This was the main driver of this study.

7. Conclusions

The synergy of vulnerabilities of different natures has become a real-world crash test for decision-making mechanisms. Most processes supported by information systems have decision branch points driven mainly by humans. The key dispatcher role a human (as a thinker and decision-maker) has in process management makes many believe that this role is the reason for our existence. Descartes’s famous “Cogito, ergo sum” (“I think, therefore I am”) confirms the vital human need to be involved in decision-making. Any interruption of our active involvement in a cognitive activity is interpreted as a threat to our existence. However, this phrase takes on a new meaning with cognitive cloning technology. We offer an IT artefact (Pi-Mind agent) for the digital duplication of human decision-makers with their unique competencies. The Pi-Mind agent, which can imitate a human donor’s decision-making, has an invaluable advantage: the technology is not exposed to the intentional and unintentional biological threats related to, for example, infections, pollution, toxic substances, and so forth. Therefore, the Pi-Mind agent will preserve sustainable cognitive involvement (“existence”) for itself and its human donor. Consequently, through the resilience of the Pi-Mind technology, we can modernise the quote with a new interpretation: “My clone thinks when I cannot, therefore, I (still) am”.

Pi-Mind as a technology covers the top-down, bottom-up, and autonomic approaches to AI, along with the technology of decision behaviour twinning and the technology of creating decision clones. This allows us to determine how to make a digital, proactive copy of a person’s decision system:

- The developed DP1–3 bring flexibility to the solution because one of the options can be chosen as the MVP (Nguyen-Duc, 2020) and the remaining options can be joined when appropriate.

- To teach an AI agent to capture personal decision preferences, values, and skills from humans (DP0), we propose a hybrid approach to knowledge extraction: AI can be developed based on data, information, and knowledge taken from humans, or AI can be trained based on the available data; both options can be used alone or in combination with each other.

- To teach an AI agent to (consciously) choose the most appropriate solution among the alternatives at critical decision points, we propose supplementing agent-based technologies with training agents in adversarial environments (within the GAN paradigm).

- The developed IT artefact is not simply a digital twin, an intelligent agent, or a decision-making system; it is a more complex IT artefact with heterogeneous features.
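
As an illustration only, the idea of training a clone in an adversarial environment can be sketched in a few lines of Python. This is not the Pi-Mind implementation and it is not a GAN: the generator network is replaced here by a simple search for the situations in which the clone is least certain, and the donor is a hypothetical fixed rule over two made-up criteria. All names (`donor_decision`, `Clone`, `adversary`, `train`, `agreement`) and all numeric settings are assumptions made for the sketch.

```python
import math
import random


def donor_decision(x):
    """Hypothetical donor rule: approve (1) when a weighted score exceeds 0.5."""
    return 1 if 0.7 * x[0] + 0.3 * x[1] > 0.5 else 0


class Clone:
    """Logistic-regression stand-in for a decision clone (an assumption)."""

    def __init__(self):
        self.w = [0.0, 0.0]
        self.b = 0.0

    def prob(self, x):
        z = self.w[0] * x[0] + self.w[1] * x[1] + self.b
        z = max(-60.0, min(60.0, z))  # clamp to keep exp() well behaved
        return 1.0 / (1.0 + math.exp(-z))

    def decide(self, x):
        return 1 if self.prob(x) > 0.5 else 0

    def update(self, x, y, lr=0.5):
        # One stochastic-gradient step on the log-loss for a single case.
        err = self.prob(x) - y
        self.w[0] -= lr * err * x[0]
        self.w[1] -= lr * err * x[1]
        self.b -= lr * err


def adversary(clone, rng, n_candidates=50):
    """Pick, among random situations, the one the clone is least sure about."""
    candidates = [(rng.random(), rng.random()) for _ in range(n_candidates)]
    return min(candidates, key=lambda x: abs(clone.prob(x) - 0.5))


def train(rounds=3000, seed=0):
    """Alternate adversarial case selection and donor-labelled clone updates."""
    rng = random.Random(seed)
    clone = Clone()
    for _ in range(rounds):
        x = adversary(clone, rng)           # hard case near the clone's boundary
        clone.update(x, donor_decision(x))  # the donor supplies the decision
    return clone


def agreement(clone, n=2000, seed=1):
    """Fraction of fresh random situations where clone and donor agree."""
    rng = random.Random(seed)
    points = [(rng.random(), rng.random()) for _ in range(n)]
    return sum(clone.decide(x) == donor_decision(x) for x in points) / n
```

Calling `agreement(train())` returns the share of fresh random situations in which the trained clone matches the hypothetical donor. An untrained `Clone()` always answers 0 in this sketch and therefore agrees with this particular donor only about half the time.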

The research questions in this study focused on how to ensure organisational resilience using the Pi-Mind agent. Our answer is empowering (and complementing, when appropriate) humans as decision-makers and, at the same time, decreasing the vulnerabilities of human-dependent decision-making processes due to special techniques used for IT artefact design and further adversarial training.

The current study has some limitations. First, the Pi-Mind agent requires special digital (agent-enabled) environments that must be integrated with the target information system before being used. In addition, we did not address the fact that using Pi-Mind agents as digital decision-making clones also has social and, in particular, ethical challenges. The use of Pi-Mind agents in organisations may reveal many economic and legal challenges (copyrights, liability, rewards for using such an asset, etc.), which should be studied separately. Despite these limitations, we believe that the results present a clear benefit for ensuring the efficiency of critical decision-making and organisational resilience.

For future research, we also recognise the importance of understanding (during cloning) the donor’s emotional state, which often influences their decisions. The extent to which emotions influence choices during decision-making is very personal, and each clone must capture these specifics. Humans often make decisions in groups, and social interactions affect each individual’s preferences. Everyone must balance individual and group biases while making decisions; therefore, personal clones or Pi-Mind agents must capture the specific limits of compromise for every individual. Finally, cloning groups could be an important topic for generalising the Pi-Mind concept from individual to collective intelligence. Such clones would learn to compromise between individual and collective choices. However, there is much to be done in this area, and we welcome other researchers to join us.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1. August 2013, http://dovira.eu/Law_proposal.pdf.

2. November 2013, http://dovira.eu/Round_table_%208_11.pdf.

3. February 2014, http://dovira.eu/UCU_proposals.pdf.

4. March 2014, http://dovira.eu/Accreditation_proposals.pdf.

References

- Abramson, D. I., & Johnson, J. (2020, December 1). Creating a conversational chatbot of a specific person (U.S. Patent No. 10,853,717). U.S. Patent and Trademark Office. https://pdfpiw.uspto.gov/.piw?PageNum=0&docid=10853717&IDKey=&HomeUrl=%2F

- Acharya, A., Howes, A., Baber, C., & Marshall, T. (2018). Automation reliability and decision strategy: A sequential decision-making model for automation interaction. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 62(1), 144–148. https://doi.org/10.1177/1541931218621033

- Ågerfalk, P. J. (2020). Artificial intelligence as a digital agency. European Journal of Information Systems, 29(1), 1–8. https://doi.org/10.1080/0960085X.2020.1721947

- Ågerfalk, P. J., & Karlsson, F. (2020). Artefactual and empirical contributions in information systems research. European Journal of Information Systems, 29(2), 109–113. https://doi.org/10.1080/0960085X.2020.1743051

- Agrawal, A., Gans, J. S., & Goldfarb, A. (2019). Exploring the impact of artificial intelligence: Prediction versus judgment. Information Economics and Policy, 47, 1–6. https://doi.org/10.1016/j.infoecopol.2019.05.001

- Al Faruque, M. A., Muthirayan, D., Yu, S. Y., & Khargonekar, P. P. (2021). Cognitive digital twin for manufacturing systems. In Proceedings of the 2021 design, automation & test in Europe conference & exhibition (pp. 440–445). IEEE. https://doi.org/10.23919/DATE51398.2021.9474166

- Amari, S. I. (1993). Backpropagation and stochastic gradient descent method. Neurocomputing, 5(4–5), 185–196. https://doi.org/10.1016/0925-2312(93)90006-O

- Arrieta, A. B., Díaz-Rodríguez, N., Del Ser, J., Bennetot, A., Tabik, S., Barbado, A., Garcia, S., Gil-Lopez, S., Molina, D., Benjamins, R., Chatila, R., & Herrera, F. (2020). Explainable artificial intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Information Fusion, 58, 82–115. https://doi.org/10.1016/j.inffus.2019.12.012

- Bai, T., Luo, J., Zhao, J., & Wen, B. (2021). Recent advances in adversarial training for adversarial robustness. arXiv preprint. arXiv:2102.01356.

- Baiyere, A., Salmela, H., & Tapanainen, T. (2020). Digital transformation and the new logics of business process management. European Journal of Information Systems, 29(3), 238–259. https://doi.org/10.1080/0960085X.2020.1718007

- Baskerville, R. L., Myers, M. D., & Yoo, Y. (2019). Digital first: The ontological reversal and new challenges for IS research. MIS Quarterly. Advance online publication. https://scholarworks.gsu.edu/cgi/viewcontent.cgi?article=1009&context=ebcs_articles

- Becue, A., Maia, E., Feeken, L., Borchers, P., & Praça, I. (2020). A new concept of digital twin supporting optimization and resilience of factories of the future. Applied Sciences, 10(13), 4482. https://doi.org/10.3390/app10134482

- Bennett, J. M., Challinor, K. L., Modesto, O., & Prabhakharan, P. (2020). Attribution of blame of crash causation across varying levels of vehicle automation. Safety Science, 132, 104968. https://doi.org/10.1016/j.ssci.2020.104968

- Berente, N., Gu, B., Recker, J., & Santhanam, R. (2021). Managing artificial intelligence. MIS Quarterly, 45(3), 1433–1450. https://doi.org/10.25300/MISQ/2021/16274

- Biggio, B., & Roli, F. (2018). Wild patterns: Ten years after the rise of adversarial machine learning. Pattern Recognition, 84, 317–331. https://doi.org/10.1016/j.patcog.2018.07.023

- Booyse, W., Wilke, D. N., & Heyns, S. (2020). Deep digital twins for detection, diagnostics and prognostics. Mechanical Systems and Signal Processing, 140, 106612. https://doi.org/10.1016/j.ymssp.2019.106612

- Breque, M., Nul, L. D., & Petridis, A. (2021). Industry 5.0: Towards a sustainable, human-centric and resilient European industry. European Commission Directorate-General for Research and Innovation. https://ec.europa.eu/info/news/industry-50-towards-more-sustainable-resilient-and-human-centric-industry-2021-jan-07_en

- Bryson, J. J., & Theodorou, A. (2019). How society can maintain human-centric artificial intelligence. In M. Toivonen & E. Saari (Eds.), Human-centered digitalization and services (Vol. 19, pp. 305–323). Springer. https://doi.org/10.1007/978-981-13-7725-9_16

- Bughin, J., Hazan, E., Lund, S., Dahlström, P., Wiesinger, A., & Subramaniam, A. (2018). Skill shift: Automation and the future of the workforce. McKinsey Global Institute, 1, 3–84. https://www.mckinsey.com/featured-insights/future-of-work/skill-shift-automation-and-the-future-of-the-workforce

- Carneiro, J., Martinho, D., Marreiros, G., & Novais, P. (2019). Arguing with behavior influence: A model for web-based group decision support systems. International Journal of Information Technology & Decision Making, 18(2), 517–553. https://doi.org/10.1142/S0219622018500542

- Castro, L. N., De Castro, L. N., & Timmis, J. (2002). Artificial immune systems: A new computational intelligence approach. Springer-Verlag.

- Chatterjee, S., Xiao, X., Elbanna, A., & Sarker, S. (2017). The information systems artifact: A conceptualization based on general systems theory. In Proceedings of the 50th Hawaii International Conference on System Sciences (pp. 5717–5726). https://doi.org/10.24251/HICSS.2017.689

- Crowder, J. A., Carbone, J., & Friess, S. (2020). Methodologies for continuous, life-long machine learning for AI systems. In Artificial psychology (pp. 129–138). Springer. https://doi.org/10.1007/978-3-030-17081-3_11

- Cummings, T. G., & Worley, C. G. (2014). Organization development and change. Cengage Learning.

- Drury-Grogan, M. L., Conboy, K., & Acton, T. (2017). Examining decision characteristics & challenges for agile software development. Journal of Systems and Software, 131, 248–265. https://doi.org/10.1016/j.jss.2017.06.003

- Duan, Y., Edwards, J. S., & Dwivedi, Y. K. (2019). Artificial intelligence for decision making in the era of big data—Evolution, challenges and research agenda. International Journal of Information Management, 48, 63–71. https://doi.org/10.1016/j.ijinfomgt.2019.01.021

- Duchek, S. (2020). Organizational resilience: A capability-based conceptualization. Business Research, 13(1), 215–246. https://doi.org/10.1007/s40685-019-0085-7

- Filip, F. G., Zamfirescu, C. B., & Ciurea, C. (2017). Computer-supported collaborative decision-making. Springer. https://doi.org/10.1007/978-3-319-47221-8

- Floridi, L., & Cowls, J. (2019). A unified framework of five principles for AI in society. Harvard Data Science Review, 1(1), 1–15. https://doi.org/10.1162/99608f92.8cd550d1

- García-Magariño, I., Muttukrishnan, R., & Lloret, J. (2019). Human-centric AI for trustworthy IoT systems with explainable multilayer perceptrons. IEEE Access, 7, 125562–125574. https://doi.org/10.1109/ACCESS.2019.2937521

- Golovianko, M., Gryshko, S., Terziyan, V., & Tuunanen, T. (2021). Towards digital cognitive clones for the decision-makers: Adversarial training experiments. Procedia Computer Science, 180, 180–189. Elsevier. https://doi.org/10.1016/j.procs.2021.01.155