ABSTRACT

Indoor-environmental quality (IEQ) assessments must consider multiple aspects and domains. In this context, common IEQ building evaluation and rating schemes frequently apply conjoint indicators to aggregate these different aspects via importance or significance ranking formalisms involving, for instance, points, scores and weights. However, the reasoning for the specific selection of variables and the sources of their assigned weights are not necessarily disclosed as a matter of course. In the present contribution, we investigate one of the paths to the provision of such reasoning that leans on experts’ views. Online feedback from a small sample of IEQ experts provided the basis for the illustration of this path and the kinds of principal insights that it can offer, including the degree of consistency among experts and the potential factors that could influence their judgment. Moreover, the study also exemplified the process of deriving relative importance weightings (i.e. coefficients applied to various domain and sub-category variables) and how such processes can be applied toward a total IEQ measure. The study’s findings underscore the need to improve the transparency of the processes through which such schemes and their constitutive ingredients are arrived at.

Introduction and background

It is common knowledge that indoor-environmental conditions influence people’s health, comfort, satisfaction and productivity (Torresin et al., 2018; Zhao & Li, 2023). Whereas the exact mechanisms behind the human consequences of indoor-environmental exposure may not yet be fully understood, there is general consensus that this exposure is not a monolithic causal factor, but encompasses multiple sensory dimensions, including thermal, visual, auditory and olfactory channels of energy and information processing (Alais et al., 2010). Past research and standardization efforts have commonly addressed these dimensions of indoor-environmental quality (IEQ) separately. However, more recently, the interest in the relations between these individual dimensions and their combined and cross-effects has been increasing (Chinazzo et al., 2022). An integrated view and treatment of the multiple dimensions of indoor-environmental conditions is essential at many levels. Thereby, one particular question concerns practical procedures for the assessment and rating of total IEQ (Mahdavi et al., 2020). How would relevant stakeholders evaluate a building’s overall IEQ, given that it depends on quality considerations in its multiple constituent individual dimensions?

A pragmatic approach to obtaining a single measure of the total IEQ starts with the assumption that there already exist methods to obtain, for each of the constitutive individual domains (e.g. thermal comfort, visual comfort, indoor air quality and aural comfort), operationalized quality indicators. Once multiple single-domain quality indicators are obtained, they can be combined into a single pragmatic quality score (Fathi & O’Brien, 2023; Tang et al., 2020). Frequently, this is done using a simple (e.g. additive) function, whereby the assumed relative importance of individual domains can be accounted for via assignment of respective weighting factors (Rohde et al., 2020; Tran et al., 2023). This pragmatic approach is quite common in point-based building certification and rating systems (Mahdavi et al., 2020). There are further use cases for single scores, such as the application of global optimization methods in the building design process (i.e. selection of the best performing instances of design variants for a project). Likewise, decision making regarding investments in building construction, retrofit and maintenance could presumably benefit from aggregate quality indicators that assign relative importance to various dimensions of the quality space. Whereas the focus here is on IEQ, building-related aggregate quality indicators often include not only IEQ criteria, but also other items such as energy efficiency, material use and integration in the urban context.
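The additive aggregation described above can be sketched as follows. This is a minimal illustration, not the procedure of any specific rating scheme: the function name `total_ieq_score`, the 0 to 100 score scale and all numeric values are assumptions made for the example.

```python
from typing import Mapping


def total_ieq_score(scores: Mapping[str, float], weights: Mapping[str, float]) -> float:
    """Weighted additive aggregation of single-domain quality indicators."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same domains")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    # Dividing by the weight total normalises the weights so they add up to one.
    return sum(scores[d] * weights[d] / total_weight for d in scores)


# Hypothetical indicator values and weights, for illustration only.
example_scores = {"thermal": 80.0, "air_quality": 70.0, "visual": 90.0, "acoustic": 60.0}
example_weights = {"thermal": 0.3, "air_quality": 0.3, "visual": 0.2, "acoustic": 0.2}
example_total = total_ieq_score(example_scores, example_weights)
print(f"Total IEQ score: {example_total:.1f}")
```

Note that the result depends as much on the chosen weights as on the single-domain indicators, which is why the origin of the weights matters.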

Note that, independent of the application area and the number and types of included quality criteria, their relative importance must be addressed when deriving a pragmatic single quality measure. In fact, understanding various stakeholders’ views on the relative importance of various factors in the built environment is of interest, even if seeking such understanding is not motivated by the intention to derive an aggregate measure of total IEQ. As mentioned earlier, scores and rating systems have been extensively employed to assess the quality of indoor environments. Building certification schemes such as DGNB System (2020), BREEAM (2023) and NABERS (Building Research Establishment, 2023) have introduced various weighting schemes to evaluate the relative importance of multiple building quality parameters (see Table 1). For instance, the Deutsche Gesellschaft für Nachhaltiges Bauen (DGNB) certification system expresses the relative weight of different evaluation domains (e.g. IEQ, sociocultural and functional quality) as a percentage of the total quality score (DGNB System, 2020). Table 2 shows the IEQ-related segment of this system for new buildings as a function of building type.

Table 1. Example of cited (IEQ-related) national and international sets of weights related to thermal comfort (TC), air quality (AQ), acoustic comfort (AC) and visual comfort (VC) (cited from Fathi & O’Brien, 2023; Wei et al., 2020).

Table 2. Overview of the share of the IEQ-related evaluation domains (in percentage) of the total score in the DGNB system (new buildings) as a function of building type (based on Mahdavi et al., 2020).

Previous reviews of such building-related rating and certification systems and the respective weighting schemes (Mahdavi et al., 2020; Wei et al., 2020) could not identify explicit procedures toward the numeric aggregation of multiple IEQ dimensions in terms of overall IEQ ratings of buildings. Moreover, as argued in previous studies, the weighting schemes in such certification systems can be very different (Rohde et al., 2020).

A general observation regarding such resources, and a central motivating factor behind the current treatment, is the paucity of transparent disclosure of the processes leading to (a) the selection of the set of criteria to be evaluated in the evaluation schemes and (b) the determination of the weights applied to these criteria. Note that a number of attempts have been made to derive weights for the overall assessment of IEQ from environmental observations, subjective assessments or a combination of both (Danza et al., 2020; Heinzerling et al., 2013) (see Table 3). However, the weights associated with each domain vary not only between studies but also between locations (Zalejska-Jonsson & Wilhelmsson, 2013). Quoting the ‘lack of objective weighting methodologies for complex groups of assessment criteria’, Rohde et al. (2020) pursued the development of weights for IEQ features of Danish multifamily dwellings based on an expert survey and using simple percentile prioritization and expert panel judgments.

Table 3. Comparison between weighting factors derived from field studies related to thermal comfort (TC), air quality (AQ), acoustic comfort (AC) and visual comfort (VC) (based on Danza et al., 2020).

The variety of the weighting schemes and their dependency on the methods of derivation, sources of information and diversity in applications (e.g. domains, building typologies) underline further the need for a cardinal discussion of the availability and quality of reasoning underlying the specification of weighting criteria and the numeric specification of weights. Various strategies could be conceived in dealing with multiple quality domains. Three such strategies were discussed in the aforementioned review of rating and certification systems (Mahdavi et al., 2020). The first strategy actually involves no weighting step. Rather, it merely ‘involves the categorization of requirements into distinct sets pertaining to separate domains (e.g. thermal, visual and acoustic). In this case, the evaluation is conducted (and the evidence of compliance is provided) separately for each domain. The second strategy subsumes ‘multiple quality evaluation domains in a unitary – typically point-based or credit-based – framework. Thereby, an overarching or total quality score is derived based on the combination (e.g. simple or weighted addition) of individual domains’ scores. Such weights need not reflect objective features of the real world, but could be presumably derived via pragmatic approaches such as polling stakeholders' views (e.g. building professionals, office workers). The third strategy involves an integrative path, whereby ‘inherent – physiologically or psychologically relevant – interactions, independencies, and cross-effects among various influencing variables in different perceptual dimensions would be taken into consideration, including their complexity and presumptive non-linearity’ (Mahdavi et al., 2020).

We focus here on the second strategy in the above list (deriving a total IEQ measure via the weighted aggregation of multiple single-domain quality indicators) due to the following two considerations: the first strategy is not directly relevant to the present discussion, as it does not involve aggregate quality measures, and the third strategy – given the current state of knowledge in this area – does not appear to be immediately feasible. It could be of course argued, quite convincingly, that this entire inquiry lacks a solid conceptual foundation. The concept of an overall IEQ measure may be claimed to be a rather insufficiently precise construct. Moreover, even if treated as a useful pragmatic measure, it may not be simply derivable from a set of weights assigned to its purported single-domain constituents. If one nonetheless does proceed with the endeavour of establishing and applying such weights, one should not view them as constants, but rather as variables that may depend on the specifics of the application context (e.g. the purpose of rating, the specific set of rating items, the typology of the rated buildings) and the diversity of human perception (Hellwig, 2017).

Notwithstanding these reservations, and as illustrated in the aforementioned references to literature, both aggregate IEQ constructs and respective weighting schemes are commonly in use, necessitating further critical reflections on the subject. These schemes appear to primarily involve heuristic operations and pragmatic considerations. This circumstance may be to some extent unavoidable, but it has consequences in terms of discrepancies amongst different rating systems, thus creating a certain appearance of arbitrariness regarding their structure, items and weights. As alluded to before, one way of establishing and using such weights is by polling of views of stakeholder groups including buildings’ occupants as well as building design and operation experts.

Method

We describe, in the following, the methodological strategy underlying the present investigation. This involved mainly the use of a survey to obtain experts’ opinions, as the primary source of orientation for the assignment of weights to different IEQ dimensions. Surveys have been used frequently in the past to obtain not only occupants’ level of satisfaction and comfort (Frontczak, Andersen et al., 2012; Frontczak, Schiavon et al., 2012), but also experts’ views on the importance of various IEQ factors (Rohde et al., 2020). In the present study, the focus was on the structure and content of a preliminary online survey of experts’ views on the relative importance of different constituent aspects of IEQ in buildings. The survey was conceived as an online medium (Limesurvey GmbH, 2016) to obtain, in a compact manner, responses from experts with relevant background and expertise regarding the relative importance of different IEQ factors. From the building typology point of view, the scope of the survey was limited to office buildings. The link to the online survey was shared with professional groups and entities with topically relevant members, including IEA EBC Annex 79 (O’Brien et al., 2020), ISIAQ (2023) and IBPSA (2023). Note that the population of the participating experts is not suggested to be statistically representative of the large and diverse field of IEQ experts. The intention was rather to obtain (i) a preliminary impression of how experts generally rank the importance of various domains of IEQ and (ii) an initial estimate of the degree of agreement among experts as well as the spread of expressed views on the relative importance of these domains (and their sub-categories).

The background of the survey was explained to the prospective participants in terms of the following general proposition:

It is generally assumed that, in the mid-term and long-term, office occupants’ overall satisfaction with indoor-environmental conditions results from the combined effects of multiple factors. This questionnaire distinguishes five factors or domains of IEQ (indoor environmental quality). The relative importance (weight) of these domains toward the formation of occupants’ overall evaluation of the IEQ is to be determined. Moreover, within each domain, the relative importance of the contributing aspects is also to be determined.

The survey entailed six sections, each including a ranking question. The first question prompted the participants to rank five IEQ domains in terms of their relative importance. The subsequent five questions prompted the participants to rank, within each of the above five domains, five sub-categories.

These sections are described in the following (see Table 4 for an overview):

The first section entailed the evaluation of the relative importance of five domains that were assumed to influence occupants’ overall evaluation of the indoor-environments’ quality, namely (i) thermal comfort, (ii) indoor air quality, (iii) visual comfort, (iv) acoustic comfort and (v) the quality of office infrastructure/amenities (i.e. ergonomic furniture, equipment and connectivity).

The second section involved the importance ranking of five items within the thermal quality domain, namely (i) maintaining the preferred air temperature, (ii) avoiding draft (winter), (iii) avoiding draft (summer), (iv) avoidance of a very high or very low relative humidity and (v) avoidance of radiation asymmetry.

The third section entailed the importance ranking of five items within the visual quality domain, namely (i) maintaining the preferred illuminance levels, (ii) avoidance of glare in the field of view, (iii) availability of daylight, (iv) view to outside and (v) colour temperature of light sources.

The fourth section entailed the importance ranking of five items within the indoor air quality domain, namely (i) perception of air freshness, (ii) perception of air dryness, (iii) absence of unpleasant odours, (iv) presence of pleasant odours and (v) presence of operable windows.

The fifth section involved the importance ranking of five items within the acoustic quality domain, namely (i) avoidance of outside noise (e.g. traffic), (ii) avoidance of noise from inside (equipment, HVAC, etc.), (iii) avoidance of speech noise from adjacent or close-by offices/workstations, (iv) maintaining acoustic privacy and (v) avoidance of hall and echoes.

The sixth section entailed the importance ranking of five items within the domain of office environments’ infrastructure and amenities, namely (i) ergonomic furniture, (ii) individual office (versus shared offices), (iii) high-quality equipment (computers, printers, etc.), (iv) connectivity (wireless availability and speed) and (v) personal control over environmental systems (heating, cooling, ventilation and lighting).

Table 4. Overview of the five domains subjected to expert ranking, as well as ranking items within each domain.
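The survey structure summarized above can be captured in a small data structure. The sketch below is illustrative only: the shortened item labels, the name `SURVEY_STRUCTURE` and the helper `is_complete_ranking` are assumptions, and the check presumes that a complete answer assigns each of the five items a distinct rank from 1 to 5 (the handling of ties is not described in the text).

```python
# Domains and sub-category items as described in the six survey sections
# (labels shortened for illustration).
SURVEY_STRUCTURE = {
    "thermal quality": [
        "preferred air temperature", "avoiding draft (winter)",
        "avoiding draft (summer)", "avoiding extreme relative humidity",
        "avoiding radiation asymmetry",
    ],
    "visual quality": [
        "preferred illuminance levels", "avoiding glare", "daylight availability",
        "view to outside", "colour temperature of light sources",
    ],
    "indoor air quality": [
        "air freshness", "air dryness", "absence of unpleasant odours",
        "presence of pleasant odours", "operable windows",
    ],
    "acoustic quality": [
        "avoiding outside noise", "avoiding inside noise", "avoiding speech noise",
        "acoustic privacy", "avoiding hall and echoes",
    ],
    "infrastructure/amenities": [
        "ergonomic furniture", "individual office", "high-quality equipment",
        "connectivity", "personal control",
    ],
}


def is_complete_ranking(ranking: dict, items: list) -> bool:
    """True if every item received a distinct rank from 1 to len(items)."""
    return (set(ranking) == set(items)
            and sorted(ranking.values()) == list(range(1, len(items) + 1)))


# The first survey question ranks the five domains themselves.
domains = list(SURVEY_STRUCTURE)
example_answer = {d: rank for rank, d in enumerate(domains, start=1)}
print(is_complete_ranking(example_answer, domains))
```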

Lastly, the survey allowed participants the opportunity to provide additional comments, observations, qualifications and general feedback. Furthermore, participants provided information regarding their gender, location, education, profession, primary area of IEQ-related expertise and years of professional experience in the field. It is important to emphasize, from the ethical approval standpoint, that the survey was conducted in a fully voluntary and entirely anonymous manner.

A total of 163 individual responses were recorded in the period of May and June 2023 via LimeSurvey (multiple answered surveys from the same respondents were avoided). On average, the survey lasted for 12 min (±14). The criteria employed in the data-cleaning process included test surveys, the overall survey duration and incomplete responses. Pre-survey test responses (n = 2) were removed as the initial step. Subsequently, responses with a total time of zero (n = 60) were discarded. Finally, incomplete responses, defined as those failing to provide complete answers to ranking questions (n = 11), or having incomplete responses to ranking questions (n = 17), were removed from the dataset. After the dataset underwent these cleaning processes, a total of 73 answered surveys remained, serving as the sample for the analysis presented in the paper.
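The data-cleaning sequence can be retraced with a short bookkeeping sketch. The counts are those reported above; the function name `apply_exclusions` and the labels distinguishing the two groups of incomplete responses are assumptions made for illustration.

```python
def apply_exclusions(recorded: int, exclusions: list) -> list:
    """Apply exclusion steps in order and return (reason, removed, remaining) tuples."""
    remaining = recorded
    trail = []
    for reason, removed in exclusions:
        if removed > remaining:
            raise ValueError(f"cannot remove {removed} responses, only {remaining} left")
        remaining -= removed
        trail.append((reason, removed, remaining))
    return trail


# Exclusion counts as reported in the text; the labels are paraphrased.
EXCLUSIONS = [
    ("pre-survey test responses", 2),
    ("total survey time of zero", 60),
    ("incomplete ranking answers (first group)", 11),
    ("incomplete ranking answers (second group)", 17),
]

trail = apply_exclusions(163, EXCLUSIONS)
for reason, removed, remaining in trail:
    print(f"{reason}: removed {removed}, remaining {remaining}")
```

The final remaining count matches the analysis sample of 73 responses.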

Among the 73 respondents in this sample, 37.0% were male and 57.5% female (5.5% opted not to disclose their gender information). Responses were collected from diverse global locations, namely Europe (43.8%), Americas (23.3%) and Asia (23.3%). As for professional backgrounds, the largest group (37.0%) identified as educators or researchers, 34.2% as engineers and 16.4% as architects (12.4% did not provide information on their professional background). The education level was predominantly represented by participants with a doctoral degree or equivalent (61.6%), followed by those with a master’s degree or equivalent (20.5%), a bachelor’s degree or equivalent (12.3%) and 5.5% who fall in the ‘other’ or non-response category. Among educators and researchers, 81.5% stated they have a doctoral degree, whereas the percentage was 56.0% among engineers and 41.7% among architects.

With regard to their primary area of expertise in IEQ, the majority of participants indicated thermal quality (74%) as their focus, followed by indoor air quality (9.6%), visual quality (5.5%) and others (11%). The survey question concerning participants’ years of professional experience revealed a broad distribution. For example, among participants with expertise in thermal quality, the average number of years of professional experience stood at 13.6 ± 11.4 years. No responses were collected from participants who consider themselves experts in acoustics. It is possible that some participants had experience in more than one domain. However, factual information about this matter cannot be discerned from the survey.

Data processing was predominantly carried out using RStudio, an integrated development environment for R (R Development Core Team, 2023). The survey results were primarily subjected to descriptive statistical analysis to address several pertinent questions. Thereby, the main queries concerned participants’ views on the relative importance of different aspects of IEQ domains, as well as the relative importance of various items within each of the sub-categories of these domains. To this end, we mainly focused on the percentage distribution of the participants’ ranking of various items in different domains and sub-categories. Given the limitations of the sample and the illustrative nature of this exercise, conducting complex inferential statistical analyses was not deemed warranted. Nonetheless, to obtain a sense of ranking tendencies of various sub-segments of the sample of participants, it was useful to assign a numeric value (from 1 denoting the highest ranked item to 5 denoting the lowest ranked item). Given the ordinal nature of this scale, statistical derivation of the mean values of participants' ranking votes can be considered questionable, as the intervals between different rankings cannot be suggested to be equal. In other words, it is not the case that equal intervals at different ranges of the scale would be equal in magnitude, as is the case when dealing with ratio scales. Nonetheless, the obtained mean values can provide a sense of ranking tendencies at the level of the sub-segments of the sample and can thus provide a preliminary basis for the comparison of aggregate ranking tendencies.
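The descriptive treatment of ranking votes can be illustrated with a minimal sketch. It is written in Python for illustration and is not the R code used in the study; the responses are hypothetical, the function name `rank_summary` is an assumption, and the sample standard deviation is assumed since the text does not state which variant was used.

```python
from statistics import mean, stdev

ITEMS = ["thermal", "air_quality", "visual", "acoustic", "infrastructure"]

# Hypothetical responses (not survey data): each maps an item to its rank,
# with 1 denoting the highest and 5 the lowest ranked item.
responses = [
    {"thermal": 1, "air_quality": 2, "visual": 3, "acoustic": 4, "infrastructure": 5},
    {"thermal": 2, "air_quality": 1, "visual": 4, "acoustic": 3, "infrastructure": 5},
    {"thermal": 1, "air_quality": 3, "visual": 2, "acoustic": 5, "infrastructure": 4},
    {"thermal": 2, "air_quality": 1, "visual": 3, "acoustic": 4, "infrastructure": 5},
]


def rank_summary(responses: list, items: list) -> dict:
    """Return {item: (mean rank, sample standard deviation)}."""
    summary = {}
    for item in items:
        ranks = [response[item] for response in responses]
        summary[item] = (mean(ranks), stdev(ranks))
    return summary


summary = rank_summary(responses, ITEMS)
for item, (m, sd) in summary.items():
    print(f"{item:15s} mean = {m:.2f}, sd = {sd:.2f}")
```

Because every respondent distributes the ranks 1 to 5 over five items, the mean ranks always sum to 15, so a lower mean for one item necessarily implies higher means elsewhere.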

Note that the questions included in the survey are not suggested to cover all relevant IEQ issues in the respective domains and sub-categories. Specifically, the distinguished domains and particularly the defined sub-categories in each domain are not suggested to be the ‘right’ ones, as the opinions in these matters can widely vary among the members of the relevant professional and scientific communities. Rather, our objective was to obtain a preliminary impression of IEQ domain ranking tendencies and a first estimate of the variance of experts’ opinions, given a set of distinct categories that appeared to reasonably capture some of the relevant variables. As such, the survey results represent an illustrative step in gauging the broad tendencies and the consistency level of expert-based comparative assessment of different dimensions of IEQ. Given the motivational background of the study, a number of additional queries were conducted to explore the potential influence of factors such as gender, professional background, area of expertise and location on participants’ ranking.

Results

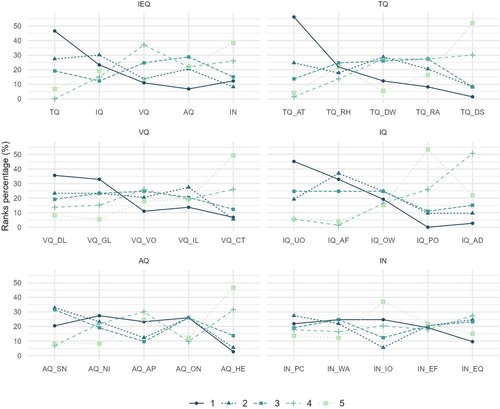

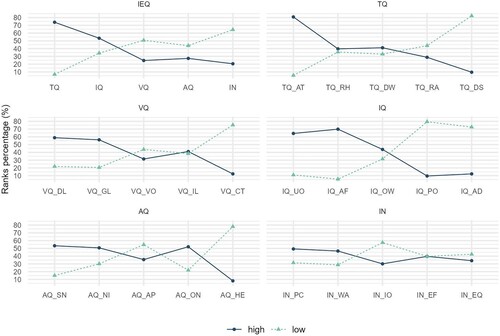

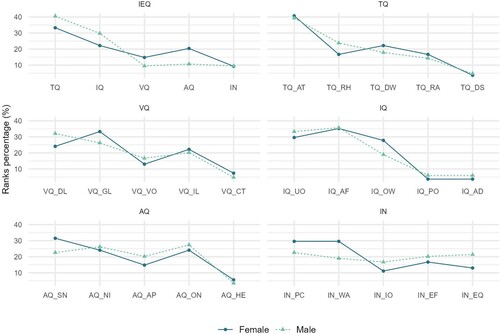

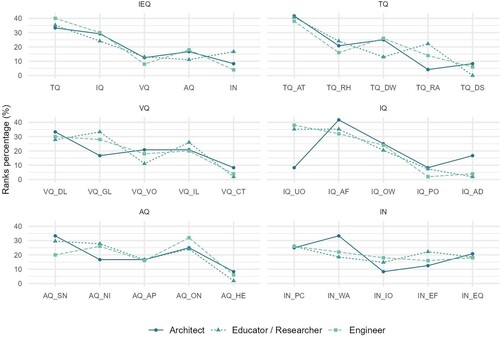

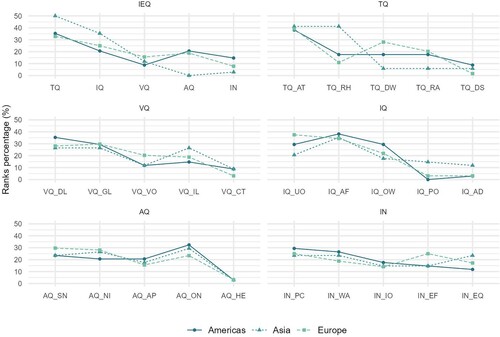

The key results of the survey are presented below in terms of multiple graphs and tables. Figure 1 shows the fraction of participants who ranked the five IEQ items (x-axis) first (rank 1) to last (rank 5). It also includes the participants’ ranking of the sub-categories of each of the five IEQ categories as per Table 4. To facilitate an overall impression of the data, the same information is shown in a more compact form in Figure 2 in terms of two contrasting functions, one (High) denoting the percentage of higher rankings (sum of rankings 1 and 2) for each ranking item and the other (Low) denoting the percentage of lower rankings (sum of rankings 4 and 5) of the same item. Table 5 summarizes the descriptive statistics of participants’ ranking in terms of means and standard deviations, whereby a value of 1 denotes the highest and 5 the lowest ranking. Table 6 summarizes participants’ ranking (mean and standard deviation) of the IEQ domains (and their sub-categories) broken down for various subsets of the participants in terms of gender (female/male), profession (architect, engineer and educator/researcher), area of expertise (TQ, IQ, VQ) and location (Europe, Americas, Asia).
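The High and Low functions described above reduce to simple shares of votes. The following sketch shows the computation for a single item; the function name `high_low_shares` and the example votes are hypothetical.

```python
def high_low_shares(ranks: list) -> tuple:
    """Return (High %, Low %) for one item, given its ranking votes (1..5).

    High is the share of votes with rank 1 or 2, Low the share with rank 4 or 5.
    """
    if not ranks:
        raise ValueError("at least one ranking vote is required")
    n = len(ranks)
    high = sum(1 for r in ranks if r in (1, 2))
    low = sum(1 for r in ranks if r in (4, 5))
    return 100.0 * high / n, 100.0 * low / n


# Hypothetical votes for one item from eight respondents.
example_ranks = [1, 2, 2, 3, 4, 5, 1, 3]
high_pct, low_pct = high_low_shares(example_ranks)
print(f"High: {high_pct:.1f}%, Low: {low_pct:.1f}%")
```

The two shares need not add up to 100%, since votes for rank 3 are counted in neither.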

Figure 2. Participants’ ranking of the IEQ domains and their sub-categories (‘high’ denotes the percentage of votes ranking an item as either rank 1 or rank 2, whereas ‘low’ denotes the percentage of votes ranking an item as either rank 4 or rank 5).

Table 5. Summary of participants’ ranking of IEQ domains as well as sub-categories in each domain subjected to expert ranking in terms of mean and standard deviation (Sd).

Table 6. Participants’ ranking (mean score) of the IEQ domains (and their sub-categories) broken down for various subsets of the participants, i.e. gender (female/male), profession (architect, engineer, educator/researcher), area of expertise (TQ, IQ, VQ) and location (Europe, Americas, Asia).

Discussion

The survey results, as summarized in the previous section, may not be generalized arbitrarily, but they nonetheless allow for certain noteworthy observations. As stated at the outset, one of the main questions that motivated the survey idea was obtaining a preliminary view of experts regarding the relative importance of various IEQ domains as well as the relative ranking of the items (subcategories) within each of these domains. The collected data allow for treating this ranking question in slightly different ways, including the following three: (i) What was the highest ranked option in each domain and sub-category? (ii) Was a specific item ranked high in the participants’ list in the sense of being included in the top two options? (iii) What was the ranking of the items in each domain and sub-category in terms of the respective – statistically derived – mean scores? Table 7 includes the summary of the rankings based on these three criteria. It includes the order of participants’ importance ranking of IEQ main domains and sub-categories based on: (i) selection as highest ranking, (ii) inclusion as the highest or the second highest ranked option and (iii) mean ranking score.

Table 7. Order of participants’ importance ranking of IEQ main domains and sub-categories based on: (i) designation as highest ranking, (ii) inclusion in highest and second highest ranking and (iii) mean ranking score.

Note that, as can be seen from this table, in most cases these three ranking possibilities yield similar results. This can be suggested to support the aggregate ranking as per the last column of Table 7. Thereby, for each sub-category, items are ranked from highest to lowest.
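The three ordering criteria can be compared with a short sketch. The responses are hypothetical and the function name `three_orderings` is an assumption; ties are left in input order, which is a simplification not discussed in the text.

```python
def three_orderings(responses: list, items: list) -> dict:
    """Order items by (i) first-place votes, (ii) top-two votes, (iii) mean rank."""
    first = {i: sum(1 for r in responses if r[i] == 1) for i in items}
    top_two = {i: sum(1 for r in responses if r[i] <= 2) for i in items}
    mean_rank = {i: sum(r[i] for r in responses) / len(responses) for i in items}
    return {
        # More votes means higher importance, hence descending order.
        "first_place": sorted(items, key=lambda i: -first[i]),
        "top_two": sorted(items, key=lambda i: -top_two[i]),
        # A lower mean rank means higher importance, hence ascending order.
        "mean_rank": sorted(items, key=lambda i: mean_rank[i]),
    }


ITEMS = ["thermal", "air_quality", "visual", "acoustic", "infrastructure"]

# Hypothetical responses (not survey data); 1 is the highest rank.
responses = [
    dict(zip(ITEMS, ranks))
    for ranks in [(1, 2, 3, 4, 5), (1, 2, 4, 3, 5), (2, 1, 3, 4, 5),
                  (1, 3, 2, 5, 4), (2, 1, 3, 4, 5)]
]

orderings = three_orderings(responses, ITEMS)
for criterion, order in orderings.items():
    print(f"{criterion}: {order}")
```

With few respondents, criteria (i) and (ii) often produce ties among lower-ranked items, whereas the mean rank usually separates them.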

We are inclined to take these results at face value and avoid commenting on them based on potential preconceptions. That said, it would not be imprudent to suggest that they appear to be consistent with anecdotal impressions of common views in the field as well as the aforementioned weighting tables in existing resources. Considering the IEQ mandates, thermal comfort and indoor air quality are indeed often high on the agenda when buildings’ indoor-environmental control systems for heating, cooling and ventilation are discussed. Likewise, fresh air supply in the air quality domain, provision of daylight and avoidance of glare in the visual domain, noise control and speech interference in the acoustic domain and the much-discussed personal control in the infrastructure domain do appear to be frequently among the top talking points to surface in discussions within the relevant professional communities.

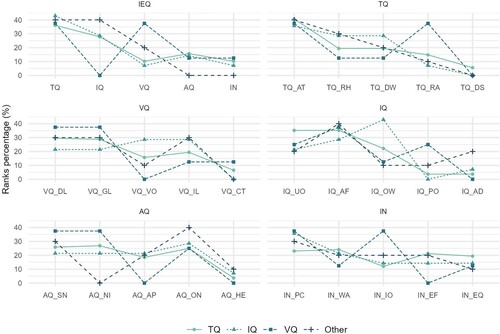

Given the aforementioned limitations of the sample of participants, the following comments on the ranking tendencies of various sub-segments of the sample should be regarded as an attempt to examine the results in terms of their general plausibility and, more importantly, to formulate a few possible motivational factors behind ranking tendencies, which could be pursued in more detail in future investigations. In this context, it is important to recall that the sample of participants in the survey was rather uneven in terms of primary expertise area: over two-thirds of the participants indicated the thermal domain and about 10% indoor air quality as their primary area of expertise. Hence, it could be suggested that this circumstance may have introduced a bias in the evaluation of the different domains’ importance, notwithstanding the fact that most IEQ experts are likely to be familiar with more than one specific domain. To address this issue, we compared the ranking of the three domains thermal quality, indoor air quality and visual quality separately for the participants with primary expertise in each of these areas. The result, as shown in Figure 3, does not seem to conclusively confirm this expertise-driven bias conjecture. Thermal quality received very high rankings independent of the participants’ declared area of expertise. It may also be noteworthy that the indoor air quality experts, when evaluating the items in the indoor air quality domain, assigned the highest rank to the operable windows item (see Figure 3 and Table 6). A point can perhaps be made regarding the ranking tendency of the participants who declared visual quality as their area of expertise. They indeed ranked their own area of expertise very high, but ranked indoor air quality the lowest. However, this may be simply an artefact of visual quality experts’ underrepresentation in the sample of participants.
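A subgroup comparison of this kind amounts to computing the top-two share of a domain separately for each declared expertise group. The sketch below uses hypothetical records; the function name `top_two_share_by_group` and the group labels are assumptions.

```python
from collections import defaultdict


def top_two_share_by_group(records: list, item: str) -> dict:
    """Percentage of each group that ranked `item` first or second.

    `records` is a list of (group label, ranking dict) pairs.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [top-two votes, all votes]
    for group, ranking in records:
        counts[group][1] += 1
        if ranking[item] <= 2:
            counts[group][0] += 1
    return {group: 100.0 * high / n for group, (high, n) in counts.items()}


# Hypothetical records (not survey data): declared expertise and domain ranks.
records = [
    ("thermal", {"thermal": 1, "air_quality": 2, "visual": 3}),
    ("thermal", {"thermal": 2, "air_quality": 1, "visual": 3}),
    ("thermal", {"thermal": 3, "air_quality": 1, "visual": 2}),
    ("visual", {"thermal": 2, "air_quality": 3, "visual": 1}),
]

shares = top_two_share_by_group(records, "thermal")
print(shares)
```

A group represented by a single respondent, as in the last record, can only yield a share of 0% or 100%, which illustrates why small subgroups call for cautious interpretation.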

Figure 3. Comparison of the percentage of participants who evaluated different IEQ domains as high (sum of rankings 1 and 2) by participants with different main area of expertise (i.e. thermal quality, visual quality, indoor air quality).

An attempt was also made to query if the participants’ ranking choices could have been influenced by other sample attributes, such as participants’ gender, their professional background or their location. Figure 4 includes the ranking frequency of the five IEQ domains (selecting an item as either the first or second most important one) separately for female and male participants. The viewed importance of these five domains appears to be less varied among the female participants than among the male participants, who ranked thermal and indoor air quality somewhat higher and the visual and acoustic quality somewhat lower than their female counterparts. Figure 4 also depicts gender-dependent evaluation of the sub-categories of each of the five IEQ domains considered. These appear to be consistent in their overall tendencies, perhaps with the exception of the infrastructure/amenities sub-category. In this instance, the views of the male participants regarding the importance of these sub-categories are less varied than those of the female participants, who ranked the personal control and connectivity sub-categories higher (and the other categories lower) than their male counterparts.

Figure 4. Comparison of the percentage of participants who evaluated different IEQ domains as high (sum of rankings 1 and 2) shown separately for female and male participants.

We also examined, in a similar vein, the potential effect of participants’ professional background and their location. Figure 5 entails a comparison of the percentage of participants who evaluated different IEQ domains and the respective sub-categories as high (sum of rankings 1 and 2) in separate functions for three professional background categories (architects, engineers and educators/researchers). A qualitative comparison based on this figure does not seem to yield major divergences in participants’ ranking. It is perhaps worth mentioning that, in the visual quality sub-category, architects ranked daylight availability the highest, whereas they ranked glare noticeably lower than their engineer and educator/researcher counterparts.

Figure 5. Comparison of the percentage of participants who evaluated different IEQ domains as high (sum of rankings 1 and 2) by participants with different professional background (i.e. architects, educators/researchers, engineers).

Figure 6 shows a comparison of the percentage of participants who evaluated different IEQ domains as high in terms of separate functions for three different locations (Europe, Americas and Asia). Participants located in Asia assigned a higher ranking to thermal quality and indoor air quality than their counterparts from either Europe or Americas, but assigned much less weight to the acoustic quality and infrastructure/amenities items. Experts situated in Asia attributed a considerably higher ranking to the relative humidity item in the thermal quality sub-category. Interestingly, Europe-based experts attributed a higher ranking to the importance of winter draft as compared to experts located in either Asia or Americas. Note that the consistently low ranking of summer draft is consistent with the observation that, during the warmer period of the year, air movement can have a positive effect on thermal comfort. As such, it should not come as a surprise that this item received the lowest importance ranking (see Figures 1 and 2, and Table 5), with the exception of participants from Asia, who voted for the ‘avoidance of radiant asymmetry’ as the least important item.

Figure 6. Comparison of the percentage of participants who evaluated different IEQ domains as high (sum of rankings 1 and 2) by participants from different locations (Europe, Americas, Asia).

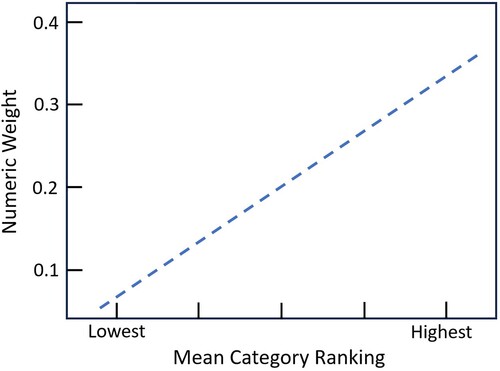

The survey questions explicitly associated the relative ranking of the importance of the five IEQ domains and the respective sub-categories in each domain with respective weights. This opens a path to deriving numeric values for the weights based on participants’ corresponding mean ranking votes (see ). Weights, as constructs, can be sensibly argued to have, in the case at hand, values that positively correlate with the participants’ ranking of questionnaire items. There are different options to functionally express this correlation. One possibility is to assume a linear relationship between the participants’ mean ranking tendency of the questionnaire items and the numeric values of the weights. Operating on the basis of this assumption, and arranging the values of the weights such that they add up, in each category, to one, we obtain the relationship illustrated in . Thus, the mean rankings of can be mapped to the weights as summarized in Table 8. Weights obtained in this manner can, in principle, be compared more readily with existing weighting schemes in the literature (Fathi & O’Brien, 2023; Wei et al., 2020) or with those of future studies in this area (see, for examples, ). Thereby, it is important to transparently disclose both the manner in which judgments of relative importance have been elicited (specifically, type and values of deployed scales), and the manner through which they are mapped to numerically expressed weights (e.g. linear versus non-linear relationships).
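
To make the idea of a linear rank-to-weight mapping concrete, the following minimal sketch shows one possible realization. It assumes that a rank of 1 denotes the highest importance, that raw scores decrease linearly with the mean rank, and that the scores are then normalized to add up to one within a category. The function name `weights_from_mean_ranks`, the parameter `worst_rank` and the mean ranks used are hypothetical; the specific mapping applied in the study may differ.

```python
def weights_from_mean_ranks(mean_ranks, worst_rank):
    """Map mean ranks (1 = most important) to weights that add up to one."""
    # Linear inversion (an assumption): a lower mean rank yields a larger raw score.
    raw = {item: (worst_rank + 1) - rank for item, rank in mean_ranks.items()}
    total = sum(raw.values())
    return {item: value / total for item, value in raw.items()}


# Made-up mean ranks for illustration only (not the survey's results).
example_ranks = {
    "thermal quality": 2.0,
    "indoor air quality": 2.5,
    "visual quality": 3.0,
    "acoustic quality": 3.5,
    "infrastructure/amenities": 4.0,
}
example_weights = weights_from_mean_ranks(example_ranks, worst_rank=5)
```

A non-linear alternative (for instance, weights proportional to the reciprocal of the mean rank) would preserve the ordering of the items but change the spread between their weights, which is one reason why the chosen mapping needs to be disclosed.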

Table 8. Weights derived for the five IEQ domains and the respective sub-categories in each domain based on participants’ corresponding mean ranking votes (the numeric values of the weights in each category are derived in a manner so as to add to one).

As mentioned at the outset, one of the benefits of exploring relative rankings and respective weights lies in deriving various aggregated scores of global or total quality indices. To illustrate the possibility of deriving a kind of total indoor-environmental quality (TIEQ) index based on the domains, sub-categories and the respective weights of Table 8, we can start by evaluating each of the items using a simple numeric scale. For instance, we could apply a 5-point scale to each of the items (IEQ domains and their sub-categories) of to evaluate the conditions in an existing building. Each item could then be given the score 4 for the attribute ‘very good’, 3 for ‘good’, 2 for ‘mediocre’, 1 for ‘poor’ and zero for ‘very poor’. Using this scoring, the weights can be adjusted to derive the value of TIEQ. For example, if TIEQ is to have values from zero (the most negative ranking possible) to 100 (the highest possible ranking), the following TIEQ function can be derived (see for the abbreviations).
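
A minimal sketch of how such an aggregation could be structured is given below. It assumes a two-level weighted sum (sub-category scores weighted within each domain, domain scores weighted across domains), with weights adding up to one at each level and item scores on the 0 to 4 scale described above, rescaled to the range 0 to 100. The function name `tieq`, the data layout and all weights and scores are hypothetical and do not reproduce the coefficients derived in the study.

```python
def tieq(domain_weights, sub_weights, scores, max_score=4):
    """Aggregate item scores (0..max_score) into a single index from 0 to 100."""
    total = 0.0
    for domain, w_domain in domain_weights.items():
        # Weighted mean of the sub-category scores within this domain.
        domain_score = sum(
            sub_weights[domain][item] * scores[domain][item]
            for item in sub_weights[domain]
        )
        total += w_domain * domain_score
    # Rescale so that the best possible evaluation maps to 100.
    return 100.0 * total / max_score


# Made-up weights and scores for illustration only (two domains for brevity).
demo_domain_weights = {"thermal quality": 0.6, "visual quality": 0.4}
demo_sub_weights = {
    "thermal quality": {"temperature": 0.5, "relative humidity": 0.5},
    "visual quality": {"daylight availability": 0.7, "glare": 0.3},
}
demo_scores = {
    "thermal quality": {"temperature": 4, "relative humidity": 2},
    "visual quality": {"daylight availability": 3, "glare": 1},
}
demo_tieq = tieq(demo_domain_weights, demo_sub_weights, demo_scores)  # roughly 69
```

Because the weights add up to one at both levels, a building rated ‘very good’ on every item reaches 100 and one rated ‘very poor’ on every item reaches zero, irrespective of the particular weights chosen.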

It is important to emphasize the essential purpose of the above illustrative TIEQ function, which is not about the entailed specific domains, variables and coefficients. The selection of these elements is a fundamentally pragmatic matter, as is typically the case in many fields when dealing with pragmatic measures and conjoint indices. Rather, the point is to argue for the need for transparency regarding the processes through which the constitutive variables in such functions are selected and their coefficients derived. Information obtained from expert panels and through surveys and interviews involving different groups of participants (e.g. professionals, building users) can provide the basis for the derivation of integrative quality indices that, while not unique or representational (in the sense of capturing a reality ‘out there’), can be shown to have a transparent and traceable lineage. As such, successive efforts in this area could build upon experience and knowledge gained in previous iterations, thus converging toward comparability, consistency and accountability in the definition and application of aggregate IEQ indices.

Conclusion

Given the multi-aspect nature of IEQ, it is reasonable to expect that respective evaluation methods can benefit from conjoint quality indicators. Such indicators have the potential to aggregate various IEQ-related items and dimensions using formalisms involving points, scores, weights and the like. As such, they can be of pragmatic benefit in certain applications, such as point-based building certification and rating systems, optimization techniques in the building design process, building investment decision support and IEQ management. Moreover, the methods applied to derive the relative importance of IEQ domains based on feedback from different groups (e.g. building design and operation professionals, building occupants) and in different building types (e.g. residential, commercial and educational) can shed light on potential perceptual gaps among the stakeholders involved and hence reduce the probability of miscommunication. Last but not least, weights thus obtained can even find a kind of indirect reality check functionality in psychological studies of people’s multi-domain information processing.

However, whatever the application scenario, the shared objective of deriving a single IEQ score from quality evaluations in the multitude of domains involved may be realized in a variety of ways. This variety may pertain to the selected subset of such domains, the corresponding proxy variables in each domain, the formalisms applied, the building types targeted and the sources of information deployed. Existing building certification systems entail various instances of such evaluation procedures. However, as stated at the outset of this investigation, the reasoning for the specific selection of variables and the sources of their assigned weights are not necessarily disclosed as a matter of course. Addressing this gap in the past developments and applications of IEQ-related weighting schemes motivated the effort described in this paper and represents its main contribution. This effort involved the illustrative exploration of one of the possible paths that could help establish a transparent rationale behind such weighting schemes. This path leans on explicit and formal elicitation of views from experts in the relevant domains. Online feedback from a small sample of IEQ experts provided the basis for the illustration of this path and the kinds of principal insights that it can offer, including the degree of consistency and agreement among experts and the potential factors that could influence their judgment. Moreover, the study also exemplified the process of deriving weights (i.e. coefficients applied to various domain and sub-category variables) and how such weights can be applied toward a TIEQ measure.

Given the aforementioned, primarily illustrative character of the present study, it does not purport to offer unique results and generalizable formulae. It is thus important to briefly reiterate the scope of the study, which is intended to (i) provide impulses and directions toward future investigations by researchers in the relevant domains and (ii) inform practitioners in view of a careful, informed, pragmatic and differentiated application of IEQ-related weighting schemes in literature and standards. We have already alluded to the limitations of the study, which was focused on a single building type and relied on an anonymous survey. Rather than discussing the same limitations again, it is more productive to address, in the following, some deeper challenges that have the potential to inform future research undertakings in this important area of inquiry.

Even before starting to contemplate the ranking preferences of participants in the survey, it is important to validate the coverage and robustness of the criteria offered for ranking. As alluded to before, in the present case, this was based on the results of a preliminary workshop deliberation, which cannot be suggested to have produced a representative and validated list of domains and sub-categories. Post-survey observations indeed imply that certain formulations may have been overtly suggestive (e.g. ‘unpleasant odours’ in the air quality domain), or predictably less relevant (e.g. ‘summer draft’ in the thermal quality domain), or confusable (e.g. ‘speech noise’ and ‘inside noise’ in the acoustic quality domain).

Furthermore, there is arguably not a unique list of domains and items suitable for all kinds of pragmatic IEQ-related queries. Future studies need to adopt a fit-for-purpose approach to the identification of distinct sets of criteria that would cover the salient aspects of the evaluation intent. For instance, IEQ-related evaluation exercises may be conducted in a rather narrow sense (for instance, by focusing on occupants’ perception of thermal, visual or acoustic conditions), or cover further, ontologically different but operationally related, factors (e.g. energy considerations, ecological concerns and investment requirements). In our survey, the infrastructure/amenities category had an ontologically different standing than the other four domains, which may be of more immediate relevance to building occupants’ perceptual response to indoor-environmental conditions. However, the composition of survey items needs to be more systematic and transparent in terms of the underlying reasoning. Ultimately, as with the construct validation requirement in the design of experimental studies in psychology and sociology, the consistency and comprehensibility of survey items must also undergo a prior testing step (using, perhaps, smaller control groups), before actual large-scale distribution among survey participants.

Notwithstanding these reservations, it seems reasonable to highlight a twofold conclusion concerning multi-dimensional evaluation schemes that involve assignment of points and weights to various presumed constituents of IEQ and may proceed further to aggregate those in terms of a single pragmatic quality measure. First, such schemes are potentially useful and can serve multiple applications. It is true that they involve simplifications and reductionist moments, but they may also compensate for those in part via the kind of practical efficiency and high-level orientation aid they can offer. Second, to ensure consistency, scalability and pervasive applicability, one needs to improve the transparency of the processes through which such schemes and their constitutive ingredients are arrived at. The experience with the illustrative survey-based exercise presented in this contribution was meant to outline a path that, if taken diligently in the course of further investigations, can lead to such an improvement in the future.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Alais, D., Newell, F. N., & Mamassian, P. (2010). Multisensory processing in review: From physiology to behaviour. Seeing and Perceiving, 23(1), 3–38. https://doi.org/10.1163/187847510X488603

- BREEAM. (2023). BREEAM UK New Construction Version 6 - Technical Manual SD5079. Building Research Establishment (BRE).

- Building Research Establishment. (2023, March). Guide to design for performance: NABERS UK - Version 1.0.

- Chinazzo, G., Andersen, R. K., Azar, E., Barthelmes, V. M., Becchio, C., Belussi, L., Berger, C., Carlucci, S., Corgnati, S. P., Crosby, S., Danza, L., de Castro, L., Favero, M., Gauthier, S., Hellwig, R. T., Jin, Q., Kim, J., Sarey Khanie, M., Khovalyg, D., … Wei, S. (2022). Quality criteria for multi-domain studies in the indoor environment: Critical review towards research guidelines and recommendations. Building and Environment, 226, Article 109719. https://doi.org/10.1016/j.buildenv.2022.109719

- Cobern, W. W., & Adams, B. (2020). Establishing survey validity: A practical guide. International Journal of Assessment Tools in Education, 7(3), 404–419. https://doi.org/10.21449/ijate.781366

- Danza, L., Barozzi, B., Bellazzi, A., Belussi, L., Devitofrancesco, A., Ghellere, M., Salamone, F., Scamoni, F., & Scrosati, C. (2020). A weighting procedure to analyse the indoor environmental quality of a zero-energy building. Building and Environment, 183, Article 107155. https://doi.org/10.1016/j.buildenv.2020.107155

- DGNB System. (2020). New construction, buildings criteria set version 2020 international.

- Fathi, A. S., & O’Brien, W. (2023, June 11–14). Impact of different indoor environmental weighting schemes on office architectural design decisions in different climates. In M. Schweiker, C. van Treeck, D. Müller, J. Fels, T. Kraus, & H. Pallubinsky (Eds.), Proceedings of Healthy Buildings 2023 Europe, Aachen, Germany.

- Frontczak, M., Andersen, R. V., & Wargocki, P. (2012). Questionnaire survey on factors influencing comfort with indoor environmental quality in Danish housing. Building and Environment, 50, 56–64. https://doi.org/10.1016/j.buildenv.2011.10.012

- Frontczak, M., Schiavon, S., Goins, J., Arens, E., Zhang, H., & Wargocki, P. (2012). Quantitative relationships between occupant satisfaction and satisfaction aspects of indoor environmental quality and building design. Indoor Air, 22(2), 119–131. https://doi.org/10.1111/j.1600-0668.2011.00745.x

- Heinzerling, D., Schiavon, S., Webster, T., & Arens, E. (2013). Indoor environmental quality assessment models: A literature review and a proposed weighting and classification scheme. Building and Environment, 70, 210–222. https://doi.org/10.1016/j.buildenv.2013.08.027

- Hellwig, R. T. (2017, July 3–5). Perceived importance of indoor environmental factors in different contexts. Proceedings of PLEA 2017 – Design to Thrive, Edinburgh, UK.

- IBPSA. (2023). International building performance simulation association. http://www.ibpsa.org

- ISIAQ. (2023). International society of indoor air quality and climate. https://www.isiaq.org/

- LimeSurvey GmbH. (2016). LimeSurvey: An open source survey tool (Version 2.06 + Build 160129). LimeSurvey GmbH. http://www.limesurvey.org

- Mahdavi, A., Berger, C., Bochukova, V., Bourikas, L., Hellwig, R. T., Jin, Q., Pisello, A. L., & Schweiker, M. (2020). Necessary conditions for multi-domain indoor environmental quality standards. Sustainability, 12(20), Article 8439. https://doi.org/10.3390/su12208439

- O’Brien, W., Wagner, A., Schweiker, M., Mahdavi, A., Day, J., Kjærgaard, M. B., Carlucci, S., Dong, B., Tahmasebi, F., Yan, D., Hong, T., Gunay, H. B., Nagy, Z., Miller, C., & Berger, C. (2020). Introducing IEA EBC annex 79: Key challenges and opportunities in the field of occupant-centric building design and operation. Building and Environment, 178, Article 106738. https://doi.org/10.1016/j.buildenv.2020.106738

- R Development Core Team. (2023). RStudio (4.3.1). https://www.r-project.org/

- Rohde, L., Larsen, T. S., Lund Jensen, R., Larson, O. K., Jønsson, K. T., & Loukou, E. (2020). Determining indoor environmental criteria weights through expert panels and surveys. Building Research & Information, 48(4), 415–428. https://doi.org/10.1080/09613218.2019.1655630

- Tang, H., Ding, Y., & Singer, B. (2020). Interactions and comprehensive effect of indoor environmental quality factors on occupant satisfaction. Building and Environment, 167, Article 106462. https://doi.org/10.1016/j.buildenv.2019.106462

- Torresin, S., Pernigotto, G., Cappelletti, F., & Gasparella, A. (2018). Combined effects of environmental factors on human perception and objective performance: A review of experimental laboratory works. Indoor Air, 28(4), 525–538. https://doi.org/10.1111/ina.12457

- Tran, M. T., Wei, W., Dassonville, C., Mandin, C., Derbez, M., Martinsons, C., Ducruet, P., Héquet, V., & Wargocki, P. (2023, October 4–5). Applicability and sensitivity of the TAIL rating scheme using data from the French national school survey. Proceedings of 43rd AIVC - 11th TightVent & 9th Venticool Conference 2023 - Ventilation, IEQ, and Health in Sustainable Buildings, Copenhagen, Denmark.

- Vannette, D. L., & Krosnick, J. A. (2018). The Palgrave handbook of survey research. Palgrave Macmillan.

- Wei, W., Wargocki, P., Zirngibl, J., Bendžalová, J., & Mandin, C. (2020). Review of parameters used to assess the quality of the indoor environment in green building certification schemes for offices and hotels. Energy and Buildings, 209, Article 109683. https://doi.org/10.1016/j.enbuild.2019.109683

- Zalejska-Jonsson, A., & Wilhelmsson, M. (2013). Impact of perceived indoor environment quality on overall satisfaction in Swedish dwellings. Building and Environment, 63, 134–144. https://doi.org/10.1016/j.buildenv.2013.02.005

- Zhao, Y., & Li, D. (2023). Multi-domain indoor environmental quality in buildings: A review of their interaction and combined effects on occupant satisfaction. Building and Environment, 228, Article 109844. https://doi.org/10.1016/j.buildenv.2022.109844