Abstract

Background: There are significant challenges across the research pathway, including participant recruitment. This paper aims to explore the impact of clinician recruitment decision-making on sampling for a national mental health survey.

Method: Clinical teams in 20 English mental healthcare provider organisations screened caseload lists, opting out people who, in their judgement, should not be approached to participate in a survey about stigma and discrimination. The reasons for each individual opt-out were requested. We assessed these reasons against study recruitment criteria and investigated the impact of variations in opt-out rates on response rates and study findings.

Results: Over 4 years (2009–2012), 37% (28,592 people) of the total eligible sampling frame were excluded. Exclusions comprised three categories: clinical teams did not screen their lists within the recruitment period (12,392 people: 44%); protocol-specified exclusions (8364 people: 29%); and clinician opt-outs queried by the research team because other reasons were given (7836 people: 28%). Response rates were influenced by decision-making variations.

Conclusions: Large numbers of people were denied the opportunity to choose for themselves whether to participate or not in the Viewpoint Survey. The clinical research community, and their employing organisations, require support to better understand the value of research and best practice for research recruitment.

Introduction

Global interest in mental health provision, and mental health research, is increasing as the recognised economic and social cost of mental health problems rises (Collins et al., 2011). There is acknowledgement among policy makers in England, for example, that raising the profile of research can improve health outcomes (Department of Health, 2006; NHS England, 2016); the Health and Social Care Act 2012 states all National Health Service (NHS) organisations have an obligation to recognise and promote the value of research.

In 2005, the mental health research system in England was reviewed and weaknesses were identified in relation to research leadership, financing, capacity and infrastructure, producing quality studies and knowledge transfer (Clark & Chilvers, 2005). The establishment of the Mental Health Research Network in 2004 and the National Institute for Health Research (NIHR) in 2006 is now having an impact in each of these areas. The network has contributed to increased trial sample sizes, successful target recruitment and increased levels of public and patient involvement in studies (Ennis & Wykes, 2013). However, levels of mental health research funding remain comparatively low (Murray et al., 2013).

Low investment in mental health research is one challenge for the sector, but unless the practical barriers to carrying out research are addressed, increased resources will not deliver better quality studies or improved service user outcomes. Addressing inefficiencies in the system of recruitment to research is an ethical imperative because much research relies on public funds, which should be used efficiently. Blockages in the research pathway include system bureaucracy (Leeson & Tyrer, 2013), complex ethics committee processes (Gould et al., 2004) and difficulties for clinicians, service users and carers in understanding the value of participation in trials (Adams, 2013). Recruitment difficulties can raise study costs and, through selection bias, create scientific challenges.

Research has identified a wide range of factors influencing recruitment in mental health randomised controlled trials (RCTs) (Borschmann et al., 2014). The reasons for low recruitment to target within a supported employment trial were identified as: misconceptions about trial design; lack of equipoise; misunderstanding of the trial aims; variable interpretations of eligibility criteria; and paternalism (Howard et al., 2009). Lack of equipoise included views expressed by recruiters, in this case care coordinators and research staff, that the intervention arm was better, as the control arm was not an equivalent form of support, and that the topic should not be the subject of an RCT; these views affected their attitudes and approach to recruitment. Paternalism in research recruitment, when clinicians prioritise their own views on whether their client should take part without giving people the opportunity to choose for themselves, has been found in several studies exploring clinicians’ and researchers’ views (Borschmann et al., 2014; Mairs et al., 2012). One qualitative study exploring barriers to general practitioners (GPs) recruiting people with depression into trials found that GPs wanted to protect vulnerable service users, an example of paternalism; they also felt they lacked the skills to introduce research requests within consultations, and gave priority to clinical and administrative duties (Mason et al., 2007). As a result, although people were encouraged to have active involvement in treatment decision-making, this was denied in terms of access to research participation. This study highlighted the importance of both system-level barriers, including capacity constraints, and individual clinician factors such as attitudes or skills.

People with mental health problems are aware of this problem, as shown by a focus group study with day centre service users in Scotland, who identified concerns that psychiatrists screened access to research invitations rather than people who knew them better, such as family or staff running the day centre (Ulivi et al., 2009).

Such studies acknowledge that efforts to improve the quality of mental health trials have to address filtering by clinicians, including cultural values and staff attitudes towards research (Borschmann et al., 2014; Patterson et al., 2010). Most research assessing participant recruitment to date has only considered RCT study designs (Fletcher et al., 2012). In this paper, we explore clinicians’ decisions concerning recruitment to an annual research survey between 2009 and 2012. Using screening data collected from clinicians in recruitment gate-keeping roles, we explore variations in decision-making across 20 mental health service provider organisations in England and consider the impact on the survey findings.

Methods

This paper draws on data collected in the Viewpoint Survey (Henderson & Thornicroft, 2009), which aimed to recruit 1000 mental health service users each year from across five NHS mental health trusts (organisations delivering secondary care mental health services in England). Currently, there are 56 NHS mental health trusts in England; data in this paper are drawn from 20 trusts. Participants in the survey took part in a telephone interview about their experiences of stigma and discrimination using the DISC survey tool (Thornicroft et al., 2009). The Viewpoint Survey methods have been reported in detail elsewhere (Corker et al., 2013; Hamilton et al., 2011; Henderson et al., 2012; Henderson & Thornicroft, 2009). The survey ran from 2008 to 2014 and we collected data on reasons for clinical staff “opting-out” service users from 2009 to 2012. The study received approval from Riverside NHS Ethics Committee 07/H0706/72.

Participants were sampled randomly from NHS organisation databases by non-clinical staff in Patient Records departments to give between 3150 and 4500 persons receiving specialist mental health care. The number varied by site because of variations in the size of the organisations. Invitation packs were distributed until sufficient numbers of consent forms were received each year; approximately 1200 consents were needed to achieve 1000 interviews allowing for drop-out. Lead clinicians were asked to review recruitment lists (the names of service users meeting eligibility criteria after an electronic database search) and, where people were to be opted out based on their clinical view, to provide a brief reason for this decision. Study inclusion criteria were:

- a diagnosis of mental health problems (excluding dementia);

- contact with a participating trust’s services in the 6 months prior to sampling;

- aged between 18 and 65 years old;

- living in the community.

Study exclusion criteria were:

- service users in prison or hospital, because of practical concerns about taking part in a confidential telephone survey in these settings.
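The criteria above can be read as a single eligibility check applied to each record before clinician review. The following is a minimal sketch only: the record fields, the inclusive age bounds and the setting labels are assumptions made for illustration, since the paper does not describe how the trust databases were structured or queried.

```python
from dataclasses import dataclass


@dataclass
class ServiceUser:
    # All field names are hypothetical; the paper does not describe the record layout.
    has_mental_health_diagnosis: bool
    diagnosis_is_dementia: bool
    months_since_last_contact: float
    age: int
    setting: str  # assumed labels: "community", "hospital" or "prison"


def meets_protocol_criteria(user: ServiceUser) -> bool:
    """Return True when every inclusion criterion holds and no exclusion applies."""
    included = (
        user.has_mental_health_diagnosis
        and not user.diagnosis_is_dementia
        and user.months_since_last_contact <= 6   # contact in the 6 months before sampling
        and 18 <= user.age <= 65                  # assumed inclusive at both ends
        and user.setting == "community"
    )
    # Exclusion criterion: people in prison or hospital are not approached.
    excluded = user.setting in {"prison", "hospital"}
    return included and not excluded


example = ServiceUser(True, False, 3, 40, "community")
print(meets_protocol_criteria(example))
```

Under this reading, any opt-out reason that cannot be expressed through these fields would fall outside the protocol and so would be a candidate for querying.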

Clinician review is an important part of the recruitment process ensuring a research team does not approach ineligible people or those viewed on medical grounds to be too unwell to take part. Each recruitment review list was collated centrally by the participating site, and opt-out reasons for each service user provided in anonymised format. Three members of the research team were involved in coding and analysing these data. The process was as follows:

- Opt-out data from 2009 to 2012 were collated by one researcher using Excel spreadsheets.

- Each of the three researchers was given one year of data (2009, 2010 or 2011) to code; each produced a draft coding framework and applied it to their allocated data set.

- The three coding frameworks were compared and discussed by the team and a second coding framework was produced. Each person applied the second draft framework to their original data and to the 2012 data set.

- The framework was once again reviewed and changes were applied to produce a final coding framework containing 26 codes.

- One team member applied the final coding framework to the data across all 4 years.

- The two other team members took a sample of codes and checked for accuracy and consistency. All responses where coding queries were identified were brought to a team discussion and final decisions were made collectively.
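The final checking step can be illustrated with a short sketch. It assumes coded responses are held as a mapping from a response identifier to a code label; the identifiers, the labels and the sampling approach are hypothetical, and the paper does not state how the checking sample was drawn or how large it was.

```python
import random


def sample_for_checking(coded, sample_size, seed=0):
    """Draw a reproducible random sample of response ids for a second coder to review."""
    rng = random.Random(seed)
    ids = sorted(coded)
    return rng.sample(ids, min(sample_size, len(ids)))


def find_disagreements(primary, checker):
    """Return the ids where the checker's code differs from the primary coder's code."""
    return sorted(rid for rid, code in checker.items() if primary.get(rid) != code)


# Hypothetical coded responses (not data from the Viewpoint Survey).
primary_codes = {
    "r1": "in hospital",
    "r2": "no address",
    "r3": "too unwell",
    "r4": "deceased",
}
to_check = sample_for_checking(primary_codes, sample_size=2)

# Codes a second team member might assign to two of the responses.
checker_codes = {"r1": "in hospital", "r3": "no reason given"}
queries = find_disagreements(primary_codes, checker_codes)
print(queries)  # ids to bring to the team discussion
```

The sketch only flags differences; resolving them remains a matter for team discussion, as described above.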

Data were analysed using SPSS 20.0 to explore the impact of opt-out on response rates and study findings. Two hypotheses were proposed, both exploratory, based upon research team discussions:

If clinicians appropriately excluded vulnerable participants, based on medical grounds, higher response rates would be expected because clinicians would have filtered out those service users who were least likely to engage, as research shows that patients’ state of health is a key factor affecting recruitment to trials (Hughes-Morley et al., 2015);

The rate of clinician opt-out (protocol-specified or queried) would correlate with mental health discrimination scores, though direction was not predicted.

Results

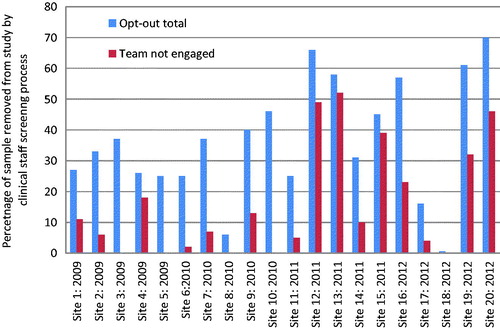

Across 4 years of data collection, 76,683 service users were identified through organisation database searches according to study recruitment criteria. Between 2009 and 2012, 48,091 people (63%) were opted-in by clinicians to receive an invitation pack to consider giving written, informed consent to participate in the study (12,878 in 2009; 13,746 in 2010; 10,886 in 2011; and 10,581 in 2012). A total of 28,592 people were excluded from data collection by clinicians (5262 in 2009; 6423 in 2010; 8776 in 2011; and 8131 in 2012), accounting for 37% of the total potential participant sample. Clinician opt-out rates varied between sites. Two sites screened out very few people: only 19 people or 0.6% (site 18: 2012) and 219 people or 6% (site 8: 2010) were excluded through clinician opt-out decisions.
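The headline proportions follow directly from the yearly counts reported above. The sketch below reproduces that arithmetic; the counts are taken from the text, while the variable and function names are illustrative only.

```python
# Yearly counts as reported in the text.
opted_in = {2009: 12878, 2010: 13746, 2011: 10886, 2012: 10581}
opted_out = {2009: 5262, 2010: 6423, 2011: 8776, 2012: 8131}


def opt_out_rate(n_out, n_in):
    """Proportion of the screened sampling frame that was excluded."""
    return n_out / (n_out + n_in)


total_in = sum(opted_in.values())
total_out = sum(opted_out.values())
total_frame = total_in + total_out

overall_rate = opt_out_rate(total_out, total_in)
yearly_rates = {year: opt_out_rate(opted_out[year], opted_in[year]) for year in opted_in}

print(total_frame)             # 76683 people in the sampling frame
print(f"{overall_rate:.0%}")   # 37% excluded overall
for year, rate in yearly_rates.items():
    print(year, f"{rate:.0%}")
```

The same calculation gives the 29% (2009) and 43% (2012) yearly opt-out rates referred to in the Discussion.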

Clinician reasons for exclusion

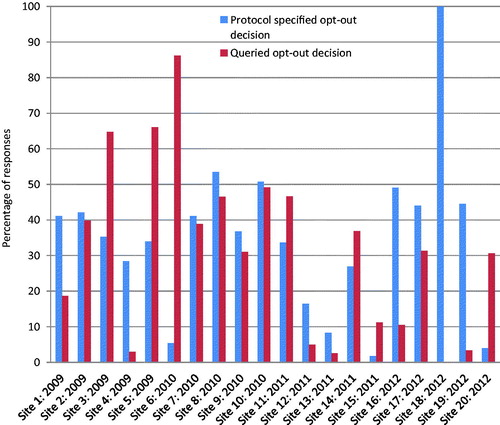

Three categories of clinician opt-outs were created: (i) where the whole clinical team did not engage in the screening process at all, and so the entire list of people was excluded (non-engagement); (ii) where clinicians provided opt-out reasons following study eligibility criteria (protocol-specified exclusions); and (iii) where clinicians provided opt-out reasons falling outside the study eligibility criteria (opt-outs queried by the research team). Non-engagement in screening was the most common reason for exclusion, accounting for 16% of the total sampling frame over 4 years (n = 12,392) and 44% of the opt-out group. This occurred in 15 out of 20 sites, with the proportion increasing over time (ANOVA, F = 3.243, p = 0.05); non-engagement was highest in 2011, accounting for 68% of exclusions.

The reasons clinicians provided for opting out participants are shown in Tables 1 and 2, distinguishing protocol-specified reasons (29% of total opt-outs) from those which the research team queried as potentially non-legitimate reasons (28% of total opt-outs). Queried decisions include system-level problems such as not having an address for a service user on the caseload (3%), or members of the care team being asked to provide judgement on service users of whom they have little or no clinical knowledge (4%). They also include value judgements by clinicians which prevent service users from making informed decisions to participate in research themselves (2%). Variation in opt-out decisions was found by site, comparing protocol-specified decisions with others. In one site, all opt-out decisions were rated as protocol-specified by the research team (site 18); in another, only 2% were (site 12).

Table 1. Protocol-specified clinical opt-out decisions for excluding participants from Viewpoint Survey.

Table 2. Clinical opt-out decisions for excluding participants queried by research team.

Impact on response rates and results

The impact of opt-out variations on research findings was explored. Overall study response rates were low year on year: 7.6% in 2009 and 2010; 11% in 2011; and 10% in 2012. Voucher payments of £10 were included in 2010, which may account for the increase achieved. It was hypothesised that if clinicians appropriately excluded vulnerable participants, higher response rates would be expected from the remaining sample, as they would have filtered out those service users who were least likely to engage with the research on medical grounds, although there are also other barriers to participation. We found a positive correlation between site-level response rate and the total percentage opted out of the survey (Pearson’s correlation: 0.472, p = 0.036). Where trusts sent out fewer invitation packs, higher response rates were achieved (Pearson’s correlation: −0.609, p = 0.004). However, this was largely accounted for by teams not engaging rather than by the screening process of individual clinicians, for which no correlation was found. There was a positive correlation between the proportion of opt-outs resulting from teams not engaging and site-level response rate (Pearson’s correlation: 0.570, p = 0.009).
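For readers less familiar with the statistic, the sketch below shows how a site-level Pearson correlation of this kind is computed. The analysis in this paper was run in SPSS; the function and the five site values here are hypothetical and are not the Viewpoint Survey data, so the resulting coefficient has no bearing on the figures reported above.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical site-level values for illustration only.
pct_opted_out = [5.0, 20.0, 35.0, 50.0, 65.0]   # % of each site's list opted out
response_rate = [6.0, 8.0, 7.5, 11.0, 12.0]     # % of invited people who responded

r = pearson_r(pct_opted_out, response_rate)
print(f"r = {r:.3f}")
```

A positive coefficient means that sites excluding a larger share of their list tended to have higher response rates among those invited; it does not by itself show that screening caused the difference.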

Clinician opt-out decisions were explored to consider whether they contributed to a bias in the survey sample that might affect the overall study findings in relation to mental health discrimination scores. If such a bias was introduced, different levels of mental health discrimination in areas such as finding and keeping work or relationships with family members, would be expected to be reported in those sites with smaller proportions opted out, compared with those with more opted-out. The hypothesis was that the rate of clinician opt-out (protocol-specified or queried) would correlate with Viewpoint Survey discrimination scores, though direction was not predicted. No significant correlations were found.

Discussion

We found variations in how mental health trusts screened patients for recruitment to a national mental health survey. The data showed high rates of opt-out across the total sample after database screening; 37% of potential participants were excluded by the clinician recruitment screening process. The process began with team (non) engagement in recruitment screening, influenced in part by system issues, and ended with individual clinician decision-making, service user by service user.

Clear variations in opt-out rates by site were found, from 0.6% of the sample excluded in one site to 66% in another, and rates increased over time from 29% in 2009 to 43% in 2012. The quality of organisations’ recordkeeping practices may be a factor in this variation, affecting the accuracy of service user records including current address, lead clinician and even whether the person is still alive. Where records are inaccurate, teams may be compensating by removing people who should not have been included in the initial sample. Each site recruited from a variety of community mental health teams, so varying levels of illness severity should not have been a key factor in explaining the differences. Those sites where few people were excluded may have been motivated by a belief that service users should be allowed to make the decision for themselves, or the low exclusion rate may reflect reluctance to spend time on more detailed screening.

These data show that team reluctance to engage in the screening process was widespread, occurring in 15 out of 20 sites. In half the sites, non-engagement accounted for over 30% of opt-out decisions. There was a trend over time for less clinical team engagement in the Viewpoint Survey. It is possible that there were specific reasons for non-engagement that relate to organisation and system factors rather than individual clinician decision-making. In at least one site, ongoing restructures and the threat of redundancy for some clinicians were stated reasons for low engagement by care teams. Elsewhere, pressures on service capacity, including high caseloads, may have resulted in reluctance to devote time to supporting Viewpoint Survey research recruitment.

The data indicate a need to improve the accuracy of clinician recruitment screening, because response rates were affected. We found that where trusts sent out fewer invitation packs and the percentage of service users opted in was lower, a higher site response rate was achieved. More accurate screening may improve participant response rates overall, though more research is needed to understand both system and individual clinician roles in recruitment screening. In this study, teams not engaging improved response rates, suggesting group decisions on suitability to take part might be justified. Clinician screening is important because it acts as a double-check on eligibility criteria. In the study invitation sent to potential participants, the eligibility criteria are specified. People who do not meet the criteria but who were not filtered out by the clinician screening may filter themselves out through non-response.

The findings do not suggest that higher rates of exclusion by clinicians introduced a bias to the overall survey findings, at least in relation to experiences of mental health discrimination. However, the practice of excluding people for reasons other than those in the research protocol has implications for the accuracy of reporting research and replicability of findings through obscuring selection criteria.

Clinician screening as part of research recruitment has to strike a balance between competing interests of clinicians, service users and researchers. On the one hand, clinicians have a duty of care to the individual, including to protect the therapeutic relationship. On the other hand, by removing people from a study prior to invitations being sent, recruitment gatekeepers potentially deny people the right to choose to tell their story and could affect the validity of study findings. The Viewpoint Survey analysis suggests that this balance may not be appropriately struck in many cases, either because teams’ lack of engagement denies people the right to participate, or because clinicians’ expectations of people’s preferences might be ill-founded. In sites that screen few people out of the study, vulnerable people may be receiving invitation packs inappropriately, causing them personal distress or harm, though in our study this was not reflected in an increased number of complaints or concerns expressed by those receiving an invitation.

Other studies have shown that people living with severe mental health problems have an interest in research and setting research priorities (Robotham et al., 2016; Rose et al., 2008), have capacity to understand the complexities of the consent process and what is being asked of them (Roberts et al., 2006), and have opinions on research quality in RCTs (Xia et al., 2009). In busy mental health services there is little capacity for practitioners to discuss research with clients, helping them to understand information sheets and weigh up whether they want to consent to join a study. In some instances, clinicians do not know the client sufficiently well to make an informed judgement about non-maleficence and beneficence, key principles in medical bio-ethics (Beauchamp & Childress, 2001). Research shows that gatekeepers having protected time to undertake research activity enhances access to potential participants (Borschmann et al., 2014).

Study limitations

There are limitations within this analysis. The Viewpoint Survey did not carry out a qualitative project to understand clinician decision-making. The data presented are routine recruitment screening information and therefore the quality of the reasons given depends on the clinician’s engagement. In some cases, clinicians responded simply “no” or “not suitable” without providing a specific explanation. The survey itself had a low response rate among those who were sent an invitation to participate. This may obscure findings from our analyses assessing impact of opt-out rates on study findings, if the study sample itself is not representative of the population.

Future research

Designing studies that look at barriers to research participation is essential for improving the quality of mental health research. In the future, studies could explore with clinicians directly their decision-making process when screening caseload lists for studies, assessing whether the subject of study, type of research and proposed methodology, team factors such as workload, and their own research interests affect opt-out decisions, and identifying solutions for dealing with variations. It would also be valuable to explore with service users their decision-making over research engagement, including both those who consent to take part and those who decline.

Conclusions

This study adds to current literature on research recruitment, by focusing on a non-trial case study to explore clinician decision-making, where most literature to date in this field has only considered trial recruitment (Treweek et al., 2013). Variation in clinician decision-making can influence the quality of research, including response rates and accurate reporting. Lack of engagement and inappropriate exclusions are also denying large numbers of mental health service users the opportunity to decide whether to participate in a study on an issue of central importance to many. A research-active clinical community, supported by relevant organisations, is essential for high quality research and cannot be achieved without training for clinicians in the value of research and best practice research recruitment that goes beyond guidelines (see, for example, General Medical Council, 2013).

This is an important issue, both to enhance the quality of research and health outcomes, and to protect the rights of people to make their own decisions about research participation. One way in which this may be done is through a prior “consent for contact” system, where preferences to engage with mental health research are registered in advance. There is limited information available about such systems, but pilot programmes are emerging, such as those at the NIHR Biomedical Research Centre, King’s College London (Papoulias et al., 2014).

Declaration of interest

No potential conflict of interest was reported by the authors.

References

- Adams C. (2013). Many more reasons behind difficulties in recruiting patients to randomized controlled trials in psychiatry. Epidemiol Psychiatr Sci, 22, 321–3

- Beauchamp TL, Childress JF. (2001). Principles of biomedical ethics. Oxford: Oxford University Press

- Borschmann R, Patterson S, Poovendran D, et al. (2014). Influences on recruitment to randomised controlled trials in mental health settings in England: A national cross-sectional survey of researchers working for the Mental Health Research Network. BMC Med Res Methodol, 14, 23

- Clark M, Chilvers C. (2005). Mental health research system in England: Yesterday, today and tomorrow. Psychiatr Bull, 29, 441–5

- Collins PY, Patel V, Joestl SS, et al. (2011). Grand challenges in global mental health. Nature, 475, 27–30

- Corker E, Hamilton S, Henderson C, et al. (2013). Experiences of discrimination among people using mental health services in England 2008–2011. Br J Psychiatry, 202, s58–63

- Department of Health. (2006). Best research for best health: A new national health research strategy. London: HMSO

- Ennis L, Wykes T. (2013). Impact of patient involvement in mental health research: Longitudinal study. Br J Psychiatry, 203, 381–6

- Fletcher B, Gheorghe A, Moore D, et al. (2012). Improving the recruitment activity of clinicians in randomised controlled trials: A systematic review. BMJ Open, 2, e000496. doi: 10.1136/bmjopen-2011-000496

- General Medical Council. (2013). Good practice in research and consent to research. Available from: http://www.gmc-uk.org/Good_practice_in_research_and_consent_to_research.pdf_58834843.pdf [last accessed 4 Nov 2017: http://www.gmc-uk.org/guidance/]

- Gould S, Bowker C, Roberts A. (2004). Comparison of requirements of research ethics committees in 11 European countries for a non-invasive interventional study. BMJ, 328, 140–1

- Hamilton S, Pinfold V, Rose D, et al. (2011). The effect of disclosure of mental illness by interviewers on reports of discrimination experienced by service users: A randomized study. Int Rev Psychiatry, 23, 47–54

- Henderson C, Corker E, Lewis-Holmes E, et al. (2012). England’s time to change antistigma campaign: One-year outcomes of service user-rated experiences of discrimination. Psychiatric Serv, 63, 451–7

- Henderson C, Thornicroft G. (2009). Stigma and discrimination in mental illness: Time to change. Lancet, 373, 1928–30

- Howard L, de Salis I, Tomlin Z, et al. (2009). Why is recruitment to trials difficult? An investigation into recruitment difficulties in an RCT of supported employment in patients with severe mental illness. Contemp Clin Trials, 30, 40–6

- Hughes-Morley A, Young B, Waheed W, et al. (2015). Factors affecting recruitment into depression trials: Systematic review, meta-synthesis and conceptual framework. J Affect Disord, 172, 274–90

- Leeson VC, Tyrer P. (2013). The advance of research governance in psychiatry: One step forward, two steps back. Epidemiol Psychiatr Sci, 22, 313–20

- Mairs H, Lovell K, Keeley P. (2012). Clinician views of referring people with negative symptoms to outcome research: A questionnaire survey. Int J Ment Health Nurs, 21, 138–44

- Mason VL, Shaw A, Wiles NJ, et al. (2007). GPs’ experiences of primary care mental health research: A qualitative study of the barriers to recruitment. Fam Pract, 24, 518–25

- Murray CJ, Richards MA, Newton JN, et al. (2013). UK health performance: Findings of the Global Burden of Disease Study 2010. Lancet, 381, 997–1020

- NHS England. (2016). Five year forward view for mental health for the NHS in England. A report from the independent mental health taskforce for NHS England. Available from: https://www.england.nhs.uk/wp-content/uploads/2016/02/Mental-Health-Taskforce-FYFV-final.pdf [last accessed 12 Aug 2016]

- Papoulias C, Robotham D, Drake G, et al. (2014). Staff and service users’ views on a ‘Consent for Contact’ research register within psychosis services: A qualitative study. BMC Psychiatry, 14, 377

- Patterson S, Kramo K, Soteriou T, Crawford MJ. (2010). The great divide: A qualitative investigation of factors influencing researcher access to potential randomised controlled trial participants in mental health settings. J Ment Health, 19, 532–41

- Roberts LW, Warner TD, Hammond KG, Hoop JG. (2006). Views of people with schizophrenia regarding aspects of research: Study size and funding sources. Schizophr Bull, 32, 107–15

- Robotham D, Wykes T, Rose D, et al. (2016). Service user and carer priorities in a Biomedical Research Centre for mental health. J Ment Health, 25, 185–8

- Rose D, Fleischman P, Wykes T. (2008). What are mental health service users’ priorities for research in the UK? J Ment Health, 17, 520–30

- Thornicroft G, Brohan E, Rose D, et al. (2009). Global pattern of experienced and anticipated discrimination against people with schizophrenia: A cross-sectional survey. Lancet, 373, 408–15

- Treweek S, Lockhart P, Pitkethly M, et al. (2013). Methods to improve recruitment to randomised controlled trials: Cochrane systematic review and meta-analysis. BMJ Open, 3, e002360. doi:10.1136/bmjopen-2012-002360

- Ulivi G, Reilly J, Atkinson JM. (2009). Protection or empowerment: Mental health service users’ views on access and consent for non-therapeutic research. J Ment Health, 18, 161–8

- Xia J, Adams C, Bhagat N, et al. (2009). Losing participants before the trial ends erodes credibility of findings. Psychiatr Bull, 33, 254–7