Abstract

Purpose

To describe the development and determine the diagnostic accuracy of the Brisbane Evidence-Based Language Test in detecting aphasia.

Methods

Consecutive acute stroke admissions (n = 100; mean age = 66.49 y) participated in a single (assessor) blinded cross-sectional study. The index assessment was the ∼45 min Brisbane Evidence-Based Language Test, which is further divided into four 15–25 min Short Tests: two Foundation Tests (severe impairment), the Standard Test (moderate) and the High Level Test (mild). The independent reference standard included the Language Screening Test, Aphasia Screening Test, Comprehensive Aphasia Test and/or Measure for Cognitive-Linguistic Abilities, the treating team’s diagnosis and aphasia referral after ward discharge.

Results

Brisbane Evidence-Based Language Test cut-off score of ≤157 demonstrated 80.8% (LR+ =10.9) sensitivity and 92.6% (LR− =0.21) specificity. All Short Tests reported specificities of ≥92.6%. Foundation Tests I (cut-off ≤61) and II (cut-off ≤51) reported lower sensitivity (≥57.5%) given their focus on severe conditions. The Standard (cut-off ≤90) and High Level Test (cut-off ≤78) reported sensitivities of ≥72.6%.

Conclusion

The Brisbane Evidence-Based Language Test is a sensitive assessment of aphasia. Of the Short Tests, the High Level Test recorded the highest diagnostic accuracy, equivalent to that of the full Brisbane Evidence-Based Language Test. The test is available for download from brisbanetest.org.

Implications for rehabilitation

Aphasia is a debilitating condition and accurate identification of language disorders is important in healthcare.

Language assessment is complex and the accuracy of assessment procedures is dependent upon a variety of factors.

The Brisbane Evidence-Based Language Test is a new evidence-based language test specifically designed to adapt to varying patient need, clinical contexts and co-occurring conditions.

In this cross-sectional validation study, the Brisbane Evidence-Based Language Test was found to be a sensitive measure for identifying aphasia in stroke.

Introduction

Accurate identification of acquired language disorders (aphasia) is important in healthcare. Clinical assessment of language, however, is complex and multifaceted [Citation1,Citation2], and the accuracy of assessment procedures is dependent upon a number of factors. Performance on language tasks may be easily affected by other clinical elements that can spuriously influence the accuracy of test scores [Citation2,Citation3]. Co-occurring apraxia of speech or dysarthria may influence verbal language performance and lead to false positives on assessment tasks [Citation3]. Visual deficits such as hemianopia or neglect may interfere with the perception of written language stimuli or the naming of pictures [Citation3,Citation4], and motor limb impairments such as hemiparesis may affect writing legibility [Citation5]. Additional factors such as patient fatigue, medical instability and the pressured demands of different clinical contexts also require language testing to be time-efficient, user-friendly and adaptable to the varying needs of the testing environment [Citation2].

A wide range of language tests currently exist. Brief aphasia screening tests such as the Frenchay Aphasia Screening Test (FAST) [Citation6], Language Screening Test (LAST) [Citation7] and ScreeLing [Citation8] aim to provide a fast, efficient gauge of language functioning. These “non-specialist” screeners are intended for use by multiple professions and focus on efficiency and brevity in aphasia screening. Such tests, however, gauge functioning through a narrow range of language tasks, do not assess language ability across the severity spectrum from severe to mild, and at times omit assessment of core language domains such as reading and/or writing [Citation7]. As a result, deficits within any of these areas may be missed.

Comprehensive language tests such as the Western Aphasia Battery-Revised (WAB-R) [Citation3], Comprehensive Aphasia Test (CAT) [Citation9] and Boston Diagnostic Aphasia Examination (BDAE) [Citation10] provide a thorough evaluation of language across the domains. Such measures, however, take up to 1–2 h to administer and have been reported to be too cumbersome for use in certain settings (e.g., acute hospital sessions, which typically last ∼30 min) [Citation2,Citation11]. Other, more time-efficient speech pathology measures such as the Acute Aphasia Screening Protocol [Citation12] and WAB-R Bedside [Citation3] are widely used in practice. Surprisingly, however, systematic reviews of the literature have failed to find any published studies reporting these tests’ validation in stroke patients with and without aphasia [Citation13,Citation14].

The complexity of clinical language evaluations highlights the need for an evidence-based, adaptable, time-efficient yet comprehensive language test. The Brisbane Evidence-Based Language Test (Brisbane EBLT) is a new language test created to address this need. The test has been developed from Evidence-Based Principles (EBP) [Citation15] and is available in five test versions specifically developed to accurately assess language while adjusting to patient need, the clinical context and co-occurring conditions. The aim of this study is to report on the development and diagnostic validation of the Brisbane EBLT in identifying aphasia in stroke populations.

Materials and methods

Study design and participants

Diagnostic accuracy was examined in a single (assessor) blinded cross-sectional study of 100 consecutive acute stroke admissions at two large tertiary hospitals in Brisbane, Australia. A priori sample size calculations indicated that 98 patients were required to yield a 10% width of a two-sided 95% CI, assuming a conservative disease prevalence of 50% [Citation16] (the reported prevalence of aphasia in left-hemisphere brain damage is 55.1% [Citation17]). The project was granted ethics approval by university and health service committees and was designed to comply with the Standards for Reporting Diagnostic Accuracy (STARD) [Citation18]. STARD is an EQUATOR (Enhancing the QUAlity and Transparency Of health Research) network guideline setting out widely accepted criteria for the rigorous reporting of sample selection, study design and statistical analysis in diagnostic accuracy research [Citation18].
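As a rough check on the sample size reasoning above, the usual normal-approximation formula for estimating a proportion p to within ±d at 95% confidence is n = z²·p(1−p)/d². The sketch below assumes that the “10% width” refers to a ±10 percentage-point margin around an expected proportion of 50%. The authors’ exact method is not stated, and this approximation gives 97 rather than the reported 98, so it illustrates the order of magnitude only.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(p: float, margin: float, confidence: float = 0.95) -> int:
    """Normal-approximation sample size to estimate a proportion p within +/- margin.

    Assumption: the study's 'width' is read as a half-width (margin) around p.
    Illustrative sketch only, not the calculation reported in the study.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided critical value, ~1.96
    n = z ** 2 * p * (1 - p) / margin ** 2
    return math.ceil(n)  # round up to a whole patient


print(sample_size_for_proportion(0.5, 0.10))  # 97 under these assumptions
```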

Target condition

The target disorder was defined as impaired language functioning from severe to mild deficits within any language domain (verbal expression, comprehension, reading, writing, and gesture) resulting from ischaemic or haemorrhagic stroke.

Inclusion/exclusion criteria

All admitted stroke patients were screened for eligibility within 2 days of hospital admission. Patients admitted for stroke management were eligible if they were deemed sufficiently medically stable to undergo language assessment and met the following criteria: sustained level of consciousness for >10 min (cognitive functioning was assessed pragmatically, based on a patient’s ability to participate in, engage with and complete the required language tasks); no precluding acute medical condition, as determined by the treating medical team; age >14 years; native-level English language ability; and a confirmed stroke lesion site (as determined by the acute medical CT/MRI report) within the left frontal, parietal, temporal, occipital, limbic or insular lobes, basal ganglia (caudate nucleus, putamen, globus pallidus, substantia nigra, nucleus accumbens, and subthalamic nucleus), internal capsule, or thalamus (including thalamic nuclei). To optimise test external validity, common post-stroke conditions that affect communication but not language (affecting vision, hearing, speaking, or writing), such as hemianopia, hemiparesis, dysarthria or apraxia of speech, were not used as exclusion criteria. For these patients, the co-occurring conditions were noted, and language test items so severely affected by them as to be uninterpretable were recorded as missing data. Patients with subarachnoid haemorrhage or with lesions isolated to the right cerebral hemisphere, right subcortical regions, or the midbrain or below (as identified by the acute medical CT/MRI report) were excluded [Citation19].

Assessments

Brisbane EBLT test development

The Brisbane EBLT is a new language test conceived and created by the first author, Alexia Rohde. The first phase of test development involved constructing a large bank of stimulus items, including both language tasks (e.g., repetition, reading aloud, picture-word matching) and questions (e.g., picture naming: “what is this called?”). The aim of the item bank was to develop as many new tasks and questions as possible for the new assessment. The item bank was guided by the structure and content of existing informal language measures (n = 44), which were collected from clinicians via an email request sent through a professional speech pathology network. Based on these informal measures, similar new tasks and questions were created, which formed the basis of the new test. These items were created to assess language across all the domains of verbal expression, auditory comprehension, gesture, reading and writing. Linguistic factors such as word length, stimulus imageability and word frequency were all incorporated into the test development process. Tasks and questions were designed to assess language across the severity spectrum, from simple items (e.g., picture matching tasks) to complex high-level questions (e.g., synonyms: “what is one word that means the same as…?”). Each item bank task and question was categorised by language domain and difficulty level to ensure that every language area and severity level was assessed.

At this phase in the test development, the item bank included over 100 subtests and was far too lengthy for practical use. The next phase involved the shortening of the item bank to a workable test length (∼50 subtests). Tasks and questions which were ambiguous, too long, not practical, or difficult to score were eliminated to leave only the most effective, efficient and easy-to-score items within the final measure. During this phase each language task and question underwent a rigorous process of piloting, feedback, revision and review based on the four principles of EBP [Citation15]:

Clinician experience

A total of 108 clinicians provided feedback on test items during the development process. Speech pathologists provided feedback in a variety of settings: at a national conference, in focus groups and during one-to-one test administration sessions. Throughout this period the test was a dynamic document that underwent frequent revision and refinement: speech pathologists reviewed the assessment and provided feedback, changes were made, and the revised test then underwent further speech pathology review. The test became progressively shorter as duplicate/similar, ambiguous and difficult-to-score tasks and questions were excluded. This cycle was repeated until no new feedback on the document was obtained.

Clinical context

Piloting of the test in the acute hospital environment (n = 10 patients) led to the exclusion of test items that were too lengthy or cumbersome for acute use. Subtests were kept short (a maximum of 5–6 tasks/questions) so that the test moved quickly across assessment areas. In the final phase of test development, two hospital speech pathology departments piloted the test in their clinical caseloads (n = 17 clinicians) and gave further feedback on test items.

Patient perspectives

Directly following administration, feedback on the item bank was sought from stroke patients and/or family members (n = 74). Potential cultural bias was minimised by obtaining feedback from participants originating from the following English-speaking countries: USA, England, Australia, New Zealand, Scotland, Canada, and South Africa. Any test items with reported cultural bias (items unfamiliar to participants from any cultural background) were excluded. The majority of patients (n = 57) gave positive feedback or had no concerns about the new test (“I enjoyed it,” “Was what I expected it,” “No worries,” etc.). Three study participants or their family members gave feedback on specific tasks or questions, which was incorporated into the test development process. For example, the parent of one study participant reported that “Some task instructions were quite complex however the tasks were low. Like the ‘semantic’ ones’.” For simpler items, it was subsequently ensured that the administering clinician always completes an example first to demonstrate the type of response required, so verbal understanding of the instructions is not needed.

Some study participants reported that the test was “too long” (n = 5), “too simplistic” (n = 5) or “too difficult” (n = 4). To minimise patient fatigue, avoid exposing patients to test items that were too difficult or too easy, and replicate the typical length of existing informal measures, the Brisbane EBLT was subsequently also made available in four shorter versions (Short Tests). Clinician experience, clinical context and patient feedback were all instrumental in guiding the test development process. Only tasks and questions meeting these rigorous EBP standards were included in the final measure, the Brisbane EBLT.

Clinically relevant research

The final EBP principle, clinically relevant research, is the focus of this report, which describes the diagnostic analysis of the new test.

Brisbane EBLT

The above test development process resulted in the final measure, the Brisbane EBLT: the full version of the assessment, which evaluates language in 49 subtests (∼45 min) across the severity spectrum (severe to mild) in the following language domains (Table 1): verbal expression (including repetition, automatic speech, spontaneous speech (picture description) and naming), auditory comprehension, actions/gesture, reading, and writing. Certain subtests require two of each of the following everyday objects: cup, spoon, pen and knife. An additional “Perceptual” subtest examines abilities that do not require a verbal or written response (e.g., object-to-picture matching), allowing functioning to be assessed when both verbal and written responses are difficult (for example, when severe apraxia of speech limits verbal expression and dominant-hand hemiparesis limits written responses). Large print/pictures and the use of everyday objects as stimulus items help to accommodate visual co-occurring conditions (e.g., hemianopia). Short, concise writing tasks (for example, “Write ONE sentence which contains ALL of these words in THIS order” requires only a single written sentence) minimise upper limb motor fatigue associated with hemiparesis or non-dominant hand use. The 49 Brisbane EBLT subtests and a description of test items are listed in Table 1.

Table 1. Brisbane EBLT subtests.

Test administration and scoring

The Brisbane EBLT does not have a user’s manual. All information required to use and administer the test is contained on the test forms themselves and the Administration and Scoring Guidelines form (brisbanetest.org). Question-specific administration and scoring information is contained on the Brisbane EBLT test forms whereas overall scoring guidelines are provided on the Administration and Scoring Guidelines form. The same Administration and Scoring Guidelines apply to all test versions.

Test administration is standardised. Each question is administered by following the task instructions given above each question. Prompting (e.g., the provision of an initial phoneme or additional gestural cue) is not allowed. All tasks must be administered according to the set instructions. One repetition of task instructions is allowed without penalty for non-language related difficulties in interpreting task instructions (e.g., hearing impairment). This repetition has no influence on scoring (e.g., if the patient answers correctly on the second (louder) repetition, the response is still scored as correct).

Patient responses should be scored according to the first purposeful response within the target modality (e.g., the first purposeful attempt at a verbal response is scored; any prior unrelated vocalisations are not scored). The most common responses are provided on the test form. If an alternative yet correct response is given (other than those listed), as judged by the administering clinician, it is still scored as correct. Scores are not deducted for responses affected by motor-related output deficits (e.g., phonetic distortions due to dysarthria or reduced writing legibility due to hemiparesis), provided the target is still interpretable. If the response is not interpretable (e.g., illegible writing or unintelligible speech), the item is left blank with a brief note reporting the reason for the lack of score. Non-language-related self-corrections (e.g., attempts to neaten messy writing due to hemiparesis) are not penalised (the item is still scored as correct); however, if language-related self-corrections are evident (e.g., self-correcting phonemic or semantic paraphasias), the item is scored incorrect. All Brisbane EBLT test forms and the Administration and Scoring Guidelines are available from brisbanetest.org.
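The first-response scoring rules above can be summarised as a small decision function. This is a hypothetical sketch: the `Response` fields and the assumption that each item scores 1 or 0 are ours for illustration and are not taken from the Brisbane EBLT forms. Whether an unlisted answer counts as correct remains the administering clinician’s judgment, represented here by the `correct` flag.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Response:
    """Hypothetical record of the first purposeful response in the target modality."""
    interpretable: bool  # False for illegible writing or unintelligible speech
    correct: bool  # matches a listed answer or one the clinician judges correct
    language_self_correction: bool = False  # e.g., a corrected phonemic paraphasia


def score_item(response: Response) -> Optional[int]:
    """Return 1 (correct), 0 (incorrect) or None (left blank with a note).

    Motor-related distortions (dysarthria, hemiparesis) are not penalised as long
    as the target remains interpretable, and non-language self-corrections
    (e.g., neatening messy writing) need no special handling here.
    """
    if not response.interpretable:
        return None  # left blank; the reason is noted on the test form
    if response.language_self_correction:
        return 0  # language-related self-corrections are scored incorrect
    return 1 if response.correct else 0


print(score_item(Response(interpretable=True, correct=True)))  # 1
```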

Language reference standard

The reference standard diagnosis was based on five clinical factors, namely the results of a battery of published tests interpreted in the context of four additional clinical factors [Citation21]:

A composite language test score: all patients were first administered the Language Screening Test (LAST) [Citation7], a brief (2 min) “non-specialist” screening test that provided an initial gauge of language functioning and indicated which comprehensive language battery would be most appropriate. Patients were then administered one of three longer comprehensive batteries: the Aphasia Screening Test (AST) [Citation22] (LAST scores ≤5/15), the Comprehensive Aphasia Test (CAT) [Citation9] (LAST scores of 5–15), or the Measure for Cognitive-Linguistic Abilities (MCLA) [Citation23] (LAST scores ≥10);

The treating clinician’s documented diagnosis;

The multidisciplinary report;

The medical team report; and

The presence of a referral for language services post-discharge.

The reference diagnosis was made by majority: aphasia was considered present when at least three (≥3) of the five clinical determinants indicated aphasia.
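The battery routing and majority rule of the reference standard can be sketched as below. The LAST ranges are copied from the text and overlap (a score of 5 fits both the AST and CAT ranges, and scores of 10–15 fit both the CAT and MCLA ranges), so the sketch returns every battery whose range includes the score and leaves the final choice to clinical judgment. The function names are hypothetical.

```python
from typing import List


def candidate_batteries(last_score: int) -> List[str]:
    """Batteries whose stated LAST range includes the score (ranges overlap)."""
    if not 0 <= last_score <= 15:
        raise ValueError("LAST is scored out of 15")
    candidates = []
    if last_score <= 5:
        candidates.append("AST")
    if 5 <= last_score <= 15:
        candidates.append("CAT")
    if last_score >= 10:
        candidates.append("MCLA")
    return candidates


def reference_diagnosis(determinants: List[bool]) -> bool:
    """Aphasia is present when at least 3 of the 5 clinical determinants indicate it.

    Order (illustrative): test battery result, treating clinician's diagnosis,
    multidisciplinary report, medical team report, post-discharge referral.
    """
    if len(determinants) != 5:
        raise ValueError("expected exactly five determinants")
    return sum(determinants) >= 3


print(candidate_batteries(5), reference_diagnosis([True, False, True, True, False]))
```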

Procedure

Consecutive stroke admissions from 21 January to 15 December 2015 were reviewed for eligibility. Informed written consent was obtained from all participants or an officially authorised next of kin. Recruited participants completed the full 49-subtest Brisbane EBLT and the reference measures in randomised order, and the order of Brisbane EBLT sections was also randomised for each participant. The assessment procedure was single (assessor) blinded: assessments were administered as closely together as possible, at the patient’s bedside or in a ward clinic room, by two speech pathologists, each blinded to the other’s test results. Study participants were not directly informed of their aphasia diagnosis status as part of this study; however, members of their treating multidisciplinary team likely disclosed it as part of routine acute stroke care.

Statistical analysis

Diagnostic analysis was completed using MedCalc v13.3.3.0 and/or Stata/IC 13.0. Diagnostic accuracy was calculated by comparing Brisbane EBLT test scores against the binary (yes/no) reference result to determine test sensitivity, specificity, and positive (LR+) and negative (LR−) likelihood ratios. Test discrimination against the reference standard was determined using the area under the receiver operating characteristic (ROC) curve (AUC). Cut-off scores for discriminating between patients with and without aphasia were determined by evaluating the sensitivity and specificity estimates at each threshold score.
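The accuracy statistics named above follow from a 2 × 2 table of index-test result against reference diagnosis, where a positive Brisbane EBLT result means a score at or below the cut-off. The counts in the usage example (59 true positives, 14 false negatives, 25 true negatives, 2 false positives) are our own back-calculation from the reported 73/27 split and the reported sensitivity and specificity for the ≤157 cut-off; they reproduce the reported LR+ of 10.9 and LR− of 0.21. The AUC function is a plain Mann-Whitney estimate, not the MedCalc or Stata routine used in the study, and the sketch computes no confidence intervals.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Accuracy:
    sensitivity: float
    specificity: float
    lr_positive: float
    lr_negative: float


def accuracy_from_counts(tp: int, fn: int, tn: int, fp: int) -> Accuracy:
    """Sensitivity, specificity and likelihood ratios from a 2 x 2 table."""
    sens = tp / (tp + fn)
    spec = tn / (tn + fp)
    lr_pos = sens / (1 - spec) if spec < 1 else float("inf")
    lr_neg = (1 - sens) / spec
    return Accuracy(sens, spec, lr_pos, lr_neg)


def auc_lower_score_positive(aphasia: Sequence[float], no_aphasia: Sequence[float]) -> float:
    """Mann-Whitney AUC: probability that a random patient with aphasia scores
    lower than a random patient without (ties count half), as low scores are positive."""
    total = 0.0
    for a in aphasia:
        for b in no_aphasia:
            if a < b:
                total += 1.0
            elif a == b:
                total += 0.5
    return total / (len(aphasia) * len(no_aphasia))


acc = accuracy_from_counts(tp=59, fn=14, tn=25, fp=2)
print(round(acc.sensitivity, 3), round(acc.specificity, 3),
      round(acc.lr_positive, 1), round(acc.lr_negative, 2))
# 0.808 0.926 10.9 0.21
```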

Brisbane EBLT Short Tests

The full Brisbane EBLT was administered to all 100 patients so that a complete dataset of all 49 subtests could be captured. However, at ∼45 min, this full version is inappropriate for use in many contexts (e.g., routine acute hospital bedside use) [Citation2,Citation11]. To replicate the <30 min length of existing informal acute language measures and allow adaptation to varying contexts, the Brisbane EBLT dataset was divided to create four Short Tests (15–25 min each).

Each Brisbane EBLT Short Test assesses language across the domains of verbal expression, auditory comprehension, reading and writing, but focuses on a particular severity level (mild, moderate or severe). Two Short Tests focus on severe language deficits: 1) “Foundation Test I” (with objects: cup, spoon, pen and knife) and 2) “Foundation Test II” (requiring nil objects). The “Standard Test” examines moderate deficits and the “High Level Test” identifies mild/nil impairment. Items from the full Brisbane EBLT were broadly allocated to a Short Test according to the percentage of participants who answered each question correctly (>75% for the Foundation Tests, 35–75% for the Standard Test and <45% for the High Level Test). Based on clinician feedback, some questions were included in two test versions (e.g., Picture Description appears in both the Standard and High Level Tests). Short Test subtests are listed in Table 2.

Table 2. Brisbane EBLT Short Tests.
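The broad item-allocation rule for the Short Tests can be expressed as a function of each item’s percentage correct. This sketch assumes that the High Level band covers the harder items, answered correctly by fewer than 45% of participants, consistent with that test targeting mild impairment. It returns every matching band because the ranges overlap, and it does not model the clinician-feedback adjustments that placed some questions in two versions.

```python
from typing import List


def allocate_item(percent_correct: float) -> List[str]:
    """Candidate Short Test(s) for an item, given the % of participants answering correctly.

    Assumed bands: Foundation > 75%, Standard 35-75%, High Level < 45%.
    Overlapping bands mean an item can qualify for more than one test.
    """
    if not 0 <= percent_correct <= 100:
        raise ValueError("percent_correct must be between 0 and 100")
    bands = []
    if percent_correct > 75:
        bands.append("Foundation")
    if 35 <= percent_correct <= 75:
        bands.append("Standard")
    if percent_correct < 45:
        bands.append("High Level")
    return bands


for pct in (90, 60, 40, 20):
    print(pct, allocate_item(pct))
```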

To ensure the clinical applicability of these new shorter measures, all Short Tests underwent acute hospital piloting and speech pathology feedback to confirm that they were effective in real-world clinical contexts. Diagnostic analysis was completed separately for each Short Test, allowing each to act as a standalone assessment. Clinicians can therefore select any test version at their discretion, based on their initial clinical impression, the context, the presence of any co-occurring deficits and the level of language functioning they wish to assess (thereby preventing patients from being exposed to tasks markedly too simple or too difficult). The same scoring method applies to all test versions: each question is given a score, and question scores are summed to a total score that is used to indicate the presence of aphasia. Full scoring instructions are available at brisbanetest.org.

Adapted test scores

The Brisbane EBLT datasets were further subdivided to create adapted test scores. Adapted scores allow the tests to be administered, and total scores calculated, when certain subtests must be omitted because of the testing environment. The full Brisbane EBLT and the Short Tests (except the High Level Test) have adapted versions to adjust to the context (e.g., limited time), patient ability (e.g., fatigue) or the presence of severe co-occurring conditions (e.g., hemiparesis, hemianopia, dysarthria or apraxia of speech). The full Brisbane EBLT has two adapted scores. The first excludes the subtest requiring the naming of hospital ward objects (e.g., bed, pillow), enabling the test to be administered in a clinic room (the Foundation Tests and Standard Test also have this adapted version). The second allows a total score to be calculated while excluding subtests that rely heavily on verbal expression as the mode of patient response; excluding these subtests minimises the confounding of total test scores by severe co-occurring dysarthria or apraxia of speech. The Standard Test has an additional adapted score that excludes reading and writing tasks, enabling the test to be administered when time is limited, when the patient is fatigued or when severe co-occurring deficits affect reading (e.g., hemianopia) and/or written responses (e.g., hemiparesis). Note that dysgraphia and dyslexia will not be identified using this adapted score. Diagnostic analysis was completed for all adapted test scores. Brisbane EBLT adapted scores are reported in Table 4, and Short Test adapted scores are reported separately.
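An adapted score is a total computed over a reduced set of subtests. In the hypothetical sketch below, the subtest names (such as `ward_object_naming`) are placeholders rather than the Brisbane EBLT’s own labels. An adapted total is only meaningful against the cut-off published for that adapted version, not against the full-test cut-off.

```python
from typing import Dict, Iterable


def adapted_total(subtest_scores: Dict[str, int], excluded: Iterable[str]) -> int:
    """Sum subtest scores, leaving out the subtests removed by an adapted version."""
    skip = set(excluded)
    unknown = skip.difference(subtest_scores)  # guard against misspelled subtest names
    if unknown:
        raise KeyError(f"unknown subtests: {sorted(unknown)}")
    return sum(score for name, score in subtest_scores.items() if name not in skip)


# Hypothetical subtest names and scores, for illustration only
scores = {"repetition": 8, "ward_object_naming": 4, "reading_comprehension": 6}
clinic_room_total = adapted_total(scores, excluded=["ward_object_naming"])
print(clinic_room_total)  # 14
```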

Indeterminate Brisbane EBLT and reference standard results

The Brisbane EBLT was developed to minimise the influence of non-language deficits (e.g., hemiparesis, hemianopia, apraxia of speech) on language test scores. However, when co-occurring deficits were severe (e.g., hemiparesis that prevented the completion of any writing tasks), affected items were recorded as missing and scored zero, so that the resulting scores were as pragmatic and reflective of clinical practice as possible. Reference standard tests were scored according to their manual instructions. If reference standard data were missing or affected by non-language deficits, subtest scores were used instead. For all participants, multiple factors informed the reference standard diagnosis.
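The missing-data convention above, in which uninterpretable items are recorded as missing and scored zero, can be sketched as follows. Representing missing items as `None` is our assumption for illustration; keeping a separate count lets the reason for the missing items be reported alongside the total.

```python
from typing import Optional, Sequence


def total_score(item_scores: Sequence[Optional[int]]) -> int:
    """Total test score in which missing items (None) contribute zero."""
    return sum(score for score in item_scores if score is not None)


def missing_count(item_scores: Sequence[Optional[int]]) -> int:
    """Number of items left blank because of severe non-language deficits."""
    return sum(score is None for score in item_scores)


items = [2, 1, None, 0, 3, None]  # hypothetical item scores
print(total_score(items), missing_count(items))  # 6 2
```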

Results

Participants

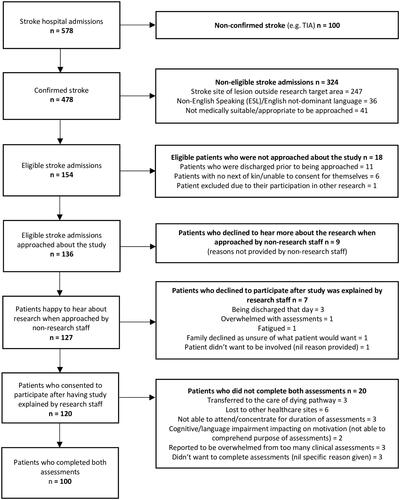

Of the 154 eligible admissions, 120 (77.9%) stroke patients consented to participate, and 100 (64.9% of eligible admissions) completed both assessments. Cognition was not formally or informally screened or assessed; rather, participants’ successful engagement in and completion of the assessment process made them eligible to participate and to have their data used in the protocol. The flowchart of study eligibility and recruitment is provided in Figure 1. Participant characteristics (of patients who completed both assessments) are described in Table 3.

Table 3. Patient sample demographics.

To optimise test external validity, common post-stroke co-occurring conditions were not used as exclusion criteria, and patients with conditions that affect communication but not language (affecting vision, hearing, speaking, or writing) were included in the study sample. The Brisbane EBLT was developed to minimise the impact of these conditions (e.g., large stimulus/text size), with the specific aim of enabling most patients to complete assessment items. This design accommodated most co-occurring conditions, so that patients with mild or moderate co-occurring deficits (e.g., mild-moderate hemiparesis) could still perform the set tasks and questions. However, a limited number of patients with severe co-occurring conditions (e.g., severe hemiparesis) were still unable to complete certain Brisbane EBLT assessment items. The percentage of included patients presenting with each co-occurring condition, with the number of those patients unable to complete certain tasks because of the severity of their impairment in brackets, was: hearing impairment 43%, n = 43 (0 patients); hemianopia 27.06%, n = 26 (4 patients); hemiparesis 60%, n = 60 (8 patients); upper limb apraxia 5%, n = 5 (1 patient); apraxia of speech 25%, n = 24 (13 patients); and dysarthria 34%, n = 34 (4 patients). For example, although 43 (43%) patients reported some form of hearing impairment, these deficits could be overcome in all cases (e.g., by repeating instructions or increasing volume), so no patient was unable to complete items because of hearing deficits.

Statistically, the inclusion of patients with severe co-occurring conditions had little impact on the overall psychometric dataset. When patients had severe deficits in one area (e.g., severe hemiparesis resulting in inability to write), these patients were often able to complete items in other areas (e.g., verbal tasks such as following commands). As a result, the overall impact of these affected test items was found to constitute <5% of the recorded data and consequently the impact of missing data was considered statistically negligible [Citation24]. In clinical practice however, when patient performance needs to be summed to calculate an overall test score, the creation of Brisbane EBLT Adapted Test Scores assists with further adjusting the test to accommodate the presence of these severe co-occurring conditions.
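The <5% figure above is a proportion of all recorded item responses pooled across patients. A minimal way to compute such a pooled proportion, assuming each patient’s item scores are stored as a list with `None` for missing items (a hypothetical data layout), is:

```python
from typing import List, Optional


def pooled_missing_fraction(dataset: List[List[Optional[int]]]) -> float:
    """Proportion of all item cells, pooled over patients, that are missing (None)."""
    cells = [score for patient in dataset for score in patient]
    if not cells:
        raise ValueError("dataset contains no items")
    return sum(score is None for score in cells) / len(cells)


# Hypothetical data: 3 patients x 10 items, one item missing
data = [[1] * 10, [0] * 10, [1] * 9 + [None]]
fraction = pooled_missing_fraction(data)
print(round(fraction, 3), fraction < 0.05)
```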

Distribution of severity of language impairment

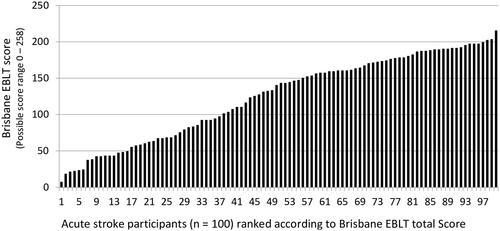

Seventy-three (73%) participants were diagnosed with aphasia according to the reference standard. Across all 100 participants, seven were assessed using the AST [Citation22] (severe aphasia), 36 with the CAT [Citation9] (moderate aphasia), and 57 with the MCLA [Citation23] (mild or nil aphasia). The Brisbane EBLT demonstrated no floor or ceiling effects, with scores ranging from 7 to 215 (out of a possible 0 to 258) (Figure 2).

Time interval between index test and reference standard

Patients were admitted to hospital on average 1 day 14 h post-stroke. The average time between admission and completion of both assessments was 7 days 3.75 h (ranging from 1 day 21 h to 28 days 6 h). The Brisbane EBLT took 48.09 min and the reference standard took 76.05 min on average to complete. The average time between assessments was 23 h 39 min. Patients received only dysphagia related speech pathology intervention between the two tests.

Adverse events from performing the index test or reference standard

Patients reported fatigue when completing the reference standard. In the absence of an existing recognised acute diagnostic language test, the reference battery was lengthy (average 76.05 min) and consequently not optimal for acute use [Citation2,Citation11].

Estimates of diagnostic accuracy

Brisbane EBLT

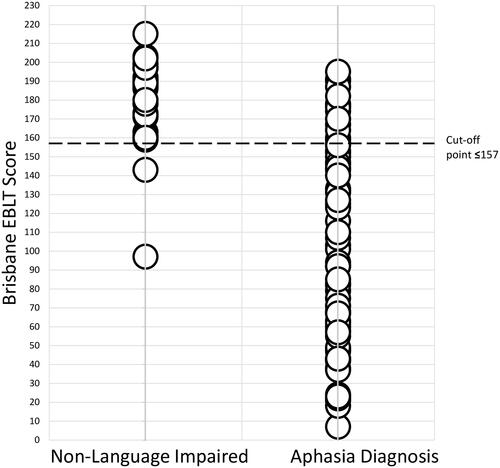

An overall Brisbane EBLT cut-off score of ≤157 had a sensitivity of 80.8% (95% CI, 69.9–89.1) and a specificity of 92.6% (95% CI, 75.7–99.1), with LR+ 10.9 and LR− 0.21. ROC analysis indicated an AUC of 0.908 (SE 0.030; 95% CI, 0.85–0.97). Threshold scores indicating the presence of language impairment were determined as the cut-off yielding the highest sensitivity for a specificity ≥90%. Score distributions indicated that aphasia represented a spectrum ranging from mild to severe, whereas the scores of language-intact stroke patients clustered in a narrower range, creating a shoulder effect for specificity. Cut-off score distribution analysis indicated that the lower sensitivity estimate (80.8%) obtained with this cut-off reflects patients diagnosed with aphasia who achieved Brisbane EBLT scores of >157, whose score range was similar to that of patients without language impairment (Figure 3). When a single (binary) cut-off score is needed (e.g., for research), these lower cut-off thresholds should be used. However, to assist with clinical exclusion of the condition, higher cut-offs yielding sensitivities ≥90% were also determined (e.g., ≤177, so that scores ≥178 indicate likely absence of impairment). Scores between the two cut-offs indicate a possible risk of impairment. Age, education level, and gender were not found to significantly affect test scores. Cross-tabulation of Brisbane EBLT scores by reference standard and diagnostic accuracy estimates (including adapted scores) are listed in Table 4.

Table 4. Brisbane EBLT diagnostic accuracy characteristics.
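The two-threshold approach for the full Brisbane EBLT can be sketched as follows, treating a score at or below the cut-off as positive. The rule-in threshold is the cut-off with the highest sensitivity among those with specificity ≥90%, as stated in the text. For the rule-out threshold the text states only that sensitivity was ≥90%, so choosing the lowest such cut-off is our assumption. Candidate cut-offs are the observed scores, and the example data are invented for illustration.

```python
from typing import Optional, Sequence, Tuple

Cut = Tuple[int, float, float]  # (cut-off, sensitivity, specificity)


def sens_spec(cutoff: int, aphasia: Sequence[int], no_aphasia: Sequence[int]) -> Tuple[float, float]:
    """Score <= cutoff counts as positive (low scores indicate impairment)."""
    sens = sum(s <= cutoff for s in aphasia) / len(aphasia)
    spec = sum(s > cutoff for s in no_aphasia) / len(no_aphasia)
    return sens, spec


def rule_in_cutoff(aphasia, no_aphasia, min_spec=0.90) -> Optional[Cut]:
    """Highest-sensitivity cut-off with specificity >= min_spec (ties: lowest cut-off)."""
    best = None
    for c in sorted(set(aphasia) | set(no_aphasia)):
        sens, spec = sens_spec(c, aphasia, no_aphasia)
        if spec >= min_spec and (best is None or sens > best[1]):
            best = (c, sens, spec)
    return best


def rule_out_cutoff(aphasia, no_aphasia, min_sens=0.90) -> Optional[Cut]:
    """Lowest cut-off reaching sensitivity >= min_sens (an assumed selection rule)."""
    for c in sorted(set(aphasia) | set(no_aphasia)):
        sens, spec = sens_spec(c, aphasia, no_aphasia)
        if sens >= min_sens:
            return (c, sens, spec)
    return None


def interpret(score: int, rule_in: int, rule_out: int) -> str:
    """Three-zone reading of a total score using the two cut-offs."""
    if score <= rule_in:
        return "likely aphasia"
    if score <= rule_out:
        return "possible risk of impairment"
    return "likely no aphasia"


# Invented example scores
aphasia = [10, 20, 30, 40, 50, 60, 70, 80, 120, 130]
no_aphasia = [90, 100, 110, 140, 150, 160, 170, 180, 190, 200]
low = rule_in_cutoff(aphasia, no_aphasia)    # (80, 0.8, 1.0)
high = rule_out_cutoff(aphasia, no_aphasia)  # (120, 0.9, 0.7)
print(interpret(100, low[0], high[0]))       # possible risk of impairment
```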

Short Tests

All Short Test versions reported specificities of ≥92.6%, with the Standard and High Level Tests reporting sensitivities of ≥72.6% (excluding adapted scores). The Foundation Tests reported lower sensitivity estimates (≥57.5%), given their focus on more severe conditions. ROC analysis indicated an AUC of ≥0.808 (SE 0.041; 95% CI, 0.73–0.89) for all Short Tests. Of the Short Tests, the High Level Test reported the highest diagnostic estimates, equal to those of the full Brisbane EBLT, with a sensitivity of 80.8% (95% CI, 69.9–89.1) and specificity of 92.6% (95% CI, 75.7–99.1).

Discussion

This multicentre cross-sectional study describes the development and diagnostic validation of the Brisbane EBLT. The use of EBP [Citation15] in guiding test development aims to ensure the test meets high psychometric standards and is feasible, user-friendly and adaptable to varying clinical contexts, patient abilities and settings. The Brisbane EBLT demonstrates good sensitivity of 80.8% (95% CI, 69.9–89.1) and a high specificity of 92.6% (95% CI, 75.7–99.1) in identifying aphasia.

Clinically, the 15–25 min Short Tests are most appropriate for acute hospital settings. Diagnostically, all five test versions (the full Brisbane EBLT and the four Short Tests) demonstrated specificities of ≥90% (excluding adapted scores). Of these, the High Level Test reported the highest diagnostic estimates, equivalent to those of the full Brisbane EBLT.

Comparison with other research, implications for practice, intended use, and clinical role of the test

A wide range of language measures currently exists to aid in the clinical assessment of aphasia [Citation2]. A number of brief aphasia tests, such as the LAST [Citation7] and the Quick Aphasia Battery (QAB) [Citation25], report sensitivity and specificity data in stroke populations. While these tests aim to assess language functioning quickly and efficiently in the acute post-stroke environment, they are intended for “non-specialist” screening and/or lack assessment across all four core language domains of auditory comprehension, verbal expression, reading comprehension and writing [Citation7,Citation25]. Other measures, such as the WAB-R [Citation3], CAT [Citation9] and MCLA [Citation23], provide a thorough assessment of language; however, these measures have been reported to be too lengthy for use in certain clinical contexts [Citation2]. Although these tests report published psychometric data, a systematic review of their psychometric properties surprisingly did not identify any published diagnostic (sensitivity/specificity) validation data verifying their ability to identify aphasia in stroke populations [Citation14].

The Brisbane EBLT aims to provide a time-efficient, comprehensive language test that assesses language across the severity spectrum in all language domains, is adaptable to varying clinical contexts and provides quick, evidence-based guidance regarding the need for intervention. As diagnostic analysis has been completed for all five tests, each version acts as a standalone assessment capable of identifying aphasia at the patient’s hospital bedside or in the clinic room in as little as 15–25 min. Adapted test scores further accommodate the needs of the patient and clinical context, enabling diagnostic estimates to be calculated despite the presence of fatigue or common co-occurring post-stroke conditions. This test aims to contribute to the accuracy and evidence base of aphasia assessment procedures.

Strengths and limitations

A strength of this validation study is its methodology. Study participants were a consecutive sample that met a priori sample size calculation requirements. The study employed a robust reference standard that used multiple clinical factors to inform the diagnostic decision [Citation21]. Finally, the order of administration of the index test and reference measure was randomised, and test administrators were blinded to the results of the other measure. This study aims to comply with the recognised STARD diagnostic accuracy reporting standards [Citation18].

Different test versions optimise the clinical utility of this assessment. The shorter High Level Test and the full Brisbane EBLT reported identical diagnostic estimates. The High Level Test therefore provides a short but psychometrically equivalent alternative to the longer comprehensive test, and demonstrates that increased test length does not necessarily equate to higher diagnostic accuracy estimates. Conversely, the full 49-subtest Brisbane EBLT presents with no floor or ceiling effects and may be useful in research or clinical contexts where the performance of patients at all ability levels needs to be compared on the same language measure. Adapted test scores, which allow the test to be adjusted for co-occurring conditions (e.g., hemianopia, apraxia of speech), provide additional flexibility. Co-morbidities were common within the recruited sample, with hemianopia, hemiparesis, hearing impairment, upper limb apraxia, apraxia of speech and dysarthria all present within the patient cohort. The Brisbane EBLT was specifically designed to minimise the impact of such co-occurring conditions (e.g., large text/stimulus items, not penalising motor-based impairment in responses, and allowing repetition of tasks to account for deficits such as hearing impairment). As a result, fewer than 5% of patient language responses could not be scored, indicating the new test’s ability to adapt to the complex and multifaceted needs of stroke populations.

Statistical uncertainty

This study’s results need to be interpreted in the context of the following factors. While both the High Level Test and the full Brisbane EBLT report the same high specificity of 92.6%, their sensitivity of 80.8% indicates that they are less accurate in excluding a language disorder. However, a comparison of aphasia patients who scored above 157 on the Brisbane EBLT with non-aphasia study participants indicated similar performance across the two groups. Hence, despite these lower diagnostic estimates, patients not identified through this cut-off had language test performance highly similar to that of the language-intact stroke group, and the clinical impact of any possible language impairment upon their functioning is likely to be minimal. Because being able to exclude impairment is clinically useful, higher cut-offs that prioritise sensitivity (≥90%) have also been provided; patients scoring above these cut-offs have a low probability of aphasia. Finally, given the Foundation Tests’ focus on severe language deficits, their lower sensitivities indicate that other versions of the assessment are better suited to identifying milder language deficits. All five Brisbane EBLT tests reported AUCs of ≥0.808, indicating strong overall discriminative performance.
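To make the rule-out trade-off concrete, the sketch below converts a negative result into a post-test probability using the odds form of Bayes’ theorem with the reported LR− of 0.21 (which applies to the ≤157 cut-off), and maps a full-test score onto the three bands implied by the two full-test cut-offs quoted in the Results (≤157; 158–177; ≥178). The 30% pre-test probability is a hypothetical value chosen for illustration, not a study estimate, and the function names are our own.

```python
def post_test_probability(pre_test_prob, likelihood_ratio):
    """Bayes' theorem in odds form: post-test odds = pre-test odds x LR."""
    pre_odds = pre_test_prob / (1 - pre_test_prob)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


def interpret_full_eblt(score, rule_in=157, rule_out=178):
    """Three-band reading of a full Brisbane EBLT total score.

    Defaults are the full-test cut-offs quoted in the Results
    (<=157 suggests impairment, >=178 suggests its absence).
    """
    if score <= rule_in:
        return "impairment likely"
    if score >= rule_out:
        return "impairment unlikely"
    return "possible risk of impairment"


# Hypothetical pre-test probability of 30%; LR- = 0.21 at the <=157 cut-off.
print(f"{post_test_probability(0.30, 0.21):.1%}")
for s in (150, 165, 185):
    print(s, interpret_full_eblt(s))
```

With these inputs a negative result (score above 157) lowers the probability of aphasia from 30% to roughly 8%: a useful reduction, but not a complete exclusion, which is the gap the higher rule-out cut-off is meant to address.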

Generalisability

This study is an initial examination of the diagnostic accuracy of the Brisbane EBLT; the generalisability and reproducibility of these results in practice or in similar study populations have not yet been verified. A separate validation study in a similar, independent population would demonstrate the test’s capabilities in comparable settings and enable a diagnostic systematic review and meta-analysis to be performed.

Conclusion

The Brisbane EBLT aims to provide a new language test to assist in the identification of aphasia. The test examines patient functioning across the severity spectrum in all language domains, with Short Tests capable of identifying aphasia in just 15–25 min. As diagnostic estimates have been calculated for all test versions, each can act as a stand-alone assessment. The different versions can adjust to the needs of varying clinical environments, allowing clinicians to select tests at their own discretion based on patient ability and the clinical context. These findings aim to improve the evidence base of aphasia assessment procedures and assist with informing accurate aphasia epidemiological statistics, healthcare services, and developers of stroke guidelines. The Brisbane EBLT is available for download from brisbanetest.org.

Acknowledgements

ANZ Medical Trustees, Australian Stroke Foundation, Equity Trustees Wealth Services Ltd., Royal Brisbane and Women’s Hospital, and Royal Brisbane and Women’s Hospital Foundation. The stroke units and speech pathology departments of the Royal Brisbane and Women’s Hospital and Princess Alexandra Hospitals in Brisbane made this study possible. The authors thank the stroke patients, clinicians and other study participants who contributed to this research. Full list of acknowledgements at brisbanetest.org.

Disclosure statement

EG is on the Clinical Council of the Stroke Foundation. No other conflicts of interest are reported.

Additional information

Funding

References

- Hersh D, Wood P, Armstrong A. Informal aphasia assessment, interaction and the development of the therapeutic relationship in the early period after stroke. Aphasiology. 2018;32:876–901.

- Vogel AP, Maruff P, Morgan AT. Evaluation of communication assessment practices during the acute stages post stroke. J Eval Clin Pract. 2010;16:1183–1188.

- Kertesz A. Western Aphasia Battery – Revised. San Antonio (TX): Pearson; 2007.

- Goodwin D. Homonymous hemianopia: challenges and solutions. Clin Ophthalmol. 2014;8:1919–1927.

- Simpson B, McCluskey A, Lannin N, et al. Feasibility of a home-based program to improve handwriting after stroke: a pilot study. Disabil Rehabil. 2016;38:673–682.

- Enderby PM, Wood VA, Wade DT, et al. The Frenchay Aphasia Screening Test: a short, simple test for aphasia appropriate for non-specialists. Int Rehabil Med. 1987;8:166–170.

- Flamand-Roze C, Falissard B, Roze E, et al. Validation of a new language screening tool for patients with acute stroke: The Language Screening Test (LAST). Stroke. 2011;42:1224–1229.

- Doesborgh SJC, van de Sandt-Koenderman WME, Dippel DWJ, et al. Linguistic deficits in the acute phase of stroke. J Neurol. 2003;250:977–982.

- Howard D, Swinburn K, Porter G. Comprehensive Aphasia Test. New York (NY): Psychology Press; 2004.

- Goodglass H. The Assessment of Aphasia and Related Disorders. 3rd ed. Philadelphia (PA): Lippincott Williams & Wilkins; 2001.

- LaPointe L. Handbook of aphasia and brain-based cognitive-language disorders. New York (NY): Thieme Medical Publishers; 2011.

- Crary MA, Haak NJ, Malinsky AE. Preliminary psychometric evaluation of an Acute Aphasia Screening Protocol. Aphasiology. 1989;3:611–618.

- El Hachioui H, Visch-Brink EG, de Lau LML, et al. Screening tests for aphasia in patients with stroke: a systematic review. J Neurol. 2017;264:211–220.

- Rohde A, Worrall L, Godecke E, et al. Diagnosis of aphasia in stroke populations: a systematic review of language tests. PLoS One. 2018;13:e0194143.

- Straus S, Glasziou P, Richardson S, et al. Evidence based medicine. How to practice and teach it. 4th ed. London (UK): Churchill Livingstone; 2010.

- Buderer NM. Statistical methodology: I. Incorporating the prevalence of disease into the sample size calculation for sensitivity and specificity. Acad Emerg Med. 1996;3:895–900.

- Scarpa M, Colombo A, Sorgato P, et al. The incidence of aphasia and global aphasia in left brain-damaged patients. Cortex. 1987;23:331–336.

- Bossuyt PM, Reitsma JB, Bruns DE, et al.; STARD Group. STARD 2015: an updated list of essential items for reporting diagnostic accuracy studies. BMJ. 2015;351:h5527.

- Binder JR, Frost JA, Hammeke TA, et al. Human brain language areas identified by functional magnetic resonance imaging. J Neurosci. 1997;17:353–362.

- Yorkston KM, Beukelman DR. An analysis of connected speech samples of aphasic and normal speakers. J Speech Hear Disord. 1980;45:27–36.

- Reitsma JB, Rutjes AW, Khan KS, et al. A review of solutions for diagnostic accuracy studies with an imperfect or missing reference standard. J Clin Epidemiol. 2009;62:797–806.

- Whurr R. Aphasia screening test: a multi-dimensional assessment procedure for adults with acquired aphasia. Milton Keynes (UK): Routledge; 2011.

- Ellmo W, Graser J, Krchnavek B, et al. Measure of Cognitive-Linguistic Abilities (MCLA). Norcross (GA): The Speech Bin; 1995.

- Schafer JL. Multiple imputation: a primer. Stat Methods Med Res. 1999;8:3–15.

- Wilson SM, Eriksson DK, Schneck SM, et al. A quick aphasia battery for efficient, reliable, and multidimensional assessment of language function. PLoS One. 2018;13:e0192773.