Abstract

Purpose

The iWalk study showed that administration of the 10-meter walk test (10mWT) and 6-minute walk test (6MWT) post-stroke by physical therapists (PTs) increased following introduction of a toolkit comprising an educational guide, a mobile app, and a video. We describe how theory guided toolkit development, and we report a process evaluation of toolkit implementation.

Materials and methods

We used the knowledge-to-action framework to identify research steps, and a guideline implementability framework, self-efficacy theory, and the transtheoretical model to design and evaluate the toolkit and implementation process (three learning sessions). In a before-and-after study, 37 of the 49 participating PTs completed online questionnaires evaluating engagement with learning sessions and rating self-efficacy to perform recommended practices pre- and post-intervention. Thirty-three PTs and 7 professional leaders participated in post-intervention focus groups and interviews, respectively.

Results

All sites conducted the learning sessions; attendance was 50–78%. Self-efficacy ratings for recommended practices increased, and the increases were statistically significant for the 10mWT (p ≤ 0.004). Qualitative findings highlighted that theory-based toolkit features and implementation strategies likely facilitated engagement with toolkit components, contributing to observed improvements in PTs’ knowledge, attitudes, skill, self-efficacy, and clinical practice.

Conclusions

The approach may help to inform toolkit development to advance other rehabilitation practices of similar complexity.

Implications for Rehabilitation

Toolkits are an emerging knowledge translation intervention used to support widespread implementation of clinical practice guideline recommendations.

Although experts recommend using theory to inform the development of knowledge translation interventions, there is little guidance on a suitable approach.

This study describes an approach to using theories, models and frameworks to design a toolkit and implementation strategy, and a process evaluation of toolkit implementation.

Theory-based features of the toolkit and implementation strategy may have facilitated toolkit implementation and practice change to increase clinical measurement and interpretation of walking speed and distance in adults post-stroke.

Introduction

Stroke remains a major health concern and the second leading cause of disability worldwide [Citation1–3]. Stroke rehabilitation guidelines are widely available [Citation4–7], and implementation of recommended practices is expected to improve the quality of care and patient outcomes [Citation8,Citation9]. The existence of recommendations, however, does not ensure their consistent application in clinical practice [Citation10–14]. In fact, guidelines have been criticized for lacking a comprehensible structure, utility, and local applicability [Citation15,Citation16]. The field of knowledge translation [Citation17] has emerged to narrow the gap between what is known from research and knowledge synthesis and the application of this knowledge by decision-makers to improve healthcare delivery and outcomes. To date, knowledge translation interventions designed to facilitate guideline implementation in stroke rehabilitation have been costly, requiring funds to support and train local clinical facilitators or clinicians to enable practice change [Citation14,Citation18–20]. These interventions have also targeted the implementation of multiple recommended practices simultaneously [Citation14,Citation19,Citation21], making it challenging for stroke teams to prioritize practices [Citation22].

Toolkits, defined as “a packaged grouping of multiple knowledge translation tools and strategies that codify explicit knowledge” [Citation23], may be a low-cost alternative knowledge translation intervention aimed at supporting widespread implementation of a set of inter-related clinical practice guideline recommendations. According to a systematic review of 39 toolkit evaluations [Citation23], toolkits may include a variety of components to educate and facilitate behaviour change, such as educational materials; templates (e.g., checklists, preprinted letters); visual reminders (e.g., posters); and pocket cards or electronic tools (e.g., a calculator on a personal digital assistant). To improve the reporting of toolkit evaluations, the review authors recommended clearly describing the purpose of and rationale for each toolkit component, the evidence supporting the effectiveness of each component, and guidance on the implementation process [Citation23]. None of the studies included in this review described toolkits targeting rehabilitation professionals or healthcare services for people post-stroke [Citation23]. Nonetheless, individual tools [Citation20] or toolkits [Citation14,Citation21] have previously been included in multi-component knowledge translation interventions targeting the implementation of best practices in stroke rehabilitation.

Toolkits are considered complex interventions, as they may target a large number of components, behaviours, groups, organizational levels, and outcomes, or may require substantial tailoring during implementation [Citation24]. Implementation science experts recommend using theories, models, and frameworks to design, tailor, and implement complex interventions, as this helps clarify how each intervention component is expected to contribute to the desired outcomes [Citation24–26]. However, the large number of available theories, models, and frameworks [Citation27] makes selecting and applying them challenging. Nilsen [Citation28] classified theories, models, and frameworks used in implementation science as: (1) process models, which aim to describe or guide the process of translating research into practice; (2) determinant frameworks, which specify types of determinants that influence implementation outcomes; (3) classic theories, which identify variables and relationships between variables to explain causal processes underlying human behaviour; (4) implementation theories, which explain aspects of implementation; or (5) evaluation frameworks, which guide plans to evaluate implementation. While authors commonly cite the theories, models, and frameworks used to develop knowledge translation interventions [Citation27], there are few examples [Citation29] of how to use them to guide intervention design, methods of evaluation, and interpretation of results in the rehabilitation context.

Recently, we conducted a single-group before-and-after study to evaluate the impact of implementing a novel toolkit (“iWalk”) for stroke rehabilitation [Citation30]. The details of this “impact evaluation” will be reported in an upcoming publication (manuscript in preparation). Briefly, the objective of the iWalk toolkit is to promote an evidence-informed approach to using the 10-meter walk test (10mWT) and the 6-minute walk test (6MWT) post-stroke among physical therapists (PTs). Following iWalk toolkit implementation, PTs were significantly more likely to administer the two walk tests post-stroke, based on documentation in health records. The largest increase was observed in outpatient rehabilitation settings, followed by inpatient rehabilitation and acute care settings. Implementation improved to a greater extent for the 10mWT than for the 6MWT.

In this paper, we describe how we used a process model, a determinant framework, and two classic theories to guide the design of the iWalk toolkit and the process evaluation of its implementation. These findings will help us to understand the results of the impact evaluation and advance the science of developing toolkits as knowledge translation interventions.

Methods

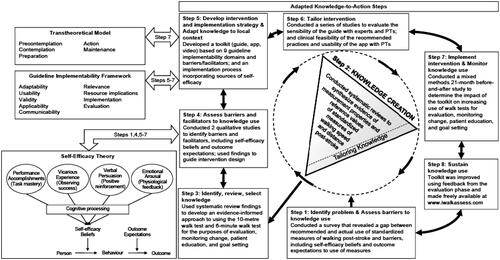

We used the knowledge-to-action (KTA) framework [Citation31] (Figure 1) to guide the overall research process. The KTA framework is widely used [Citation32] and promotes ongoing collaboration between researchers and decision-makers (or “end-users”, e.g., patients, clinicians, managers, policy-makers) throughout the research process to optimize the relevance and applicability of findings [Citation33]. While the KTA framework provides broad guidance, we used self-efficacy theory [Citation34], a guideline implementability framework [Citation35], and the transtheoretical model [Citation36] to better understand determinants of human behaviour change. The role of these theories, models, and frameworks within the overall KTA paradigm is illustrated in Figure 2. Below, we (i) describe these individual theories, models, and frameworks in greater detail; and (ii) outline each of the KTA steps. The process evaluation of the iWalk toolkit implementation aligns with Step 7 (“Implement intervention & monitor knowledge use”) and is the focus of the present study.

Figure 1. Knowledge-to-action framework (taken from Graham et al. [Citation31] with copyright permission).


Figure 2. Use of the knowledge-to-action framework, self-efficacy theory, a guideline implementability framework, and the transtheoretical model to guide the development and process evaluation of the toolkit and implementation strategy.

Overview of theories, models, and frameworks

Knowledge-to-action framework

The KTA framework [Citation31] is a meta-framework based on the synthesis of planned action theories. As a process model, it outlines a series of steps for the creation and application of knowledge. As shown in Figure 1, the knowledge creation phase is represented by a funnel to highlight the importance of filtering and synthesizing knowledge from individual studies to develop knowledge products and tools, such as guidelines, designed to guide clinical decision-making. The action cycle of the KTA framework outlines a series of steps that can be taken in variable order to apply the knowledge products of the knowledge creation phase. The knowledge creation funnel can spin to inform any step in the action cycle.

Self-efficacy theory

Self-efficacy theory [Citation34] is a classic theory that can assist with the interpretation, modification, and prediction of behaviour. Self-efficacy theory highlights the importance of building positive self-efficacy beliefs and outcome expectations to facilitate behaviour change. As shown in Figure 2, self-efficacy, defined as the level of belief in one’s ability to organize and execute given types of performances, is expected to predict clinician behaviour. Performance accomplishments, vicarious experience, verbal persuasion, and emotional arousal are four mechanisms for increasing self-efficacy. Outcome expectations, defined as judgments of the likely consequences a behaviour will produce, can provide an incentive or disincentive to perform a desired behaviour.

Guideline implementability framework

The guideline implementability framework [Citation35] is a determinant framework that identifies 27 guideline design elements, grouped into nine domains shown in , expected to improve guideline use.

Transtheoretical model

The transtheoretical model [Citation36] is a classic theory developed in the field of psychology. It posits that people progress through five stages when attempting to change behaviour either on their own or with intervention: (1) precontemplation – not intending to take action within the next 6 months; (2) contemplation – intending to take action within the next 6 months; (3) preparation – intending to take action in the next 30 days; (4) action – made overt changes less than 6 months ago; and (5) maintenance – made overt changes more than 6 months ago.
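The five stage definitions above form a simple decision rule based on two pieces of information: whether a person has already made overt changes (and how long ago), or otherwise how soon they intend to act. The Python sketch below makes those time windows explicit. The function name, its inputs, and the approximation of 6 months as 183 days are hypothetical illustrations and are not taken from the transtheoretical model literature or from the CReMOS.

```python
from enum import Enum
from typing import Optional

# Assumption for illustration: 6 months is approximated as 183 days.
SIX_MONTHS_DAYS = 183
PREPARATION_WINDOW_DAYS = 30


class Stage(Enum):
    PRECONTEMPLATION = 1
    CONTEMPLATION = 2
    PREPARATION = 3
    ACTION = 4
    MAINTENANCE = 5


def classify_stage(days_since_change: Optional[int],
                   days_until_intended_action: Optional[int]) -> Stage:
    """Map the model's time-based stage definitions to a stage (hypothetical helper).

    days_since_change: days since overt change began, or None if no change yet.
    days_until_intended_action: days until the person intends to act, or None.
    """
    if days_since_change is not None:
        # Overt change already made: recent change is action, sustained is maintenance.
        if days_since_change < SIX_MONTHS_DAYS:
            return Stage.ACTION
        return Stage.MAINTENANCE
    if days_until_intended_action is None or days_until_intended_action > SIX_MONTHS_DAYS:
        return Stage.PRECONTEMPLATION
    if days_until_intended_action <= PREPARATION_WINDOW_DAYS:
        return Stage.PREPARATION
    return Stage.CONTEMPLATION


if __name__ == "__main__":
    print(classify_stage(None, 90))   # Stage.CONTEMPLATION
    print(classify_stage(400, None))  # Stage.MAINTENANCE
```

In this study, stages were assigned from CReMOS questionnaire scores rather than from a rule like this one. The sketch only shows how the model's definitions partition people by timing.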

Use of theory to design the toolkit, implementation strategy, and process evaluation

The iWalk toolkit was developed and evaluated through an eight-step process based on the knowledge creation funnel and action cycle of the KTA framework, integrating self-efficacy theory, the guideline implementability framework, and the transtheoretical model (Figure 2). Preliminary descriptions of Steps 1 and 2 have been reported [Citation37]. We applied an integrated knowledge translation approach [Citation33] throughout, collaborating with end-users including physical therapy clinicians, professional leaders (PLs), educators, medical directors, and regional stroke system representatives.

Step 1: Identify problem & Assess barriers to knowledge use

Despite recommendations in stroke rehabilitation guidelines to use standardized assessment tools [Citation4–7], our survey [Citation13] showed that PTs do not consistently use valid measures to evaluate or monitor change in walking, formulate a prognosis, or judge readiness for discharge. Survey items were designed to assess factors influencing knowledge use, including self-efficacy and outcome expectations. Results from our survey and other research identified barriers including insufficient knowledge of valid measures and normative values [Citation13,Citation38]; limited time and self-efficacy to access and appraise the literature to select optimal measures [Citation39–41] and to administer and score tests [Citation13,Citation42]; and low outcome expectations due to scepticism about the ability of tests to capture change and relate to the home or community environment [Citation13].

Step 2: Knowledge creation

To address these problems, we conducted four systematic reviews [Citation43–46] to synthesize evidence on the measurement properties, reference values, and protocols of standardized measures of walking speed and distance post-stroke. We focused the reviews on these measures because they were recommended in emerging guidelines and had demonstrated sensitivity to change [Citation47,Citation48].

Step 3: Identify, review, select knowledge

We used the systematic review findings to develop an evidence-informed approach to using the 10mWT and 6MWT for evaluation, monitoring change, patient education, and goal setting. These tests were selected because they were recommended and had optimal quality and interpretability [Citation43–46]. The approach was designed to support three Canadian Stroke Best Practice Recommendations: use valid assessment tools; provide patient, family, and caregiver education; and involve patients and families in their management, goal setting, and transition planning [Citation49].

Selection of walk test protocols and reference values

Because walk test protocol features (e.g., distances [Citation50,Citation51], walking aid used [Citation52], encouragement [Citation53]) can influence performance, we developed a single protocol for each walk test to enable comparison across care settings. We used criteria (see Supplementary Material 1) to select and adapt protocols that would optimize measurement accuracy, applicability to people undergoing stroke rehabilitation, and implementation feasibility in hospital settings in terms of cost, space, and time. For example, we selected tests permitting minimal physical assistance to broaden clinical utility for patients post-stroke. The final 10mWT and 6MWT protocols are provided in Supplementary Material 2. From the systematic review findings [Citation43–46], we used criteria established a priori (Supplementary Material 3) to select published reference values: age- and sex-specific norms [Citation54,Citation55]; maximum mean distances to walk at community locations [Citation45]; and a crosswalk speed of 1.2 m/s, based on municipal [Citation56] and federal [Citation57] documents. We also derived minimal detectable change values at the 90% confidence level (MDC90) [Citation58] from a study evaluating similar walk test protocols [Citation59].
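For reference, MDC90 is commonly computed from the standard deviation (SD) of scores and a test–retest reliability coefficient (ICC). The expressions below are that widely used formulation and are given as a general sketch. We assume, without confirmation from the source, that [Citation58] and the data from [Citation59] were combined in exactly this way:

$$
\mathrm{SEM} = SD\,\sqrt{1 - ICC}, \qquad \mathrm{MDC}_{90} = 1.645 \times \sqrt{2} \times \mathrm{SEM}
$$

Here 1.645 is the standard normal value for a two-sided 90% confidence level, and √2 accounts for measurement error at both the baseline and follow-up tests. For example, with a hypothetical SD of 0.30 m/s and ICC of 0.95, SEM ≈ 0.067 m/s and MDC90 ≈ 0.16 m/s. A smaller change in walking speed could not be distinguished from measurement error with 90% confidence.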

Step 4: Assess barriers and facilitators to knowledge use

We conducted two qualitative studies with PTs to identify barriers and facilitators to knowledge use, using self-efficacy theory to inform interpretation of results [Citation15,Citation60,Citation61]. Findings identified difficulty administering the tests to patients with moderate-to-severe motor deficits and aphasia as a barrier. They also identified two potential enablers: developing skill and self-efficacy to administer the walk tests, and a smartphone application.

Step 5: Develop intervention and implementation strategy & Adapt knowledge to local context

The iWalk toolkit, consisting of an educational guide, a mobile app, and an educational video [Citation62], was developed to codify the evidence-informed approach created in Step 3. The toolkit was intended to help PTs administer stroke-specific protocols for the 10mWT and the 6MWT, interpret test performance, educate patients about their test performance, set goals for each test, and select treatments with the potential to improve walking speed and distance in acute care and in inpatient and outpatient stroke rehabilitation. In alignment with the KTA process [Citation31], toolkit components were designed to address barriers to, and incorporate facilitators of, walk test use in clinical practice. Table 1 outlines the purpose and rationale for each toolkit component.

Table 1. Purpose and rationale for iWalk toolkit components to address barriers to walk test use.

Based on the guideline implementability framework [Citation35], we incorporated design elements into the toolkit to increase its clinical adoption, as outlined in Table 2. For example, to address the framework’s applicability domain, we used case scenarios, a recommended adult learning strategy desired by PTs [Citation60,Citation63], to illustrate how to apply recommended practices to individual patients. Finally, we proposed small group learning sessions with homework activities as a strategy to implement the toolkit. Review of the guide and practice of the clinical activities it targets were expected to increase PTs’ knowledge, skill, and self-efficacy to administer tests and to interpret and document test performance. They were also expected to foster positive outcome expectations about the clinical relevance of the walk tests [Citation34,Citation61,Citation64]. Self-efficacy was expected to increase through the mechanisms of performance accomplishments, emotional arousal, and vicarious experience [Citation34].

Table 2. Reviewer ratings of guideline implementability features of the iWalk toolkit.

iWalk guide

The printed guide consisted of eight modules. Module 1 outlined the “Top 10” reasons to use the walk tests post-stroke, the iWalk practice recommendations, and related Canadian Stroke Best Practice Recommendations. Modules 2–5 provided guidance on test administration, interpretation, patient education and goal setting, and treatment selection, respectively. These modules included clinical tips and “Did you know?” text boxes highlighting research supporting each walk test. Module 6 described a process for using audit and feedback [Citation65–67] to advance practice change, as well as strategies for overcoming challenges PTs might encounter. Module 7 presented case scenarios and learning activities to help integrate the information in the guide. Module 8 provided materials to guide three 1-h small group learning sessions, scheduled at 2-week intervals, to implement the toolkit: instructions and agendas, equipment and space checklists, printable test protocols (including aphasia-friendly procedures), data collection and goal setting forms, a quick reference value guide, a patient education tool, and a breakdown of equipment and training costs for implementing the walk tests.

We recommended that each site identify someone to coordinate and facilitate the learning sessions. PTs were asked to review the relevant modules before each session. Session 1 involved reviewing Module 1, discussing the walk tests, viewing the educational video, and practicing the tests. Session 2 involved entering case scenario data into the app to interpret test performance, and documenting results. Session 3 involved role-playing goal setting based on a case scenario and discussing the feasibility of using audit and feedback to monitor implementation. In each session, PTs discussed implementation and were asked to practice what they had learned (i.e., test administration, documentation, interpretation, patient education, and goal setting) with a patient before the next session.

App and video

The iWalkAssess app [Citation62] was developed for the iOS and Android platforms. The purpose of the app was to (i) increase awareness of stroke-specific 10mWT/6MWT protocols and reference values; and to facilitate (ii) administration and scoring of the 10mWT and 6MWT post-stroke; (iii) interpretation of test performance at the point of care using normative, community ambulation, and MDC values; (iv) goal setting; and (v) use of the 6MWT to evaluate exercise intensity. The app developer followed user-centred design principles [Citation68] to develop a prototype based on a template proposed by author NMS, draft content, and a “think aloud” session. During this session, a PT verbally described her thought process during walk test administration, patient education, and goal setting with a simulated patient. Authors NMS (researcher and PT) and SV (PT in clinical practice) provided feedback on app prototypes. Once the walk test protocols and reference values were finalized, we developed scripts and engaged a PT and a patient to produce a 10-min educational video showing a PT administering the walk tests with a person post-stroke [Citation69]. Individuals who were video-recorded signed a photo/video release form.
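The source does not describe the app’s internal logic. The sketch below only illustrates the kind of point-of-care comparison listed in purpose (iii) for the 10mWT: converting a time to a speed, then comparing it with a norm, the 1.2 m/s crosswalk speed, and MDC90. The names `interpret_10mwt` and `SpeedInterpretation`, the 10 m default timed distance, and the example norm and MDC90 values are hypothetical placeholders. This is not the iWalkAssess implementation or its reference values.

```python
from dataclasses import dataclass
from typing import Optional

# Crosswalk speed cited in the text (municipal and federal documents).
CROSSWALK_SPEED_M_PER_S = 1.2


@dataclass
class SpeedInterpretation:
    speed_m_per_s: float
    percent_of_norm: float
    meets_crosswalk_speed: bool
    change_exceeds_mdc90: Optional[bool]  # None when there is no earlier test


def interpret_10mwt(time_s: float,
                    norm_speed_m_per_s: float,
                    mdc90_m_per_s: float,
                    previous_speed_m_per_s: Optional[float] = None,
                    timed_distance_m: float = 10.0) -> SpeedInterpretation:
    """Convert a timed walk to speed and compare it with reference values.

    timed_distance_m defaults to 10 m as an assumption; the actual timed
    distance is defined by the iWalk protocol (Supplementary Material 2).
    """
    if time_s <= 0 or timed_distance_m <= 0:
        raise ValueError("time and distance must be positive")
    speed = timed_distance_m / time_s
    change = None
    if previous_speed_m_per_s is not None:
        # A change no larger than MDC90 cannot be separated from measurement error.
        change = abs(speed - previous_speed_m_per_s) > mdc90_m_per_s
    return SpeedInterpretation(
        speed_m_per_s=speed,
        percent_of_norm=100 * speed / norm_speed_m_per_s,
        meets_crosswalk_speed=speed >= CROSSWALK_SPEED_M_PER_S,
        change_exceeds_mdc90=change,
    )


if __name__ == "__main__":
    # Hypothetical inputs: 12.5 s over 10 m, norm 1.3 m/s, MDC90 0.1 m/s,
    # and a previous speed of 0.6 m/s.
    result = interpret_10mwt(12.5, norm_speed_m_per_s=1.3, mdc90_m_per_s=0.1,
                             previous_speed_m_per_s=0.6)
    print(result)
```

Keeping reference values as parameters, rather than hard-coding them, mirrors the separation between the protocols and the reference values selected in Step 3.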

Step 6: Tailor intervention

We conducted a series of studies to evaluate the sensibility of the guide among experts and PTs, and the clinical feasibility of recommended practices and usability of the app among PTs. Ten researchers from Canada (n = 4), the USA (n = 3), Australia (n = 2), and Sweden (n = 1), with expertise in measurement (n = 9), stroke (n = 7), and/or gait assessment (n = 7), provided written feedback on the content validity of the guide (accuracy and comprehensiveness). They also rated the 27 design elements of the guideline implementability framework as 0-inadequate, 1-poor, 2-fair, 3-average, 4-good, or 5-excellent. Mean ratings across design elements and domains ranged from 4.0 to 4.9 (Table 2). Clinical reviewers (i.e., end-users) of the iWalk guide were 18 PTs with an average of 14 years of clinical experience in acute (n = 7), inpatient (n = 7), outpatient (n = 6), and homecare (n = 3) stroke rehabilitation practice settings. Reviewers were asked to identify information that should be added or removed. They were also asked to rate the format, organization, readability, and content validity of the guide, and the clinical feasibility of implementing the iWalk recommendations across clinical settings, using the same 0–5 scale. Reviewers received a $50 gift card in acknowledgment. Mean ratings of format, organization, readability, and content validity were 4.5, 4.6, 4.7, and 4.8, respectively. Mean ratings of the feasibility of implementing the walk tests in acute care, inpatient rehabilitation, outpatient rehabilitation, and homecare were 3.0, 4.1, 4.5, and 2.8, respectively.

In beta testing of the app, three PTs [Citation70] in acute care, inpatient and outpatient rehabilitation used the app to administer walk tests with colleagues or patients and provided feedback on clinical utility, usability, content, function, and format in a 30-min interview. Feedback guided final improvements to the app.

Step 7: Implement intervention & Monitor knowledge use

As noted previously, the present paper focuses on a process evaluation of the iWalk toolkit implementation, which aligns with Step 7 of the KTA action cycle. We used the theories, models, and frameworks discussed earlier to develop the objectives, hypotheses, and methods of the process evaluation described here, to help interpret the main study findings. We expected that perceived toolkit quality, together with implementation fidelity and engagement with the intervention, would increase self-efficacy and readiness to measure outcomes, as steps on the causal pathway toward performance of recommended practices. Process evaluation objectives were to: (1) describe implementation fidelity and engagement of PTs and PLs with the toolkit; (2) describe toolkit quality, specifically toolkit implementability and app quality; and (3) evaluate effects of providing the toolkit on PTs’ readiness to measure outcomes, self-efficacy to perform recommended walk test practices, and app use for learning and practice (i.e., intermediate study outcomes).

Overview of process evaluation

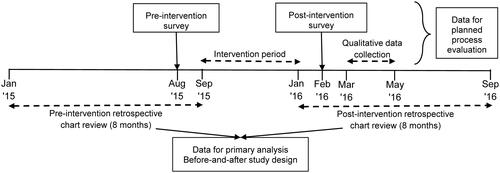

The process evaluation of the single-group before-and-after impact evaluation study incorporated quantitative and qualitative methods. Table 3 outlines the process evaluation framework. An online questionnaire was administered to participants in clinical practice pre-intervention (August 2015) and five months later (February 2016). Site-specific focus groups with PTs and interviews with PLs were held after completion of the learning sessions. Figure 3 provides an overview of data collection for the primary analysis and the process evaluation. The health sciences research ethics board (REB) at the University of Toronto and the REBs of each participating healthcare organization approved the study protocol. The TIDieR checklist [Citation71] was used to guide reporting.

Figure 3. Overview of data collection for the primary analysis (impact evaluation) and process evaluation.

Table 3. Process evaluation framework: constructs, variables, and data collection approach.

Eligibility and recruitment

Hospitals providing acute care, inpatient rehabilitation, or outpatient rehabilitation services for people post-stroke were eligible. PTs registered with the provincial regulatory body and providing walking rehabilitation to ≥10 patients post-stroke per year could participate. PLs and professional practice leaders (PPLs), who are responsible for advancing evidence-based practice and ensuring attainment of professional practice standards, were invited to participate. PPLs, but not PLs, carried a caseload. We recruited from six general and three rehabilitation hospitals in Ontario (n = 7) and Nova Scotia (n = 2) to understand toolkit effects across the care continuum and to optimize external validity. Eligible individuals (PTs, PLs, and PPLs) who expressed interest in participating provided written informed consent.

Intervention

The main intervention of this study was the iWalk toolkit, whose content was developed in Step 5 (“Develop intervention and implementation strategy & Adapt knowledge to local context”) of the KTA cycle and is described above. In September 2015, each site was mailed printed copies of the iWalk guide; Android smartphones for the 22 PTs who did not own a smartphone or were unwilling to use their personal smartphone; and lanyards for smartphones to enable safe, hands-free administration of the walk tests. An electronic copy of the iWalk guide and a private YouTube™ link to the iWalk video were emailed to participants. Participants with questions could email or telephone a PT expert with 24 years of experience treating people post-stroke; communication with this expert was tracked. Sites were asked to complete the learning sessions within a 5-month intervention period.

Quantitative data collection

An online questionnaire developed using Fluid Survey v3.0 was administered pre- and post-intervention. Table 3 outlines the variables, response scales, and timepoints for data collection. PTs’ engagement with the toolkit was evaluated by asking about completion of learning activities, attendance at learning sessions, and duration of app use. To evaluate app quality, we included the Mobile App Rating Scale [Citation72] (MARS) in the post-intervention questionnaire. MARS is a 23-item self-report questionnaire with four subscales: engagement, functionality, aesthetics, and information quality. In each subscale, items are rated on a 5-point scale (1-inadequate, 2-poor, 3-acceptable, 4-good, 5-excellent), and ratings are averaged to produce a subscale score. Evidence of validity and excellent inter-rater reliability of total and subscale scores among raters with expertise related to the topic of the app has been reported [Citation72]. To further evaluate app quality, supplementary MARS items rated on a 5-point Likert scale assessed the likelihood that the app would improve users’ knowledge, attitudes, intentions to change, and behaviour. To evaluate readiness to measure outcomes, the 26-item Clinician Readiness for Measuring Outcomes Scale [Citation73] (CReMOS) was included in the pre- and post-intervention questionnaire. CReMOS scores are used to classify individuals into one of the five stages of readiness to change (i.e., precontemplation, contemplation, preparation, action, and maintenance). Evidence of validity and excellent test–retest reliability of the CReMOS among rehabilitation professionals, including PTs, has been reported [Citation73]. To evaluate self-efficacy to perform recommended walk test practices, we developed 6 questionnaire items per test (see ) based on guidelines for developing self-efficacy scales [Citation74] and included them in the pre- and post-intervention questionnaire.
For each item, participants were asked to indicate their level of confidence to perform the described activity on an 11-point scale that ranged from 0% (no confidence) to 100% (completely confident). To evaluate the nature of app use for learning and practice, we developed questionnaire items to determine the frequency of app use to increase personal knowledge, administer and interpret each test, and share results (). Data on sociodemographic and practice characteristics (age, sex, highest degree attained, years of clinical experience, number of patients post-stroke on caseload, setting, preferred methods for evaluating walking, and entry-to-practice education on use of the walk tests) were collected pre-intervention using pre-tested items [Citation39]. Questionnaire items developed by the research team were piloted with two PTs with expertise in neurological rehabilitation and revised based on their feedback. Supplementary Material 4 contains all questionnaire items.
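The two scoring rules described above are: each MARS subscale score is the mean of its item ratings, and each self-efficacy item is answered on an 11-point scale from 0% to 100%. The Python sketch below applies both. The function names and example ratings are hypothetical. The assignment of items to subscales should come from the published MARS [Citation72], not from this example.

```python
from statistics import mean
from typing import Dict, List

MARS_VALID = range(1, 6)                  # 1-inadequate ... 5-excellent
SELF_EFFICACY_VALID = range(0, 101, 10)   # 0%, 10%, ..., 100% (11 points)


def mars_subscale_scores(ratings: Dict[str, List[int]]) -> Dict[str, float]:
    """Return the mean item rating for each MARS subscale."""
    scores: Dict[str, float] = {}
    for subscale, items in ratings.items():
        if not items:
            raise ValueError(f"no rated items for {subscale}")
        if any(r not in MARS_VALID for r in items):
            raise ValueError(f"{subscale} ratings must be integers from 1 to 5")
        scores[subscale] = float(mean(items))
    return scores


def check_self_efficacy(rating: int) -> int:
    """Reject confidence ratings that are not one of the 11 scale points."""
    if rating not in SELF_EFFICACY_VALID:
        raise ValueError("confidence must be 0, 10, 20, ..., or 100")
    return rating


if __name__ == "__main__":
    example = {
        "engagement": [4, 3, 4, 4, 3],     # hypothetical ratings
        "functionality": [5, 4, 5, 4],
    }
    print(mars_subscale_scores(example))  # {'engagement': 3.6, 'functionality': 4.5}
    print(check_self_efficacy(70))
```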

Qualitative data collection

At each site, focus groups were conducted with PTs using a semi-structured guide developed to explore their experiences using the iWalk toolkit, participating in learning sessions, and applying the evidence-informed approach to using the walk tests in clinical practice, and suggestions for toolkit improvement. In Ontario, focus groups were held onsite, whereas in Nova Scotia, focus groups were conducted by telephone with an onsite facilitator. PLs and PPLs were interviewed by phone using semi-structured interview guides developed to explore their experiences with organizing walkways and learning sessions, the features of the toolkit that facilitated these activities, and recommendations for improvement. Additionally, PPLs were asked about their experiences using the walk tests in clinical practice.

Focus groups and interviews were scheduled for one hour. The interviewer held a Master’s degree and had previous experience conducting qualitative research. The interviewer was not a PT and was not known to study participants. This may have enabled participants to speak openly about their experiences without fear of judgment, and to describe clinical scenarios in detail when asked for clarification. Ideas raised by participants in one focus group or interview were explored in subsequent sessions. After each session, the interviewer wrote reflective notes describing key messages and relating them to comments from previous sessions. Focus groups and interviews were audio-recorded and professionally transcribed, and the interviewer compared transcripts with the audio-recordings to verify accuracy.

Data analysis

Categorical data were summarized using frequencies and percentages. Percentages were interpreted as low (0%–30%), moderate (31%–60%), or high (61%–100%). MARS subscale scores used to evaluate app quality were summarized for PTs who used the app for ≥1 month. We used the mean, standard deviation, and range if scores were normally distributed, and otherwise the median and 25th/75th percentiles. The Wilcoxon signed-rank test was used to evaluate change from 0 to 5 months in item-level ratings of self-efficacy to perform recommended practices. McNemar’s test was used to evaluate change from 0 to 5 months in the percentage of PTs in the action or maintenance stage of readiness to measure outcomes. Adjustment for clustering of PTs within sites was not considered in the analysis, as study site did not exert a clustering effect in the main analysis [Citation30]. Statistical significance was set at α = 0.05.
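Two of the analysis rules above can be made concrete: the low/moderate/high bands for percentages, and McNemar’s test, which uses only the PTs who changed category between 0 and 5 months. The Python sketch below assumes the exact (binomial) form of McNemar’s test. The source does not state whether the exact or the chi-square version was used. The function names and the discordant counts in the example are hypothetical. The 50% and 78% values are the attendance bounds reported in the Abstract.

```python
from math import comb


def classify_percentage(pct: float) -> str:
    """Apply the low/moderate/high bands used to interpret percentages.

    The text gives integer bands (0-30, 31-60, 61-100); non-integer values
    falling between bands (e.g., 30.5) are assigned upward here, an assumption.
    """
    if not 0 <= pct <= 100:
        raise ValueError("percentage must be between 0 and 100")
    if pct <= 30:
        return "low"
    if pct <= 60:
        return "moderate"
    return "high"


def mcnemar_exact_p(moved_in: int, moved_out: int) -> float:
    """Two-sided exact McNemar p-value from the two discordant counts.

    moved_in:  PTs not in action/maintenance at 0 months but in it at 5 months
    moved_out: PTs in action/maintenance at 0 months but not at 5 months
    Concordant pairs do not enter the test, so they are not needed.
    """
    n = moved_in + moved_out
    if n == 0:
        return 1.0
    k = min(moved_in, moved_out)
    # Under H0, each discordant pair is equally likely to go either way (p = 0.5).
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


if __name__ == "__main__":
    print(classify_percentage(50), classify_percentage(78))  # moderate high
    print(mcnemar_exact_p(10, 1))  # 0.01171875 (hypothetical counts)
```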

Qualitative data and email communication with PLs/PPLs were used to determine implementation fidelity, specifically walkway set-up and the timing and conduct of the learning sessions comprising the implementation strategy. To explore the implementability of the toolkit as a component of toolkit quality, we performed a directed content analysis [Citation75] of the qualitative data. Each domain of the guideline implementability framework was defined in a codebook and used as an initial coding category. One author (N. M. S.) and a research assistant independently coded four transcripts using the codebook's predetermined codes, developing new codes for text that could not be categorized. The coders then met to discuss the results [Citation75], reconcile differences, and modify the codebook. The research assistant coded the remaining transcripts using NVivo 11.0 software.

Sample size

A sample size of 30 PTs was targeted to obtain a paired sample of 150 patients post-stroke (5 patients per PT in the pre- and post-intervention periods) for the main analysis of the impact evaluation [Citation30]. For the process evaluation, a sample size of 30 PTs was expected to provide 80% power (two-sided α = 0.05) to detect an increase of 32% or greater in the percentage of therapists in the action or maintenance stage (assuming that 3% of therapists would move out of the action or maintenance stage) [Citation76], and an effect size of ∼0.5 for the mean change in self-efficacy ratings from pre- to post-intervention [Citation77]. For the qualitative analysis, a sample size of 30 PTs was considered sufficient given that theoretical saturation was reached with 23 participants in a related study [Citation15].
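The self-efficacy part of the power statement can be checked with the common normal approximation for a paired comparison, d = (z₁₋α/₂ + z_power) / √n. This is a minimal sketch under that assumption; the source does not state which formula was used with [Citation77], the function names are hypothetical, and the McNemar calculation for readiness stages is not reproduced here.

```python
from math import sqrt
from statistics import NormalDist


def detectable_effect_size(n_pairs: int, alpha: float = 0.05, power: float = 0.80) -> float:
    """Smallest standardized mean change detectable with n paired observations.

    Normal approximation for a two-sided paired comparison:
        d = (z_{1 - alpha/2} + z_{power}) / sqrt(n)
    """
    z = NormalDist()  # standard normal distribution
    return (z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)) / sqrt(n_pairs)


def required_pairs(d: float, alpha: float = 0.05, power: float = 0.80) -> float:
    """Number of pairs needed to detect standardized change d (same approximation)."""
    z = NormalDist()
    return ((z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)) / d) ** 2


print(f"Detectable effect size with 30 PTs: {detectable_effect_size(30):.2f}")
print(f"Pairs needed for d = 0.5: {required_pairs(0.5):.1f}")
```

With 30 PTs the approximation gives a detectable effect size of about 0.51, and d = 0.5 requires about 31 pairs, in line with the ∼0.5 stated above. A calculation based on the t distribution rather than the normal would require a few more pairs.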

Step 8: Sustain knowledge use

Following completion of the process evaluation described in the present paper, the iWalk toolkit was made freely available at www.iwalkassess.com and launched using a comprehensive dissemination plan that targeted stroke and research networks, physical therapy schools worldwide, and members of the World Confederation for Physical Therapy.

Results

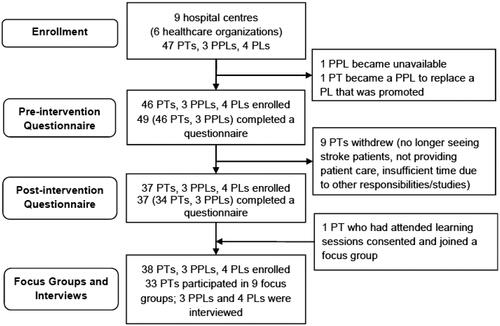

The figure above describes the flow of participants in the study. Three PPLs and 4 PLs overseeing practice at 9 hospital centres from 6 healthcare organizations participated. Hospitals provided acute care (n = 6), in-patient rehabilitation (n = 5), and out-patient rehabilitation (n = 6). Supplementary Material 5 outlines hospital characteristics. Pre-intervention, 1 PPL in an acute care hospital became unavailable, and 1 PL at a rehabilitation hospital was promoted and replaced by a PPL; together, the two facilitated study implementation. A total of 46 PTs (35 from Ontario, 11 from Nova Scotia) and 3 PPLs completed the online questionnaire at baseline, and 34 PTs and 3 PPLs completed it post-intervention. Of the 37 PTs with pre- and post-intervention data, 57% held a Bachelor's degree; 43% delivered acute care, 35% inpatient rehabilitation, and 22% outpatient rehabilitation. The percentages of PTs who had learned to administer the 10mWT and the 6MWT during their professional training were 27% and 78%, respectively. Preferred methods for evaluating walking included observational gait analysis (92%); Functional Independence Measure (62%); Timed "Up and Go" (62%); 2MWT (60%); 6MWT (49%); Community Balance and Mobility Scale (41%); and 10mWT (32%). We conducted nine focus groups with 33 PTs from all study sites and interviewed 3 PPLs and 4 PLs. Table 4 shows that the characteristics of PTs completing the questionnaire and of those participating in focus groups were similar. Throughout the study, the clinical expert received two questions from participants: one confirming the need to evaluate blood pressure before and after the 6MWT, and one about saving data in the app. An algorithm error in calculating 6MWT norms for men was detected and corrected partway through the study.

Table 4. Participant and practice characteristics.

Implementation fidelity

Walkways were marked completely (n = 6), partially (i.e., the 10 m section but not the acceleration/deceleration distances; n = 2), or not at all (n = 1). All sites conducted learning sessions: 7 sites (78%) completed three learning sessions within 3 (n = 3), 4 (n = 2), or 5 (n = 2) months; 1 site completed the recommended learning activities in two sessions within 6 months; and 1 site, delayed by an influenza outbreak, completed two of the three learning sessions within 7 months.

Engagement with the toolkit

Table 5 presents results on completion of learning activities. Attendance at learning sessions 1, 2, and 3 was 78%, 61%, and 50%, respectively. The most frequently reviewed module was Module 2, describing test administration (97%), and the least frequently reviewed was Module 6, describing evaluation using audit and feedback (62%). The educational video was viewed by 83% of PTs. The percentages of PTs who practiced the 10mWT and 6MWT with colleagues were 73% and 54%, respectively. Duration of app use (n = 37) was: 0 months (16%), 1 month (27%), 2 months (16%), 3 months (11%), 4 months (8%), and 5 months (22%).
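The app-use percentages can be converted back to whole-number counts as an arithmetic consistency check. The counts are not reported in the source; the sketch assumes each percentage equals round(100 × count / 37), and the names used are hypothetical. Under that assumption, 31 PTs used the app for ≥1 month, which is consistent with the n = 31 in Table 6 (MARS was restricted to PTs with ≥1 month of use), and 15 of 37 (about 41%) used it for 3–5 months, a moderate percentage under the study's bands.

```python
N_PTS = 37

# Reported percentage of PTs by months of app use during the intervention period
REPORTED_PCT = {0: 16, 1: 27, 2: 16, 3: 11, 4: 8, 5: 22}


def counts_from_percentages(pcts: dict, n: int) -> dict:
    """Back-calculate whole-number counts from whole-number percentages.

    Assumption: each reported percentage equals round(100 * count / n).
    Raises ValueError if the reconstruction is inconsistent with the report.
    """
    counts = {k: round(p * n / 100) for k, p in pcts.items()}
    for k, c in counts.items():
        if round(100 * c / n) != pcts[k]:
            raise ValueError(f"{k} months: {c}/{n} does not round to {pcts[k]}%")
    if sum(counts.values()) != n:
        raise ValueError("reconstructed counts do not sum to n")
    return counts


counts = counts_from_percentages(REPORTED_PCT, N_PTS)
used_at_least_1_month = sum(c for months, c in counts.items() if months >= 1)
used_3_to_5_months = sum(c for months, c in counts.items() if 3 <= months <= 5)
print(counts)
print(f"Used app >= 1 month: {used_at_least_1_month}")
print(f"Used app 3-5 months: {used_3_to_5_months} ({100 * used_3_to_5_months / N_PTS:.0f}%)")
```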

Table 5. Completion of learning activities by physical therapists (n = 37).

App quality

Table 6 presents MARS item ratings. Mean (standard deviation) scores for the engagement, functionality, aesthetics, and information subscales and the total score were 2.9 (0.6), 3.5 (0.6), 3.4 (0.6), 4.0 (0.8), and 3.5 (0.5), respectively. Table 7 presents PTs' level of agreement on the likelihood that the app will improve knowledge, attitudes, intentions, and behaviour for each walk test (n = 30). The percentages of PTs who agreed or strongly agreed that the app would likely increase each outcome were as follows: (i) knowledge to administer the walk test: 10mWT: 90%, 6MWT: 83%; (ii) knowledge to interpret test performance: 10mWT: 100%, 6MWT: 87%; (iii) attitude toward using the walk tests: 10mWT: 73%, 6MWT: 73%; (iv) intentions/motivation to administer the walk tests: 10mWT: 77%, 6MWT: 67%; and (v) walk test use: 10mWT: 73%, 6MWT: 67%.
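MARS subscale and total scores can be sketched as follows, assuming the scoring described by Stoyanov et al. [Citation72]: each objective subscale score is the mean of its 1–5 item ratings, and the total app-quality score is the mean of the four subscale scores. The item counts per subscale (5, 4, 3, and 7), the use of None for not-applicable items, the function names, and the example ratings are assumptions for illustration, not study data.

```python
from statistics import fmean
from typing import Dict, List, Optional

# Assumed item counts for the four objective MARS subscales
MARS_ITEMS = {"engagement": 5, "functionality": 4, "aesthetics": 3, "information": 7}


def subscale_mean(ratings: List[Optional[int]]) -> float:
    """Mean of 1-5 item ratings, skipping items marked not applicable (None)."""
    rated = [r for r in ratings if r is not None]
    if not rated:
        raise ValueError("subscale has no applicable items")
    if any(not 1 <= r <= 5 for r in rated):
        raise ValueError("MARS items are rated from 1 to 5")
    return fmean(rated)


def mars_scores(items: Dict[str, List[Optional[int]]]) -> Dict[str, float]:
    """Subscale means plus a total score computed as the mean of the subscale means."""
    scores = {}
    for name, n_items in MARS_ITEMS.items():
        ratings = items[name]
        if len(ratings) != n_items:
            raise ValueError(f"{name}: expected {n_items} items, got {len(ratings)}")
        scores[name] = subscale_mean(ratings)
    scores["total"] = fmean([scores[name] for name in MARS_ITEMS])
    return scores


# Hypothetical ratings from one PT (not study data); None marks a not-applicable item
example_ratings = {
    "engagement": [3, 3, 2, 3, 4],
    "functionality": [4, 4, 3, 3],
    "aesthetics": [3, 4, 3],
    "information": [4, 4, 4, None, 4, 4, 3],
}
print(mars_scores(example_ratings))

# Average of the reported subscale means (about 3.45; the reported total is 3.5)
print(f"{fmean([2.9, 3.5, 3.4, 4.0]):.2f}")
```

Because averaging is linear, the mean of PTs' total scores equals the average of the four subscale means when every PT has all four subscales, so the reported total of 3.5 is consistent with the reported subscale means.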

Table 6. Mobile App Rating Scale scores (n = 31)a.

Table 7. Likelihood of iWalkAssess app improving users’ knowledge, attitudes, intentions, and behaviour (n = 30).

Toolkit implementability

Table 8 summarizes the qualitative findings outlining the barriers and facilitators to using the iWalk toolkit in each implementability domain. Relevance: PTs noted that the guide clearly communicated not only how to administer the walk tests but also how to use the test results in clinical practice. Adaptability: Although the guide was designed as the primary educational resource, some PTs preferred learning the content from the app. Similarly, some PTs preferred to carry and refer to the entire guide while delivering patient care, even though the app and the protocol and reference value summaries in the guide appendix were designed as portable tools to facilitate clinical application. Usability: The length of the guide made it difficult to carry and to access information quickly in the clinical setting. Participants made no comments about challenges with carrying mobile phones to use the app. Supplementary Material 6 outlines participants' recommendations for improving the content of the guide and the app and for presenting the information in different formats to facilitate learning. Despite suggestions for alternative learning resources and strategies, there was consensus that the toolkit was a sufficient resource for learning and applying the practices.

Table 8. Qualitative findings describing iWalk toolkit implementability.

Validity: PTs said they valued incorporating research evidence into clinical practice and appreciated the guide's clear list of the clinical practices the toolkit aimed to promote, along with links to supporting research evidence and guideline recommendations. Applicability and communicability: PTs appreciated the pictorial instructions for people with aphasia; quick access to interpretations of walk test performance using normative, MDC, and community ambulation values; and case-based examples of how to communicate walk test results, as this information enhanced their knowledge and enabled them to apply test results to individual patients for patient education and goal setting. Focus groups revealed a misconception that the 6MWT is only for patients who can walk continuously for six minutes. This belief limited the perceived and actual clinical applicability of the test, particularly in the acute care setting. PTs noted additional factors that decreased the feasibility of toolkit implementation. Organizational factors included the difficulty of finding a traffic-free 30 m walkway for the 6MWT and hospital policies prohibiting taping of floors and walls. Toolkit factors included the lack of a case scenario reflecting the acute care setting; the perception among some PTs that setting goals for improved walk test performance was not patient-centred or relevant to acute care; and the inconsistent function of the app's 6MWT length counter.

Resource implications: PLs and PPLs described referencing the guide's equipment checklist and the instructions and diagrams for setting up walkways, but not the list of equipment and training costs, because sites either had access to the equipment needed to administer the tests or had no budget to purchase new equipment.

Implementation: PLs and PPLs consistently described using the instructions and agendas in the guide to set up and run learning sessions. At the site where the PPL became unavailable, administrative staff scheduled learning sessions. PTs followed the agendas during the sessions but did not describe completing learning activities in advance, as no one was available to remind them to do so. Evaluation: Most PLs/PPLs and PTs indicated that limited resources to perform an audit precluded the use of audit and feedback to monitor documentation of walk test use. They preferred the alternative proposed in the guide: scheduling a group meeting to reflect on and discuss practice change.

Effects of toolkit

The percentages of the 37 PTs in each stage of readiness to measure outcomes at 0 versus 5 months were: pre-contemplation, 0% versus 0%; contemplation, 0% versus 0%; preparation, 5% versus 8%; action, 73% versus 65%; and maintenance, 22% versus 27%. The percentage of PTs in the action or maintenance stage decreased from 95% to 92% between 0 and 5 months; this change was not significant (McNemar's S = 0.33, p = 0.56).
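The reported McNemar statistic can be reproduced from discordant-pair counts that are inferred, not reported: 95% and 92% of 37 correspond to 35 and 34 PTs in the action or maintenance stage, so the number leaving that stage (b) exceeds the number entering it (c) by one, and S = (b − c)² / (b + c) = 0.33 then implies b + c = 3, that is, b = 2 and c = 1. The sketch below assumes the uncorrected form of the test, which matches the reported value (a continuity-corrected statistic would be 0 here); the function name is hypothetical.

```python
from math import erfc, sqrt
from typing import Tuple


def mcnemar(b: int, c: int) -> Tuple[float, float]:
    """McNemar's chi-square test without continuity correction.

    b: PTs in the action/maintenance stage at 0 months but not at 5 months
    c: PTs not in the action/maintenance stage at 0 months but in it at 5 months
    Returns (statistic, two-sided p-value) on 1 degree of freedom.
    """
    if b + c == 0:
        raise ValueError("no discordant pairs")
    stat = (b - c) ** 2 / (b + c)
    # For 1 df, P(chi2 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2))
    p = erfc(sqrt(stat / 2))
    return stat, p


# Inferred discordant counts (not reported in the source): 2 PTs left, 1 entered
stat, p = mcnemar(b=2, c=1)
print(f"S = {stat:.2f}, p = {p:.2f}")  # prints "S = 0.33, p = 0.56"
```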

Table 9 presents self-efficacy ratings pre- and post-intervention. Mean changes in self-efficacy ratings to perform six 10mWT practices ranged from 17 to 30 percentage points and were significant (p ≤ 0.004). Mean changes in self-efficacy ratings to perform six 6MWT practices ranged from 2 to 9 percentage points and were not significant (p ≥ 0.112). For both tests, the two largest increases in self-efficacy related to interpreting performance and educating patients about performance.
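The item-level comparison described in the Data analysis section uses the Wilcoxon signed-rank test. The sketch below illustrates one common version: zero differences are dropped, absolute differences are ranked with average ranks for ties, and W+ is compared with its null distribution using a normal approximation with a tie-corrected variance. The source does not state which software or variant was used (statistical packages may use an exact distribution for small samples), and the function names and 0–100 ratings below are hypothetical, not study data.

```python
from collections import Counter
from math import erfc, sqrt
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1..n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # average of 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank(pre: Sequence[float], post: Sequence[float]) -> Tuple[float, float, float]:
    """Paired Wilcoxon signed-rank test (normal approximation, tie-corrected variance).

    Zero differences are dropped before ranking. Returns (W+, z, two-sided p).
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    ties = Counter(abs(d) for d in diffs)
    var_w = n * (n + 1) * (2 * n + 1) / 24 - sum(t**3 - t for t in ties.values()) / 48
    z = (w_plus - mean_w) / sqrt(var_w)
    p = erfc(abs(z) / sqrt(2))  # two-sided p-value from the standard normal
    return w_plus, z, p


# Hypothetical 0-100 self-efficacy ratings for 8 PTs (not study data)
pre_hypothetical = [50, 60, 40, 70, 30, 55, 65, 45]
post_hypothetical = [70, 80, 50, 90, 60, 55, 85, 40]
w_plus, z, p = wilcoxon_signed_rank(pre_hypothetical, post_hypothetical)
print(f"W+ = {w_plus}, z = {z:.2f}, p = {p:.3f}")
```

In this toy example, one pair has no change and is dropped, leaving seven differences; six are positive, so W+ is large relative to its expected value of 14 and the approximate two-sided p-value is about 0.025.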

Table 9. Self-efficacy to apply walk test practices pre- and post-intervention (n = 37).

Table 10 outlines the nature of app use during the intervention period. Approximately a third of PTs reported using the app most or all of the time to interpret performance on the 10mWT and 6MWT and to share 10mWT and 6MWT information with patients.

Table 10. Nature of iWalkAssess app use during intervention period (n = 31)a.

Discussion

Implementation fidelity and engagement with the toolkit

That all sites conducted learning sessions, together with moderate-to-high rates of attendance at learning sessions, review of the guide and video, and practice of the walk tests, provides some evidence linking the toolkit intervention with the improved walking assessment practice observed in the main analysis. That only a moderate percentage of PTs used the app for 3–5 months does not necessarily mean that these were the only PTs who attempted to use the walk tests, because PTs varied in whether they preferred the smartphone app or the printed guide. This finding aligns with previous research [Citation60].

Toolkit quality (toolkit implementability and app quality)

Findings related to toolkit quality provide insight into whether the intervention addressed known barriers across practice settings to enable practice change. Qualitative findings related to toolkit validity, applicability, and communicability, triangulated with MARS ratings, suggest that the intervention increased knowledge and fostered beliefs in the clinical relevance (i.e., outcome expectations) and implementation of recommended practices, except in acute care hospitals. This may help to explain why documented walk test administration was lowest in acute care settings. The app appeared to be a particularly effective tool for enabling quick and convenient access to test instructions and reference values. PTs are generally positive about using a personal digital device to support evidence-based practice [Citation60]. Few PTs in the current study raised concerns reported in previous research about visual accessibility on a small screen or about using a digital device in front of patients [Citation60].

Belief in clinical relevance is an important factor influencing best practice implementation [Citation78,Citation79]. There is little guidance, however, on how to change negative attitudes. Developers of the Theoretical Domains Framework [Citation64] recommend providing feedback on behaviour, including self-monitoring and comparison with peers, to help change PTs' beliefs about consequences. Our findings suggest that persuasive research-based arguments and guideline recommendations supporting the recommended practices, case scenarios reflecting the targeted clinical contexts, and information on how to interpret scores for individual patients can improve attitudes toward recommended assessment practices, thereby facilitating practice change.

Effects on readiness to measure outcomes, self-efficacy, and app use

The toolkit and implementation strategy could not increase PTs' readiness to measure outcomes because 95% of PTs were already in the action or maintenance stage at baseline. Although the scale used to determine readiness to change was not specific to the 10mWT and 6MWT, these results may indicate that PTs were actively modifying or maintaining their approach to using these walk tests as a result of the toolkit intervention [Citation73]. In contrast, self-efficacy to perform all recommended 10mWT practices increased significantly, with the largest gains observed for interpreting performance and educating patients about performance. A similar pattern of smaller, non-significant improvements in self-efficacy for 6MWT practices was observed. Because self-efficacy beliefs are considered to predict behaviour [Citation34], these results may partly explain why the primary analysis found a larger increase in documented administration of the 10mWT than of the 6MWT. Compared with the 10mWT, a higher percentage of PTs reported learning the 6MWT in their professional training and identified the 6MWT as a preferred approach to measuring walking capacity, and PTs consistently rated their self-efficacy for 6MWT practices higher at baseline, leaving less room for improvement. Moreover, difficulty administering the 6MWT due to hospital traffic was a universal challenge that toolkit resources could not overcome. Conducting the 6MWT on a 15-meter walkway, found to be reliable in a recent study [Citation80], may address some of the issues associated with the 30-meter walkway.

Limitations

Self-reported data collection methods in this study were vulnerable to social desirability bias [Citation81] and may have led to overestimation of effects on study outcomes such as knowledge, self-efficacy, attitudes, and behaviour. As noted previously, this before-and-after study did not have a control group; thus, a causal relationship between the toolkit and the measured changes in study outcomes cannot be assumed. Given that only about a third of PTs reported using the 10mWT at baseline, however, it is likely that the toolkit and implementation strategy contributed to the observed gains in self-efficacy to perform recommended 10mWT practices.

Conclusions

This study contributes novel understanding of how a process framework, a determinant framework, and classic theories can be used to guide the development and process evaluation of a toolkit and implementation strategy to promote recommended practices in rehabilitation. We used theory to plan the overall research process, identify determinants of behaviour, incorporate strategies to enable behaviour change in the intervention, and identify intermediate study outcomes and hypotheses guiding qualitative and quantitative data analysis. The approach may help to inform toolkit development to advance other rehabilitation practices.

Acknowledgements

The authors acknowledge Jonathan Lung for his work in developing the iWalkAssess app.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The data gathered and used for this analysis are not publicly available because they include identifying information about participants and potentially sensitive case information.

Additional information

Funding

References

- Krueger H, Koot J, Hall RE, et al. Prevalence of individuals experiencing the effects of stroke in Canada: trends and projections. Stroke. 2015;46(8):2226–2231.

- Mozaffarian D, Benjamin EJ, Go AS, et al. Executive summary: heart disease and stroke statistics-2016 update: a report from the American Heart Association. Circulation. 2016;133(4):447–454.

- Global health estimates: disease burden and mortality estimates. Geneva: World Health Organization; 2016 [cited 2019 May 30]. Available from: http://www.who.int/healthinfo/global_burden_disease/en/

- Teasell R, Salbach NM, Foley N, et al. Canadian stroke best practice recommendations: rehabilitation, recovery, and community participation following stroke. Part one: rehabilitation and recovery following stroke, 6th edition update 2019. Int J Stroke. 2020;15(7):763–788.

- Winstein CJ, Stein J, Arena R, et al.; American Heart Association Stroke Council, Council on Cardiovascular and Stroke Nursing, Council on Clinical Cardiology, and Council on Quality of Care and Outcomes Research. Guidelines for adult stroke rehabilitation and recovery: a guideline for healthcare professionals from the American Heart Association/American Stroke Association. Stroke. 2016;47(6):e98–e169.

- Australian clinical guidelines for stroke management: Stroke Foundation; 2017 [cited 2019 Dec 18]. Available from: https://strokefoundation.org.au/What-we-do/Treatment-programs/Clinical-guidelines

- Veerbeek JM, Van Wegen EEH, van Peppen RPS, et al. KNGF clinical practice guideline for physical therapy in patients with stroke; 2014 [cited 2020 Oct 27]. Available from: http://95.211.164.114/index.php/kngf-guidelines-in-english

- Duncan PW, Horner RD, Reker DM, et al. Adherence to postacute rehabilitation guidelines is associated with functional recovery in stroke. Stroke. 2002;33(1):167–177.

- Reker DM, Duncan PW, Horner BD, et al. Postacute stroke guideline compliance is associated with greater patient satisfaction. Arch Phys Med Rehabil. 2002;83(6):750–756.

- Nathoo C, Buren S, El-Haddad R, et al. Aerobic training in Canadian stroke rehabilitation programs. J Neurol Phys Ther. 2018;42(4):248–255.

- Menon-Nair A, Korner-Bitensky N, Ogourtsova T. Occupational therapists' identification, assessment, and treatment of unilateral spatial neglect during stroke rehabilitation in Canada. Stroke. 2007;38(9):2556–2562.

- Rochette A, Korner-Bitensky N, Desrosiers J. Actual vs best practice for families post-stroke according to three rehabilitation disciplines. J Rehabil Med. 2007;39(7):513–519.

- Salbach NM, Guilcher SJ, Jaglal SB. Physical therapists' perceptions and use of standardized assessments of walking ability post-stroke. J Rehabil Med. 2011;43(6):543–549.

- Salbach NM, Wood-Dauphinee S, Desrosiers J, et al. Facilitated interprofessional implementation of a physical rehabilitation guideline for stroke in inpatient settings: process evaluation of a cluster randomized trial. Implement Sci. 2017;12(1):100.

- Salbach NM, Veinot P, Rappolt S, et al. Physical therapists' experiences updating the clinical management of walking rehabilitation after stroke: a qualitative study. Phys Ther. 2009;89(6):556–568.

- Cochrane LJ, Olson CA, Murray S, et al. Gaps between knowing and doing: understanding and assessing the barriers to optimal health care. J Contin Educ Health Prof. 2007;27(2):94–102.

- Canadian Institutes of Health Research – Knowledge translation. 2020 [cited 2020 Oct 18]. Available from: http://www.cihr-irsc.gc.ca/e/29418.html

- Munce SEP, Graham ID, Salbach NM, et al. Perspectives of health care professionals on the facilitators and barriers to the implementation of a stroke rehabilitation guidelines cluster randomized controlled trial. BMC Health Serv Res. 2017;17(1):440.

- Pennington L, Roddam H, Burton C, et al. Promoting research use in speech and language therapy: a cluster randomized controlled trial to compare the clinical effectiveness and costs of two training strategies. Clin Rehabil. 2005;19(4):387–397.

- Petzold A, Korner-Bitensky N, Salbach NM, et al. Increasing knowledge of best practices in occupational therapists treating post-stroke unilateral spatial neglect: results of a knowledge-translation intervention study. J Rehabil Med. 2012;44(2):118–124.

- Richards CL, Malouin F, Nadeau S, et al. Development, implementation, and clinician adherence to a standardized assessment toolkit for sensorimotor rehabilitation after stroke. Physiother Can. 2019;71(1):43–55.

- Bayley MT, Hurdowar A, Richards CL, et al. Barriers to implementation of stroke rehabilitation evidence: findings from a multi-site pilot project. Disabil Rehabil. 2012;34(19):1633–1638.

- Yamada J, Shorkey A, Barwick M, et al. The effectiveness of toolkits as knowledge translation strategies for integrating evidence into clinical care: a systematic review. BMJ Open. 2015;5(4):e006808.

- Craig P, Dieppe P, Macintyre S, et al.; Medical Research Council Guidance. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ. 2008;337:a1655.

- Moore GF, Audrey S, Barker M, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ. 2015;350:h1258.

- Damschroder LJ. Clarity out of chaos: use of theory in implementation research. Psychiatry Res. 2020;283:112461.

- Strifler L, Cardoso R, McGowan J, et al. Scoping review identifies significant number of knowledge translation theories, models, and frameworks with limited use. J Clin Epidemiol. 2018;100:92–102.

- Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53.

- Sibley KM, Brooks D, Gardner P, et al. Development of a theory-based intervention to increase clinical measurement of reactive balance in adults at risk of falls. J Neurol Phys Ther. 2016;40(2):100–106.

- Salbach NM, MacKay-Lyons M, Brooks D, et al. Effect of a toolkit on physical therapists' implementation of an evidence-informed approach to using the 10-metre and 6-minute walk tests post-stroke: a before-and-after study. Cerebrovasc Dis. 2017;43(Suppl 1):I–II.

- Graham ID, Logan J, Harrison MB, et al. Lost in knowledge translation: time for a map? J Contin Educ Health Prof. 2006;26(1):13–24.

- Field B, Booth A, Ilott I, et al. Using the Knowledge to Action Framework in practice: a citation analysis and systematic review. Implement Sci. 2014;9:172.

- Gagliardi AR, Berta W, Kothari A, et al. Integrated knowledge translation (IKT) in health care: a scoping review. Implement Sci. 2016;11:38.

- Bandura A. Self-efficacy: toward a unifying theory of behavioral change. Psychol Rev. 1977;84(2):191–215.

- Gagliardi AR, Brouwers MC, Palda VA, et al. How can we improve guideline use? A conceptual framework of implementability. Implement Sci. 2011;6(1):26.

- Prochaska JO, DiClemente CC. Stages and processes of self-change of smoking: toward an integrative model of change. J Consult Clin Psychol. 1983;51(3):390–395.

- Sibley KM, Salbach NM. Applying knowledge translation theory to physical therapy research and practice in balance and gait assessment: case report. Phys Ther. 2015;95(4):579–587.

- Swinkels RAHM, van Peppen RPS, Wittink H, et al. Current use and barriers and facilitators for implementation of standardised measures in physical therapy in the Netherlands. BMC Musculoskelet Disord. 2011;12(1):1–15.

- Salbach NM, Jaglal SB, Korner-Bitensky N, et al. Practitioner and organizational barriers to evidence-based practice of physical therapists for people with stroke. Phys Ther. 2007;87(10):1284–1303.

- Jette DU, Bacon K, Batty C, et al. Evidence-based practice: beliefs, attitudes, knowledge, and behaviors of physical therapists. Phys Ther. 2003;83(9):786–805.

- Scurlock-Evans L, Upton P, Upton D. Evidence-based practice in physiotherapy: a systematic review of barriers, enablers and interventions. Physiotherapy. 2014;100(3):208–219.

- Jette DU, Halbert J, Iverson C, et al. Use of standardized outcome measures in physical therapist practice: perceptions and applications. Phys Ther. 2009;89(2):125–135.

- Salbach NM, O'Brien KK, Brooks D, et al. Considerations for the selection of time-limited walk tests poststroke: a systematic review of test protocols and measurement properties. J Neurol Phys Ther. 2017;41(1):3–17.

- Cheng DK, Dagenais M, Alsbury K, et al. Distance-limited walk tests poststroke: a systematic review of protocols and measurement properties (under review). J Neurol Phys Ther. 2020;41:3–17.

- Salbach NM, O'Brien K, Brooks D, et al. Speed and distance requirements for community ambulation: a systematic review. Arch Phys Med Rehabil. 2014;95(1):117–128.

- Salbach NM, O'Brien KK, Brooks D, et al. Reference values for standardized tests of walking speed and distance: a systematic review. Gait Posture. 2015;41(2):341–360.

- Kendall BJ, Gothe NP. Effect of aerobic exercise interventions on mobility among stroke patients: a systematic review. Am J Phys Med Rehabil. 2016;95(3):214–224.

- French B, Thomas LH, Coupe J, et al. Repetitive task training for improving functional ability after stroke. Cochrane Database Syst Rev. 2016;(11):CD006073.

- Hebert D, Lindsay MP, McIntyre A, et al. Canadian stroke best practice recommendations: Stroke rehabilitation practice guidelines, update 2015. Int J Stroke. 2016;11(4):459–484.

- Ng SS, Tsang WW, Cheung TH, et al. Walkway length, but not turning direction, determines the six-minute walk test distance in individuals with stroke. Arch Phys Med Rehabil. 2011;92(5):806–811.

- Ng SSM, Au KKC, Chan ELW, et al. Effect of acceleration and deceleration distance on the walking speed of people with chronic stroke. J Rehabil Med. 2016;48(8):666–670.

- Allet L, Leemann B, Guyen E, et al. Effect of different walking aids on walking capacity of patients with poststroke hemiparesis. Arch Phys Med Rehabil. 2009;90(8):1408–1413.

- Guyatt GH, Pugsley SO, Sullivan MJ, et al. Effect of encouragement on walking test performance. Thorax. 1984;39(11):818–822.

- Bohannon RW, Williams Andrews A. Normal walking speed: a descriptive meta-analysis. Physiotherapy. 2011;97(3):182–189.

- Hill K, Wickerson LM, Woon LJ, et al. The 6-min walk test: responses in healthy Canadians aged 45 to 85 years. Appl Physiol Nutr Metab. 2011;36(5):643–649.

- Pedestrian countdown signals: City of Toronto; 2019 [cited 2019 Dec 18]. Available from: https://www.toronto.ca/services-payments/streets-parking-transportation/traffic-management/traffic-signals-street-signs/types-of-traffic-signals/pedestrian-countdown-timers/

- Canadian capacity guide for signalized intersections: Institute of Transportation Engineers, District 7 – Canada; 2008 [cited 2019 Dec 18]. Available from: http://www.tac-atc.ca/sites/tac-atc.ca/files/site/doc/resources/report-capacityguide.pdf

- Stratford PW. Getting more from the literature: estimating the standard error of measurement from reliability studies. Physiother Can. 2004;56(1):27–30.

- Flansbjer UB, Holmback AM, Downham D, et al. Reliability of gait performance tests in men and women with hemiparesis after stroke. J Rehabil Med. 2005;37(2):75–82.

- Salbach NM, Veinot P, Jaglal SB, et al. From continuing education to personal digital assistants: what do physical therapists need to support evidence-based practice in stroke management? J Eval Clin Pract. 2011;17(4):786–793.

- Pattison KM, Brooks D, Cameron JI, et al. Factors influencing physical therapists' use of standardized measures of walking capacity poststroke across the care continuum. Phys Ther. 2015;95(11):1507–1517.

- iWalkAssess: the latest evidence-informed approach to walking assessment post-stroke. [cited 2020 Jan 20]. Available from: http://www.iwalkassess.com/

- Salbach NM, Solomon P, O'Brien KK, et al. Design features of a guideline implementation tool designed to increase awareness of a clinical practice guide to HIV rehabilitation: A qualitative process evaluation. J Eval Clin Pract. 2019;25(4):648–655.

- Michie S, Johnston M, Francis J, et al. From theory to intervention: mapping theoretically derived behavioural determinants to behaviour change techniques. Appl Psychol. 2008;57(4):660–680.

- Jamtvedt G, Young JM, Kristoffersen DT, et al. Does telling people what they have been doing change what they do? A systematic review of the effects of audit and feedback. Qual Saf Health Care. 2006;15(6):433–436.

- Ivers N, Jamtvedt G, Flottorp S, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012;(6):CD000259.

- Hysong SJ. Meta-analysis: audit and feedback features impact effectiveness on care quality. Med Care. 2009;47(3):356–363.

- Nielsen J. 10 usability heuristics for user interface design. 1994 [cited 2020 Oct 19]. Available from: https://www.nngroup.com/articles/ten-usability-heuristics/

- iWalk educational video: administration of the 10-metre walk test and 6-minute walk test with a person post-stroke: YouTube; 2015 [updated 2020 Mar 6]. Available from: https://www.youtube.com/watch?v=PI_gERx5EmI&t=309s

- Virzi RA. Refining the test phase of usability evaluation – how many subjects is enough. Hum Factors. 1992;34(4):457–468.

- Hoffmann TC, Glasziou PP, Boutron I, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348:g1687.

- Stoyanov SR, Hides L, Kavanagh DJ, et al. Mobile app rating scale: a new tool for assessing the quality of health mobile apps. JMIR Mhealth Uhealth. 2015;3(1):e27.

- Bowman J, Lannin N, Cook C, et al. Development and psychometric testing of the Clinician Readiness for Measuring Outcomes Scale. J Eval Clin Pract. 2009;15(1):76–84.

- Bandura A. Guide for constructing self-efficacy scales. In: Urdan T, Pajares F, editors. Self-efficacy beliefs of adolescents. Greenwich: Information Age Publishing; 2006. p. 307–337.

- Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15(9):1277–1288.

- Sample size for McNemar test or paired changes in proportions program: StatsToDo; 2020 [cited 2020 Oct 17]. Available from: http://www.statstodo.com/SSizMcNemar_Pgm.php

- Hulley SB, Cummings SR, Browner WS, et al. Designing clinical research. 4th ed. Philadelphia (PA): Wolters Kluwer – Lippincott Williams & Wilkins; 2013.

- Salbach NM, Guilcher SJT, Jaglal SB, et al. Determinants of research use in clinical decision making among physical therapists providing services post-stroke: a cross-sectional study. Implement Sci. 2010;5(1):77.

- Cane J, O'Connor D, Michie S. Validation of the theoretical domains framework for use in behaviour change and implementation research. Implement Sci. 2012;7:37.

- Cheng DK, Nelson M, Brooks D, et al. Validation of stroke-specific protocols for the 10-meter walk test and 6-minute walk test conducted using 15-meter and 30-meter walkways. Top Stroke Rehabil. 2019;21:1–11.

- Callegaro M. Encyclopedia of survey research methods. London: Sage Publications, Inc.; 2008 [cited 2019 Oct 29].

- Ventola CL. Mobile devices and apps for health care professionals: uses and benefits. P T. 2014;39(5):356–364.

- Buchholz A, Perry B, Weiss LB, et al. Smartphone use and perceptions among medical students and practicing physicians. J Mobile Tech Med. 2016;5(1):6.