Abstract

Purpose

We investigated the impacts of the COVID-19 pandemic on the modality of formal cognitive assessments (in-person versus remote assessments).

Materials and methods

We created a web-based survey with 34 items and collected data from 114 respondents from a range of health care professions and settings. We established the proportion of cognitive assessments which were face-to-face or via video or telephone conferencing, both pre- and post-March 2020. Further, we asked respondents about the assessment tools used and perceived barriers, challenges, and facilitators for the remote assessment of cognition. In addition, we asked questions specifically about the use of the Oxford Cognitive Screen.

Results

We found that the frequency of assessing cognition was stable compared with pre-pandemic levels. Use of telephone and videoconferencing cognitive assessments increased by approximately 10 and 18 percentage points, respectively. Respondents reported that remote assessment improved accessibility for participants and safety, but made it difficult to observe the subtleties of behaviour during test administration. Respondents called for greater availability of standardised, validated, and normed assessments.

Conclusions

We conclude that the pandemic has not been detrimental to the frequency of cognitive assessments. In addition, clinical practice has clearly shifted to include remote cognitive assessments, and wider availability of validated and standardised remote assessments is needed.

IMPLICATIONS FOR REHABILITATION

We caution against the wider use and interpretation of remote formal cognitive assessments, given the lack of validated, standardised, and normed assessments in a remote format.

Clinicians should seek out the latest validation and normative data papers to ensure they are using the most up-to-date tests and respective cut-offs.

Support is needed for individuals who lack knowledge of, or feel anxious about, the use of technology in formal cognitive assessments.

Introduction

In late 2019, a novel coronavirus disease (COVID-19) was identified in China and spread to Europe within months [Citation1]. After the first recorded UK case of COVID-19 at the end of January 2020, the United Kingdom implemented a national lockdown in March 2020 [Citation2], limiting or restricting face-to-face contact for many clinical services. Hospitals and primary care settings in the UK stopped providing non-essential or routine appointments, and all settings had to adjust organisationally to the challenges of personal protective equipment and staff/client safety [Citation3,Citation4]. Face-to-face appointments at general practices decreased sharply, with 71% of routine consultations conducted remotely, up from 25% at the same time in 2019 [Citation4]. In this paper, we investigate the effects of this halt to face-to-face appointments on the modality of formal cognitive assessments.

Formal cognitive assessments are typically completed in person. By formal cognitive assessment, we refer here to any cognitive testing undertaken using a standardised measure or tool, rather than informal brief checks. In 2019, a mixed-methods survey of videoconferencing use among clinical neuropsychologists in Australia found that only 7% of 90 respondents used videoconferencing for assessment; videoconferencing was used more often for feedback (18%) and interventions (21%) [Citation5]. This rate is likely to be much higher than in other parts of the world, given that Australia already had relatively well-established telehealth services (e.g., [Citation6]). The same survey also found that, where videoconferencing was considered, it was used very infrequently. This lack of engagement with remote or digitised assessment persisted despite reportedly high (98%) satisfaction with teleneuropsychological services [Citation7]. After the onset of the COVID-19 pandemic, abrupt changes in clinical practice brought several challenges. Practical limitations to in-person assessments included administration while wearing personal protective equipment, such as face or full-body coverings, which can limit what the client can see and hear [Citation8], as well as the use of shared assessment materials, which could increase the risk of cross-contamination and might not be permitted [Citation9].

Services needed to switch swiftly to remote and digital assessments, and some of these changes are likely to be long-term [Citation10]. Guidance on adjustments was published, for example, for stroke services [Citation11] and memory clinics [Citation12]. Guidance from the Royal College of Physicians suggested that multidisciplinary teams could use virtual platforms such as WhatsApp or FaceTime for virtual ward rounds and rehabilitation [Citation13] in order to continue practice. Guidance from the Division of Neuropsychology (a division of the British Psychological Society) encouraged remote assessment but advised caution when interpreting results against existing normative data [Citation14].

This swift change also encouraged the use of cognitive assessments that had not been validated, or lacked normative data, for remote administration [Citation10]. Encouragingly, research comparing remote and in-person administration has so far shown similar performance across versions [Citation15]. For instance, the Montreal Cognitive Assessment (MoCA) administered via videoconferencing has not been fully standardised with normative data [Citation16], but the team behind the MoCA recommended its use [Citation17], given partial validation of this version [Citation10]. Test-specific guidance has been released for some standardised measures that are not normally administered remotely (see, e.g., Oxford University Innovations: Clinical Outcomes, 2020 [Citation18], for Oxford Cognitive Screen remote assessment guidance); this guidance illustrates how tests could be administered remotely but again advises caution when interpreting results. With time, we anticipate further validation research on remote assessments, allowing clinicians to interpret remote assessment results with greater confidence. Since the original submission of the current study, for example, there have been validation studies of the Telephone MoCA (T-MoCA) in response to the COVID-19 pandemic [Citation19,Citation20].

There are currently limited data, from a global sample, on how frequently remote cognitive assessments were used before and after the COVID-19 pandemic began. One survey of 372 board-certified neuropsychologists in the USA, conducted in late March 2020, found that, following the start of the pandemic, only 21% were conducting in-person assessments, and 67% planned to use, or were already using, teleneuropsychological services for assessments [Citation21]. Of the 67% of respondents using teleneuropsychology, only 26% reported using it to administer neuropsychological batteries; the majority used it for interviews (73%) and for screening tests, verbal tests, brief batteries, dementia batteries, and mental status examinations (66%).

A separate survey conducted during the pandemic in the USA received responses from 230 independent practitioners of clinical neuropsychology and found that only 14% reported using teleneuropsychology for intake interviews, feedback, and some testing, and 3% for full neuropsychological examinations [Citation22]. We note that this survey asked only about full neuropsychological batteries, rather than whether individual neuropsychological tests (e.g., a single subtest) were used.

To date, teleneuropsychology surveys have exclusively recruited neuropsychologists or psychologists, and none have explicitly included the wider range of professionals who conduct cognitive assessments. Here, we wished to acknowledge the breadth of professions that run formal cognitive assessments in clinical practice, across a range of settings including neurorehabilitation and memory clinics. In this pre-registered study (registration available at https://osf.io/hvmx9), with a full data and analysis repository (see https://osf.io/uv8jr/), we used a web-based survey to ask healthcare professionals from a range of settings about their experiences of assessing cognition remotely and face-to-face. We aimed to estimate and compare the rates of remote assessment before and after the onset of the COVID-19 pandemic, focusing specifically on the reported rates of formal cognitive assessments (aim 1). We further aimed to identify which cognitive assessments were used both before the pandemic and currently, with a subset of questions focused specifically on the Oxford Cognitive Screen (aim 2). Finally, we set out to gather data on perceived barriers, challenges, and facilitators to remote assessment of cognition (aim 3). In line with the previously described online surveys on the use of teleneuropsychology before and after the onset of the COVID-19 pandemic, and extending questions used previously [Citation23], we anticipated lower use of teleneuropsychology before the pandemic, with an increase in telephone and videoconferencing since March 2020.

Methods

We report how we determined our sample size, all data exclusions (if any), all manipulations, and all measures included in the study [Citation24]. We followed the CHERRIES Equator Network guidance [Citation25] when reporting our web-based open questionnaire. Approval for the study was gained from the Central University Research Ethics Committee (R51993/RE001). Informed consent was obtained through a mandatory item on the web-based survey, which respondents were instructed to check only if they were aged 18 or over and agreed to take part after reading the participant information sheet. In advertisements for the survey, respondents were told that completion would take 5–15 min. The participant information sheet described how, where, and for how long data would be stored, who the investigating team were, and the purpose of the study.

Respondents

Two power analyses were conducted to determine the minimum sample size needed to detect differences in remote cognitive assessment practice before and after March 2020. The power analyses for both a one-sided paired t-test and a one-sided Wilcoxon signed-rank test (to be chosen depending on data characteristics) were set with an alpha of .05, a small Cohen's d of 0.20, a paired sample, and 80% power, using the pwr package (version 1.3–0) in R Studio [Citation26]. The power analysis revealed that we needed a minimum sample of 156 respondents for the paired t-test. We did not reach this sample size before the completion date set out in our pre-registration, so we conducted a sensitivity analysis to establish which effect size we could detect given our final sample of 114 respondents after exclusions (detailed later). With this sample size, a paired, one-sided test with an alpha of .05 and 80% power could detect an effect of Cohen's d = 0.23.
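The two calculations above (planned sample size and sensitivity) can be approximated without R. The sketch below is not the exact noncentral-t calculation that pwr performs; it assumes a common normal approximation for a one-sided paired t-test with a small-sample correction of z_alpha^2 / 2 added to the required number of pairs. The function names `required_pairs` and `detectable_d` are hypothetical. For the inputs reported here, it returns 156 pairs and d ≈ 0.23.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sd 1)


def required_pairs(d, alpha=0.05, power=0.80):
    """Approximate pairs needed for a one-sided paired t-test to detect Cohen's d."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha)
    z_power = STD_NORMAL.inv_cdf(power)
    # Normal approximation plus a z_alpha^2 / 2 small-sample correction (assumption).
    n = ((z_alpha + z_power) / d) ** 2 + z_alpha ** 2 / 2
    return math.ceil(n)


def detectable_d(n_pairs, alpha=0.05, power=0.80):
    """Sensitivity analysis: smallest Cohen's d detectable with n_pairs pairs."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha)
    z_power = STD_NORMAL.inv_cdf(power)
    # Same approximation as required_pairs, solved for d instead of n.
    return (z_alpha + z_power) / math.sqrt(n_pairs - z_alpha ** 2 / 2)


print(required_pairs(0.20))         # planned analysis -> 156
print(round(detectable_d(114), 2))  # final sample -> 0.23
```

Solving the same expression in both directions keeps the planned and sensitivity analyses consistent with each other; for an exact answer, pwr's `pwr.t.test` remains the reference.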

We opened the web-based survey for data collection on 17 September 2020 and closed data collection on 5 November 2020. Our intended completion date coincided with the second national lockdown in the UK in November 2020. We did not extend the data collection period, because the achieved sample size was adequate to detect a smallest effect size of interest only marginally different from the one planned.

Respondents were recruited by convenience sampling via word of mouth, social media, our websites, and email. In addition, we contacted the British Psychological Society's Division of Neuropsychology, the Royal College of Occupational Therapists Specialist Section – Neurological Practice, the International Neuropsychological Society, various stroke wards, and occupational therapists to promote the study through snowball sampling. Respondents were not otherwise selected, provided they met the inclusion criteria; we used the first seven items of the web-based survey to check all inclusion criteria except English language comprehension. Our inclusion criteria were that respondents were aged 18 or over, were willing to give informed consent, and were healthcare professionals who directly conducted formal cognitive assessments with service users and/or oversaw such assessments. We note that our choice of advertising channels biased our sample towards psychologists/neuropsychologists and occupational therapists working in stroke services, over and above other professionals who conduct formal cognitive assessments in other settings. This is discussed in the limitations section.

Respondents who were not healthcare professionals, or who had no current experience of completing or overseeing cognitive assessments with clients, were excluded. Our approved procedures did not allow data collection through cookies or IP addresses to track unique visitors to the web-based survey. There were 1451 link clicks to the survey landing page. Of those who followed the link, 31 continued through the consent process and then exited the survey, 20 proceeded to survey page 3 and then exited, 10 exited after page 4, and 3 exited after page 5. In total, 136 visitors completed all mandatory items and submitted their responses. Of the (non-unique) visitors who passed the informed consent page (1421), this gives a completion rate of 9.57% (136/1421).
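A completion rate divides the number of completers by the number of visitors who reached the relevant stage, not the reverse. A minimal sketch using the counts reported in the text; `completion_rate` is a hypothetical helper name:

```python
def completion_rate(completed, passed_consent):
    """Percentage of visitors past the consent page who submitted the survey."""
    if passed_consent <= 0:
        raise ValueError("passed_consent must be positive")
    # Completers are the numerator; visitors past consent are the denominator.
    return 100 * completed / passed_consent


rate = completion_rate(completed=136, passed_consent=1421)
print(f"Completion rate: {rate:.2f}%")  # -> Completion rate: 9.57%
```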

After exclusions, detailed in Supplementary Materials, we analysed data from 114 respondents. Further, we present our advertising materials, as approved through ethical review, in the Supplementary Materials.

Materials

For the current study, a web-based open survey was created and hosted on JISC Online Surveys [Citation27]. The survey was designed by the first and senior authors in consultation with a clinical psychologist (second author EK) working in neurorehabilitation services, to ensure the appropriateness and face validity of the survey items for our intended purposes. We created the custom survey to answer our research question, similar to previous studies [e.g., Citation26] on this topic. Before any data were collected, we generated simulated data from the web-based survey to ensure that it was administered as intended and to identify any issues affecting later analyses.

The web-based survey had a total of 34 items, nine of which were optional extras regarding the OCS. Items were presented over four webpages, with the percentage of pages completed visible at the top of the screen. Participants could press a back button to revise their answers.

All items of the web-based survey are available in Table 1.

Table 1. Items and items descriptions of the web-based online survey regarding pre- and post-COVID-19 pandemic cognitive assessments.

Of the original 34 items (including sub-items), 19 were available to all respondents. There were optional additional questions regarding the OCS and its use before and after March 2020, including whether it had been administered remotely following recently published guidance by the authors (see https://innovation.ox.ac.uk/news/ocs-remote-administration/ and https://tinyurl.com/yxz5hafn for a demonstration). The final items about remote OCS administration were included to gather specific feedback on this tool and to help address aim 2.

We structured the web-based survey to fulfil the aims of the project, with the first few items concerning the rate of cognitive and other assessments before the COVID-19 pandemic (i.e., before March 2020). The additional items, available only to participants who responded “Yes” to whether they had conducted or overseen assessments of cognition (item 8), aimed to gather data on the use of remote assessments of cognitive functioning both before and during the COVID-19 pandemic. The final section asked about perceived benefits, challenges, and facilitators of remote assessment of cognition, to fulfil aim 3. The optional section on the OCS was included to gather feedback for the authors on the remote version of the OCS, released online in May 2020, and to address aim 2.

Procedure

The web-based survey did not require an account and presented the information sheet and consent process before the survey began. Respondents could exit the survey at any point simply by closing the survey tab or browser.

All data were anonymous and not linked to names or personal information. Once completed, respondents could request a completion certificate to include in their portfolio evidencing continued professional development, or to confirm their interest to participate in further research. Respondents were asked to provide their completion ID to obtain their certificate. No linking information was stored following the sending of the certificate.

Data analysis

We explored descriptive participant statistics from the web-based survey, including where respondents were based and what roles they held. Because most respondents were from the UK, we split the data into UK and non-UK respondents; we present the main results for the UK sample and report the corresponding results for the non-UK sample at the end of each section. We present results for each study aim. To examine whether the format of assessment shifted, we compared median percentages of each modality of formal cognitive assessment before and after March 2020. Our data for percentages of face-to-face versus teleneuropsychology assessments violated the assumption of normality, so we used Wilcoxon signed-rank tests for these comparisons. We report medians and interquartile ranges (IQR) for all comparisons between before and after March 2020.

We counted how often respondents listed each formal cognitive assessment before and after March 2020, and the percentage of respondents who reported changing the assessments they used after March 2020. As the OCS was commonly used and was of particular interest to the authors, we summarise the findings from the optional OCS questions here. Finally, we summarised the perceived benefits, challenges, and facilitators identified in items 13 to 15.

To summarise the qualitative data, we developed a standardised coding system using thematic analysis ([Citation28] from [Citation29]), an appropriate method for establishing the frequency of themes emerging from open-ended survey questions. The first author read each answer to each qualitative question at least twice to become familiar with the data. The first author then generated initial codes for each qualitative item in the web-based survey, using example excerpts to classify the initial codes. Once the initial codes were classified, themes linking the excerpts were created within each survey item. Next, the first author compared codes across qualitative items to establish any crossover, or adaptation, of codes or themes between items. Finally, the first author defined the key themes that emerged across the qualitative items; the second author approved this process, and agreement was reached on the emerging themes and associated codes. The standardised coding scheme, and the frequency with which each code occurred in respondents' answers, is available in Table S1 in the Supplementary Materials.
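The coding itself is interpretive and was done by hand; only the final tabulation step, turning coded answers into code frequencies, is mechanical. A minimal sketch, assuming each response to an item has been tagged with a list of code labels and that percentages are relative to the number of responses to that item. The labels and the helper `code_frequencies` are hypothetical.

```python
from collections import Counter


def code_frequencies(coded_responses):
    """Percentage of responses to one survey item in which each code appears.

    coded_responses: one list of code labels per response. A code counts at
    most once per response, even if it was applied to several excerpts.
    """
    n = len(coded_responses)
    counts = Counter(code for codes in coded_responses for code in set(codes))
    return {code: round(100 * count / n, 2) for code, count in counts.most_common()}


# Hypothetical coded answers to a "benefits of remote assessment" item.
responses = [
    ["cost_saving", "safety"],
    ["cost_saving"],
    ["cost_saving", "accessibility", "cost_saving"],
    ["safety"],
]
print(code_frequencies(responses))
```

Counting each code once per response, rather than once per excerpt, keeps the percentages interpretable as "share of respondents who raised this theme".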

Results

Sample characteristics

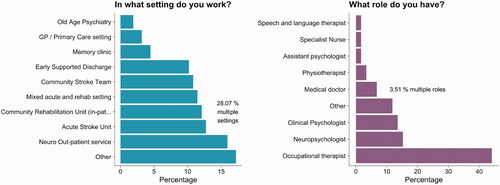

The 114 respondents who passed the attention check were mainly based in the UK (82 respondents; see Table 2 for respondents per country), worked in a variety of settings, and held a variety of roles. Figure 1 summarises the proportions of respondents working in each setting and role in the UK sample.

Figure 1. Percentage of respondents who reported each setting worked in and roles worked for the majority of time (N = 114). Figure available at https://osf.io/3qzrv/ under a CC-BY4.0 license.

Table 2. Reported frequencies of countries worked in.

The “Other” option for setting worked, selected 27 times, revealed multiple community-based settings (n = 6), paediatric settings (n = 3), neurological inpatient facilities (n = 3), private practices (n = 4), a vocational workplace, a sleep lab, a radiology department, a physiotherapist, an outpatient psychiatric facility, a cognition clinic, an emergency department, an eye outpatients facility, a child disability team, a general geriatric clinic, and a movement disorder clinic.

The “Other” option for role, selected 17 times, revealed roles such as a behaviour analyst, clinical researchers (n = 2), therapists (n = 2), a trainee clinical psychologist, a counsellor, a dietician, a nurse, an occupational psychologist, an orthoptist, a physio assistant, a radiographer, a receptionist, a sleep technician, a social prescriber, and a team leader.

The rate of remote assessment (any) before the COVID-19 pandemic

In the UK-only sample of 82 respondents, 13.41% used telephone calls and 1.22% used videoconferencing for assessments of any kind (not specific to cognition) before March 2020.

In the non-UK sample of 32 respondents, 0% used telephone calls and 9.38% used videoconferencing for assessments of any kind (not specific to cognition) before March 2020.

Cognitive assessments before and during the COVID-19 pandemic

Of the 82 UK respondents, five did not complete any cognitive assessments, and 10 completed items 9a–c incorrectly (e.g., percentages or absolute values did not sum to 100% or to the number of assessments run); these 10 were excluded from analyses of the percentages of cognitive assessments before March 2020.

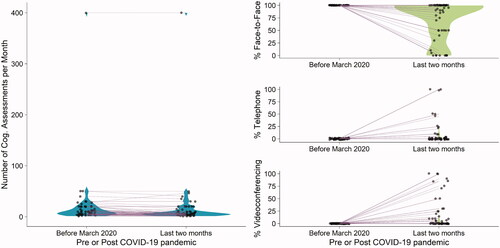

Respondents reported conducting or overseeing a median of 10 cognitive assessments per month before March 2020 (IQR = 15, range = 1–400). Of these cognitive assessments, a median of 100% (IQR = 0, range = 100–100) were face-to-face, and 0% were via telephone or videoconferencing.

During the COVID-19 pandemic, respondents conducted or oversaw a median of 6 cognitive assessments per month over the last 2 months (IQR = 10, range = 1–400). Of these, 72.53% (IQR = 50, range = 0–100) were face-to-face, 9.74% (IQR = 20, range = 0–100) were via telephone, and 17.74% (IQR = 20, range = 0–100) were by videoconferencing.

Because the data violated normality, we conducted three one-sided Wilcoxon signed-rank tests with continuity correction and a Bonferroni-corrected alpha of .05/3 ≈ .0167 to compare the numbers of cognitive assessments administered before and after March 2020, and the percentages of face-to-face, videoconferencing, and telephone cognitive assessments before and after March 2020 (see Figure 2). Importantly, there was no significant difference between the number of cognitive assessments completed in an average month before March and the average over the last 2 months (MD = 2.12, V = 616, p = 1, r = 0).
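The tests above can be illustrated with a from-scratch sketch of a one-sided paired Wilcoxon signed-rank test. It assumes the normal approximation with a continuity correction and a tie-corrected variance, mirroring how R's `wilcox.test` behaves when it cannot compute an exact p-value (for example, with ties or zero differences). Zero differences are dropped, V is the sum of ranks of positive differences, and the effect size is taken as r = Z/√n with n the number of non-zero pairs; the text does not state which n was used, so that choice is an assumption. The helper `signed_rank_test` and the example data are hypothetical.

```python
import math
from statistics import NormalDist


def signed_rank_test(before, after, alternative="less"):
    """One-sided paired Wilcoxon signed-rank test (normal approximation).

    Differences are before - after. alternative="less" tests whether they tend
    to be negative, i.e. whether values increased after March 2020.
    """
    # Drop pairs with no change, as R's wilcox.test does by default.
    diffs = [b - a for b, a in zip(before, after) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank |differences| in ascending order; tied values share their average rank.
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    tie_term = 0.0  # sum of (t^3 - t) over tie groups, for the variance correction
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average of 1-based ranks i+1..j+1
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1

    # V = sum of ranks of positive differences (the statistic R reports).
    v = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48)

    # Continuity correction as in R: add 0.5 for "less", subtract 0.5 for "greater".
    if alternative == "less":
        z = (v - mean + 0.5) / sigma
        p = NormalDist().cdf(z)
    elif alternative == "greater":
        z = (v - mean - 0.5) / sigma
        p = 1 - NormalDist().cdf(z)
    else:
        raise ValueError("alternative must be 'less' or 'greater'")
    return {"V": v, "z": z, "p": p, "n": n, "r": z / math.sqrt(n)}


# Hypothetical telephone-assessment percentages for eight respondents.
before = [0] * 8
after = [5, 10, 15, 20, 25, 30, 35, 40]
result = signed_rank_test(before, after, alternative="less")
alpha = 0.05 / 3  # Bonferroni-corrected threshold for three tests
print(result["V"], round(result["p"], 4), result["p"] < alpha)
```

When every respondent's percentage rises, no difference is positive, so V = 0. This is the pattern the text reports for telephone assessments.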

Figure 2. Change in the number and modality of cognitive assessments before and since the start of the COVID-19 pandemic. The left-hand graph shows the number of cognitive assessments conducted in an average month before and during the pandemic; the right-hand graph shows the percentage of assessments conducted by telephone or videoconferencing before and during the pandemic. In both graphs, lines connect each individual's data before and during the COVID-19 pandemic. Figure available at https://osf.io/b6rjq/ under a CC-BY4.0 license.

For face-to-face assessments, although the percentage of formal cognitive assessments carried out face-to-face appeared to decrease from before March to the last 2 months, this difference was not statistically significant (MD = 27.47, V = 435, p = 1, r = 0).

However, the percentage of telephone assessments differed significantly before and after March 2020, with an increase in telephone assessments (MD = −9.74, V = 0, p < .001, r = −.42). The same was true for videoconferencing, which also increased (MD = −17.74, Z = −4.30, p < .001, r = −.54). All effect sizes for significant tests (r = −.37 to −.56), when converted to Cohen's d (d = −0.80 to −1.35), exceeded in magnitude the minimum effect size detectable with 80% power in our sensitivity analysis.
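The conversion d = 2r/√(1 − r²) reproduces the endpoints reported above (r = −.37 gives d ≈ −0.80; r = −.56 gives d ≈ −1.35). It is therefore plausibly the formula used, although the text does not state it. A minimal sketch that converts each r and compares it with the sensitivity-analysis threshold of d = 0.23; `r_to_d` and `SENSITIVITY_D` are hypothetical names.

```python
import math


def r_to_d(r):
    """Convert an effect size r to Cohen's d via d = 2r / sqrt(1 - r^2)."""
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    return 2 * r / math.sqrt(1 - r ** 2)


SENSITIVITY_D = 0.23  # smallest detectable d from the sensitivity analysis

for r in (-0.37, -0.42, -0.54, -0.56):
    d = r_to_d(r)
    print(f"r = {r:.2f} -> d = {d:.2f}; exceeds threshold: {abs(d) > SENSITIVITY_D}")
```

The comparison uses |d| because the effects are negative only by convention (before minus after).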

The same patterns were present in the non-UK sample, with a few exceptions. After excluding respondents who answered items 9a–c to 10a–c incorrectly, seven non-UK respondents remained. The non-UK group conducted a similar number of cognitive assessments in an average month (both groups had a median of 10) and similar proportions of telephone and videoconferencing assessments (0% each) before March 2020. However, of the seven, only one shifted from 100% face-to-face assessment to a 50/50 split between videoconferencing and face-to-face assessment.

Cognitive assessments used

From the UK sample, 18 respondents gave details of specific tests they had used remotely. The test most commonly reported for remote cognitive assessment before March 2020 was the Addenbrooke's Cognitive Examination (ACE; [Citation30]) (reported by 38.89% of the 18 respondents), followed by the Montreal Cognitive Assessment (MoCA; [Citation31]), the Delis-Kaplan Executive Function System (D-KEFS; [Citation32]) (22.22%), and the Oxford Cognitive Screen (22.22%). Overall, 16.42% of respondents reported not using the same tests during the pandemic as before, and eight respondents reported the tests they now use. These included the Repeatable Battery for the Assessment of Neuropsychological Status (n = 1), the ACE (n = 2), mini-MoCA (n = 1), MoCA blind (n = 1), telephone MoCA (n = 1), the D-KEFS (n = 2), the Behavioural Assessment of Dysexecutive Function [Citation33] (n = 1), the Wechsler Adult Intelligence Scale (n = 2), the Wechsler Memory Scale (n = 1), the OCS (n = 1), and the Telephone Interview for Cognitive Status [Citation34] (n = 1).

In the non-UK sample, 14 respondents reported tests used remotely at any time point; the most commonly used test was the MoCA (35.71%), followed by the D-KEFS (28.57%) and the Wechsler Test of Adult Reading [Citation35]. Two respondents changed tests after March 2020; changes included starting to use the telephone Mini-Mental State Examination [Citation36] and child-specific tests. In Supplemental Table S3, we present all of the tests reported to be used in remote assessment. From this point onwards, data are no longer split by country.

For aim 2, we also investigated use of the Oxford Cognitive Screen (OCS) before and after March 2020, to explore the uptake of remote assessment using the OCS. Before March 2020, 51.75% of all 114 respondents reported using the OCS. Users of the OCS were primarily occupational therapists (68.33%); other users included clinical psychologists (8.33%), medical doctors (6.67%), neuropsychologists (6.67%), physiotherapists (3.33%), assistant psychologists (1.67%), speech and language therapists (1.67%), and others (3.33%), including a clinical researcher and a dietician. The OCS was primarily used in acute stroke units (19.78%) and was also used by community stroke teams (16.48%), community rehabilitation units (15.38%), early supported discharge teams (14.29%), neuro out-patient facilities (12.09%), mixed acute and rehabilitation settings (10.99%), memory clinics (2.20%), and others (8.79%), including a cognition clinic, neurological in-patient settings (n = 2), a community neuro service, neurological rehabilitation (n = 4), a brain injury service, and a geriatric clinic. Across 86 responses, respondents reported using the OCS with the following patient groups: stroke = 57, traumatic brain injury = 17, mild cognitive impairment = 9, dementia = 2, and other = 1 (a brain tumour).

Since March 2020, 43.86% of respondents reported using the OCS. Of those who reported using the OCS since March 2020, 18% had used the remote assessment version. Respondents reported that they liked using the OCS because it was aphasia-friendly, easily adapted to remote use, and easy to administer compared with other tests.

The most commonly suggested improvement (33.33% of respondents) was that the entire OCS should be administered remotely, rather than requiring a pack of materials to be sent ahead of time.

Of the 58 responses on reasons for administering or not administering the remote OCS, those who had administered it (n = 9) most commonly did so to assess cognition (55.56%) and because it was integrated into the wider remote assessment offered by their professional service (22.22%). For those who did not administer the remote OCS (35 respondents), the most common reasons were working in in-patient facilities with limited need for remote assessment (37.14%) and a lack of awareness that a remote version existed (22.86%).

Perceived benefits, challenges, and facilitators to remote assessment of cognition

We asked respondents who reported using cognitive assessments what they experienced as the benefits of remote assessment. The most commonly reported theme across the 97 responses reflected the economic, time, and environmental cost savings associated with remotely administering tests (43.30%). The second most common benefit was safety, in terms of reduced COVID-19 exposure and infection control (31.96%). This was followed by increased accessibility for participants attending appointments, with travel and rural communities frequently mentioned (21.54%).

Respondents (n = 97) reported that the key barrier to remote assessment of cognition was that they could not pick up on subtleties of behaviour or see how participants were responding to the questions or tasks (28.87%). This lack of observation made it harder to interpret the assessment results for some participants. The second most frequently reported barrier was that some participants could not be tested because they lacked knowledge about technology and were therefore hesitant to use it (27.84%). Some respondents reported that their participants were anxious about remote assessment because it was new to them. Third, respondents reported concerns about internet reliability and technical issues making the connection unstable during remote assessments (26.80%). Another notably frequent concern was the lack of standardised, validated, and normed assessments for remote use, either because such assessments did not exist or because respondents could not access them (17.50%).

Finally, when asked what could facilitate remote assessment of cognition, respondents most frequently called for more standardised, validated, and normed assessments to be available for use (27.84%). Increasing access to remote assessment software (13.40%), increasing access to technology (13.40%), and having a family member or someone else physically support the participant during administration (13.40%) were each mentioned equally often. Full details of the coded responses to the qualitative items can be found in Table S2.

Discussion

We presented a pre-registered web-based survey study that investigated changes in the assessment of cognition before and during the COVID-19 pandemic. A sample of 114 healthcare professionals (135 before exclusions) responded to our survey. They worked in clinical settings including primary care, in-patient settings, and specialised neurological out-patient services, and in roles ranging from physiotherapists and occupational therapists to neuropsychologists and medical doctors. This heterogeneous sample reflects the variety of professions that assess cognition in clinical practice. One of our key aims was to capture this clinical reality rather than limit the survey to neuropsychologists/psychologists performing cognitive assessments. Most of the sample were from the UK, but there were responses from across the globe. To allow meaningful interpretation of certain subsections, we analysed the UK subsample separately, although the broad finding of increased remote cognitive assessment since March 2020 was evident throughout the full international sample.

Importantly, we found that the overall number of cognitive assessments completed did not differ significantly from before the pandemic, which provides reassurance that this important means of assessing hidden deficits in neurological populations remained part of clinical services. This is a testament to the ingenuity and flexibility of teams in swiftly adapting their ways of working to keep these services running during this period of the COVID-19 pandemic.

We found that the use of videoconferencing and telephone conferencing to assess cognition increased significantly, by 9.74% and 17.74% for video and telephone conferencing, respectively. This is in line with recent research in other samples [Citation21,Citation23]. In terms of overall remote assessment prior to the pandemic, our results did not match those of Hammers et al. [Citation23], who found a self-reported use of video neuropsychological assessment of 11% prior to the pandemic, whereas we found that rates of remote assessment were less than 1% across the whole sample. In addition, Hammers et al. [Citation23] found no significant increase in video usage (from 11% to 15%). These discrepancies may reflect differences in the respondent samples: over 80% of the respondents in Hammers et al. [Citation23] were neuropsychologists and psychologists, whereas our sample covered a diverse range of roles and settings. It is highly likely that many of the occupational therapists in our sample worked in settings where no telehealth was available until it was implemented following the COVID-19 restrictions. Indeed, we found that none of our sample used remote assessment pre-pandemic, and during the pandemic nearly half (45.20%) of respondents reported using videoconferencing for cognitive assessment.

We found that the most commonly used tests to assess cognition before the pandemic were the Addenbrooke's Cognitive Examination [Citation30], the Montreal Cognitive Assessment [Citation31], the Delis-Kaplan Executive Function System [Citation32], and the Oxford Cognitive Screen (OCS) [Citation37]. There was little change in the tests used after the switch to remote assessment during the pandemic. This means that many of the same tests were used before and during the pandemic, even though they had not been validated in the new remote format, which potentially affects the interpretation of test performance and makes clinical decisions more complex than usual. Half of all respondents reported that they used the OCS, and this proportion decreased only slightly during the pandemic. The main reason respondents gave for not administering remote tests was that they worked in in-patient settings where remote testing was not required. For the remote version of the OCS, which remains to be validated in this format, a common reason for not administering the test was not knowing it existed. There is a clear need to develop and validate tests for remote use, and to disseminate these validated versions to health professionals in clinical practice as soon as they become available.

With regard to the benefits, challenges, and facilitators of remote assessment of cognition, respondents reported that remote assessments saved money and time, were COVID-19 secure, and increased accessibility for hard-to-reach participants who lived far away or did not travel. However, in terms of challenges, respondents felt that they could not gather enough information from observing behavioural responses, which might otherwise point to subtle impairments or lead to different conclusions, and that clients themselves were generally hesitant to use technology. While less frequently reported, an important issue that arose was the lack of standardised and validated tools for the remote assessment of cognition.

Limitations and future research

To advance the evidence base on the use of remote cognitive assessments, the current research intended to collect web-based survey data from a global sample on differences in practice before and during the start of the COVID-19 pandemic. However, the vast majority of the recruited sample were from the UK, despite attempts at wider distribution through social media and international professional bodies such as the International Neuropsychological Society. As such, we could not draw firm conclusions about the global use of remote cognitive assessments, or examine differences by geographical area and severity of the pandemic. Instead, we focussed statistical analyses on the UK-only sample and made comparisons with the smaller non-UK sample. However, the differences between these samples were very small, suggesting that the observed rates in the UK may reflect a more global picture of shifts in the modality of formal cognitive assessments.

We recruited through word of mouth, social media, websites, email, and direct communication with UK-based professional groups, and this potentially restricted our sample. Moreover, these recruitment avenues likely introduced a selection bias towards respondents who were motivated to take part in research, rather than reflecting the full breadth of allied health professionals conducting cognitive screening. Our sampling outcome was similar to that of Hammers et al. [Citation23], who recruited mostly USA-based respondents with smaller proportions from elsewhere in the world, so this problem is not unique to this paper. In future, greater collaboration between international researchers and the inclusion of more international societies or professional bodies based in other countries could help achieve a truly global, more representative sample.

Nevertheless, our sample was large enough for adequate statistical power, and the findings of our UK analysis replicated even in the smaller, more internationally diverse sample in this paper. Moreover, we aimed to capture the uptake of and shift towards remote assessment in the first critical phase of the pandemic, which should be invaluable in shaping future investigations.

Finally, a number of participants answered the percentage-based items (items 9–10c) incorrectly: their percentages of formal cognitive assessments conducted face-to-face or remotely did not sum to 100% or to the total number of tests they ran. In retrospect, a validation rule requiring responses to sum to 100%, with an error notification when they did not, would have prevented data loss for those items. Further research may also investigate whether there were changes in available medical resources or staff, which may have affected the criteria for proceeding with cognitive evaluation itself. Especially in places with very severe waves and high mortality due to the pandemic, this could, at least temporarily, have reduced the frequency of cognitive assessments, remote or otherwise.
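The response validation suggested above amounts to a simple sum check. The sketch below illustrates it in Python, assuming each respondent's answers to the percentage items arrive as a mapping from assessment modality to percentage; the function name `check_percentages`, the modality labels, and the 0.5-point rounding tolerance are hypothetical and are not features of the survey platform used in this study.

```python
from typing import Mapping, Optional


def check_percentages(responses: Mapping[str, float], tolerance: float = 0.5) -> Optional[str]:
    """Return an error message if the modality percentages are invalid, else None.

    Hypothetical helper: `responses` maps a modality label (e.g. "face-to-face",
    "video", "telephone") to the percentage of a respondent's cognitive
    assessments delivered in that modality.
    """
    # Reject any single value outside the 0-100 range.
    for modality, value in responses.items():
        if not 0 <= value <= 100:
            return f"{modality}: {value:g}% is outside the 0-100% range."
    # The values must add up to 100, allowing a small rounding tolerance.
    total = sum(responses.values())
    if abs(total - 100) > tolerance:
        return f"Percentages sum to {total:g}%; please adjust them to sum to 100%."
    return None


if __name__ == "__main__":
    # A valid response: 70 + 20 + 10 = 100, so no message is returned.
    print(check_percentages({"face-to-face": 70, "video": 20, "telephone": 10}))
    # An invalid response that would trigger the error notification: 60 + 30 + 5 = 95.
    print(check_percentages({"face-to-face": 60, "video": 30, "telephone": 5}))
```

Returning a message rather than raising an exception would let a survey form show the text as an on-screen error notification and ask the respondent to correct the entries before submitting.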

Based on the outcomes of this study and current research, there is an imperative to develop validated, standardised, and feasible tools to assess participants remotely through videoconferencing, telephone conferencing, or both. Such tools are instrumental in giving clinicians greater confidence in the conclusions drawn from remote assessments and clearer normative data against which to compare performance.

Conclusion

The current study investigated shifts in the formal assessment of cognition before and during the COVID-19 pandemic across a wide range of allied health professionals involved in assessing cognition. We found that the number of cognitive assessments carried out, and the number of face-to-face assessments, did not differ between the periods before and during the pandemic. However, there was a large increase in the use of video and telephone conferencing to assess cognition. Our study highlights the immediate need for standardised and validated tests that give clinicians greater confidence when administering them remotely and that participants can confidently take part in.

Acknowledgements

The views expressed in the submitted article are the authors' own views and not an official position of the institution or funder.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The data and analysis scripts that support the findings of this study are openly available on the Open Science Framework (anonymised view-only link for peer review: https://osf.io/uv8jr/?view_only=6a2057f4a1e34301a6b56a5ed851876b).

References

- Lescure F-X, Bouadma L, Nguyen D, et al. Clinical and virological data of the first cases of COVID-19 in Europe: a case series. Lancet Infect Dis. 2020;20(6):697–706.

- Dropkin G. COVID-19 UK lockdown forecasts and R0. Front Public Health. 2020;8:256.

- Coetzer R, Bichard H. The challenges and opportunities of delivering clinical neuropsychology services during the COVID-19 crisis of 2020. Panamer J Neuropsychol. 2020;14:29–34.

- Thomas S, Mancini F, Ebenezer L, et al. Parkinson’s disease and the COVID-19 pandemic: responding to patient need with nurse-led telemedicine. Br J Neurosci Nurs. 2020;16(3):131–133.

- Chapman JE, Ponsford J, Bagot KL, et al. The use of videoconferencing in clinical neuropsychology practice: a mixed methods evaluation of neuropsychologists’ experiences and views. Aust Psychol. 2020;55(6):618–616.

- Bradford NK, Caffery LJ, Smith AC. Awareness, experiences and perceptions of telehealth in a rural Queensland community. BMC Health Serv Res. 2015;15:427. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4587917/.

- Parikh M, Grosch MC, Graham LL, et al. Consumer acceptability of brief videoconference-based neuropsychological assessment in older individuals with and without cognitive impairment. Clin Neuropsychol. 2013;27(5):808–817.

- Phillips NA, Andrew M, Chertkow H, et al. Clinical judgment is paramount when performing cognitive screening during COVID-19. J Am Geriatr Soc. 2020;68(7):1390–1391.

- Coetzer R. First impressions of performing bedside cognitive assessment of COVID-19 inpatients. J Am Geriatr Soc. 2020;68(7):1389–1390.

- Hantke NC, Gould C. Examining older adult cognitive status in the time of COVID-19. J Am Geriatr Soc. 2020;68(7):1387–1389.

- Ford PGA, Hargroves DD, Lowe DD, et al. Adapting stroke services during the COVID-19 pandemic: an implementation guide. 2020;57:1–8. Available from: https://www.nice.org.uk/media/default/about/covid-19/specialty-guides/specialty-guide-stroke-and-coronavirus.pdf

- NHS London Clinical Networks. Guidance on remote working for memory services during COVID-19 [Internet]. 2020. Available from: https://www.rcpsych.ac.uk/docs/default-source/members/faculties/old-age/guidance-on-remote-working-for-memory-services-during-covid-19.pdf?sfvrsn=ef9b27a9_2.

- NHS England, Royal College of Physicians. Clinical guide for the management of stroke patients during the coronavirus pandemic. Canada: NHSE and NHSI; 2020.

- Division of Neuropsychology [Internet]. Division of neuropsychology professional standards unit guidelines to colleagues on the use of tele-neuropsychology. The British Psychological Society. 2020; Available from: https://www.bps.org.uk/sites/www.bps.org.uk/files/Member%20Networks/Divisions/DoN/DON%20guidelines%20on%20the%20use%20of%20tele-neuropsychology%20%28April%202020%29.pdf.

- Brearly TW, Shura RD, Martindale SL, et al. Neuropsychological test administration by videoconference: a systematic review and meta-analysis. Neuropsychol Rev. 2017;27(2):174–186.

- Phillips NA, Chertkow H, Pichora-Fuller MK, et al. Special issues on using the Montreal cognitive assessment for telemedicine assessment during COVID-19. J Am Geriatr Soc. 2020;68(5):942–921.

- MoCA Test Inc. Remote MoCA Testing [Internet]. 2020. [cited 2020 Sep 4]. Available from: https://mailchi.mp/mocatest/remote-moca-testing?e=bbeb81559c.

- Oxford University Innovations: Clinical Outcomes [Internet]. Oxford cognitive screen remote administration version now available! Oxford University Innovation. 2020; [cited 2020 Sep 4]. Available from: https://innovation.ox.ac.uk/news/ocs-remote-administration/.

- Katz MJ, Wang C, Nester CO, et al. T-MoCA: a valid phone screen for cognitive impairment in diverse community samples. Alzheimers Dement Diagn Assess Dis Monit. 2021;13:e12144.

- Klil-Drori S, Phillips N, Fernandez A, et al. Evaluation of a telephone version for the Montreal cognitive assessment: establishing a cutoff for normative data from a cross-sectional study. J Geriatr Psychiatry Neurol. 2021;08919887211002640. DOI:10.1177/08919887211002640.

- American Academy of Clinical Neuropsychology [Internet]. AACN COVID-19 Survey. 2020. Available from: https://theaacn.org/wp-content/uploads/2020/04/AACN-COVID-19-Survey-Responses.pdf.

- Marra DE, Hoelzle JB, Davis JJ, et al. Initial changes in neuropsychologists clinical practice during the COVID-19 pandemic: a survey study. Clin Neuropsychol. 2020;34(7–8):1251–1216.

- Hammers DB, Stolwyk R, Harder L, et al. A survey of international clinical teleneuropsychology service provision prior to and in the context of COVID-19. Clin Neuropsychol. 2020;34(7–8):1267–1283.

- Simmons JP, Nelson LD, Simonsohn U. A 21 word solution. Available at SSRN 2160588. 2012.

- Eysenbach G. Improving the quality of web surveys: the checklist for reporting results of internet E-Surveys (CHERRIES). J Med Internet Res. 2004;6(3):e34.

- Champely S, Ekstrom C, Dalgaard P, et al. Pwr: basic functions for power analysis. R Package Version. 2015;1:665.

- JISC. JISC Online Surveys [Internet]. 2020. [cited 2020 Sep 11]. Available from: https://www.onlinesurveys.ac.uk/.

- Braun V, Clarke V. Thematic analysis. In APA handbook of research methods in psychology. Washington (DC): American Psychological Association; 2012. p. 57–71.

- Kiger ME, Varpio L. Thematic analysis of qualitative data: AMEE guide no. 131. Med Teach. 2020;42(8):846–854.

- Mioshi E, Dawson K, Mitchell J, et al. The Addenbrooke’s cognitive examination revised (ACE-R): a brief cognitive test battery for dementia screening. Int J Geriat Psychiatry. 2006;21(11):1078–1085.

- Nasreddine ZS, Phillips NA, Bédirian V, et al. The Montreal cognitive assessment, MoCA: a brief screening tool for mild cognitive impairment. J Am Geriatr Soc. 2005;53(4):695–699.

- Delis DC, Kaplan E, Kramer JH. Delis–Kaplan executive function system (D–KEFS) [Internet]. APA PsycTests. 2001.

- Wilson BA, Evans JJ, Alderman N, et al. Behavioural assessment of the dysexecutive syndrome. Methodol Frontal Exec Funct. 1997;239:250.

- Brandt J, Spencer M, Folstein M. The telephone interview for cognitive status. Neuropsychiatry Neuropsychol Behav Neurol. 1988;1:111–117.

- Wechsler D. Wechsler test of adult reading: WTAR. London (England): Psychological Corporation; 2001.

- Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12(3):189–198.

- Demeyere N, Riddoch MJ, Slavkova ED, et al. The Oxford cognitive screen (OCS): validation of a stroke-specific short cognitive screening tool. Psychol Assess. 2015;27(3):883–894.