Abstract

Purpose

To assess the content, quality, and supporting evidence base of clinical practice guidelines (CPGs) with reference to cognitive assessment in stroke.

Materials and methods

We performed a systematic review to identify eligible CPGs pertaining to cognitive assessment in adult stroke survivors. We compared the content and strength of recommendations. We used the AGREE-II (Appraisal of Guidelines for Research and Evaluation) tool to appraise the quality of the guidelines.

Results

Eight eligible guidelines were identified, and seven were rated as high quality (i.e., adequately addressing at least four domains of the AGREE-II tool, including “Rigour of Development”). There was heterogeneity in the recommendations offered and limited guidance on fundamental topics such as which cognitive test to use or when to perform testing. Generally, the lowest quality of evidence (expert opinion) was used to inform these recommendations.

Conclusions

Although assessment of cognition is a key aspect of stroke care, there is a lack of guidance for clinicians. The limited evidence base, in part, reflects the limited research in the area. A prescriptive approach to cognitive assessment may not be suitable, but more primary research may help inform practice.

Implications for rehabilitation

Practice in cognitive assessment after stroke varies substantially, and clinical practice guidelines rarely give prescriptive recommendations on which approach to take.

Where guideline recommendations on cognitive assessment in stroke were made, these were based on expert opinion.

Our summary of the guideline content found certain areas of consensus, for example, routine assessment using validated tools.

Introduction

Stroke and cognitive decline are both positively associated with advancing age [Citation1,Citation2]. The two often co-exist and have a bidirectional relationship. Stroke is associated with a spectrum of cognitive issues, often labelled using the umbrella term “vascular cognitive impairment”. Post-stroke cognitive impairments are highly prevalent, with estimates suggesting important impairments in almost one in four stroke survivors [Citation3].

Despite this, our understanding of best practice in managing stroke-related cognitive deficits is limited, and as a result there is considerable variation in practice [Citation4]. Cognitive problems can manifest at all stages of the stroke journey, from pre-stroke cognitive impairment, through acute cognitive issues including delirium [Citation5], to medium- and longer-term cognitive issues, including overt dementia [Citation6,Citation7].

The importance of post-stroke cognition to stroke survivors themselves is clear [Citation8], particularly with regard to attention and visuospatial abilities.

In stroke research priority-setting exercises, improving the management of cognitive impairment has consistently been voted the most important topic by stakeholders, including stroke survivors and their caregivers, both in Scotland and across the UK [Citation9,Citation10]; however, this may not be universal, as a Swedish study did not find it to be the top priority [Citation11].

The first step in managing stroke-related cognitive problems is assessment and diagnosis. However, there is no consensus on the optimal approach to cognitive assessment. Cognitive assessment can be defined as “[the] examination of higher cortical functions, particularly memory, attention, orientation, language, executive function (planning activities), and praxis (sequencing of activities)” [Citation12–15]. The visuospatial domain of cognition may also be tested and is highly relevant in stroke care [Citation16], particularly with respect to visuospatial neglect.

A wide variety of tools are available for cognitive assessment [Citation17,Citation18], ranging from very short screening tools, through longer multidomain assessments, to tools that attempt to give a diagnostic formulation. Some assessments focus on cognitive impairment through psychometric testing, whereas others assess cognition through functional activities. There is further variation in delivery, as these cognitive assessments can be performed in person, by questionnaire [Citation19], by video call [Citation20], or using other IT platforms [Citation21]. With this myriad of examination and testing options, clinicians may struggle to choose the optimal cognitive assessment [Citation22].

In this context of an important clinical problem and multiple potential management options, clinicians look to clinical practice guidelines (CPGs) to inform the care they offer. The expectation is that management decisions aligned with CPG recommendations will be evidence based and appropriate. CPGs can be defined as “systematically developed statements to assist practitioner decisions about appropriate health care for specific clinical circumstances” [Citation23]. Important stroke-cognitive assessment themes where clinicians and policy makers may seek guidance include the timing of cognitive assessment, the approach to cognitive assessment, the training and expertise required and how to communicate and use results of these assessments.

Guidelines are not a panacea, and the CPG label is not a guarantee of quality. Indeed, there has been recent concern about biases and other limitations in certain high-profile CPGs [Citation24–26]. As with any collection of applied research data, there are methods for critical appraisal of CPG content. The second version of the Appraisal of Guidelines for Research and Evaluation instrument (AGREE-II) provides a yardstick to judge CPG quality [Citation26,Citation27]. Various international bodies and professional societies produce guidelines, and their recommendations may differ across countries, healthcare systems, or professional groups. As methods for the collation, synthesis, and critical appraisal of guideline content are now available, a potentially useful application would be to apply these methods to the topic of cognitive assessment in stroke.

Aims

We set out to identify, compare, and appraise relevant CPGs pertaining to cognitive assessment in stroke survivors.

Specific objectives were to:

Compare content and recommendations of international CPGs.

Assess the quality of these CPGs using the AGREE-II tool.

Describe the evidence base that informed the recommendations.

Materials and methods

We followed best practice in systematic review and evidence synthesis. As there is no specific protocol or guidance for CPG synthesis, we used the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) checklist where appropriate [Citation28]. We registered our protocol at the Centre for Open Science [Citation29].

All aspects of the conduct of the review (title selection, data extraction, and quality assessment) involved two researchers (DM, CM) working independently and comparing results. Both are clinicians trained in systematic review, and neither has any stake in, or conflict of interest with, the CPGs reviewed. Consensus was reached through discussion, with recourse to a third rater (TQ) where needed.

Search strategy

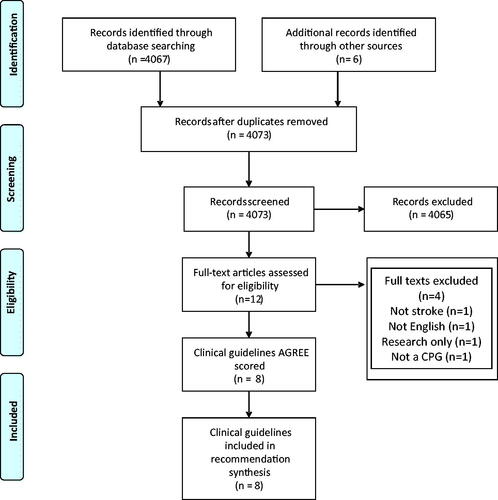

We searched several multidisciplinary electronic databases, Medline (OVID), Embase (OVID), CINAHL (EBSCO), and PsycInfo (EBSCO), as well as the websites of the Scottish Intercollegiate Guidelines Network (SIGN) and the National Institute for Health and Care Excellence (NICE), from March 2008 to March 2021 [Citation29].

We supplemented our literature search by liaising with international topic experts. We hand-searched the websites of relevant specialist societies and guideline producers: American Heart Association (AHA), European Stroke Organisation (ESO), and Stroke Foundation (Australia). We also contacted relevant professional associations: British Psychological Society, British Neuropsychological Society (BNS), Royal College of Occupational Therapists, Council of Occupational Therapists for European Countries, and the Stroke Psychology Special Interest Group of the World Federation for Neuro Rehabilitation (OPSYRIS – Organisation for Psychological Research in Stroke) (full search strategy and syntax can be found in Supplementary Materials).

Inclusion/exclusion criteria

We formulated our inclusion criteria using the “PICAR” approach recommended for guideline reviews (modified from the traditional PICO for clinical question framing and focussing on Population, Intervention, Comparator, Attributes, and Recommendations) [Citation30].

We limited inclusion to English-language guidance published within our search time window. Where more than one guideline was produced on the same topic by the same organisation, we selected the most recent publication. If a guideline was described by the host organisation as in need of updating, but no update was available and the guidance was still in the public domain, we included the CPG (Table 1).

Table 1. PICAR inclusion criteria for the review.

Data extraction

Both reviewers extracted all relevant information from CPGs into a bespoke extraction form. We extracted general and topic-specific guideline information: publisher of the guideline, country of origin, target population, method of evidence collation, method of evidence grading, method of evidence synthesis, and the evidence base for the recommendation(s), as well as the recommendations themselves. We also pre-defined four specific areas of particular interest: the cognitive test to be used, the timing of assessment, the training required, and how to use the resulting data (Table 2). The extracted elements were compared to ensure both reviewers had consistent data. The master list of all verbatim extractions is in Supplementary Material as “Master table of all extracted recommendations & evidence strengths”.

Table 2. Data extraction table of clinical practice guidelines.

Quality assessment

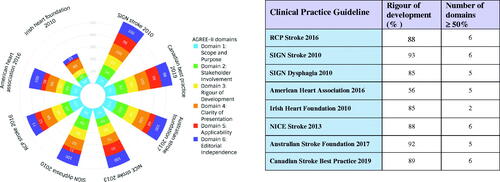

We used the AGREE-II tool to assess the quality of included guidelines [Citation31]. AGREE-II consists of 23 items arranged into six domains: Scope and Purpose (three items), Stakeholder Involvement (three items), Rigour of Development (eight items), Clarity of Presentation (three items), Applicability (four items), and Editorial Independence (two items) [Citation32–34].

All guidelines with recommendations on cognitive assessment in stroke were rated on each AGREE-II item using a seven-point ordinal scale, from 1 (strongly disagree) to 7 (strongly agree), and the results were transferred to a standardised form based on the AGREE template. For each domain, a scaled score was then calculated as (obtained score − minimum possible score)/(maximum possible score − minimum possible score) × 100%. This was done as per the AGREE II user’s manual, with each domain given equal weighting [Citation33].
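The scaled domain score described above can be sketched in a few lines of Python. This is a minimal illustration that assumes the AGREE II user's manual convention of pooling the item ratings of all appraisers within a domain before scaling; the function name, input layout, and example ratings are hypothetical and are not data from this review.

```python
def scaled_domain_score(ratings_by_appraiser):
    """Return a scaled AGREE II domain score as a percentage.

    ratings_by_appraiser: one list per appraiser, each holding that
    appraiser's 1-7 ratings for every item in the domain (hypothetical
    input layout chosen for this sketch).
    """
    n_appraisers = len(ratings_by_appraiser)
    n_items = len(ratings_by_appraiser[0])
    if any(len(r) != n_items for r in ratings_by_appraiser):
        raise ValueError("every appraiser must rate every item")
    if any(not 1 <= x <= 7 for r in ratings_by_appraiser for x in r):
        raise ValueError("AGREE II items are rated from 1 to 7")

    # Pool all ratings in the domain across appraisers
    obtained = sum(sum(r) for r in ratings_by_appraiser)
    # Lowest and highest totals attainable for this domain
    minimum = 1 * n_items * n_appraisers
    maximum = 7 * n_items * n_appraisers
    return (obtained - minimum) / (maximum - minimum) * 100


# Hypothetical example: two appraisers rating the three Scope and Purpose items
score = scaled_domain_score([[5, 6, 6], [6, 6, 7]])
print(f"{score:.1f}%")  # (36 - 6) / (42 - 6) x 100, prints 83.3%
```

Because the total is rescaled between the lowest and highest attainable values, a domain rated 1 on every item by every appraiser scores 0% and one rated 7 throughout scores 100%, regardless of how many items or appraisers contribute.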

Judgements on each guideline’s overall quality were made using a standardised scoring rubric. Guidelines were rated “high quality” if they adequately addressed at least four of the six AGREE-II domains, including the “Rigour of Development” domain. To be considered as having adequately addressed a domain, a guideline had to attain a scaled domain score of 50% or more. If two or more domains were adequately addressed (or three domains except for “Rigour of Development”), CPGs were graded “moderate quality”. CPGs where only one domain, or none, reached the 50% threshold were of “low” overall quality. There is no consensus on how AGREE-II domain scores should be combined into an overall quality rating. As CPGs on this topic could inform clinical practice, we prioritised the “Rigour of Development” domain, believing that all clinical guidance should be as evidence based as possible. For the same reason, we set a high threshold for the label of “high quality” by mandating that at least four domains be adequately addressed. Our approach followed usual practice in other reviews of guidelines [Citation35]. When interpreting AGREE-II, one should remember that the scoring relates to the quality and reporting of the published CPG rather than to the evidence underlying the recommendations [Citation32].
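The overall rating rubric can be expressed as a small decision function. This sketch assumes one reading of the text: a domain is adequately addressed when its scaled score is 50% or more, a guideline is “high quality” when at least four such domains include Rigour of Development, “low quality” when at most one domain reaches the threshold, and “moderate quality” otherwise. The function name and the example scores are illustrative only.

```python
THRESHOLD = 50.0  # scaled domain score (%) needed to count as adequately addressed
RIGOUR = "Rigour of Development"


def overall_quality(domain_scores):
    """Classify a guideline from its scaled AGREE-II domain scores (%)."""
    adequate = {domain for domain, score in domain_scores.items() if score >= THRESHOLD}
    # High quality requires breadth (four domains) AND Rigour of Development
    if len(adequate) >= 4 and RIGOUR in adequate:
        return "high"
    if len(adequate) >= 2:
        return "moderate"
    return "low"


# Hypothetical guideline: strong reporting, but weak Rigour of Development
example = {
    "Scope and Purpose": 86.1,
    "Stakeholder Involvement": 61.1,
    "Rigour of Development": 42.7,
    "Clarity of Presentation": 80.6,
    "Applicability": 35.4,
    "Editorial Independence": 70.8,
}
print(overall_quality(example))  # prints moderate: four domains pass, but not Rigour
```

Placing the Rigour of Development check inside the high-quality branch mirrors the decision to prioritise that domain: under this reading, a guideline cannot be rated high quality on presentation and reporting alone.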

Recognising the potential for variation between appraisers, it is recommended that agreement across all domains, as an aggregate, is assessed using the intra-class correlation coefficient (ICC) [Citation31,Citation36], where values below 0.50, from 0.50 to 0.75, from 0.75 to 0.90, and above 0.90 indicate poor, moderate, good, and excellent reliability, respectively [Citation31]. Where disagreement remained following discussion (ICC less than 0.5), a third rater (TQ) made a final judgement.
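Inter-rater agreement of this kind can be sketched as below. The ICC form used in the review is not stated, so this example assumes ICC(2,1) (two-way random effects, absolute agreement, single rater), computed from the usual two-way ANOVA mean squares, and maps the result onto the Koo and Li reliability bands. The function names and toy ratings are hypothetical; a real analysis would more likely rely on an established statistics package and report a 95% confidence interval alongside the point estimate.

```python
from statistics import mean


def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: one row per rated target (e.g. an AGREE-II item), each row
    holding one score per rater. Assumed layout for this sketch.
    """
    n = len(ratings)     # number of targets
    k = len(ratings[0])  # number of raters
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]

    # Two-way ANOVA sums of squares
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


def interpret_icc(icc):
    """Koo and Li bands; handling of exact boundary values is an assumption."""
    if icc < 0.5:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc <= 0.9:
        return "good"
    return "excellent"


# Toy data: rater 2 scores every item exactly one point higher than rater 1
icc = icc_2_1([[1, 2], [3, 4], [5, 6]])
print(f"{icc:.3f} {interpret_icc(icc)}")  # prints 0.889 good
```

A consistency-type ICC would treat the constant one-point offset in the toy data as perfect agreement; the absolute-agreement form used here penalises that systematic difference between raters, which is why the result falls below 1.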

Data synthesis

We developed matrices of guideline recommendations to facilitate systematic comparison, categorisation, and summary of the content across and within CPGs (Table 3). Although the wording in each guideline differed, there were commonalities across the actions recommended. To allow an easily understood summary of the guideline content, we combined and condensed the recommendations. The full text of each recommendation was copied verbatim, creating a long list of free-text statements. DM and TQ assessed the list independently; where recommendations suggested a common action, these were combined and a summary text was created. This was an iterative process with comparison and discussion of the independent summaries, and it continued until no more recommendations could be combined. We present these summary descriptions in data matrices, where recommendations are cross-classified with guidelines and the overall quality of evidence of each guideline. The full original text of each recommendation is available in Supplementary Materials.

Table 3. Stroke CPG recommendations and strength of evidence.

The domain-level quality of each guideline was collated and presented in a stacked polar chart. We had planned to convert the statements regarding the evidence supporting each recommendation into a standardised rubric to allow easy comparison; however, as all recommendations relied on expert opinion only, we described the evidence base narratively.

Results

Our search yielded eight eligible CPGs, offering 27 recommendations regarding cognitive assessment in stroke. Of the 20 high-profile guidelines selected for full-text review following our initial scoping search, 12 made no mention of cognition or its assessment [Citation37–48].

We were able to condense these into 14 common recommendations: three describing assessment, three describing assessment timing, two describing who to assess, and six describing how the assessment should be used. We also found four recent documents that were relevant to our question but did not completely meet our inclusion criteria: a guidance document from the Chinese Society of Geriatrics on Cerebrovascular Small Vessel Disease; an ESO White Paper on Cognitive Impairment in Cerebrovascular Disease; the ESO-Karolinska Recommendations on Cognitive Assessment in Stroke Trials; and the Norwegian Directorate of Health Guidelines on Stroke (Supplementary Materials). We also note that an ESO guideline on Post Stroke Cognitive Impairment is in production and due for release in late 2021.

Seven CPGs were of high quality: Royal College of Physicians (RCP), SIGN (two guidelines), Australian Stroke Foundation, Canadian Best Practice, AHA, and NICE. The Irish Heart Foundation CPG was judged to be of moderate quality.

All included CPGs achieved greater than 50% in the Scope and Purpose and the Clarity of Presentation domains. Seven CPGs achieved greater than 50% in Stakeholder Involvement, Rigour of Development, and Editorial Independence, and three in Applicability. The greatest variation was within the Stakeholder Involvement and Applicability domains.

All recommendations were underpinned by expert opinion, and their wording was created by the guideline development groups. Where primary evidence was used to inform the CPGs, NICE guidance used indirect evidence from a Cochrane review [Citation49] and Canadian guidance was partly based on test accuracy [Citation50,Citation51] and epidemiological studies [Citation52].

Discussion

Despite the importance of cognitive impairment in stroke, our review of English-language guidelines found only a limited number of CPGs offering recommendations on cognitive assessment in stroke care settings. By comparison, medical management and physiological monitoring during stroke featured in all the national guidelines assessed. The UK national stroke audit, the Sentinel Stroke National Audit Programme (SSNAP) [Citation53], highlights the potential disparity between “psychological” and “medical” aspects of stroke care. Across the UK, access to a clinical psychologist has the lowest audit compliance (12 of the 169 UK stroke centres included meet this criterion).

The included CPGs were generally of high quality when assessed using the AGREE-II tool, albeit there was variation across guidelines and across individual domains of the quality assessment. However, this high quality is not synonymous with clinically useful guidance. AGREE was developed with the intention of improving the comprehensiveness, completeness, and transparency of reporting in practice guidelines. The AGREE-II checklists are used to assess the process and content of CPGs. A guideline that concludes “more research is needed” could score well using AGREE-II but is not necessarily useful in practice.

Where guidance was offered in our eligible CPGs, there was consensus that post-stroke cognitive impairment is common and should be assessed as part of routine clinical care. We pre-specified important clinical questions for planning stroke cognitive assessment. While the guidelines provided content on these themes, the recommendations were often generic rather than an explicit plan that could be implemented by clinicians. For example, only one CPG named a preferred assessment tool (the Montreal Cognitive Assessment), while others recommended using a “validated” tool (an undefined concept); some provided no elaboration, and others gave tables or appendices of various possible assessments. The vague nature of the guidance offered was not unique to a country or guideline-producing body; rather, it was common to all the guidelines assessed.

Underpinning all the relevant recommendations was a lack of high-quality trial evidence and a reliance on expert consensus. This is not a criticism of the CPGs, as clinical trials on post-stroke cognitive assessment are generally lacking. This situation is not unique to post-stroke cognitive assessment. Other important aspects of stroke care, such as management of aphasia, often rely on expert consensus because definitive original research studies are limited, although the situation is improving, with important new studies recently completed or ongoing [Citation54]. In the context of a rapidly evolving evidence base, CPGs need to incorporate new data promptly. We note that some guideline producers are moving to a “living” guideline approach, in which the evidence is scanned regularly and recommendations are updated as soon as new research requires it.

Despite the critical importance of cognition in clinical practice, stroke guidelines are not alone in offering vague recommendations around cognitive assessment. Even in conditions with a cognitive focus, such as dementia and delirium, guideline bodies such as SIGN [Citation55], NICE [Citation56], and the RCP [Citation57] are equivocal in their recommendations about the cognitive assessment to be used [Citation55]. Here, a lack of primary research is less of an issue, as systematic reviews and meta-analyses of various cognitive screening tests are available [Citation58,Citation59].

The availability of a CPG with clear, evidence-based recommendations is not a guarantee of implementation. There are well-described barriers to clinician engagement with guidelines. A full discussion of the barriers and facilitators is beyond the scope of this review, but important factors include time, access, and ease of understanding the guidance and supporting evidence [Citation60,Citation61]. In this sense, more systematic reviews of CPGs, with summaries and critique of the CPG content, may help clinicians make sense of contentious areas of practice.

The CPG recommendations were all based on expert opinion, often considered the lowest form of evidence. Using randomised methods to inform the choice of test strategy is uncommon, although novel research designs are emerging. While there are systematic reviews and meta-analyses of the properties of cognitive tests in stroke [Citation62], the classical test accuracy paradigm of comparing a test to a gold standard is only partly helpful in clinical practice. More sophisticated methods involving comparative test accuracy, test-treatment-outcome studies, and user experience are needed if the next iterations of guidelines are to offer more concrete recommendations [Citation63].

Perhaps it is not for a CPG to mandate a particular approach to cognitive assessment. The choice of approach to assessing cognition will vary based on the person to be assessed, the clinical question to be answered, and the resources available. A degree of clinical judgement will always be needed, and CPGs are a source of guidance rather than standard operating procedures. However, few would argue against the need for more primary research on cognitive assessment in stroke that can give clinicians an evidence-based approach to their assessments.

Strengths and limitations

Our search strategy was robust: a professional librarian developed a comprehensive search strategy through iterative steps, ensuring that as much relevant literature as possible was captured. We followed best practice in evidence synthesis, with all steps performed independently by at least two trained assessors. While neither of the assessors was an experienced guideline producer, as consumers of guidelines in clinical practice both had a working understanding of what fellow clinicians need from CPGs. We used various approaches to data visualisation, taking data that exist across several axes and creating an easy-to-understand synthesis suitable for clinicians, researchers, and policy makers.

One weakness of our work is that we captured only English-language CPGs; thus, the included guidelines have an Australasian, North American, UK, and Irish focus. We suspect guidelines from other countries, especially low- and middle-income countries, may look quite different. We limited our review to only one aspect of the management of cognition in stroke, namely assessment. Our scoping of the literature suggests that a review of treatment options in post-stroke cognitive impairment may be equally limited by a lack of primary research. To aid data visualisation and summarising of the CPG text, we collated and condensed recommendations. In doing this, we tried to preserve the meaning and nuance of the original text, but it is possible that some information was lost. Some CPGs included in our synthesis were described as out of date by the host organisations. In the absence of any new version of these materials, we still included these CPGs in our review.

Implications for practice, policy, and research

The motivation for this review was the perceived inconsistency in clinical approaches to cognitive assessment in stroke. The review of guidelines does not suggest a preferred strategy. There is a multitude of cognitive assessment tools, and it is currently unclear which one is best; this is an area that could benefit from greater standardisation [Citation64]. The current lack of consensus among CPGs highlights the uncertainty in the clinician community. While it may not be possible or appropriate to give prescriptive guidance on the choice of cognitive assessment, recommendations on timing of assessment, training of assessors, and modifications to assessment strategies for particular patient groups could inform clinical pathways and ultimately improve patient care. As well as standardising care, CPGs have an important role in benchmarking best practice, and clear guidelines around cognitive assessment may help improve the visibility of, and raise standards in, cognitive assessment. A useful next step would be to ask the clinical stroke community what they want from CPGs around cognitive assessment.

Our use of the AGREE tool suggests that guidelines in the stroke cognition space are produced to a high quality, although there is still scope to improve stakeholder involvement in their production and to give greater consideration to the barriers and facilitators of implementation, as these were the domains with the greatest variability across CPGs. In line with other quality assessment tools, there may be an argument for adding a further domain to AGREE to allow assessment of the clinical relevance of the guidance. By developing a “clinical recommendation” quality assessment domain, it might be possible not only to drive up the reporting standards of clinical guidelines but also to begin to comment on their inherent clinical utility.

Conclusions

While stroke care has advanced hugely in recent decades, some elements are better considered and evidenced than others. Explicit guidance on hyperacute stroke therapy, underpinned by robust primary research, has transformed stroke care. At present, useful guidance on the assessment of cognition in stroke is lacking, but this partly reflects the limited availability of original research in this area. Where recommendations are available, the guidelines tend to be of high quality but may lack clinical utility. Given the myriad of stroke cognitive presentations, clinician variation in management, and differences in healthcare settings, prescriptive guidance on the exact approach to cognitive testing may not be suitable. Clinical guidelines are just that: guidance. They are not a substitute for clinical judgement or consideration of patient preferences. However, further primary research on cognitive assessment would allow the next iterations of guidelines to offer a stronger evidence base that could hopefully improve the approach to assessment.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Brainin M, Tuomilehto J, Heiss WD, et al. Post-stroke cognitive decline: an update and perspectives for clinical research. Eur J Neurol. 2015;22:229–e16.

- Yousufuddin M, Young N. Aging and ischemic stroke. Aging. 2019;11(9):2542–2544.

- Douiri A, Rudd AG, Wolfe CDA. Prevalence of poststroke cognitive impairment: South London Stroke Register 1995–2010. Stroke. 2013;44(1):138–145.

- Mancuso M, Demeyere N, Abbruzzese L, et al. Using the Oxford Cognitive Screen to detect cognitive impairment in stroke patients: a comparison with the mini-mental state examination. Front Neurol. 2018;9:101.

- Melkas S, Laurila JV, Vataja R, et al. Post-stroke delirium in relation to dementia and long-term mortality. Int J Geriatr Psychiatry. 2011;27(4):401–408.

- Sun JH, Tan L, Yu JT. Post-stroke cognitive impairment: epidemiology, mechanisms and management. Ann Transl Med. 2014;2(8):80.

- Kalaria RN, Akinyemi R, Ihara M. Stroke injury, cognitive impairment and vascular dementia. Biochim Biophys Acta. 2016;1862(5):915–925.

- Cumming TB, Brodtmann A, Darby D, et al. The importance of cognition to quality of life after stroke. J Psychosom Res. 2014;77(5):374–379.

- Pollock A, St George B, Fenton M, et al. Top ten research priorities relating to life after stroke. Lancet Neurol. 2012;11(3):209.

- The James Lind Alliance. Stroke survivor, carer and health professional research priorities relating to life after stroke. Scotland: Glasgow Caledonian University; 2012.

- Rudberg A-S, Berge E, Laska A-C, et al. Stroke survivors’ priorities for research related to life after stroke. Top Stroke Rehabil. 2021;28(2):153–156.

- Siqueira GSA, Hagemann PDMS, Coelho DDS, et al. Can MoCA and MMSE be interchangeable cognitive screening tools? A systematic review. Gerontologist. 2019;59(6):e743–e763.

- Pinto TCC, Machado L, Bulgacov TM, et al. Is the Montreal Cognitive Assessment (MoCA) screening superior to the mini-mental state examination (MMSE) in the detection of mild cognitive impairment (MCI) and Alzheimer's disease (AD) in the elderly? Int Psychogeriatr. 2019;31(4):491–504.

- Chua SIL, Tan NC, Wong WT, et al. Virtual reality for screening of cognitive function in older persons: comparative study. J Med Internet Res. 2019;21(8):e14821.

- Nasreddine ZS, Phillips NA, Bédirian V, et al. The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. J Am Geriatr Soc. 2005;53(4):695–699.

- Toornstra A, Hurks P, Van der Elst W, et al. Measuring visual, spatial, and visual spatial short-term memory in schoolchildren: studying the influence of demographic factors and regression-based normative data. J Pediatr Neuropsychol. 2019;5(3):119–113.

- Quinn TJ, Elliott E, Langhorne P. Cognitive and mood assessment tools for use in stroke. Stroke. 2018;49(2):483–490.

- Burton L, Tyson S. Screening for cognitive impairment after stroke: a systematic review of psychometric properties and clinical utility. J Rehabil Med. 2015;47(3):193–203.

- McGovern A, Pendlebury ST, Mishra NK, et al. Test accuracy of informant-based cognitive screening tests for diagnosis of dementia and multidomain cognitive impairment in stroke. Stroke. 2016;47(2):329–335.

- Chapman JE, Cadilhac DA, Gardner B, et al. Comparing face-to-face and videoconference completion of the Montreal Cognitive Assessment (MoCA) in community-based survivors of stroke. J Telemed Telecare. 2021;27(8):484–492.

- Elliott E, Green C, Llewellyn DJ, et al. Accuracy of telephone-based cognitive screening tests: systematic review and meta-analysis. Curr Alzheimer Res. 2020;17(5):460–471.

- Lees RA, Broomfield NM, Quinn TJ. Questionnaire assessment of usual practice in mood and cognitive assessment in Scottish Stroke Units. Disabil Rehabil. 2014;36(4):339–343.

- Thomas L. Clinical practice guidelines. Evid Based Nurs. 1999;2(2):38–39.

- Shaneyfelt TM, Mayo-Smith MF, Rothwangl J. Are guidelines following guidelines? JAMA. 1999;281(20):1900.

- Ward JE, Grieco V. Why we need guidelines for guidelines: a study of the quality of clinical practice guidelines in Australia. Med J Aust. 1996;165(10):574–576.

- Navarro Puerto MA, Ibarluzea IG, Ruiz OG, et al. Analysis of the quality of clinical practice guidelines on established ischemic stroke. Int J Technol Assess Health Care. 2008;24(3):333–341.

- Alonso-Coello P, Irfan A, Solà I, et al. The quality of clinical practice guidelines over the last two decades: a systematic review of guideline appraisal studies. Qual Saf Health Care. 2010;19(6):e58.

- Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med. 2009;6(7):e1000100.

- McMahon D. Protocol for systematic review of clinical practice guidelines detailing cognitive assessment in stroke. Centre for Open Science; 2020 [cited 2021 Jul]. Available from: https://www.cos.io/

- Huang X, Lin J, Demner-Fushman D. Evaluation of PICO as a knowledge representation for clinical questions. AMIA Annu Symp Proc. 2006;2006:359–363.

- Koo TK, Li MY. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. J Chiropr Med. 2016;15(2):155–163.

- Hatakeyama Y, Seto K, Amin R, et al. The structure of the quality of clinical practice guidelines with the items and overall assessment in AGREE II: a regression analysis. BMC Health Serv Res. 2019;19(1):788.

- Brouwers MC, Kerkvliet K, Spithoff K. The AGREE reporting checklist: a tool to improve reporting of clinical practice guidelines. BMJ. 2016;352:i1152.

- Brouwers MC, Kho ME, Browman GP, et al. AGREE II: advancing guideline development, reporting and evaluation in health care. CMAJ. 2010;182(18):E839–E842.

- Johnston A, Hsieh SC, Carrier M, et al. A systematic review of clinical practice guidelines on the use of low molecular weight heparin and fondaparinux for the treatment and prevention of venous thromboembolism: implications for research and policy decision-making. PLOS One. 2018;13(11):e0207410.

- Liljequist D, Elfving B, Skavberg Roaldsen K. Intraclass correlation – a discussion and demonstration of basic features. PLOS One. 2019;14(7):e0219854.

- Einhäupl K, Bousser MG, De Bruijn SFTM, et al. EFNS guideline on the treatment of cerebral venous and sinus thrombosis. Eur J Neurol. 2006;13(6):553–559.

- New Zealand clinical guidelines for stroke management 2010. New Zealand: Stroke Foundation of New Zealand; 2010.

- Heart and Stroke Foundation. Canadian stroke best practice recommendations: acute stroke management. Canada: Acute Stroke Management Best Practice Writing Group; 2018.

- Heart and Stroke Foundation. Rehabilitation, recovery and community. Canada; 2019.

- Heart and Stroke Foundation. Management of spontaneous intracerebral hemorrhage. Canada; 2020.

- Higashida R, Alberts MJ, Alexander DN, et al. Interactions within stroke systems of care: a policy statement from the American Heart Association/American Stroke Association. Stroke. 2013;44(10):2961–2984.

- Holtkamp M, Beghi E, Benninger F, et al. European Stroke Organisation guidelines for the management of post-stroke seizures and epilepsy. Eur Stroke J. 2017;2(2):103–115.

- Powers WJ, Rabinstein AA, Ackerson T, et al. Guidelines for the early management of patients with acute ischemic stroke: 2019 update to the 2018 guidelines for the early management of acute ischemic stroke: a guideline for healthcare professionals from the American Heart Association/American Stroke Association. Stroke. 2019;50(12):e344–e418.

- Steiner T, Salman RA-S, Beer R, et al. European Stroke Organisation (ESO) guidelines for the management of spontaneous intracerebral hemorrhage. Int J Stroke. 2014;9(7):840–855.

- Towfighi A, Ovbiagele B, Husseini NE, et al. Poststroke depression: a scientific statement for healthcare professionals from the American Heart Association/American Stroke Association. Stroke. 2017;48(2):e30–e43.

- National Institute for Health and Care Excellence. NICE guideline [NG128]. Stroke and transient ischaemic attack in over 16s: diagnosis and initial management. 2019 [cited 2021 Jul]. Available from: https://www.nice.org.uk/guidance/ng128

- Winstein CJ, Stein J, Arena R, et al. Guidelines for adult stroke rehabilitation and recovery: a guideline for healthcare professionals from the American Heart Association/American Stroke Association. Stroke. 2016;47(6):e98–e169.

- Chung CSY, Pollock A, Campbell T, et al. Cognitive rehabilitation for executive dysfunction in adults with stroke or other adult non-progressive acquired brain damage. Cochrane Database Syst Rev. 2013;(4):CD008391.

- Delavaran H, Jönsson AC, Lövkvist H, et al. Cognitive function in stroke survivors: a 10-year follow-up study. Acta Neurol Scand. 2017;136(3):187–194.

- Pendlebury ST, Mariz J, Bull L, et al. MoCA, ACE-R, and MMSE versus the National Institute of Neurological Disorders and Stroke–Canadian Stroke Network vascular cognitive impairment harmonization standards neuropsychological battery after TIA and stroke. Stroke. 2012;43(2):464–469.

- Ayerbe L, Ayis S, Wolfe CDA, et al. Natural history, predictors and outcomes of depression after stroke: systematic review and meta-analysis. Br J Psychiatry. 2013;202(1):14–21.

- Sentinel Stroke National Audit Program (SSNAP). 2019 [cited 2021 Jul]. Available from: https://www.strokeaudit.org/

- Brady MC, Kelly H, Godwin J, et al. Speech and language therapy for aphasia following stroke. Cochrane Database Syst Rev. 2016;(6):CD000425.

- Soiza RL, Myint PK. The Scottish Intercollegiate Guidelines Network (SIGN) 157: guidelines on risk reduction and management of delirium. Medicina. 2019;55(8):491.

- NICE. Delirium overview. NICE pathways; 2010 [updated 2019 Mar; cited 2019 Apr 1]. Available from: https://pathways.nice.org.uk/pathways/delirium#content=view-node%3Anodes-assess-for-delirium

- Royal College of Physicians. Acute care toolkit 3 – acute medical care for frail older people [toolkit]. Royal College of Physicians; 2012. Available from: https://www.rcplondon.ac.uk/guidelines-policy/acute-care-toolkit-3-acute-medical-care-frail-older-people

- Harrison JK, Fearon P, Noel-Storr AH, et al. Informant Questionnaire on Cognitive Decline in the Elderly (IQCODE) for the diagnosis of dementia within a secondary care setting. Cochrane Database Syst Rev. 2015;(3):CD010772.

- Beishon LC, Batterham AP, Quinn TJ, et al. Addenbrooke's Cognitive Examination III (ACE-III) and mini-ACE for the detection of dementia and mild cognitive impairment. Cochrane Database Syst Rev. 2019;(12):CD013282.

- Zwolsman S, Te Pas E, Hooft L, et al. Barriers to GPs' use of evidence-based medicine: a systematic review. Br J Gen Pract. 2012;62(600):e511–e521.

- Hong B, O'Sullivan ED, Henein C, et al. Motivators and barriers to engagement with evidence-based practice among medical and dental trainees from the UK and Republic of Ireland: a national survey. BMJ Open. 2019;9(10):e031809.

- Lees R, Selvarajah J, Fenton C, et al. Test accuracy of cognitive screening tests for diagnosis of dementia and multidomain cognitive impairment in stroke. Stroke. 2014;45(10):3008–3018.

- Takwoingi Y, Quinn TJ. Review of diagnostic test accuracy (DTA) studies in older people. Age Ageing. 2018;47(3):349–355.

- Harrison JK, Noel-Storr AH, Demeyere N, et al. Outcomes measures in a decade of dementia and mild cognitive impairment trials. Alzheimers Res Ther. 2016;8(1):48.