Abstract

Purpose

Most activities of daily living (ADLs) require the use of both upper extremities. However, few tools are available to assess bimanual performance, especially among adults living with cerebral palsy (CP). The aim of this preliminary study is to assess the interrater reliability and convergent validity of the Assisting Hand Assessment (AHA) scoring grid applied to unstandardized ADLs.

Materials and methods

For this validation study, nineteen adults living with spastic unilateral CP were videotaped performing seven bimanual ADLs. Three raters independently scored the videos using the 20-item AHA scoring grid. Interrater reliability was assessed with Gwet’s AC2, and convergent validity with Kendall’s Tau-b correlation between scores on the observation-based scoring grid and the Jebsen-Taylor Hand Function Test (JTHFT).

Results

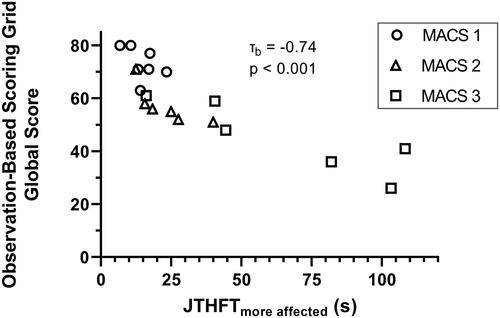

Interrater reliability was very good (Gwet’s AC2 = 0.84, SD = 0.02). The correlation with the JTHFT was high (τb = −0.74; p < 0.001).

Conclusion

The results show the potential of using an observation-based scoring grid with unstandardized ADLs to assess bimanual performance in adults living with CP, but further research on psychometric properties is needed. This method allows for an assessment that is occupation-oriented, ecological, and meaningful.

IMPLICATIONS FOR REHABILITATION

An observation-based scoring grid (Assisting Hand Assessment) can be applied in unstandardized activities of daily living to assess bimanual performance in adults with cerebral palsy.

This method allows an occupation-oriented, ecological, and client-meaningful assessment.

Although this approach is still preliminary, clinicians and researchers can use it until further psychometric analyses are undertaken.

Introduction

Cerebral palsy (CP) is the most common cause of physical disability in childhood, and spastic unilateral CP is one of its most prevalent forms worldwide [Citation1–3]. Spastic unilateral CP is characterized by sensorimotor impairments that predominantly affect one side of the body, which often leads to difficulties in performing activities of daily living (ADLs) [Citation4,Citation5]. Although the deficits mainly affect the upper extremity (UE) contralateral to the brain lesion, sensorimotor deficits are also observed in the other UE, resulting in reduced bimanual performance in people living with spastic unilateral CP [Citation6]. Considering that most ADLs require coordinated use of both UEs [Citation7], it is important to better understand bimanual performance deficits in CP. However, most available assessments of UE function are limited to unilateral tasks [Citation8]. Because unimanual assessments are poor indicators of bimanual performance [Citation9,Citation10], bimanual performance must be assessed specifically. Furthermore, adults living with CP have often been neglected in rehabilitation care and research, and few assessment tools are available for this population [Citation11,Citation12]. Valid assessments are needed to measure changes in function, given the declines observed in many areas of functioning during the adult life of people living with CP [Citation13]. There is currently no assessment of bimanual performance specifically validated for adults living with CP, as most assessments are validated either for children with CP or for adults with stroke [Citation14–17]. Moreover, most existing bimanual assessments are self-report questionnaires (e.g., ABILHAND, Children’s Hand-use Experience Questionnaire (CHEQ)) and are therefore susceptible to several biases (e.g., recall bias, social desirability bias) [Citation15,Citation18].

The Assisting Hand Assessment (AHA) is an observation-based assessment that consists of a semi-structured task allowing the examiner to observe different aspects pertaining to bimanual performance [Citation19]. The AHA has been validated for children with CP and adults with stroke, and has shown good psychometric properties [Citation20–22]. The AHA scoring grid and the psychometric properties of the AHA have only been assessed using the standardized tasks designed by the AHA developers: a play session with toys (for children aged 18 months to 5 years), two board games (one for children aged 6 to 12 years and one for adolescents), and two functional tasks, namely sandwich-making and gift-wrapping (for adolescents with CP or adults with stroke).

It is relevant to determine whether the AHA scoring grid can offer a valid and reliable assessment of bimanual performance in unstandardized tasks for both clinical and research purposes. In clinical practice, this would allow therapists to assess bimanual function in diverse ADLs that are relevant for a given individual, allowing for a more meaningful and occupation-oriented approach compared to traditional, less ecological assessments. This preliminary study therefore aimed to assess the interrater reliability and convergent validity of the AHA scoring grid in the context of unstandardized ADLs in adults living with spastic unilateral CP.

Materials and methods

Participants

A convenience sample of 19 adults with spastic unilateral CP was recruited between December 2020 and April 2022 through the Université Laval mailing list and the health records of the Centre Intégré Universitaire de Santé et de Services Sociaux de la Capitale-Nationale (CIUSSS-CN). For the latter, approximately 300 patient files were screened, of which about 60 were eligible and about 30 could be contacted by the archives service. Of these, 15 consented to participate in the study. The remaining four participants were recruited through the Université Laval mailing list. The inclusion criteria were: (1) being aged between 18 and 65 years; (2) having a diagnosis of spastic unilateral CP; and (3) having a Manual Ability Classification System (MACS) level of I, II, or III. The MACS classifies how people with CP use their hands to handle objects in daily activities. It comprises five levels, ranging from level I (handles objects easily and successfully) to level V (does not handle objects and has severely limited ability to perform even simple actions) [Citation23]. This validation study was part of a larger project aiming to characterize sensorimotor deficits (using clinical and robotic assessments) and UE use (using accelerometry) in adults with CP. Results from other components of the project have been published as journal articles or conference abstracts, but none of these publications focused on the AHA results [Citation24–27]. The project was approved by the local ethics committee (Ethics #2018-609, CIUSSS-CN). All participants provided written informed consent prior to the experiment.

Data collection

The manual and scoring grid of AHA version 5.0 were used [Citation19]. The scoring grid consists of 20 items that evaluate how effectively a person uses their affected hand during bimanual tasks. Each item represents a different aspect of the assisting hand’s performance, and the items are grouped into six categories: general use, arm use, grasp–release, fine motor adjustment, coordination, and pace (see Table 1 for a list of the 20 items and their grouping into the six categories). Each item is scored on a four-point rating scale: 4) effective; 3) somewhat effective; 2) ineffective; and 1) does not do. The criteria relate to the frequency (compared with the less affected side), quality, and efficiency of the abilities represented by each item. The global score ranges from 20 to 80, with 80 corresponding to a perfectly integrated use of the assisting hand in the observed tasks. The AHA manual provides detailed scoring instructions for each item to support objective assessment. The AHA is a commercial assessment belonging to Handfast AB (Solna, Sweden); please refer to their website for more information on this assessment and its scoring grid (https://ahanetwork.se/).
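The raw arithmetic behind the 20-to-80 global score can be sketched in Python as below. This is a minimal illustration under stated assumptions: ratings are supplied in a fixed item order, the helper name `raw_global_score` and the example ratings are hypothetical, and any score conversion that the AHA manual may apply is deliberately not reproduced.

```python
# Minimal sketch: raw global score of the 20-item grid (hypothetical helper, not AHA software).
N_ITEMS = 20
RATING_LABELS = {
    4: "effective",
    3: "somewhat effective",
    2: "ineffective",
    1: "does not do",
}


def raw_global_score(ratings: list[int]) -> int:
    """Sum 20 item ratings (each 1-4) into a raw global score between 20 and 80."""
    if len(ratings) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} ratings, got {len(ratings)}")
    for rating in ratings:
        if rating not in RATING_LABELS:
            raise ValueError(f"rating {rating} is outside the 1-4 scale")
    return sum(ratings)


# Hypothetical ratings for one participant (not study data).
example = [4] * 12 + [3] * 5 + [2] * 3
print(raw_global_score(example))  # 69
```

A score of 20 corresponds to "does not do" on every item, and 80 to "effective" on every item.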

Table 1. Interrater Gwet’s AC2 per item and for the global score of the observation-based scoring grid.

To implement this assessment in unstandardized tasks, participants were asked to perform seven bimanual ADLs, which are described in Table 2. Only general instructions were given about the tasks, so that participants could carry them out in their own way. The ADLs were chosen to cover a broad range of brief, typical tasks that require the use of both hands and that, collectively, allow all items of the AHA grid to be observed. For example, tasks such as cutting a piece of putty, putting toothpaste on a toothbrush, and making coffee require distal movements and were chosen to observe items in the fine motor adjustment category. Tasks requiring more proximal motor skills (e.g., folding towels and cleaning a table) were chosen to observe items in the arm use category and allowed the full range of motion at the shoulder to be observed. The tasks were also selected to cover a broad spectrum of bimanual UE use. A study published by our group showed that accelerometry metrics measured during these tasks make it possible to differentiate between participants with and without CP [Citation24]. Moreover, these metrics differentiate tasks in terms of the relative use of both limbs, with some tasks being mainly unimanual (e.g., putting toothpaste on a toothbrush) and others mainly bimanual (e.g., folding towels) [Citation24]. Each item was rated based on observation of all seven tasks. Some observations were redundant from one task to another, but it was deemed important to give participants multiple opportunities to demonstrate a skill, since many criteria consider the frequency with which the skill is demonstrated; this redundancy is also present in the standardized versions of the AHA. All assessments took place in a simulated living-environment laboratory.
As in the original standardized version of the AHA, assessments were videotaped to allow scoring as a distinct step. This also allowed all raters to base their ratings on the same performance.

Table 2. Description of the seven tasks performed by participants.

To assess interrater reliability, three raters scored the performance of each of the 19 participants from the video recordings. Two of the raters (IP and LGP) were occupational therapists with clinical experience with neurological populations (six and three years of experience, respectively). The third rater was an occupational therapy student, which made it possible to observe the influence of a naïve rater on interrater reliability. To operationalize the scoring grid for the new tasks, two videotaped sessions were selected for a pilot phase and assessed by the two experienced raters. The raters then met to compare their results and discuss discrepancies. The interpretation of the scoring grid was clarified for six items to better suit the nature of less structured tasks. For example, more precise indications about frequency were added to the items Amount of use, Reaches, Moves upper arm, Moves forearm, and Grasps (see Table 3 for an example), because the less structured tasks of the present study offered quite different opportunities to demonstrate these abilities than the standardized AHA tasks. In addition, specific tasks, such as handling utensils, a toothbrush, or toothpaste, were identified to assess the item Manipulates. These clarifications reduced the number of mismatches between the two raters from 5.5 to 1.7 per participant on average. After the clarifications were made, the three raters independently scored the 17 remaining assessments using the AHA observation-based scoring grid, blinded to each other’s scores.
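The pilot-phase agreement figure (mean number of mismatched items per participant between two raters) can be sketched as follows. The data layout (one list of item ratings per participant), the function name `mean_mismatches`, and the example ratings are assumptions for illustration; they are not the study’s data.

```python
def mean_mismatches(rater_a: list[list[int]], rater_b: list[list[int]]) -> float:
    """Average number of items per participant on which two raters gave different ratings."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same, non-empty set of participants")
    total = 0
    for items_a, items_b in zip(rater_a, rater_b):
        if len(items_a) != len(items_b):
            raise ValueError("both raters must score the same items")
        # Count the items whose ratings differ for this participant.
        total += sum(a != b for a, b in zip(items_a, items_b))
    return total / len(rater_a)


# Two hypothetical participants, 20 items each.
a = [[4] * 20, [3] * 20]
b = [[4] * 17 + [3] * 3, [3] * 19 + [2]]
print(mean_mismatches(a, b))  # (3 + 1) / 2 = 2.0
```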

Table 3. Example of modification made to the scoring grid.

To assess the convergent validity of the observation-based scoring grid, the Jebsen-Taylor Hand Function Test (JTHFT) was used to measure UE function. The JTHFT is a standardized assessment of unimanual UE motor function [Citation28]. It consists of seven functional tasks: (1) writing; (2) turning cards; (3) picking up small objects; (4) simulated feeding; (5) stacking checkers; and (6, 7) picking up light and heavy objects. Each task is timed, and the time needed is used as the score. If the person is unable to complete a task, a score of 180 s is assigned for that task. For the analysis, the sum of the seven scores for the more affected UE was used. Note that the AHA and the JTHFT assess different constructs: the AHA targets bimanual performance, whereas the JTHFT targets unimanual performance. A tool assessing the same construct would have been preferable to assess convergent validity, but this choice was made given the scarcity of tools that assess bimanual performance in adults with CP.
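The JTHFT total used in the analysis can be sketched as a simple sum in which an uncompleted task contributes 180 s. This is a minimal sketch under assumptions: `None` marks a task the person could not complete, the helper name is hypothetical, and because the text does not say whether completed times are also capped at 180 s, no such cap is applied here.

```python
from typing import Optional

UNABLE_SCORE_S = 180.0  # score assigned when a task cannot be completed
N_TASKS = 7  # writing, cards, small objects, feeding, checkers, light objects, heavy objects


def jthft_total(times_s: list[Optional[float]]) -> float:
    """Sum the seven task times (seconds) for one hand; None marks an uncompleted task."""
    if len(times_s) != N_TASKS:
        raise ValueError(f"expected {N_TASKS} task times, got {len(times_s)}")
    total = 0.0
    for t in times_s:
        # Only the "unable to complete" rule is applied; completed times are not capped.
        total += UNABLE_SCORE_S if t is None else t
    return total


# Hypothetical times for the more affected hand; the checkers task was not completed.
times = [30.0, 12.5, 15.0, 20.0, None, 10.0, 12.5]
print(jthft_total(times))  # 280.0
```

Because the score is a time, a higher JTHFT total indicates poorer performance.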

Data analysis

Gwet’s agreement coefficient (Gwet’s AC2) was used to determine interrater reliability for the global score and for each item of the observation-based scoring grid. Gwet’s AC2 accommodates more than two raters and, through weighting, ordinal ratings. Gwet’s coefficient has been shown to provide a more stable interrater reliability estimate than Cohen’s kappa [Citation29]. To adopt a more conservative approach, linear weights were used, since they treat a one-level difference in ratings as a greater disagreement than quadratic weights do [Citation30]. The level of agreement was interpreted according to the following scale: poor ≤ 0.20, fair = 0.21 to 0.40, moderate = 0.41 to 0.60, good = 0.61 to 0.80, very good = 0.81 to 1.00 [Citation30]. Interrater reliability analyses were performed on the data from the 17 blinded assessments. Analyses of convergent validity and of differences in UE performance according to MACS level were conducted using the revised global score for all 19 assessments. The revised global score was obtained by pooling the results of the two experienced raters and resolving by consensus the items on which they differed. To determine convergent validity, Kendall’s Tau-b correlation coefficient was computed between the global scores of the observation-based scoring grid and the JTHFT total scores. The strength of the correlation (absolute value) was interpreted according to the following scale: negligible ≤ 0.3, low = 0.3 to 0.5, moderate = 0.5 to 0.7, high = 0.7 to 0.9, very high = 0.9 to 1.0 [Citation31]. Non-parametric statistics were used because of outliers and the non-normal distribution of the JTHFT scores. Kendall’s Tau-b was selected because of ties in the global scores of the observation-based scoring grid.
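To make the weighting choice concrete, here is a minimal Python sketch of the textbook multi-rater Gwet’s AC2 (observed weighted agreement p_a versus chance agreement p_e), with linear and quadratic weight matrices. It assumes every participant is rated by all raters on the 1–4 scale, omits the standard error, and uses hypothetical function names and ratings; the study’s analyses were run in R, and software packages may differ in details. The agreement labels follow the scale quoted above, with boundary handling assumed.

```python
def linear_weights(q: int) -> list[list[float]]:
    """Linear agreement weights: 1 - |k - l| / (q - 1)."""
    return [[1 - abs(k - l) / (q - 1) for l in range(q)] for k in range(q)]


def quadratic_weights(q: int) -> list[list[float]]:
    """Quadratic agreement weights: 1 - (k - l)^2 / (q - 1)^2."""
    return [[1 - (k - l) ** 2 / (q - 1) ** 2 for l in range(q)] for k in range(q)]


def gwet_ac2(ratings: list[list[int]], categories: list[int], weights: list[list[float]]) -> float:
    """Gwet's AC2 for complete data: ratings[i] lists the category each rater gave subject i."""
    q = len(categories)
    index = {c: k for k, c in enumerate(categories)}
    # counts[i][k] = number of raters who placed subject i in category k
    counts = []
    for subject in ratings:
        row = [0] * q
        for value in subject:
            row[index[value]] += 1
        counts.append(row)

    # Observed weighted agreement, averaged over subjects.
    p_a = 0.0
    for row in counts:
        r_i = sum(row)
        if r_i < 2:
            raise ValueError("each subject needs ratings from at least two raters")
        for k in range(q):
            r_star = sum(weights[k][l] * row[l] for l in range(q))
            p_a += row[k] * (r_star - 1) / (r_i * (r_i - 1))
    p_a /= len(counts)

    # Chance agreement from the average classification probabilities pi_k.
    pi = [sum(row[k] / sum(row) for row in counts) / len(counts) for k in range(q)]
    t_w = sum(sum(w_row) for w_row in weights)
    p_e = t_w / (q * (q - 1)) * sum(p * (1 - p) for p in pi)
    return (p_a - p_e) / (1 - p_e)


def agreement_label(coef: float) -> str:
    """Map a coefficient to the agreement scale quoted in the text (boundaries assumed)."""
    if coef <= 0.20:
        return "poor"
    if coef <= 0.40:
        return "fair"
    if coef <= 0.60:
        return "moderate"
    if coef <= 0.80:
        return "good"
    return "very good"


# Three hypothetical raters scoring one item for four participants on the 1-4 scale.
item_ratings = [[4, 4, 4], [3, 3, 4], [2, 2, 2], [4, 3, 3]]
scale = [1, 2, 3, 4]
linear = gwet_ac2(item_ratings, scale, linear_weights(4))
quadratic = gwet_ac2(item_ratings, scale, quadratic_weights(4))
print(round(linear, 3), agreement_label(linear))  # 0.774 good
print(round(quadratic, 3), agreement_label(quadratic))  # 0.9 very good
```

On the same hypothetical ratings, linear weights give a lower coefficient than quadratic weights, because a one-level difference earns only 2/3 credit instead of 8/9. This illustrates why the linear choice is the more conservative one.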
To determine the ability of the assessment to differentiate levels of UE performance, the Kruskal-Wallis test was used to compare the global scores of the observation-based scoring grid and the JTHFT scores across MACS groups. Post-hoc analyses were conducted using Dunn’s test with Bonferroni adjustment. All analyses were performed in R v4.2.1 using RStudio version 2022.02.3+492.
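The group comparison can be sketched with only the Python standard library: a tie-corrected Kruskal-Wallis H, a chi-square tail probability, and Dunn’s z-tests on mean ranks with Bonferroni adjustment. This is a minimal sketch, not the R code used in the study. It assumes two-sided Dunn p-values, and its closed-form chi-square tail only covers an even number of degrees of freedom, which includes the three-group (df = 2) MACS I to III design. Group labels and scores are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def average_ranks(values: list[float]) -> list[float]:
    """Rank values from 1 to N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1  # mean of ranks start+1 .. end+1
        start = end + 1
    return ranks


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper tail of a chi-square distribution; this closed form is valid for even df only."""
    if df % 2:
        raise NotImplementedError("this sketch only handles an even number of degrees of freedom")
    half = x / 2
    return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))


def kruskal_dunn(groups: dict[str, list[float]]):
    """Tie-corrected Kruskal-Wallis H and p, plus Bonferroni-adjusted two-sided Dunn p-values."""
    labels = list(groups)
    pooled = [x for g in labels for x in groups[g]]
    n = len(pooled)
    ranks = average_ranks(pooled)
    mean_rank, start = {}, 0
    for g in labels:
        size = len(groups[g])
        mean_rank[g] = sum(ranks[start:start + size]) / size
        start += size

    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    h = 12 / (n * (n + 1)) * sum(len(groups[g]) * mean_rank[g] ** 2 for g in labels) - 3 * (n + 1)
    h /= 1 - tie_term / (n ** 3 - n)
    p_kw = chi2_sf_even_df(h, len(labels) - 1)

    pairs = list(combinations(labels, 2))
    dunn = {}
    for g1, g2 in pairs:
        var = (n * (n + 1) / 12 - tie_term / (12 * (n - 1))) * (1 / len(groups[g1]) + 1 / len(groups[g2]))
        z = (mean_rank[g1] - mean_rank[g2]) / math.sqrt(var)
        p_two_sided = math.erfc(abs(z) / math.sqrt(2))
        dunn[(g1, g2)] = min(1.0, p_two_sided * len(pairs))  # Bonferroni adjustment
    return h, p_kw, dunn


# Hypothetical global scores for three MACS groups (not study data).
scores = {"I": [78, 75, 72], "II": [66, 64, 60], "III": [52, 47, 40]}
h, p, dunn = kruskal_dunn(scores)
print(round(h, 2), round(p, 4))  # 7.2 0.0273
for pair, p_adj in dunn.items():
    print(pair, round(p_adj, 3))
```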

Results

Participants’ clinical and demographic characteristics are presented in Table 4. Participants were approximately evenly distributed across MACS levels. The duration of the assessment ranged from 4 to 19 min (mean = 6.5 min), depending on the participant’s level of impairment.

Table 4. Participants’ clinical and demographic characteristics (n = 19).

Psychometric properties

Gwet’s AC2 for the global scores of the 17 participants was 0.84 (SD = 0.02), indicating very good interrater agreement on the observation-based scoring grid. Interrater reliability for individual items was good to very good, with Gwet’s AC2 ranging from 0.67 to 0.97. Sixteen of the 20 items showed very good agreement, and four items showed good agreement (Amount of use, Manipulates, Readjusts grasp, and Flow in bimanual task). All Gwet’s AC2 values are reported in Table 1. A disparity was observed between experienced and naïve raters. The experienced raters disagreed with both other raters on 1.0 item per participant on average (IP = 1.29 disagreements per participant; LGP = 0.71 disagreements per participant), whereas the naïve rater disagreed with both experienced raters on 5.1 items per participant on average. A secondary analysis was therefore carried out to determine interrater reliability between the two experienced raters. Gwet’s AC2 between the two experienced raters was very good (AC2 = 0.92; SD = 0.02).
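The per-rater disagreement counts can be sketched as follows. This assumes, as an interpretation, that a rater "disagrees with both other raters" on an item when their rating differs from each of the other raters’ ratings. The rater names, the data layout, and the three-item example are hypothetical; the sketch does not reproduce the IP or LGP figures.

```python
def disagreements_with_all_others(scores: dict[str, list[list[int]]], rater: str) -> float:
    """Mean number of items per participant on which `rater` differs from every other rater."""
    others = [r for r in scores if r != rater]
    n_participants = len(scores[rater])
    total = 0
    for p, items in enumerate(scores[rater]):
        for i, value in enumerate(items):
            # The item counts only if no other rater gave the same rating.
            if all(scores[o][p][i] != value for o in others):
                total += 1
    return total / n_participants


# Hypothetical ratings: 2 participants x 3 items (the real grid has 20 items).
scores = {
    "rater_a": [[4, 3, 2], [4, 4, 4]],
    "rater_b": [[4, 3, 3], [4, 4, 4]],
    "rater_c": [[4, 2, 1], [3, 4, 4]],
}
for name in scores:
    print(name, disagreements_with_all_others(scores, name))
# prints: rater_a 0.5 / rater_b 0.5 / rater_c 1.5
```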

Regarding convergent validity, the global scores of the observation-based scoring grid and the JTHFT were highly correlated (τb = −0.74; p < 0.001); the correlation is negative because higher grid scores indicate better performance, whereas longer JTHFT times indicate poorer performance. As shown in Figure 1, the distribution of data across MACS levels was consistent with performance on both assessments. Participants at MACS level I tended to perform better on both the observation-based scoring grid and the JTHFT, while participants at MACS level III performed more poorly on both. Scores differed between MACS groups on both the observation-based scoring grid (p = 0.003) and the JTHFT (p = 0.009). Post-hoc analysis indicated that the MACS III group performed significantly more poorly than the MACS I group on both the observation-based scoring grid (p = 0.003) and the JTHFT (p = 0.002), whereas the differences between MACS II and both MACS I and MACS III did not reach statistical significance for either assessment.
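Kendall’s Tau-b adjusts for ties by counting only the pairs that are untied on each variable. A minimal O(n²) Python sketch is shown below, together with the correlation-size scale quoted in the Methods (applied to the absolute value, with boundary handling assumed). It computes the coefficient only, not its p-value. The function names and the five-participant example are hypothetical; the example includes a tie in the grid scores and shows how a lower score paired with a longer time yields a negative coefficient.

```python
import math


def kendall_tau_b(x: list[float], y: list[float]) -> float:
    """Kendall's tau-b: (concordant - discordant) / sqrt(pairs untied in x * pairs untied in y)."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    concordant = discordant = untied_x = untied_y = 0
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = x[i] - x[j], y[i] - y[j]
            untied_x += dx != 0
            untied_y += dy != 0
            if dx * dy > 0:
                concordant += 1
            elif dx * dy < 0:
                discordant += 1
    return (concordant - discordant) / math.sqrt(untied_x * untied_y)


def correlation_size(coef: float) -> str:
    """Label |coef| with the scale quoted in the text (boundaries assumed)."""
    size = abs(coef)
    if size <= 0.3:
        return "negligible"
    if size <= 0.5:
        return "low"
    if size <= 0.7:
        return "moderate"
    if size <= 0.9:
        return "high"
    return "very high"


# Hypothetical data: grid global scores (higher = better) and JTHFT totals in s (higher = worse).
grid = [80, 72, 72, 60, 45]
jthft = [50.0, 70.0, 65.0, 120.0, 400.0]
tau = kendall_tau_b(grid, jthft)
print(round(tau, 3), correlation_size(tau))  # -0.949 very high
```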

Figure 1. Relationship between the global scores of the observation-based scoring grid and the JTHFT.

JTHFT: Jebsen-Taylor Hand Function Test; MACS: Manual Ability Classification System.

Discussion

This exploratory study aimed to assess the interrater reliability and convergent validity of the AHA observation-based scoring grid in the context of unstandardized ADLs in adults living with CP. The main findings were that interrater reliability was very good and that convergent validity with the JTHFT was high. The results also suggest that this assessment can differentiate levels of UE impairment, at least between MACS levels I and III. These findings are promising for research and clinical practice, given how few tools have been validated to assess the UE in this population, but further research is needed to confirm the psychometric properties of this approach.

Interrater reliability was slightly lower than previously reported for the AHA with children (ICC = 0.98), adolescents (ICC = 0.97), and adults with stroke (ICC = 0.99) [Citation21,Citation22,Citation32]. This lower result was expected, since the scoring instructions and grid were not developed for these tasks. The instructions for some items were therefore less well suited to the tasks and more susceptible to the rater’s subjectivity, creating more variability in scoring between raters. For example, some tasks in the standardized version of the AHA were designed to elicit specific abilities, such as clapping cymbals in different orientations to rate the items Moves forearm and Orients objects. In unstandardized ADLs, these abilities were demonstrated less obviously, creating some ambiguity in scoring.

Guidelines generally recommend a reliability coefficient of at least 0.80 for group-level comparisons and greater than 0.90 for individual-level decision making [Citation33]. Thus, even though reliability was lower when using the AHA scoring grid in unstandardized tasks, the level of reliability found in the current study was considered appropriate for research purposes. Because the reliability coefficient between the two experienced raters was greater than 0.90 (AC2 = 0.92), using this grid for individual-level clinical reasoning was deemed acceptable when the raters are experienced clinicians. However, the use of this rating grid in unstandardized tasks should be approached with caution when inexperienced practitioners use it for clinical reasoning. Moreover, training for this assessment should be provided, especially to novice clinicians, as evidence suggests that they benefit more from assessment training in terms of reliability [Citation34].

Regarding the interrater reliability of individual items, the items with the lowest Gwet’s AC2 were Amount of use, Manipulates, Readjusts grasp, and Flow in bimanual task, with values ranging from 0.67 to 0.79. One possible explanation for the lower reliability of the Amount of use item is that its scoring relies mainly on frequency. When the AHA scoring grid is used with the standardized tasks for which it was designed, the number of opportunities to demonstrate an ability is more constant and predictable, making it easier to judge frequency objectively. However, Amount of use was also among the least reliable items of the AHA with adolescents (ICC = 0.63) and adults with stroke (Cohen’s weighted kappa = 0.79) [Citation22,Citation32]. The lower reliability of the Manipulates and Readjusts grasp items could be attributed to the lack of tasks specifically designed to elicit the skills these items encompass. In comparison, the standardized AHA board game includes a marble-manipulation task that facilitates the observation of in-hand manipulation, and a paper-cutting task to observe grasp readjustment on the paper while cutting. Therefore, care should be taken when choosing functional tasks for assessment, to ensure that they allow effective observation of fine motor adjustment skills.

Convergent validity was lower than in a previous study of adults with sub-acute stroke, in which the correlation between the AHA and the JTHFT was rs = −0.93 [Citation17]. Apart from the use of Kendall’s Tau-b, which typically yields smaller absolute values than Spearman’s correlation on the same data, population characteristics could explain this difference. Given the acquired and recent nature of stroke, a stronger correlation between performance in a functional task and a UE motor assessment would be expected, since the person would not yet have had time to develop compensatory strategies. Conversely, an adult living with CP might demonstrate better function despite their level of UE impairment, thanks to the many strategies developed throughout their life. As mentioned above, the AHA and the JTHFT assess different constructs, with the AHA targeting bimanual performance and the JTHFT targeting unimanual performance; a perfect correlation between these two tools was therefore not expected. Overall, the good validity and reliability results obtained for this assessment could be explained by the extensive and precise scoring instructions for each item in the AHA manual and by the adjustments made to those instructions to fit the new tasks. Videotaping the assessment also ensures that the performance is viewed thoroughly and that all elements are considered, enabling all raters to base their ratings on the same observations and limiting potential bias associated with the order of task presentation. That said, when using the grid in a new context, the scoring instructions need to be adapted to the tasks through prior pilot testing.

The results show the feasibility of using the AHA observation-based scoring grid in unstandardized ADLs for research purposes. This type of grid also shows potential for clinical purposes, as it could serve as a versatile tool integrated into observations of different ADLs to consider bimanual performance and obtain a more objective measure. Such a grid would allow the client and the clinician to select tasks that are meaningful and aligned with the client’s functional objectives, providing an assessment that is occupation-oriented, ecological, and meaningful to the client. Furthermore, given the limited time clinicians have to complete their assessments, combining an ADL observation with an objective evaluation can improve efficiency by avoiding duplicate tests and observations.

Limitations and future studies

This was an exploratory study, and further research is needed to demonstrate with more confidence the validity of using an observation-based scoring grid in unstandardized ADLs. Test-retest reliability would be an important property to assess, since a person’s performance is more likely to vary from one occasion to another in an unstandardized task. It would also be relevant to compare the change in scores across different sets of tasks, to ensure the reliability of this approach in different contexts. Sensitivity to change and the minimal clinically important difference should also be studied before widespread clinical implementation.

When assessing interrater reliability, raters are usually blinded to all evaluations. However, an initial comparison of the two pilot assessments was necessary in the present study to adapt the scoring criteria to an unstandardized context. This is a limitation of our study, since the comparison may have inflated the reliability results. The sample size is also a potential limitation, as a sample of n > 30 is usually recommended for assessing the interrater reliability of a four-point rating scale [Citation35].

Acknowledgments

The authors thank Sophie Levac for her participation as a rater for the interrater reliability.

Disclosure statement

The authors report no conflicts of interest.

Data availability statement

Because this research involved video recordings, participants did not consent to their data being shared publicly; supporting data are therefore not available.

Additional information

Funding

References

- Oskoui M, Coutinho F, Dykeman J, et al. An update on the prevalence of cerebral palsy: a systematic review and meta‐analysis. Dev Med Child Neurol. 2013;55(6):509–519. doi: 10.1111/dmcn.12080.

- Himmelmann K, Uvebrant P. The panorama of cerebral palsy in Sweden part XII shows that patterns changed in the birth years 2007-2010. Acta Paediatr. 2018;107(3):462–468. doi: 10.1111/apa.14147.

- Robertson CMT, Ricci MF, O’Grady K, et al. Prevalence estimate of cerebral palsy in Northern Alberta: births, 2008-2010. Can J Neurol Sci. 2017;44(4):366–374. doi: 10.1017/cjn.2017.33.

- Østensjø S, Carlberg EB, Vøllestad NK. Motor impairments in young children with cerebral palsy: relationship to gross motor function and everyday activities. Dev Med Child Neurol. 2004;46(9):580–589. doi: 10.1111/j.1469-8749.2004.tb01021.x.

- Van Meeteren J, Roebroeck M, Celen E, et al. Functional activities of the upper extremity of young adults with cerebral palsy: a limiting factor for participation? Disabil Rehabil. 2008;30(5):387–395. doi: 10.1080/09638280701355504.

- Gordon AM, Bleyenheuft Y, Steenbergen B. Pathophysiology of impaired hand function in children with unilateral cerebral palsy. Dev Med Child Neurol. 2013;55 Suppl 4(s4):32–37. doi: 10.1111/dmcn.12304.

- Bailey RR, Klaesner JW, Lang CE. Quantifying real-world upper-limb activity in nondisabled adults and adults with chronic stroke. Neurorehabil Neural Repair. 2015;29(10):969–978. doi: 10.1177/1545968315583720.

- Wagner LV, Davids JR. Assessment tools and classification systems used for the upper extremity in children with cerebral palsy. Clin Orthop Relat Res. 2012;470(5):1257–1271. doi: 10.1007/s11999-011-2065-x.

- Semrau JA, Herter TM, Scott SH, et al. Examining differences in patterns of sensory and motor recovery after stroke with robotics. Stroke. 2015;46(12):3459–3469. doi: 10.1161/STROKEAHA.115.010750.

- Kantak S, Jax S, Wittenberg G. Bimanual coordination: a missing piece of arm rehabilitation after stroke. Restor Neurol Neurosci. 2017;35(4):347–364. doi: 10.3233/RNN-170737.

- Ng S, Dinesh S, Tay S, et al. Decreased access to health care and social isolation among young adults with cerebral palsy after leaving school. J Orthop Surg (Hong Kong). 2003;11(1):80–89. doi: 10.1177/230949900301100116.

- Rapp CE Jr, Torres MM. The adult with cerebral palsy. Arch Fam Med. 2000;9(5):466–472. doi: 10.1001/archfami.9.5.466.

- Liptak GS. Health and well being of adults with cerebral palsy. Curr Opin Neurol. 2008;21(2):136–142. doi: 10.1097/WCO.0b013e3282f6a499.

- Riquelme I, Arnould C, Hatem SM, et al. The two-arm coordination test: maturation of bimanual coordination in typically developing children and deficits in children with unilateral cerebral palsy. Dev Neurorehabil. 2019;22(5):312–320. doi: 10.1080/17518423.2018.1498552.

- Penta M, Tesio L, Arnould C, et al. The ABILHAND questionnaire as a measure of manual ability in chronic stroke patients: Rasch-based validation and relationship to upper limb impairment. Stroke. 2001;32(7):1627–1634. doi: 10.1161/01.str.32.7.1627.

- Barreca SR, Stratford PW, Masters LM, et al. Validation of three shortened versions of the Chedoke arm and hand activity inventory. Physiother Can. 2006;58(2):148–156. doi: 10.3138/ptc.58.2.148.

- Krumlinde-Sundholm L, Lindkvist B, Plantin J, et al. Development of the assisting hand assessment for adults following stroke: a Rasch-built bimanual performance measure. Disabil Rehabil. 2019;41(4):472–480. doi: 10.1080/09638288.2017.1396365.

- Sköld A, Hermansson LN, Krumlinde-Sundholm L, et al. Development and evidence of validity for the children’s hand‐use experience questionnaire (CHEQ). Dev Med Child Neurol. 2011;53(5):436–442. doi: 10.1111/j.1469-8749.2010.03896.x.

- Holmefur M, Krumlinde-Sundholm L. Psychometric properties of a revised version of the assisting hand assessment (Kids-AHA 5.0). Dev Med Child Neurol. 2016;58(6):618–624. doi: 10.1111/dmcn.12939.

- Louwers A, Beelen A, Holmefur M, et al. Development of the assisting hand assessment for adolescents (Ad-AHA) and validation of the AHA from 18 months to 18 years. Dev Med Child Neurol. 2016;58(12):1303–1309. doi: 10.1111/dmcn.13168.

- Holmefur M, Krumlinde-Sundholm L, Eliasson AC. Interrater and intrarater reliability of the assisting hand assessment. Am J Occup Ther. 2007;61(1):79–84. doi: 10.5014/ajot.61.1.79.

- Van Gils A, Meyer S, Van Dijk M, et al. The adult assisting hand assessment stroke: psychometric properties of an observation-based bimanual upper limb performance measurement. Arch Phys Med Rehabil. 2018;99(12):2513–2522. doi: 10.1016/j.apmr.2018.04.025.

- Eliasson A-C, Krumlinde-Sundholm L, Rösblad B, et al. The manual ability classification system (MACS) for children with cerebral palsy: scale development and evidence of validity and reliability. Dev Med Child Neurol. 2006;48(7):549–554. doi: 10.1017/S0012162206001162.

- Poitras I, Clouâtre J, Campeau-Lecours A, et al. Accelerometry-based metrics to evaluate the relative use of the more affected arm during daily activities in adults living with cerebral palsy. Sensors. 2022;22(3):1022. doi: 10.3390/s22031022.

- Poitras I, Campeau-Lecours A, Mercier C. Sensory deficits in adults living with hemiparetic cerebral palsy and their impact on upper limb use during unconstrained bilateral tasks. Poster session presented at: 12th World Congress for Neurorehabilitation (WCNR); Vienna, Austria; 2022.

- Poitras I, Clouâtre J, Campeau-Lecours A, et al. Robotic assessment of unilateral and bilateral upper limb performance in adults living with hemiparetic cerebral palsy. Poster session presented at: Neural Control of Movement; Dublin, Ireland; 2022.

- Poitras I, Clouâtre J, Campeau-Lecours A, et al. Accelerometry-based metrics to characterize upper extremity motor deficits during a kitchen task among adults living with cerebral palsy. Poster session presented at: ISEK XXIV; Québec, Canada; 2022.

- Jebsen RH, Taylor N, Trieschmann RB, et al. An objective and standardized test of hand function. Arch Phys Med Rehabil. 1969;50(6):311–319.

- Wongpakaran N, Wongpakaran T, Wedding D, et al. A comparison of Cohen’s kappa and Gwet’s AC1 when calculating inter-rater reliability coefficients: a study conducted with personality disorder samples. BMC Med Res Methodol. 2013;13(1):61. doi: 10.1186/1471-2288-13-61.

- Gwet KL. Handbook of inter-rater reliability: the definitive guide to measuring the extent of agreement among raters. 4th ed. Gaithersburg (MD): Advanced Analytics, LLC; 2014.

- Hinkle DE, Wiersma W, Jurs SG. Applied statistics for the behavioral sciences. Boston (MA): Houghton Mifflin College Division; 2003.

- Louwers A, Krumlinde-Sundholm L, Boeschoten K, et al. Reliability of the assisting hand assessment in adolescents. Dev Med Child Neurol. 2017;59(9):926–932. doi: 10.1111/dmcn.13465.

- Polit DF, Hungler BP. Nursing research: principles and methods. Philadelphia: Lippincott; 1999.

- Sadler ME, Yamamoto RT, Khurana L, et al. The impact of rater training on clinical outcomes assessment data: a literature review. Int J Clin Trials. 2017;4(3):101. doi: 10.18203/2349-3259.ijct20173133.

- Cicchetti DV. Assessing inter-rater reliability for rating scales: resolving some basic issues. Br J Psychiatry. 1976;129(5):452–456. doi: 10.1192/bjp.129.5.452.