ABSTRACT

Wearable camera photo review has successfully been used to enhance memory, yet very little is known about the underlying mechanisms. Here, the sequential presentation of wearable camera photos – a key feature of wearable camera photo review – is examined using behavioural and EEG measures. Twelve female participants were taken on a walking tour, stopping at a series of predefined targets, while wearing a camera that captured photographs automatically. A sequence of four photos leading to these targets was selected (∼ 200 trials) and together with control photos, these were used in a recognition task one week later. Participants’ recognition performance improved with the sequence of photos (measured in hit rates, correct rejections, & sensitivity), revealing for the first time, a positive effect of sequence of photos in wearable camera photo review. This has important implications for understanding the sequential and cumulative effects of cues on episodic remembering. An old-new ERP effect was also observed over visual regions for hits vs. correct rejections, highlighting the importance of visual processing not only for perception but also for the location of activated memory representations.

Introduction

Wearable cameras are small lifelogging devices that have been shown to significantly enhance autobiographical memory retrieval in patients with memory impairments, as well as offering mnemonic benefits to cognitively healthy individuals (see Chow & Rissman, 2017, for a detailed review). These devices are small digital cameras, first developed by Microsoft in 2003, that are worn around the neck and designed to automatically capture low-resolution, wide-angle photographs from the wearer’s perspective. A key feature of these cameras is that the picture capture is influenced by in-built sensors, which detect salient environmental factors, such as movement, light, temperature, and direction. With typical use, the cameras take approximately one image every 10–15 s, and sequences of consecutive images can later be reviewed in the form of a time-lapse “movie” at either a predetermined or self-regulated pace. This provides an efficient way of showing many photos in a short time, which can create the experience of an intense “flood” of recollection, termed by Loveday and Conway (2011) as a “Proustian moment”.

Although there is now a growing number of studies that show the beneficial effects of wearable camera photo review (Chow & Rissman, 2017; Mair et al., 2017; Silva et al., 2016), an important question for both practitioners and memory theorists is how and why this technology offers such a powerful memory aid. What is it about this type of photograph or the way they are reviewed that makes them so effective in triggering episodic remembering? Explanations include the idea that visual cues are more effective than verbal ones (Maisto & Queen, 1992), or that the sequence of photos provides additional temporal information which supports retrieval through contextual reinstatement (Barnard et al., 2011). Mair et al. (2017) recently showed that the presentation of photos in their natural (i.e., sequential) order is more beneficial than random presentation. A more convincing suggestion is that the photographs produced by these cameras share a number of overlapping features with normal human memory (Hodges et al., 2011; Loveday & Conway, 2011). For example, wearable camera-generated stimuli are visual, passively captured, have a “field” (as opposed to “observer”) perspective, are time-compressed, and are sequentially ordered.

A key challenge with identifying the underlying mechanism of wearable camera photo review is that there are many variations in how wearable cameras are used, both in experimental studies and everyday life. This makes it difficult to make direct comparisons and draw conclusions. In particular, there is a lack of consistency in whether photos are presented: singly or as a collection; in temporal order, reverse order, or randomly; once or more than once; within hours, weeks or months; or at a pre-determined or self-regulated pace. Studies that have evaluated the value of the camera as a memory aid have not yet systematically explored these factors, nor have they explored the neural correlates associated with these factors.

An important feature of wearable cameras is the sequential way in which photos are captured, which allows them to be presented and reviewed in the same pattern but at a faster pace. No study to date has explored the behavioural dynamics or neural correlates of this process. An effective way to investigate the fine temporal structure of the underlying processes is using electroencephalography (EEG) to measure event-related potentials (ERPs). While EEG and ERPs have not been used with wearable cameras, there is a large body of work that uses this approach to explore the neurophysiology of recognition memory under laboratory conditions. A well-established finding is that correctly identified old words, compared to new words, elicit an ERP with a higher amplitude over parietal electrodes (Sanquist et al., 1980; Wilding & Ranganath, 2011). When recording from lateral electrodes, this old-new effect is largest over the left-parietal electrodes 500–800 milliseconds after stimulus presentation, and it is therefore termed the left-parietal old-new ERP effect (Friedman & Johnson, 2000; Rugg, 1994). It has been shown that this signature specifically indexes recollection (Mecklinger et al., 2016), whereas familiarity is characterised by a mid-frontal old-new ERP effect (Mecklinger & Jäger, 2009). Familiarity here refers to participants’ ability to recognise old stimuli based on their feeling of “knowing” from somewhere without necessarily recollecting specific information about the context in which the item was studied or experienced. Furthermore, using coloured clip-art stimuli, it has been shown that when participants are tested at different study-test time intervals the parietal old-new (recollection) effect attenuates after one week and fades after four weeks, whereas the familiarity old-new effect remains consistent within this time (Roberts et al., 2013; Tsivilis et al., 2015).

It is not clear whether these effects will be observed for recognition of real-world memories, which differ from laboratory-based memories in several ways. For example, the study of memory in laboratory conditions usually involves simple stimuli (e.g., words, shapes, generic pictures) encountered within a relatively impoverished and unchanging environment. In contrast, real-world memories consist of complex stimuli encoded within rich, dynamic, and multisensory environments, and involve novel combinations of often familiar items (e.g., people, places, objects). As such, real-world memories are more likely to be personally relevant, emotionally salient, goal-directed, and intrinsically motivated. Moreover, recognition memory in the laboratory involves the presentation of precisely the same stimuli presented within the same context both at study and at test, but outside the laboratory, objects, people, and places are recognised in contexts that differ from the original encoding context, and usually, only partial cues are available.

The current experiment is the first to use EEG to explore the behavioural and neural mechanisms underlying the recollection of memories based on wearable camera photos in a real-world context. We took participants on a guided walking tour where they saw a series of targets while they were wearing a wearable camera. The targets included urban artefacts such as building facades, sculptures, and other salient objects in the city. The camera produced multiple photos for each target from participants’ perspective as they walked towards them. One week later, participants performed a recognition task that included these photos along with control photos taken in a similar manner. During this task, a sequence of four photos per target was shown in their natural order. Participants used a response box and indicated their memory response for each of these photos using one of three responses: “don’t remember”, “familiar”, or “recollect”. This paradigm allowed us to measure behavioural and neurophysiological changes associated with observing a sequence of photos. Firstly, we investigate whether the typical “old-new” ERP effects found with simple stimuli are also elicited by complex wearable camera images depicting a recently experienced event. Secondly, we look at ERP amplitude changes across the sequence of photos, in order to identify the timing and location of cortical activation that occurs during a sequenced image review. If memory success is influenced by the sequential presentation of related images, then we would expect that, over the course of the presented sequence, participants’ recognition memory would increase, and a corresponding increase in amplitude would be observed in recognition-related ERPs. On the other hand, if the sequence is unimportant for memory success, then we would expect no consistent relationship between the serial position of the image within the sequence and measures of recognition sensitivity and the corresponding ERP amplitude.

Methods

Participants

Participants were 13 females, ranging in age from 45 to 56 (M=51.12, SD=3.76). One participant was excluded as they mentioned after the study that they had confused the responses during the recognition task. Participants were recruited through an advertisement on City University London’s participant recruitment website and by word of mouth. None of the participants reported any history of brain injury or serious mental health condition at the time of the study, and all had normal or corrected-to-normal vision. All participants signed an informed consent form. The study was approved by the Psychology Department Ethics Committee of City, University of London.

Wearable camera

The wearable camera Autographer was used in this study (OMG PLC, http://www.autographer.com). Autographer is a small camera that is worn around the neck and captures photos from the perspective of the person wearing it. It is equipped with a set of sensors reacting to changes in colour, brightness, temperature, perceived direction, and motion. The information from these sensors is then used to detect and take a photo at a “good” moment. We used Autographer on a setting that takes a photo on average every 10 sec, with variance depending on the information from the sensors.

Stimuli

Fifty-eight predefined “targets” – urban artefacts seen during the walking tour, such as a unique building facet, an old police post, a church entrance, and sculptures – were used to create the experimental stimuli. The tour was devised in a way that avoided any famous sights in London. While the areas participants walked through may have been familiar to them, the targets were chosen such that it was very unlikely for them to have seen them or paid attention to them before the tour. This was the same for the control tour. For each participant, photos of these predefined targets were selected from the full set of photos captured on their Autographer during the tour, along with a sequence of three preceding photos. All other photos were discarded. There was some variability between the photos for each target within the sequence, as the photos depicted the targets from slightly different angles and slightly different distances; however, the targets were seen in all the photos. The temporal gap between photos was approximately 10 sec unless a photo had been removed from a sequence, in which case this gap was twice as long. After rejecting sequences with photos containing a recognisable object (e.g., the experimenter, a participant’s hand), the number of targets ranged from 48 to 56 across participants. Following artefact rejection of the EEG data, we had the following average number of trials for each condition: 40.8 in sequence 1, 42.7 in sequence 2, 44.5 in sequence 3, and 46.2 in sequence 4 for hit responses, and 44.1 in sequence 1, 45.8 in sequence 2, 47.2 in sequence 3, and 48.2 in sequence 4 for correct rejection responses.

As a control for the “tour photo” sequences, a set of “new photo” sequences was constructed from Autographer photos captured on a different walk in a different location by the experimenter. For each participant, the number of new (control) sequences was adjusted to be equal to the number of tour (old) sequences. Photos were shown on a CRT screen (resolution: 1264 × 790) with a large 30° × 40° visual angle.

Design and procedure

The experiment consisted of two parts: a guided walking tour, followed by a recognition test with EEG a week later. Pairs of participants were taken on the guided walking tour while wearing the camera. The experimenter acted as the guide and ensured that the participants encountered each of the targets for long enough for it to be captured on the Autographer. They were told to walk naturally as if they were exploring the area, and their attention was not specifically drawn to the targets. Participants could talk and take a short break during the tour. All participants followed the same route and observed the same targets. The walking tour took place in the London Borough of Islington and the City of London, London, UK. It was 3 miles long and took participants approximately 90 min to complete.

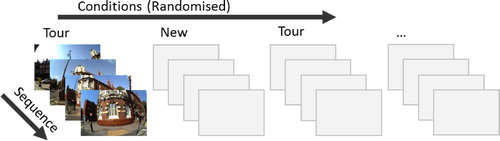

The recognition and EEG recording session took place after a one-week interval. For each condition (tour vs new photos), there were between 48 and 56 sequences of 4 photos, comprising on average 207 photos per condition. During the recognition task, the sequences of tour and new photos were presented in a random order, but photos within each sequence were kept in the correct temporal order (see Figure 1 for task design). Each photo was presented on the screen until a response was made. This was followed by a 500 ms inter-trial interval, during which the screen turned grey, before the next photo was presented. There was no fixation cross before or after the photo presentation. Participants were instructed to use a response box and respond to each photo by pressing one of three buttons: “Don’t remember”, when they did not recognise the photo as one from the guided walking tour; “Familiar”, when they were sure that they remembered the photo from the tour but had no specific recollection of the context (what they were thinking, saying or doing); and “Recollection”, when in addition to remembering the photo from the tour they also recalled what they were experiencing during that time, such as what they were talking or thinking about. The responses we were interested in were: “hit-recollect” – correctly recollected photos; “hit-familiar” – correctly familiar photos; “miss” – incorrect “don’t remember” responses; “correct rejection” – correct “don’t remember” responses; and “false alarm” – incorrectly recollected or familiar responses.

Figure 1. The design of the study. Four photos for each target on the walking tour were presented in the order in which they were captured. The order of targets and conditions within the experimental session was randomised. Participants had to respond to every photo they saw with no time limit, with a 500-millisecond inter-trial interval.

EEG acquisition and pre-processing

EEG was recorded using a 64-channel BrainVision BrainAmp series amplifier (Brain Products, Herrsching, Germany) with a 1000 Hz sampling rate. The data were recorded with respect to an FCz reference electrode and later re-referenced to the TP9 and TP10 electrodes. Ocular activity was recorded with an electrode placed underneath the left eye. Pre-processing steps were conducted using BrainVision Analyzer (Brain Products, Herrsching, Germany). We first applied a low cut-off filter of 0.5 Hz and an automatic ocular correction using ocular independent component analysis. The data were then segmented from 200 ms prior to 800 ms after stimulus presentation. After a high cut-off filter of 20 Hz, automatic artefact rejection was applied, excluding segments with a slope of 200 µV/ms and a min–max difference of 200 µV in a 200 ms interval. Baseline correction was applied to the 200 ms interval preceding the stimulus. After pre-processing, the mean amplitude for the given time window for every trial was exported from BrainVision Analyzer to MATLAB (MathWorks Inc., Natick, MA) for statistical analysis, and grand averages were computed in BrainVision Analyzer for visual inspection of ERPs.
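The min–max rejection criterion and the baseline correction described above can be illustrated with a minimal sketch. This is not BrainVision Analyzer's implementation: the function names are hypothetical, and the sketch assumes one epoch from one channel stored as a list of amplitudes in µV, sampled at 1000 Hz so that 200 ms corresponds to 200 samples.

```python
def exceeds_min_max(epoch, window, threshold_uv):
    """Return True if (max - min) inside any sliding window exceeds the threshold.

    epoch: amplitudes in microvolts; window: window length in samples.
    """
    last_start = max(len(epoch) - window, 0)
    for start in range(last_start + 1):
        segment = epoch[start:start + window]
        if max(segment) - min(segment) > threshold_uv:
            return True
    return False


def baseline_correct(epoch, n_baseline):
    """Subtract the mean of the first n_baseline samples (pre-stimulus interval)."""
    baseline = sum(epoch[:n_baseline]) / n_baseline
    return [value - baseline for value in epoch]


# Hypothetical epoch: 200 pre-stimulus samples followed by 800 post-stimulus samples.
epoch = [1.0] * 200 + [3.0] * 800
if not exceeds_min_max(epoch, window=200, threshold_uv=200.0):
    corrected = baseline_correct(epoch, n_baseline=200)
```

Because the min–max difference does not depend on a constant offset, the order of the two steps in this sketch does not change which epochs are rejected.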

Behavioural analysis

We were interested in participants’ hit rate, recollection rate, correct rejection rate, sensitivity (d’), and response bias, and specifically in how these measures change over the sequence. Therefore, each of these measures was computed four times, once for each serial position in the sequence. The hit rate was measured as the proportion of hit responses (both hit-familiar and hit-recollect) to all responses to the tour photos (hits and misses), the recollection rate as the proportion of correctly recollected responses (hit-recollect) to all hit responses (hit-recollect and hit-familiar), and the correct rejection rate as the proportion of “don’t remember” responses to the control photos to all control photos.
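As an illustration of these three definitions, the following sketch computes the rates for one serial position. The response labels and the function name are hypothetical; the study's own data format is not described in the text.

```python
from collections import Counter


def response_rates(tour_responses, control_responses):
    """Compute hit, recollection, and correct rejection rates for one serial position.

    Each argument is a list of labels: "dont_remember", "familiar", or "recollect".
    """
    tour = Counter(tour_responses)
    control = Counter(control_responses)
    hits = tour["familiar"] + tour["recollect"]
    return {
        # hits / (hits + misses)
        "hit_rate": hits / len(tour_responses),
        # hit-recollect / (hit-recollect + hit-familiar)
        "recollection_rate": tour["recollect"] / hits if hits else float("nan"),
        # "don't remember" responses to control photos / all control photos
        "correct_rejection_rate": control["dont_remember"] / len(control_responses),
    }


# Hypothetical responses to the first photo of ten tour and ten control sequences.
tour = ["recollect"] * 6 + ["familiar"] * 2 + ["dont_remember"] * 2
control = ["dont_remember"] * 9 + ["familiar"]
rates = response_rates(tour, control)
```

Calling the function once per serial position (first to fourth photo) yields the four values per measure that enter the analysis of the sequence effect.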

The sensitivity (d’) and the response bias (c) were computed from participants’ hit and false alarm rates (Stanislaw & Todorov, 1999). The false alarm rate was taken as 1 minus the correct rejection rate. The sensitivity measure provides information about participants’ ability to discriminate between old and new photos; response bias provides information about participants’ inclination to say “remember” or “don’t remember”. The hit and false alarm rates used for this analysis were log-transformed to avoid undefined sensitivity values for extreme cases, namely a hit rate of one or a false alarm rate of zero (Hautus, 1995).
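A minimal sketch of these two signal detection measures follows, using the standard definitions d’ = z(H) − z(F) and c = −[z(H) + z(F)]/2, where z is the inverse of the standard normal cumulative distribution. The correction for extreme rates is assumed here to be the log-linear rule (add 0.5 to each count and 1 to each number of trials); the text does not spell out the exact adjustment, so this is an assumption, as is the function name.

```python
from statistics import NormalDist


def sdt_measures(hits, n_old, false_alarms, n_new):
    """Return (d_prime, criterion) from raw response counts.

    Assumes the log-linear correction so that rates of 0 or 1 never occur.
    """
    hit_rate = (hits + 0.5) / (n_old + 1)
    fa_rate = (false_alarms + 0.5) / (n_new + 1)
    z = NormalDist().inv_cdf  # inverse standard normal CDF
    d_prime = z(hit_rate) - z(fa_rate)
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))
    return d_prime, criterion


# Hypothetical counts for one serial position: 50 tour and 50 control photos.
d_prime, criterion = sdt_measures(hits=40, n_old=50, false_alarms=10, n_new=50)
```

With this sign convention, a positive criterion indicates a tendency to respond “don’t remember” and a negative one a tendency to respond “remember”.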

To examine how these measures changed with the sequence, we used a one-way repeated measures ANOVA in which only the linear effect of the sequence was included. In order to explore where these effects lay within the sequence, we used three post hoc t-tests and adjusted their p values with Bonferroni correction.

To examine changes in participants’ reaction times (RT) across conditions, responses, and the sequences, we used a 3 (response: familiar, recollect, & don’t remember) by 2 (condition: tour & control) by 4 (sequence: first, second, third, & fourth photo) repeated measures ANOVA. Here also only a linear effect of sequence was included in the model. To explore where the effect of sequence lay, we used three post hoc t-tests and adjusted their p values with Bonferroni correction. Similarly, we used three post hoc tests to explore differences across the three responses.

ERP analysis

We used a collapsed localiser approach (see Luck & Gaspelin, 2017) to choose a time window. First, we averaged across all conditions and then used this collapsed waveform to define the time windows to be used to compare the different conditions. This method allows us to obtain an unbiased time window. Based on this, we examined a time window between 135 and 450 milliseconds post-stimulus, and explored five regions of interest (ROIs): frontal (F1, F2, Fz), central (C1, C2, Cz), parietal (P1, P2, Pz), parieto-occipital (PO3, PO4, POz), and occipital (O1, O2, Oz) electrodes. Given that five ROIs were being examined, we used False Discovery Rate (FDR) correction for the p values obtained from these comparisons (Benjamini & Hochberg, 1995).
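The Benjamini-Hochberg procedure can be sketched as follows for a set of five p values, one per ROI. The function name and the example p values are hypothetical and are not results from this study.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p values in the original order."""
    m = len(p_values)
    # Indices of the p values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


# Hypothetical p values for frontal, central, parietal,
# parieto-occipital, and occipital ROIs.
raw = [0.01, 0.04, 0.03, 0.005, 0.5]
adjusted = benjamini_hochberg(raw)
```

An ROI is then treated as showing a significant effect when its adjusted p value falls below the chosen FDR level (for example 0.05).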

We conducted two separate analyses to examine the mean amplitude of ERP differences between hits and correct rejections (“old-new effect”), and between hits-recollect and hits-familiar (“familiarity-recollect effect”). In both analyses, we also looked at the effect of the sequence. For these analyses, we used linear mixed-effects models (LME; Barr et al., 2013). This analysis considers participant-specific variability and accommodates the repeated measures study design. The fixed part of the model included the response, i.e., hit or correct rejection when looking at the old-new effect, and hit-familiar or hit-recollect when looking at the familiarity-recollect effect. Additionally, we included electrodes within the ROI and the linear effect of the sequence as fixed factors. As random effects, an intercept and slopes for the response, the sequence, and the electrodes were included for each participant. This accounted for the interindividual variability in EEG amplitude and therefore represented a “baseline” for each participant. We computed the significance of fixed effects by comparing a model with the fixed effect of interest with a model without it.

Unlike conventional ANOVA methods, where epochs are first averaged across each condition for each participant, LME uses the data from each individual epoch (Barr et al., 2013). Doing so is advantageous as the models in this method consider that different conditions may have different variances and numbers of data points – a crucial weakness in ERP studies that is being addressed by using linear mixed-effects models (Koerner & Zhang, 2017; Tibon & Levy, 2015). We used maximum likelihood to estimate the parameters and likelihood ratio tests to obtain significance levels (χ²) for these parameters (Bolker et al., 2009). Finally, we used the Benjamini-Hochberg method to correct for the false discovery rate of multiple comparisons on different ROIs (Benjamini & Hochberg, 1995).
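The model comparison step can be sketched as a likelihood ratio test on the maximised log-likelihoods of the two nested models. The sketch assumes that the models differ by a single fixed-effect parameter (one degree of freedom), in which case the chi-square tail probability has a closed form. The function name and the log-likelihood values are hypothetical, and fitting the mixed models themselves is outside the scope of the sketch.

```python
import math


def likelihood_ratio_test(loglik_full, loglik_reduced):
    """Return (chi2, p_value) for nested models differing by one parameter.

    Both log-likelihoods must come from maximum likelihood fits (not REML).
    """
    chi2 = max(2.0 * (loglik_full - loglik_reduced), 0.0)
    # For one degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# Hypothetical log-likelihoods of a model with and without the effect of response.
chi2, p_value = likelihood_ratio_test(loglik_full=-1000.0, loglik_reduced=-1003.5)
```

When the models differ by more than one parameter, the p value has to be taken from a chi-square distribution with the corresponding degrees of freedom instead.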

Results

Behavioural results: response rate, sensitivity, and reaction time

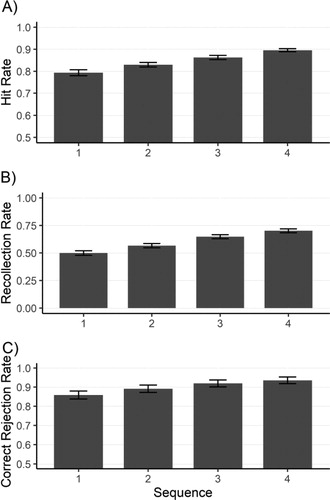

Participants’ hit rate, recollection rate, and correct rejection rate for different sequences are presented in Figure 2. The overall hit rate was 82.75%. There was a significant linear effect of sequence on participants’ hit rate, recollection rate, and correct rejection rate. For the hit rate, post hoc tests showed no significant difference between sequence 1 and 2, sequence 2 and 3, or sequence 3 and 4. For the recollection rate, post hoc tests showed a significant difference between sequence 1 and 2 as well as between sequence 2 and 3, but not between sequence 3 and 4. For correct rejection, post hoc tests showed no significant difference between sequence 1 and 2, sequence 2 and 3, or sequence 3 and 4. Overall, the results suggest a steady increase in participants’ hit rate, recollection rate, and correct rejection rate over the sequence of photos.

Figure 2. (A) Hit rate – the proportion of correctly remembered photos to all tour photos – across the sequence of photos (B) Recollection rate – the proportion of recollect responses to all hit responses – across the sequence of photos (C) Correct rejection rate – the proportion of correctly identified control photos to all control photos – across the sequence of photos. Error bars represent standard error of the mean.

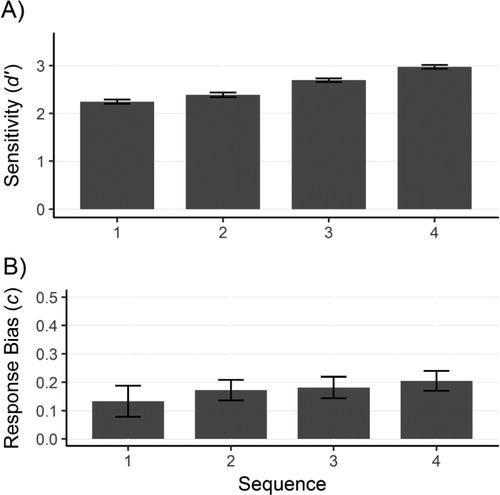

Participants’ sensitivity (d’) and response bias (c) are presented in Figure 3. There was a significant linear effect of sequence on sensitivity but not on response bias. For sensitivity, post hoc tests showed that the difference between sequence 3 and sequence 4 was significant, while the differences between sequence 1 and 2 and between sequence 2 and 3 were not significant. This suggests a steady increase in participants’ sensitivity with the later photos in the sequence.

Figure 3. (A) Sensitivity and (B) response bias for the pictures of the target in the sequence of photos. Error bars represent standard error of the mean.

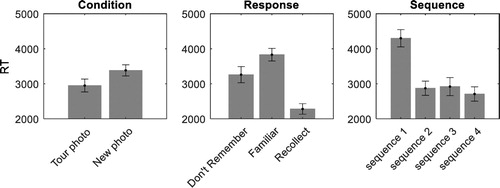

Participants’ RTs for the different conditions (tour/new) are presented in Figure 4. Participants took longer to respond to new photos than to tour photos. There was an effect of sequence on RT, with participants taking longer to respond to the first photo than to the later photos in a sequence. Post hoc tests showed a significant difference between sequence 1 and 2, while the differences between sequence 2 and 3 and between sequence 3 and 4 were not significant. Finally, there was an effect of response type, i.e., “Don’t remember”, “Familiar”, or “Recollect”. Post hoc tests with Bonferroni correction on response showed no difference between “don’t remember” and “familiar” responses, but “recollect” responses were significantly faster than both “don’t remember” and “familiar” responses. There were no interactions between condition and response, condition and sequence, response and sequence, or condition, response, and sequence.

ERP results

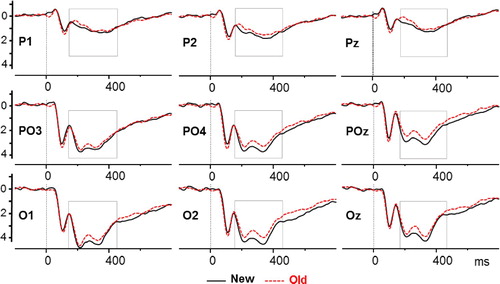

We found no differences between the hit-familiar and hit-recollect responses across any of the regions (see supplementary material for the null statistics). The mean positive amplitude between 135 and 450 ms after stimulus presentation was significantly lower in response to hit conditions (hereafter old) compared to correct rejection conditions (hereafter new) at occipital and parieto-occipital electrodes, and that effect was also evident at parietal electrodes. Figure 5 shows the old-new effects at occipital, parieto-occipital, parietal and frontal electrodes. There was no effect of sequence.

Figure 5. ERP components after the presentation of the stimuli for central (C1, C2, Cz), parietal (P1, P2, Pz), parieto-occipital (PO3, PO4, POz), and occipital (O1, O2, Oz) electrodes. New (solid lines) after the presentation of stimuli participants correctly recognised as new (correct rejection). Old (dashed lines) after the presentation of stimuli participants correctly remembered (familiar and recollect hits). Time windows where the two ERP components are significantly different are depicted with shaded boxes.

Discussion

We explored the behavioural and neural mechanisms underlying wearable camera photo review by investigating the recognition of real-world events across sequences of photos captured during a walking tour. We demonstrated that recognition performance improved across the sequence of four photos, with incremental increases in recollection and sensitivity. Analysis of brain activity using ERPs revealed an old-new effect over frontal and occipital electrodes but showed that this effect was not modulated across the sequence of four photos.

Even though there are numerous studies demonstrating the benefits of wearable cameras (Chow & Rissman, 2017), the underlying mechanism of this enhancement has not been examined. A key feature of the wearable camera in its day-to-day use is that photos are captured and reviewed in sequential form, providing multiple exposures to temporally organised cues. In this study, we specifically explored the relevance of this by isolating sequences of four photos that led up to specific targets and using these to create a recognition task. Participants’ hit rates, along with their subjective feeling of recollection, increased for later photos in the sequence. Importantly, this pattern was also true for sensitivity. Given that an everyday experience will typically generate a sequence with many hundreds of photos, this experimental study offers limited insight into the full potential of viewing photos in sequence. Nevertheless, it is quite striking that this effect can be seen with just four photos. We believe that these results emphasise the benefit of seeing a sequence of related photos, which is a key feature of wearable camera photo review.

Since we did not include sequences of photos in random order, we cannot make inferences about the influence of the order of photos. Based on our results, it is possible that the sequence effect is simply due to the presence of multiple photos and not their order. However, a recent study has shown that sequences of photos in their natural order lead to a stronger final recollection of the events compared to randomly presented photos (Mair et al., 2017). One possibility is that there is an additive effect of the number of cues across the sequence. Since every photo in the sequence has a different perspective, each will contain its own unique combination of cues for the main memory event. Thus, each new photo in the sequence increases the possibility of providing a cue that is personally or environmentally salient.

Finally, it can be argued that the sequence effect may be at least partly caused by an increase in the recognisability of photos later in the sequence. This could have been especially the case for smaller targets. These targets may be less visible in earlier photos in the sequence, as these were taken from further away, and more visible in later photos, as these were taken from a closer point. However, the opposite pattern would be expected for larger targets. These targets would be more recognisable in earlier photos in the sequence, as these were taken from further away and would contain more information about them. This is in contrast to later photos in the sequence, which would contain less information about them. Overall, while targets may not be equally recognisable throughout the sequence, it is unlikely that their recognisability is the primary cause of the sequence effect.

A second possible explanation for the sequence effect is that a subthreshold reactivation of memories during the miss trials makes the target memory more accessible in the next trial of that sequence. Each time a photo from the tour is presented, it has the capacity to act as a memory cue that reactivates the target memory. If this effect is strong enough, a recollection of the event occurs (i.e., hit trials). However, where the cue is not strong enough to trigger explicit episodic remembering (i.e., some miss trials), there may nevertheless be increased activation (see Conway & Loveday, 2015), which makes the target memory more accessible in the next trial of a sequence.

If the sequence does indeed lead to greater activation of the memory trace, then the neural basis remains elusive as we did not find corresponding effects in the EEG analysis. This may, of course, reflect a lack of power, or it may be because this methodology is not able to detect these particular neural correlates, for example, if the changes occur at a more sub-cortical level. Nevertheless, there were important overall findings regarding neural activity: we observed a positive old-new ERP component over the visual electrodes from 135 to 450 milliseconds after the photo presentation onset, and the mean peak amplitude was larger in response to the presentation of photos correctly identified as new (correct rejections) compared to photos correctly identified as old (hits).

This ERP effect is different from that observed in other episodic memory ERP literature (Friedman & Johnson, 2000; Mecklinger et al., 2016; Mecklinger & Jäger, 2009; Rugg, 1994; Wilding & Ranganath, 2011). Usually, the old-new effects found over the parietal regions have a higher amplitude for old compared to new items, whereas here this effect was reversed. However, this likely reflects the major differences between the paradigm used here and those used in most other ERP studies, namely the visual nature of the stimuli and the longer retention time. While laboratory-based episodic memory tasks typically use words or pictures as stimuli, here the stimuli were photos produced by wearable cameras. The visual information in wearable camera photos is more complex than that in lab-based stimuli; the photos have a wider visual angle, contain depth, typically have more items per target item, and contain autobiographical information. Due to this complex visual nature of wearable camera photos, it seems plausible that the visual old-new ERP effect reported here reflects the contribution of visual regions to the recognition of these memories.

Furthermore, the retention interval of one week in this study has likely allowed offline processes to consolidate the memories further, in contrast to other ERP studies with short retention intervals of minutes. If successful, these processes might have changed how memories are stored and retrieved, potentially making them rely less on the hippocampus and more on cortical structures (Nieuwenhuis & Takashima, Citation2011; Squire et al., Citation2004). This may explain why we failed to observe a typical parietal old-new effect here and why others have documented an attenuated or absent parietal old-new ERP effect after long retention intervals of one or four weeks (Roberts et al., Citation2013; Tsivilis et al., Citation2015). However, St Jacques et al. (Citation2011) found activation of the hippocampus after a mean retention interval of eight days in their fMRI study, which would suggest that memories are still hippocampus-dependent after one week. In addition, since the cortical structures responsible for the visual content of the memories are likely the visual regions, it seems sensible that, as a result of consolidation processes, the visual regions contribute to the recognition of these memories. Consequently, given the consolidation processes that occurred during the one-week retention interval and the visual nature of the stimuli, it is likely that the visual old-new ERP effect reflects the contribution of the visual regions to the recognition of real-world memories. However, while these explanations offer potential reasons for the changes in the pattern of ERP results, they do not explain why the old-new effect found here is the reverse of what is typically found.

We observed no differences in the ERPs between familiarity and recollection responses. This suggests that participants’ subjective reports of whether they had recollected contextual information were not reflected in our observed ERP components. One explanation for this finding is that in our study the new and old photos looked very similar (both showed urban areas of London, UK); hence, to differentiate between the photos, participants had to rely more on recollection than on familiarity-based mechanisms. This is also reflected in the increase in recollection with the sequence. Therefore, it may not be surprising that we observed no effect of familiarity. An alternative explanation is that ERPs are not sensitive enough to capture this difference, in which case analysing the underlying oscillatory activity might be more informative.

There are several factors that future studies should consider in order to achieve a better understanding of how wearable cameras help memory and eventually create better memory enhancement strategies. One important consideration is the frequency at which the camera takes photos, since this establishes how many images are available for review but may also impact on the overlap and variation in cues. Another important factor is the number of photos used. In an everyday setting, the user can view long sequences of hundreds or even thousands of images, but this is not practical in studies that are assessing the mechanisms. Ideally sequences should be short enough to allow convenient organisation and management of images, but long enough to allow the “Proustian moment” to occur (Loveday & Conway, Citation2011). Although four photos seemed sufficient to detect a sequence effect, it is unclear at which point, if at all, this effect plateaus. This will be crucial in deciding an optimal number of photos for memory enhancement paradigms.

This novel paradigm has allowed us to observe the positive influence of sequence during wearable camera photo-review, but it is essential that future research explores whether this effect is maintained in people with clinical impairments of memory. This not only has important practical implications but may also shed more light on the underlying neural mechanisms. While we did not find ERP correlates of the sequence effect in this study, we did observe an old-new effect over the visual electrodes that has not been previously seen. This may suggest that for long retention intervals these areas store some of the memories. Furthermore, this likely emphasises the role of visual cortices in recognition of autobiographical episodic memory and highlights the importance of using ecologically valid methods to explore autobiographical remembering.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Barnard, P. J., Murphy, F. C., Carthery-Goulart, M. T., Ramponi, C., & Clare, L. (2011). Exploring the basis and boundary conditions of SenseCam-facilitated recollection. Memory (Hove, England), 19(7), 758–767. https://doi.org/10.1080/09658211.2010.533180

- Barr, D. J., Levy, R., Scheepers, C., & Tily, H. J. (2013). Random effects structure for confirmatory hypothesis testing: Keep it maximal. Journal of Memory and Language, 68(3), 255–278. https://doi.org/10.1016/j.jml.2012.11.001

- Benjamini, Y., & Hochberg, Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society B, 57(1), 289–300. https://doi.org/10.2307/2346101

- Bolker, B. M., Brooks, M. E., Clark, C. J., Geange, S. W., Poulsen, J. R., Stevens, M. H. H., & White, J. S. S. (2009). Generalized linear mixed models: A practical guide for ecology and evolution. Trends in Ecology and Evolution, 24(3), 127–135. https://doi.org/10.1016/j.tree.2008.10.008

- Chow, T. E., & Rissman, J. (2017). Neurocognitive mechanisms of real-world autobiographical memory retrieval: Insights from studies using wearable camera technology. Annals of the New York Academy of Sciences, 1396(1), 202–221. https://doi.org/10.1111/nyas.13353

- Conway, M. A., & Loveday, C. (2015). Remembering, imagining, false memories & personal meanings. Consciousness and Cognition, 33, 574–581. https://doi.org/10.1016/j.concog.2014.12.002

- Friedman, D., & Johnson, R. (2000). Event-related potential (ERP) studies of memory encoding and retrieval: A selective review. Microscopy Research and Technique, 51(1), 6–28. https://doi.org/10.1002/1097-0029(20001001)51:1<6::AID-JEMT2>3.0.CO;2-R

- Hautus, M. J. (1995). Corrections for extreme proportions and their biasing effects on estimated values of d′. Behavior Research Methods, Instruments, & Computers, 27(1), 46–51. https://doi.org/10.3758/BF03203619

- Hodges, S., Berry, E., & Wood, K. (2011). SenseCam: A wearable camera that stimulates and rehabilitates autobiographical memory. Memory (Hove, England), 19(7), 685–696. https://doi.org/10.1080/09658211.2011.605591

- Koerner, T., & Zhang, Y. (2017). Application of linear mixed-effects models in human neuroscience research: A comparison with Pearson correlation in two auditory electrophysiology studies. Brain Sciences, 7(3), 26. https://doi.org/10.3390/brainsci7030026

- Loveday, C., & Conway, M. A. (2011). Using SenseCam with an amnesic patient: Accessing inaccessible everyday memories. Memory (Hove, England), 19(7), 697–704. https://doi.org/10.1080/09658211.2011.610803

- Luck, S. J., & Gaspelin, N. (2017). How to get statistically significant effects in any ERP experiment (and why you shouldn’t). Psychophysiology, 54(1), 146–157. https://doi.org/10.1111/psyp.12639

- Mair, A., Poirier, M., & Conway, M. A. (2017). Supporting older and younger adults’ memory for recent everyday events: A prospective sampling study using SenseCam. Consciousness and Cognition, 49, 190–202. https://doi.org/10.1016/j.concog.2017.02.008

- Maisto, A. A., & Queen, D. E. (1992). Memory for pictorial information and the picture superiority effect. Educational Gerontology, 18(2), 213–223. https://doi.org/10.1080/0360127920180207

- Mecklinger, A., & Jäger, T. (2009). Episodic memory storage and retrieval: Insights from electrophysiological measures. In F. Rösler, C. Ranganath, B. Röder, & R. Kluwe (Eds.), Neuroimaging of human memory: Linking cognitive processes to neural systems (pp. 357–382). Oxford University Press. https://doi.org/10.1093/acprof:oso/9780199217298.003.0020

- Mecklinger, A., Rosburg, T., & Johansson, M. (2016). Reconstructing the past: The late posterior negativity (LPN) in episodic memory studies. Neuroscience and Biobehavioral Reviews, 68, 621–638. https://doi.org/10.1016/j.neubiorev.2016.06.024

- Nieuwenhuis, I. L. C., & Takashima, A. (2011). The role of the ventromedial prefrontal cortex in memory consolidation. Behavioural Brain Research, 218(2), 325–334. https://doi.org/10.1016/j.bbr.2010.12.009

- Roberts, J. S., Tsivilis, D., & Mayes, A. R. (2013). The electrophysiological correlates of recent and remote recollection. Neuropsychologia, 51(11), 2162–2171. https://doi.org/10.1016/j.neuropsychologia.2013.07.012

- Rugg, M. D. (1994). Event-related potential studies of human memory. In M. S. Gazzaniga (Ed.), The cognitive neurosciences (pp. 789–801). The MIT Press.

- Sanquist, T. F., Rohrbaugh, J. W., Syndulko, K., & Lindsley, D. B. (1980). Electrocortical signs of levels of processing: Perceptual analysis and recognition memory. Psychophysiology, 17(6), 568–576. https://doi.org/10.1111/j.1469-8986.1980.tb02299.x

- Silva, A. R., Pinho, M. S., Macedo, L., & Moulin, C. J. A. (2016). A critical review of the effects of wearable cameras on memory. Neuropsychological Rehabilitation, 0(0), 1–25. https://doi.org/10.1080/09602011.2015.1128450

- Squire, L. R., Stark, C. E. L., & Clark, R. E. (2004). The medial temporal lobe. Annual Review of Neuroscience, 27(1), 279–306. https://doi.org/10.1146/annurev.neuro.27.070203.144130

- Stanislaw, H., & Todorov, N. (1999). Calculation of signal detection theory measures. Behavior Research Methods, Instruments, & Computers, 31(1), 137–149. https://doi.org/10.3758/BF03207704

- St. Jacques, P. L., Conway, M. A., Lowder, M. W., & Cabeza, R. (2011). Watching my mind unfold versus yours: An fMRI study using a novel camera technology to examine neural differences in self-projection of self versus other perspectives. Journal of Cognitive Neuroscience, 23(6), 1275–1284. https://doi.org/10.1162/jocn.2010.21518

- Tibon, R., & Levy, D. A. (2015). Striking a balance: Analyzing unbalanced event-related potential data. Frontiers in Psychology, 6, 555. https://doi.org/10.3389/fpsyg.2015.00555

- Tsivilis, D., Allan, K., Roberts, J., Williams, N., Downes, J. J., & El-Deredy, W. (2015). Old-new ERP effects and remote memories: The late parietal effect is absent as recollection fails whereas the early mid-frontal effect persists as familiarity is retained. Frontiers in Human Neuroscience, 9, 532. https://doi.org/10.3389/fnhum.2015.00532

- Wilding, E. L., & Ranganath, C. (2011). Electrophysiological correlates of episodic memory processes. In The Oxford handbook of event-related potential components. Oxford University Press. https://doi.org/10.1093/oxfordhb/9780195374148.013.0187