Abstract

Drawing on the field of complex evaluation, we discuss a novel application of process tracing to the evaluation of complex tourism interventions. We argue that, to better evidence the impact of tourism interventions and facilitate policy transfer, we ought to adopt evaluation approaches that deepen our understanding of the causal mechanisms at play in an intervention. We adopt process tracing as a qualitative, theory-based evaluation method for making within-case causal inferences about impact. To showcase the method, we apply it to evaluate the outcomes of a real-world EU Interreg sustainable tourism intervention called “EXPERIENCE,” implemented in six pilot regions across France and England. We argue that deepening our understanding of how interventions work in a local context is necessary for designing future interventions and transferring them across similar contexts.

Introduction

A major concern when conducting evaluations is addressing causal attribution and contribution (Vanclay, 2015). Interventions are any course of action, programme, project, policy or strategy exercised or mandated by national or international authorities or non-governmental actors to create change. The term refers to a combination of activities or strategies designed to assess, improve or promote change, in the form of behaviour or increased performance, among individuals or a portion of the population (Clarke et al., 2019). Interventions that include multiple independent or interacting components are referred to as complex.

Assessing whether and how a set of interventions has generated particular outcomes is crucial, yet the robustness of attribution claims is often problematic (Collier, 2011; O’Sullivan et al., 2009). Theory-based evaluation is “an approach in which attention is paid to the theories of policy makers, programme managers or other stakeholders, i.e. collections of assumptions, and hypotheses - empirically testable - that are logically linked together” (European Commission, 2013, p. 51). Process tracing is a qualitative, theory-based evaluation method for drawing causal inferences within a single case study to evidence impact (Beach & Pedersen, 2016; Stern et al., 2012), whilst matching the methodological rigour of counterfactual methodologies (Collier, 2011; Wauters & Beach, 2018).

Tourism interventions are classified as complex social interventions, yet the tourism literature contains few studies evaluating their impacts with complexity-sensitive methods (Airey, 2015; Baggio, 2020; Dredge & Jamal, 2015). The tourism monitoring and evaluation literature is in its infancy and is dominated by quantitative evaluation studies using empirical indicators. These are valuable for measuring outcomes such as visitor satisfaction, length of stay or visitor expenditure, but they overlook the process that led to those outcomes (O’Sullivan et al., 2009; Phi et al., 2018). Counterfactual approaches fail to address why change happened, leaving the black box of mechanisms unopened (Trampusch & Palier, 2016). Without understanding why an intervention worked in a particular context, it is impossible to understand how it could work elsewhere (Cartwright & Hardie, 2012).

The politics of evaluation affect the choice of evaluation methods. Scholars have highlighted how organisations often approach evaluation as a box-ticking exercise to evidence an intervention’s success at all costs, since they rely on demonstrating success to funders to secure future funding (Regeer et al., 2016). This is problematic because 1) it hinders project implementers from recognising errors and learning from past mistakes; and 2) it undermines the very meaning of accountability, since the need to demonstrate success at all costs often translates into data manipulated to satisfy funders’ requirements (Bray et al., 2019).

As suggested by various scholars (Busetti & Dente, 2017; Eckardt et al., 2019; Phi et al., 2018), tourism needs theory-based evaluation approaches that generate knowledge about the context and underlying mechanisms of interventions, in order to improve future design (Taplin et al., 2014). This study makes a methodological contribution to the field of complex tourism interventions by presenting a process tracing evaluation case study. We adopt process tracing to examine and explain the process that led to two specific outcomes of a tourism intervention implemented at a regional level as part of a larger European tourism project. We evidence the change directly linked to the project activities and resources in one pilot region. The purpose of the paper is to showcase the methodological value of process tracing in explaining outcomes by robustly evidencing the causal chain leading to impact. The reader should therefore be aware that, to keep the focus on the method and present the overall procedure meaningfully, details of the evaluation itself have been scaled back.

Theoretical background

What is process tracing?

Process tracing is a case-based qualitative method belonging to the family of theory-based evaluation approaches (Weiss, 1998). It consists of constructing hypothetical causal chains leading from an intervention X to an outcome Y and evidencing each step in the chain. In process tracing, evidence is classified through a set of tests that evaluate its strength in confirming or disconfirming the causal hypothesis (Bennett, 2010). Process tracing has been increasingly applied in recent years (e.g. Befani & Stedman-Bryce, 2017; Busetti & Dente, 2017; Chen & Henry, 2020) because of the value it can bring to policy studies (Kay & Baker, 2015) as an analytical tool for evidencing causal inferences or sequences of events within a single case study (Collier, 2011). It is particularly useful for evaluating interventions based on a Theory of Change (Befani & Stedman-Bryce, 2017), especially initiatives that are difficult to evaluate solely through experimental or statistical methods (Befani & Mayne, 2014; Wadeson et al., 2020). We advocate process tracing not as an alternative to counterfactual methods but as a complement to them, producing a more holistic understanding of how an intervention contributed to impact (e.g. Rothgang & Lageman, 2021; Schmitt & Beach, 2015).

At the heart of process tracing lies the concept of generative causality (Beach & Pedersen, 2016; Collier, 2011). Machamer et al. (2000) explain generative causality as the underlying social responses that activities trigger within entities and that ultimately lead to change. A similar concept is discussed by Pawson and Tilley (1997), who advise exploring what the “initiative fires in people’s minds” (p. 188) to understand the mechanisms behind change. Building on Pawson and Tilley’s Realist Evaluation (1997), Deaton and Cartwright (2018) argue that in complex social interventions there are causal forces that can (and should) be identified through alternative epistemological and ontological frameworks that are not purely empirical. Process tracing supports the researcher in establishing how a set of hypothesised mechanisms connects a given intervention X to an outcome Y (Beach & Pedersen, 2018). It requires a causal mechanism to be theorised as a process, i.e. “an unbroken chain of action and reaction enacted by entities - that connects the potential cause with its hypothesised outcome” (Wauters & Beach, 2018, p. 288). By evidencing every step in a causal chain, process tracing increases confidence that the identified mechanisms produced a particular impact (Befani & Mayne, 2014).

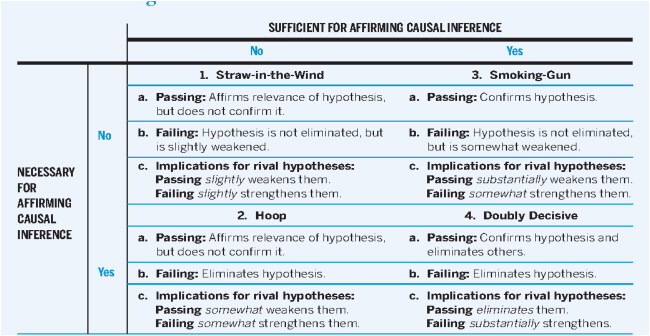

There are several common critiques of the method’s usefulness. Beach (2016) highlights that many studies claiming to adopt a process tracing (PT) approach do not execute it sufficiently, resulting in numerous ambiguities and a lack of understanding of the underlying mechanisms. As a qualitative method, it is also not suited to generating generalisable findings, and it is extremely resource- and time-intensive, and therefore costly. Moreover, process tracing has been criticised for its reliance on anecdotal data, as a link in the causal chain can be corroborated by relatively weak evidence. Rather than a weakness, however, this is a significant strength: the quality of the evidence is not paramount; what matters is the evidence’s probative value in confirming or refuting a hypothesis (Befani & Stedman-Bryce, 2017). The probative value of each piece of evidence is determined by four empirical tests (straw-in-the-wind, smoking gun, hoop and doubly decisive), which differ in test direction and probative value (see the methodology section for more detail). By classifying evidence through these tests (see below, from Collier, 2011) and then combining the different pieces of evidence, the researcher can make robust causal claims by evidencing every step in a causal chain.

Source: Collier (2011), adapted from Bennett (2010).
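To make the logic of the four tests concrete, the Python sketch below encodes the framing summarised by Collier (2011), in which each test is defined by whether passing it is necessary and/or sufficient for affirming a causal inference. The enum, the function names and the one-line readings of each outcome are our own illustrative simplification, not a published toolkit.

```python
from enum import Enum


class EvidenceTest(Enum):
    """The four process tracing tests (Collier, 2011, adapted from Bennett, 2010)."""
    STRAW_IN_THE_WIND = "straw-in-the-wind"
    HOOP = "hoop"
    SMOKING_GUN = "smoking gun"
    DOUBLY_DECISIVE = "doubly decisive"


def classify_test(necessary: bool, sufficient: bool) -> EvidenceTest:
    """Name a test from whether passing it is necessary and/or sufficient
    for affirming the causal inference."""
    if necessary and sufficient:
        return EvidenceTest.DOUBLY_DECISIVE
    if necessary:
        return EvidenceTest.HOOP
    if sufficient:
        return EvidenceTest.SMOKING_GUN
    return EvidenceTest.STRAW_IN_THE_WIND


# Simplified readings of what passing (True) or failing (False) implies for the hypothesis.
READINGS = {
    (EvidenceTest.STRAW_IN_THE_WIND, True): "relevant but not confirmed",
    (EvidenceTest.STRAW_IN_THE_WIND, False): "not eliminated, slightly weakened",
    (EvidenceTest.HOOP, True): "relevant but not confirmed",
    (EvidenceTest.HOOP, False): "eliminated",
    (EvidenceTest.SMOKING_GUN, True): "confirmed",
    (EvidenceTest.SMOKING_GUN, False): "not eliminated, slightly weakened",
    (EvidenceTest.DOUBLY_DECISIVE, True): "confirmed, rivals eliminated",
    (EvidenceTest.DOUBLY_DECISIVE, False): "eliminated",
}


def interpret(necessary: bool, sufficient: bool, passed: bool) -> str:
    """Combine the test type with the test outcome into a short reading."""
    test = classify_test(necessary, sufficient)
    return f"{test.value}: hypothesis {READINGS[(test, passed)]}"


for necessary in (False, True):
    for sufficient in (False, True):
        for passed in (True, False):
            print(interpret(necessary, sufficient, passed))
```

The asymmetry is the point: a hoop test can only eliminate, a smoking gun can only confirm, and only a doubly decisive test does both.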

Finally, it has been argued that there is insufficient guidance on dealing with rival hypotheses that are not mutually exclusive. Competing explanations can relate to the primary hypothesis in various ways, each with different implications for drawing causal inferences (Zaks, 2017). Assuming that rival hypotheses are mutually exclusive is not realistic and can lead researchers to hastily rule out other valid explanations that may operate alongside the main hypothesis and contribute to the same outcome through different mechanisms. Hence, researchers ought to gather evidence for the main hypothesis and for rival hypotheses in parallel. When two hypotheses can simultaneously bring about an outcome, one cannot assume that evidence for the main hypothesis automatically invalidates the rival hypothesis. Researchers should therefore account for the implications that different types of rival hypothesis have for the main hypothesis, and for how these relationships affect judgements on the final causal inferences, as illustrated in the case study by Tamm and Duursma (2023).

Applying process tracing to attribute change in complex tourism interventions

Tourism interventions fall under the umbrella of complex social interventions and, as such, should be evaluated with appropriate methods (Dredge & Jenkins, 2011; O’Sullivan et al., 2009). The dearth of knowledge about the actual outcomes and efficacy of tourism interventions has been linked to the scarcity of effective evaluation methods (Airey, 2015; OECD, 2020). Claiming causal attribution in complex social interventions and linking interventions to change is challenging (Moumoutzis & Zartaloudis, 2016), because stimuli (in the form of activities, investments, partnerships, subsidies, etc.) are often implemented to enhance or promote policies already pursued at a national and/or local level. Because of this overlap, it is difficult to show that a set of outcomes would not have occurred anyway without that particular intervention (Airey, 2015; Stevenson et al., 2009).

Tourism interventions often operate in parallel with many other policy interventions, making it difficult to identify which intervention led to specific impacts. Circumventing causal attribution through overly complicated statistical methods is not effective when dealing with complexity in the social world (Stern et al., 2012), and such methods often fail to tackle the problem of equifinality (Schimmelfennig, 2015), i.e. the fact that many different pathways can result in the same outcome. Process tracing, instead, is an analytical tool for empirically evidencing a causal chain step by step, exposing the pathway that led to the outcome of interest (Collier, 2011).

The key intervening forces causing change in publicly funded tourism interventions are often hidden at various nested hierarchical levels (Falleti & Lynch, 2009; Kay & Baker, 2015). To detect which change is attributable to a specific intervention, it is vital to implement a methodology that pieces together all the small changes along the way that constitute the path leading to overall change or impact. Changes in the capacity of partners, enhanced commitment of local government and private stakeholders, and even the degree of participation in meetings and engagement in communication are all elements that can help trace and attribute change to a specific intervention (Ashley & Mitchell, 2008). A final point is that the data available to measure change are often poor and fragmented (Ashley & Mitchell, 2008; Kay & Baker, 2015). Process tracing can draw together qualitative and quantitative evidence, thus overcoming many data availability challenges (Ricks & Liu, 2018). By scrutinising evidence, the researcher can formulate theoretically informed, empirically evidenced, mechanism-based causal claims about impact (Bennett & Checkel, 2015), significantly improving the theorisation of programme theory (Trampusch & Palier, 2016). Process tracing allows the researcher to: 1) trace the steps within a process leading to change; 2) gather and classify evidence for each of those steps; and 3) explain how these steps constitute an overall process that contributed to an outcome. The evidence gathered through this approach is not evidence of impact per se, but evidence for attributing the impact of an intervention towards a desired outcome.

This study showcases how the method can benefit the evaluation of complex, multi-layered tourism interventions, where data are fragmented and the boundaries between intervention-specific causal forces and other external (national and local) influences are extremely blurred.

Method

We position ourselves alongside a growing number of scholars arguing for wider use of theory-based methods to evaluate social interventions (Baggio, 2020; Byrne, 2013; Stern et al., 2012; Twining-Ward et al., 2018). Following guidance on evaluation methods provided by HM Treasury (2020), we opted for theory-based evaluation, applying process tracing to the impact evaluation of a complex tourism intervention. Process tracing should not be understood as a panacea for methods struggling to address causation. Beach (2016) has gone as far as suggesting that, although powerful in what it does, it is a limited methodological tool. Conscious of some of its limitations, we evaluated an EU-funded, cross-border €24.5m project called EXPERIENCE, to our knowledge one of the largest tourism projects ever funded by the European Commission. Spanning six pilot regions across the Channel in France and England, the project involved fourteen project partners (seven councils, four destination management organisations, a charity, a private organisation and a university). The aim was to reduce seasonality in the pilot regions by introducing sustainable, low-season tourism experiences to attract visitors year-round. Each pilot region deployed project funds to deliver activities such as 627 training workshops for tourism and atypical actors, consumer testing of marketing materials, 26 infrastructure refurbishments to increase accessibility, the design of 622 new itineraries (206 of which were accessible to users with disabilities), business-to-consumer (B2C) testing of the accessibility of products and itineraries, and accessibility-friendly campaigns.

All activities were aimed at the overall objective of adapting existing natural and cultural assets to the low season and creating a more experiential and accessible tourism offer. The project, funded by a supranational body, the EU (macro level), was implemented by six regional councils and three Destination Management Organisations (DMOs) (meso level), involving small and medium-sized enterprises (SMEs), individual artists and artisans, accessibility groups and others (micro level). The mechanisms leading to change were nested at different hierarchical levels, and process tracing allowed us to analyse all the pieces of evidence closely and discern the degree of change attributable to the project (Falleti & Lynch, 2009; Kay & Baker, 2015). In this sense, process tracing proved useful in discerning how small incremental changes at the micro project level can have major repercussions at a higher system level (Elsenbroich & Badham, 2023). To exemplify how process tracing works as a mechanism-based evaluation tool, we focus on two of the project’s desired outcomes and describe how we gathered evidence to make causal inferences about them. The purpose of this narrower focus is to explore some key moments in the project and the mechanisms they triggered. To maintain confidentiality, we anonymised all evidence collected and used to illustrate the causal inferences made in this study.

We discuss how we integrated empirical evidence with discursive evidence to provide a deeper understanding of the intangible impact that increasing the accessibility of tourism products had on visitors and locals within the pilot region, and to go beyond an output-focused understanding of impact. In line with Beach (2016), we evaluated the available evidence in three steps: (1) we predicted what empirical evidence a mechanism would leave; (2) we collected empirical evidence and assessed whether the predicted evidence was found; and (3) we evaluated whether the evidence found was reliable enough to make causal inferences, as described below.
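The three steps can be sketched as a simple comparison between predicted and collected evidence. In this Python sketch, the mechanism, the predicted traces and the `reliable` flag are hypothetical placeholders (the first prediction paraphrases the website example discussed in the results); matching evidence by exact label is a deliberate simplification of what, in practice, is a qualitative judgement.

```python
from dataclasses import dataclass


@dataclass
class Mechanism:
    """A hypothesised step in the causal chain (step 1: predicted ex ante)."""
    description: str
    predicted_evidence: list[str]  # observable traces the step should leave


@dataclass
class EvidenceItem:
    """A piece of evidence actually collected (step 2)."""
    label: str
    reliable: bool  # step 3: judged reliable enough to support inference


def assess(mechanism: Mechanism, collected: list[EvidenceItem]) -> dict[str, list[str]]:
    """Compare predicted traces with collected evidence, matching on label."""
    by_label = {item.label: item for item in collected}
    result: dict[str, list[str]] = {"supported": [], "unreliable": [], "missing": []}
    for trace in mechanism.predicted_evidence:
        item = by_label.get(trace)
        if item is None:
            result["missing"].append(trace)       # predicted but not found
        elif item.reliable:
            result["supported"].append(trace)     # found and reliable
        else:
            result["unreliable"].append(trace)    # found but too weak to use
    return result


# Hypothetical example: names and traces are illustrative only.
training_works = Mechanism(
    description="training incentivises SMEs to make products more accessible",
    predicted_evidence=[
        "accessible products showcased on regional website",
        "SMEs request one-to-one accessibility consultations",
    ],
)
collected = [EvidenceItem("accessible products showcased on regional website", reliable=True)]
print(assess(training_works, collected))
```

A "missing" trace does not by itself refute the mechanism; how much it counts depends on which test the prediction corresponds to.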

Theorising mechanisms

We started by predicting the mechanisms expected to trigger change (Beach, 2016). Before gathering any evidence, it is useful to predict what kind of evidence should exist if the activities and resources implemented “work” as expected in bringing about the desired change. In line with previous studies (Mori, 2021; Wadeson et al., 2020), we carried out this “theory building” process and predicted mechanisms through the co-design of a project-wide theory of change (see Montano et al., 2023), a causal graph that visually represents how and why change is expected to happen. By connecting the resources and activities planned by all project partners to the desired outcomes, we were able to exploit the predictive element of a theory of change and theorise ex ante the mechanisms expected to trigger the desired change. Building on the assumptions made in the Theory of Change, we used process tracing to trace observable, and therefore testable, implications of the reality theorised in the Theory of Change (Befani & Stedman-Bryce, 2017), i.e. whether and how the project’s resources and activities generated the predicted outcomes.
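A theory of change of this kind can be represented as a directed graph, in which every path from a resource or activity to an outcome is a candidate causal chain whose links must each be evidenced. The sketch below assumes a small, hypothetical graph loosely based on the accessibility pathway described in this paper; it is not the project’s actual Theory of Change.

```python
from collections.abc import Iterator

# Hypothetical, simplified Theory of Change: each node lists the nodes it is
# expected to influence. Names are illustrative, not the project's actual ToC.
THEORY_OF_CHANGE: dict[str, list[str]] = {
    "project funds": ["SME training and mentoring", "infrastructure adjustments"],
    "SME training and mentoring": ["dialogue with access groups"],
    "dialogue with access groups": ["SMEs rethink accessibility"],
    "SMEs rethink accessibility": ["increased accessibility"],
    "infrastructure adjustments": ["increased accessibility"],
    "increased accessibility": [],
}


def pathways(graph: dict[str, list[str]], start: str, goal: str,
             path: tuple[str, ...] = ()) -> Iterator[list[str]]:
    """Yield every acyclic path from start to goal by depth-first search."""
    path = path + (start,)
    if start == goal:
        yield list(path)
        return
    for nxt in graph.get(start, []):
        if nxt not in path:  # skip nodes already on the path (avoids cycles)
            yield from pathways(graph, nxt, goal, path)


def links(path: list[str]) -> list[tuple[str, str]]:
    """Each consecutive pair is a causal link that needs its own evidence."""
    return list(zip(path, path[1:]))


for chain in pathways(THEORY_OF_CHANGE, "project funds", "increased accessibility"):
    print(" -> ".join(chain))
    print(f"  links to evidence: {len(links(chain))}")
```

Enumerating pathways this way makes equifinality explicit: the same outcome can be reached by more than one chain, and each chain has to be traced separately.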

Gathering evidence

The data constituting the pieces of evidence were collected across the lifespan of the project (approximately three years: January 2020 to January 2023) and were extremely diverse. No formal interviews were conducted. Instead, data were gathered progressively through observation, participation in project-wide steering group meetings and periodic one-to-ones with individual partners. Evidence included project documentation such as activity logs, meeting minutes and documentation of resources invested; results from monthly surveys run during the project to monitor the performance of businesses in the region; and more tangible results such as the timing of infrastructure works and art installations funded by the project. Most evidence was uploaded by partners to an online project management platform that all project partners (including ourselves) could access. This was a project requirement and a way for us to centralise data from all partners. We obtained discursive evidence by participating in business networking events, steering group meetings and product testing organised by the partners. Evidence such as feedback from users and businesses, comments from implementers and quotes from meeting minutes was anonymised and used to trace the mechanisms causally connecting project activities to outcomes.

Being part of the evaluation team from the very beginning of the project’s implementation put the researchers in a privileged position to gain an extensive understanding of the context in which the project was implemented, and to track and gather a varied array of evidence of incremental change. Whilst some may argue that our evaluation could be biased because we were internal to the project, we emphasise that our role was to guide and support project partners in their evaluation endeavours, and that the final evaluation was validated externally by the funder (i.e. the European Commission). We describe our position as “privileged” because our role was to support partners in finding the most appropriate evidence of impact, not to judge their performance. We succeeded in establishing a continuous dialogue that fostered a healthy evaluation environment in which transparency, trust and collaboration were central.

Assessing the value and classifying available evidence

We classified each piece of evidence according to the framework used in the recent NAMA evaluation report (Mori, 2021), which adapts the framework of Delahais and Toulemonde (2017) and categorises evidence according to whether it is (a) from an authoritative source; (b) a signature piece of evidence, i.e. if X caused Y, it left this piece of evidence as a particular trace; (c) a convergent triangulated source, i.e. two sources of data that are independent of one another, originating from parties with differing interests, yet converge and thereby reinforce each other’s credibility; and (d) chronologically consistent. This classification also helped the research team decide whether evidence was reliable before making any causal inferences (e.g. when evidence came from an authoritative or triangulated source). To strengthen transparency and reduce bias, we cross-checked the evidence classification amongst the team of researchers.
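The four criteria lend themselves to a simple coding record. The sketch below assumes a binary score per criterion and one possible decision rule for reliability (authoritative or triangulated, and chronologically consistent); the rule, the `disagreements` helper for cross-checking two researchers’ codes and the example items are hypothetical illustrations rather than the NAMA framework itself.

```python
from dataclasses import dataclass

CRITERIA = ("authoritative", "signature", "triangulated", "chronological")


@dataclass(frozen=True)
class Evidence:
    """One piece of evidence coded on four criteria (after Mori, 2021)."""
    label: str
    authoritative: bool  # (a) comes from an authoritative source
    signature: bool      # (b) a trace X would leave if it caused Y
    triangulated: bool   # (c) converges with an independent source
    chronological: bool  # (d) consistent with the order of the causal chain

    def criteria_met(self) -> list[str]:
        return [name for name in CRITERIA if getattr(self, name)]

    def is_reliable(self) -> bool:
        # Assumed decision rule for illustration, not taken from the framework.
        return (self.authoritative or self.triangulated) and self.chronological


def disagreements(coder_a: Evidence, coder_b: Evidence) -> list[str]:
    """Criteria on which two researchers coded the same item differently."""
    return [name for name in CRITERIA if getattr(coder_a, name) != getattr(coder_b, name)]


# Hypothetical items and codes, for illustration only.
document = Evidence("official project document", True, False, False, True)
comment_a = Evidence("informal comment at a meeting", False, False, True, True)
comment_b = Evidence("informal comment at a meeting", False, False, False, True)

print(document.criteria_met(), document.is_reliable())
print(comment_a.is_reliable(), comment_b.is_reliable())
print(disagreements(comment_a, comment_b))
```

Listing disagreements criterion by criterion gives the team a concrete agenda for resolving coding differences before any causal inference is drawn.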

Next, the evidence was subjected to the four empirical tests: “Straw-in-the-Wind,” “Smoking Gun,” “Hoop” and “Doubly Decisive.” Each test has a different probative strength and confirmation/disconfirmation relationship to a hypothesis. Collier (2011) provides an overview of the tests and their implications for the strength of the evidence in confirming or disconfirming not only its link to a particular outcome but also the robustness of the causal attribution. For example, “Straw-in-the-Wind” evidence is weak and circumstantial, contributing only marginally to our belief or disbelief in a hypothesis. Failing a Hoop test strongly disconfirms a hypothesis, whereas passing it only weakly confirms the hypothesis and only slightly weakens rival hypotheses. Passing a Smoking Gun test strongly confirms a hypothesis, but failing it does not eliminate the hypothesis, and passing it weakens rather than excludes rival hypotheses. Finally, passing a Doubly Decisive test strongly confirms a hypothesis and excludes all rival hypotheses; such conclusive proof is difficult to obtain in the social world. Most often, process tracing combines pieces of evidence corresponding to the other three tests, so that their synthesis results in a clearly evidenced overall picture.
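The probative value of the tests can also be expressed with Bayes’ theorem, in the spirit of the Bayesian formalisation of process tracing discussed by Befani and Stedman-Bryce (2017). The sketch below assumes two quantities per test: the probability of finding the evidence if the hypothesis is true (sensitivity) and if it is false (type I error). The numbers are illustrative assumptions chosen to reproduce each test’s characteristic shape, not values from the EXPERIENCE evaluation.

```python
def update(prior: float, sensitivity: float, type_one_error: float, found: bool) -> float:
    """Posterior confidence in hypothesis H after looking for evidence E.

    sensitivity    = P(E | H): chance of finding E if H is true
    type_one_error = P(E | not H): chance of finding E if H is false
    """
    if found:
        p_e_h, p_e_not_h = sensitivity, type_one_error
    else:
        # Not finding E is itself evidence, with complementary likelihoods.
        p_e_h, p_e_not_h = 1 - sensitivity, 1 - type_one_error
    numerator = prior * p_e_h
    return numerator / (numerator + (1 - prior) * p_e_not_h)


# Illustrative (assumed) likelihoods that give each test its characteristic shape.
TESTS = {
    "straw-in-the-wind": (0.6, 0.4),   # weakly informative either way
    "hoop": (0.95, 0.5),              # almost certain under H, common otherwise
    "smoking gun": (0.3, 0.02),        # uncommon under H, very rare otherwise
    "doubly decisive": (0.95, 0.02),   # both certain and unique
}

for name, (sens, t1) in TESTS.items():
    passed = update(0.5, sens, t1, found=True)
    failed = update(0.5, sens, t1, found=False)
    print(f"{name:18} pass -> {passed:.2f}   fail -> {failed:.2f}")
```

With a neutral prior of 0.5, failing the hoop test lowers confidence to about 0.09, whereas passing it raises confidence only to about 0.66; passing the smoking gun raises confidence to about 0.94, whereas failing it only lowers confidence to about 0.42.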

Finally, as a research team of four and in collaboration with the project implementers, we considered the existence of rival hypotheses and gathered evidence for them. Whilst process tracing does not use a control group to make causal inferences, it is crucial to consider alternative explanations that may have led to a particular outcome. If these rival explanations do not hold, the primary hypothesis is further strengthened. If instead a rival explanation cannot easily be discarded, further evidence should be gathered to dismiss it in order to make robust causal claims about impact and attribution (Ricks & Liu, 2018). As mutually exclusive explanations are exceedingly rare (Zaks, 2017) and multiple hypotheses seemed plausible, our internal role within the project gave us a deeper understanding of the project’s mechanisms, which allowed us to weigh evidence against rival hypotheses and judge which best explained the outcome being tested.

Results

Theorising how interventions work: process tracing and process theories of change

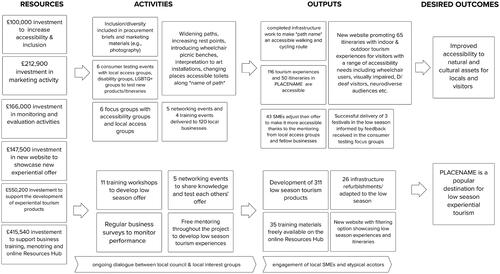

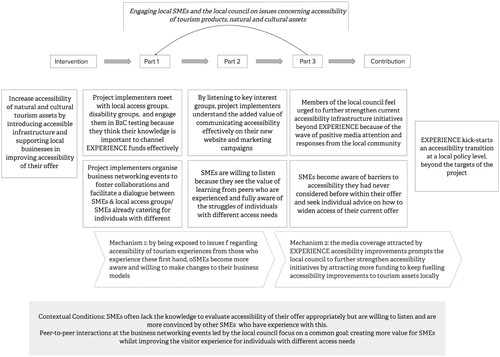

We present the empirical evidence we gathered and classified through a process tracing approach to answer two key questions: (a) why entities in receipt of an intervention do what they do; and (b) how the actions and interactions amongst entities are causally linked to the EXPERIENCE project. Following the three steps described above, this involved (1) defining the intervention and the actors involved; (2) theorising the potential contribution pathways and the mechanisms predicted to underpin them; and (3) unpacking the process through the classification and analysis of empirical evidence explaining the mechanisms at play. The theorised Theory of Change (see Montano et al., 2023, for more details), co-designed with the project partner of one pilot region, covers steps 1 and 2. It visually represents how project implementers expected the intervention, through the activities funded by the project, to unfold and contribute towards the desired outcomes. This ex-ante theorising process is pivotal, as knowing approximately what to look for helps anticipate the type of evidence we can expect to find when tracing the process.

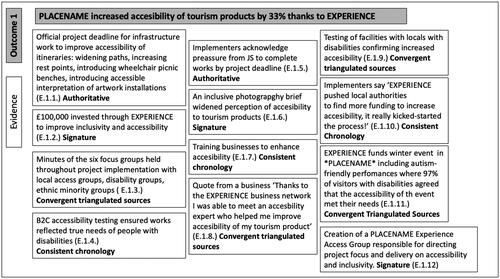

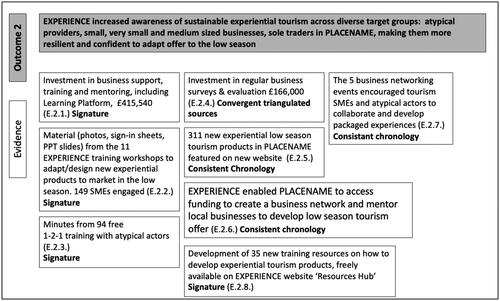

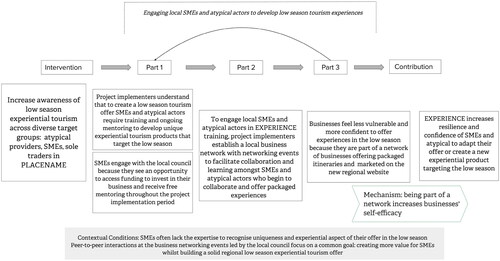

The Theory of Change emphasises the resources and activities expected to bring about change in theory, but it does not clearly explain why entities respond to the intervention in a particular way. Whilst theorising the process in a participatory manner with project implementers helped us gain an in-depth understanding of the contextual features of the intervention and of what happened, process tracing helped the research team identify specific causal mechanisms within the intervention that may be generalisable. Once we had established what change happened, we identified the causal chain linking project activities, such as training and mentoring of businesses, to the desired outcome (improving accessibility). We also followed a rival hypothesis, which prompted us to investigate whether this change would have occurred regardless of EXPERIENCE, and gathered evidence to test it. Figures 2 and 3 explain this chain of events in detail and unpack the process through which the activities initiated by EXPERIENCE, as detailed in the Theory of Change, contributed to the desired outcomes (step 3). They set out the process theory of key moments, linking entities (who), activities (what) and causal principles (why) to EXPERIENCE’s contribution towards the desired outcomes. In particular, the two figures highlight which actors were involved in the change and how their reactions to the intervention and their interactions with each other led to the desired outcome.

Figure 2. A process theory for the engagement of SMEs and local policy actors in improving accessibility. (Author’s own, 2023, adapted from Camacho Garland & Beach, 2023).

Figure 3. A process theory for the engagement of SMEs and atypical actors for the development of a low season tourism offer. (Author’s own, 2023, adapted from Camacho Garland & Beach, 2023).

Designing a new website or allocating funding for training to improve accessibility and combat the seasonality of a destination’s tourism offer does not by itself make the pilot region’s natural and cultural assets accessible or attractive in the low season. Figures 2 and 3 outline how behaviour change in the relevant actors was triggered by key events within the intervention and by entities’ responses to these project activities. For instance, the positive response to establishing a dialogue with local access and disability groups led SMEs to rethink the accessibility of their products. The dialogue and interaction between these two entities is the key element to preserve for future replication of the intervention. The key to transferring the intervention successfully lies in understanding that, for SMEs to be motivated to improve accessibility, ongoing dialogue and mentoring with accessibility groups, together with one-to-one consultations with SMEs already catering for individuals with different access needs, are crucial elements of the change process. Our findings show that the SMEs involved in business networking events organised by EXPERIENCE were eager to improve the accessibility of their current offer because learning from individuals who experience first-hand the challenges and frustration caused by unclear information on the accessibility of facilities allowed them to empathise with issues they had not previously considered.

An unexpected knock-on effect and local policy response (not envisaged when theorising change in the Theory of Change) is also shown to result from a behaviour change (a decision to capitalise on improving accessibility) caused by the positive wave of attention generated by the infrastructure adjustments funded by EXPERIENCE (shown in Figure 2). This wider policy impact is an emergent outcome that had not been theorised but for which we found evidence during fieldwork. In this sense, process tracing proved to be an evaluation tool flexible enough to deal with the complexity of emergent outcomes, not merely pre-defined ones.

Similarly, the establishment of a local business network encouraged collaboration amongst SMEs and atypical actors, who felt more confident delivering low-season experiences because EXPERIENCE made them part of a network. The training and mentoring received through project funds were important in developing skills and ideas to adapt or design new products for the low season, but it was being part of a local business network and offering experiences in collaboration with others that encouraged SMEs and atypical actors to invest in targeted low-season experiences.

In the following section we present the evidence gathered for the two outcomes discussed above. We then present the empirical evidence used to confirm or disconfirm the process theory and classify each piece of evidence by its source, following the framework applied in the NAMA evaluation report (Mori, 2021). Finally, we discuss how the evidence supports us in confirming or disconfirming how EXPERIENCE contributed to the desired outcomes. Because of the level of empirical scrutiny required to understand causal linkages, we focus on key events to show the causal chain linking activities and entities within the intervention to the desired outcome.

Evidence for outcome 1: “PLACENAME increased accessibility of tourism products to people with disabilities by 33%”

Increasing the accessibility of tourism products was one of the main pillars of the project; hence, demonstrating that EXPERIENCE was responsible for this change was pivotal for both funders and implementers. Process tracing is particularly well suited to examining this outcome, as it involves several transformative elements necessary to improve accessibility. These include modifications to infrastructure and promotional materials, as well as changes in the attitudes and behaviour of SMEs, which are essential for implementing these changes in their offers. Because these changes manifest in different forms, process tracing enables diverse pieces of evidence to be combined and synthesised into a coherent causal chain of change. Below is a table summarising the available evidence that helps us understand the role of EXPERIENCE in achieving this outcome in X pilot region. When theorising the change and the specific outputs we expected the project to achieve (see the Theory of Change), we also identified the type of evidence we expected to find if the assumptions in the theorised Theory of Change held true. For example, if EXPERIENCE training had succeeded in incentivising SMEs to make their tourism products more accessible, we would expect to see these products, with improved accessibility, showcased on the region’s new website.

We gathered many diverse types of evidence connecting EXPERIENCE to the achievement of this outcome; however, process tracing cannot establish whether these improvements met the 33% project target also identified in . Indeed, the project proposal did not make clear whether the 33% increase applied across the six pilot regions or was an individual target for each pilot region, and mid-way through implementation the lead partner negotiated with the European Commission to change this pre-defined measure. The hard project deadline for making accessibility improvements shows that the very existence of the project contributed to this change happening within a specific timeframe (see E.1.1. in ). This piece of evidence came from an authoritative source, namely an official project document accepted by the funders, and is reinforced by the pressure to complete the works on time, which the local council implementing the project acknowledged during one of our many meetings (E.1.5.).

The hard project deadline is further supported by evidence of investments made by the local council from EXPERIENCE funds specifically on accessibility-related activities (E.1.2.), and by the creation of a dedicated EXPERIENCE Access Group within the local council (E.1.12.). The investments to increase accessibility were classified as signature evidence because their outcomes are visible on the various sites where adjustments have taken place. Paired with evidence of funding, these signature pieces of evidence alone show a connection between EXPERIENCE and the achievement of this outcome. When further paired with E.1.3. and with the business-to-consumer testing (E.1.4.), which took place during project implementation to ensure accessibility improvements met user requirements, they form a strong causal narrative that allows us to trace the process by which these project activities improved accessibility.

Attention must also be paid to the discursive pieces of evidence, which provide a better understanding of the positive knock-on effects of the project’s activities, i.e. the mechanisms created by the project’s infrastructure adjustments. The testimonial of a business (E.1.8.) that improved the accessibility of its tourism offer as a result of attending the training and mentoring funded by EXPERIENCE (E.1.7.), and the accounts of locals and visitors with disabilities who enjoyed better access to outdoor experiences in areas where EXPERIENCE funds had been devolved to improve accessibility (E.1.9.), play a significant role in tracing a causal narrative of the project’s more intangible impacts. Moreover, E.1.11., classified as consistent chronology evidence, shows how feedback from the ongoing dialogue with local access groups led project implementers to improve the design and accessibility of a pre-existing local winter festival by incorporating autism-friendly performances for the first time. Visitor survey responses from the event highlighted the improvement in accessibility. Discursive evidence from convergent, triangulated sources is particularly important: documenting that the project “worked” in delivering infrastructure adjustments on time is arguably common sense, whereas the discursive evidence we found allows us to understand the process that brought about this positive change and the wider impacts it produced. Feedback from users with disabilities shows that by working alongside those who stood to benefit from the project’s investments, the local council was able to better meet the needs of people with greater accessibility requirements and to communicate accessibility more effectively (E.1.6.). In addition, the creation of a network of local businesses allowed them to learn from one another’s best practices to enhance the accessibility of their products.

Testing outcome 1

The strength of each piece of evidence was determined through four empirical tests. Table 1 summarises how we classified the evidence for outcome 1. Evidence E.1.1. and E.1.5. were classified as “smoking gun” because the existence of an official hard deadline for completing the works, together with the pressure from the funders acknowledged by the local council, strongly suggests that the change was funded and delivered on time because of EXPERIENCE. We classified E.1.3., E.1.6., E.1.8. and E.1.10. as “straws in the wind” because, individually, they are weak, primarily discursive pieces of evidence that are not sufficient to claim causal attribution. Nevertheless, this type of evidence is particularly useful for integrating quotes and feedback from project recipients. It further corroborates, and provides context for, the hard pieces of evidence such as the project deadline or the use of funds, particularly when it comes from convergent, triangulated sources. When we asked project implementers what role the project played in achieving outcome 1, one commented:

Table 1. Testing outcome 1 (Author’s own, 2023).

“Once EXPERIENCE funds enabled the infrastructure investments to go ahead, it kind of gathered its own momentum. Once works were complete and already during the testing, we received many emotional stories and positive feedback from families and users with disabilities which had been on site and seen the accessibility adjustments. The flood of positive feedback is now pushing the local authorities to attract more funding to keep moving forward and enhancing accessibility! EXPERIENCE really kick-started this process.” (E.1.10.)

This piece of discursive evidence captures what Collier (2011) refers to as a snapshot of a specific moment: a pivotal intermediate step within the process that allows us to trace a mechanism within the project which generated the outcome of interest. Another such snapshot emerges when E.1.3. and E.1.4. are analysed together. From E.1.3. we know that the local council organised six focus groups and several informal 1-2-1s to collect feedback from local accessibility groups and individuals with disabilities. These consultations aimed to improve the planning of infrastructure works, training materials and EXPERIENCE-funded local events so as to increase the accessibility of tourism experiences in the region. Whilst E.1.3. suggests that the local council invested time and effort in understanding the needs of people with disabilities, on its own it is not strong enough to attribute subsequent change to EXPERIENCE. However, when it is paired with E.1.4., E.1.11. and E.1.12., which show not only that business-to-consumer accessibility testing was carried out during the project but also that feedback fed into the design of an event targeting specific accessibility requirements, the causal narrative becomes stronger. Alongside these pieces of evidence, E.1.9. provides a valuable “hoop” test: had we not found evidence that people with disabilities were enjoying better access to sites and experiences thanks to the infrastructure adjustments funded by the project, we would have had no evidence that EXPERIENCE contributed to achieving this outcome.
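The four tests used above can be organised, following the two-by-two table in Collier (2011), by whether passing a test is necessary and/or sufficient for affirming a causal inference. The Python sketch below encodes that table purely as a reading aid. It assumes Collier's typology; the names `Test`, `classify` and `implication` are hypothetical labels of our own rather than part of any tool used in this evaluation, and the dictionary only restates classifications reported in the text for outcome 1.

```python
from enum import Enum


class Test(Enum):
    """The four process-tracing tests, following Collier's (2011) typology."""
    STRAW_IN_THE_WIND = "straw in the wind"
    HOOP = "hoop"
    SMOKING_GUN = "smoking gun"
    DOUBLY_DECISIVE = "doubly decisive"


def classify(necessary: bool, sufficient: bool) -> Test:
    """Place a test in the 2x2 table: is passing it necessary and/or
    sufficient for affirming the causal inference?"""
    if necessary and sufficient:
        return Test.DOUBLY_DECISIVE
    if necessary:
        return Test.HOOP
    if sufficient:
        return Test.SMOKING_GUN
    return Test.STRAW_IN_THE_WIND


def implication(test: Test, passed: bool) -> str:
    """Paraphrase of what passing or failing each test means for the hypothesis."""
    if passed:
        return {
            Test.STRAW_IN_THE_WIND: "affirms relevance but does not confirm",
            Test.HOOP: "affirms relevance but does not confirm",
            Test.SMOKING_GUN: "confirms the hypothesis",
            Test.DOUBLY_DECISIVE: "confirms the hypothesis and eliminates rivals",
        }[test]
    return {
        Test.STRAW_IN_THE_WIND: "weakens slightly but does not eliminate",
        Test.HOOP: "eliminates the hypothesis",
        Test.SMOKING_GUN: "weakens somewhat but does not eliminate",
        Test.DOUBLY_DECISIVE: "eliminates the hypothesis",
    }[test]


# Illustrative only: restates classifications reported in the text for outcome 1.
outcome_1 = {
    "E.1.1.": Test.SMOKING_GUN,
    "E.1.5.": Test.SMOKING_GUN,
    "E.1.3.": Test.STRAW_IN_THE_WIND,
    "E.1.10.": Test.STRAW_IN_THE_WIND,
    "E.1.9.": Test.HOOP,
}

if __name__ == "__main__":
    for label, test in outcome_1.items():
        print(label, test.value, "->", implication(test, passed=True))
```

The asymmetry the table captures is the point of the classification: a failed hoop (as E.1.9. would have been had no such evidence been found) eliminates the hypothesis, whereas a failed smoking gun only weakens it.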

We also considered rival hypotheses in relation to outcome 1 and gathered evidence to test their plausibility. We checked whether other factors may have contributed to this outcome and whether plans to increase accessibility locally were already in place alongside EXPERIENCE. Through desk research and conversations with members of the council implementing the project, we found an official document, “Sustainable Tourism Strategy for *PLACENAME* 2016-2020”, which mentioned targeting visitors with different accessibility requirements. However, this document gave no details of how this market would be targeted or of what investments were planned to make tourism facilities and cultural landscapes more accessible. In addition, members of the council had previously told us that the project had kick-started the process and motivated the council to seek additional funding to keep promoting and increasing accessibility. This evidence prompts two reflections. First, if the local authorities had been taking the accessibility of tourism assets seriously before EXPERIENCE, their 2016-2020 strategy document would have included a clear outline of actions and of funding devolved to making *PLACENAME* more accessible, and thus more attractive to people with different access needs. Second, the evidence for the primary hypothesis, particularly the claims by project implementers (members of the local council) that the council had not really addressed accessibility barriers before EXPERIENCE, significantly undermines the rival hypothesis and thus strengthens confidence in the primary hypothesis.

Evidence for outcome 2: “EXPERIENCE increased awareness/engagement to sustainable experiential tourism across diverse target groups: atypical providers, very small businesses, small/medium enterprises, sole traders in *PLACENAME*, making them more resilient and confident to open in the low season”

The second outcome we tested relates to how EXPERIENCE encouraged local businesses to adapt their current offer into experiential tourism activities targeting visitors in the low season (see below). The evidence we gathered suggests that a) some atypical actors began to turn their products into experiences; and b) businesses began collaborating with one another thanks to the creation of a local business network. The results reveal a consistent chronology in which these changes followed the investment of EXPERIENCE funds (E.2.1.) in training and 1-2-1 mentoring for local businesses and atypical actors (e.g. artists, artisans, foragers). Of the 120 businesses engaged, we found evidence that 116 successfully adapted their offer to create a tourism product viable in the low season (E.2.2.), as well as evidence of a local business network that facilitated collaboration and the creation of packaged experiences (E.2.7.).

The consistent chronology evidence (E.2.2.; E.2.6.) shows that during the project, 120 businesses attended EXPERIENCE training and accessed the mentoring programme funded by the project. Following these activities, we have signature evidence of the creation of new tourism experiences (E.2.5.), which also feature on the new website mentioned in outcome 1. Whilst we cannot assert whether businesses and atypical actors would have developed these new experiences without EXPERIENCE, the consistent chronology evidence (E.2.2.; E.2.6.), paired with the resources invested in training (E.2.1.; E.2.3.; E.2.4.) and the subsequent development of new experiences targeting the low season (E.2.5.), suggests a strong causal link between EXPERIENCE and the achievement of outcome 2. We also found evidence that the official destination website had been redesigned to include imagery of the low-season tourism offer, as well as a filter showing only experiences available in the low season. In addition, the project funded a new marketing campaign showcasing both winter and summer experiences at the destination. The results also reveal a positive unintended consequence of the project. To meet project requirements and coordinate the creation of 311 new tourism experiences (E.2.5.), the project implementers had to create a local business network, which did not exist before the project. As a result, businesses were able to connect and collaborate to create packaged experiences (E.2.7.).

Testing outcome 2

Testing outcome 2 was more challenging because of the nature of the impact we were aiming to trace. An increase in the confidence of non-tourism businesses to offer tourism experiences, or of existing tourism businesses to adapt their offer to the low season, is harder to evidence because this type of impact is in many respects intangible, and change may not occur, or be visible, straight away. Admittedly, for outcome 1, simply upgrading infrastructure is not enough to claim that the project succeeded in increasing the accessibility of the tourism offer in X destination; nevertheless, outcome 1 is far more tangible to test than outcome 2, because visible changes to infrastructure at certain sites allow us to construct a stronger causal narrative. Despite this, we were able to gather evidence which, taken together, allows us to test outcome 2 and identify merits attributable to EXPERIENCE (see Table 2).

Table 2. Testing outcome 2 (Author’s own, 2023).

Evidence of the resources invested in training and mentoring for businesses (E.2.1.), paired with feedback from businesses that took part in the EXPERIENCE training and mentoring programme offered by the council (E.2.2.), provides clues which, pulled together, allow us to trace a significant causal narrative. E.2.1. is a valuable hoop piece of evidence, because failing to find any evidence of project funds invested to support local businesses in achieving this outcome would have ruled out EXPERIENCE as responsible for any of the change that occurred. However, reporting on the use of project resources is never enough on its own to account for impact. In this sense, the evidence classified as “straws in the wind” (E.2.2.; E.2.6.) again provides snapshots of moments in the process. For example, shortly after the project’s training, we found evidence that some businesses had produced low-season photography for their offer, or that, instead of closing in the winter, they had adapted their high-season offer to the low season, for instance by combining an autumn-themed sailing experience with mulled wine and a cosy afternoon tea. These pieces of evidence strengthen the causal narrative about the impact of the training and mentoring on those involved, particularly because the feedback comes from convergent, triangulated sources.

“Smoking gun” evidence is more robust and increases confidence in an outcome significantly. For outcome 2 we classified four pieces of evidence as smoking gun (see Table 2). Interestingly, only E.2.3. fully passed the smoking gun test. E.2.3. suggests that the new or adapted low-season experiences were all offered by businesses or atypical actors with some affiliation to EXPERIENCE. E.2.4. would have been a strong piece of smoking gun evidence for monitoring the change attributable to the project’s training and mentoring; however, when we asked to see the results, project implementers had, at the time of writing, discontinued the business surveys. A business survey was planned for distribution towards the end of the project, but we were not able to assess this evidence, so the test failed. E.2.8. is another conflicting piece of evidence. Whilst the 35 training materials were indeed uploaded by the end of the project to meet the required target, the research team was aware that the “Resources Hub” that should have hosted them only came into existence towards the very end of the implementation period, ruling out any meaningful contribution of the online resources to the achievement of outcome 2. Had they been uploaded in line with the initial timeline, E.2.8. could have passed a strong smoking gun test, but the inconsistency in the timeline meant that it failed and decreased our confidence in outcome 2.
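The article applies the tests qualitatively. One common way of making the same logic explicit, which this evaluation did not itself use, is Bayesian updating: a test's strength is expressed as the probability of finding the evidence if the hypothesis H is true, P(E|H), and if it is false, P(E|not H). The sketch below assumes that framing; the function name `update` is our own, and every probability in it is a hypothetical value chosen for illustration, not an estimate from the EXPERIENCE evaluation. It shows why failing a smoking gun test, as happened with E.2.4. and E.2.8., lowers confidence without eliminating the hypothesis, whereas failing a hoop test would be far more damaging.

```python
def update(prior: float, p_e_if_h: float, p_e_if_not_h: float, found: bool) -> float:
    """Return P(H | test outcome) by Bayes' rule.

    prior        -- confidence in hypothesis H before the test
    p_e_if_h     -- P(E | H), the test's certainty (high for hoop tests)
    p_e_if_not_h -- P(E | not H); low values make the test unique (smoking gun)
    found        -- True if the evidence was found (test passed)
    """
    if found:
        like_h, like_not_h = p_e_if_h, p_e_if_not_h
    else:
        # A failed test means observing "not E", so use the complements.
        like_h, like_not_h = 1 - p_e_if_h, 1 - p_e_if_not_h
    numerator = like_h * prior
    return numerator / (numerator + like_not_h * (1 - prior))


# Hypothetical likelihoods (P(E | H), P(E | not H)), for illustration only.
SMOKING_GUN = (0.40, 0.05)  # rarely found unless H is true
HOOP = (0.95, 0.60)         # almost always found if H is true
STRAW = (0.60, 0.40)        # only weakly diagnostic

if __name__ == "__main__":
    prior = 0.5
    print(round(update(prior, *SMOKING_GUN, found=True), 3))   # 0.889
    print(round(update(prior, *SMOKING_GUN, found=False), 3))  # 0.387
    print(round(update(prior, *HOOP, found=False), 3))         # 0.111

    # Three passed straws in the wind, assumed conditionally independent.
    p = prior
    for _ in range(3):
        p = update(p, *STRAW, found=True)
    print(round(p, 3))                                         # 0.771
```

Under these assumed numbers, a failed smoking gun lowers confidence from 0.5 to about 0.39, while a failed hoop lowers it to about 0.11. The loop illustrates how several weak but convergent straws in the wind can jointly raise confidence; because triangulated sources are rarely fully independent, that combined effect is likely overstated here.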

Finally, a completely unintended consequence of the project was the creation, from scratch, of a local business network. When participating businesses were asked in a post-training survey how they had benefited from the project’s activities and training, almost all respondents highlighted that connecting with other local businesses and learning from one another through this new business network was a real benefit of the project and a motivation to maintain their affiliation with its activities.

As for outcome 1, we also tested rival hypotheses for outcome 2. By talking to the council team implementing EXPERIENCE in *PLACENAME*, we learned that the council had received additional funding from a different Interreg project, “Facet”, which promoted sustainable forms of tourism and focused primarily on increasing the adoption of circular economy solutions among tourism entrepreneurs. Like EXPERIENCE, “Facet” targeted SMEs, which received hands-on support in shifting to more sustainable business models whilst enhancing the visitor experience. Although “Facet” did not aim to combat seasonality in the pilot destination, there was an evident overlap in how the two projects may have contributed to outcome 2, namely by raising tourism entrepreneurs’ awareness of sustainability solutions.

It is reasonable to argue that, although EXPERIENCE activities met the required project outputs, the main and rival hypotheses for this outcome are not mutually exclusive, unlike those for outcome 1. Rather, their relationship resembles what Zaks describes as two congruent inclusive hypotheses: they operate via sufficiently similar mechanisms, but one describes an outcome that is sequentially prior to the other. Indeed, “Facet” ran in PLACENAME from 2016 to 2020, ending in the same year that EXPERIENCE started. This leads us to argue that the primary hypothesis, i.e. that EXPERIENCE increased awareness of and engagement with sustainable experiential tourism across diverse target groups (atypical providers, very small businesses, small/medium enterprises and sole traders in PLACENAME), describes a novel extension of a change already under way. A previous project had laid the groundwork for EXPERIENCE to achieve and build upon a similar outcome. This does not prevent us from drawing causal inferences about the role EXPERIENCE played in contributing to outcome 2, since evidence for one hypothesis does not count against the other. We argue that this does not undermine the validity of EXPERIENCE; rather, it shows how important it is to learn from project mechanisms and to use them as policy tools that capitalise on mechanisms that “work”. Indeed, in a later conversation, the EXPERIENCE project implementers in PLACENAME confirmed that EXPERIENCE was a continuation of the earlier “Facet” project, and in a sense was riding its wave.

Discussion

We now discuss two key lessons from applying process tracing to evaluate a complex tourism intervention, along with some of its limitations. These relate to (1) how process tracing allowed us to better understand how the project worked; and (2) the value of a process-tracing methodology in overcoming data fragmentation.

Discerning project mechanisms

We have stressed the value of theory-based evaluation methods for understanding how project interventions work, in order to support successful policy transfer and improve the design of future interventions (Eckardt et al., 2019; Taplin et al., 2014). Process tracing allowed us to go beyond accounting for what the project achieved (visitor numbers, visitor satisfaction, visitor expenditure…) by unpacking the project mechanisms that acted as catalysts for change, thus tracing how change occurred. As Busetti (2023) discusses, one can never provide definitive answers ex ante as to how and why a given intervention will work. We show how to test causal mechanisms previously hypothesised in a ToC by tracing whether there is within-case, process-related evidence of a theorised mechanism working as expected in the chosen case (Beach, 2018). We illustrate how process tracing allows researchers to listen to the accounts of individuals in implementation roles or in receipt of an intervention, and to use such data as evidence to identify the mechanism linking activities and entities, i.e. what the initiative fires in people’s minds (Pawson & Tilley, 1997, p. 188) to create change.

We went beyond detailing how funders’ money was spent. In line with Pawson and Tilley (1997), we show that it is never simply the investments or activities implemented by a project that bring about change. For example, we learned that being connected with people with special needs, and learning about some of the pain points they experienced first-hand, prompted SMEs to look at their offer and re-evaluate where accessibility could be improved. Whilst finding hard evidence connecting enhanced accessibility to project funding was relatively straightforward, it was the implementers’ acknowledgement of the pressure they were under to demonstrate enhanced accessibility to their funders that allowed us to uncover another important mechanism of the project. Indeed, even if, as discussed by Moumoutzis and Zartaloudis (2016), these infrastructure activities were already being pursued under the local authorities’ existing agenda, our findings, in line with Busetti and Dente (2017), highlight how the very existence of the project and its hard deadline motivated the local council to act, accelerating the accessibility transition so that the deadline could be met.

Being able to use and classify all types of evidence allowed us to trace wider and unexpected impacts (Bennett & Checkel, 2015). For example, a member of the local council revealed that the project’s accessibility investments had knock-on effects, prompting the local authorities to seek further funding. Our findings show how small incremental change at the micro, project level can have major repercussions that gradually effect change at higher policy levels (Elsenbroich & Badham, 2023). In this case, the accessibility adjustments funded by the project attracted attention and fuelled wider change at the local policy level, where further action was taken to attract more funding and build upon what EXPERIENCE had kick-started. This can be considered a positive unintended consequence that triggered further impact, which we would neither have uncovered nor accounted for without a process tracing approach. These considerations lead us back to the value of process tracing for understanding the responses that a project’s activities and resources ignite, and the relationship between activities and entities that brings about change (Pawson & Tilley, 1997).

Correct use of process tracing presupposes an in-depth understanding of the steps and mechanisms that led to the achievement of certain outcomes (Beach, 2016). Whilst evidence of the activities and resources invested by the project gave us the initial clues to demonstrate what had been achieved, drawing together discursive evidence from project implementers and beneficiaries gave us an understanding of the context and of the response triggered by the project’s stimuli. When analysing the evidence for both outcomes, we soon realised that “straw in the wind” evidence, which would not be accounted for in quantitative or experimental approaches to evaluation, was pivotal to unlocking important project mechanisms and deepening our understanding of the process that led to the desired outcomes (Wauters & Beach, 2018).

We acknowledge that process tracing is not a method for measuring the magnitude of impact. As highlighted in the results, one element of outcome 1 that we could not assess using process tracing is whether the change that occurred allowed the pilot region to meet its 33% accessibility target. This is because process tracing assesses whether and how impact was achieved, not how much (Befani & Mayne, 2014). Outcomes are often difficult to measure, which leads evaluators to measure inputs and outputs rather than outcomes (Van der Knapp, 2016). Our findings highlight the importance of selecting evaluation methods that match the complexity of the intervention being evaluated. There was insufficient evidence to measure the extent to which accessibility had improved or whether the expected 33% target had been met. Through process tracing, however, we could show that improved accessibility was supported a) by infrastructure funded by the project, b) by the actions of businesses and c) by the uptake of the new opportunities by people with accessibility needs.

Beyond the methodological implications of adopting a process tracing approach to evaluate tourism interventions, our findings contribute to the current debate on how to increase awareness and widen the participation of people with different access needs in tourism activities. Despite the many public and private initiatives on this topic, studies show that people with different access needs are still less likely to take part in tourism activities because of the many cultural and environmental barriers they face (Agovino et al., 2017). Our findings highlight how crucial it is to work with interest groups and individuals who experience accessibility barriers first-hand in order to raise awareness among businesses offering tourism activities and among policymakers. The small businesses and sole traders involved in EXPERIENCE had not considered the accessibility of their offer beyond wheelchair access, nor had they considered how crucial it is to advertise accessibility clearly on their websites and social media channels. Opening an ongoing dialogue with local access groups was also crucial to anticipate accessibility needs and plan events in which accessibility is woven into the design ex ante (as was done for three EXPERIENCE-funded local winter events), rather than considering how to make an event accessible ex post.

Dealing with data fragmentation

Process tracing was useful in dealing with issues of data fragmentation and data accessibility. Data availability is often hindered by the limited capacity of organisations to collect appropriate data to evidence impacts (Schmitt & Beach, 2015). In our case this issue was exacerbated by the very limited data-collection capacity of local councils, and by the Covid-19 pandemic, whose travel restrictions disrupted our initial plans for data collection. At the start of project implementation, we had planned to collect data regularly from visitors to the newly designed experiences offered by participating businesses. However, the pandemic made it impossible to collect the large volume of quantitative data that should have been used to report on project impacts. This forced the research team to dig deeper in search of other types of evidence that could help us trace impacts to EXPERIENCE. For example, we considered how project impact could be traced through improvements in the self-efficacy of businesses, even where they were unable to collect data from their visitors.

By gathering evidence from different sources and focusing on the relationship between activities and entities, we built a causal narrative even with fragmented data. Because process tracing does not seek patterns of regularity, we focused not on the amount of data but on how each piece of evidence could help us unlock a mechanism that triggered a certain change (Bennett, 2010). Process tracing also proved invaluable for making causal inferences where data from a control group was not available to establish causal attribution (Ricks & Liu, 2018). We found that by closely scrutinising all available data and collating it into a causal narrative through process tracing, we were able to trace mechanisms that were not quantifiable but that nevertheless gave us a richer account of the project’s overall impacts.

However, we must acknowledge that a process tracing approach to evaluation is resource-intensive. Seeking evidence for each outcome, subjecting it to the empirical tests and assessing its causal strength and relevance is time-consuming. Considering rival hypotheses, gathering evidence for them and discerning their relationship to the primary hypothesis is an additional, necessary layer that also requires considerable time. Whilst process tracing can help where data is fragmented, empirical data is still essential, and robust causal claims can only be made with highly reliable and accurate data sources. Although we suggest that researchers should not get bogged down trying to gather as much evidence as possible to explain an outcome, considerable effort is still required to gather empirical data and to assess how strongly each piece of evidence explains an outcome.

A final reflection is that whilst process tracing, with its empirical tests, tries to increase transparency and reduce bias within qualitative evaluation methods, there is a strong element of researcher involvement that cannot be overlooked. Researchers may seek out evidence that confirms their beliefs and simply ignore negative evidence. To mitigate this confirmation bias, we found that the counterfactual reasoning within process tracing pushes the researcher to think of possible rival hypotheses and test their validity. Asking ourselves “what would have happened if the project had not existed?” was a powerful way to challenge our preconceptions and to reduce the number of alternative explanations, thereby reinforcing the robustness of our causal claims. Table 3 summarises the common challenges we encountered and the best practices we identified.

Table 3. Summarising common challenges and best practices when applying process tracing.

Conclusions

The aim of this article was to show how a theory-based approach can provide depth to the impact evaluation of a tourism intervention. We argue that process tracing is a valuable method that can enhance researchers’ existing qualitative evaluation toolbox, allowing them to go beyond simply detailing how, or whether, money was spent to meet project targets, which tells at most a partial story about the impact of a project’s interventions.

Our theoretical contribution is primarily methodological. This is the first article in the field of tourism to detail, step by step, the application of process tracing to evaluate an outcome of a complex tourism intervention. The core argument is that evaluators need to match the right tool to the right task. We have shown that by adopting a theory-based evaluative tool we were able to capture important elements of project mechanisms that other methods would have overlooked. Specifically, we have shown the value of process tracing in treating the entities within a project as active rather than passive. Whilst other conceptualisations offer a rather passive understanding of causality and of the relationship between project activities and entities, process tracing, through the integration of discursive evidence from those implementing and receiving the project, allowed us to account for the active and sometimes unpredictable role people play in delivering change, which other evaluation methods overlook.

Turning to managerial implications, in light of our findings we believe one main issue needs addressing. Those working in the organisations that commission tourism projects and evaluations should be trained in the value that alternative methods of evaluating project impacts can add. If we wish to produce robust and more holistic findings, there needs to be a shift in our understanding of the purpose of evaluations. Whilst process tracing is widely accepted as an evaluation method within development studies, it has so far not been adopted in evaluations of tourism interventions. Understanding how tourism interventions work allows us to better understand why a particular destination became more sustainable than another, or why a destination succeeded in attracting more visitors in the low season, in turn helping us to replicate interventions that worked in similar contexts elsewhere.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Agovino, M., Casaccia, M., Garofalo, A., & Marchesano, K. (2017). Tourism and disability in Italy. Limits and opportunities. Tourism Management Perspectives, 23, 58–67. https://doi.org/10.1016/j.tmp.2017.05.001

- Airey, D. (2015). Developments in understanding tourism policy. Tourism Review, 70(4), 246–258. https://doi.org/10.1108/TR-08-2014-0052

- Ashley, C., & Mitchell, J. (2008). Doing the right thing approximately not the wrong thing precisely: Challenges of monitoring impacts of pro-poor interventions in tourism value chains. Overseas Development Institute, London.

- Baggio, R. (2020). The science of complexity in the tourism domain: A perspective article. Tourism Review, 75(1), 16–19. https://doi.org/10.1108/TR-04-2019-0115

- Beach, D. (2016). It’s all about mechanisms: What process-tracing case studies should be tracing. New Political Economy, 21(5), 463–472. https://doi.org/10.1080/13563467.2015.1134466

- Beach, D., & Pedersen, R. B. (2016). Causal case study methods: Foundations and guidelines for comparing, matching, and tracing. University of Michigan Press.

- Beach, D., & Pedersen, R. B. (2018). Process-tracing methods. University of Michigan Press.

- Befani, B., & Mayne, J. (2014). Process tracing and contribution analysis: A combined approach to generative causal inference for impact evaluation. IDS Bulletin, 45(6), 17–36. https://doi.org/10.1111/1759-5436.12110

- Befani, B., & Stedman-Bryce, G. (2017). Process tracing and Bayesian updating for impact evaluation. Evaluation, 23(1), 42–60. https://doi.org/10.1177/1356389016654584

- Bennett, A. (2010). Process tracing and causal inference. In H. Brady & D. Collier (Eds.), Rethinking social inquiry: Diverse tools, shared standards (2nd ed.). Rowman & Littlefield Publishers.

- Bennett, A., & Checkel, J. T. (Eds.) (2015). Process tracing: From metaphor to analytic tool. Cambridge University Press.

- Bray, J., Gray, M., & ’t Hart, P. (2019). Evaluation and learning from failure and success: An ANZSOG research paper for the Australian public service review panel.

- Busetti, S. (2023). Causality is good for practice: Policy design and reverse engineering. Policy Sciences, 56(2), 419–438. https://doi.org/10.1007/s11077-023-09493-7

- Busetti, S., & Dente, B. (2017). Using process tracing to evaluate unique events: The case of EXPO Milano 2015. Evaluation, 23(3), 256–273. https://doi.org/10.1177/1356389017716738

- Byrne, D. (2013). Evaluating complex social interventions in a complex world. Evaluation, 19(3), 217–228. https://doi.org/10.1177/1356389013495617

- Camacho Garland, G., & Beach, D. (2023). Theorizing how interventions work in evaluation: Process-tracing methods and theorizing process theories of change. Evaluation, 29(4), 390–409. https://doi.org/10.1177/13563890231201876

- Cartwright, N., & Hardie, J. (2012). Evidence-based policy: A practical guide to doing it better. Oxford University Press.

- Chen, S., & Henry, I. (2020). Assessing Olympic legacy claims: Evaluating explanations of causal mechanisms and policy outcomes. Evaluation, 26(3), 275–295. https://doi.org/10.1177/1356389019836675

- Clarke, G. M., Conti, S., Wolters, A. T., & Steventon, A. (2019). Evaluating the impact of healthcare interventions using routine data. BMJ, 365, l2239. https://doi.org/10.1136/bmj.l2239

- Collier, D. (2011). Understanding process tracing. PS: Political Science & Politics, 44(4), 823–830. https://doi.org/10.1017/S1049096511001429

- Deaton, A., & Cartwright, N. (2018). Understanding and misunderstanding randomized controlled trials. Social Science & Medicine, 210, 2–21. https://doi.org/10.1016/j.socscimed.2017.12.005

- Delahais, T., & Toulemonde, J. (2017). Making rigorous causal claims in a real-life context: Has research contributed to sustainable forest management? Evaluation, 23(4), 370–388. https://doi.org/10.1177/1356389017733211

- Dredge, D., & Jamal, T. (2015). Progress in tourism planning and policy: A post-structural perspective on knowledge production. Tourism Management, 51, 285–297. https://doi.org/10.1016/j.tourman.2015.06.002

- Dredge, D., & Jenkins, J. (Eds.) (2011). Stories of practice: Tourism policy and planning. Ashgate Publishing, Ltd.

- Eckardt, C., Font, X., & Kimbu, A. (2019). Realistic evaluation as a volunteer tourism supply chain methodology. Journal of Sustainable Tourism, 28(5), 647–662. https://doi.org/10.1080/09669582.2019.1696350

- Elsenbroich, C., & Badham, J. (2023). Negotiating a future that is not like the past. International Journal of Social Research Methodology, 26(2), 207–213. https://doi.org/10.1080/13645579.2022.2137935

- European Commission. (2013). Evaluation guidance EVALSED sourcebook: Method and techniques.

- Falleti, T. G., & Lynch, J. F. (2009). Context and causal mechanisms in political analysis. Comparative Political Studies, 42(9), 1143–1166. https://doi.org/10.1177/0010414009331724

- HM Treasury. (2020). The Magenta Book: Central Government guidance on evaluation. UK Government. www.gov.uk/official-documents

- Kay, A., & Baker, P. (2015). What can causal process tracing offer to policy studies? A review of the literature. Policy Studies Journal, 43(1), 1–21. https://doi.org/10.1111/psj.12092

- Machamer, P., Darden, L., & Craver, C. F. (2000). Thinking about mechanisms. Philosophy of Science, 67(1), 1–25. https://doi.org/10.1086/392759

- Mellon, V., & Bramwell, B. (2018). The temporal evolution of tourism institutions. Annals of Tourism Research, 69, 42–52. https://doi.org/10.1016/j.annals.2017.12.008

- Montano, L. J., Font, X., Elsenbroich, C., & Ribeiro, M. A. (2023). Co-learning through participatory evaluation: An example using theory of change in a large-scale EU-funded tourism intervention. Journal of Sustainable Tourism, 1–20. https://doi.org/10.1080/09669582.2023.2227781

- Mori, I. (2021). NAMA facility: 2nd interim evaluation and learning, final report (February).

- Moumoutzis, K., & Zartaloudis, S. (2016). Europeanization mechanisms and process tracing: A template for empirical research. Journal of Common Market Studies, 54(2), 337–352.

- OECD. (2020). Rebuilding tourism for the future: COVID-19 policy responses and recovery. OECD Publishing. https://www.oecd.org/coronavirus/policy-responses/rebuilding-tourism-for-the-future-%20covid-19-policy-responses-and-recovery-bced9859/

- O’Sullivan, D., Pickernell, D., & Senyard, J. (2009). Public sector evaluation of festivals and special events. Journal of Policy Research in Tourism, Leisure and Events, 1(1), 19–36. https://doi.org/10.1080/19407960802703482

- Pawson, R., & Tilley, N. (1997). Realistic evaluation. Sage.

- Phi, G. T., Whitford, M., & Reid, S. (2018). What’s in the black box? Evaluating anti-poverty tourism interventions utilizing theory of change. Current Issues in Tourism, 21(17), 1930–1945. https://doi.org/10.1080/13683500.2016.1232703

- Regeer, B. J., de Wildt-Liesveld, R., van Mierlo, B., & Bunders, J. F. (2016). Exploring ways to reconcile accountability and learning in the evaluation of niche experiments. Evaluation, 22(1), 6–28. https://doi.org/10.1177/1356389015623659

- Ricks, J. I., & Liu, A. H. (2018). Process-tracing research designs: A practical guide. PS: Political Science & Politics, 51(4), 842–846.

- Rothgang, M., & Lageman, B. (2021). The unused potential of process tracing as evaluation approach: The case of cluster policy evaluation. Evaluation, 27(4), 527–543. https://doi.org/10.1177/13563890211041676

- Schimmelfennig, F. (2015). Efficient process tracing: Analyzing the causal mechanisms of European integration. In A. Bennett & J. T. Checkel (Eds.), Process tracing: From metaphor to analytic tool (pp. 98–125). Cambridge University Press.

- Schmitt, J., & Beach, D. (2015). The contribution of process tracing to theory-based evaluations of complex aid instruments. Evaluation, 21(4), 429–447. https://doi.org/10.1177/1356389015607739

- Stern, E., Stame, N., Mayne, J., Forss, K., Davies, R., & Befani, B. (2012). Broadening the range of designs and methods for impact evaluations (Working Paper 38). Department for International Development.

- Stevenson, N., Airey, D., & Miller, G. (2009). Complexity theory and tourism policy research. International Journal of Tourism Policy, 2(3), 206–220. https://doi.org/10.1504/IJTP.2009.024553

- Tamm, H., & Duursma, A. (2023). Combat, commitment, and the termination of Africa’s mutual interventions. European Journal of International Relations, 29(1), 3–28. https://doi.org/10.1177/13540661221112612

- Taplin, J., Dredge, D., & Scherrer, P. (2014). Monitoring and evaluating volunteer tourism: A review and analytical framework. Journal of Sustainable Tourism, 22(6), 874–897. https://doi.org/10.1080/09669582.2013.871022

- Trampusch, C., & Palier, B. (2016). Between X and Y: How process tracing contributes to opening the black box of causality. New Political Economy, 21(5), 437–454.

- Twining-Ward, L., Messerli, H., Sharma, A., & Villascusa Cerezo, J. M. (2018). Tourism theory of change. Tourism for development knowledge series. World Bank. https://openknowledge.worldbank.org/handle/10986/35459

- Vanclay, F. (2015). The potential application of qualitative evaluation methods in European regional development: Reflections on the use of Performance Story Reporting in Australian natural resource management. Regional Studies, 49(8), 1326–1339. https://doi.org/10.1080/00343404.2013.837998

- Van der Knaap, P. (2016). Responsive evaluation and performance management: Overcoming the downsides of policy objectives and performance indicators. Evaluation, 12(3), 278–293. https://doi.org/10.1177/1356389006069135

- Wadeson, A., Monzani, B., & Aston, T. (2020). Process tracing as a practical evaluation method: Comparative learning from six evaluations. Monitoring and Evaluation News. https://mande.co.uk/2020/media-3/unpublished-paper/process-tracing-as-a-practical-evaluation-method-comparative-learning-from-six-evaluations/

- Wauters, B., & Beach, D. (2018). Process tracing and congruence analysis to support theory-based impact evaluation. Evaluation, 24(3), 284–305. https://doi.org/10.1177/1356389018786081

- Weiss, C. H. (1998). Evaluation: Methods for studying programs and policies (2nd ed.). Prentice Hall.

- Zaks, S. (2017). Relationships among rivals (RAR): A framework for analyzing contending hypotheses in process-tracing. Political Analysis, 25(3), 344–362. https://doi.org/10.1017/pan.2017.12