?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Let T be a tree on n vertices whose edge weights are positive definite matrices of order s. The squared distance matrix of T, denoted by Δ, is the ns × ns block matrix with , where d(i, j) is the sum of the weights of the edges in the unique (i, j)-path. In this article, we obtain a formula for the determinant of Δ and find

under some conditions.

1 Introduction

Let T be a tree with vertex set and edge set

. If two vertices i and j are adjacent, we write

. Let us assign an orientation to each edge of T. The usual distance between the vertices

, denoted by

, is the length of the shortest path between them in T. Two edges

and

of T are similarly oriented if

and is denoted by

, otherwise they are oppositely oriented and is denoted by

. The edge orientation matrix

of T is the

matrix whose rows and columns are indexed by the edges of T and the entries are defined [Citation8] as

The incidence matrix Q of T is the matrix with its rows indexed by V(T) and the columns indexed by E(T). The entry corresponding to the row i and column ej of Q is 1 if ej originates at i,–1 if ej terminates at i, and zero if ej and i are not incident. We assume that the same orientation is used while defining the edge orientation matrix H and the incidence matrix Q.

The distance matrix of T, denoted by D(T), is the matrix whose rows and columns are indexed by the vertices of T and the entries are defined as follows:

, where

. In [Citation8], the authors introduced the notion of squared distance matrix Δ, which is defined to be the Hadamard product

, that is, the (i, j)-th element of Δ is

. For the unweighted tree T, the determinant of Δ is obtained in [Citation8], while the inverse and the inertia of Δ are considered in [Citation7]. In [Citation5], the author considered an extension of these results to a weighted tree whose each edge is assigned a positive scalar weight and found the determinant and inverse of Δ. Recently, in [Citation9], the authors determined the inertia and energy of the squared distance matrix of a complete multipartite graph. Also, they characterized the graphs among all complete t-partite graphs on n vertices for which the spectral radius of the squared distance matrix and the squared distance energy are maximum and minimum, respectively.

In this article, we consider a weighted tree T on n vertices with each edge weight is a positive definite matrix of order s. For , the distance d(i, j) between i and j is the sum of the weight matrices in the unique (i, j)-path of T. Thus, the distance matrix

of T is the block matrix of order ns × ns with its (i, j)-th block

if

, and is the s × s zero matrix if i = j. The squared distance matrix Δ of T is the ns × ns block matrix with its (i, j)-th block is equal to

if

, and is the s × s zero matrix if i = j. The Laplacian matrix

of T is the ns × ns block matrix defined as follows: For

, the (i, j)-th block

if i ~ j, where W(i, j) is the matrix weight of the edge joining the vertices i and j, and the zero matrix otherwise. For

, the (i, i)-th block of L is

.

In the context of classical distance, the matrix weights have been studied in [Citation2] and [Citation3]. The Laplacian matrix with matrix weights have been studied in [Citation2, Citation10] and [Citation11]. The Resistance distance matrix and the Product distance matrix with matrix weights have been considered in [Citation1], and [Citation6], respectively. In this article, we consider the squared distance matrix Δ of a tree T with matrix weights and find the formulae for the determinant and inverse of Δ, which generalizes the results of [Citation5, Citation7, Citation8].

This article is organized as follows. In Section 2, we define needed notations and state some preliminary results, which will be used in the subsequent sections. In Section 3, we find some relations of incidence matrix, Laplacian matrix, and distance matrix with squared distance matrix. In Sections 4 and 5, we obtain the formula for the determinant and inverse of Δ, respectively.

2 Notations and preliminary results

In this section, we define some useful notations and state some known results which will be needed to prove our main results.

The column vector with all ones and the identity matrix of order n are denoted by

and In, respectively. Let J denote the matrix of appropriate size with all entries equal to 1. The transpose of a matrix A is denoted by

. Let A be an n × n matrix partitioned as

, where A11 and A22 are square matrices. If A11 is nonsingular, then the Schur complement of A11 in A is defined as

. The following is the well known Schur complement formula:

. The Kronecker product of two matrices

and

, denoted by

, is defined to be the mp × nq block matrix

. It is known that for the matrices A, B, C and D,

, whenever the products AC and BD are defined. Also

, if A and B are nonsingular. Moreover, if A and B are n × n and p × p matrices, respectively, then

. For more details about the Kronecker product, we refer to [Citation12].

Let H be the edge-orientation matrix, and Q be the incidence matrix of the underlying unweighted tree with an orientation assigned to each edge. The edge-orientation matrix of a weighted tree whose edge weights are positive definite matrices of order s is defined by replacing 1 and–1 by Is and , respectively. The incidence matrix of a weighted tree is defined in a similar way. That is, for the matrix weighted tree T, the edge-orientation matrix and the incidence matrix are defined as

and

, respectively. For a block matrix A, let Aij denote the (i, j)-th block of A.

Now we introduce some more notations. Let T be a tree with vertex set and edge set

. Let Wi be the edge weight matrix associated with each edge ei of T,

. Let δi be the degree of the vertex i and set

for

. Let τ be the

matrix with components

and

be the diagonal matrix with diagonal entries

. Let

be the matrix weighted degree of i, which is defined as

Let be the ns × s block matrix with the components

. Let F be a diagonal matrix with diagonal entries

. It can be verified that

.

A tree T is said to be directed tree, if the edges of the tree T are directed. If the tree T has no vertex of degree 2, then denote the diagonal matrix with diagonal elements

. In the following theorem, we state a basic result about the edge-orientation matrix H of an unweighted tree T, which is a combination of Theorem 9 of [Citation8] and Theorem 11 of [Citation7].

Theorem 2.1.

[Citation7, Citation8] Let T be a directed tree on n vertices and let H and Q be the edge-orientation matrix and incidence matrix of T, respectively. Then . Furthermore, if T has no vertex of degree 2, then H is nonsingular and

.

Next, we state a known result related to the distance matrix of a tree with matrix weights.

Theorem 2.2.

[2, Theorem 3.4] Let T be a tree on n vertices whose each edge is assigned a positive definite matrix of order s. Let L and D be the Laplacian matrix and distance matrix of T, respectively. If D is invertible, then the following assertions hold:

.

3 Properties of the squared distance matrices of trees

In this section, we find the relation of the squared distance matrix with other matrices, such as distance matrix, Laplacian matrix, incidence matrix, etc. We will use these results to obtain the formulae for determinants and inverses of the squared distance matrices of directed trees.

Lemma 3.1.

Let T be a tree with vertex set and each edge of T is assigned a positive definite matrix weight of order s. Let D and Δ be the distance matrix and the squared distance matrix of T, respectively. Then

Proof.

Let be fixed. For

, let p(j) be the predecessor of j on the (i, j)-path of the underlying tree. Let ej be the edge between the vertices p(j) and j. Let Wj be the weight of the edge ej and

. Therefore,

Since the vertex j is the predecessor of vertices in the paths from i, we have

Thus,

Therefore, the (i, j)-th element of is

Now, let us compute the (i, j)-th element of .

Note that Xj is the sum of the weights of all edges incident to j, except ej. Hence,

Therefore,

Thus,

This completes the proof. □

Lemma 3.2.

Let T be a directed tree with vertex set and edge set

with each edge ei is assigned a positive definite matrix weight Wi of order s for

. Let H and Q be the edge orientation matrix and incidence matrix of T, respectively. If F is the diagonal matrix with diagonal entries

, then

Proof.

For , let ei and ej be two edges of T such that ei is directed from p to q and ej is directed from r to s. Let Wi and Wj be the weights of the edges ei and ej, respectively.

Case 1:

Let the p-s path contain the vertices q and r. If , then it is easy to check that

Case 2:

Let the r-q path contain the vertices s and p. If , then

Note that

Thus, □

Lemma 3.3.

Let T be a tree with vertex set and edge set

with each edge ei is assigned a positive definite matrix weight Wi of order s for

. Let L, D and Δ be the Laplacian matrix, the distance matrix and the squared distance matrix of T, respectively. Then

.

Proof.

Let and the degree of the vertex j be t. Suppose j is adjacent to the vertices

, and let

be the corresponding edges with edge weights

, respectively.

Case 1.

For i = j, we have

Case 2.

Let . Without loss of generality, assume that the (i, j)-path passes through the vertex v1 (it is possible that i = v1). If

, then

,

. Therefore,

This completes the proof. □

4 Determinant of the squared distance matrix

In this section, we obtain a formula for the determinant of the squared distance matrix of a tree with positive definite matrix weights. First, we consider trees with no vertex of degree 2.

Theorem 4.1.

Let T be a tree on n vertices, and let Wi be the weights of the edge ei, where Wi’s are positive definite matrices of order s, . If T has no vertex of degree 2, then

Proof.

Let us assign an orientation to each edge of T, and let H be the edge orientation matrix and Q be the incidence matrix of the underlying unweighted tree.

Let Δi denote the i-th column block of the block matrix Δ. Let ti be the column vector with 1 at the i-th position and 0 elsewhere,

. Then

(1)

(1)

We know that Q is totally unimodular matrix and each submatrix of order n–1 is nonsingular [4, Lemmas 2.6 and 2.7]. Therefore, . Now, by taking determinant of matrices in both sides of Equationequation (1)

(1)

(1) , we have

Using Lemma 3.2, we have

By Theorem 2.1, we have

and hence

. Thus,

is nonsingular, and by the Schur complement formula, we have

Now, from Theorem 2.1, it follows that . Therefore,

(2)

(2)

Now, by Lemmas 3.3 and 3.1, we have

Substituting the value of in (2), we get the required result. □

Next, let us illustrate the above theorem by an example.

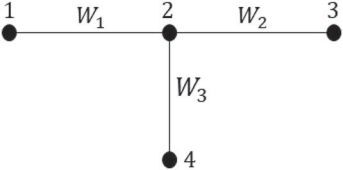

Example 4.2.

Consider the tree T1 in , where the edge weights are

Then,

One can verify that,

Next, we obtain a formula for the determinant of the squared distance matrix of a tree T, which has exactly one vertex of degree 2.

Theorem 4.3.

Let T be a tree on n vertices with the edge set . Let the positive definite matrices

of order s be the weights of the edges

, respectively. Let v be the vertex of degree 2 and u and w be its neighbors in T. If

and

, then

Proof.

Let us assign an orientation to each edge of T. Without loss of generality, assume that, the edge ei is directed from u to v and the edge ej is directed from v to w.

Let Δi denote the i-th column block of the block matrix Δ. Let ti be the column vector with 1 at the i-th position and 0 elsewhere,

. Therefore, by using Lemma 3.2, we have

Pre-multiplying and post-multiplying the above equation by , we get

which implies that

Let denote the

submatrix obtained by deleting the all blocks in the j-th row and j-th column from

. Let

denote the

submatrix obtained by deleting the j-the block in

. Let Ri and Ci denote the i-th row and i-th column of the matrix

, respectively. Note that the blocks in the i-th and j-th column of

are identical. Now, perform the operations

and

in

, and then interchange Rj and

, Cj and

. Since

, therefore

Since is the edge orientation matrix of the tree obtained by deleting the vertex v and replacing the edges ei and ej by a single edge directed from u to w in the tree, by Theorem 2.1, we have

, which is nonzero. Therefore, by applying the Schur complement formula, we have

Again, by the proof of Theorem 4.1, we have

Therefore,

Since , by Schur complement formula, we have

Thus,

This completes the proof. □

Now, we illustrate the above theorem by the following example.

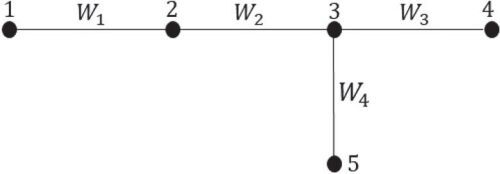

Example 4.4.

Consider the tree T2 in , where the edge weights are

Then,

One can verify that,

Corollary 4.5.

Let T be a tree on n vertices and each edge ei of T is assigned a positive definite matrix Wi order s, . If T has at least two vertices of degree 2, then

.

Proof.

Let T be a tree with at least two vertices v1 and v2 of degree 2. Therefore, and

. Let u and w be the neighbours of v1 and

and

. Therefore, by Theorem 4.3, we have

Since , therefore

. This completes the proof. □

5 Inverse of the squared distance matrix

This section considers trees with no vertex of degree 2 and obtains an explicit formula for the inverse of its squared distance matrix. First, let us prove the following lemma which will be used to find .

Lemma 5.1.

Let T be a tree of order n with no vertex of degree 2 and each edge of T is assigned a positive definite matrix weight of order s. If and

, then

Proof.

By Lemma 3.3, we have . Hence,

Since , therefore

. By Lemma 3.1, we have

and hence

. This completes the proof. □

If the tree T has no vertex of degree 2 and , then Δ is nonsingular, that is,

exists. In the next theorem, we determine the formula for

.

Theorem 5.2.

Let T be a tree of order n with no vertex of degree 2 and each edge of T is assigned a positive definite matrix weight of order s. Let and

. If

, then

Proof.

Let . Then,

(3)

(3)

By Lemma 3.3, we have . Therefore,

By Theorem 2.2, and hence

(4)

(4)

By Lemma 5.1, we have . Therefore, from Equationequation (3)

(3)

(3) and Equation(4)

(4)

(4) , we have

This completes the proof. □

Now, let us illustrate the above formula for by an example.

Example 5.3.

Consider the tree T1 in , where the edge weights are

Then,

Therefore,

One can verify that,

Acknowledgments

We are indebted to the referee for their valuable comments and suggestions.

References

- Atik, F., Bapat, R. B., Kannan, M. R. (2019). Resistance matrices of graphs with matrix weights. Linear Algebra Appl. 571: 41–57.

- Atik, F., Kannan, M. R., Bapat, R. B. (2021). On distance and Laplacian matrices of trees with matrix weights. Linear and Multilinear Algebra. 69(14): 2607–2619.

- Bapat, R. B. (2006). Determinant of the distance matrix of a tree with matrix weights. Linear Algebra Appl. 416(1): 2–7.

- Bapat, R. B. (2010). Graphs and Matrices, Vol. 27. London: Springer.

- Bapat, R. B. (2019). Squared distance matrix of a weighted tree. Electron. J. Graph Theory Appl. (EJGTA). 7(2): 301–313.

- Bapat, R. B., Sivasubramanian, S. (2015). Product distance matrix of a tree with matrix weights. Linear Algebra Appl. 468: 145–153.

- Bapat, R. B., Sivasubramanian, S. (2016). Squared distance matrix of a tree: inverse and inertia. Linear Algebra Appl. 491: 328–342.

- Bapat, R. B., Sivasubramanian, S. (2013). Product distance matrix of a graph and squared distance matrix of a tree. Appl. Anal. Discrete Math. 7(2): 285–301.

- Das, J., Mohanty, S. (2020). On squared distance matrix of complete multipartite graphs. arXiv preprint arXiv:2012.04341.

- Ganesh, S., Mohanty, S. (2022). Trees with matrix weights: Laplacian matrix and characteristic-like vertices. Linear Algebra Appl. 646: 195–237.

- Hansen, J. (2021). Expansion in matrix-weighted graphs. Linear Algebra Appl. 630: 252–273.

- Horn, R. A., Johnson, C. R. (1994). Topics in Matrix analysis. Cambridge: Cambridge University Press, Corrected reprint of the 1991 original.