ABSTRACT

Geosensing and social sensing, two digitalization mainstreams of the big data era, are increasingly converging toward an integrated system for the creation of a semantically enriched digital Earth. Along with the rapid development of AI technologies, this convergence has brought about a number of transformations. On the one hand, value-adding chains from raw data to products and services are becoming value-adding loops composed of four successive stages: Informing, Enabling, Engaging and Empowering (IEEE). Each stage is a dynamic loop in itself. On the other hand, the “human versus technology” relationship is being upgraded toward a game-changing “human and technology” collaboration. The information loop is essentially shaped by the omnipresent reciprocity between humans and technologies as equal partners, co-learners and co-creators of new values.

The paper gives an analytical review of the mutually changing roles and responsibilities of humans and technologies in the individual stages of the IEEE loop, with the aim of promoting a holistic understanding of the state of the art of geospatial information science. Meanwhile, the author identifies a number of challenges facing the interwoven human-technology collaboration. The transformation to a growth mind-set may take time to realize and consolidate. Research on large-scale semantic data integration is only just beginning. User experiences of geovisual analytics approaches are far from being systematically studied. Finally, the ethical concerns for the handling of a semantically enriched digital Earth cover not only the sensitive issues related to privacy violation, copyright infringement, abuse, etc. but also the questions of how to make technologies as controllable and understandable as possible for humans and how to keep the technological ethos within its constructive sphere of societal influence.

1. Introduction

Two digitalization mainstreams of geospatial information science can be observed in the big data era. One is characterized by the steady and converging progress of geosensing technologies and supported by globally networked infrastructures for Earth Observation, satellite navigation and communication (Chen et al. 2019a). Multiple spaceborne, airborne and in situ sensors continuously capture positions of target points and emissions or reflections from physical entities on the Earth, which are then transformed along a value chain into digital representations of the Earth’s surface at multiple spatiotemporal resolutions. The other is shaped by social sensing technologies and facilitated by social media platforms (Wang et al. 2019). Millions of networked users, acting as participatory social sensors, continuously produce content in the form of text, images, audio and video, revealing interpersonal relationships and human-environment interactions, including geoobjects observed from personal perspectives. The analytics of social sensing data typically ends up with trends, events, influencers and entertaining media entries with context information about location, time, viewing perspective, preference, sentiment, etc. Human stakeholders bearing different responsibilities and technologies at different levels of advancement are interwoven and omnipresent in the value-adding process, but each mainstream has its own checklist of data quality. What matters most for geosensing is, for example, seamless coverage, ground truth or application-induced accuracy, while social sensing is more concerned with interestingness, relevance, trustworthiness, representativeness, privacy, etc. Geosensing data is mainly machine-readable, whereas social sensing delivers more human-readable data.

In recent years, the two aforementioned mainstreams have been converging toward an integrated digitalization platform in which geosensing data and social sensing data can interoperate and mutually enrich each other at different processing stages. Along with the rapid development of AI technologies, the convergence is transforming and upgrading the “human versus technology” relationship into a game-changing “human and technology” collaboration. In such an integrated system, humans and technologies work side by side at eye level rather than one being subordinate to the other. Both are co-learners and co-creators of new values. Their complementary insights are merged and fed into the next stage or the next round of the value-adding process. Thus, the value chains are becoming value loops. Each loop is composed of four successive stages: Informing, Enabling, Engaging and Empowering (IEEE). Each stage is a loop in itself, involving a more granular reciprocity between humans and technologies.

2. The IEEE loop of human-technology collaboration

An analytical review of the mutually changing roles and responsibilities of humans and technologies in the individual stages of the IEEE loop is given in the following sections, with the aim of promoting a holistic understanding of the state of the art of geospatial information science.

2.1. Informing

Unlike the traditional one-way street of geoinformation communication with clearly divided roles of sender and receiver, humans and technologies in this integrated loop are both sender and receiver. Technologies continuously feel the pulse of the Earth and “push out” updated geodata and services to human users. At the same time, they continuously conduct social listening and “pull in” user information via various interfaces and tracking devices. The observable human information is practically inexhaustible, ranging from verbal feedback, eye-movement traces recorded while viewing visual presentations and footprints left while performing mobile tasks to brain activities during thinking or problem solving. The tracked user information may contain important clues for evaluating the quality of geodata or services and may prompt incremental service improvements or disruptive innovations for new services. Likewise, humans have easy access to maps with global coverage at different scales or 3D landscape models of different resolutions, and are thus informed in a timely manner about far more than ever before: “what and how much can be found near and far”, “what is happening where and when”, etc. Well-informed humans tend to make informed decisions rather than relying entirely on personal gut instinct, intuition, prior knowledge and experience. Meanwhile, humans as a social species are constantly sharing what they know, feel and experience here and now with other people on social media platforms, which are also tracking containers of volunteered content.

2.2. Enabling

The deep involvement of human factors in the information loop has catalyzed technological progress, which in turn facilitates the inclusion of more human participants. The mutually enabling effects are reflected in three different fields: geovisual analytics, semantic data integration and extended human capacities of social sensing.

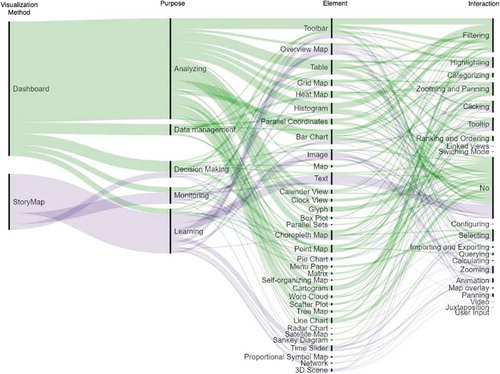

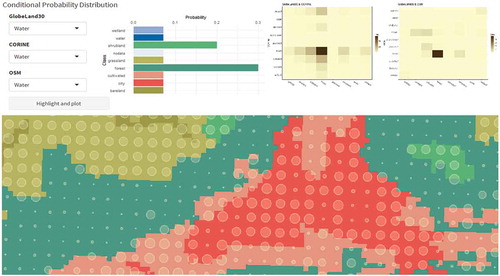

Geovisual analytics approaches are widely used for the understanding of geosensing data or social sensing data as well as their mutual enrichment. They allow the human power of vision-based perception and knowledge-driven cognition to be integrated with the accuracy and speed of computing technologies. With the help of a multitude of interactive visualizations, analysts can navigate, filter and drill down to details of heterogeneous data. The streamgraph in Figure 1 summarizes a comparative survey of two popular visualization methods, Dashboard and StoryMap, with a great variety of interactive visual elements for different purposes. A geovisual analytics loop typically takes the sequence of (1) visual detection of patterns as hypotheses to assist data exploration, (2) computational extraction and modeling of patterns, (3) verification and refinement of patterns by means of query and visualization for various target groups. For the time being, semi-automatic geovisual analytics approaches can deliver more efficient and reliable results for data enrichment than fully automatic analytical approaches or approaches that rely only on human judgment. An extensive investigation of geovisual analytics for the semantic enrichment of movement trajectories was reported in Krüger (2017), while Yan et al. (2017) demonstrated how to enrich user-generated Points of Interest (POIs) with spatial contexts and then create vector embeddings of place types, which can be visualized for human assessment. Chuprikova (2019) addressed uncertainty issues involved in the enrichment of global land cover classes with OpenStreetMap. She designed an intuitive geovisual analytics interface that allows a human operator to adjust the a priori probabilities of a Bayesian model, as shown in Figure 2.
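The interplay between operator-adjusted priors and displayed uncertainty can be illustrated with a minimal sketch. It is not the interface or model of Chuprikova (2019); it only assumes a single discrete Bayes update over land cover classes, with Shannon entropy as the uncertainty measure mentioned in the caption of Figure 2. The class names, likelihoods and priors are hypothetical values chosen for illustration.

```python
import math


def posterior(priors, likelihoods):
    """Discrete Bayes' rule: P(class | evidence) is proportional to
    P(evidence | class) * P(class), normalized over all classes."""
    unnormalized = {c: priors[c] * likelihoods[c] for c in priors}
    total = sum(unnormalized.values())
    return {c: value / total for c, value in unnormalized.items()}


def entropy(distribution):
    """Shannon entropy in bits; a higher value means a more uncertain cell."""
    return -sum(p * math.log2(p) for p in distribution.values() if p > 0)


# Hypothetical likelihoods of the observed evidence for one map cell.
likelihoods = {"forest": 0.6, "cropland": 0.3, "urban": 0.1}

# Default priors (uniform) versus priors adjusted by a human operator
# who knows that the region is dominated by agriculture (assumed values).
uniform_prior = {"forest": 1 / 3, "cropland": 1 / 3, "urban": 1 / 3}
expert_prior = {"forest": 0.2, "cropland": 0.7, "urban": 0.1}

before = posterior(uniform_prior, likelihoods)
after = posterior(expert_prior, likelihoods)

for name, dist in (("uniform prior", before), ("expert prior", after)):
    best = max(dist, key=dist.get)
    print(f"{name}: most probable class = {best}, entropy = {entropy(dist):.3f} bits")
```

In this toy setting the operator's prior changes the most probable class and lowers the entropy of the cell, which is the kind of effect an interactive interface could make visible, for instance through symbol size.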

Research on semantic data integration is currently focused on theoretical issues of the Semantic Web and on prototypical experiments, with the aim of sharing and reusing social sensing data and with the further vision of interoperability between machine-readable data and human-readable data across sensory networks. Ding et al. (2019) developed an ontology-based approach for consistency assessment between open governmental data and OpenStreetMap for a test region in Italy. Chen et al. (2019b) conducted an experiment to extract and embed uncertain semantic relations in a Knowledge Graph. Janowicz et al. (2019) reported a Sensor, Observation, Sample and Actuator (SOSA) ontology, which was developed by the first joint working group on “Spatial Data on the Web” of the Open Geospatial Consortium (OGC) and the World Wide Web Consortium (W3C). Its feasibility was proved in a showcase for the derivation of a semantically enriched taxonomy of POIs from Foursquare data. Data-driven classification technologies were combined with POI taxonomies defined by humans. The showcase was successfully implemented as a POI Pulse web application, allowing an interactive visualization of about 165,000 POIs at multiple granularity levels based on a seamless switch between raster and vector tiles.
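To make the idea of a SOSA annotation more concrete, the following minimal sketch describes one observation as RDF triples and serializes them as N-Triples using plain Python. The SOSA terms (`Observation`, `madeBySensor`, `hasFeatureOfInterest`, `observedProperty`, `hasSimpleResult`, `resultTime`) are taken from the published ontology; the `http://example.org/` identifiers, the helper functions and the check-in count are hypothetical and serve only as an illustration, not as a reproduction of the POI Pulse showcase.

```python
SOSA = "http://www.w3.org/ns/sosa/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
XSD = "http://www.w3.org/2001/XMLSchema#"
EX = "http://example.org/"  # hypothetical namespace for illustration only


def iri(value):
    """Wrap an IRI in angle brackets as required by N-Triples."""
    return f"<{value}>"


def literal(value, datatype):
    """Build a typed literal such as "12"^^<xsd:integer>."""
    return f'"{value}"^^<{datatype}>'


def observation_triples(obs_id, sensor_id, feature_id, prop_id, result, time_iso):
    """Describe a single observation with core SOSA properties."""
    obs = iri(EX + obs_id)
    return [
        (obs, iri(RDF_TYPE), iri(SOSA + "Observation")),
        (obs, iri(SOSA + "madeBySensor"), iri(EX + sensor_id)),
        (obs, iri(SOSA + "hasFeatureOfInterest"), iri(EX + feature_id)),
        (obs, iri(SOSA + "observedProperty"), iri(EX + prop_id)),
        (obs, iri(SOSA + "hasSimpleResult"), literal(result, XSD + "integer")),
        (obs, iri(SOSA + "resultTime"), literal(time_iso, XSD + "dateTime")),
    ]


def to_ntriples(triples):
    """Serialize (subject, predicate, object) tuples, one statement per line."""
    return "\n".join(f"{s} {p} {o} ." for s, p, o in triples)


# Hypothetical example: a check-in count observed at a point of interest.
triples = observation_triples(
    obs_id="obs/1",
    sensor_id="sensor/platform-counter",
    feature_id="poi/cafe-17",
    prop_id="property/checkInCount",
    result=12,
    time_iso="2019-11-11T10:00:00Z",
)
ntriples = to_ntriples(triples)
print(ntriples)
```

Because every statement uses shared vocabulary terms, observations from geosensing devices and from social media platforms could in principle be queried together, which is the interoperability goal described above.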

Human capabilities of social sensing have been radically extended by visualization and sensory technologies. The openly accessible digital maps on social media platforms are a favorite canvas or playground for individual users to pin down their personal observations, embed their mobile trajectories, or sketch their future plans. Moreover, humans tend to adopt space-time as a favorite metaphor to accommodate interpersonal relationships in social networks. Expressions such as “our paths have crossed again”, “there are many ups and downs in one’s life”, “we are moving forward” are commonly found in text messages. A more profound enabling impact can be experienced in the enhanced embodied cognition. Traditional embodied cognition relies on the entire human body as an inclusive system situated in a real-world environment. It has generally proved efficient in terms of learning and generation of meanings because the bodily interactions with the environment are supported by bottom-up sensing via sensorimotor limbs and organs in combination with top-down mental models of the environment. Today’s immersive technologies, coupled with lightweight sensory functions such as ubiquitous positioning, photographing, video recording and phone calls, have extended bodily sensing capacities, leading to an accelerated process of knowledge generation and adaptation. Some sensory functions can be internalized as part of the body-mind system to enhance the embodied cognition. Klippel et al. (2019) reported their ongoing research on immersive technologies for embodied learning in geospatial information science.

Figure 1. A streamgraph showing purposes, visual elements and interactions of Dashboard in comparison to StoryMap (Zuo et al. 2019).

Figure 2. A geovisual analytics interface for human-machine collaborative exploration of global land cover classes (in colors) with uncertainty indicated in circle size, which is proportional to the entropy value (Chuprikova 2019, 100).

2.3. Engaging

The mutual enabling between humans and technologies leads not only to an exponential growth but also to a wide dispersion of geodata on the Internet and especially on social media platforms. This imposes daunting challenges on online data searching and processing capacities and gives rise to the development of social bots. As a piece of automated software, a social bot is able to execute millions of simple and repetitive routine tasks, such as searching for geo-events embedded in vast quantities of social media data, for which humans lack the capacity (Polous et al. 2015). The deployment of bots is intended to release humans to focus on high-impact strategies, creative content and overarching actions. The idea of bots is not new. Since the end of the 1980s many search engines have been equipped with Internet bots, crawlers or spiders to create entries for a search engine index by visiting millions of web pages. Human designers who have defined these functions may feel a degree of faith in what the bots do. However, bots in social media have undergone some transformational changes in comparison to their predecessors. “Most bots on social media communicate with servers, applications and databases on the backend of websites, but they also interact with human users on the front-end of those same sites” (Woolley, Shorey, and Howard 2018). In other words, social bots are acting as a new medium in their own right by processing interactions directed from human to computer on the one hand and those directed from computer to human on the other hand. Engaged social bots work tirelessly day and night. Due to their capabilities of multiplying humans’ online presence and speeding up humans’ workflows for specific tasks, they are increasingly involved in intimate relationships with humans. Both designers and users tend to personify bots and treat them as friends and partners, allowing bots to take over more advanced machine-learning tasks.
Meanwhile, social bots can extend the functions of existing platforms by generating new services based upon the profiles and preferences of the human users they observe. They are evolving from simple surrogates of humans into more autonomous entities, enacting changes on complex social networks while keeping their own profiles self-adapted and upgraded to do smarter things beyond the expectations of their designers. This out-of-control phenomenon is both exciting and worrisome for designers and users. It remains the responsibility of human designers to distinguish bots from human users and to identify the purposes of bots.
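The kind of simple, repetitive routine delegated to a bot can be sketched as follows. The example is a deliberately naive illustration, not the method of Polous et al. (2015): it assumes posts arrive as dictionaries with hypothetical `text`, `lat` and `lon` fields, filters them by keyword, bins them into a regular latitude/longitude grid and reports cells with enough matching posts as candidate geo-events.

```python
import math
from collections import Counter


def detect_geo_events(posts, keywords, cell_size=0.1, min_posts=3):
    """Return grid cells containing at least `min_posts` geotagged posts
    whose text mentions one of the keywords (case-insensitive)."""
    counts = Counter()
    for post in posts:
        if post.get("lat") is None or post.get("lon") is None:
            continue  # posts without coordinates cannot be located
        text = post["text"].lower()
        if not any(keyword in text for keyword in keywords):
            continue
        # Index of the grid cell, in units of `cell_size` degrees.
        cell = (math.floor(post["lat"] / cell_size),
                math.floor(post["lon"] / cell_size))
        counts[cell] += 1
    return {cell: n for cell, n in counts.items() if n >= min_posts}


# Hypothetical sample posts for illustration only.
posts = [
    {"text": "Flood near the river bank", "lat": 48.14, "lon": 11.57},
    {"text": "Streets under water, flood!", "lat": 48.15, "lon": 11.58},
    {"text": "flood warning issued", "lat": 48.13, "lon": 11.52},
    {"text": "Lovely coffee this morning", "lat": 48.14, "lon": 11.57},
    {"text": "Flood photos from my trip", "lat": 52.52, "lon": 13.45},
    {"text": "Is there a flood somewhere?", "lat": None, "lon": None},
]

events = detect_geo_events(posts, keywords=["flood"])
print(events)
```

A real bot would run such a filter continuously over a data stream and would need far more robust text analysis; the sketch only shows why the task scales easily for software while exceeding human capacity.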

2.4. Empowering

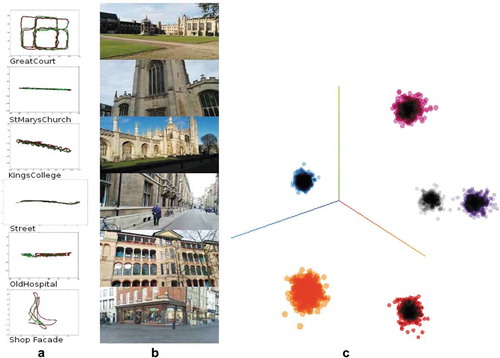

This final step of the IEEE value loop also represents the highest level of human and technology collaboration. Empowering is essentially a deep-learning process with effects of cross-fertilization. Both humans and technologies are mutually empowered to break their own limits and transcend the boundaries of what is immediately possible to what could be possible in the future. What matters here are insights and foresights for handling open-ended wicked problems rather than efficiency and performance for well-defined and small-scale tasks. For this reason, the conventional task division, which assigns simple and repetitive routines to technologies and leaves creative tasks to humans alone, is adapted to allow more flexibility. Human knowledge and cognition capacities serve as the most powerful driving force for the deployment of the most advanced AI technologies. For instance, it is scientifically meaningful to simulate cognitive tasks humans are good at, even if this may be computationally intensive. The experience gained is useful for cognitively more demanding tasks such as causal mining, natural language generation and autonomous driving. Lyu (2019) demonstrated a case study of place recognition. Given a large number of randomly sorted street view images from an indoor or outdoor environment, humans may easily identify how many places are represented and label the individual images with the places they most likely belong to. The same task can be conducted by a set of deep learning methods. By extracting information about camera pose, illumination conditions and other environmental contexts from the images, some semantic features for places can be identified, which are then used to project the images onto distinctive clusters in the latent space. Figure 3 shows the machine-learning results of six places in the Cambridge Landmarks Dataset. The clusters recognized by machines coincide fairly well with the places labeled by humans.

Figure 3. Machine learning of places from street view images (Lyu 2019). (a) Positions of street view images for six places in a local reference frame: training images (red), test images (green). (b) A representative image for each of the six places. (c) Learned clusters in the latent space.
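How well machine-recognized clusters agree with human place labels can be quantified in several ways. The sketch below uses cluster purity, a common and simple agreement measure; it is an assumption made here for illustration and not necessarily the evaluation used by Lyu (2019). The cluster assignments and place labels are hypothetical.

```python
from collections import Counter, defaultdict


def cluster_purity(cluster_ids, human_labels):
    """Fraction of images whose human label equals the majority label
    of the machine cluster they were assigned to (1.0 = full agreement)."""
    if len(cluster_ids) != len(human_labels):
        raise ValueError("both sequences must have the same length")
    members = defaultdict(list)
    for cluster, label in zip(cluster_ids, human_labels):
        members[cluster].append(label)
    # For each cluster, count the images carrying its most frequent label.
    matched = sum(Counter(labels).most_common(1)[0][1]
                  for labels in members.values())
    return matched / len(human_labels)


# Hypothetical result for eight images: machine clusters vs. human labels.
machine_clusters = [0, 0, 0, 1, 1, 2, 2, 2]
human_places = ["place_A", "place_A", "place_B", "place_B",
                "place_B", "place_C", "place_C", "place_C"]

purity = cluster_purity(machine_clusters, human_places)
print(f"purity = {purity}")
```

In this toy case one image labeled `place_B` by humans falls into the machine cluster dominated by `place_A`, so seven of eight images agree.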

Empowered humans and technologies have more flexibility to scale and adapt on their own in changing situations. They are also more transparent to each other and thus ready to complement each other at the right moment in order to detect and solve specific problems faster. Embedded breakpoints in AI technologies, for instance, allow human operators to interrupt the process, inspect the data flow based on intuition and expertise, feed in additional information, adjust parameters, predict what may happen next, and prevent negative side effects from happening. Furthermore, adaptive geovisualizations of insights and foresights can potentially empower larger human societies.
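The notion of an embedded breakpoint can be sketched as a callback that an iterative process invokes at regular intervals. The example is a minimal illustration under stated assumptions: the function names are hypothetical, the "AI process" is reduced to gradient descent on f(x) = x², and the human operator is simulated by a function that inspects the state and lowers the learning rate when the process diverges.

```python
def run_with_breakpoints(step, state, n_steps, params, break_every, on_break):
    """Run `step` repeatedly; every `break_every` iterations hand control to
    `on_break`, which may return adjusted parameters or None to stop."""
    history = []
    for i in range(1, n_steps + 1):
        state = step(state, params)
        history.append(state)
        if i % break_every == 0:
            decision = on_break(i, state, dict(params))
            if decision is None:
                break  # the operator interrupts the process
            params = decision
    return state, history


def gradient_step(x, params):
    """One gradient descent step on f(x) = x**2, whose gradient is 2 * x."""
    return x - params["learning_rate"] * 2 * x


def simulated_operator(iteration, x, params):
    """Stand-in for a human: reduce the learning rate if |x| is growing."""
    if abs(x) > 1.0:
        params["learning_rate"] = 0.25
    return params


# The initial learning rate of 1.1 is deliberately too large, so the
# process diverges until the first breakpoint lets the operator intervene.
final_x, history = run_with_breakpoints(
    step=gradient_step,
    state=1.0,
    n_steps=6,
    params={"learning_rate": 1.1},
    break_every=2,
    on_break=simulated_operator,
)
print(history)
```

Without the breakpoint the iterates would keep growing in magnitude; with it, the adjustment after the second step makes the remaining steps converge toward the minimum at zero.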

3. Challenges

In the IEEE value loop and at each of its stages, humans and technologies share a common goal of making optimal use of data from geosensing and social sensing, but they have different and changing roles. The following issues currently challenge a mutually beneficial collaboration between humans and technologies:

3.1. Growth mind-set

Computer technologies have been supporting us for decades. We are used to treating them as efficient surrogates of service providers with the aim of reaching out to a large number of service receivers. The value chain goes in one direction with a clear boundary between both sides. As technologies and human users reciprocally reach out to each other and increasingly merge with each other, a growth mind-set with continuous learning becomes indispensable for everybody who wants to remain relevant in the loop. Human users should not only know how to operate and keep pace with fast-progressing technologies as external aids but also learn how to internalize ubiquitously accessible sensory technologies as parts of our extended body, and combine them with human values to solve wicked problems. The mind-set transformation of humans is never trivial and may take time to realize and consolidate.

3.2. Large-scale semantic data integration

Large-scale integration of social sensing data with geosensing data remains a long-term interdisciplinary endeavor. There are a number of well-known dilemmas in data management. A data structure optimized for efficient storage is often difficult to query. An easily queryable dataset may require too much storage capacity. Moreover, a data structure that perfectly fits one target group or application does not work well for other target groups or applications. While trade-offs could be found for small geodata, no generally applicable semantic data structures are yet available for big social sensing data characterized by its highly complex, noisy, dynamic and only partly explicit interpersonal and subject–object relationships. The Sensor Web Enablement standards specified by the Open Geospatial Consortium (OGC) provide means to annotate sensors and their observations. However, these standards are not easily aligned with Semantic Web technologies. The introduction of the Web Ontology Language (OWL) as well as the Resource Description Framework (RDF) has marked the beginning of efforts to convert user-generated content on the Internet into sharable, reusable and meaningful data entries in standard formats. Google’s attempts to identify latent semantic relations behind queries and to map them in a Knowledge Graph represent noteworthy progress toward a Semantic Web that allows users to get not just links to relevant websites but facts as answers to queries. However, large-scale experiments with reliable results are not yet available. Most user-generated content on currently popular social media platforms is neither structured nor standardized, which makes it difficult to query and thus to include adequately in the input for deep learning algorithms.

3.3. User experiences of geovisual analytics

Geovisual analytics approaches have been rapidly developed on a parallel track to automatic semantic data integration. A large number of visual analytics platforms have emerged, showing data streams at different granularity levels with or without georeferences and during different stages of a value loop. They may serve one or more target groups for one or more purposes along a continuum from information dissemination to knowledge exploration. Each platform typically contains a multitude of more or less interlinked visualization types fitting various display sizes from pocket devices to powerwalls. Advanced technologies from computer graphics, game engineering and media technology have been quickly introduced into geovisual analytics, allowing for increased immersion, more intuitive interaction and a multivariate view of the geodata. Nevertheless, there is a difference between what is possible and what is usable. Systematic studies of user experiences and usability issues lag behind due to difficulties related to the identification of transferable real-world scenarios, the recruitment of representative subjects with large enough sample sizes, the determination of relevant indicators, and so on. Consequently, the design of geovisual analytics platforms remains more or less a blind practice with inadequate scientific guidance.

3.4. Ethical concerns

Two categories of ethical concerns are involved in the human and technology collaboration for the handling of a semantically enriched digital Earth. First, geosensing and social sensing that deal with georeferences and user-generated data at the finest possible granularity level will inevitably stir up sensitive issues related to privacy violation, copyright infringement, abuse, etc. In addition to continuous law enforcement, it is necessary for all participating individuals to be alert to conflicting interests of different stakeholders and to legal gaps in different cultural contexts, and to raise ethical awareness. Privacy protection is not only about the necessary self-protection but also about the protection of other people in social networks. Second, technologies, though originally shaped by humans, are increasingly shaping our views and behavior as well. In fact, technologies are changing the way we perceive our environments through Augmented Reality (AR) and are creating new environments in which we can immerse ourselves through Virtual Reality (VR). Additionally, they allow us to navigate and manipulate hard-to-reach parts of the physical world with drones. This reminds us of the famous saying by psychologist Abraham Maslow that if all you have is a hammer, everything looks like a nail. Technologies, once embedded in the value loop of integrated Earth digitalization, are no longer ethically neutral. The intentions of developers, biases in input data and parameter settings of automated routines may be propagated, amplified or reduced during the value-adding process. For example, every map lies more or less. Even if its designer intends to tell the truth more efficiently by using some white lies, how the map with its artistic symbolization and geometric distortions caused by the selected projection and generalization operations is interpreted by different users may well go beyond its designer’s control.
The situation is even more serious when technologies such as social bots are autonomously making decisions based on their interactions on social media platforms on behalf of themselves rather than their designers or users. A blind trust in the neutrality of technologies and what they produce regardless of the usage context may expose us to unforeseen risks. Therefore, human developers and users should take the responsibility to make technologies as controllable and understandable as possible and to keep the technological ethos within its constructive sphere of societal influence.

4. Concluding remarks

With the increasing convergence of geosensing and social sensing, humans and technologies have begun to collaborate as equal partners in an integrated Earth digitalization system. They are interwoven in the overall IEEE value loop of geodata and in each value stage, from informing, enabling and engaging to empowering. AI technologies, as part of the game-changing journey into the future, are evolving from sensing to cognition and becoming more human-like, but they are there to empower rather than replace humans. Researchers in geospatial information science, like their colleagues in other disciplines, will have more released capacity and flexibility to rethink their roles in the information loop, to learn more interdisciplinary skills alongside social scientists for a holistic understanding of the digital Earth including its semantic meanings, to initiate and keep pace with technological progress, and last but not least to take responsibility for the technological ethos and care more about the social impacts of intelligent geodata services.

Correction Statement

This article has been republished with minor changes. These changes do not impact the academic content of the article.

Acknowledgments

The figures quoted in this article come from research projects financed by Jiangsu Industrial Technology Research Institute (JITRI), Changshu Fengfan Power Equipment Co. Ltd., International Graduate School of Science and Engineering (IGSSE) at Technical University of Munich and China Scholarship Council.

Additional information

Notes on contributors

Liqiu Meng

Liqiu Meng is a professor of Cartography at the Technical University of Munich and a member of the German National Academy of Sciences. She is serving as Vice President of the International Cartographic Association. Her research interests include geodata integration, mobile map services, multimodal navigation algorithms, geovisual analytics, and ethical concerns in social sensing.

References

- Chen, R. Z., L. Wang, D. R. Li, L. Chen, and W. J. Fu. 2019a. “A Survey on the Fusion of the Navigation and the Remote Sensing Techniques.” Acta Geodaetica Et Cartographica Sinica 48 (12): 1507–1522. doi:10.11947/j.AGCS.2019.20190446.

- Chen, X., M. Chen, W. Shi, Y. Sun, and C. Zaniolo. 2019b. “Embedding Uncertain Knowledge Graphs.” Association for the Advancement of Artificial Intelligence. web.cs.ucla.edu/~yzsun/papers/2019_AAAI_UKG.pdf.

- Chuprikova, E. 2019. “Visualizing Uncertainty in Reasoning – A Bayesian Network-enabled Visual Analytics Approach for Geospatial Data.” Doctoral thesis, Technical University of Munich, mediatum.ub.tum.de/doc/1455497/1455497.pdf.

- Ding, L., G. Xiao, D. Calvanese, and L. Meng. 2019. “Consistency Assessment for Open Geodata Integration: An Ontology-based Approach.” Geoinformatica 36. doi:10.1007/s10707-019-00384-9.

- Janowicz, K., A. Haller, S. J. D. Cox, D. Le Phuoc, and M. Lefrançois. 2019. “SOSA: A Lightweight Ontology for Sensors, Observations, Samples, and Actuators.” Journal of Web Semantics 56: 1–10. doi:10.1016/j.websem.2018.06.003.

- Klippel, A., J. Zhao, K. L. Jackson, P. LaFemina, C. Stubbs, R. Wetzel, J. Blair, J. O. Wallgrün, and D. Oprean. 2019. “Transforming Earth Science Education through Immersive Experiences: Delivering on a Long Held Promise.” Journal of Educational Computing Research 57 (7): 1745–1771. doi:10.1177/0735633119854025.

- Krüger, R. L. 2017. “Visual Analytics of Human Mobility Behavior.” Doctoral thesis, University of Stuttgart, elib.uni-stuttgart.de/bitstream/11682/9733/3/dissertation_krueger_robert.pdf.

- Lyu, H. 2019. “Approaching a Collective Place Definition from Street-level Images Using Deep Learning Methods.” Doctoral thesis, Technical University of Munich, mediatum.ub.tum.de/doc/1464609/document.pdf, Chapter 5.

- Polous, K., J. Krisp, L. Meng, B. Shrestha, and J. Xiao. 2015. “OpenEventMap: A Volunteered Location-Based Service.” Cartographica: the International Journal for Geographic Information and Geovisualization 50 (4): 248–258. doi:10.3138/cart.50.4.3130.

- Wang, D., B. K. Szymanski, T. Abdelzaher, H. Ji, and L. Kaplan. 2019. “The Age of Social Sensing.” IEEE Computer 52 (1): 36–45. doi:10.1109/MC.2018.2890173.

- Woolley, S., S. Shorey, and P. Howard. 2018. “The Bot Proxy: Designing Automated Self Expression.” In A Networked Self and Platforms, Stories, Connections, edited by Z. Papacharissi, 59–76. New York: Routledge. Chapter 5.

- Yan, B., K. Janowicz, G. Mai, and S. Gao. 2017. “From ITDL to Place2Vec – Reasoning about Place Type Similarity and Relatedness by Learning Embeddings from Augmented Spatial Contexts.” Proceedings of the 25th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems. Redondo Beach, CA. doi:10.1145/3139958.3140054.

- Zuo, C., L. Ding, E. Bogucka, and L. Meng. 2019. “Map-based Dashboards versus Storytelling Maps.” The 15th International Conference on Location Based Services, Vienna, November 11–13. doi:10.34726/lbs2019.