ABSTRACT

A large number of publications have incorporated deep learning into remote sensing change detection. In these Deep Learning Change Detection (DLCD) publications, deep learning methods have demonstrated their superiority over conventional change detection methods. However, the theoretical underpinnings of why deep learning improves change detection performance remain unresolved. To date, few in-depth reviews have investigated the mechanisms of DLCD. Without such a review, five critical questions remain unanswered. Does DLCD provide improved information representation for change detection? If so, how? How should an appropriate DLCD method be selected, and why? How much does each type of change benefit from DLCD in terms of performance? What are the major limitations of existing DLCD methods, and what are the prospects for DLCD? To address these five questions, we adopted the following review strategies. We grouped the DLCD information assemblages into four unique dimensions of remote sensing: spectral, spatial, temporal, and multi-sensor. For each dimension, we compared how DLCD and conventional change detection methods extract information. We proposed a taxonomy that divides existing DLCD methods into two distinct pools, separate and coupled models, and thoroughly investigated and explicitly presented their advantages, limitations, applicability, and performance. We examined the performance differences between DLCD and conventional change detection. We described two limitations of DLCD: the training sample dilemma and the hardware and software dilemma. Based on these analyses, we identified directions for future development. Our review found that DLCD's advantages over conventional change detection can be attributed to three factors: improved information representation, improved change detection methods, and performance enhancements.
DLCD must still overcome limitations related to training samples and computing infrastructure. We expect this review to boost the development of deep learning in change detection applications.

1. Introduction

Deep learning is often treated as a black box; thus, the theoretical underpinnings of the deep learning mechanisms that improve change detection in remote sensing are poorly understood. In this paper, we argue that the performance improvements deep learning brings to change detection result from improved information representation and from more effective models than the statistical and machine learning methods more commonly deployed. A comparative review of the related literature shows that Deep Learning Change Detection (DLCD) provides semantic information neglected by other methods, as well as flexible network configurations that can improve change detection methods and performance. Together, semantic information, improved change detection methods, and performance enhancements lead to better change detection outcomes in remote sensing applications.

1.1. Background of change detection

Change detection aims to discern both subtle and abrupt alterations in a given area as manifested in remote sensing images acquired at different times (Singh Citation1989). It provides a means to study land use/cover change (Weng Citation2002), biodiversity (Newbold et al. Citation2015), the urbanization process (Han et al. Citation2017a), disasters (Saito et al. Citation2004), and other environmental changes. Conventionally, two types of change are examined (Lu et al. Citation2004): 1) binary changes and 2) from-to changes. Binary change detection only determines whether change has occurred at a location. From-to change detection not only detects variation over time but also identifies the specific type of change, e.g. from buildings to vegetation. More recently, a third type of change has been studied: multi-class changes, i.e. grouping the detected changes into different clusters regardless of land cover type (Saha, Bovolo, and Bruzzone Citation2019).

Substantial research has been carried out to recognize these three types of change. Change detection was first defined by Singh (Citation1989); more recently, Tewkesbury et al. (Citation2015) grouped change detection methods into six categories: 1) Layer arithmetic methods (Howarth, and Wickware Citation1981), 2) Post-Classification Change Methods (PCCMs) (Silván-Cárdenas, and Wang Citation2014; Yuan et al. Citation2005), 3) Direct Classification Methods (DCMs) (Bovolo, Bruzzone, and Marconcini Citation2008), 4) Transformation methods (Gong Citation1993), 5) Change Vector Analysis (CVA) methods (Chen et al. Citation2003; Johnson, and Kasischke Citation1998), and 6) Hybrid change detection methods (Healey et al. Citation2018; McDermid et al. Citation2008). Among these six categories, the layer arithmetic and transformation methods first obtain a Difference Image (DI) and then apply a threshold to the DI to separate changed from unchanged pixels, yielding binary changes. These methods are easy to implement but cannot detect from-to changes, because the information needed to identify change types is lost when the DI is computed.
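A minimal NumPy sketch of the layer arithmetic idea (difference image, then threshold) follows. It assumes co-registered, radiometrically comparable images of shape (rows, cols, bands); the band-averaged absolute difference and the mean + k × std threshold, with its parameter `k`, are illustrative choices made here, not a method taken from the cited studies.

```python
import numpy as np


def difference_image(img_t1, img_t2):
    """Layer arithmetic: absolute band-wise difference, averaged over bands.

    img_t1, img_t2: co-registered arrays of shape (rows, cols, bands).
    Returns a single-layer DI of shape (rows, cols).
    """
    diff = np.abs(img_t2.astype(float) - img_t1.astype(float))
    return diff.mean(axis=-1)


def threshold_di(di, k=2.0):
    """Binary change map: a pixel is 'changed' if its DI exceeds mean + k * std.

    The mean + k * std rule is an assumed, simple threshold; Otsu or
    expectation-maximization thresholds are common alternatives.
    """
    threshold = di.mean() + k * di.std()
    return di > threshold


# Toy example: a 2 x 2 block changes by 100 in every band.
t1 = np.zeros((10, 10, 3))
t2 = t1.copy()
t2[2:4, 2:4, :] = 100.0
change_map = threshold_di(difference_image(t1, t2))
print(change_map.sum())  # 4
```

Because every pixel is collapsed to a single DI value, the result only indicates whether a pixel changed, not what it changed from or to.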

Unlike these two categories, PCCMs and DCMs both classify multi-date images to detect changes. The difference between them is that PCCMs conduct change analysis after independently classifying each image, whereas DCMs directly classify stacked features obtained from the multi-date images. As a result, PCCMs can be used with multi-sensor images and can detect both binary and from-to changes. However, errors in the individual classification maps are compounded in the final change map, reducing the accuracy of the change detection result (Chan, Chan, and Yeh Citation2001; Dai, and Khorram Citation1999; Lillesand, Kiefer, and Chipman Citation2015). DCMs, on the other hand, overcome the error propagation problem but require training samples for all change types. CVA was developed to mitigate both the error propagation problem and the sampling difficulty. CVA constructs change vectors and uses their magnitudes and directions to detect changes. This method can detect binary changes, but its ability to detect from-to changes is limited (Tewkesbury et al. Citation2015). Hybrid change detection methods emerged to combine the merits of the other five categories; however, they also inherit the weaknesses of the methods they combine.
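The magnitude-and-direction logic of CVA can be sketched as follows. This is an illustration under simplifying assumptions: the function name `change_vector_analysis` is hypothetical, and the direction is computed only in the plane of the first two bands, whereas CVA in general characterizes direction across all bands.

```python
import numpy as np


def change_vector_analysis(img_t1, img_t2):
    """Return change magnitude and direction for every pixel.

    img_t1, img_t2: arrays of shape (rows, cols, bands) with bands >= 2.
    Direction is measured in the plane of the first two bands only
    (an assumption that keeps the sketch simple).
    """
    delta = img_t2.astype(float) - img_t1.astype(float)   # change vectors
    magnitude = np.linalg.norm(delta, axis=-1)            # how much change
    direction = np.degrees(np.arctan2(delta[..., 1], delta[..., 0]))  # which way
    return magnitude, direction


t1 = np.zeros((2, 2, 2))
t2 = t1.copy()
t2[0, 0] = [3.0, 4.0]    # strong change
t2[1, 1] = [-1.0, 0.0]   # weak change
mag, ang = change_vector_analysis(t1, t2)
binary_change = mag > 2.0   # magnitude threshold -> binary changes
print(mag[0, 0], round(ang[0, 0], 2), binary_change.sum())  # 5.0 53.13 1
```

Thresholding the magnitude yields binary changes; mapping directions to specific from-to classes would require additional knowledge, which is one reason CVA is limited for from-to change detection.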

The big data era has further complicated the problems associated with conventional change detection methods (Reichstein et al. Citation2019). This manifests in three ways. 1) The data volume for change detection is unprecedentedly large and expanding quickly, which compounds the weaknesses above. For example, errors propagate further as more data layers are involved, the required number of training samples escalates, and from-to changes cannot be easily uncovered from time series data. 2) The data sources for change detection are more diverse than ever. Contemporary data span a wide range of platforms (satellite and aerial), sensors (passive and active), spatial resolutions (250 m to sub-meter), and spectral resolutions (multispectral and hyperspectral). This diversity leads to inconsistent information representation. Moreover, hyperspectral imagery unavoidably suffers from spectral variability (Hong et al. Citation2018), which leads to inaccurate change detection results. 3) The data tend to suffer from various degradations, noise effects, and variabilities during imaging due to atmospheric conditions, illumination, viewing angles, soil moisture, etc. These factors are random and difficult to account for precisely in traditional change detection methods. Addressing these three issues has therefore become a pressing challenge for applying change detection to big remote sensing data.

1.2. Deep learning methods used in change detection

The evolution of deep learning has demonstrated immense potential for addressing various change detection challenges in remote sensing. Deep learning is a particular kind of machine learning method based on artificial neural networks with representation learning (LeCun, Bengio, and Hinton Citation2015). Compared with other machine learning methods, deep learning models achieve great power and flexibility by representing the world as a nested hierarchy of concepts, with each concept defined in relation to simpler concepts and more abstract representations computed in terms of less abstract ones (LeCun, Bengio, and Hinton Citation2015).

To date, deep learning has been used extensively in the remote sensing field (Ma et al. Citation2019a), for example in image fusion (Shao, and Cai Citation2018), image registration (Wang et al. Citation2018b), image matching (Chen, Rottensteiner, and Heipke Citation2021; Zhang et al. Citation2020; Cheng et al. Citation2020), change detection (Zhu et al. Citation2018), land use and cover classification (Hong et al. Citation2021, Citation2020b, Citation2020a; Li et al. Citation2022; Zhou et al. Citation2022), semantic segmentation (Kemker, Salvaggio, and Kanan Citation2018), and object-based image analysis (Liu, Yang, and Lunga Citation2021). A new sub-field, known as DLCD, has emerged in which deep learning methods are employed to detect changes in multi-date remote sensing images.

Compared to other topics in remote sensing, DLCD is still in its infancy. Similar to Ma et al. (Citation2019a), we searched the Scopus database for all published papers involving DLCD using the query “deep learning AND change detection”. Altogether, 80 publications between 2013 and 2019, including peer-reviewed articles and conference papers, document the early-stage development of DLCD applications.

The DLCD literature is smaller than that on scene classification, object detection, land use and cover classification, and semantic segmentation because DLCD faces additional challenges. While segmentation and classification tasks work on images from a single time point, change detection works simultaneously on images from multiple time points. Therefore, the problems and limitations (e.g. noise) associated with extracting information from single-date images are multiplied in DLCD. Furthermore, multi-date images may express information differently (e.g. inconsistent radiometric and geometric information, different spatial resolutions, and different sensors), which makes DLCD even more difficult.

Another particular difficulty in DLCD is that changed areas usually make up only a small part of the images. While segmentation and classification tasks extract the bulk of the relevant information, change detection aims to find the minority of pixels that have changed. Because changes generally affect only a limited share of pixels (<50%), change information is easily confused with noise. Nevertheless, the number of DLCD publications is increasing rapidly, driven by the demand from change detection applications.

Among these studies, the most popular Deep Learning Neural Networks (DLNNs) (i.e. deep learning models) used in change detection are Convolutional Neural Networks (CNNs), Auto-Encoders (AEs), Deep Belief Networks (DBNs), Recurrent Neural Networks (RNNs), and Generative Adversarial Networks (GANs). Each type of DLNN may include multiple specific models; for example, CNNs can be implemented using ResNet, AlexNet, DenseNet, etc. Discussing the structures of these specific models is beyond the scope of this review, so they are not included. For each type of DLNN, Table 1 lists the first work that proposed it (deep learning reference) and the first work that used it in change detection (DLCD reference). Readers seeking further detail on the structure of specific DLNNs or how they are used in change detection are referred to these publications.

Table 1. The first proposed reference and DLCD reference for each DLNN.

1.3. Unsolved problems in DLCD reviews

Until now, most existing reviews of deep learning have been general reviews concerning algorithm development and remote sensing applications (Zhang, Zhang, and Du Citation2016; LeCun, Bengio, and Hinton Citation2015; Ball, Anderson, and Chan Citation2018; Ma et al. Citation2019a; Yuan et al. Citation2020; Zhu et al. Citation2017). Zhu et al. (Citation2017) and Ma et al. (Citation2019a) reviewed the applications and technology of deep learning in remote sensing, from preprocessing to mapping. Yuan et al. (Citation2020) compared the use of traditional neural networks and deep learning methods to advance environmental remote sensing. Two review papers have focused specifically on DLCD, providing technical reviews of advances in deep learning for change detection (Shi et al. Citation2020; Khelifi, and Mignotte Citation2020). These two reviews take the perspectives of implementation process, technical methods, and data sources, and provide a good starting point for beginners to understand DLCD. However, the theoretical underpinnings of why deep learning improves change detection performance compared with conventional change detection remain unresolved. Such knowledge could enable refinements of existing deep learning methods and thus help overcome current limitations such as data pollution and opaque network configurations. Specifically, to open the black box of DLCD, the following questions must be investigated.

1) Does DLCD provide improved information representation for change detection? If so, how?

The first step in DLCD is data input. In general, a typical DLCD study engages four types of input: spectral, spatial, temporal, and multi-source information. It is critical to understand whether DLCD represents these four types of information more powerfully than conventional change detection methods and, if so, how. Without such understanding, it is difficult to refine DLCD methods further to achieve the best tradeoff between maximizing the authentic information carried over from the input and suppressing noise.

2) How to select an appropriate DLCD method and why?

Methodology plays an important role in change detection, and the introduction of deep learning network architectures opens up a new avenue for it. Since 2013, a variety of DLCD methods have emerged. Each DLCD method has its own combination of network layers, training sample requirements, and applicability, which result in different performance. A systematic comparison of the advantages, disadvantages, and performance of DLCD methods would provide methodological guidance for future change detection applications. However, no such comparison is available, which makes it difficult to select DLCD methods suited to actual applications.

3) How much does each type of change benefit from DLCD in terms of performance?

There are three types of change detection results: binary changes, multiclass changes, and from-to changes. The complexity of information processing increases from binary to from-to change detection. As a result, the performance improvement from conventional change detection to DLCD differs among these three types of change, and the difference varies further by application. However, this difference has not been reviewed systematically. Because deep learning carries a larger computational burden, a better understanding of the difference can help weigh the tradeoff between accuracy and computational burden when choosing between deep learning and conventional methods in different change detection applications.

4) What are the major limitations of existing DLCD methods?

Although deep learning greatly benefits change detection, it also introduces two major limitations: the training sample dilemma and the hardware and software dilemma. The excellent performance of typical deep learning models relies on extremely large labeled training sets (Gong et al. Citation2019b; Wang et al. Citation2018a), but geospatial systems usually have particularly limited labeled training samples available (Ball, Anderson, and Chan Citation2018; De et al. Citation2017; Reichstein et al. Citation2019), especially for change detection. Deep learning also places high demands on computational power in hardware and software. Both pose major challenges for DLCD, yet no research has examined them systematically. This gap may become a major hurdle to further applying deep learning in change detection.

5) What are the prospects for DLCD?

Based on the analysis of the benefits and limitations of DLCD, we can outline its prospects. Although some DLCD studies discuss future directions, they tend to focus on the disadvantages of the methods themselves or on implementation processes, so no overview of future research directions grounded in the theoretical underpinnings exists. This paper addresses this gap.

1.4. Structure of this review

To answer these five questions, this review makes five major contributions:

We compare how spectral, spatial, temporal, and multi-sensor information is represented in DLCD and in conventional change detection methods.

We propose a taxonomy that divides DLCD methods into two distinct pools, separate and coupled DLNNs, and use it to analyze their advantages, limitations, applicability, and performance in depth.

We compare the overall accuracy of DLCD and conventional change detection methods, using box plots to analyze four major land-use types (Urban, Water, Vegetation, and Hazards) for the three primary change types (binary, multiclass, and from-to).

We discuss two limitations of DLCD: the training sample dilemma and the hardware and software dilemma.

We identify four directions for future development.

2. Improved information representation

The success of change detection depends on maximizing the detection of real change. Although both conventional change detection and DLCD aim to achieve this goal, the two groups of methods differ in execution: DLCD is optimized for representing spectral, spatial, temporal, and multi-sensor information. In this section, we compare how DLCD extracts each kind of information as opposed to conventional change detection methods.

2.1. Spectral information

DLCD is optimized for representing abstract spectral information (such as the shape of the spectral curve) compared with conventional change detection methods. Traditional thresholding-based, transformation, spectral analysis, and classification-based methods (Liu et al. Citation2014) can extract low-dimensional spectral information (such as the mean value (R, G, B), the standard deviation (R, G, B), the brightness, and the maximum difference). However, they neglect abstract spectral information, resulting in inaccurate recognition of complex land use and land cover changes.

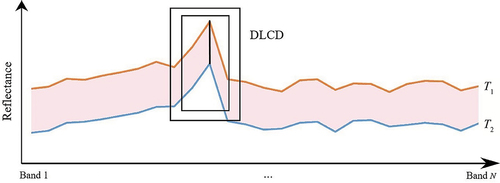

DLCD adopts a different strategy, discerning real changes based on multi-scale spectral information differences, as illustrated in the figure above, which outlines a basic scenario of change detection based on spectral information. T1 and T2 represent the reflectance at two different times (i.e. before and after the change). The two rectangular boxes represent the multi-scale spectral information difference between T1 and T2. Typical change detection detects changes based on the spectral information difference between the T1 and T2 reflectance curves.

In the figure, one example of such a multi-scale spectral information difference can be understood as averaging the original spectral curves (T1 and T2) over different numbers of spectral bands. Once the averaged curves are derived, the difference between T1 and T2 is calculated. The changes at the different scales are then accumulated before an overall conclusion of change is derived. Technically, deep learning implements such multi-scale integration using a large number of neurons and a complex network structure. The advantages of deep learning become more prominent as the number of spectral bands increases. DLCD methods can directly handle high-dimensional spectral information and learn the available multi-scale spectral information more effectively. This information includes detailed spectral information at low-level scales and semantic information at high-level scales (Wang et al. Citation2019; Li, Yuan, and Wang Citation2019).
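The band-averaging example can be made concrete with a hand-crafted sketch. It is an assumption-laden illustration, not a DLNN: the fixed scales (1, 2, and 4 bands), the non-overlapping windows, and the equal weighting of scales are choices made here, whereas a DLCD network learns how to weight and combine information at each scale.

```python
import numpy as np


def multiscale_spectral_difference(spec_t1, spec_t2, scales=(1, 2, 4)):
    """Average |T1 - T2| over spectral curves smoothed at several band scales.

    At scale w, adjacent bands are averaged in non-overlapping windows of w
    bands (trailing bands that do not fill a window are dropped), the two
    averaged curves are differenced, and the per-scale differences are
    accumulated into one change score. Every w must not exceed the band count.
    """
    s1 = np.asarray(spec_t1, dtype=float)
    s2 = np.asarray(spec_t2, dtype=float)
    per_scale = []
    for w in scales:
        n = (len(s1) // w) * w                      # bands usable at this scale
        avg1 = s1[:n].reshape(-1, w).mean(axis=1)   # coarser T1 curve
        avg2 = s2[:n].reshape(-1, w).mean(axis=1)   # coarser T2 curve
        per_scale.append(np.abs(avg1 - avg2).mean())
    return float(np.mean(per_scale)), per_scale


# An oscillating difference is visible at the finest scale but averages out
# at coarser scales, so each scale contributes different evidence.
t1 = np.zeros(8)
t2 = np.array([1, -1] * 4, dtype=float)
score, parts = multiscale_spectral_difference(t1, t2)
print(round(score, 4))  # 0.3333
```

In this toy case the difference appears only at the finest scale, which illustrates why accumulating evidence across several scales is more informative than relying on a single one.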

2.2. Spatial information

DLCD is optimized for multi-scale spatial information extraction compared with conventional change detection methods. This spatial information includes texture and location information at low scales and semantic information at high scales. The most commonly used conventional methods for extracting spatial information are object-based (Zhou, Yu, and Qin Citation2014; Chehata et al. Citation2014; Tang, Huang, and Zhang Citation2013) and can extract multi-scale, hierarchical spatial features. However, their extraction accuracy depends heavily on the accuracy of the initial segmentation (Cao et al. Citation2014; Tewkesbury et al. Citation2015; Hussain et al. Citation2013). Another common approach extracts spatial features within a fixed, limited window, such as the gray level co-occurrence matrix (Murray, Lucieer, and Williams Citation2010). As a result, the extracted spatial features cover a limited range of scales, are neither complete nor systematic, and are not robust enough to detect complex urban land use and land cover changes.

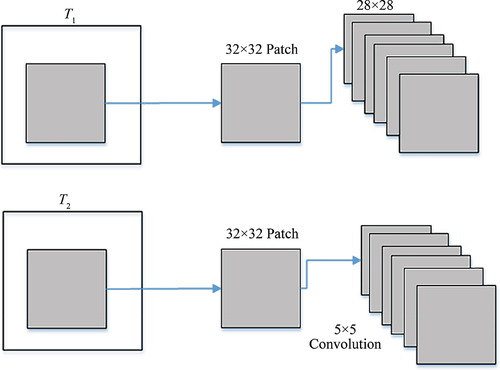

In contrast to conventional change detection methods, DLCD uses multi-scale spatial information differences to discern real changes. The figure above outlines an example of change detection based on spatial information. A typical change detection approach detects changes based on the spatial information difference between T1 and T2.

In the figure, one example of such a multi-scale spatial information difference can be understood as extracting the spatial features of T1 and T2 using a CNN. The CNN uses convolution kernels of different sizes (e.g. 5 × 5) to extract spatial features from a 32 × 32 patch in T1 and T2. Once the spatial features are derived, the spatial information difference between T1 and T2 can be calculated. The changes at the different scales are accumulated before an overall change determination is derived. Technically, such multi-scale integration is implemented using multi-layer convolutions. Unlike conventional change detection methods, DLCD can extract multi-scale spatial information automatically (Amit, and Aoki Citation2017; El Amin, Liu, and Wang Citation2016; Khan et al. Citation2017).
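The following sketch mimics the multi-scale spatial comparison for a 32 × 32 patch. It is a simplified assumption, not a trained CNN: the kernels are random (seeded) stand-ins for learned weights, kernels of three sizes are applied in parallel rather than through stacked layers, and each feature map is reduced by global average pooling.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_valid(patch, kernel):
    """'Valid' 2-D cross-correlation, the operation CNN layers compute."""
    windows = sliding_window_view(patch, kernel.shape)   # (H-k+1, W-k+1, k, k)
    return np.einsum("ijkl,kl->ij", windows, kernel)


def multiscale_features(patch, kernels):
    """ReLU feature maps at several kernel sizes, each globally average-pooled."""
    return np.array([np.maximum(conv2d_valid(patch, k), 0.0).mean() for k in kernels])


def spatial_change_score(patch_t1, patch_t2, kernels):
    """Euclidean distance between the multi-scale feature vectors of T1 and T2."""
    f1 = multiscale_features(patch_t1, kernels)
    f2 = multiscale_features(patch_t2, kernels)
    return float(np.linalg.norm(f1 - f2))


rng = np.random.default_rng(0)
# Hypothetical random kernels stand in for learned weights (3x3, 5x5, 7x7).
kernels = [rng.standard_normal((s, s)) for s in (3, 5, 7)]

t1 = np.zeros((32, 32))
t2 = t1.copy()
t2[10:20, 10:20] = 1.0          # a new 10 x 10 object appears at T2
print(spatial_change_score(t1, t1, kernels), spatial_change_score(t1, t2, kernels) > 0)  # 0.0 True
```

In a real DLCD network the kernels are learned from training data and deeper layers see progressively larger receptive fields, which is how multi-layer convolutions produce multi-scale features.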

2.3. Temporal information

DLCD is optimized for extracting nonlinear temporal information compared with conventional change detection methods. Conventionally, time-series change detection uses statistical metrics such as individual ranks, means, and regression slopes of spectral bands and vegetation indices as input (Shao, and Liu Citation2014). These metrics are generally calculated from either time-sequential reflectance or reflectance rank (Zhu Citation2017; Shao et al. Citation2019a). However, such methods depict continuous temporal information with a limited set of linear statistical features and neglect other types of temporal information.
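To show what limited, linear statistical features can miss, the sketch below computes two of the conventional metrics mentioned above, the mean and the regression slope, for two hypothetical pixel time series. The series values and the function name `linear_temporal_metrics` are illustrative assumptions.

```python
import numpy as np


def linear_temporal_metrics(series):
    """Conventional per-pixel time-series features: mean and OLS slope."""
    y = np.asarray(series, dtype=float)
    t = np.arange(len(y))
    slope = np.polyfit(t, y, deg=1)[0]   # first coefficient is the slope
    return np.array([y.mean(), slope])


# Two hypothetical pixels: a stable one and one that rises and falls back
# (for example a temporary disturbance followed by recovery).
stable = [1.0, 1.0, 1.0, 1.0]
rise_and_fall = [0.0, 2.0, 2.0, 0.0]
features_stable = linear_temporal_metrics(stable)
features_rise = linear_temporal_metrics(rise_and_fall)
print(np.allclose(features_stable, features_rise))  # True
```

The two pixels receive identical features even though one of them clearly changed and recovered, which is the kind of temporal information these metrics neglect.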

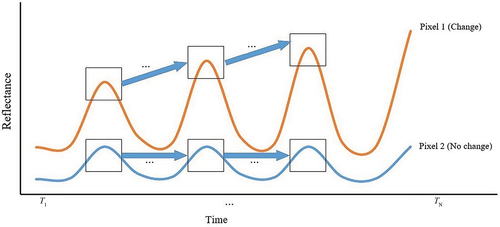

DLCD adopts a recurrent method (Section 3.2.3) to discern real changes based on differences in time-series curves, as illustrated in the figure above, which outlines a basic scenario of change detection based on temporal information. The blue curve represents Pixel 1 (change) and the yellow curve represents Pixel 2 (no change). A typical time-series change detection approach detects changes based on the differences in temporal features extracted from the time-series curves over T1, T2, … , TN for different pixels.

In the figure, for Pixel 1 and Pixel 2, the reflectance values {X1, X2, … , XN} at T1, T2, … , TN are the input of the recurrent method. The recurrent method learns temporal information by building recurrent connections between T1, T2, … , TN. Thus, the output feature at TN depends on the input XN at TN and on the feature information at TN-1. Once the temporal features at TN for Pixels 1 and 2 are derived, the feature difference between Pixel 1 and Pixel 2 is calculated, from which an overall conclusion of change can be derived. Technically, such a recurrent method is implemented using recurrent connections between the neural activations of an RNN at consecutive time steps. In contrast to traditional change detection methods, DLCD can automatically learn detailed and nonlinear temporal information, supporting the detection of complex urban land-use change (Lyu, Lu, and Mou Citation2016; Mou, Bruzzone, and Zhu Citation2018) and vegetation phenological patterns (Song et al. Citation2018). The advantages of DLCD become more pronounced as the time series grows longer.
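The recurrence described above, where the feature at TN depends on XN and on the feature at TN-1, can be sketched as a minimal Elman RNN forward pass. The weights here are random (seeded) placeholders for trained weights, the hidden size of 8 is arbitrary, and the function name `rnn_final_state` is hypothetical; the Euclidean distance between final states stands in for the feature difference between Pixel 1 and Pixel 2.

```python
import numpy as np


def rnn_final_state(series, W_x, W_h, b):
    """Elman RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b); returns h_N."""
    h = np.zeros(W_h.shape[0])                 # h_0 = 0
    for x_t in np.asarray(series, dtype=float).reshape(len(series), -1):
        h = np.tanh(W_x @ x_t + W_h @ h + b)   # state at T_t depends on x_t and h_{t-1}
    return h


rng = np.random.default_rng(42)
hidden, n_bands = 8, 1
# Hypothetical random weights stand in for weights learned during training.
W_x = rng.standard_normal((hidden, n_bands))
W_h = 0.5 * rng.standard_normal((hidden, hidden))
b = np.zeros(hidden)

pixel_1 = [0.0, 2.0, 2.0, 0.0]   # rises and falls back (change)
pixel_2 = [1.0, 1.0, 1.0, 1.0]   # stable (no change)
distance = np.linalg.norm(rnn_final_state(pixel_1, W_x, W_h, b) - rnn_final_state(pixel_2, W_x, W_h, b))
print(distance > 0)  # True
```

Unlike a mean or a slope, the final state depends on the order and shape of the whole sequence, because each step feeds the previous state through a nonlinearity.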

2.4. Multi-sensor information (multi-source/multi-spatial)

DLCD is optimized for extracting multi-scale features compared with conventional change detection methods. Change detection methods for multi-sensor images can be divided into two categories: 1) comparative analysis of independently produced classifications for different sensors and 2) simultaneous analysis of multi-temporal data (Singh Citation1989; Zhang et al. Citation2019). The former classifies the images from each sensor separately and then analyzes the changes. DLCD also includes this type of method, i.e. the post-classification change method (Section 3.1.1), which extracts high-dimensional feature representations in place of limited, low-level features (Iino et al. Citation2018; Nemoto et al. Citation2017). This method achieves higher accuracy than traditional post-classification methods (Iino et al. Citation2018; Nemoto et al. Citation2017).

Methods for the simultaneous analysis of multi-temporal data include copula theory (Mercier, Moser, and Serpico Citation2008), a manifold learning strategy (Prendes et al. Citation2015b), kernel canonical correlation analysis (Volpi, Camps-Valls, and Tuia Citation2015), and a Bayesian nonparametric model associated with a Markov random field (Prendes et al. Citation2015a). However, these methods use a limited set of features to find the relationship between unchanged areas in multi-sensor remote sensing images, and they do not consider the influence of changed areas when identifying this relationship.

DLCD adopts a mapping transformation method (Section 3.2.2) to discern authentic changes based on the differences between the sensor information at T1 and T2. A typical multi-sensor change detection task is to detect changes between T1 and T2 images acquired by different sensors; the accompanying figure outlines an example of how DLCD uses such multi-sensor (multi-source and multi-spatial) information.

In the figure, one example of such a multi-sensor information difference can be understood as detecting changes between Landsat (T1) and Sentinel-1 (T2) images. The mapping transformation method either transforms the T1 and T2 image information into a multi-scale feature space to obtain features similar to those of the other image, or finds the relationship between the two images' features. Once the similar features or the relationships between features are derived, the difference between T1 and T2 can be calculated and an overall determination of change derived. Technically, the mapping transformation method is implemented by building transformation neural networks. Unlike traditional change detection methods, DLCD learns multi-scale features and transforms inconsistent information into consistent information. In addition, it can impose a constraint that enlarges the distance of changed pixels, which helps find more accurate relationships for the information transformation.
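The idea of learning a mapping between sensors from unchanged areas, while limiting the influence of changed areas, can be sketched with a deliberately simpler model. In this sketch a linear least-squares map replaces the transformation neural network, the iterative exclusion of the highest-residual pixels (`drop_frac`) is an assumed heuristic, and the "optical" and "SAR" features are synthetic toy data, not Landsat or Sentinel-1 measurements.

```python
import numpy as np


def mapping_change_scores(feat_a, feat_b, n_iter=2, drop_frac=0.1):
    """Map sensor-A features onto sensor-B features and score change by residual.

    feat_a: (N, d_a) features from the T1 sensor; feat_b: (N, d_b) from the T2
    sensor. A linear least-squares map (with bias) is a stand-in for a
    transformation neural network. After each fit, the pixels with the largest
    residuals (likely changed) are excluded so that changed areas do not
    distort the mapping learned from unchanged areas.
    """
    n = feat_a.shape[0]
    X = np.hstack([feat_a, np.ones((n, 1))])     # add bias column
    keep = np.ones(n, dtype=bool)                # start by trusting all pixels
    for _ in range(n_iter):
        W = np.linalg.lstsq(X[keep], feat_b[keep], rcond=None)[0]
        residual = np.linalg.norm(X @ W - feat_b, axis=1)   # per-pixel misfit
        keep = residual <= np.quantile(residual, 1.0 - drop_frac)
    return residual


rng = np.random.default_rng(1)
feat_a = rng.standard_normal((100, 3))           # synthetic "optical" features at T1
M = rng.standard_normal((3, 2))
feat_b = feat_a @ M + 0.5                        # synthetic "SAR" features at T2 (no change)
changed = np.array([3, 17, 42, 64, 88])
feat_b[changed] += 10.0                          # simulate real change at 5 pixels
scores = mapping_change_scores(feat_a, feat_b)
print(sorted(np.argsort(scores)[-5:].tolist()))
```

Excluding the worst-fitting pixels after the first fit lets the second fit learn the mapping from unchanged pixels only, so the changed pixels stand out clearly in the residuals.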

3. Improved change detection methods

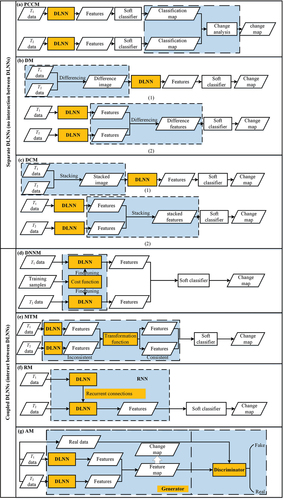

In addition to improving information representation, DLCD improves change detection methods. To provide methodological guidance for future applications, we present a taxonomy of these methods and systematically compare their advantages, limitations, applicability, and performance. At the first level, DLCD methods are divided into two categories: separate and coupled DLNNs. At the second level, there are seven sub-categories. Separate DLNNs contain three sub-categories: PCCM, Differencing Method (DM), and DCM. Coupled DLNNs contain four sub-categories: Differencing Neural Network Method (DNNM), Mapping Transformation Method (MTM), Recurrent Method (RM), and Adversarial Method (AM). Figure 6 shows a diagram of separate and coupled DLNNs, with a framework for each sub-category.

Figure 6. The structure of DLCD methods. Yellow boxes represent DLNNs while blue boxes denote the interaction of multi-date information. (a) Post-classification change method (PCCM). (b) Differencing method (DM). (c) Direct classification method (DCM). (d) Differencing neural network method (DNNM). (e) Mapping transformation method (MTM). (f) Recurrent method (RM). (g) Adversarial method (AM).

As Figure 6 shows, change detection requires interaction between multi-date information. The main difference between separate and coupled DLNNs lies in the relationship between the DLNNs (yellow boxes) and the interaction (blue boxes). In separate DLNNs, DLNNs generate deep features before or after the interaction between multi-date image information, but the DLNNs do not interact directly. In coupled DLNNs, by contrast, the multi-date interaction occurs between the DLNNs. Table 2 summarizes the taxonomy of DLCD methods, giving the difference, definition, advantages, limitations, and application examples for each type.

Table 2. The taxonomy, difference, definition, advantages, limitations, and applications of DLCD methods.

As Table 2 indicates, separate DLNNs are adapted from conventional change detection methods by refining image features with DLNNs, and they share the same principles as the corresponding conventional methods. Coupled DLNNs, on the other hand, are novel strategies in which multiple DLNNs interact to maximize change information. Unlike separate DLNNs, coupled DLNNs require more knowledge of deep learning and cannot be directly compared with conventional change detection methods. Each sub-category is discussed in more detail in the following sections.

3.1. Separate DLNNs

In this sub-section, we introduce the three types of separate DLNNs (Figure 6(a)-(c)), which do not allow DLNNs to interact but only use them to extract deep features. They are adapted from traditional PCCM, layer arithmetic and transformation, and traditional DCM, respectively.

3.1.1. Post-classification change method

PCCM compares classification maps from different times to identify changes between them. PCCM involves three steps (Figure 6(a)). First, feature learning: the two images are input into separate DLNNs to obtain a deep feature representation of each image. Second, classification: the deep features are classified separately to obtain two classification maps. Third, change analysis: the two classification maps are compared to obtain the final change map. The difference between this method and traditional PCCM is that feature learning is performed by a deep learning model (Nemoto et al. Citation2017; Iino et al. Citation2018).
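The change analysis step can be sketched once two classification maps exist. The sketch assumes integer class maps of equal shape, for example produced by classifying the deep features of each date; the function name `post_classification_change` and the class labels in the example are hypothetical.

```python
import numpy as np


def post_classification_change(map_t1, map_t2, n_classes):
    """Change analysis step of PCCM.

    map_t1, map_t2: integer class maps (same shape) produced independently,
    e.g. by classifying deep features of each date.
    Returns the binary change map and an n_classes x n_classes from-to matrix
    whose entry [i, j] counts pixels that went from class i to class j.
    """
    binary = map_t1 != map_t2
    from_to = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(from_to, (map_t1.ravel(), map_t2.ravel()), 1)   # unbuffered counting
    return binary, from_to


# Hypothetical classes: 0 = vegetation, 1 = building, 2 = water.
t1_map = np.array([[0, 0], [1, 2]])
t2_map = np.array([[0, 1], [1, 0]])
binary, from_to = post_classification_change(t1_map, t2_map, n_classes=3)
print(binary.sum(), from_to[0, 1], from_to[2, 0])   # 2 1 1
```

Off-diagonal entries of the from-to matrix give the complete from-to changes. The sketch also hints at error accumulation: as a rough rule of thumb, if each map were 90% accurate with independent errors, only about 0.9 × 0.9 = 81% of pixels would be correctly labeled on both dates.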

PCCM is easy to implement because the DLNN is used only for feature learning, so little effort is required to configure the DLNN structure. Another advantage of PCCM is that it works on multi-sensor images and does not require radiometric normalization, because the changes are detected from two independent classification maps. In addition, PCCM can detect complete from-to changes. Nevertheless, as in traditional PCCM, errors from the two classification maps can accumulate in the final change detection map. To date, this method has only been used to detect changes in urban land use (Nemoto et al. Citation2017) ().

3.1.2. Differencing method

DM detects change by comparing differences in image radiance (Li et al. Citation2017; Liu et al. Citation2016b; Xiao et al. Citation2018) or in deep features (Gong et al. Citation2017b; Su et al. Citation2016; Xu et al. Citation2013); the changes are then detected with a supervised or unsupervised deep learning technique. If differences in image radiance are used, we refer to the method as image DM. Image DM has three steps ()-(1)). First, differencing: a DI is obtained. Second, feature learning: DLNNs are built to extract deep features from this DI. Third, classification: the deep features are input into a soft classifier to obtain the final change map. If differences in deep features are used, we refer to the method as feature DM. Feature DM also has three steps ()-(2)). First, feature learning: the two images are input into two DLNNs to obtain deep features. Second, differencing: difference features are obtained. Third, classification: the difference features are input into a classifier to obtain the final change detection result.
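The two DM variants differ only in where the differencing happens relative to feature learning. The sketch below is a minimal illustration under stated assumptions: a fixed per-pixel `tanh` projection (`PROJ`, hypothetical) stands in for a trained DLNN, and a threshold on the feature norm stands in for the soft classifier. Neither stand-in is taken from the cited works; only the ordering of the three steps follows the text.

```python
import numpy as np

# Hypothetical stand-in for trained DLNN weights: 4 bands -> 8 features.
PROJ = np.linspace(-1.0, 1.0, 32).reshape(4, 8)

def extract_features(x):
    """Stand-in DLNN: per-pixel linear projection followed by tanh.
    x has shape (H, W, bands); the result has shape (H, W, 8)."""
    return np.tanh(x @ PROJ)

def classify(features, threshold):
    """Stand-in for a soft classifier: threshold the per-pixel feature norm."""
    return np.linalg.norm(features, axis=-1) > threshold

def image_dm(img_t1, img_t2, threshold):
    di = img_t2 - img_t1                  # 1) differencing: difference image (DI)
    feats = extract_features(di)          # 2) feature learning on the DI
    return classify(feats, threshold)     # 3) classification

def feature_dm(img_t1, img_t2, threshold):
    f1 = extract_features(img_t1)         # 1) feature learning on each date
    f2 = extract_features(img_t2)
    diff = f2 - f1                        # 2) differencing in feature space
    return classify(diff, threshold)      # 3) classification
```

For an unchanged pixel both variants produce a zero difference and hence no change. For changed pixels the outputs differ in general, because the nonlinearity is applied after differencing in image DM and before it in feature DM.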

The key step in DM is differencing, which aims to suppress unchanged information and highlight changed information. Although only a limited number of techniques have so far been used to obtain DIs or difference features in DLCD, many more are available. As in traditional layer arithmetic, operations such as subtraction (Arabi, Karoui, and Djerriri Citation2018), log-ratioing (Gong, Yang, and Zhang Citation2017), and log-mean ratioing (Li et al. Citation2017) can be applied. Transformation techniques such as Principal Component Analysis (PCA) can serve a similar purpose. For example, El Amin, Liu, and Wang (Citation2017) applied PCA to stacked high-resolution images to identify changed features.
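The differencing operators named above can be sketched for single-band images as follows. This is an illustration under assumptions: the constant `EPS`, the 3 x 3 window, and the log-mean-ratio form used here (the log of the ratio of local means) are our choices and may differ from the exact formulations in the cited studies. Ratio-based operators are popular for SAR data because speckle noise is multiplicative, and the logarithm turns it into additive noise.

```python
import numpy as np

EPS = 1e-6  # guards against log(0) and division by zero (illustrative value)

def subtract_di(x1, x2):
    """Absolute difference image."""
    return np.abs(x2 - x1)

def log_ratio_di(x1, x2):
    """Absolute log-ratio difference image."""
    return np.abs(np.log((x2 + EPS) / (x1 + EPS)))

def local_mean(x, k=3):
    """Mean over a k x k window with edge padding (k must be odd)."""
    pad = k // 2
    xp = np.pad(x, pad, mode="edge")
    out = np.zeros(x.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += xp[dy:dy + x.shape[0], dx:dx + x.shape[1]]
    return out / (k * k)

def log_mean_ratio_di(x1, x2, k=3):
    """Absolute log of the ratio of local means (one common variant)."""
    return np.abs(np.log((local_mean(x2, k) + EPS) / (local_mean(x1, k) + EPS)))
```

Averaging over a window before taking the ratio trades spatial detail for robustness to isolated noisy pixels, which is the usual motivation for mean-ratio operators.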

In summary, like PCCM, DM is easy to implement, and its change detection result is easy to interpret. Only one classification stage is required, and the identified changes are thematically labeled. Nevertheless, DM does not provide complete from-to changes. To date, this method has been widely used for urban land use (Xu et al. Citation2013), water and hazard (Zhao et al. Citation2014), and vegetation change detection (Li et al. Citation2017) ().

3.1.3. Direct classification method

DCM directly classifies stacked multi-date images or stacked deep features for change detection. If images are stacked, we refer to the method as image stacking DCM. Image stacking DCM includes three steps ()-(1)). First, the multi-date images are stacked. Second, the stacked images are input into a DLNN to learn deep features. Third, the resulting deep features are input into a soft classifier for change detection.

If deep features are stacked, we refer to the method as feature stacking DCM. Feature stacking DCM also includes three steps ()-(2)). First, the two images are input into DLNNs to obtain a deep feature representation of each image. Second, the two sets of deep features are stacked. Third, the stacked features are input into a soft classifier to obtain the final change map.
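At the level of array shapes, the two DCM variants differ only in what gets concatenated. The sketch below assumes channel-last arrays and uses a logistic model with given (not learned) weights as a hypothetical stand-in for the trained soft classifier.

```python
import numpy as np

def image_stacking(img_t1, img_t2):
    """Image stacking DCM input: concatenate both dates band-wise -> (H, W, 2B)."""
    return np.concatenate([img_t1, img_t2], axis=-1)

def feature_stacking(feat_t1, feat_t2):
    """Feature stacking DCM input: concatenate per-date deep features -> (H, W, D1 + D2)."""
    return np.concatenate([feat_t1, feat_t2], axis=-1)

def soft_classify(stacked, weights, bias):
    """Hypothetical logistic soft classifier returning a per-pixel change probability."""
    logits = stacked @ weights + bias
    return 1.0 / (1.0 + np.exp(-logits))
```

With weights of -1 for the T1 bands and +1 for the T2 bands, for instance, the logistic model responds to the band-wise difference, which shows that a classifier trained on stacked inputs can learn a differencing operation itself. For from-to detection, however, each class pair needs its own label, which is why constructing training samples is difficult.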

In summary, like the other separate DLNNs (PCCM and DM), DCM is easy to implement. Like traditional DCM, this method requires only one classification stage and can identify changes thematically (Tewkesbury et al. Citation2015). However, its disadvantage is that it is difficult to construct training samples for from-to change detection. To date, this method has been widely used for urban land use (Zhang et al. Citation2016a), water (Gao et al. Citation2019a), and hazard and vegetation change detection (Gong et al. Citation2015) ().

3.2. Coupled DLNNs

In this sub-section, we introduce four types of methods that use DLNNs to extract deep features and also allow the DLNNs to interact, i.e. coupled DLNNs (). Whereas separate DLNNs are adapted from traditional change detection methods, the four types of coupled DLNNs are novel implementation strategies designed for specific change detection scenarios. DNNM highlights difference information and is therefore resistant to noise. MTM can handle multi-source and multi-spatial-resolution images. RM suits time-sensitive change detection applications (e.g. crop growth). AM can suppress noise and generate high-quality information.

3.2.1. Differencing neural network method

DNNM builds a cost function between two DLNNs to highlight the difference between deep features for change detection. DNNM includes three steps (). First, the two images are input into DLNNs to pre-train two DLNN models. Second, a cost function is used to jointly adjust the parameters of the two DLNNs so that the resulting deep features enlarge the difference between changed pixels at T1 and T2. Third, the two sets of deep features are input into a soft classifier to obtain the final change detection result.

The key step in DNNM is fine-tuning with the cost function, which aims to highlight changed information and suppress unchanged information. The cost function can be built in different ways. For example, Chen, Shi, and Gong (Citation2016) used the difference between bi-temporal deep features and an initial DI to design a cost function, and Cao et al. (Citation2017) extended a backpropagation algorithm to build a cost function between two DBNs.
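To make the idea of one shared cost concrete, the sketch below fine-tunes two linear "networks" (weight matrices `A` and `B`, hypothetical stand-ins for the two DLNNs) with a single cost that pushes each pixel's squared feature distance towards an initial DI scaled to [0, 1]. The cost is illustrative, in the spirit of using an initial DI, and is not the published formulation of Chen, Shi, and Gong; the learning rate and step count are arbitrary. The point is that one gradient of one cost updates both networks at once.

```python
import numpy as np

def dnnm_cost_and_grads(X1, X2, A, B, d0):
    """Illustrative DNNM-style cost and its gradients.

    X1, X2 : (N, bands) pixels at T1 and T2
    A, B   : (bands, D) weights of two linear stand-ins for the DLNNs
    d0     : (N,) initial difference image scaled to [0, 1]
    Cost   : mean((||X1 A - X2 B||^2 - d0)^2)
    """
    R = X1 @ A - X2 @ B                  # (N, D) feature differences
    s = np.sum(R ** 2, axis=1)           # (N,) squared feature distances
    err = s - d0
    cost = np.mean(err ** 2)
    g = 2.0 * err / len(d0)              # dCost/ds for each pixel
    grad_A = 2.0 * X1.T @ (g[:, None] * R)
    grad_B = -2.0 * X2.T @ (g[:, None] * R)
    return cost, grad_A, grad_B

def fine_tune(X1, X2, A, B, d0, lr=0.005, steps=200):
    """Jointly update both networks by gradient descent on the shared cost."""
    history = []
    for _ in range(steps):
        cost, gA, gB = dnnm_cost_and_grads(X1, X2, A, B, d0)
        history.append(cost)
        A = A - lr * gA
        B = B - lr * gB
    return A, B, history
```

In a real DNNM the two networks are deep and pre-trained, and the gradients come from backpropagation rather than the closed-form expressions used for this linear case.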

In summary, compared with traditional DI creation methods, these methods enhance the difference in changed areas and effectively suppress noise (Chu, Cao, and Hayat Citation2016). Compared with separate DLNNs, especially DM, the advantage of DNNM is that it uses an extra cost function to simultaneously adjust the parameters of two DLNNs, which tunes the network parameters more precisely to the change detection task. However, this additional cost function makes the structure of the method complex, and it is difficult to construct training sample sets for from-to change detection. To date, this method has been used for urban land use (Chu, Cao, and Hayat Citation2016), water and hazard (Chen, Shi, and Gong Citation2016), and vegetation change detection (Geng et al. Citation2017) ().

3.2.2. Mapping transformation method

MTM constructs a transformation function layer between inconsistent deep feature representations for change detection. For multi-spatial-resolution or multi-source remote sensing images, direct comparison of pixel pairs or feature pairs is meaningless (Zhan et al. Citation2018; Zhang et al. Citation2016b). MTM was therefore proposed to explore the inner relationships between multi-sensor data.

MTM includes four steps (). First, the two images are input into DLNNs to obtain a deep feature representation of each image. Second, a transformation function between the two sets of deep features is constructed. Third, the similarity of the transformed features of the two images is calculated. Fourth, the similarity features are clustered to obtain the final change map.
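Steps two to four can be sketched with simple stand-ins. This is a minimal illustration, not any cited MTM: a least-squares linear map replaces the learned transformation layer (assuming most pixels are unchanged, so the fit is dominated by them), cosine similarity measures agreement, and a small 1-D two-means clustering splits the similarities. The deep features `f1` and `f2` are assumed to come from DLNNs applied to the two sensors and may have different dimensions.

```python
import numpy as np

def fit_transform(f1, f2):
    """Least-squares linear map T with f1 @ T approximating f2.
    Assumes most pixels are unchanged, so they dominate the fit."""
    T, *_ = np.linalg.lstsq(f1, f2, rcond=None)
    return T

def cosine_similarity(a, b, eps=1e-12):
    """Row-wise cosine similarity between two (N, D) arrays."""
    num = np.sum(a * b, axis=1)
    return num / (np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1) + eps)

def two_means_1d(values, iters=100):
    """Minimal 1-D k-means with k = 2; True marks the higher-valued cluster."""
    values = np.asarray(values, dtype=float)
    lo, hi = values.min(), values.max()
    assign_hi = np.zeros(values.shape, dtype=bool)
    for _ in range(iters):
        assign_hi = np.abs(values - hi) < np.abs(values - lo)
        if assign_hi.all() or not assign_hi.any():
            break
        new_lo, new_hi = values[~assign_hi].mean(), values[assign_hi].mean()
        if new_lo == lo and new_hi == hi:
            break
        lo, hi = new_lo, new_hi
    return assign_hi

def mtm_change(f1, f2):
    """f1: (N, D1) and f2: (N, D2) deep features from two different sensors."""
    T = fit_transform(f1, f2)               # 2) transformation between feature spaces
    sim = cosine_similarity(f1 @ T, f2)     # 3) similarity of transformed features
    return ~two_means_1d(sim)               # 4) clustering: low-similarity cluster = change
```

The features `f1` and `f2` cannot be compared directly because they live in spaces of different dimension; only after the transformation does a pixel-wise similarity become meaningful, which is the role the text assigns to the MTM transformation layer.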

The key step in MTM is transformation, and the transformation function can be constructed on different principles. It can be built on the principle that unchanged pixels at the same position in the two input images have similar representations (Liu et al. Citation2016a; Zhang et al. Citation2016b). Alternatively, it can be built by shrinking the difference between the paired features of unchanged positions while enlarging the difference between the paired features of changed positions (Zhan et al. Citation2018). The aim of these transformation functions is to make incomparable information from different sensors comparable. For instance, Zhang et al. (Citation2016b) first proposed a Stacked Denoising Auto-Encoder (SDAE) based MTM to detect changes between images of different resolutions. Liu et al. (Citation2018) established a novel MTM that uses a convolutional coupling network to detect changes between optical and radar images. The success of these examples shows that MTM is capable of detecting changes from multi-sensor images.
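The second principle (shrink unchanged pairs, enlarge changed pairs) is what a contrastive loss expresses. The sketch below is a generic margin-based contrastive loss, offered as an illustration of that principle rather than the exact loss of Zhan et al.; the `margin` value is a hypothetical choice.

```python
import numpy as np

def contrastive_loss(feat_a, feat_b, changed, margin=1.0):
    """Generic contrastive loss on paired (N, D) features.

    changed: (N,) boolean labels. Unchanged pairs are penalised by their
    squared distance (pulling them together); changed pairs are penalised
    only while their distance is below `margin` (pushing them apart).
    """
    d = np.linalg.norm(feat_a - feat_b, axis=1)
    unchanged_term = (~changed) * d ** 2
    changed_term = changed * np.maximum(0.0, margin - d) ** 2
    return float(np.mean(unchanged_term + changed_term))
```

Minimizing this loss with respect to the parameters that produce `feat_a` and `feat_b` drives unchanged pairs together and changed pairs at least `margin` apart, so that a simple distance threshold can later separate them.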

MTM is a powerful tool for transforming information from heterogeneous images into consistent information. However, like DNNM, its additional transformation function makes its structure more complex, and it is also difficult to construct training samples and to provide complete from-to changes. To date, this method has been used for detecting changes in urban land use (Zhan et al. Citation2018), water and hazard (Zhang et al. Citation2016b), and vegetation applications (Su et al. Citation2017) ().

3.2.3. Recurrent method

RM uses recurrent connections between multi-date images to learn deep features and includes three steps (). First, DLNNs such as DBN, AE, and CNN extract spectral or spatial features. Second, RNNs extract temporal features from these features across dates. Third, the temporal features are input into a soft classifier to obtain the final change detection result.
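The temporal step can be sketched with a plain recurrent cell. The cited works use LSTM or convolutional LSTM units; a simple (Elman) RNN with random, untrained weights is used here only to show how per-date features are folded into one temporal feature. The weight names and sizes are hypothetical.

```python
import numpy as np

def rnn_temporal_features(seq, W_x, W_h, b):
    """Simple (Elman) RNN over a multi-date feature sequence.

    seq : (T, N, F) spectral/spatial features of N pixels at T dates
    W_x : (F, H) input weights; W_h : (H, H) recurrent weights; b : (H,) bias
    Returns the final hidden state (N, H), used here as the temporal feature.
    """
    n_pixels = seq.shape[1]
    h = np.zeros((n_pixels, W_h.shape[0]))
    for x_t in seq:  # iterate over dates in chronological order
        h = np.tanh(x_t @ W_x + h @ W_h + b)
    return h
```

Because the hidden state is carried from one date to the next, reordering the dates changes the result; this order sensitivity is what lets RM capture temporal (e.g. phenological) patterns that stacking or differencing ignore.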

This method adds temporal features to traditional spectral or spatial features. For instance, Lyu, Lu, and Mou (Citation2016) used a long short-term memory network to learn spectral and temporal features for change detection, and Mou, Bruzzone, and Zhu (Citation2018) proposed a new RM combining CNN and RNN to learn joint spectral-spatial-temporal features for change detection. These examples demonstrate that learning temporal features can be an effective way to detect change. In addition, RM can learn the phenological characteristics of vegetation. For example, Song et al. (Citation2018) proposed an RM that uses a 3D fully convolutional network and a convolutional long short-term memory network to learn phenological features for change detection, showing that extracting phenological features can effectively facilitate change detection.

Compared with other methods, RM uses RNNs to account for the temporal connections in multi-temporal change detection tasks. A disadvantage is that combining RNNs with other DLNNs makes its structure complex. It is also difficult to construct training sample sets and to provide complete from-to changes. To date, this method has been used for detecting changes in urban land use (Lyu, Lu, and Mou Citation2016) and vegetation applications (Song et al. Citation2018) ().

3.2.4. Adversarial method

AM pits a generator neural network against a discriminator neural network (i.e. a GAN) to achieve change detection. AM includes three steps (). First, the generator extracts deep features from each image, and these deep features are stacked (Gong et al. Citation2019b) or differenced (Gong et al. Citation2017a) to obtain a feature map. Second, the discriminator is trained to distinguish the feature map (e.g. a generated DI) from real data (e.g. a real DI); the final feature map is obtained when the discriminator can no longer distinguish it from the real data. Third, a classification method is applied to the feature map to obtain a binary change detection map.
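The adversarial game can be summarized by the standard GAN objective written with binary cross-entropy. The sketch below takes discriminator outputs as arrays instead of computing them with networks, and uses the common non-saturating generator loss; we do not claim the cited AM studies use this exact variant. At the point where the discriminator "cannot distinguish" generated from real maps, it outputs 0.5 everywhere and its loss equals 2 ln 2 (about 1.386).

```python
import numpy as np

def bce(p, target, eps=1e-12):
    """Mean binary cross-entropy of probabilities p against a 0/1 target."""
    p = np.clip(p, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(p) + (1.0 - target) * np.log(1.0 - p)))

def discriminator_loss(d_real, d_fake):
    """D should output 1 for real feature maps (e.g. real DIs) and 0 for generated ones."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)

def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D calls generated maps real."""
    return bce(d_fake, 1.0)
```

Training alternates between lowering `discriminator_loss` (updating D) and lowering `generator_loss` (updating G) until the discriminator outputs hover around 0.5.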

The generator is usually a common DLNN such as a CNN (Gong et al. Citation2017a), and the choice of DLNN for the generator depends on the specific application (Radford, Metz, and Chintala Citation2015). Gong et al. (Citation2017a) used a GAN-based AM to generate a better DI for change detection, with less noise than a real DI. Gong et al. (Citation2019b) used this method to generate training data and combined these generated data with labeled and unlabeled data to build a semi-supervised classifier for change detection. The success of these two examples shows that AM has a strong ability to generate high-quality information.

Compared with other methods, the advantage of AM is that it can reduce noise and generate high-quality information, which provides a new avenue for change detection tasks such as generating training samples and improved change information. However, combining generator and discriminator neural networks makes its structure complex, and it is also difficult to provide complete from-to changes. To date, this method has been used for detecting changes in urban land use and water applications (Gong et al. Citation2017a) ().

3.3. Performance comparison

The overall accuracies of the different DLCD methods, including DM, DCM, DNNM, MTM, RM, and AM, are compared as boxplots in Figure 7. The accuracy values for each DLCD method are taken from case studies in the respective DLCD references, and some of the referenced methods may use the same dataset. For example, the Ottawa dataset is used to evaluate both the differencing method (Li et al. Citation2017) and the direct classification method (Gao et al. Citation2017). We did not include PCCM in Figure 7 because the PCCM publications (Lyu, and Lu Citation2017; Cao, Dragićević, and Li Citation2019; Iino et al. Citation2018; Nemoto et al. Citation2017) did not report the overall change detection accuracy.

Figure 7. Distribution of overall accuracies for DLCD methods (differencing method (DM), direct classification method (DCM), differencing neural network method (DNNM), mapping transformation method (MTM), recurrent method (RM), and adversarial method (AM)).

From Figure 7, using the median accuracy as the indicator of DLCD performance, the DLCD methods rank in descending order as DNNM, DCM, RM, DM, MTM, and AM. DNNM's top ranking can be attributed to its greater resistance to noise compared with the other methods. AM, by contrast, can generate training samples automatically, so unsupervised and semi-supervised classifiers are often incorporated into it, which may explain its slightly lower median accuracy. In addition, the accuracy variability of the coupled DLNNs was much lower than that of the separate DLNNs, as shown by the Inter-Quartile Range (IQR) of each DLCD method: the IQRs of the coupled DLNNs are smaller than those of the separate DLNNs. This implies that interaction between DLNNs can make change detection more robust.
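The ranking and variability comparison above reduce to two statistics per method: the median and the IQR of the reported overall accuracies. A minimal sketch, assuming each method's accuracies are collected in a list; `np.percentile` with its default linear interpolation is only one of several quartile conventions, so IQRs can differ slightly from those drawn by a particular plotting tool. No values from the review are reproduced here.

```python
import numpy as np

def box_stats(accuracies):
    """Median and inter-quartile range (IQR) of a list of overall accuracies."""
    q1, median, q3 = np.percentile(accuracies, [25, 50, 75])
    return float(median), float(q3 - q1)

def rank_by_median(results):
    """Rank method names by the median of their reported accuracies, highest first."""
    return sorted(results, key=lambda name: box_stats(results[name])[0], reverse=True)
```

With toy input, `box_stats([1, 2, 3, 4, 5])` returns `(3.0, 2.0)`.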

4. Performance enhancements

DLCD has improved performance in all three types of change detection (binary, multi-class, and from-to), but how much each type benefits from DLCD in specific applications is not yet clear. In the following, we review the differences in overall accuracy improvement among binary, multi-class, and from-to change detection for different applications. Due to space restrictions, not all potential applications and references are included; we focus on the most popular applications: urban land use, water, hazard, and vegetation change detection.

4.1. Binary changes

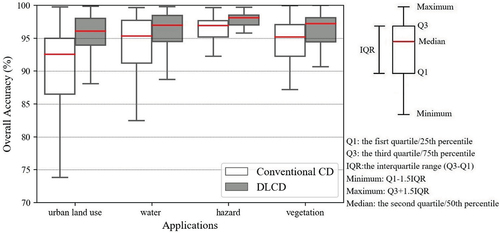

The overall accuracy of binary change detection in urban land use, water, hazard, and vegetation applications using conventional change detection and DLCD methods is shown in Figure 8. For each of the four applications, the overall accuracy values were extracted from case studies in representative references that apply both conventional change detection and DLCD methods.

Figure 8. Distribution of overall accuracies of binary changes for urban land use, water, hazard, and vegetation applications using the conventional change detection (CD) and DLCD methods.

From Figure 8, DLCD methods improved performance compared with conventional change detection methods in urban land use, water, hazard, and vegetation change detection. This is evidenced by the median overall accuracy, which was higher with DLCD methods than with conventional change detection methods for urban land use (92.55% to 96.07%), water (95.35% to 96.99%), hazard (96.91% to 98.09%), and vegetation (95.18% to 97.23%). The second finding is that the accuracy variability of the DLCD methods was lower than that of the conventional change detection methods, as shown by the smaller IQR of the DLCD methods within each application. This is likely because DLCD yields a more precise feature representation for binary change detection.

In addition, the increase in median overall accuracy for urban land use (3.52 percentage points) is larger than that for water (1.64 points), hazard (1.18 points), and vegetation (2.05 points). Two factors may explain why DLCD brings the largest gain for urban land-use change detection. First, conventional change detection methods already perform at higher accuracy for water (95.35%), hazard (96.91%), and vegetation (95.18%) than for urban land use (92.55%), leaving less room for DLCD to improve these relatively high accuracies. Second, urban land-use change is caused by human activities and is thus more complicated than water, hazard, and vegetation changes driven by natural processes, and DLCD performs most effectively on complex tasks (Ma et al. Citation2019a).

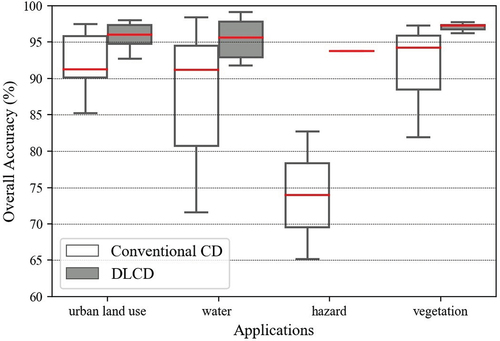

4.2. Multiclass changes

The overall accuracy of multi-class change detection for urban land use, water, hazard, and vegetation applications using conventional change detection and DLCD methods is shown in Figure 9. As for binary changes, the overall accuracy values are from case studies in representative references that apply both conventional change detection and DLCD methods. For the hazard application, there is only one case study.

Figure 9. Distribution of overall accuracies of multi-class changes for urban land use, water, hazard, and vegetation applications using the conventional change detection (CD) and DLCD methods.

From Figure 9, DLCD methods also increase the accuracy of all four applications compared with traditional change detection methods, as for binary change detection (Section 4.1). The median overall accuracy with DLCD methods was higher than with conventional change detection methods for urban land use (91.28% to 96.04%), water (91.17% to 95.61%), hazard (73.95% to 93.79%), and vegetation (94.25% to 97.30%). The variability in accuracy of the DLCD methods was also lower than that of the conventional change detection methods for these four applications, as shown by the smaller IQR of the DLCD methods within each application. This is likely because DLCD has more powerful feature representation capabilities.

In addition, the increase in median overall accuracy for hazard (nearly 20 percentage points) is much larger than that for urban land use (4.76 points), water (4.45 points), and vegetation (3.05 points). However, this conclusion is not very reliable because research on hazard change detection is sparse, with only one case study. More research is needed to reach a definitive conclusion.

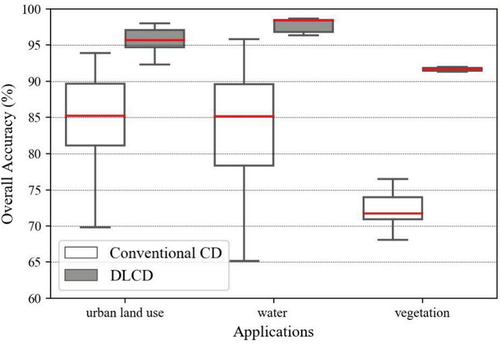

4.3. From-To changes

The overall accuracy of from-to change detection for urban land use, water, and vegetation applications using conventional change detection and DLCD methods is shown in Figure 10. The overall accuracy values are from case studies in the respective references that apply both conventional change detection and DLCD methods. For the water and vegetation applications there is only one case study each, and there are no case studies for hazard change detection.

Figure 10. Distribution of overall accuracies of from-to changes for urban land use, water, hazard, and vegetation applications using the conventional change detection (CD) and DLCD methods.

From Figure 10, DLCD increases the accuracy of these three applications compared with traditional change detection methods. The median overall accuracy using DLCD methods was far greater than that using conventional change detection methods (urban land use from 85.22% to 95.68%, water from 85.14% to 98.42%, and vegetation from 71.75% to 91.65%). The second finding is that the variability of accuracy of the DLCD methods was lower than that of the conventional change detection methods for these three applications, as shown by the smaller IQR of the DLCD methods within each application. This is likely due to the stronger feature representation capabilities of DLCD methods for from-to change detection.

In addition, the increase in median overall accuracy when using DLCD methods is larger for vegetation (19.90 percentage points) and water (13.28 points) change detection than for urban land-use change detection (10.46 points). A likely cause is that urban land-use from-to change detection with DLCD has more case studies than the vegetation and water applications, which may pull its median overall accuracy down. However, from-to change detection case studies are too few, and additional research is required to reach a more credible conclusion.

According to the existing literature, DLCD improves the median accuracy of from-to change detection by roughly 10 to 20 percentage points, followed by multi-class change detection at roughly 3 to 20 points. For binary changes, conventional change detection methods already reached median accuracies above 92.5%, higher than those for multi-class change detection (73.95% to 94.25%) and from-to change detection (71.75% to 85.22%) across the applications. Therefore, DLCD methods increase these already high binary accuracies only modestly. From-to changes involve many class combinations and are more complicated than binary and multi-class changes, and the existing literature shows that DLCD performs best on complex tasks (Ma et al. Citation2019a). However, there is limited published work on multi-class and from-to change detection using DLCD, and a larger sample of case studies is needed to confirm this conclusion.
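The gains quoted in Sections 4.1 to 4.3 are differences between medians, i.e. percentage points rather than relative percentages. The sketch below recomputes them from the medians reported in those sections; because the inputs are the rounded medians, a computed gain can differ from the text in the last digit.

```python
# Median overall accuracies (%) reported in Sections 4.1-4.3: (conventional CD, DLCD)
MEDIANS = {
    "binary": {"urban": (92.55, 96.07), "water": (95.35, 96.99),
               "hazard": (96.91, 98.09), "vegetation": (95.18, 97.23)},
    "multi-class": {"urban": (91.28, 96.04), "water": (91.17, 95.61),
                    "hazard": (73.95, 93.79), "vegetation": (94.25, 97.30)},
    "from-to": {"urban": (85.22, 95.68), "water": (85.14, 98.42),
                "vegetation": (71.75, 91.65)},
}

def gains(medians):
    """Percentage-point gain of DLCD over conventional CD, rounded to 2 decimals."""
    return {change_type: {app: round(dl - cd, 2) for app, (cd, dl) in apps.items()}
            for change_type, apps in medians.items()}

def gain_range(medians):
    """Smallest and largest gain for each type of change detection."""
    return {change_type: (min(g.values()), max(g.values()))
            for change_type, g in gains(medians).items()}
```

For example, `gain_range(MEDIANS)["from-to"]` evaluates to `(10.46, 19.9)`, matching the 10 to 20 point range stated above.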

5. Dilemmas of DLCD

Although it is evident that deep learning improves information representation, change detection methods, and performance in change detection, the limitations of DLCD are not yet clear. Previous studies indicate that two major dilemmas are often encountered when deep learning is applied to a specific application: large sets of labeled training samples are required, and deep learning places high demands on hardware and software. This is also true for change detection. The following subsections therefore examine these dilemmas in DLCD.

5.1. Training sample dilemma

The training sample dilemma complicates the incorporation of deep learning into change detection. Deep learning performs change detection well when relatively abundant labeled training samples are available (LeCun, Bengio, and Hinton Citation2015; Gong et al. Citation2019b; Wang et al. Citation2018a). However, the required sample size is well beyond what is usually available for change detection (Ball, Anderson, and Chan Citation2018; Reichstein et al. Citation2019; De et al. Citation2017). This poses a major challenge for DLCD that has received little systematic research attention, and the lack of such research may become a major hurdle for the further application of deep learning in change detection. In this section, we therefore summarize the various solutions that have been proposed for this dilemma.

Apart from manual labeling, we grouped the solutions into two categories: 1) generating large training samples, and 2) adapting to small training samples. The definition, advantages, limitations, and examples of each solution are shown in Table 3, where the earliest publication and the most cited publication for each solution are listed as examples.

Table 3. The definition, advantages, limitations, and examples of solutions to the training sample dilemma in DLCD.

From Table 3, the first category increases the number of training samples, while the second category develops DLCD methods that make more efficient use of limited training samples. Each solution is discussed in more detail below.

5.1.1. Generating large training samples

In this sub-section, we divide the methods for generating large training samples into three sub-categories: the Data Augmentation Method (DAM), the Supervised Change Detection Method (SCDM), and the Unsupervised Change Detection Method (USCDM). DAM uses affine transformations to increase the size and diversity of the training samples in a single step. Four basic transformation operations, namely rotation (Zhan et al. Citation2017; Nemoto et al. Citation2017; Wang et al. Citation2018a; Zhu et al. Citation2018), flip (Zhan et al. Citation2017), mirror (Zhu et al. Citation2018), and cropping (Nemoto et al. Citation2017; Zhan et al. Citation2017), are directly used to generate augmented training samples. The advantage of this method is that it is easy to implement and does not change the spectral or topological information of the training samples. Data augmentation diversifies the holistic spatial layout and orientation of the training samples (Yu et al. Citation2017), which can effectively mitigate over-fitting in deep learning (Cireşan et al. Citation2010; Luus et al. Citation2015; Simard, Steinkraus, and Platt Citation2003). However, DAM needs an initial training set, and the accuracy of the augmented training samples directly depends on that of the initial samples. Beyond these basic operations, Gong et al. (Citation2019b) recently used GANs to generate new training samples, given their ability to generate new information. SCDM was developed to generate additional training samples that are accurate.
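A key detail of DAM for change detection is that the same transform must be applied to both dates and to the change label, so that pixel correspondence is preserved. The sketch below assumes channel-last images and a 2-D label; it implements both "flip" and "mirror" with `np.flip`, and the eight combinations of four right-angle rotations with and without a mirror cover every flip and right-angle rotation of a square patch. The crop position is drawn with a NumPy random generator; the function names are hypothetical.

```python
import numpy as np

def augment_pair(img_t1, img_t2, label):
    """Return the 8 rotation/mirror variants of one bi-temporal training sample.

    img_t1, img_t2: (H, W, bands) images; label: (H, W) change label.
    The same transform is applied to both dates and the label, so pixel
    correspondence, pixel spectra, and change labels are preserved.
    """
    variants = []
    for k in range(4):  # rotations by 0, 90, 180, and 270 degrees
        rotated = [np.rot90(a, k, axes=(0, 1)) for a in (img_t1, img_t2, label)]
        variants.append(rotated)
        variants.append([np.flip(a, axis=1) for a in rotated])  # mirrored copy
    return variants

def random_crop(img_t1, img_t2, label, size, rng):
    """Crop the same size x size window from both dates and the label."""
    h, w = label.shape
    y = int(rng.integers(0, h - size + 1))
    x = int(rng.integers(0, w - size + 1))
    return tuple(a[y:y + size, x:x + size] for a in (img_t1, img_t2, label))
```

Because these operations only rearrange pixels, every augmented sample is exactly as accurate as the original one, which is why the quality of DAM output is bounded by the initial training set.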

SCDM refers to using a traditional supervised change detection method, such as supervised object-based change detection, to extend the training samples (Zhang et al. Citation2016b). It includes two steps: the traditional supervised method is used to obtain an initial change detection result, and training samples are then selected from this result. The advantage of this method is that it can generate more accurate training samples than traditional USCDMs. However, it combines two techniques (the traditional supervised change detection and the subsequent supervised DLCD), which makes the overall model more complex. USCDM was developed to eliminate the need for initial training samples.

USCDM refers to using traditional unsupervised change detection methods to generate change and no-change training samples. It includes two steps: the traditional unsupervised methods are used to obtain an initial change detection result, and training samples are then selected from this result. Unsupervised pixel-based methods used to generate training samples include thresholding methods (Liu et al. Citation2016b), level set methods (Liu et al. Citation2016b), CVA (Zhang, and Zhang Citation2016), and clustering methods (Geng et al. Citation2017). Unsupervised object-based methods, including an object-based ensemble learning method (Gong et al. Citation2017b) and an unsupervised object-based Markov random field (Li, Xu, and Liu Citation2018), have also been used. Compared with pixel-based methods, object-based methods can exploit neighborhood and edge information to generate more accurate training samples. Compared with DAM and SCDM, the advantage of USCDM is that it does not need an initial training set; its disadvantage is that the generated training samples are less accurate. In addition to DLCD methods that generate large training samples, DLCD methods that adapt to small training samples have also been developed.
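A pixel-based USCDM can be sketched with CVA: the change magnitude is computed, and only pixels with clearly low or clearly high magnitude are kept as pseudo-labels. The quantile thresholds `low_q` and `high_q` are hypothetical choices; pixels between them are marked as uncertain and excluded from training. Widening the uncertain band yields fewer but more reliable samples, which is the accuracy trade-off noted above.

```python
import numpy as np

def cva_magnitude(img_t1, img_t2):
    """Change vector analysis: Euclidean norm of the per-pixel spectral change."""
    return np.linalg.norm(img_t2.astype(float) - img_t1.astype(float), axis=-1)

def pseudo_labels(magnitude, low_q=0.3, high_q=0.9):
    """Label 1 = changed, 0 = unchanged, -1 = uncertain (excluded from training)."""
    lo, hi = np.quantile(magnitude, [low_q, high_q])
    labels = np.full(magnitude.shape, -1, dtype=int)
    labels[magnitude <= lo] = 0
    labels[magnitude >= hi] = 1
    return labels
```

The resulting labels could then be used to train a supervised DLCD network in place of manually labeled samples.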

5.1.2. Adapting to small training samples

We divided the methods that adapt to small training samples into three sub-categories: Semi-Supervised Methods (SSMs), the Transfer Learning Method (TLM), and Unsupervised DLCD Methods (UDLCDMs). SSM refers to methods that combine labeled and unlabeled training samples for change detection (Connors, and Vatsavai Citation2017; Gong et al. Citation2019b). It includes two steps. First, unlabeled samples are used to train an unsupervised deep learning network that extracts relevant features. Second, these features are input into a supervised classifier trained with the labeled samples for change detection. The advantage of SSM is that it needs only a few labeled samples, which reduces the cost of obtaining training samples manually. Its disadvantage is that the trained model cannot be transferred to other multi-date images; TLM overcomes this shortcoming.

TLM uses a model pre-trained on other data to detect changes in the current multi-date images. In the DLCD literature, there are two popular ways to apply transfer learning: direct application and fine-tuning. Direct application uses neural networks pre-trained on other kinds of data to extract deep features (Saha, Bovolo, and Bruzzone Citation2019; El Amin, Liu, and Wang Citation2017). Fine-tuning uses a small set of training samples from the current multi-date images to fine-tune a model pre-trained on other datasets for change detection (Waldeland, Reksten, and Salberg Citation2018). Fine-tuning generally yields more accurate change detection results than direct application. The advantage of TLM is that it needs only a few training samples and the model can be transferred to new multi-date images. However, its transferability depends on the spectral similarity between the training data and the target images (Yosinski et al. Citation2014). UDLCDM overcomes both the dependency on spectral similarity and the need for initial training samples.

UDLCDM refers to methods that combine unsupervised DLNNs with USCDMs for change detection. It includes three steps. First, unsupervised DLNNs such as DBN (Zhang et al. Citation2016a) and AE (Su et al. Citation2016; Liu et al. Citation2016a) are used as feature learning tools to extract high-dimensional features. Second, USCDMs such as CVA (Zhang et al. Citation2016a; Su et al. Citation2016) are applied to these features to characterize change information. Third, clustering methods are used to detect the changes. Compared with the other methods, the advantage of UDLCDM is that it is fully automatic and needs no training samples. However, its application scenarios are constrained by the limitations of both unsupervised DLNNs and unsupervised change detection.
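The three UDLCDM steps can be chained without any labels, as in the minimal sketch below. A fixed `tanh` projection (`PROJ`, hypothetical) stands in for the encoder of a trained DBN or AE, CVA is applied in feature space, and a small 1-D two-means clustering separates changed from unchanged pixels; none of these stand-ins is taken from the cited studies.

```python
import numpy as np

# Hypothetical stand-in for a trained unsupervised encoder (e.g. AE/DBN): 4 bands -> 8 features.
PROJ = np.linspace(-1.0, 1.0, 32).reshape(4, 8)

def encode(x):
    """Stand-in encoder: per-pixel linear projection followed by tanh."""
    return np.tanh(x @ PROJ)

def two_means_1d(values, iters=100):
    """Minimal 1-D k-means with k = 2; True marks the higher-valued cluster."""
    values = np.asarray(values, dtype=float)
    lo, hi = values.min(), values.max()
    assign_hi = np.zeros(values.shape, dtype=bool)
    for _ in range(iters):
        assign_hi = np.abs(values - hi) < np.abs(values - lo)
        if assign_hi.all() or not assign_hi.any():
            break
        new_lo, new_hi = values[~assign_hi].mean(), values[assign_hi].mean()
        if new_lo == lo and new_hi == hi:
            break
        lo, hi = new_lo, new_hi
    return assign_hi

def udlcdm(img_t1, img_t2):
    f1, f2 = encode(img_t1), encode(img_t2)       # 1) unsupervised feature learning
    mag = np.linalg.norm(f2 - f1, axis=-1)        # 2) CVA magnitude in feature space
    changed = two_means_1d(mag.ravel())           # 3) clustering: high-magnitude cluster = change
    return changed.reshape(mag.shape)
```

No step uses labels, which is the advantage named in the text; equally, if the encoder's features or the magnitude distribution do not separate change from noise, nothing in the pipeline can correct it, which reflects the limitation noted above.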

5.2. Hardware and software dilemmas

Compared with conventional change detection methods, DLCD methods have stricter hardware and software requirements. In terms of hardware, conventional change detection methods need only a CPU, whereas DLCD often requires a computer with a GPU. In terms of software, many conventional change detection methods are ready-to-use tools in packages such as ENVI, ERDAS, and ArcGIS. DLCD, however, requires not only deep learning frameworks such as Caffe/Caffe2, PyTorch, Theano, TensorFlow, Keras, and MATLAB, most of which are open source, but also custom programming. De Felice (2017) provides a detailed discussion of popular deep learning frameworks. Fortunately, applying DLCD is becoming easier; for example, ENVI has released the ENVI Deep Learning Module.

6. Future prospects of DLCD

In Sections 2, 3, 4, and 5, we compared how DLCD and conventional change detection methods represent spectral, spatial, temporal, and multi-sensor information. We introduced a taxonomy of DLCD methods and systematically compared their advantages, limitations, applicability, and performance. We reviewed the differences in overall accuracy improvement among binary, multi-class, and from-to change detection for different applications. We also reviewed two major limitations of DLCD: the training sample dilemma and the hardware and software dilemma. In this section, we summarize four future directions concerning 1) DLCD methods, 2) DLCD applications, 3) training samples, and 4) the implications of remote sensing, change detection, and deep learning for DLCD.

6.1. DLCD methods

The DLCD community has made significant progress in developing DLCD methods, but several areas remain open. Coupled DLNNs are a likely future direction for DLCD methods, because of their lower accuracy variability (Section 3.2) and their ability to solve specific problems (i.e. resistance to noise, change detection from multi-source and multi-spatial-resolution images, time-sensitive change detection, and the generation of high-quality information). Within coupled DLNNs, RM and AM are particularly promising for change detection. On the one hand, RM currently has the second-highest median accuracy among coupled DLNNs (Section 3.2); on the other hand, time-series change detection is a likely future direction of change detection, and RM can provide temporal features for it. Although AM has the lowest median accuracy among coupled DLNNs, it may still be a future direction. Whereas other basic deep learning networks contain only a discriminator network (i.e. a (0, 1) classifier), AM contains both a discriminator and a generator (i.e. a network that generates new information from a noise input). Current AM studies have demonstrated its advantages in generating training samples (Gong et al. 2019b) and high-quality change information (Gong et al. 2017a). However, such studies are still few, so AM remains a subject for further research.
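The generator/discriminator distinction behind AM can be made concrete with the standard adversarial objective. The sketch below assumes a toy linear generator and a logistic discriminator (hypothetical names and parameters, not taken from any cited AM study) and only evaluates the losses without training. It uses the common non-saturating form of the generator loss.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def generator(z, a, c):
    """Toy linear generator: maps a noise value z to a synthetic sample."""
    return a * z + c

def discriminator(x, w, b):
    """Toy logistic discriminator: probability that x is a real sample."""
    return sigmoid(w * x + b)

def adversarial_losses(real, noise, g_params, d_params):
    """Return (discriminator loss, non-saturating generator loss), batch-averaged."""
    fake = [generator(z, *g_params) for z in noise]
    d_real = [discriminator(x, *d_params) for x in real]
    d_fake = [discriminator(x, *d_params) for x in fake]
    # D maximizes log D(x) + log(1 - D(G(z))); we minimize the negative.
    d_loss = (-sum(math.log(p) for p in d_real) / len(d_real)
              - sum(math.log(1.0 - q) for q in d_fake) / len(d_fake))
    # G wants D to label its samples as real.
    g_loss = -sum(math.log(q) for q in d_fake) / len(d_fake)
    return d_loss, g_loss

real = [2.0, 2.2, 1.8]    # hypothetical real samples
noise = [-1.0, 0.0, 1.0]  # noise inputs to the generator
d_params = (5.0, -5.0)    # discriminator boundary at x = 1

# Generator output far from the real data: D wins, G's loss is high.
d_loss_a, g_loss_a = adversarial_losses(real, noise, (0.1, 0.0), d_params)
# Generator output overlapping the real data: D is fooled, G's loss is low.
d_loss_b, g_loss_b = adversarial_losses(real, noise, (0.1, 2.0), d_params)
print(round(d_loss_a, 3), round(g_loss_a, 3), round(d_loss_b, 3), round(g_loss_b, 3))
```

Training alternates between lowering `d_loss` (better discrimination) and lowering `g_loss` (more realistic samples); the generator half is what lets AM synthesize training samples.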

6.2. DLCD applications

The DLCD community has made significant progress in real applications, but several areas remain open. For urban land use applications, in terms of spatial extent, all studies focused on local areas within a city (e.g. 14,400 ha (Mou, Bruzzone, and Zhu 2018)). It would therefore be interesting to extend study areas to larger spatial scales (i.e. regional, national, and global). In terms of the detected changes, 49 of the 89 case studies detected binary changes (El Amin, Liu, and Wang 2016; Chu, Cao, and Hayat 2016). Multiclass change detection, in which the specific change types are unclear, comes next with 19 studies (Su et al. 2016; Zhang et al. 2016a). Only 12 of the 89 studies detected land use/cover from-to changes (Lyu, Lu, and Mou 2016; Lyu and Lu 2017). Although building change detection is very popular in traditional urban change detection, only nine of the 89 urban DLCD studies detected building changes (Argyridis and Argialas 2016; Nemoto et al. 2017). The scarcity of from-to and building change detection studies can be attributed to their greater complexity compared with binary and multiclass change detection: the former involves many change types, and the latter requires detecting buildings as specific targets. It would be interesting to see more studies on these two types of change detection.

For water applications, most DLCD studies focused on algorithm development and used public data sets, and the study areas are limited. Among the 40 water DLCD studies, 18 were along the Yellow River (Zhao et al. 2014; Gong et al. 2019a; Su and Cao 2018; Zhao et al. 2016), four in San Francisco (Gong, Yang, and Zhang 2017; Gao et al. 2017; Zhao et al. 2016), four on the Sulzberger Ice Shelf (Gao et al. 2019a, 2019b), six on the Weihe River, China (Zhang and Zhang 2016; Gong et al. 2017b; Lei et al. 2019b), five in Hongqi, China (Gong et al. 2017a, 2017b; Zhao et al. 2017), two in Sardinia, Italy (Zhang et al. 2016b; Gong et al. 2019a), and one at Lake Lotus (Zhang et al. 2016a). Future work needs more experiments to bring the accuracy of water DLCD to the level required by real-world applications.

For hazard applications, there are 42 studies of before- and after-hazard change detection. Of these, 31 addressed flooding, including 17 using the same public data set in Ottawa (Zhao et al. 2014; Gong et al. 2015), six using the same public data set in Bern (Zhao et al. 2014; Liu et al. 2017), six along the Yellow River (Ma et al. 2019b; Chen et al. 2019), and two in Thailand (Amit and Aoki 2017). Six addressed landslides, two in Japan (Amit and Aoki 2017) and four in China (Chen et al. 2018; Lei et al. 2019a). Two mapped the damage caused by the Tohoku tsunami (Sublime and Kalinicheva 2019), one detected changes before and after Typhoon Aere (Li, Yuan, and Wang 2019), one detected damage from a forest fire (Cao et al. 2017), and one conducted avalanche detection (Waldeland, Reksten, and Salberg 2018). However, no DLCD studies have yet addressed damaged buildings, civil war, volcanic eruptions, or droughts. It would be interesting to see DLCD applied to more hazard scenarios.

Compared with urban land use, water, and hazard applications, vegetation DLCD applications lag behind. To date, only 13 publications have addressed vegetation DLCD, covering 22 case studies in different areas. Of these 22 case studies, 20 focused on farmland (Wang et al. 2019; Li, Yuan, and Wang 2019; Yuan, Wang, and Li 2018) and the other two on forests (Khan et al. 2017). All farmland studies covered small areas (e.g. 5670 ha (Yuan, Wang, and Li 2018)). Forest studies are too few to reveal any pattern. It would be interesting to see vegetation DLCD in more application scenarios, such as mangrove change detection, and at larger scales (e.g. global) in the future.

6.3. Training samples

The DLCD community has made significant progress on the training sample dilemma, with six solutions currently available. However, these solutions have created new problems that require further research. Methods that adapt to small training samples are a promising area to explore, because they need few or no training samples, which reduces the manual workload. Among the methods that generate large training sets, those relying on USCDMs produce inaccurate training samples, which remains an open problem. Among the methods that adapt to small training samples, the application scenarios of UDLCDM are limited by the underlying unsupervised DLNNs and change detection methods; in the future, the flexible and deep network structures of deep learning could be used to extend its application range. TLM also has great potential to solve the training sample problem because it needs only a few training samples, but its transferability is affected by the distance between the spectral distributions of the training data and the target image, which also requires further research. To bring the spectral distribution of the training data closer to that of the target image, the change detection model needs to be trained through repetition and variation, as in a never-ending learning model (Mitchell et al. 2018).
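Why USCDM-generated training samples are inaccurate is easiest to see where a conventional change magnitude (e.g. from CVA) sits close to the decision threshold. The sketch below is an assumption-laden illustration, not a method from the cited studies: the threshold (mean magnitude) and the `margin` parameter are hypothetical choices, and pixels inside the margin are simply left out of the training set rather than given a possibly wrong label.

```python
def pseudo_labels(magnitudes, threshold, margin):
    """Label pixels from a conventional change magnitude (e.g. CVA output).
    1 = changed, 0 = unchanged, None = too close to the threshold to trust."""
    labels = []
    for m in magnitudes:
        if m >= threshold + margin:
            labels.append(1)
        elif m <= threshold - margin:
            labels.append(0)
        else:
            labels.append(None)  # ambiguous pixel: excluded from training
    return labels

# Hypothetical change magnitudes for six pixels.
mags = [0.02, 0.10, 0.48, 0.52, 0.90, 1.20]
threshold = sum(mags) / len(mags)  # hypothetical threshold: the mean magnitude
labels = pseudo_labels(mags, threshold, margin=0.2)

# Keep only confident (pixel index, label) pairs as DLCD training samples.
training_set = [(i, l) for i, l in enumerate(labels) if l is not None]
print(labels)
```

The two pixels at 0.48 and 0.52 are exactly the ones a hard threshold would label almost arbitrarily; dropping them trades training-set size for label accuracy.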

6.4. The implication of remote sensing/change detection/deep learning for DLCD

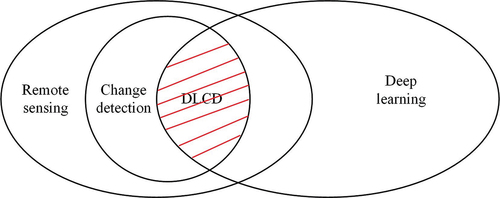

In this section, we discuss the implications of remote sensing, change detection, and deep learning for DLCD. The relationship between DLCD, remote sensing, change detection, and deep learning is shown in the figure above.

As the figure shows, DLCD is the combination of remote sensing, change detection, and deep learning. We therefore argue that developments in remote sensing, change detection, and deep learning could be promising directions for future DLCD methods.

The incorporation of spatiotemporal information will play a crucial role in future DLCD developments. Recent work by Yuan et al. (2020) indicated that spatiotemporal information is indispensable when deep learning is applied to remote sensing. In DLCD, a few attempts have been made to exploit spatial and temporal information. For example, Lyu, Lu, and Mou (2016) used an RNN to learn temporal features, and Liu et al. (2017) concatenated the output features of two parallel CNN branches through a fully connected layer and a softmax layer, which amplifies the spatial information. However, these studies considered spatial and temporal information separately. One recent exception is the work of Mou and Zhu (2018), who extracted joint spatial-temporal features for land use change detection in complex urban areas from Landsat images by combining a CNN and an RNN. This study is a good example of considering spatial and temporal information simultaneously, because the CNN and RNN were trained with the same loss function. However, it used shallow CNN and RNN models, which have difficulty handling high-resolution images and long time series. In the future, spatiotemporally constrained deep learning models need to be developed for high-resolution and long-time-series images.
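The idea of feeding per-date spatial features into a recurrent model can be sketched in a few lines. This is a simplified illustration of the general CNN-then-RNN pattern, not the architecture of Mou and Zhu (2018): a fixed 3x3 box filter stands in for the learned convolutional layers, a scalar Elman-style recurrence stands in for the RNN, and the weights `w_x`, `w_h`, and `b` are hypothetical and untrained (no joint loss is optimized here).

```python
import math

def conv3x3_mean(img, r, c):
    """Spatial feature: 3x3 box filter (a fixed convolution kernel) centered on (r, c).
    Stand-in for learned CNN layers; assumes (r, c) is not on the image border."""
    return sum(img[i][j] for i in range(r - 1, r + 2)
               for j in range(c - 1, c + 2)) / 9.0

def recurrent_summary(features, w_x=1.5, w_h=0.8, b=0.0):
    """Elman-style recurrence h_t = tanh(w_x * s_t + w_h * h_{t-1} + b) over dates.
    Hypothetical, untrained weights."""
    h = 0.0
    for s in features:
        h = math.tanh(w_x * s + w_h * h + b)
    return h

def spatiotemporal_feature(series, r, c):
    """Extract a spatial feature per date, then summarize the sequence over time."""
    return recurrent_summary([conv3x3_mean(img, r, c) for img in series])

zeros = [[0.0] * 3 for _ in range(3)]
ones = [[1.0] * 3 for _ in range(3)]

stable = [zeros, zeros, zeros]  # no change over three dates
late = [zeros, zeros, ones]     # change at the last date
early = [ones, zeros, zeros]    # change at the first date, then reverted

changing_late = spatiotemporal_feature(late, 1, 1)
changing_early = spatiotemporal_feature(early, 1, 1)
print(spatiotemporal_feature(stable, 1, 1), changing_late, changing_early)
```

The same spatial changes produce different summaries depending on when they occur, which is the temporal information a CNN alone would not capture.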

The incorporation of hybrid methods into DLNNs is anticipated. In the field of change detection, hybrid methods have successfully combined the advantages of separate change detection methods. In DLCD, however, hybrid change detection methods have only been used to address the training sample dilemma by generating large training sets. Other hybrid strategies, such as combining pixel-based and object-based methods, have been almost entirely neglected. More algorithms that combine DLNNs with hybrid change detection methods are therefore anticipated. In addition, combining different DLNNs may compensate for their individual deficiencies and yield more reliable results. For example, CNN and SDAE can be combined to exploit the spatial features of the CNN while the SDAE reduces the need for training samples (Zhang et al. 2016b).
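One simple way to combine pixel-based and object-based decisions, assumed here for illustration, is to take a DLNN's per-pixel change labels and let each image object (a segment from any segmentation algorithm) adopt its majority label, which suppresses isolated pixel errors. The pixel labels and segment IDs below are hypothetical.

```python
from collections import Counter

def object_majority_vote(pixel_labels, segment_ids):
    """Hybrid pixel/object decision: every pixel takes its segment's majority label.
    Ties resolve to the label counted first (Counter.most_common ordering)."""
    votes = {}
    for lab, seg in zip(pixel_labels, segment_ids):
        votes.setdefault(seg, Counter())[lab] += 1
    seg_label = {seg: cnt.most_common(1)[0][0] for seg, cnt in votes.items()}
    return [seg_label[seg] for seg in segment_ids]

# Hypothetical per-pixel DLNN output (1 = changed) for eight pixels in two segments.
pixel_labels = [1, 1, 0, 1, 0, 0, 1, 0]
segment_ids = ["A", "A", "A", "A", "B", "B", "B", "B"]

refined = object_majority_vote(pixel_labels, segment_ids)
print(refined)  # [1, 1, 1, 1, 0, 0, 0, 0]
```

The isolated "unchanged" pixel in segment A and the isolated "changed" pixel in segment B are both overruled by their objects.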

The rapid developments in deep learning open new avenues for DLCD as well. Deep learning algorithms such as AlexNet (Han et al. 2017b), ResNet (Zhu et al. 2021), GoogLeNet (Bazi et al. 2019), U-Net (Jiao et al. 2020), Graph Convolutional Networks (GCN) (Hong et al. 2020a; Gao et al. 2021), SpectralFormer (Hong et al. 2021), and the multimodal deep learning framework (Hong et al. 2020), which have been widely used in remote sensing, are potential models for DLCD. For example, Hong et al. (2020) developed a new supervised version of GCNs that jointly uses CNNs and GCNs to extract more diverse and discriminative feature representations for hyperspectral image classification; this approach could potentially be adapted to change detection. To date, only a few attempts have been made to exploit these algorithms for DLCD. For example, Waldeland, Reksten, and Salberg (2018) used a ResNet pre-trained on ImageNet to detect avalanches in SAR images and found that additional training was needed for avalanche detection. Wang et al. (2018a) used a 50-layer ResNet to obtain differencing feature maps of multi-date high-resolution remote sensing images for change detection. However, this model has only been applied to simple land cover changes and is likely to face challenges in complex urban land use change detection. Therefore, an immediate need for future studies is to incorporate AlexNet, ResNet, GoogLeNet, U-Net, GCN, SpectralFormer, and the multimodal deep learning framework into more DLCD applications.
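For reference, GCN-based models build on the widely used graph-convolution layer below, where $A$ is the adjacency matrix of an $N$-node graph, $I_N$ the identity matrix, $\tilde{D}$ the degree matrix of $\tilde{A}$, $H^{(l)}$ the node features at layer $l$ (with $H^{(0)}$ the input features), $W^{(l)}$ a trainable weight matrix, and $\sigma$ a nonlinearity such as ReLU. How graph nodes would be defined for change detection (e.g. pixels or superpixels drawn from the multi-date images) is an assumption here, not something the cited studies prescribe.

```latex
\tilde{A} = A + I_N, \qquad
\tilde{D}_{ii} = \sum_{j} \tilde{A}_{ij}, \qquad
H^{(l+1)} = \sigma\!\left( \tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(l)} W^{(l)} \right)
```

Each layer averages every node's features with those of its graph neighbors before the learned projection, so spatial or semantic relations encoded in $A$ shape the features, which is what CNNs on regular grids cannot express directly.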

7. Conclusion

Change detection enables more effective management and monitoring of natural resources and environmental change. However, because remote sensing images now constitute big data, finding real changes in multi-date images is very difficult. The emergence of deep learning provides an opportunity for change detection. In this paper, we reviewed the DLCD literature to reveal its theoretical underpinnings from five aspects: improved information representation, improved change detection methods, performance enhancements, dilemmas of DLCD, and prospects of DLCD. Compared with conventional change detection, DLCD brings advantages in information representation and change detection methods, which result in improved performance. Nevertheless, DLCD still faces challenges from the lack of training samples and its need for more advanced hardware and software. Finally, we envision future DLCD research that improves DLCD methods from the perspective of coupled DLNNs; widens DLCD applications to land use/land cover from-to, building, civil war, volcanic eruption, and drought change detection; deals with small training samples from the perspective of UDLCDM and TLM; and incorporates developments in remote sensing, change detection, and deep learning into DLCD. We hope this review makes it easier for researchers to find the DLCD methods most appropriate to their specific applications, to understand the benefits and shortcomings of DLCD, and to contribute to its future development.

Acknowledgments

The authors are grateful to anonymous reviewers whose constructive and valuable comments greatly helped us to improve the paper.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement (DAS)

The data that support the findings of this study are available from the corresponding author [L. Wang], upon reasonable request.

Additional information