ABSTRACT

The rapid processing, analysis, and mining of remote-sensing big data based on intelligent interpretation technology using remote-sensing cloud computing platforms (RS-CCPs) have recently become a new trend. The existing RS-CCPs mainly focus on developing and optimizing high-performance data storage and intelligent computing for common visual representation, which ignores remote sensing data characteristics such as large image size, large-scale change, multiple data channels, and geographic knowledge embedding, thus impairing computational efficiency and accuracy. We construct the LuoJiaAI platform, composed of a standard large-scale sample database (LuoJiaSET) and a dedicated deep learning framework (LuoJiaNET), to achieve state-of-the-art performance on five typical remote sensing interpretation tasks: scene classification, object detection, land-use classification, change detection, and multi-view 3D reconstruction. The details of the LuoJiaAI application experiments can be found in the white paper for LuoJiaAI industrial application. In addition, LuoJiaAI is an open-source RS-CCP that supports the latest Open Geospatial Consortium (OGC) standards for better developing and sharing Earth Artificial Intelligence (AI) algorithms and products on benchmark datasets. LuoJiaAI narrows the gap between the sample database and deep learning frameworks through a user-friendly data-framework collaboration mechanism, showing great potential in high-precision remote sensing mapping applications.

1. Introduction

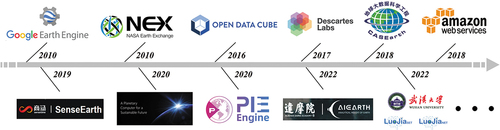

The rapid development of remote sensing technology has yielded a large volume of multi-source (multi-type, multi-temporal, and multi-scale) Earth observation data (Deren et al. 2017; Gong 2018). With the continuous enrichment of data resources, traditional desktop-based remote sensing platforms can no longer satisfy the higher requirements for dense storage, rapid processing, and analysis of remote sensing big data (Yang et al. 2017; Kumar and Mutanga 2018; Mutanga and Kumar 2019; Wang et al. 2020). In recent years, large-scale cloud computing systems have provided unprecedented opportunities for geospatial information mining. A wide variety of remote sensing cloud computing platforms (RS-CCPs) have been developed to facilitate massive data processing, including Google Earth Engine (Gorelick et al. 2017), NASA Earth Exchange (Nemani 2011), Descartes Labs (https://descarteslabs.com/), Geoscience Data Cube (Lewis et al. 2017), Planetary Computer (https://planetarycomputer.microsoft.com/), the CASEarth EarthDataMiner (Liu, Wang, and Zhong 2020), and PIE-Engine (https://www.piesat.cn/en/PIE-Engine.html). An increasing number of commercial companies, including Amazon (https://aws.amazon.com/earth/), Alibaba (https://engine-aiearth.aliyun.com/), and SenseTime (https://rs.sensetime.com/), are actively constructing RS-CCPs to facilitate large-scale geospatial data processing. These cloud-based platforms provide effective cloud computing services for large-scale geospatial analysis and have been widely employed in water management, crop production forecasting, climate monitoring, and environmental protection (Hansen et al. 2013; Pekel et al. 2016; Badgley, Field, and Berry 2017; Kontgis et al. 2017; Xiong et al. 2017; MacDonald and Mordecai 2019; Ceccherini et al. 2020; Gao et al. 2020; Nemani et al. 2015). The figure above shows the timeline of existing popular RS-CCPs.

With the development of technologies, including big data, cloud computing, and Artificial Intelligence (AI), Deep Learning (DL) has made remarkable progress in numerous fields, including image recognition, drug discovery, natural language processing, and self-driving cars, since the ImageNet Challenge in 2012 (LeCun, Bengio, and Hinton 2015; Russakovsky et al. 2015; Rao and Frtunikj 2018; Chen et al. 2018; Young et al. 2018). In the remote sensing field, researchers have integrated DL with remote sensing interpretation technology and applied it to large-scale Earth observation data, achieving remarkable results in various image interpretation tasks, such as scene classification, object detection, land-use classification, change detection, and multi-view 3D reconstruction (Zhu et al. 2017; Sun et al. 2022; Yuan et al. 2020; Li, Huang, and Gong 2019; Ma et al. 2019). Current DL frameworks, such as PyTorch (Paszke et al. 2019), TensorFlow (Abadi et al. 2016), PaddlePaddle (Ma et al. 2019), and MindSpore (Huawei Technologies Co. 2022), are employed in very-high-resolution (VHR) image interpretation applications for designing, training, and deploying AI models through a high-level programming interface. Some RS-CCPs, such as Google Earth Engine and PIE-Engine, have incorporated these DL frameworks to increase the intelligence level of processing and analyzing remote sensing big data.

The key components of RS-CCPs are available databases, Application Programming Interfaces (APIs) provided by computational frameworks, and computational infrastructures. Although many academic institutions and corporations have developed RS-CCPs and promoted numerous critical achievements in Earth AI Sciences, there is still no standard large-scale sample database for intelligent image interpretation tasks that allows users to efficiently retrieve, access, label, and share data. Besides, the computational frameworks used in existing RS-CCPs mainly focus on developing and optimizing high-performance data storage and computing from the perspective of common visual representation, which ignores remote sensing data characteristics such as large image size, large-scale change, multiple data channels, and geographic knowledge embedding, thus impairing computational efficiency and accuracy.

To solve the above limitations in existing RS-CCPs, we construct a LuoJiaAI platform that brings Huawei’s massive data storage and computational capabilities for intelligent interpretation and analysis of remote sensing data to achieve state-of-the-art performance on various image interpretation tasks. In summary, we make the following contributions:

As the first contribution of LuoJiaAI, LuoJiaSET builds a large-scale sample database with a unified and scalable classification scheme, an Internet-based crowdsourcing sample collection tool with human–machine interaction, and a sample platform (Homepage, http://58.48.42.237/luojiaSet) for better data preparation and sharing in remote sensing intelligent interpretation.

The second contribution of LuoJiaAI is designing and implementing a dedicated DL framework called LuoJiaNET with remote sensing data characteristics embedded into the underlying system to improve computational efficiency and accuracy (Source code, https://github.com/WHULuoJiaTeam/luojianet). LuoJiaAI also develops a front-end application platform (Homepage, http://58.48.42.237/luojiaNet) with a visual modeling tool that provides online model creation, training, and deployment to improve users’ productivity. In addition, there is a growing trend for Earth AI Sciences to create libraries of standardized benchmark datasets. These benchmark datasets help users evaluate developed models efficiently on a common and standardized dataset. LuoJiaAI supports the latest Open Geospatial Consortium (OGC) standards in geographic information and service interfaces (https://www.ogc.org/projects/groups/trainingdmlswg) for better data sharing and model development (Yue et al. 2022).

LuoJiaAI accelerates the integration of AI algorithms and RS-CCP, and promotes the sharing and development of standardized data, algorithms, and products, showing great potential in rapid intelligent processing and analysis of remote sensing big data.

The rest of this paper is organized as follows. Section 2 reviews related works on RS-CCPs, sample databases, and deep learning models and frameworks. Section 3 illustrates the platform overview briefly. Section 4 first demonstrates how to organize and build a standard large-scale sample database, Internet-based sample collection, and sample sharing platform. Section 5 introduces the construction method of a dedicated framework with remote sensing data characteristics and a visual modeling tool based on graphical user interface. Section 6 briefly reviews some application experiments in typical image intelligent interpretation tasks. Section 7 provides the conclusions and further research directions for improvement.

2. Background and related work

2.1. Remote sensing cloud computing platforms

RS-CCPs break through the bottleneck of traditional remote sensing technology and promote the technological innovation of data processing and analysis by combining huge data resources with high-performance cloud servers (Amani et al. 2020). Users can focus solely on developing the fundamental theories and application models of remote sensing without the difficulty of obtaining data and computing resources, thus significantly improving the efficiency of massive data processing and information mining. The main components of existing RS-CCPs include:

Available database. Most RS-CCPs provide a publicly available database that includes remote-sensing imagery from low to medium spatial resolution (e.g. Landsat, Sentinel, and MODIS), elevation datasets, land use and land cover datasets, and simulation datasets (Toth and Jóźków 2016). The CASEarth EarthDataMiner also provides public multidisciplinary datasets, such as ecological, atmospheric, oceanic, primary geographic, and ground-measure datasets. In addition to publicly available datasets, some RS-CCPs like Descartes Labs also provide business datasets, such as the SPOT satellite dataset and the ship trajectory AIS (Automatic Identification System) dataset.

Application Programming Interfaces (APIs). Users can rapidly access, compute, and analyze data from the available database of RS-CCP by utilizing APIs provided by the computational frameworks (Bisong 2019).

Computational infrastructures. The large-scale Earth datasets and high-complexity DL models require massive computational resources (Tamiminia et al. 2020). Most RS-CCPs are built on large-scale cloud-based computing platforms (e.g. Google Cloud Platform, Amazon Cloud Platform, Huawei Cloud Platform, and Alibaba Cloud Platform) for quick processing of enormous geospatial datasets without suffering the IT pains of parallel computing and networking.

2.2. Sample databases for remote sensing

DL models are data-driven methods that learn complex patterns from Earth observation data (Cheng et al. 2018). The available datasets that include observations and associated labels are thus essential in remote-sensing intelligent interpretation. A number of sample datasets for different image interpretation tasks are publicly available, with new sample datasets being constantly released, including scene classification sample datasets, e.g. WHU-RS19 (Dai and Yang 2010), NWPU-RESISC45 (Cheng, Han, and Lu 2017), and AID (Xia et al. 2017), object detection sample datasets, e.g. VEDAI (Razakarivony and Jurie 2016) and DOTA (Xia et al. 2018), land-use classification sample datasets, e.g. GID (Tong et al. 2020) and INRIA (Maggiori et al. 2017), change detection sample datasets, e.g. HRSCD (Daudt et al. 2019) and WHU-BCD (Ji, Wei, and Lu 2018), and multi-view 3D reconstruction sample datasets, e.g. WHU-MVS (Liu and Ji 2020) and US3D (Marc et al. 2019). Although many RS-CCPs have included the publicly released datasets in their sample databases, several problems remain in remote-sensing intelligent interpretation:

The classification schemes of currently available sample databases are not standardized or unified (Tenopir et al. 2020). For example, there are numerous classification schemes for land-use classification tasks. It is often difficult to harmonize these classification schemes due to the heterogeneity of data attributes such as names, resolution, projection, observation time, data format, and semantics of classes.

A large-scale sample database with different data distributions is the key factor for improving the performance and generalization of DL models in remote sensing intelligent interpretation (Li and Hsu 2020). However, the limited number and variety of data in existing RS-CCPs make it hard for DL models to generalize beyond the training dataset.

The majority of the effort in an image interpretation task is typically spent on data preparation (Reichstein et al. 2019). Acquiring a large-scale sample database is very costly, as labeling is usually done manually by in-house labor. A common solution is to crowdsource hand labeling tasks using services like Amazon Mechanical Turk. However, this leaves nonprofessional users with the time-consuming and difficult task of locating, labeling, and integrating disparate datasets. In the remote sensing field, the lack of an open data labeling and organization platform for large-scale and diverse sample databases is an obstacle to realizing efficient image interpretation in geoscience applications.

2.3. Deep learning models and frameworks for remote sensing

Convolutional Neural Networks (CNNs) have proven effective and achieved state-of-the-art performance in many vision tasks (e.g. image classification, object detection, and semantic segmentation) (Alzubaidi et al. 2021). Examples include AlexNet (Krizhevsky, Sutskever, and Hinton 2017), VGG-Net (Simonyan and Zisserman 2014), GoogleNet (Szegedy et al. 2015), ResNet (He et al. 2016), DeepLab (Chen et al. 2017), and HRNet (Sun et al. 2019). These common vision models have numerous variants and form a wide variety of advanced CNN-based networks in different remote sensing image interpretation tasks, such as NEX-Net (Anwer et al. 2018), S2A-Net (Han et al. 2021), FreeNet (Zheng et al. 2020), DSIFN (Zhang et al. 2020), and MVS-Net (Yao et al. 2018). On the other hand, neural architecture search has become a new trend in model architecture design, including reinforcement learning strategies, evolutionary algorithms, and gradient-based methods (Ren et al. 2021). DL frameworks accelerate the development of artificial intelligence techniques in vision applications by providing convenient high-level interfaces for users to design DL models on different computing devices (Chen et al. 2018). Some RS-CCPs have directly integrated these DL frameworks (like TensorFlow and PyTorch) into traditional modeling workflows to increase the intelligence level. However, the current DL models and frameworks used in geoscience AI research mainly focus on processing natural images (e.g. indoor-outdoor small-size images) and ignore the characteristics of remote sensing data such as large image size, large-scale change, multiple data channels, and geographic knowledge embedding, thus impairing computational efficiency and accuracy.

3. Platform overview

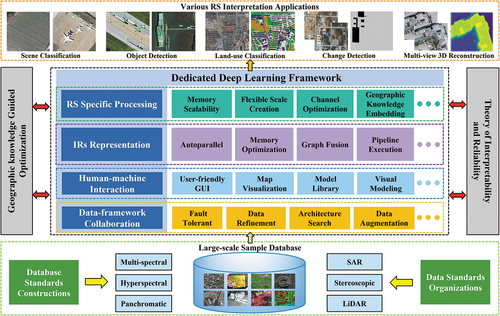

The figure above shows a platform overview of LuoJiaAI. LuoJiaAI consists of a standard large-scale sample database (LuoJiaSET) and a dedicated DL framework (LuoJiaNET) for five typical remote sensing interpretation applications with a high-performance cloud storage and computation service. The platform is accessed and controlled through an Internet-accessible API and an associated user-friendly interactive development environment that enables rapid data processing and visualization of results.

LuoJiaSET houses an extensive publicly available sample database for image interpretation, including remote-sensing sample datasets from various satellite and aerial imaging systems in optical and non-optical data types. All of these sample datasets are organized in LuoJiaSET as a standard large-scale database that allows efficient retrieval, access, labeling, and sharing, removing the barriers associated with data management and computing. Users can access and analyze data using a library of operators provided by the data service interface and the OGC-compliant web console in the LuoJiaSET application layer.

The LuoJiaNET develops a dedicated framework and optimization method for exploiting remote sensing data characteristics (e.g. large image size, large-scale change, multiple data channels, and geographic knowledge embedding) to increase computational efficiency and accuracy in five typical image interpretation tasks. LuoJiaNET also develops a front-end application platform with a visual modeling tool to provide online model services (e.g. model creation, model training, and model deployment) to improve user productivity. Users can sign up for the LuoJiaNET homepage to access the user’s guide, tutorials, model examples, function references, and educational curricula.

4. LuoJiaSET

In remote sensing image intelligent interpretation, superior performance comes from large amounts of standard and high-quality labeled remote sensing data (Li et al. 2020; Van Etten, Lindenbaum, and Bacastow 2018; Sumbul et al. 2019). LuoJiaSET constructs a standard large-scale sample database with a unified and scalable classification scheme for multi-type sensors, multi-temporal and multi-scale remote sensing data (Section 4.1), and develops an Internet-based crowdsourcing sample collection tool and a sample sharing platform that provide strong data support for high-precision remote sensing mapping applications (Section 4.2).

4.1. Standard large-scale sample database

This section highlights the building steps of a standard large-scale sample database that supports multi-dimensional global retrieval and data analysis.

4.1.1. Sample model design

LuoJiaSET defines the corresponding sample types for typical remote sensing interpretation tasks, such as scene classification, object detection, land-use classification, change detection, and multi-view 3D reconstruction. An accompanying figure demonstrates the logical model of all sample types. In this model, the ground object samples are organized by planar images (e.g. single-phase and change detection samples) and multi-view stereo images. Different samples can contain the same ground object, and samples from different application tasks can be related through their geographical coordinates. Each application task can include remote sensing data from different sensor types.

4.1.2. Unified and scalable classification scheme

LuoJiaSET builds a unified classification scheme for the sample database that can be flexibly extended with new categories. The sample classification framework is shown in an accompanying figure. The main idea of this construction strategy is as follows. All samples in the database are divided into two concept hierarchies based on the national geographic information classification scheme (GB/T 30322). The ground cover category is defined by the dichotomy in the first classification level. For the second classification level, geographic attributes are added to existing categories to define new classes. The concepts of artificial ground objects in the two-layer classification scheme are extended by the definition of moving and fixed objects in the object detection task, forming a new scalable classification scheme for all sample datasets.

4.1.3. Sample data integration

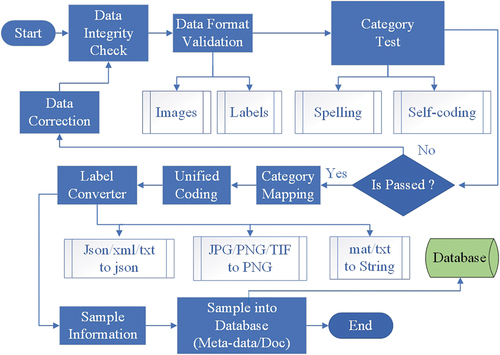

The figure above demonstrates the technical process of multi-source sample data integration. The original classification scheme of each existing open-source dataset is mapped to the unified and scalable classification scheme adopted in LuoJiaSET. All sample data are transformed under the unified encoding rules and relational database structure designed for the LuoJiaSET sample database, and global queries are created for multi-dimensional retrieval and data analysis. The information of all sample datasets, including metadata, attribute table, classification scheme, and copyright, is stored in the corresponding metadata table to facilitate traceability, quality control, and ownership identification.
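The label-mapping step of this integration can be illustrated with a minimal sketch. The dataset names, class names, and class codes below are hypothetical placeholders chosen for illustration; they are not the actual LuoJiaSET encoding rules.

```python
from dataclasses import dataclass

# Hypothetical mapping from (source dataset, original class name) to a
# unified class code. These codes are illustrative, not LuoJiaSET's own.
UNIFIED_CODES = {
    ("DatasetA", "building"): "0101",
    ("DatasetA", "farmland"): "0201",
    ("DatasetB", "built-up"): "0101",
    ("DatasetB", "cropland"): "0201",
}


@dataclass(frozen=True)
class UnifiedSample:
    source: str          # dataset of origin, kept for traceability
    original_label: str  # label in the source classification scheme
    unified_code: str    # code in the unified scheme


def integrate(source, labels):
    """Map a dataset's labels to unified codes and collect unmapped labels."""
    mapped, unmapped = [], []
    for label in labels:
        code = UNIFIED_CODES.get((source, label.lower()))
        if code is None:
            unmapped.append(label)  # needs a new category or manual review
        else:
            mapped.append(UnifiedSample(source, label, code))
    return mapped, unmapped
```

Keeping the source dataset and the original label next to the unified code is one simple way to preserve the traceability that the metadata tables are meant to provide.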

4.2. Internet-based sample collection and sharing service

This section highlights the essential components of the sample sharing platform, including the crowdsourcing sample collection platform with human-machine interaction, and the primary infrastructure layers and high-level Application Programming Interfaces (APIs).

4.2.1. Crowdsourcing sample collection with human-machine interaction

LuoJiaSET develops an Internet-based sample collection platform that supports crowdsourcing collection, online verification, and dynamic sample expansion. In the remote sensing field, sample labeling is costly and laborious, and it demands specialized geographic knowledge from annotators. Therefore, we propose a combined automatic/semi-automatic labeling tool to improve the efficiency and accuracy of sample labeling. The main purpose of this tool is to allow participants to interact with automatic interpretation results obtained from trained network models and thereby reduce recognition errors. Samples that meet the quality requirement after being verified online by professional staff are accepted into the sample database. An accompanying figure shows the complete technical flow of this strategy.

4.2.2. Sample sharing platform

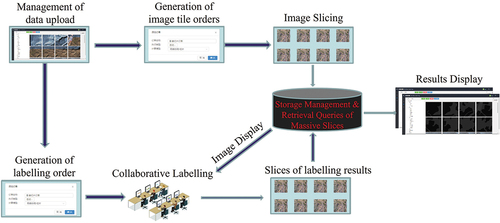

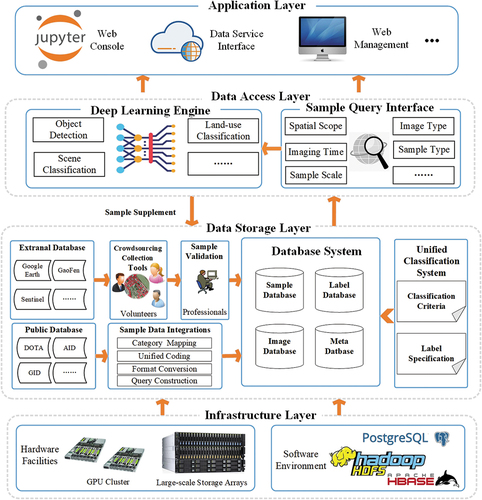

LuoJiaSET develops an open-source sample sharing platform for remote sensing interpretation, which offers global queries for multi-dimensional retrieval, statistical analysis of data, and data sharing services. An accompanying figure shows the overall framework of this platform. The infrastructure layer is built on distributed storage systems, DL Graphics Processing Unit (GPU) clusters, file systems, database systems, and a modern network environment from the Object Storage Service of Huawei’s AI Computing Center in Wuhan. The data storage layer supplies storage, expansion, maintenance, and copyright protection for multi-source remote sensing data. The service layer provides the APIs for data access and operation and supports multi-dimensional semantic queries and data delivery services. The high-level application layer provides users with more advanced online functions for data access and analysis, such as spatiotemporal data mining, statistical analysis of sample attributes, and knowledge discovery across the whole database.

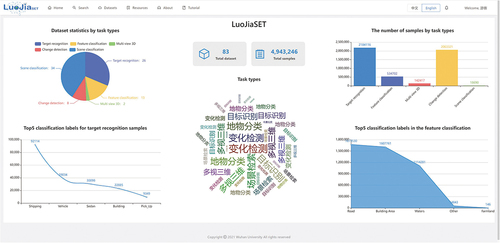

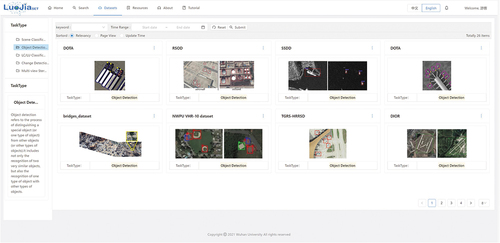

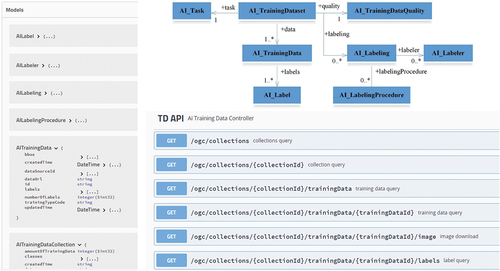

On the basis of the proposed classification scheme and workflow, the LuoJiaSET sample sharing platform has processed 83 open-source datasets and built a database containing about 5 million samples that cover five typical remote sensing image interpretation tasks: scene classification, object detection, land-use classification, change detection, and multi-view 3D reconstruction. Accompanying figures show the statistical analysis of the datasets and the front-end applications for sample query and display. The LuoJiaSET platform provides high-level service APIs that follow the OGC Training Data Markup Language for AI standard for better data sharing and model development.

5. LuoJiaNET

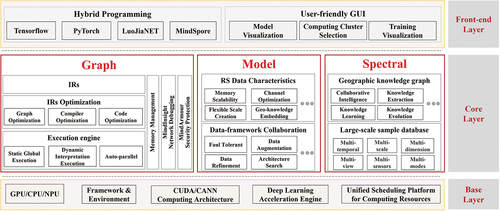

The DL framework is the crucial infrastructure that connects the hardware, software, and AI applications (Liermann 2021; Chen 2021). LuoJiaNET designs the dedicated DL framework with a Graph-Model-Spectral collaboration core layer for embedding remote-sensing data characteristics (e.g. large image size, large-scale change, multiple data channels, and geographic knowledge embedding) into the underlying system. LuoJiaNET’s simplified system architecture is shown in the figure above. The layer above the core of LuoJiaNET comprises front-end applications and user interfaces, and the hardware device and data access layers lie below the core. The core layer of LuoJiaNET includes Intermediate Representations (IRs), computational graph optimization, execution engine, memory management, visual optimization, security protection, remote sensing data characteristics, and data-framework collaboration. Section 5.1 illustrates the graph-based core framework modules of LuoJiaNET. Section 5.2 demonstrates the Model-Spectral driven data processing module that satisfies the demands of remote sensing characteristics embedding. Section 5.3 introduces the overall architecture design of LuoJiaNET’s front-end layer.

5.1. Graph based core framework modules

The core modules of LuoJiaNET are employed to build the Directed Acyclic Graphs (DAGs) for data processing. Through the DAG representation, the neural networks can handle input remote sensing data from multiple layers, integrate remote sensing features into data flow and control flow, and implement data-framework collaboration. Specifically, the core modules of the dedicated DL framework include the construction of network operators and device management, IRs and their compilation optimization, and automatic differentiation, as well as modules for remote-sensing data characteristics, such as memory scalability for large-scale images and adaptive optimization methods for multi-scale and multi-channel image features.

In the LuoJiaNET framework, the hardware module provides a unified interface and shields the underlying hardware differences (e.g. CPU, GPU, and NPU). The data organization and management module is applied to achieve high-efficiency storage and read/write for the sample database. The data organization and management module takes charge of data-framework collaboration tasks, including automatic data augmentation and refinement in LuoJiaSET’s database. LuoJiaNET has compiled and tested the Geospatial Data Abstraction Library (GDAL) for reading and writing various raster and vector data formats under different operating systems. Without introducing any closed-source projects, the GDAL enables LuoJiaNET to have a controllable and efficient Input-Output (I/O) module to process multi-source remote sensing data.

5.2. Model-spectral driven data processing module

This section highlights the strategies for embedding remote-sensing data characteristics in the LuoJiaNET framework, including a memory scalability module and a parallel computing strategy for large-scale image processing, an adaptive optimization method for adaptively learning multi-scale and multi-channel image spectral features, and an auto-parallel strategy for embedding geographic knowledge. The experiments demonstrate the effectiveness of the proposed data processing modules.

5.2.1. Large-scale image processing

5.2.1.1. Indirect buffer group mapping

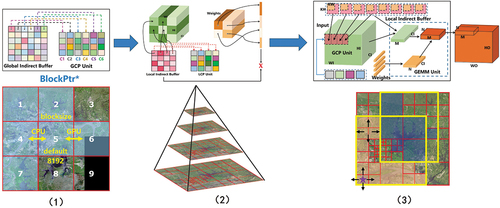

LuoJiaNET proposes a memory scalability module that constructs correlation buffer areas of local features and global spatial context to support large-scale image loading, mapping, and learning. The figure above demonstrates the brief procedure of this Indirect Buffer Group Mapping (IBGM) method. The large-scale image is divided into different global correlation buffers based on labeled data. The pointers reserved in the global correlation buffer areas are called the global indirect buffer (GCP) unit. In the GCP unit, a quadtree spatial index is constructed to obtain context features in each local context processing (LCP) unit. The GCP unit and LCP unit features are concatenated in the memory scalability module and weighted by the General Matrix Multiplication unit in the network model to achieve global-local context representation and learning.
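The quadtree spatial indexing mentioned above can be sketched in a few lines. This is only a generic quadtree over image extents, written under the assumption that leaf tiles play the role of local units; the function names and the dictionary layout are hypothetical and do not reflect LuoJiaNET's internal buffers.

```python
def build_quadtree(x, y, w, h, max_size):
    """Recursively split the rectangle (x, y, w, h) into quadrants until
    every leaf is at most max_size pixels wide and high (max_size >= 1)."""
    node = {"box": (x, y, w, h), "children": []}
    if w > max_size or h > max_size:
        hw, hh = (w + 1) // 2, (h + 1) // 2  # ceiling halves
        quadrants = (
            (x, y, hw, hh),
            (x + hw, y, w - hw, hh),
            (x, y + hh, hw, h - hh),
            (x + hw, y + hh, w - hw, h - hh),
        )
        for cx, cy, cw, ch in quadrants:
            if cw > 0 and ch > 0:  # skip empty quadrants of thin rectangles
                node["children"].append(build_quadtree(cx, cy, cw, ch, max_size))
    return node


def intersects(a, b):
    """True if two (x, y, w, h) rectangles overlap."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


def query(node, window):
    """Return the leaf boxes that overlap the query window."""
    if not intersects(node["box"], window):
        return []
    if not node["children"]:
        return [node["box"]]
    hits = []
    for child in node["children"]:
        hits.extend(query(child, window))
    return hits
```

For example, indexing a 1024 x 1024 image with `max_size=256` gives 16 leaf tiles, and a query window that straddles a tile corner returns only the four tiles it touches, so neighbouring context can be looked up without scanning the whole image.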

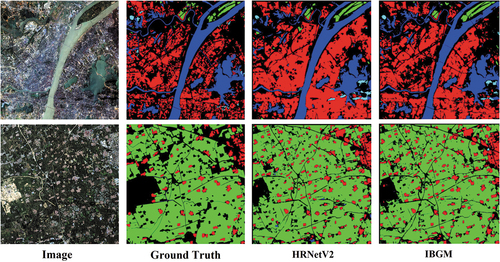

Table 1 reports the mean Intersection over Union (MIoU) results comparing patch-based processing with the IBGM method on the Gaofen Image Dataset (GID) using the HRNetV2 model (Sun et al. 2019). As shown in Table 1, the network with the IBGM method outperforms the traditional patch-based processing method by 1.25% in overall MIoU. An accompanying figure visualizes the prediction results on the validation set, which indicates that global-local context information is essential. The proposed IBGM method thus supports large-scale image loading and training, and outperforms the traditional patch-based image processing method.
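For reference, MIoU can be computed from a confusion matrix as the average over classes of TP / (TP + FP + FN). The sketch below uses this standard definition; the function name and the list-of-lists input format are assumptions made for illustration, not the evaluation code used in the experiments.

```python
def mean_iou(confusion):
    """Mean Intersection over Union.

    confusion[i][j] is the number of pixels whose true class is i and
    whose predicted class is j.
    """
    n = len(confusion)
    ious = []
    for c in range(n):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp
        fp = sum(confusion[r][c] for r in range(n)) - tp
        denom = tp + fp + fn
        if denom:  # ignore classes absent from both labels and predictions
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0
```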

Table 1. Land-use classification results on GID with HRNetV2 model.

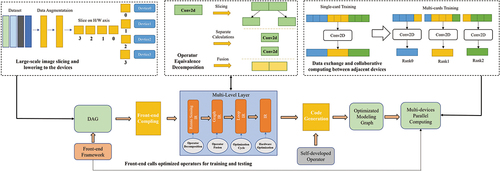

5.2.1.2. Distributed operator decomposition

LuoJiaNET proposes a distributed parallel computing strategy based on the idea of operator equivalent decomposition to handle large-scale image calculation in neural networks. In this strategy, the large-scale input images are cut and distributed to multiple training cards. Networks such as the fully convolutional network (FCN) model (Long, Shelhamer, and Darrell 2015) are reformed through IRs and compiler optimization so that they can process large-scale images directly in the LuoJiaNET framework. The core of this method is to decompose the original large-scale input feature into several small pieces of features for network computing. These small features are combined and recovered to the original size by image stitching, making the computational results equivalent to those of the original large-scale input. Hence, one training card only needs to take charge of a small piece of the large-scale input image, thereby vastly reducing the requirements for device memory.
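The equivalence between computing on the whole image and computing on stitched pieces can be illustrated for a single 3x3 convolution. The sketch below is a single-process illustration under simplifying assumptions (one operator, row-wise strips, no padding); the function names are hypothetical, and the actual distribution across training cards is not shown.

```python
def conv3x3_valid(img, kernel):
    """'Valid' 3x3 correlation on a list-of-lists image."""
    h, w = len(img), len(img[0])
    return [
        [
            sum(img[r + i][c + j] * kernel[i][j] for i in range(3) for j in range(3))
            for c in range(w - 2)
        ]
        for r in range(h - 2)
    ]


def conv3x3_by_strips(img, kernel, n_strips):
    """Split the image into row strips that overlap by two rows (the halo
    a 3x3 kernel needs), convolve each strip on its own, and stitch."""
    out_rows = len(img) - 2              # rows of the full 'valid' output
    step = -(-out_rows // n_strips)      # ceiling division
    stitched = []
    for start in range(0, out_rows, step):
        stop = min(start + step, out_rows)
        # Output rows start..stop-1 depend on input rows start..stop+1.
        strip = img[start:stop + 2]
        stitched.extend(conv3x3_valid(strip, kernel))
    return stitched
```

Each strip could be sent to a different device, since it carries the overlapping rows it needs; the stitched result then matches the full-image result exactly, while each device holds only a fraction of the input.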

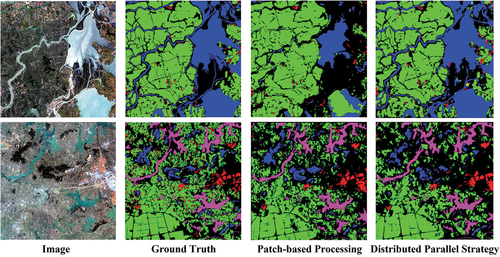

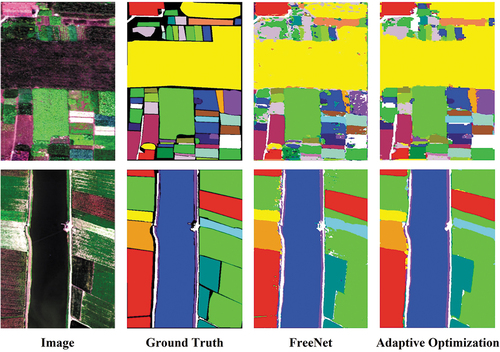

Table 2 reports the overall MIoU comparing patch-based processing with the distributed parallel computing strategy on GID using the FCN8s model. As shown in Table 2, large-scale image input with the distributed parallel computing strategy outperforms the traditional patch-based processing method by 5.05% in overall MIoU. An accompanying figure visualizes the prediction results on the validation set. This result indicates that the proposed distributed parallel computing strategy enables the network to process large-scale images directly and improves the extraction accuracy by learning larger spatial contexts of images.

Table 2. Land-use classification results on GID with FCN8s model.

5.2.2. Adaptive optimization

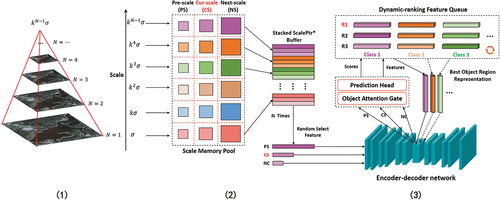

5.2.2.1. Adaptive scale memory pool

LuoJiaNET constructs a scale memory pool for adaptively storing, learning, and optimizing multi-scale stacked image features. This method’s key technologies include 1) Multi-scale image pyramid construction. Similar to the Laplacian scale space construction, a high-order pyramid is introduced to reduce the loss of scale variation in building an image pyramid. 2) Scale memory pool construction. For each LCP unit image feature (Section 5.2.1), its current-scale, previous-scale, and next-scale images are all stored in the scale memory pool. In each network training iteration, the scale memory pool stacks multiple-scale features and sends them to a DL network for adaptive learning.
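The idea of keeping the previous, current, and next scale of each unit together can be sketched as follows. Note the assumptions: the pyramid here uses plain 2x2 average pooling as a stand-in for the high-order pyramid described above, and all function names are hypothetical.

```python
def downsample2x(img):
    """Halve the resolution by averaging non-overlapping 2x2 blocks."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [
        [
            (img[2 * r][2 * c] + img[2 * r][2 * c + 1]
             + img[2 * r + 1][2 * c] + img[2 * r + 1][2 * c + 1]) / 4
            for c in range(w)
        ]
        for r in range(h)
    ]


def build_pyramid(img, levels):
    """Level 0 is the input image; each further level is half the size."""
    pyramid = [img]
    for _ in range(levels - 1):
        pyramid.append(downsample2x(pyramid[-1]))
    return pyramid


def scale_pool_entry(pyramid, level):
    """Return (previous, current, next) scales for one pyramid level.
    A neighbouring scale that does not exist is returned as None."""
    prev_scale = pyramid[level - 1] if level > 0 else None
    next_scale = pyramid[level + 1] if level + 1 < len(pyramid) else None
    return prev_scale, pyramid[level], next_scale
```

A training loop could then resample the three scales of an entry to a common size and stack them along the channel axis before feeding them to the network.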

5.2.2.2. Dynamic feature selection filter

LuoJiaNET proposes an adaptive optimization method based on a dynamic feature selection filter to learn and optimize data channels adaptively. As shown in an accompanying figure, this optimization method uses a memory array of fixed size to dynamically store and update the best features during end-to-end network training. The similarity between the predicted value and the label is the criterion for admitting high-quality features for each ground object into the dynamic feature selection filter. These high-quality features are then applied as additional weights to guide network training.
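A fixed-size memory that keeps only the best-scoring features per class can be sketched with a min-heap. This is a simplified illustration: the `FeatureMemory` class, its method names, and the use of a scalar similarity score are assumptions for the example, not the LuoJiaNET implementation.

```python
import heapq
from collections import defaultdict
from itertools import count


class FeatureMemory:
    """Keep at most `capacity` highest-scoring features per class."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._heaps = defaultdict(list)  # class id -> min-heap of (score, tie, feature)
        self._tie = count()              # tie-breaker so features are never compared

    def update(self, class_id, score, feature):
        """Offer a feature; it is stored only if it beats the current worst."""
        heap = self._heaps[class_id]
        item = (score, next(self._tie), feature)
        if len(heap) < self.capacity:
            heapq.heappush(heap, item)
        elif score > heap[0][0]:
            heapq.heapreplace(heap, item)  # evict the lowest-scoring feature

    def best(self, class_id):
        """Stored features for a class, highest score first."""
        return [feature for _, _, feature in sorted(self._heaps[class_id], reverse=True)]
```

Here `score` stands for the similarity between prediction and label; because the memory size is fixed, the storage cost stays constant however long training runs.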

Table 3 reports the overall accuracy (OA) and Kappa coefficient for validating the adaptive optimization method on the WHU-Hi dataset (Zhong et al. 2020) using the FreeNet model. As shown in Table 3, the network with adaptive optimization improves OA by 1.54% and Kappa by 1.88%. An accompanying figure visualizes the prediction results on the validation set. This result indicates that the proposed adaptive optimization method can improve the performance of network models.
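For reference, OA and Cohen's Kappa can both be derived from a confusion matrix: OA is the observed agreement, and Kappa corrects it for the agreement expected by chance. The sketch below follows these standard definitions; the function name and input format are assumptions for illustration.

```python
def overall_accuracy_and_kappa(confusion):
    """Return (OA, Kappa) for a square confusion matrix where
    confusion[i][j] counts samples of true class i predicted as class j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    observed = sum(confusion[i][i] for i in range(n)) / total
    # Chance agreement: sum over classes of (row total * column total) / total^2.
    expected = sum(
        sum(confusion[i]) * sum(confusion[r][i] for r in range(n))
        for i in range(n)
    ) / (total * total)
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa
```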

Table 3. Land-use classification results on WHU-Hi with FreeNet model.

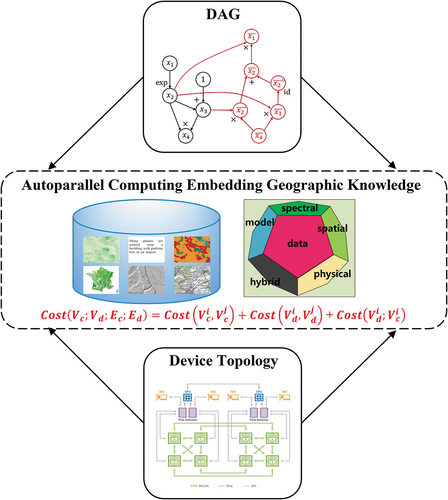

5.2.3. Parallel geographic knowledge embedding

LuoJiaNET proposes an auto-parallel computing strategy that embeds geographic knowledge for processing different types of remote sensing images (e.g. multi-spectral, hyperspectral, and SAR). Specific operators are developed to extract prior geographic knowledge (e.g. image texture, boundary, and physics-based features), and this prior knowledge is integrated into the cost function of the LuoJiaNET framework for multi-dimensional mixed auto-parallel computing, thereby achieving the optimal computational assignment of DAGs on multiple hardware devices. The auto-parallel cost function contains three terms: 1) the delay and bandwidth communication cost of transmission operators between devices; 2) the operation cost on different devices under the auto-parallel strategy; and 3) the geographic knowledge-based cost in parallel training.
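The three cost terms can be illustrated with a toy strategy search. Everything in this sketch is an assumption made for illustration: the paper does not state how the terms are combined, so a simple weighted sum is used, and the names `Strategy`, `strategy_cost`, `pick_strategy`, and all numeric values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Strategy:
    name: str
    bytes_transferred: float    # data moved between devices per step
    flops_per_device: float     # work on the busiest device per step
    knowledge_penalty: float    # hypothetical geographic-knowledge term


def strategy_cost(s, latency_s, bandwidth_bytes_per_s,
                  device_flops_per_s, knowledge_weight):
    """Assumed additive cost: communication + computation + knowledge."""
    communication = latency_s + s.bytes_transferred / bandwidth_bytes_per_s
    computation = s.flops_per_device / device_flops_per_s
    knowledge = knowledge_weight * s.knowledge_penalty
    return communication + computation + knowledge


def pick_strategy(strategies, **hardware):
    """Return the candidate with the lowest estimated cost."""
    return min(strategies, key=lambda s: strategy_cost(s, **hardware))


# Two made-up candidates evaluated on made-up hardware parameters.
candidates = [
    Strategy("data_parallel", bytes_transferred=1e9,
             flops_per_device=1e12, knowledge_penalty=0.1),
    Strategy("model_parallel", bytes_transferred=4e9,
             flops_per_device=5e11, knowledge_penalty=0.3),
]
hardware = dict(latency_s=1e-3, bandwidth_bytes_per_s=1e10,
                device_flops_per_s=1e13, knowledge_weight=0.1)
chosen = pick_strategy(candidates, **hardware)
```

A real auto-parallel planner searches over per-operator partitions of the DAG rather than over two named strategies; the sketch only shows how a knowledge term can shift the comparison.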

In the experiment, we choose the gray-level co-occurrence matrices (GLCMs) (Ojala, Pietikäinen, and Harwood Citation1996) of remote sensing images as the prior geographic knowledge. Table 4 reports the top-1 and top-5 accuracies of nonparallel training, auto-parallel training with GLCM image features, and auto-parallel training with GLCMs embedded in the cost function on the WHU-RS19 dataset (Xia et al. Citation2010) using the ResNet-50 model. Auto-parallel training with GLCM image features outperforms the nonparallel training strategy by 3% in top-1 accuracy. With GLCMs embedded in the cost function, the top-5 and top-1 accuracies of auto-parallel training increase to 99% and 87%, respectively. This result shows that the proposed parallel optimization method can significantly improve network accuracy.
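For reference, a GLCM counts how often a pixel with gray level i has a neighbor with gray level j at a fixed offset. The following minimal sketch computes a normalized GLCM and its contrast statistic for a small quantized image; the function names and the toy image are illustrative and independent of the LuoJiaNET operators.

```python
def glcm(image, levels, dx=1, dy=0):
    """Normalized gray-level co-occurrence matrix for one pixel offset.

    image: 2-D list of integer gray levels in [0, levels).
    (dx, dy): column and row offset to the neighboring pixel.
    """
    counts = [[0] * levels for _ in range(levels)]
    h, w = len(image), len(image[0])
    for r in range(h):
        for c in range(w):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < h and 0 <= c2 < w:
                counts[image[r][c]][image[r2][c2]] += 1
    total = sum(map(sum, counts))
    if total == 0:                      # image too small for this offset
        return [[0.0] * levels for _ in range(levels)]
    return [[v / total for v in row] for row in counts]


def contrast(p):
    """GLCM contrast: large when neighboring gray levels differ strongly."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


# Toy 4x4 image quantized to four gray levels, horizontal neighbor offset.
toy_image = [[0, 0, 1, 1],
             [0, 0, 1, 1],
             [0, 2, 2, 2],
             [2, 2, 3, 3]]
p = glcm(toy_image, levels=4)
texture_contrast = contrast(p)
```

Statistics such as contrast summarize texture in a single number per offset, which makes them cheap to evaluate inside a cost function.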

Table 4. Scene classification results on WHU-RS19 with different parallel computing strategies.

5.3. Framework sharing platform

This section highlights the technology stack of LuoJiaNET’s front-end application architecture. The human-machine interactive modeling tool on the LuoJiaNET platform is introduced to improve users’ productivity.

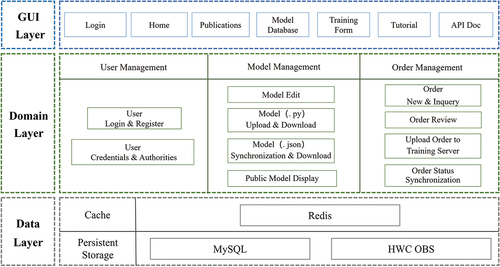

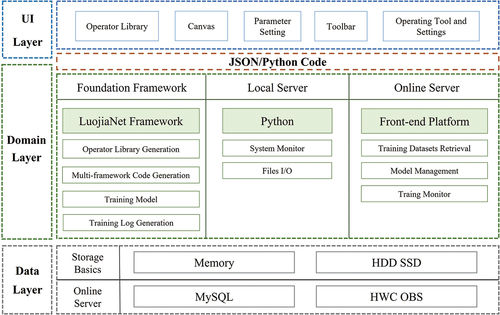

5.3.1. Front-end application architecture

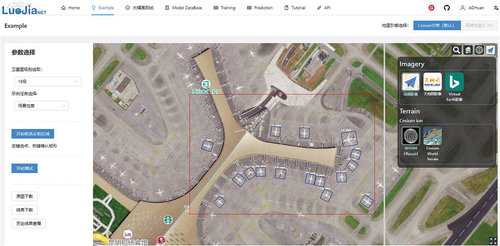

LuoJiaNET’s front-end application architecture consists of three layers built on a browser/server architecture: the interface layer, the application layer, and the data layer. Supported by the Ascend APU DL server of Huawei’s AI Computing Center in Wuhan, the LuoJiaNET platform deploys training services in the cloud to provide operator-friendly applications and efficient background computing services for users. The LuoJiaNET platform links the Cesium web map (https://cesium.com/) with the model prediction results through their geographical coordinates, thereby enabling users to view interpretation results directly in the front-end map window.
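Linking a prediction to a web map amounts to converting pixel coordinates into geographic coordinates. The sketch below assumes a six-coefficient affine geotransform in the GDAL convention and emits a GeoJSON feature that a web map could load; the helper names, the geotransform values, and the bounding box are hypothetical and do not describe the platform's actual interface.

```python
import json


def pixel_to_geo(col, row, gt):
    """Map a pixel position to map coordinates with an affine geotransform.

    gt = (x_origin, pixel_width, row_rotation, y_origin, col_rotation, pixel_height),
    following the GDAL convention (pixel_height is negative for north-up images).
    """
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def box_to_geojson(box, gt, label):
    """Turn a predicted pixel bounding box into a GeoJSON polygon feature."""
    c0, r0, c1, r1 = box
    # Corner order is counterclockwise on the map for north-up images.
    corners = [(c0, r0), (c0, r1), (c1, r1), (c1, r0), (c0, r0)]
    ring = [list(pixel_to_geo(c, r, gt)) for c, r in corners]
    return {
        "type": "Feature",
        "geometry": {"type": "Polygon", "coordinates": [ring]},
        "properties": {"label": label},
    }


# Made-up geotransform (degrees) and one made-up detection.
geotransform = (114.0, 0.001, 0.0, 30.6, 0.0, -0.001)
feature = box_to_geojson((100, 200, 150, 260), geotransform, "building")
payload = json.dumps(feature)
```

Because the result is plain GeoJSON, the same payload can be displayed by any map client that understands the format.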

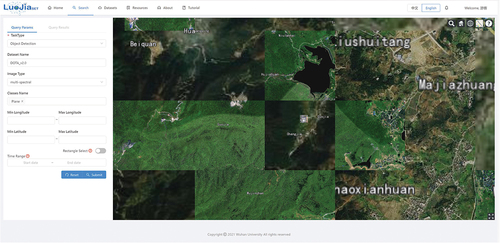

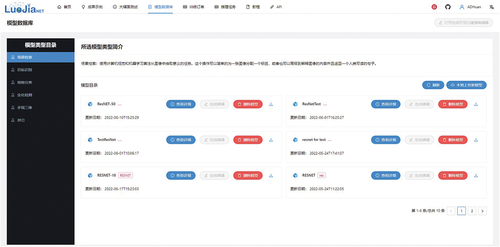

The main page of the LuoJiaNET sharing service platform is composed of a parameter setting bar and a map visualization window. Users can switch maps online and view them freely at any zoom level. Users can draw a region on the satellite map and then employ network models from LuoJiaNET’s model database to accomplish the five typical remote sensing intelligent interpretation tasks: scene classification, object detection, land-use classification, change detection, and multi-view 3D reconstruction. The final model prediction results can be displayed directly on the map for quick checking and export.

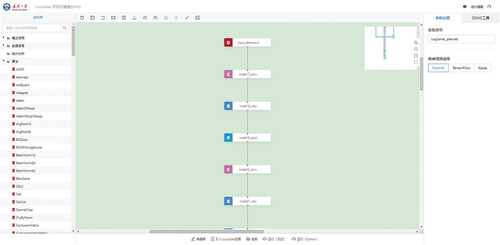

5.3.2. Visual modeling tool

LuoJiaNET provides a GUI-based visual modeling tool to support data-framework collaboration. This tool represents a complex neural network structure as an easily understood DAG diagram to improve users’ productivity in building models. The input data are transmitted and processed node by node through the DAG and then flow into the training or storage terminal of LuoJiaNET. The tool sets up a workflow of online data acquisition, network creation, training, and deployment, which hides the complex programming and computing work in the background services and thereby greatly helps nonprofessional users.
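The node-by-node data flow described above corresponds to evaluating a DAG in topological order. The following minimal sketch uses Python's standard `graphlib` module (Python 3.9 or later); the node names, the toy operators, and the `run_graph` helper are hypothetical and are not part of the visual modeling tool.

```python
from graphlib import TopologicalSorter


def run_graph(nodes, edges, inputs):
    """Evaluate a DAG of operators.

    nodes:  name -> callable taking the outputs of its upstream nodes
    edges:  name -> list of upstream node names (in argument order)
    inputs: name -> value for source nodes that have no operator
    """
    results = dict(inputs)
    for name in TopologicalSorter(edges).static_order():
        if name in results:              # source node supplied by the caller
            continue
        args = [results[upstream] for upstream in edges.get(name, [])]
        results[name] = nodes[name](*args)
    return results


# Toy three-operator pipeline: normalize, aggregate, threshold.
nodes = {
    "scale": lambda pixels: [v / 255 for v in pixels],
    "mean": lambda pixels: sum(pixels) / len(pixels),
    "threshold": lambda value: value > 0.5,
}
edges = {"scale": ["data"], "mean": ["scale"], "threshold": ["mean"]}
outputs = run_graph(nodes, edges, {"data": [0, 255, 255, 255]})
```

Topological ordering guarantees that every operator runs only after all of its upstream nodes have produced their outputs, which is what lets a drag-and-drop editor accept nodes in any order.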

The main page of LuoJiaNET’s visual modeling tool is organized as follows. The left side is the operator database of various network models. Users can drag operators directly into the main modeling window to generate the corresponding DAG nodes of a model. Users can perform actions, such as copying nodes and undoing changes, by using the toolbar at the top of the main window. The right side of the main page contains the model parameter editor and the monitoring tool for the training process. Users can access the script editor at the bottom of the main window to import APIs from LuoJiaSET’s sample database, obtain remote sensing data, and write Python code for model training and inference.

6. Applications

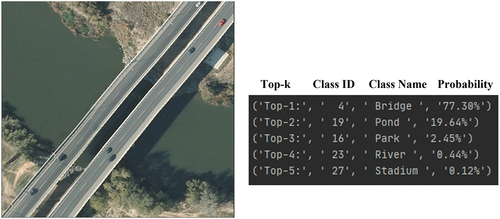

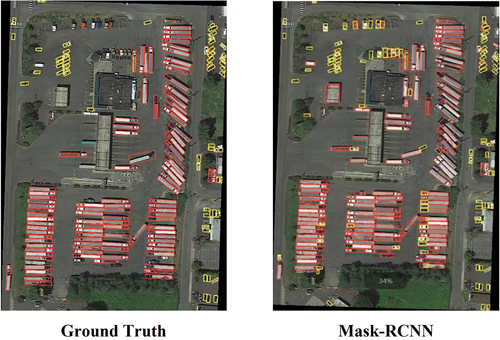

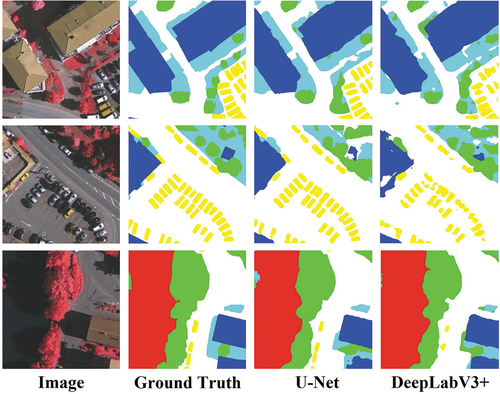

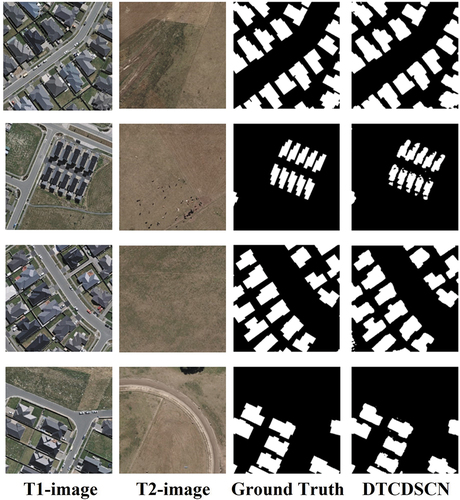

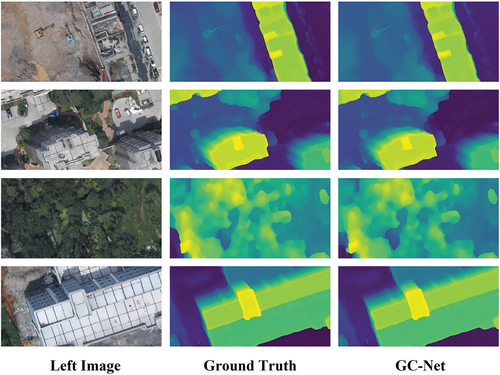

LuoJiaAI is used across different remote-sensing interpretation tasks, such as scene classification, object detection (Figure 26), land-use classification (Figure 27), change detection (Figure 28), and multi-view 3D reconstruction (Figure 29). The results of these validation experiments demonstrate the effectiveness of LuoJiaAI and provide strong support for further optimizing and improving its overall architecture. The quantitative and qualitative experiments are documented in the white paper (https://github.com/WHULuoJiaTeam/luojianet/blob/master/whitepaper.pdf), and the code for these AI models is available at https://github.com/WHULuoJiaTeam/luojianet/tree/master/model_zoo.

Figure 26. Visualization on DOTAV2.0 (Ding et al. Citation2021) using Mask-RCNN (He et al. Citation2017) in object detection task.

Figure 27. Visualization on ISPRS-Vaihingen (Rottensteiner et al. Citation2012) using U-Net (Ronneberger, Fischer, and Brox Citation2015) and DeepLabV3+ (Chen et al. Citation2018) in land-use classification task.

Figure 28. Visualization on WHU-BCD using DTCDSCN (Liu et al. Citation2020) in change detection task.

Figure 29. Visualization on WHU-Stereo (Li et al. Citation2022) using GC-Net (Ni et al. Citation2020) in multi-view 3D reconstruction task.

7. Conclusion and future work

In this paper, we describe the design considerations and methods for constructing the LuoJiaAI platform, which is composed of a large-scale sample database with a unified and scalable classification scheme (LuoJiaSET) and a dedicated DL framework tailored to remote sensing data characteristics (LuoJiaNET). It is a cloud-based AI platform that follows OGC standards for intelligent interpretation and analysis of remote sensing data, and it draws on Huawei’s massive data storage and computational capabilities to achieve state-of-the-art performance on five typical remote sensing interpretation tasks: scene classification, object detection, land-use classification, change detection, and multi-view 3D reconstruction.

RS-CCPs are bound to remain a long-term topic in remote-sensing interpretation as data volume and computing power continue to grow. The research and development of LuoJiaAI is still in its infancy. For LuoJiaSET, we will continue expanding the size of the sample database and improving the functions of the sharing service platform, such as multi-dimensional retrieval and data analysis. For LuoJiaNET, we will further study strategies for large-scale image processing, optimization methods for IRs with remote sensing data characteristics, data-framework collaboration mechanisms for automatically obtaining high-quality labeled data, and more visual tools and APIs in the front-end web service for better human-machine interaction. LuoJiaAI shows great potential to bridge the separation among remote sensing data, intelligent interpretation algorithms, and cloud-based computing resources in high-precision remote sensing mapping tasks.

Acknowledgments

We appreciate the effort led by the WHU-LuoJiaAI Group and the Huawei Artificial Intelligence Group. We are grateful to all the colleagues who provided their time and advice during the writing of this paper. Special thanks to Dr. Liangcun Jiang, Dr. Yue Xu, Mr. Haowei Jia, Mr. Yuanxin Zhao, Ms. Siqi Liu, Mr. Bingnan Yang, Mr. Zhenzhang Yang, Mr. Teng Su, Mr. Guowen Zhang, Mr. Laiping Ding, Mr. Shenkai Zhoung, Mr. Jingjun Wang, Mr. Kunyang Tian, Prof. Peng Yue, Prof. Shunping Ji, Prof. Xin Huang, Prof. Haigang Sui, Prof. Guisong Xia, Prof. Yansheng Li, Prof. Kaimin Sun, Prof. Juhua Liu, Prof. Mengting Yuan, Dr. Jiayi Li, and Dr. Xinyu Wang for all their kind support. Thanks to the rest of the WHU-LuoJiaAI Group: Mr. Bin Wang, Ms. Jin Liu, Mr. Haotian Teng, Mr. Jianxun Wang, Mr. Wangbin Li, Mr. Lilin Tu, Mr. Ning Zhou, Mr. Hengwei Zhao, Mr. Yang Pan, and Mr. Wei Chen. Finally, we would like to thank the providers of the thousands of public datasets in LuoJiaAI.

Disclosure statement

No potential conflict of interest was reported by the authors.

Data availability statement

The data that support the findings of this study are available on GitHub at https://github.com/WHULuoJiaTeam.

Additional information

Funding

Notes on contributors

Zhan Zhang

Zhan Zhang is a PhD student at the State Key Laboratory of Information Engineering in Surveying, Mapping, and Remote Sensing, Wuhan, China. His research interests mainly include machine learning systems, remote sensing intelligent interpretation, and machine vision. He contributed to the LuoJiaAI platform design and integration, the implementation of LuoJiaNET’s remote sensing characteristics module, and the manuscript writing of this paper.

Mi Zhang

Mi Zhang is an associate researcher at the School of Remote Sensing and Information Engineering, Wuhan University. He serves as the chief artificial intelligence scientist at Handleray Corporation and as technical director of the WHU-LuoJiaAI Group. His research interests mainly include computer vision and machine learning, with a particular interest in semantic object segmentation and the construction of DL frameworks. He contributed to the overall architecture design and implementation of LuoJiaNET and to the manuscript writing of this paper.

Jianya Gong

Jianya Gong is an Academician of the Chinese Academy of Sciences and Professor and Dean of the School of Remote Sensing and Information Engineering, Wuhan University. His research interests mainly include remote sensing image processing, spatial data infrastructure, geospatial data interoperability, and artificial intelligence. He contributed to the initiation of the LuoJiaAI project and the implementation of this paper.

Xiangyun Hu

Xiangyun Hu is a professor and head of the department of photogrammetry with the School of Remote Sensing and Information Engineering, Wuhan University. His research interests mainly include artificial intelligence and pattern recognition, remote sensing software development, and remote sensing intelligent interpretation. He led the WHU-LuoJiaAI Group and contributed to the methodology and implementation of this paper.

Hanjiang Xiong

Hanjiang Xiong is a professor of 3-D geographic information systems at Wuhan University. His research interests mainly include geospatial data management, 3-D visualization, augmented reality, and indoor and outdoor geographic information systems. He contributed to the implementation of this paper.

Huan Zhou

Huan Zhou is a master’s student at the Department of Land-Surveying and Geo-Informatics, Hong Kong Polytechnic University. His research interest is in web server development and cartographic visualization. He contributed to the overall architecture design and implementation of the LuoJiaNET’s front-end layer, and use cases of this paper.

Zhipeng Cao

Zhipeng Cao is a PhD student at the School of Remote Sensing and Information Engineering, Wuhan University. His research interest is in big spatiotemporal data management. He contributed to the implementation of this paper.

References

- Abadi, M., A. Agarwal, P. Barham, E. Brevdo, Z. Chen, C. Citro, G. S. Corrado, A. Davis, J. Dean, and M. Devin. 2016. “Tensorflow: Large-Scale Machine Learning on Heterogeneous Distributed Systems.” arXiv preprint arXiv:1603.04467.

- Alzubaidi, L., J. Zhang, A. J. Humaidi, A. Al-Dujaili, Y. Duan, O. Al-Shamma, J. Santamaría, M. A. Fadhel, M. Al-Amidie, and L. Farhan. 2021. “Review of Deep Learning: Concepts, CNN Architectures, Challenges, Applications, Future Directions.” Journal of Big Data 8 (1): 1–74. doi:10.1186/s40537-021-00444-8.

- Amani, M., A. Ghorbanian, S. A. Ahmadi, M. Kakooei, A. Moghimi, S. M. Mirmazloumi, S. H. A. Moghaddam, S. Mahdavi, M. Ghahremanloo, and S. Parsian. 2020. “Google Earth Engine Cloud Computing Platform for Remote Sensing Big Data Applications: A Comprehensive Review.” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing 13: 5326–5350. doi:10.1109/JSTARS.2020.3021052.

- Anwer, R. M., F. S. Khan, J. Van De Weijer, M. Molinier, and J. Laaksonen. 2018. “Binary Patterns Encoded Convolutional Neural Networks for Texture Recognition and Remote Sensing Scene Classification.” ISPRS Journal of Photogrammetry and Remote Sensing 138: 74–85. doi:10.1016/j.isprsjprs.2018.01.023.

- Badgley, G., C. B. Field, and J. A. Berry. 2017. “Canopy Near-Infrared Reflectance and Terrestrial Photosynthesis.” Science Advances 3 (3): e1602244. doi:10.1126/sciadv.1602244.

- Bisong, E. 2019. Building Machine Learning and Deep Learning Models on Google Cloud Platform. Berlin, Germany: Springer.

- Ceccherini, G., G. Duveiller, G. Grassi, G. Lemoine, V. Avitabile, R. Pilli, and A. Cescatti. 2020. “Abrupt Increase in Harvested Forest Area Over Europe After 2015.” Nature 583 (7814): 72–77. doi:10.1038/s41586-020-2438-y.

- Chen, L. 2021. Deep Learning and Practice with MindSpore. Berlin, Germany: Springer Nature.

- Chen, H., O. Engkvist, Y. Wang, M. Olivecrona, and T. Blaschke. 2018. “The Rise of Deep Learning in Drug Discovery.” Drug Discovery Today 23 (6): 1241–1250. doi:10.1016/j.drudis.2018.01.039.

- Chen, T., T. Moreau, Z. Jiang, H. Shen, E. Q. Yan, L. Wang, Y. Hu, L. Ceze, C. Guestrin, and A. Krishnamurthy. 2018. “TVM: End-To-End Optimization Stack for Deep Learning.” arXiv preprint arXiv:1802.04799.

- Chen, L. C., G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille. 2017. “Deeplab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected Crfs.” IEEE Transactions on Pattern Analysis and Machine Intelligence 40 (4): 834–848. doi:10.1109/TPAMI.2017.2699184.

- Chen, L. C., Y. Zhu, G. Papandreou, F. Schroff, and H. Adam. 2018. “Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation.” Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 801–818.

- Cheng, G., J. Han, and X. Lu. 2017. “Remote Sensing Image Scene Classification: Benchmark and State of the Art.” Proceedings of the IEEE 105 (10): 1865–1883. doi:10.1109/JPROC.2017.2675998.

- Cheng, G., C. Yang, X. Yao, L. Guo, and J. Han. 2018. “When Deep Learning Meets Metric Learning: Remote Sensing Image Scene Classification via Learning Discriminative CNNs.” IEEE Transactions on Geoscience and Remote Sensing 56 (5): 2811–2821. doi:10.1109/TGRS.2017.2783902.

- Dai, D., and W. Yang. 2010. “Satellite Image Classification via Two-Layer Sparse Coding with Biased Image Representation.” IEEE Geoscience and Remote Sensing Letters 8 (1): 173–176. doi:10.1109/LGRS.2010.2055033.

- Daudt, R. C., B. Le Saux, A. Boulch, and Y. Gousseau. 2019. “Multitask Learning for Large-Scale Semantic Change Detection.” Computer Vision and Image Understanding 187: 102783. doi:10.1016/j.cviu.2019.07.003.

- Deren, L. I., M. Wang, X. Shen, and Z. Dong. 2017. “From Earth Observation Satellite to Earth Observation Brain.” Geomatics and Information Science of Wuhan University 42 (2): 143–149.

- Ding, J., N. Xue, G. S. Xia, X. Bai, W. Yang, M. Yang, S. Belongie, J. Luo, M. Datcu, and M. Pelillo. 2021. “Object Detection in Aerial Images: A Large-Scale Benchmark and Challenges.” IEEE Transactions on Pattern Analysis and Machine Intelligence 44: 7778–7796. doi:10.1109/TPAMI.2021.3117983.

- Gao, S., J. Rao, Y. Kang, Y. Liang, J. Kruse, D. Doepfer, A. K. Sethi, J. F. M. Reyes, J. Patz, and B. S. Yandell. 2020. “Mobile Phone Location Data Reveal the Effect and Geographic Variation of Social Distancing on the Spread of the COVID-19 Epidemic.” arXiv preprint arXiv:2004.11430.

- Gong, J. 2018. “Chances and Challenges for Development of Surveying and Remote Sensing in the Age of Artificial Intelligence.” Wuhan Daxue Xuebao (Xinxi Kexue Ban)/Geomatics and Information Science of Wuhan University 43 (12): 1788–1796.

- Gorelick, N., M. Hancher, M. Dixon, S. Ilyushchenko, D. Thau, and R. Moore. 2017. “Google Earth Engine: Planetary-Scale Geospatial Analysis for Everyone.” Remote Sensing of Environment 202: 18–27. doi:10.1016/j.rse.2017.06.031.

- Han, J., J. Ding, J. Li, and G. S. Xia. 2021. “Align Deep Features for Oriented Object Detection.” IEEE Transactions on Geoscience and Remote Sensing 60: 1–11. doi:10.1109/TGRS.2021.3062048.

- Hansen, M. C., P. V. Potapov, R. Moore, M. Hancher, S. A. Turubanova, A. Tyukavina, D. Thau, S. V. Stehman, S. J. Goetz, and T. R. Loveland. 2013. “High-Resolution Global Maps of 21st-Century Forest Cover Change.” Science 342 (6160): 850–853. doi:10.1126/science.1244693.

- He, K., G. Gkioxari, P. Dollár, and R. Girshick. 2017. “Mask R-CNN.” Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 2961–2969.

- He, K., X. Zhang, S. Ren, and J. Sun. 2016. “Deep Residual Learning for Image Recognition.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, Nevada, 770–778.

- Huawei Technologies Co., Ltd. 2022. “Huawei MindSpore AI Development Framework.” Artificial Intelligence Technology, 137–162. Springer.

- Ji, S., S. Wei, and M. Lu. 2018. “Fully Convolutional Networks for Multisource Building Extraction from an Open Aerial and Satellite Imagery Data Set.” IEEE Transactions on Geoscience and Remote Sensing 57 (1): 574–586. doi:10.1109/TGRS.2018.2858817.

- Kontgis, C., M. S. Warren, S. W. Skillman, R. Chartrand, and D. I. Moody. 2017. “Leveraging Sentinel-1 Time-Series Data for Mapping Agricultural Land Cover and Land Use in the Tropics.” 2017 9th International Workshop on the Analysis of Multitemporal Remote Sensing Images (MultiTemp), IEEE. Brugge, Belgium, 1–4.

- Krizhevsky, A., I. Sutskever, and G. E. Hinton. 2017. “Imagenet Classification with Deep Convolutional Neural Networks.” Communications of the ACM 60 (6): 84–90. doi:10.1145/3065386.

- Kumar, L., and O. Mutanga. 2018. “Google Earth Engine Applications Since Inception: Usage, Trends, and Potential.” Remote Sensing 10 (10): 1509. doi:10.3390/rs10101509.

- LeCun, Y., Y. Bengio, and G. Hinton. 2015. “Deep Learning.” Nature 521 (7553): 436–444. doi:10.1038/nature14539.

- Lewis, A., S. Oliver, L. Lymburner, B. Evans, L. Wyborn, N. Mueller, G. Raevksi, J. Hooke, R. Woodcock, and J. Sixsmith. 2017. “The Australian Geoscience Data Cube—foundations and Lessons Learned.” Remote Sensing of Environment 202: 276–292. doi:10.1016/j.rse.2017.03.015.

- Li, S., S. He, S. Jiang, W. Jiang, and L. Zhang. 2022. “WHU-Stereo: A Challenging Benchmark for Stereo Matching of High-Resolution Satellite Images.” arXiv preprint arXiv:2206.02342.

- Li, W., and C. Y. Hsu. 2020. “Automated Terrain Feature Identification from Remote Sensing Imagery: A Deep Learning Approach.” International Journal of Geographical Information Science 34 (4): 637–660. doi:10.1080/13658816.2018.1542697.

- Li, J., X. Huang, and J. Gong. 2019. “Deep Neural Network for Remote-Sensing Image Interpretation: Status and Perspectives.” National Science Review 6 (6): 1082–1086. doi:10.1093/nsr/nwz058.

- Li, K., G. Wan, G. Cheng, L. Meng, and J. Han. 2020. “Object Detection in Optical Remote Sensing Images: A Survey and a New Benchmark.” ISPRS Journal of Photogrammetry and Remote Sensing 159: 296–307. doi:10.1016/j.isprsjprs.2019.11.023.

- Liermann, V. 2021. “Overview Machine Learning and Deep Learning Frameworks.” In The Digital Journey of Banking and Insurance, Volume III, 187–224. Cham, Switzerland: Springer.

- Liu, J., and S. Ji. 2020. “A Novel Recurrent Encoder-Decoder Structure for Large-Scale Multi-View Stereo Reconstruction from an Open Aerial Dataset.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 6050–6059.

- Liu, Y., C. Pang, Z. Zhan, X. Zhang, and X. Yang. 2020. “Building Change Detection for Remote Sensing Images Using a Dual-Task Constrained Deep Siamese Convolutional Network Model.” IEEE Geoscience and Remote Sensing Letters 18 (5): 811–815. doi:10.1109/LGRS.2020.2988032.

- Liu, J., W. Wang, and H. Zhong. 2020. “EarthDataminer: A Cloud-Based Big Earth Data Intelligence Analysis Platform.” IOP Conference Series: Earth and Environmental Science 509 (1): 012032 (15pp). doi:10.1088/1755-1315/509/1/012032.

- Long, J., E. Shelhamer, and T. Darrell. 2015. “Fully Convolutional Networks for Semantic Segmentation.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hynes Convention Center in Boston, Massachusetts, USA, 3431–3440.

- Ma, L., Y. Liu, X. Zhang, Y. Ye, G. Yin, and B. A. Johnson. 2019. “Deep Learning in Remote Sensing Applications: A Meta-Analysis and Review.” ISPRS Journal of Photogrammetry and Remote Sensing 152: 166–177. doi:10.1016/j.isprsjprs.2019.04.015.

- Ma, Y., D. Yu, T. Wu, and H. Wang. 2019. “PaddlePaddle: An Open-Source Deep Learning Platform from Industrial Practice.” Frontiers of Data and Computing 1 (1): 105–115.

- MacDonald, A. J., and E. A. Mordecai. 2019. “Amazon Deforestation Drives Malaria Transmission, and Malaria Burden Reduces Forest Clearing.” Proceedings of the National Academy of Sciences 116 (44): 22212–22218. doi:10.1073/pnas.1905315116.

- Maggiori, E., Y. Tarabalka, G. Charpiat, and P. Alliez. 2017. “Can Semantic Labeling Methods Generalize to Any City? The Inria Aerial Image Labeling Benchmark.” 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), IEEE. Fort Worth, TX, USA, 3226–3229.

- Marc, B., K. Foster, G. Christie, S. Wang, G. D. Hager, and M. Brown. 2019. “Semantic Stereo for Incidental Satellite Images.” 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), IEEE. Waikoloa, HI, USA, 1524–1532.

- Mutanga, O., and L. Kumar. 2019. Google Earth Engine Applications. MDPI.

- Nemani, R. 2011. “NASA Earth Exchange: Next Generation Earth Science Collaborative.” ISPRS-International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences XXXVIII-8/W20: 17. doi:10.5194/isprsarchives-XXXVIII-8-W20-17-2011.

- Nemani, R. R., B. L. Thrasher, W. Wang, T. J. Lee, F. S. Melton, J. L. Dungan, and A. Michaelis. 2015. “NASA Earth Exchange (Nex) Supporting Analyses for National Climate Assessments.” AGU Fall Meeting Abstracts.

- Ni, J., J. Wu, J. Tong, Z. Chen, and J. Zhao. 2020. “GC-Net: Global Context Network for Medical Image Segmentation.” Computer Methods and Programs in Biomedicine 190: 105121. doi:10.1016/j.cmpb.2019.105121.

- Ojala, T., M. Pietikäinen, and D. Harwood. 1996. “A Comparative Study of Texture Measures with Classification Based on Featured Distributions.” Pattern Recognition 29 (1): 51–59. doi:10.1016/0031-3203(95)00067-4.

- Paszke, A., S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, and L. Antiga. 2019. “Pytorch: An Imperative Style, High-Performance Deep Learning Library.” In Advances in Neural Information Processing Systems, edited by H. Wallach, Vol. 32, 8024–8035.

- Pekel, J. F., A. Cottam, N. Gorelick, and A. S. Belward. 2016. “High-Resolution Mapping of Global Surface Water and Its Long-Term Changes.” Nature 540 (7633): 418–422. doi:10.1038/nature20584.

- Rao, Q., and J. Frtunikj. 2018. “Deep Learning for Self-Driving Cars: Chances and Challenges.” Proceedings of the 1st International Workshop on Software Engineering for AI in Autonomous Systems, Gothenburg, Sweden, 35–38.

- Razakarivony, S., and F. Jurie. 2016. “Vehicle Detection in Aerial Imagery: A Small Target Detection Benchmark.” Journal of Visual Communication and Image Representation 34: 187–203. doi:10.1016/j.jvcir.2015.11.002.

- Reichstein, M., G. Camps-Valls, B. Stevens, M. Jung, J. Denzler, and N. Carvalhais. 2019. “Deep Learning and Process Understanding for Data-Driven Earth System Science.” Nature 566 (7743): 195–204. doi:10.1038/s41586-019-0912-1.

- Ren, P., Y. Xiao, X. Chang, P. Y. Huang, Z. Li, X. Chen, and X. Wang. 2021. “A Comprehensive Survey of Neural Architecture Search: Challenges and Solutions.” ACM Computing Surveys (CSUR) 54 (4): 1–34. doi:10.1145/3447582.

- Ronneberger, O., P. Fischer, and T. Brox. 2015. “U-Net: Convolutional Networks for Biomedical Image Segmentation.” International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer, Cham. Munich, Germany, 234–241.

- Rottensteiner, F., G. Sohn, J. Jung, M. Gerke, C. Baillard, S. Benitez, and U. Breitkopf. 2012. “The ISPRS Benchmark on Urban Object Classification and 3D Building Reconstruction.” ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences I-3: 293–298. doi:10.5194/isprsannals-I-3-293-2012.

- Russakovsky, O., J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, and M. Bernstein. 2015. “Imagenet Large Scale Visual Recognition Challenge.” International Journal of Computer Vision 115 (3): 211–252. doi:10.1007/s11263-015-0816-y.

- Simonyan, K., and A. Zisserman. 2014. “Very Deep Convolutional Networks for Large-Scale Image Recognition.” arXiv preprint arXiv:1409.1556.

- Sumbul, G., M. Charfuelan, B. Demir, and V. Markl. 2019. “Bigearthnet: A Large-Scale Benchmark Archive for Remote Sensing Image Understanding.” IGARSS 2019-2019 IEEE International Geoscience and Remote Sensing Symposium, IEEE. Yokohama, Japan, 5901–5904.

- Sun, Z., L. Sandoval, R. Crystal-Ornelas, S. M. Mousavi, J. Wang, C. Lin, N. Cristea, D. Tong, W. H. Carande, and X. Ma. 2022. “A Review of Earth Artificial Intelligence.” Computers & Geosciences 105034. doi:10.1016/j.cageo.2022.105034.

- Sun, K., B. Xiao, D. Liu, and J. Wang. 2019. “Deep High-Resolution Representation Learning for Human Pose Estimation.” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 5693–5703.

- Sun, K., Y. Zhao, B. Jiang, T. Cheng, B. Xiao, D. Liu, Y. Mu, X. Wang, W. Liu, and J. Wang. 2019. “High-Resolution Representations for Labeling Pixels and Regions.” arXiv preprint arXiv:1904.04514.

- Szegedy, C., W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, and A. Rabinovich. 2015. “Going Deeper with Convolutions.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, Massachusetts, USA, 1–9.

- Tamiminia, H., B. Salehi, M. Mahdianpari, L. Quackenbush, S. Adeli, and B. Brisco. 2020. “Google Earth Engine for Geo-Big Data Applications: A Meta-Analysis and Systematic Review.” ISPRS Journal of Photogrammetry and Remote Sensing 164: 152–170. doi:10.1016/j.isprsjprs.2020.04.001.

- Tenopir, C., N. M. Rice, S. Allard, L. Baird, J. Borycz, L. Christian, B. Grant, R. Olendorf, and R. J. Sandusky. 2020. “Data Sharing, Management, Use, and Reuse: Practices and Perceptions of Scientists Worldwide.” PLoS One 15 (3): e0229003. doi:10.1371/journal.pone.0229003.

- Tong, X. Y., G. S. Xia, Q. Lu, H. Shen, S. Li, S. You, and L. Zhang. 2020. “Land-Cover Classification with High-Resolution Remote Sensing Images Using Transferable Deep Models.” Remote Sensing of Environment 237: 111322. doi:10.1016/j.rse.2019.111322.

- Toth, C., and G. Jóźków. 2016. “Remote Sensing Platforms and Sensors: A Survey.” ISPRS Journal of Photogrammetry and Remote Sensing 115: 22–36. doi:10.1016/j.isprsjprs.2015.10.004.

- Van Etten, A., D. Lindenbaum, and T. M. Bacastow. 2018. “Spacenet: A Remote Sensing Dataset and Challenge Series.” arXiv preprint arXiv:1807.01232.

- Wang, L., C. Diao, G. Xian, D. Yin, Y. Lu, S. Zou, and T. A. Erickson. 2020. “A Summary of the Special Issue on Remote Sensing of Land Change Science with Google Earth Engine.” Remote Sensing of Environment 248: 112002. doi:10.1016/j.rse.2020.112002.

- Xia, G. S., X. Bai, J. Ding, Z. Zhu, S. Belongie, J. Luo, M. Datcu, M. Pelillo, and L. Zhang. 2018. “DOTA: A Large-Scale Dataset for Object Detection in Aerial Images.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, Utah, USA, 3974–3983.

- Xia, G. S., J. Hu, F. Hu, B. Shi, X. Bai, Y. Zhong, L. Zhang, and X. Lu. 2017. “AID: A Benchmark Data Set for Performance Evaluation of Aerial Scene Classification.” IEEE Transactions on Geoscience and Remote Sensing 55 (7): 3965–3981. doi:10.1109/TGRS.2017.2685945.

- Xia, G. S., W. Yang, J. Delon, Y. Gousseau, H. Sun, and H. Maître. 2010. “Structural High-Resolution Satellite Image Indexing.” ISPRS TC VII Symposium-100 Years ISPRS, Vienna, Austria, 38, 298–303.

- Xiong, J., P. S. Thenkabail, M. K. Gumma, P. Teluguntla, J. Poehnelt, R. G. Congalton, K. Yadav, and D. Thau. 2017. “Automated Cropland Mapping of Continental Africa Using Google Earth Engine Cloud Computing.” ISPRS Journal of Photogrammetry and Remote Sensing 126: 225–244. doi:10.1016/j.isprsjprs.2017.01.019.

- Yang, C., Q. Huang, Z. Li, K. Liu, and F. Hu. 2017. “Big Data and Cloud Computing: Innovation Opportunities and Challenges.” International Journal of Digital Earth 10 (1): 13–53. doi:10.1080/17538947.2016.1239771.

- Yao, Y., Z. Luo, S. Li, T. Fang, and L. Quan. 2018. “Mvsnet: Depth Inference for Unstructured Multi-View Stereo.” Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 767–783.

- Young, T., D. Hazarika, S. Poria, and E. Cambria. 2018. “Recent Trends in Deep Learning Based Natural Language Processing.” IEEE Computational Intelligence Magazine 13 (3): 55–75. doi:10.1109/MCI.2018.2840738.

- Yuan, Q., H. Shen, T. Li, Z. Li, S. Li, Y. Jiang, H. Xu, W. Tan, Q. Yang, and J. Wang. 2020. “Deep Learning in Environmental Remote Sensing: Achievements and Challenges.” Remote Sensing of Environment 241: 111716. doi:10.1016/j.rse.2020.111716.

- Yue, P., B. Shangguan, L. Hu, L. Jiang, C. Zhang, Z. Cao, and Y. Pan. 2022. “Towards a Training Data Model for Artificial Intelligence in Earth Observation.” International Journal of Geographical Information Science 36: 1–25. doi:10.1080/13658816.2022.2087223.

- Zhang, C., P. Yue, D. Tapete, L. Jiang, B. Shangguan, L. Huang, and G. Liu. 2020. “A Deeply Supervised Image Fusion Network for Change Detection in High Resolution Bi-Temporal Remote Sensing Images.” ISPRS Journal of Photogrammetry and Remote Sensing 166: 183–200. doi:10.1016/j.isprsjprs.2020.06.003.

- Zheng, Z., Y. Zhong, A. Ma, and L. Zhang. 2020. “FPGA: Fast Patch-Free Global Learning Framework for Fully End-To-End Hyperspectral Image Classification.” IEEE Transactions on Geoscience and Remote Sensing 58 (8): 5612–5626. doi:10.1109/TGRS.2020.2967821.

- Zhong, Y., X. Hu, C. Luo, X. Wang, J. Zhao, and L. Zhang. 2020. “WHU-Hi: UAV-Borne Hyperspectral with High Spatial Resolution (H2) Benchmark Datasets and Classifier for Precise Crop Identification Based on Deep Convolutional Neural Network with CRF.” Remote Sensing of Environment 250: 112012. doi:10.1016/j.rse.2020.112012.

- Zhu, X. X., D. Tuia, L. Mou, G. S. Xia, L. Zhang, F. Xu, and F. Fraundorfer. 2017. “Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources.” IEEE Geoscience and Remote Sensing Magazine 5 (4): 8–36. doi:10.1109/MGRS.2017.2762307.