ABSTRACT

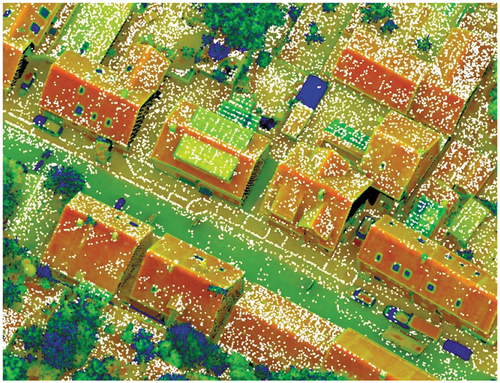

With the rapid development of reality capture methods, such as laser scanning and oblique photogrammetry, point cloud data have become the third most important data source, after vector maps and imagery. Point cloud data also play an increasingly important role in scientific research and engineering in the fields of Earth science, spatial cognition, and smart cities. However, acquiring high-quality three-dimensional (3D) geospatial information from point clouds remains a scientific frontier, and one for which there is urgent demand in surveying and mapping as well as in geoscience applications. Point cloud intelligence has emerged to address these challenges. This paper summarizes the state of the art of point cloud intelligence with regard to acquisition equipment, intelligent processing, scientific research, and engineering applications. For this purpose, we refer to a recent project on the hybrid georeferencing of images and LiDAR data for high-quality point cloud collection, as well as a current benchmark for the semantic segmentation of high-resolution 3D point clouds. These projects were conducted at the Institute for Photogrammetry, University of Stuttgart, which was initially headed by the late Prof. Ackermann. Finally, the development prospects of point cloud intelligence are summarized.

1. Introduction

The European Geospatial Industry Outlook Report released in 2018 added three-dimensional (3D) scanning as one of the four major branches of the traditional geospatial industry (Global Navigation Satellite System (GNSS) and positioning, geographic information systems (GIS) and spatial analytics, Earth observation, and 3D scanning), and predicted that the 3D scanning market will become the fastest-growing of the four, which could drive rapid development in smart cities, intelligent transportation, global mapping, etc. Because they record 3D geometry directly, which vector maps and imagery do not, point cloud data (X, Y, Z, A), where A denotes the point attributes, have become the third most important spatio-temporal data source after vector maps and imagery. 3D point cloud data are the major source of 3D geographic information and play an irreplaceable role in the accurate description of 3D spaces. How to obtain 3D geographic information quickly and accurately has become a fundamental task in the field of mapping and geographic information (Deren, Jun, and Zhenfeng 2018; Deren 2017). With the rapid development of sensors, semiconductors, and unmanned platforms, equipment for acquiring point cloud big data, represented by laser scanning and oblique photogrammetry, has made great progress in stability, accuracy, ease of use, and intelligence. A range of multi-platform, multi-resolution equipment has been developed, including spaceborne, manned/unmanned airborne, vehicle-based, ground-based, backpack, and handheld systems, which facilitate the acquisition of point cloud big data. The International Society for Photogrammetry and Remote Sensing (ISPRS) has set up a working group on point cloud processing, and industry has also focused on point cloud processing (e.g. the annual International LiDAR Mapping Forum (ILMF)).

However, the industry still cannot meet the demands for the intelligent processing and application of point cloud big data. Point cloud intelligence emerged to bridge the gap between point cloud big data and scientific research and engineering applications. It is a scientific means of deriving 3D representations of entities, with their structure and function, from point cloud big data; its core comprises point cloud quality enhancement, intelligent 3D information extraction, and on-demand 3D reconstruction. Point cloud intelligence is also a scientific method and tool for research and engineering applications in fields such as geoscience, information science, and smart cities. This paper focuses on the principles of point cloud intelligence and highlights research progress and trends from three aspects: 1) point cloud big data acquisition; 2) intelligent processing; and 3) engineering applications. Finally, we give an outlook on the important development directions of point cloud intelligence.

2. Data acquisition

Acquisition equipment is developing rapidly, including both active equipment, represented by laser scanning, and passive equipment, represented by oblique photogrammetry. Carrier platforms now range from space to ground, including spaceborne, manned/unmanned airborne, vehicle-based, ground-based, and portable platforms.

Laser scanning equipment acquires point cloud data by integrating Global Positioning System (GPS)/Inertial Measurement Unit (IMU) hardware with scanners of different performance levels on different platforms, jointly solving for the position and attitude of the laser emitter and its distance to the target. For spaceborne laser scanning, NASA launched the ICESat and ICESat-2 satellites in 2003 and 2018, respectively, and China launched the ZiYuan-3 02 satellite (Xinming, Jiyi, and Guoyuan 2018) in 2017. Manned/unmanned airborne and vehicle-based laser scanning systems were mainly supplied by Riegl, Optech, Hexagon, and others. Recently, Chinese companies such as Leador Spatial, Surestar, Hi-Target, CHC Navigation, and South Survey have launched a series of airborne, vehicle-based, and backpack laser scanning systems whose performance rivals that of these established systems. A laser bathymetric system obtains underwater topography by emitting laser pulses at two wavelengths, typically near-infrared and green: the infrared pulse is reflected at the water surface, while the green pulse penetrates the water and is reflected from the bottom, so that the water depth follows from the time difference between the two returns. Laser bathymetric systems therefore play an important role in marine mapping and underwater measurement.
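To make the ranging principle concrete, the minimal sketch below converts the round-trip travel time of a laser pulse into a range (range = c·t/2) and, in a simplified bathymetric case, converts the delay between the water-surface and bottom returns into a depth. It assumes a vertical (nadir) beam, a refractive index of water of about 1.33, and ignores waveform processing, refraction at oblique incidence, and atmospheric effects; the function names are hypothetical.

```python
# Minimal sketch: pulsed time-of-flight ranging and a simplified
# bathymetric depth estimate (nadir beam assumed, so no refraction bending).
C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s
N_WATER = 1.33            # assumed (approximate) refractive index of water


def tof_range(round_trip_s: float) -> float:
    """Range to the target from the round-trip travel time of a pulse."""
    return C_VACUUM * round_trip_s / 2.0


def water_depth(t_surface_s: float, t_bottom_s: float, n_water: float = N_WATER) -> float:
    """Depth from the delay between surface and bottom returns.

    Light travels at c / n_water below the surface; the path is assumed vertical.
    """
    dt = t_bottom_s - t_surface_s
    if dt < 0:
        raise ValueError("the bottom return must arrive after the surface return")
    return (C_VACUUM / n_water) * dt / 2.0


print(f"{tof_range(1e-6):.2f} m")          # 149.90 m for a 1 microsecond round trip
print(f"{water_depth(0.0, 50e-9):.2f} m")  # about 5.64 m for a 50 ns surface-to-bottom delay
```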

Over the last decade, oblique photogrammetry has developed rapidly. During the flight, the ground is observed simultaneously from directly above and from tilted directions, capturing the target texture from the top as well as from different angles. Professional software (such as Match-T from Trimble Inpho, SURE from nFrames, PixelGrid, etc.) can then generate dense image point clouds with color information, which supplement laser scanning point clouds and have been widely used in 3D urban reconstruction. In addition, close-range 3D point cloud data can be acquired with consumer-grade depth cameras, such as structured light cameras, Time-of-Flight (ToF) cameras, or binocular (stereo) cameras. Commercial products include Apple PrimeSense, Microsoft Kinect-1, Intel RealSense, ZED, and Bumblebee.
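For binocular (stereo) cameras, depth follows from triangulation. The sketch below assumes an ideal, rectified pinhole stereo pair with focal length f in pixels, baseline B in meters, and disparity d in pixels, so that Z = f·B/d; the camera parameters in the example are hypothetical and do not describe any of the products listed above.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair (ideal pinhole model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means a point at infinity)")
    return focal_px * baseline_m / disparity_px


def back_project(u, v, disparity_px, focal_px, baseline_m, cx, cy):
    """3D point in the left-camera frame for pixel (u, v).

    Assumes square pixels and a known principal point (cx, cy).
    """
    z = depth_from_disparity(disparity_px, focal_px, baseline_m)
    x = (u - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return x, y, z


# Hypothetical camera: f = 700 px, B = 0.12 m; a 42 px disparity gives Z = 700 * 0.12 / 42 = 2.0 m
print(back_project(420, 240, 42, 700, 0.12, cx=320, cy=240))
```

Because Z is inversely proportional to d, a one-pixel disparity error costs far more depth accuracy for distant points than for near ones, which is why such cameras are suited to close-range capture.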

With the increasing demand for finer granularity and richer content of geospatial information, the content of point cloud data has moved from mainly geometric information to simultaneous geometric, spectral, and textural information (e.g. multispectral laser scanning systems (Virtanen et al. 2017)). In terms of scanning mode, there has been a shift from spindle-type scanning towards optical phased array and single-photon LiDAR, which have wide application potential in remote sensing and are becoming a future trend of active Earth observation (Li et al. 2018). In terms of acquisition platforms, specialized equipment is giving way to diversified consumer-level intelligent equipment. As sensors become smaller, lighter, and cheaper, consumer-grade, portable, and integrated smart scanning equipment is booming (Hemin, Ruofei, and Donghai 2019; Li et al. 2019). The U.S. Defense Advanced Research Projects Agency (DARPA) has developed an autonomous collaborative scanning system in which ground and air robots scan unknown environments, supported by Simultaneous Localization And Mapping (SLAM) technology and robot control planning; this greatly reduces labor costs and enables operation in hazardous and otherwise inaccessible environments (Kelly et al. 2006). In the photogrammetry and remote sensing field, a current trend is the joint acquisition, georeferencing, and interpretation of optical imagery and LiDAR data (Haala et al. 2022; Koelle et al. 2021).

The diversity of point cloud acquisition platforms and methods leads to large differences, and even conflicts, in the sampling granularity, quality, and representation of point clouds. Platform-specific processing methods cannot effectively combine multi-platform point clouds to exploit their complementarity. There is therefore an urgent need to develop point cloud intelligence, which provides the scientific basis and means for the intelligent understanding of scenes described by point cloud big data.

3. Methodology

For the intelligent processing of point cloud data, current algorithms mainly focus on data quality enhancement (denoising, shape completion, etc.), registration, segmentation, and surface reconstruction, which provide the data basis for subsequent engineering applications. In this section, we summarize the key techniques of intelligent point cloud processing for each of these aspects.

3.1. Denoising

Owing to the limitations of acquisition equipment and the influence of the acquisition environment, point clouds obtained by 3D scanners are often accompanied by large amounts of noise and outliers (Roveri et al. 2018), which pose additional challenges for the intelligent understanding of point cloud scenes. Consequently, more and more researchers have studied how to restore clean point clouds from noisy ones. Based on a review of previous research, we categorize point cloud denoising methods into two types: optimization-based and deep learning-based.

3.1.1. Optimization-based denoising methods

Optimization-based methods eliminate noise by defining an optimization objective. For example, Alexa et al. (2003) locally approximated the underlying surface with simple functions fitted by moving least squares, and defined a projection operator that projects points onto this surface. However, this approach is susceptible to outliers. Avron et al. (2010) used sparse regularization to solve the optimization problem and reconstruct normals, and then updated the coordinates of the noisy points according to the reconstructed normals. Subsequently, Schoenenberger, Paratte, and Vandergheynst (2015) captured the structure of point cloud data with a graph of nodes and edges, and applied a graph Laplacian filter, among other filters, for denoising. Zaman, Wong, and Ng (2017) approximated the noisy point cloud by modeling the distribution of points with techniques such as kernel density estimation. The main limitation of these optimization-based methods is their heavy reliance on prior knowledge, such as the underlying geometric structure of the point cloud or assumptions about the noise distribution.
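As an illustration of graph-based filtering in the spirit of Schoenenberger, Paratte, and Vandergheynst (2015), the sketch below builds a k-nearest-neighbor graph with Gaussian edge weights and applies a few steps of Laplacian smoothing, x ← x − ηLx, where L = D − W is the combinatorial graph Laplacian. The neighborhood size, kernel width, and step size are assumptions, and the filter is a generic low-pass graph filter rather than the authors' exact method. The smoothness prior encoded in the graph is exactly the kind of prior knowledge discussed above.

```python
import numpy as np


def knn_graph_laplacian(points: np.ndarray, k: int = 8) -> np.ndarray:
    """Combinatorial Laplacian L = D - W of a symmetric kNN graph with Gaussian weights."""
    n = len(points)
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(-1)  # pairwise squared distances
    nbrs = np.argsort(d2, axis=1)[:, 1:k + 1]                     # k nearest neighbors, skipping self
    rows = np.repeat(np.arange(n), k)
    cols = nbrs.ravel()
    sigma2 = np.median(d2[rows, cols])                            # kernel width from typical neighbor distance
    W = np.zeros((n, n))
    W[rows, cols] = np.exp(-d2[rows, cols] / (2.0 * sigma2))
    W = np.maximum(W, W.T)                                        # make the graph undirected
    return np.diag(W.sum(axis=1)) - W


def laplacian_denoise(points: np.ndarray, k: int = 8, alpha: float = 0.5,
                      iterations: int = 20) -> np.ndarray:
    """Iterative low-pass graph filtering: x <- x - (alpha / d_max) * L x."""
    L = knn_graph_laplacian(points, k)
    step = alpha / np.diag(L).max()   # alpha <= 1 keeps every eigenvalue of (I - step * L) in [-1, 1]
    x = points.astype(float).copy()
    for _ in range(iterations):
        x = x - step * (L @ x)
    return x
```

Plain Laplacian smoothing also shrinks the point set towards its centroid and rounds off sharp features, which is one reason later methods add feature-preserving terms.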

3.1.2. Deep learning-based denoising methods

With the rapid development of deep learning, researchers have begun to use data-driven approaches to deal with noisy point clouds. For example, Roveri et al. (2018) proposed PointProNets, which introduced neural networks into the point cloud denoising task for the first time. PointProNets takes sparse and noisy point clouds as input, divides them into patches, and projects the patches onto height maps; the clean point cloud is then obtained by back-projecting the denoised height maps to 3D coordinates. Because part of the information is lost in the projection, the denoising capability of the network is limited. Subsequently, PointCleanNet (Rakotosaona et al. 2020) was proposed, which uses a PointNet-style (Qi et al. 2017) backbone to operate directly on unstructured point cloud data; it first removes outliers and then denoises by predicting, for each noisy point, a displacement that moves it back towards the underlying clean surface. In view of the difficulty of obtaining ground truth for 3D point clouds, Hermosilla, Ritschel, and Ropinski (2019) proposed an unsupervised point cloud denoising method named Total Denoising, which directly regresses the approximate surface from the distribution of the noisy point cloud. However, Luo and Hu (2020) argued that PointCleanNet does not directly restore the underlying surface, leading to suboptimal denoising results. They therefore proposed DMRDenoise, which uses differentiable pooling to downsample the input, estimates the underlying manifold of the point cloud, and obtains the final output by resampling this manifold. This approach achieves good results, but the downsampling step can easily lose details. Pistilli et al. (2020) proposed GPDNet, which improves robustness by using a graph neural network.

Recently, Luo and Hu (2021) proposed a score-based point cloud denoising algorithm that estimates the score (the gradient of the log-density of the noisy points) at each point and uses gradient ascent to move the points iteratively towards the underlying surface. Chen et al. (2022) proposed RePCD-Net, a multi-scale denoising scheme based on a Recurrent Neural Network (RNN) that iteratively processes point clouds in different denoising stages, reaching the current state-of-the-art level. However, lacking prior knowledge, deep learning based approaches find it very hard to distinguish detailed structures from noise, which results in excessive smoothing.
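The score-based update can be illustrated without a trained network. In the sketch below, the learned score network of Luo and Hu (2021) is replaced, as an assumption, by the analytic score of a Gaussian kernel density estimate built on the noisy input: ∇log p(x) = (m(x) − x)/h², where m(x) is the kernel-weighted mean of the k nearest input points. Each gradient ascent step therefore moves a point towards its local weighted mean, as in mean shift. The bandwidth h, the step size, and k are hypothetical choices.

```python
import numpy as np


def kde_score(x: np.ndarray, cloud: np.ndarray, h: float, k: int = 16) -> np.ndarray:
    """Score (gradient of log-density) of a Gaussian KDE on `cloud`, using only the k nearest points."""
    d2 = ((x[:, None, :] - cloud[None, :, :]) ** 2).sum(-1)
    idx = np.argsort(d2, axis=1)[:, :k]
    d2k = np.take_along_axis(d2, idx, axis=1)
    w = np.exp(-(d2k - d2k.min(axis=1, keepdims=True)) / (2.0 * h ** 2))  # shifted for numerical stability
    w /= w.sum(axis=1, keepdims=True)
    local_mean = (w[..., None] * cloud[idx]).sum(axis=1)
    return (local_mean - x) / h ** 2


def score_denoise(noisy: np.ndarray, h: float = 0.05, step: float = 0.5,
                  iterations: int = 10, k: int = 16) -> np.ndarray:
    """Gradient ascent on the estimated log-density: x <- x + step * h^2 * score(x)."""
    x = noisy.astype(float).copy()
    for _ in range(iterations):
        x = x + step * h ** 2 * kde_score(x, noisy, h, k)  # score always estimated from the noisy input
    return x
```

A learned score network replaces the fixed kernel with a data-driven estimate, which is what allows the original method to adapt to the local geometry instead of relying on a hand-chosen bandwidth.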

3.1.3. Future works

Possible future research directions include: 1) Fusion of prior information. Point cloud denoising is a restoration task analogous to image restoration and is essentially an ill-posed problem with a one-to-many relationship. Combining optimization-based and deep learning-based methods to integrate prior information into the model is key to improving performance. 2) Denoising under real-world noise. Most existing methods assume that the noise follows a Gaussian distribution. However, in real scenes, point cloud noise is often a mixture of multiple distributions, which limits the effectiveness of existing methods. 3) Large-scene application. Most existing methods are limited to object-level point clouds and cannot be directly applied to large-scale scenes.

3.2. Completion

Point clouds obtained by 3D scanning devices are often incomplete and thus require completion before downstream tasks. Given a partial point cloud observation, the goal of point cloud completion is to recover the complete 3D shape.

3.2.1. Traditional shape completion methods

Traditional point cloud completion methods can be grouped into two categories: geometry-based methods, which exploit geometric attributes of the objects (Berger et al. 2014; Davis et al. 2002; Hu, Fu, and Guo 2019; Mitra, Guibas, and Pauly 2006), and alignment-based methods, which retrieve complete structures from a database (Felzenszwalb et al. 2010; Gupta et al. 2015; Han and Zhu 2008; Li et al. 2015). However, these methods do not generalize robustly to complex 3D surfaces with large missing parts.

3.2.2. 3D shape completion with pairwise supervision

With the development of deep neural networks, researchers began to leverage deep learning for 3D shape completion. Early works operated on 3D voxel grids (Dai, Ruizhongtai Qi, and Nießner 2017; Wang et al. 2017), but they were limited by computational cost, which increases cubically with the shape resolution.

Meanwhile, since PointNet (Qi et al. 2017) and its successors (Qi et al. 2017; Wang et al. 2019) addressed the unordered nature of point cloud data, many point-based methods have emerged in recent years.

The Point Completion Network (PCN) (Yuan et al. 2018) is the first point-based deep completion network. It uses a PointNet-like encoder (Qi et al. 2017), consisting of several MultiLayer Perceptrons (MLPs) and a max pooling operation, to extract a global feature, and then employs a decoder to infer the complete point cloud from this global feature.
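The key property PCN inherits from PointNet is that a shared per-point MLP followed by max pooling yields a global feature that does not depend on the order of the input points. The sketch below shows this with random, untrained weights and a single fully connected decoder that maps the global feature to a fixed number of output points. The layer sizes are hypothetical, and PCN itself stacks two PointNet-style stages and refines a coarse output with a folding-based decoder.

```python
import numpy as np


def init_mlp(sizes, rng):
    """He-initialized weights and zero biases for a stack of linear layers."""
    return [(rng.normal(0.0, np.sqrt(2.0 / fan_in), (fan_in, fan_out)), np.zeros(fan_out))
            for fan_in, fan_out in zip(sizes[:-1], sizes[1:])]


def shared_mlp(points, layers):
    """Apply the same MLP to every point independently (a 'shared' MLP)."""
    h = points
    for W, b in layers:
        h = np.maximum(h @ W + b, 0.0)  # ReLU
    return h


def encode(points, layers):
    """Per-point features followed by symmetric max pooling -> one global feature vector."""
    return shared_mlp(points, layers).max(axis=0)


def decode(global_feat, W, b, n_out):
    """Fully connected decoder: global feature -> n_out x 3 coordinates."""
    return (global_feat @ W + b).reshape(n_out, 3)


rng = np.random.default_rng(0)
encoder = init_mlp([3, 64, 128, 256], rng)
n_out = 512
W_dec, b_dec = rng.normal(0.0, 0.01, (256, n_out * 3)), np.zeros(n_out * 3)

partial = rng.uniform(-1.0, 1.0, (300, 3))            # stand-in for a partial scan
global_feat = encode(partial, encoder)
completed = decode(global_feat, W_dec, b_dec, n_out)  # untrained, so the output shape is arbitrary
```

Shuffling the rows of `partial` leaves `global_feat` unchanged, because the maximum over points is a symmetric function; this is the property that lets such encoders consume raw, unordered point sets.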

More recent works have tried to preserve the observed geometric details from the local features of incomplete inputs, and follow a coarse-to-fine strategy. The NSFA method (Zhang, Yan, and Xiao 2020) reconstructs the missing parts separately. VRC-Net (Pan et al. 2021) is based on a variational framework that leverages the relationships between structures during completion. PMP-Net (Wen et al. 2021) accomplishes completion by learning point moving paths. SnowflakeNet (Xiang et al. 2021) introduces snowflake point deconvolution with a skip-transformer for point cloud completion. ASFM-Net (Xia et al. 2021) employs an asymmetrical Siamese feature matching strategy and an iterative refinement unit to generate complete shapes.

Some recent networks instead use a voxel-based completion process. For example, GRNet (Xie et al. 2020) is based on a gridding network for dense point reconstruction, and VE-PCN (Wang, Ang, and Lee 2021) develops a voxel-based network for point cloud completion by leveraging edge generation.

3.2.3. 3D shape completion without pairwise supervision

However, paired ground truth for real scans is difficult to obtain, so several studies on unpaired shape completion have been conducted.

The Amortized Maximum Likelihood (AML) approach (Stutz and Geiger 2018) uses maximum likelihood to measure the distance between complete and incomplete point clouds in the latent space. Pcl2Pcl (Chen, Chen, and Mitra 2019) pretrains two auto-encoders and directly learns the mapping from partial shapes to complete shapes in the latent space. Cycle4Completion (Wen et al. 2021) introduces two cycle transformations to establish the geometric correspondence between incomplete and complete shapes in both directions. ShapeInversion (Zhang et al. 2021) incorporates a well-trained Generative Adversarial Network (GAN) as an effective prior for shape completion. Cai et al. (2022) established a unified and structured latent space to achieve both partial-complete geometric consistency and shape completion accuracy.

3.2.4. Future works

Following the above review, future work will likely focus on several directions: 1) Existing completion networks still struggle to preserve fine details, especially thin structures such as wires. 2) Real-time completion is important for driverless vehicles and other applications; deep learning based completion methods are generally faster than traditional methods. 3) The generalization ability of current methods is insufficient, making it difficult to extend them to scene completion.

3.3. Registration

Estimating the geometric transformations between unaligned point clouds, known as point cloud registration, is a fundamental task for many downstream fields, such as 3D reconstruction (Izadi et al. 2011), autonomous driving (Caesar et al. 2020; Zeng et al. 2018), and augmented and virtual reality (AR/VR) (Gao et al. 2019; Zhang, Dai, and Sun 2020). Existing registration methods can be grouped into pairwise and multi-view methods (Dong et al. 2020).

3.3.1. Pairwise registration

Using local features for pairwise registration has a long history, and typically consists of four main steps (Guo et al. 2020): 1) feature detection; 2) feature description; 3) feature matching; and 4) transformation estimation. Specifically, hand-crafted (Tombari, Salti, and Di Stefano 2013; Zhong 2009; Zaharescu et al. 2009) and deep learning-based (Yew and Lee 2018; Li and Lee 2019; Zheng et al. 2019; Tinchev, Penate-Sanchez, and Fallon 2021; Wang et al. 2021; Bai et al. 2020; Huang et al. 2021) detectors aim to select repeatable and distinctive keypoints. Next, hand-crafted features such as the spin image (Johnson and Hebert 1999), Super4PCS (Mellado, Aiger, and Mitra 2014), FPFH (Rusu, Blodow, and Beetz 2009), SHOT (Tombari, Salti, and Di Stefano), RoPS (Guo et al. 2013), and BSC (Dong et al. 2017), or learning-based features such as 3DMatch (Zeng et al. 2017), PPF-FoldNet (Deng, Birdal, and Ilic 2018), PerfectMatch (Gojcic et al. 2019), FCGF (Choy, Park, and Koltun 2019), D3Feat (Bai et al. 2020), LMVD (Li et al. 2020), SpinNet (Ao et al. 2021), and YOHO (Wang et al. 2021), are extracted at the keypoints by encoding their local geometric patterns. A set of feature correspondences is then estimated by nearest neighbor search in feature space, filtered by mutual verification (Gojcic et al. 2019; Ao et al. 2021), a ratio test (El Banani, Gao, and Johnson 2021; Bai et al. 2021), or a spatial consistency check (Choy, Dong, and Koltun 2020; Pais et al. 2020; Bai et al. 2021; Chen et al. 2022; Yu et al. 2021; Li and Harada 2021; Qin et al. 2022). Finally, candidate pairwise transformations, estimated by least squares based (Bai et al. 2021; Qin et al. 2022; Besl and McKay 1992) or SO(3)/SE(3) equivariance based (Deng, Birdal, and Ilic 2019; Wang et al. 2021) methods, are evaluated with a RANSAC-like algorithm (Fischler and Bolles 1981; Brachmann and Rother 2019; Brachmann et al. 2017). This category relies heavily on discriminative features and has progressed considerably with the boom in deep learning. However, these methods still face problems of generalization and efficiency (Wang et al. 2021).
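Steps 3 and 4 can be sketched as follows, assuming descriptors have already been computed for both clouds. Correspondences are kept only if they are mutual nearest neighbors in descriptor space, and a basic RANSAC loop repeatedly fits a rigid transform to three random correspondences with the SVD-based least-squares (Kabsch) solution, keeping the hypothesis with the most inliers. The inlier threshold and iteration count are assumptions, and this is a generic textbook pipeline rather than any specific method cited above.

```python
import numpy as np


def mutual_nn_matches(desc_src: np.ndarray, desc_tgt: np.ndarray):
    """Index pairs (i, j) where source i and target j are each other's nearest descriptor."""
    d2 = ((desc_src[:, None, :] - desc_tgt[None, :, :]) ** 2).sum(-1)
    nn_st = d2.argmin(axis=1)          # best target for each source descriptor
    nn_ts = d2.argmin(axis=0)          # best source for each target descriptor
    src_idx = np.arange(len(desc_src))
    mutual = nn_ts[nn_st] == src_idx
    return src_idx[mutual], nn_st[mutual]


def kabsch(P: np.ndarray, Q: np.ndarray):
    """Least-squares rigid transform (R, t) with R @ p + t ~ q for corresponding rows of P, Q."""
    cp, cq = P.mean(axis=0), Q.mean(axis=0)
    U, _, Vt = np.linalg.svd((P - cp).T @ (Q - cq))
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])  # enforce det(R) = +1
    R = Vt.T @ D @ U.T
    return R, cq - R @ cp


def ransac_rigid(P, Q, iterations=500, threshold=0.05, seed=0):
    """RANSAC over minimal 3-point samples; refits on the largest inlier set found."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(P), dtype=bool)
    for _ in range(iterations):
        sample = rng.choice(len(P), size=3, replace=False)
        R, t = kabsch(P[sample], Q[sample])
        inliers = np.linalg.norm(P @ R.T + t - Q, axis=1) < threshold
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 3:
        raise RuntimeError("no consistent transformation found")
    R, t = kabsch(P[best], Q[best])
    return R, t, best
```

Mutual verification discards ambiguous matches cheaply, while RANSAC tolerates the wrong matches that remain; the number of iterations needed grows quickly as the inlier ratio drops, which is one source of the efficiency problems noted above.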

Instead of relying on feature extraction, a parallel stream of work has resorted to optimization strategies, including Iterative Closest Point (ICP)-based methods (Besl and McKay 1992; Bouaziz, Tagliasacchi, and Pauly 2013; Chetverikov et al. 2002; Yang et al. 2015; Wang and Solomon 2019; Segal, Haehnel, and Thrun 2009; Aoki et al. 2019), Normal Distributions Transform (NDT)-based methods (Magnusson, Lilienthal, and Duckett 2007; Stoyanov et al. 2012), Gaussian Mixture Model (GMM)-based methods (Eckart, Kim, and Kautz 2018; Evangelidis and Horaud 2017; Yuan et al. 2020), and semidefinite-based methods (Le et al. 2019; Yang, Shi, and Carlone 2020). However, most of these algorithms depend heavily on an accurate initial value and are better suited to fine registration.
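For reference, the classic point-to-point ICP loop of Besl and McKay (1992) alternates between nearest-neighbor correspondence search and a closed-form least-squares rigid fit. The brute-force sketch below assumes small point sets and a reasonable initial alignment; the iteration limit and convergence tolerance are hypothetical choices. Its dependence on the initial pose is the limitation noted above: with a poor start, the nearest-neighbor correspondences are wrong and the iteration converges to a local minimum.

```python
import numpy as np


def best_fit_transform(P, Q):
    """Least-squares rigid transform (R, t) minimizing sum ||R p + t - q||^2 (SVD / Kabsch)."""
    cp, cq = P.mean(axis=0), Q.mean(axis=0)
    U, _, Vt = np.linalg.svd((P - cp).T @ (Q - cq))
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])  # avoid reflections
    R = Vt.T @ D @ U.T
    return R, cq - R @ cp


def icp(source, target, max_iterations=50, tol=1e-10):
    """Point-to-point ICP; returns (R, t, mean residual) aligning source onto target."""
    src = source.astype(float).copy()
    R_total, t_total = np.eye(3), np.zeros(3)
    prev_err = np.inf
    for _ in range(max_iterations):
        d2 = ((src[:, None, :] - target[None, :, :]) ** 2).sum(-1)
        nn = d2.argmin(axis=1)                           # closest target point for each source point
        err = np.sqrt(d2[np.arange(len(src)), nn]).mean()
        if prev_err - err < tol:                         # stop once the error no longer decreases
            break
        prev_err = err
        R, t = best_fit_transform(src, target[nn])
        src = src @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t  # compose with the transform found so far
    return R_total, t_total, err
```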

3.3.2. Multi-view registration

Compared with pairwise registration, multi-view registration has drawn less attention, especially in deep learning, and its methods can be classified into sequential and joint registration algorithms (Dong et al. 2020; Gojcic et al. 2020; Grisetti et al. 2011). The main challenge for both categories lies in building a robust registration path or scene graph and resolving the ambiguities that arise in pairwise registrations.

Sequential strategies, such as minimum spanning tree based methods (Kruskal 1956; Zhu et al. 2016; Yang et al. 2016), shape growing based methods (Mian, Bennamoun, and Owens 2006; Ge and Hu 2020), and hierarchical merging based methods (Dong et al. 2018; Tang and Feng 2015), merge the scans incrementally by local pairwise registrations along the recovered registration path. However, a general drawback of sequential methods is the accumulation of errors from the pairwise registrations.
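A minimum spanning tree registration path can be sketched with Kruskal's algorithm (Kruskal 1956). Here, as an assumption, each candidate scan pair carries a cost such as one minus its estimated overlap ratio; the tree keeps the cheapest set of pairs that connects all scans, and a breadth-first traversal from a reference scan yields the order in which each scan is registered to an already-placed neighbor. The scan graph in the example is hypothetical.

```python
from collections import deque


def kruskal_mst(n_scans, edges):
    """edges: iterable of (cost, i, j). Returns the minimum spanning tree as (i, j, cost)."""
    parent = list(range(n_scans))

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving
            a = parent[a]
        return a

    tree = []
    for cost, i, j in sorted(edges):
        ri, rj = find(i), find(j)
        if ri != rj:                       # adding (i, j) does not create a cycle
            parent[ri] = rj
            tree.append((i, j, cost))
    return tree


def registration_order(n_scans, tree, root=0):
    """Breadth-first traversal: (already registered scan, scan to register next)."""
    adjacency = {s: [] for s in range(n_scans)}
    for i, j, _ in tree:
        adjacency[i].append(j)
        adjacency[j].append(i)
    order, seen, queue = [], {root}, deque([root])
    while queue:
        u = queue.popleft()
        for v in adjacency[u]:
            if v not in seen:
                seen.add(v)
                order.append((u, v))
                queue.append(v)
    return order


# Hypothetical overlap-based costs (1 - overlap ratio) between four scans
edges = [(0.2, 0, 1), (0.5, 0, 2), (0.3, 1, 2), (0.9, 0, 3), (0.4, 2, 3)]
tree = kruskal_mst(4, edges)
print(registration_order(4, tree))  # [(0, 1), (1, 2), (2, 3)]
```

In this example, scan 3 is reached only through scans 1 and 2, so its pose inherits the errors of three pairwise registrations, which illustrates the error accumulation of sequential methods.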

Joint registration methods have therefore been proposed, based on global energy optimization (Zhou, Park, and Koltun 2016; Theiler, Wegner, and Schindler 2015; Ge, Hu, and Wu 2019), motion averaging (Govindu 2004; Shih, Chuang, and Yu 2008; Arrigoni, Rossi, and Fusiello 2016), and graph synchronization (Gojcic et al. 2020; Huang et al. 2019), although these methods tend to be trapped in local minima when the search space is large.
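The benefit of joint over sequential estimation can be shown with a deliberately simplified, translation-only example. Assuming rotations are already known, so that every relative translation t_ij ≈ t_j − t_i is expressed in a common frame, all pairwise measurements, including loop closures, are solved together as one linear least-squares problem with scan 0 fixed at the origin. This is an illustrative instance of global estimation, not one of the cited algorithms, which also handle rotations and robustness to outliers.

```python
import numpy as np


def average_translations(n_scans, relative):
    """relative: {(i, j): t_ij} with t_ij ~ t_j - t_i. Returns (n_scans, 3), scan 0 at the origin."""
    rows, rhs = [], []
    for (i, j), t_ij in relative.items():
        a = np.zeros(n_scans)
        a[j], a[i] = 1.0, -1.0
        rows.append(a)
        rhs.append(t_ij)
    A = np.array(rows)[:, 1:]                  # drop scan 0's column to fix the gauge freedom
    b = np.array(rhs, dtype=float)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)  # jointly minimizes all pairwise residuals
    return np.vstack([np.zeros((1, b.shape[1])), x])


# Hypothetical loop of three scans whose measurements disagree by 0.3 m around the loop
rel = {(0, 1): [1.0, 0.0, 0.0], (1, 2): [1.0, 0.0, 0.0], (0, 2): [2.3, 0.0, 0.0]}
print(average_translations(3, rel)[:, 0])  # approximately [0.0, 1.1, 2.2]
```

Chaining the measurements 0 → 1 → 2 would place scan 2 at 2.0 m and leave the whole 0.3 m misclosure on the loop-closing edge, whereas the joint solution spreads it as 0.1 m over each of the three edges.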

3.3.3. Hybrid georeferencing of LiDAR and image data

To compute 3D points from LiDAR range measurements, the sensor platform typically integrates a GNSS/IMU unit that provides the position and attitude of the system. If the sensor system is suitably calibrated, centimeter-level 3D point accuracies are feasible (Liu et al. 2021a). Typically, LiDAR strip adjustment further improves the trajectory while minimizing the remaining offsets between point clouds of different flight strips in overlapping areas (Cramer et al. 2018; Ressl, Kager, and Mandlburger 2008; Shan and Toth 2018). For this purpose, a sophisticated calibration procedure is applied, while algorithms such as the well-known ICP algorithm (Besl and McKay 1992) minimize the discrepancies within the overlapping areas of flight strip pairs.

By extending traditional LiDAR strip adjustment with additional observations from the bundle adjustment of image blocks, the so-called hybrid georeferencing of LiDAR and aerial images becomes feasible (Glira, Pfeifer, and Mandlburger 2016). Bundle block adjustment is usually used to estimate the camera parameters from the corresponding pixel coordinates of overlapping images, while the object coordinates of these tie points are a by-product. Within the hybrid orientation approach, the tie points' object coordinates from automatic aerial triangulation are reused to establish correspondences between the LiDAR data and the image block, and the resulting discrepancies are minimized within a global adjustment procedure. In this respect, hybrid orientation adds observations, and thus constraints, to LiDAR strip adjustment. During hybrid adjustment, both the laser scanner and the camera can be fully re-calibrated by estimating their interior calibration and mounting parameters (lever arm, boresight angles). Furthermore, systematic errors of the flight trajectory can be corrected individually for each flight strip.
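The role of the mounting parameters can be seen in a simplified direct georeferencing equation, X = X_IMU + R_body(roll, pitch, yaw) · (R_boresight · r_scanner + a_lever), where r_scanner is the range times the beam direction in the scanner frame. The sketch below assumes a local level frame (no map projection or Earth-centered conversion), a z-y-x (yaw-pitch-roll) rotation convention, and a position that already refers to the IMU reference point; real systems differ in frame definitions and sign conventions.

```python
import numpy as np


def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def rpy_matrix(roll, pitch, yaw):
    """Body-to-local rotation, assumed z-y-x (yaw-pitch-roll) convention."""
    return rot_z(yaw) @ rot_y(pitch) @ rot_x(roll)


def georeference(position, roll, pitch, yaw, lever_arm, boresight, range_m, beam_dir):
    """Ground point = position + R_body @ (R_boresight @ (range * unit beam) + lever_arm).

    position:  IMU reference point in a local level frame (m)
    lever_arm: scanner origin relative to the IMU, expressed in the body frame (m)
    boresight: (roll, pitch, yaw) misalignment of the scanner w.r.t. the IMU (rad)
    beam_dir:  laser beam direction in the scanner frame (any length)
    """
    beam = np.asarray(beam_dir, dtype=float)
    r_scanner = range_m * beam / np.linalg.norm(beam)
    r_body = rpy_matrix(*boresight) @ r_scanner + np.asarray(lever_arm, dtype=float)
    return np.asarray(position, dtype=float) + rpy_matrix(roll, pitch, yaw) @ r_body
```

Strip adjustment and hybrid adjustment can be read as estimating corrections to the quantities in this equation, such as the boresight angles, the lever arm, and per-strip trajectory errors, so that overlapping strips and image tie points agree.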

gives an example of combining LiDAR points with photogrammetric tie points during the hybrid adjustment of Unmanned Aerial Vehicle (UAV) imagery and LiDAR data. In this project, which aimed at the ultra-high-precision collection of 3D point clouds for deformation monitoring, dense 3D point clouds with sub-centimeter accuracy were collected from a UAV platform (Haala et al. 2022).

3.3.4. Future works

From the above literature review, possible future directions include: 1) designing (deep) features that are efficient, distinctive, generalizable, and robust to noise, point density, and random transformations; 2) designing multi-view registration strategies that are robust to error accumulation and local minima; 3) designing fully differentiable end-to-end deep pairwise or multi-view registration models that balance accuracy and efficiency; and 4) designing robust and efficient algorithms with high generalization capability for registering cross-modal, multitemporal point clouds collected in different ways and at different times.

3.4. Segmentation

Point cloud semantic/instance segmentation, a hot and rapidly developing research topic, connects raw remote sensing data to high-level scene understanding. Point cloud segmentation methods typically learn point distribution patterns from annotated datasets and make predictions accordingly. In this section, previous works are roughly categorized into the following two groups.

3.4.1. Traditional machine learning based methods

Since laser scanning became widespread, researchers have made great progress in segmenting large-scale point clouds by following the traditional machine learning paradigm (Ma et al. 2018; Che, Jung, and Olsen 2019; Yang et al. 2015). For these methods, the key problem is how to define the feature calculation units and design appropriate feature descriptors so that a machine learning point classifier can be trained (Dong et al. 2017; Landrieu et al. 2017; Zheng, Wang, and Xu 2016; Guan et al. 2016). For example, Weinmann et al. (2015) presented a versatile per-point classification framework and analyzed various neighborhood definitions, feature descriptors, and feature combinations to identify the most discriminative features. Alternatively, Yu et al. (2016) clustered points into supervoxels as homogeneous point groups and mapped each group to a contextual visual word. Considering the point-region-instance feature hierarchy, Yang et al. (2017) incorporated multiple levels of features and contextual information. Li et al. (2019) further achieved component-level semantic segmentation of road objects, using a machine learning classifier to recognize pole attachments. Although these machine learning based segmentation methods have contributed greatly to various applications, especially road object extraction, their over-reliance on hand-crafted features limits their generalization in complex scenes (Zhou et al. 2022).
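A typical example of hand-crafted point features is the set of covariance-based dimensionality features used in frameworks such as that of Weinmann et al. (2015): with the eigenvalues λ1 ≥ λ2 ≥ λ3 of a point's neighborhood covariance matrix, linearity (λ1 − λ2)/λ1, planarity (λ2 − λ3)/λ1, and scattering λ3/λ1 indicate whether the local structure is line-like, plane-like, or volumetric. The sketch below uses a fixed k-nearest-neighbor neighborhood, which is an assumption (Weinmann et al. compare several neighborhood definitions), and brute-force neighbor search for brevity; the resulting feature vectors would then be passed to a standard classifier.

```python
import numpy as np


def dimensionality_features(points: np.ndarray, k: int = 9) -> np.ndarray:
    """Per-point (linearity, planarity, scattering) from k-NN covariance eigenvalues."""
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(-1)
    neighborhoods = np.argsort(d2, axis=1)[:, :k]   # k nearest neighbors, including the point itself
    feats = np.empty((len(points), 3))
    for n, idx in enumerate(neighborhoods):
        cov = np.cov(points[idx].T)                  # 3 x 3 covariance of the neighborhood
        l1, l2, l3 = np.maximum(np.linalg.eigvalsh(cov)[::-1], 1e-12)  # descending, clamped > 0
        feats[n] = ((l1 - l2) / l1, (l2 - l3) / l1, l3 / l1)
    return feats
```

The three values always sum to one, so each point is described by where its neighborhood lies between the line, plane, and volume extremes; the neighborhood size is exactly the kind of hand-tuned choice that limits generalization in complex scenes.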

3.4.2. Deep learning based methods

Instead of treating feature description and classification as separate steps, deep learning based point cloud segmentation methods train a neural network both to encode point features and to make predictions (Guo et al. 2020; Li et al. 2020). Because point clouds are unorganized and irregular, designing elegant point cloud encoders or effective backbones has always been a major research topic in deep learning based semantic segmentation (Qi et al. 2017; Graham, Engelcke, and Van Der Maaten 2018; Thomas et al. 2019; Zhao et al. 2021; Boulch 2020; Zhu et al. 2021; Xu et al. 2020). RandLA-Net achieves efficient semantic segmentation of very large-scale point clouds through random point sampling combined with a local feature aggregation strategy (Hu et al. 2020). Xu and Lee (2020) exploited and modeled the implicit relationship between labeled and unlabeled points, and thus segmented point clouds by weakly supervised learning. Furthermore, point cloud semantic instance segmentation requires more discriminative features (Wang et al. 2018; Yang et al. 2019; Jiang et al. 2020; Han et al. 2020). The Associatively Segmenting Instances and Semantics (ASIS) method couples the semantic and instance segmentation tasks so that they cooperate and exploit more information, leading to simultaneous prediction of semantic and instance labels (Wang et al. 2019). Hou et al. (2021) investigated pre-training based on both point pairs and spatial contexts, and thereby achieved instance segmentation with limited annotations.
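The combination of random sampling and local aggregation used by RandLA-Net can be sketched as follows. Random sampling selects a subset of points very cheaply compared with farthest point sampling, and each sampled point then aggregates the features and relative positions of its k nearest neighbors. As a simplifying assumption, the attentive pooling and dilated residual blocks of RandLA-Net are replaced here by plain max pooling, the features are random placeholders rather than learned ones, and the sampling ratio is hypothetical.

```python
import numpy as np


def random_sample(n_points: int, ratio: float, rng: np.random.Generator) -> np.ndarray:
    """Indices of a uniformly random subset keeping roughly `ratio` of the points."""
    m = max(1, int(n_points * ratio))
    return rng.choice(n_points, size=m, replace=False)


def aggregate_neighbors(points, feats, center_idx, k=8):
    """Max-pool [neighbor features, relative xyz] over the k nearest neighbors of each sampled point."""
    centers = points[center_idx]
    d2 = ((centers[:, None, :] - points[None, :, :]) ** 2).sum(-1)
    nn = np.argsort(d2, axis=1)[:, :k]                       # (M, k), includes the center itself
    rel_xyz = points[nn] - centers[:, None, :]               # (M, k, 3) local geometry
    grouped = np.concatenate([feats[nn], rel_xyz], axis=-1)  # (M, k, C + 3)
    return grouped.max(axis=1)                               # (M, C + 3)


rng = np.random.default_rng(0)
pts = rng.uniform(0.0, 10.0, (2000, 3))
feats = rng.normal(size=(2000, 16))        # placeholder per-point features
idx = random_sample(len(pts), 0.25, rng)   # hypothetical ratio: keep 25% of the points
out = aggregate_neighbors(pts, feats, idx)
```

Because random sampling may drop points from sparse but important structures, the aggregation step gathers information from the full-resolution neighborhood, which is the motivation for pairing the two.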

3.4.3. Future works

With research in 3D computer vision and computer graphics flourishing, point cloud segmentation faces new challenges and opportunities. 1) Novel point cloud network designs can draw inspiration from traditional methods, including over-segmentation strategies and explicit geometric feature description. 2) The ability to learn in a semi- or self-supervised manner should be further exploited, since fully annotating point clouds is very laborious and expensive. 3) Jointly segmenting point clouds and reconstructing 3D models has emerged as a promising direction for scene understanding, because the two tasks help networks holistically learn and leverage appearance, geometric, and semantic information. An example of the current focus and state of the art in the semantic segmentation of 3D point clouds is the Hessigheim 3D (H3D) benchmark (Koelle et al. Citation2021). This benchmark provides labeled high-resolution 3D point clouds as training and test data; textured meshes and multiple epochs are also available.

3.5. Surface reconstruction

Given an unstructured point set obtained by a 3D sensing device, the goal of surface reconstruction is to recover the underlying continuous surface. Because recovering a continuous surface from discrete points is an ill-posed problem, appropriate regularization must be added. According to the kind of prior behind the regularization, existing methods can be categorized into triangulation-based methods, implicit methods, and deep learning-based methods.

3.5.1. Triangulation-based methods

These methods (Cohen-Steiner and Da Citation2004; Bernardini et al. Citation1999; Edelsbrunner and Shah Citation1994; Mostegel et al. Citation2017; Labatut, Pons, and Keriven Citation2009; Boissonnat Citation1984; Jancosek and Pajdla Citation2014) represent the local surface by planar triangles under a piecewise linear assumption and therefore produce a mesh directly. In general, they first generate a set of candidate triangles from the observed points and then select the optimal subset to form the final surface. Edelsbrunner and Shah (Citation1994) proposed first constructing tetrahedra via Delaunay triangulation (Boissonnat Citation1984) and then using a graph-cut algorithm to label each tetrahedron as inside or outside, so that the surface is represented by the triangles shared between inside and outside tetrahedra. The greedy Delaunay algorithm (Cohen-Steiner and Da Citation2004) instead selects the subset of triangles sequentially, using a greedy strategy under a topological constraint. In addition, the Ball-Pivoting Algorithm (BPA) (Bernardini et al. Citation1999) rolls balls of various radii over the point set and creates a triangle wherever a ball touches three points without containing any other point; these triangles form the reconstructed surface.
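The core geometric test of ball pivoting, namely whether a ball of radius ρ can touch three points without containing any other point, can be sketched as follows. This is a simplified illustration rather than the full algorithm of Bernardini et al. (Citation1999): it only checks a single candidate (seed) triangle, uses brute-force search, and assumes an `up` vector (e.g. an averaged vertex normal) to decide on which side of the triangle the ball lies. The function names are hypothetical.

```python
import numpy as np

def ball_center(p0, p1, p2, rho, up):
    """Center of a radius-rho ball touching p0, p1, p2 on the `up` side, or None."""
    a, b = p1 - p0, p2 - p0
    axb = np.cross(a, b)
    denom = 2.0 * np.dot(axb, axb)
    if denom == 0.0:                      # collinear points: no unique circumcircle
        return None
    # Circumcenter of the triangle in 3D.
    cc = p0 + np.cross(np.dot(a, a) * b - np.dot(b, b) * a, axb) / denom
    r2 = np.dot(cc - p0, cc - p0)         # squared circumradius
    if r2 > rho ** 2:                     # ball too small to touch all three points
        return None
    n = axb / np.linalg.norm(axb)
    if np.dot(n, up) < 0.0:               # place the ball on the side indicated by `up`
        n = -n
    return cc + np.sqrt(rho ** 2 - r2) * n

def is_valid_seed(points, i, j, k, rho, up, eps=1e-9):
    """Hypothetical helper: True if the ball touching points i, j, k is empty."""
    c = ball_center(points[i], points[j], points[k], rho, up)
    if c is None:
        return False
    d2 = np.sum((points - c) ** 2, axis=1)
    others = np.ones(len(points), dtype=bool)
    others[[i, j, k]] = False             # the three touching points are excluded
    return bool(np.all(d2[others] >= rho ** 2 - eps))
```

In the full algorithm, the ball then pivots around each edge of an accepted triangle until it touches a new point, growing the mesh front.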

3.5.2. Implicit methods

Under the assumption of global or local smoothness, these methods (Levin Citation1998; Hoppe et al. Citation1992; Kazhdan and Hoppe Citation2013; Atzmon and Lipman Citation2020b, Citation2020a; Carr et al. Citation2001; Kazhdan, Bolitho, and Hoppe Citation2006; Baorui et al. Citation2021; Gropp et al. Citation2020) use a continuous function to represent an implicit field (e.g. the Signed Distance Function (SDF) or an indicator function), from which the final surface can be extracted by marching cubes (Lorensen and Cline Citation1987). Specifically, the Implicit Moving Least Squares (IMLS) method (Levin Citation1998) uses a moving least squares scheme to represent the local surface from a given oriented point cloud under a local smoothness prior. In contrast, Carr et al. (Citation2001) adopted radial basis functions to represent a global implicit function, assuming that the surface is globally smooth. The Screened Poisson Surface Reconstruction (SPSR) method (Kazhdan and Hoppe Citation2013) combines global and local properties when solving the Poisson equation, which yields high-fidelity reconstruction results. Other recent works (Atzmon and Lipman Citation2020b, Citation2020a; Baorui et al. Citation2021; Gropp et al. Citation2020) have explored neural implicit functions to reconstruct accurate surfaces directly from raw point clouds.
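As an illustration of an implicit function defined from an oriented point cloud, the sketch below evaluates one common IMLS formulation: a Gaussian-weighted average of the signed distances from the query point to the tangent planes of the input points. The bandwidth `h` and the function name are assumptions, and this is not necessarily the exact scheme of Levin (Citation1998). The reconstructed surface would be the zero level set of this function, which could then be extracted, for example, by running marching cubes on a grid of function values.

```python
import numpy as np

def imls(x, points, normals, h):
    """Hypothetical helper: IMLS value at query x.

    f(x) = sum_i w_i(x) * (x - p_i) . n_i / sum_i w_i(x),
    with Gaussian weights w_i(x) = exp(-||x - p_i||^2 / h^2).
    Note: if x is far from all points relative to h, the weights underflow to 0.
    """
    d2 = np.sum((points - x) ** 2, axis=1)
    w = np.exp(-d2 / h ** 2)
    plane_dist = np.sum((x - points) * normals, axis=1)  # signed distance to each tangent plane
    return float(np.sum(w * plane_dist) / np.sum(w))
```

For points sampled on the plane z = 0 with upward normals, every tangent-plane distance equals the query's z coordinate, so the function is positive above the plane, negative below it, and zero on it.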

3.5.3. Deep learning-based methods

Unlike the aforementioned methods, these methods (Peng et al. Citation2021; Mostegel et al. Citation2017; Erler et al. Citation2020; Mescheder et al. Citation2019; Jiang et al. Citation2020; Genova et al. Citation2020; Chen and Zhang Citation2019; Liao, Donne, and Geiger Citation2018; Liu et al. Citation2021b; Peng et al. Citation2020) leverage a data prior learned from existing 3D datasets, which enables accurate surface reconstruction even from highly noisy or partial point clouds. Some early works (Erler et al. Citation2020; Genova et al. Citation2020; Peng et al. Citation2020) proposed learning a global shape prior encoded by a neural network, but such methods have difficulty generalizing to unseen objects or large scenes. To improve generalization, subsequent works (Jiang et al. Citation2020; Park et al. Citation2019) proposed learning a local prior instead. Furthermore, Points2Surf (Erler et al. Citation2020) uses a patch-based method to achieve more accurate predictions. Meanwhile, some methods (Peng et al. Citation2021; Erler et al.) attempt to combine learned priors with traditional methods. For example, IMLSNet (Erler et al.) uses a neural network to predict point-wise offsets and normals for a given noisy point cloud and then applies traditional IMLS (Levin Citation1998) to reconstruct the surface. In general, learning-based methods obtain promising results for noisy and partial point clouds, but how best to exploit the data prior in a learning scheme remains an open question.

3.5.4. Combining LiDAR and multi-view-stereo

While photogrammetry and LiDAR were originally developed as competing techniques, recent systems exploit their complementary properties for 3D object reconstruction. The integrated capture and evaluation of airborne images and LiDAR data can thus generate 3D point clouds of very high quality, with increased completeness, reliability, robustness, and accuracy. In principle, Multi-View Stereo (MVS) point clouds offer high resolution. Since the accuracy of MVS point clouds corresponds directly to the ground sampling distance, suitable image resolutions can even enable accuracies in the sub-centimeter range. However, MVS requires each object point to be visible in at least two images. Complex 3D structures are likely to violate this condition, which severely hampers their reconstruction. Problems may occur specifically for moving objects, such as vehicles and pedestrians, or in very narrow urban canyons due to occlusions. In contrast, a single LiDAR measurement is sufficient to determine a point, owing to the polar measurement principle of LiDAR sensors. These lower visibility requirements are advantageous for reconstructing complex 3D structures, urban canyons, or objects whose appearance changes rapidly with the viewing position. Another advantage of LiDAR is its ability to record multiple returns of the reflected signal, which enables penetration of semi-transparent objects such as vegetation. However, LiDAR data do not provide color information.
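The two measurement principles contrasted above can be written down in a few lines. The sketch assumes a nadir-looking frame camera, for which the ground sampling distance (GSD) is pixel pitch × object distance / focal length, and a scanner that reports range, azimuth, and elevation in its own frame. The angle convention and the function names are assumptions; real sensors additionally require calibration and georeferencing.

```python
import math

def ground_sampling_distance(pixel_pitch_m, focal_length_m, distance_m):
    """Hypothetical helper: object-space size of one pixel for a nadir image."""
    return pixel_pitch_m * distance_m / focal_length_m

def polar_to_cartesian(range_m, azimuth_rad, elevation_rad):
    """Hypothetical helper: one LiDAR return (range + two beam angles) -> XYZ.

    Assumed convention: azimuth measured in the sensor's x-y plane from the x axis,
    elevation measured upward from that plane. A single return fixes the point;
    no second observation is needed, unlike image matching.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            range_m * math.sin(elevation_rad))
```

For instance, a hypothetical 3.76 µm pixel pitch, a 35 mm lens, and a 10 m object distance give a GSD of about 1.07 mm, which illustrates how close-range UAV imagery reaches the sub-centimeter regime.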

The advantages of combining MVS with LiDAR point measurements are demonstrated in the figure below. The left part depicts the textured mesh generated from MVS only, while the hybrid mesh integrating MVS and LiDAR points is depicted on the right. So far in this paper, our investigations have been based on 3D point clouds as unordered sets of points. In contrast, the figure depicts 3D meshes as an alternative representation. Such meshes are graphs consisting of vertices, edges, and faces that provide explicit adjacency information. The main differences between meshes and point clouds are the availability of high-resolution textures and the reduced number of entities. The meshes shown were generated with the SURE software from nFrames (Glira, Pfeifer, and Mandlburger Citation2019), based on data captured from a UAV platform (Haala et al. Citation2022). As is visible, the incorporation of LiDAR points substantially enhances the reconstructed 3D data. For example, the top of the church and the vegetation show more geometric detail when LiDAR data are integrated.

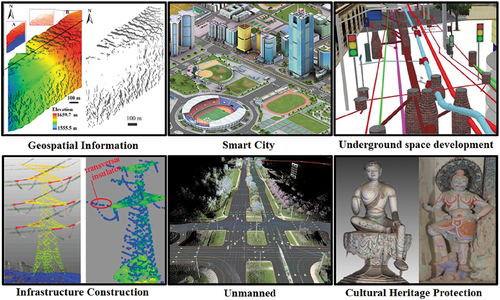
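The explicit adjacency information that distinguishes meshes from unordered point clouds can be derived directly from a triangle list, as in the minimal sketch below. The face-list input format and the function name are assumptions; production mesh libraries typically use more compact structures, such as half-edge representations.

```python
from collections import defaultdict

def mesh_adjacency(faces):
    """Hypothetical helper: edges, vertex neighbors and edge-to-face incidence.

    `faces` is a list of (a, b, c) vertex-index triples describing triangles.
    """
    edges = set()
    neighbors = defaultdict(set)
    edge_faces = defaultdict(list)
    for f_idx, (a, b, c) in enumerate(faces):
        for u, v in ((a, b), (b, c), (c, a)):
            e = (min(u, v), max(u, v))   # canonical key, independent of face orientation
            edges.add(e)
            edge_faces[e].append(f_idx)  # an interior manifold edge ends up with two faces
            neighbors[u].add(v)
            neighbors[v].add(u)
    return edges, dict(neighbors), dict(edge_faces)
```

For two triangles sharing an edge, that edge is listed with both face indices, which is exactly the kind of neighborhood information a point cloud lacks unless it is recomputed by a spatial search.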

4. Applications

Point cloud intelligence has achieved good results in 3D information extraction and modeling and has been widely used in scientific research and engineering, including geospatial informatics research, underground space development and utilization, smart cities, new basic surveying and mapping, and infrastructure health monitoring, as illustrated in the accompanying figure.

In geo-information science, point cloud intelligence can accurately portray the 3D morphological structure of vegetation, glaciers, islands, and the surrounding underwater terrain, providing vital support for global forest stock volume and biomass estimation, global glacier mass balance, marine economic development and management, and maritime defense and security.

In terms of smart cities and “realistic 3D China”, point cloud intelligence plays an increasingly important role in fine-grained urban management, 3D change detection, urban security analysis, etc. By intelligently integrating data, structure, and function, the 3D geometry, rich semantics, and accurate spatial relationships between indoor and outdoor, above- and below-ground, and above- and below-water spaces can be expressed in an integrated manner. This enables on-demand modeling at multiple levels of detail and provides comprehensive all-space, static, and dynamic information support for complex cities.

For the comprehensive development and utilization of underground spaces, point cloud intelligence can support digital construction, Building Information Modeling (BIM), underground disaster detection, early warning, etc. It can also be used to establish an all-digital underground space infrastructure with dynamic integration of Internet of Things (IoT) data, supporting scientific management and decision-making for the construction and comprehensive planning of underground spaces, for construction projects and process supervision, and for status and full-life-cycle databases, covering the whole process from project planning to refined life-cycle management.

In terms of the health monitoring of major infrastructure, point cloud intelligence can provide accurate and effective 3D information for power line safety monitoring (e.g. clearance distances), road surface health monitoring (e.g. collapse and damage), and bridge and tunnel deformation monitoring, through refined modeling of key structures, precise identification of multiple targets, and calculation of their spatial relationships, thereby helping to ensure the safe operation of infrastructure.

In terms of autonomous driving, point cloud intelligence is the core support for real-time detection and localization of moving targets, real-time obstacle avoidance, and High-Definition (HD) map production. Laser scanning for obstacle avoidance has become a standard component of autonomous driving, and the accurate extraction of HD map elements provides accurate and intuitive 3D location information as well as precise path planning and control strategies that go beyond the capabilities of the onboard sensors.

In terms of digital heritage protection and inheritance, point cloud intelligence can provide systematic scientific support, from data collection to refined, high-precision digital reconstruction, virtual restoration, and networked dissemination of cultural heritage. Examples include the reassembly of cultural heritage fragments, 3D model reconstruction, and the restoration of cultural heritage, which significantly improve the efficiency of heritage protection and enrich the presentation of its results.

5. Outlook

The rapid development of sensors, semiconductors, the IoT, and sensor platforms is continuously improving the efficiency and quality of point cloud big data acquisition while reducing the cost of data collection, allowing the physical world to be digitized in 3D more efficiently. However, data volumes are increasing exponentially, which poses great challenges for the storage, management, computation, and analysis of point cloud big data. Fortunately, emerging technologies such as edge computing, deep learning, and artificial intelligence provide new opportunities for point cloud intelligence.

The era of large-scale urban scene point clouds and global fine-scale point clouds is coming. Point cloud intelligence, as the scientific support for the intelligent processing and analysis of point cloud big data (the third most important type of basic data after vector maps and imagery), will develop further in the following directions: 1) storage and updating mechanisms for point cloud big data, providing basic support for its efficient utilization; 2) industrial and national standards for point cloud 3D information extraction and modeling for new basic mapping, to serve the construction of “realistic 3D China” and natural resource monitoring; 3) object-oriented deep learning networks for point cloud big data, based on artificial intelligence, that move point cloud processing from the current point-by-point classification towards integrated object classification and boundary extraction for the accurate understanding of 3D scenes; and 4) intelligent equipment that integrates collection, processing, and services, to support the health management of major infrastructure (such as power grids and high-speed rail). In the future, with the support of artificial intelligence and deep learning, point cloud intelligence is expected not only to enable the fine reconstruction of the real 3D world through real-time integration with IoT data, but also to support Earth science research, smart cities, etc., with more scientific decision-making.

6. Conclusion

This paper has presented a contemporary survey of the state of the art in point cloud intelligence with regard to theoretical methods, key techniques of intelligent processing, and major engineering applications. We have analyzed the equipment and methods of mainstream point cloud data acquisition and the advanced algorithms for the key technologies of intelligent point cloud processing. A comprehensive classification of these methods and an analysis of their merits and limitations have been presented, and potential research directions have been highlighted. Finally, based on a summary of point cloud intelligence in scientific research and engineering applications, future development directions have been discussed.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The manuscript does not mention any specific data.

Additional information

Funding

Notes on contributors

Bisheng Yang

Bisheng Yang received the B.S. degree in engineering survey, the M.S. degree, and the Ph.D. degree in photogrammetry and remote sensing from Wuhan University, China, in 1996, 1999, and 2002, respectively. From 2002 to 2006, he held a post-doctoral position at the University of Zurich, Switzerland. Since 2007, he has been a Professor with the State Key Laboratory of Information Engineering in Surveying, Mapping and Remote Sensing (LIESMARS), Wuhan University, where he is currently the vice-director of LIESMARS. His main research interests comprise 3-D geographic information systems, urban modeling, and digital cities. He was a Guest Editor of the ISPRS Journal of Photogrammetry and Remote Sensing and of Computers & Geosciences.

Norbert Haala

Norbert Haala is an Associate Professor at the Institute for Photogrammetry, University of Stuttgart, where he is responsible for lectures in the field of photogrammetric image processing. His research interests include virtual city models and image-based 3-D reconstruction.

Zhen Dong

Zhen Dong is a professor at the State Key Laboratory of Information Engineering in Surveying, Mapping and Remote Sensing (LIESMARS), Wuhan University. He received his B.E. and Ph.D. degrees in Remote Sensing and Photogrammetry from Wuhan University in 2011 and 2018, respectively. His research interests lie in the field of 3D Computer Vision, particularly 3D reconstruction, scene understanding, and point cloud processing, as well as their applications in intelligent transportation systems, digital twin cities, urban sustainable development, and robotics.

Notes

References

- Alexa, M., J. Behr, D. Cohen-Or, S. Fleishman, D. Levin, and C. T. Silva. 2003. “Computing and Rendering Point Set Surfaces.” IEEE Transactions on Visualization and Computer Graphics 9 (1): 3–15. doi:10.1109/TVCG.2003.1175093.

- Ao, S., Q. Hu, B. Yang, A. Markham, and Y. Guo. 2021. “SpinNet: Learning a General Surface Descriptor for 3D Point Cloud Registration.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 11753–11762, Online only (Virtual event).

- Aoki, Y., H. Goforth, R. A. Srivatsan, and S. Lucey. 2019. “PointNetLK: Robust & Efficient Point Cloud Registration Using PointNet.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 7163–7172, Long Beach, CA, USA.

- Arrigoni, F., B. Rossi, and A. Fusiello. 2016. “Global Registration of 3D Point Sets via LRS Decomposition.” In European Conference on Computer Vision, 489–504. Cham: Springer.

- Atzmon, M., and Y. Lipman. 2020a. “SAL: Sign Agnostic Learning of Shapes from Raw Data.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA.

- Atzmon, M., and Y. Lipman. 2020b. “SALD: Sign Agnostic Learning with Derivatives.” arXiv preprint arXiv:2006.05400.

- Avron, H., A. Sharf, C. Greif, and D. Cohen-Or. 2010. “ℓ1-Sparse Reconstruction of Sharp Point Set Surfaces.” ACM Transactions on Graphics (TOG) 29 (5): 1–12. doi:10.1145/1857907.1857911.

- Bai, X., Z. Luo, L. Zhou, H. Fu, L. Quan, and C. L. Tai. 2020. “D3Feat: Joint Learning of Dense Detection and Description of 3D Local Features.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 6359–6367, Seattle, WA, USA.

- Bai, X., Z. Luo, L. Zhou, H. Chen, L. Li, Z. Hu, H. Fu, and C. L. Tai. 2021. “PointDSC: Robust Point Cloud Registration Using Deep Spatial Consistency.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 15859–15869, Online only (Virtual event).

- Baorui, M., Z. Han, Y.-S. Liu, and M. Zwicker. 2021. “Neural-Pull: Learning Signed Distance Functions from Point Clouds by Learning to Pull Space Onto Surfaces.” In Proceedings of the 38th International Conference on Machine Learning (ICML).

- Bernardini, F., J. Mittleman, H. Rushmeier, C. Silva, and G. Taubin. 1999. “The Ball-Pivoting Algorithm for Surface Reconstruction.” IEEE Transactions on Visualization and Computer Graphics 5 (4): 349–359. doi:10.1109/2945.817351.

- Besl, P. J., and N. D. McKay. 1992. “Method for Registration of 3-D Shapes.” In Sensor Fusion IV: Control Paradigms and Data Structures, Proceedings of SPIE 1611: 586–606.

- Boissonnat, J. D. 1984. “Geometric Structures for Three-Dimensional Shape Representation.” ACM Transactions on Graphics (TOG) 3 (4): 266–286. doi:10.1145/357346.357349.

- Bouaziz, S., A. Tagliasacchi, and M. Pauly. 2013. “Sparse Iterative Closest Point.” Computer Graphics Forum 32 (5): 113–123. doi:10.1111/cgf.12178.

- Boulch, A. 2020. “ConvPoint: Continuous Convolutions for Point Cloud Processing.” Computers & Graphics 88: 24–34. doi:10.1016/j.cag.2020.02.005.

- Brachmann, E., A. Krull, S. Nowozin, J. Shotton, F. Michel, S. Gumhold, and C. Rother. 2017. “DSAC-Differentiable RANSAC for Camera Localization.” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 6684–6692, Honolulu, HI, USA.

- Brachmann, E., and C. Rother. 2019. “Neural-Guided RANSAC: Learning Where to Sample Model Hypotheses.” In Proceedings of the IEEE/CVF International Conference on Computer Vision, 4322–4331, Seoul, Korea (South).

- Carr, J. C., R. K. Beatson, J. B. Cherrie, T. J. Mitchell, W. R. Fright, B. C. McCallum, and T. R. Evans. 2001. “Reconstruction and Representation of 3D Objects with Radial Basis Functions.” In Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques, 67–76.

- Caesar, H., V. Bankiti, H. A. Lang, S. Vora, V. E. Liong, Q. Xu, A. Krishnan, Y. Pan, G. Baldan, and O. Beijbom. 2020. “nuScenes: A Multimodal Dataset for Autonomous Driving.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 11621–11631, Seattle, WA, USA.

- Cai, Y., K. Y. Lin, C. Zhang, Q. Wang, X. Wang, and H. Li. 2022. “Learning a Structured Latent Space for Unsupervised Point Cloud Completion.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, Louisiana, USA.

- Chetverikov, D., D. Svirko, D. Stepanov, and P. Krsek. 2002. “The Trimmed Iterative Closest Point Algorithm.” In Proceedings of the International Conference on Pattern Recognition, Vol. 3, 545–548. Quebec City, Canada: IEEE.

- Che, E., J. Jung, and M. J. Olsen. 2019. “Object Recognition, Segmentation, and Classification of Mobile Laser Scanning Point Clouds: A State of the Art Review.” Sensors 19 (4): 810. doi:10.3390/s19040810.

- Chen, X., B. Chen, and N. J. Mitra. 2019. “Unpaired Point Cloud Completion on Real Scans Using Adversarial Training.” arXiv preprint arXiv:1904.00069.

- Chen, Z., and H. Zhang. 2019. “Learning Implicit Fields for Generative Shape Modeling.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 5939–5948, Long Beach, CA, USA.

- Chen, H., Z. Wei, X. Li, Y. Xu, M. Wei, and J. Wang. 2022. “RePCD-Net: Feature-Aware Recurrent Point Cloud Denoising Network.” International Journal of Computer Vision 130 (1): 1–15. doi:10.1007/s11263-021-01538-9.

- Chen, Z., K. Sun, F. Yang, and W. Tao. 2022. “SC²-PCR: A Second Order Spatial Compatibility for Efficient and Robust Point Cloud Registration.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 13221–13231, New Orleans, Louisiana, USA.

- Choy, C., W. Dong, and V. Koltun. 2020. “Deep Global Registration.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2514–2523, Seattle, WA, USA.

- Choy, C., J. Park, and V. Koltun. 2019. “Fully Convolutional Geometric Features.” In Proceedings of the IEEE/CVF International Conference on Computer Vision, 8958–8966, Seoul, Korea (South).

- Cohen-Steiner, D., and F. Da. 2004. “A Greedy Delaunay-Based Surface Reconstruction Algorithm.” The Visual Computer 20 (1): 4–16. doi:10.1007/s00371-003-0217-z.

- Cramer, M., N. Haala, D. Laupheimer, G. Mandlburger, and P. Havel. 2018. “Ultra-High Precision UAV-Based LiDAR and Dense Image Matching.” The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences 1: 115–120. doi:10.5194/isprs-archives-XLII-1-115-2018.

- Dai, A., C. Ruizhongtai Qi, and M. Nießner. 2017. “Shape Completion Using 3D-Encoder-Predictor CNNs and Shape Synthesis.” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 5868–5877, Honolulu, HI, USA.

- Davis, J., S. R. Marschner, M. Garr, and M. Levoy. 2002. “Filling Holes in Complex Surfaces Using Volumetric Diffusion.” In Proceedings First International Symposium on 3D Data Processing Visualization and Transmission, 428–441. Padova, Italy: IEEE.

- Deng, H., T. Birdal, and S. Ilic. 2018. “PPF-FoldNet: Unsupervised Learning of Rotation Invariant 3D Local Descriptors.” In Proceedings of the European Conference on Computer Vision (ECCV), 602–618, Munich, Germany.

- Deng, H., T. Birdal, and S. Ilic. 2019. “3D Local Features for Direct Pairwise Registration.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 3244–3253, Long Beach, CA, USA.

- Deren, L. 2017. “From Geomatics to Geospatial Intelligent Service Science.” Acta Geodaetica et Cartographica Sinica 46 (10): 1207.

- Deren, L., M. Jun, and S. Zhenfeng. 2018. “Innovation in the Census and Monitoring of Geographical National Conditions.” Geomatics and Information Science of Wuhan University 43 (1): 1–9.

- Dong, Z., F. Liang, B. Yang, Y. Xu, Y. Zang, J. Li, Y. Wang, et al. 2020. “Registration of Large-Scale Terrestrial Laser Scanner Point Clouds: A Review and Benchmark.” ISPRS Journal of Photogrammetry and Remote Sensing 163: 327–342. doi:10.1016/j.isprsjprs.2020.03.013.

- Dong, Z., B. Yang, F. Liang, R. Huang, and S. Scherer. 2018. “Hierarchical Registration of Unordered TLS Point Clouds Based on Binary Shape Context Descriptor.” ISPRS Journal of Photogrammetry and Remote Sensing 144: 61–79. doi:10.1016/j.isprsjprs.2018.06.018.

- Dong, Z., B. Yang, Y. Liu, F. Liang, B. Li, and Y. Zang. 2017. “A Novel Binary Shape Context for 3D Local Surface Description.” ISPRS Journal of Photogrammetry and Remote Sensing 130: 431–452. doi:10.1016/j.isprsjprs.2017.06.012.

- Eckart, B., K. Kim, and J. Kautz. 2018. “HGMR: Hierarchical Gaussian Mixtures for Adaptive 3D Registration.” In Proceedings of the European Conference on Computer Vision (ECCV), 705–721, Munich, Germany.

- Edelsbrunner, H., and N.-R. Shah. 1994. “Triangulating Topological Spaces.” In Proceedings of the Tenth Annual Symposium on Computational Geometry, 285–292.

- El Banani, M., L. Gao, and J. Johnson. 2021. “UnsupervisedR&R: Unsupervised Point Cloud Registration via Differentiable Rendering.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 7129–7139, Online only (Virtual event).

- Erler, P., P. Guerrero, S. Ohrhallinger, N. J. Mitra, and M. Wimmer. 2020. “Points2Surf: Learning Implicit Surfaces from Point Clouds.” In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part V, 108–124. Cham: Springer International Publishing.

- Evangelidis, G. D., and R. Horaud. 2017. “Joint Alignment of Multiple Point Sets with Batch and Incremental Expectation-Maximization.” IEEE Transactions on Pattern Analysis and Machine Intelligence 40 (6): 1397–1410. doi:10.1109/TPAMI.2017.2717829.

- Felzenszwalb, P. F., R. B. Girshick, D. McAllester, and D. Ramanan. 2010. “Object Detection with Discriminatively Trained Part-Based Models.” IEEE Transactions on Pattern Analysis and Machine Intelligence 32 (9): 1627–1645. doi:10.1109/TPAMI.2009.167.

- Fischler, M. A., and R. C. Bolles. 1981. “Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography.” Communications of the ACM 24 (6): 381–395. doi:10.1145/358669.358692.

- Gao, Q. H., T. R. Wan, W. Tang, and L. Chen. 2019. “Object Registration in Semi-Cluttered and Partial-Occluded Scenes for Augmented Reality.” Multimedia Tools and Applications 78 (11): 15079–15099. doi:10.1007/s11042-018-6905-5.

- Ge, X., and H. Hu. 2020. “Object-Based Incremental Registration of Terrestrial Point Clouds in an Urban Environment.” ISPRS Journal of Photogrammetry and Remote Sensing 161: 218–232. doi:10.1016/j.isprsjprs.2020.01.020.

- Ge, X., H. Hu, and B. Wu. 2019. “Image-Guided Registration of Unordered Terrestrial Laser Scanning Point Clouds for Urban Scenes.” IEEE Transactions on Geoscience and Remote Sensing 57 (11): 9264–9276. doi:10.1109/TGRS.2019.2925805.

- Genova, K., F. Cole, A. Sud, A. Sarna, and T. Funkhouser. 2020. “Local Deep Implicit Functions for 3D Shape.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 4857–4866, Seattle, WA, USA.

- Glira, P., N. Pfeifer, and G. Mandlburger. 2016. “Rigorous Strip Adjustment of UAV-Based Laser Scanning Data Including Time Dependent Correction of Trajectory Errors.” Photogrammetric Engineering & Remote Sensing 82 (12): 945–954. doi:10.14358/PERS.82.12.945.

- Glira, P., N. Pfeifer, and G. Mandlburger. 2019. “Hybrid Orientation of Airborne LiDAR Point Clouds and Aerial Images.” ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences IV-2/W5: 567–574. doi:10.5194/isprs-annals-IV-2-W5-567-2019.

- Gojcic, Z., C. Zhou, J. D. Wegner, and A. Wieser. 2019. “The Perfect Match: 3D Point Cloud Matching with Smoothed Densities.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 5545–5554, Long Beach, CA, USA.

- Gojcic, Z., C. Zhou, J. D. Wegner, L. J. Guibas, and T. Birdal. 2020. “Learning Multiview 3D Point Cloud Registration.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1759–1769, Seattle, WA, USA.

- Govindu, V. M. 2004. “Lie-Algebraic Averaging for Globally Consistent Motion Estimation.” In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2004), Vol. 1. Washington, DC, USA: IEEE.

- Graham, B., M. Engelcke, and L. Van Der Maaten. 2018. “3D Semantic Segmentation with Submanifold Sparse Convolutional Networks.” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 9224–9232, Salt Lake City, UT, USA.

- Grisetti, G., R. Kümmerle, H. Strasdat, and K. Konolige. 2011. “G2o: A General Framework for (Hyper) Graph Optimization.” In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China.

- Gropp, A., L. Yariv, N. Haim, M. Atzmon, and Y. Lipman. 2020. “Implicit Geometric Regularization for Learning Shapes.” arXiv preprint arXiv:2002.10099.

- Guan, H., Y. Yu, J. Li, and P. Liu. 2016. “Pole-Like Road Object Detection in Mobile LiDAR Data via Supervoxel and Bag-Of-Contextual-Visual-Words Representation.” IEEE Geoscience and Remote Sensing Letters 13 (4): 520–524. doi:10.1109/LGRS.2016.2521684.

- Guo, Y., F. Sohel, M. Bennamoun, M. Lu, and J. Wan. 2013. “Rotational Projection Statistics for 3D Local Surface Description and Object Recognition.” International Journal of Computer Vision 105 (1): 63–86. doi:10.1007/s11263-013-0627-y.

- Guo, Y., H. Wang, Q. Hu, H. Liu, L. Liu, and M. Bennamoun. 2020. “Deep Learning for 3D Point Clouds: A Survey.” IEEE Transactions on Pattern Analysis and Machine Intelligence 43 (12): 4338–4364. doi:10.1109/TPAMI.2020.3005434.

- Gupta, S., P. Arbeláez, R. Girshick, and J. Malik. 2015. “Aligning 3D Models to RGB-D Images of Cluttered Scenes.” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 4731–4740, Boston, MA, USA.

- Haala, N., M. Kölle, M. Cramer, D. Laupheimer, and F. Zimmermann. 2022. “Hybrid Georeferencing of Images and LiDAR Data for UAV-Based Point Cloud Collection at Millimetre Accuracy.” ISPRS Open Journal of Photogrammetry and Remote Sensing 4: 100014. doi:10.1016/j.ophoto.2022.100014.

- Han, L., T. Zheng, L. Xu, and L. Fang. 2020. “OccuSeg: Occupancy-Aware 3D Instance Segmentation.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2940–2949, Seattle, WA, USA.

- Han, F., and S. C. Zhu. 2008. “Bottom-Up/Top-Down Image Parsing with Attribute Grammar.” IEEE Transactions on Pattern Analysis and Machine Intelligence 31 (1): 59–73. doi:10.1109/TPAMI.2008.65.

- Hemin, J., Z. Ruofei, and X. Donghai. 2019. “Design and Implementation of Mobile Measurement Method for Smartphone.” Bulletin of Surveying and Mapping 6: 71.

- Hermosilla, P., T. Ritschel, and T. Ropinski. 2019. “Total Denoising: Unsupervised Learning of 3D Point Cloud Cleaning.” In Proceedings of the IEEE/CVF International Conference on Computer Vision, 52–60, Seoul, Korea (South).

- Hoppe, H., T. DeRose, T. Duchamp, J. McDonald, and W. Stuetzle. 1992. “Surface Reconstruction from Unorganized Points.” ACM SIGGRAPH Computer Graphics 26 (2): 71–78. doi:10.1145/142920.134011.

- Hou, J., B. Graham, M. Nießner, and S. Xie. 2021. “Exploring Data-Efficient 3D Scene Understanding with Contrastive Scene Contexts.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 15587–15597, Virtual Event.

- Huang, S., Z. Gojcic, M. Usvyatsov, A. Wieser, and K. Schindler. 2021. “Predator: Registration of 3D Point Clouds with Low Overlap.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 4267–4276, Virtual Event.

- Huang, X., Z. Liang, X. Zhou, Y. Xie, L. J. Guibas, and Q. Huang. 2019. “Learning Transformation Synchronization.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 8082–8091, Long Beach, CA, USA.

- Hu, W., Z. Fu, and Z. Guo. 2019. “Local Frequency Interpretation and Non-Local Self-Similarity on Graph for Point Cloud Inpainting.” IEEE Transactions on Image Processing 28 (8): 4087–4100. doi:10.1109/TIP.2019.2906554.

- Hu, Q., B. Yang, L. Xie, S. Rosa, Y. Guo, Z. Wang, N. Trigoni, and A. Markham. 2020. “RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 11108–11117, Seattle, WA, USA.

- Izadi, S., D. Kim, O. Hilliges, D. Molyneaux, R. Newcombe, P. Kohli, J. Shotton, S. Hodges, D. Freeman, A. Davison, and A. Fitzgibbon. 2011. “KinectFusion: Real-Time 3D Reconstruction and Interaction Using a Moving Depth Camera.” In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, 559–568, Santa Barbara, CA, USA.

- Jancosek, M., and T. Pajdla. 2014. “Exploiting Visibility Information in Surface Reconstruction to Preserve Weakly Supported Surfaces.” International Scholarly Research Notices 2014: 1–20. doi:10.1155/2014/798595.

- Jiang, C., A. Sud, A. Makadia, J. Huang, M. Nießner, and T. Funkhouser. 2020. “Local Implicit Grid Representations for 3D Scenes.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 6001–6010, Seattle, WA, USA.

- Jiang, L., H. Zhao, S. Shi, S. Liu, C.-W. Fu, and J. Jia. 2020. “PointGroup: Dual-Set Point Grouping for 3D Instance Segmentation.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 4867–4876.

- Johnson, A. E., and M. Hebert. 1999. “Using Spin Images for Efficient Object Recognition in Cluttered 3D Scenes.” IEEE Transactions on Pattern Analysis and Machine Intelligence 21 (5): 433–449. doi:10.1109/34.765655.

- Kazhdan, M., M. Bolitho, and H. Hoppe. 2006. “Poisson Surface Reconstruction.” In Proceedings of the Fourth Eurographics Symposium on Geometry Processing, Vol. 7, 61–70. Goslar, Germany: Eurographics Association.

- Kazhdan, M., and H. Hoppe. 2013. “Screened Poisson Surface Reconstruction.” ACM Transactions on Graphics (TOG) 32 (3): 1–13. doi:10.1145/2487228.2487237.

- Kelly, A., A. Stentz, O. Amidi, M. Bode, D. Bradley, A. Diaz-Calderon, M. Happold, et al. 2006. “Toward Reliable off Road Autonomous Vehicles Operating in Challenging Environments.” The International Journal of Robotics Research 25 (5–6): 449–483. doi:10.1177/0278364906065543.

- Koelle, M., D. Laupheimer, S. Schmohl, N. Haala, F. Rottensteiner, J. D. Wegner, and H. Ledoux. 2021. “The Hessigheim 3D (H3D) Benchmark on Semantic Segmentation of High-Resolution 3D Point Clouds and Textured Meshes from UAV LiDAR and Multiview-Stereo.” ISPRS Open Journal of Photogrammetry and Remote Sensing 1: 100001. doi:10.1016/j.ophoto.2021.100001.

- Kruskal, J. B. 1956. “On the Shortest Spanning Subtree of a Graph and the Traveling Salesman Problem.” Proceedings of the American Mathematical Society 7 (1): 48–50. doi:10.1090/S0002-9939-1956-0078686-7.

- Labatut, P., J.-P. Pons, and R. Keriven. 2009. “Robust and Efficient Surface Reconstruction from Range Data.” Computer Graphics Forum 28 (8): 2275–2290. doi:10.1111/j.1467-8659.2009.01530.x.

- Landrieu, L., H. Raguet, B. Vallet, C. Mallet, and M. Weinmann. 2017. “A Structured Regularization Framework for Spatially Smoothing Semantic Labelings of 3D Point Clouds.” ISPRS Journal of Photogrammetry and Remote Sensing 132: 102–118. doi:10.1016/j.isprsjprs.2017.08.010.

- Le, H. M., T.-T. Do, T. Hoang, and N.-M. Cheung. 2019. “SDRSAC: Semidefinite-Based Randomized Approach for Robust Point Cloud Registration Without Correspondences.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 124–133, Long Beach, CA, USA.

- Levin, D. 1998. “The Approximation Power of Moving Least-Squares.” Mathematics of Computation 67 (224): 1517–1531. doi:10.1090/S0025-5718-98-00974-0.

- Liao, Y., S. Donne, and A. Geiger. 2018. “Deep Marching Cubes: Learning Explicit Surface Representations.” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2916–2925, Salt Lake City, UT, USA.

- Li, Y., A. Dai, L. Guibas, and M. Nießner. 2015. “Database-assisted Object Retrieval for Real-time 3D Reconstruction.” Computer Graphics Forum 34 (2): 435–446. doi:10.1111/cgf.12573.

- Li, Y., and T. Harada. 2021. “Lepard: Learning Partial Point Cloud Matching in Rigid and Deformable Scenes.” arXiv preprint arXiv:2111.12591.

- Li, Y., and T. Harada. 2022. “Lepard: Learning Partial Point Cloud Matching in Rigid and Deformable Scenes.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 5554–5564, New Orleans, LA, USA.

- Li, G., J. Huang, X. Tang, G. Huang, S. Zhou, and Y. Zhao. 2018. “Influence of Range Gate Width on Detection Probability and Ranging Accuracy of Single Photon Laser Altimetry Satellite.” Acta Geodaetica et Cartographica Sinica 47: 1487–1494.

- Li, J., and G. H. Lee. 2019. “USIP: Unsupervised Stable Interest Point Detection from 3D Point Clouds.” In Proceedings of the IEEE/CVF International Conference on Computer Vision, 361–370, Seoul, Korea (South).

- Li, F., M. Lehtomäki, S. O. Elberink, G. Vosselman, A. Kukko, E. Puttonen, Y. Chen, and J. Hyyppä. 2019. “Semantic Segmentation of Road Furniture in Mobile Laser Scanning Data.” ISPRS Journal of Photogrammetry and Remote Sensing 154: 98–113. doi:10.1016/j.isprsjprs.2019.06.001.

- Li, Y., L. Ma, Z. Zhong, F. Liu, M. A. Chapman, D. Cao, and J. Li. 2020. “Deep Learning for LiDAR Point Clouds in Autonomous Driving: A Review.” IEEE Transactions on Neural Networks and Learning Systems 32 (8): 3412–3432. doi:10.1109/TNNLS.2020.3015992.

- Liu, S.-L., H.-X. Guo, H. Pan, P.-S. Wang, X. Tong, and Y. Liu. 2021a. “Deep Implicit Moving Least-Squares Functions for 3D Reconstruction.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1788–1797, Virtual Event.

- Liu, S.-L., H.-X. Guo, H. Pan, P.-S. Wang, X. Tong, and Y. Liu. 2021b. “Deep Implicit Moving Least-Squares Functions for 3D Reconstruction.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1788–1797, Virtual Event.

- Li, J., B. Yang, C. Chen, and A. Habib. 2019. “NRLI-UAV: Non-Rigid Registration of Sequential Raw Laser Scans and Images for Low-Cost UAV LiDAR Point Cloud Quality Improvement.” ISPRS Journal of Photogrammetry and Remote Sensing 158: 123–145. doi:10.1016/j.isprsjprs.2019.10.009.

- Li, L., S. Zhu, H. Fu, P. Tan, and C.-L. Tai. 2020. “End-To-End Learning Local Multi-View Descriptors for 3D Point Clouds.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 1919–1928, Seattle, WA, USA.

- Lorensen, W. E., and H. E. Cline. 1987. “Marching Cubes: A High Resolution 3D Surface Construction Algorithm.” ACM SIGGRAPH Computer Graphics 21 (4): 163–169. doi:10.1145/37402.37422.

- Luo, S., and W. Hu. 2020. “Differentiable Manifold Reconstruction for Point Cloud Denoising.” In Proceedings of the 28th ACM International Conference on Multimedia, 1330–1338, Seattle, WA, USA.

- Luo, S., and W. Hu. 2021. “Score-Based Point Cloud Denoising.” In Proceedings of the IEEE/CVF International Conference on Computer Vision, 4583–4592, Montreal, QC, Canada.

- Magnusson, M., A. Lilienthal, and T. Duckett. 2007. “Scan Registration for Autonomous Mining Vehicles Using 3D-NDT.” Journal of Field Robotics 24 (10): 803–827. doi:10.1002/rob.20204.

- Ma, L., Y. Li, J. Li, C. Wang, R. Wang, and M. Chapman. 2018. “Mobile Laser Scanned Point-Clouds for Road Object Detection and Extraction: A Review.” Remote Sensing 10 (10): 1531. doi:10.3390/rs10101531.

- Mellado, N., D. Aiger, and N. J. Mitra. 2014. “Super 4PCS: Fast Global Pointcloud Registration via Smart Indexing.” Computer Graphics Forum 33 (5): 205–215. doi:10.1111/cgf.12446.

- Mescheder, L., M. Oechsle, M. Niemeyer, S. Nowozin, and A. Geiger. 2019. “Occupancy Networks: Learning 3D Reconstruction in Function Space.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 4460–4470, Long Beach, CA, USA.

- Mian, A. S., M. Bennamoun, and R. Owens. 2006. “Three-Dimensional Model-Based Object Recognition and Segmentation in Cluttered Scenes.” IEEE Transactions on Pattern Analysis and Machine Intelligence 28 (10): 1584–1601. doi:10.1109/TPAMI.2006.213.

- Mitra, N. J., L. J. Guibas, and M. Pauly. 2006. “Partial and Approximate Symmetry Detection for 3D Geometry.” ACM Transactions on Graphics (TOG) 25 (3): 560–568. doi:10.1145/1141911.1141924.

- Mostegel, C., R. Prettenthaler, F. Fraundorfer, and H. Bischof. 2017. “Scalable Surface Reconstruction from Point Clouds with Extreme Scale and Density Diversity.” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 904–913, Honolulu, HI, USA.

- Pais, G. D., S. Ramalingam, V. M. Govindu, J. C. Nascimento, R. Chellappa, and P. Miraldo. 2020. “3DRegNet: A Deep Neural Network for 3D Point Registration.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 7193–7203, Seattle, WA, USA.

- Pan, L., X. Chen, Z. Cai, J. Zhang, H. Zhao, S. Yi, and Z. Liu. 2021. “Variational Relational Point Completion Network.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 8524–8533, Nashville, TN, USA.

- Park, J. J., P. Florence, J. Straub, R. Newcombe, and S. Lovegrove. 2019. “DeepSDF: Learning Continuous Signed Distance Functions for Shape Representation.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 165–174.

- Peng, S., C. Jiang, Y. Liao, M. Niemeyer, M. Pollefeys, and A. Geiger. 2021. “Shape as Points: A Differentiable Poisson Solver.” Advances in Neural Information Processing Systems 34.

- Peng, S., M. Niemeyer, L. Mescheder, M. Pollefeys, and A. Geiger. 2020. “Convolutional Occupancy Networks.” In European Conference on Computer Vision, Springer, Cham, 523–540, Glasgow, UK.

- Pistilli, F., G. Fracastoro, D. Valsesia, and E. Magli. 2020. “Learning Graph-Convolutional Representations for Point Cloud Denoising.” In European Conference on Computer Vision, Springer, Cham, 103–118, Glasgow, UK.

- Qin, Z., H. Yu, C. Wang, Y. Guo, Y. Peng, and K. Xu. 2022. “Geometric Transformer for Fast and Robust Point Cloud Registration.” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 11133–11142. arXiv:2202.06688.

- Qi, C. R., H. Su, K. Mo, and L. J. Guibas. 2017. “PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation.” In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 652–660, Honolulu, HI, USA.

- Qi, C. R., L. Yi, H. Su, and L. J. Guibas. 2017. “PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space.” Advances in Neural Information Processing Systems 30.

- Rakotosaona, M. J., V. La Barbera, P. Guerrero, N. J. Mitra, and M. Ovsjanikov. 2020. “PointCleanNet: Learning to Denoise and Remove Outliers from Dense Point Clouds.” Computer Graphics Forum 39 (1): 185–203. doi:10.1111/cgf.13753.

- Ressl, C., H. Kager, and G. Mandlburger. 2008. “Quality Checking of ALS Projects Using Statistics of Strip Differences.” International Archives of Photogrammetry and Remote Sensing 37 (B3b): 253–260.

- Roveri, R., A. C. Öztireli, I. Pandele, and M. Gross. 2018. “PointProNets: Consolidation of Point Clouds with Convolutional Neural Networks.” Computer Graphics Forum 37 (2): 87–99. doi:10.1111/cgf.13344.

- Rusu, R. B., N. Blodow, and M. Beetz. 2009. “Fast Point Feature Histograms (FPFH) for 3D Registration.” 2009 IEEE International Conference on Robotics and Automation, IEEE, 3212–3217, Kobe, Japan.

- Schoenenberger, Y., J. Paratte, and P. Vandergheynst. 2015. “Graph-Based Denoising for Time-Varying Point Clouds.” 2015 3DTV-Conference: The True Vision-Capture, Transmission and Display of 3D Video (3DTV-CON), 1–4, Lisbon, Portugal, IEEE.

- Segal, A., D. Haehnel, and S. Thrun. 2009. “Generalized-ICP.” Robotics: Science and Systems 2 (4): 435.

- Shan, J., and C. K. Toth, eds. 2018. Topographic Laser Ranging and Scanning: Principles and Processing. 2nd ed. CRC Press. doi:10.1201/9781315154381.

- Shih, S. W., Y. T. Chuang, and T. Y. Yu. 2008. “An Efficient and Accurate Method for the Relaxation of Multiview Registration Error.” IEEE Transactions on Image Processing 17 (6): 968–981. doi:10.1109/TIP.2008.921987.

- Berger, M., A. Tagliasacchi, L. Seversky, P. Alliez, J. Levine, A. Sharf, and C. Silva. 2014. “State of the Art in Surface Reconstruction from Point Clouds.” Eurographics 2014-State of the Art Reports 1 (1): 161–185.

- Stoyanov, T., M. Magnusson, H. Andreasson, and A. J. Lilienthal. 2012. “Fast and Accurate Scan Registration Through Minimization of the Distance Between Compact 3D NDT Representations.” The International Journal of Robotics Research 31 (12): 1377–1393. doi:10.1177/0278364912460895.