ABSTRACT

In the rapidly evolving field of remote sensing, the classification of Hyperspectral Images (HSIs) is a pivotal yet formidable task. Because of the inherent limitations of hyperspectral imaging, improving the accuracy and efficiency of HSI classification remains a critical and much-debated issue. This review focuses on a key application area of HSI classification: Land Use/Land Cover (LULC). Our study proceeds in four parts. First, we systematically review LULC hyperspectral image classification, covering its background and key challenges. Second, we compile and analyze datasets specific to LULC hyperspectral classification as a resource for researchers. Third, we examine traditional machine learning models and cutting-edge methods in this field, with a particular focus on deep learning and spectral unmixing techniques. Finally, we analyze future development trajectories in HSI classification and pinpoint potential research challenges. This review aims to serve as a reference resource, informing researchers about the current landscape and future prospects of hyperspectral image classification.

1. Introduction

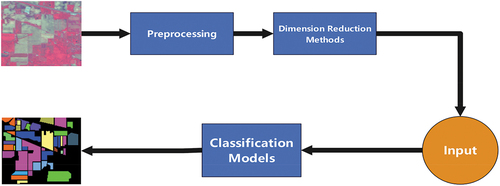

Because Earth observation satellites capture image data of the Earth globally, imaging technology has become a dependable tool for studying Earth information. In traditional imaging, differences in optical properties are often discerned through the grayscale levels of black-and-white images. Hyperspectral imaging combines spectroscopy with imaging to capture image data at high spectral resolution. The earliest hyperspectral imaging systems were airborne imaging spectrometers; through continuous refinement, the technology evolved into instruments such as the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS), from which many benchmark academic hyperspectral datasets originate. The general framework for Hyperspectral Image (HSI) processing is illustrated in the figure above.

Hyperspectral remote sensing images have attracted considerable attention because of their high spectral resolution, large number of bands, and ability to precisely delineate the spectral characteristic curves of target objects. These inherent advantages of Hyperspectral Images (HSIs) stem from progress in imaging technology, and their rich spectral information enables effective analysis of the target scene. HSIs have therefore been widely employed in various fields, including pathological diagnosis (Aboughaleb, Aref, and El-Sharkawy Citation2020; Goto et al. Citation2015; Vo-Dinh Citation2004), fruit decay detection (Min et al. Citation2023; Delia et al. Citation2013; Huang et al. Citation2016), wheat seed variety recognition and classification (Bao et al. Citation2019; Que et al. Citation2023; Zhao et al. Citation2022), egg freshness detection (Chen et al. Citation2023; Dai et al. Citation2020; Yao et al. Citation2020), Earth monitoring (Camps-Valls et al. Citation2014; Transon et al. Citation2018), and land cover classification (Hegde et al. Citation2014; Hemanth, Prasad, and Bruce Citation2010; Kwan et al. Citation2020; Priyadarshini et al. Citation2019; Stavrakoudis et al. Citation2012; Zomer, Trabucco, and Ustin Citation2009). In particular, hyperspectral imaging is instrumental in acquiring detailed data for Land Use and Land Cover (LULC) analysis through remote sensing. LULC hyperspectral images are acquired by specialized remote sensing instruments on satellite and airborne platforms, and they enable the classification of different land cover types and land use patterns, contributing to a comprehensive understanding of environmental dynamics and resource management. Consequently, LULC hyperspectral image classification has become a particularly active research area, and numerous reviews on the topic have recently been published (Chen et al. Citation2018; Kuras et al. Citation2021; Moharram and Meena Sundaram Citation2023; Vali, Comai, and Matteucci Citation2020).

In HSI classification, standard classifiers such as K-Nearest Neighbor (KNN) and Support Vector Machine (SVM) often perform poorly. First, traditional classification methods ignore the abundant spatial information inherent in HSIs, resulting in inadequate feature extraction. Second, most traditional methods rely on hand-crafted features and require extensive manual discrimination and annotation, which consumes considerable labor and time. These factors have motivated the search for more accurate classification techniques. Today, machine learning methods, including advanced Deep Learning (DL) techniques, are widely used for HSI classification, and classification performance has improved dramatically over earlier approaches (Tao et al. Citation2023; Zhong et al. Citation2017).

LULC comprises two major domains: land cover and land use (Masoumi and van Genderen Citation2023). Land cover refers to the natural and human-made objects that physically cover the Earth's surface, such as forests, grasslands, farmland, soil, and roads. Land use, by contrast, refers to the transformation of the land's natural ecosystem into an artificial one, a process shaped by natural, economic, and social factors; examples include areas designated for transportation, commerce, and residential use. Hyperspectral images are valuable tools for extracting surface cover features in LULC classification. They also support ongoing monitoring of surface cover, giving a comprehensive picture of the land's status and changes, which in turn enables targeted decisions on land maintenance and management. Although early LULC hyperspectral classification methods are outdated by current standards, they achieved commendable results for their time and provided insights that shaped the evolution of subsequent classification techniques.

In (de Jong et al. Citation2001), the authors introduced the Spatial and Spectral Classifier (SSC) for open natural land cover areas in the Mediterranean region. The SSC combines the strengths of classification approaches that use spectral information and those that use the context of adjacent pixels. It first partitions the HSI into homogeneous and heterogeneous segments according to the spectral variation within a kernel of pixels. Homogeneous segments are then classified with a conventional per-pixel method, whereas heterogeneous segments are classified using both spectral information and the context of adjacent pixels. In the comparison reported in the article, the SSC achieved higher overall accuracy than the other classification methods tested. Notably, it recognizes mixed pixels and assigns them to appropriate land cover categories. A limitation, however, is that if the spatial pattern of land cover does not match the pixel size of the sensor and the kernel size used in the analysis, the layering (segmentation) step may fail. To classify multi-source remote sensing and geographic datasets, Gislason et al. (Citation2006) conducted an extensive study of random forest classifiers for land cover classification and compared their accuracy with that of other well-known ensemble methods. The experimental results highlighted three advantages of the random forest classifier. First, compared with other ensemble methods, it trained faster without overfitting or requiring external guidance. Second, its ability to estimate variable importance proved valuable for feature extraction and weighting in remote sensing data classification. Third, it showed proficiency in outlier detection, which helps avoid erroneous labeling in certain scenarios. Shao and Lunetta (Citation2012) assessed the performance of SVM on spectral data, comparing it with two traditional image classification methods, a multi-layer perceptron Neural Network (NN) and Classification and Regression Tree (CART), using Moderate Resolution Imaging Spectroradiometer (MODIS) time series data as input. Their experiments focused on three factors: training sample size, variation in the training samples, and the characteristics of the reference data points. The results showed that, compared with NN and CART, SVM achieved higher overall accuracy across the typical range of training sample sizes, a significantly higher Kappa coefficient, and better generalization.
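Overall accuracy (OA) and the Kappa coefficient, the metrics used in the comparisons above, are both computed from a confusion matrix. The following is a minimal NumPy sketch, assuming integer class labels 0 to n_classes - 1; the function name `overall_accuracy_and_kappa` is hypothetical and not taken from any of the cited studies.

```python
import numpy as np

def overall_accuracy_and_kappa(y_true, y_pred, n_classes):
    """Return (overall accuracy, Cohen's kappa) for integer labels 0..n_classes-1."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    # Confusion matrix: rows = reference labels, columns = predicted labels
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    n = cm.sum()
    # Observed agreement is the overall accuracy
    p_o = np.trace(cm) / n
    # Agreement expected by chance, from the row and column marginals
    p_e = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n**2
    # Undefined when p_e == 1 (e.g. only one class present in both label sets)
    kappa = (p_o - p_e) / (1.0 - p_e)
    return p_o, kappa
```

For example, `overall_accuracy_and_kappa([0, 0, 1, 1], [0, 1, 1, 1], 2)` gives an OA of 0.75 but a kappa of 0.5, because kappa discounts the 50% agreement that would be expected by chance from the class marginals. This is why kappa is usually reported alongside OA.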

Over the past decade, continuous advances in machine learning have steadily improved classification technology for LULC HSIs, and many methods in the literature have shown promising results. Carranza-García et al. (Citation2019) employed a universal convolutional neural network (CNN) with a fixed structure and parameterization to achieve high accuracy in LULC classification. They also introduced a validation procedure to compare their method with traditional classifiers, including random forests, SVM, and KNN, and the CNN outperformed all of them. Tan and Xue (Citation2022) proposed a spectral-spatial multi-layer perceptron architecture that extracts more discriminative features and effectively fuses heterogeneous spatial and spectral features for joint land cover classification, outperforming traditional deep learning classification methods. Yang et al. (Citation2023) proposed a partially supervised deep reinforcement learning model for hyperspectral band selection that outperforms comparable band selection methods. The bands it selects are the most significant in the spectral range and are well distributed across the dynamic range of the spectrum, so the selected subset closely represents the original hyperspectral data.

1.1. Main contributions of the paper

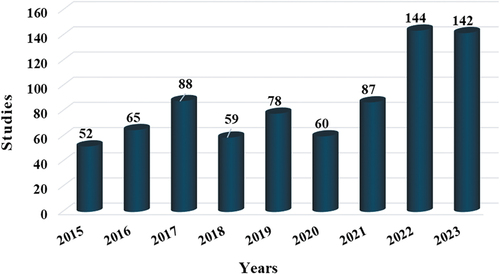

A thorough examination of recent literature on LULC hyperspectral image classification reveals a significant surge in publications; the figure above illustrates this trend using the latest Scopus-indexed works in the field. Our review evaluates both traditional machine learning models and emerging technologies, particularly deep learning and spectral unmixing, to provide a broader perspective. This surge in research activity, together with the open challenges highlighted in the literature, motivated us to compile this review, with the goal of helping researchers navigate and overcome these challenges.

The contributions of this study are outlined as follows:

This review concentrates solely on LULC HSIs acquired by remote sensing instruments. Unlike reviews of hyperspectral classification in general, it focuses specifically on land cover and land use patterns in hyperspectral imagery, which supports applications in environmental dynamics and resource management.

We describe the development trends and challenges of hyperspectral image classification methods, covering both classification and dimensionality reduction techniques. We also summarize open challenges in dimensionality reduction to help researchers gain a deeper understanding of the field.

To enhance the comprehensiveness of this review, we synthesize a series of in-depth studies on the classification of land use/land cover hyperspectral data using traditional machine learning models, deep learning, and spectral unmixing. In summary, this review provides potential guidelines for future research work.

Drawing on the relevant LULC classification literature, this review analyzes the advantages and limitations of traditional machine learning models and promising technologies such as deep learning and spectral unmixing. Its aim is to help LULC researchers understand the performance of advanced classification methods, select the most effective method for their task, and identify future directions for LULC classification.

Table 1. Relevant information on the review of land cover/land use hyperspectral image classification in recent years.

Table 1 provides a detailed comparison of five pivotal review articles on HSI classification, highlighting the datasets, core analyses, and limitations of each. Our review differs from these existing works in the following respects:

Extensive dataset compilation: Our review assembles 12 diverse remote sensing datasets and provides both their detailed parameters and an access link for each, giving researchers a convenient single reference.

In-depth methodological analysis: We delve deeply into dimensionality reduction and classification techniques, providing a thorough exploration of these critical aspects in HSI classification.

Comprehensive technological scope: In addition to the two most common families of methods, traditional machine learning and deep learning, our review covers cutting-edge technologies such as spectral unmixing and transformers, giving a more complete picture of current and potential advances in the field.

These enhancements underscore our commitment to delivering a review that is not only comprehensive but also practical and forward-looking, aiming to contribute significantly to the research community in hyperspectral image classification.

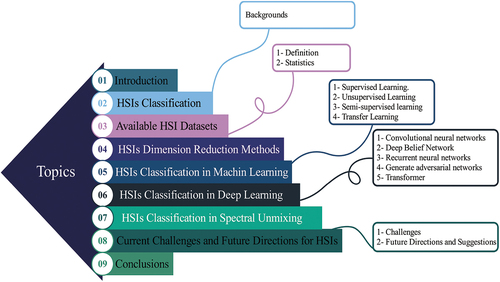

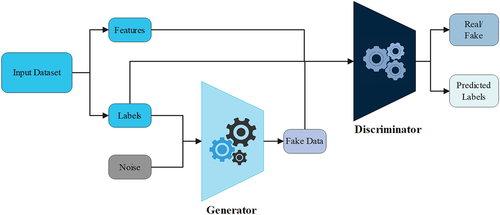

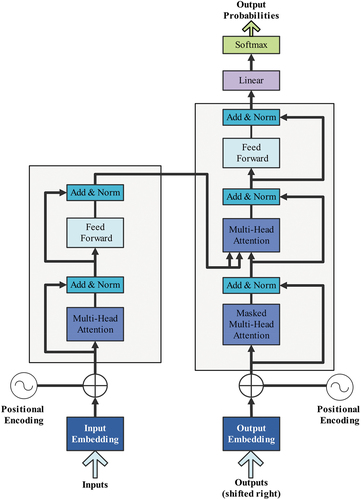

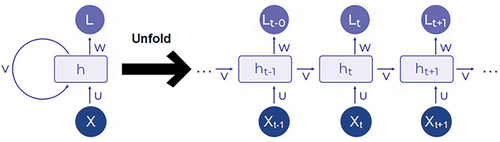

The remainder of the paper is organized as follows. Section 2 examines the background of hyperspectral image classification. Section 3 defines the available datasets and presents their statistics. Section 4 introduces the background, advantages, and limitations of hyperspectral dimensionality reduction methods. Sections 5 and 6 discuss the background, advantages, and limitations of traditional machine learning models (supervised, unsupervised, semi-supervised, and transfer learning) and deep learning models (CNN, Deep Belief Network (DBN), Recurrent Neural Network (RNN), Generative Adversarial Network (GAN), and transformer), respectively, in hyperspectral classification. Section 7 introduces spectral unmixing, an auxiliary technology for hyperspectral image classification, and discusses its background, advantages, and limitations. Section 8 outlines future development directions of hyperspectral technology, and Section 9 summarizes the main conclusions and suggests avenues for further research. The overall structure of this study is illustrated in the diagram above.

2. HSI classification: background

2.1. Background

HSI classification has found broad application in various fields, including breast cancer screening (Li et al. Citation2022), potato late blight detection (Qi et al. Citation2023), Mengdingshan green tea detection (Zou et al. Citation2023), and crop classification (Hsieh and Kiang Citation2020). Hyperspectral image classification aims to identify the category of each target object (typically each pixel) from its spectral and spatial features, assisting human observation and computational analysis.

Over the past two decades, HSI classification technology has made significant strides, overcoming various challenges and improving processing outcomes. In 2001, Du and Chang introduced Linear Constraint Distance Analysis (LCDA), a discriminant analysis technique that improves upon Fisher's Linear Discriminant Analysis (LDA) (Du and Chang Citation2001). LCDA constrains the class centers to lie along mutually orthogonal directions, ensuring separation among all classes of interest. This constraint makes LCDA particularly adept at detecting and classifying similar targets in HSIs with very high spatial resolution. LCDA can also be extended to an unsupervised mode when no prior knowledge of the features is available. Senthil Kumar et al. (Citation2010) proposed a spectral matching method that combines the Variable Interval Spectral Average (VISA) method with the Spectral Curve Matching (SCM) method. This hybrid technique capitalizes on the strengths of both: it enables multi-resolution analysis of spectral features and improves the digital correlation in the least squares fitting model. The combined approach classified both pure and mixed-class pixels better than the other methods compared. Moreover, Du, Tan, and Xing (Citation2012) designed an adaptive binary tree SVM classifier that incorporates inter-class separability measures into the SVM criteria for hyperspectral data. This classifier outperformed other multi-class SVM classifiers and traditional classification methods.
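Since LCDA is presented as an improvement on Fisher's LDA, a sketch of the classical Fisher projection may help clarify the baseline. The code below is plain Fisher LDA in NumPy, not LCDA (which additionally constrains the class centers to orthogonal directions); the function name `fisher_lda` and the small ridge term added to the within-class scatter are our own assumptions.

```python
import numpy as np

def fisher_lda(X, y, n_components):
    """Classical Fisher LDA projection.

    X: (n_samples, n_bands) spectra; y: (n_samples,) integer class labels.
    Returns W: (n_bands, n_components) projection matrix.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    mean_all = X.mean(axis=0)
    d = X.shape[1]
    S_w = np.zeros((d, d))  # within-class scatter
    S_b = np.zeros((d, d))  # between-class scatter
    for c in np.unique(y):
        Xc = X[y == c]
        mean_c = Xc.mean(axis=0)
        centered = Xc - mean_c
        S_w += centered.T @ centered
        diff = (mean_c - mean_all)[:, None]
        S_b += Xc.shape[0] * (diff @ diff.T)
    # Small ridge keeps S_w invertible when neighbouring bands are highly correlated
    S_w += 1e-6 * np.eye(d)
    # Directions maximising between-class over within-class scatter:
    # eigenvectors of S_w^-1 S_b, sorted by decreasing eigenvalue
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(S_w, S_b))
    order = np.argsort(eigvals.real)[::-1]
    return eigvecs[:, order[:n_components]].real
```

With C classes, S_b has rank at most C - 1, so at most C - 1 discriminant directions carry information and `n_components` should not exceed that number.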

Recent advances in deep learning have significantly propelled HSI classification, yielding more precise and effective methods. Sellami et al. (Citation2020) introduced spectral-spatial classification methods based on spectral band clustering and a three-dimensional (3-D) CNN, including a fused 3-D CNN model. These methods address the challenges of a high-dimensional feature space, numerous spectral bands, and limited labeled samples in HSIs. The model extracts deep spectral and spatial features from clusters of similar spectral bands, efficiently mitigating redundancy. By using spectral clustering to pinpoint the most discriminative and informative bands, it achieves higher classification rates than the other methods compared. The 3-D CNN further captures spatial and spectral information simultaneously, enhancing overall classification performance.
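3-D CNNs such as those described above typically take as input a small spatial-spectral cube centred on each labelled pixel. The sketch below shows this common preprocessing step in NumPy; the function name `extract_patches`, the reflect padding at the image borders, and the window size are illustrative assumptions, and the band-clustering stage of Sellami et al. is not reproduced.

```python
import numpy as np

def extract_patches(cube, coords, patch_size):
    """Cut spatial-spectral patches centred on the given pixels.

    cube: (H, W, B) hyperspectral image; coords: iterable of (row, col);
    patch_size: odd spatial window size (e.g. 7 or 9, a hypothetical choice).
    Returns an array of shape (N, patch_size, patch_size, B).
    """
    if patch_size % 2 == 0:
        raise ValueError("patch_size must be odd")
    r = patch_size // 2
    # Reflect-pad only the spatial axes so border pixels also get full patches
    padded = np.pad(cube, ((r, r), (r, r), (0, 0)), mode="reflect")
    # Pixel (i, j) sits at (i + r, j + r) in the padded cube,
    # so its window starts at (i, j) in padded coordinates
    patches = [padded[i:i + patch_size, j:j + patch_size, :] for i, j in coords]
    return np.stack(patches)
```

Each returned patch keeps all B bands, so a 3-D convolution can slide over it jointly in the spatial and spectral dimensions.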

In (Wang, Li, and Zhang Citation2021), a Discriminative Graph Convolutional Network (DGCN) was developed to address intra-class diversity and inter-class similarity in HSI classification. DGCN integrates the concepts of intra-class and inter-class scatter into GCNs to make the extracted features more discriminative. By maximizing inter-class distances and minimizing intra-class distances, DGCN separates classes more effectively, improving classification accuracy. To further refine HSI classification, Wan et al. (Citation2022) proposed a Multi-Level Graph Learning Network (MGLN). This network incorporates global contextual information into local convolutional operations and adaptively learns the graph structure, jointly optimizing representation learning and graph reconstruction. Because MGLN captures contextual information at multiple levels during graph convolution, combining local spatial correlations with correlations among global image regions, it offers a more comprehensive solution than local GCN models.
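Both DGCN and MGLN build on graph convolution. As a rough illustration of the underlying operation, the sketch below builds a k-nearest-neighbour graph over spectra and applies the widely used symmetrically normalised GCN propagation rule of Kipf and Welling. It is not the DGCN or MGLN architecture (neither the scatter terms nor the adaptive graph learning is modelled), and the helper names `knn_adjacency` and `gcn_layer` are hypothetical.

```python
import numpy as np

def knn_adjacency(X, k):
    """Binary, symmetric k-NN graph over node features X of shape (N, F)."""
    X = np.asarray(X, dtype=float)
    # Pairwise squared Euclidean distances between spectra
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d2, np.inf)            # a node is not its own neighbour
    nbrs = np.argsort(d2, axis=1)[:, :k]    # k nearest neighbours per node
    A = np.zeros_like(d2)
    A[np.repeat(np.arange(X.shape[0]), k), nbrs.ravel()] = 1.0
    return np.maximum(A, A.T)               # make the graph undirected

def gcn_layer(X, A, W):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    A_hat = A + np.eye(A.shape[0])                  # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))   # degrees >= 1, no division by zero
    A_norm = A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(A_norm @ X @ W, 0.0)          # ReLU activation
```

Each layer mixes a node's features with those of its graph neighbours, which is how GCN-based classifiers propagate label-relevant information between spectrally or spatially related pixels.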

To address the limitations of traditional models and their low classification accuracy when labeled samples are scarce, Wang et al. introduced the Capsule Vector Neural Network (CVNN), which combines a vector-neuron capsule representation with a vanilla Fully Convolutional Network (FCN) to improve classification accuracy (Wang, Tan, et al. Citation2023). CVNN is particularly effective with limited training samples, balancing the retention of secondary features against the suppression of salt-and-pepper noise. These developments illustrate the dynamic evolution of HSI classification, with deep learning continually pushing the boundaries of accuracy and efficiency in the field.

Advances in both traditional machine learning models and deep learning algorithms have substantially improved HSI classification. Nevertheless, improving classification accuracy remains a pressing and active research area, because inherent characteristics of HSIs, such as high dimensionality, spectral variability, and limited labeled samples, continue to limit it. Table 2 lists details of the hyperspectral classification studies mentioned above.

Table 2. Specific introduction to literature related to hyperspectral image classification.

3. Available HSI datasets: definition and statistics

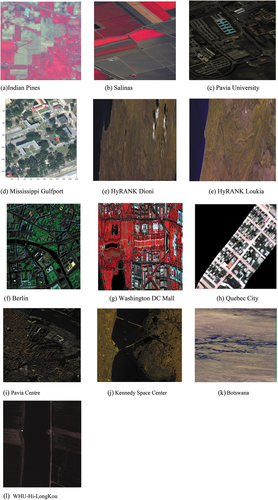

This review identified relevant datasets from 20 publications, including widely used datasets such as Indian Pines, Pavia Center, Pavia University, and KSC, alongside several specialized datasets. The following subsections define each dataset and summarize its statistics. We also visualized pseudo-color composites of all the datasets analyzed, shown in the figure above, so that readers can grasp their overall layout intuitively.

3.1. Definitions of the existing datasets

Indian Pines: This dataset was captured by the AVIRIS sensor over Indiana, USA, in 1992; a 145 × 145 pixel scene was cropped and labeled, and it served as an early benchmark for hyperspectral image classification. The dataset has a spatial resolution of approximately 20 m and 220 spectral bands, of which 20 are noisy bands affected by water absorption, so 200 bands are used in practice for training. The Indian Pines dataset contains 16 ground object categories: Alfalfa, Corn-notill, Corn-mintill, Corn, Grass-pasture, Grass-trees, Grass-pasture-mowed, Hay-windrowed, Oats, Soybean-notill, Soybean-mintill, Soybean-clean, Wheat, Woods, Buildings-Grass-Trees-Drives, and Stone-Steel-Towers.

Salinas: This dataset was captured by the AVIRIS sensor over the Salinas Valley, California. The image is 512 × 217 pixels, with a spatial resolution of 3.7 m and 224 spectral bands. As with the Indian Pines (IP) dataset, 20 water absorption bands are discarded, leaving 204 bands for training. The Salinas (SA) dataset has 16 ground object categories: Brocoli_green_weeds_1, Brocoli_green_weeds_2, Fallow, Fallow_rough_plow, Fallow_smooth, Stubble, Celery, Grapes_untrained, Soil_vinyard_develop, Corn_senesced_green_weeds, Lettuce_romaine_4wk, Lettuce_romaine_5wk, Lettuce_romaine_6wk, Lettuce_romaine_7wk, Vinyard_untrained, and Vinyard_vertical_trellis.

Pavia University: In 2003, images were acquired by the German airborne Reflective Optics System Imaging Spectrometer (ROSIS) during a flight over the city of Pavia in northern Italy. The Pavia University and Pavia Center datasets were both acquired during flights over Pavia, but the two datasets are not identical. The Pavia University (PU) dataset has a size of 610 × 340 pixels and a spatial resolution of 1.3 m, and 103 spectral bands are used for training. The PU dataset contains 9 ground object categories: Asphalt, Meadows, Gravel, Trees, Painted metal sheets, Bare Soil, Bitumen, Self-Blocking Bricks, and Shadows.

Mississippi Gulfport: This dataset was collected in November 2010 at the University of Southern Mississippi Gulf Park Campus. The original Mississippi Gulfport dataset contains 325 × 337 pixels and has 72 bands. However, due to noise and the presence of an invalid area in the lower right corner of the original image, the resulting cropped image size is 325 × 220, with 64 remaining bands. The Mississippi Gulfport dataset has 11 land-cover categories, including Trees, Grass ground surface, Mixed ground surface, Dirt and sand, Road, Water, Buildings, Shadow of buildings, Sidewalk, Yellow curb, and Cloth panels.

HyRANK dataset: This dataset was acquired by the Hyperion sensor and has a spatial resolution of 30 m. The two hyperspectral images used for training are Dioni and Loukia. Both have 176 spectral bands and 14 ground object categories, but their sizes differ: the Dioni image is 250 × 1376 pixels and the Loukia image is 249 × 945 pixels. The categories are Dense urban fabric, Mineral extraction site, Non-irrigated arable land, Fruit trees, Olive groves, Broad-leaved forest, Coniferous forest, Mixed forest, Dense sclerophyllous vegetation, Sparse sclerophyllous vegetation, Sparsely vegetated areas, Rocks and sand, Water, and Coastal water.

Berlin: This dataset was acquired by the HyMap sensor over the city of Berlin. Its spectral range is 0.4 to 2.5 μm, its image size is 300 × 300 pixels, and it has 114 bands. The spatial resolution is 3.5 m, and there are 5 categories: Vegetation, Built-up, Impervious, Soil, and Water.

Washington DC Mall: An aerial HSI acquired by the HYDICE sensor over the National Mall in Washington, DC. Its spectral range is 0.4 to 2.4 μm, its image size is 1208 × 307 pixels, and it has 191 bands. The spatial resolution is 1.5 m, and there are 7 categories: Road, Grass, Water, Trail, Trees, Shadow, and Roofs.

Quebec City: This dataset was collected over Quebec City with a Hyper-Cam LWIR sensor. Its spectral range is 7.8 to 12.5 μm, its image size is 795 × 564 pixels, and it has 84 bands. The spatial resolution is 1 m, and there are 6 categories: Road, Gray roof, Trees, Vegetation, Blue roof, and Concrete roof.

Pavia Center: This dataset was acquired in the same way as Pavia University, but the two differ in image size, number of bands, and category names. The Pavia Center image is 1096 × 715 pixels, although the experiments in Wang's paper use a 481 × 291 subset. The dataset has 102 bands, and its categories are Water, Trees, Asphalt, Self-Blocking Bricks, Bitumen, Tiles, Shadows, Meadows, and Bare Soil. The detailed differences between the two datasets can be found on the Pavia Centre and University page linked in Table 3.

KSC dataset: This dataset was acquired over the Kennedy Space Center, Florida, USA, in 1996, also with the AVIRIS sensor; a 512 × 614 pixel section was cropped and labeled. The spatial resolution of the KSC dataset is 18 m. Because of water absorption and low signal-to-noise ratio bands, 176 bands are used in practice for training. The KSC dataset contains 13 ground object categories: Scrub, Willow swamp, Cabbage palm hammock, Cabbage palm/oak hammock, Slash pine, Oak/broadleaf hammock, Hardwood swamp, Graminoid marsh, Spartina marsh, Cattail marsh, Salt marsh, Mud flats, and Water.

Botswana: This dataset was acquired in 2001 by the Hyperion sensor on NASA's EO-1 satellite over the Okavango Delta, Botswana. Its size is 1476 × 256 pixels, its spatial resolution is about 30 m, and it has 242 bands; because many of these bands are noisy, 145 bands are used in practice for training. There are 14 classes: Water, Hippo grass, Floodplain grasses 1, Floodplain grasses 2, Reeds, Riparian, Firescar, Island interior, Acacia woodlands, Acacia shrublands, Acacia grasslands, Short mopane, Mixed mopane, and Exposed soils.

WHU-Hi-LongKou: This dataset was collected in 2018 in Longkou Town, Hubei Province, China, with a hyperspectral imaging sensor on an unmanned aerial vehicle (UAV) platform. The study area is an agricultural scene. The image is 550 × 400 pixels with a spatial resolution of 0.463 m. The WHU-Hi-LongKou dataset has 270 bands and 9 categories: Corn, Cotton, Sesame, Broad-leaf soybean, Narrow-leaf soybean, Rice, Water, Roads and houses, and Mixed weed.
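Removing noisy water-absorption bands, as described above for Indian Pines, Salinas, KSC, and Botswana, and flattening the labelled pixels into a sample matrix are simple indexing steps. The sketch below assumes the band list commonly distributed with Indian Pines (1-based bands 104-108, 150-163, and 220) and the usual convention that label 0 marks unlabelled pixels; both should be checked against the source of the data actually used. The names `INDIAN_PINES_WATER_BANDS`, `drop_bands`, and `to_pixel_matrix` are hypothetical.

```python
import numpy as np

# Assumed 1-based indices of the 20 water-absorption bands usually removed
# from the 220-band Indian Pines cube: 104-108, 150-163 and 220.
INDIAN_PINES_WATER_BANDS = list(range(104, 109)) + list(range(150, 164)) + [220]

def drop_bands(cube, bands_1based):
    """Return a copy of an (H, W, B) cube without the listed 1-based bands."""
    drop = np.asarray(bands_1based) - 1                  # convert to 0-based indices
    keep = np.setdiff1d(np.arange(cube.shape[2]), drop)  # sorted indices of retained bands
    return cube[:, :, keep]

def to_pixel_matrix(cube, labels):
    """Flatten to (n_labelled_pixels, bands), assuming label 0 means unlabelled."""
    X = cube.reshape(-1, cube.shape[2])
    y = labels.ravel()
    mask = y > 0
    return X[mask], y[mask]
```

Applied to a 145 × 145 × 220 Indian Pines cube, `drop_bands` leaves the 200 bands typically used for training.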

3.2. Statistics

Table 3 summarizes the datasets used in the hyperspectral classification studies reviewed, their parameters, and links to them.

Table 3. The HSI datasets description and their sources.

4. HSI dimensionality reduction methods

Although HSIs offer a wealth of spectral information, their large number of spectral bands introduces redundancy and increases computational complexity, which can undermine classification accuracy. Dimensionality Reduction (DR) methods address this problem by removing redundancy from HSIs, thereby improving classification performance. Researchers have exploited DR in many HSI applications: in winemaking, it helps predict the sugar content of grape berries (Silva and Melo-Pinto Citation2021; Silva, Gramaxo Freitas, and Melo-Pinto Citation2023); in medical diagnostics, it assists in identifying malignant tumors (Lazcano et al. Citation2017; Zheludev et al. Citation2015); and in agriculture, it plays a key role in classifying vegetation and crops (Hidalgo, David, and Caicedo Bravo Citation2021).

Dimensionality reduction, often a preliminary step in processing high-dimensional feature data, comprises two primary techniques: feature extraction (Abd Citation2013; Dalal, Cai, et al. Citation2023; Lu et al. Citation2007) and feature selection (Li et al. Citation2011; Stavrakoudis et al. Citation2010). Feature extraction mathematically transforms hyperspectral data into a new, lower-dimensional feature space, retaining the information needed for the target task and discarding superfluous information. LDA, Principal Component Analysis (PCA), and Singular Value Decomposition (SVD) are prominent examples. In deep learning, the Stacked Autoencoder (SAE) is frequently used for HSI feature extraction, although having to process many features simultaneously can increase its complexity and limit its effectiveness. To address this, Zabalza et al. (Citation2016) introduced segmented SAEs, which split the original features into smaller segments that are processed independently by separate SAEs. This strategy reduces complexity and improves extraction and classification efficiency, but its accuracy degrades for classes with very few samples.
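As a concrete example of feature extraction, the sketch below applies PCA to an HSI cube by treating each pixel as one sample and each band as one variable. It is a minimal NumPy version under our own assumptions (the function name `pca_reduce`, and an SVD of the centred pixel matrix); for very large scenes, computing the eigenvectors of the B × B band covariance matrix is usually cheaper.

```python
import numpy as np

def pca_reduce(cube, n_components):
    """Project an (H, W, B) hyperspectral cube onto its first principal components.

    Returns (reduced cube of shape (H, W, n_components),
             explained-variance ratios of the kept components).
    """
    H, W, B = cube.shape
    X = cube.reshape(-1, B).astype(np.float64)  # one row per pixel (a copy)
    X -= X.mean(axis=0)                         # centre each band
    # Economy SVD: rows of Vt are the principal axes in band space
    _, s, Vt = np.linalg.svd(X, full_matrices=False)
    scores = X @ Vt[:n_components].T            # pixel coordinates on the kept axes
    explained = (s ** 2) / (s ** 2).sum()       # variance fraction per component
    return scores.reshape(H, W, n_components), explained[:n_components]
```

The returned ratios show how much variance each component retains, which is a common guide for choosing `n_components`.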

Dalal et al. (Citation2023) further introduced three dimensionality reduction techniques designed to refine HSI data analysis. The first, Compression and Reinforced Variation (CRV) (Dalal et al. Citation2022), is a feature selection strategy that isolates the most critical bands of the HSI spectrum. Building on it, Improving Distribution Analysis (IDA) (Dalal, Al-qaness, et al. Citation2023) is a feature extraction method that further distills the data to its most informative content, enhancing classification accuracy. The third, Enhancing Transformation Reduction (ETR) (Dalal, Cai, et al. Citation2023), integrates the strengths of CRV and IDA. It surpasses both in efficiency and accuracy, enhancing and selecting the most informative features and reducing the impact of noisy, mixed, and outlier pixels while retaining key features. Collectively, CRV, IDA, and ETR achieved higher accuracy and performance than the other established techniques they were compared with.

In HSI analysis, feature extraction and feature selection offer two distinct pathways to dimensionality reduction. Feature selection aims to identify the most pertinent subset of the original features, improving classification performance by eliminating irrelevant features and noise. Feature selection methods fall into three primary types: filters, which rank features by a statistic computed independently of any classifier; wrappers, which evaluate candidate subsets with the classifier itself; and embedded methods, which perform selection as part of model training.

Elmaizi et al. (2019) proposed a Dimensionality Reduction Method (DRM) based on information-gain filter selection. The approach selects the bands with the highest information content and discards those that are irrelevant or noisy. Although comparative studies on two datasets showed improved classification performance and reduced computational cost, the best results were obtained only for the specific image datasets and classifiers used in the study, which suggests limited generalizability. CRV is another feature selection method for HSIs; it reduces the dimension of the HSI and normalizes its distribution (Dalal et al. 2022). CRV mitigates noisy values and mixed pixels without discarding outliers. It first applies a dilation statistical procedure to narrow the gap between the smallest and largest values and then, based on the result, selects only the information-rich bands that aid HSI classification. In the reported experiments, CRV required less time to compute and select the crucial bands than ten other DR methods.
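The information-gain filter idea can be sketched as follows. This is a generic filter, not Elmaizi et al.'s exact DRM: it assumes NumPy, discretizes each band with equal-width bins (the bin count of 16 is an arbitrary assumption), scores each band by IG(Y; band) = H(Y) − H(Y | band), and keeps the top-k bands. The function names are hypothetical.

```python
import numpy as np

def entropy(labels: np.ndarray) -> float:
    """Shannon entropy (bits) of a 1-D array of discrete labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def information_gain(band: np.ndarray, y: np.ndarray, n_bins: int = 16) -> float:
    """IG(Y; band) = H(Y) - H(Y | binned band), using equal-width bins."""
    edges = np.linspace(band.min(), band.max(), n_bins + 1)[1:-1]
    bins = np.digitize(band, edges)  # discretize the reflectance values
    h_cond = 0.0
    for b in np.unique(bins):
        mask = bins == b
        h_cond += mask.mean() * entropy(y[mask])  # weight by bin frequency
    return entropy(y) - h_cond

def select_bands(X: np.ndarray, y: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k bands with the highest information gain.
    X has shape (n_pixels, n_bands); y holds integer class labels."""
    scores = np.array([information_gain(X[:, j], y) for j in range(X.shape[1])])
    return np.argsort(scores)[::-1][:k]

rng = np.random.default_rng(1)
y = rng.integers(0, 3, size=600)  # synthetic labels for 3 classes
X = rng.normal(size=(600, 20))    # 20 pure-noise bands...
X[:, 7] += 3 * y                  # ...except band 7, which tracks the class
print(select_bands(X, y, 3))      # band 7 should be ranked first
```

Because the score is computed without any classifier, this is a filter in the sense described above; a wrapper would instead retrain a classifier on each candidate subset.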

Medical hyperspectral imaging also benefits from these advances. Zhang et al. (2023) developed a band selection method for medical HSIs termed Data Gravitation and Weak Correlation Ranking (DGWCR). The method clusters informative bands and removes noisy ones, yielding a band subset with minimal redundancy and maximal discrimination. Although it outperforms other methods, DGWCR's ability to determine the optimal number of bands requires further refinement.

The challenge of handling high-dimensional HSI data makes dimensionality reduction critical for improving classification accuracy. Researchers have evaluated a range of dimensionality reduction techniques (Demarchi et al. 2014; Khodr and Younes 2011), and the past decade has seen a proliferation of methods aimed at improving overall performance in the HSI domain, each with its own strengths and limitations. Future research will likely focus on addressing these limitations to produce more refined and efficient data processing techniques. Table 4 compares the performance of different dimensionality reduction methods on remote sensing datasets.

Table 4. Examples of dimensionality reduction literature.

5. HSIs classification using traditional machine learning models

The high dimensionality of hyperspectral remote sensing data is considered a major challenge in image classification. Continuous advances in Artificial Intelligence (AI) have greatly alleviated this problem, because AI methods can handle large volumes of data. Machine learning, which includes deep learning, is the main branch of AI: it uses data to train models that are then used to make predictions. Machine learning plays an important role in computer vision (Sánchez et al. 2023; Xu and Sun 2017), speech recognition (Liu et al. 2018), medicine (Gao et al. 2023; Urbanos et al. 2021), and land use/land cover (Boori et al. 2018; Damodaran and Rao Nidamanuri 2014; Li et al. 2016; Luo et al. 2016). Traditional machine learning models were among the early techniques for hyperspectral image classification and are primarily divided into supervised, unsupervised, semi-supervised, and transfer learning. For a long time, traditional machine learning models played a crucial role in LULC classification, extracting discriminative features from HSIs and using them to classify LULC. This article summarizes and examines the traditional machine learning methods employed in the LULC classification literature, including their advantages and disadvantages. Because of the limitations of traditional machine learning methods, relatively little research has applied them to LULC; the article therefore also introduces HSI classification with traditional machine learning in other application areas to supplement the existing body of research.

5.1. Supervised learning

Recent advances in supervised machine learning have significantly enhanced LULC classification. Supervised learning, which involves training a model on labeled data to make future predictions, primarily addresses classification and regression tasks. Prominent algorithms in this domain include KNN (Bo, Lu, and Wang 2018; Huang et al. 2016), decision trees (Pal and Mather 2003; Velásquez et al. 2017), random forest (Abe, Olugbara, and Marwala 2014; Clark 2017; Huang and Zhu 2013; Li et al. 2022), and SVM (Petropoulos et al. 2015; Petropoulos, Kalaitzidis, and Prasad Vadrevu 2012; Pullanagari et al. 2017; Sahithi, Iyyanki, and Giridhar 2022; Suresh and Lal 2020).
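As an illustration of the simplest of these algorithms, the sketch below classifies each test spectrum by majority vote among its k nearest labeled spectra (Euclidean distance). It assumes NumPy, integer class labels starting at 0, and pixels already flattened to `(n_pixels, n_bands)`; `knn_predict` and the toy spectra are illustrative.

```python
import numpy as np

def knn_predict(X_train: np.ndarray, y_train: np.ndarray,
                X_test: np.ndarray, k: int = 3) -> np.ndarray:
    """Majority vote among the k nearest training spectra (Euclidean)."""
    # Pairwise squared distances, shape (n_test, n_train).
    d2 = ((X_test[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=-1)
    nn = np.argsort(d2, axis=1)[:, :k]  # indices of the k nearest neighbors
    votes = y_train[nn]                 # their labels, shape (n_test, k)
    return np.array([np.bincount(v).argmax() for v in votes])

X_train = np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]], dtype=float)
y_train = np.array([0, 0, 0, 1, 1, 1])
print(knn_predict(X_train, y_train, np.array([[0.5, 0.5], [5.5, 5.5]])))  # [0 1]
```

The full distance matrix is fine for small examples; for whole scenes, a spatial index or batched computation would be needed.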

Petropoulos, Arvanitis, and Sigrimis (2012) applied SVM and Artificial Neural Network (ANN) classifiers to Hyperion HSIs for LULC mapping in a Mediterranean setting and found that the two achieved comparable classification accuracies. SVM, known for effective class separation and low generalization error, can outperform ANN under certain conditions. However, both SVM and ANN are limited in subpixel-level classification, particularly in images with coarse spatial resolution.

Advances in classifier design have also improved HSI classification. The Rotation-based Object-oriented classification method (RoBOO) proposed by Shah et al. (2016), which combines SVM and KNN, increases classifier diversity and accuracy. While RoBOO performs better than traditional methods, it incurs higher computational costs.

Superpixel segmentation is widely used in HSI classification, but it treats each superpixel as homogeneous, an assumption that fails when a superpixel contains pixels from different categories. Tu et al. (2018) introduced a KNN-based superpixel representation approach that combines superpixel segmentation with domain transform recursive filtering to extract spectral-spatial features. The method achieves high classification accuracy even with limited training samples, but its effectiveness depends on segmentation quality and it can be computationally intensive. Table 5 compares the performance of different supervised learning methods on remote sensing datasets.
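The homogeneity assumption can be seen in a common, generic post-processing step (not Tu et al.'s method): each pixel's predicted class is replaced by the majority class of its superpixel. The sketch assumes NumPy, integer class maps, and a superpixel id map produced by some segmentation algorithm; `superpixel_vote` is a hypothetical name.

```python
import numpy as np

def superpixel_vote(pred: np.ndarray, segments: np.ndarray) -> np.ndarray:
    """Replace each pixel's predicted class with the majority class of its
    superpixel. pred and segments are integer maps of the same shape."""
    out = pred.copy()
    for s in np.unique(segments):
        mask = segments == s
        out[mask] = np.bincount(pred[mask]).argmax()  # majority label
    return out

pred = np.array([[0, 0, 1],
                 [0, 1, 1]])
segments = np.array([[0, 0, 0],
                     [1, 1, 1]])
print(superpixel_vote(pred, segments))  # [[0 0 0] [1 1 1]]
```

If a superpixel straddles a class boundary, this vote overwrites the minority class, which is exactly the failure mode noted above.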

Table 5. Examples of supervised learning literature.

Dahiya et al. (2021) analyzed the performance of three supervised classifiers: Random Forest (RF), a neural network, and a minimum distance classifier. They studied Haryana and parts of Uttar Pradesh using Hyperion EO-1 data downloaded from the United States Geological Survey's EarthExplorer platform. The neural network achieved higher classification accuracy than the random forest and the minimum distance classifier, which the authors attribute to the self-learning capability of neural networks, demonstrating their effectiveness for LULC change mapping with hyperspectral images. However, a well-known drawback of neural networks is that the learning process between layers cannot be observed, which makes their outputs less explainable and can reduce the credibility and acceptability of the results.
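Of the three classifiers, the minimum distance classifier is the easiest to state: each class is represented by its mean training spectrum, and a pixel is assigned to the class whose mean is closest. The sketch below assumes NumPy and Euclidean distance (other variants use, for example, Mahalanobis distance); the function names are illustrative.

```python
import numpy as np

def fit_class_means(X: np.ndarray, y: np.ndarray):
    """Mean spectrum of each class; X is (n_pixels, n_bands)."""
    classes = np.unique(y)
    means = np.stack([X[y == c].mean(axis=0) for c in classes])
    return classes, means

def min_distance_predict(X: np.ndarray, classes: np.ndarray,
                         means: np.ndarray) -> np.ndarray:
    """Assign each pixel to the class with the nearest mean (Euclidean)."""
    d2 = ((X[:, None, :] - means[None, :, :]) ** 2).sum(axis=-1)
    return classes[d2.argmin(axis=1)]

X = np.array([[0, 0], [0, 1], [10, 10], [10, 11]], dtype=float)
y = np.array([0, 0, 1, 1])
classes, means = fit_class_means(X, y)
print(min_distance_predict(np.array([[1.0, 1.0], [9.0, 9.0]]), classes, means))  # [0 1]
```

Its only parameters are the class means, which makes it fast and transparent but unable to model classes with complex spectral distributions.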

Hyperspectral data provide valuable information in many fields, and how to exploit their spectral and spatial information effectively has long concerned researchers. Tong and Zhang (2022) proposed the Spectral-Spatial Cascaded Multilayer Random Forest (SSCMRF) method for classifying tree species in hyperspectral images. The method uses two classification stages to better exploit and integrate spatial information within superpixels and patches, and incorporating this spectral-spatial information significantly improves classification performance. To validate the method, the experiments used hyperspectral data from the Jiepai Forest Farm area of the Gaofeng State-owned Forest Farm in Guangxi. The study compared the proposed method against several baselines, including Extended Attribute Profiles (EAPs), Invariant Attribute Profiles (IAPs), SuperPCA, multiscale SuperPCA (MSuperPCA), 3D-CNN, and the Densely Connected Deep RF (DCDRF). The proposed method not only achieved the best classification performance but also converged relatively quickly. However, on the Pavia University (PU) dataset, its overall accuracy and Kappa coefficient were lower than those of the EAP method, suggesting room for further improvement.

Conventional classification methods assume that training and testing data share the same categories and therefore neglect unknown categories. Open Set Classification (OSC) addresses this issue by allowing samples of unknown categories to be rejected. However, in many current OSC methods the feature spaces of known and unknown categories tend to overlap and retain redundant information. To improve OSC accuracy, Li et al. (2023) introduced a supervised contrastive learning-based open-set hyperspectral classification framework (OSC-SCL). The framework applies supervised contrastive learning separately to spectral and spatial feature learning, which pulls samples of the same class together and separates known from unknown categories. Tested on the PU and Houston datasets, it outperformed advanced methods such as Classification-Reconstruction Learning for Open-Set Recognition (CROSR), Multitask Deep Learning Method for the Open World (MDL4OW), and Spectral-Spatial Latent Reconstruction (SSLR). However, its training time is relatively long.
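The rejection step that distinguishes open-set from closed-set classification can be sketched with a simple distance threshold: a sample is assigned to its nearest class prototype unless even that prototype is too far away, in which case it is labeled unknown. This is a generic illustration, not the OSC-SCL framework; it assumes NumPy, that prototypes (for example, class means of learned features) are already available, and that the threshold is tuned on validation data. All names are hypothetical.

```python
import numpy as np

UNKNOWN = -1  # hypothetical label for rejected samples

def open_set_predict(features: np.ndarray, prototypes: np.ndarray,
                     threshold: float) -> np.ndarray:
    """Nearest-prototype class, or UNKNOWN when the nearest prototype is
    farther than `threshold` (Euclidean distance in feature space)."""
    d = np.linalg.norm(features[:, None, :] - prototypes[None, :, :], axis=-1)
    nearest = d.argmin(axis=1)
    return np.where(d.min(axis=1) <= threshold, nearest, UNKNOWN)

prototypes = np.array([[0.0, 0.0], [5.0, 5.0]])
features = np.array([[0.1, 0.0], [5.0, 4.9], [20.0, 20.0]])
print(open_set_predict(features, prototypes, threshold=1.0))  # [ 0  1 -1]
```

Such a threshold only works well if known and unknown samples are well separated in feature space, which is what contrastive feature learning aims to achieve.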

In summary, while supervised learning has markedly advanced HSI classification, challenges such as computational burden, dependence on segmentation quality, and the need for distinct spectral features continue to guide ongoing research in this field.

5.2. Unsupervised learning

Unsupervised learning learns from unlabeled data to discover data distributions or relationships and focuses primarily on clustering and dimensionality reduction. Key algorithms include K-means clustering (Haut et al. 2017; Mancini, Frontoni, and Zingaretti 2016; Ranjan et al. 2017), PCA (Jiang et al. 2018; Ye et al. 2020), Gaussian Mixture Model (GMM) (Jiang et al. 2018; Ye et al. 2020), and self-supervised learning (Qin et al. 2023; Yang et al. 2022); self-supervised learning in particular has become a popular and widely applied framework. Unsupervised learning is especially valuable when labeled data are difficult to acquire. A notable application of GMM is the nonlinear feature selection algorithm of Fauvel, Zullo, and Ferraty (2014), which selects features by maximizing the posterior probability. Tested on three hyperspectral datasets, this method achieved better feature selection and classification accuracy than SVM. However, because it relies on statistical analysis, further research is needed to identify the extracted features precisely.
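K-means, the first algorithm listed above, can be sketched in a few lines of NumPy: pixels are alternately assigned to their nearest cluster center and centers are recomputed as cluster means, until the centers stop moving. Random initialization, the iteration cap, and the name `kmeans` are assumptions of this sketch; practical implementations typically use smarter seeding (e.g. k-means++) and multiple restarts.

```python
import numpy as np

def kmeans(X: np.ndarray, k: int, n_iter: int = 50, seed: int = 0):
    """Lloyd's K-means on pixel spectra X of shape (n_pixels, n_bands)."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        # Assignment step: nearest center for every pixel.
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        labels = d2.argmin(axis=1)
        # Update step: mean of each cluster (keep the old center if empty).
        new_centers = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                                else centers[j] for j in range(k)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

rng = np.random.default_rng(2)
X = np.vstack([rng.normal(0, 0.3, (50, 5)), rng.normal(10, 0.3, (50, 5))])
labels, centers = kmeans(X, 2)
print(np.bincount(labels))  # two clusters of 50 pixels each
```

The resulting cluster indices carry no semantic meaning; mapping them to LULC classes still requires reference data or expert interpretation.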

To preserve boundaries in HSIs, Kang et al. (2017) introduced PCA-EPF, an edge-preserving feature method that fuses the outputs of Edge-Preserving Filters (EPFs) obtained under different parameter settings to enhance class separability. Tested on three datasets, PCA-EPF achieved higher classification accuracy than standard EPF methods, but it requires manual selection of EPF parameters, which limits its practical application. To address insufficient training data in HSI classification, Xie et al. (2018) developed ME-KSVD, a label mutual exclusion dictionary learning algorithm within a sparse representation framework. This extension of the K-SVD algorithm (itself a generalization of K-means) considers intra-class consistency and inter-class mutual exclusion; it performs well across diverse sample sizes and achieves higher classification accuracy than advanced algorithms. However, its complexity and its relation to low-rank representations remain open questions. To improve accuracy and generalization in HSI classification, Chen et al. (2021) proposed PLG-KELM, which combines PCA, Local Binary Pattern (LBP), Grey Wolf Optimization (GWO), and the Kernel Extreme Learning Machine (KELM). PLG-KELM showed superior classification performance and generalization, especially with small training sets, and outperformed BLS, SVM, PCA-CNN, CAE-CNN, and KELM on various datasets. Its limitation is lower operational efficiency.
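The KELM classifier at the end of the PLG-KELM pipeline has a closed-form solution in its common formulation: with kernel matrix Ω on the training samples, one-hot targets T, and regularization constant C, the output weights are β = (I/C + Ω)⁻¹T, and a new sample x is scored by K(x, X_train)β. The sketch below assumes NumPy and an RBF kernel; the PCA, LBP, and GWO stages are omitted, and the values of `C` and `gamma` are arbitrary (in PLG-KELM such parameters are tuned by GWO).

```python
import numpy as np

def rbf_kernel(A: np.ndarray, B: np.ndarray, gamma: float) -> np.ndarray:
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-gamma * d2)

def kelm_fit(X: np.ndarray, y: np.ndarray, n_classes: int,
             C: float = 100.0, gamma: float = 0.5) -> np.ndarray:
    """Output weights beta = (I/C + Omega)^-1 T, with Omega = K(X, X)."""
    T = np.eye(n_classes)[y]  # one-hot targets
    omega = rbf_kernel(X, X, gamma)
    return np.linalg.solve(np.eye(len(X)) / C + omega, T)

def kelm_predict(X_new: np.ndarray, X_train: np.ndarray, beta: np.ndarray,
                 gamma: float = 0.5) -> np.ndarray:
    return (rbf_kernel(X_new, X_train, gamma) @ beta).argmax(axis=1)

X_train = np.array([[0, 0], [0, 1], [5, 5], [5, 6]], dtype=float)
y_train = np.array([0, 0, 1, 1])
beta = kelm_fit(X_train, y_train, n_classes=2)
print(kelm_predict(np.array([[0.0, 0.5], [5.0, 5.5]]), X_train, beta))  # [0 1]
```

Training reduces to one linear solve of size n_train, which is fast for small sample sets but scales cubically with the number of training pixels.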

Self-supervised learning has become a popular framework that is often grouped with unsupervised learning. It trains only on unlabeled samples, but it does not fall entirely within the unsupervised category because it generates supervisory signals from the data itself. Its main advantage is the ability to leverage large amounts of unlabeled data, which are often more accessible than labeled data. Self-supervised methods also remove the reliance on manual labeling, which mitigates the impact of label errors to some extent. Overall, self-supervised learning can approach the performance of supervised learning, giving it a significant advantage in many practical problems. Self-supervised learning methods are typically categorized as generative, contrastive, or adversarial.

Hou et al. (2021) drew inspiration from supervised learning and proposed a hyperspectral imagery classification model based on Self-supervised Contrastive Learning (SSCL). The main idea is to use unlabeled samples to address the shortage of labeled samples in hyperspectral images. The algorithm has two stages. In the first stage, self-supervised pre-training combines data augmentation with a large number of unlabeled samples to construct positive and negative sample pairs, minimizing the distance between positive pairs and maximizing the distance between negative pairs. In the second stage, the pre-trained model parameters are retained, features are extracted from the hyperspectral images for classification, and a small number of labeled samples are used to fine-tune the features. Experiments on the Salinas (SA), Pavia University, and Botswana datasets showed that the proposed method achieved higher overall classification accuracy than PCA-EPFs, extended morphological profile SVM (EMP-SVM), 2D-CNNs, Multi-Layer Perceptron CNNs (MLP-CNNs), ResNet50, and shape-adaptive neighborhood information-based semi-supervised learning (SANI-SSL), with overall accuracies of 99.7%, 97.21%, and 97.96%, respectively. The method also showed greater advantages when fewer labeled samples were available. However, it had the longest testing time, particularly on the SA dataset (7408.9 seconds), which can be attributed to the large number of unlabeled samples used for self-supervised learning.
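The "pull positives together, push negatives apart" objective of the pre-training stage is commonly implemented as an InfoNCE-style loss. The sketch below is a generic, one-directional InfoNCE in NumPy, not necessarily the exact loss of SSCL: row i of `z1` and row i of `z2` are embeddings of two augmented views of the same sample (a positive pair), and every other row of `z2` serves as a negative. The temperature value is an assumption.

```python
import numpy as np

def info_nce_loss(z1: np.ndarray, z2: np.ndarray, temperature: float = 0.1) -> float:
    """Cross-entropy of identifying each sample's positive among all rows."""
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)  # unit-normalize
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature                      # cosine similarities
    logits -= logits.max(axis=1, keepdims=True)           # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))             # positives on diagonal

rng = np.random.default_rng(3)
z = rng.normal(size=(8, 16))
aligned = info_nce_loss(z, z + 0.01 * rng.normal(size=z.shape))  # matched views
shuffled = info_nce_loss(z, z[::-1])                             # mismatched pairs
print(aligned < shuffled)  # True
```

Minimizing this loss makes embeddings of two views of the same pixel or patch more similar than embeddings of different ones, which is what later fine-tuning builds on.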

Supervised learning methods are widely used for hyperspectral classification because of their strong feature extraction capabilities when sufficient labeled samples are available. However, the high cost of acquiring labeled samples hinders practical applications. To enable hyperspectral classification with fewer labeled samples, Zhao et al. (2022) introduced a contrastive self-supervised learning algorithm. It uses dedicated augmentation modules to generate sample pairs, extracts features with a Siamese network, and then fine-tunes the model parameters with labeled samples to improve classification performance. Tested on the PU and Houston 2013 datasets with a limited number of labeled samples, it outperformed the other methods. Notably, the algorithm still needs a small number of labeled samples to reach optimal performance, and its network structure may have to remain relatively simple to avoid overfitting when labeled samples are scarce.

Deep learning requires sufficient labeled samples to excel in hyperspectral classification. Liu et al. (2023) proposed a Spectrum Masking-based Self-supervised Learning (SSLSM) method with two stages: self-supervised pre-training and fine-tuning. The first stage uses spectral masking as an auxiliary task, while the second stage uses a cascaded encoder and decoder to extract deep semantic features. Experiments were conducted on the Indian Pines, Pavia University, and Yancheng Wetlands datasets, with training sets comprising 5%, 0.8%, and 4% of the total samples, respectively. Nine methods (KNN, RF, HybridSN, PCA-ViT, SSGPN, 3-D Autoencoder and Siamese network (3DAES), Deep Few-shot Learning (DFSL), Deep MultiView Learning (DMVL), and unsupervised Spectrum Motion Feature Learning (SMF-UL)) served as baselines. The proposed method achieved the best classification performance on all three datasets, with accuracies of 96.52 ± 0.40%, 97.03 ± 0.52%, and 96.70 ± 0.24%, respectively. However, it also had a relatively high time cost of 375.40 s, 847.41 s, and 124.62 s, respectively. Improving classification accuracy increases the number of model parameters and hence the training time; nevertheless, owing to the two-stage strategy, the training time remains acceptable.
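A spectral masking pretext task of this kind can be prepared as in the sketch below: a random subset of bands is hidden in each pixel's spectrum, and a reconstruction loss is computed only on the hidden bands. This is a generic masked-band setup, not SSLSM itself; per-pixel random masking, zero-filling, the 30% mask ratio, and the function names are assumptions, and the encoder/decoder that would produce the reconstruction is not shown.

```python
import numpy as np

def mask_spectra(X: np.ndarray, mask_ratio: float = 0.3, seed: int = 0):
    """Zero out a random subset of bands in every spectrum.
    Returns the masked input and a boolean mask (True = band was hidden)."""
    rng = np.random.default_rng(seed)
    n, b = X.shape
    n_mask = int(round(mask_ratio * b))
    mask = np.zeros((n, b), dtype=bool)
    for i in range(n):
        mask[i, rng.choice(b, size=n_mask, replace=False)] = True
    return np.where(mask, 0.0, X), mask

def masked_mse(recon: np.ndarray, X: np.ndarray, mask: np.ndarray) -> float:
    """Reconstruction error measured only on the hidden bands."""
    return float(((recon - X) ** 2)[mask].mean())

X = np.random.default_rng(4).normal(size=(4, 10))  # 4 synthetic spectra, 10 bands
X_masked, mask = mask_spectra(X, mask_ratio=0.3)
print(mask.sum(axis=1))  # 3 hidden bands per spectrum
```

During pre-training, a network receives `X_masked` and is optimized to minimize `masked_mse` against the original `X`, forcing it to learn inter-band dependencies without labels.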

While many supervised methods classify well, their effectiveness can suffer in practical scenarios where labeled samples are limited. Liu et al. (2023) proposed PSSA, an unsupervised spatial-spectral hyperspectral classification method for such scenarios. PSSA integrates Entropy Rate Superpixel segmentation (ERS), PCA, and PCA-domain 2D Singular Spectrum Analysis (SSA) to improve feature extraction efficiency. The study evaluated three common hyperspectral images (IP, SalinasA, and SA) and five aerial hyperspectral datasets (SC-1, SC-2, SC-3, SC-4, and SC-5). Supervised methods (KNN, SVM) and unsupervised methods (Spectral Clustering (SC), Nyström Extension Clustering (NEC), and Anchor-based Graph Clustering (AGC)) served as benchmarks. PSSA achieved the best classification performance among the unsupervised methods. However, its overall performance fell short of the supervised methods, because unsupervised methods lack prior reference information. Nevertheless, unsupervised approaches align more closely with practical application requirements. Table 6 provides a performance comparison of different unsupervised learning methods on remote sensing datasets.

Table 6. Examples of unsupervised learning literature.

Overall, unsupervised learning in HSI classification has made significant strides, offering innovative solutions to challenges posed by unlabeled data and complex image characteristics. However, issues like algorithmic complexity and operational efficiency continue to drive research in this field.

5.3. Semi-supervised learning

Semi-supervised learning trains classifiers with a large number of unlabeled samples and a small number of labeled samples to address the shortage of labeled data. Its main applications are classification, regression, clustering, and dimensionality reduction. Common semi-supervised algorithms include generative models, self-training, and graph-based methods. Graph-based classification algorithms are highly valued in semi-supervised classification, but they may not fully represent the inherent spatial distribution of the data. Wang et al. (2014) introduced Spatial-Spectral Label Propagation (SS-LPSVM), a semi-supervised classification method for HSIs. Experiments on four hyperspectral datasets showed that SS-LPSVM achieved higher overall and per-class classification accuracy than benchmark algorithms. However, the method is sensitive to parameters related to its spatial and spectral graphs and its adaptive components, and its label allocation is relatively time-consuming.

Owing to their nonlinear mapping ability, deep learning methods have attracted continuous attention in HSI classification. However, because deep networks involve many parameters, their training takes a relatively long time. Yi et al. (2018) proposed an HSI classification model based on a semi-supervised broad learning system (SBLS). The method first obtains a spectral-spatial representation of the original HSI through hierarchical guided filtering. It then combines a class-probability structure with the broad learning model so that many unlabeled samples can be exploited alongside the limited labeled samples. Finally, the connection weights are computed by ridge regression approximation. Experiments on the Indian Pines, Salinas, and Botswana datasets indicated that, compared with deep learning-based methods and traditional classifiers, the proposed method achieved higher classification accuracy, consumed less time, and performed better overall. Its main drawbacks are sensitivity to its inputs and increased memory usage when too many nodes are set.
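The graph-based idea behind label propagation can be sketched with the closed-form "local and global consistency" scheme: build a Gaussian affinity graph over all samples, normalize it as S = D^(-1/2) W D^(-1/2), and spread the known labels with F = (I − αS)⁻¹Y. This is a generic illustration, not SS-LPSVM (which adds spatial graphs and an SVM); it assumes NumPy, spectral features only, and arbitrary values for `alpha` and `sigma`.

```python
import numpy as np

def label_propagation(X: np.ndarray, y: np.ndarray,
                      alpha: float = 0.99, sigma: float = 1.0) -> np.ndarray:
    """y[i] is a class index for labeled samples and -1 for unlabeled ones.
    Returns a predicted class for every sample."""
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    W = np.exp(-d2 / (2 * sigma ** 2))  # Gaussian affinities
    np.fill_diagonal(W, 0.0)            # no self-loops
    d_inv_sqrt = 1.0 / np.sqrt(W.sum(axis=1))
    S = W * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]  # D^-1/2 W D^-1/2
    n_classes = y.max() + 1
    Y = np.zeros((len(X), n_classes))
    labeled = y >= 0
    Y[labeled, y[labeled]] = 1.0        # one-hot rows for labeled samples
    F = np.linalg.solve(np.eye(len(X)) - alpha * S, Y)  # closed-form spreading
    return F.argmax(axis=1)

X = np.array([[0.0], [0.5], [1.0], [10.0], [10.5], [11.0]])
y = np.array([0, -1, -1, 1, -1, -1])    # one labeled sample per cluster
print(label_propagation(X, y))          # [0 0 0 1 1 1]
```

The dense n × n graph limits this form to small sample sets; superpixel or anchor graphs are common ways to scale it to full scenes.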

Cross-modal feature learning, that is, whether a limited amount of high-quality data can help tasks involving a large amount of low-quality data, has attracted significant attention in remote sensing, particularly because collecting hyperspectral data is relatively expensive. Traditional semi-supervised manifold alignment methods are limited in addressing such problems. Hong et al. (2019) introduced a semi-supervised cross-modal learning framework called Learnable Manifold Alignment (LeMA). The framework learns a joint graph structure directly from the data and then uses graph-based labels to capture the data distribution and identify more accurate decision boundaries. The method was tested on three datasets (University of Houston, Chikusei, and DFC2018) with two high-performance classifiers, Linear Support Vector Machine (LSVM) and Canonical Correlation Forest (CCF). Compared with several state-of-the-art methods, LeMA achieved superior classification performance. Despite this, LeMA is constrained by its linear modeling, and the study did not consider spatial information.

The Graph Convolutional Network (GCN) is a widely used semi-supervised method known for strong performance in small-sample settings. Xi et al. (2021) introduced a Semi-supervised Graph Prototype Network (SSGPN). Unlike a GCN, SSGPN incorporates a prototype layer with a distance-based cross-entropy loss function and a temporal entropy-based regularizer. This layer increases the inter-class distance and intra-class compactness of the embedded features, producing more representative prototypes for accurate recognition of diverse land cover categories. The network also uses graph normalization to speed up convergence. On the IP and PU benchmark datasets, the method outperformed other techniques. However, the study did not report the required training time, and the framework may not be the fastest to train among the alternatives.
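A distance-based cross-entropy over prototypes can be sketched as follows: each class prototype is the mean embedding of its labeled samples, class scores are negative squared distances to the prototypes, and the loss is the softmax cross-entropy of the true class. This is the generic prototypical-network form, assuming NumPy and class-mean prototypes; SSGPN's graph layers and its regularizer are not reproduced here.

```python
import numpy as np

def prototype_loss(emb: np.ndarray, y: np.ndarray, n_classes: int):
    """Softmax cross-entropy over negative squared distances to class-mean
    prototypes. emb is (n_samples, dim); every class must appear in y."""
    protos = np.stack([emb[y == c].mean(axis=0) for c in range(n_classes)])
    d2 = ((emb[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    logits = -d2                                     # closer means higher score
    logits -= logits.max(axis=1, keepdims=True)      # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-log_prob[np.arange(len(y)), y].mean()), protos

y = np.array([0, 0, 1, 1])
compact = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.0, 5.1]])
mixed = np.array([[0.0, 0.0], [5.0, 5.0], [0.1, 0.0], [5.0, 5.1]])
good, _ = prototype_loss(compact, y, 2)
bad, _ = prototype_loss(mixed, y, 2)
print(good < bad)  # True: compact, well-separated classes give a lower loss
```

Minimizing this loss directly rewards intra-class compactness and inter-class separation of the embeddings, the two properties attributed to the prototype layer above.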

The scarcity and high cost of labeled samples in hyperspectral images have long limited the performance of many deep learning methods. Semi-supervised and self-supervised algorithms can effectively address this problem. Song et al. (2022) combined self-supervised and semi-supervised methods in a self-supervised assisted Semi-supervised Residual Network (SSRNet) framework. SSRNet has two branches, one semi-supervised and one self-supervised. The semi-supervised branch introduces perturbations into the hyperspectral data through spectral feature shifts to improve performance. The self-supervised branch comprises masked band reconstruction and spectral order prediction, which help the network retain the discriminative features of hyperspectral data. Compared with many deep learning methods, the framework makes better use of unlabeled samples and thereby improves classification accuracy. The authors used the IP, PU, SA, and Houston 2013 datasets for experiments, with SVM, spectral-spatial LSTM (SSLSTM), Contextual Deep CNN (CDCNN), 3-Dimensional Convolutional Autoencoder (3DCAE), spectral-spatial residual network (SSRN), HybridSN, and Double-branch Multi-attention mechanism network (DBMA) as baselines. The proposed method achieved the highest classification accuracy on all four datasets. However, this accuracy stems mainly from exploiting many unlabeled samples, which also lengthens training.

To address the large number of spectral bands and the scarcity of labeled samples in hyperspectral classification, Sellami et al. (2023) proposed a method based on unsupervised band selection and a Semi-supervised Hypergraph Convolutional Network (SSHCN). Unsupervised band selection automatically selects relevant spectral features, while the semi-supervised hypergraph convolutional network preserves spectral-spatial features and handles high correlations effectively; together, they improve classification performance with fewer labeled samples. On the real IP and Houston datasets, the method outperformed other methods. However, some pixels in the classification map were misclassified, and its training time was relatively long compared with alternative methods. Table 7 provides examples of semi-supervised learning methods.

Table 7. Examples of semi-supervised learning literature.

5.4. Transfer learning

Transfer learning is a branch of machine learning in which parameters learned by a previously trained model are transferred to a new model to aid its training. Because many datasets are correlated to some degree, the knowledge acquired by the original model can be shared with the new one. This can substantially accelerate training and improve learning efficiency, avoiding the need to train from scratch as is typical for many networks. However, transfer learning has limitations, and in some cases the network must be fine-tuned for the new task.
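The simplest form of parameter transfer keeps a pretrained feature extractor frozen and trains only a new classifier head on the target data. The sketch below illustrates this with NumPy under loud assumptions: `W_pretrained` is a hypothetical stand-in (random here) for weights that would in practice come from a network trained on a source dataset, the frozen layer is a single tanh projection, and the new head is a closed-form ridge-regression classifier rather than a fine-tuned network.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical "pretrained" weights; in practice these would be loaded from
# a model trained on a source domain. They are never updated below.
W_pretrained = rng.normal(scale=0.3, size=(10, 32))

def extract(X: np.ndarray) -> np.ndarray:
    """Frozen feature extractor: one tanh layer with fixed weights."""
    return np.tanh(X @ W_pretrained)

def with_bias(H: np.ndarray) -> np.ndarray:
    return np.hstack([H, np.ones((len(H), 1))])

def fit_head(H: np.ndarray, y: np.ndarray, n_classes: int, lam: float = 1e-2):
    """Ridge-regression classifier head trained on the frozen features."""
    Hb = with_bias(H)
    T = np.eye(n_classes)[y]  # one-hot targets
    return np.linalg.solve(Hb.T @ Hb + lam * np.eye(Hb.shape[1]), Hb.T @ T)

def predict_head(H: np.ndarray, beta: np.ndarray) -> np.ndarray:
    return (with_bias(H) @ beta).argmax(axis=1)

# Small synthetic "target domain" with two classes.
X = np.vstack([rng.normal(0, 0.5, (50, 10)), rng.normal(2, 0.5, (50, 10))])
y = np.array([0] * 50 + [1] * 50)
beta = fit_head(extract(X), y, n_classes=2)
print((predict_head(extract(X), beta) == y).mean())  # training accuracy
```

Fine-tuning, by contrast, would also update `W_pretrained` on the target data, typically with a small learning rate.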

Xie et al. (2021) introduced a Superpixel Pooling Convolutional Neural Network (SP-CNN) with transfer learning to address the scarcity of training samples in hyperspectral image classification. The network has three main stages. First, spectral features are downsampled through convolution and pooling. Next, spatial information is exploited by combining upsampling with superpixel pooling. Finally, the hyperspectral data of each superpixel are fed into a fully connected neural network. Superpixel pooling effectively integrates spectral and spatial information, and transfer learning improves training efficiency. Experiments on the IP, PU, and SA datasets showed that the method achieved the highest overall classification accuracy among the compared methods and performed consistently well across different numbers of labeled samples, demonstrating its ability to handle limited labeled data. Optimizing a large number of parameters prolongs training, but transfer learning substantially reduces this cost. The classification accuracy depends notably on the number of superpixels, and the optimal number must be determined experimentally, which makes it hard to set in advance.
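Superpixel pooling, in its simplest form, averages the feature vectors of all pixels in each superpixel so that every superpixel becomes one sample. The NumPy sketch below shows that averaging step only, not the full SP-CNN; it assumes a feature map of shape `(rows, cols, channels)` and superpixel ids that run contiguously from 0, and the function name is illustrative.

```python
import numpy as np

def superpixel_pool(features: np.ndarray, segments: np.ndarray) -> np.ndarray:
    """Average features over each superpixel.
    features: (rows, cols, channels); segments: (rows, cols) integer ids in
    0..n_segments-1 (assumed contiguous). Returns (n_segments, channels)."""
    flat_f = features.reshape(-1, features.shape[-1])
    flat_s = segments.ravel()
    n_seg = flat_s.max() + 1
    sums = np.zeros((n_seg, flat_f.shape[1]))
    np.add.at(sums, flat_s, flat_f)  # unbuffered per-segment accumulation
    counts = np.bincount(flat_s, minlength=n_seg)[:, None]
    return sums / counts

features = np.arange(4, dtype=float).reshape(2, 2, 1)  # values 0, 1, 2, 3
segments = np.array([[0, 0], [1, 1]])
print(superpixel_pool(features, segments))  # [[0.5] [2.5]]
```

The number of superpixels directly sets how many pooled samples are produced, which is why it has such a strong effect on accuracy.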

Because CNN methods cannot adequately capture the nonlocal topological relationships that represent the underlying data structure of hyperspectral images, Zhang et al. (2021) developed a Topology and Semantic Information Transfer Network (TSTNet). The network represents topological relationships with graph structures and uses graph convolutional networks to address cross-scene hyperspectral classification. It incorporates Graph Optimal Transport (GOT) and Maximum Mean Discrepancy (MMD)-based techniques: GOT aligns the topological relationships, while MMD aligns the distributions of the source and target domains. TSTNet combines a CNN and a GCN: the CNN extracts local spatial features and, through graph construction, merges semantic and topological information, while the GCN captures nonlocal spatial features. This combination enhances the model's spatial perception. Experiments on three cross-scene hyperspectral datasets showed that the method reduces domain shift and improves cross-scene classification performance compared with other methods. However, its SACEConv layer makes data processing more time-consuming and increases the training time of the network.
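The MMD term used for domain alignment measures how far apart two sample distributions are in a kernel feature space. The NumPy sketch below computes the standard biased estimate of the squared MMD with an RBF kernel, MMD² = mean K(Xs, Xs) + mean K(Xt, Xt) − 2 mean K(Xs, Xt); the kernel bandwidth `sigma` is an assumption, and in a network such as TSTNet a term like this would be minimized on learned features rather than on raw data.

```python
import numpy as np

def rbf(A: np.ndarray, B: np.ndarray, sigma: float) -> np.ndarray:
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2 * sigma ** 2))

def mmd2(Xs: np.ndarray, Xt: np.ndarray, sigma: float = 1.0) -> float:
    """Biased estimate of the squared MMD between source and target samples."""
    return float(rbf(Xs, Xs, sigma).mean() + rbf(Xt, Xt, sigma).mean()
                 - 2 * rbf(Xs, Xt, sigma).mean())

rng = np.random.default_rng(5)
Xs = rng.normal(0, 1, (200, 5))        # source-domain features
Xt_same = rng.normal(0, 1, (200, 5))   # target drawn from the same distribution
Xt_shift = rng.normal(3, 1, (200, 5))  # target with a domain shift
print(mmd2(Xs, Xt_same) < mmd2(Xs, Xt_shift))  # True
```

Adding such a term to the training loss penalizes feature distributions that differ between scenes, which is how MMD-based methods reduce domain shift.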

To identify and classify different crop types in hyperspectral data, Hamza et al. (2022) proposed squirrel search optimization with deep transfer learning-enabled crop classification (SSODTL-CC). The model first uses a MobileNet model with the Adam optimizer for feature extraction, and then combines the squirrel search optimization (SSO) algorithm with a bidirectional LSTM (BiLSTM) model for crop classification. Evaluated on two benchmark hyperspectral datasets, the method achieved higher classification accuracy than the compared models. However, the experimental results report little about its training time, which makes a complete assessment of its performance difficult.

Hyperspectral classification methods seldom exploit prior knowledge about land cover categories, particularly knowledge expressed as text, and existing domain generalization methods in the field have not effectively extracted knowledge from the language modality. To fill this gap, Li et al. (2023) proposed a Language-Aware Domain Generalization Network (LDGNet), which is trained only on the source domain (SD) and then transferred to the target domain (TD). LDGNet uses a dual-stream structure, consisting of an image encoder and a text encoder, to extract visual and language features. The language features serve as a semantic space shared across scenes, and visual and language features are aligned in this space through supervised contrastive learning. Experiments on three datasets showed that LDGNet achieved the highest classification accuracy among the compared methods. Its computational cost is, however, higher than that of the other methods, mainly because of the three-layer Transformer used as the text encoder. Additional details can be found in Table 8.

Table 8. Examples of transfer learning literature.
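The idea of aligning visual features with class-level language features can be sketched with a simplified, CLIP-style objective: each pixel's visual embedding is scored against one text embedding per class by cosine similarity, and a temperature-scaled cross-entropy pulls it toward its own class. This is an assumption-laden simplification, not LDGNet's supervised contrastive loss; the encoders are omitted, and the embeddings and `temperature` value are hypothetical.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def image_text_alignment_loss(
    visual: List[Sequence[float]],
    class_text: List[Sequence[float]],
    labels: List[int],
    temperature: float = 0.1,
) -> float:
    """Mean cross-entropy of each visual embedding against all class text embeddings.

    Logits are cosine similarities divided by a temperature, so minimizing the loss
    pulls each visual feature toward its own class's text feature and away from the rest.
    """
    total = 0.0
    for vec, label in zip(visual, labels):
        logits = [cosine(vec, t) / temperature for t in class_text]
        peak = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
        total += log_sum_exp - logits[label]
    return total / len(visual)


text = [[1.0, 0.0], [0.0, 1.0]]    # one hypothetical text embedding per class
pixels = [[0.9, 0.1], [0.2, 0.8]]  # hypothetical visual embeddings
print(image_text_alignment_loss(pixels, text, [0, 1]))  # small: labels match
print(image_text_alignment_loss(pixels, text, [1, 0]))  # large: labels swapped
```

Because the text embeddings are shared across scenes, a visual encoder trained this way on the source domain is encouraged to map target-domain pixels of the same class near the same language anchor.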

6. HSIs classification using advanced machine learning (deep learning) models

Deep learning is an advanced machine learning approach loosely inspired by the structure and function of neural networks in the human brain. It uses multi-layer neural networks to learn features from data and then uses these features for prediction and decision-making. Deep learning (Zhou et al. 2022) plays a vital role in fields such as computer vision, natural language processing, speech recognition, and game development. Compared with other learning methods, it can handle large-scale and complex data, achieve high accuracy, and extract features automatically. Its drawbacks include the large amounts of data and computation needed for training, high requirements on data quality, and the poor interpretability of the resulting models. Common deep learning algorithms include CNNs, deep belief networks (DBNs), recurrent neural networks (RNNs), generative adversarial networks (GANs), and Transformers. To give a comprehensive picture of how these models perform in HSI classification, this review systematically gathered a large number of studies on deep learning-based hyperspectral classification, focusing on techniques for land cover and land use classification. Because this focus is narrow, relatively few studies were retrieved for this specific domain; the review therefore also covers deep learning-based hyperspectral classification in other application areas to supplement the discussion.

6.1. Convolutional neural networks (CNNs)

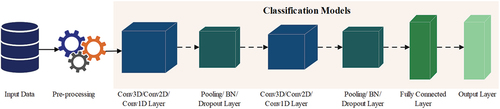

CNNs are feedforward neural networks with a deep architecture that perform convolution operations. A basic CNN consists of an input layer, convolutional layers, pooling layers, activation function layers, and fully connected layers; the structure of a traditional CNN is shown in the figure above. CNNs learn image features automatically, exploit local receptive fields, and scale to large datasets, which allows them to achieve good accuracy and efficiency. Owing to these advantages, CNN methods have been applied extensively to HSI classification (Cao et al. 2018; Kalantar et al. 2022; Ramamurthy et al. 2020; Xu et al. 2019; Zhang et al. 2018). On the other hand, CNNs demand substantial computing resources and training data, and their interpretability is limited. Typical variants such as 1D-CNNs, 2D-CNNs, and 3D-CNNs each have their own strengths and weaknesses, and some researchers have combined them to achieve better performance (Ahmad et al. 2021; Fırat, Emin Asker, and Hanbay 2022).
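To make the layer sequence concrete, here is a minimal pure-Python sketch of a single-filter 1D-CNN applied to one pixel's spectrum: convolution, ReLU activation, max pooling, and a fully connected layer that outputs class scores. All weights are made-up values standing in for learned parameters, and the function names are hypothetical; real HSI models use many filters and layers inside a deep learning framework.

```python
from typing import List, Sequence


def conv1d_valid(signal: Sequence[float], kernel: Sequence[float], bias: float = 0.0) -> List[float]:
    """'Valid' 1-D convolution (cross-correlation, as in most deep learning libraries)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k)) + bias
        for i in range(len(signal) - k + 1)
    ]


def relu(xs: Sequence[float]) -> List[float]:
    return [max(0.0, x) for x in xs]


def max_pool1d(xs: Sequence[float], size: int = 2) -> List[float]:
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(xs[i:i + size]) for i in range(0, len(xs) - size + 1, size)]


def dense(xs, weights, biases) -> List[float]:
    """Fully connected layer: one output per weight row."""
    return [sum(w * x for w, x in zip(row, xs)) + b for row, b in zip(weights, biases)]


def tiny_spectral_cnn(spectrum, kernel, fc_weights, fc_biases) -> List[float]:
    """Input -> convolution -> ReLU activation -> pooling -> fully connected scores."""
    feature_map = relu(conv1d_valid(spectrum, kernel))
    pooled = max_pool1d(feature_map, size=2)
    return dense(pooled, fc_weights, fc_biases)


scores = tiny_spectral_cnn([1, 3, 2, 5, 4, 8], kernel=[-1, 1],
                           fc_weights=[[1, 1], [1, -1]], fc_biases=[0.0, 0.5])
print(scores)  # [5.0, -0.5]
```

The kernel `[-1, 1]` responds to increases between adjacent bands, which shows how a convolution learns local spectral patterns with the same small set of weights at every position.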

Yang et al. (2018) tackled the challenges of HSI classification by developing four deep learning models, which outperformed other state-of-the-art methods on six HSI datasets. Their main limitation is that, unlike traditional machine learning methods, these models require more training samples.

CNNs are widely used to classify unstructured data. Beyond land use/land cover classification from image data, they can also be used to recognize crops. To evaluate this capability, Bhosle and Musande (2019) applied a CNN to crop recognition with HSI data. The main advantage of the approach is its ability to handle unstructured data and automatically extract the features needed for crop monitoring or land classification. Tests on the Indian Pines dataset and a study-area dataset showed that the CNN achieved good classification performance on unstructured and small datasets alike. A limitation is that only spectral features were used for classification; performance might improve if spectral-spatial features were incorporated or the CNN framework were enhanced.

To improve HSI classification performance, Sarker et al. (2020) proposed a multidimensional convolutional neural network based on regularized singular value decomposition (RSVD-MCNN). The model extracts high-level features from a low-dimensional space, and the MCNN combines convolutions of different dimensionality, which supports more complex representation learning. On three hyperspectral datasets, the method achieved excellent overall classification accuracy, but its biggest drawback is a training time much longer than that of the other methods.

Hyperspectral images can distinguish various scenes on the Earth's surface, but classification remains challenging because of the high dimensionality of the data and its large number of spectral bands. Arun Solomon and Akila Agnes (2023) proposed a 3D-CNN-based model that identifies the most important spectral and spatial features in the image data to improve land cover classification accuracy; the method consists of two steps, data preprocessing and classification. On two typical hyperspectral datasets, Indian Pines and Pavia University, it achieved the highest overall accuracy among the compared methods (KNN, SVM, SAE, and 2D-CNN). Its limitations are that band selection needs further improvement, classification accuracy could still be raised, and the method has not yet been extended to settings with fewer labeled training samples.
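3D-CNNs for HSI classification commonly take as input a small spatial-spectral cube centred on the pixel to be labeled. The sketch below shows that preprocessing step under stated assumptions: the image is stored as nested lists indexed `[row][col][band]`, border pixels are handled by zero padding, and `extract_patch` is a hypothetical helper rather than the preprocessing of Arun Solomon and Akila Agnes.

```python
from typing import List

Cube = List[List[List[float]]]  # indexed as cube[row][col][band]


def extract_patch(cube: Cube, row: int, col: int, half: int) -> Cube:
    """Return the (2*half+1) x (2*half+1) x bands neighbourhood centred on (row, col).

    Positions that fall outside the image are zero-padded so that every pixel,
    including those on the border, yields a patch of the same shape.
    """
    n_rows, n_cols, n_bands = len(cube), len(cube[0]), len(cube[0][0])
    patch: Cube = []
    for r in range(row - half, row + half + 1):
        patch_row = []
        for c in range(col - half, col + half + 1):
            if 0 <= r < n_rows and 0 <= c < n_cols:
                patch_row.append(list(cube[r][c]))
            else:
                patch_row.append([0.0] * n_bands)  # zero padding outside the image
        patch.append(patch_row)
    return patch


# A 3 x 3 image with 2 bands; pixel (r, c) holds [r + 1, c + 1] for easy checking.
image = [[[float(r + 1), float(c + 1)] for c in range(3)] for r in range(3)]
corner = extract_patch(image, 0, 0, half=1)
print(len(corner), len(corner[0]), len(corner[0][0]))  # 3 3 2
print(corner[0][0], corner[1][1])  # [0.0, 0.0] [1.0, 1.0]
```

Each patch keeps the full spectral dimension, so a 3D kernel sliding over it can learn joint spatial and spectral patterns instead of treating bands independently.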

The high complexity and redundancy of hyperspectral images have long limited classification performance. To address this, Yu et al. (2021) proposed FADCNN, a CNN framework that combines spatial-spectral dense connections with a feedback attention mechanism. Its feedback attention module refines the attention maps and uses multi-scale spatial information to enhance and extend the spatial attention module. The framework also uses a band attention module (BA) to improve computational efficiency and feature representation, and it densely integrates and mines spatial-spectral features to further refine them. The framework was tested on the Purdue Indian Pines, Kennedy Space Center, and University of Pavia datasets against nine methods: EPF, the iterative target-constrained interference-minimization classifier (ITCIMC), multiple-feature-based adaptive sparse representation (MFASR), CNN with deconvolution and hashing (CNNDH), PCA3-F, PCA5-F, FADC (feedback attention mechanism), bidirectional recurrent neural networks (Bi-RNN), and ResNet34. The proposed method achieved the highest overall accuracy on all three datasets, partly owing to the band attention module. It was also faster than PCA3-F, PCA5-F, and FADC. However, its average accuracy on the Purdue Indian Pines dataset was not the highest: MFASR achieved the best average accuracy there, at 97.20%.
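A band attention module can be understood through a generic squeeze-and-excitation-style sketch: each band is summarized by its spatial mean, a small bottleneck network turns these summaries into per-band weights in (0, 1), and every pixel's spectrum is rescaled band by band. This is a well-known attention pattern swapped in for illustration, not FADCNN's exact BA module; the weight matrices `w_reduce` and `w_expand` are hypothetical stand-ins for learned parameters.

```python
import math
from typing import List, Sequence, Tuple

Cube = List[List[List[float]]]  # cube[row][col][band]


def relu(x: float) -> float:
    return max(0.0, x)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def band_attention(
    cube: Cube, w_reduce: Sequence[Sequence[float]], w_expand: Sequence[Sequence[float]]
) -> Tuple[List[float], Cube]:
    """Squeeze-and-excitation-style reweighting of spectral bands.

    w_reduce has shape (hidden, bands) and w_expand has shape (bands, hidden);
    both would be learned in a real network.
    """
    n_rows, n_cols, n_bands = len(cube), len(cube[0]), len(cube[0][0])
    # Squeeze: one descriptor per band, the spatial mean of that band.
    desc = [
        sum(cube[r][c][b] for r in range(n_rows) for c in range(n_cols)) / (n_rows * n_cols)
        for b in range(n_bands)
    ]
    # Excitation: a bottleneck ending in a sigmoid gives one weight in (0, 1) per band.
    hidden = [relu(sum(w * d for w, d in zip(row, desc))) for row in w_reduce]
    weights = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w_expand]
    # Rescale every pixel's spectrum band by band.
    rescaled = [[[pix[b] * weights[b] for b in range(n_bands)] for pix in row] for row in cube]
    return weights, rescaled


cube = [[[2.0, 4.0], [6.0, 8.0]]]  # 1 x 2 pixels, 2 bands
weights, _ = band_attention(cube, w_reduce=[[1.0, 0.0]], w_expand=[[1.0], [-1.0]])
print(weights[0] > 0.5 > weights[1])  # True: band 0 emphasised, band 1 suppressed
```

Because the weights depend only on per-band summaries, the extra cost is small relative to the convolutions, which is consistent with attention modules being used to improve efficiency as well as representation.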

Graph convolutional networks are widely used because of their strong representation ability, but most GCN methods overlook pixel-level spectral-spatial features. To address this issue, Ding et al. (2022) proposed MFGCN, which combines a multi-scale GCN with a multi-scale CNN. The multi-scale GCN branch reduces computational cost and refines the multi-scale spatial features of the hyperspectral data, while the multi-scale CNN branch extracts local features at the pixel level across multiple scales. The method also uses a one-dimensional CNN to extract the spectral features of superpixels. Finally, the two branches are combined to fuse their complementary multi-scale features. Evaluated on the PU, SA, and Houston 2013 datasets, the method outperformed six comparison methods. However, classification accuracy depends on three hyperparameters (superpixel scale, number of epochs, and learning rate), and inappropriate settings can reduce accuracy and lengthen training.
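The graph branch rests on the standard graph convolution rule, in which each node (here, a superpixel) averages its neighbours' features with symmetric degree normalization before a learned projection. The sketch below implements one such layer, ReLU(D^-1/2 (A + I) D^-1/2 H W), in pure Python; the multi-scale graphs and CNN branch of MFGCN are not shown, and the adjacency, features, and weights are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def gcn_layer(adj: Matrix, feats: Matrix, weight: Matrix) -> Matrix:
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    n = len(adj)
    # Add self-loops so each node keeps its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Symmetric degree normalisation.
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    in_dim, out_dim = len(weight), len(weight[0])
    # Aggregate neighbour features: norm @ feats.
    agg = [[sum(norm[i][k] * feats[k][f] for k in range(n)) for f in range(in_dim)]
           for i in range(n)]
    # Linear projection followed by ReLU: relu(agg @ weight).
    return [
        [max(0.0, sum(agg[i][f] * weight[f][o] for f in range(in_dim))) for o in range(out_dim)]
        for i in range(n)
    ]


# Three superpixels in a chain 0 - 1 - 2, each with a 2-D spectral feature.
adjacency = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
print(gcn_layer(adjacency, features, identity))
```

Stacking such layers lets information flow between superpixels that are not spatially adjacent in a single step, which is the non-local context that pixel-level CNN branches lack.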

Traditional CNNs, which mainly use 2D-CNNs for feature extraction, cannot effectively exploit the inter-band correlation of hyperspectral data. To overcome this shortcoming, Alkhatib et al. (2023) proposed Tri-CNN, a multi-scale 3D-CNN that fuses features from three branches. The method first reduces the dimensionality of the data with PCA and then uses 3D-CNNs to extract hyperspectral features at different scales. The outputs of the three branches are flattened and concatenated, and softmax and dropout layers finally produce the classification results. The method was tested on three datasets (PU, SA, and GulfPort), with the model trained on 1% of the labeled samples. It achieved higher classification accuracy than six comparison methods, and its classification maps were closer to the ground truth. Additional experiments with 1%, 2%, 3%, 4%, and 5% labeled training samples consistently showed that it achieved the best classification accuracy. However, the method involves a large number of trainable parameters, which increases computational complexity, and its accuracy on GulfPort was lower than on PU and SA, suggesting room for improvement. Table 9 lists a number of studies that employed CNNs.

Table 9. Examples of CNNs literature.

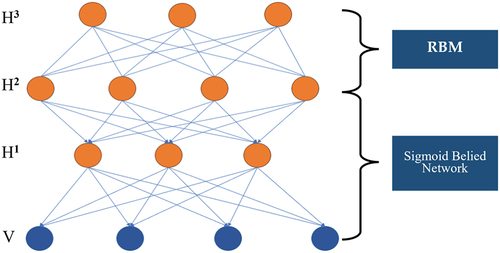
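Why a three-branch multi-scale 3D-CNN carries many parameters can be seen from shape arithmetic alone. The sketch below applies the standard per-axis output formula floor((n + 2p - k) / s) + 1 to one hypothetical 3D convolution per branch and sums the flattened sizes that would be concatenated. The 11 x 11 patch, 15 PCA components, kernel sizes, and 8 filters are assumptions, not Tri-CNN's published configuration.

```python
from math import prod
from typing import Sequence, Tuple


def conv_out_len(n: int, k: int, stride: int = 1, padding: int = 0) -> int:
    """Output length along one axis: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - k) // stride + 1


def conv3d_out_shape(
    in_shape: Sequence[int], kernel: Sequence[int], filters: int,
    stride: int = 1, padding: int = 0,
) -> Tuple[int, ...]:
    """(height, width, bands) after a 3D convolution, plus the number of filters."""
    dims = tuple(conv_out_len(n, k, stride, padding) for n, k in zip(in_shape, kernel))
    return dims + (filters,)


def fused_feature_length(in_shape, branch_kernels, filters) -> int:
    """Length of the vector obtained by flattening and concatenating all branches."""
    return sum(prod(conv3d_out_shape(in_shape, k, filters)) for k in branch_kernels)


# Hypothetical input: an 11 x 11 patch with 15 PCA components.
patch = (11, 11, 15)
kernels = [(3, 3, 3), (5, 5, 5), (7, 7, 7)]  # one kernel size per branch (assumed)
for k in kernels:
    print(k, conv3d_out_shape(patch, k, filters=8))
print(fused_feature_length(patch, kernels, filters=8))  # 14536
```

Even with a single layer per branch, the concatenated vector has 14,536 entries, so a fully connected classifier on top needs 14,536 weights per class; deeper branches and more filters grow this quickly.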

6.2. Deep belief network (DBN)

A deep belief network is a deep learning model composed of multiple stacked restricted Boltzmann machines (RBMs). Unlike many other models, a DBN can be pre-trained layer by layer through unsupervised learning and then fine-tuned through supervised learning, which helps it extract high-level abstract features in complex feature-learning tasks. Owing to these advantages, many researchers have applied DBNs to HSI classification (Chen, Zhao, and Jia 2015; Li et al. 2018; Mughees and Tao 2018). The structure of a deep belief network is shown in Figure 6.

Figure 6. Structure diagram of a deep belief network, where H refers to a hidden layer, and V to a visible layer.
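The unsupervised pre-training of each DBN layer is usually done by training one RBM with contrastive divergence. The sketch below shows the conditional probabilities between the visible layer V and hidden layer H of a binary RBM and a single CD-1 update. As a simplifying assumption it uses mean-field probabilities instead of sampled binary states, it trains only one layer, and the sizes, learning rate, and training pattern are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def hidden_probs(v: Sequence[float], W: Matrix, c: Sequence[float]) -> List[float]:
    """p(h_j = 1 | v) = sigmoid(c_j + sum_i v_i W_ij) for a binary RBM."""
    return [sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v)))) for j in range(len(c))]


def visible_probs(h: Sequence[float], W: Matrix, b: Sequence[float]) -> List[float]:
    """p(v_i = 1 | h) = sigmoid(b_i + sum_j W_ij h_j)."""
    return [sigmoid(b[i] + sum(W[i][j] * h[j] for j in range(len(h)))) for i in range(len(b))]


def cd1_update(v0, W, b, c, lr=0.1) -> Tuple[Matrix, List[float], List[float]]:
    """One contrastive-divergence (CD-1) step using probabilities instead of samples."""
    h0 = hidden_probs(v0, W, c)   # positive phase
    v1 = visible_probs(h0, W, b)  # one-step reconstruction
    h1 = hidden_probs(v1, W, c)   # negative phase
    new_W = [[W[i][j] + lr * (v0[i] * h0[j] - v1[i] * h1[j]) for j in range(len(c))]
             for i in range(len(b))]
    new_b = [b[i] + lr * (v0[i] - v1[i]) for i in range(len(b))]
    new_c = [c[j] + lr * (h0[j] - h1[j]) for j in range(len(c))]
    return new_W, new_b, new_c


# Train a 2-visible / 2-hidden RBM on a single binary pattern.
W, b, c = [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0], [0.0, 0.0]
pattern = [1.0, 0.0]
for _ in range(50):
    W, b, c = cd1_update(pattern, W, b, c)
recon = visible_probs(hidden_probs(pattern, W, c), W, b)
print(recon)  # first value moves above 0.5, second below 0.5
```

In a full DBN, the hidden probabilities of a trained RBM become the visible input of the next RBM, and after this greedy stacking the whole network is fine-tuned with labeled samples.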