Abstract

Data about the land cover have already been for decades one of the most important sources for determining human impact on nature and the environment and possible backward effects of nature and the environment on humans. Images acquired by earth observation (EO) satellites enabled more or less automatic production of global and continental land cover maps, thus performing detailed analysis of land cover changes over time. Although EO images have been broadly used for producing land cover maps for more than 30 years, many challenges are still present in their production workflow. This research firstly briefly analyses characteristics of some of the recent land cover products and then identifies the main steps that are present in producing land cover maps. It further highlights some of the main existing challenges present in those steps as well as future research topics. These challenges are rarely addressed comprehensively, but rather individually, so this research also provides directions to other recent studies that grapple with the mitigation of a particular challenge. An overview of the EO satellite missions and classification algorithms that are most often used to produce moderate resolution (10–30 m) land cover maps is also given.

1. Introduction

Land cover is defined as a biophysical cover of the Earth’s surface (Gómez, White, and Wulder Citation2016), while land use describes what purpose a surface of the Earth is used or intended for. Land cover is, in some cases, the result of land use, i.e., the result of natural or artificial systems that are present on the surface of the earth (Di Gregorio et al. Citation2016). Land cover change, whether human-induced or resulting from natural processes, is among the most influential drivers of change in the Earth system and is also affecting people’s lives (Verburg, Neumann, and Nol Citation2011; Phiri et al. Citation2020). Having data about the land cover is clearly of great importance for analysing and possibly mitigating the effects of human influence on nature and the environment and vice versa.

To produce land cover maps covering large areas, such as countries, continents, or even the whole surface of the earth, any form of manual approach is not feasible, but mostly automated methods must be applied. Satellite images are the main driver in producing those land cover maps (Phiri et al. Citation2020). The automated method of extracting land cover data from satellite images is called classification, a process in which, in the most general sense, a particular land cover class is assigned to each pixel. Although some types of land use can be observed from aerial and satellite images, this research is focused on the land cover since it can be directly derived from those images.

Lu and Weng (Citation2007) comprehensively grouped classification methods into the following broad categories: supervised or unsupervised, parametric or nonparametric, hard or soft, pixel-based, subpixel, object-based, or per-field, and spectral, contextual, or spectral-contextual. The main difference between supervised classification and unsupervised is that supervised requires a substantial amount of training data, i.e., pixels on satellite images for which land cover class is manually labelled by the operator. Both, supervised and unsupervised classification of multispectral images determines the class for each pixel based on its spectral characteristics, i.e., based on the way the corresponding area reflects or emits electromagnetic energy. Parametric classification assumes that data are distributed in line with the normal distribution, while non-parametric does not require presumptions on data distribution and are based on deterministic theories (Weng Citation2010; Phiri and Morgenroth Citation2017). According to Lu and Weng (Citation2007), hard classification means that each pixel is assigned to one class, while soft classification for each pixel provides information about the likeliness of its belonging to each particular class. Pixel-based classification ignores the problem of mixed pixels (i.e., pixels that partly cover two or more land cover classes) and treats each pixel individually, without taking into account its surroundings, while subpixel classification provides soft classification results (Lu and Weng Citation2007). Object-oriented classification or Geographic Object-Based Image Analysis (GEOBIA) does not perform classification on a pixel-by-pixel basis, but delineates image objects (i.e., pixel patches) through segmentation and then classifies those objects (Blaschke et al. Citation2014). In that way, GEOBIA uses not only spectral information, but also texture, shape, and position into account (Phiri and Morgenroth Citation2017). Per-field classification combines vector and raster data where vector data are polygons (e.g., land parcels) that are classified based on satellite image data (Lu and Weng Citation2007). Finally, the spectral classification uses only spectral information and additional auxiliary information if they are being used, while contextual classification also considers pixels surrounding the pixel being classified.

Global and continental land cover products that are being analysed in later sections of this paper are almost all the result of the traditional approach in creating land cover maps, meaning that they are produced by pixel-based classification. Some recent studies show that GEOBIA methods are the subject of more research and, combined with machine learning algorithms, produce more accurate land cover maps (Phiri and Morgenroth Citation2017; Phiri et al. Citation2020) than pixel-based classification. One of the reasons for this case is the efficiency of pixel-based classification when applied over large geographical areas (Xiong et al. Citation2017). Another reason is the fact that global and continental land cover maps are produced from coarse to moderate spatial resolution (up to 10 m) remote sensing images on which the segmentation process can be problematic to apply (Phiri et al. Citation2020) and GEOBIA is predominantly applied on very high resolution images (Blaschke et al. Citation2014).

A type of classification that some authors (e.g., Mehmood et al. Citation2022) separate from supervised and unsupervised ones is a deep learning (DL) classification. DL, also known as deep artificial neural networks, is an artificial intelligence machine learning method that has proven its efficiency in remote sensing image classification (Benhammou et al. Citation2022). Among DL models, convolutional neural networks (CNNs), because of their suitability for processing multiband remote sensing images, are the most often used ones (Ma et al. Citation2019; Benhammou et al. Citation2022; Boston, Van Dijk, and Thackway Citation2023). CNN allow taking into account spatial and temporal patterns along with spectral ones which makes them a better choice for land cover classification applications and are generally producing higher classification accuracies as compared to traditional approaches (Vali, Comai, and Matteucci Citation2020; Boston, Van Dijk, and Thackway Citation2023). Successes of DL algorithms contributed to development of the scene-based classification, a third type of classification along with pixel-based, and object-based ones (Cheng et al. Citation2020; Mehmood et al. Citation2022). Scene-based classification, sometimes referred to as patch-based (Helber et al. Citation2017), is aimed at classifying patches of remote sensing images (e.g., 512 × 512 pixels patches) into semantic classes (e.g., airport, football field, harbour, etc.; Cheng et al. (Citation2020)).

When analysing currently available global and continental land cover products, it can be observed that they are mostly created by employing traditional machine learning methods such as random forest (RF) and support vector machines (SVM). Both methods have over the years been established as the most efficient for large-area land cover mapping (Kussul et al. Citation2017; Talukdar et al. Citation2020; J. Yang and Huang Citation2021). Since deep learning and GEOBIA approaches tend to produce higher accuracy land cover maps, it is therefore clear that their application in large-scale (i.e., global and continental) land cover mapping is yet to be more broadly adopted. One disadvantage of machine learning models is that they often turn into black boxes which hampers defining some general practices that will yield the best results for a particular classifier (Burgueño et al. Citation2023).

This research aims to determine the current state and challenges in producing large-scale land cover products and to provide directions to other studies that are dealing with those challenges. In that manner, this research also identifies future research directions that should be and are already being performed to mitigate some of the present challenges. Although some recent research papers are comparing different land cover products, mostly in terms of their accuracy and classification agreement, to the best of our knowledge, there is no paper equivalent to this one that is analysing recent land cover products and is also providing insights into processes that are essential for their production. Since many global and continental land cover maps have been produced recently and many challenges are still present (e.g., thematic accuracy of large-scale land cover maps is still much lower than it is for small-scale ones), it is needed to sum up the current state in global and continental land cover mapping to provide a basis for further development.

In the following sections, first, an overview of the six chosen recent land cover products is given, and then each aspect of the land cover production workflow is analysed. Sensors that are used for generating input earth observation (EO) data for producing land cover products are also examined in subsequent sections. The discussion section provides a discussion on six chosen land cover products and with the last chapter summarises the main challenges that must be addressed to upgrade the overall quality of land cover products. The last chapter also provides directions for future research.

2. Currently available global and continental land cover products

Many global, continental, and national land cover products have been produced in the last ten years. Among the main reasons for expanding the number of land cover products is the fact that more and more fine resolution (10 – 30 m) data acquired by different EO satellite missions are becoming freely available, and processing capabilities (hardware and software) are being drastically improved. The main problem has shifted from acquiring data (problem with data being unavailable or expansive) and ensuring computational needs to fine-tune different aspects of the classification process. For example, by utilizing Google Earth Engine (Google Citation2022) or Microsoft’s Planetary Computer (Microsoft Citation2022) platforms, enormous amounts of data are directly available, as well as processing capabilities, but decisions have to be made on which input data should be used for a specific purpose, which classification algorithm should be applied, or how should data for the training phase of the classification workflow be obtained.

Among dozens of freely available land cover products, more thoroughly were examined Copernicus Global Land Services Land Cover Map at 100 m (CGLS-LC100; Buchhorn, Lesiv, et al. Citation2020), European Space Agency (ESA) WorldCover (Zanaga et al. Citation2021), Sentinel-based pan-European land cover map (ELC10; Venter and Sydenham Citation2021), Sentinel-2 Global Land Cover (S2GLC; Malinowski et al. Citation2020), Open Data Science Europe Land Use/Land Cover (ODSE-LULC; Witjes et al. Citation2022), and Dynamic World (Brown et al. Citation2022). Some land cover products are focused on one land cover type (for example, water or artificial surfaces), but all six land cover products that are previously mentioned are, so to say, comprehensive, they give land cover information in dozen of classes. Also, analysis of land cover products in this research is intentionally limited to those products that are delivered in raster format and are a result of pixel-based, supervised, spectral/spectral-contextual classification.

Other prominent land cover products are global land use/land cover map produced by the Impact Observatory Inc. with the support of Esri Inc. and Microsoft (Karra et al. Citation2021), Finer Resolution Observation and Monitoring Global Land Cover (FROM-GLC) products (Gong et al. Citation2013, Citation2019), FROM-GLC Plus (Yu et al. Citation2022), Global 30-m Land Cover Classification with a Fine Classification System (GLC_FCS30; Zhang et al. Citation2021), GlobeLand30 (J. Chen et al. Citation2015), European land use and land cover map based on Sentinel and Landsat data (ELULC-10; Mirmazloumi et al. Citation2022), map created by Pflugmacher et al. (Citation2019), and Intelligent Mapping (iMap) World 1.0 (Liu et al. Citation2021) land cover products. These land cover products are not included in the further analysis because most aspects that are of interest for this research are covered in six chosen products. In the following sections and in , a brief overview and main characteristics of each land cover product are given.

Table 1. Main characteristics of analysed land cover products.

2.1. Copernicus Global Land Services Land Cover Map at 100 m (CGLS-LC100)

Although CGLS-LC100 has a spatial resolution of around 100 m, which is an order of magnitude coarser resolution compared to other chosen products, it was still selected for analysis because the algorithm will be applied to 10 m Sentinel-2 images in the future (as announced by Buchhorn, Smets et al. (Citation2020)). Currently, CGLS-LC100 is available for years from 2015 to 2019 and be viewed and downloaded from https://lcviewer.vito.be/. What is interesting about this product is that it does not provide only a discrete classification map, but also the so-called fractional layers which allow users from various domains to adopt land cover data to their needs. Pixels in fraction layers have values that correspond to a percentage of a pixel that is covered in one of the main land cover classes (for example, built-up, cropland, forest, etc.). The main data source used for generating the CGLS-LC100 are images acquired by the VEGETATION instrument onboard the PROBA-V (short for Project for On-Board Autonomy – Vegetation; more in Section 3.2.3. PROBA-V mission) satellite (Dierckx et al. Citation2014).

A couple of vegetation indices (VI) have been generated and indirectly used as classification input, along with textural metrics and calculated descriptive statistics. What is also interesting is that the supervised classification of all input datasets was conducted manually at 10 m spatial resolution which allowed the generation of fraction layers since input data for classification have approximately 100 m spatial resolution (Buchhorn et al. Citation2021). In the training phase, a random forest (RF) classification algorithm was applied to determine optimal classification parameters (i.e., classification model) for different ecoregions (so-called biome clusters) so that regional differences are taken into account, and then each classification model was used to generate region-wide pre-products, including discrete classification and fraction layers (Buchhorn et al. Citation2021). Since all pre-products are being generated for each year independently, they are being further processed to ensure their annual consistency by generating break-maps and conducting some other post-processing, including smoothing fraction layers based on their annual stability (Buchhorn et al. Citation2021). For generating final discrete land cover maps, expert rules applied on auxiliary datasets (OpenStreetMap (OSM) data, 90-m CopernicsDEM digital surface model, and others that are listed in ) were used (Buchhorn, Bertels, et al. Citation2020).

Classification legend for a discrete land cover map is based on the Food and Agriculture Organization (FAO) Land Cover Classification System (LCCS; more in Section 3.1. Defining classification legend) and includes 23 classes (some are listed in ). CGLS-LC100 validation report (Tsendbazar et al. Citation2020) states that the validation dataset consists of almost 22 000 samples roughly evenly distributed across continents and defined by regional experts. Overall accuracy for the discrete land cover map for all years and for level-1 classes is around 80%, and for level-2 classes (for example, level-1 class ‘forest’ corresponds to level-2 classes ‘open forest’ and ‘closed forest’) is around 75% (Tsendbazar et al. Citation2020).

2.2. ESA WorldCover

ESA WorldCover global 10-m land cover product is currently available for 2020 and 2021 and can be viewed and downloaded from https://esa-worldcover.org/. As reported by the ESA WorldCover product user manual (Van De Kerchove et al. Citation2021) land cover classification is based on the algorithm that was used for CGLS-LC100, with two main differences: instead of PROBA-V scenes, Sentinel-2 multispectral images (more in Section 3.2.1. Sentinel-2 mission) and Sentinel-1 radar data (more in Section 3.2.2. Sentinel-1 mission) were employed, and part of the processing that is dealing with ensuring annual consistency is left out. Algorithms that were used for producing land cover maps for 2020 and for 2021 are also not completely the same. This imposes an issue with land cover change analysis since some changes might be introduced because of differences in algorithms used and not because of real changes in land cover.

Sentinel-1 and Sentinel-2 level-2A (L2A) data were preprocessed, and from Sentinel-2 data vegetation indices were calculated, as well as descriptive statistics, which were all used, along with some other metrics extracted from Sentinel-1 data and auxiliary data (altitude and slope from 30 m CopernicusDEM digital surface model, biome clusters, eco-regions (Dinerstein et al. Citation2017), and positional features such as coordinates of each pixel), as input for conducting supervised classification (Van De Kerchove et al. Citation2021). In the training phase of the classification, different classification models were obtained using a gradient-boosting decision tree algorithm (CatBoost), and then different models were combined to predict the land cover class for each pixel, and also to calculate classification probabilities (Van De Kerchove et al. Citation2021). For producing the final land cover map, expert rules that supported the final decision on land cover class for each pixel were applied to different auxiliary data, including OSM, Global Mangrove Watch (GMW) layer, Global Human Settlement Layer (GHSL), and others that are listed in (Van De Kerchove et al. Citation2021).

Land cover is classified into 11 classes that are listed in and defined using FAO LCCS (Van De Kerchove et al. Citation2021). The same dataset that was used for CGLS-LC100 validation (almost 22 000 validation samples) was also used for ESA WorldCover land cover map validation (Van De Kerchove et al. Citation2021). It was calculated that the overall classification accuracy is around 74%, for all classes globally, while continent-wise overall accuracies vary from 68% for Oceania to 81% for Asia (Tsendbazar, Li, et al. Citation2021).

2.3. Sentinel-based pan-European land cover map (ELC10)

Sentinel-based pan-European land cover map (ELC10) covers only EU countries. The main reason for this is the fact that for the training phase of the classification, instead of creating a new training dataset, data from the Land Use and Coverage Area frame Survey (LUCAS) database were used, which are available only for the European Union (EU) countries (Eurostat Citation2022). ELC10 data can be downloaded from https://zenodo.org/record/4407051.

ELC10 is based mainly on Sentinel-1 and Sentinel-2 data from 2018, and around 53 000 polygons and 18 000 points as reference (training and testing) samples from the LUCAS database for the year 2018 (Venter and Sydenham Citation2021). From Sentinel-1 and Sentinel-2 data, spectro-temporal features were extracted, such as pixel-based median values, different spectral indices (normalized burn ratio (NBR), normalized difference vegetation index (NDVI), normalized difference built to index, and normalized difference snow index), different descriptive statistics (5th, 25th, 50th, 75th, and 95th percentile, standard deviation, and others), and textural features (median NDVI values in 5 × 5 pixel moving window), and they have been used as input for classification (Venter and Sydenham Citation2021). To adapt classification to take into account regional characteristics, Venter and Sydenham (Citation2021) also used some additional data (listed in ) as input for classification.

To classify input data in eight land cover classes that are given in , a random forest (RF) classification algorithm was employed. After classification, classification accuracy has been assessed, and Venter and Sydenham (Citation2021) report that overall accuracy is approximately 90%. ELC10 was compared with other land cover products, namely CORINE (short for Coordination of Information on the Environment) Land Cover (CLC; Copernicus Programme Citation2022a), FROM-GLC10 (Gong et al. Citation2019), map created by Pflugmacher et al. (Citation2019), and S2GLC (Malinowski et al. Citation2020). It was concluded that the overall accuracy for ELC10 is higher than for other products, while class-specific accuracies are slightly higher only for a map created by Pflugmacher et al. (Citation2019).

2.4. Sentinel-2 Global Land Cover (S2GLC)

S2GLC also covers only Europe, but unlike ELC10, it includes other European countries that are outside of the EU. The classification was mainly based on Sentinel-2 L2A images (more in Section 3.2.1. Sentinel-2 mission) from which a 10-m land cover map was produced with 13 land cover classes (listed in ; Malinowski et al. Citation2020). For creating S2GLC, CORINE Land Cover (CLC) from 2012 and Copernicus Land Monitoring Services High Resolution Layers (HRLs; Copernicus Programme Citation2022b) were used as a data source for creating training samples (Malinowski et al. Citation2020).

As input for classification, along with Sentinel-2 images, normalized differences between each combination of two spectral bands (except the 60 m ones) were also used (Malinowski et al. Citation2020). Another interesting approach adopted in the S2GLC refers to the way of dealing with multitemporal images. Sentinel-2 tiles from 2017 were picked based on different rules as input for classification. Instead of feeding a random forest classification algorithm with all available pixel values from the whole of 2017, each Sentinel-2 tile was separately classified and then aggregated to produce a final land cover map (Malinowski et al. Citation2020). Aggregation was done based on the work of Lewiński et al. (Citation2017). This tile-by-tile approach has the advantage that it performs flexible classification and adapts to local land cover characteristics since the same land cover class can have a different spectral signature in different regions (Malinowski et al. Citation2020).

For validation purposes, a new validation dataset was created to ensure reliable accuracy assessment (Malinowski et al. Citation2020). Malinowski et al. (Citation2020) report that the final land cover map has a thematic accuracy of around 86%, both overall and average on the country level. S2GLC rasters can be downloaded from https://finder.creodias.eu/.

2.5. Open Data Science Europe Land Use/Land Cover (ODSE-LULC)

Open Data Science Europe Land Use/Land Cover (ODSE-LULC; Witjes et al. Citation2022) product, although it includes some classes that describe land use, has some characteristics that are not shared among other analysed land cover products. As a main data source for producing ODSE-LULC, GLAD (short for Global Land Analysis and Discovery laboratory at the University of Maryland) Landsat ARD (short for Analysis Ready Data) images (Potapov et al. Citation2020) were used. Since Landsat images have temporal coverage that is much longer than it is for Sentinel-2 images (more in Section 3.2.4. Landsat program), with Landsat images it is possible to conduct a much longer temporal analysis. ODSE-LULC product is therefore available as a yearly land cover product from 2000 to 2019 in a 30 m spatial resolution (Witjes et al. Citation2022).

Each pixel was classified in one of 43 classes that correspond to CLC level-3 classes (excluding CLC class ‘5.2.3 Sea and ocean’) by an ensemble of machine learning classifiers, namely random forest, gradient-boosted trees, and neural network (Witjes et al. Citation2022). Each classifier calculated per-pixel per-class probability, thus calculating 129 probabilities for each class and each pixel, and then the fourth algorithm, the logistic regression meta-learner, predicts the final probabilities for each of the 43 classes (Witjes et al. Citation2022). Yearly rasters with final probabilities and yearly rasters with hard classes are available at https://stac.ecodatacube.eu.

Along with the GLAD Landsat ARD images, for producing ODSE-LULC Witjes et al. (Citation2022) also used different spectral indices as input features for classification (NDVI, NBR, NBR2, and others listed in ), as well as night light images from Visible Infrared Imaging Radiometer Suite (VIIRS) Day/Night Band (DNB; H. Chen et al. Citation2017), digital terrain model for the continental EU produced by Hengl et al. (Citation2020), and some others that are given in .

For the training dataset, approximately 8.1 million points were extracted from the LUCAS and CORINE Land Cover (CLC) data (Witjes et al. Citation2022). In the validation phase, Witjes et al. (Citation2022) validated ODSE-LULC against the S2GLC reference dataset (dataset used for training and validation) and performed a 5-fold spatial cross-validation on 30 km tiles. By comparing ODSE-LULC land cover data for 2017 that was reclassified to the S2GLC classes, with the S2GLC reference dataset, above 80% classification accuracy was achieved (Witjes et al. Citation2022). The 5-fold spatial cross-validation reported 84% accuracy for level-1 classes (5 classes), which decreased to 50% for level-3 classes (43 classes; Witjes et al. Citation2022).

2.6. Dynamic World

The main speciality of the Dynamic World land cover product, as compared to previously mentioned ones, is that it provides near real-time (NRT) land cover information. Eight land cover classes (listed in ) are obtained from Sentinel-2 images by semi-supervised deep learning, more precisely by training a Fully Convolutional Neural Network (FCNN; Brown et al. Citation2022).

Dynamic World differs from other land cover products that are previously mentioned in the way of creating training and validation dataset. At each sample location that was sampled within different biomes and different land cover classes, 510 × 510 pixels tiles were extracted from mostly cloudless Sentinel-2 images, randomly chosen from those acquired during 2019. Each tile that was extracted from the Sentinel-2 image was annotated by experts (around 4 000 tiles) and non-experts (around 20 000 tiles). Annotation was performed by drawing vector polygons encompassing a single land cover class and then assigning a land cover class to that polygon. For the validation set, 409 tiles were used and each of them was annotated by three experts and one non-expert. The final training dataset was produced after applying augmentations for the deep learning algorithm, namely rotations and contrasting (Brown et al. Citation2022).

After FCNN is trained with training data, it can be used to assign one of the land cover classes to each pixel in new Sentinel-2 images. Using the Google Earth Engine, each available Sentinel-2 image that passes the cloud coverage criterion (less than 35% covered by clouds) is being processed to obtain Dynamic World land cover (Brown et al. Citation2022).

Because of its regular updating, validation of Dynamic World could not be performed the usual way since the quality of the processing output does not depend only on the trained FCNN but also on the quality of the particular Sentinel-2 image (Brown et al. Citation2022). By comparing expert labels with the output of Dynamic World, it was concluded that the overall agreement is 73,8%.

Dynamic World land cover products include not only single-date discrete classification maps (i.e., maps with hard classification) but also class probabilities rasters. Another aspect of the Dynamic World product that is worth mentioning is the way of visualization of discrete classification map. The class with the highest probability is assigned to each pixel on the discrete classification map. Values of the corresponding class probabilities were used to generate hillshade visualization that was overlaid with the discrete classification map. Hillshade overlay exposes details that would not otherwise be visible (Brown et al. Citation2022). Dynamic World data are available on Google Earth Engine and can be viewed at https://dynamicworld.app/explore/.

shows visualizations of previously analysed land cover maps and visualizations of the main data sources (satellite images) used for their production. By comparing different land cover maps, it is clear that there are inconsistencies in classification results. The much coarser spatial resolution of the CGLS-LC100 as compared to other land cover maps can also be easily noticed as also the lower spatial resolution of the ODSE-LULC land cover product as compared to other Sentinel-2-based products. summarizes some of the main characteristics of each analysed land cover product.

Figure 1. Visualizations of analysed land cover products and main data sources used for their production over the city of Trogir in Croatia. Each column represents one land cover product. First in each column is the land cover map with classes coloured according to legends shown at the bottom of each column. CGLS-LC100 land cover map is from 2019 (Buchhorn, Smets, et al. Citation2020), ESA WorldCover is from 2020 (Zanaga et al. Citation2021), ELC10 (Venter and Sydenham Citation2020) from 2018, S2GLC (Malinowski et al. Citation2020) from 2017, ODSE-LULC (only 14 level-2 classes, available at https://stac.ecodatacube.eu/) from 2019, and the Dynamic World (Brown et al. Citation2022) map is from 17 April 2020. All legends are complete, except the one for the CGLS-LC100, for which classes related to forests are aggregated. Between the land cover map and legend, in each column are visualizations of the main data sources that have been used for producing a particular land cover map. Copernicus Sentinel-2 image (R–G–B = B4–B3–B2) was acquired on 17 April 2020, ESA’s PROBA-V image (R–G–B = red band – NIR (near infrared) band – SWIR (shortwave infrared) band, as suggested by Veljanovski, Čotar, and Marsetič (Citation2019)) was acquired on 16 April 2020, USGS’s Landsat 8 image (R–G–B = B4–B3–B2) was acquired on 4 April 2020, and Copernicus Sentinel-1 image (R–G–B = VH band – [(VH + VV)/2 · 1.1 + 30] – VV band (VV – vertical transmit and vertical receive, VH – vertical transmit and horizontal receive), partly adopted from Syrris et al. (Citation2020)) was acquired on 16 April 2020. Light blue pixels on the Sentinel-1 composite correspond to urban areas (Syrris et al. Citation2020). Abbreviations: Copernicus Global Land Services Land Cover map at 100 m (CGLS-LC100), Sentinel-based pan-European land cover Map (ELC10), European Space Agency (ESA), Open Data Science Europe Land use/Land Cover (ODSE-LULC), Project for on-Board Autonomy – Vegetation (PROBA-V), Sentinel-2 Global Land Cover (S2GLC), U.S. Geological Survey (USGS).

![Figure 1. Visualizations of analysed land cover products and main data sources used for their production over the city of Trogir in Croatia. Each column represents one land cover product. First in each column is the land cover map with classes coloured according to legends shown at the bottom of each column. CGLS-LC100 land cover map is from 2019 (Buchhorn, Smets, et al. Citation2020), ESA WorldCover is from 2020 (Zanaga et al. Citation2021), ELC10 (Venter and Sydenham Citation2020) from 2018, S2GLC (Malinowski et al. Citation2020) from 2017, ODSE-LULC (only 14 level-2 classes, available at https://stac.ecodatacube.eu/) from 2019, and the Dynamic World (Brown et al. Citation2022) map is from 17 April 2020. All legends are complete, except the one for the CGLS-LC100, for which classes related to forests are aggregated. Between the land cover map and legend, in each column are visualizations of the main data sources that have been used for producing a particular land cover map. Copernicus Sentinel-2 image (R–G–B = B4–B3–B2) was acquired on 17 April 2020, ESA’s PROBA-V image (R–G–B = red band – NIR (near infrared) band – SWIR (shortwave infrared) band, as suggested by Veljanovski, Čotar, and Marsetič (Citation2019)) was acquired on 16 April 2020, USGS’s Landsat 8 image (R–G–B = B4–B3–B2) was acquired on 4 April 2020, and Copernicus Sentinel-1 image (R–G–B = VH band – [(VH + VV)/2 · 1.1 + 30] – VV band (VV – vertical transmit and vertical receive, VH – vertical transmit and horizontal receive), partly adopted from Syrris et al. (Citation2020)) was acquired on 16 April 2020. Light blue pixels on the Sentinel-1 composite correspond to urban areas (Syrris et al. Citation2020). Abbreviations: Copernicus Global Land Services Land Cover map at 100 m (CGLS-LC100), Sentinel-based pan-European land cover Map (ELC10), European Space Agency (ESA), Open Data Science Europe Land use/Land Cover (ODSE-LULC), Project for on-Board Autonomy – Vegetation (PROBA-V), Sentinel-2 Global Land Cover (S2GLC), U.S. Geological Survey (USGS).](/cms/asset/8501dec3-be9a-4474-96f6-c37b13b45734/tgei_a_2242693_f0001_c.jpg)

3. Main processes in the global and continental land cover production workflow and their challenges

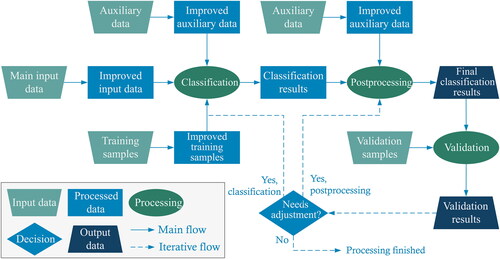

As demonstrated in the previous chapter, different land cover products are being produced by following different workflows and by using different input data. Nevertheless, some general steps are mostly common to all products. Those general steps are visualized in and are further discussed in the subsequent sections. Of course, not all processes from are used for producing all the mentioned land cover products. In it is visible that all input data can go through some level of preprocessing with the aim of their improvement or making them more suitable for classification. Auxiliary data used in the classification step and the postprocessing step do not have to be and usually are not the same. The final outputs of the production workflow are the final classification results and validation results. After validation results are obtained, it is possible to readjust classification variables and parameters (e.g., add new or remove some input data, adjust classification algorithm parameters, etc.) or make some changes in postprocessing (e.g., change expert rules applied on auxiliary data) and reiterate some parts of the workflow. In this iterative part is shown with dashed lines because it is usually not part of the final workflow, but is mostly only included in the workflow modelling stage.

Figure 2. Main steps in producing land cover products. Trapezoids represent input or output data, rectangles correspond to data that are the result of intermediate processing, ovals denote processes, and a diamond-shaped symbol represents a decision.

Wulder et al. (Citation2018) identified seven elements of the land cover classification process: end-user needs (i.e., application area), defining land cover legend, gathering training and validation data, image preprocessing with the derivation of spectro-temporal metrics and incorporation of auxiliary data, classification with suitable algorithms, post-classification error mitigation with iterative accuracy assessment, and product delivery methods. Most of these elements are visible in and are further described in the following sections.

3.1. Defining classification legend

Land cover classification legend depends on the application area of a particular land cover product and end-user needs. When examining land cover products that do not focus on a specific application area (e.g., forestry), they usually classify the whole extent of the area that is being classified into dozen of classes that at least include: vegetated area, artificial (built-up) area, bare ground land, and water bodies. Some of these classes, especially one that refers to vegetated areas, are often divided into more classes (for example, high and low vegetation). As identified by Di Gregorio et al. (Citation2016), many land cover products are produced with different objectives in mind, and the problematic part is establishing semantic interoperability between them. They also state that the most straightforward way of solving semantic inconsistency between different land cover products, which consists of comparing the class name and textual class descriptions, is time-consuming and ineffective. That is why Di Gregorio et al. (Citation2016) created the FAO LCCS (short for Land Cover Classification System) classification system, i.e., a system that is intended for assigning objects to different classes (INSPIRE Thematic Working Group Land Cover Citation2013).

In the FAO LCCS, the emphasis is not on the standardization of classes but on the standardization of attribute terminology that is used to describe each class (Di Gregorio et al. Citation2016). It is used to create classification legends for different applications and to compare classes of different classifications. One of the aims of the FAO LCCS is to enable the automatic harmonization of different land cover products without the need for human intervention (Di Gregorio et al. Citation2016). International Organization for Standardization (ISO) in cooperation with FAO created the standard ISO 19144 Geographic information – Classification system that currently has two parts: ISO 19144-1 Classification system structure and ISO 19144-2 Land Cover Meta Language (LCML). LCML, which is expressed in Uniform Modeling Language (UML), allows describing different classification systems and comparing and integrating them (ISO Citation2012). Version 3 of the LCCS comes with a software application that is intended to be a tool for applying LCML concepts conveniently (Di Gregorio et al. Citation2016).

A similar aim, among others, in terms of enabling semantic comparison between different classification systems has the EAGLE concept (short for EIONET (European Environment Information and Observation Network) Action Group on Land monitoring in Europe; https://land.copernicus.eu/eagle). It is a tool that enables the interpretation of semantic information included in various land cover classes and translation between different classification systems (Zhu, Jin, and Gao Citation2021). EAGLE has also a goal of clearly separating land cover from land use information since they are both often included in the same classification legend (Arnold et al. Citation2021).

3.2. Input data and their preprocessing

All products that are analysed in this research use multispectral or multispectral and radar satellite images as a main source for extracting land cover information. Some products use many other auxiliary data sources to augment and possibly enhance the final product. One of the first steps would then be choosing data that will be used in producing land cover products and conducting preprocessing, i.e., preparing input data in a way that they are as useful as possible to extract land cover information from them.

Most multispectral satellite images are delivered in different levels of already preprocessed forms. In the case of Sentinel-2 images, two product levels are available to general users, level-1C (L1C) and level-2A (L2A). As further discussed in Section 3.2.1. Sentinel-2 mission, one of the main differences between Sentinel-2 L1C and L2A images is that L2A images are atmospherically corrected, meaning that pixel values correspond to surface reflectance (ESA Citation2015), i.e., they are more-less free from atmospheric influences. PROBA-V multispectral optical images used for the production of CGLS-LC100 were also preprocessed to generate surface reflectance from the TOA data (Buchhorn, Lesiv, et al. Citation2020). Radar data also require preprocessing, but a more complex one (Gašparović and Dobrinić Citation2020). However, their inclusion as input data in the land cover production might significantly increase classification accuracy (Venter and Sydenham Citation2021).

When optical images are used for classification, various spectral indices that are calculated from them are also commonly calculated and included as input data. Spectral indices, a common name for the vegetation, water, built-up, and soil indices (Rumora, Miler, and Medak Citation2020), when included as input for spectral classification, increase classification accuracy under some circumstances.

Some yearly land cover products use input data that are acquired throughout the whole year, which means there are numerous values for each pixel from different parts of the year. To take into account the phenological cycle of vegetation and thus increase classification accuracy, it is common to calculate the temporal metrics. For example, Venter and Sydenham (Citation2021) calculated 5th, 25th, 50th, 75th, and 95th percentile values for each pixel across all selected images within the year, as well as standard deviation, median NDVI values for summer, winter, spring, and fall and some other spectro-temporal metrics. Witjes et al. (Citation2022) also used temporal metrics extracted from the Landsat data. They used seven spectral bands (blue, green, red, near-infrared (NIR), shortwave infrared 1 (SWIR1), SWIR2, and thermal), and divided them into three quantiles calculated for each month, and then from those data, they calculated different spectral indices. On the other hand, for the production of, for example, Dynamic World, single-date images were used. Dynamic World land cover products provide near real-time data but do not consider temporal characteristics of different land cover types. For instance, in one part of the year, some agricultural areas can be bare land, and others can be vegetated. Single date land cover product will in the corresponding part of the year classify those areas as bare land and in others as some of the vegetation classes. Suppose a user is interested in identifying land that is used for agriculture (which is more characteristic of land use than land cover) throughout the year, in general. In that case, it will be more accurately classified on products that use data from the whole year than just single-date data.

All the above metrics extracted from the input images are spectral, and they do not consider spatial relations between pixels. Some textural metrics can also be extracted and can indirectly include information from neighbouring pixels in the classification process. For example, it is possible to calculate a standard deviation of pixel values in a 5 × 5 moving window for a particular band or vegetation index (Venter and Sydenham Citation2021). Lower values in these layers with textural information point out to the pixels that are in homogeneous areas (surrounded by pixels with similar values), while higher values indicate more heterogeneous land cover (Buchhorn, Bertels, et al. Citation2020).

The following section focuses on sensors installed on satellites and used to acquire data used as main input data for conducting land cover classification. The analysis is limited only to sensors that are used to produce large-scale land cover products that are analysed in Chapter 2. Currently available global and continental land cover products. Those sensors are installed on Sentinel-1, Sentinel-2, Landsat, and PROBA-V satellites.

3.2.1. Sentinel-2 mission

Two Sentinel-2 satellites have been launched: Sentinel-2A in 2015 and Sentinel-2B in 2017 (Phiri et al. Citation2020). They are part of the space component of the Copernicus program, which is an Earth observation and monitoring program of the EU’s European Commission (Wiatr et al. Citation2016). ESA is in charge of the space component of the Copernicus program (Potin et al. Citation2015). Both Sentinel-2 satellites carry a passive sensor called a multispectral instrument (MSI) at an altitude of 786 km in an orbit that is sun-synchronous and are jointly providing global coverage of land and coastal sea with a 5-day revisit period (Gascon et al. Citation2017). The 5-day revisit period refers to equatorial areas and is lower towards the poles. Both Sentinel-2 satellites are orbiting in the same orbit but are on diametrically opposite sides.

MSI instrument is a push-broom sensor that is acquiring data in 13 spectral bands spreading over a visible, NIR, and SWIR range of the EM spectrum, with a spatial resolution of 10, 30, and 60 m (Gascon et al. Citation2017). Four bands have a spatial resolution of 10 m (blue, green, red, and one wider NIR band), six bands have a 20 m resolution (four narrow bands from the red-edge/NIR part of the spectrum, mainly for vegetation applications, and two wider bands from the SWIR part for detecting snow or clouds, assessing vegetation moisture, and similar applications), and three bands with a 60 m spatial resolution for conducting atmospheric corrections, detecting clouds and similar usages (Gascon et al. Citation2017).

There are three levels of Sentinel-2 products: level-0 (raw, compressed data), level-1, and level-2. Level-1 products are divided into level-1A (L1A), level-1B (L1B), and level-1C (L1C) products, while the only level-2 product is the level-2A (L2A) one. Level-1A products are decompressed level-0 products and are further radiometrically corrected to produce Level-1B products containing top-of-atmosphere (TOA) radiances (Gascon et al. Citation2017). Level-1C products are generated from level-1B ones, they contain TOA reflectance values, they are resampled to Universal Transverse Mercator (UTM) CRS, orthorectified, and are, unlike products from previous levels, publicly and freely accessible (Gascon et al. Citation2017). Level-2A products are produced from the level-1C products by converting TOA to the bottom-of-atmosphere (BOA) reflectance, thus minimizing atmospheric influences on the acquired data (Gascon et al. Citation2017). Sentinel-2 L2A tiles are accordant with minimal requirements of CARD4L-SR (short for CEOS (Committee on Earth Observation Satellites) analysis-ready data for land for surface reflectance products) specification (CEOS WGCV Citation2020a), meaning they can be analysed with minimal additional processing and should be interoperable with other CARD4L-SR datasets.

Some deficiencies of using Sentinel-2 images for generating land cover products are a lack of thermal bands and panchromatic band, uneven spatial resolution between bands, and also geolocational sensor-to-sensor misalignment with Landsat 8 images if they both want to be used in the same workflow (Phiri et al. Citation2020).

3.2.2. Sentinel-1 mission

Sentinel-1 mission is also a space component of the Copernicus program. Two Sentinel-1 satellites have been launched: Sentinel-1A in 2014 and Sentinel-1B in 2016 (Schubert et al. Citation2017). While Sentinel-1A is still fully operational, Sentinel-1B suffered an unrecoverable malfunction at the end of 2021 and ended its mission (ESA Citation2022a). To mitigate this loss, ESA announced speeding up the process of the Sentinel-1C satellite launch (ESA Citation2022a).

Sentinel-1 satellites are orbiting in a sun-synchronous orbit at 693 km altitude, they need 12 days to achieve global image coverage and are equipped with a C-band synthetic aperture radar (SAR) sensor (Geudtner et al. Citation2014; Schubert et al. Citation2017). Sentinel-1 satellites have four operational modes that provide different spatial resolutions and coverages: interferometric wide swath (IW, roughly 5 × 20 m resolution and 250 km wide swath), extra wide swath (EW, roughly 20 × 40 m resolution and 400 km wide swath), StripMap (SM, roughly 5 × 5 m resolution and 80 km wide swath), and Wave (WV, roughly 5 × 5 m resolution, 20 × 20 km scenes), which is the only mode that cannot operate in dual polarization (HH/HV or VV/VH; HH is short for horizontal transmit and horizontal receive, VH for vertical transmit and horizontal receive, and HV and VV analogously), but only in single (HH or VV; Geudtner et al. Citation2014; Miranda et al. Citation2017). Different imaging modes are utilized for different parts of the world, where IW and EW are the main imaging modes (Miranda et al. Citation2017). The observation scenario, which defines imaging modes that will be used for different parts of the world, is not constant and is changing based on observation requirements posed by the evolution of Copernicus services, requirements of ESA or EU member states, or by scientific or international initiatives (Potin et al. Citation2015).

Acquired data from each imaging mode are downlinked to the ground stations and processed to produce level-1, and level-2 products (Bourbigot, Harald, and Piantanida Citation2016). Level-1 products are slant range single look complex (SLC) and ground range, multi-look, detected (GRD), while level-2 are ocean (OCN) products that have three components: Ocean Swell Spectra (OSW), Ocean Wind Field (OWI), and Radial Velocity (RVL; Bourbigot, Harald, and Piantanida Citation2016).

OCN products, since they are mainly intended for applications on the sea, are not used as input data for land cover classification, while GRD Sentinel-1 images are. For example, for the production of ESA WorldCover and ELC-10 land cover products, GRD images were used as input data (Van De Kerchove et al. Citation2021; Venter and Sydenham Citation2021). SLC products are mostly not used for spectral-based classification. Some of the reasons are their more complex processing, multiple times bigger file sizes, and little additional useful information compared to GRD data (Hansen, Mitchard, and King Citation2020), however, there are also some examples of their utilization (Mohammadi et al. Citation2020). There are also examples of using SLC products for land cover classification based on polarimetric classification, and not on the spectral one, such as the one by Roychowdhury (Citation2016). Roychowdhury (Citation2016) classified urban areas into four broad classes (built-up, water bodies and wetlands, vegetation, and open spaces) and achieved higher overall accuracy with a polarimetric unsupervised classification of SLC data than with supervised and unsupervised spectral classification of GRD data.

GRD and SLC products both require preprocessing before they can be used and are not considered CEOS-ARD according to the CEOS WGCV (short for Working Group on Calibration and Validation) specifications. ESA does not currently provide CEOS-ARD for Sentinel-1, but a new product that will be following CARD4L-NRB (CEOS analysis ready data for land for normalized radar backscatter products) specification (CEOS WGCV Citation2021) is currently under development (ESA Citation2022b). However, ESA has provided the Sentinel Application Platform (SNAP), a free and open-source application that enables the processing of Sentinel-1 images, and also Sentinel-2 and Sentinel-3 ones (Weiß and Fincke Citation2022). Sentinel-1 images can be preprocessed with the Sentinel-1 toolbox (S1TBX) that is available in SNAP (Sumbul et al. Citation2021).

Mullissa et al. (Citation2021) proposed a procedure for generating Sentinel-1 ARD on Google Earth Engine (GEE) from Sentinel-1 GRD scenes that are already preprocessed to a certain degree and available on GEE. Private company Sinergise offers a tool for generating CARD4L-NRB data from Sentinel-1 GRD images: Sentinel Hub CARD4L Generation Tool (Dyke et al. Citation2021). The tool is accessible at https://apps.sentinel-hub.com/s1-card4l/, but it is not freely available. Digital Earth Africa program partnered with Sinergise to produce the first continuously updating free and open continental CARD4L-NRB Sentinel-1 dataset available for Africa only (Yuan et al. Citation2022).

3.2.3. PROBA-V mission

PROBA-V satellite, equipped with VEGETATION (VGT) optical instrument and five other non-scientific instruments, was launched in 2013 (Wolters et al. Citation2018; ESA Citation2022c). In 2020, the VGT instrument ended its operational mission, but the PROBA-V satellite is still flying since other instruments remained operating (ESA Citation2021). The main objective of the PROBA-V mission was to ensure the continuity of the SPOT-VEGETATION mission until the launch of Sentinel-3 satellites (Wolters et al. Citation2018), which was more than fulfilled. PROBA-V satellite is flying in a sun-synchronous orbit at an altitude of 820 km (Wolters et al. Citation2018). VGT has four spectral bands including blue, red, NIR, and SWIR bands (Dierckx et al. Citation2014). It consists of three cameras that jointly provide 2-day global coverage, while the main camera that provides images with the highest spatial resolution ensures 5-day global coverage (Wolters et al. Citation2018). Spatial resolution is higher for the blue, red, and NIR bands and lower for the SWIR band, ranging at nadir from 100 to 180 m, and at swath edges, from 350 m to 660 m (Francois et al. (Citation2014), as cited in Wolters et al. (Citation2018)). PROBA-V products are available in three spatial resolutions: 100 m, 300 m, and 1 km; and in different product levels ranging from raw unprojected level-1C products to level-3 atmospherically corrected top-of-canopy (TOC) products (Wolters et al. Citation2018).

For producing CGLS-LC100, the complete level-1C data archive was reprocessed with enhanced atmospheric and a new geometric correction (Buchhorn et al. Citation2021). Generated TOC daily-synthesis data with a spatial resolution of 100 m were used as a primary source of earth observation (EO) data, and 300 m resolution images as a secondary one (Buchhorn et al. Citation2021).

3.2.4. Landsat program

The first moderate-resolution earth surface observations under the Landsat program were performed with the Landsat 1 satellite that was launched in 1972 (Markham et al. Citation2016). Ever since 1972, the Landsat program has been providing multispectral images with global coverage. The latest Landsat satellite, Landsat 9, which is a joint project of the National Aeronautics and Space Administration (NASA) and the United States Geological Survey (USGS), was launched in 2021 (Showstack Citation2022) and is almost a replica of its predecessor Landsat 8 that was launched in 2013 (Markham et al. Citation2016). Landsat 8 satellite was the first one of the Landsat satellites that was equipped with push-broom instruments (Markham et al. Citation2019), while previous ones were equipped with whisk-broom ones (Barsi et al. Citation2016).

Landsat 9 carries the second Operational Land Imager (OLI-2) and second Thermal Infrared Sensor (TIRS-2), and they have almost the same characteristics as OLI and TIRS instruments onboard Landsat 8 (Markham et al. Citation2019). OLI and OLI-2 observe the surface of the Earth in nine spectral bands: three from the visible part of the spectrum that corresponds to the red, green, and blue wavelengths, one from NIR and two from SWIR parts of the spectrum, ‘cirrus’ band, ‘coastal aerosol’ band, and one panchromatic that covers almost the entire visible part of the spectrum (Markham et al. Citation2019). All OLI and OLI-2 bands have a spatial resolution of 30 m, except the panchromatic band, which has a 15 m resolution (Markham et al. Citation2019). TIRS-2 is a more reliable version of the TIRS instrument, with eliminated performance problems that were noticed on TIRS (Markham et al. Citation2016). TIRS and TIRS-2 instruments have two bands covering the thermal infrared part of the spectrum and have a 100 m spatial resolution (Markham et al. Citation2019).

Landsat 4-9 satellites are deployed in a sun-synchronous orbit at an altitude of 705 km (Masek et al. Citation2020; NASA Citation2021). Landsat 8 and 9, currently only operational Landsat satellites (Markham et al. Citation2016; Landsat 7 ended its nominal science mission in April 2022 (USGS Citation2022b)) need 16 days to capture the entire surface of the Earth with OLI and TIRS instruments (Masek et al. Citation2020). Landsat 9 satellite, which took the place of Landsat 7, is shifted in phase by 8 days from Landsat 8 and is thus jointly with Landsat 8 providing global coverage every eight days (Masek et al. Citation2020).

The USGS is distributing Landsat images after conducting various types of processing. In 2016 all Landsat images were reprocessed into the so-called collection-1 dataset in order to ensure that images are suitable for time-series analysis and compositing (USGS Citation2019). All collection-1 products were removed from the USGS archives and are not accessible through USGS platforms as of the end of 2022 (USGS Citation2022a). The entire Landsat archive was again reprocessed using consistent radiometric and geometric algorithms to the collection-2 dataset that became available in late 2020 (Choate et al. Citation2021). One of the main reasons for producing the collection-2 dataset is to improve its geolocation accuracy (Dwyer et al. Citation2018) and harmonize Landsat and Sentinel-2 missions by improving their pixel-based positional alignment (Masek et al. Citation2020. Harmonization between Sentinel-2 and Landsat 8/9 data is particularly interesting because they jointly provide a global average median revisit interval for all four sensors (MSI on Sentinel-2A and Sentinel-2B, OLI, and OLI-2) of 2.3 days (Li and Chen Citation2020). Collection-2 consists of level-1 (TOA reflectance for complete Landsat archive) and level-2 (surface reflectance and surface temperature for Landsat 4-9) products that are divided into so-called tier-1 and tier-2 categories (USGS Citation2021). Images are placed in the tier-1 category if their geometric root-mean-square triangulation error (RMSE) is below 12 m and in tier-2 otherwise (Masek et al. Citation2020). Collection-2 is being continuously updated with new Landsat 8 and Landsat 9 data as they are being acquired (USGS Citation2021).

Collection-2 level-2 images are ARD products that are compliant with CARD4L-SR and CARD4L-ST (CEOS analysis-ready data for land for surface temperature products) specifications (CEOS WGCV Citation2020a, Citation2020b). USGS is also delivering the so-called U.S. ARD for Conterminous United States (CONUS), Hawaii, and Alaska from the Landsat 4-9 level-1 images (Dwyer et al. Citation2018; USGS Citation2022c). Another already mentioned global surface reflectance Landsat ARD (but not a CEOS-ARD) dataset is being produced by the GLAD from Landsat data acquired from 1997 to the present (Potapov et al. Citation2020). These GLAD Landsat ARD images were used to produce ODSE-LULC land cover product.

3.3. Auxiliary data

Auxiliary data can be used as input for classification, along with optical and/or radar data, or they can be used to enhance classification accuracy after classification was performed. For example, in the production of ESA WorldCover, altitude and slope extracted from the digital elevation model were used as additional features included in the classification phase, while OpenStreetMap and GHSL data, among others, were used as a support in making the final decision on land cover class for each pixel (Van De Kerchove et al. Citation2021).

Auxiliary data often need certain preprocessing before they can be included in some of the phases of creating land cover products. Some of that preprocessing include rasterizing vector data, reprojecting data to the common CRS, extracting only a useful subset of data, harmonizing different classification schemes, etc. Including auxiliary data can greatly improve classification accuracy, as shown by Venter and Sydenham (Citation2021). Khatami et al. (Citation2016) also showed that the inclusion of auxiliary data could significantly increase classification accuracy, much more than including spectral indices.

3.4. Training and validation samples

Almost all currently used land cover classification techniques are supervised, meaning they are heavily dependent on an often large volume of training data. Those data are used to train the classification algorithm, which then classifies the so-called never-before-seen data in the corresponding class. To validate classification results, an additional validation dataset is also required. The validation dataset is most often acquired at the same time as the training one but is not used in the training phase. Wulder et al. (Citation2018) state that training and validation data can be acquired by in-situ sampling, by creating samples using higher resolution aerial or satellite images, from pre-existing suitable non-remote sensing data, from other available land cover data, and more recently, by exploiting volunteered geographic information (VGI), or more advanced techniques including adaptive based learning.

Currently, training and validation samples are most often acquired by assigning a land cover class to each pixel by making a comparison to adequate higher spatial resolution remote sensing images. The process of creating a completely new training and validation dataset this way, because it is often almost entirely manual, is the most time-consuming step in the land cover production workflow. The quality and design of the sampling procedure are often directly correlated with the overall classification accuracy.

In some cases, already existing datasets are used for training and validation. The obstacle that often needs to be overcome in this case is an incompatibility between the classification scheme used in the production of particular existing training datasets and the scheme defined for a land cover product that should be created based on that dataset. One example of a dataset that was not primarily created for training and validation of land cover classification products (d’Andrimont et al. Citation2021), but is becoming increasingly used for it, is the Land Use/Cover Area frame Survey (LUCAS) data. LUCAS land cover and land use statistical data are collected by in situ surveys that are cyclically repeated within the EU countries every three years since 2006 (d’Andrimont et al. 2021). Before 2018, LUCAS data were collected and delivered in the form of points with assigned, among other data, land cover and land use information. The dataset from 2018, unlike previous ones, additionally contains the so-called LUCAS Copernicus module, which is specifically designed to be suitable for EO applications (d’Andrimont et al. 2021).

Another example of using existing land cover products is applied in the production of S2GLC. As already mentioned, HRLs and CLC were used as data sources for generating training samples. Training samples were generated by applying a set of rules on HRLs, CLC, and indices extracted from Sentinel-2 images for each of the 13 land cover classes (Malinowski et al. Citation2020). Those rules, which can be fully automated, were applied to a couple of Sentinel-2 images, and then up to 1000 training samples for each land cover class were randomly extracted (Malinowski et al. Citation2020). Finally, as Malinowski et al. (Citation2020) explain, those samples were used to classify each previously selected Sentinel-2 image from 2017 with an RF classifier. Although it can be fully automated, this classification workflow can also be considered a supervised one since it uses a classifier that requires a training dataset. The difference between this workflow and classical supervised classification is that the training dataset is not acquired manually but automatically.

Recently, a couple of datasets for training deep learning models for land cover classification have become available. Helber et al. (Citation2017) created a dataset consisting of 27,000 labelled Sentinel-2 patches that are suitable for DL scene-based land cover classification (available at https://zenodo.org/record/7711810#.ZAm3k-zMKEA). Sumbul et al. (Citation2021) generated the BigEarthNet-MM dataset that contains more than 590,000 pairs of Sentinel-1 and Sentinel-2 patches from 10 European countries, also for scene-based DL studies (available at https://bigearth.net/). Benhammou et al. (Citation2022) provided Sentinel2GlobalLULC, a global Sentinel-2-based dataset, again for scene-based land cover classification (available at https://zenodo.org/record/6941662). The dataset that is also freely available and is suitable for pixel-based DL land cover classification is a dataset that was used for creating Dynamic World land cover product (provided by Tait et al. (Citation2021)).

When considering training and validation samples, the Geo-Wiki platform (available at https://www.geo-wiki.org/) should also be mentioned. Although the Geo-Wiki platform now provides many different applications, the main one is still the Geo-Wiki application that is used for crowdsourcing training, calibration, and validation data for producing land cover datasets (S. Fritz et al. Citation2012). Fritz et al. (Citation2017) combined data collected via the Geo-Wiki application from four campaigns and created a single land cover and land use dataset for the validation and training phase of producing land cover maps.

The Geo-Wiki app was used in the production workflow of CGLS-LC100 (Buchhorn, Lesiv, et al. Citation2020) and ESA WorldCover (Tsendbazar, Li, et al. Citation2021). In the production of the training dataset for the Dynamic World land cover product, the Labelbox platform (available at https://labelbox.com/) was used as a tool for drawing vector polygons, i.e., annotating land cover classes on Sentinel-2 tiles for deep learning classification (Brown et al. Citation2022).

3.5. Land cover classification

Classification is, in this sense, a process of determining the most probable land cover class for each pixel. This research is mostly limited only to the pixel-based supervised classification which uses spectral information acquired by multispectral sensors as a main input data source. Classification is carried out in the so-called feature space, where each pixel corresponds to one point whose coordinates are determined by feature values assigned to that pixel. Features are variables that are used as input for classification and are assigned to each pixel, such as bands from multispectral images, vegetation indices extracted from them, auxiliary data, and all other data that should help distinguish different land cover classes. Originally, only bands from multispectral images were used for classification since it is expected that similar land cover should have similar spectral characteristics (i.e., they should similarly interact with electromagnetic (EM) waves). Additional features that are included in classification should also follow that principle: similar land cover should have similar ‘behaviour’ for a particular feature. Dimensions of feature space are equal to the number of features. It is expected that points in feature space corresponding to a single land cover class should be relatively close to each other. The classification algorithm has a task to determine the delineation between classes in feature space and then assign a class to every point, and consequently to every pixel. To delineate classes, a supervised classification algorithm requires a training dataset that consists of pixels, i.e., corresponding points in feature space for which land cover class is already determined.

As already stated, this paper limits research to pixel-based supervised classification. This kind of satellite image classification is inductive (Raiyani et al. Citation2021), meaning that it is based on drawing conclusions for the whole dataset from just one subsample of it. As already discussed although there are other classification techniques, such as GEOBIA which shows a tendency to achieve high classification accuracy and unsupervised algorithms, pixel-based supervised classification techniques are still the most widely used ones (Phiri et al. Citation2020), especially for producing large-scale land cover products that are based on moderate resolution remote sensing images. There are multiple classification algorithms, some of which are mentioned in the following section.

3.5.1. Classification algorithms

The two most often used metrics to compare classification algorithms (i.e., classifiers) are classification accuracy and the time needed for performing classification. When comparing classification algorithms based on these two metrics, it should be ensured that all other variables that influence accuracy and processing time remain unchanged. This for example means that input data and training and validation datasets remain unchanged. Many papers have been comparing different classifiers for land cover classification on satellite images, some of which are mentioned below.

Khatami et al. (Citation2016) found that different studies report disagreeing results concerning achieved accuracies with different classifiers which provoked them to make further investigation and to draw some general conclusions. They did not limit their research only to the influence of classification algorithm choice on classification accuracy but also to preprocessing methods applied to input data. Meta-analysis was run on 157 peer-reviewed articles by pairwise comparing overall accuracies achieved by different classifiers, with a drawback that it included only articles published before 2012 (Khatami, Mountrakis, and Stehman Citation2016). That is rather outdated, but some meaningful insights can be drawn out. Based on a meta-analysis, Khatami et al. (Citation2016) concluded that Support Vector Machines (SVM) classifier outperformed the Maximum Likelihood classifier (MLC) in 28 out of 30 studies and the Neural Network algorithm in 14 out of 22 studies with a tendency to have a better performance when spatial and spectral resolutions are higher. SVM also achieved higher overall accuracy (OA) than the Random Forest (RF) classifier. Another finding is that K-Nearest Neighbor, although a very simple algorithm, slightly outperformed MLC in 12 out of 17 studies (Khatami, Mountrakis, and Stehman Citation2016).

Phiri et al. (Citation2020) investigated the findings of multiple articles concerning the usage of Sentinel-2 images for land cover mapping, including the comparison of different algorithms used for that purpose. They found out that machine learning (ML) algorithms dominate, including RF, KNN, SVM, and Bayes, and more sophisticated Convolutional Neural Networks (CNN). It is interesting to notice that most of the land cover products that are listed in were produced by a random forest classifier, which supports the conclusion of Phiri et al. (Citation2020) which denotes it as the most commonly used classifier.

Many researchers investigated land cover classification accuracies using different classifiers but within a limited geographical area. This is one of the problems identified by Small (Citation2021), stating that in many articles, it is not clear if the methodology being developed is suitable for general application or just in the area in which it was applied. Nevertheless, these articles can be a valuable source of information when choosing which classifier should be applied.

Talukdar et al. (Citation2020) examined land cover classification accuracies for six machine learning algorithms, specifically Artificial Neural Network (ANN), RF, Fuzzy Adaptive Resonance Theory-Supervised Predictive Mapping (Fuzzy ARTMAP), SVM, Spectral Angle Mapper (SAM), and Mahalanobis distance (MD). The classification was performed on Landsat 8 images with a conclusion that, although no major classification accuracy differences were obtained between all used classifiers, the highest accuracies were achieved with RF, ANN, and SVM, in that order, from the most accurate one (Talukdar et al. Citation2020). Talukdar et al. (Citation2020) also list a dozen of research that dealt with the comparison of classification accuracies achieved by different classifiers and concluded that in most papers RF, ANN, and SVM were reported as the most accurate classifiers. Finally, Talukdar et al. (Citation2020) point out that the analysis of classifier accuracies in different climatic regions still has to be done.

Artificial Neural Network (ANN) is a non-parametric classification technique (Talukdar et al. Citation2020), meaning that it does not presume the nature of data distribution (Vali, Comai, and Matteucci Citation2020). The ANN is constructed in a similar way to the human nervous system, it consists of connected nerve fibres (Talukdar et al. Citation2020). One of the advantages of the ANN classifier is its ability to generate useful results even if training data are incomplete or erroneous. The ANN contains three types of layers: the input layer in which each node represents one feature in a dataset that has to be classified (for example, each spectral band or auxiliary dataset), the output layer, in which each node corresponds to one class, and hidden layers that is between those two layers and that consists of a user-defined number of layers and nodes within them (Shih, Stow, and Tsai Citation2019). In general, nodes from the input layer are connected with nodes in the hidden layer which are connected with nodes in the output layer. Weight is assigned to each connection and that weight is being adjusted in the training phase. In the training phase, for pixels that have known both, input and output values, weights of connections are being adjusted so that most of the training samples are correctly classified in the appropriate class, i.e., so that discrepancies between network-generated classes and classes assigned to training samples are minimized (Shih, Stow, and Tsai Citation2019; Talukdar et al. Citation2020). When ANN is trained, it can be used to classify new data in corresponding classes. Two main parameters that have to be defined by the user are the learning rate (i.e., the amount of weight change in each iteration) and the number of iterations (Shih, Stow, and Tsai Citation2019). Both parameters influence the classification accuracy and the best way to define their optimal values is to apply a trial-and-error approach (Talukdar et al. Citation2020).

Support Vector Machines (SVM), which is also a non-parametric method (Talukdar et al. Citation2020), applies a more traditional approach by delineating classes in feature space. The training dataset is used to determine hyperplanes that separate one class from another (Shih, Stow, and Tsai Citation2019). The user has to define two hyperparameters: C and gamma (Talukdar et al. Citation2020). The value of the C hyperparameter has to be carefully chosen since the wrong value can lead to model overfitting or underfitting because it presents a compromise between class separation and training error (Rumora, Miler, and Medak Citation2020). The gamma hyperparameter controls the shape of the hyperplanes and in that way affects the obtained accuracy.

Random Forest (RF), also a non-parametric algorithm (Talukdar et al. Citation2020), is a machine learning method that is based on Decision Trees (DT; Masiliūnas et al. Citation2021) and that was developed by Breiman (Citation2001). RF model requires two main parameters: the number of trees and the number of features that, when reached, will initiate a split in DT (Malinowski et al. Citation2020). Random forest algorithm is also considered an ensemble-based machine learning algorithm in which each tree in the forest gives a vote on class and the final class is determined based on the majority of votes from all trees (Shih, Stow, and Tsai Citation2019; Talukdar et al. Citation2020). RF is more immune to overfitting and errors in training samples than other algorithms are (Malinowski et al. Citation2020). Burgueño et al. (Citation2023) compared ANN and RF algorithms and found that RF yields better results because it better classifies spectrally heterogeneous classes.

3.6. Validation of land cover products

For a land cover product to be useful to the end user, it is required to provide convenient information about its quality. Validation of land cover products is a necessary part of the production process. Its importance is affirmed by the fact that two documents usually accompany most land cover products: one describing production workflow and communicating general information about the land cover product, and another reporting validation results.

Validation is mostly done by comparing classification results with the validation dataset. The validation dataset is usually acquired in the same way as the training dataset with the main difference being that the validation dataset is not used for training the classifier. In any case, the validation dataset must have an accuracy much higher than the expected accuracy of the land cover product. By comparing classification results, i.e., land cover classes that are assigned to pixels, with the validation dataset (considered true data), it is possible to calculate different measures that report classification quality. The metric that is most often used and that is easy to calculate is overall accuracy. Overall accuracy reports the percentage of correctly classified pixels from all pixels included in a validation dataset.

CEOS WGCV Land Product Validation (LPV) Subgroup has a mission to standardize quantitative validation of products (not only land cover ones) derived from different satellite sensors and obtained by different algorithms (CEOS WGCV LPV Subgroup Citation2022). CEOS WGCV LPV Subgroup defines five validation stages, where each stage has more restrictive requirements than its predecessor (CEOS WGCV LPV Subgroup Citation2022). Stage-0 does not require any validation, while stage-1 requires accuracy assessment on not more than 30 locations and time periods (CEOS WGCV LPV Subgroup Citation2022). For stage-2, it is necessary to conduct accuracy estimation with a validation dataset including more than 30 spatiotemporal locations and to assess spatiotemporal product consistency and consistency with other similar products (CEOS WGCV LPV Subgroup Citation2022). In addition to the requirements of stage-2, stage-3 requires that validation assessment is quantitively expressed and based on procedures that are well acquainted amongst the validation community (CEOS WGCV LPV Subgroup Citation2022). Finally, stage-4 requires that whenever a new version of the product or a new iteration for time series products is available, stage-3 results for a particular product are systematically updated (CEOS WGCV LPV Subgroup Citation2022).