Abstract

Despite the significant development of creativity studies, individual creativity research has not reached a meaningful consensus on the most valid and reliable method for assessing individual creativity. This study revisited two of the most popular approaches to assessing individual creativity: subjective and objective methods. Analyzing data from 1,500 individuals, it investigated whether the choice of assessment method affects the measurement outcomes of individual creativity. Findings indicated that subjective assessments have a smaller variance, a higher mean, and a moderate but significant correlation with objective assessments. These differences may be driven by social desirability, the consistency motif, illusory superiority, and leniency biases. Based on these findings, this study highlights the need to acknowledge how subjective and objective assessment methods may affect individual creativity assessment outcomes.

The assessment of creativity is one of the central topics in individual creativity research (e.g., Kaufman, Plucker, & Baer, 2008; Puccio & Murdock, 1999). The growth of creativity studies has led to the development of many assessment methods, which explore diverse aspects of individual creativity, ranging from personal to contextual factors. Despite significant progress over the last few decades, debate continues over the reliability and validity of creativity assessment methods (e.g., Cropley, 2000; Hocevar, 1981; Runco, 1986; Wallach & Kogan, 1965). As Plucker and Makel (2010) acknowledged, creativity assessment is among the most debated topics in creativity studies.

Individual creativity assessment methods are often classified as subjective or objective, depending on their characteristics and measurement tools. Many previous studies on individual creativity used subjective and objective methods interchangeably, without clearly distinguishing possible differences in their measurement outcomes. Although both subjective and objective methods have their own merits, previous studies suggested that careful attention to measurement and methodological issues remains important for advancing research on theoretical relationships between constructs (Venkatraman & Grant, 1986; Venkatraman, 1989). Specifically, they recommended developing methods that integrate the merits of both objective and subjective approaches. Regarding subjective assessment, researchers advised combining it with objective methods to correct for possible bias and obtain a clearer picture of individual creativity (Madjar, Oldham, & Pratt, 2002; Shin, Kim, Lee, & Bian, 2012). Regarding objective assessment, researchers argued that it may fall short of fully capturing the dynamic interplay of the various factors of creativity (Elsbach & Kramer, 2003). They further criticized most standardized tests for not paying sufficient attention to important dimensions such as out-of-the-box thinking, originality, and flair (Elsbach & Kramer, 2003; Reich, 2001). The complex, multidimensional nature of creativity calls for a more comprehensive approach to its assessment (Cropley, 2000; Feldhusen & Goh, 1995; Plucker & Makel, 2010).

Therefore, this study aimed to investigate how different assessment methods affect the measurement outcomes of individual creativity. Specifically, it examined whether subjective and objective assessment methods lead to the same measurement outcomes and, if not, how they differ. To address these questions, data were collected from 1,500 individuals in South Korea, and both subjective and objective assessment methods were used to assess each individual's creativity. Findings indicated that subjective and objective methods produced significantly different assessment results. Specifically, the subjective assessment method showed a higher mean and a smaller variance than the objective method. This study also found that the correlation between the two assessment methods was significant, but not as high as expected. Such moderate correlations between subjective and objective assessments could weaken the robustness of research conclusions. Overall, this study suggests that the quality of the assessment methods used in empirical research may play a crucial role in linking theory to empirical tests.

TRIANGULATION OF SUBJECTIVE AND OBJECTIVE ASSESSMENT METHODS FOR INDIVIDUAL CREATIVITY

Attempts to develop better assessment methods have taken many forms, such as addressing perceived weaknesses of existing measures or incorporating new dimensions believed to affect creativity (e.g., Plucker & Makel, 2010; Plucker & Runco, 1998; Schoenfeldt & Jansen, 1997). Previous studies have repeatedly acknowledged the need for methodological triangulation (e.g., Madjar et al., 2002; Perry-Smith, 2006; Runco, 1986; Shalley, Gilson, & Blum, 2000). Triangulation refers to the combination of multiple methodologies in the study of the same phenomenon for the purpose of better research design (Denzin, 1970; Mathison, 1988). One possible triangulation approach for individual creativity assessment concerns the characteristics of the assessment methods themselves, specifically whether they are subjective or objective. A widely accepted criterion for distinguishing objective from subjective creativity assessment methods is how creativity is measured and by whom. The two may also be better understood as opposite ends of a continuum rather than as completely distinct methods. Thus, in this study, the terms objective and subjective are relative: a method is called objective (or subjective) when it has more objective (or subjective) qualities than the other methods considered. Subjective assessment methods are more qualitative and involve analyzing data from direct fieldwork observations, surveys, interviews, and written documents. Objective methods, on the other hand, involve more quantitative analyses, that is, systematic empirical investigation through statistical, mathematical, or numerical approaches (Patton, 1990). Developing objective creativity assessment methods often requires a significant investment of resources, including the lengthy processes of designing assessment structures, developing grading systems, training research administrators, and sometimes developing computer algorithms.

To review the strengths and weaknesses of each method, this study first selected three of the most representative and widely accepted assessment methods, each with predominantly subjective or objective characteristics. Each method is then examined in greater depth, followed by a discussion of key issues.

Self-Report

Previous studies argued that individuals themselves are most likely to be aware of subtle tasks and of the occurrence and frequency of certain creative behaviors (Janssen, 2000; Ng & Feldman, 2012). The self-report method directly asks participants to judge their own creativity-related abilities, perceptions, or creative performance. Self-reports usually rely on relatively simple questionnaires that are fairly easy to administer. As Janssen (2000) argued, some aspects of the creative person, such as perceptions, beliefs, self-concepts, and past behaviors, may not be known to peers or supervisors unless the individual engages in impression management. Thus, self-reports are sometimes the only available and reliable method for assessing certain aspects of creativity (Amabile, 1996; Kaufman & Baer, 2004). Well-regarded self-report instruments include Gough's (1979) Creative Personality Scale (CPS), Amabile's (1996) KEYS (Assessing the Climate for Creativity), and Hocevar's (1979) and Kirschenbaum's (1989) Creative Behavior Inventory (CBI).

On the subjective/objective assessment scale, self-reports are the most subjective of the three methods, as they rely solely on respondents' judgments of themselves. Numerous studies have shown significant correlations between self-reports and other measures of creativity, such as divergent thinking (Barron, 1955; Batey & Furnham, 2006; Carson, Peterson, & Higgins, 2005; Gough, 1979). For example, Axtell et al. (2000) found that the correlation between creativity indexes measured by self-reports and by supervisor evaluations was significant and as high as .62. However, the self-report method has not escaped criticism: it has been described as providing at best 'soft data' and as prone to misinterpretation (Podsakoff & Organ, 1986). Some studies suggested that self-reports are vulnerable to bias arising from social desirability and the consistency motif (Podsakoff, MacKenzie, Lee, & Podsakoff, 2003).

Supervisor Evaluation

Previous studies recognized that supervisors are well positioned to recognize creativity in certain domains. Domain specificity is an important reason for using supervisor evaluation: supervisors are among those most familiar with the domain and possess the expertise to judge creativity within it (Hocevar, 1981). Supervisor evaluation has often been used as an assessment tool in field studies in organizational settings (George & Zhou, 2002; Oldham & Cummings, 1996; Scott & Bruce, 1994). For example, Oldham and Cummings (1996) developed a 3-item scale to assess creative performance, with questions such as "How original and practical is this person's work?" (p. 634) rated on a 7-point Likert-type scale. Similarly, Zhou and George (2001) developed a 13-item scale with questions such as "Is this person a good source of creative ideas?" and "Does this person come up with creative solutions to problems?" (p. 696). Zhou and Shalley (2003) noted that although these scales share many similarities, they also differ, emphasizing different aspects such as innovation, creative outcomes, and creative processes.

On the subjective/objective assessment scale, supervisor evaluation is more objective than self-report but still has subjective qualities, as it relies on supervisors' subjective judgments. Supervisor evaluations are also relatively simple and easy to administer. Moreover, because supervisors have domain-specific knowledge, their evaluations allow for a broad assessment of the employee's creativity, providing rich and comprehensive descriptions (Zhou & Shalley, 2003). Supervisors' ratings have been found to correlate significantly with archival measures (Scott & Bruce, 1994). However, supervisor evaluation is not free from potential biases, such as those arising from demographic characteristics, supervisory liking, halo effects, systematic bias, and social desirability (Gilson, Mathieu, Shalley, & Ruddy, 2005; Landy & Farr, 1980; Madjar et al., 2002). Some researchers criticized this method on the grounds that supervisors are not well suited to rating complex creative processes or attributes, which are less apparent than the more visible outcomes of objective performance (Janssen, 2000; Organ & Konovsky, 1989).

Expert Evaluation

Experts are those who have specialized knowledge in a certain domain but do not have a supervisor–employee relationship with the examinee. For example, experts may include panels of specialists in the relevant domain, such as judges or reviewers, and professionals with advanced degrees (Amabile, 1983, 1996; MacKinnon, 1962). Creative outputs such as patent disclosures, awards, or research papers all involve evaluation by various experts (Amabile, 1996; Oldham & Cummings, 1996; Scott & Bruce, 1994; Tierney, Farmer, & Graen, 1999). Experts also include trained raters who grade standardized creativity tests such as the Torrance Tests of Creative Thinking (TTCT; Torrance, 1974). These raters follow strict standardized guidelines to ensure objective assessment of creativity.

On the subjective/objective scale, expert evaluation is the most objective of the three methods. Expert evaluation usually relies on quantitative indexes, such as the number of patents or scores on a divergent thinking test, which can ensure greater objectivity, allow easier comparison across examinees, and mitigate the bias problems of more subjective assessment methods (e.g., Madjar et al., 2002; Perry-Smith, 2006; Zhou & Shalley, 2003). In particular, standardized tests are recognized as among the most effective tools for assessing the cognitive processes of creativity (e.g., Guilford, 1965; Plucker & Runco, 1998). Divergent thinking, which is frequently assessed through standardized tests, is one of the most extensively studied topics in creativity research (Runco, 2010). However, expert evaluation has several critical weaknesses. First, scholars have questioned the definition of experts: "Who can rightfully be considered experts of the domain?" (Kaufman & Baer, 2012, p. 84). Moreover, some objective creativity indicators, such as patents and awards, may be relevant only for limited areas and industries (Zhou & Shalley, 2003). Finally, despite considerable evidence of interrater reliability, some studies argued that high interrater reliability alone is not sufficient to ensure assessment validity (Kaufman & Baer, 2012).

Despite the variety of approaches to assessing creativity, current creativity studies do not seem to draw a clear-cut distinction between subjective and objective assessment, using the two methods rather interchangeably. This study questioned this assumption of interchangeability and developed hypotheses addressing why subjective and objective assessment methods could generate different outcomes. When assessing individual creativity, subjective assessment methods may be inherently exposed to at least two critical biases: social desirability and the consistency motif, also referred to as cognitive consistency (Podsakoff & Organ, 1986). Social desirability bias refers to measurement error attributable to individuals' tendency to over-report socially desirable personal characteristics and under-report socially undesirable ones (Arnold & Feldman, 1981; Taylor, 1961; Thomas & Kilmann, 1975). This bias often prompts responses that present the person in a more socially favorable light. For example, in a study on self-confidence and creativity, Goldsmith and Matherly (1988) found evidence of possible systematic bias in creativity and self-confidence scales among male students. They explained that, compared with women, men were inclined to present themselves in what they considered a socially desirable, masculine manner. The consistency motif describes the tendency to maintain consistency between cognitions and attitudes (Heider, 1958; Osgood & Tannenbaum, 1955). Respondents participating in evaluations often wish to appear consistent and rational. They tend to search for similarities among the questions asked and may produce relationships that would not otherwise exist in real-life contexts (Podsakoff et al., 2003).

Coupled with social desirability, this motif may lead respondents to maintain a consistent line across a series of answers that they believe are socially favorable. This tendency is likely to be more pronounced when respondents believe that socially mediated rewards (or punishments) are significant (Fisher, 1993).

Because of these tendencies, subjective assessment outcomes of individual creativity are likely, compared with objective assessment outcomes, to show a frequency distribution with more values near the mean, concentrated within a certain 'preferred' range of scores. Thus:

Hypothesis 1:

The variance of subjective assessment measures of individual creativity will be smaller than the variance of objective assessment measures of individual creativity.

Compared with objective assessment methods, subjective assessment methods may lead respondents to overestimate themselves because of illusory superiority and leniency biases. Illusory superiority, also identified as a sense of relative superiority (Headey & Wearing, 1988) or the above-average effect (Dunning, Meyerowitz, & Holzberg, 1989), describes the tendency to regard oneself, or the members of one's group, as above average (Brown, 1986). Leniency bias refers to the tendency of evaluators to rate themselves or others more highly than warranted (Guilford, 1954). Leniency bias may be a more serious problem for certain raters, such as those who tend to give higher marks overall than other judges, or those who give higher marks to particular examinees, such as those with whom they are ego-involved. For example, in the case of peer and supervisor evaluations, Moneta, Amabile, Schatzel, and Kramer (2010) found that evaluators with a high degree of agreeableness tended to rate examinees as more creative than other evaluators did. In addition, the aforementioned social desirability bias could prompt respondents to conform to certain social expectations, such as giving higher scores to more socially desired items. Subjective assessment methods are more prone to such bias than objective assessments because most evaluations are administered in a social context in which examinees may be concerned about their self-evaluation results. Likewise, evaluators may be concerned that their personal relationships might interfere with their evaluation results. Compared with objective assessments, these three tendencies may lead evaluators to give higher ratings, resulting in upwardly biased evaluations. Thus:

Hypothesis 2:

The mean of subjective assessment measures of individual creativity will be higher than the mean of objective assessment measures of individual creativity.

If, as predicted, both the means and the variances of subjective and objective assessments differ significantly, a critical question is whether the two methods effectively measure the same construct. To triangulate subjective and objective assessment methods, it is necessary to establish convergent validity, that is, a strong correlation showing agreement between two different methods in measuring a single construct (Campbell & Fiske, 1959). If multiple independent assessment methods do not reach the same conclusions, this is evidence of weak convergent validity (Jick, 1979). Very weak convergent validity could threaten the validity of the research results as a whole (Campbell & Fiske, 1959). Thus, checking for convergent validity is an important step in determining the usefulness and effectiveness of different measurement methods in the research design process (Amabile, Conti, Coon, Lazenby, & Herron, 1996; Hocevar, 1981).

Whether subjective and objective assessments of individual creativity reach the same conclusions remains a matter of debate. On the one hand, there is evidence of significant correlations among various subjective assessment methods and between subjective and objective methods (Janssen, 2000; Scott & Bruce, 1994). Others, however, found significant differences between several assessment methods for individual creativity. For example, Oldham and Cummings (1996) found that subjective assessments, such as supervisor ratings, were closely aligned with objective measures, such as the number of patents, but not with measures of the creative process. Furthermore, Moneta et al. (2010) found a significant discrepancy even within subjective assessment methods. They showed that peer, supervisor, and self-evaluations overlap only to some extent, with each method capturing a different set of cues of creative performance. These conflicting results suggest that the convergent validity of subjective and objective assessments of individual creativity remains an important empirical question. Thus:

Hypothesis 3:

There will be a significant positive correlation between objective and subjective assessment measures of individual creativity.

METHOD

Participants

The participants in this study were 1,500 Koreans who voluntarily took part in this research while taking classes on creativity and innovation. Demographic information (age, gender, education level, and current occupation) was collected prior to the test. Participants' ages ranged from 16 to 58 years (M = 34.9, SD = 12.7). They were either students (middle school, high school, university, and graduate) or MBA students with previous work experience in industries such as finance, automobiles, electronics, construction, pharmaceuticals, and public service. The sample comprised 1,333 men (88.9%) and 167 women (11.1%). All participants gave informed consent prior to their participation and were debriefed regarding the purpose of the study.

Procedure and Materials for Individual Creativity Assessment

The test was administered in 2-hr group testing sessions over a total period of 8 years. Two test sets were developed for this study, one for subjective and one for objective creativity assessment. The subjective assessment score was measured through participants' self-ratings. The self-ratings adapted and integrated items from previous subjective measures (e.g., Gough's CPS, 1979; Oldham & Cummings, 1996; Tierney et al., 1999; Zhou & George, 2001) and mainly asked participants to rate their creative interests, attitudes, and perceptions of their own creative ability on a 7-point Likert-type scale ranging from 1 = strongly disagree to 7 = strongly agree. Sample items from frequently used subjective measures include "Suggests new ways to achieve goals or objectives," "Not afraid to take risks" (Zhou & George, 2001, p. 696), and "Tries new ideas or methods first" (Tierney et al., 1999, p. 620). The questions developed for this section closely resemble these subjective measures of creativity. The section consisted of 45 items, and participants were given 10 min to complete it. The questions were designed to evaluate dimensions of self-assessed creativity such as boldness, motivation, persistence, acceptability, and curiosity. Each participant's subjective assessment score was calculated as a single index ranging from 0 to 100.
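The text does not describe how the 45 Likert-type responses were converted into the 0–100 subjective index. A minimal sketch, assuming a simple linear rescaling of the item mean (the function name `likert_to_index` and the rescaling rule are hypothetical, not the authors' algorithm), might look like this:

```python
from statistics import mean


def likert_to_index(responses, low=1, high=7):
    """Rescale one respondent's Likert answers to a 0-100 index.

    Hypothetical rule (not the authors' algorithm): the item mean is mapped
    linearly so that `low` becomes 0 and `high` becomes 100.
    """
    if not responses:
        raise ValueError("at least one response is required")
    for r in responses:
        if not low <= r <= high:
            raise ValueError(f"response {r} is outside the {low}-{high} range")
    return (mean(responses) - low) / (high - low) * 100


# Example: a respondent who answers 5 on all 45 items.
print(round(likert_to_index([5] * 45), 1))  # 66.7
```

Under this assumed rule, only respondents who answer 7 on every item reach 100, and a uniform answer of 4 (the scale midpoint) maps to 50.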

The objective assessment followed previous studies that examined creative cognitive skills (Guilford, 1967a, 1967b; Torrance, 1962, 1974). It was designed to measure creative thinking abilities, specifically divergent and convergent thinking. Previous studies suggested that divergent thinking is a good predictor of creative potential and achievement (e.g., Basadur, Graen, & Scandura, 1986; Guilford, 1967a; Torrance, 1962; Woodman, Sawyer, & Griffin, 1993). Divergent thinking requires the ability to make unique combinations of ideas, form remote associations, and transform ideas into unusual forms (Cropley, 2006; Mednick, 1962; Wallach & Kogan, 1965). To assess divergent thinking ability objectively, participants were given 30 short-essay questions to complete within 50 min, an average of 100 sec per question. Participants were asked to produce multiple or alternative answers based on information or situations described in verbal or figural form.

Previous research also highlights convergent thinking ability as a critical determinant of individual creativity (Cropley & Cropley, 2012; Cropley, 2006; Runco, 2004). Convergent thinking is the thinking process that involves logical search and information processing to derive a single best answer to a predefined question. Whereas divergent thinking alone often leads to wild ideas that may be creative but unrealistic, convergent thinking is important in making sure that ideas are useful and relevant (Runco & Acar, 2012). Lonergan, Scott, and Mumford (2004) also suggested a two-step process that combines novel idea generation (divergent thinking) with exploration of the ideas' effectiveness (convergent thinking). Following these arguments, divergent and convergent thinking ability scores were given equal weight in calculating the objective assessment score. Convergent thinking ability was assessed through 15 multiple-choice items completed within 30 min, an average of 120 sec per item. Participants were mainly asked to solve questions with a single correct answer, which required speed, accuracy, and logic. Overall, the objective creativity score was the sum of the divergent and convergent thinking scores. The objective assessment questions were designed to evaluate several subcomponents of creative thinking ability, such as novelty, synthesis, elaboration, complexity, redefinition, fluency, and flexibility. The objective score was calculated as a single index ranging from 0 to 100.
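The equal weighting described above can be illustrated with a short sketch. It rests on assumptions not stated in the text: each raw component score is expressed as a proportion of a hypothetical maximum and mapped to 0–50 points, so that the sum of the two components lies on the 0–100 scale. The function name and the maxima in the example are illustrative only.

```python
def objective_index(divergent_raw, divergent_max, convergent_raw, convergent_max):
    """Equal-weight composite of divergent and convergent thinking scores.

    Hypothetical scaling (not the authors' algorithm): each component is
    converted to a proportion of its maximum and contributes up to 50 points,
    so the sum lies between 0 and 100.
    """
    for raw, top in ((divergent_raw, divergent_max), (convergent_raw, convergent_max)):
        if top <= 0 or not 0 <= raw <= top:
            raise ValueError("raw scores must lie between 0 and a positive maximum")
    divergent_points = 50 * divergent_raw / divergent_max
    convergent_points = 50 * convergent_raw / convergent_max
    return divergent_points + convergent_points


# Illustrative maxima: 15 convergent items scored 0/1; the divergent maximum of 120 is made up.
print(objective_index(60, 120, 9, 15))  # 55.0
```

Rescaling before summing keeps the weighting equal even if the two subtests have different raw maxima; summing raw scores directly would weight whichever subtest has the larger range more heavily.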

Coding and Scoring

Various types of questions, such as short-essay, multiple-choice, and open-ended questions, were used to assess individual creativity. Using a coding manual developed by the authors, two research assistants coded all participants' answers for the analysis. Cronbach's α for interrater reliability across items and participants ranged from .87 to .96. Once all coding was complete, computer algorithms automatically calculated both the subjective and objective assessment scores. The algorithms were developed in collaboration with software engineers from a private research institute, the iCreate Creativity Institute, and were refined and adjusted through multiple pilot tests and grading exercises over more than 2 years. Sample test questions are available from the authors upon request.
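Interrater reliability of this kind can be computed by treating each coder as an "item" in Cronbach's α, $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i \sigma_i^2}{\sigma_T^2}\right)$, where $k$ is the number of coders, $\sigma_i^2$ the variance of coder $i$'s scores, and $\sigma_T^2$ the variance of the summed scores. The sketch below assumes two coders scoring the same set of answers; the text does not specify exactly how ratings were grouped to obtain the reported .87–.96 range, and the example ratings are made up.

```python
from statistics import variance


def cronbach_alpha(ratings_by_rater):
    """Cronbach's alpha treating each rater as an 'item'.

    ratings_by_rater: one list per rater, each holding that rater's scores
    for the same answers in the same order.
    """
    k = len(ratings_by_rater)
    if k < 2:
        raise ValueError("need at least two raters")
    n = len(ratings_by_rater[0])
    if n < 2 or any(len(r) != n for r in ratings_by_rater):
        raise ValueError("raters must score the same two or more answers")
    # Sum of each rater's (sample) variance.
    rater_var_sum = sum(variance(r) for r in ratings_by_rater)
    # Variance of the per-answer totals across raters.
    totals = [sum(scores) for scores in zip(*ratings_by_rater)]
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - rater_var_sum / total_var)


rater_a = [3, 4, 2, 5, 4]  # made-up scores for five answers
rater_b = [3, 5, 2, 4, 4]
print(round(cronbach_alpha([rater_a, rater_b]), 3))  # 0.894
```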

RESULTS

Descriptive Statistics

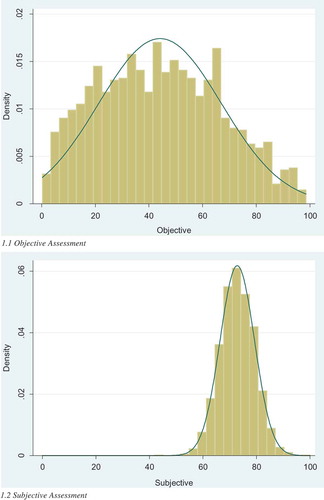

Table 1 displays descriptive statistics for all variables. Participants' mean subjective creativity score (M = 72.8, SD = 6.5) was significantly higher than their mean objective creativity score (M = 44.1, SD = 22.9). The range of objective creativity scores (0.3–99) was much wider than that of subjective creativity scores (44.7–100). The frequency distribution graph for objective and subjective creativity scores shows that the subjective creativity score has a higher mean with lower variability. Skewness and kurtosis values for both scores were examined to check the normality of each variable's distribution, and both were within acceptable limits.
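The normality check described above can be sketched with moment-based estimates: skewness $g_1 = m_3 / m_2^{3/2}$ and excess kurtosis $g_2 = m_4 / m_2^2 - 3$, where $m_j$ is the $j$-th central moment. The authors do not report which estimator or cutoffs they used; statistical packages often apply small-sample bias corrections, so their values can differ slightly from these.

```python
def skewness_kurtosis(scores):
    """Moment-based skewness (g1) and excess kurtosis (g2) of a sample."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores")
    mu = sum(scores) / n
    # Central moments (population form, divided by n).
    m2 = sum((x - mu) ** 2 for x in scores) / n
    m3 = sum((x - mu) ** 3 for x in scores) / n
    m4 = sum((x - mu) ** 4 for x in scores) / n
    if m2 == 0:
        raise ValueError("scores have zero variance")
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3


g1, g2 = skewness_kurtosis([1, 2, 3, 4, 5])
print(f"skewness={g1:.2f}, excess kurtosis={g2:.2f}")  # skewness=0.00, excess kurtosis=-1.30
```

A symmetric sample has zero skewness, and a flat (uniform-like) sample such as this one has negative excess kurtosis; a normal distribution has zero for both.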

Table 1 Descriptive statistics

Objective and Subjective Creativity Score Analysis

A variance ratio test was conducted to test Hypothesis 1. The test statistic for the null hypothesis of equal variances, $H_0\colon \sigma_O^2 = \sigma_S^2$, is given by

$$F = \frac{s_O^2}{s_S^2},$$

where $s_O^2$ and $s_S^2$ are the sample variances of the objective and subjective creativity scores; under $H_0$, $F$ is distributed as F with $(n - 1, n - 1)$ degrees of freedom. Results of the F-test showed a statistically significant difference between the two variances, F(1499, 1499) = 12.59, p < .001. The variance ratio of the objective to the subjective creativity score was much greater than 1, indicating greater variability in the objective creativity score. Overall, the findings supported Hypothesis 1. To test Hypothesis 2, a paired t-test was conducted. Paired t-tests are often used to test the statistical significance of mean differences between creativity measurement scores (e.g., Runco, 1986). The results, shown in Table 2, indicate that the mean difference between objective and subjective creativity scores is statistically significant, t(1499) = 49.38, p < .001. The mean of the subjective creativity score (M = 72.8) was significantly higher than that of the objective creativity score (M = 44.1). Overall, Hypothesis 2 was also supported. These results indicate that participants tended to rate themselves as having moderate levels of creative ability, a quality that is generally socially favorable. Most participants showed similar patterns, suggesting that the subjective creativity score is more exposed to systematic overestimation bias than the objective creativity score (Goldsmith & Matherly, 1988).

Table 2 Mean differences and paired t-test for subjective and objective creativity scores
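Because only rounded summary statistics are reported, the two test statistics reported above can be approximately reproduced from them. The sketch below assumes the standard formulas: the variance ratio is the larger sample variance over the smaller, and the paired t uses the standard deviation of the difference scores, obtained from $s_d^2 = s_1^2 + s_2^2 - 2 r s_1 s_2$ with the reported correlation r = .20. With the rounded SDs it yields F ≈ 12.4 (reported: 12.59) and t ≈ 49.4 (reported: 49.38); the small gaps are presumably due to rounding of the inputs. The function names are illustrative.

```python
import math


def variance_ratio(sd_a, sd_b):
    """F statistic for H0: equal variances (larger variance in the numerator)."""
    larger, smaller = max(sd_a, sd_b), min(sd_a, sd_b)
    return (larger / smaller) ** 2


def paired_t_from_summary(mean_1, sd_1, mean_2, sd_2, r, n):
    """Paired t statistic and df computed from summary statistics.

    The SD of the difference scores follows from
    Var(X1 - X2) = s1^2 + s2^2 - 2 * r * s1 * s2.
    """
    sd_diff = math.sqrt(sd_1 ** 2 + sd_2 ** 2 - 2 * r * sd_1 * sd_2)
    t = (mean_1 - mean_2) / (sd_diff / math.sqrt(n))
    return t, n - 1


n = 1500
f = variance_ratio(22.9, 6.5)  # objective SD vs. subjective SD, rounded as reported
t, df = paired_t_from_summary(72.8, 6.5, 44.1, 22.9, r=0.20, n=n)
print(f"F({n - 1}, {n - 1}) = {f:.2f}")
print(f"t({df}) = {t:.2f}")
```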

Correlation Analysis

The relevant bivariate correlations are reported in Table 3. Supporting Hypothesis 3, the subjective creativity score was significantly correlated with the objective creativity score (r = .20, p < .01). This moderate correlation between the two scores fulfills the necessary condition for the triangulation of creativity measurement. Among the other variables, the objective creativity score was significantly correlated with both the convergent (r = .81, p < .01) and divergent (r = .82, p < .01) thinking ability scores. Because the objective creativity score is the sum of the convergent and divergent thinking ability scores, high positive correlations among these variables are naturally expected. Convergent and divergent thinking abilities were moderately correlated with each other (r = .33, p < .01). These results suggest that individuals with high objective creativity scores are likely to have extremely high scores in either convergent or divergent thinking ability.

Table 3 Correlation
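The size of the part–whole correlations reported above (r = .81 and .82) can be checked with a short derivation. Writing $D$ and $C$ for the divergent and convergent thinking scores, $r_{DC}$ for their correlation, and $O = D + C$ for the objective score, covariance algebra gives the correlation between a sum and one of its components. The equal-variance step is an assumption made only for illustration, since the component variances are not reported:

$$
\begin{aligned}
\operatorname{corr}(O, D)
  &= \frac{\operatorname{Cov}(D + C,\, D)}{\sigma_D \sqrt{\operatorname{Var}(D + C)}}
   = \frac{\sigma_D + r_{DC}\,\sigma_C}{\sqrt{\sigma_D^2 + \sigma_C^2 + 2\,r_{DC}\,\sigma_D\,\sigma_C}} \\[4pt]
  &\overset{\sigma_D = \sigma_C}{=} \sqrt{\frac{1 + r_{DC}}{2}}
   = \sqrt{\frac{1 + .33}{2}} \approx .815
\end{aligned}
$$

Under that assumption, a correlation of about .8 between each component and the total follows from r = .33 alone, which is consistent with the reported values.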

DISCUSSION

The importance of individual creativity assessment has led to the development of numerous measurement methods over the last few decades (Kaufman et al., 2008; Puccio & Murdock, 1999). Although progress has been significant, this very diversity of assessment methods has highlighted the need to investigate ways to improve the reliability and validity of individual creativity assessment. Feldhusen and Goh (1995) acknowledged that scholars need to combine different measures of creativity to derive a comprehensive picture of creativity in individuals. To address this issue, this study investigated how subjective and objective assessment methods differently affect the measurement outcomes of individual creativity. Analyzing data from 1,500 individuals, it showed that subjective assessment results have a smaller variance than objective assessment results (SD = 6.5 and SD = 22.9, respectively). This study argues that these results may be attributed to social desirability and the consistency motif. It also showed that subjective assessment results tend to be upwardly biased, with a higher mean than objective assessment results (M = 72.8 and M = 44.1, respectively). These differences may be associated with illusory superiority, leniency bias, and social desirability, all of which are rooted in social settings.

Furthermore, the correlation between the two assessment methods is positive and significant (r = .20, p < .01). Social science research has not specified threshold correlations sufficient to confirm convergent validity (Carlson & Herdman, 2012). For example, Campbell and Fiske (1959) reviewed 12 studies and reported 144 estimates of convergent validity ranging from r = .20 to .82. Similarly, Wall et al. (2004) examined studies correlating subjective and objective measures of organizational performance and found associations ranging from r = .26 to r = .65. The correlation of r = .20 in this study, although positive and significant, is weak compared with these ranges: it sits at the lower bound of the Campbell and Fiske (1959) estimates and below the range reported by Wall et al. (2004). Thus, this study cannot rule out critical differences between the two assessment methods. These results advise scholars to be cautious when using the two methods interchangeably; combining multiple assessment methods may be a more reasonable approach to assessing individual creativity.

This study may also help address an ongoing measurement issue in creativity studies. Many empirical studies of individual creativity have relied on a single assessment method and acknowledged measurement issues only as limitations or directions for future research (e.g., Perry-Smith, 2006; Shalley et al., 2000; Tierney & Farmer, 2002). Nonetheless, calls to incorporate different or multiple methods to enhance the robustness of research results have persisted (e.g., Farmer, Tierney, & Kung-McIntyre, 2003; Oldham & Cummings, 1996). Campbell and Fiske (1959) suggested triangulation strategies that use complementary, multiple methods to enhance the validity of analysis results, and Jick (1979) noted that because each method has its own strengths and weaknesses, triangulation helps exploit the strengths and neutralize the weaknesses.
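The comparison of r = .20 with the published ranges can be made more concrete by accounting for sampling error at this sample size. The sketch below assumes only n = 1,500 and r = .20 from the text. It tests r against zero and places a 95% confidence interval around it using the Fisher z transformation, a standard technique used here for illustration rather than necessarily the procedure used in the study.

```python
import math
from statistics import NormalDist

N = 1500   # sample size reported in this study
R = 0.20   # observed correlation between subjective and objective scores

def correlation_t(r, n):
    """t statistic (df = n - 2) for H0: rho = 0."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r**2)

def fisher_ci(r, n, level=0.95):
    """Confidence interval for rho via the Fisher z transformation."""
    z = math.atanh(r)                    # Fisher z of the observed r
    se = 1 / math.sqrt(n - 3)            # standard error of z
    crit = NormalDist().inv_cdf(0.5 + level / 2)
    return math.tanh(z - crit * se), math.tanh(z + crit * se)

t = correlation_t(R, N)
lo, hi = fisher_ci(R, N)
print(f"t({N - 2}) = {t:.2f}")
print(f"95% CI for rho: [{lo:.3f}, {hi:.3f}]")

# Lower ends of the ranges quoted in the text, for comparison.
reported_lower = {"Campbell & Fiske (1959)": 0.20, "Wall et al. (2004)": 0.26}
for source, low in reported_lower.items():
    print(f"{source}: lower bound {low:.2f}, CI upper bound {hi:.3f}")
```

Under these assumptions, t is about 7.9 and the interval runs from about .15 to .25. Even the upper bound therefore falls below the lowest correlation (.26) in the Wall et al. (2004) range, while the point estimate sits at the bottom of the Campbell and Fiske (1959) range.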

In addition, this study may provide a foundation for better understanding the conflicting results of previous empirical studies. Given the modest, though significant, correlation between subjective and objective assessment outcomes, measurement-related issues cannot be ruled out as a possible reason for mixed findings. For example, some studies showed intrinsic motivation to be critical to creativity (e.g., Amabile, 1979, 1985; Amabile, Hill, Hennessey, & Tighe, 1994), whereas others reported that the relationship was, at best, weak and/or statistically nonsignificant (e.g., Dewett, 2007; Perry-Smith, 2006; Shalley et al., 2000). Grant and Berry (2011) specifically raised methodological issues about intrinsic motivation, arguing that it is more consistently associated with creativity scores measured by self-reports than with those assessed by observer ratings or archival measures. Mixed findings also appear in studies of the relationship between individual creativity and transformational leadership (Jaussi & Dionne, 2003; Shin & Zhou, 2003), organizational learning (Gong, Huang, & Farh, 2009; Redmond, Mumford, & Teach, 1993), and intrinsic and extrinsic rewards (Eisenberger & Rhoades, 2001; Hackman & Oldham, 1980). Examining these conflicting findings from a measurement perspective could refine and strengthen future creativity research.

This study also has limitations that offer meaningful opportunities for future research. First, self-reports (subjective) and expert evaluation with computer algorithms (objective) were used to compare subjective and objective assessment methods. Although encompassing all existing methods may not be possible, future studies could incorporate other and multiple methods, such as archival measures, historiometric studies, and experimental measures. By examining how each method affects or changes analysis results, future research could extend this discussion. Second, this study mainly examined cognitive capabilities, namely convergent and divergent thinking ability. These capabilities could be further divided into subcomponents or dimensions, for example by examining divergent thinking from a product or a process perspective. Future studies could refine the present findings by examining how the assessment methods of individual creativity affect such subcomponents or substructures of creativity. Third, future studies could integrate other conditional factors that may affect individual creativity; for example, they could address possible associations between assessment methods and social and/or hierarchical contexts. Last, subjective and objective assessment methods and their triangulation may also be relevant to team or organizational creativity, so future research could examine measurement issues at different levels of analysis in creativity studies.

This study extends the existing creativity literature by revisiting methodological issues in assessing individual creativity. Measurement issues, if serious, can significantly change either the magnitude or the direction of research findings. This study argues that research designs in creativity studies have lacked thorough, in-depth consideration of the possible triangulation of objective and subjective assessment methods.

REFERENCES

- Amabile, T. M. (1979). Effects of external evaluation on artistic creativity. Journal of Personality and Social Psychology, 37, 221–233. doi:10.1037/0022-3514.37.2.221

- Amabile, T. M. (1983). The social psychology of creativity. New York, NY: Springer-Verlag.

- Amabile, T. M. (1985). Motivation and creativity: Effects of motivational orientation on creative writers. Journal of Personality and Social Psychology, 48, 393–399. doi:10.1037/0022-3514.48.2.393

- Amabile, T. M. (1996). Creativity in context. Boulder, CO: Westview.

- Amabile, T. M., Conti, R., Coon, H., Lazenby, J., & Herron, M. (1996). Assessing the work environment for creativity. Academy of Management Journal, 39, 1154–1184. doi:10.2307/256995

- Amabile, T. M., Hill, K. G., Hennessey, B. A., & Tighe, E. M. (1994). The work preference inventory: Assessing intrinsic and extrinsic motivational orientations. Journal of Personality and Social Psychology, 66, 950–967. doi:10.1037/0022-3514.66.5.950

- Arnold, H. J., & Feldman, D. C. (1981). Social desirability response bias in self-report choice situations. Academy of Management Journal, 24, 377–385. doi:10.2307/255848

- Axtell, C. M., Holman, D. J., Unsworth, K. L., Wall, T. D., Waterson, P. E., & Harrington, E. (2000). Shopfloor innovation: Facilitating the suggestion and implementation of ideas. Journal of Occupational and Organizational Psychology, 73, 265–285. doi:10.1348/096317900167029

- Barron, F. (1955). The disposition toward originality. Journal of Abnormal and Social Psychology, 51, 478–485. doi:10.1037/h0048073

- Basadur, M., Graen, G. B., & Scandura, T. A. (1986). Training effects on attitudes toward divergent thinking among manufacturing engineers. Journal of Applied Psychology, 71, 612–617. doi:10.1037/0021-9010.71.4.612

- Batey, M., & Furnham, A. (2006). Creativity, intelligence, and personality: A critical review of the scattered literature. Genetic, Social, and General Psychology Monographs, 132, 355–429. doi:10.3200/MONO.132.4.355-430

- Brown, J. D. (1986). Evaluations of self and others: Self-enhancement biases in social judgments. Social Cognition, 4, 353–376. doi:10.1521/soco.1986.4.4.353

- Campbell, D. T., & Fiske, D. W. (1959). Convergent and discriminant validation by the multitrait-multimethod matrix. Psychological Bulletin, 56, 81–105. doi:10.1037/h0046016

- Carlson, K. D., & Herdman, A. O. (2012). Understanding the impact of convergent validity on research results. Organizational Research Methods, 15, 17–32. doi:10.1177/1094428110392383

- Carson, S. H., Peterson, J. B., & Higgins, D. M. (2005). Reliability, validity, and factor structure of the creative achievement questionnaire. Creativity Research Journal, 17, 37–50. doi:10.1207/s15326934crj1701_4

- Cropley, A. (2000). Defining and measuring creativity: Are creativity tests worth using? Roeper Review, 23, 72–79. doi:10.1080/02783190009554069

- Cropley, A. (2006). In praise of convergent thinking. Creativity Research Journal, 18, 391–404. doi:10.1207/s15326934crj1803_13

- Cropley, D., & Cropley, A. (2012). A psychological taxonomy of organizational innovation: Resolving the paradoxes. Creativity Research Journal, 24, 29–40. doi:10.1080/10400419.2012.649234

- Denzin, N. K. (1970). The research act: A theoretical introduction to sociological methods. London, UK: Butterworths.

- Dewett, T. (2007). Linking intrinsic motivation, risk taking, and employee creativity in an R&D environment. R&D Management, 37, 197–208. doi:10.1111/radm.2007.37.issue-3

- Dunning, D., Meyerowitz, J. A., & Holzberg, A. D. (1989). Ambiguity and self-evaluation: The role of idiosyncratic trait definitions in self-serving assessments of ability. Journal of Personality and Social Psychology, 57, 1082–1090. doi:10.1037/0022-3514.57.6.1082

- Eisenberger, R., & Rhoades, L. (2001). Incremental effects of reward on creativity. Journal of Personality and Social Psychology, 81(4), 728–741. doi:10.1037/0022-3514.81.4.728

- Elsbach, K. D., & Kramer, R. M. (2003). Assessing creativity in Hollywood pitch meetings: Evidence for a dual-process model of creativity judgments. Academy of Management Journal, 46, 283–301. doi:10.2307/30040623

- Farmer, S. M., Tierney, P., & Kung-McIntyre, K. (2003). Employee creativity in Taiwan: An application of role identity theory. Academy of Management Journal, 46, 618–630. doi:10.2307/30040653

- Feldhusen, J. F., & Goh, B. E. (1995). Assessing and accessing creativity: An integrative review of theory, research, and development. Creativity Research Journal, 8, 231–247. doi:10.1207/s15326934crj0803_3

- Fisher, R. J. (1993). Social desirability bias and the validity of indirect questioning. Journal of Consumer Research, 20, 303–315. doi:10.1086/jcr.1993.20.issue-2

- George, J. M., & Zhou, J. (2002). Understanding when bad moods foster creativity and good ones don’t: The role of context and clarity of feelings. Journal of Applied Psychology, 87, 687–697. doi:10.1037/0021-9010.87.4.687

- Gilson, L. L., Mathieu, J. E., Shalley, C. E., & Ruddy, T. M. (2005). Creativity and standardization: Complementary or conflicting drivers of team effectiveness? Academy of Management Journal, 48, 521–531. doi:10.5465/AMJ.2005.17407916

- Goldsmith, R. E., & Matherly, T. A. (1988). Creativity and self-esteem: A multiple operationalization validity study. Journal of Psychology, 122, 47–56. doi:10.1080/00223980.1988.10542942

- Gong, Y., Huang, J. C., & Farh, J. L. (2009). Employee learning orientation, transformational leadership, and employee creativity: The mediating role of employee creative self-efficacy. Academy of Management Journal, 52, 765–778. doi:10.5465/AMJ.2009.43670890

- Gough, H. G. (1979). A creative personality scale for the Adjective Check List. Journal of Personality and Social Psychology, 37, 1398–1405. doi:10.1037/0022-3514.37.8.1398

- Grant, A. M., & Berry, J. W. (2011). The necessity of others is the mother of invention: Intrinsic and prosocial motivations, perspective taking, and creativity. Academy of Management Journal, 54, 73–96. doi:10.5465/AMJ.2011.59215085

- Guilford, J. P. (1954). Psychometric methods (2nd ed.). New York, NY: McGraw-Hill.

- Guilford, J. P. (1965). Intellectual factors in productive thinking. In Productive thinking in education. Washington, DC: National Education Association.

- Guilford, J. P. (1967a). Creativity: Yesterday, today and tomorrow. Journal of Creative Behavior, 1, 3–14. doi:10.1002/j.2162-6057.1967.tb00002.x

- Guilford, J. P. (1967b). Intellectual factors in productive thinking. In R. L. Mooney & T. A. Razik (Eds.), Explorations in creativity. New York, NY: Harper & Row.

- Hackman, J. R., & Oldham, G. R. (1980). Work redesign. Reading, MA: Addison-Wesley.

- Headey, B., & Wearing, A. (1988). The sense of relative superiority: Central to well-being. Social Indicators Research, 20, 497–516.

- Heider, F. (1958). The psychology of interpersonal relations. New York, NY: Wiley.

- Hocevar, D. (1979, April). The development of the Creative Behavior Inventory (CBI). Paper presented at the annual meeting of the Rocky Mountain Psychological Association. (ERIC Document Reproduction Service No. ED 170 350).

- Hocevar, D. (1981). Measurement of creativity: Review and critique. Journal of Personality Assessment, 45, 450–464. doi:10.1207/s15327752jpa4505_1

- Janssen, O. (2000). Job demands, perceptions of effort reward fairness and innovative work behaviour. Journal of Occupational and Organizational Psychology, 73, 287–302. doi:10.1348/096317900167038

- Jaussi, K. S., & Dionne, S. D. (2003). Leading for creativity: The role of unconventional leader behavior. The Leadership Quarterly, 14, 475–498. doi:10.1016/S1048-9843(03)00048-1

- Jick, T. D. (1979). Mixing qualitative and quantitative methods: Triangulation in action. Administrative Science Quarterly, 24, 602–611. doi:10.2307/2392366

- Kaufman, J. C., & Baer, J. (2004). Sure, I’m creative – but not in mathematics!: Self-reported creativity in diverse domains. Empirical Studies of the Arts, 22, 143–155. doi:10.2190/26HQ-VHE8-GTLN-BJJM

- Kaufman, J. C., & Baer, J. (2012). Beyond new and appropriate: Who decides what is creative? Creativity Research Journal, 24, 83–91. doi:10.1080/10400419.2012.649237

- Kaufman, J. C., Plucker, J. A., & Baer, J. (2008). Essentials of creativity assessment (Vol. 53). New York, NY: John Wiley & Sons.

- Kirschenbaum, R. J. (1989). Understanding the creative activity of students: Including an instruction manual for the creative behavior inventory. St. Louis, MO: Creative Learning Press.

- Landy, F. J., & Farr, J. L. (1980). Performance rating. Psychological Bulletin, 87, 72–107. doi:10.1037/0033-2909.87.1.72

- Lonergan, D. C., Scott, G. M., & Mumford, M. D. (2004). Evaluative aspects of creative thought: Effects of appraisal and revision standards. Creativity Research Journal, 16, 231–246. doi:10.1080/10400419.2004.9651455

- MacKinnon, D. W. (1962). The nature and nurture of creative talent. American Psychologist, 17, 484–495. doi:10.1037/h0046541

- Madjar, N., Oldham, G. R., & Pratt, M. G. (2002). There’s no place like home? The contributions of work and nonwork creativity support to employees’ creative performance. Academy of Management Journal, 45, 757–767. doi:10.2307/3069309

- Mathison, S. (1988). Why triangulate? Educational Researcher, 17, 13–17. doi:10.3102/0013189X017002013

- Mednick, S. (1962). The associative basis of the creative process. Psychological Review, 69, 220–232. doi:10.1037/h0048850

- Moneta, G. B., Amabile, T. M., Schatzel, E. A., & Kramer, S. J. (2010). Multirater assessment of creative contributions to team projects in organizations. European Journal of Work and Organizational Psychology, 19, 150–176. doi:10.1080/13594320902815312

- Ng, T. W., & Feldman, D. C. (2012). A comparison of self-ratings and non-self-report measures of employee creativity. Human Relations, 65, 1021–1047. doi:10.1177/0018726712446015

- Oldham, G. R., & Cummings, A. (1996). Employee creativity: Personal and contextual factors at work. Academy of Management Journal, 39, 607–634. doi:10.2307/256657

- Organ, D. W., & Konovsky, M. (1989). Cognitive versus affective determinants of organizational citizenship behavior. Journal of Applied Psychology, 74, 157–164. doi:10.1037/0021-9010.74.1.157

- Osgood, C. E., & Tannenbaum, P. H. (1955). The principle of congruity in the prediction of attitude change. Psychological Review, 62, 42–55. doi:10.1037/h0048153

- Patton, M. Q. (1990). Qualitative evaluation and research methods. Newbury Park, CA: SAGE.

- Perry-Smith, J. E. (2006). Social yet creative: The role of social relationships in facilitating individual creativity. Academy of Management Journal, 49, 85–101. doi:10.5465/AMJ.2006.20785503

- Plucker, J. A., & Makel, M. C. (2010). Assessment of creativity. In J. C. Kaufman & R. J. Sternberg (Eds.), The Cambridge handbook of creativity. New York, NY: Cambridge University Press.

- Plucker, J. A., & Runco, M. A. (1998). The death of creativity measurement has been greatly exaggerated: Current issues, recent advances, and future directions in creativity assessment. Roeper Review, 21, 36–39. doi:10.1080/02783199809553924

- Podsakoff, P. M., MacKenzie, S. B., Lee, J.-Y., & Podsakoff, N. P. (2003). Common method biases in behavioral research: A critical review of the literature and recommended remedies. Journal of Applied Psychology, 88, 879–903. doi:10.1037/0021-9010.88.5.879

- Podsakoff, P. M., & Organ, D. W. (1986). Self-reports in organizational research: Problems and prospects. Journal of Management, 12, 531–544. doi:10.1177/014920638601200408

- Puccio, G. J., & Murdock, M. C. (1999). Creativity assessment: Readings and resources. Buffalo, NY: Creative Education Foundation Press.

- Redmond, M. R., Mumford, M. D., & Teach, R. (1993). Putting creativity to work: Effects of leader behavior on subordinate creativity. Organizational Behavior and Human Decision Processes, 55(1), 120–151. doi:10.1006/obhd.1993.1027

- Reich, R. B. (2001, July 20). Standards for what? Education Week, 20, 64.

- Runco, M. A. (1986). The discriminant validity of gifted children’s divergent thinking test scores. Gifted Child Quarterly, 30, 78–82. doi:10.1177/001698628603000207

- Runco, M. A. (2004). Creativity. Annual Review of Psychology, 55, 657–687. doi:10.1146/annurev.psych.55.090902.141502

- Runco, M. A. (2010). Divergent thinking, creativity, and ideation. In J. C. Kaufman & R. J. Sternberg (Eds.), The Cambridge handbook of creativity. Cambridge, UK: Cambridge University Press.

- Runco, M. A., & Acar, S. (2012). Divergent thinking as an indicator of creative potential. Creativity Research Journal, 24, 66–75. doi:10.1080/10400419.2012.652929

- Schoenfeldt, L. F., & Jansen, K. J. (1997). Methodological requirements for studying creativity in organizations. Journal of Creative Behavior, 31, 73–90. doi:10.1002/jocb.1997.31.issue-1

- Scott, S. G., & Bruce, R. A. (1994). Determinants of innovative behavior: A path model of individual innovation in the workplace. Academy of Management Journal, 37, 580–607. doi:10.2307/256701

- Shalley, C. E., Gilson, L. L., & Blum, T. C. (2000). Matching creativity requirements and the work environment: Effects on satisfaction and intentions to leave. Academy of Management Journal, 43, 215–223. doi:10.2307/1556378

- Shin, S. J., Kim, T.-Y., Lee, J.-Y., & Bian, L. (2012). Cognitive team diversity and individual team member creativity: A cross-level interaction. Academy of Management Journal, 55, 197–212. doi:10.5465/amj.2010.0270

- Shin, S. J., & Zhou, J. (2003). Transformational leadership, conservation, and creativity: Evidence from Korea. Academy of Management Journal, 46, 703–714. doi:10.2307/30040662

- Taylor, J. B. (1961). What do attitude scales measure: The problem of social desirability. The Journal of Abnormal and Social Psychology, 62, 386–390. doi:10.1037/h0042497

- Thomas, K. W., & Kilmann, R. H. (1975). The social desirability variable in organizational research: An alternative explanation for reported findings. Academy of Management Journal, 18, 741–752. doi:10.2307/255376

- Tierney, P., & Farmer, S. M. (2002). Creative self-efficacy: Its potential antecedents and relationship to creative performance. Academy of Management Journal, 45, 1137–1148. doi:10.2307/3069429

- Tierney, P., Farmer, S. M., & Graen, G. B. (1999). An examination of leadership and employee creativity: The relevance of traits and relationships. Personnel Psychology, 52, 591–620. doi:10.1111/peps.1999.52.issue-3

- Torrance, E. P. (1962). Guiding creative talent. Englewood Cliffs, NJ: Prentice-Hall.

- Torrance, E. P. (1974). The Torrance tests of creative thinking-TTCT Manual and Scoring Guide: Verbal test A, figural test. Lexington, KY: Ginn.

- Venkatraman, N. (1989). The concept of fit in strategy research: Toward verbal and statistical correspondence. Academy of Management Review, 14, 423–444.

- Venkatraman, N., & Grant, J. H. (1986). Construct measurement in organizational strategy research: A critique and proposal. Academy of Management Review, 11, 71–87.

- Wall, T. D., Michie, J., Patterson, M., Wood, S. J., Sheehan, M., Clegg, C. W., & West, M. (2004). On the validity of subjective measures of company performance. Personnel Psychology, 57, 95–118. doi:10.1111/peps.2004.57.issue-1

- Wallach, M. A., & Kogan, N. (1965). Modes of thinking in young children. New York, NY: Holt, Rinehart and Winston.

- Woodman, R. W., Sawyer, J. E., & Griffin, R. W. (1993). Toward a theory of organizational creativity. Academy of Management Review, 18, 293–321.

- Zhou, J., & George, J. M. (2001). When job dissatisfaction leads to creativity: Encouraging the expression of voice. Academy of Management Journal, 44, 682–696. doi:10.2307/3069410

- Zhou, J., & Shalley, C. E. (2003). Research on employee creativity: A critical review and directions for future research. Research in Personnel and Human Resources Management, 22, 165–217.