ABSTRACT

In the development of communication devices for individuals who are Deafblind, a significant challenge is achieving a seamless transition from human-generated to technology-mediated communication. This study compares the intelligibility of the Australian Deafblind tactile fingerspelling alphabet rendered on the HaptiComm tactile communication device with the same alphabet articulated by a human signer. After a short training period, participants identified the 26 English alphabet letters in both the mediated (device) and non-mediated (human) conditions. Results indicated that while participants easily identified most letters in the non-mediated condition, the mediated condition was more difficult to decipher. Specifically, letters presented on the palm or near the index finger had significantly lower recognition rates. These findings highlight the need for further research on the tactile features of communication devices and emphasize the importance of refining these features to enhance the reliability and readability of mediated tactile communication produced through tactile fingerspelling.

Introduction

Effective communication for individuals who are Deafblind poses distinctive challenges not adequately addressed by conventional Braille displays or assistive devices designed for individuals with a single sensory impairment (Ziat et al., 2005). While Braille effectively conveys written information, it requires literacy skills not all Deafblind individuals possess and lacks the immediate, dynamic interaction of spoken or visual language (American Foundation for the Blind, 2022). Additionally, longer lifespans due to medical advancements mean older individuals with declining sensory acuity may need a tactile communication system with more pronounced signals, one that does not depend on the fine tactile sensitivity required for Braille reading.

Our study evaluates the HaptiComm, an assistive technology device designed to address existing limitations in communication for Deafblind individuals. The HaptiComm is a mediated tactile communication device inspired by established tactile communication techniques, including Australian Deafblind Tactile Fingerspelling (TFS), LORM, Malossi, and British Deafblind Manual. It accommodates various literacy levels through programmability and individual tailoring, allowing adjustments in tactile output speed and intensity to suit the user’s sensory preference and processing capability. The device’s handrest is also modifiable for different hand sizes and shapes. This adaptability facilitates a more nuanced exchange of information, enabling the conveyance and modulation of prosodic features that are crucial for human interaction but often missing in text-based devices.

The development of the HaptiComm involved direct collaboration with a Deafblind coauthor and ongoing consultation with the Deafblind community, ensuring the design aligns with authentic communication practices and meets the nuanced needs of Deafblind users. These partnerships provided invaluable lived experiences and underscored the importance of user-centered design in creating assistive technologies.

In this study, we specifically examine the recognition rates of tactile representations of the 26-letter English signed alphabet delivered via the HaptiComm compared to those conveyed through non-mediated human TFS. This comparative analysis is crucial for evaluating the efficacy of mediated tactile communication and provides insights for further development of the HaptiComm. The goal is to ensure the device functions as both a literacy tool and a platform to facilitate rich, multi-dimensional interaction.

Related work

The landscape of assistive devices for Deafblind individuals has progressively evolved. Shaped by the requirements of tactile communication and technological innovation, the development process of these assistive technologies has become more community-centered and iterative. Early endeavors into mediated tactile communication like the Tactaid (Galvin et al., 1999, 2001) and Vocoder (Ozdamar et al., 1987) attempted to employ verbal assistive communication systems for individuals with hearing impairments. However, these devices were primarily optimized for those with single sensory loss, either in hearing or vision, and did not fully address the complex communication needs of Deafblind users.

The Optacon, developed in the 1970s, marked a significant technological advancement by translating visual information into tactile feedback (Goldish & Taylor, 1974). Using a handheld camera, it scanned text and converted it into signals that activated vibrating pins on a tactile display, allowing users to “feel” the shapes of letters and words with their fingertips. While this innovation enabled blind individuals to access printed materials via a direct optical-to-tactile interface, its reliance on visual-to-tactile translation limited its applicability for Deafblind communities, many of whom preferred direct tactile sign languages or tactile communication (for review, see Iwasaki et al., 2019; Willoughby et al., 2018).

Acknowledging the limitations of early devices, subsequent research has shifted focus toward better integrating with the naturally emerging and evolving communication methods within the Deafblind community (Tan et al., 1999). The advancement of Tactile Fingerspelling, wherein hand-touch signals correlate to letters, words, concepts, or signs, has led to conceptualizing systems such as the HaptiComm.

Effective communication for individuals experiencing visual and auditory impairments necessitates specialized assistive devices, including sensory substitution devices (see Ziat et al., 2007 for a comprehensive review). These devices are designed to meet the unique communication requirements of individuals with dual sensory impairments, which vary widely depending on factors including the onset and severity of visual and auditory impairment, educational background, and personal preferences (Sorgini et al., 2018).

There are numerous refreshable Braille displays that replicate the Braille reading process, some utilizing single Braille cells (Bettelani et al., 2020) and others simulating multiple cells (Kim & Kwon, 2020). For tactile communication, Finger-Braille, which is predominantly used in Japan, involves tapping patterns on the dorsal finger surface to represent Braille cells to facilitate real-time communication (Ozioko & Hersh, 2015; Ozioko et al., 2017). A dedicated refreshable Braille device for Finger-Braille was created as part of the original HaptiComm project, named HaptiBraille, which combines taps on three fingers of each of the hands of the person receiving the communication (Duvernoy et al., 2020). Various devices, including the bimanual Finger-Braille glove (Matsuda, 2016), which emulates Braille cells using actuators, have been developed to replicate prosody (i.e., intonation, stress pattern, loudness variations, pausing, and rhythm) by modulating the tap strength and duration (Miyagi et al., 2006). Similar glove-based devices developed in close collaboration with Deafblind individuals in Germany (Gollner et al., 2012) and Italy (Caporusso, 2008) reflect international efforts to support the Deafblind community.

Haptic devices have seen technological advancements in replicating sign languages via force feedback, simulating hand shapes, orientations, and movements integral to sign language communication (Chang et al., 2022; Gelsomini et al., 2022; Jaffe, 1994; Johnson et al., 2021; Meade, 1987; Tomovic, 1960). In addition to tactile cues, these devices provide proprioceptive cues, where the hands and arms of the receiver are moved in space to deliver information. Notably, the PALORMA device was designed to convey letters of Italian Sign Language and has shown promising recognition rates in both sighted and Deafblind users (Russo et al., 2015).

Some devices integrate tactile and haptic feedback elements to deliver a more encompassing sensory experience. For instance, a device replicating the Tadoma method, a technique where speech articulation is felt through hand placements on the speaker’s lips, jaw, and throat, demonstrated high accuracy rates following 27 hours of training (Tan et al., 1999).

Expanding on previous endeavors, recent approaches have shifted toward speech encoding involving alphabetic, phonetic, or phonemic codes to facilitate communication (Reed et al., 2018, 2021). For example, Zhao et al. (2018) introduced a device utilizing a 2 × 3 array to mediate phonemic encoding with nine tactile symbols, achieving an impressive recall rate of seven out of ten words the day after a 26-minute training session to memorize 20 words through spatiotemporal patterns on the forearm.

In a subsequent development phase, the Tactile Phonemic Sleeve, incorporating a more complex 4 × 6 array, was created to encode 39 English phonemes. This device demonstrated a recognition rate for the phonemes ranging between 71% and 97% and the ability to identify 200 words with an accuracy ranging from 63% to 94% (Tan et al., 2020). These advancements highlight the potential for sophisticated encoding devices to enhance detailed communication capabilities for the Deafblind community.

Despite notable technological advancements, the acceptance and usability of such devices within the Deafblind community remain low. Deafblind users often report disadvantages in communication and information access, emphasizing the importance of technologies with manageable learning curves and improved usability (Dyzel et al., 2020). Additionally, ongoing efforts are required to develop new devices in tandem with the evolving forms of natural Deafblind communication, such as Pro-Tactile (Edwards & Brentari, 2020).

Our study recognizes these challenges and contributes to the field by evaluating the performance of human-delivered TFS and machine-delivered TFS letters using the HaptiComm. Co-designing the device with a Deafblind coauthor ensures direct involvement and alignment with the communication strategies and needs of Deafblind individuals. Although the device is still in its early stages and awaits comprehensive testing with various assistive technology users, insights from our Deafblind collaborator are invaluable for refining and advancing tactile communication technologies.

Comparative study

The purpose of the study is to compare the effectiveness of the HaptiComm device against human-mediated TFS, specifically targeting the recognition rates of the English alphabet under different tactile conditions (e.g., Tap, Tap-and-Hold, and Slide). We aim to assess how recognition rates vary between mediated and non-mediated TFS, determine which letters or hand sites show significant accuracy disparities, and evaluate the impacts of different tactile sensations on letter recognition accuracy.

Participants

In order to explore the ease of learning TFS among inexperienced individuals who do not regularly use tactile communication, 18 participants (6 F, 12 M, mean age = 28.45, SD = 7.52) from Bentley University were recruited and duly compensated for their involvement. None of them reported hearing or visual impairments, hand injury, or nerve damage. Participants’ hand size was measured from the tip of the middle finger to the wrist crease. Six participants had small hand sizes (18.79–20.03 cm), eight had medium sizes (20.39–22.32 cm), and four had large hand sizes (23.26–23.85 cm). The experimental procedure was in accordance with the Declaration of Helsinki guidelines, and the study protocol received approval from the Institutional Review Board of Bentley University.

Apparatus and stimuli

The HaptiComm (Duvernoy et al., 2023), a device specifically developed to produce mediated TFS, operates with 23 voice coil actuators (Figure 1). These actuators are strategically positioned, with nine forming a 3 × 3 array in the central palm area, two on the thumb (D1), one near the side of the palm, two on the little finger (D5), and three on each of the index, middle, and ring fingers (D2 to D4) (Figure 2). The text-to-tactile converter software uses a digital-to-analog converter driver and drive electronics to transform digital signals into analog signals, which are then amplified. Upon activation, the actuators gently contact a participant’s hand, producing three distinct types of sensations with a maximum force of 0.5 N: Tap with 40 ms duration (e.g., letter E), Tap-and-Hold with 80 ms duration (e.g., letter P), and Slide (e.g., letter C) achieved by activating one or multiple actuators sequentially. For instance, the letter C involves the consecutive actuation of four actuators, while the letter H engages three lines of actuators traveling distally. The activation of actuators for each letter in the TFS alphabet was meticulously tuned to faithfully replicate the dynamic interaction of human fingers on a hand during non-mediated TFS with minimal modification. To assess the device’s performance, the mediated touch generated by the HaptiComm was compared against the non-mediated touch provided by a tactile signer who had acquired proficiency in rendering the TFS alphabet.

Figure 1. The HaptiComm consists of 23 voice coil actuators for mediated TFS. The top pad is designed for a large hand. The length, width, and height are 310 × 170 × 130 mm, respectively.

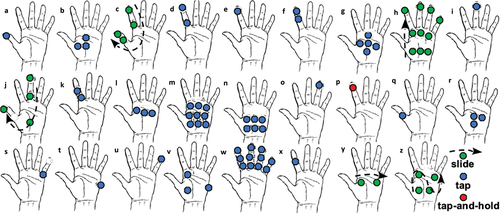

Figure 2. Map showing the Mediated Tactile Fingerspelling Alphabet using 23 voice-coil actuators on the HaptiComm. Tap, Tap-and-Hold, and Slide actuation are shown in blue, red, and green, respectively. Arrows indicate the direction of the motion.
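The three sensation types described above can be illustrated as timed actuator pulses. The following is only a minimal sketch, not the HaptiComm software: the actuator indices, the `Pulse` structure, and the per-step Slide duration are hypothetical, since the source gives only the Tap (40 ms) and Tap-and-Hold (80 ms) durations and states that Slide activates actuators sequentially.

```python
from dataclasses import dataclass
from typing import List

TAP_MS = 40    # Tap duration stated in the text
HOLD_MS = 80   # Tap-and-Hold duration stated in the text


@dataclass(frozen=True)
class Pulse:
    actuator: int     # hypothetical actuator index (0..22)
    onset_ms: int     # start time relative to the start of the letter
    duration_ms: int  # how long the actuator stays in contact


def tap(actuator: int) -> List[Pulse]:
    return [Pulse(actuator, 0, TAP_MS)]


def tap_and_hold(actuator: int) -> List[Pulse]:
    return [Pulse(actuator, 0, HOLD_MS)]


def slide(actuators: List[int], step_ms: int = TAP_MS) -> List[Pulse]:
    """Activate actuators one after another; step_ms is an assumed value."""
    return [Pulse(a, i * step_ms, step_ms) for i, a in enumerate(actuators)]


def total_duration_ms(pulses: List[Pulse]) -> int:
    return max(p.onset_ms + p.duration_ms for p in pulses)


SHARED_ACTUATOR = 5  # hypothetical id of the actuator shared by E and P

PATTERNS = {
    "E": tap(SHARED_ACTUATOR),
    "P": tap_and_hold(SHARED_ACTUATOR),
    "C": slide([1, 2, 3, 4]),  # four consecutive actuators; ids are made up
}
```

In this representation E and P differ only in `duration_ms`, which makes it easy to see why the two letters depend entirely on duration discrimination.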

A video tutorial (see supplemental materials) of the TFS alphabet was created to facilitate participants’ offsite learning. It featured a demonstration of the hand movements accompanied by verbal explanations on how to sign each of the 26 fingerspelled English letters. The use of a tutorial video reduced the influence of potential confounding variables arising from the differences in teaching and acquisition of the TFS alphabet. provides a static representation of the TFS alphabet.

Experimental procedure

One day prior to the experiment, participants electronically signed the consent form. Subsequently, they were provided access to an online video demonstrating the 26 signs representing letters of the English TFS alphabet. Participants were asked to watch this video multiple times at their discretion to familiarize themselves with the TFS system. Upon their arrival at the experimental session, we verified that all participants had viewed the instructional material. The reported viewing frequency ranged from two to three times. To ensure a consistent level of familiarity, participants were asked to watch the video once again immediately before commencing the experiment. The experiment comprised two blocks of trials, each containing two test conditions: non-mediated and mediated TFS. Within each block, 52 stimuli (2 × 26 signs) were presented randomly. Participants were tasked with completing both blocks, presented in a randomized order to minimize potential order effects.

Non-mediated TFS: In this condition, participants received tactile stimuli directly from an experimenter without visual or auditory impairments. The experimenter fingerspelled on the participant’s left hand, replicating the movements demonstrated in the previously watched instructional video.

Mediated TFS: Before initiating this condition, the experimenter verbally explained how the HaptiComm represented the twenty-six signs of the English TFS alphabet and showed which actuators were associated with each letter. Participants were allotted a fixed familiarization period lasting 5 to 10 minutes to acquaint themselves with the HaptiComm. Throughout this period, participants had the flexibility to request repetitions of any letters, ensuring they reached a comfortable level of identification with the device output. Once a participant felt sufficiently acquainted with all 26 signs, the mediated condition commenced by placing their left hand on the HaptiComm. The HaptiComm was controlled by software that transmitted commands to trigger the actuators according to the text input entered by the experimenter on the keyboard, thereby activating the actuators corresponding to each letter. (see )

During the trials, participants wore a blindfold and a Bose noise-cancellation headset, eliminating visual and auditory cues to ensure reliance solely on tactile sensation. Participants verbally reported which letter they perceived for each trial based on tactile stimuli. Without providing feedback, the experimenter recorded each response. A 5-minute break was granted between the two conditions to mitigate potential strain or fatigue. Once both conditions were completed, participants were asked to provide feedback and report their overall experience during the experiment.
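The stimulus schedule described in this procedure can be sketched as follows. This is one possible reading, offered under assumptions: each condition is treated as a block of 52 trials (every letter twice, shuffled), and the order of the two conditions is randomized per participant. The function names and the seeding scheme are illustrative and do not come from the study.

```python
import random
import string
from typing import List, Optional, Tuple

CONDITIONS = ["non-mediated", "mediated"]


def make_block(repetitions: int = 2, seed: Optional[int] = None) -> List[str]:
    """Return the 26 letters repeated `repetitions` times in random order."""
    rng = random.Random(seed)
    trials = list(string.ascii_uppercase) * repetitions
    rng.shuffle(trials)
    return trials


def make_session(seed: Optional[int] = None) -> List[Tuple[str, List[str]]]:
    """Randomize condition order, then build one shuffled block per condition."""
    rng = random.Random(seed)
    order = CONDITIONS[:]
    rng.shuffle(order)
    return [(cond, make_block(seed=rng.randrange(2**32))) for cond in order]


session = make_session(seed=1)
```

Seeding per participant keeps each schedule reproducible while still varying the order across participants.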

Results

Data analysis

To evaluate tactile communication performance, a two-way repeated measures ANOVA was conducted incorporating within-subject factors of hand site and method of communication. Hand sites were categorized based on the location of tactile stimulation, including fingertips, index finger, palm, or a unique localized pattern, with each category corresponding to specific signs, each representing a letter of the English alphabet (refer to Table 1 for the letter breakdown by hand site). The method of communication was segregated into two conditions: mediated and non-mediated TFS. This approach assessed the interaction between Hand Site and Communication Method, revealing their combined impact on overall performance. We controlled for order effects, finding no statistical significance. Additionally, there was no significant difference in hand size across participants, and data were found to be normally distributed for each subset, as confirmed by the Shapiro-Wilk test (p > 0.05).

Table 1. Correspondence of letters to hand sites.
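The grouping of letters by hand site can be expressed as a small lookup used to aggregate accuracy per site. The mapping below is inferred from the letters named in the Results subsections (six fingertip letters, four index finger letters, nine palm letters, seven unique-pattern letters) and should be treated as an assumption; the function name and the example trials are illustrative only.

```python
from typing import Dict, Iterable, Tuple

# Letter groups inferred from the Results text; an assumption, not a copy of Table 1.
HAND_SITES: Dict[str, str] = {
    "Fingertips": "AEIOUP",
    "Index finger": "DFKX",
    "Palm": "BGLMNRVYZ",
    "Unique": "CHJQSTW",
}

LETTER_TO_SITE = {
    letter: site for site, letters in HAND_SITES.items() for letter in letters
}


def accuracy_by_site(trials: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Proportion correct per hand site from (presented, reported) pairs."""
    correct = {site: 0 for site in HAND_SITES}
    total = {site: 0 for site in HAND_SITES}
    for presented, reported in trials:
        site = LETTER_TO_SITE[presented]
        total[site] += 1
        correct[site] += int(presented == reported)
    return {s: correct[s] / total[s] for s in HAND_SITES if total[s] > 0}


# Made-up responses, for illustration only.
example = [("A", "A"), ("P", "E"), ("D", "D"), ("B", "M")]
scores = accuracy_by_site(example)
```

Per-participant scores computed this way, once per condition, would form the cells of a two-way repeated measures design such as the one described above.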

Mediated and non-mediated performance

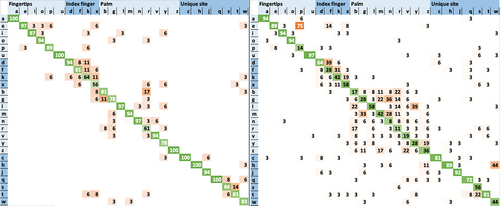

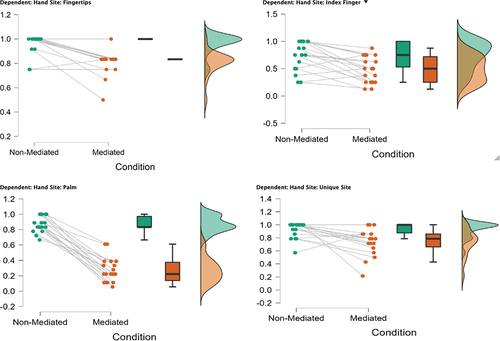

The confusion matrices in Figure 4 depict correct response percentages for both experiment conditions, offering a comprehensive overview of response patterns and contributing to our understanding of how tactile communication varies between the conditions (see Table 3 for means and standard deviations). A repeated measures ANOVA was conducted, revealing a significant main effect of condition, F(1, 17) = 90.021, p < 0.001, η² = .332, a significant main effect of hand site, F(3, 51) = 35.979, p < 0.001, η² = .273, and a significant interaction between condition and hand site, F(3, 51) = 20.293, p < 0.001, η² = .111. The breakdown of this interaction, as attested by post hoc analysis, is shown in Table 2. These results indicate significant differences in performance rates between the two communication methods and between various hand sites. Raincloud plots in Figure 5 illustrate the distribution of performance rates across conditions and hand sites, integrating individual data points to emphasize within-group variability. The following subsections provide a detailed examination of the interaction between Communication Method and Hand Site.

Figure 4. Confusion matrices of correct responses (%) for all participants for non-mediated (left) and mediated (right) conditions for the 26 Roman alphabet letters. The letters are classified by their stimulation location: fingertips, index finger, palm, and unique patterns.

Figure 5. The performance rates for non-mediated and mediated conditions per hand site. Raincloud plots show the distribution performance of each hand site (Fingertips, Index finger, Palm, and Unique site) across both conditions. Circle plots represent individual data, and box-and-whisker plots are median (IQR and max/min).

Table 2. Post Hoc Comparisons - Condition ✻ Hand Site.
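A confusion matrix of the kind summarized in the results can be tallied from (presented, reported) letter pairs. The sketch below is illustrative only: the function names are hypothetical and the example responses are invented to show how a row percentage, such as a confusion rate between two letters, is obtained.

```python
import string
from typing import Dict, Iterable, Tuple

LETTERS = string.ascii_uppercase


def confusion_counts(trials: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, int]]:
    """Count how often each presented letter was reported as each letter."""
    counts = {p: {r: 0 for r in LETTERS} for p in LETTERS}
    for presented, reported in trials:
        counts[presented][reported] += 1
    return counts


def row_percentages(counts: Dict[str, Dict[str, int]]) -> Dict[str, Dict[str, float]]:
    """Convert each row to percentages of that letter's presentations."""
    result = {}
    for presented, row in counts.items():
        n = sum(row.values())
        result[presented] = {
            r: (100.0 * c / n if n else 0.0) for r, c in row.items()
        }
    return result


# Invented data: P presented four times, reported as E three times.
trials = [("P", "E"), ("P", "E"), ("P", "E"), ("P", "P"), ("E", "E")]
pct = row_percentages(confusion_counts(trials))
```

The diagonal of `pct` holds the correct response percentages, and the off-diagonal cells hold the confusion rates discussed in the subsections that follow.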

Fingertips

In the non-mediated condition, participants exhibited consistently high performance rates for the fingertip site, rarely performing at lower levels. These elevated performance rates differed significantly (p < 0.05) from those at all hand sites in the mediated condition, including Fingertips ().

Table 3. Descriptive data (mean and standard deviation (SD)) per hand sites for mediated and non-mediated TFS conditions.

A parallel trend of high performance was observed in the mediated condition, with occasional lapses in accuracy. Notably, correct response rates for fingertip sites were significantly higher than for the Index Finger and Palm site within the mediated condition (p < 0.05).

These observations align with the findings in the confusion matrix (Figure 4), affirming participants’ reliable identification of vowels (A, E, I, O, U) in both conditions. However, there was a significant decline in performance for the letter P in the mediated TFS, t(17) = −3.964, p < 0.001, with a 75% confusion rate between P and E likely attributable to the similarity in tactile cues – both letters activate the same actuator on the index finger but with different durations (i.e., a tap for E and a tap-and-hold for P). This confusion underscores the challenges of differentiating tactile signals that share hand site characteristics using a mediated communication device.

Index finger

In the non-mediated condition, the performance scores for the Index Finger site varied widely. While these scores were significantly lower than those for the Fingertip and Unique Site locations, they significantly exceeded those observed in the mediated condition for the Index Finger site. The raincloud plots (Figure 5) illustrate a shift toward a broader spread of mid-level scores and some lower scores in the mediated condition, indicating increased variability.

Distinct patterns of stimulation were associated with Index Finger letters, delivered on the distal and proximal phalanges for D and F, intermediate and proximal for K, and solely the intermediate for X. The non-mediated condition demonstrated relatively robust performance for D and F, which significantly decreased in the mediated condition. Specifically, D was often misidentified as other index-related letters, including E, while F was predominantly confused with D, occurring in 39% of instances (see Figure 4). This confusion likely stems from the hardware limitations of the HaptiComm, necessitating the implementation of F and D on the same side of the finger, unlike the non-mediated condition where they are located on different sides of the finger. In contrast, performance for K and X remained consistent across both experimental conditions, though only marginally better than chance, suggesting frequent confusion between K and X, which may be lessened in the context of spelling full words rather than isolated letters.

Palm

In the mediated condition, the Palm site demonstrated the participants’ lowest performance, falling below chance levels, as evidenced by its significant difference from all other mediated and non-mediated conditions. This contrasted with the non-mediated condition, where despite a few errors, performance rates remained relatively consistent (). For the nine letters associated with the Palm site (see Table 1), the Tap sensation was primarily employed, except for Y and Z, which used a Slide sensation (see Figure 2). Despite the tactile distinction, Y was frequently confused with L. However, L itself had a correct response rate of 58%, with most errors distributed among other palm site letters. The correct response rate for Z was only 36%, with the most common confusion occurring with the Slide letters Y and H, at 19% and 11%, respectively.

The mediated condition posed significant challenges for letters B, G, M, N, R, and V, likely due to their shared actuators and similar locations on the palm. In stark contrast, these letters were accurately identified in the non-mediated condition, achieving higher correct response rates.

Unique sites

While both conditions exhibited high correct response rates for Unique sites, the mediated condition demonstrated significantly higher performance variability than non-mediated (see and ). This variability implies that while distinctive tactile signals were generally perceived with consistency, the communication method introduced some variability in recognition.

Letters J and T maintained comparable recognition rates across both conditions. In the mediated condition, letters C, H, and Q achieved high correct recognition rates of 81%, 89%, and 72%, respectively. However, these rates did not reach perfect recognition (100%) observed with the non-mediated condition.

In contrast, recognition rates for letters S and W were significantly lower in the mediated condition, with S mistaken for T in 22% of cases and W confused with H in 44% of instances. These findings underscore the importance of distinct and salient tactile cues, as even Unique sites may not be distinct enough to prevent confusion.

Participant feedback

Participants were requested to assess the task difficulty for both experiment conditions on a 7-point Likert scale (1 – very easy, 7 – very difficult). In the non-mediated condition, the average task difficulty was 2.24 (SD: 1.15, 95% CI: 0.53). For the mediated condition, task difficulty had an average score of 5.44 (SD: 1.38, 95% CI: 0.64). Most participants perceived the task with a human experimenter as easier than with the HaptiComm. Some participants reported difficulty distinguishing letters located on the palm and letters E and P, aligning with the corresponding results. Finally, some participants highlighted the challenge of mapping mediated TFS to non-mediated TFS as their palm was facing down in the mediated condition and facing up in the non-mediated condition.
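The reported 95% CI values are consistent with the half-width of a normal-approximation interval, z × SD / √n. The short check below assumes that all 18 participants gave ratings and that z = 1.96 was used; neither assumption is stated in the source, and the function name is illustrative.

```python
import math


def ci_half_width(sd: float, n: int, z: float = 1.96) -> float:
    """Half-width of a normal-approximation confidence interval for a mean."""
    return z * sd / math.sqrt(n)


N_PARTICIPANTS = 18  # assumed: every participant provided a rating

non_mediated = round(ci_half_width(1.15, N_PARTICIPANTS), 2)  # 0.53
mediated = round(ci_half_width(1.38, N_PARTICIPANTS), 2)      # 0.64
```

Under these assumptions the intervals are roughly 2.24 ± 0.53 and 5.44 ± 0.64, which do not overlap.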

Preliminary insight from end-users

Advancing the development of the HaptiComm for tactile communication requires meticulous consideration of the valuable insights provided by end-users within the Deafblind community, the intended beneficiaries of this technology. This section includes diverse feedback from three Deafblind users gathered through informal interactions and open-ended discussions. They were instructed to place their hands on the HaptiComm and asked to recognize letters and words and share comments on their experiences interacting with the device.

User 1 found it hard to distinguish letters due to blending, possibly caused by timing or personal difficulty, saying, “The letters are not very clear. It’s hard to distinguish which letters are being played on your hand, it’s quite subtle, it’s not well defined, the letters tend to blend. Maybe it’s the timing, but maybe it’s just me.”

User 2 initially struggled with hand positioning but had a positive overall experience, comparing the sensations to playing with a mechanical typewriter during childhood and praising the device for converting sound into touch: “It’s perfect, perfect. Turning sound into touch is fantastic.” They also noted that the letters were very quick.

User 3, one of the coauthors, noted challenges with random letter recognition, with around 70% accuracy and confusion among certain letters (especially E and P, as well as B, N, G, L, M, and N), but found word recognition more positive. They stated, “I rarely made an error in word recognition for common words. This is because receiving works a lot like the auto-complete feature. I’m usually 3–5 words ahead, and reception is more akin to error checking than actual recognition.” However, they also mentioned challenges with unfamiliar words, requiring multiple attempts for accurate recognition, a common issue in both HaptiComm and human TFS. They highlighted the importance of sentence context in understanding communication and appreciated HaptiComm’s extended pause feature for distinguishing between words. Furthermore, they observed that the device initially ran too fast for human perception and required adjustment, commenting, “It runs tooooooo fast for human perception, and we had to slow it down (2–4 letters per second are decipherable, with 2 letters per second being a comfortably sustainable rate).” This critical feedback highlights potential issues with letter clarity and the blending effect of tactile signals interfering with clear communication. These observations were corroborated by the results of our comparative study.

Discussion

Our comprehensive analysis comparing non-mediated and mediated TFS methods revealed performance discrepancies across various hand sites. Insights from Deafblind users who had actively engaged with the HaptiComm aligned with our findings, indicating a consistent trend of superior recognition rates with non-mediated TFS. Several letters had notably lower recognition with the mediated HaptiComm device, especially those on the palm, those sharing actuators on the index finger, and those with unique stimulation patterns.

The differences in how well people with limited prior experience in TFS perform tasks with and without mediation highlight specific difficulties depending on where the letter is signed on the hand. Specifically, the letter P exhibited a significant decline in recognition on the fingertip in the mediated condition. This trend extended to the index finger, affecting the recognition of letters D and F, diverging from the robust recognition rates observed in the human condition. Although less pronounced, both conditions showed degraded recognition for letters K and X.

Communication involving the palm presented significant challenges in the mediated condition, with a substantial drop in performance for the letters B, G, L, M, N, R, V, Y, and Z, with R being the only letter to also exhibit degraded performance in the non-mediated condition. The Unique Site group was not immune to these challenges, with S and W showing significant recognition declines with the HaptiComm. This could be attributed to the lower spatial acuity and skin continuity of the palm relative to the fingers, requiring closer actuators to achieve satisfactory results.

While the brief training may have varied among participants, it does not fully account for the lower performance rate in mediated TFS. Participants demonstrated proficiency with non-mediated TFS, evidenced by average task difficulty ratings, primarily in the easy to somewhat easy range. Factors influencing performance rates include hand positioning, stimulus timing, and information encoding. Although participant hand size showed no statistical significance, some participants adjusted their hand positions on the device to feel all actuators, potentially affecting performance. Stimulus duration may have significantly impacted performance, especially for letters reliant on duration discrimination (i.e., 40 ms for E and 80 ms for P). This could be caused by participants using a predictive strategy and responding before the end of the stimulation (Ziat et al., 2010).

Palm orientation, facing up or down, may have influenced performance, aligning with previous research on skin surface positioning (Oldfield & Phillips, 1983). The skin surface position relative to the stimulus determines the perceptual experience, which is also consistent with Gibson’s observation on tactual perception, where the perceiver considers their skin surface orientation relative to them and the object in contact with the skin (Gibson, 1966).

Comparing our findings with those of Russo et al. (2015), we observed notable parallels and distinctions in the challenges encountered with gesture and tactile recognition systems. Russo et al. identified noisy data from sensors as a primary source of error, particularly impacting the recognition of gestures like P and K. For instance, P was often mistaken for A and K for P or L due to the limitations in discriminating similar hand poses where relative joint distances do not vary significantly.

These complexities highlight the challenges in emulating the subtleties of human TFS with haptic technology. The end-user’s firsthand account emphasizes the need for a tactile output that is efficient in communication and aligns with the qualitative aspects of touch that are familiar and comfortable for the user.

Compared with basic tactile devices such as the Tactaid and Vocoder, the HaptiComm introduces notable advancements. The system provides a high level of flexibility to meet diverse end-user requirements. This flexibility extends to both hardware and software, allowing for customization of hardware layout, shape, size, and implementation, as well as software programmability. The actuators used in the device have adjustable properties to accommodate these features, so users can modify the speed and intensity of tactile output to suit individual sensory preferences and processing capabilities. In addition, the HaptiComm has low latency and rapid pattern generation that exceeds human perceptual capacities and can potentially outperform human interpreters. While the HaptiComm represents these significant advancements, it also highlights the complexity of tactile communication, a modality where human touch provides intricate cues through pressure, touch dynamics, and multi-point contact (see Raisamo et al. (Citation2022) for an overview of these features in mediated social touch).

As the HaptiComm device evolves, accurately capturing and conveying the rich expressiveness and variability of human TFS becomes paramount. Considerations include clearer differentiation of stimulus duration, especially for letters like P and E, and a more detailed replication of multi-finger and palm interactions to enhance tactile communication accuracy for users.

These initial findings set the stage for future improvements in device recognition rates before expanding into more complex forms of communication. Such advancements benefit TFS and contribute to the broader field of tactile communication technologies, enhancing autonomy and connectivity within the Deafblind community. While comparing with other tactile communication devices is challenging due to differing approaches and languages, our research underscores common issues, including accurate tactile sensation replication, device-user interface design, and overall user experience.

The study highlights the critical importance of distinct and salient tactile cues, particularly in areas prone to confusion. The observed challenges, especially with the palm-based letters and shared actuators, underscore the need for refining HaptiComm’s hardware design to better differentiate tactile signals in these contexts. While resting hands on the HaptiComm in a relaxed state could alleviate the strain on shoulders and arms from prolonged use, the difficulties reported by participants in mapping mediated TFS to non-mediated TFS, considering the orientation of the palm, suggest the necessity for user-friendly adaptations in future iterations of the device. Additionally, the current study explored the greater flexibility in pattern generation that mediated TFS offers and that is not available in non-mediated TFS. Whether allowing inexperienced participants to assign their own patterns to letters would affect performance awaits investigation.

Conclusion

Our study sheds light on the strengths and challenges of the HaptiComm device. While recognizing the device’s overall efficacy in facilitating tactile communication, the identified performance discrepancies emphasize the ongoing need for refinement and optimization to seamlessly integrate mediated TFS into the communication repertoire of individuals with dual sensory impairments. Future iterations and developments should focus on the specific challenges highlighted in this study, with continuous input from end-users serving as a guiding principle in the evolution of assistive technologies for the Deafblind community.

Further research should explore the use of HaptiComm in real-world scenarios, such as dynamic communication settings where users interact using more complex phrases and sentences. This exploration could validate the device’s utility in everyday communication tasks and provide insights into necessary adjustments for broader usage contexts. Additionally, it is crucial to investigate the long-term learning effects and user adaptation over time. Such studies could provide deeper insights into the device’s practicality and usability beyond initial training sessions, examining how users’ proficiency and comfort with the device evolve.

Author contributions

All authors contributed equally to this work. They all read and agreed to the submitted version of the manuscript.

Data sharing

Data are available upon request by contacting the corresponding author directly.

Acknowledgements

This research was supported by a Google CS-ER grant awarded to Dr. Mounia Ziat. We thank the reviewers for their feedback.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Supplementary material

Supplemental data for this article can be accessed online at https://doi.org/10.1080/10400435.2024.2369547

Notes

1 Throughout this manuscript, we reference a communication technique named “Australian Deafblind Tactile Fingerspelling.” This system, one of several used within the Australian Deafblind community, focuses on the tactile discernibility of each sign, which may represent letters, numbers, words, concepts, ideas, and other information. For simplicity, the experiment is limited to alphabetical signs only. Despite similarities, this system should not be confused with other forms of communication such as “Tactile Auslan,” “Pro-Tactile,” “Tactile ASL,” or any other form.

References

- American Foundation for the Blind. (2022). Technology resources for people with vision loss. https://www.afb.org/blindness-and-low-vision/using-technology/assistive-technology-products/braille-printers

- Bettelani, G. C., Averta, G., Catalano, M. G., Leporini, B., & Bianchi, M. (2020). Design and validation of the readable device: A single-cell electromagnetic refreshable braille display. IEEE Transactions on Haptics, 13(1), 239. https://doi.org/10.1109/TOH.2020.2970929

- Caporusso, N. (2008). A wearable Malossi alphabet interface for deafblind people. In Proceedings of the Working Conference on Advanced Visual Interfaces, AVI ’08 (pp. 445–448). Association for Computing Machinery, New York, NY, USA.

- Chang, C. M., Sanches, F., Gao, G., Johnson, S., & Liarokapis, M. (2022, October). An adaptive, affordable, humanlike arm hand system for deaf and deaf-blind communication with the American sign language. In 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan (pp. 871–878).

- Duvernoy, B., Kappassov, Z., Topp, S., Milroy, J., Xiao, S., Lacôte, I., Abdikarimov, A., Hayward, V., & Ziat, M. (2023). HaptiComm: A touch-mediated communication device for deafblind individuals. IEEE Robotics and Automation Letters, 8(4), 2014–2021. https://doi.org/10.1109/LRA.2023.3241758

- Duvernoy, B., Topp, S., Milroy, J., & Hayward, V. (2020). Numerosity identification used to assess tactile stimulation methods for communication. IEEE Transactions on Haptics, 14(3), 660–667. https://doi.org/10.1109/TOH.2020.3045928

- Dyzel, V., Oosterom-Calo, R., Worm, M., & Sterkenburg, P. S. (2020). Assistive technology to promote communication and social interaction for people with deafblindness: A systematic review. Frontiers in Education, 5. https://doi.org/10.3389/feduc.2020.578389

- Edwards, T., & Brentari, D. (2020). Feeling phonology: The conventionalization of phonology in protactile communities in the United States. Language, 96(4), 819–840. https://doi.org/10.1353/lan.2020.0063

- Galvin, K., Ginis, J., Cowan, R., Blamey, P., & Clark, G. (2001). A comparison of a new prototype Tickle Talker™ with the Tactaid 7. Australian and New Zealand Journal of Audiology, 23(1), 18–36. https://doi.org/10.1375/audi.23.1.18.31095

- Galvin, K., Mavrias, G., Moore, A., Cowan, R., Blamey, P., & Clark, G. (1999). A comparison of Tactaid II+ and Tactaid 7 use by adults with a profound hearing impairment. Ear and Hearing, 20(6), 471–482. https://doi.org/10.1097/00003446-199912000-00003

- Gelsomini, F., Tomasuolo, E., Roccaforte, M., Hung, P., Kapralos, B., Doubrowski, A., Quevedo, A., Kanev, K., Makoto, H., & Mimura, H. (2022). Communicating with humans and robots: A motion tracking data glove for enhanced support of deafblind. In 55th Hawaii International Conference on System Sciences (pp. 2056–2064). ScholarSpace.

- Gibson, J. J. (1966). The senses considered as perceptual systems.

- Goldish, M. L. H., & Taylor, M. H. E. (1974). The optacon: A valuable device for blind persons. Journal of Visual Impairment & Blindness, 68(2), 49–56. https://doi.org/10.1177/0145482X7406800201

- Gollner, U., Bieling, T., & Joost, G. (2012). Mobile Lorm glove: Introducing a communication device for deaf-blind people [Paper presentation]. Proceedings of the Sixth International Conference on Tangible, Embedded and Embodied Interaction, TEI ’12, Kingston, Ontario, Canada (pp. 127–130). Association for Computing Machinery.

- Iwasaki, S., Bartlett, M., Manns, H., & Willoughby, L. (2019). The challenges of multimodality and multi-sensoriality: Methodological issues in analyzing tactile signed interaction. Journal of Pragmatics, 143, 215–227. https://doi.org/10.1016/j.pragma.2018.05.003

- Jaffe, D. (1994). Evolution of mechanical fingerspelling hands for people who are deaf-blind. Journal of Rehabilitation Research and Development, 31(3), 236–244.

- Johnson, S., Gao, G., Johnson, T., Liarokapis, M., & Bellini, C. (2021). An adaptive, affordable, open-source robotic hand for deaf and deaf-blind communication using tactile American sign language [Paper presentation]. IEEE Engineering in Medicine & Biology Society (pp. 4732–4737).

- Kim, J., & Kwon, D.-S. (2020). Braille display for portable device using flip-latch structured electromagnetic actuator. IEEE Transactions on Haptics, 13(1), 59–65. https://doi.org/10.1109/TOH.2019.2963858

- Matsuda, Y. (2016). Teaching interface of finger braille teaching system using smartphone. 2016 8th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China (Vol. 2, pp. 115–118).

- Meade, A. (1987). Dexter: A finger-spelling hand for the deaf-blind. Proceedings. 1987 IEEE International Conference on Robotics and Automation, Raleigh, NC, USA (Vol. 4, pp. 1192–1195).

- Miyagi, M., Nishida, M., Horiuchi, Y., & Ichikawa, A. (2006). Analysis of prosody in finger braille using electromyography. 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA (pp. 4901–4904).

- Oldfield, S. R., & Phillips, J. R. (1983). The spatial characteristics of tactile form perception. Perception, 12(5), 615–626. https://doi.org/10.1068/p120615

- Ozdamar, O., Eilers, R. E., & Oller, D. K. (1987). Tactile vocoders for the deaf. IEEE Engineering in Medicine and Biology Magazine, 6(3), 37–42. https://doi.org/10.1109/MEMB.1987.5006436

- Ozioko, O., & Hersh, M. (2015). Development of a portable two-way communication and information device for deafblind people. In Assistive technology (pp. 518–525). IOS Press.

- Ozioko, O., Taube, W., Hersh, M., & Dahiya, R. (2017). Smartfingerbraille: A tactile sensing and actuation based communication glove for deafblind people. In IEEE ISIE, Edinburgh, UK (pp. 2014–2018).

- Raisamo, R., Salminen, K., Rantala, J., Farooq, A., & Ziat, M. (2022). Interpersonal haptic communication: Review and directions for the future. International Journal of Human-Computer Studies, 166, 102881. https://doi.org/10.1016/j.ijhcs.2022.102881

- Reed, C. M., Tan, H. Z., Jiao, Y., Perez, Z. D., & Wilson, E. C. (2021). Identification of words and phrases through a phonemic-based haptic display: Effects of inter-phoneme and inter-word interval durations. ACM Transactions on Applied Perception, 18(3), 1–22. https://doi.org/10.1145/3458725

- Reed, C. M., Tan, H. Z., Perez, Z. D., Wilson, E. C., Severgnini, F. M., Jung, J., Martinez, J. S., Jiao, Y., Israr, A., Lau, F., Klumb, K., Turcott, R., & Abnousi, F. (2018). A phonemic-based tactile display for speech communication. IEEE Transactions on Haptics, 12(1), 2–17. https://doi.org/10.1109/TOH.2018.2861010

- Russo, L. O., Farulla, G. A., Pianu, D., Salgarella, A. R., Controzzi, M., Cipriani, C., Oddo, C. M., Geraci, C., Rosa, S., & Indaco, M. (2015). Parloma – a novel human-robot interaction system for deaf-blind remote communication. International Journal of Advanced Robotic Systems, 12(5), 57. https://doi.org/10.5772/60416

- Sorgini, F., Caliò, R., Carrozza, M. C., & Oddo, C. M. (2018). Haptic-assistive technologies for audition and vision sensory disabilities. Disability and Rehabilitation: Assistive Technology, 13(4), 394–421. https://doi.org/10.1080/17483107.2017.1385100

- Tan, H. Z., Durlach, N. I., Reed, C. M., & Rabinowitz, W. M. (1999). Information transmission with a multifinger tactual display. Perception & Psychophysics, 61(6), 993–1008. https://doi.org/10.3758/BF03207608

- Tan, H. Z., Reed, C. M., Jiao, Y., Perez, Z. D., Wilson, E. C., Jung, J., Martinez, J. S., & Severgnini, F. M. (2020). Acquisition of 500 English words through a tactile phonemic sleeve (TAPS). IEEE Transactions on Haptics, 13(4), 745–760. https://doi.org/10.1109/TOH.2020.2973135

- Tomovic, R. (1960). Human hand as a feedback system—International federation of automatic control. Moscow, 2, 1119. https://doi.org/10.1016/S1474-6670(17)70142-5

- Willoughby, L., Iwasaki, S., Bartlett, M., & Manns, H. (2018). Tactile sign languages. Handbook of Pragmatics, 21, 239–258.

- Zhao, S., Israr, A., Lau, F., & Abnousi, F. (2018). Coding tactile symbols for phonemic communication. CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada (pp. 1–13).

- Ziat, M., Gapenne, O., Stewart, J., & Lenay, C. (2005). A comparison of two methods of scaling on form perception via a haptic interface. International conference on Multimodal interfaces, Trento, Italy (pp. 236–243).

- Ziat, M., Hayward, V., Chapman, C. E., Ernst, M. O., & Lenay, C. (2010). Tactile suppression of displacement. Experimental Brain Research, 206(3), 299–310. https://doi.org/10.1007/s00221-010-2407-z

- Ziat, M., Lenay, C., Gapenne, O., Stewart, J., Ammar, A. A., & Aubert, D. (2007). Perceptive supplementation for an access to graphical interfaces. In Universal access in human computer interaction. Coping with Diversity: 4th International Conference on Universal Access in Human-Computer Interaction, UAHCI 2007, Held as Part of HCI International 2007, Beijing, China, July 22-27, 2007, Proceedings, Part I 4 (pp. 841–850). Springer Berlin Heidelberg.