?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.Abstract

Benchmark dose (BMD) modeling is now the state of the science for determining the point of departure for risk assessment. Key advantages include the fact that the modeling takes account of all of the data for a particular effect from a particular experiment, increased consistency, and better accounting for statistical uncertainties. Despite these strong advantages, disagreements remain as to several specific aspects of the modeling, including differences in the recommendations of the US Environmental Protection Agency (US EPA) and the European Food Safety Authority (EFSA). Differences exist in the choice of the benchmark response (BMR) for continuous data, the use of unrestricted models, and the mathematical models used; these can lead to differences in the final BMDL. It is important to take confidence in the model into account in choosing the BMDL, rather than simply choosing the lowest value. The field is moving in the direction of model averaging, which will avoid many of the challenges of choosing a single best model when the underlying biology does not suggest one, but additional research would be useful into methods of incorporating biological considerations into the weights used in the averaging. Additional research is also needed regarding the interplay between the BMR and the UF to ensure appropriate use for studies supporting a lower BMR than default values, such as for epidemiology data. Addressing these issues will aid in harmonizing methods and moving the field of risk assessment forward.

1. Introduction

The benchmark dose (BMD) approach was introduced more than 30 years ago as a way of refining the estimate of the point of departure (POD, also known as the reference point [RP]) (Crump Citation1984; Stara and Erdreich Citation1984; Dourson et al. Citation1985). Additional publications and workshops followed (Farland and Dourson Citation1992; Leisenring and Ryan Citation1992; Hertzberg and Dourson Citation1993; Allen et al. Citation1994a, Citation1994b; Barnes et al. Citation1995; Kavlock et al. Citation1995; US EPA Citation1995; Slob Citation2002; Brown and Strickland Citation2003; Slob Citation2014a, Citation2014b). The first BMD-based safe dose, specifically for methylmercury, was loaded onto EPA’s Integrated Risk Information System (IRIS) in 1995. Guidance and general descriptions on the use of the BMD followed (Klaassen Citation1995; US EPA Citation2000; EFSA Citation2009; EFSA Citation2017). With this level of guidance and acceptance from regulatory agencies, BMD modeling is now considered the preferred approach for deriving PODs/RPs for developing “safe dose” estimates such as reference doses (RfDs), acceptable daily intake values (ADIs), or similar values, generically termed “risk values” in this article.

The advantages of the BMD are that it can be any dose level, takes the entire dose–response curve into account, and is based on a consistent response level across studies and study types. In contrast, the disadvantages associated with the use of no-observed-adverse-effect levels (NOAELs) or other direct tested study doses as the POD are well documented (Crump Citation1984; Dourson et al. Citation1985; Kimmel and Gaylor Citation1988; Barnes et al. Citation1995; US EPA Citation2012; EFSA Citation2017). These include:

The NOAEL is limited to the experimental doses tested;

NOAELs are based on a single data point of a single effect and, therefore, ignore most of the available dose–response information of this single effect;

The observed experimental response at the NOAEL may vary between studies, making it harder to compare studies;

The NOAEL approach does not allow estimation of the probability of response for any dose level;

Studies conducted with fewer animals per dose group tend to yield higher NOAELs due to decreased statistical sensitivity. This is the opposite of what one might desire in a regulatory context because there is a disincentive for better designed, larger studies.

An additional advantage to BMD modeling is that it allows for a more efficient use of animals, allowing more information and better estimates to be obtained from a given number of animals in an experiment, compared with the NOAEL approach (Gaylor et al. Citation1985; Slob Citation2014a, Citation2014b).

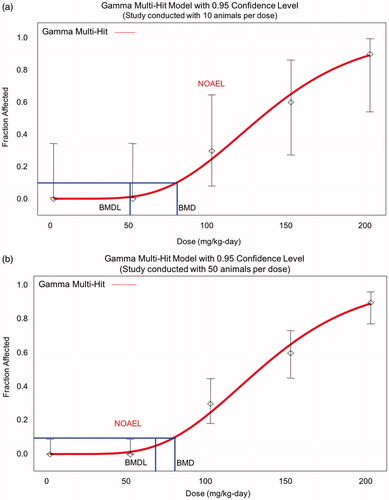

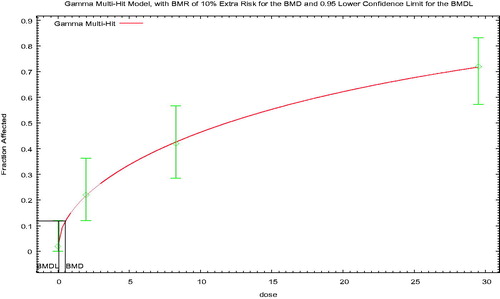

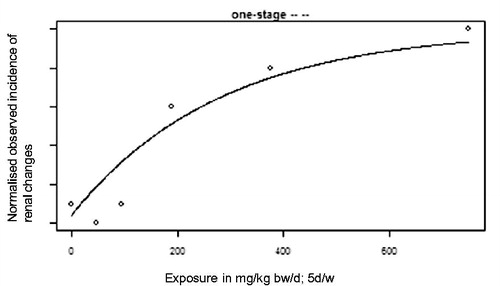

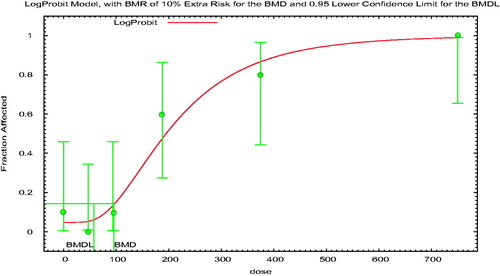

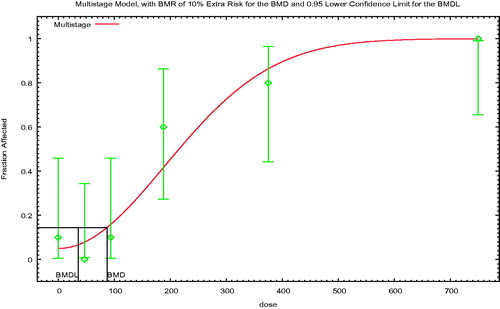

The last bullet may require some additional explanation. A primary advantage of using a BMD vs. a NOAEL is that the former more appropriately accounts for study size (and thus the study’s statistical power), and allows consistent comparison across studies via use of a consistent benchmark response (BMR). For example when using the NOAEL, real differences in response rates among groups may be deemed statistically insignificant if the study has insufficient power, leading to identification of an inappropriately high NOAEL for the study with lower confidence. By contrast, using the BMD approach, confidence limits around the BMD will be tighter for better studies and better models, and the resulting lower bound estimates on the BMD (i.e. BMDLs) will be higher (with smaller studies or poorer models having wider confidence limits and lower BMDLs), all other things being equal (). Thus, the BMD approach appropriately reflects the greater degree of precision afforded by a larger study or a better experimental model, resulting in more confidence in the results.

Figure 1. Implication of sample size on NOAEL and BMDL. (a) When there are only 10 animals/group, the response at 100 mg/kg/d in this example is not statistically significant, and so may be considered a NOAEL, but the BMDL is close to 50 mg/kg/d. (b) The greater study sensitivity with 50 animals/group means that the same fraction affected at 100 mg/kg/d is now statistically significant, and so 50 mg/kg/d is considered a NOAEL, but the BMDL is higher than in Figure 1(a). Thus, the BMDL appropriately reflects study sensitivity.

Despite wide acceptance of the BMD approach, there are a number of issues related to its application that can be challenging or confusing, both for novices and sometimes even for experienced risk assessors. There are also several aspects where differences in opinion exist, and/or where guidance continues to evolve. In particular, the European Food Safety Authority (EFSA Citation2017) and the US Environmental Protection Agency (US EPA Citation2012) have both developed guidance documents for BMD derivations that differ on several key issues. The purpose of this article is to identify areas where consensus exists and areas where different perspectives exist. Identification of areas of consensus and of disagreement is important in moving toward harmonization, that is transparency, communication, and a shared understanding of approaches, not necessarily standardization (Sonich-Mullin et al. Citation2001; Rhomberg et al. Citation2011). Case studies are used to highlight differences in approach and the implication of such differences. In addition, the article identifies and addresses several key areas of confusion among risk assessors. However, this article is not intended as an exhaustive review of the literature or all aspects of the state-of-the-science with regard to BMD modeling. It is also noteworthy that a WHO project to update its guidance on BMD modeling is underway and will address several of the key differences between the US EPA and EFSA guidance at greater depth, particularly from the statistical perspective.

2. Issues

2.1. General description of the method

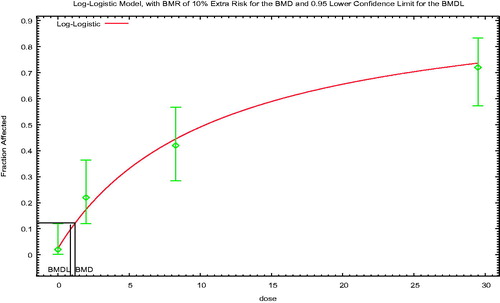

BMD modeling is conceptually relatively simple. A flexible mathematical model (or group of models) is fit to experimental data, and the dose corresponding to a defined response level is identified. The defined response level is termed the BMR, and the corresponding dose is termed the BMD1; most risk assessment applications use the lower 95% confidence limit on the BMD (i.e. the BMDL) and EFSA (Citation2017) also recommends that the entire confidence interval (i.e. including the upper bound on the BMD, that is, the BMDU) be described.

In practice, there are several additional potential challenges to BMD modeling. First, unlike the approach using a NOAEL or lowest observed adverse effect level (LOAEL), where the study NOAEL/LOAEL can often be identified by inspection of the study data, it is often necessary to model more than one endpoint in order to identify the critical effect2. This is because the endpoint with the lowest NOAEL is not necessarily the endpoint with the lowest BMDL, due to differences in the response in the low dose region, the slope, and potential differences in bounds between dichotomous and continuous endpoints. In practice, a rule of thumb is to model all endpoints with a LOAEL within a factor of 10 of the lowest LOAEL in the database. Evaluation of multiple models for multiple endpoints also can make the modeling process more time consuming than use of the NOAEL/LOAEL approach, although user-friendly software enhancements (BMDS and PROAST) over the past several years have helped to decrease the time needed. Some additional mathematical sophistication is needed to ensure the modeling is done properly and to appropriately interpret the model results, although again the availability of user-friendly software and guidance documents have lowered the barriers to use of BMD modeling. Finally, differences in interpretation can also result with BMD modeling, as discussed in more detail below, although guidance documents (US EPA Citation2012; EFSA Citation2017) and ongoing efforts at harmonization aim to decrease such differences, which are probably no larger than similar interpretation differences for the NOAEL approach.

As described in the rest of this critical review, there are also several areas of active discussion or disagreement relating to this deceptively simple series of steps. Differences exist between the US EPA (Citation2012) and EFSA (Citation2017) guidance and associated software in the mathematical models used, the reasons for choosing those models, appropriate constraints on model parameters, and the approach used to choose a final model and associated BMD(L), or whether model averaging should be used instead of choosing a single model.

Before addressing the specific issues related to the modeling and its application, it is useful to provide some additional brief background on the specifics of the modeling. Risk can be expressed as either “additional risk” or “extra risk.” Additional risk expresses the increased probability of response over background. Extra risk takes into account the background response and is essentially the additional risk in the animals that do not develop the endpoint under consideration spontaneously. When the background incidence of a particular effect is small, the BMD results are comparable between these two approaches; when the background is large, there is a larger difference between the additional and extra risk.

(1)

(1)

(2)

(2)

where p(d) and p(0) are the risks at dose d or background, respectively. Risk here can be the probability of adverse response in an individual, or the fraction responding in a population. Both the US EPA (Citation2012) and EFSA (Citation2017) recommend the use of extra risk.

Three general types of data can be addressed in the modeling: dichotomous data, continuous data, and nested data. Dichotomous data, also known as quantal data, reflect the count or incidence of some endpoint. Histopathology data are a common type of dichotomous data3. In order to model dichotomous data, one needs the number affected and total sample size at each dose level. Continuous data can be any value (usually a positive value) on the number line. Endpoints associated with continuous data include body weight, serum chemistry, and hematology parameters. In order to model continuous data, one needs the mean and a measure of variability (e.g. the SD) at each dose level, as well as the sample size.

The third type of data is nested data, which are most commonly encountered in developmental toxicity studies. The data are described as nested, because the individual responses (presence or absence of effect in a fetus) are grouped (nested) within the experimental unit, the litter. Models for nested data are currently only in US EPA’s BMDS software, not in PROAST. BMDS has models only for nested dichotomous data, not for nested continuous data. In order to model nested dichotomous data, one needs the incidence of the effect of interest in each litter for each dose. These models reflect the underlying biology, in which effects in the litter are related to the physiology of the dam. Classical statistical analyses of developmental toxicity data should be conducted on a per litter basis rather than a per pup basis, since fetuses from the same dam are not statistically independent. However, the models in BMDS are able to predict fetal risk, while appropriately taking into account the similarity among fetuses from the same dam. It can do this in two ways. First, the software can take into account within-litter similarity due to features of the dam prior to dosing that may be correlated with the outcome of interest (the litter specific covariate); dam weight is a common litter-specific covariate. It can also account for within-litter similarity in response to treatment (intra-litter correlation). This latter covariate reflects the tendency of littermates to respond more similarly to one another relative to the other litters in a dose group, due in part, to the fact that the mother’s internal dose (due to individual toxicokinetics) is a critical determinant of the dose to the pups. Inclusion of the intra-litter correlation helps to ensure that the variance is appropriately estimated, avoiding mis-specification of confidence limits. Thus, the models for nested data capture both the distribution of responses within a litter, as well as the underlying probability of response that varies from litter to litter. Use of these models is more time-consuming than the standard dichotomous models, since incidence data need to be input for each litter individually4. Furthermore, since such data are not usually provided in published studies, this modeling usually requires access to the original (often unpublished) study report.

Although BMD modeling is the currently preferred approach for most risk assessments, it may not be possible to apply it to all data sets of interest, because not all data sets are amenable to BMD analysis. Some older studies, particularly acute studies where the expected control response is zero, may report only that specific effects were observed, without any incidence data. Other studies may report central tendency estimates (e.g. mean values), but not a measure of variability, as is necessary to model continuous data. One could reasonably argue that such studies are of inherently low quality and should not be used to derive any sort of risk value. However, in some cases, there may not be other alternatives to deriving a risk value or having some estimate of a risk value to protect public health, and a risk management decision may be made to use poor data in the absence of good data. In other cases, these limited data provide an important part of the weight of evidence, even if they are not used as the basis for a risk value. This could be done qualitatively or semi-quantitatively, or more formally, using such data in a Bayesian approach to help define prior estimation values. In still other cases, the risk assessor may wish to look holistically at several different related measures (e.g. different hematology parameters; or considering data on clinical chemistry, histopathology, and liver weight) to identify a biological threshold and thus an appropriate POD, while BMD modeling forces one to focus separately on the dose-response for each endpoint. In such cases, each endpoint will have a somewhat different BMDL, and it becomes necessary to choose one endpoint of a spectrum of related endpoints as the basis for the POD, while several lines of reasoning from several related endpoints may all point to the same NOAEL.5 Another situation might be if there is no dose-response for incidence, due to high background, but there is a clear increase in severity with dose. In some cases, studies with small “n” may be of particular interest because they have been conducted with larger experimental animals (e.g. dogs), for which standard study protocols recommend smaller sample sizes. For such cases, a question arises of whether the greater uncertainty associated with such small samples (and associated wide confidence limits) would be balanced by the greater relevance of experimental animal species, perhaps suggesting a preference for a NOAEL over a BMDL with wide confidence limits. Finally, US EPA (Citation2012) states that BMD modeling is not recommended in cases where the response in all dose groups is high, since extrapolation to the range of the BMR is too uncertain. In such cases, US EPA (Citation2012) recommends obtaining more data or using the NOAEL/LOAEL approach. It is noted, however, that, for many or most of the scenarios identified in this paragraph, analysts with a mathematical focus will often prefer BMD modeling, due to the rigorous and consistent statistical nature of the approach, while more biologically focused assessors may prefer alternative approaches that allow for additional integration of non-quantitative inputs.

The determination of whether BMD modeling or another approach is used may also depend on the problem formulation. For example using NOAELs may be adequate for a screening analysis where a risk management decision needs to be made in less than a day, while BMD modeling may be essential for quantitative evaluations, such as sensitivity analyses or uncertainty characterization, or for a major decision involving exposures of large numbers of people. The problem formulation may also be part of the considerations for addressing several of the other issues addressed in this article.

It is also noted that data sets with small “n” will typically have large confidence limits, potentially resulting in BMDLs much smaller than the associated NOAELs. Often such BMDLs would be preferred, as more appropriately reflecting the uncertainties in the data. However, for certain study types, such as neurotoxicity data, the use of multiple assays measuring closely related endpoints may give the assessor a higher level of certainty about a reasonable starting point (e.g. a BMD) than are reflected in the statistical limits for any single assay or endpoint. Specialized BMD modeling approaches have been developed for such data sets (Zhu et al. Citation2005).

2.2. Choice of the BMR

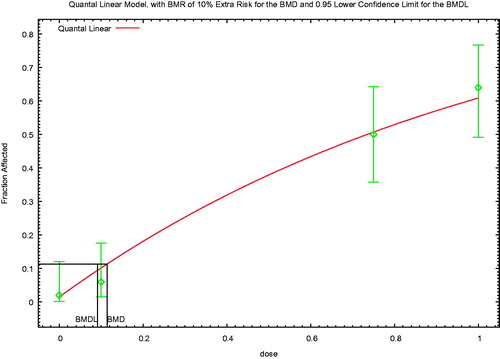

Both EFSA (Citation2017) and US EPA (2012) focus on the 10% response range in determining the BMR for dichotomous data, for similar reasons, although the implementation specifics differ. EFSA (Citation2017) notes that “various studies” estimated that the median of the upper bounds of extra risk at the NOAEL was close to 10%, suggesting that the BMDL10 may be an appropriate default (Allen et al. Citation1994a; Fowles et al. Citation1999; Sand et al. Citation2011). BMRs in this range also tend to be in the range of experimental data and thus minimize model dependence.

US EPA (Citation2012) avoids providing default BMRs, but provides the following guidance regarding the choice of BMR for dichotomous endpoints:

An extra risk of 10% is recommended as a standard reporting level for quantal data, for the purposes of making comparisons across chemicals or endpoints. The 10% response level has customarily been used for comparisons because it is at or near the limit of sensitivity in most cancer bioassays and in noncancer bioassays of comparable size. Note that this level is not a default BMR for developing PODs or for other purposes.

Biological considerations may warrant the use of a BMR of 5% or lower for some types of effects (e.g., frank effects), or a BMR greater than 10% (e.g., for early precursor effects) as the basis of a POD for a reference value.

Sometimes, a BMR lower than 10% (based on biological considerations) falls within the observable range. From a statistical standpoint, most reproductive and developmental studies with nested study designs easily support a BMR of 5%. Similarly, a BMR of 1% has typically been used for quantal human data from epidemiology studies. In other cases, if one models below the observable range, one needs to be mindful that the degree of uncertainty in the estimates increases. In such cases, the BMD and BMDL can be compared for excessive divergence. In addition, model uncertainty increases below the range of data.

Consistent with the last bullet, EFSA (Citation2017) also notes that “the default BMR may be modified based on statistical or biological considerations. For example, if the BMR is considerably smaller than the observed response(s) at the lowest dose(s), leading to the need to extrapolate substantially outside the observation range, a larger BMR may be chosen.” Additional information on the US EPA perspective on the choice of BMD is provided in the (US EPA Citation2005) cancer risk assessment guidelines. That guidance, consistent with the general preference among practitioners, recommends that the BMR be biologically reasonable, and it should be based on the lowest POD adequately supported by the data; BMDLs associated with standard responses of 1, 5, and 10% can be presented.

Thus, the US EPA (Citation2012) and EFSA (Citation2017) guidance are largely consistent regarding the BMR for dichotomous data, although the areas of emphasis differ. In contrast, the two organizations took somewhat contrasting approaches to defining the BMR for continuous data.

Both organizations allow for the BMR for continuous data to be based on toxicological grounds, on a general consensus of the degree of change that is considered biologically significant. This approach is an important part of bringing biological considerations into the evaluation. US EPA (Citation2012) describes this as the ideal approach for continuous data, while EFSA (Citation2017) notes this as a potential modification to the default. As an example of the importance of biological significance, very small changes in hematology parameters sometimes are statistically significant due to low variability in the data, but the magnitude of the change is not sufficient for the change to be considered adverse. A small control SD would mean that not much of a change from control is needed in order to reach a BMR defined as a change of 1 control SD (as addressed below).

Both groups also generally steer away from “dichotomizing” the data into responders and non-responders based on a defined adverse response level and then using quantal models. EFSA does not explicitly address the potential for dichotomizing. US EPA (Citation2012) notes the loss of information when dichotomizing data, although it states that this approach may be used when available continuous models are inadequate for the data. In practice, there have been few if any situations in the past 20 years when EPA assessments have dichotomized continuous endpoints.

The two groups differ, however, in the approach when there is not a clear consensus on the degree of change that is adverse. As a second choice after the ideal situation, the US EPA (Citation2012) notes the potential for application of a hybrid approach (Gaylor and Slikker Citation1990; Crump Citation1995; Kodell et al. Citation1995), which fits continuous models to continuous data, and presuming a distribution of the data, calculates a BMD in terms of the fraction affected. The hybrid models are not explicitly implemented in EPA’s BMDS software, but the third option is a modification of the hybrid approach and the most commonly used approach for assessments in the United States. The US EPA (Citation2012) recommends that a BMR corresponding to a change in the control mean response of one control SD always be presented for comparison purposes and that this BMR could be used in the absence of a biological consensus. The choice of one control SD is based on the work of Crump (Citation1995), who determined that, if exposure results in a shift in a normal distribution of response, and if there is a 1% “background” response in the control group, then changing the mean response by 1.1 standard deviations will result in an additional 10% of the animals reaching the abnormal response level.

EFSA (Citation2017) recommends that the BMR for continuous endpoints be defined as a percent change in the mean response. In the absence of an endpoint-specific determination, EFSA (Citation2017) recommends a default of a 5% change in the mean response. This was based on a re-analysis of data from National Toxicology Program (NTP) studies that found that the BMDL05 was, on average, close to the NOAEL (Bokkers and Slob Citation2007), as well as similar results from studies of fetal weight data (Kavlock et al. Citation1995). EFSA (Citation2017) also notes that it may be appropriate to choose a higher BMR for endpoints with larger within-group variability, a situation that it likens to using a BMD defined as a change of 1 SD.

It is interesting how the rationales of the two groups reflect differences in priorities (i.e. what the groups are trying to estimate) and differences in areas of comfort with uncertainty. US EPA (Citation2012) recommends that a defined change in the mean not be used as the basis for the BMR in the absence of a consensus on what is adverse, because “the same percent change for different endpoints (with different degrees of variability) could be associated with very different degrees of response.” Conversely, EFSA (Citation2017) expresses concern that defining the BMR in terms of the control variability means that the BMD varies with study-specific factors (measurement error; dosing error; heterogeneity in experimental conditions), and that the resulting BMD “cannot be translated into an equipotent dose in populations with larger within-group variation, including humans.” Clearly, the definition of the BMR includes judgment and elements of science policy.

For nested data, US EPA (Citation2012) notes that the study designs for most developmental studies readily support a BMR of 5% from a statistical perspective. In addition, Allen and colleagues (Allen et al. Citation1994a, Citation1994b; Kavlock et al. Citation1995) found that statistically derived NOAELs from developmental toxicity studies were comparable on average to BMDLs calculated using a BMR of 5% (and closer to that than BMDLs calculated using a BMR of 10%). EFSA (Citation2017) did not address BMRs for nested data.

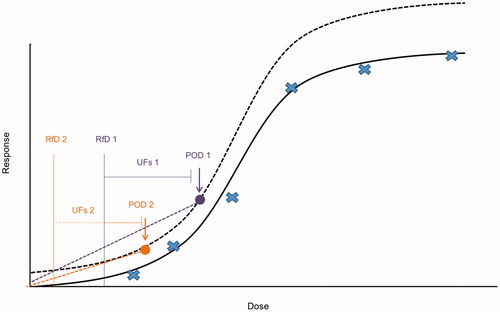

The US EPA (Citation2005) recommendation to use the lowest POD adequately supported by the data (and the corresponding US EPA (Citation2012) acceptance of using BMRs lower than 10% based on biological considerations and when the BMR is in the observable range) raises the issue of the implication of using BMRs other than 10%, when supported by the data. shows that the implication of a lower BMR varies with the approach used for low-dose extrapolation. When a no-threshold linear extrapolation is applied (e.g. for carcinogens acting via direct DNA reactivity), a lower BMR provides a better approximation of the expected actual shape of the dose–response curve in the low-dose region, because it is closer to the typical shape of the dose–response curve in this region. In other words, most dose–response curves have shallower slopes at low doses than at higher doses, and so extrapolating from a lower POD would better approximate the expected shape of the curve in the very low-dose region. (Compare the lines from POD1 and POD2.) No additional quantitative adjustment is needed, as the BMR is taken into account when extrapolating to an acceptable risk (e.g. extrapolating from a BMR of 2% to an acceptable risk of 1 in 100 000, or 1 in 1 000 000). This approach has been used to improve the dose–response estimate in animal studies with large numbers of animals [e.g. (Dourson et al. Citation2008) used a BMR of 2%].

Figure 2. Implications of alternative PODs/BMRs. When a no-threshold linear extrapolation is applied, a lower BMR provides a better approximation of the expected actual shape of the dose–response curve in the low-dose region (reflecting the typically shallower slope at low doses than at higher doses). This means that extrapolating from a lower POD would better approximate the expected shape of the curve in the very low-dose region. (Compare the lines from POD1 and POD2.) In contrast, when the endpoint being modeled has a threshold, so that uncertainty factors would be applied in deriving a safe dose, choosing a lower BMR for a given data set will result in a lower RfD (compare RfD1 and RfD2), suggesting that in this case it may be necessary to adjust the UFs to reflect the lower POD.

In contrast, when the endpoint being modeled has a threshold, so that uncertainty factors (UFs) would be applied in deriving a safe dose, it may be necessary to adjust the UFs to reflect the lower POD. Consider a chemical with a BMDL derived from a BMR of 10% relative change from controls, the limit of the existing study. When an improved study is selected that allows a BMR of 1% to be used, the corresponding BMDL could be much lower, reflecting the lower BMR and BMD6. This raises the question of whether one or more of the UFs should be modified to reflect the lower POD. Application of the same composite UF to a lower POD would automatically result in a lower risk value (compare RfD1 and RfD2, corresponding to POD1 and POD2). Thus, rather than improving the accuracy of the dose–response estimate, using a lower BMR for a threshold effect automatically reduces the final risk value, unless an adjustment is made to the UFs. Crump (Citation1995) also noted this interaction between the BMR and choice of UFs.

The approach to this issue may depend in part on whether the POD comes from an animal study or an epidemiology study. Both US EPA (Citation2012) and EFSA (Citation2017), as well as (US EPA Citation2002) suggest the potential use of a lower BMR, such as a BMR of 1%, for quantal data from epidemiology studies, since the response is often in this range and use of a 10% response would involve extrapolation upward from the data. However, none of these sources suggest modifying the corresponding UFs, or even address whether the UF should be modified. In the absence of any documentation, the underlying thinking is not clear, but it is worth highlighting some issues that should be considered. One key aspect is recognizing that the overall composite UF is generally lower when extrapolating from epidemiology studies than from animal data, by at least a factor of 10, and often a larger factor. The inherent conservatism in default UFs generally provides some extra margin of safety when extrapolating from animal data, but this additional margin is no longer available with a small uncertainty factor. It would be useful to further investigate the impact of the size of the BMR using actual epidemiology data. To do this, one would calculate the slope at different BMRs, and then determine the number of standard deviations by which the BMD(L) would need to be decreased to result in the response below some targeted percentage of the population. Repeating this for different data sets would provide an estimate of the distribution of slopes, albeit a crude one in light of the expected small number of different chemicals with appropriate data for analysis. Based on this analysis, one could determine the most appropriate UFs for different combinations of slope and BMR for epidemiology data.

It is also worth noting that the BMDL and the UFH in epidemiology studies both reflect some of the variability in the population, as well as sample size, but in different ways. The BMDL reflects the variability in the sample population based on sampling variability and the resulting uncertainty about the “true” BMD. In contrast, although the UFH also addresses population variability, it is designed to address how well the population sample reflects the sensitive population. Thus, this factor is often reduced if the study reflects sensitive humans. EPA has used considerations of the degree to which the sensitive population was included in the population sample in its reduction of UFH from 10- to 3-fold for the RfDs for arsenic, molybdenum, and selenium (Dourson et al. Citation2001). For all three of these RfDs, large samples of the general population were used to determine the POD that was the basis for the RfD. However, UFH is not automatically decreased solely because the sample is large, but because it was also heterogeneous. UFH would not be reduced if a large epidemiology study were conducted in a homogeneous population (e.g. male workers).

Because both the BMDL and the UFH may reflect different aspects related to the population size, it is worth considering the potential for some interplay between the two parameters. For example if the sample size is small, a full UFH would generally be used, but the confidence limits would also be expected to be wider, reflecting the small sample size. The use of the wider confidence limits for a smaller study in the general population, together with a full UFH might be considered to be doubly penalizing the study for the smaller size. Conversely, a large sample size would result in relatively tight confidence limits and a higher BMDL. If a reduced UFH is used in this case, based on a large sample size that covers the sensitive population, one might consider whether this is giving the study “double credit” for the larger sample size. Thus, it may make sense to have one standard UFH for epidemiology studies with the same BMR, with the idea that the sample size is accounted for in the lower limit. It would be useful to conduct further research on this issue, using model data sets extracted from real epidemiology data, in order to better characterize the interplay among sample size, BMD, and BMDL, as well as the implication of the choice of BMR and uncertainty factors.

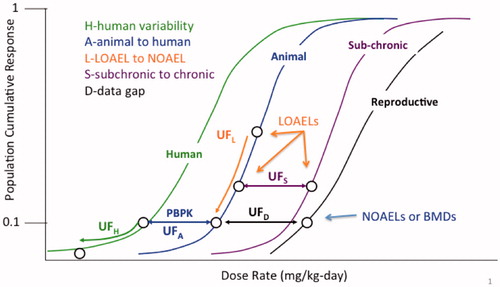

Different considerations apply in addressing whether specific adjustments to the UFs are needed when extrapolating from lower BMDLs in animal studies. But first, some brief background on UFs is needed. shows the use of these factors conceptually on a hypothetical dose–response curve for a threshold toxicant, using the scheme developed by the US EPA (Barnes and Dourson Citation1988; US EPA Citation2002). Other organizations’ schemes are similar and also can be used in this comparison. In brief, the areas of uncertainty considered are the following:

UFH – human variability, including protection of sensitive populations.

UFA – interspecies differences between the experimental animals and humans.

UFL – extrapolation from a LOAEL to a NOAEL.

UFS – extrapolation from a subchronic study to a chronic/lifetime exposure.

UFD – incomplete database, accounting for missing studies, or other database deficiencies.

Figure 3. Typical uncertainty factors used in safe dose assessment. This figure distinguishes among the uncertainty factors that extrapolate between (hypothetical) dose-response curves (i.e. UFA, UFS, and UFD), and those that move down the same dose-response curve (UFH and UFL).

Some approaches (IPCS Citation1994; Meek et al. Citation1994) combine one or more of the latter three areas of uncertainty into one factor.

shows that only two UFs address reductions in risk: UFH and UFL. UFH lowers the BMD or NOAEL for an average group of humans to a BMD or NOAEL for a sensitive group of humans, thereby lowering the overall risk to the population. UFL lowers the value of the LOAEL into the expected range of the BMD or NOAEL, thereby lowering the overall risk to the experimental group. The other three factors, UFA, UFS, and UFD, are extrapolations from one hypothetical dose–response curve to another without any corresponding change in the projected (or estimated) risk.

Categorizing the UFs into those that reduce risk and those that move from one hypothetical dose–response curve to another helps in identifying the UFs that would be relevant to a change in the BMR. Because using a BMR different from 10% affects the underlying risk, one should focus on potential modifications to the uncertainty factors UFH and UFL if one were to extrapolate from a lower BMR in an animal study. However, as noted, BMDLs are chosen to be equivalent, on average, to a NOAEL, and thus UFL is generally 1 when BMD modeling is conducted. This raises the question of how to adjust for differences in the BMR while ensuring that sensitive human populations are protected.

This line of thinking suggests that the magnitude of the composite UF (and associated degree of conservatism) be considered in identifying the BMR, to ensure appropriate estimation of the risk value. Thus, for example if extrapolating from an animal study using a chemical-specific adjustment factor (CSAF) (IPCS Citation2005) or data-derived extrapolation factor (DDEF) (US EPA Citation2014), it is useful to consider the magnitude of the combined factor and the resulting projected risk. If, for example the total factor is 10 or less and the severity of the critical effect is more than mild, it may be appropriated to consider a lower BMR.

Another issue that relates to the choice of the BMR reflects the interplay between that choice and using the BMD vs. the BMDL as the POD. As noted, the US EPA and several other organizations use the BMDL. That is, the lower bound on the dose is used, as part of a desire to reflect the uncertainty inherent in the data, and the 10% response is commonly used (for quantal data), in order to match, on average, historic NOAELs. But this is not the only combination that can match historic NOAELs on average. Health Canada’s Existing Substances Division has used the BMD instead of the BMDL, since the BMD is more stable statistically, particularly for small sample sizes. In order to be generally consistent with historic NOAELs, Health Canada has used the BMD05, and in many cases, the BMD05 is expected to be in the range of a BMDL10 (personal observations of M. Dourson and L. Haber). However, use of the BMD05 eliminates one of the advantages of the BMD approach, namely appropriately reflecting uncertainty in the point of departure, as discussed above.

2.3. Comparisons of NOAELs to BMDLs

As noted, part of the rationale for the choice of BMRs was based on a goal of having BMDLs agree, on average, with NOAELs. This does not mean, however, that the BMDL for every endpoint will be comparable to the corresponding NOAEL. It is not surprising that some BMDLs will be lower and some will be higher; that is part of the definition of “agree on average.”

Despite these basic definitional considerations, confusion sometimes arises regarding the appropriate approach and interpretation when there are apparent disparities between NOAELs and BMDLs. Sometimes, the BMDL is closer to the LOAEL than the NOAEL, or even higher than the LOAEL. In other cases, the BMDL may be substantially lower than the NOAEL. In considering how to evaluate these cases, it is important to keep in mind that the ultimate goal is to estimate a human dose that is as close as possible to, but still below, the biological threshold for adversity. To achieve this goal, the modeled POD should be near the data (non-control points) but as low as can be reliably and plausibly extrapolated along the fitted dose–response curve. However, it should be recognized that the experimental animal response at the NOAEL is not necessarily zero. In fact, statistical analyses have shown that the response at the NOAEL can be as high as 30% for the standard 10 animals/group in a subchronic study (e.g. US EPA Citation2012). This potentially higher response is reduced at low levels of human exposure because of the conservatism inherent to the application of uncertainty factors, which are multiplied together. That is, the default uncertainty factors are each based on a high percentile of the relevant distribution (e.g. the difference between human and animal sensitivity) and it is unlikely that the same chemical will fall at the high end of the distribution for all relevant uncertainty factors. Therefore, the use of the default factors (including the default factor of 100) is considered to be protective (Dourson and Stara Citation1983).

Thus, it is important to consider the data in detail and evaluate the reason for apparent contradictions between the BMD and NOAEL approach, rather than automatically choosing one approach or another. In particular, one should consider how the power of the study and the tightness of the data are reflected in the BMD, BMDL, NOAEL, and LOAEL. For example if the sample size is 10/group, then a 10% change in incidence is a difference in incidence of only 1 animal or subject. This magnitude of change would not be statistically significant, but it would be the basis of a BMD. The BMDL would be even lower, and a high background in the control group would lead to an even smaller BMDL when “extra risk” is used. (High background can be thought of as a smaller “n” of animals that are left able to respond to exposure.) While this may appropriately reflect the uncertainty in the data, a risk assessor may have concerns about allowing such a small change, which could be due to random variation, to drive a very low BMDL. The risk assessor needs to consider the overall dose-response, as well as the nature of the endpoint and historical control data (as an indication of the variability in that high background) in considering whether it is appropriate to use such a low BMDL. Conversely, studies with small “n” may raise the question of whether an adverse change is really occurring, but the dose group size or overall study size is not sufficiently large to allow its detection. Due to the potential impact of small changes in the incidence, some have expressed reluctance to use the BMD approach for datasets with small “n.” In addition, methods to address this issue have been explored specifically for neurotoxicology testing, when a large number of related assays are conducted with the same small group of animals (Zhu et al. Citation2005), and in general (Leisenring and Ryan Citation1992; Brown and Strickland Citation2003).

2.4. Model formulas used for modeling

The next step after identification of the BMR is to identify the mathematical models that will be used for the modeling. This is another area where the US EPA and EFSA guidance differ7. Both groups note that the ideal approach is to use a biologically based dose–response (BBDR) model that describes the chemical’s toxicokinetics and toxicodynamics. Both groups also note that it is rare to have such a model and that in the absence of a BBDR, the modeling is largely a mathematical curve-fitting exercise.

EPA and EFSA appear to differ in philosophy on the approach to use to identify candidate models in the absence of a BBDR model. US EPA (Citation2012) states that no recommended model hierarchy was available at the time. Instead, coupled with the BMDS software (U.S. Environmental Protection Agency, Washington, DC), the EPA approach is to use a variety of flexible models. BMDS includes nine dichotomous models, eight “alternative” dichotomous models, and five continuous models (counting the variations on the exponential model as one model).

EFSA (Citation2017) also recommends a variety of flexible models, but within a narrower range. For the continuous models, EFSA (Citation2017) recommends only the 3- and 4-parameter versions of the exponential and Hill models. EFSA notes that the recommended models have the following characteristics:

They always predict positive values, e.g. organ weight cannot be ≤0.

They are monotonic (i.e. either increasing or decreasing).

They are suitable for data that level off to a maximum response.

They have been shown to describe dose–response data sets for a wide variety of endpoints adequately, as established in a review of historical data (Slob and Setzer Citation2014).

They allow for incorporating covariates in a toxicologically meaningful way.

They contain up to four parameters, which have the same interpretation in both models. families, in particular: a is the response at dose 0, b is a parameter reflecting the potency of the chemical (or the sensitivity of the population), c is the maximum fold change in response compared to background response, and d is a parameter reflecting the steepness of the curve (on log-dose scale).

EFSA (Citation2017) also notes that it no longer recommends the models in these families with fewer parameters, because “BMD confidence intervals tend to have low coverage when parameter d is in reality unequal to one.”

Before continuing to describe the EFSA approach, we wish to add some comments on the EFSA concerns about adequate coverage of confidence intervals. The exponential Hill models with fewer parameters set the power (d) parameters equal to one. So, it would not be surprising if those models had poorer coverage when, in fact, d was greater than 1. But that is somewhat circular: one does not know a priori what the shape of the dose-response is, and dropping those models would not be appropriate when the underlying dose–response shape does have d = 1, except insofar as the 4-parameter models have the d = 1 models as special cases. Although these are special cases, if a restriction is imposed on the power parameter (such that it is greater than or equal to 1, as is often done to assure finite slope near background), then that special case is on the boundary of the allowed parameter space and is worth including on its own.

EFSA (Citation2017) notes that the power and polynomial models of BMDS are not recommended because they are additive with respect to the background response, which could result in fitted curves predicting negative values. While this may be a theoretical concern, it does not seem to be an issue in practice; the authors have never encountered this issue in modeling data sets over many years. Similarly, while it is possible to obtain non-monotonic curves with the polynomial model, these results are avoided by constraining (restricting) the polynomial coefficients so that they are either non-positive or non-negative. The remaining characteristics apply to some, but not all, of the BMDS models, though all apply for at least some of the models. Thus EFSA appears to place more emphasis on all of the suggested models having certain parallel characteristics, rather than ensuring the characteristics are met by having a variety of options.

For dichotomous models, EFSA lists eight recommended models. This list is again largely a subset of the BMDS models, with two deviations. First, EFSA also includes latent variable models based on an underlying continuous response, which is dichotomized based on a (latent) cutoff value that is estimated from the data. In addition, EFSA recommends only the two-stage version of the linearized multistage (LMS) model, while BMDS allows for higher stages. EFSA explains the elimination of the three-stage model as being because it rarely provides a better fit to the data. EFSA also notes that the logit and probit models have only two parameters, and a minimum of three appears to be needed. EFSA states that “it is general experience that these two models provide poor fits to real data sets that include more than the usual number of doses (three plus controls).”

With regard to the differences in choices of models for both dichotomous and continuous endpoints, there appears to be little harm (and some potential benefit) in including the models as options when the BMDL is chosen based on the best-fitting model(s). It is also worth noting that in such cases, the best fitting model has often in our experience been chosen from among the models that EFSA would eliminate. However, when model averaging approaches are applied (see below), it may become more important to limit the suite of models being tested. Model averaging is additionally discussed in Section 2.7.

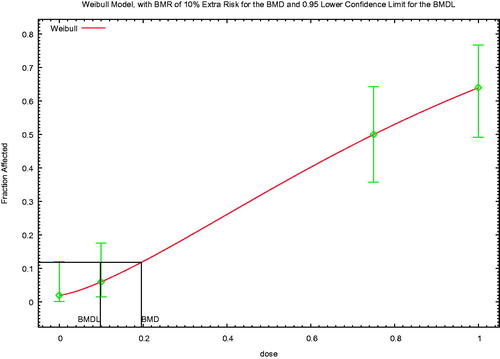

2.5. Restriction/constraining parameters

One of the significant areas of disagreement between the current US EPA (Citation2012) and the EFSA (Citation2017) guidance relates to restricting (also known as constraining) parameters. The EPA’s guidance is that one should first run the “restricted” models, to avoid biologically problematic dose–response curves, and to run the unrestricted models only if an acceptable fit is not obtained with any of the restricted models. While there is more general agreement that parameters should be restricted to avoid non-monotonic curves, the issue of constraining models that are steeply supralinear is more controversial. US EPA (Citation2012) states:

In general, the modeler should consider constraining power parameters to be 1 or greater (this is the default in the BMDS software…). However, if the observed data do appear supralinear, unconstrained models or models that contain an asymptote term (e.g., a Hill model) warrant investigation to see whether they can support reasonable BMD and BMDL values. If they cannot, other model forms should be considered for a POD; at times, modeling will not yield useful results and the NOAEL/LOAEL approach might be considered, although the data gaps and inherent limitations of that approach should be acknowledged.

The EPA guidance presents a fairly nuanced discussion of this issue, noting that it is not unusual for data in the observed range to be supralinear, such as in the case of a Michaelis–Menten relationship, and so excluding power parameters less than 1 may result in worse fit to the data. In addition, since BMD modeling does not involve extrapolation to very low doses, “the high slopes seen for some unconstrained models near the origin is not in itself a fundamental problem.” However, EPA notes that the calculated BMDs and BMDLs can be very low.

EFSA (Citation2017) removes the recommendation for first running the restricted models, based largely on the work of Slob and Setzer (Citation2014), biomathematicians who have played a key role in the development of BMD methodology for EFSA and US EPA, respectively. Slob and Setzer (Citation2014) state that the concern about infinite slope at zero dose is not a plausible one, since the curve has zero slope at zero dose when the x-axis is log-dose instead of linear dose. Therefore, they argue, there is no biological reason to reject the unrestricted models.

The common argument against the unrestricted models is that the low-dose slope is steep because data are missing in the region of interest. According to this argument, the curve would exhibit a more traditional dose–response shape if the lower-dose data were available, and thus such data sets with an initial steep slope have higher uncertainty. Slob and Setzer (Citation2014) come to a similar conclusion, via somewhat different reasoning. They note for some datasets (where the unrestricted model has very steep low-dose slope), the BMDL is very small, reflecting that insufficient dose–response data are available to provide an estimate of the BMD with reasonable precision. They suggest, however, that, instead of constraining the parameters, that using historical data from comparable studies for comparable endpoints together with the study of interest can result in a usable POD. Interestingly, Slob and Setzer (Citation2014) also note that for the data sets that they evaluated, dose–response curves with infinite slope at zero dose occur for about 50% of the chemicals evaluated. The high prevalence of such cases underlines the importance of the development of consistent guidance on this issue.

This issue of constraining the power parameter is discussed in much greater detail in the WHO manuscript under development mentioned above. There appears to be general agreement that the steep slope reflects uncertainty in the dose-response, due to insufficient data in the low-response region. However, additional discussion is needed regarding the proper approach to deal with this important paucity of data. While an ideal situation might be to repeat the experiment to obtain low-response data, repeating studies is generally avoided due to cost concerns, animal welfare concerns, and sometimes because of the urgency for a decision.

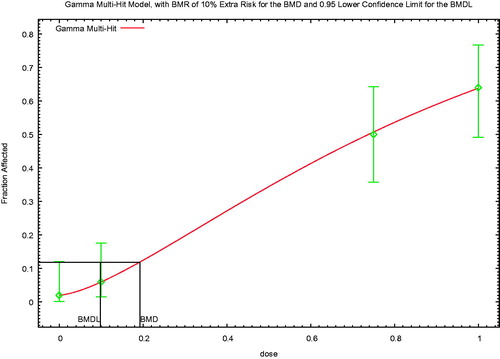

2.6. Model choice

2.6.1. US EPA guidance

US EPA (Citation2012) recommends the following approach for choosing the model to use for calculating a POD. Because in our experience the choice of model is often one of the most controversial aspects of BMD modeling, the US EPA guidance is quoted verbatim (except for removing references to other sections of the US EPA guidance), followed by discussion, to allow each reader to interpret the words of the guidance.

Assess goodness-of-fit, using a value of α = 0.1 to determine a critical value (or α = 0.05 or α = 0.01 if there is reason to use a specific model(s) rather than fitting a suite of models).

Further reject models that apparently do not adequately describe the relevant low-dose portion of the dose–response relationship, examining residuals and graphs of models, and data.

As the remaining models have met the recommended default statistical criteria for adequacy and visually fit the data, any of them theoretically could be used for determining the BMDL. The remaining criteria for selecting the BMDL are necessarily somewhat arbitrary and are suggested as defaults.

If the BMDL estimates from the remaining models are sufficiently close (given the needs of the assessment), reflecting no particular influence of the individual models, then the model with the lowest Akaike information criterion (AIC) may be used to calculate the BMDL for the POD. This criterion is intended to help arrive at a single BMDL value in an objective and reproducible manner. If two or more models share the lowest AIC, the simple average or geometric mean of the BMDLs with the lowest AIC may be used. Note that this is not the same as “model averaging”, which involves weighing a fuller set of adequately fitting models. In addition, such an average has drawbacks, including the fact that it is not a 95% lower bound (on the average BMD); it is just the average of the particular BMDLs under consideration (i.e. the average loses the statistical properties of the individual estimates).

If the BMDL estimates from the remaining models are not sufficiently close, some model dependence of the estimate can be assumed. Expert statistical judgment may help at this point to judge whether model uncertainty is too great to rely on some or all of the results. If the range of results is judged to be reasonable, there is no clear remaining biological or statistical basis on which to choose among them, and the lowest BMDL may be selected as a reasonable conservative estimate. Additional analysis and discussion might include consideration of additional models, the examination of the parameter values for the models used, or an evaluation of the BMDs to determine if the same pattern exists as for the BMDLs. Discussion of the decision procedure should always be provided.

In some cases, modeling attempts may not yield useful results. When this occurs and the most biologically relevant effect is from a study considered adequate but not amenable to modeling, the NOAEL (or LOAEL) could be used as the POD. The modeling issues that arose should be discussed in the assessment, along with the impacts of any related data limitations on the results from the alternate NOAEL/LOAEL approach.

The US EPA (Citation2012) guidance also notes that model averaging is possible, but at the time the guidance was written, additional research and guidance were recommended. As discussed in the following section, model averaging appears to be on the verge of being the state of the science, and not solely a tool for mathematical experts. Model averaging is described by EFSA (Citation2017) as the method of choice. Model averaging capabilities are part of PROAST, and US EPA is actively working on incorporating model averaging capabilities into its BMDS software.

The AIC noted in item (4) above is a measure of overall fit that takes into account the number of model parameters, and is calculated as −2LL + 2p, where LL is the log-likelihood at the maximum likelihood estimates (MLEs) for p estimated parameters. The AIC, rather than the goodness of fit p values, is used to compare model outputs from a data set, to account for the general improvement of fit as additional parameters are added within a family of models. The +2p factor in the AIC equation addresses the question of whether the improvement in fit justifies estimating additional parameters. That is, among models with similar fit, the AIC prefers less complex models. Smaller AICs (to the left on the number line) are better when comparing two models. Note that only the difference in AIC is meaningful, not the actual value. Because the AIC includes an adjustment of 2 times the number of parameters, a difference of 2 in the AIC (a unitless measure) is often considered meaningful, since a difference of that magnitude means that adding the parameters improved the fit more than the penalty for adding the parameters.

A few aspects of US EPA’s guidance are worthy of particular note. First, EPA describes the process of model selection as a two-step process. The first step is identifying which models are acceptable, based on the goodness of fit p values, visual fit, and scaled residuals (a measure of local fit). The second step is the choice of the model (or models, if the BMDLs are averaged) from among those with acceptable fit. The guidance document appears to avoid being prescriptive with regard to the choice of the model, stating that the “the model with the lowest AIC may be used” (emphasis added). Similarly, in the fifth point above, no definition is provided for “sufficiently close” BMDL estimates. This was a conscious decision by EPA to not define sufficiently close; a factor of 3 was specified in the draft guidance. EPA’s training materials state that a factor of no more than 3 can be used, but one can use a smaller factor (Jeff Gift and Allen Davis, personal communication, November 18, 2016).

Despite the generally non-prescriptive nature of this portion of the EPA guidance, in practice, EPA assessments have often been based on the model with the lowest AIC, to the point that many consider it mandatory to choose the model with the lowest AIC, regardless of other fit considerations. Although this approach does result in ease of use, consistency and avoids concerns about the potential for “cherry picking,” it may not always be scientifically justified, such as in cases of poor fit in the low-dose region or when biological understanding would suggest one or more models over the one with the lowest AIC. Also, as noted, small differences in AIC are not considered statistically meaningful. While it appears that modelers consider a value of 2 or more to be a meaningful difference in the AIC for the reason noted above, it is not clear how small the difference can be in AIC values and still be meaningful for model selection purposes. This suggests that, rather than relying solely on the AIC, it is useful to consider all fit criteria more holistically.

In addition to the previously described criteria (i.e. scaled residuals, visual fit, AIC, etc.), the EPA guidance notes the importance of accurately estimating the variances for continuous data sets. The variance parameter is estimated simultaneously with the dose–response model parameters, and so the accuracy of the estimation of the variance affects the model fit parameters. Inaccuracies in the estimate of the variance estimate may bias estimates of the model parameters. In addition, when the BMR is defined in terms of a one standard deviation change in the control mean, accurate estimation of the control variance is essential for an accurate estimation of the BMD(L). Unfortunately, BMDS currently includes only two options for estimating the variance. The variance can be assumed to be constant with dose, or it can be modeled as increasing with dose, proportional to the mean raised to a power. If neither of these two approaches can adequately describe the variance, then a common approach, albeit suboptimal, is to revert to the NOAEL/LOAEL approach. However, mathematically sophisticated analysts can use spreadsheets to fit models with independent variances, that is, separate variance terms for each dose group, that are independent of one another and of the underlying dose–response pattern. In fact, such an approach reflects the least restrictive set of assumptions about the underlying data-generating process. While it requires estimation of additional parameters (dose-specific variance estimates), this is a viable option much preferred over a NOAEL/LOAEL derivation when the interest is primarily on the dose–response pattern rather than the variability around that pattern. In other words, the independent variance approach does not allow one to extrapolate the variance estimates to other dose or response levels. It is possible that the independent variance approach may be incorporated into a future release of BMDS.

The BMD:BMDL ratio is not explicitly addressed in the EPA guidance, although some of the authors of this article see this as part of the guidance (see below). In practice, the BMD:BMDL ratio is part of the output of the Wizard program that is part of BMDS, and large ratios indicate greater uncertainty in estimating the most likely actual BMD value. The use of the BMD:BMDL ratio as one measure of influence of model on BMDL is reasonable to some analysts but not others, and discussed in greater depth in Section 2.8.

The same general approach as for quantal data is used for nested data sets for quantal endpoints, although there are some additional complications. For example the reported scaled residuals are litter-specific, rather than dose-specific. In addition, visual fit generally carries less weight, again reflecting the variability across litters.

2.6.2. EFSA guidance

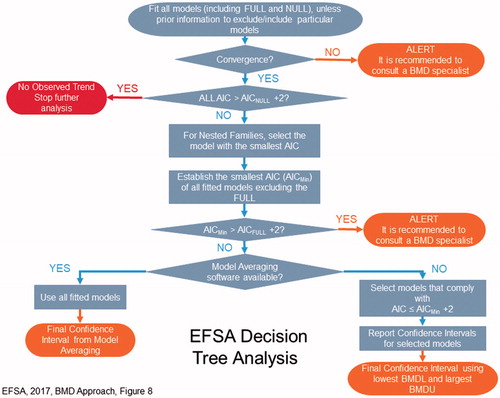

The EFSA (Citation2017) approach is shown in . There is less ambiguity for interpretation in the EFSA guidance than the US EPA approach, and so the EFSA approach is paraphrased in portions here. However, two additional definitions are needed prior to discussing the EFSA approach. As defined by EFSA:

The full model describes the dose–response relationship simply by the observed (mean) responses at the tested doses, without assuming any specific dose–response. It does, however, include the (same) distributional part of the model and thus it may be used for evaluating the goodness of fit of any dose–response model.

The null model expresses the situation that there is no dose-related trend, i.e. it is a horizontal line, and may be used for statistically evaluating the presence of a dose-related trend.

After fitting all of the models (including the full and null models), unless there is prior information to exclude or include particular models, the first step is to evaluate model convergence. If the model did not converge to a single maximum likelihood, it is possible that there may be more than one set of parameter estimates that would result in similar log-likelihood values. The EFSA guidance states that, “convergence may not be critical in providing a reliable BMD confidence interval, and therefore a message of non-convergence does not necessarily imply that the model should be rejected.” Instead, a BMD specialist (not further defined) should be consulted. The lack of convergence could be because the data are not informative or the model may be over-parameterized.

EFSA’s next step is to compare the AIC of the null model with that of the fitted models (i.e. various mathematical expressions used to fit the data), to evaluate for evidence of a dose-related trend. EFSA (Citation2017) states that a model shows statistical evidence of a dose-related trend, if its AIC is less than AICnull − 2. If the AICs of all of the fitted models are greater than AICnull – 2, then there is no evidence of trend and no further analysis is conducted.

For nested families, EFSA then chooses the model with the lowest AIC, so that only one model from each family is carried into the next step.

To evaluate adequacy of fit, the AIC is then compared with the AIC of the full model. EFSA (Citation2017) states that “the AIC of a fitted model should be no more than two units larger than the full model’s AIC. If the model with the minimal AIC is more than two units larger than that of the full model (AICmin > AICfull + 2), this could be due to the use of an inappropriate dose–response model (e.g. it contains an insufficient number of parameters), or to misspecification of the distributional part of the model (e.g. litter effects are ignored), or to non-random errors in the data.” If AICmin > AICfull + 2, it is recommended to consult a BMD specialist.

This approach narrows the models down to those that EFSA considers statistically acceptable. Note that one potential limitation it that the EFSA guidance does not address visual fit or scaled residuals, despite the broad acceptance of these as biologically relevant parameters. Under the EFSA approach, these biologically important parameters of fit are not considered in either the weighting of models for the preferred approach of model averaging or are they considered in the choice of individual models.

At this point, EFSA’s preferred approach is to use model averaging. If the appropriate software is available, all models (excluding the full and null models) are averaged, weighted by the AIC. If model averaging software is not available, then the models are sorted to identify those with “relatively good fit” and “relatively poor fit.” Models with “relatively good fit” are defined as the model with the lowest AIC, and all models with an AIC no more than two units larger than that. EFSA then recommends that one approach is to choose the lowest BMDL of these models. However, EFSA focuses more on the complete confidence interval, reporting the final confidence interval using the lowest BMDL and the highest BMDU. EFSA provides somewhat ambiguous guidance with regard to the choice of the BMDL. Instead of choosing the lowest BMDL, the guidance states:

…this procedure may not be optimal in all cases, and the risk assessor might decide to use a more holistic approach, where all relevant aspects are taken into account, such as the BMD confidence intervals (rather than just the BMDLs), the biological meaning of the relevant endpoints, and the consequences for the HBGV (health-based guidance value) or the MOE (margin of exposure). This process will differ from case to case and it is the risk assessor’s responsibility to make a substantiated decision on what BMDL will be used as the RP (reference point, the term used by EFSA for the POD). One example is a situation where the BMD confidence interval with the lowest BMDL is orders of magnitude wide. This means that the true BMD might be much higher than the BMDL, which raises the question if that BMDL would be an appropriate RP.

The EFSA guidance further states in the context of wide confidence limits:

In some cases, the selected RP may not be the lowest BMDL, for example, when this lowest BMDL concerns an effect that is also reflected by other endpoints (e.g. the combination of liver necrosis and serum enzymes) that resulted in much smaller confidence intervals but with higher BMDLs. In that case, it might be argued that the true BMDs for those analogous endpoints would probably be similar, but one of them resulted in a much wider confidence interval (e.g. due to large measurement errors).

This final approach appears to at least partially address the issue noted in Section 2.1 that BMD modeling has typically been conducted endpoint by endpoint, not allowing the integration across related endpoints that is common in the use of the NOAEL approach to identify a POD. It will be of interest to see examples of cases where several related endpoints are modeled and a BMDL based on an endpoint with tighter confidence limits is used as the POD. See also the discussion of BMD:BMDL ratios in Section 2.8.

2.6.3. A holistic approach

Many of the authors of this article have advocated a holistic approach to model selection. This approach follows the US EPA guidelines, but does not automatically choose the model with the lowest AIC. Instead, it considers multiple measures of fit, including p values, scaled residuals, the visual fit and evaluation of model influence. Like the EFSA approach, models that have AICs that differ by more than 2 from the lowest AIC of the acceptable models are excluded. For data sets where the BMD:BMDL ratios resulting from different models differ greatly, further examination is useful to evaluate those model(s) with large ratios, to determine what aspects resulted in the larger ratios. This examination can help in assessing underlying elements of the data or models that can inform the best choice of model. Biological considerations can be taken into account, preferring models that are concave upward over models that are concave downward, unless there is a biological reason to expect the latter curve shape. Some models may exhibit a perfect visual fit, but have a shape of the dose–response curve that does not appear to be biologically reasonable. Some models have excellent residuals but have larger BMD:BMDL ratios. Some models have larger AICs, but smaller ratios or residuals. All of these considerations are taken into account in identifying the best model(s). Where there is no clearly best model, BMDLs are often averaged for models with similar fit. Alternatively, the lowest BMD and BMDL can be selected as a conservative choice. In all of these situations, however, it is important to be transparent about the rationale for the choice, and to recognize that much of the rationale is based on scientific judgment and science policy, rather than clear scientific distinctions.

This holistic approach has the advantage of including biological judgment, considering fit more broadly (particularly in the low-dose region), and focusing on statistically meaningful differences in AIC, rather than choosing a model based on small differences in AIC. It also allows the assessor to focus on biologically meaningful differences across models, rather than making decisions solely based on statistical considerations. This holistic approach of focusing on biologically meaningful differences is consistent with both US EPA and EFSA guidance, which allows for a role of scientific judgment by the risk assessor within the confines of their decision steps. However, it is acknowledged that consistency using this approach can be challenging, and it is not even guaranteed that a single assessor would always obtain the same conclusion. This can make the holistic approach more challenging in regulatory applications, where consistency and verifiability are critical. Development of quantitative metrics that capture some of the additional considerations included in the holistic approach, including the visual fit, would help to make the holistic approach more systematic. This may be facilitated by the development of modeling averaging methods that reflect local fit (residuals) and visual fit. Specifically, it would be useful to have a way to quantitatively capture whether the curvature of the fitted curve is consistent with biology and the data.

2.6.4. Summary regarding model selection

We have described three different approaches to choose the best model; additional approaches for choosing the best model are possible, particular variations on the holistic approach. It is recognized that some of the issues of model choice will be eliminated by the use of model averaging, which is gaining acceptance. However, as discussed in the next section, model averaging does not completely eliminate the issues discussed above. Instead, issues of whether and how much to consider biological issues are reframed in terms of what method to use to weight the different models.

Finally, it is important to note that some of the differences in approaches to model choice reflect important underlying differences in perspective regarding the goal of the modeling. US EPA (Citation2012) talks about identifying the model that best fits the data, although the thinking at US EPA has now moved toward model averaging. EFSA (Citation2017) states that “the BMD approach does not aim to find the single statistically best estimate of the BMD but rather all plausible values that are compatible with the data. Therefore, the goal is not to find the single best fitting model, but rather to take into account the results from all models applied.” As discussed in Section 2.8, the “best” model identified using statistical metrics sometimes does not lead to the best estimate of the BMD, based on consideration of both biology and statistics. Therefore, although historic practice (and current best practice) is to choose a single model or average BMDLs, there is a utility in considering multiple models, but it is necessary to ensure that these multiple models capture biologically reasonable BMDs. However, the EFSA approach of describing all plausible values that are compatible with the data risks losing important information. Among the plausible values for the BMD (and associated models), some values and models are more plausible than others. Therefore, perhaps a more nuanced goal would be to identify the more plausible values (taking into account biological judgment), and then identify either a “best” estimate (if it is defined as the most plausible), or identify the range of most plausible values.

2.7. Model averaging

There has been a growing trend in chemical risk assessment to better characterize uncertainty and to have the final assessments appropriately reflect the underlying uncertainty (NRC Citation2008, Citation2011, Citation2014).There has also been a growing recognition that picking the “best” model and using the associated BMDL may not fully reflect the true model uncertainty (Wheeler and Bailer Citation2007; Piegorsch et al. Citation2013; Ringblom et al. Citation2014). Model averaging addresses those concerns by including the results of several models (appropriately averaged) when estimating the BMD and characterizing the uncertainty about that estimate (i.e. through calculation of a BMDL). Note that “model averaging” here does not mean calculating the average BMD or BMDL from several different models. Rather, it refers to a (potentially computationally intensive) approach for calculating a weighted average of model-predicted responses as a function of dose. That is, one can think of a dose–response relationship that, at each dose, computes a weighted average of predictions of the individual models, in essence based on a mathematical function that includes each of the individual model functions. The model average BMD is then based on the dose that gives the defined risk (BMR) after appropriate optimization. The associated uncertainties are then used to calculate the corresponding BMDLs. This can be done using either frequentist approaches (Wheeler and Bailer Citation2008; Piegorsch et al. Citation2013; Piegorsch et al. Citation2014) or using Bayesian methods (Bailer et al. Citation2005; Morales et al. Citation2006; Dankovic et al. Citation2007).

Model averaging is gaining acceptance for BMD modeling. It has been implemented for standard suites of dichotomous models as part of PROAST, and an R version has been developed by Wheeler and Bailer (Citation2008) using the mathematical functions that are part of BMDS. Kan Shao used the method described by Wasserman (Citation2000) to develop Bayesian model averaging that is available at benchmarkdose.com. US EPA held a workshop in 2015 on issues related to model averaging for continuous endpoints, and documentation of the workshop discussions are publicly available (US EPA Citation2016).

A key issue for model averaging is how the models are weighted. For example Wheeler and Bailer (Citation2008) note that model averaging can be conducted with models weighted by information theoretical values, such as the AIC, Bayesian information criterion (BIC), etc. This sort of weighting can appropriately reflect the overall goodness of fit, thus giving more weight to the models that do a better job of describing the overall dataset, while penalizing for addition of parameters. There are other options, especially in a Bayesian framework, where prior model weights can be assigned (perhaps based on understanding of the underlying biology) and updated as part of the Bayesian analysis. Furthermore, the weights themselves can be made parameters of the “averaged model” to be estimated (or updated) along with the individual-model parameters. Other possible approaches might include including the scaled residuals in the low-dose region as part of the weighting, or focusing on the AIC in the low-dose region, although we are not aware of any research or development along those lines.

However, as noted earlier, the focus of BMD modeling is to describe the data in the range of the BMD. Therefore, model averaging methods where the weighting can focus on the region of the dose-response near the BMD would be useful. Another approach would be to set priors based on information or toxicological insight for individual model parameters. For example one could define a low probability that a power parameter is less than 1, thus downweighting models that are supra-linear at low doses.

2.8. BMD:BMDL ratio