ABSTRACT

Immersive technologies, such as virtual and augmented reality, initially failed to live up to expectations, but have improved greatly, with many new head-worn displays and associated applications being released over the past few years. Unfortunately, ‘cybersickness’ remains as a common user problem that must be overcome if mass adoption is to be realized. This article evaluates the state of research on this problem, identifies challenges that must be addressed, and formulates an updated cybersickness research and development (R&D) agenda. The new agenda recommends prioritizing creation of powerful, lightweight, and untethered head-worn displays, reduction of visual latencies, standardization of symptom and aftereffect measurement, development of improved countermeasures, and improved understanding of the magnitude of the problem and its implications for job performance. Some of these priorities are unresolved problems from the original agenda which should get increased attention now that immersive technologies are proliferating widely. If the resulting R&D agenda is carefully executed, it should render cybersickness a challenge of the past and accelerate mass adoption of immersive technologies to enhance training, performance, and recreation.

1. Introduction: Identifying causes of and solutions for cybersickness: Reformulation of a research and development agenda

Virtual and augmented reality (AR) technology provides sensory input to users that often simulates real-world stimuli. The approaches taken by virtual and augmented reality are slightly different. Virtual reality (VR) aims to substitute virtual for real stimulation for one or more sensory organs. Augmented reality, on the other hand, blends real and virtual stimulation. Of course, surreal stimuli can be added to VR or AR as well. In any of these cases, virtual aspects of sensory input are presented with the goal of having users interact with them as if they are real.

Following industry practice, we will use the term eXtended reality (XR) when we refer to the shared aspects of virtual and augmented reality. eXtended Reality could transform the way we work, learn, and play. Besides its wide use in entertainment and gaming, XR has significant applications in the domains of education, manufacturing, training, health care, retail, and tourism (Stanney et al., 2020). It can transform education by permitting interaction with environments far removed, long gone, or of a dramatically different scale. It provides operational support at the point-of-need, thereby accelerating task performance while improving safety and reducing downtime and costs. It allows for training of essential skills through safe, contextually relevant, and embodied immersive experiences, even in rare and hazardous scenarios, such as training oil rig workers to handle emergencies or sailors to put out ship fires. It can support physicians in reaching patients in underserved and remote areas, and support diagnostics, surgical planning, and image-guided treatment. It is destined to reshape commerce, by supporting remote exploration of physical products, enhancing remote customer support, and fostering interactive branding. It can also revolutionize tourism via the integration of interactive elements into hotel experiences, augmented tourist points-of-interest, and immersive navigation assistance when exploring new places.

Despite the vast promise XR technology presents, a nettlesome motion sickness (a.k.a. cybersickness) problem exists (McCauley & Sharkey, 1992). The cybersickness associated with XR exposure often includes nausea, disorientation, oculomotor disturbances, drowsiness (a.k.a. sopite syndrome), and other discomforts (Graybiel & Knepton, 1976; Stanney et al., 2014). At least 5% of VR users will not be able to tolerate prolonged exposure, and ~1% of users will experience retching or vomiting, typically during prolonged exposure to a fully immersive VR headset (Stanney et al., 2003; Lawson, 2014).

Cybersickness can linger long after XR exposure and compromise postural stability, hand-eye coordination, visual functioning, and general well-being. These aftereffects generally result from the individual’s sensorimotor adaptation to the immersive experience, which is a natural and automatic response to an intersensorily imperfect virtual experience and is elicited (and often resolved) by the plasticity of the human nervous system, which recalibrates continuously to new inputs (Stanney et al., 1998). It is speculated that the problem is less severe in AR, as compared to VR, but that assumption has yet to be fully validated.

To discuss potential solutions for cybersickness, a special session was held at the 1997 Human Computer Interaction (HCI) International Conference. As a result of that (largely NASA-sponsored) session, a research and development (R&D) agenda was formulated (Stanney et al., 1998). High priority recommendations included developing an understanding of the role of sensory discordance, employing human adaptation schedules, developing and implementing design guidelines to minimize sensory conflicts, developing standardized subjective and objective measures of aftereffects to quantify the problem, establishing countermeasures to sensory cue conflicts, and improving head tracking technology and system response latency. Since that session, significant advances have been made in our understanding of the causes underlying cybersickness and in the technology facilitating XR applications. To evaluate progress since the 1997 special session, assess the current state of the field, and identify future challenges, a workshop entitled Cybersickness: Causes and Solutions was convened in Los Angeles, CA, at the SIGGRAPH 2019 conference (Special Interest Group on Computer GRAPHics and Interactive Techniques). This paper engages the participants from that workshop, three of whom participated in the development of the original 1998 agenda, in formulating an updated R&D agenda.

2. Consolidation of the literature (Lawson and Stanney)

2.1. Cybersickness revisited: The rationale for this paper

Immersive technologies, such as virtual and augmented reality, initially failed to live up to inflated expectations. They have since improved greatly, traveling along the popular (but hypothetical) Gartner Hype Cycle of innovation (Linden & Fenn, 2003) and emerging from the “trough of disillusionment” (p. 2), which contributed to waning interest in the 1990s. These immersive technologies appear to be nearing the “plateau of productivity” (p. 2), with mainstream adoption on the horizon. Unfortunately, cybersickness (a type of motion sickness associated with prolonged immersion) remains as a common user problem that stands in the way. Stanney et al. (1998) described key cybersickness-related R&D problems that would need to be solved before VR technology could live up to its potential and achieve mass adoption. This paper garnered much interest, but is due for an update, as VR and AR systems are now commercially available that are of acceptable quality and are more affordable than some smartphones today. In fact, the time has finally come when tens of millions of XR headsets have been sold worldwide, but mentions of unpleasant symptoms are emerging again in the news and are regularly discussed on blog posts. Therefore, it is appropriate to revisit the cybersickness problems discussed by Stanney et al. (1998) and to identify any needed modifications to the recommended R&D agenda.

2.2. Are we seeing the rebirth of XR now or merely another hype cycle?

The original Stanney et al. (1998) paper cited a prediction that VR would be widespread by approximately 2008. It appears that true dissemination did not begin until nearly a decade later than predicted, but it is now fully underway. The evidence that XR is undergoing a revival derives from Google Trends searches, unit sales statistics, and research publication trends, which are described below.

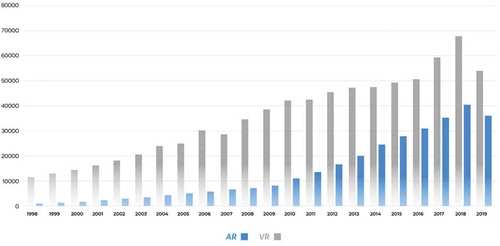

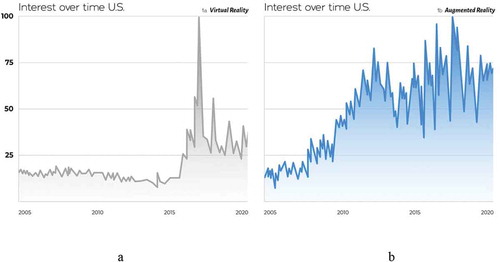

Google Trends searches reveal that VR interest did not rise steeply until the mid-2010s (see screen captures from trends.google.com). Interest rose quickly following the 2013 introduction of the earliest developer version of the Oculus Rift. For the first time, users had access to VR of acceptable quality for under 500 USD, which created a tipping point for sales, with several competitor VR headsets marketed shortly thereafter. Interest in AR was already surging at that time and has remained strong (see Figure 1), while interest in VR has waned a bit in recent years, perhaps because of consistent reports of cybersickness, and the need to completely remove a user from real-world viewing. This is reflected in the latest headset usage estimates, with 52.1 million people anticipated to use VR, as compared to 83.1 million anticipated to use AR at least once per month in 2020 (Petrock, 2020).

Figure 1. Popularity of search terms “virtual reality” (1a) and “augmented reality” (1b), implying a resurgence of interest in VR ca. 2015 and a growing interest in AR ca. 2011. (Peak popularity is assigned 100, 50% of this peak is assigned 50, etc.).

The Google Trends searches above are fairly consistent with evidence concerning the increasing sales curve of VR and AR around this same time (Dunn, 2017), as units sold numbered in the millions worldwide and are projected to become even more common by 2024 (see Table 1).

Table 1. XR headset sales by type.

The surge in general interest and sales of XR appears to match the increase in studies by research and technical groups, as reflected by the growing number of Google Scholar articles from 1998 to 2019 focused on “virtual reality” and “augmented reality” (see Figure 2).

Considering the trends described above for Google Trends searches, usage statistics, unit sales, and scholarly publications, we can assert that the age of ubiquitous XR technology finally seems to have arrived. Only time will tell if sales will continue to grow as predicted (Dunn, 2017; IDC, 2020). Will the years 2015–2020 someday be considered the “renaissance of XR,” or merely another “hype bubble?” The answer to this question will depend upon the existence of useful XR software applications and alleviation of cybersickness. As was recommended in the Stanney et al. (1998) R&D agenda, more value-added, widely adopted enterprise applications for AR and VR are still needed today to ensure XR dissemination, so the present authors feel this should be maintained in the current updated R&D agenda:

Identify and evaluate value-added XR applications, both personal and collaborative.

Develop value-added enterprise XR applications that drive mass adoption.

Beyond value-added applications, the authors believe that one of the most important factors determining mass adoption of XR technology is cybersickness. Interest in cybersickness was high in the early 2000s (see Figure 3a), likely due to VR disenchantment following the over-hyping of VR capabilities in the 1990s and a growing popular awareness of how commonly cybersickness was induced (Stanney et al., 1998). Concomitant with the increasing recent XR sales and usage, there has been a resurgence of interest in searches for the word “cybersickness.” This trend cannot be explained by a similar recent surge in interest in analogous maladies such as “simulator sickness” (see Figure 3b). The authors conjecture that interest in cybersickness is surging because there are far more XR users now, yet the modern XR systems they use still cause cybersickness. This malady must be resolved if XR technology is to experience mass adoption. The updated R&D agenda presented herein is intended to be a step toward such resolution. The next section of this paper describes the theories, probable causes, symptoms, and incidence of cybersickness associated with early and recent XR technology.

Figure 3. Popularity of search terms “cybersickness” (3a) and “simulator sickness” (3b), implying a resurgence of interest specific to cybersickness, ca. 2015, around the same time interest in XR increased (see Figure 1 and Dunn, 2017). (100 = peak interest, 50 = 50% peak, etc.).

2.3. Cybersickness: A continuing obstacle to XR use?

While XR applications are no longer as limited by cost, processing speed, or available software, it is possible that cybersickness will limit proliferation as much as it did for earlier generations of XR technology (Hale & Stanney, 2014), unless effective countermeasures are implemented. How prevalent is cybersickness with current XR? What are the symptoms? What causes cybersickness and how can it be mitigated? The rest of this paper considers such questions and highlights important knowledge gaps requiring further study. Our intent is to formulate a broad R&D agenda to ensure that the initial promise of XR is realized and to ensure that XR is “here to stay” as a valued aspect of training, operational support, commerce, and entertainment. We will first describe the latest information concerning the incidence of cybersickness, which is important in determining whether it is still a problem.

2.4. User susceptibility to cybersickness

Cybersickness incidence estimates imply that early VR headsets (available ca. 1994–2010) elicited adverse symptoms among >60% of users during their first exposure, with 40–100% being deemed motion sick (depending on the criteria, systems, tasks, and exposure durations across five studies reviewed), 5% quitting prematurely, and 5% experiencing no symptoms (Lawson, 2014). While XR systems have begun proliferating worldwide due to their affordability, cybersickness was still observed shortly after the introduction of the latest generation of VR headsets, such as the Oculus Rift (Serge & Moss, 2015). The introduction of these headsets addressed some of the headset-related recommendations from the Stanney et al. (1998) R&D agenda by creating bright, low-cost head-worn displays (HWDs). Other desired headset features are still lacking, however, such as a wide field-of-view (FOV; current displays are still limited to ~100°) that supports task performance and presence but is free from cybersickness (wider FOV often increases cybersickness; Lin et al., 2002). Also needed are untethered HWDs with as much processing speed as their tethered counterparts and further reduction in headset weight, as extended wear can still be physically fatiguing.

The 1998 R&D agenda also called for reduced latencies (but visual latency issues persist today; see Gruen et al., 2020), establishment of cross-platform software with portability (which Unity, Unreal Engine, and other development platforms now support), improved tracking technology (now often seamlessly integrated into the VR/AR headset technology itself), and creation of better haptic interfaces (tactile and force feedback is still rudimentary, but hand tracking and gesture recognition have been integrated into some VR/AR headsets). While modern multicore processors have made it possible to render complex auditory environments over headphones integrated into headsets, other senses have yet to be incorporated adequately (including vestibular, chemical, or non-haptic somatosensory cues such as body cutaneous and kinesthetic stimuli). In fact, it is unlikely that the eXtended Reality Turing Test could be passed for any XR scenarios involving body locomotion or driving until natural vestibular and somatosensory cues are adequately simulated (Lawson & Riecke, 2014; Middleton et al., 1993). Based on these findings and considerations, recommendations from the Stanney et al. (1998) R&D agenda that should be maintained include:

Create a wide FOV that supports task performance and presence but does not elicit cybersickness.

Create powerful, lightweight, and untethered AR/VR HWDs.

Reduce visual latencies.

Create peripheral devices for senses beyond vision and audition.

What can we conclude about the incidence of cybersickness in newer XR? While cybersickness still occurs in the present generation of VR headsets, there is very limited evidence in the literature to enable one to discern whether the incidence of cybersickness has changed versus previous generations, and it comes from studies designed to elicit at least mild symptoms. An update to VR incidence estimates comes from a recent dissertation by Stone (2017), who observed that 64/195 (32.8%) of subjects playing one of the three dynamic video games (for an average of 9–19 min) using an HTC Vive™ (a current generation VR headset) said they “somewhat agree” or “strongly agree” that the experience was nauseating (with 5.6% saying “strongly agree”). Davis et al. (2015) provided another incidence estimate which applies to adults using an Oculus Rift and stimuli consisting of two intentionally disturbing roller coaster scenarios. Under that level of challenge, at least one of the two coaster simulations caused 12/12 participants to get mild (or worse) nausea, with 17% quitting in one simulation and 66% in the other.

Overall, the limited cybersickness findings with the latest HWDs imply that: a) Cybersickness has not been eliminated with the newest generation of headsets; b) As with past systems, incidence of cybersickness varies greatly (due to large differences in devices, stimuli, usage protocols, individual physiology, and measures of sickness); c) At least one-third of users will experience discomfort during VR usage; d) AR usage may be less problematic but more research is needed to back this assertion; e) At least 5% of XR users are expected to experience severe symptoms in the latest generation headsets.

One factor that can affect cybersickness incidence is the ergonomics of the VR headset. The Oculus Rift VR headset, such as that used by Davis et al. (2015), is not expected to fit, on average, 16% of females and 1% of males based on its interpupillary distance (IPD) range of adjustment, while the Oculus Quest will not fit 7% of females and 1% of males; so while the latest VR headsets are closing the IPD mismatch gap, there is still room for improvement. When the visual displays in a VR headset are not positioned in front of each eye properly, normal visual accommodation and binocular vision are challenged, leading to perceptual distortion, eyestrain, headache, etc. While Oculus is one of the most accommodating designs in terms of ergonomic fit, any person without a good IPD fit could experience greater cybersickness (Stanney et al., 2020), and a poor fit is more common among people of smaller average stature, such as women. Could this be the reason that some past studies have claimed that women are more susceptible to cybersickness than men? Stanney et al. (2020) noted that another popular VR headset, the HTC Vive, is not expected to fit, on average, ~35% of females and ~16% of males based on its adjustable IPD range. This IPD fit/no-fit variable, when incorporated into a predictive model of cybersickness, was found to be the primary driver of cybersickness recovery time, with a secondary driver being motion sickness history (Stanney et al., 2020). Women have been reported to be more susceptible to motion sickness in only 50% of the studies in the literature (Lawson, 2014), with women being reported more susceptible far less often in controlled laboratory studies (versus surveys). Stanney et al. concluded that it could be the ergonomics of the technology and not sex differences that drive cybersickness up in females using certain types of VR. Many other confounding factors contribute to observed sex differences in motion sickness (Lawson, 2014), some of which, like IPD, imply that not all users are experiencing the same stimulus. Thus, presently, it cannot be said definitively that women have been proven to be more susceptible to motion sickness, nor that, when differences are observed, they are due to sex, per se.

Based on the findings in this section, important considerations in the Stanney et al. (1998) R&D agenda that should be maintained in the current recommendations include:

Determine the various drivers of cybersickness and create a predictive model.

Establish means of determining user susceptibility to cybersickness and aftereffects.

Establish countermeasures to aftereffects.

Identify factors that contribute to how provocative XR content is, and determine their relative weightings.

There is a general belief that AR HWDs will not have the same level of physiological adverse impact on users compared to VR; however, there are limited data to back this assertion. The evidence available comes from Vovk, Wild, Guest, and Kuula (2018), who claimed AR engenders negligible cybersickness, yet they excluded a relatively large number (>15%) of outliers in their data. Hughes et al. (2020) found a Simulator Sickness Questionnaire (SSQ) symptom profile of Oculomotor > Disorientation > Nausea (O > D > N) in a current generation immersive AR headset (Microsoft HoloLens 1), with an average Total sickness SSQ score of 23.69 (S.D. = 21.73) immediately following 3–40 min exposures with 30 min breaks between sessions, which puts AR firmly in the “Medium” intensity range (75th percentile) based on VR system SSQ percentiles (Stanney et al., 2014). With VR systems, a Total SSQ > 20.1 is generally associated with adverse physiological effects (e.g., ataxia, degraded hand-eye coordination, VOR shifts) and dropouts (users quitting). As an augmentation to symptom measures such as the SSQ, physiological measures of adverse aftereffects should be explored with long-duration AR exposure, to determine if there is a similar adverse physiological impact and whether this is associated with dropouts. Finally, usage protocols should be explored further, such as in Hughes et al. (2020), who found that when a usage protocol of 6–20 min AR sessions with 30 min breaks between sessions was employed, the average Total SSQ score dropped to 15.71 (S.D. = 10.4) immediately post-exposure, which is approaching the “Low” range (25th percentile; Stanney et al., 2014). Based on these findings, important considerations that were part of the Stanney et al. (1998) R&D agenda that should be maintained include:

Standardize subjective and objective measurement of aftereffects. If possible, on-line measurement approaches should be established that are integrated into XR systems.

Establish product acceptance criteria and systems administration protocols to ensure that cybersickness and aftereffects are minimized.
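The SSQ scores discussed above can be made concrete with a short scoring sketch. It assumes the commonly published SSQ scale weights (9.54 for Nausea, 7.58 for Oculomotor, 13.92 for Disorientation, and 3.74 for the Total score); these weights, the function names, and the example raw sums are not taken from this paper and are included for illustration only. The 20.1 cut-off is the Total SSQ value cited above for VR systems.

```python
# Minimal SSQ scoring sketch. The weights are the commonly published SSQ
# scale weights (an assumption here; they are not stated in this paper).
SSQ_WEIGHTS = {"N": 9.54, "O": 7.58, "D": 13.92}
SSQ_TOTAL_WEIGHT = 3.74

# Total SSQ value cited in the text as associated with adverse effects in VR.
ADVERSE_TOTAL_THRESHOLD = 20.1


def ssq_scores(raw_n, raw_o, raw_d):
    """Convert raw subscale sums (sums of 0-3 item ratings) to weighted scores."""
    scores = {
        "N": raw_n * SSQ_WEIGHTS["N"],
        "O": raw_o * SSQ_WEIGHTS["O"],
        "D": raw_d * SSQ_WEIGHTS["D"],
    }
    scores["Total"] = (raw_n + raw_o + raw_d) * SSQ_TOTAL_WEIGHT
    return scores


def symptom_profile(scores):
    """Order the subscales from highest to lowest, e.g. 'O > D > N'."""
    ordered = sorted(("N", "O", "D"), key=lambda k: scores[k], reverse=True)
    return " > ".join(ordered)


def exceeds_adverse_threshold(total, threshold=ADVERSE_TOTAL_THRESHOLD):
    """True when a Total SSQ score is above the cited adverse-effects cut-off."""
    return total > threshold


if __name__ == "__main__":
    # Hypothetical raw subscale sums for one participant.
    scores = ssq_scores(raw_n=1, raw_o=4, raw_d=2)
    print(symptom_profile(scores), round(scores["Total"], 2))
    # Group means reported in the text for the two AR usage protocols.
    print(exceeds_adverse_threshold(23.69), exceeds_adverse_threshold(15.71))
```

Applied to the group means reported above, the check flags the longer-exposure protocol (23.69) but not the shorter one (15.71), which is the contrast the usage-protocol recommendation rests on.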

2.5. Etiological hypotheses

The Stanney et al. (1998) R&D agenda identified the need to develop a better theoretical understanding of cybersickness in terms of sensory discordance and human adaptation timelines. This has yet to be fully achieved. While the facts for which a complete motion sickness theory must account have been stipulated (Lawson, 2014), there are many knowledge gaps concerning causes of cybersickness and no definitive, universally accepted theory. For this reason, the term “hypothesis” is used throughout this paper to refer to the competing schools-of-thought on cybersickness, with a focus on identifying practical recommendations that can be derived from each one. Specifically, three widely known hypotheses and one new one are introduced briefly below, with an emphasis on their contributions to the updated R&D agenda and their implications for cybersickness mitigation. However, the reader should be aware of other important hypotheses concerning motion sickness, which have emphasized the role of eye movements, rest frames, perceived vertical, and ontological development of motor control – a critical review of more than ten motion sickness hypotheses (or variants of hypotheses) is provided by Keshavarz et al. (2014).

Sensory conflict hypothesis. The sensory conflict (or neural mismatch) hypothesis of motion sickness, which is the most widely cited, postulates that motion sickness is due to sensory conflicts between expected patterns of afferent/reafferent signals from visual, auditory, tactile, kinesthetic, and vestibular activity established through previous experiences and what is being experienced in a novel environment (Oman, 1998; Reason & Brand, 1975). For example, there can be a mismatch between what the proprioceptive (tactile, kinesthetic, vestibular) senses indicate is happening in the real world (e.g., sitting still in a chair while wearing a VR headset), versus what the visual and auditory senses are indicating in the virtual world (e.g., accelerating through a virtual environment), which causes a perceptual conflict that is often sickness-inducing. The visual contributors to this mismatch are not as profound in AR because AR typically allows for viewing of real-world rest frames, such as walls, floors, etc., which is one reason AR systems tend to elicit less overt nausea than VR systems. Potential limitations of the sensory conflict hypothesis are described in the Ecological Hypothesis section of this paper and in detail in Keshavarz and Hecht (2014).

Evolutionary hypothesis. The evolutionary (or poison) hypothesis is an expansion of the sensory conflict hypothesis, which seeks to explain the origins of the most cardinal symptoms of the motion sickness response (viz., nausea and vomiting) (Money, 1990; Treisman, 1977). This is a hypothesis concerning the origin of motion sickness rather than the specific aspects of a stimulus immediately causing motion sickness. The hypothesis is that nausea and vomiting due to sensory cue conflicts are an indirect by-product of an ancient, evolved poison defense system that is being accidentally triggered by modern challenging situations of real or apparent motion (the latter of which is prevalent in XR systems). The brain has evolved in such a way that it mistakes modern real/apparent motion stimuli, which disorganize typical patterns of vestibular, visual, and somatosensory information, for a natural toxin-related malfunction in the central nervous system (CNS), which initiates an emetic response as a defense mechanism.

Oman (2012) has pondered whether the evolutionary hypothesis is an overly “adaptationist” explanation for cybersickness, as it assumes “that evolution has shaped all phenotypic traits for survival advantage” (Oman, 2012, p. 117). The key assumptions of the evolutionary hypothesis were recently challenged by Lawson (2014) who argued (in the direct evolutionary hypothesis) that there are plausible means by which ancient forms of real or apparent motion could have contributed directly to the evolution of aversive reactions (without the need for a co-opted poison response). Extending this idea, Lawson offered a modification of Treisman’s poison hypothesis (the direct poison hypothesis), which argues that the evolutionary circumstances that caused the ingestion of toxins or intoxicants were the same circumstances in which unusual, maladaptive motions of ancient origin frequently were present. Therefore, the human motion sickness response did not have to evolve accidentally by generalizing a poison response to modern motions, but could have evolved directly, because the ancient circumstances under which our primate ancestors could become poisoned (or intoxicated) also were circumstances which harmed their evolutionary fitness directly during natural conditions of real-body motion and/or visual-field motion.

Ecological hypothesis. Under the ecological hypothesis (also known as the postural instability hypothesis), motion sickness is postulated to be caused by alterations of postural control (Riccio & Stoffregen, 1991). This hypothesis suggests that a primary behavioral goal of humans is to maintain postural stability, and thus as postural instability increases, motion sickness develops. Postural instability is suggested to occur in novel environments in which “we fail to perceive the new dynamics or … are unable to … execute the control actions that are appropriate for the new dynamics” (Riccio & Stoffregen, 1991, p. 204); it lasts until the individual can adapt to the novel motion cues. As with the sensory conflict hypothesis, potential limitations of the ecological hypothesis or related findings have been raised (Bos, 2011; Golding & Gresty, 2005; Keshavarz et al., 2014; Lackner, 2014; Lubeck et al., 2015; Pettijohn et al., 2018).

Multisensory reweighting hypothesis. Another expansion of the sensory conflict hypothesis is the multisensory reweighting hypothesis, which suggests that susceptibility to cybersickness may be associated with an individual’s ability to rapidly reweight (Oman, 1990) conflicting multisensory cues in XR environments, such as visual-vestibular mismatches (Gallagher & Ferrè, 2018). Whereas vestibular cues are highly weighted in the real world, they must be down-weighted in immersive environments, where they lack validity, in order to resolve sensory conflicts, which are most pronounced in fully immersive VR headsets. Those who can rapidly adapt to these conflicts, for example, by upregulating visual cues relative to conflicting vestibular cues that do not contribute to useful estimates of the physical body while immersed in the virtual world, will experience a mild form of inoculation to cybersickness with repeat exposure to XR. Thus, individual multisensory reweighting speed may factor into the degree to which an individual experiences cybersickness.

Each one of these hypotheses, and their contributions to the updated R&D agenda, will now be discussed in more detail. The reader will note that there is a diverse range of opinions and approaches among the named section authors discussing this dynamic field of inquiry.

3. Sensory cue conflict and cybersickness (Fulvio and Rokers)

The sensory conflict hypothesis of motion sickness attributes cybersickness to conflicts between expected sensory cues established through previous experiences and those currently being experienced in a novel environment (Oman, 1998; Reason & Brand, 1975). Conflicts between vestibular and visual motion cues, prominent in VR systems, appear to be particularly troublesome. It is known that individuals vary in their sensitivity to such sensory cues. Individuals also show considerable variability in cybersickness propensity and severity – some individuals can tolerate only very brief exposure to XR (minutes or less), whereas others seem limitless in their tolerance (hours). This range of cybersickness susceptibility suggests that there are other characteristics that also vary among individuals and relate to cybersickness. Their identification can make it easier to predict in advance who will be likely to experience discomfort when using XR, and, more importantly, to develop solutions that mitigate the effects. We thus tested the a priori prediction that individuals with greater sensitivity to sensory cues will be more likely to detect sensory conflicts when placed in XR environments, and will therefore be more susceptible to cybersickness. As such, individual differences in cue sensitivity should predict differences in cybersickness susceptibility.

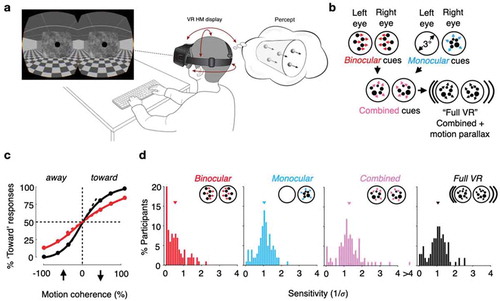

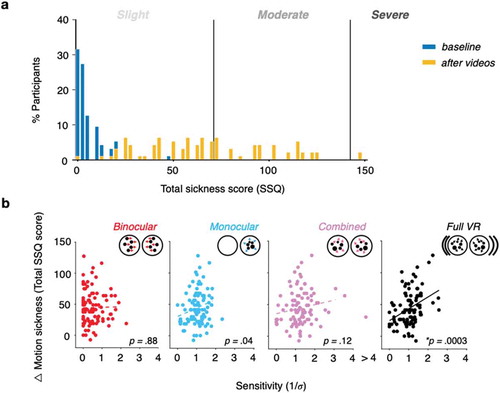

A large sample of undergraduate students (n = 95; 59 females), most of whom had little to no experience with VR devices, completed a two-part study in which they were first measured for their sensitivity to different sensory cues to motion-in-depth presented in a VR HWD, and then separately measured for their propensity for cybersickness while viewing video content in the same VR display. Finally, the relationship between the two measures for the different sensory cues was quantified in order to (i) test the prediction that greater sensitivity to sensory cues is associated with more severe cybersickness; and to (ii) determine which sensory cues in particular are sickness-inducing.

Sensitivity to sensory cues was measured using a dot motion direction discrimination task viewed in a VR HWD (see Figure 4a). Sensitivity to 3D motion defined by four sets of sensory cues was tested (see Figure 4b). One type of 3D motion was defined by binocular cues only. This Binocular motion was presented as a cloud of dots to both eyes such that when viewed in the VR display, a single cloud of dots appeared to be moving toward or away from the participant on each trial, within the context of a larger virtual room. Importantly, monocular information (dot size and density changes) was removed from this stimulus. A second type of 3D motion was defined by monocular cues only. This Monocular motion was presented as a cloud of dots to only one eye within the same virtual room on each trial, with the other eye viewing only the virtual room. Thus, binocular information was removed so that the motion direction of the dots (toward or away) was defined by the dot size and density changes in the single eye’s view. A third type of 3D motion, Combined Cue Motion, included both binocular and monocular cues, thus presenting the motion with dot size and density changes to both eyes within the virtual room. Finally, the fourth type of 3D motion, Full VR Motion, included binocular, monocular, and motion parallax cues due to head-motion contingent updating of the VR display. The motion was presented in the same way as the combined cues motion, but the viewpoint of the scene changed according to participants’ head movements.

Figure 4. Assessing the sensitivity to motion in depth under different cue conditions* (Adapted from Fulvio, Ji, & Rokers, Citation2020). * a) Observers viewed random dot motion stimuli in an Oculus DK2 head-mounted display (HMD). The stimuli simulated dots that moved toward or away from the observer through a cylindrical volume. The proportion of signal dots (coherence), which moved coherently either toward or away, and noise dots, which moved randomly through the volume, was varied in a random, counterbalanced order across trials. Observers reported the perceived motion direction. Stimuli were presented in a virtual environment that consisted of a room (the insert depicts a “zoomed out” view) with a 1/f noise-patterned plane located 1.2 m from the observer. b) Dot motion was defined by one of four sets of sensory cues, presented in a randomized blocked order. Binocular dot motion contained binocular cues to depth (i.e., changing disparity, inter-ocular velocity), but lacked monocular cues to depth (i.e., dot density and size changes). Monocular dot motion contained monocular cues to depth but lacked binocular cues. Combined cues dot motion contained both binocular and monocular cues. Full VR dot motion contained binocular and monocular cues and motion parallax cues associated with head-motion contingent updating of the display. c) Psychometric functions were fit to the percentage of “toward” responses at each motion coherence level to obtain the slope (σ) of the fit for each observer in each cueing condition. Sensitivity in each cueing condition was defined as the inverse of the slope, with larger values indicating greater sensitivity. d) Histograms of the distribution of sensitivities across observers in each of the four cueing conditions reveal considerable variability both within and across conditions. Sensitivity tends to be greater in combined cue conditions compared to when cues are presented in isolation.

The four types of dot motion were presented in blocks and the order of the blocks was random and counterbalanced across participants. Within a block, the dots had different coherence levels, such that lower coherence values made discrimination of dot motion direction more difficult. Sensitivity in each sensory cue condition was derived from the psychometric curve fit to a participant’s data across all motion coherence values, with steeper curves implying greater sensitivity (see Figure 4c). As expected, large variability in sensitivity was found across participants for any given sensory cue condition, as well as variability across sensory cue conditions (see Figure 4d). Not surprisingly, sensitivity was greater when more cues to 3D motion were available (i.e., compare “Combined cues” and “Full VR” to “Binocular” and “Monocular”).
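To make the sensitivity measure concrete, the following minimal sketch fits a cumulative Gaussian to the number of “toward” responses at each signed coherence level and reports sensitivity as 1/σ, in line with the definition given in the caption of Figure 4c. The grid search, the signed-coherence convention (negative values for “away” motion), the zero-bias assumption, and the two example observers are illustrative assumptions, not the fitting procedure or data of the original study.

```python
import math

def cumulative_gaussian(x, mu, sigma):
    """Probability of a "toward" response at signed coherence x."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def neg_log_likelihood(sigma, coherences, n_toward, n_trials, mu=0.0):
    """Binomial negative log-likelihood of the responses for a given sigma."""
    total = 0.0
    for x, k, n in zip(coherences, n_toward, n_trials):
        p = cumulative_gaussian(x, mu, sigma)
        p = min(max(p, 1e-9), 1.0 - 1e-9)  # keep log() finite
        total -= k * math.log(p) + (n - k) * math.log(1.0 - p)
    return total

def fit_sensitivity(coherences, n_toward, n_trials):
    """Grid-search sigma (assumed range 0.01 to 5.00); return (sigma, 1 / sigma)."""
    candidates = [0.01 * i for i in range(1, 501)]
    best_sigma = min(
        candidates,
        key=lambda s: neg_log_likelihood(s, coherences, n_toward, n_trials),
    )
    return best_sigma, 1.0 / best_sigma

# Hypothetical observers: counts of "toward" responses out of 20 trials per level.
coherences = [-1.0, -0.5, -0.25, 0.25, 0.5, 1.0]
n_trials = [20] * 6
steep_observer = [0, 1, 4, 16, 19, 20]      # responses change sharply with coherence
shallow_observer = [4, 6, 8, 12, 14, 16]    # responses change gradually

sigma_steep, sens_steep = fit_sensitivity(coherences, steep_observer, n_trials)
sigma_shallow, sens_shallow = fit_sensitivity(coherences, shallow_observer, n_trials)
print(f"steep observer:   sigma={sigma_steep:.2f}, sensitivity={sens_steep:.2f}")
print(f"shallow observer: sigma={sigma_shallow:.2f}, sensitivity={sens_shallow:.2f}")
```

In this sketch the observer whose responses change more sharply with coherence receives the smaller σ and therefore the larger sensitivity value.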

The critical question, however, is whether these differences in sensitivity relate to and predict differences in cybersickness. Cybersickness propensity was measured in a separate task in which the same participants viewed up to 22.5 min of binocular, head-fixed video content that increased in intensity. The video content included footage of a first-person car ride, computer-generated video footage of a first-person fighter jet flight through a canyon, footage of a first-person drone flight through a parking lot, and footage of a first-person drone flight around a bridge. Participants had the option of quitting if viewing became intolerable. Both prior to and after viewing the videos, participants completed the 16-item SSQ (Kennedy et al., Citation1993). Cybersickness propensity/severity was quantified by the difference in the total sickness score between post- and pre-video viewing.
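The change score described above can be sketched as follows. The sketch assumes the conventional SSQ total-score weighting commonly attributed to Kennedy et al. (1993), in which the sum of the raw Nausea, Oculomotor, and Disorientation subscale sums is multiplied by 3.74; the function names and the example raw sums are hypothetical.

```python
SSQ_TOTAL_WEIGHT = 3.74  # conventional total-score weight (assumed here)

def ssq_total(raw_nausea, raw_oculomotor, raw_disorientation):
    """Total SSQ score from the three raw (unweighted) subscale sums."""
    return (raw_nausea + raw_oculomotor + raw_disorientation) * SSQ_TOTAL_WEIGHT

def ssq_change(pre, post):
    """Post-exposure minus pre-exposure total score; positive means sicker."""
    return ssq_total(*post) - ssq_total(*pre)

# Hypothetical participant: (nausea, oculomotor, disorientation) raw sums.
pre_exposure = (1, 2, 0)
post_exposure = (6, 5, 4)
change = ssq_change(pre_exposure, post_exposure)
print(f"change in SSQ total score: {change:.2f}")
```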

The video footage was intended to be sickness-inducing to allow for a range of sickness across individuals. Indeed, the video footage led to significant sickness on average compared to baseline, but across individuals, there was considerable variability in severity (see Figure 5a). Critically, when individual sensitivity in each of the four sensory cue conditions was related to the change in cybersickness due to video viewing, all conditions showed a trend in the expected direction, with greater sensitivity associated with greater sickness, but the relationship was significant after correcting for multiple comparisons only in the Full VR sensory cue condition (see Figure 5b). It is noted that the Full VR sensory cue set is the only one of the four that includes motion parallax. Moreover, the video footage was head-fixed (it did not update according to the participants’ head movements) and therefore lacked motion parallax cues. Further, head movements made during the video viewing created conflicts with movements implied by the footage itself.
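The logic of correcting four per-condition tests for multiple comparisons can be illustrated with a short sketch. The text does not state which correction was applied, so a Bonferroni correction is assumed here, and the p-values below are placeholders chosen only to illustrate the logic; they are not results from the study.

```python
def bonferroni_significant(p_values, alpha=0.05):
    """Flag each test as significant at alpha divided by the number of tests."""
    threshold = alpha / len(p_values)
    return {name: p < threshold for name, p in p_values.items()}

# PLACEHOLDER p-values for the four cue conditions (not taken from the study).
placeholder_p = {
    "Binocular": 0.20,
    "Monocular": 0.09,
    "Combined cues": 0.03,
    "Full VR": 0.004,
}
result = bonferroni_significant(placeholder_p)
for condition, significant in result.items():
    print(f"{condition}: significant after correction = {significant}")
```

With four tests the per-test threshold becomes 0.05 / 4 = 0.0125, so a placeholder value such as 0.03 would count as a trend rather than a significant effect.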

Figure 5. Sensory cue sensitivity predicts cybersickness severity* (Adapted from Fulvio, Ji, & Rokers, Citation2020). * a) After viewing binocular, head-fixed video content in the VR HMD, observers reported significantly greater levels of cybersickness compared to baseline reports obtained at the beginning of the study. However, sickness-inducing effects of the video content were highly variable among observers, with some individuals appearing to be relatively immune to cybersickness. b) Change in motion sickness as a function of sensory cue sensitivity. Observers’ sensitivity to the Full VR dot motion predicted their susceptibility to cybersickness with video viewing. The relationship was not significant in the other cue conditions. This suggests that sensitivity to motion parallax cues in particular is an important factor in susceptibility to cybersickness.

Taken together, the specificity of the relationship between sensitivity to the Full VR condition and cybersickness and the potential for motion parallax-related conflict during video viewing implicate sensitivity to motion parallax cues as an important predictor of cybersickness. As predicted, those individuals who had greater sensitivity to motion parallax information experienced greater discomfort when that information was absent or in conflict. Future work can test other XR applications to determine how sensitivity to different cues may impact cybersickness under various scenarios. For those sensitive to cue conflicts, design techniques can be employed to attempt to minimize sensory conflicts and thus reduce cybersickness, such as teleportation, concordant motion, alignment of head/body with virtual motion, speed modification, and provision of visual cues that match or minimize vestibular cues (Bowman et al., Citation1998; Carnegie & Rhee, Citation2015; Dennison & D’Zmura, Citation2017; Fernandes & Feiner, Citation2016; Keshavarz, Hecht, & Lawson, Citation2014; Kuiper et al., Citation2019; Prothero & Parker, Citation2003; Wada et al., Citation2012).

Based on this review, an important consideration from the Stanney et al. (Citation1998) R&D agenda that should be maintained is:

Establish XR content design guidelines that assist in mitigating cybersickness.

In addition, important considerations to add to the updated R&D agenda include:

Determine feasibility of testing an individual’s sensitivity to sensory depth cues and determine if such sensitivity is associated with more severe cybersickness.

Determine which sensory cues, beyond motion parallax, are sickness-inducing.

In conclusion, the primary outcome of this sensory conflict-based research may seem problematic for XR: those who would derive the best experiences from XR would also be those who could tolerate the experience the least. However, the results suggest possible solutions. Ideas for cybersickness mitigation are summarized in Table 2, based upon the implications from a variety of theoretical approaches to the problem, which are described in this paper, including sensory/cue conflict (this Section 3), the evolutionary hypothesis (the next Section 4), the ecological hypothesis (Section 5), and the multisensory re-weighting hypothesis (Section 6). We turn next to the implications of the evolutionary approach.

Table 2. Potential cybersickness mitigation strategies implied by different etiological hypotheses.

4. Evolutionary hypothesis: Implications of human-system adaptation to cybersickness (Fidopiastis and Stanney)

The evolutionary hypothesis implies that the brain recognizes certain sensorimotor conflicts as toxins that must be expelled. Such conflicts may occur during integration of vergence-accommodation, visual-vestibular, or visual-vestibulo-somatosensory (tactile/kinesthetic) information while using XR headsets. Specifically, donning an HWD in an XR environment with limited FOV, optical distortions, and static projection depth planes, combined with certain types of sustained active motions, is expected to present conflicts that elicit cybersickness with associated maladaptation in visual, vestibular, or psychomotor systems (e.g., changes in accommodation, vergence, vestibulo-ocular reflex [VOR], ataxia, or maladroit hand-eye coordination). Since “the evolutionary function of the human brain is to process information in ways that lead to adaptive behavior” (Cosmides & Tooby, Citation1987, p. 282), and maladaptive behaviors associated with XR exposure could be harmful, XR systems should be designed such that human sensorineural mechanisms associated with discordance are minimized; otherwise cybersickness and associated potentially harmful maladaptation may manifest.

When an XR experience sufficiently perturbs perceptual cues within virtual or augmented operational environments that define the nature of the world and indicate how to perform actions, cybersickness will occur (Kruijff et al., Citation2010; Welch & Mohler, Citation2014). Thus, cybersickness is not predicted as a result of all intersensory conflict conditions. Furthermore, those individuals who can quickly adapt their sensorimotor responses to XR environments may avoid sickness altogether (McCauley & Sharkey, Citation1992). Characterizing sensory conflicts that lead to cybersickness is complex given the myriad ways in which XR technology, coupled with computer graphics techniques, negatively impacts human intersensory and sensorimotor integration. XR technology thus redefines human perception of the natural world and perturbs multimodal cues that assist with visuomotor coordination (Welch & Mohler, Citation2014). Nonetheless, human perceptual and sensorimotor systems are dynamic and allow humans to adapt to such diverse rearrangements of spatial information (Welch, Citation1986).

Adaptation as a process can be quick, as in visual coding (Webster, Citation2015), or more gradual, as in the outcome or end state of the adaptation (Welch, Citation1978). Most research evaluating effects of adaptation associated with XR exposure reports end-state results that quantify prolonged changes (i.e., aftereffects) in perception or shifts in perceptual motor coordination that reduce or eliminate inter-sensory conflicts (Stanney & Kennedy, Citation1998; Stanney et al., Citation1999). Yet, reducing the adaptation process to only a hierarchical sensory loop may be why there are conflicting theories as to how best to use adaptation as a mitigation for cybersickness.

Functional magnetic resonance imaging (fMRI) of persons experiencing adaptation when wearing prism glasses provides information on how the brain changes in response to intersensory conflicts induced by visuomotor discrepancies (Sekiyama et al., Citation2000). Psychophysical results accompanying such fMRI research demonstrate the time course and localization error reduction of sensorimotor adaptation that one can expect to experience in XR environments; such adaptation is typically associated with a loss in cue sensitivity when exposed to a perturbing sensory conflict (Webster, Citation2015). The fMRI results further suggest a potential for broader change in visuomotor function that includes coding new brain representations such as body schemas as an outcome of a positive or more functional adaptation process. As noted above, these and other psychophysical data suggest that visuomotor adaptation to XR stimuli can occur within minutes of XR exposure (M. S. Dennison et al., Citation2016; Moss & Muth, Citation2011) or over longer periods of time as changes register beyond the retina to higher perceptual areas (Kitazaki, Citation2013). Over time, such adaptation is expected to mitigate cybersickness. To better understand the role of adaptation as a mitigation for cybersickness, a human-centered approach is needed where symbiosis between human and technology is achieved (Fidopiastis et al., Citation2010).

Adaptation can be considered a multichannel process, as it relies on complex neural processing in multiple interacting networks of both motor and sensory systems (i.e., receptors, such as retina or limb). Thus, adaptation effects are not localized to the level of the sensor alone (Webster, Citation2015), but are rather manifest at the brain level in action networks, such as those used to direct hand-eye coordination or postural stability. For example, a viewer is presented with a virtual visual object in an XR display, which will be coarser in appearance than in the real world because pixels will be magnified in the display. This visual object will thus appear different than in the real world because of the magnification and smaller display FOV. Further, the fixed single image plane of the display will affect vergence-accommodation of the eye, thus leading to unnatural blurring of the virtual object within the visual field. As a result, when a viewer attempts to invoke natural hand-eye coordinated activities with the virtual object, the body will need to adapt to the offset world displayed in the headset. All of these offsets (i.e., pixel magnification, vergence-accommodation mismatches, unnatural viewing), not only affect the retina of the eye but also the brain networks necessary for directed behavior in the real world. The human visual system is thus constantly adapting and responding to many XR induced consistent and inconsistent visual perceptual changes simultaneous to the observer’s interaction within XR environments. These perceptual changes are occurring quickly and along multiple perception channels, such as visual, auditory, and tactile/kinesthetic. Thus, adaptation “process” outcomes are complex, integrated, and brain-based. These effects may also interact with end-state adaptations, such as hand-eye coordination and postural stability. 
It is important to consider integration of eye tracking into HWDs to effectively capture such adaptive changes in eye point positioning and adjust visual content to support these adaptive changes, such as by presenting virtual objects at the appropriate depth and resolution to support human performance (Fidopiastis et al., Citation2003; Fidopiastis, Citation2006). This is not a simple challenge. Current XR designers and developers must be careful not to oversimplify the problem space of human adaptation. Thus, while adaptation is a potential mediator of cybersickness, given the multichannel nature of adaptation, the specific training methods or exposure protocols required to drive adaptation within XR are not entirely established for all aspects of the user’s response (Dużmańska et al., Citation2018). An opportunity exists to discern a collection of adaptation schemes such that the effects and duration of cybersickness and negative sensory-motor reorganization are lessened across a wide variety of XR solutions (cf. Kennedy et al., Citation2000).

Welch and Sampanes (Citation2008) suggested that two types of adaptation, perceptual recalibration (i.e., an automatic, restricted process of perceptual learning) and visual-motor skill alterations (i.e., learning hand and eye-coordinated movements through a cognitive problem-solving process) are distinguishable based upon characteristics and conditions that constitute the XR experience. Visual perceptual recalibration occurs when one sensory system (e.g., vision) calibrates another (e.g., touch). This type of perceptual learning is expected to contribute greatly to negative aftereffects, whereas visual motor skill acquisition occurring during problem-solving tasks that are spatially complex and require more voluntary movement may not contribute as much to negative aftereffects (Clower & Boussaoud, Citation2000).

In terms of perceptual recalibration, adaptation has conscious and unconscious components that contribute to one’s perception and representation of self or body schema as an actor in XR environments (Rossetti et al., Citation2005). Body schema (unconscious, interactor with the environment) and body image (partially conscious, personal self) are understood to be integrally connected via dynamic interactions instead of hierarchical linkages, which together drive body ownership (Gallagher, Citation2005). In healthy persons, embodied object (inanimate, animate, human) “representations” or cognitive assessments assist in determining perception and action with reference to one’s own body (Reed et al., Citation2004). Alteration in perception of such body schema is apparent, for example, in prism studies where a new representation of visuomotor mapping of the hands emerges during adaptation to reversal of the visual field (Sekiyama et al., Citation2000). An embodied cognition XR design approach (Weser & Proffitt, Citation2019) may thus better assist with facilitating multiple levels of human system adaptation responses and allow for a more symbiotic integration with XR technologies (see Table 2).

One class of XR adaptation schemes could be targeted at perceptual recalibration and involve highlighting visuomotor discordances in order to engender a sense of body ownership and drive sensorimotor adaptation in XR environments (i.e., when visuomotor discrepancies occur, feedback could be provided that is coincident with the limb indicating an internal error, thereby engendering perceptual recalibration). While such recalibration is expected to lead to adverse aftereffects, when sense of embodiment is critical to skill acquisition this approach may be appropriate.

In terms of visual-motor skill alterations, research using re-adaptation strategies, such as eye-to-hand coordination activities post-exposure, suggests that during XR exposure participants undergo visual-motor adaptations that do not quickly return to baseline skill levels even after short exposure durations (cf. Champney et al., Citation2007). Given that much of the past research on visual-motor skill alterations utilized VR systems (cf. Bagce et al., Citation2011), one might conclude that maladaptation issues are pervasive only within fully immersive XR displays. This conclusion is unsupported, as AR video and projection HWDs also show evidence of negative hand-eye coordination aftereffects (Biocca & Rolland, Citation1998). For example, Condino et al. (Citation2020) demonstrated that persons performing a precision connect-the-dot task in AR were off by an average of 5.9 mm but could not tell that they were making such gross hand-eye coordination errors. These results suggest that visual-motor skill alterations also change body perception and attribution of movement agency. Design strategies may need to account for body perception to allow for a more cooperative adaptation process (see Table 2).

A second class of XR adaptation schemes could be targeted at visual-motor skill alterations and involve using representational feedback that appears to arise from manipulation of the XR environment (i.e., not perceived to be physically coincident with the limb and thus registered as an external error), leading to an indirect mapping strategy that allows for context-specific adjustments of motor responses within the XR environment but minimal adverse aftereffects. When embodiment is not critical to skill acquisition, such as in more cognitive, problem-solving tasks, this type of adaptation scheme may be appropriate.

A human-centered design cycle that includes these and other types of adaptation schemes may help mitigate cybersickness in XR systems. The effectiveness of such adaptation may, however, be XR technology and task dependent and may be confounded by exposure duration (Dużmańska et al., Citation2018). Tasks and types of feedback make a difference in adaptation outcomes when the XR environment requires real-world actions. Thus, adaptation effects may scale to body schema representations in the brain and only become facilitatory once a new brain representation is defined. Providing a more encompassing perspective of adaptation may guide research and facilitate a better understanding of mechanisms of cybersickness. Based on this review, important considerations to add to the updated R&D agenda include:

Establish human adaptation schemes in XR applications.

Integrate eye-tracking into XR devices.

Determine specific training methods or exposure protocols required to drive sensory adaptation within XR systems, such as by examining:

The feasibility of fostering perceptual recalibration to engender a sense of body ownership and drive sensorimotor adaptation.

The feasibility of fostering visual-motor skill alterations that lead to an indirect mapping strategy that allows for context-specific adjustments of motor responses.

In conclusion, the review in this section suggests that, while the sensory conflict hypothesis implies that individuals may be sensitive to sensory cues and this sensitivity may lead to cybersickness, it may be feasible to mitigate this sensitivity by fostering sensory adaptation. Related implications for cybersickness mitigation based on the evolutionary hypothesis have been summarized in Table 2.

5. Ecological hypothesis: Postural instability and cybersickness (Stoffregen)

Sensory conflict is hypothesized to arise from discrepancies between the current pattern of multisensory input and the pattern expected on the basis of past interactions with the environment (Oman, Citation1982; Reason, Citation1978). The hypothesis proposes an antagonistic process in which some inputs are suppressed in favor of others, and in which adaptation consists of changes in weights (e.g., Gallagher & Ferrè, Citation2018; Weech, Varghese, & Barnett-Cowan, Citation2018). Variants of the sensory conflict hypothesis have been proposed, such as Treisman’s (Citation1977) poison hypothesis, which argues that perception requires sense-specific spatial reference systems, and that ingested toxins produce “mismatches between the systems” (p. 494). Thus, ingested toxins are seen simply as being a source of sensory conflict.

In the “rest frame” hypothesis (Prothero & Parker, Citation2003), hypothetical rest frames are accessed via multisensory stimulation: a “visual rest frame,” an “inertial rest frame,” and so on. Hence, conflict between hypothetical rest frames entails sensory conflict. Finally, Ebenholtz et al. (Citation1994, p. 1034) acknowledged that their “eye movement hypothesis” was closely aligned with the sensory conflict hypothesis.

Stoffregen and Riccio (Citation1991) argued that the interpretation of any pattern of multisensory input as “conflict” requires comparison with some other pattern that is defined as “non-conflict.” Patterns of light, sound, and so on carry no intrinsic metric for “equality” (contra Howard, Citation1982). Accordingly, the definition, or standard, for non-conflict must be internal (e.g., Oman, Citation1982). Comparison of current multisensory patterns against internal expectations is problematic because the scientist cannot know a person’s history of interactions with the environment and, therefore, cannot know (in quantitative detail) what pattern of multisensory stimulation is expected. Thus, while we may quantify patterns of sensory stimulation, we cannot compute a quantitative estimate of conflict between current and expected inputs. It follows that the sensory conflict hypothesis is not subject to empirical test: It cannot be falsified (Ebenholtz et al., Citation1994; Lackner & Graybiel, Citation1981; Stoffregen & Riccio, Citation1991).

The sensory conflict hypothesis has struggled to explain common motion sickness phenomena. For example, several studies find that motion sickness is more common among women than among men. Attempts to account for this effect (for a review, see Koslucher et al., Citation2016) have been ad hoc: they have not claimed there are sex-specific levels of sensory conflict. Women and men have similar types of perceptual-motor interactions with the world. Given this elementary fact, why should sensory conflict differ between the sexes?

Consider also the common observation that passengers are more likely to experience motion sickness than drivers (e.g., Oman, Citation1982; Reason, Citation1978). In a yoked control study, Rolnick and Lubow (Citation1991) found that passengers were more likely than drivers to become sick. As the authors noted, “there is no reason to assume that this conflict was absent or reduced in subjects who had control, as compared to no-control subjects” (p. 877). Stoffregen, Chang et al. (Citation2017) asked middle-aged adults to drive a virtual automobile. Some participants had decades of experience driving physical vehicles, while others had never driven any motor vehicle. If real driving experience gives rise to particular expectations for multisensory stimulation which match simulated driving, then motion sickness should have differed between drivers and non-drivers, but the incidence of motion sickness was not observed to differ between drivers and non-drivers. The fact that sensory conflict hypothesis cannot explain either sex differences or the driver-passenger effect, both of which have been reported in the literature, calls for consideration of alternative theories.

Riccio and Stoffregen (Citation1991, p. 205) hypothesized that, “ … prolonged postural instability is the cause of motion sickness symptoms. That is to say, we believe that postural instability precedes the symptoms of motion sickness, and that it is necessary to produce symptoms.” Riccio and Stoffregen acknowledged that “inputs” from different perceptual systems often differ, but they pointed out that these differences need not be interpreted in terms of conflict. They proposed that multisensory non-identities constitute higher-order information about animal-environment interaction, and that the senses work cooperatively (rather than antagonistically) to detect this higher-order information. This proposal has relevance for motion sickness, but also in terms of general theories of perception (Riccio & Stoffregen, Citation1988; Stoffregen & Bardy, Citation2001; Stoffregen, Mantel et al., Citation2017; Stoffregen & Riccio, Citation1988). The postural instability hypothesis is incompatible with sensory conflict hypothesis in terms of broader concepts of information processing upon which the sensory conflict hypothesis is based (e.g., Chemero, Citation2011; Gibson, Citation1966; Citation1979; Pascual-Leone et al., Citation2005). The postural instability hypothesis shifts the focus in motion sickness research away from perceptual inputs and information processing and toward the organization and control of movement (Stoffregen, Citation2011).

Under the postural instability hypothesis, a postural precursor of motion sickness is a parameter of postural activity that differs between individuals who (later) report being well versus sick (e.g., Munafo, Diedrick & Stoffregen, Citation2017; Stoffregen & Smart, Citation1998), or which differs as a function of the severity of subsequent sickness (e.g., Stoffregen et al., Citation2013; Weech et al., Citation2018). Postural precursors of motion sickness have been identified across numerous settings, populations, and dependent variables, including:

Head-mounted displays (Munafo et al., Citation2017; Arcioni et al., Citation2018; Merhi et al., Citation2007; Risi & Palmisano, Citation2019).

Desktop VR technologies (Chang et al., Citation2017, Citation2012; Chen et al., Citation2012; Dennison & D’Zmura, Citation2017; Dong et al., Citation2011; Stoffregen et al., Citation2014, Citation2008; Stoffregen et al., Citation2017; Villard et al., Citation2008).

Military flight simulators (Stoffregen, Hettinger, Haas, Roe, & Smart, Citation2000).

In moving rooms (Bonnet et al., Citation2006; Li et al., Citation2018; Smart et al., Citation2002; Stoffregen & Smart, Citation1998; Stoffregen et al., Citation2010; Walter et al., Citation2019).

On ships at sea (Stoffregen et al., Citation2013).

For postural activity during stance (e.g., Bonnet et al., Citation2006; Stoffregen et al., Citation2010; Walter et al., Citation2019) and while seated (Chen et al., Citation2012; Dong et al., Citation2011; Merhi et al., Citation2007; Stoffregen et al., 2000; Stoffregen et al., Citation2008, Citation2014).

For the “driver-passenger effect”. Postural precursors of motion sickness differ qualitatively between persons who do versus do not exert active control of visual motion stimuli. This effect has been demonstrated in virtual vehicles (Dong et al., Citation2011), and in virtual ambulation (Chen et al., Citation2012). Postural precursors of motion sickness can differ between individuals who exert different types of control over visual motion stimuli (Stoffregen et al., Citation2014).

In pre-adolescent children (Chang et al., Citation2012), young adults (e.g., Munafo et al., Citation2017; Chang et al., Citation2017; Stoffregen & Smart, Citation1998; Walter et al., Citation2019), and middle-aged adults (Munafo et al., Citation2017).

In men and women (Munafo et al., Citation2017; Koslucher et al., Citation2015, Citation2016).

For a wide variety of dependent variables, many of which are orthogonal. These include the spatial magnitude of postural activity (e.g., Munafo et al., Citation2017; Stoffregen & Smart, Citation1998), temporal dynamics (e.g., Stoffregen et al., Citation2010; Villard et al., Citation2008), movement multifractality (e.g., Munafo et al., Citation2017; Koslucher et al., Citation2016), the temporal coupling of postural activity with imposed motion (Walter et al., Citation2019), and postural “time-to-contact” (Li et al., Citation2018).

Researchers sometimes have mistakenly assumed that the postural instability hypothesis would be supported only if motion sickness was associated with increased spatial magnitude of postural activity (e.g., Dennison & D’Zmura, Citation2017; Widdowson et al., Citation2019); however, Riccio and Stoffregen (Citation1991) did not make this prediction. Motion sickness has been preceded by an increase in the spatial magnitude of postural activity (e.g., Stoffregen & Smart, Citation1998), or by a decrease in spatial magnitude (e.g., Dennison & D’Zmura, Citation2017; Stoffregen et al., Citation2008; Weech et al., 2018). Both results are consistent with the prediction of the postural instability hypothesis that postural sway will differ between persons who experience motion sickness and those who do not, and that such differences will exist before the onset of motion sickness symptoms (Stoffregen et al., Citation2008; Weech et al., Citation2018).
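As a minimal illustration of one such dependent variable, the sketch below computes the spatial magnitude of postural activity as the standard deviation of position along a single axis. Treating positional standard deviation as the index of spatial magnitude, the function name, and the two example time series are assumptions made for illustration; they are not data or procedures from the cited studies.

```python
import statistics

def spatial_magnitude(positions):
    """Positional variability (population SD) of sway along one axis."""
    return statistics.pstdev(positions)

# Hypothetical pre-exposure sway samples (cm) for two groups of participants.
later_well = [0.1, -0.2, 0.3, -0.1, 0.2, -0.3]
later_sick = [0.4, -0.6, 0.7, -0.5, 0.6, -0.8]

difference = spatial_magnitude(later_sick) - spatial_magnitude(later_well)
# The hypothesis predicts a difference before symptom onset; its sign may vary.
print(f"difference in spatial magnitude (sick minus well): {difference:.3f} cm")
```

Consistent with the paragraph above, the quantity of interest in such a comparison is whether the groups differ before symptom onset, not whether the later-sick group sways more.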

Based on this review, an important consideration to add to the updated R&D agenda is:

Determine what makes an individual susceptible to postural instability during XR use (e.g., vestibular migraine susceptibility; Lim et al., 2018).

In summary, Stoffregen and Riccio (1991) and the many studies cited above have provided much evidence in support of the postural instability hypothesis. Based on this review, the design implications for cybersickness mitigation based on the ecological hypothesis have been summarized in .

6. Multisensory re-weighting and an alternate approach to cybersickness (Weech)

Weech et al. (2018a) have sought to develop and test a perspective of cybersickness that focuses on its relationship to a crucial task of the CNS: accurate estimation and control of the 3D orientation of head and body. This process requires as input a stream of noisy, multimodal cues and will ideally produce appropriate behavior as output. Studying cybersickness from this perspective means, first, assessing how individual differences in multimodal information processing might relate to discomfort in virtual spaces; and second, developing interventions that aim to mitigate the damage caused when the CNS experiences a challenge in finding solutions to this control task.

Hardware, software, and user factors play a significant moderating role in the nauseogenicity of XR exposure, and what we know about cybersickness etiology has derived partially from a careful study of the most provocative cases. Nausea is provoked by simulating user-motion in XR by moving the viewpoint independently of the head of the user (e.g., applying a “head bob”, passive navigation, or tracking jitter; Palmisano et al., 2007), by adjusting the visual gain of head movements (Chung & Barnett-Cowan, 2019), or by visual update failure during head motion (Kolasinski & U.S. Army Research Institute For The Behavioral And Social Sciences, 1995). In the Sensory cue conflict and cybersickness section above, the role of individual sensitivity to sensory cues was discussed, and the impact of miscalibrated visual displays was briefly mentioned in the introduction. The negative effects produced in these cases are often causally attributed to the conflict between expected patterns of multimodal input and those generated during these conditions (Oman, 1990; Reason & Brand, 1975; Watt, 1983). Prospective sites of “conflict detection” identified by monkey neurophysiology include the vestibular brainstem (Oman & Cullen, 2014), which is densely linked to emetic centers of the brain (Yates et al., 2014). Thus, the sensory conflict hypothesis provides a strong model for cybersickness (Oman, 1990), which is supported by empirical and neurophysiological evidence (Oman & Cullen, 2014; J. Wang & Lewis, 2016), and which has generated testable predictions about how to tackle the problem (Cevette et al., 2012; Reed-Jones et al., 2007; Weech et al., 2018a).

At the same time, the cybersickness problem is growing in severity much faster than our scientific progress is advancing. The XR user base is orders of magnitude greater than a decade ago, and the most recently developed XR hardware entirely eclipses even the most expensive hardware from 10 years ago, by which time the bulk of our field’s research had already been carried out. Here, recent discoveries and advances in cybersickness research are highlighted with the hope of inspiring further research that will, one day, lead to the reliable and universal solution to cybersickness that currently eludes us.

Much of the recent research on cybersickness is informed by the finding that users often experience a mild form of inoculation to cybersickness with repeated exposure to XR. In the Evolutionary Hypothesis section of this paper, it is suggested that the user “learns” to ignore the conflict between visual and vestibular information (i.e., sensory adaptation) to which they are exposed over time (Rebenitsch & Owen, 2016; Watt, 1983). This adaptation has been described as a sensory re-weighting process, where weights of visual cues are upregulated relative to conflicting vestibular cues (Gallagher & Ferrè, 2018; Weech et al., 2018a) according to principles of statistically optimal cue integration (Butler et al., 2010; Fetsch et al., 2010). In this framework, cues are down-weighted if they do not contribute to useful estimates of the state of the world or body; sensations that are high in reliability (such as vision) take precedence over noise-rich cues (as in the vestibular modality). An example of the low variance of vision compared to vestibular cues can be seen in vection, the visual illusion of self-motion: visual motion dominates perception after a few seconds, resulting in the feeling that one’s body is in motion, regardless of the vestibular cues that indicate a static head during the initial period of visual surround motion (Weech & Troje, 2017).
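The reliability-based weighting described above can be illustrated with a minimal sketch of inverse-variance (maximum-likelihood) cue combination, a standard formulation of statistically optimal cue integration for independent Gaussian cues. The function name `combine_cues` and all numerical values (self-motion estimates and variances) are hypothetical and chosen only for illustration; they are not taken from any of the cited studies.

```python
def combine_cues(estimates, variances):
    """Combine independent Gaussian cues by inverse-variance weighting.

    Each cue's weight is its reliability (1 / variance) divided by the
    summed reliability of all cues, so noisier cues are down-weighted.
    """
    reliabilities = [1.0 / v for v in variances]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    combined = sum(w * e for w, e in zip(weights, estimates))
    combined_variance = 1.0 / total
    return weights, combined, combined_variance


# Hypothetical conflict: vision signals 10 deg/s of self-rotation,
# while the vestibular cue signals a static head (0 deg/s).
visual_estimate, vestibular_estimate = 10.0, 0.0

# Baseline: vision is assumed more reliable (lower variance) than vestibular input.
w_base, est_base, var_base = combine_cues(
    [visual_estimate, vestibular_estimate], [4.0, 16.0]
)

# Same conflict with a noisier vestibular channel (assumed larger variance).
w_noisy, est_noisy, var_noisy = combine_cues(
    [visual_estimate, vestibular_estimate], [4.0, 64.0]
)

print("baseline weights (visual, vestibular):", w_base, "estimate:", est_base)
print("noisy-vestibular weights:", w_noisy, "estimate:", est_noisy)
```

Under these assumed values, raising the variance of the vestibular cue shifts weight toward vision and moves the combined estimate closer to the visually specified self-motion, which is the qualitative pattern the re-weighting account describes.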

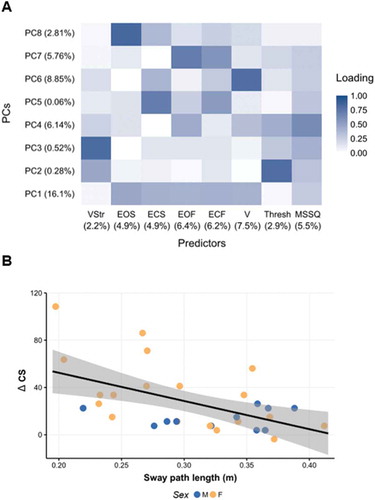

Recently, we have sought evidence about the aspects of sensorimotor processing that are most predictive of cybersickness proclivity. A set of predictors was collected from 30 participants, including self-reports of vection strength, vestibular thresholds, and excursions of the body center-of-pressure. To eliminate collinearity, a principal components regression approach was adopted, which factors out correlations and computes unique variances for each predictor. The resulting model, which was built using indices derived before exposure to VR, significantly predicted later cybersickness severity (R² = 0.37, p = .018). The greatest unique variance in the model was carried by the postural sway during trials intended to elicit vection (see Figure 6a), and by inspecting the simple trends, one can see that increased visual motion-induced postural sway was associated with lower levels of cybersickness (r(28) = −0.53, p = .002; Figure 6b; Weech et al., 2018b). One interpretation of these data is that those who rapidly re-weight multisensory cues are also those who are most protected against the provocative conditions of XR exposure. This account would also suggest that methods of facilitating vection might offer protection from cybersickness in XR. This is a prospect that has been tested in previous experiments, and the evidence appears to align with this suggestion.
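To make the principal components regression step concrete, the following is a minimal sketch using NumPy on synthetic data. It shows only the general technique (standardize the predictors, rotate them into uncorrelated components, regress the outcome on the leading components); it is not the analysis pipeline of Weech et al. (2018b), and it does not reproduce their unique-variance computation. The function name `pcr_fit`, the predictor names, and every simulated value are assumptions made for illustration.

```python
import numpy as np


def pcr_fit(X, y, n_components):
    """Principal components regression on standardized predictors."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Standardize each predictor (zero mean, unit sample variance).
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    # Rows of Vt are the principal axes of the standardized predictors.
    _, _, Vt = np.linalg.svd(Z, full_matrices=False)
    V = Vt[:n_components].T          # loadings: (n_predictors, n_components)
    scores = Z @ V                   # mutually uncorrelated component scores
    y_mean = y.mean()
    # Ordinary least squares of the centered outcome on the component scores.
    gamma, *_ = np.linalg.lstsq(scores, y - y_mean, rcond=None)
    beta = V @ gamma                 # coefficients on the standardized predictors
    fitted = y_mean + scores @ gamma
    ss_res = np.sum((y - fitted) ** 2)
    ss_tot = np.sum((y - y_mean) ** 2)
    return {"loadings": V, "scores": scores, "beta": beta,
            "r2": 1.0 - ss_res / ss_tot}


# Synthetic data for 30 hypothetical participants (not real measurements).
rng = np.random.default_rng(0)
n = 30
sway_vection = rng.normal(size=n)
sway_foam = sway_vection + 0.1 * rng.normal(size=n)  # nearly collinear with the first
vection_strength = rng.normal(size=n)
vestibular_threshold = rng.normal(size=n)
X = np.column_stack([sway_vection, sway_foam, vection_strength, vestibular_threshold])
y = -0.5 * sway_vection + 0.3 * vection_strength + rng.normal(scale=0.5, size=n)

model = pcr_fit(X, y, n_components=3)
print("R^2 with 3 components:", round(float(model["r2"]), 3))
print("coefficients on standardized predictors:", np.round(model["beta"], 3))
```

Because the component scores are uncorrelated by construction, the regression is not destabilized by the two nearly collinear sway measures; retaining all components would recover the ordinary least-squares solution on the standardized predictors.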

Figure 6. (a) Principal component (PC) loadings for each predictor*; (b) Negative correlation between postural sway during vection and cybersickness (ΔCS) (Adapted from Weech et al., 2018b). * Percentage values on left indicate the amount of variance in cybersickness scores accounted for by each PC; percentage values on the bottom indicate the amount of unique variance in cybersickness scores accounted for by each predictor. Darker colors depict higher loadings, representing a greater expression of that factor on the PC. MSSQ = Motion Sickness Susceptibility Questionnaire score; EOF = Eyes Open Foam condition sway path length (SPL); ECF = Eyes Closed Foam condition SPL; V = Vection condition SPL; EOS = Eyes Open Standard condition SPL; ECS = Eyes Closed Standard condition SPL; VStr = Vection Strength ratings; Thresh = Vestibular Thresholds.

One such piece of evidence comes from procedures that “re-couple” multimodal cues, either by physically moving an observer along with visual self-motion (Aykent et al., 2014; Dziuda et al., 2014; Kluver et al., 2015), or by stimulating the vestibular sense with mild current (galvanic vestibular stimulation, GVS) to replicate missing acceleration cues (Cevette et al., 2012; Gálvez-García et al., 2014; Reed-Jones et al., 2007). Despite the efficacy of such methods, accurately replicating missing inertial cues is challenging. Based on the premise that noise modulates the reliability of sensory channels, we examined whether adding sensory noise to the vestibular system can guide self-motion perception away from the zone of discomfort (conflicting multimodal cues) and toward a resolved percept (Weech & Troje, 2017). We first tested whether visual dominance can be facilitated using vestibular noise, which was applied using either stochastic electrical current (noisy GVS) or vibration of the mastoid bone (bone-conducted vibration, BCV). Results indicated a quickening of the vection response across the range of circular vection axes when transient vestibular noise was applied, but not for seat vibration or air-conducted sound, which supports a vestibular origin for the effects.

In XR environments, vestibular noise can also modulate the cybersickness response. Results of a navigation task where participants piloted through virtual space with or without BCV revealed a reduction in sickness severity when BCV was used (Weech et al., 2018a). The time-course of stimulation was an important factor: for this short-duration experiment, cybersickness was diminished only when stimulation was time-coupled to large visual accelerations. This time-specificity of cue integration in perceptual decision making is consistent with recent models (Parise & Ernst, 2016; Wong, 2007). It has also been demonstrated that VR users who are exposed to tonic noisy GVS over a longer duration (30 min continuous) demonstrate a reduction of cybersickness (Weech et al., 2020b).

Emerging evidence reveals the scope for cybersickness reduction interventions that target the multisensory cortex. Neuroimaging highlights a differential activation of the temporoparietal junction (a major multimodal integration site; Blanke, 2012) and occipital areas during cybersickness (Chen et al., 2010). Although recent evidence shows that transcranial direct current stimulation (tDCS) to reduce cortical excitability over the temporoparietal junction is associated with a reduced severity of cybersickness (Takeuchi et al., 2018), more studies are required to confirm these effects (tDCS paradigms have recently been criticized for non-reproducibility; e.g., Medina & Cason, 2017; Minarik et al., 2016).

Another cybersickness reduction technique involves the protective use of “presence,” the sense of “being there” in an XR environment. An increased level of presence has been associated with lower cybersickness, either because of the effects of the latter on attentional resources or because of changes in the processing of multimodal stimuli during presence (Weech et al., 2019a). Results of a recent study with a large sample of participants (Total N = 170) revealed that building an enriched narrative context in VR both increases levels of presence and reduces cybersickness severity (Weech et al., 2020a). This reduction was greatest for individuals at risk of cybersickness, such as those unfamiliar with video games. Other top-down manipulations have demonstrated effectiveness against cybersickness in recent years, including findings that a pleasant smell (“rose”; Keshavarz et al., 2015) and pleasant-sounding music (Keshavarz & Hecht, 2014; Sang et al., 2006) can promote moderately better user comfort. Based on these limited findings, a presence consideration that was part of the Stanney et al. (1998) R&D agenda and an additional consideration to add to the updated R&D agenda include:

Determine relationship between presence, cybersickness, and adverse physiological aftereffects.

Determine aspects of sensorimotor processing that are protective against cybersickness (e.g., ability to reweight sensory cues rapidly).

Based on these results and the associated review, the design implications for cybersickness mitigation based on multisensory reweighting have been summarized in . In sum, it is clear that we are at an exciting time in cybersickness research. While much research has been focused on trying to further our understanding of cybersickness from the “sensory re-weighting” approach, others have obtained evidence for the alternative approaches discussed in each respective hypothesis section above. Far from being a problem, this should be perceived as a healthy diversity of opinion in the cybersickness research field, and as a welcome reminder of the significant work still to be done before a single unifying theory of cybersickness is attained.

7. Operational implications of cybersickness: Considerations of a military use case (Dennison and Lawson)

Why should we care about cybersickness? As mentioned in the Introduction, XR technology is expanding into multiple domains from entertainment to commerce to industry to military operations. The envisioned applications are endless. Yet, if cybersickness cannot be resolved, XR could fall far short of its promise and, worse yet, create a divide between the susceptible and non-susceptible. As Lackner (2014, p. 2495) noted:

“ … the range of sensitivity in the general population varies about 10–1, and the adaptation constant also ranges from 10 to 1. By contrast, the decay time constant varies by 100–1. The import of these values is that susceptibility to motion sickness in the general population varies by about 10,000–1, a vast range.”

The implication of these vast individual differences is that, while some people may be able to fully embrace the power and potential of XR technology, those who are highly susceptible may be left on the sidelines watching this new era of XR-empowered productivity and entertainment pass them by. This could have future effects on career opportunities and progression. For example, the military is interested in learning how HWDs can aid Warfighters in performing multiple tasks in parallel and with greater efficiency. Such technologies have the potential to dramatically change the way that Warfighters train and fight. In fact, former Secretary of Defense James Mattis wanted all infantrymen to fight “25 bloodless battles” before they ever set foot in a real combat environment. If some service members are not able to tolerate such training, this could affect future selection as much as intractable airsickness affects pilot selection today.