ABSTRACT

The present aim was to investigate whether angry, neutral, and happy facial expressions have different effects on haptic responses. Two experiments were conducted. In Experiment 1, participants (N = 24) were to respond as fast as they could to images of angry, neutral, and happy faces using a haptic device that measured the applied force in newtons and the duration of touch in milliseconds. Experiment 2 was identical to Experiment 1, except that ratings of emotion-related experiences evoked by the stimuli were also collected in terms of valence and arousal. The results from both experiments showed that the force of touch in response to angry expressions was significantly stronger than in response to happy expressions. The ratings of valence showed that angry, neutral, and happy faces were experienced as significantly different from each other along the dimension of valence. Ratings of arousal showed that angry faces were experienced as significantly more arousing than neutral and happy faces. The results suggest a relationship between the processing of emotion-related facial expressions and haptic response systems. The force of touch could be used as one of the measures conveying information about the user’s state of mind when interacting with haptic user interfaces.

1. Introduction

The significance of haptic communication was revealed as early as the 1950s, when Harlow conducted his famous studies with rhesus monkeys. He noted that depriving infant monkeys of their caregiver caused severe and permanent damage to their social and emotional development. From his studies, Harlow concluded that tactile contact and warmth in early childhood are essential for normal socio-emotional development (Harlow, 1958). Touch can be used to both sense and communicate information. When touch is used for communication, it usually mediates emotion-related information, as in hugging, caressing, tapping, and perhaps even hitting someone. The meaning, content, or aim of haptic communication is embedded in various cues of the touch, for example, its direction, length, and force. Further, the meaning of someone’s tactile expression is interpreted in the context of other nonverbal cues, such as facial expressions of emotion.

With respect to human emotions, a long line of research shows that facial expression stimuli evoke emotions in terms of recruiting brain activity, facial muscle activity, and facially expressive behavior (e.g., Anttonen et al., 2009; Dimberg et al., 2000; Ekman et al., 1990; Surakka & Hietanen, 1998). Facial expressions are cues for everyday interaction, and there is evidence that, in addition to communicating emotional state and behavioral intentions, they evoke a demand for action in the perceiver (Seidel et al., 2010). These demands for action broadly concern withdrawal-approach motivation with respect to the evoked emotion. For example, Marsh et al. (2005) showed that angry facial expressions facilitated avoidance-related behavior. According to Lang et al. (1990), there is a direct link between perception and action, which is activated through the perception of emotionally meaningful information.

Research on haptics currently extends also to communication technology and human-technology interaction. Devices that utilize the sense of touch (e.g., touch screens and social robots) have become increasingly popular, and at the same time the significant association between touch and emotions has been recognized in the research area known as affective haptics, a part of the affective computing research field. It focuses on the study and design of devices and applications that can evoke, enhance, influence, or take into consideration something about the emotional state of humans (Picard, 1997; Tsetserukou et al., 2009). Understanding the effects of facial expressions on haptic perception, for example, when one is touched by a machine or in virtual reality, has recently evoked interest (e.g., Ellingsen et al., 2014; Ravaja et al., 2017).

Affective haptics in social robotics is also a growing field interested in robots’ affective awareness. There is an increasing amount of research on affective social robots (e.g., Andreasson et al., 2018; Breazeal, 2001; Kirby et al., 2010). Many social robots, for example, Paro, Huggable, Probo, and CuddleBot (Shibata et al., 1996; Stiehl et al., 2006; Saldien et al., 2008; Allen et al., 2015, respectively), are used in healthcare centers or nursing homes to interact with sick children or elderly citizens. In those contexts, understanding the quality of users’ touch would improve the quality of the interaction between robots and humans. In fact, this type of affective awareness has been found important and beneficial for the users (Kidd et al., 2006; Wada & Shibata, 2007).

Investigating the relation between the perception of facial emotions and haptic responses can offer evidence of the above-mentioned perception-action links. In the context of affective haptics and haptic user interfaces, one observation is that people use their fingers, especially their index fingers, for the interaction. Although earlier research has investigated responses (e.g., using joysticks) to emotionally relevant stimuli in the affective haptics context (e.g., Bailenson et al., 2007; Seidel et al., 2010), these studies did not directly concentrate on the dynamic properties of haptic responses. Further, although interaction with haptic user interfaces in many cases takes place using the index fingers, there appears to be very little direct evidence on the association of index finger responses with emotional stimuli. Still further, based on our literature review, it was not clear how different emotional stimuli might affect haptic responses. Therefore, the main research question of the present study was whether angry, neutral, and happy facial expressions have different effects on haptic responses in terms of the force and duration of touch.

2. Related work

For the present work, there are two central approaches to emotion. The discrete emotion theory suggests that humans have an innate set of basic emotions that are biologically determined and therefore both universal and discrete (Ekman, 1992; Ekman & Friesen, 1976). According to this theory, there are six universal basic emotions (i.e., happiness, sadness, fear, anger, surprise, and disgust), and consequently emotion-related ratings use a set of discrete emotion categories. In addition, Ekman and Friesen (1976) have created systematic sets of facial expressions that are widely used as stimuli in various emotion-focused studies.

On the other hand, the dimensional theory of emotion states that emotion can be defined as a set of dimensions, such as valence, arousal, and dominance. Thus, emotions can be mapped using a two- or three-dimensional space (Bradley & Lang, 1994). In this theoretical frame, emotion-related ratings are given using different types of dimensional rating scales. Bradley and Lang (1994) have designed a nonverbal pictorial assessment technique called the Self-Assessment Manikin (SAM), which directly measures the dimensions of pleasure, arousal, and dominance associated with a person’s responses to a wide variety of stimuli. This method and modifications of it have also been frequently used in human-computer interaction (HCI) research (e.g., Rantala et al., 2013; Salminen et al., 2008) to rate emotional qualities of interaction.

In recent years, the association between haptics and emotions has started to attract attention within the context of communication technology research. To date, the majority of the research in affective haptics has focused on collecting emotion-related ratings in response to haptic stimulation. For instance, Bailenson et al. (2007) conducted a study on how to express and recognize emotions through a 2-DOF force-feedback haptic device. In their first experiment, they recorded force with the force-feedback joystick while participants tried volitionally to express seven distinct emotions (i.e., disgust, anger, sadness, joy, fear, interest, and surprise). In a second experiment, a separate group of participants tried to identify the emotions based on the recordings from the first experiment. Finally, in a third experiment, they asked pairs of participants to try to express these same seven emotions using physical handshakes. The results suggested that the participants were slightly better at recognizing emotions via the force-feedback device than when expressing emotions through non-mediated handshakes (Bailenson et al., 2007).

In addition, Hertenstein et al. (2006) studied how touch can communicate distinct emotions. They divided the study participants into two groups, encoders and decoders. They then gave the encoders a list of 12 emotion words and asked each of them to signal the emotions on their corresponding decoder’s arm using only touch. Their results showed that the encoded emotions could be recognized at above-chance levels and that anger was recognized via touch more precisely than other emotions such as fear, gratitude, and love (Hertenstein et al., 2007). As a follow-up to the Hertenstein et al. (2006) study, Andreasson et al. (2018) replaced human–human interaction with human–robot interaction to convey eight emotions (anger, disgust, fear, happiness, sadness, gratitude, sympathy, and love) via touch. The study followed a procedure similar to that of Hertenstein et al. (2006), using encoders (humans) and a decoder (robot), except that, unlike humans, the social robot was not equipped to decode the emotions conveyed by the encoder. Instead, they evaluated the tactile dimensions by which the robot could distinguish the different emotions that the humans conveyed. The results showed that the human–robot and human–human interaction via touch were in agreement with each other. Additionally, anger was the most intensely rated emotion, and the negative emotions (i.e., anger, disgust, fear) were rated as more expressive than the positive emotions (e.g., happiness, gratitude, love) (Andreasson et al., 2018).

Apart from that, Tsetserukou et al. (2009) proposed an approach to reinforce one’s feelings and reproduce the emotions felt by one’s partner during online communication through a specially designed system called “iFeel_IM!”. First, their system automatically recognized nine emotions (anger, disgust, fear, guilt, interest, joy, sadness, shame, and surprise) from chat text. Then, the detected emotion was simulated by the wearable affective haptic devices (i.e., HaptiHeart, HaptiHug, HaptiButterfly, HaptiTickler, HaptiTemper, and HaptiShiver) integrated into iFeel_IM!. These devices produced different sensations of touch via kinesthetic and cutaneous channels. The study revealed that the users were more interested in online chatting and experienced more emotional arousal when using the affective haptic devices than without them (Tsetserukou et al., 2009).

The above-reviewed studies at least partly used force of touch as a central factor in mediating the content of tactile messages (e.g., Bailenson et al., 2007; Hertenstein et al., 2007; Tsetserukou et al., 2009).

Gao et al. (2012) studied the effect of emotions on haptic responses using finger-stroke features recorded during a 20-level game played on a touch screen. They built systems using machine-learning algorithms for automatically discerning between four emotional states: excited, relaxed, frustrated, and bored. Emotion recognition was based on the length, pressure, direction, and speed of the finger-stroking motion on the touch screen. Their results suggested that pressure in particular, as measured indirectly in terms of the area of contact, seemed most promising for discriminating frustration from the other three states (Gao et al., 2012). Additionally, Hernandez et al. (2014) studied the effect of increased stress during computerized writing tasks by using a pressure-sensitive keyboard and a capacitive mouse. The results showed that increased emotional stress was expressed as stronger keystroke force on the keyboard and an increased amount of mouse contact by the computer users.

Ellingsen et al. (2014) investigated how angry, neutral, and happy faces influenced touch perception. Different facial expressions were presented together with human touch or machine touch, and the participants were to rate the pleasantness of the touch. Their findings suggested that the perceived pleasantness of touch depended on the facial expression. Touch was perceived as least pleasant when presented together with angry facial expressions, and as most pleasant with happy facial expressions. Angry faces reduced the pleasantness of touch more strongly when presented together with human touch (Ellingsen et al., 2014).

In line with the findings of Ellingsen et al. (2014), Ravaja et al. (2017) examined how different facial emotional expressions (anger, happiness, fear, sadness, and neutral) modulate the processing of tactile stimuli as measured by somatosensory-evoked potentials (SEP). The participants were immersed in a virtual reality scene in which a 3D character varied its facial expression while touching the participant’s hand. The touch was simulated using vibrotactile and mechanical stimuli. The findings showed that the late SEP amplitudes (i.e., responses to touch stimuli) were highest when the character had an angry facial expression and lowest when it had a happy one. They also showed that although the tactile stimulation was the same, the ratings of touch stimuli depended on the facial expression. For example, the intensity of the character’s touch was rated as high during both angry and happy faces, but as most intense during angry faces. There were no differences between ratings during sad and happy or between fearful and happy facial expressions. Thus, importantly, the results from both studies (Ellingsen et al., 2014; Ravaja et al., 2017) showed that facial emotional expressions also affect touch perception.

With respect to perceptions of the various facial expressions of emotions, there have been studies on the “anger superiority effect” versus the “happiness superiority effect”. For example, Hansen and Hansen (1988) and Öhman et al. (2001) found that anger, as both an emotion and facial expression, is more pronounced than happiness and that angry faces are detected faster in a crowd of faces. However, contrary to these findings, a study by Becker et al. (2011) found evidence that happy faces are more prominent than angry faces. They therefore proposed that the human facial expression of happiness has evolved to be more visually distinguishable compared to other facial expressions (Becker et al., 2011). As there is evidence that both angry and happy faces are potentially effective in evoking faster responses than other types of expressions, this might also be reflected in haptic responses.

Therefore, there is evidence that artificially created haptic stimulations can evoke emotions and that some emotions, even discrete emotions, can be mediated or enhanced in information and communication technology contexts. Further, in previous studies on emotion and touch, haptic parameters were mainly used to find out how various haptic stimulations might evoke emotions or how emotions would be recognized from certain haptic stimuli. Alternatively, earlier research has investigated how facial information may affect the perception of haptic stimuli (e.g., Ahmed et al., 2016; Ellingsen et al., 2014; Hertenstein et al., 2006; Ravaja et al., 2017). However, there is a lack of understanding of exactly how emotional facial expressions can affect haptic responses. Therefore, the present study focused on this.

In Experiment 1, only the force and duration of the haptic responses to the facial expressions were measured. In Experiment 2, ratings of the facial stimuli were also collected using the dimensional emotion theory frame of reference (Bradley & Lang, 1994). This was done to investigate the relations between the facial stimuli, haptic reactions, and participants’ emotions.

3. Experiment 1

3.1. Participants

There were 24 participants (14 males and 10 females). Their mean age was 30 years (range 20–58) with a standard error of 2.18 years. The participants were university students, the majority of whom were from northern Europe (Finnish and Swedish); the rest had Asian backgrounds (i.e., Indian and Pakistani). Participation was voluntary, and all the participants had normal or corrected-to-normal vision.

3.2. Facial stimuli

Four different facial images for each of three expressions (anger, happiness, and expressive neutrality) were selected from a set of 110 black-and-white pictures developed by Ekman and Friesen (1976). The gender of the posers was balanced so that two male and two female pictures were chosen for each expression. Thus, in total there were 12 facial images. The selection was based on the highest percentage of consistent agreement among groups of observers in Ekman and Friesen’s study. The average percentage for happy was 100%, for neutral 74%, and for anger 98%.

3.3. Apparatus

3.3.1. Hardware

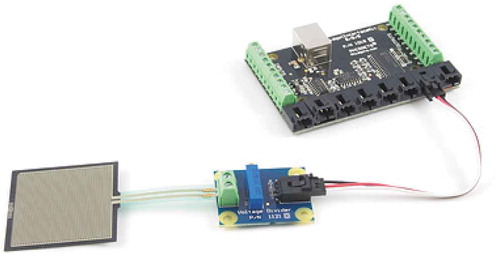

A haptic device (as shown in Figure 1) with a force-sensing resistor (FSR) having an active sensing area of 39.6 mm × 39.6 mm was used to record the applied force and duration of touch. It can measure forces from ~0.2 N up to 20 N.

Figure 1. The 1.5” square Force Sensing Resistor (FSR), connected to a voltage divider and an analogue input device. Published with written permission from Phidgets Inc.

The FSR is a very thin (0.46 mm), 1.5” square polymer thick-film device whose resistance decreases as increasing pressure is applied to the surface of the sensor. The output voltage increases with increasing force (Interlink Electronics Inc., Westlake Village, CA, USA).

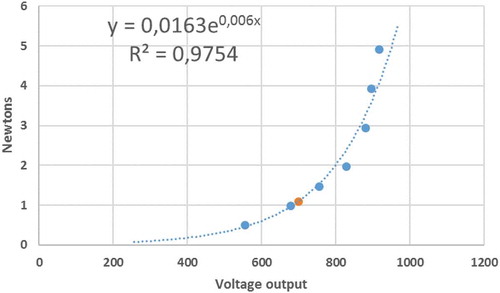

The voltage output produced by the applied pressure was converted to newtons by measuring the voltage under several different loads and then fitting a logarithmic curve to the resulting data. The equation of the best-fitting curve (see ) was then used for the conversion. Because the experiments required only a finger tap on the FSR, the minimum applied load in the experiments was more than 50 grams and the maximum was less than 500 grams, so only loads from 50 grams to 500 grams were used for calibration.
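The calibration step described above can be sketched as follows. This is only an illustration, not the authors' code: the voltage readings are made-up placeholder values, the model form force = a · ln(voltage) + b is one assumed reading of "logarithmic curve", and all function names are hypothetical. Loads in grams are converted to newtons using standard gravity.

```python
import math

STANDARD_GRAVITY = 9.80665  # m/s^2


def grams_to_newtons(grams):
    """Convert a calibration load in grams to a force in newtons."""
    return grams / 1000.0 * STANDARD_GRAVITY


def fit_log_curve(voltages, forces):
    """Least-squares fit of force = a * ln(voltage) + b (assumed model form)."""
    xs = [math.log(v) for v in voltages]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(forces) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, forces))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def voltage_to_newtons(voltage, a, b):
    """Apply the fitted curve to convert a sensor voltage to newtons."""
    return a * math.log(voltage) + b


# Hypothetical calibration table: loads within the 50-500 g range used in the
# study, paired with placeholder voltages (NOT measured values).
loads_g = [50, 100, 200, 300, 400, 500]
placeholder_voltages = [0.8, 1.3, 1.9, 2.3, 2.6, 2.8]
forces_n = [grams_to_newtons(g) for g in loads_g]
a, b = fit_log_curve(placeholder_voltages, forces_n)
```

With real calibration readings in place of the placeholders, `voltage_to_newtons(v, a, b)` would give the force estimate for each recorded tap.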

All the electronics were concealed inside a box and secured with duct tape. Only the FSR was visible outside the box, and this haptic device was plugged into a laptop via the USB port as shown in .

3.3.2. Software environment

The laptop used for the experiment ran the 32-bit Windows 7 operating system. The .NET framework was installed so that the haptic device installer could automatically install all the libraries needed to run the device (Phidgets Inc., 2010). Visual Basic 6.0, JavaScript, and a MySQL database were used to develop the test application for the experiment. The API and the code examples were downloaded from the Phidgets website (https://www.phidgets.com/docs/OS_-_Windows) and edited to suit the experiment’s needs.

3.4. Procedure

Before the experiment started, verbal informed consent was obtained from the participants. The participants were told a cover story explaining that the purpose of the experiment was to find out how fast they could react to images displayed on the computer screen by tapping on the FSR with the index finger of their dominant hand (see ). The FSR was positioned horizontally so that downward pressure could be exerted. The task was to tap the FSR as fast as possible in response to the image displayed on the screen.

The experiment was conducted in a quiet, isolated study room without any external disturbances or distractions. First, the participant was seated comfortably at a table facing the laptop screen with his or her hands resting on the table. Then, the haptic device connected to the laptop was placed within comfortable reach of the participant’s dominant hand resting on the table, in the same way as a computer mouse would be. The test application was started when the participant was ready to begin. The participant first performed a practice test to ensure that he or she was comfortable with the FSR and the test procedures. This practice test consisted of five different facial stimuli not used in the actual experiment. The practice could be repeated as many times as the participant wanted in order to get comfortable with the process.

At the beginning of the test, the participant entered his or her personal details, such as name, age, gender, occupation, and nationality. After this, the participant clicked on the “Start Test” button. The facial expression stimuli were then displayed on the computer monitor in a pseudorandom order (i.e., an order generated by a deterministic algorithm). The participant had to tap the FSR with the index finger as fast as possible in response to the images. A tap on the FSR resulted in the immediate disappearance of the current image, followed by a randomized pause of 3 to 5 seconds before a new image appeared automatically. Once the experiment was complete, the participant was given a consent form that explained the actual purpose of the experiment and requested permission to use the experiment data for research purposes. A signed copy of that form was also given to the participant.
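The stimulus presentation described above can be sketched as a trial schedule. This is a minimal illustration under stated assumptions, not the original Visual Basic application: it assumes each of the 12 images is shown once, uses a seeded pseudorandom generator as one possible reading of the randomization, and the names `build_schedule`, `EXPRESSIONS`, and `IMAGES_PER_EXPRESSION` are hypothetical.

```python
import random

EXPRESSIONS = ("angry", "neutral", "happy")
IMAGES_PER_EXPRESSION = 4  # two male and two female posers per expression


def build_schedule(seed):
    """Return a shuffled list of (expression, image_index, pause_seconds).

    A seeded generator makes the order reproducible: the same seed always
    yields the same sequence. Each trial is followed by a pause drawn
    uniformly from the 3-5 second range.
    """
    rng = random.Random(seed)
    trials = [
        (expression, index)
        for expression in EXPRESSIONS
        for index in range(IMAGES_PER_EXPRESSION)
    ]
    rng.shuffle(trials)
    return [
        (expression, index, rng.uniform(3.0, 5.0))
        for expression, index in trials
    ]


if __name__ == "__main__":
    for expression, index, pause in build_schedule(seed=1):
        print(f"{expression:8s} image {index}  then pause {pause:.2f} s")
```

Using a separate seed per participant would give each participant a different but reproducible order.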

3.5. Results

The means and standard error of the mean (S.E.M.) of the force of touch (converted to newton units) and the duration of touch for the different facial expressions are shown in and , respectively.

A one-way within-subject repeated-measures analysis of variance (ANOVA) was conducted to see whether expression had an effect on the force and duration of touch. The results showed a statistically significant effect of expression on the force of touch, F (2, 46) = 4.529, p < .05, but the effect of expression on the duration of touch, F (2, 46) = 0.055, p > .05, was not statistically significant.
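For readers unfamiliar with the test, the F statistic of a one-way repeated-measures ANOVA can be computed as in the sketch below. It is a generic textbook computation, not the authors' analysis script; the function name `rm_anova` and the small data table are hypothetical. It also shows where the reported degrees of freedom come from: with k = 3 expressions and n = 24 participants, the effect has k - 1 = 2 and the error term (k - 1)(n - 1) = 46 degrees of freedom.

```python
def rm_anova(data):
    """One-way repeated-measures ANOVA.

    data: list of rows, one row per participant, one column per condition.
    Returns (F, df_effect, df_error).
    """
    n = len(data)      # participants
    k = len(data[0])   # conditions
    grand_mean = sum(sum(row) for row in data) / (n * k)
    condition_means = [sum(row[j] for row in data) / n for j in range(k)]
    subject_means = [sum(row) / k for row in data]

    ss_condition = n * sum((m - grand_mean) ** 2 for m in condition_means)
    ss_subject = k * sum((m - grand_mean) ** 2 for m in subject_means)
    ss_total = sum((x - grand_mean) ** 2 for row in data for x in row)
    # Variability between participants is removed from the error term.
    ss_error = ss_total - ss_condition - ss_subject

    df_effect = k - 1
    df_error = (k - 1) * (n - 1)
    f_value = (ss_condition / df_effect) / (ss_error / df_error)
    return f_value, df_effect, df_error


# Hypothetical force values (N) for three participants x three expressions.
example = [
    [1.0, 2.0, 4.0],
    [2.0, 3.0, 4.0],
    [3.0, 5.0, 6.0],
]
f_value, df_effect, df_error = rm_anova(example)
```

The p-value would then be read from the F distribution with these degrees of freedom, for example with a statistics package.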

Bonferroni corrected post hoc pairwise comparisons revealed that the use of force was stronger in response to the angry compared with the happy faces (mean difference (MD) = 0.330, p < .05). The difference between the responses to the angry and neutral faces was also significant (MD = 0.305, p < .05). However, the difference between the responses to the neutral and happy faces was not statistically significant (MD = 0.025, p > .05).
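The Bonferroni correction used for these comparisons can be illustrated with a short sketch. With three expressions there are three pairwise comparisons, so each raw p-value is multiplied by three (capped at 1). The raw p-values below are hypothetical placeholders, not values from the study, and `bonferroni_adjust` is an illustrative name.

```python
from itertools import combinations

EXPRESSIONS = ("angry", "neutral", "happy")


def bonferroni_adjust(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1.0."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# All unordered pairs of expressions: three comparisons in total.
pairs = list(combinations(EXPRESSIONS, 2))

# Hypothetical raw p-values, one per pair (placeholders only).
raw_p = [0.012, 0.015, 0.800]

for (first, second), p_adj in zip(pairs, bonferroni_adjust(raw_p)):
    print(f"{first} vs {second}: adjusted p = {p_adj:.3f}")
```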

4. Experiment 2

4.1. Methods

Experiment 2 was identical to Experiment 1 except that the ratings of the facial stimuli were collected after the experiment was completed.

4.1.1. Participants

Twenty voluntary participants (10 males and 10 females) with a mean age of 26 years (range 20–40; standard error 1.11 years), none of whom had been involved in Experiment 1, took part in this experiment. The majority of the participants were students from Tampere University. They represented different nationalities, such as Northern European and Asian. All participants had normal or corrected-to-normal vision.

4.1.2. Materials and apparatus

The same haptic device and software environment as in Experiment 1 were used.

4.1.3. Procedure

The procedure was identical to that of Experiment 1, except that after the experiment was completed, ratings of the pleasantness and arousal of each facial stimulus were collected using 9-point bipolar rating scales. The scales ranged from −4 to +4 (i.e., unpleasant to pleasant and calm to aroused), with 0 representing the center (e.g., neither unpleasant nor pleasant). The participants were asked to rate each image according to how they felt about it. Both rating scales were presented along with each image, and the participant was to give both ratings at the same time. The ratings were given by a mouse click on each scale. Then, the experimenter scrolled down to the next image in the image document, the participant gave the ratings, and so on, until all the images were rated. The rating data were automatically recorded to an Excel document at the end of the rating procedure.
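The rating scale and the summary statistics reported for it (mean and standard error of the mean, S.E.M.) can be sketched as follows. This is an illustrative sketch only: the ratings listed are hypothetical, and the helper names `validate_rating` and `mean_and_sem` are not from the original test application.

```python
import math
import statistics

SCALE = range(-4, 5)  # 9-point bipolar scale: -4, ..., 0, ..., +4


def validate_rating(rating):
    """Raise ValueError if a rating is not one of the nine scale points."""
    if rating not in SCALE:
        raise ValueError(f"rating {rating!r} is outside the -4..+4 scale")
    return rating


def mean_and_sem(values):
    """Return (mean, standard error of the mean) using the sample SD."""
    n = len(values)
    return statistics.mean(values), statistics.stdev(values) / math.sqrt(n)


# Hypothetical valence ratings for one expression from four participants.
ratings = [validate_rating(r) for r in (1, 2, 3, 4)]
mean_rating, sem_rating = mean_and_sem(ratings)
```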

4.2. Results

4.2.1. The force and duration of touch

and show the mean and S.E.M. values for the force and duration for each facial expression.

A one-way, within-subject, repeated-measures ANOVA showed a statistically significant effect of expression on the force of touch, F (2, 38) = 6.29, p < .05, but no statistically significant effect of expression on the duration of touch, F (2, 38) = 1.34, p > .05.

Bonferroni corrected post hoc pairwise comparisons revealed that the use of force was stronger in response to angry than happy faces (MD = 0.27, p < .05). The difference between the responses to the angry and neutral expressions was also significant (MD = 0.27, p < .05). However, the difference between the responses to happy and neutral expressions was not statistically significant (MD = 0.002, p > .05).

4.2.2. Ratings of valence & arousal

The means and S.E.M.’s for the ratings of valence and arousal are presented in and , respectively.

A one-way, within-subjects ANOVA showed a statistically significant effect of expression on valence, F (2, 38) = 105.67, p < .001. Bonferroni corrected post hoc pairwise comparisons showed significant differences in the ratings of valence between angry and happy expressions (MD = 4.45, p < .01), between angry and neutral expressions (MD = 3.16, p < .01), and between neutral and happy expressions (MD = 1.29, p < .01).

A one-way, within-subjects ANOVA showed a statistically significant effect of expression on arousal, F (2, 38) = 32.39, p < .001. Bonferroni corrected post hoc pairwise comparisons showed that there was a significant difference in the ratings of arousal between angry and happy expressions (MD = 2.8, p < .001), and between angry and neutral expressions (MD = 3.0, p < .001). However, the difference between the ratings of arousal to neutral and happy expressions was not statistically significant (MD = 0.2, p > .05).

5. Discussion

The results from both experiments gave coherent findings with respect to the measurement of tactile responses to the facial expressions of emotions. The results showed that the force of touch was significantly stronger in response to angry than in response to happy and neutral expressions. The facial stimuli had no statistically significant effect on the duration of touch. Additionally, the participants’ ratings of their responses to the facial stimuli showed that angry, neutral, and happy faces evoked experiences in line with the negative–positive valence dimension. The ratings of arousal showed that the angry facial expressions were felt as significantly more arousing than the neutral or happy expressions. There was no significant difference in felt arousal between neutral and happy faces.

Taken together, our results showed that the facial expressions modulated both the haptic responses and the ratings of emotional experiences. To our knowledge, this is the first empirical study in which a connection between facial emotion perception and haptic response has been found. Although there are no exactly similar studies, the findings can be interpreted as being in line with earlier studies that dealt in some way with the force of touch. For example, the earlier studies (e.g., Bailenson et al., 2007; Hertenstein et al., 2007; Tsetserukou et al., 2009) all used force of touch as a central factor in mediating the content of haptic messages. The study by Gao et al. (2012) indicated that the force of touch, as measured in terms of the area of contact, seemed most promising for discriminating frustration from the other emotions (i.e., excited, relaxed, and bored). Similar to Gao et al. (2012), Hernandez et al. (2014), using a pressure-sensitive keyboard and a capacitive mouse, showed that an increased level of emotional stress was expressed as stronger keystroke force. The study by Ellingsen et al. (2014) found that when facial expressions of anger, neutrality, and happiness were presented together with human touch or machine touch, the touch was perceived as least pleasant when presented together with angry facial expressions and with human touch. Similarly, the study by Ravaja et al. (2017) showed that the late SEP amplitudes (i.e., responses to touch stimuli) were highest in response to angry and lowest in response to happy facial expressions. They also showed that when participants were given the impression of a 3D facial figure touching them, the ratings of exactly the same touch stimuli depended on the facial expression of the figure. The figure’s touch was rated as most intense during angry faces. In summary, there is surrounding supportive evidence for our findings.

In a more general framework, our finding supports the suggestion, for example, by Lang et al. (1990) that there is a direct link between the perception of emotionally meaningful information and action relevant to the information perceived. As our participants used more force of touch in response to images they rated as both unpleasant and arousing, the results support the idea of angry images both activating primitive motive circuits (i.e., defensive) and initiating a demand for action (Lang & Bradley, 2010; Seidel et al., 2010). It may be that the use of force indicates the required charge for action with respect to the perception. Thus, our findings may be indicative of the idea that angry facial expressions elicit an automatic behavioral response that evokes a demand for action or avoidance behavior (e.g., Marsh et al., 2005), resulting in a stronger force of touch to avoid or eliminate the unpleasant, arousing experience as fast as possible.

It could be argued that the use of increased force was merely an indication of a stronger desire to remove the angry face from view as fast as possible, and that a faster response will naturally be stronger. However, earlier research on reaction times to facial expressions has been controversial. There is evidence that reaction times to happy faces are faster than those to angry faces (e.g., Billings et al., 1993; Harrison et al., 1990; Hugdahl et al., 1993; Leppänen et al., 2003) but also the other way around (e.g., Mather & Knight, 2006; Öhman et al., 2001; Richards et al., 2011). Had angry faces been responded to faster, it is likely that this would also have been reflected in the duration of touch, with the duration being either shorter or longer compared to other expressions. We note that previous research has shown that negative emotion results in stronger motor evoked potentials (i.e., neuroelectrical signals in the muscles) than emotion induced by neutral and pleasant images (Blakemore et al., 2016; Coelho et al., 2010; Coombes et al., 2009). Additionally, participants viewing emotionally arousing images subconsciously applied stronger force when using the hand compared to participants viewing neutral images (Coombes et al., 2008, 2009, 2011; Naugle et al., 2012). In sum, there is supporting evidence that negative emotions can evoke a stronger force of touch. Still, it remains unclear whether happy or angry facial expression stimuli have a major impact on haptic reaction times. To clarify this, future studies should also include reaction time measurements.

It is not clear from this study whether the results indicate avoidance or approach motivation. Recently, the idea that positive emotions are related simply to approach motivation and negative emotions to escape or withdrawal motivation has been challenged. It has been argued that approach motivation can be evoked by unpleasant stimuli, and especially by anger-related stimuli. Even smiles can evoke avoidance motivation (Harmon-Jones et al., 2013). Thus, we must note that it is not fully clear whether the response measures we used reflect a tendency to attack or a tendency to get rid of an unpleasant stimulus. Future studies should include ratings of motivation to withdraw from or approach the stimuli in order to clarify the relations between the stimuli and the dynamics of haptic responses.

Admittedly, our study used only four expressions per category. It could be argued that there should have been more repetitions to improve the reliability of the findings, and this is true. However, the images were carefully selected to represent the best stimulus images from the Pictures of Facial Affect set (Ekman & Friesen, 1976). Further, the experiment was conducted twice, and both studies gave coherent results with respect to the use of force. This can be taken as an indication of the reliability of our findings. Another limitation of the study is that although the ratings of felt emotions fit well along the unpleasant-pleasant dimension, the fit along felt arousal was not perfect, as there was no significant difference between responses to neutral and happy faces. In future studies, stimuli should be balanced with respect to both dimensions. In our study, however, the difference between responses to angry and happy faces was statistically significant.

With respect to HCI and human-robot interaction, the results are also worth considering. Measurement of the force and duration of touch could, in principle, serve as one of the modalities in designing intelligent systems that measure and analyze users' behavior during interaction. Currently, affective haptics in robotics uses different sensors (i.e., touch, force/pressure, temperature) to measure touch input in order to analyze different qualities of touch, such as good touch (e.g., stroking, patting) and bad touch (e.g., hitting, pushing). To improve human-robot communication, it is important that affective social robots can identify, express, and react to different qualities of touch in a smooth and natural manner (Kirby et al., 2010). Similarly, in the field of HCI, immersive Virtual Reality (VR) paired with haptic technology (e.g., a haptic glove accessory) that simulates the tactile sensations of virtual objects allows the user to physically interact with those objects. Generally, haptic technology creates sensations such as tactile feedback, texture, density, strength, smoothness or roughness, and friction of virtual objects (Kumar & Bhavani, 2017), but it currently lacks affective interaction via haptics. An affective immersive VR system with haptic technology that could also identify and react to different measurements of touch could greatly benefit, for example, the gaming sector, where emotions play a vital role. A study by Ahmed et al. (2020), using a virtual agent with facial emotional expressions and a pressure-sensing tube presented as the agent's arm in VR, showed that the agent's facial emotional expression affected squeeze intensity and duration through changes in emotional perception and experience. This suggests that haptic responses may yield an implicit measure of a person's experience of a virtual agent. Additionally, studies by Gao et al. (2012) and Hernandez et al. (2014), with touch screens and keyboards respectively, showed that negative emotions such as frustration and stress elicited more force of touch in HCI. Our results are in line with these studies, showing that facial stimuli modulated both touch force and emotional experiences. Of course, more research on the use of touch while interacting with technology (e.g., computers) is needed before force of touch measurements can reliably help improve a machine's predictions of its user's mental state in terms of valence and arousal.

Acknowledgments

We thank Dr. Poika Isokoski for his help in calibrating the sensor and the volunteers who took part in the experiment. This research was financially supported by funding from Tampere University, Faculty of Information Technology and Communication Sciences.

Additional information

Notes on contributors

Deepa Vasara

Deepa Vasara received her Master's degree in Human-Technology Interaction in 2016 from Tampere University, and is a doctoral researcher in Interactive Technology and a member of the Research Group for Emotions, Sociality, and Computing (https://research.tuni.fi/esc/). Her research focuses especially on haptics, emotion, human-technology interaction, and affective interaction.

Veikko Surakka is a Professor of interactive technology (2007-present) and the head of the Research Group for Emotions, Sociality, and Computing (https://research.tuni.fi/esc/). His and the group's research focuses especially on emotion, cognition, human-human and human-technology interaction, and the development of new multimodal interaction technology.

References

- Ahmed, I., Harjunen, V., Jacucci, G., Hoggan, E., Ravaja, N., & Spapé, M. M. (2016, October). Reach out and touch me: Effects of four distinct haptic technologies on affective touch in virtual reality. In Proceedings of the 18th ACM International Conference on Multimodal Interaction (ICMI’16) (pp. 341–348). Tokyo, Japan: ICMI. https://doi.org/10.1145/2993148.2993171

- Ahmed, I., Harjunen, V. J., Jacucci, G., Ravaja, N., Ruotsalo, T., & Spape, M. (2020). Touching virtual humans: Haptic responses reveal the emotional impact of affective agents. IEEE Transactions on Affective Computing, 1. https://doi.org/10.1109/TAFFC.2020.3038137

- Allen, J., Cang, L., Phan-Ba, M., Strang, A., & MacLean, K. (2015, March). Introducing the Cuddlebot: A robot that responds to touch gestures. In Proceedings of the tenth annual ACM/IEEE international conference on human-robot interaction extended abstracts (p. 295). Portland, OR. https://doi.org/10.1145/2701973.2702698

- Andreasson, R., Alenljung, B., Billing, E., & Lowe, R. (2018). Affective touch in human–robot interaction: Conveying emotion to the nao robot. International Journal of Social Robotics, 10(4), 473–491. https://doi.org/10.1007/s12369-017-0446-3

- Anttonen, J., Surakka, V., & Koivuluoma, M. (2009). Ballistocardiographic responses to dynamic facial displays of emotion while sitting on the EMFi chair. Journal of Media Psychology, 21(2), 69–84. https://doi.org/10.1027/1864-1105.21.2.69

- Bailenson, J. N., Yee, N., Brave, S., Merget, D., & Koslow, D. (2007). Virtual interpersonal touch: Expressing and recognizing emotions through haptic devices. Human–Computer Interaction, 22(3), 325–353. https://doi.org/10.1080/07370020701493509

- Becker, D. V., Anderson, U. S., Mortensen, C. R., Neufeld, S. L., & Neel, R. (2011). The face in the crowd effect unconfounded: Happy faces, not angry faces, are more efficiently detected in single- and multiple-target visual search tasks. Journal of Experimental Psychology. General, 140(4), 637. https://doi.org/10.1037/a0024060

- Billings, L. S., Harrison, D. W., & Alden, J. D. (1993). Age differences among women in the functional asymmetry for bias in facial affect perception. Bulletin of the Psychonomic Society, 31(4), 317–320. https://doi.org/10.3758/BF03334940

- Blakemore, R. L., Rieger, S. W., & Vuilleumier, P. (2016). Negative emotions facilitate isometric force through activation of prefrontal cortex and periaqueductal gray. Neuroimage, 124(Pt A), 627–640. https://doi.org/10.1016/j.neuroimage.2015.09.029

- Bradley, M. M., & Lang, P. J. (1994). Measuring emotion: The self-assessment manikin and the semantic differential. Journal of Behavior Therapy and Experimental Psychiatry, 25(1), 49–59. https://doi.org/10.1016/0005-7916(94)90063-9

- Breazeal, C. (2001, September). Affective interaction between humans and robots. European conference on artificial life (pp. 582–591). Berlin, Heidelberg: Springer.

- Coelho, C. M., Lipp, O. V., Marinovic, W., Wallis, G., & Riek, S. (2010). Increased corticospinal excitability induced by unpleasant visual stimuli. Neuroscience Letters, 481(3), 135–138. https://doi.org/10.1016/j.neulet.2010.03.027

- Coombes, S. A., Gamble, K. M., Cauraugh, J. H., & Janelle, C. M. (2008). Emotional states alter force control during a feedback occluded motor task. Emotion, 8(1), 104. https://doi.org/10.1037/1528-3542.8.1.104

- Coombes, S. A., Naugle, K. M., Barnes, R. T., Cauraugh, J. H., & Janelle, C. M. (2011). Emotional reactivity and force control: The influence of behavioral inhibition. Human Movement Science, 30(6), 1052–1061. https://doi.org/10.1016/j.humov.2010.10.009

- Coombes, S. A., Tandonnet, C., Fujiyama, H., Janelle, C. M., Cauraugh, J. H., & Summers, J. J. (2009). Emotion and motor preparation: A transcranial magnetic stimulation study of corticospinal motor tract excitability. Cognitive, Affective & Behavioral Neuroscience, 9(4), 380–388. https://doi.org/10.3758/CABN.9.4.380

- Dimberg, U., Thunberg, M., & Elmehed, K. (2000). Unconscious facial reactions to emotional facial expressions. Psychological Science, 11(1), 86–89. https://doi.org/10.1111/1467-9280.00221

- Ekman, P. (1992). An argument for basic emotions. Cognition & Emotion, 6(3–4), 169–200. https://doi.org/10.1080/02699939208411068

- Ekman, P., Davidson, R. J., & Friesen, W. V. (1990). The Duchenne smile: Emotional expression and brain physiology: II. Journal of Personality and Social Psychology, 58(2), 342. https://doi.org/10.1037/0022-3514.58.2.342

- Ekman, P., & Friesen, W. V. (1976). Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press.

- Ellingsen, D. M., Wessberg, J., Chelnokova, O., Olausson, H., Laeng, B., & Leknes, S. (2014). In touch with your emotions: Oxytocin and touch change social impressions while others’ facial expressions can alter touch. Psychoneuroendocrinology, 39, 11–20. https://doi.org/10.1016/j.psyneuen.2013.09.017

- FSR 400 series data sheet. Interlink Electronics, Inc. Retrieved May 15, 2017, from https://www.interlinkelectronics.com/datasheets/Datasheet_FSR.pdf

- Gao, Y., Bianchi-Berthouze, N., & Meng, H. (2012). What does touch tell us about emotions in touchscreen-based gameplay? ACM Transactions on Computer-Human Interaction (TOCHI), 19(4), 1–30. https://doi.org/10.1145/2395131.2395138

- Hansen, C. H., & Hansen, R. D. (1988). Finding the face in the crowd: An anger superiority effect. Journal of Personality and Social Psychology, 54(6), 917. https://doi.org/10.1037/0022-3514.54.6.917

- Harlow, H. F. (1958). The nature of love. American Psychologist, 13(12), 673–685. https://doi.org/10.1037/h0047884

- Harmon-Jones, E., Harmon-Jones, C., & Price, T. F. (2013). What is approach motivation? Emotion Review, 5(3), 291–295. https://doi.org/10.1177/1754073913477509

- Harrison, D. W., Gorelczenko, P. M., & Cook, J. (1990). Sex differences in the functional asymmetry for facial affect perception. International Journal of Neuroscience, 52(1–2), 11–16. https://doi.org/10.3109/00207459008994238

- Hernandez, J., Paredes, P., Roseway, A., & Czerwinski, M. (2014, April). Under pressure: Sensing stress of computer users. Proceedings of the SIGCHI conference on human factors in computing systems (pp. 51–60). Toronto, ON.

- Hertenstein, M. J., Butts, A., & Hile, S. (2007). The communication of anger: Beyond the face. Psychology of anger. Nova Science Publishers.

- Hertenstein, M. J., Keltner, D., App, B., Bulleit, B. A., & Jaskolka, A. R. (2006). Touch communicates distinct emotions. Emotion, 6(3), 528. https://doi.org/10.1037/1528-3542.6.3.528

- Hugdahl, K., Iversen, P. M., & Johnsen, B. H. (1993). Laterality for facial expressions: Does the sex of the subject interact with the sex of the stimulus face? Cortex, 29(2), 325–331. https://doi.org/10.1016/S0010-9452(13)80185-2

- Kidd, C. D., Taggart, W., & Turkle, S. (2006, May). A sociable robot to encourage social interaction among the elderly. Proceedings 2006 IEEE international conference on robotics and automation, 2006. ICRA 2006. (pp. 3972–3976). Orlando, FL: IEEE.

- Kirby, R., Forlizzi, J., & Simmons, R. (2010). Affective social robots. Robotics and Autonomous Systems, 58(3), 322–332. https://doi.org/10.1016/j.robot.2009.09.015

- Kumar, G. S., & Bhavani, G. S. (2017, September). A new dimension of immersiveness into virtual reality through haptic technology. 2017 IEEE International Conference on Power, Control, Signals and Instrumentation Engineering (ICPCSI) (pp. 1651–1655). Chennai, India: IEEE.

- Lang, P. J., & Bradley, M. M. (2010). Emotion and the motivational brain. Biological Psychology, 84(3), 437–450. https://doi.org/10.1016/j.biopsycho.2009.10.007

- Lang, P. J., Bradley, M. M., & Cuthbert, B. N. (1990). Emotion, attention, and the startle reflex. Psychological Review, 97(3), 377–395. https://doi.org/10.1037/0033-295X.97.3.377

- Leppänen, J. M., Tenhunen, M., & Hietanen, J. K. (2003). Faster choice-reaction times to positive than to negative facial expressions: The role of cognitive and motor processes. Journal of Psychophysiology, 17(3), 113. https://doi.org/10.1027//0269-8803.17.3.113

- Marsh, A. A., Ambady, N., & Kleck, R. E. (2005). The effects of fear and anger facial expressions on approach- and avoidance-related behaviors. Emotion, 5(1), 119. https://doi.org/10.1037/1528-3542.5.1.119

- Mather, M., & Knight, M. R. (2006). Angry faces get noticed quickly: Threat detection is not impaired among older adults. The Journals of Gerontology. Series B, Psychological Sciences and Social Sciences, 61(1), P54–57. https://doi.org/10.1093/geronb/61.1.P54

- Naugle, K. M., Coombes, S. A., Cauraugh, J. H., & Janelle, C. M. (2012). Influence of emotion on the control of low-level force production. Research Quarterly for Exercise and Sport, 83(2), 353–358. https://doi.org/10.1080/02701367.2012.10599867

- Öhman, A., Lundqvist, D., & Esteves, F. (2001). The face in the crowd revisited: A threat advantage with schematic stimuli. Journal of Personality and Social Psychology, 80(3), 381. https://doi.org/10.1037/0022-3514.80.3.381

- Picard, R. W. (1997). Affective Computing. MIT Press.

- Programming resources. (2010). Phidgets Inc. Retrieved December 23, 2018, from http://www.phidgets.com/docs/Programming_Resources

- Rantala, J., Salminen, K., Raisamo, R., & Surakka, V. (2013). Touch gestures in communicating emotional intention via vibrotactile stimulation. International Journal of Human-Computer Studies, 71(6), 679–690. https://doi.org/10.1016/j.ijhcs.2013.02.004

- Ravaja, N., Harjunen, V., Ahmed, I., Jacucci, G., & Spapé, M. M. (2017). Feeling touched: Emotional modulation of somatosensory potentials to interpersonal touch. Scientific Reports, 7(1), 1–11. https://doi.org/10.1038/srep40504

- Richards, H. J., Hadwin, J. A., Benson, V., Wenger, M. J., & Donnelly, N. (2011). The influence of anxiety on processing capacity for threat detection. Psychonomic Bulletin & Review, 18(5), 883. https://doi.org/10.3758/s13423-011-0124-7

- Saldien, J., Goris, K., Yilmazyildiz, S., Verhelst, W., & Lefeber, D. (2008). On the design of the huggable robot Probo. Journal of Physical Agents, 2(2), 3–12.

- Salminen, K., Surakka, V., Lylykangas, J., Raisamo, J., Saarinen, R., Raisamo, R., … Evreinov, G. (2008, April). Emotional and behavioral responses to haptic stimulation. Proceedings of the SIGCHI conference on human factors in computing systems (pp. 1555–1562). Florence, Italy.

- Seidel, E. M., Habel, U., Kirschner, M., Gur, R. C., & Derntl, B. (2010). The impact of facial emotional expressions on behavioral tendencies in women and men. Journal of Experimental Psychology. Human Perception and Performance, 36(2), 500. https://doi.org/10.1037/a0018169

- Shibata, T., Inoue, K., & Irie, R. (1996, November). Emotional robot for intelligent system-artificial emotional creature project. Proceedings 5th IEEE international workshop on robot and human communication. RO-MAN’96 TSUKUBA (pp. 466–471). Tsukuba, Japan: IEEE.

- Stiehl, W. D., Breazeal, C., Han, K. H., Lieberman, J., Lalla, L., Maymin, A., … Gray, J. (2006). The huggable: A therapeutic robotic companion for relational, affective touch. ACM SIGGRAPH 2006 emerging technologies (pp. 15–es). Boston, MA.

- Surakka, V., & Hietanen, J. K. (1998). Facial and emotional reactions to Duchenne and non-Duchenne smiles. International Journal of Psychophysiology, 29(1), 23–33. https://doi.org/10.1016/S0167-8760(97)00088-3

- Tsetserukou, D., Neviarouskaya, A., Prendinger, H., Kawakami, N., & Tachi, S. (2009, September). Affective haptics in emotional communication. 2009 3rd international conference on affective computing and intelligent interaction and workshops (pp. 1–6). Amsterdam, Netherlands: IEEE.

- Wada, K., & Shibata, T. (2007). Living with seal robots—its sociopsychological and physiological influences on the elderly at a care house. IEEE Transactions on Robotics, 23(5), 972–980. https://doi.org/10.1109/TRO.2007.906261