ABSTRACT

Virtual agents are systems that add a social dimension to computing, often featuring not only natural language input but also an embodiment or avatar. This allows them to take on a more social role and leverage the use of nonverbal communication (NVC). In humans, NVC is used for many purposes, including communicating intent, directing attention, and conveying emotion. As a result, researchers have developed agents that emulate these behaviors. However, challenges pervade the design and development of NVC in agents. Some articles reveal inconsistencies in the benefits of agent NVC; others show signs of difficulties in the process of analyzing and implementing behaviors. Thus, it is unclear what the specific outcomes and effects of incorporating NVC in agents and what outstanding challenges underlie development. This survey seeks to review the uses, outcomes, and development of NVC in virtual agents to identify challenges and themes to improve and motivate the design of future virtual agents.

1. Introduction

Virtual agents are computer systems that strive to engage with users on a social level, through the use of technologies such as natural language interfaces and digital avatars. While traditional dialogue systems enable natural user interaction by allowing users to speak and converse with a computer through natural discourse (Jurafsky & Martin, Citation2000), virtual agents have the ability to go a step further by supplementing the system with a visual representation, or avatar. For example, embodied conversational agents extend the capabilities of dialogue systems through an embodiment (Cassell, Citation2001), which helps an agent take on a social role in interaction as users treat the agent more as another person or individual (Reeves & Nass, Citation1996; Sproull et al., Citation1996; Walker et al., Citation1994).

However, people communicate through more than just speech. For instance, we use gesture to illustrate ideas and provide information (Kendon, Citation2004; McNeill, Citation1992), gaze to show responsiveness and direct attention (Frischen et al., Citation2007), and facial expressions to convey emotion and mood (Ekman, Citation1993). While dialogue systems are unable to fully take advantage of these modalities, virtual agents can emulate these behaviors through an embodiment and thus leverage the full multimodality of human communication (Cassell, Citation2001).

The use of nonverbal communication (NVC) in virtual agents is practically as old as agents themselves (Cassell, Citation2001). Although rudimentary compared to more recent examples, early virtual agents already exhibited different nonverbal behaviors in addition to speech. For instance, the Rea agent (Cassell et al., Citation1999) was able to communicate through speech and gesture, pointing at objects to refer to them and making sweeping motions to pass the speaking floor back and forth. Other agents focused on other forms of NVC such as facial expressions to express emotion and adorn their interaction with affective content (Becker et al., Citation2004; Cassell & Thorisson, Citation1999). With advances in technology, more recent agents feature complex models of NVC (Andrist et al., Citation2012b; Cafaro et al., Citation2016; Pelachaud, Citation2017) and use a combination of different types of behaviors (DeVault et al., Citation2014; Gratch, Wang, Gerten et al., Citation2007; Traum et al., Citation2008).

Despite the number of agents that incorporate some form of NVC, to our knowledge, there are few literature reviews that focus on how NVC is used in agents, and none that explore the roles and outcomes of different types of behaviors and the efforts required to develop an agent that uses NVC. Previous surveys (Allbeck & Badler, Citation2001; André & Pelachaud, Citation2010; Nijholt, Citation2004) have presented an overview of the different behaviors that agents have employed, but do not elaborate on the inherent difficulties nor identify the outcomes of using such behaviors. Other articles focus on how the appearance of an agent, which can be considered a type of NVC (Argyle, Citation1988), affects how users perceive it (Baylor, Citation2009, Citation2011). However, these articles do not focus on more explicit nonverbal behaviors, which are what we seek to study in our review.

More relevant are the surveys of pedagogical agents, which detail the effectiveness of agents in teaching scenarios (Clarebout et al., Citation2002; Heidig & Clarebout, Citation2011; Johnson et al., Citation2000; Krämer & Bente, Citation2010), but these surveys do not focus on the use of NVC, or only mention it in passing. However, a few papers hint at the existence of issues in virtual agent NVC, such as the inconsistency of effectiveness in teaching scenarios (Baylor & Kim, Citation2008; Frechette & Moreno, Citation2010) and the time-consuming nature of defining nonverbal behaviors (Rehm & André, Citation2008).

Thus, the goal of this literature review is to explore the use of NVC in virtual agents and identify the challenges with incorporating NVC in virtual agents, from both the interaction and development standpoints. While agents can feature a range of embodiments from none (e.g., dialogue systems) to physical, real-world embodiments (e.g., robots), for our review, we focus specifically on virtual agents that feature a digital avatar, or visual representation of that agent.

We begin our survey in Section 3 by highlighting the role NVC takes in human-human interactions and then continue in Section 4 by comparing how NVC is likewise emulated in virtual agents. In Section 5, we detail the effects and outcomes NVC has on human-agent interactions, and then, in Section 6, we describe how agent systems that incorporate NVC are designed and developed. We follow this in Section 7 with a discussion of the primary challenges and themes identified in the literature. We then conclude in Section 8 by summarizing our findings, with the intent of motivating continued research into the creation of virtual agents that use NVC.

2. Methodology

To conduct the paper search portion of this literature survey, we utilized the methodology by Kitchenham et al. (Citation2009), who present a set of guidelines for conducting a systematic literature review. For our review, we focused on adapting their strategy for planning research questions and identifying relevant papers. In their process, they recommend starting with establishing a set of research questions/goals to motivate the initial search. To start, we came up with a number of different research questions based on the topic of incorporating NVC in virtual agents. These are listed below:

How is NVC used in virtual agents?

(a)Specifically, what types of NVC have been implemented?

(b)How do the outcomes and functions of NVC in agents compare with natural human interaction?

(2)What challenges are there to creating virtual agents that use NVC?

(a)How is NVC implemented in virtual agent systems?

(b)How do researchers understand and define nonverbal behaviors?

(3)How is mirroring/mimicry utilized in virtual agents that display NVC?

Based on these questions, we derived a set of keywords and search combinations that would yield relevant results. For instance, to identify implementations of NVC in agents, we searched for “nonverbal behavior” and “virtual agent,” which yielded a list of articles relating to virtual agents that used some form of NVC. Key search terms included: Nonverbal communication, gesture, facial expression, multimodal, virtual agent, mirroring, mimicry, chameleon effect.

These keyword combinations and synonyms (e.g., “virtual agent,” “embodied conversational agent,” and “virtual human”) were inputted into Google Scholar to generate a broad listing of articles aggregated from multiple sources. For each search result, we looked through at least the first 10 result pages (up to 15, based on if we encountered many repeated entries in the first 10 pages) for each of the search terms and identified papers relevant to our research questions. This yielded 79 papers from the initial search protocol.

After this search, we also further reviewed the related work and other citations from each selected paper. From these, the relevant articles were added to our paper list. Likewise, these newly added papers were also reviewed to find additional relevant papers, what Kitchenham et al. (Citation2009) refer to as “snowballing.” Using this method, 43 papers were added to the list.

Additionally, papers from relevant authors as identified during the search process (and noted as commonly cited, during the snowballing method) were also reviewed for inclusion in our literature review. We searched for these authors on Google Scholar and their relevant works were also evaluated for inclusion. 22 papers from related authors (that were not already included in the list) were added.

During the search process, papers suggested by collaborators were also added to the list. The resulting number of papers identified through each method is listed in , for a total of 150 papers selected to be in this literature review.

Table 1. Paper identification methods

3. NVC in human-human interactions

To understand how to effectively implement nonverbal behaviors in virtual agents, we first examine how they are used in humans. Nonverbal cues are used as an additional channel of communication that can be used alongside speech to modify what is being said, adding meaning and richness (Argyle, Citation1988; Kendon, Citation2004; Quek et al., Citation2002). In this section, we briefly describe the relevant main benefits of NVC in human interactions as the key motivation and rationale behind the use of NVC in virtual agents. Although there are many forms of nonverbal behavior, we discuss the primary ones that are most relevant to communication and common among the virtual agents identified through our survey.

3.1. Gesture

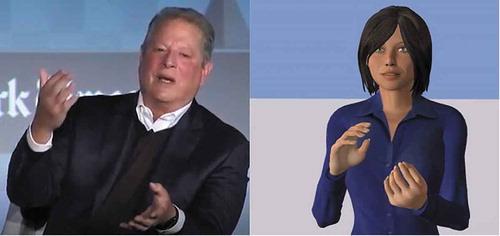

One of the most evident forms of NVC is gesture. Gestures are motions and poses primarily made with the arms and hands (or sometimes other body parts) in order to communicate, often while speaking (). Gesture is used by humans in everyday conversation to refer to objects and add expressiveness to language by demonstrating events and actions (Kendon, Citation2004). Kendon (Citation1988) and McNeill (Citation1992) further separate gestures into five different categories with differing communicatory functions. They range from simple beat gestures (rhythmic motions that go along with words) to emblem gestures (that have their own meaning and can fully replace words). These can add dynamics to a conversation, driving the point through a beat gesture or signifying approval through an emblematic thumbs-up. These gestures co-exist with speech, adding complementary or redundant information. By annotating and quantitatively analyzing videos of people communicating, Quek et al. (Citation2002) show how gesture and speech form a temporal and co-expressive relationship with one another. Gestures occur at key points in time while speaking, such as during speech or specifically during a verbal pause.

Figure 1. A man naturally gesturing with his hands while speaking to emphasize the importance of a concept. (Public domain image)

While these examples highlight the descriptive use of gesture in the contexts of narration and conversation, gestures also play a more functional role in delivering information. Due to their spatial nature, gestures have the ability to describe spatial features, allowing a person to illustrate imaginary objects and spaces (Alibali, Citation2005; Bergmann, Citation2006; Kendon, Citation2004). They also facilitate conversational grounding, i.e. the process of establishing mutual understanding when communicating (Clark & Brennan, Citation1991; Clark & Wilkes-Gibbs, Citation1986; Dillenbourg & Traum, Citation2006). Gestures also help manage the flow of conversation by functioning as a signal for turn-taking and also providing a “back-channel” for communicating attention (Duncan & Niederehe, Citation1974; Young & Lee, Citation2004), such as a nodding head gesture. The use of gesture in teaching is also associated with comprehension and information recall (Cook & Goldin-Meadow, Citation2006; Goldin-Meadow & Alibali, Citation2013; Novack & Goldin-Meadow, Citation2015).

The HCI field has also examined the use of gesture to communicate, with the express intent to build gestural interaction systems. For instance, in a study looking at how people use gestures to describe objects and actions, Grandhi et al. (Citation2011) saw how people used pantomime to directly paint an imaginary image of the action (i.e., holding imaginary objects and pretending to perform a task) rather than attempt to use abstract gestures to describe the task or object. Other research focusing on shared visuals and workspaces between people also highlight the usefulness of non-verbal communication to support coordination between humans (Fussell et al., Citation2000, Citation2004; Gergle et al., Citation2004; Kraut et al., Citation2003). In these cases, deictic gestures (i.e., pointing) are used to direct shared attention between people and signal toward specific objects or areas when speaking.

3.2. Gaze

As a nonverbal signal, gaze has both interpersonal effects as well as practical functions. For example, mutual gaze or eye contact can signal likability or intimacy (Argyle & Dean, Citation1965; Cook, Citation1977), but gazing for too long can increase discomfort or awkwardness (Cook, Citation1977). Mutual gaze (eye contact between individuals) has also been shown to have an impact on a person’s credibility (Beebe, Citation1976). On a more functional level, shared gaze, or joint attention, has been shown to increase performance in spatial tasks between collaborators (Brennan et al., Citation2008). This is due to the ability for gaze to both convey attention and direct attention (Frischen et al., Citation2007), with similar effect to deictic gestures.

There are also conversational functions associated with gaze behavior. While speaking, a person may use gaze to nonverbally manage the conversation (Duncan, Citation1972). The use of key gaze signals (such as averting the eyes and then returning to a mutual gaze) can be used when turn-taking to pass the speaking floor to someone else or maintain control of the floor. When listening, gaze can be indicative of a person’s level of interest and attention (Bavelas & Chovil, Citation2006; Bavelas et al., Citation2002). A speaker would often gaze away while speaking but engage in brief moments of mutual gaze in order to “checkup” on the listener. The listener would react to the gaze by giving an affirmative response (whether verbal or gestural), to which the speaker would resume. Likewise, gaze can also be a measure of listener comprehension (Beebe, Citation1976).

3.3. Facial expression

Facial expressions are often used by humans to convey emotion or affect. While facial expressions are not the only channel for communicating emotion, they are among the strongest indicators (Ekman, Citation1993). Research by Ekman (Citation1992) has shown how, as humans, we have at minimum a basic set of emotions (ranging from anger to surprise) with virtually universal facial expressions to convey them (Ekman et al., Citation1987). In contrast, Horstmann (Citation2003) argues that expressions signal more than just emotion, but also reveal the person’s intent and can even communicate desired actions. Facial expressions are also readily interpretable by people (H. L. Wagner et al., Citation1986), as well as by facial recognition systems (Bartlett et al., Citation1999). Thus, facial expressions provide a rich source of information that is both readily available and recognizable.

3.4. Proxemics

There are other behaviors which also communicate but are more focused around the whole body and its positioning. One such focus is on proxemics, or the study of the interpersonal distance between individuals and how that form of body language is interpreted. Hall (Citation1966) laid out four progressive levels of interpersonal distance, ranging from a close intimate distance to a far public distance. People naturally interact at these different distances based on their interpersonal relationship (Willis, Citation1966), for instance, talking with friends would be at a personal distance (around 2–5 feet) but likely not at an intimate distance (less than 2 feet). When meeting someone for the first time, how close they come can also express different personality traits, such as friendliness or extroversion (Patterson & Sechrest, Citation1970).

3.5. Posture

Postures have been shown to express a number of different attitudes and meanings. A posture with arms folded or legs crossed can signify being “closed-off” or less inviting to social interaction (Argyle, Citation1988). Mehrabian (Citation1969) showed how postures directly related to feelings of assertion and dominance, based on the perceived relaxation of a pose. They also showed how likability and attentiveness can be conveyed through body language such as leaning toward another person, sometimes in conjunction with eye contact and closer proximity (Mehrabian, Citation1972).

Similar to facial expression, posture also has the ability to communicate emotional state. A study by Dael et al. (Citation2012) showed how specific patterns of body movements and poses commonly expressed specific emotions and that people were readily able to differentiate between them. The level of intensity for an emotion can also be conveyed through the body. Wallbott (Citation1998) studied how the amplitude/level of energy in motions and body poses can reflect the intensity of the emotion portrayed. Posture can also be a measure of rapport (Kendon, Citation1970), more recently studied through the psychological effect of mirroring, where a person mimics the body poses, gestures, or even general attentiveness of another, often subconsciously (Lafrance & Broadbent, Citation1976). In doing so, this is interpreted as a sign of likability or willingness to cooperate (Chartrand & Bargh, Citation1999).

3.6. Behavioral mirroring

One particular nonverbal behavior we want to introduce here is mirroring. Mirroring is different from other behaviors in that it is not technically a “type” of NVC. Instead, mirroring is when one person subconsciously mimics the behavior of another (Kendon, Citation1970). The act may be verbal or nonverbal; however, for this paper, we refer to behavioral mirroring in the nonverbal sense. Mirroring has many benefits in human-human communication, including increasing likability (Chartrand & Bargh, Citation1999; Duy & Chartrand, Citation2015; Jacob, Gueguen, Martin, & Boulbry, Citation2011), rapport (Chartrand & Bargh, Citation1999; Duy & Chartrand, Citation2015; Kendon, Citation1970; Lafrance & Broadbent, Citation1976; Lakin & Chartrand, Citation2003), and persuasion (Jacob et al., Citation2011; van Swol, Citation2003).

Kendon (Citation1970) was one of the earliest to study mirroring, calling it “interactional synchrony” that naturally occurred between individuals. Lafrance and Broadbent (Citation1976) noted that posture mimicry (e.g., leaning forward after a speaker leans forward) may be a subconscious signal to convey a listener’s attention. This signal, when (subconsciously) received back by the speaker, can increase feelings of rapport. LaFrance (Citation1985) even refers to mirroring as “an obvious yet unobtrusive indicator of openness to interpersonal involvement.”

These two studies were observational; Chartrand and Bargh (Citation1999) were the first to investigate mirroring in an experimental setting. In their study, participants described photos to another individual (a researcher who functioned as a confederate). The confederate would either mimic the participant’s body language (e.g., gesture and posture shifts) or not. Participants who interacted with the mimicking confederate rated their interaction as smoother, with a similar increase in likability and empathy.

Mirroring can even influence behavior. In a study by Jacob et al. (Citation2011), customers who interacted with a retail clerk that mimicked their behaviors rated the clerk as more likable and having more influence. The customers who interacted with the mirroring clerk were also more likely to spend more. Van Baaren et al. (Citation2004) even showed how mirroring may even have positive social effects. They conducted a study where participants interacted with either a mirroring or non-mirroring confederate. The confederate would “accidentally” drop a few pens on the ground. Participants in the mirroring condition were more likely to help the confederate pick up the pens. Cook and Goldin-Meadow (Citation2006) studied how mirroring can impact learning in children. Children who were taught by an instructor who used gesture were more likely to use the same gestures when explaining the concepts they learned. The children who mirrored the gesture also scored higher on a test versus children who did not mirror, despite both receiving the same instruction.

3.7. Complex NVC

Furthermore, an important point about these nonverbal behaviors is that they are not all independent – different behaviors are often combined to form more complex nonverbal expressions. A person might, for example, both gaze at another while gesturing toward them to signal that they are passing the speaking floor (Duncan, Citation1972; Duncan & Niederehe, Citation1974). In addition, the act of communicating understanding involves gaze, facial expression, and even posture (Clark & Brennan, Citation1991; Duncan & Niederehe, Citation1974; Young & Lee, Citation2004). Proximity can also be used as an indicator of willingness to interact (Argyle, Citation1988), such as walking toward someone but in conjunction with gaze and facial expression to communicate their intention. In contrast, two types of NVC may even interact, such as with mutual gaze and proximity (Argyle & Dean, Citation1965). Gaze may improve levels of intimacy between two people but can be affected by their proximity (thus requiring a balance between the two in order to create the desired effect).

4. NVC in virtual agent interactions

In the previous section, we highlighted different types of NVC used in human-human interactions. These encompassed a wide range of nonverbal cues, from gesture to proxemics. Likewise, virtual agents have implemented similar cues in order to emulate natural human behavior. These agents have been created for a number of different application areas, including teaching (Andrist, Citation2013; Baylor & Kim, Citation2008, Citation2009; Noma et al., Citation2000; Rickel & Johnson, Citation1999, Citation2000), coaching (Anderson et al., Citation2013; Bergmann & Macedonia, Citation2013; Kang et al., Citation2008), healthcare (DeVault et al., Citation2014; Hirsh et al., Citation2009; Kang et al., Citation2012), military settings (Kenny et al., Citation2007; Lee et al., Citation2007; Traum et al., Citation2008), as general conversation partners (Buschmeier & Kopp, Citation2018; Gratch, Wang, Okhmatovskaia et al., Citation2007; Hartmann et al., Citation2006; Pelachaud, Citation2005a), and as virtual assistants (Cassell et al., Citation1999; Matsuyama et al., Citation2016; Theune, Citation2001). In our literature search, we identified papers describing agents that implement each of the NVC types detailed in the previous section. In this section, we give examples of how virtual agents emulate each type of behavior. We start by summarizing the types of NVC used in agents and how they compare against human-human interactions, before later describing the overall goals and effects of agent NVC in Section 5.

4.1. Gesture

Gesture is one of the most predominant forms of NVC used in agents. Recall that, in humans, gesture has many purposes ranging from illustrating words and ideas to directing attention and referring to objects. We see these same purposes featured in virtual agents. Like with human-human interactions, gesture is primarily used to accompany speech. The Rea agent, created by Cassell et al. (Citation1999) to function as a virtual real estate agent, is one of the earliest to use gestures for this purpose. As she interacts with users, she will nod (a head gesture) to signal attention and use beat gestures to emphasize specific words when she speaks.

Similarly, the Greta agent (Niewiadomski et al., Citation2009; Pelachaud, Citation2017; Poggi et al., Citation2005) in is a multi-purpose conversational agent that is designed for multipurpose applications (ranging from interviews to coaching). This allows her to be adapted and used for different research goals. One of the key features of the Greta agent is that she performs different gestures when speaking to the user. Like with human-human communication, Greta’s use of gestures is for added expressivity, but also for the goal of increasing the agent’s believability in interactions. The Max agent (Becker et al., Citation2004) also performs gestures while speaking to increase believability and realism.

Figure 2. The Greta agent communicating through gesture. Image from https://github.com/isir/greta. Reproduced with permission

On a different note, the Steve agent (Rickel & Johnson, Citation1999) also uses gestures when speaking, but instead in teaching and training situations. Unlike the previous agents that use gesture to primarily make conversations more expressive, Steve takes a more functional approach. Steve uses deictic gestures while referring to specific objects in his environment as well as demonstrative gestures and direct actions to directly show the user how to perform a task. Traum et al. (Citation2008) also had their agent gesture toward objects when referring to them. These examples highlight how agents, much like humans, are able to use gesture to help in completing tasks.

4.2. Gaze

Gaze is also commonly emulated in virtual agents. In particular, agents focus on implementing both mutual gaze and deictic gaze to convey their attention and refer to objects, respectively. Andrist, Mutlu et al. Citation(2012a, 2013) describe the development of agents that employ mutual gaze and gaze aversions in a teaching setting to control the conversation and help increase feelings of affiliation in users. Likewise, an agent by Lee et al. (Citation2007) uses a model of mutual gaze in conversation to show when the agent is paying attention and listening intently. More recently, the Ellie agent by DeVault et al. (Citation2014) and the SARA agent by Matsuyama et al. (Citation2016) use gaze in combination with other types of NVC to build rapport. Lance and Marsella (Citation2009, Citation2010), Lance & Marsella (Citation2008) go even further by using gaze aversion to convey emotion in agents.

Andrist et al. (Citation2017) also created an agent that used gaze to coordinate and direct attention. A user was asked to collaborate with the agent to build different types of sandwiches. The agent would use gaze in conjunction with speech to refer to ingredients. In that same vein, Pejsa et al. (Citation2015) describe the development of an agent that uses both mutual and deictic gaze to coordinate movement and show interest in both the user as well as objects in the environment. Overall, as with gesture, gaze is well-utilized in agents with much of the same purposes as in human-human interactions.

4.3. Facial expression

A number of agents have the ability to display different facial expressions. Just like with human-human interactions, facial expressions in virtual agents are used to convey emotion, or affect. As an early example, Gandalf (Cassell & Thorisson, Citation1999) makes different faces while answering users’ questions, such as smiling while making a joke. A more advanced agent, Max, by Becker et al. (Citation2004) has an internal model of emotion that enables him to smile when happy or even look angry when annoyed. Greta (Poggi et al., Citation2005), as mentioned earlier, is also expression-capable and can even use them in conjunction with other nonverbal behaviors.

These facial expressions help give an agent its own kind of personality. Cafaro et al. (Citation2016) describe their Tinker agent, designed to function as a guide for museum exhibits. They focus on making sure that the user’s first impression of the agent is a positive and friendly one, so that they continue to interact with the agent. Expression is one of the behaviors that Tinker uses to convey such a personality. McRorie et al. (Citation2012) created four different agents featuring different expressions, enabling each of them to express their own personality.

On the healthcare side, the virtual therapist by Grolleman et al. (Citation2006) focuses on facial expressions to try and increase feelings of empathy and acceptability. The Ellie agent (DeVault et al., Citation2014) mentioned earlier also uses expression alongside gaze with the goal of increasing rapport. Kang et al. (Citation2012) focused on increasing intimacy with an agent, which is important for users to feel comfortable when disclosing sensitive information to a virtual counselor. As a whole, the use of expression enables agents to engage users on an affective level, making them more capable social actors.

4.4. Proxemics

Although not as common, a few agents exist that follow the rules of proxemics when interacting with others. The museum guide Tinker (Cafaro et al., Citation2016), mentioned earlier, uses more than just facial expression to engage users. Tinker also obeys the rules of interpersonal distance, ensuring that the interaction does not feel awkward by invading in intimate space or staying too far away to communicate. Edith (Andrist, Leite et al., Citation2013), an agent designed to interact with groups of children, typically stays within an acceptable social distance when speaking to the whole group but moves closer as needed to engage with an individual. The chat agents by Isbister et al. (Citation2000) also employs similar group dynamics. When engaging multiple people, the agents will move back to ensure that they are seen by all parties. In contrast, the agents will turn and move closer when their speech is directed to an individual. Similarly, Pejsa et al. (Citation2017) developed a footing model for agents to orient and engage users in virtual environments. Responding to proxemic social cues is also a way for agents to come across as more personal and aware (Garau et al., Citation2005).

In humans, the rules of proxemics also differ based on cultural norms. Distinct cultures have varying comfort levels regarding interpersonal distance. Virtual agents have also been designed that mimic these behaviors. Kistler et al. (Citation2012) created agents that exhibited proxemic behaviors for both individualistic and collectivistic cultures. As a result, users had different responses to the agents based on their own cultural standards.

4.5. Posture

As with proxemics, posture is also not as commonly used compared to other NVC types such as gaze and gesture. However, there are some full-body agents that do assume different postures while interacting with a user. The therapist agent Ellie (DeVault et al., Citation2014) is one agent that increases rapport through appropriate body postures (), in an attempt to emulate what is done by real therapists. Gratch et al. (Citation2006), Huang et al. (Citation2011), and Kang et al. (Citation2008) also focus on rapport in conversation and interview settings. Their agents use posture shifts alongside other types of NVC to increase users’ feelings of rapport and make the agent feel more natural.

4.6. Behavioral mirroring

Mirroring is also not as prominent in virtual agents. However, researchers have developed a few agents that mirror the nonverbal behavior of users. For example, the Rapport Agent by Gratch et al. (Citation2006) implements posture, gaze, and head motion (nod and shake) mimicry as a form of listening feedback. The goal is that the inclusion of these behaviors increases a user’s sense of rapport with the agent, much like in humans. Similarly, Stevens et al. (Citation2016) created an agent that would mimic a user’s facial expressions while speaking, to increase feelings of lifelikeness and likability. Other agents emulate the expressiveness of gestures (Bevacqua et al., Citation2006; Caridakis et al., Citation2007), mimic head motions (Bailenson & Yee, Citation2005; Bailenson et al., Citation2008), and gestures (Castellano et al., Citation2012).

5. Effects of NVC in virtual agents

In the previous sections, we highlighted the benefits of NVC in humans and how agents attempt to emulate this behavior. In this section, we focus on how the NVC produced by agents affects users, specifically, the different roles it plays and the corresponding outcomes and effects. For example, many agents use facial expressions with the goal of conveying emotion, but do users actually pick up on these signals and correctly identify the agent’s emotion? This section aims to answer this question and understand the outcomes of NVC in virtual agents. provides a summary of the different purposes and objectives of agent NVC identified in our review. In the following subsections, we describe these in detail, highlighting the outcomes and effects that NVC has on users.

Table 2. Papers grouped by type and purpose of virtual agent NVC

5.1. Managing conversation

To start, as in humans, NVC in agents can be used to manage a conversation, which affects how users perceive an agent’s engagement and helpfulness. For instance, Andrist, Leite et al. (Citation2013) showed how NVC can be effective for managing conversation in groups. They conducted a study where an agent would try to manage a group of children. The agent would use multimodal cues (including gaze, gesture, and proxemics) to pass the speaking floor around so that each child would have an equal opportunity to contribute. The study showed that an agent that includes all three cues resulted in a more evenly managed group with lower variance in turns taken. Compared to an agent that only used vocal cues or a subset of the nonverbal cues, the agent that used all three was most successful in managing the conversation without decreasing the enjoyment of the agent.

Research has also shown how NVC in agents can also be effective in communicating feedback such as attention. For example, Bailenson et al. (Citation2002) showed that the ability for head movements to communicate gaze can be transferred into virtual agents with similar effects. They represented users with motion-tracked avatars in a virtual environment and showed that the use of communicative head movements still enriched interaction and decreased the proportion of speech needed (as gaze could be used to nonverbally communicate attention and intention). Turning the problem around, Buschmeier and Kopp (Citation2018) created an “attentive” agent that responded to the user’s levels of attention. Their agent that was able to adapt its communication based on human attentiveness and verbal/nonverbal feedback (e.g., head gestures). They compared this agent against one that did not respond to these inputs. Surprisingly, they found that humans naturally produced more of these feedback behaviors for the attentive agent. This points to the possibility that, at some level, users were aware of the fact that the agent could understand their behaviors, and thus communicated more in these modalities. In their study, subjective ratings reflected that users were aware of the agent’s capabilities: they rated the agent higher in terms of understanding them and feeling attentive. These are key examples of how agents can effectively use NVC to perform different conversational functions. However, recall that agents also perform nonverbal cues with less functional, and more expressive purposes.

An early study by Cassell and Thorisson (Citation1999) aimed to understand which type of cues were more important in human-agent interactions. They separated nonverbal feedback into two categories: emotional, which focuses more on conveying affect (through facial expressions and other body language), and envelope, which focuses on conversational functions (through gaze, gestures, etc.). By their definition, envelope feedback is feedback that does not add to a conversation, it merely coordinates it. As an example, averting one’s gaze to signal that they are taking a turn, or expressing attention while the other is speaking would be considered forms of envelope feedback. They conducted a study asking participants to interact with an agent that exhibited content-only feedback (speech without NVC), content with emotional feedback, and content with envelope feedback. Users rated the agent with envelope feedback as more helpful and efficient than the one with emotional feedback.

5.2. Expressing a unique personality

In contrast to this work is the idea that emotional feedback/behaviors are also important. Agents often need to focus on changing users’ perceptions of them. For instance, personality is important in virtual agents, especially when agents are targeted toward healthcare and therapy applications (DeVault et al., Citation2014). An agent should ideally have an appropriate personality and behavior for its given context. Giving a computer system a face allows it to take on a social role (Reeves & Nass, Citation1996). In doing so, people naturally view an agent in light of different social attributes: for instance, people view a computer with a face as more likable (Walker et al., Citation1994) and often ascribe specific personalities to it (Sproull et al., Citation1996). They change their attitude toward the more human-like interface than a bare system. By extension, how an agent behaves, both verbally and nonverbally, can have a large influence on its perceived personality (Cafaro et al., Citation2012).

A number of studies have focused on studying the interplay between NVC and personality. Although personality and emotional traits are primarily delivered through speech and facial expression, in some cases, even behavior alone is enough to convey a sense of emotion and personality (Clavel et al., Citation2009). As an example, Neff et al. (Citation2010) looked at correlations between different behavioral characteristics against perceptions of introversion and extroversion. They found that tweaking different gesture parameters, such as speed and the range of motion used, changed the perceived personality of an agent. In their study, agents that displayed gestures where limbs stayed close to the body were rated as more introverted compared to gestures that extended further. Performing gestures more often also increased senses of extroversion. McRorie et al. (Citation2012) extended this to show how additional characteristics of face and gesture (such as gesture speed, spatial volume, energy, etc.) can also express the traits of extraversion, psychoticism, and neuroticism. A user’s perceptions of extraversion and affiliation are also affected by the inclusion of smile, gaze, and proxemics (Cafaro et al., Citation2012). When studying first impressions of virtual agents, Cafaro et al. (Citation2012) found that while proximity mainly affected perceptions of extraversion, smiling behavior dominated positive impressions of friendliness, and gaze had a smaller influence on personality.

Personality also affects the perceived warmth and competence of an agent. When analyzing behaviors to implement in agents, Biancardi et al. (Citation2017a) analyzed a corpus of human-human interactions and found a correlation between gesture use and personality. They annotated videos of human experts sharing knowledge with novices and found that the use of gestures was associated with both higher senses of warmth and competence, and smiling was associated with higher senses of warmth but lower competence. This was also found to extend to virtual agents in a follow-up study (Biancardi et al., Citation2017b). Bergmann et al. (Citation2012) similarly emphasize how including gestures helps improve a user’s first impressions of an agent when interacting with it for the first time. The researchers measured responses against the social cognition dimensions of warmth and competence. When gestures were present, feelings of competence increased, and likewise decreased when gestures were absent, aligning with the findings by Biancardi et al. (Citation2017a).

Although these studies show how personality can be attributed to agents through their use of NVC, the appropriateness of those behaviors is equally important. In addition to their earlier findings, Cafaro et al. (Citation2016) also showed how the appropriateness of an agent’s behavior is crucial in forming first impressions of the agent. They explained how first impressions set a baseline for the human’s expectations and is influential in deciding whether or not they should continue to interact with the agent. Through the use of both verbal and nonverbal behaviors (including proxemics, gaze, and smiling), an agent can express more relational capabilities such as showing empathy and friendliness (Cafaro et al., Citation2016). In their study, Cafaro et al. found that the use of these appropriate behaviors resulted in increased time spent with the agent, with greater reported values of user engagement and satisfaction. In addition, Kistler et al. (Citation2012) further emphasized the importance of appropriate behaviors in an agent when they showed how people felt that agents that displayed nonverbal behavior aligning with their own cultural expectations were more appropriate.

5.3. Achieving a sense of copresence

Social presence, or copresence, is a sense of intimacy and immediacy between people (Short et al., Citation1976). As virtual agents strive to be social actors, it is vital that they provide a sense of copresence to the user. Research has focused on how both agent appearance and agent behavior create a sense of presence.

For example, Bailenson et al. (Citation2005) studied how agent copresence is affected by appearance and behavior with regards to head movements. They compared how users’ ratings of copresence were affected by both an agent’s realism of motion and realism of appearance. They emphasize that the two are strongly related. Participants felt that for the least human-like agents, the least realistic motions were appropriate. Likewise, for the more human-like agents, more realistic motions were appropriate. They found that a mismatch between appearance and motion would lead to lower ratings and sense of copresence.

Even different degrees of agency can affect presence. To illustrate, Nowak and Biocca (Citation2003) compared different agents in a virtual environment, varying their agency (such as having a speech-only agent) and anthropomorphism (robotic vs. human-like agent). They argue that an agent’s appearance alone can increase a user’s sense of copresence, just through the use of embodiment. In their study, people would naturally assume an agent as anthropomorphic unless the agent presented evidence to the contrary. For example, an agent without a visual appearance felt more humanlike than one with an obviously robotic appearance. However, Guadagno et al. (Citation2007) provide an interesting nuance to this argument. They establish that higher levels of behavioral realism (not just a realistic appearance) produce higher levels of social presence. These findings show the importance of an agent’s behavior (not just appearance or linguistic capabilities) and how users’ incoming beliefs, perceptions, and expectations play a large role as well.

The way an agent responds to and interacts with users can also affect presence. An agent that provides realistic nonverbal feedback to a user’s actions feels more copresent. Garau et al. (Citation2005) studied how people perceived agents based on their movements and responsiveness with regards to proxemic behavior. For example, a responsive agent would look at the user when the user walked close to it in a VR environment. The researchers’ goal was to see if agents would be treated as social entities. They asked participants to walk through a virtual environment that with a virtual agent in it, without explicitly asking them to interact with the agent. They found that users reported a higher sense of personal contact and copresence when interacting with the agents that would recognize the user’s behavior and respond accordingly. Agents that spoke led users to engage with them more often. The non-engaging, unresponsive agents felt “ghostlike” as their behavior was not influenced by the user’s actions. Behavior itself can affect how users socially perceive an agent even without speech. To quote the authors, “On some level people can respond to agents as social actors even in the absence of two-way verbal interaction.” This example points to the power of NVC in human-agent interactions.

5.4. Increasing rapport, trust, and empathy

Related to the sense of social presence is the concept of rapport. Rapport refers to the coordination and relationship between individuals and is related to a mutual sense of trust. In humans, rapport is strongly related to nonverbal behaviors, including expressions of positivity (like smiling), shared attention, and coordination (Tickle-Degnen & Rosenthal, Citation1990). Maintaining rapport with a user is important for agents in therapy/healthcare applications (DeVault et al., Citation2014; Gratch et al., Citation2006; Kang et al., Citation2012; Nguyen & Masthoff, Citation2009), but also teaching (Baylor & Kim, Citation2008, Citation2009; Rickel & Johnson, Citation1999, Citation2000) and collaboration when completing tasks (Andrist et al., Citation2017).

The Rapport Agent by Gratch et al. (Citation2006) is a prime example of an agent that leverages NVC to increase rapport. In a lab study using the Rapport Agent, Gratch, Wang, Gerten et al. (Citation2007) showed how differing agent responsiveness affects human behavior and feelings of rapport. They tested different conditions: a face-to-face condition where two participants spoke directly with each other, a mediated condition where the “listener” participant was represented by an avatar instead, a responsive agent that displayed nonverbal listening behavior based on perceived speech and head movements from the user, and a prerecorded avatar that moved, but not in response to any user input. Surprisingly, Gratch et al. found that the responsive agent led to the highest amount of engagement and that many participants believed they were interacting with an avatar representing a human rather than an autonomous agent. Their results showed how the use of NVC can greatly improve the effectiveness of a virtual agent.

As with the agents described in earlier sections (Buschmeier & Kopp, Citation2018; Cassell & Thorisson, Citation1999; Garau et al., Citation2005), Gratch et al. (Citation2006) found that responsiveness and feedback is key to an effective agent interaction. They emphasize that the contingency of feedback, not just the prevalence of behavioral cues and animation is important for creating feelings of rapport between a human and an agent. A responsive agent may even be better at creating feelings of rapport than a human (Gratch, Wang, Okhmatovskaia et al., Citation2007). Kang et al. (Citation2008) continued these studies by looking at users’ feelings of shyness and self-performance when interacting with contingent agents. They found that social anxiety decreased with an increase in the contingency of the agent’s nonverbal behaviors. Greater anxiety led to decreased feelings of rapport and worse performance with the non-contingent agent. Huang et al. (Citation2011) later improved on the Rapport Agent by using collected data of people interacting with prerecorded videos and implementing their specific behaviors into the agent. The agent also incorporates partial mirroring of the other’s behavior. They found that this agent improved feelings of rapport and was better overall in terms of naturalness, turn-taking, etc.

Related to rapport is the notion of trust. As an example, Cowell and Stanney (Citation2003) focused on how nonverbal behavior can create trust and credibility in virtual agent interactions. They designed an agent that could portray both trusting (e.g., increased eye contact) and non-trusting (e.g., gaze aversions, negative facial expressions) behaviors. Users presented with the trusting agent rated the agent as more credible and were more satisfied with the interaction than the non-trusting agent, with which they rated as less trustworthy. Thus, it is important to use proper nonverbal behaviors for an agent that needs the user to trust them.

Mirroring and mimicry can also increase the trustworthiness and persuasiveness of an agent, as shown by Bailenson and Yee (Citation2005). Bailenson and Yee (Citation2005) created an agent that would mimic a person’s head movements as the agent delivered a persuasive message. The mimicking agent was more effective than one that did not mimic. However, when the mimicry was too obvious, people realized that the agent was copying them (Bailenson et al., Citation2008). The detection of mimicry decreased ratings of trust and friendliness. Their findings indicate that there may be a threshold to mirroring, balancing the potential benefits with the possibility that the behavior could backfire.

The effects of mirroring and trust may also depend on context. Verberne et al. (Citation2013) evaluated mimicry in two scenarios: one that was more competence-based (trusting that the agent could do the job) and one that was more relational (trusting that the agent’s intentions were pure). In the competence-based scenario, participants had to choose if they would trust the navigational skills of an agent to their own. In the relational-based scenario, participants had to choose whether or not to trust an agent with investing their money. For both scenarios, Verberne et al. compared the use of a head-motion mimicking agent vs a non-mimicking agent. The results from the experiment showed that although people liked and trusted the mimicking agent overall, the effects were more pronounced for the competence-based scenario than the relational one. Their results imply that, although mirroring behaviors can increase trust, the effects may be tempered by the context and experiences a user has with the agent.

An agent that displays empathetic emotions can also increase senses of trust and empathy. For example, Nguyen and Masthoff (Citation2009) developed an agent that intervenes to alleviate a user’s negative mood. When evaluating their system, they found that an agent that displayed empathic expressions led to higher positive ratings and likability from users. They establish that a human-like representation affords empathic capabilities and increases the sense of emotional intelligence in an agent. Likewise, Kang et al. (Citation2012) showed how the use of specific nonverbal behaviors in a virtual counselor increased user perceptions of intimacy. A virtual counselor would require self-disclosure from people, thus requiring a degree of intimacy. Kang et al. first studied natural human behavior to identify eye gazes, head nods, head shakes, tilts, pauses, and smiles, which they then implemented into an agent. Participants that saw the agent expressing these emotional behaviors attributed a higher level of intimacy to the interaction. Even head movements alone (without any facial features/expressions) are able to increase trust and induce more disclosure of information from people (Bailenson et al., Citation2006), showing just how important NVC is at establishing trust.

5.5. Enhancing the efficacy of collaboration and learning

NVC also plays a large role in agents that teach and agents that collaborate with people to accomplish tasks. Research in these areas mainly focuses on studying how the inclusion of NVC improves memory recall and task performance. In humans, recall that gaze is an efficient way to coordinate attention between individuals (Brennan et al., Citation2008). Andrist et al. (Citation2017) showed how gaze can be also used for coordinating actions in a virtual agent. They created a model of gaze behavior based on prior literature and implemented these behaviors into an agent that cooperated with users to build sandwiches. The researchers evaluated their agent in a user study, asking participants to collaborate with the agent to complete sandwich-building tasks (). Andrist et al. found that an agent that responded to and produced gaze resulted in a faster task completion time. Additionally, the agent was rated higher in terms of cognitive ability and competence, also resulting in higher amounts of shared gaze and mutual gaze. These results show how an agent’s behavior can affect both users’ performance and perception of the agent.

Figure 3. A user interacting with an agent that employs gaze cues to help coordinate and direct the user to the intended target in a collaborative sandwich-building game. (Image from Andrist et al., Citation2017)

For pedagogical/teaching agents, NVC is often used to add richness and emphasize concepts when delivering information (Andrist et al., Citation2012a; Baylor & Kim, Citation2008, Citation2009; Bergmann & Macedonia, Citation2013; Mayer & DaPra, Citation2012). For instance, Andrist et al. (Citation2012a) created a presenter agent that used referential gaze to increase information recall in users. They also showed how affiliative (i.e., mutual) gaze increased feelings of connectedness, but failed to show an increase in recall performance. Similarly, Baylor and Kim (Citation2008) looked at the interplay between an agent’s nonverbal behaviors and its teaching style. They found that the perception of behavior differed depending on the way a course was taught. For teaching attitudinal and persuasive information, facial expressions were valued more than deictic gestures, which were valued more in procedural, linear lecturing. This was followed up (Baylor & Kim, Citation2009) with a deeper analysis of gestures and teaching. The authors theorize that because expressions and gestures were both types of visual information, they may have interfered with users’ working memory (cognitive load theory, Chandler & Sweller, Citation1991). When inappropriate, nonverbal cues can even evoke negative responses (Baylor & Kim, Citation2009); thus, agent behaviors must match the intended application and content to avoid detrimental or non-effects.

This theme is echoed by other research. Bergmann and Macedonia (Citation2013) looked at the use of iconic gestures (those that illustrate words by painting a nonverbal picture) in agents. People were asked to learn foreign words from an agent; the researchers measured their learning performance and memory recall of the words. They found that including iconic gestures resulted in better performance than a control with no gestures. Surprisingly, Bergmann and Macedonia found that for long-term recall, the agent was more effective than a human when teaching higher-performing students (i.e., those who scored well on a short-term recall test). However, a human was better for teaching low-performing students. They speculate that the agent’s behavior may have contributed additional cognitive load, which distracted the low-performing students, leading to a lessened effect. This finding emphasizes how important it is for an agent’s behaviors to appear natural and appropriate.

Although gesture has been established to be beneficial for learning in humans (Cook & Goldin-Meadow, Citation2006; Goldin-Meadow & Alibali, Citation2013; Gorham, Citation1988; Novack & Goldin-Meadow, Citation2015), its effects are inconsistent in agents, with studies often unable to find a significant effect of NVC on learning outcomes (Baylor et al., Citation2003; Buisine et al., Citation2004; Buisine & Martin, Citation2007; Frechette & Moreno, Citation2010; Krämer et al., Citation2007; Wang & Gratch, Citation2009). To illustrate, Wang and Gratch (Citation2009) used the Rapport Agent in a sexual harassment training context. The Rapport Agent would take users through a course and then they were asked to retell the information they learned. The researchers saw that including immediate feedback in the agent (in the form of nodding when spoken to, or mimicking the gaze of the speaker) helped increase feelings of rapport and helpfulness. The agent also increased subjective reports of self-efficacy (which may in turn help with learning) but was not able to significantly affect recall performance.

To further study the effects of gesture in agents, Buisine et al. (Citation2004) looked at different gesturing strategies for agents when teaching or presenting information. Specifically, they looked at redundant (communicates same information as speech), complementary (communicates additional information alongside speech), and speech-specialization (gestures not intended to convey task-specific information; e.g., touching one’s face) gestures and evaluated users’ subjective impressions and ability to recall information taught by an agent. They found that gestures did not enable users to recall information any better, although users did rate the redundant and complementary gesturing agents higher. Essentially, there was only a difference in likability, not actual performance. A later study (Buisine & Martin, Citation2007) adjusted the behaviors to fix negatively perceived gestures from the prior study. This time, they found that speech-redundant gestures resulted in significantly higher recall of verbal information and also had higher ratings of quality and expressiveness. Multimodal redundancy may improve a user’s social perception of an agent, viewing it as more likable and with a positive personality.

However, when designing pedagogical agents, likability is not as critical as an agent’s ability to teach. In a comparison of agents that gestured against agents that did not, Krämer et al. (Citation2003) found a surprising result. Users rated agents that used gesture as significantly more entertaining but less helpful than agents which did not gesture. They speculated that the gestures may have been too pronounced and therefore caused an adverse effect in users, echoing the cognitive load issues described earlier. It seems likely that the context of the agents may also have played a role. The agents were designed to provide a human-like interface that controlled a TV/VCR system. Users may have seen the use of gestures as too unnecessary, given the limited conversation needed to operate the functions of a VCR.

Frechette and Moreno (Citation2010) also conducted a study to look at learning performance when instructed by a virtual agent. In their study, they looked at information recall and comprehension when learning about planetary systems. They compared agents that displayed combinations of affective facial expressions and cognitive thinking gestures against those that did not. They also compared a version of the teaching system with no agent. They found no significant differences between the agents, with the exception that affective nonverbals (such as facial expressions) resulted in lower performance than just a static, non-animated agent. This supports the criticism that agent NVC may serve as a distractor or may be counterproductive and not help learning outcomes. This may also be related to the appropriateness of the behaviors: affective behaviors may not have been proper for this type of teaching style and thus accrued more cognitive load, affecting the results. Overall, research has been inconsistent in determining the effectiveness of NVC in teaching agents.

6. Developing a virtual agent that uses NVC

In order to understand the challenges with creating a virtual agent that uses NVC, we must also understand how an agent is developed, in terms of the technical challenges that are inherent to such a complex system. Based on our survey, we identified common themes among many of the virtual agent systems described above that underlie the implementation of NVC in agents. In this section, we describe the general components that go into a virtual agent that uses NVC and how the individual subcomponents, or modules, are developed and integrated together. Additionally, we detail the methods used to understand and author agent behaviors, comparing the benefits and challenges of each approach.

6.1. What makes up a virtual agent?

To understand the design of virtual agent systems, we must first look at how dialogue systems are designed, or the forerunner to the embodied conversational agent. Spoken dialogue systems have traditionally followed an architecture that incorporates six components, or modules, that divide the system into logical parts (Jurafsky & Martin, Citation2000). First and foremost, these include input modules both to recognize words spoken by a user (speech recognition) and to parse the intent of the speech (natural language understanding). From there, a system needs to keep track of the current state of the conversation and plan the next course of action a system should take (dialogue manager). The dialogue manager may also need to perform specific actions by interfacing with another application (task manager). Following this comes the output modules to plan what a system should say next (natural language generation) and, finally, convert the text back into audible words for the user to hear (text-to-speech synthesis).

For the most part, virtual agents have followed a similar architecture. This is mainly due to their history as extensions of dialogue systems and their still prevalent need for speech. Virtual agent architectures include the modules listed above with the addition of nonverbal behavior modules to support the added display of an avatar. These may include modules to handle animation of the avatar’s behaviors (e.g., producing gestures or displaying facial expressions) as well as modules for perceiving and recognizing any nonverbal input from the user.

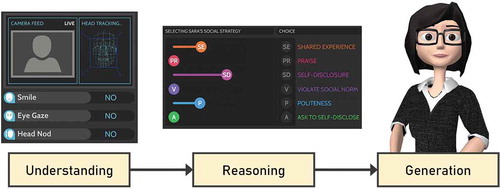

A typical virtual agent architecture is best described by the pattern laid out by Matsuyama et al. (Citation2016) in the recent development of an agent called SARA. They condense the architecture into a simple pipeline consisting of three main phases: understanding, reasoning, and generation (). Below, we go deeper into each phase, describing the submodules involved in each phase and how agents have incorporated them.

Figure 4. SARA agent and the three phases that make up her architecture, going from understanding (perceiving the user and interpreting their input) to reasoning (deciding on conversational strategy based on those inputs) and finally to generation (production of speech and NVC in an animated avatar) (Images modified from Matsuyama et al., Citation2016) Images courtesy Justine Cassell, Carnegie Mellon University

6.1.1. Understanding phase

The understanding phase deals with converting the raw input from the user into a clean semantic form (such as the pertinent information in speech or the intent of a gesture, etc.) that the agent can easily parse. First, recognition modules use sensors to obtain input/information from the user (Jurafsky & Martin, Citation2000; Kopp et al., Citation2006; Matsuyama et al., Citation2016). For instance, a speech recognition module listens for a user’s utterance from a microphone and converts it into text. Similarly, a facial expression recognition module tracks faces through a camera and uses computer vision to identify distinct expressions. After recognition, semantic understanding modules parse the inputs to infer the user’s intent behind each utterance and nonverbal behavior. The utterance text is parsed by a natural language understanding module to extract the user’s communicative intent. Likewise, recognized facial expressions are used to infer the user’s current mood.

To start, developing NVC recognition modules is difficult (Kopp et al., Citation2004). Recognizing and interpreting user behavior is a large research effort on its own, and often intersects with the fields of machine learning, computer vision, natural language processing and similar areas of expertise (Gratch et al., Citation2002). The difficulty lies in the challenge of, first, recognizing raw input from the user and, second, mapping those inputs to semantic intents (Kopp et al., Citation2004). Both input recognition and intent parsing modules require training machine learning classifiers (such as decision trees or support vector machines) or relying on heuristic models derived from psychological and communication literature.

Fortunately, for input recognition, researchers have utilized off-the-shelf components and existing recognition systems to help simplify parts of this process. As an example, Anderson et al. (Citation2013) used the Microsoft Kinect sensor to capture human gestures, gaze, and facial expression. They developed an interview coach that used recognition to detect detrimental social cues during an interview. Researchers have also relied on the use of wearable sensors (Feese et al., Citation2012; Terven et al., Citation2016; Thórisson, Citation1997), motion sensing cameras (Cassell et al., Citation1999; Morency et al., Citation2006), gaze trackers (DeVault et al., Citation2014; Thórisson, Citation1997), facial expression libraries (Burleson et al., Citation2004; DeVault et al., Citation2014), and motion capture systems (Anderson et al., Citation2013; Lance & Marsella, Citation2009) to fill the need for recognition.

Although these systems convert user input (raw speech/motion) into standardized and manageable forms (text, gesture description, features, etc.), the issue remains on how to process this data and extract the user’s semantic intent. It is one thing to understand the words that are being spoken, but another to actually comprehend them. In dialogue systems, this is the job of the natural language understanding module (Jurafsky & Martin, Citation2000). Virtual agent systems that use NVC need a similar understanding module, but for nonverbal behaviors.

Interpreting nonverbal behaviors requires a model that maps input behaviors to intents, either derived from prior work or mined using collected data. As an example, for the interview coach mentioned above, Anderson et al. (Citation2013) trained a Bayesian network on the multimodal input in order to classify social cues. The agent would detect the user’s affective state as well, progressing with the interview when appropriate cues were detected. Another example is one by Wang et al. (Citation2020), which featured a model that estimated users’ impressions of an agent by monitoring their facial expressions and leveraging this information to change how users felt about the agent. Other agents recognize gaze behaviors through complex models derived from psychological research (Andrist et al., Citation2017; Huang et al., Citation2011) or trained using data from user studies (Morency et al., Citation2006). Bevacqua et al. (Citation2010) used a combination of trained models and heuristic rules to determine the state of the user and the conversation. Their rules were based on previous perceptual studies of human-human communication, which allowed them to accurately determine what a user’s intent was based on their behaviors.

Oftentimes, these recognized inputs (speech, expression, gesture, etc.) may not only be parsed individually, but also combined to determine complex intents. For instance, the SARA agent (Matsuyama et al., Citation2016) uses a combination of utterance, facial expressions, gaze, and other auditory and visual cues as input to a classifier that infers the user’s conversational strategy. Through the classifier, SARA estimates the current level of rapport with the user. She then strategizes how to respond with appropriate verbal and nonverbal behavior to increase the level of rapport. Similarly, the SimSensei agent (DeVault et al., Citation2014) tracks a user’s facial expressions, gaze, and fidgeting motions. By combining these inputs in the MultiSense system (Gratch et al., Citation2013), the agent is able to estimate the user’s level of anxiety and distress. This complex understanding of human behavior allows the agent to be more effective as a virtual therapist. Burleson et al. (Citation2004) created a complex “inference engine” that takes in data from a wide variety of sensors to accurately determine a user’s affective state. Another example is the engagement modeling by Dermouche and Pelachaud (Citation2019), who combined facial expressions, head gestures, and gaze to interpret the level of engagement from the user. Although multimodal recognition adds complexity to an agent, it allows the agent to interact in a much more human-like manner; human-human communication is often multimodal in nature (Quek et al., Citation2002).

6.1.2. Reasoning phase

The extracted intents produced by the understanding phase are fed into the reasoning phase to determine what the agent should do next. The reasoning phase deals with taking in the intents derived from the understanding phase and deciding what the agent should do next in the interaction. This typically involves any dialogue management or state-keeping in the system and represents the bulk of an agent’s intelligence. In a dialogue system, this is mainly the job of the dialogue manager module (Jurafsky & Martin, Citation2000). Although often not explicitly called a “dialogue manager” due to the addition of NVC, virtual agents utilize similar modules for deciding an agent’s next move in response to the user.

An agent’s actions may be determined by simple rule-based decisions (Cassell et al., Citation1999; Lee et al., Citation2007), a finite state machine (Matsuyama et al., Citation2016), or more complex AI networks (Cafaro et al., Citation2016; Pelachaud, Citation2017), among other methods. An agent’s intelligence may even incorporate task and domain knowledge, allowing it to teach specific subjects (Rickel & Johnson, Citation1999, Citation2000) or answer questions (Cassell et al., Citation1999; Thórisson, Citation1997). At a simple level, an agent may decide to directly proceed with dialogue (Anderson et al., Citation2013), show empathy (Nguyen & Masthoff, Citation2009), or even nod to indicate attention (Huang et al., Citation2011; Lee et al., Citation2007). Often, these actions are a direct result of user input. On a higher level, an agent may have its own goals or even personality that governs its actions, regardless of the user’s direct input.

For instance, the Max agent (Becker et al., Citation2004) introduces the concept of mood. Max features an internal model of overall mood and current emotion which can change over the course of an interaction. These moods are modeled after the pleasure-arousal-dominance (PAD) scale (Mehrabian, Citation1969), which is a way to describe temperament based on those three dimensions. Max’s mood dictates how the agent should interpret specific statements and respond. For example, he may get bored of an interaction or even become annoyed if the user says something offensive. This will cause him to start making different facial expressions and more assertive gestures. If the user continues their behavior, Max may even get angry and decide to leave the conversation until the emotion system “cools off.”

This notion of a simulated mental state is not unique to Max. Several researchers have adopted and advocated for the use of an internal agent state (in the form of goals, attitude, etc.) that drives behavior (Becker et al., Citation2004; Gratch et al., Citation2002; Lee et al., Citation2007; Poggi et al., Citation2000, Citation2005; Traum et al., Citation2008). One notable example is the negotiation agent created by Traum et al. (Citation2008). The agent must solve problems and gain a user’s trust, all while managing the conversation. The agent’s internal goal is to persuade; thus, it tries to actively understand the beliefs and goals of others while employing different strategies for negotiation based on changes in their behavior.

Pelachaud et al. (Citation2002) argue that agents should have an internal model of belief, desire, and intent (BDI), presenting their Greta agent as an example. Greta utilizes a complex dynamic belief network to represent BDI. The network governs when to show emotion, when to hide them, and changes emotional state over time. All verbal and nonverbal behaviors are a result of the agent’s internal state of mind RPPC03. (Rosis et al., Citation2003). Poggi et al. (Citation2005) argue that including a BDI model is necessary for agents to act natural and believable. They emphasize that the production of both speech and gesture should come from a common intent. These concepts align with the theory that, in humans, gesture is directly tied to the production of thought (McNeill, Citation1992).

Thus, NVC can even be considered a window into the internal state of an agent. That is the principle Lee et al. (Citation2007) considered when creating the Rickel Gaze Model, a novel way for agents to subtly communicate their intent. Recall that gaze can be used to give feedback, direct attention, and even pass/hold the speaking floor. The Rickel Gaze Model drives an agent’s gaze behaviors (e.g., looking away) for conveying its internal state (e.g., planning an utterance). These behaviors allow for giving realistic feedback that is valuable for agent-human communication.

6.1.3. Generation phase

The generation phase deals with converting the agent’s next action or intent into real audio and visual output that the user can perceive. This can basically be thought of as the reverse of the understanding phase; here, we start with an intent and convert it back into speech and nonverbal behaviors. For dialogue systems, this consists of two modules, natural language generation, which takes in an agent’s intent and generates the corresponding utterance text, followed by text-to-speech, which takes in the text and produces actual audible speech (Jurafsky & Martin, Citation2000). A virtual agent system must do the same but include the production of NVC in addition to speech.

Instead of a language generation module, agents utilize a behavior generator that takes in the agent’s intent and determines the type of NVC to produce, and when to produce it (Kipp et al., Citation2010). The module typically produces a description of the behavior, with parameters that dictate how they are to be performed. The earliest agents used the XML format to store this information, but replacements tailored to virtual agent applications have since been developed (Badler et al., Citation2000; Heloir & Kipp, Citation2009; Kipp et al., Citation2010; Kopp et al., Citation2006; Kranstedt et al., Citation2002). The abstract description of the behavior is then passed to a behavior “realizer,” a module that governs the kinematics, animation, and synchronization of behaviors in the agent’s avatar (Kipp et al., Citation2010).

Behavior generation can be as simple as manually scripting intended NVC into the dialogue. For instance, Kranstedt et al. (Citation2002) introduced a markup language called MURML (Multimodal Utterance Representation Markup Language) that allows text to be tagged with gestures. The tags describe the specific hand and arm motions that comprises each gesture and when to perform them in alignment with speech. However, manually authoring dialogue scripts and annotating them with gestures is time consuming and may not represent natural behavior, as they are subject to the author’s best judgment. Instead, researchers have developed complex systems that automatically generate intended behaviors with or without accompanying text (Bergmann & Kopp, Citation2009; Cassell et al., Citation1994, Citation2004; Ravenet et al., Citation2018; Salem & Earle, Citation2000).

The BodyChat system by Cassell and Vilhjálmsson (Citation1999) was an early system designed to automatically animate avatars based on different conversational behaviors. Although their system was designed for human-controlled avatars, not autonomous agents, it was capable of animating gaze, gesture, and facial expressions to match the user’s inputted text. They later introduced the Behavior Expression Animation Toolkit (BEAT) (Cassell et al., Citation2004). The BEAT system takes in text, parses it to determine clauses, objects, actions, etc., and suggests different nonverbal behaviors that would be appropriate. The capabilities range from simple “beat” gestures that sync with each spoken word to performing iconic gestures that illustrate different actions.

These methods rely on parsing text to determine the underlying communicative intent and then applying nonverbal behaviors that correspond to those behaviors. Lee and Marsella (Citation2006) analyzed video clips of human interactions to create a technique that automatically generates behaviors based on speech. Their technique involved first parsing the given text to determine the type of utterance, such as an affirmation or interjection. Based on the type, they would then choose a nonverbal behavior based on behavior rules that they identified through the video clips. For example, the text “I suppose” would be classified as a “possibility,” which would produce head nods and raised eyebrows.

Other techniques generate gestures that convey spatial information based on context. For instance, Bergmann and Kopp (Citation2009) created an agent that automatically generates gestures for giving directions, based on the spatial relationship of the agent to locations in real life. Another system, developed by Ravenet et al. (Citation2018), would create appropriate metaphoric gestures (i.e. gestures used to try and depict abstract ideas) from user speech. Their technique focused on mapping words to physical representations and gestures (). If an agent said the word “rise,” it would make a rising gesture to accompany it. If it talked about a table, that would result in a broad “wiping” gesture representing the table’s surface.

Figure 5. A person (left) describing two ideas by gesturing separately with each hand; when given the same speech text, a virtual agent (right) automatically produces similar gestures. (Image from Ravenet et al., Citation2018)