ABSTRACT

Iterative design, implementation, and evaluation of prototype systems is a common approach in Human-Computer Interaction (HCI) and Usable Privacy and Security (USEC); however, research involving physical prototypes can be particularly challenging. We report on twelve interviews with established and nascent USEC researchers who prototype security and privacy-protecting systems and have published work in top-tier venues. Our interviewees range from professors to senior PhD candidates and include researchers from industry. We discussed their experiences conducting USEC research that involves prototyping, their opinions on the challenges involved, and the ecological validity issues surrounding current evaluation approaches. We identify challenges faced by researchers in this area, such as the high cost of field studies for evaluating hardware prototypes, the scarcity of open-source material, and resistance to novel prototypes. We conclude with a discussion of how the USEC community currently supports researchers in overcoming these challenges and where this support could be improved.

1. Introduction

Prototyping is an integral part of human-centered research and design (Fallman, 2003; Ogunyemi et al., 2019; Wobbrock & Kientz, 2016). Wobbrock and Kientz (2016) argue that one of the main types of research contribution in Human-Computer Interaction (HCI) is the artifact contribution, in which researchers design inventive prototypes, such as new systems, tools, and techniques, that demonstrate novel, forward-looking possibilities or generate new insights through their implementation and evaluation (e.g., (Baudisch et al., 2006; Greenberg & Fitchett, 2001; Ishii & Ullmer, 1998; Lopes et al., 2017, 2018)). Usable Privacy and Security (USEC) research is no exception. USEC researchers have brought forth a plethora of novel usable privacy and security systems that extended the state of the art and facilitated new insights (e.g., (Hayashi et al., 2012; Krombholz et al., 2016; De Luca et al., 2014; De Luca, Von Zezschwitz, Nguyen et al., 2013)); some of these have seen wider adoption, such as PassPoints, Pass-Go, and DAS, which inspired Android’s lock patterns (Jermyn et al., 1999; Tao & Adams, 2008; Wiedenbeck et al., 2005). At the same time, USEC researchers have argued for the importance of human-centered design since the 1970s, when Saltzer and Schroeder (1975) outlined that security protection mechanisms require “psychological acceptability.” This position was taken further by researchers from both the security and HCI communities (Adams & Sasse, 1999; Whitten & Tygar, 1999; Zurko & Simon, 1996).

Conducting research that involves prototyping comes with unique challenges, such as deploying hardware in ecologically valid contexts and running evaluations with adequate sample sizes, that hinder such research. Our work provides: 1) the first interview-based insight into the challenges USEC experts face when designing, implementing, evaluating, and publicizing research based on prototyping usable privacy and security systems, and 2) ways forward to support research in this direction at both the individual researcher and the wider community level. To uncover and better understand these challenges, we interviewed twelve expert and nascent USEC researchers from academia and industry who have made significant contributions to USEC research and whose work involved prototyping novel systems. Our interviewees include full/associate/assistant professors, researchers from large tech companies, consultants, and senior PhD candidates. Our work addresses the following two research questions:

• RQ1: What bottlenecks, if any, do USEC experts face when designing, implementing, and evaluating usable privacy and security prototype systems?

• RQ2: What does the USEC community need to better facilitate the transition of artifact contributions into practice?

We present 9 key challenges impeding artifact contributions in USEC, including challenges that have not been discussed in depth in prior literature, e.g., implementation challenges due to the scarcity of open-source material; difficulties conducting ecologically valid studies, especially when evaluating hardware-based usable privacy and security solutions; and the lack of publication venues that encourage novel, evaluated USEC systems. We propose five ways the USEC community can help researchers overcome these challenges, such as encouraging collaborations between academia and industry and across research groups, being open to novel evaluated solutions, and encouraging the development of new methodologies to cope with the high costs of ecologically valid field studies and the shortcomings of lab studies.

We aim to raise awareness of the existing challenges, start a critical discussion and self-reflection on how the USEC community operates, provoke change in how the community addresses work that involves prototyping USEC systems, and discuss the challenges voiced by our experts in light of neighboring communities such as HCI, Mobile HCI, and Ubicomp. The insights from USEC experts, coupled with the in-depth discussions presented in this work, should be valuable to the USEC community as well as to neighboring communities.

2. Background & related work

The term “usable privacy and security research” refers to research that touches both on human-factors work, such as human-computer interaction, design, and user experience, and on privacy and security issues, such as user authentication, e-mail security, anti-phishing, web privacy, mobile security/privacy, and social media privacy. Because the research is interdisciplinary by nature, it inherits the research approaches and challenges of all the areas it touches on. For example, many of its research methodologies are drawn from the HCI community (Garfinkel & Lipford, 2014), which has a rich history of user-based research. Yet many approaches need to be adapted to handle the sensitive nature of security and privacy work. For example, it is challenging to study ATM PIN entry in a way that does not break laws, endanger participants, or leak sensitive data while also ensuring that the evaluation is ecologically valid (De Luca et al., 2010; Volkamer et al., 2018). As a result, privacy and security researchers struggle to access “real” user data or must spend significant additional effort to obtain it. In this paper, we aim to identify the set of challenges that are particularly problematic for the subset of the USEC community that conducts prototyping-related research.

2.1. USEC research and its challenges

Past efforts have organized existing research in particular domains within USEC. Iachello and Hong (2007) outlined approaches, results, and trends in research on privacy in HCI. In their work, they analyzed academic and industrial literature published between 1977 and 2007. They described legal foundations and historical aspects of privacy, as well as the design, implementation, and evaluation of privacy-affecting systems. Acar et al. (2016) reviewed state-of-the-art USEC research, which has typically focused on end-users, and laid out an agenda to support software developers. A more general review by Garfinkel and Lipford (2014) covered the state of USEC research in 2014 and suggested future research directions. Their work highlighted that “only by simultaneously addressing both usability and security concerns will we be able to build systems that are truly secure” (Garfinkel & Lipford, 2014, p.vi), emphasizing the need for novel, well-evaluated systems that address both security and usability from the beginning of the design process.

Alt and Von Zezschwitz (2019) highlighted the need to develop study paradigms for collecting data while minimizing the effort required of both participants and researchers. Alt and Von Zezschwitz (2019) and Bianchi and Oakley (2016) also underlined that the fast pace of emerging technologies (e.g., wearables such as smart glasses and smart watches) comes with novel challenges that require rapid adaptation of user-centered usability, security, and privacy research. For example, while wearable devices can enable novel authentication techniques (e.g., (Chun et al., 2016; Liu et al., 2018)), they also require researchers to adjust their research methods to consider new contexts and privacy implications (Bianchi & Oakley, 2016). The book by Garfinkel and Lipford (2014) dedicates three pages of its introduction (pp. 4–6) to challenges that make USEC research hard, including conducting ecologically valid studies when the study itself primes participants, and designing in the presence of an adversary who is actively attempting to attack the user. USEC research has also been found to be challenging because security is often not users’ primary task (A. M. Sasse et al., 2001). Studying sensitive and private contexts also often requires additional effort and resources due to ethical and legal constraints, as highlighted in prior work (De Luca et al., 2010; Trowbridge et al., 2018).

While the works above provide valuable observations, most of them are drawn either from a single study or from a review of written works. Their focus is also often on capturing the current state of the field or providing structured, book-like observations for students. Work that captures common opinions and the typically less structured “hallway chatter” among USEC researchers is rarer. One of the few works in this direction is by M. A. Sasse et al. (2016), which reports on a recorded conversation among experts about the usability-security trade-off. Such works are valuable because they capture the attitudes of practitioners in the field more candidly. Our work aims to provide this kind of “hallway knowledge” view of the challenges faced by USEC researchers who design, develop, and evaluate prototypes.

2.2. A glance at USEC’s prototyping challenges

Building and testing prototypes is a common approach in USEC when the specifics of a design are likely to affect how users interact with it. Prototyping is commonly used in areas like authentication, where the physical design of the input mechanism can have a large impact on a user’s input speed and accuracy as well as on an attacker’s ability to remotely view and replicate their actions. The range of USEC prototypes we discuss below is meant to give readers an idea of the prototype systems available in USEC research. Note that people often have different expectations of what a prototype is. As a result, finding one definition that covers all research fields and prototype variants is challenging.

Everyone has a different expectation of what a prototype is. […] Is a brick a prototype? The answer depends on how it is used. If it is used to represent the weight and scale of some future artifact, then it certainly is: it prototypes the weight and scale of the artifact. - (Houde & Hill, 1997, p. 368)

While we are not aware of an existing overview of prototyping challenges in USEC, several researchers have commented on specific challenges they faced when designing and testing USEC-related prototypes. Reilly et al. (2014) presented a software toolkit for USEC research in mixed reality collaborative environments and emphasized that complex prototypes can be difficult to set up correctly. They also argued that their toolkit was limited by the functionality of the base platform they used (i.e., Open Wonderland, Footnote 1). Zeng and Roesner (2019) built a prototype smart home app to investigate how a smart home should be designed to address multi-user security and privacy challenges and what security and privacy behaviors smart home users exhibit in practice. In their work, they identified challenges introduced by the SmartThings API they used: activity notifications could not be used to attribute changes in the home state to particular third-party apps. Other limitations were introduced by the underlying operating system: persistent low-priority notifications were implemented only on Android, because the iOS notification center did not support them (Zeng & Roesner, 2019). Other works reported that the limitations of their prototypes resulted in lower ecological validity. Hundlani et al. (2017), for example, stated that their prototypes were created for research purposes only and were not at the level of finished products.

When it comes to hardware prototypes, USEC researchers have reported a variety of additional challenges. Physical prototypes are often made in research labs and are therefore physical approximations rather than professionally designed products, which can introduce usability confounds. For example, using two connected mobile phones to provide users with a back and a front display for user authentication enabled testing of the idea, but the prototype’s weight negatively impacted users’ authentication experience (De Luca, Von Zezschwitz, Nguyen et al., 2013). Chen et al. (2020) showed that achieving a form factor similar to that of the original product can be technically challenging: their wearable jammer for protecting users’ privacy was larger than a typical bracelet. Perez et al. (2018) reported that users did not perceive the form factor of their privacy-protecting prototype well. Prototypes are also often built using existing hardware and software, which can limit what they can accurately do. Mhaidli et al. (2020), for example, built a smart speaker prototype but encountered robustness issues with their Kinect camera when tracking users’ eye movements. In a similar vein, Schaub et al. (2014) faced reliability issues with their presence detection and identification system, which likely resulted in more conservative privacy settings than their participants preferred.

The challenges mentioned above are likely only a small fraction of the problems USEC researchers face when designing, developing, and evaluating prototypes. We aim to expand on these existing observations by talking with researchers about their experiences and about challenges that may be well known in the community but are not necessarily reported in publications.

2.3. Contribution over prior work

In this work, we present an overview of challenges faced by experts who design, develop, and evaluate prototypes as part of their USEC-related research. While other works have touched on what makes USEC research challenging in general, and individual works have commented on challenges encountered in completing their research, there has not yet been a structured attempt to elicit the challenges experienced by USEC researchers who use prototypes in their work. This paper puts forward such a compilation of experienced challenges.

Such a compilation is valuable and novel, and it also sits within the wider contexts of the HCI, security, and privacy fields. Some of the challenges we identified have been noted previously; for example, the challenge of finding research participants is well known in HCI. However, we argue that there is value in compiling the set of challenges that most affect USEC researchers and in putting these challenges into a USEC context. For example, USEC makes heavy use of deception studies, in which participants are told that the study is about, say, testing a social networking site, when the research is actually about authentication or privacy. Deception makes it impossible to re-use participants, so the well-known HCI challenge of finding participants takes a particular shape in USEC work. In this paper, we present the challenges faced by our participants and how those challenges manifested in their research.

3. Methodology

This section describes our recruiting process, the structure of our interviews, our research approach and analysis, and some potential limitations of our work.

3.1. Recruiting USEC experts

We completed an ethical review through the University of Glasgow College of Science & Engineering Ethics Committee in advance of participant recruitment. Potential interviewees were selected with the goal of obtaining a mix of researchers and practitioners who are experienced in USEC, that is, who work at the intersection of HCI and security & privacy research. We sought people who had both published work and had hands-on experience in the design, implementation, and evaluation of usable privacy and security systems. To compose a list of potential interviewees, we started with a rough literature review to identify authors and then added people we already knew in the area to fill out the list. We searched the ACM Digital Library, IEEE Xplore, and Google Scholar to find scholars with published USEC work at highly ranked HCI and security venues (e.g., ACM CHI, USENIX SOUPS, IEEE S&P). Broad search terms such as “usable security” and “usable privacy” formed the basis of our search, which we then followed up with more specific terms relevant to our research: “security prototype” and “privacy prototype.” We then reviewed papers to identify those that included building a security and/or privacy-protection solution and a user-centered evaluation. To further improve our coverage, we also used a snowball approach: the references in these papers were reviewed for relevant titles, which were added to the list of reviewed publications. We used Google Scholar and dblp to determine the publication profile and experience of the identified authors in the area. Relevant publications were recorded for later use in the interviews. From this process, we identified a pool of 56 potential interviewees with significant expertise in usable privacy and security and in prototyping.

We sorted the list according to multiple variables, aiming for a range of seniority, affiliation (university or industry), country, research domain, and experience publishing systems solution papers in USEC venues. Researchers who were more senior and had recent USEC prototype publications were ranked higher.

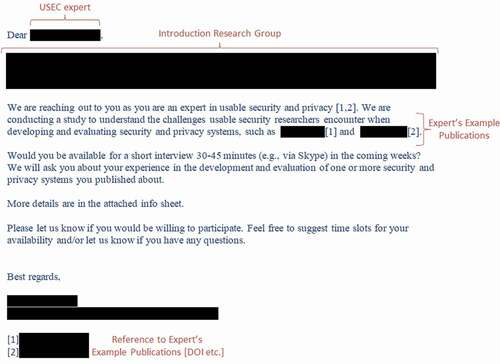

We then sent invitations (see template in Appendix A) asking each person whether they were willing to be interviewed about their research. Although recruiting senior people is time-consuming and challenging, we received fourteen responses (70%) to twenty invitations. Two respondents then declined due to unavailability; the remaining twelve agreed to participate. Eleven interviews took place via Skype and were audio and video recorded with consent. One interviewee preferred an e-mail interview, which is a viable alternative (Meho, 2006). As we progressed through the interviews, few novel insights emerged after the tenth interview. We continued with two more interviews and observed nothing new in the twelfth, indicating theoretical saturation (Guest et al., 2006). We therefore decided not to send out additional interview requests.

3.1.1. Demographics of the USEC experts and interview material

Our final sample consisted of 12 USEC experts (4 female, 8 male). Our interviewees are from the US, Europe, and Asia, and work in academia (6), industry (2), or both academia and industry (4). At the time of the interviews, 10 interviewees held a PhD (1 full professor/4 associate professors/1 assistant professor/1 adjunct professor & security research scientist/1 user experience researcher/1 USEC research engineer/1 research fellow). We also included two senior PhD candidates who had published usable privacy and security research in top-tier venues and received best paper awards. Their inclusion widened the covered spectrum, as they had more recent hands-on experience implementing systems and conducting user-centered evaluations. All interviewees worked in the broader field of usable privacy and security, including, but not limited to, user authentication, anti-phishing efforts, mobile security and privacy, and web privacy. Our interviewed experts have on average 123.42 publications (max = 386, min = 18, SD = 129.81), 3740.75 citations (max = 14,627, min = 25, SD = 4857.28), and an h-index of 22.5 (max = 56, min = 3, SD = 16.69). All reported numbers (i.e., publications, citations, h-index) cover all kinds of publications, not only usable privacy and security works. We report the overall numbers because all publications contribute to a researcher’s h-index, and extracting the number of USEC-specific papers precisely is challenging. The final set of publications (N = 27) used to set up context during the interviews ranged from 2010 to 2019 (Md = 2018). Of these 27 publications, 14 comprise software-based prototype systems and 9 comprise hardware components. We also used four additional USEC papers by the experts we interviewed, three of which are considered highly influential in USEC, while the fourth reports on research on a security system deployed in the wild. One of these additional publications, for example, discussed the last decade of usable security prototype systems and outlined lessons learned when developing and evaluating USEC prototypes. Table 1 shows an anonymized overview of our participants.

Table 1. Our interviewees have published a significant body of work ($\overline{pub} = 123.42$) that is highly cited ($\overline{cite} = 3740.75$). Note that the data reported are from early 2020.

3.2. Interview structure

We conducted semi-structured interviews informed by the content of the interviewees’ publications, with which the interviewer had familiarized themselves in advance. All publications were drawn from the initial literature review that we used for our sampling procedure, outlined in Section 3.1. These publications served as example papers, which we attached to the initial e-mail request (see Figure A1 in Appendix A). This allowed us to use the interviewees’ time efficiently and add context to their opinions. It also facilitated detailed discussions, allowing the interviewee to explore examples and the interviewer to ask informed follow-up questions. We chose semi-structured interviews because they allowed us to ask all participants the same high-level questions while also asking follow-up questions and encouraging participants to discuss their past experiences. All interviews covered the following questions; follow-up questions were prepared and used as needed (see full list in Appendix B):

3.2.1. Typical research journey from idea to publication

The purpose of this question was to understand how the interviewee normally progresses from a research idea to publication(s), and how that progression occurs within their broader research community. Journeys typically included topics such as: idea generation, resources, prototype development, idea refinement, evaluation, publication.

3.2.2. Research challenges and limitations

We asked our interviewees about the challenges they faced in conducting research that involved implemented solutions and their opinion about the more general challenges and limitations of USEC research that includes prototyping.

3.2.3. The ecological validity of current evaluations

We collected insights on the different study types that our USEC experts employed. We also aimed to understand the obstacles to conducting fully ecologically valid evaluations. Inspired by recent work that argued for developing novel methodologies to understand and design for emerging technologies and to mitigate new threats (Alt & Von Zezschwitz, 2019; Garfinkel & Lipford, 2014), we asked our experts whether they see the USEC community’s current evaluation approaches as the way forward or would prefer to see alternative evaluation approaches.

Finally, we debriefed our interviewees, asked whether they had any final questions or thoughts, and concluded with an informal chat. Interviews lasted 48.5 minutes on average. We offered all interviewees an £8 online shopping voucher. Some of them waived the compensation for various reasons (e.g., asking us to donate it or to keep it for other research projects).

3.3. Research approach and data analysis

We applied open coding followed by thematic analysis inspired by Grounded Theory (Corbin & Strauss, 2014) to our interview data. Specifically, we a) applied open coding to build insights and key challenges directly from the raw data of our expert interviews, and b) used thematic analysis inspired by Grounded Theory to uncover the main concerns and challenges of USEC experts when prototyping usable privacy and security systems. Prior to the interviews, we also conducted an initial literature review to better understand the research area and to identify potential interviewees (as described in Section 3.1), whom we then contacted by e-mail. This enabled us to familiarize ourselves with the experts’ work and to access a promising USEC sample for our investigation and research questions (Hoda et al., 2011). For data collection, we conducted semi-structured interviews with open-ended questions. While interviews were ongoing, two authors met regularly to discuss 1) the notes the interviewer took about interesting observations and thoughts that emerged during the interviews and 2) the publications associated with upcoming interviews. These meetings allowed the researchers to reflect regularly on the findings and keep those points in mind in further interviews. Once all interviews were completed, the lead author transcribed all audio recordings and open coded the transcriptions. The initial open coding scope was drawn from the regular discussion meetings, and the lead researcher also wrote memos (Saldaña, 2015) during open coding. A second researcher went through the raw interview data and added further memos. This process generated 325 open codes and 93 memos. The lead author then organized all codes and printed each one on a separate piece of paper. Two authors then built a paper-based affinity diagram of the open codes (Kawakita, 1991). The transcripts, memos, and audio recordings were revisited when additional information about a code’s context was needed. The authors organized the codes into groups, which were then further refined into themes.

In summary, we conducted an initial literature review to compose a list of potential interviewees, followed by semi-structured interviews to collect USEC experts’ opinions and insights, which we then transcribed and analyzed (Hoda et al., 2011). We present the themes, the experts’ comments, and the key challenges of prototyping usable privacy and security systems in Section 4, and we relate our findings to previous literature in an in-depth discussion in Section 5.

3.4. Limitations

While the approaches we use are common in human-centered and usable privacy and security research, some of our specific decisions have limitations to keep in mind. First, we selected experienced researchers who have been successful in publishing works involving prototypes in USEC venues. Their experience is valuable, but it is also biased toward those who ultimately succeeded in publishing. The challenges faced by those who tried and failed to conduct this type of research, due to issues such as a lack of mentorship or choosing overly challenging problems, are therefore not well represented here. Additionally, we aimed to talk to USEC researchers who have been successful and have also been through a range of failures, which typically accompany success in academia (Footnote 2). Although our sample also includes more junior researchers (e.g., P1, P7), we encourage future work to study a sample of junior researchers only and compare their thoughts and opinions on USEC’s prototyping challenges with those reported in our work. Moreover, interviews with USEC experts at the research and community level might not have captured all sides of the conversation; for example, the views of entities such as research institutions and funding agencies are not covered. We leave this to future work. Our participants were also likely biased by the publications we selected and sent to them in advance of the interview. Pre-selecting publications helped both the interviewer and the interviewees scope the interviews in a time-efficient manner, but the scoping also likely influenced the topics interviewees chose to discuss. Four of the participants (two pairs) had coauthored papers in our reviewed paper set. Given the seniority of some participants and the size of the field, such a situation is expected. However, we were careful not to use the same publication in more than one interview session, to ensure that experts’ comments did not revolve around the same publications. Finally, the interviews were retrospective in nature, focusing on past experience and opinions about the area. While retrospective interviews can be quite effective for learning about rare events or those that take place over a long time period, they also suffer from a bias toward memorable events. Our interviewees described projects whose initial idea generation was sometimes years in the past, likely resulting in some memory bias.

4. Results

Below, we present our key findings: 1) threat modeling, 2) prototyping USEC systems, 3) sample size and selection, 4) evaluations, 5) USEC’s research culture, and 6) USEC’s real-world impact. Protecting the anonymity of expert participants is challenging (Saunders et al., 2015; Scott, 2005; Van den Hoonaard, 2003), so we refer to interviewees by participant number (P1 to P12) and use “they” for all participants. Before presenting each key challenge, we use a short preamble to frame the challenge and introduce readers to the topic.

4.1. Threat modeling is not straightforward

Threat modeling is commonly used in privacy and security research to describe the assumed skills and capabilities of an attacker. Many input and feedback methods can be observed by bystanders, which has led to considerable emphasis on shoulder surfing in the past (Bošnjak & Brumen, 2020). Shoulder surfing attacks, for example, often assume that the attacker can get physically close to the user or has an observation device such as a camera. The threat considered affects the design and evaluation of usable privacy and security prototypes, because researchers need to account for it throughout the design and development process. While there are many different threats, including social engineering attacks (Krombholz et al., 2015) and online/offline guessing attacks (Gong et al., 1993), several experts discussed shoulder surfing in particular, a threat model studied by, for example, Brudy et al. (2014), De Luca et al. (2009), George et al. (2019), and Mathis et al. (2020), and they exhibited a range of opinions about what constitutes a “realistic” threat model. Many of the interviewed experts had focused on authentication research in the past, which is not surprising given that authentication has been a major theme in USEC research: Garfinkel and Lipford (2014) spend 27 pages on the subject compared to 10 on phishing. Consequently, shoulder surfing threat models were brought up several times as an example of how threat models, prototype systems, and study designs can interact. Our expert interviews revealed two opposing opinions regarding which threat models are valid for addressing security, which we discuss below.

P5 argued that the relevance of shoulder surfing attacks depends heavily on the context, explaining that the threat has different implications in different countries and that such attacks definitely scare them.

in the U.S. as well as in Europe you may not really feel [that] shoulder surfing attacks are something that you should really care about [...]. In over-populated countries like India you have a lot of people […] when you go to an ATM machine or to places like coffee shops […] there are like three people standing right behind you. [In these cases,] shoulder surfing is a really big problem. - P5

In other words, P5 suggested that cultural differences in perceived personal space affect susceptibility to shoulder surfing (Remland et al., 1995). In a similar vein, P8 outlined that such attacks are realistic in practice and further argued that researchers have to consider both user concerns and the point of view of experts to accurately assess the value and validity of certain threats.

If you actually keep asking the users about what they are worried about; often they are less worried about the NSA and more worried about their parents/their partner. - P8

However, even if a threat model seems to be appropriate for a given context and is of particular importance to end-users, experts had different opinions on the value of specific threat models and some mentioned that shoulder surfing attacks are overrated and uninteresting.

shoulder surfing is a problem, but it’s hugely overblown. - P9

Fundamentally for me the problem with observation attacks is, [they] are not that interesting from a security point of view, it’s a real niche attack […] [researchers] report performance against observation attacks with a very narrow threat model: ‘can you see it’; which is incredibly, it’s very very narrow. - P3

P2 emphasized the problem that there is no common agreement among USEC experts regarding the validity of specific threat models.

[researchers] think they use the worst case scenario, but actually they did not. - P2

P7 further described the threat modeling challenges using shoulder surfing as an example, emphasizing that there is a clear mismatch between researchers’ assumptions and reality and that it is important to consider social norms when studying threat models because “people move closer than [researchers] actually thought they ever would, or they stay further away because they respect people’s social norms” (P7).

4.2. Prototyping USEC systems

Prototyping is an integral part of human-centered research and design (Fallman, 2003; Wobbrock & Kientz, 2016), one of the main types of research contributions in HCI (Wobbrock & Kientz, 2016), and a major theme in USEC research (Garfinkel & Lipford, 2014). We observed themes around the hardware challenges of building usable privacy and security prototypes (Section 4.2.1) and around the corresponding deployment and evaluation challenges (Section 4.2.2).

4.2.1. Development & hardware challenges

Experts voiced that developing usable privacy and security prototype systems is challenging and costly, partially due to limited access to appropriate hardware and the limited prototyping expertise of USEC researchers.

I think we actually really need more collaborations between the usable security people and the people who are fairly close to building [hardware prototypes]. - P8

P1 reported issues with their eye tracker due to, for example, inappropriate lighting conditions. They also reported that the interplay between multiple hardware components caused problems, which resulted in significantly more effort, additional pilot tests, and the exclusion of data from the actual user study.

I combined [the hardware] all together […] and then [faced] issues […] because they are all working with infrared and [operate] on the same wave length. - P1

If you recruit 50 participants […] you have to discard five to ten participants because the eye tracker is not working. - P1

Our experts also voiced that these limitations lead to many prototype systems “[that] were made very quickly [and] are not well made” (P3) or that hardware is used inappropriately, threatening ecological validity.

We slapped the phone on [a user’s] wrist and put a little active part in a corner, so it was sort of a like big wrist watch but it was not usable […] the validity of using a phone on users’ wrist is relatively low. - P3

Experts attested that the lack of appropriate hardware, partially due to a lack of funding, is a fundamental problem.

Usually we do not have funding to buy new equipment […] then we have to come up with ideas of how we can build that hardware. - P4

P2 mentioned that such bottlenecks have a noticeable impact on USEC research: their prototype was significantly heavier than the mobile devices available at that time.

A lot of negative feedback in those evaluations was around the weight of the prototype […] [the weight] made it more difficult to use [the prototype]. - P2

Some experts even mentioned that they had to adjust their research projects due to the lack of appropriate research equipment.

We try to have as fast as we can the first prototype and see what are the challenges from the development side because often we need to alter the project to fit to the equipment we have. - P4

Other experts mentioned that placing hardware components in their intended positions can be challenging and that these physical restrictions often force them to come up with alternative solutions: “I didn’t really manage to put [the front camera] exactly in the middle because the eye tracker was [already] there” (P1).

4.2.2. Deployment & evaluation challenges

When it comes to evaluating USEC prototypes and conducting research that goes beyond in-lab investigations, experts explained that they had difficulty evaluating their systems and that there are many issues around deployability, especially when using new hardware. Although USEC experts strive for real-world deployments to increase ecological validity, P4 still sees the transferability of findings to users’ everyday lives as one of the major problems.

The major problem with evaluating privacy and security systems is that how can you visualize that the users are acting the way they would act if they would [use] it in their everyday life. - P4

P2 further explained that deploying their prototype to a large sample was impossible because they had access to only one device.

There’s a lot of issues around deployability, specifically when it comes to using new hardware […] the deployment was impossible […] we had one device and that device we could hand out to one person at a time. - P2

When using new hardware, our interviewees also highlighted that the cost of failure might be high and that it is important to invest only in equipment that is likely to become publicly available and to enable promising future use cases. P2 further highlighted the noticeable impact of limited deployable hardware on research; for example, they were not able to run a memorability study.

We did not run a memorability study [for our authentication scheme] mainly due to hardware issues […] the magical formula would be having an infrastructure that allows to [build hardware-based prototypes] in a very quick way. - P2

In summary, experts reported that research involving hardware prototypes often introduces additional challenges. Besides the hardware challenges, many of the challenges voiced by the experts are the result of limited access to appropriate resources and lack of funding, which we discuss further in the context of USEC’s research culture in Section 4.5.3.

4.3. Sample size and selection process

Concerns about samples, especially their appropriate size and characteristics, are prominent when conducting experiments and are frequently discussed in the HCI (Caine, 2016) and USEC (Redmiles et al., 2017) communities.

4.3.1. Small sample sizes

Our interviewees highlighted the importance of collecting large datasets, especially for security evaluations. P3, for example, contrasted the pool of real-world passwords with the much smaller sets of passwords collected in user studies: “there’s 70 million from a cracked database, you got six and a half thousand – that’s like a drop in the ocean” (P3). P3 also argued that prototype evaluations with small datasets cannot be used to assess security.

[we] have got 12-20 users […] the security data is of no value and the conclusion is that there is no value inside the small sample size. - P3

Across all experts, there was consensus that sample size and selection are a fundamental and ongoing challenge that goes beyond USEC. P11 repeatedly emphasized the difficulty of achieving large sample sizes: “finding a large sample size is really hard” (P11). While small sample sizes are problematic, as pointed out by P3, external factors (e.g., access to different research environments) can have a notable impact on the sampling process and the resulting sample size. P11, for example, reported facing significant issues when recruiting participants and having limited resources.

I recently moved to another country and I was really happy to get 25 [participants] […] I was really happy to get them but well … - P11

Throughout the interview, P11 further noted that some researchers consider such a sample too small and dismiss the research outright, overlooking its remaining value and contributions. P1 voiced that the lack of participants is one of their main research problems and that it is often challenging to convince potential participants to come to the lab.

4.3.2. Biased recruitment

In addition to the sample size concerns above, experts raised concerns about the recruitment process, i.e., the way in which researchers recruit participants for their studies.

[sample size and selection] is one of the largest outstanding problems with all HCI systems work which is that we evaluate [our systems] by knocking on the doors of friends and colleagues and be like ‘hey, come do my user study and I’ll give you $10.’ - P11

P7 echoed the problem of evaluating systems within experimenters’ social circles, noting that it is often unclear how a system would fare with a more diverse sample, including people who do not even know what privacy is.

We run [studies] within our social circles, what happens if we get someone who’s elderly, who’s not familiar with technology, [or] who doesn’t even know what privacy is? - P7

P2 further highlighted that although they have access to a very large user pool, far larger than the (often) limited user pools in academic environments, their pool still runs out, and they rely on vendors to access an even larger set of participants.

4.4. Evaluation methodologies

Discussions on lab and field studies were common among our participants. We observed themes around the importance of both (Section 4.4.1), the value/cost trade-off (Section 4.4.2), and the perceived value of field studies in USEC research (Section 4.4.3).

4.4.1. Importance of lab and field studies

Our experts emphasized the necessity of different evaluation approaches, and that starting with lab studies is often a prerequisite for evaluating USEC systems.

There’s a place for both […] I don’t think it makes any sense to go directly into the field to evaluate new systems when we haven’t done any lab studies at all. - P9

All experts agreed that 1) lab studies should be conducted before going into the field and 2) field studies have the potential to lead to ecologically valid findings. Experts also voiced that researchers should not underestimate the importance of lab studies. P11, for example, highlighted that different study types come with different pros and cons and that imperfect evaluations of usable privacy and security prototypes can still be valid contributions to the research community.

We can have ideas – that’s the strength of academia – ideas that are totally radical and new and not going to be evaluated perfectly in the context of a lab study […] but that doesn’t mean they don’t have value [or] can’t inspire the direction of the usable security and privacy future. - P11

P7 further emphasized the importance of taking prototypes out of the lab and putting them into real environments. Other experts mentioned that real-world investigations are particularly important because participants exhibit “demand characteristics”: they subconsciously change their behavior to fit the experimenter’s purpose. P11, for example, highlighted the uncertainty about the effect of lab settings on results, noting that they “cannot be sure whether [participants] are acting as [they] would act in the wild or if they’ve changed their behavior because they know they are being part of a study” (P11).

4.4.2. Value/cost trade-off

The trade-off between the effort of applying a methodology (e.g., lab vs. field study) and the value/ecological validity of the resulting findings was discussed extensively by our experts and is considered a domain challenge in USEC research (Garfinkel & Lipford, 2014). Our experts stated that lab studies are considered simpler than field studies and that this is one of the main reasons why we see a plethora of lab studies but significantly fewer field studies in USEC prototyping research.

[the] uncharitable view would be that [running lab studies rather than field studies] is just easier to do. - P8

My take is that it’s a mix of convenience, not knowing better, and impossibility as in certain [situations] you can’t do [experiments] that are difficult to do that it’s not worth the additional effort. - P2

P9 also voiced that “it’s easier to do a lab study; the odds of something going wrong are way too high” (P9). Another expert, P3, voiced that before conducting field studies it is important to compare the value versus the effort.

There is a place for [field studies] but is there enough added value in field studies generally that this is important? - P3

Experts also voiced that field studies can be powerful and that their results may well deviate from lab findings, but researchers need to be clear about what they are seeking rather than being purely exploratory. P3 elaborated that “field studies can be valuable but there needs to be a clear value […] the data will differ from a lab” (P3). Other experts, for example, P4, highlighted the strength of field studies in providing insights into how systems are really going to be used and how people are going to accept them. On the other hand, P5 noted that it is hard to pinpoint the causes of effects in field studies and that achieving accurate results through field studies alone is challenging.

4.4.3. Experts’ views on field studies in USEC research

Some experts reported believing that “field studies are sort of a gold standard” (P11) and that they “would like to see more about how security fits into real life as opposed to specific little corner cases that are easy to run” (P9). P10 highlighted that the suitability of field studies heavily depends on the required investigation and on legal and ethical considerations, which differ considerably between countries. Experts also described some unsuccessful attempts to study prototypes in a real-world setting.

We looked at investigating [our security system] within a real setting but there were just too many legal and ethical constraints around that. - P2

P6 and P7 added that such field studies are expensive and that, because of budget constraints and the technological issues they would face in the field, they often have to rely on findings from lab studies alone.

4.5. USEC’s research culture

Different research fields and individual researchers come with different sets of behaviors, values, expectations, attitudes, and norms, forming a unique research environment and culture. Open science and reproducibility, for example, are recognized as vital features of science across research fields and considered a disciplinary norm and value (McNutt, 2014); in practice, however, there are significant differences across research communities. Wacharamanotham et al. (2020) showed that sharing artifacts is an uncommon practice in the HCI community, and Cockburn et al. (2018) showed that preregistration has received little to no published attention in HCI, whereas other research fields (e.g., psychology) have begun awarding badges for different categories (e.g., “open data,” “preregistration”) (Eich, 2014), with promising adoption rates in the first year of operation (Nosek et al., 2015).

When it comes to USEC researchers’ behaviors, values, expectations, attitudes, and norms, experts mentioned challenges around the expected, often hard-to-reach, high ecological validity of usable privacy and security prototype evaluations (Section 4.5.1), USEC researchers’ reserved enthusiasm for novel systems (Section 4.5.2), and the lack of access to research resources (Section 4.5.3). While some of these challenges can also be found in neighboring research communities (e.g., the lack of access to research resources in HCI (Wacharamanotham et al., 2020)), the combination of these challenges with USEC researchers’ opinions and research approaches forms a unique research culture.

4.5.1. Toward (high) ecological validity

An important objective in usable privacy and security research is to achieve high ecological validity, i.e., the extent to which a study adequately reflects real-world conditions. A password study by Fahl et al. (2013) showed that participants in a lab study behaved differently compared to their real-world behavior. Although Redmiles et al. (2018) argued that many insights from self-reported security data can, when used with care, translate to the real world, they also emphasized that self-reported data can indeed differ from data collected in the field and that alternative research methodologies should be considered for studying detailed constructs. Some of our experts mentioned that USEC researchers often expect high ecological validity and generalizability of study findings. As a result, researchers aim to, for example, role-play real-world situations in the lab (Fahl et al., 2013), conduct field studies (e.g., Harbach, De Luca, Egelman et al., 2016; Malkin et al., 2017; Mare et al., 2016), or leverage online studies to increase sample size and target a more representative sample (e.g., Cheon et al., 2020; Harbach, De Luca, Malkin et al., 2016; Markert et al., 2020). However, P12, for example, stated that a real-world evaluation of all of a system’s usability and security aspects is almost impossible: “the difficulty in evaluating system security is that the lack of security can have many different sources” (P12). P12 further emphasized the complexity of security evaluations.

All secure systems are alike. But there are many different ways for a system to be not secure. It is not possible to enumerate them all. - P12

P1 was concerned about the lack of common evaluation approaches: in the absence of any standards, their set of evaluation metrics (e.g., interaction time with a security system, error rate when providing input) often has to be derived from literature reviews. Researchers’ varied evaluation approaches exacerbate the problem of determining which metrics to investigate and which evaluation method to employ for evaluating USEC systems. P2, for example, voiced that this variety of evaluation approaches also leads to a wide range of system evaluations and conclusions.

If you look at five different usable security papers you can’t compare them because they have used slightly different approaches of evaluating the different parts of their systems […] you can’t really say which one was better or worse. - P2

P2 particularly highlighted the subjectivity of privacy and security and that many researchers have strong opinions when it comes to the evaluation. P2 also voiced that the lack of standardized sets to evaluate security schemes makes it even harder to fully address ecological validity and perform comparisons between multiple works.

I am not aware of any standard scenarios that can say ‘okay, here now we can compare it if we’re running a lab study.’ - P2

In line with Key Challenge #4, P11 underlined the need for a clear vision of what is expected from evaluations, whether they are conducted in lab settings, which are likely less ecologically valid, or as organized field studies, which are still limited to an extent by research participation effects (Nichols & Maner, 2008; Orne, 1962).

We [as a community] just need to be a little bit more open to what sort of solutions/evaluations we are expecting out of [something] that has not actually been deployed in the real world. - P11

4.5.2. Creating space for novel solutions

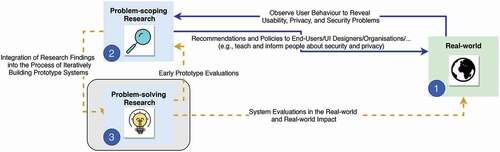

P11 expressed that while problem-scoping research, e.g., identifying usability issues in existing systems, is important, it is equally important to conduct problem-solving research. Some experts also raised the concern that the USEC community focuses heavily on evaluation even when a large part of the contribution is building a system functional enough to demonstrate its effectiveness in terms of both deployment and usability.

The [USEC community] wants to evaluate everything when like a big part of your contribution is just the fact that you could build this [system]. - P11

P11 further argued that without recognition of the value of functional solutions, which may come with limitations imposed by the real world, the community may struggle to really engage with the realities of solving problems.

I feel like we as a community refuse to accept that kind of contribution – then you know, we’re shooting ourselves in the foot, we’re never going to be part of the broader conversation. - P11

Other experts discussed the community’s focus on realistic use cases resulting in limited enthusiasm for building speculative future-oriented solutions. P8 mentioned that they have seen some shift recently, but problem-scoping and problem-solving USEC research are still not balanced.

I like some of the shift we’ve seen recently […] to actually really look at finding ways of supporting [users]. - P8

Considering the implementation of novel solutions, P11 argued that there is still a lack of future-oriented USEC research where use cases are more speculative or avant-garde.

In general the usable security and privacy security community is not very imaginative […] they don’t really like thinking too far in the future. - P11

P10 agreed to some extent, stating that the USEC community does not appreciate research that requires imagining worlds that do not exist.

4.5.3. Accessibility and availability of resources

Experts highlighted a lack of open-source material within the USEC community, which negatively affects their research outcomes. According to P6, there is a significant lack of open-source implementations of usable privacy and security systems. P4 voiced that the lack of open-source material makes it time-consuming and challenging to build certain features, and P11 even suggested collectively building a platform that supports researchers in their work.

How can we create a platform that will make it super easy for other researchers to build upon the foundation that you’ve created? - P11

Experts also voiced that their research is often driven by the hardware that is available. For example, P2 faced challenges in investigating a security system’s usability while users are walking.

We had the idea of putting people on a treadmill for the evaluation […] but then didn’t have a treadmill. - P2

Building upon the sample size discussions in Section 4.3, experts also asserted that finding a broad user base is even more critical in academia and that this is where most academic studies suffer because the resources for recruiting are limited.

4.6. Academia & industry in USEC research

Getting access to real systems used by companies is challenging, and the lack of access can result in lower ecological validity as well as barriers to transitioning research results into practice. For example, one issue in privacy and security research is that potential industry partners are concerned about harmful findings and do not allow any “vulnerability research” (Gamero-Garrido et al., 2017), including prototype-building work. P2 described such an incident.

We did have some connections with [companies] but they are like: ‘you can’t touch our machines.’ - P2

Experts voiced that this type of research can be of great value, but there are concerns over legal challenges. Building upon the discussions around USEC’s research culture, we present experts’ comments on the lack of collaborations between academia and industry (Section 4.6.1) and the resulting limited real-world impact (Section 4.6.2).

4.6.1. Academia and industry – Status Quo

Experts voiced that although there are collaborations between academia and industry, there is still room for improvement when it comes to exchanging knowledge, sharing research resources, and accelerating impact. Our experts voiced that one of the resulting problems is the lack of hardware accessibility (similar to Key Challenge #8), which limits research contributions and therefore decreases ecological validity: “if they just lent us [an ATM] for a period of time it would have been really good to do our studies” (P8). Another expert, P7, also brought up the ATM example and the corresponding challenges with financial institutions.

Which bank would allow [to install] some random prototypical hardware; probably no bank. - P7

The lack of access to real-world hardware is only one problem according to P8. P8 also voiced that another considerable problem is companies’ fear of security leaks that impacts usable privacy and security research.

If you are working in the security in an environment where there is real-world security, they often won’t let you do any observations and I think that’s really bad […] they are afraid that you’re going to find something that means the security isn’t working. - P8

Besides that, researchers are restricted in publishing findings based on observations within companies: “I’ve been lucky to have done observational studies a couple of times [but] I wasn’t allowed to publish them” (P8). The importance of ecological validity in USEC underpins the need for more real-world studies in which users actually use the systems on a daily basis. According to P11, one way to conduct more of these investigations is to establish closer and stronger collaborations with industry.

4.6.2. USEC research and its “Real-world” impact

When we asked our experts whether they see controlled lab studies as the “way to go” for evaluating security and privacy-protecting systems and what progress they would like to see within the USEC community, discussions turned to the impact of USEC research on real-world applications; experts felt that the transition of USEC research and the corresponding usable privacy and security solutions into practice is still lacking. Some experts voiced that the problem is not that the USEC community lacks ideas for usable and secure systems; instead, they would like to see how these systems and solutions fit into real life. P9, for example, voiced that many publications result in a heap of privacy and security schemes that never find their way into users’ daily lives.

There’s a lot of proposed authentication schemes out there and a lot of them aren’t gonna move forward like a lot of them are ideas, they didn’t really work out, they’re not really showing any promise and so you know, we discarded them. - P9

Although some technologies are widely deployed nowadays (e.g., anti-phishing technology, two-factor authentication), much of USEC research has indeed not been applied in the real world; examples include enhancing authentication on mobile devices (Bianchi et al., 2010; Khamis et al., 2017; De Luca et al., 2014; Von Zezschwitz et al., 2015) or protecting users’ privacy when interacting with public displays (De Luca, Von Zezschwitz, Pichler et al., 2013; Ragozin et al., 2019; Von Zezschwitz et al., 2015). Our experts voiced that a major reason for the limited impact is the huge gap between prototype evaluations and being able to use these systems in real-world settings: “there’s a huge gap between possibility and building the system and commercialization” (P5). Complementing this, P8 emphasized that many researchers do not want to change their existing theories or their skillset; therefore, there seems to be some resistance to change within the USEC community. Our experts also highlighted that the interests of USEC researchers and practitioners vary widely. In particular, some experts were concerned about the mindset of other USEC researchers.

I’ve seen this in rebuttals […] when I write a review about something […] and they are like oh well so many other people have published lab studies, why should I have to go out and do something differently […] it’s a lot harder, it’s a lot more work and as long as I can get this stuff published why should I bother? - P8

Experts also highlighted that USEC research should go beyond publications and not be driven entirely by the “publish or perish” mindset (McGrail et al., 2006). P11 encouraged the USEC community to think big and collaboratively aim for more than “little projects.”

How can we make that little project the next like D3.js [Footnote 3] for usable security? - P11

P12 further criticized the opinionated mindset of many researchers and the tendency to consider an academic career more important than real-world impact.

Most researchers’ goal is to produce papers and get their degree or tenure; few researchers are [actually] building and deploying working systems. - P12

5. Discussion

We have identified 9 key challenges, each of which contributes to answering RQ1: current bottlenecks of USEC research that involves prototyping and user studies are manifold and it is challenging to pinpoint a single source (Key Challenge #1 – #9). In RQ2, we asked what the USEC community needs to better transition research contributions to the real world. We discuss how our findings contribute to RQ2’s answer and provide a discussion of and comparison to similar challenges in neighboring HCI disciplines. To conclude, we discuss the implications of our work and provide ways forward for both individual researchers and the broader USEC community.

5.1. There is no one best way for doing USEC research

Our experts noted that it is impossible to enumerate all security aspects of a system but that imperfectly prototyped and evaluated systems can still have value and inspire the direction of USEC’s future. Arguably, the opinions our experts raised about the design, development, and evaluation of prototype systems are not far removed from the HCI literature. Greenberg and Buxton (2008) and Shneiderman et al. (2016) emphasized that the choice of evaluation methodology should follow from the actual problem (e.g., what users need) and appropriate research questions. In the context of usable security, it is also important to note that a system’s usability and security often depend heavily on the specific context (e.g., external factors can affect a system’s state or a user’s behavior (Kainda et al., 2010)). Our interviewees echoed the value, benefits, and drawbacks of different evaluation methods, as well as the non-trivial nature of threat modeling (Key Challenges #1 and #4). Below, we discuss our experts’ comments in more detail and tie them back to the broader research field.

5.1.1. Adjusting expectations of prototype developments and evaluations

According to some of the experts (e.g., see P12’s statement in Section 4.5.1 or P11’s statements in Sections 4.5.1 and 4.5.2), the problem is exacerbated by some researchers’ expectation of an exhaustive evaluation that assesses every aspect of a system in an ecologically valid setting. Several factors often make this infeasible when evaluating novel systems, including 1) the need to run lab studies first to evaluate the new elements of the prototype and pinpoint the causes of problems, and 2) a lack of resources (e.g., hardware) to produce multiple prototypes for in-the-wild testing. The hardware prototyping and ecological validity challenges voiced by our experts (Key Challenges #2 and #6) also arise in neighboring research communities such as Ubicomp. Prototyping novel ubiquitous systems is challenging (Dix et al., 2003; Greenberg & Fitchett, 2001) and often requires additional expertise and specific tools (e.g., knowledge of electronic components, access to soldering irons). Greenberg and Fitchett (2001) even described building and combining physical devices and interfacing them with the application software as one of the biggest obstacles. Echoing our experts’ concerns about the lack of shared research resources and hardware-building expertise (Key Challenges #2 and #8), Greenberg and Fitchett (2001) observed that researchers who develop systems based on physical devices often have to start from scratch and face many difficulties. In their own project, building a reactive media space environment, a colleague who was an electrical engineer joined the team and provided significant support in building the hardware (Greenberg & Fitchett, 2001). More than ten years later, we can indeed see similar interdisciplinary collaborations in USEC research. One example is the Back-of-Device prototype by De Luca et al. (2013) and their follow-up work XSide (De Luca et al., 2014). The form factor of their first prototype considerably limited how well the results generalized to one-handed interaction. Whereas Greenberg and Fitchett (2001) benefited greatly from an electrical engineer joining the project, the prototype by De Luca et al. (2014) benefited greatly from one researcher’s 3D printing expertise, which, together with an advanced algorithm, improved the user experience.

This illustrates how collaboration can greatly improve USEC prototype systems and their evaluations. As emphasized by Fléchais and Faily (2010), usable privacy and security research requires researchers from a variety of areas beyond USEC (e.g., psychology, economics), which we discuss further in Section 5.4.2. The prototype-related challenges our experts raised (e.g., Key Challenges #2 and #6) also suggest that expectations for prototype development and evaluation should be adjusted when building “perfect” prototypes and conducting highly realistic evaluations are too challenging.

5.1.2. Bridging the gap between lab and field studies

The USEC community has been debating the respective value of lab and field studies for some time, and our experts similarly mentioned the need to be open to alternative evaluation approaches (Key Challenges #2 and #6). Similar discussions about lab and field studies, especially about when and how field studies are “worth the hassle,” also take place in neighboring communities such as Mobile HCI (Kjeldskov & Skov, 2014). One critical comparison of a lab and a field study even shaped the Mobile HCI research field in subsequent years (Kjeldskov & Skov, 2014; Kjeldskov et al., 2004). Kjeldskov et al. (2004) discovered more usability issues in the lab than in a comparable field study, at roughly half the cost; consequently, they concluded that field studies add very little value, which sparked a heated debate about generalizability because the study did not cover long-term use and adoption (Iachello & Terrenghi, 2005).

In USEC research, the long-term use and evaluation of systems is indeed an important component. For example, prior work has shown that habituation can affect users’ perception and security behavior (e.g., see the replication of CMU’s SSL warning study (Sotirakopoulos et al., 2011; Sunshine et al., 2009)). Other work has also emphasized habituation’s key role in USEC research (e.g., in classifying genuine login attempts (Syed et al., 2011) or in research on security alert dialogs (Maurer et al., 2011)). That said, Greenberg and Buxton (2008) assert that the field needs to recognize many other appropriate ways to evaluate and validate work, and that usability evaluations can be ineffective if done naively “by rule” rather than “by thought.” They argue that “a combination of methods – from empirical to non-empirical to reflective – will likely help triangulate and enrich the discussion of a system’s validity” (Greenberg & Buxton, 2008).

In USEC, there appears to be a need to fundamentally rethink current study paradigms (Alt & Von Zezschwitz, 2019) and frameworks for understanding privacy risks and solutions in personalization systems (Toch et al., 2012). For example, the uptake of smart speakers that can collect sensitive data about users (e.g., Alrawais et al., 2017; Lau et al., 2018; Toch et al., 2012) calls for changes in how security and privacy prototype systems are designed and evaluated.

There have been suggestions for improving the ecological validity of usability and security evaluations in the lab. One example is role-playing real-world situations (Fahl et al., 2013) to mimic scenarios in which security is a secondary task, as it usually is in the real world (A. M. Sasse et al., 2001). However, it has also been argued that such approaches cannot necessarily match the ecological validity of real-world studies and should therefore not be treated as a substitute for them. As our experts noted, the context of and expectations for an evaluation method matter, and USEC research needs the full range of evaluation methods, including study types beyond traditional lab and field studies. While initial lab studies and follow-up field studies are vital for transitioning usable privacy and security prototype systems into practice in the long run, alternative evaluation methods are equally important and can inspire future usable privacy and security research.

A potential direction for addressing the challenges around ecological validity is to leverage novel technologies for prototype development, deployment, and evaluation. As one of our experts pointed out (see also Section 5.4.3), 3D printing can significantly facilitate the prototyping of security systems and USEC research in general (as evidenced by, for example, De Luca et al. (2014) and Marky et al. (2020)). Future work could also consider using online platforms to facilitate field studies. For example, Redmiles et al. (2018) showed that many insights from online surveys on security and privacy translate to the real world. Similarly, Mazurek et al. (2013) suggest that passwords collected through online studies can be a reasonable proxy for real-world passwords. Alongside the shift from lab to online studies, there has also been a movement in human-centered research toward using virtual and augmented reality for user-centered evaluations of, for example, authentication schemes, IoT devices, and public displays (Mäkelä et al., 2020; Mathis, Vaniea, et al., 2021; Voit et al., 2019). Mathis, Vaniea, et al. (2021) showed that virtual reality (VR) can serve as a suitable test bed for the usability and security evaluation of real-world authentication schemes. In a similar vein, Mäkelä et al. (2020) reported that user behavior in field studies of public displays is largely similar to behavior in immersive virtual reality, while Voit et al. (2019) compared empirical studies conducted online, in virtual reality, in augmented reality, in the lab, and in situ, and found that some findings are comparable across these settings while others are not.
Following P11’s emphasis on aiming beyond little projects, building an online platform capable of evaluating physical privacy and security systems in an ecologically valid way could be a powerful way to establish infrastructure for USEC research that may complement lab and field studies.

One key message here is that we as a research community should be mindful of the challenges USEC researchers encounter when evaluating usable privacy and security prototype systems. Findings from lab studies often have great value and depth. It is also important to note that novel technologies and evaluation methods can augment USEC research in the long run (e.g., the previously discussed 3D printing examples by De Luca et al., 2014, and Marky et al., 2020, or the use of VR as a test bed by Mathis, Vaniea, et al., 2021). Field studies are undoubtedly essential for high ecological validity; however, they are sometimes infeasible because of constraints beyond researchers’ control, such as a lack of resources or the nature of the prototype (e.g., when evaluating a tethered hardware prototype). In these cases, field studies can take place only if the prototype reaches a much higher fidelity than is achievable in typical research environments.

5.2. Selecting sample sizes in the presence of constraints

Sample sizes and selection processes (Key Challenge #3) were a major discussion point in our interviews and are a major domain challenge in USEC research (Garfinkel & Lipford, 2014; Redmiles et al., 2017). In the neighboring HCI community, we see a wide range of sample sizes and compositions. For example, Caine (2016) found that twelve participants was the most common sample size across papers published at CHI 2014. Focusing on usability alone, Turner et al. (2006) found that five users can uncover 80% of a system’s usability problems. Similar to our experts’ concerns about how participants are recruited in their USEC research (e.g., “we evaluate [our systems] by knocking on the doors of friends and colleagues and be like ‘hey, come do my user study’” (P11)), Lazar et al. (2017) argued that many HCI studies rely on small, non-diverse samples (e.g., students only) and therefore often do not allow results to be generalized. We discuss “correct” sample size selection and its realities further in Sections 5.2.1 and 5.2.2.
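Figures such as “five users find 80% of problems” are commonly derived from a simple problem-discovery model, in which the expected share of problems found by n users is 1 − (1 − p)^n, where p is the probability that a single user encounters a given problem. The sketch below assumes this model and the often-quoted average of p = 0.31; both are illustrative assumptions rather than values taken from the studies cited here, and p varies considerably between systems and tasks.

```python
import math


def proportion_found(p: float, n: int) -> float:
    """Expected share of usability problems found by n users.

    Assumes (hypothetically) that every problem is detected independently
    by each user with the same probability p.
    """
    return 1.0 - (1.0 - p) ** n


def users_needed(p: float, target: float) -> int:
    """Smallest n such that proportion_found(p, n) >= target."""
    # Solve 1 - (1 - p)^n >= target  =>  n >= log(1 - target) / log(1 - p)
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p))


if __name__ == "__main__":
    p = 0.31  # assumed average per-user detection probability
    for n in (1, 3, 5, 10):
        print(f"{n:2d} users -> {proportion_found(p, n):.0%} of problems")
    print("users needed for 80%:", users_needed(p, 0.80))
```

The independence and constant-p assumptions are strong: problems that only some user groups encounter have a much lower effective p, so five users drawn from a single group may miss them entirely, which is one way to read the concern about small, non-diverse samples raised above.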

5.2.1. “Correct” sample size selection

Classically, the “correct” way to decide on a sample size depends heavily on the research question and the type of data being collected (Lazar et al., 2017; Redmiles et al., 2017). For example, qualitative studies that take ground-up approaches use the concept of “saturation” (Guest et al., 2006): data is collected until the uncovered insights start saturating, i.e., until increasing the sample size no longer reveals additional insights. Saturation is an interesting approach because the sample size is decided while the research is ongoing rather than up front, which makes it difficult to know at the start how many participants will be needed. Quantitative studies that involve statistical testing take a very different approach: the number of participants is calculated up front through a power analysis, which uses information such as the expected effect size and variance to estimate how many participants are required to detect an effect with statistical significance (Field & Hole, 2002; Yatani, 2016). However, this often clashes with the realities of finding users and conducting experiments with them.
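To make the up-front calculation concrete, the sketch below estimates the per-group sample size for comparing two independent groups (e.g., two hypothetical authentication schemes) with a two-sided test, using the normal approximation n = 2(z₁₋α/₂ + z₁₋β)² / d², where d is the standardized effect size (Cohen’s d). The choice of test, the normal approximation, and the example effect sizes are assumptions for illustration only; power-analysis tools based on the t distribution typically report a slightly larger n.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants per group for a two-sided, two-sample
    comparison of means (normal approximation to the t-test)."""
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for a two-sided alpha
    z_beta = z.inv_cdf(power)           # quantile matching the desired power
    n = 2 * (z_alpha + z_beta) ** 2 / effect_size ** 2
    return math.ceil(n)                 # round up to whole participants


if __name__ == "__main__":
    for d in (0.2, 0.5, 0.8):  # conventional small, medium, and large effects
        print(f"d = {d}: about {n_per_group(d)} participants per group")
```

Under these assumptions, even a medium effect (d = 0.5) calls for roughly 63 participants per group, well over a hundred in total, which illustrates the clash with recruitment realities described above.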

Redmiles et al. (2017) emphasized the importance of different sampling methods in different research contexts and the need to rely on some form of convenience sampling because of, for example, time and cost considerations. Sample composition is also an issue, since it is not necessarily easy to get time with groups such as security engineers, penetration testers, and chief security officers. Yet targeting such populations, even with small samples, may be the most appropriate way to answer a specific research question (Redmiles et al., 2017). This tension harks back to Nielsen’s (1994) early attempts to find valid ways of conducting usability tests in low-budget environments, such as universities. Approaches like Think Aloud (Nielsen, 1994) and Delphi (Loo, 2002) studies were specifically designed to extract as much usability data as possible from small samples. USEC research has some unique properties that make these approaches challenging to apply to prototype testing, namely that security is often a secondary task (Cranor & Garfinkel, 2005; Garfinkel & Lipford, 2014) in which users’ main goal is likely something other than the prototype’s security function. Since many of the “discount” usability approaches have the user engage with the tested system as a primary task, they are difficult to adapt fully to USEC (Kainda et al., 2010).

5.2.2. “Realities” of sample size selections