Abstract

Despite recent efforts to make AI systems more transparent, a general lack of trust in such systems still discourages people and organizations from using or adopting them. In this article, we first present our approach for evaluating the trustworthiness of AI solutions from the perspectives of end-user explainability and normative ethics. Then, we illustrate its adoption through a case study involving an AI recommendation system used in a real business setting. The results show that our proposed approach allows for the identification of a wide range of practical issues related to AI systems and further supports the formulation of improvement opportunities and generalized design principles. By linking these identified opportunities to ethical considerations, the overall results show that our approach can support the design and development of trustworthy AI solutions and ethically-aligned business improvement.

1. Introduction

AI pervades every aspect of our lives, but people and organizations are concerned about trusting and adopting AI systems. This might be due to the “opacity” of current AI algorithms, that is, the fact that they are not able to explain their inner operations or reasoning to users (Adadi & Berrada, 2018).

Efforts to make AI systems more transparent have led to the study of eXplainable AI (XAI) (Adadi & Berrada, 2018; Gunning et al., 2019). This has also caught the attention of the human-computer interaction (HCI) community (Abdul et al., 2018), which has argued that a human-centered approach to AI is needed to develop reliable, safe, and trustworthy AI applications (Shneiderman, 2020). In particular, recent research has shown that explainability can influence user trust and thus the subsequent adoption of AI systems (Shin, 2021).

However, little is known about how people interpret such explanations and understand their causal relationships (Barredo Arrieta et al., 2020; Chou et al., 2022), a construct referred to in the literature as causability (Holzinger et al., 2019), or, more generally, about which factors are involved in the establishment of trust between humans and AI (Gillath et al., 2021; Jacovi et al., 2021). Understanding causal relations is considered important because it can promote a higher degree of interpretability of AI explanations (Chou et al., 2022). This, in turn, could better support people in understanding the AI reasoning process, thus increasing the trustworthiness of AI systems, and allowing them to anticipate the behavior of such systems, hence supporting the building of warranted trust toward AI (Jacovi et al., 2021).

However, explanations are only one mechanism that can be used to build trust in AI solutions. People might trust actors or their claims without any explanation or even if contradictory evidence exists. Human trust is a much broader issue than understanding the logical reasoning behind a claim. Trust is a multidimensional contextual phenomenon in which people prioritize different issues according to their situational desires and opportunities. Contextual factors are considered to be critical for evaluating AI systems (van Berkel et al., 2022).

Therefore, we turned to normative ethics, which includes three branches of ethical thinking that could be used to widen the perspectives of trust: utilitarian, deontological, and virtue ethical (Dignum, 2019). These alternative perspectives are important because they can help reveal the different factors that shape how people think in their context. Normative ethics also provides a strong theoretical foundation that guides us to investigate the situation from fundamentally complementary and sometimes conflicting perspectives.

The aim of our research is to investigate how people understand AI decisions from a causability point of view, as well as how they make sense of the interaction situation and context from three perspectives based on normative ethics. We hypothesize that this type of holistic investigation would help us identify issues that cause distrust or would otherwise be desirable for end users to improve the trustworthiness of the AI solution at hand. Such insights could support designers in identifying improvement opportunities and formulating design principles that can increase the trustworthiness of the considered systems.

For this purpose, we developed a set of interview questions based on the System Causability Scale (Holzinger et al., 2020) and formulated three additional sets of questions to gain insight into how people understand and experience AI decisions as part of a wider socio-technical context. The questions are open-ended to foster richer user feedback and aim to assess (i) whether users understood the reasons behind an AI result, such as a prediction or recommendation; (ii) whether the AI results were informative enough for them to make a decision followed by an action; and (iii) what users’ considerations regarding utilitarian, deontological, and virtue ethical aspects of the AI solution in their socio-technical context were.

In this article, we first outline the rationale behind the creation of our assessment method. Then, we illustrate through a case study how it can be used in the design and development of trustworthy AI solutions in a real business context and present the lessons we learned and our reflections.

For the empirical case study, we turned to the AI solution we use in our organization to support consultants’ allocation management tasks (Seeker) and interviewed 10 people with different roles using the sets of questions we developed. Seeker was chosen as a case solution because it is a seemingly simple AI solution that supports our organization’s core operation and value proposition, that is, searching for consultants for customers’ requests and allocating them to agreed customer assignments. In a wider context, its recommendations could affect company business performance as well as consultants’ careers, similar to recent AI technologies that support personnel recruitment and selection processes. Given the impact that such AI systems can have on business models and operations, as well as people’s lives and careers, their ethicality needs to be comprehensively understood (Hunkenschroer & Luetge, 2022). In fact, such systems are ranked as high-risk applications in the proposed European Commission AI Regulation (European Commission, 2021b).

The remainder of the article is organized as follows. Section 2 introduces the concepts of human-AI trust and trustworthy AI, XAI, causability, and normative ethics and then reviews related work. Section 3 presents our approach to a trustworthy AI and the case study we carried out. Section 4 describes the case study findings, which are discussed in Section 5. Finally, Sections 6 and 7 present conclusions, limitations, and future work.

2. Related work

2.1. Trust and trustworthiness in Artificial Intelligence

In this article, we consider human-AI trust as a contractual phenomenon, as formalized by Jacovi et al. (2021). From this perspective, trust means that a trustor believes that a trustee will adhere to a specific contract that should be made explicit and verifiable. Examples of contracts are the principles, requirements, or functionalities that AI systems aim to implement and offer.

According to the authors’ definitions, an AI system is trustworthy to a contract if it is capable of maintaining it. Human trust toward AI then occurs when people perceive that the system is trustworthy to a predefined contract and accept vulnerability to its actions; that is, they can anticipate system behavior in the presence of uncertainties. To increase human trust, contracts can be communicated to people through cognitive or affective means such as reasoning or emotional aspects (Gillath et al., 2021). In any case, if trust is caused by trustworthiness, it is considered warranted. Conversely, if people perceive that an AI system is not trustworthy to a contract and do not accept vulnerability to the system’s actions, distrust occurs.
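The definitions above can be read as a small decision rule. The following Python sketch is only an illustration under stated assumptions: the class and function names are hypothetical, the three boolean fields are a simplification introduced here, and "trust caused by trustworthiness" is approximated by simple agreement between a person's trust and the system's actual ability to maintain the contract. Combinations that the definitions do not cover are returned as "undetermined", and the label "unwarranted trust" is used here merely as the complement of warranted trust.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrustObservation:
    """One person's stance toward one contract of one AI system (hypothetical model)."""
    maintains_contract: bool      # the system is actually capable of maintaining the contract
    perceived_trustworthy: bool   # the person perceives the system as trustworthy to the contract
    accepts_vulnerability: bool   # the person accepts vulnerability to the system's actions


def classify(obs: TrustObservation) -> str:
    """Label an observation according to the simplified reading described above."""
    if obs.perceived_trustworthy and obs.accepts_vulnerability:
        # Trust occurs; it counts as warranted only if the system really maintains the contract.
        return "warranted trust" if obs.maintains_contract else "unwarranted trust"
    if not obs.perceived_trustworthy and not obs.accepts_vulnerability:
        return "distrust"
    # Mixed cases are not covered by the definitions summarized in the text.
    return "undetermined"


if __name__ == "__main__":
    print(classify(TrustObservation(True, True, True)))
    print(classify(TrustObservation(True, False, False)))
```

Keeping the contract-level facts separate from the person's perception makes it explicit that trustworthiness is a property of the system, whereas trust is a property of the person.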

In line with these definitions, we assume that an AI system becomes more trustworthy the more it embodies a wide variety of contracts and is capable of maintaining them. Thus, the trustworthiness of an AI system increases when additional contracts are made explicit and verifiable, as this can guarantee that its decisions follow what the contracts claim to be.

In addition, since trust and trustworthiness are multi-perspective dilemmas, including contradictory valuations and prerequisites, we also assume that contracts should be agreed upon transparently from many alternative perspectives. The contracts people consider more important can and will differ between the contexts of use and individuals’ situated preferences.

2.2. Explainability, causability, and user trust

Despite the increasing popularity of concepts such as XAI or explainability, common and agreed upon definitions seem to be lacking in the literature. In this article, we consider those proposed by Barredo Arrieta et al. (2020), who refer to explainability as “the details and reasons a model gives to make its functioning clear or easy to understand” and define an explainable Artificial Intelligence as “the one that produces details or reasons to make its functioning clear or easy to understand.” In other words, explainability and XAI refer to approaches aimed at making AI systems more transparent or less opaque.

However, explainability and XAI can be viewed as properties of an AI system (Holzinger et al., 2020; Holzinger & Müller, 2021), and more evidence is needed to investigate how people interpret AI explanations and understand their causal relationships (Barredo Arrieta et al., 2020). In particular, Holzinger et al. (2019) referred to this construct as causability and defined it as “the extent to which an explanation of a statement to a user achieves a specified level of causal understanding with effectiveness, efficiency, and satisfaction in a specified context of use.” Thus, causability is seen as a property of the user (Holzinger et al., 2020; Holzinger & Müller, 2021) and is considered critical for achieving a certain degree of human-understandable explanations (Chou et al., 2022). Interestingly, recent studies have shown that it positively influences explainability and contributes in tandem to the building of user trust toward AI systems (Shin, 2021).

In line with the definitions of human-AI trust outlined in the previous section, causability techniques that promote a higher level of interpretability to people can (i) increase the trustworthiness of AI by revealing the relevant signals in the AI reasoning process, (ii) increase the trust of the user in a trustworthy AI by allowing them easier access to the signals that enable them to anticipate behavior in the presence of risk, and conversely, (iii) increase the distrust of the user in a non-trustworthy AI by allowing them to anticipate when a desired behavior does not occur, all corresponding to a stated contract (Jacovi et al., 2021). Thus, it is necessary to include XAI techniques in AI solutions to support the adoption of these systems by people and organizations (for a recent review of studies that investigated the relationship between XAI and user trust, see, for example, Mohseni et al., 2021). However, very few studies have specifically investigated the effect of causability on explainability (Barredo Arrieta et al., 2020); thus, more effort is needed to understand its role in building user trust.

2.3. Philosophical aspects of trust and trustworthiness

Trust is an important concept for AI systems because it is widely accepted that people and organizations more readily cooperate with, use, and adopt systems that they trust. Therefore, trust is one of the most common foundations for ethical AI frameworks, such as those proposed by public organizations or private companies such as Deloitte, EU, EY, IBM, KPMG, and OECD (Deloitte, 2021; European Commission, 2019; EY, 2021; Gillespie et al., 2020; IBM, 2021; OECD, 2021).

It is important to ensure that when people and organizations decide to trust AI systems, the results are anticipated and beneficial rather than unexpected and undesirable. Trust is valuable only when placed in trustworthy agents and activities but damaging or costly when (mis)placed in untrustworthy agents and activities (O’Neill, 2018). In philosophical studies, normative ethics investigates how one ought to act in a moral sense. Considering this, we deduced that normative ethics could be used to guide our investigations of how AI systems ought to act to convince people of their trustworthiness. In normative ethics, philosophers have recognized three classical schools of ethical argumentation, or ways of thinking, i.e., utilitarian, deontological, and virtue ethical (Dignum, 2019). Therefore, we selected these frameworks to guide us in capturing the different factors that affect trust toward AI systems as well as potential mechanisms to improve their trustworthiness. By considering only one of these perspectives, one might miss important contextual details about issues that should be made transparent, verifiable, and explained to end users as well as other relevant stakeholders. Similar arguments have also been promoted by other researchers, such as van Berkel et al. (2022), who emphasize the importance of recognizing contextual factors under which an AI system is assessed and deployed.

The first of these three perspectives, utilitarian reasoning, is concerned with consequences. Doing something, such as following an AI system’s investment recommendations, will result in positive consequences, even better than following recommendations made by human analysts. Deontological thinking argues that people cannot judge everything based on its consequences. There are more fundamental issues such as rules regarding rights and responsibilities. Human life can be considered an absolute right, not something to be traded for useful goods or services. Finally, the third way of thinking, virtue ethical, argues that rights and responsibilities are not enough and that they might even be morally wrong. Higher principles should be followed, even when not required. Thus, people should be honest or generous, even when they are not forced to be.

Thus, it is important to consider these three perspectives when investigating the factors that can contribute to users’ trust toward AI. They provide alternative perspectives and conflicting arguments on how people understand AI, its decisions, and the surrounding socio-technical context.

2.4. XAI design and evaluation frameworks

Owing to the need for increased transparency in AI systems, the HCI community has started to propose frameworks and guidelines to include XAI aspects in the design, development, and evaluation of such systems.

In particular, some frameworks have outlined a step-by-step approach to achieving such goals. For example, Eiband et al. (2018) proposed a 5-stage participatory design process for designing transparent user interfaces that considers what to explain in the system (the first three stages) and how to explain it (the last two stages). For each stage, the framework indicates important questions that need to be addressed, types of stakeholders that need to be involved, and design methods that can be used. The authors evaluated the framework using a commercial intelligent fitness coach application. The results indicated that the framework was a valid method for addressing the transparency-related challenges of the app, improved users’ mental models of it, and met the needs of the different stakeholders involved. However, although the case study investigated some aspects related to causability, the study does not specify any evaluation method that can be used to assess such aspects with end users.

Similarly, Mohseni et al. (2021) defined a nested framework for the design and evaluation of XAI systems considering their algorithmic and human-related aspects. Application of the framework starts from the outer layer, which focuses on the overall system goals; proceeds through the middle layer, which addresses end-user needs for explainability; and ends in the innermost layer, which focuses on designing algorithms that generate explanations for users. For each layer, the model outlines the design goals and choices that need to be addressed and presents the appropriate evaluation methods and measures to be used. The use of the nested framework is illustrated through a case study in a research setting involving the design of an XAI system for fake news detection, summarized with a series of generic design guidelines for multidisciplinary XAI system design. Although the framework outlines evaluation methods to explore users’ understanding of and satisfaction with explanations, the case study does not explicitly indicate what questions have been employed for this purpose.

Liao et al. (2021) instead proposed a four-step design process based on a question bank they previously developed (Liao et al., 2020), which aimed to investigate explainability needs as questions that users might ask (XAI Question Bank). The first two steps of the proposed framework aim to investigate the needs and requirements for integrating XAI into the user experience, whereas the last two steps focus on engaging designers and AI engineers in the collaborative problem solving and development of XAI technology and design artifacts. For each step, the framework outlines the goals to be addressed and the task to be performed, together with the suggestion of the main stakeholders to be included and a list of additional resources or supplementary guidance if a product team faces difficulty in executing it.

The article also presents a series of XAI techniques grouped by the category of questions that users might ask when presented with an AI decision or result based on their XAI Question Bank. The use of the four-step framework is illustrated with a case study including XAI in an AI system that predicts patients’ risk of adverse effects in healthcare. The results indicate that the framework was an effective method for including explainability aspects into a design prototype, from the identification of users’ XAI needs to the definition of XAI requirements. Moreover, it was accepted by different stakeholders involved and, in particular, supported collaboration between designers and AI engineers. Interestingly, the case study illustrates how participants developed questions that considered causability or utilitarian aspects. However, the framework seems more focused on eliciting explainability needs than on investigating such aspects of people’s experiences.

Finally, Schoonderwoerd et al. (2021) proposed a three-step design approach for a human-centered XAI design (Do-Re-Mi). The first step (domain analysis) aims to investigate the context in which a system will be introduced and the need for explanation. The second step (requirements elicitation and assessment) focuses on eliciting requirements for explanations from end users and identifying potential contextual dependencies. Finally, the third step (multimodal interaction design and evaluation) aims to generate design solutions to satisfy the elicited requirements for explanations and generalizes the solutions to XAI interaction design patterns. This step also involves the evaluation of such patterns with the end users. The application of the framework was evaluated with a case study aimed at designing explanations for a clinical decision support system (CDSS) for child health involving pediatricians. The results indicated that the method supported the identification of explanation needs from participants and the creation of reusable design patterns. Such patterns proved to be effective in satisfying participants’ needs, considering the information included in the explanations. Interestingly, to evaluate these patterns, the authors employed a quantitative questionnaire that included three questions aimed at assessing explanation satisfaction in users (taken from Hoffman et al., 2018), thus considering some aspects related to causability.

Other frameworks do not propose a step-by-step approach but are instead based on (i) human-reasoning theories (Wang et al., 2019), (ii) the AI lifecycle (Dhanorkar et al., 2021), or (iii) the target of explanations (Ribera & Lapedriza, 2019). The framework proposed by Wang et al. (2019) describes how people reason rationally or heuristically but are subject to cognitive biases. It also indicates how different XAI techniques can support specific rational reasoning processes or target decision-making errors. The framework was evaluated through co-design exercises aimed at designing and implementing an explainable clinical diagnostic tool for intensive care settings. The results highlight the usefulness of the framework for understanding how different XAI techniques can be used to support human explanation needs and reasoning processes and mitigate possible cognitive biases. The framework addresses the theory behind people’s need for causal explanations and how XAI can support them. However, there is no clear indication as to how these aspects can be evaluated.

Dhanorkar et al. (2021) proposed using the AI lifecycle as a design metaphor. Their framework is the result of in-depth interviews with individuals working on AI projects related to text data at a large technology and consulting company, aimed at understanding interpretability concerns that arise in such contexts. The framework is organized into three steps, and for each step, it outlines the different stakeholders that should be involved and the explainability needs that they might have. It also outlines the general considerations that should be taken into account throughout the model lifecycle. However, the use of this framework has not yet been evaluated.

Similarly, Ribera and Lapedriza (2019) focused their framework on different AI user profiles. For each profile, it outlines goals and needs for explanations, the type and content of explanations, and evaluation methods. The framework is the result of a literature review on XAI, but it has not been evaluated.

Finally, other researchers proposed generic guidelines (Amershi et al., 2019) or scenarios for possible use (Wolf, 2019) in the design of XAI systems.

In addition to these approaches, a significant number of guidelines, principles, and toolkits have been published globally to develop and assess the trustworthiness of AI systems (see Crockett et al., 2021, for a recent review). A notable example is the Z-Inspection method (Zicari et al., 2021), which is based on the European Commission framework for trustworthy AI (European Commission, 2019) and aims to assess the trustworthiness of AI systems in practice and produce improvement recommendations. This method consists of three main phases. The first phase (set up) aims to investigate the context in which an AI system is deployed, set up the team of stakeholders, and define the boundaries of the assessment. The second phase (assess) focuses on evaluating the AI system in its socio-technical context, identifying possible ethical issues, and mapping them to trustworthy AI requirements. Finally, the third phase (resolve) aims to address the emerging ethical, technical, and legal issues and produce recommendations for improving the design of AI. The use of this method has been illustrated with a case study to evaluate a noninvasive AI medical device designed to assist medical doctors in the diagnosis of cardiovascular diseases. In particular, the use case describes the path from the identification of ethical issues to their alignment with the European Commission guidelines for trustworthy AI (European Commission, 2019, 2020) and the creation of recommendations. The Z-Inspection method is thus interesting in the context of our research because it considers the actors and the context in which a system is deployed, is based on normative ethics aspects, and aims to produce recommendations for improvement. However, it does not explicitly focus on aspects related to causability.

In summary, several approaches have been proposed in the HCI literature to support the inclusion of XAI aspects in the design, development, and evaluation of AI systems. However, to the best of our knowledge, none of these studies have considered aspects related to causability together with normative ethical factors in a wider context. In addition, although many principles and guidelines have been proposed, there seems to be a gap in their application in turning ethical considerations into practical business improvements.

3. Our approach to trustworthy AI

In this section, we first present the rationale behind our approach for incorporating XAI aspects into the design and development of a trustworthy AI solution. Then, we describe the case study we conducted to evaluate how our method can be used in a real business context.

3.1. Research objectives

The goal of our research is to develop a theoretically grounded and industrially relevant method that can be used to improve the trustworthiness of AI systems in a real business setting. Specifically, we have two main objectives based on our ethical point of view and pragmatic requirements.

First, the method should help explore and recognize situational details and stakeholder views of a specific decision support or decision-making situation. This is in contrast to many other methods, which aim to introduce and externally enforce a predefined set of principles, requirements, and metrics to AI systems and their use (see, for example, Crockett et al., 2021, for a review of available toolkits to include ethical aspects in AI systems). Our empirical assessment method aims to understand situational reasoning and contextual issues as openly as possible.

Second, the design approach should help derive pragmatic improvement opportunities that can be inserted into a system development backlog, implemented in organizational reality, and generalized to a shared design pattern library. Therefore, our research follows a constructive approach. Such approaches are iterative scientific methodologies, such as design science research, which aim to produce artifacts that solve theoretically and practically relevant problems (Hevner et al., 2004). According to design science research, artifacts can be constructs, models, methods, and instantiations (March & Smith, 1995), and we aim towards a generally applicable method.

The constructive design journey presented in this article illustrates how our practical method can turn empirical perceptions into pragmatic improvement opportunities, which we further generalized into design principles. Given the different perspectives considered by our approach, we hypothesize that the implementation of these improvement opportunities and design principles can improve the trustworthiness of AI systems and thus support the building of user trust toward them.

3.2. Conceptual framework and assessment method development

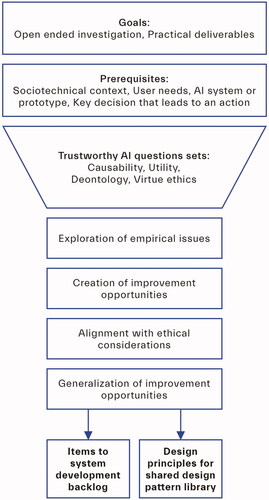

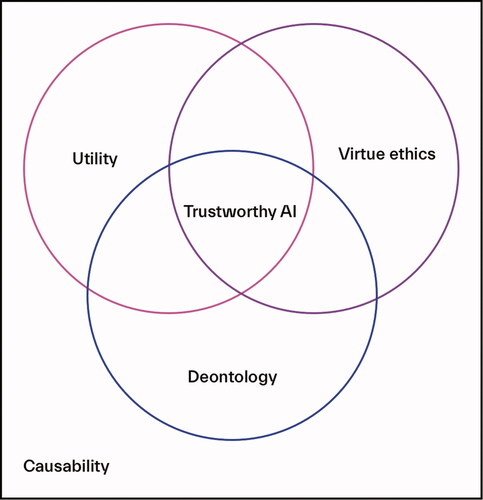

As our literature review points out, more evidence is needed to increase the transparency of AI systems and to understand the factors that contribute to users’ trust toward them. Thus, we consider transparency as the main component of our conceptual framework to achieve trustworthy AI. For this reason, we focused on causability, considering its effects on explainability, and subsequently on user trust in individual decisions. In addition, to gain a wider understanding of issues that might affect users’ trust in an AI system and factors that could improve its trustworthiness, we wanted to extend our investigation to how people reason during their interactions with AI. For this purpose, our conceptual framework for trustworthy AI includes three alternative perspectives of normative ethics: utilitarian, deontological, and virtue ethical (see Figure 1).

Figure 1. Our conceptual framework to achieve trustworthy AI. In our view, trustworthiness can and should be built from different perspectives on how AI ought to work. These perspectives should consider how people understand a system’s decision (Causability), and perceive the system’s (i) consequences (Utility), (ii) compliance with rights and responsibilities (Deontology), and (iii) alignment with values (Virtue ethics).

The reason for considering these three perspectives is that trusting something is context dependent. Trustworthiness is a complex combination of complementary and contradictory factors. People and organizations recognize, reason about, and prioritize individual factors differently, and they even change their priorities in diverse situations. For example, some people trust only what they can see and feel, while others might trust their preferred authorities and even their unsubstantiated claims. Similarly, some prioritize potential benefits and are willing to take risks, while others are attracted to long-term risk-avoidance. Each person or organization may have a unique path of reasoning based on their personal and organizational perspectives and ways of thinking.

Consequently, our assessment method, based on our conceptual framework, comprises four parts: (i) causability, (ii) utility, (iii) deontology, and (iv) virtue ethics. The following sections describe these components.

3.2.1. Causability

For causability, we took inspiration from the System Causability Scale (Holzinger et al., 2020). The scale comprises 10 items and aims to quickly determine whether and to what extent an explainable user interface, explanation, or explanation process is suitable for the intended purpose. We turned the scale items into an open-ended format to allow for a deeper investigation of user causability needs and to be used in a qualitative and semi-structured setting. We then added one further question to serve as a holistic final question. In summary, the resulting set of questions comprises 11 items and aims to understand (i) whether users understood the reasons behind an AI result, such as a prediction or recommendation, and (ii) whether the AI results were sufficiently informative for them to make a decision followed by an action. See Appendix A for the questions related to causability.
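As an illustration of how such a question set could be organized for a semi-structured interview, the following Python sketch represents the causability component as 10 adapted items plus one added holistic question. It is only a sketch under stated assumptions: the type names, field names, and placeholder strings are hypothetical and introduced here for illustration, and the actual question wording is the one given in Appendix A, not reproduced in the code.

```python
from dataclasses import dataclass
from enum import Enum


class Component(Enum):
    """The four parts of the assessment method named in the text."""
    CAUSABILITY = "causability"
    UTILITY = "utility"
    DEONTOLOGY = "deontology"
    VIRTUE_ETHICS = "virtue ethics"


@dataclass(frozen=True)
class Question:
    component: Component
    text: str     # open-ended wording (placeholder strings in this sketch)
    origin: str   # where the item comes from


def build_causability_set(adapted_items: list[str], holistic_item: str) -> list[Question]:
    """Combine the 10 adapted scale items with the added holistic final question."""
    if len(adapted_items) != 10:
        raise ValueError("expected the 10 items adapted from the System Causability Scale")
    questions = [
        Question(Component.CAUSABILITY, text, "adapted from the System Causability Scale")
        for text in adapted_items
    ]
    # The holistic question is asked last, closing the causability part of the interview.
    questions.append(Question(Component.CAUSABILITY, holistic_item, "added holistic question"))
    return questions


if __name__ == "__main__":
    placeholders = [f"<open-ended item {i}>" for i in range(1, 11)]  # not the real wording
    guide = build_causability_set(placeholders, "<holistic final question>")
    print(len(guide), guide[-1].origin)
```

Tagging each question with its component and origin would make it straightforward to group interview answers by perspective during analysis.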

For the utility, deontology, and virtue ethics factors, the initial aim of our questions was to investigate the short-term and immediate context of the main actors involved with an AI system and the activities they perform with it. We then expanded the focus of our questions to include the long-term and wider context of the business process supported by the AI system, including its relationship to related organizational aspects (e.g., a company business model) and alignment with the broader goals of the main actors (e.g., their career path or performance achievements).

Subsequently, we reviewed existing scales that aim to assess human trust in automation, as presented by Hoffman et al. (2018), to ensure that our questions align with aspects considered by previous studies in the literature.

Thus, the resulting questions are a combination of our conceptual framework and relevant items from existing scales that can match each normative perspective. During the formulation of the final questions, we also aimed to simplify the concepts and terms to ensure easier understanding by the participants and spark conversations around such topics.

In the following, we describe each component in detail.

3.2.2. Utility

As described in Section 2.3, utilitarian reasoning is concerned with consequences. For example, a utilitarian argument would hold that doing something, such as following an AI system’s investment recommendations, is justified because it will result in positive consequences, perhaps even better ones than following recommendations made by human analysts.

For this component, we started from our conceptual framework and took inspiration from the items in the Adams et al. (Citation2003) and Cahour and Forzy (Citation2009) scales presented by Hoffman et al. (Citation2018), which ask people about their perception of an XAI system in terms of predictability, reliability, safety, and efficiency; that is, aspects related to the consequences of using an AI system.

We then merged our initial open-ended questions with these items to investigate people’s perceptions of short- and long-term desirable consequences, possible challenges, and their reasons, considering the main stakeholders that can be affected by the system, their activities, and the overall socio-technical context. To frame participants’ mindsets, the first question explicitly asks them to indicate who they think would benefit from using such an AI system.

3.2.3. Deontology

As outlined in Section 2.3, deontological thinking argues that people cannot judge everything through its consequences. There are more fundamental issues such as rules regarding rights and responsibilities. Human life can be considered an absolute right, not something to be traded for useful goods or services.

For the deontology component, we started from our conceptual framework and took inspiration from the items in the “Faith” factor of the Madsen and Gregor (Citation2000) scale and in the Merritt (Citation2011) scale presented by Hoffman et al. (Citation2018), which ask people about their trust and confidence toward an AI system, its performance, and output.

In particular, we merged these items with our open-ended questions and broadened the focus of the confidence aspect to assess whether participants (i) are aware of the laws and policies that regulate an AI system and the process it aims to support, (ii) know who the main actors involved are and how they perceive these actors’ compliance with laws and policies, and (iii) trust the AI system’s outcomes.

3.2.4. Virtue ethics

As described in Section 2.3, the virtue ethics perspective argues that rights and responsibilities are insufficient, and they might even be morally wrong. Higher principles should be followed, even when not required. Thus, people should be honest or generous, even when they are not forced to be.

For this component, we started from our conceptual framework and took inspiration from those items in the “Personal Attachment” factor of the Madsen and Gregor (Citation2000) scale, as presented by Hoffman et al. (Citation2018), that investigate people’s alignment with and perception of using an AI system for decision-making.

We then merged our initial open-ended questions with these items and expanded their focus to assess users’ ethical perceptions of the outputs of an AI system, their decisions based on it, and the overall organizational process that it supports. We also investigated users’ perceptions of the alignment of these aspects with an organization’s stated values.

The resulting set of 15 questions for the utility, deontology, and virtue ethics aspects is presented in Appendix B.

In summary, the goal of our research is to develop a method for exploring decision-making situations from alternative perspectives based on our conceptual framework to achieve trustworthy AI. Prerequisites for its usage are a real decision-making situation involving (i) a socio-technical context, (ii) a real need/goal, (iii) an existing AI service, solution, or prototype, and (iv) a given explanation, decision, or recommendation that leads to an action. The application of this method involves the following steps.

1. Exploration of the empirical findings from the interviews;

2. Creation of improvement opportunities from the findings to be used as backlog items for an AI system or concept development iterations;

3. Alignment of the improvement opportunities to ethical considerations in wider business and systems development contexts to ethically improve business operations;

4. Generalization of these opportunities into design principles that would be relevant for other AI systems and concepts, forming the company asset for trustworthy AI best practices.

illustrates the core components and steps of our assessment method.

3.3. Case study

In this section, we describe the case study conducted to evaluate how our approach can be used in the design and development of trustworthy AI solutions.

3.3.1. Business context

Our organization, Siili Solutions Oyj, is a digital consulting agency based in Finland, with offices in seven European countries, serving customers that operate globally. The services offered range across the full solution development lifecycle, including digital strategies, experience designs, solution deliveries, and managed services. Founded in 2005, it currently comprises more than 750 employees organized into different business units and functions. Consultants are organized into tribes, that is, competence-based groups, such as data engineering or user experience design, which are in turn grouped into wider competence units, such as digital experience or creative technology.

In this study, we focus on an AI system that supports the consultant allocation process. In practice, this process is a continuous collaboration between sales and staffing activities performed by sales managers and consultants’ supervisors (in the following, tribal leads). This is the core activity of any digital consultancy agency founded to serve customers by matching customer needs with available competent consultants. As pointed out in the Introduction, our AI tool could affect consultants’ careers, similar to recent AI technology that supports personnel recruiting and selection processes. Given the impact of these AI systems on people’s lives and careers, their ethicality needs to be comprehensively understood (Hunkenschroer & Luetge, Citation2022).

3.3.2. Considered systems

To support Siili’s sales processes, and more specifically consultants’ allocation management tasks, Siili has developed a tool called Seeker. Technically, it is an algorithmic decision-support system that, according to the European Commission’s definition of AI (European Commission, Citation2021b), can be classified as an AI system. Seeker allows consultants’ supervisors and sales managers to (i) find available consultants based on, for example, their skills, experience, or competence area, (ii) track the history of their project allocations, and (iii) reserve or allocate them to projects.

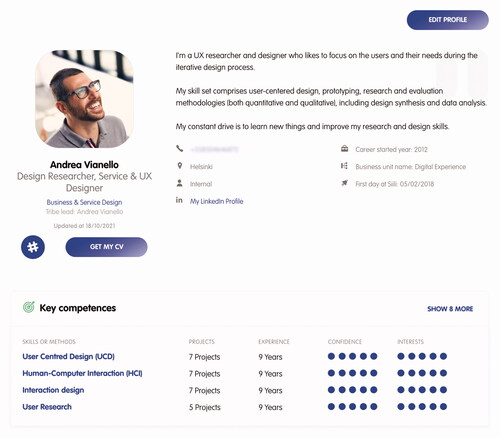

Seeker is an application built on top of a competence management system (CMS), also developed by our organization, called KnoMe (Niemi & Laine, Citation2016). The system supports several business processes: sales operations can use it to search for the competences needed for customer assignments, human resources development operations can use it when evaluating and managing competence needs at different organizational levels, and recruitment operations can use it in the talent attraction process.

The foundational KnoMe platform and Siili Solutions service vision were inspired by the hedgehog concept (Collins, Citation2001), meaning that a firm aspiring to rise to excellence should aim at the focal point among (i) what it can be the best at in the world, that is, competence, (ii) what can best drive its economics, that is, customer demand, and (iii) what most interests its employees, that is, passion. In this way, the ethical perspective, especially due to the passion principle, has been a part of the company’s service vision and internal software development practices since long before our current study.

The most essential KnoMe functionalities are (i) inputting and editing competence data, (ii) searching for individuals or competences, and (iii) visualizing data on different levels. The system contains data on people, organization units, customer and partner organizations, and projects. This master data can be supplemented by different types of competence data, including personal details, personal descriptions, certificates, trainings, and most importantly, methodology and technological skills.

To emphasize the ethical dimension of consulting and Siili Solutions’ company values, the system was developed to highlight the principle of passion, one of the three principles of the hedgehog concept, in a unique way. KnoMe lets consultants rate not only their confidence in their skills but also their interest in using and developing each skill on a scale from 0 to 5. Considering the confidence aspect, the available ratings range from 0, i.e., “I know what this is, but I don’t know how to use it” to 5, i.e., “I am a commonly recognized expert on this.” For the interest aspect, the ratings range from 0, i.e., “I don’t want to touch this,” to 5, i.e., “I would love to work with this and develop my skills”—see for an example KnoMe profile.

3.3.3. Business process

The allocation process consists of three phases: searching, selecting, and allocating consultants. Currently, Seeker supports human decision-making rather than automating any part of the process. In practice, each phase requires significant holistic understanding and many human tasks, for several reasons. For example, during the search phase, customers may not be able to describe their needs precisely, and the competence data in KnoMe may not perfectly reflect reality. Selecting consultants is a complex problem that must be discussed by sales managers, tribal leads, and consultants to ensure that customer needs, consultants’ wishes, and company operations align to increase the success rate of the allocation choice. Finally, an allocation task is always a significant business decision that requires human accountability and decision-making. Such a decision can have a significant financial impact on consultants’ salaries and work satisfaction, Siili Solutions’ financial revenue and brand value, and customers’ operations and service satisfaction.

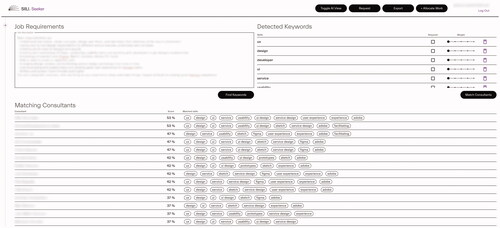

In a typical Seeker use case, users can access the “AI View” (Figure 4), where they can enter the text description of a customer’s need for a task, project, and/or service. After pressing the “Find keywords” button, Seeker extracts the skill keywords from the entered text and compares them to the consultants’ skills and experience taken from their KnoMe profiles. Alternatively, the required skills, weights, and relevant parameters can be entered manually. Users can then press the “Match consultants” button, and as a result, Seeker returns a ranked list of consultants, including a score and a list of matching skills as keywords.

Figure 4. Siili Seeker “AI View.” Users can enter a description of a customer need in the top-left text area. The Seeker detected keywords are displayed in the top-right, while the list of matching consultants is shown in the bottom part of the screen.

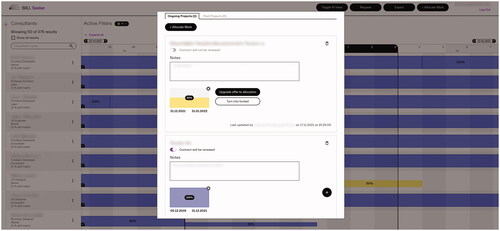

Users can then switch to the “Regular View” to determine the allocation status of these matching consultants, which includes past and current project allocations. The status can also indicate (i) reservations for future projects, that is, reflecting the situation in which a consultant has been offered to a customer who has not yet accepted the proposal, and (ii) possible notes, for example, indicating the end date of a project or when a reservation will expire (see Figure 5). Finally, when users find a suitable candidate, they can update the consultant’s status by reserving or allocating the consultant to a customer project (Figure 6).

Figure 5. Siili Seeker “Regular view.” The screen displays the allocation status of matching consultants. The blue color indicates a project allocation.

Figure 6. Detailed view of a consultant, in which project reservations (in yellow) or project allocations (in blue) are marked. For both cases, users can enter additional notes, and indicate a start and end date.

In summary, we focused on the Seeker tool because it supports our organization’s core operation and value proposition, which is used to identify consultants for customer requests and allocate them to agreed customer assignments. In particular, its AI view sits in the middle of these core tasks, since it matches entered customer requirements with consultants’ competences and recommends consultants based on their match score.
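The keyword-based matching described above can be illustrated with a minimal sketch. It is not Seeker’s actual implementation: the substring-based keyword extraction, the weighted-coverage score, and all names (`Consultant`, `extract_keywords`, `match_consultants`, the example consultants and vocabulary) are assumptions made purely for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Consultant:
    name: str
    skills: set[str]  # skill keywords, assumed to come from a competence profile


def extract_keywords(text: str, vocabulary: set[str]) -> set[str]:
    """Return the vocabulary terms found in the text (case-insensitive substring match)."""
    lowered = text.lower()
    return {term for term in vocabulary if term.lower() in lowered}


def match_consultants(
    weights: dict[str, float], consultants: list[Consultant]
) -> list[tuple[str, float, list[str]]]:
    """Rank consultants by the share of total keyword weight covered by their skills."""
    total = sum(weights.values())
    ranked = []
    for consultant in consultants:
        matched = sorted(k for k in weights if k in consultant.skills)
        score = sum(weights[k] for k in matched) / total if total else 0.0
        ranked.append((consultant.name, score, matched))
    # Highest score first; ties are broken alphabetically by name.
    ranked.sort(key=lambda row: (-row[1], row[0]))
    return ranked


# Hypothetical example data.
vocabulary = {"SQL Server", "Python", "React"}
request = "We need a data engineer with SQL Server and Python experience."
keywords = extract_keywords(request, vocabulary)

weights = {k: 1.0 for k in keywords}
weights["SQL Server"] = 2.0  # a user may raise the weight of a keyword

consultants = [
    Consultant("Alice", {"SQL Server", "Python"}),
    Consultant("Bob", {"Python", "React"}),
    Consultant("Carol", {"React"}),
]
ranking = match_consultants(weights, consultants)
for name, score, matched in ranking:
    print(f"{name}: {score:.2f} {matched}")
```

Returning the matched keywords alongside the score mirrors the ranked list with scores and matching skills that users see in the AI View; a real system would likely use a more robust keyword extraction step than substring matching.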

This task, that is, searching matching keywords through numerous CVs and unstructured customer texts to find optimal combinations, is also a very common challenge across industries around the world. Indeed, AI-enabled recruitment and task allocation software systems are increasingly being used to outreach people, screen resumes, assess candidates, and facilitate processes (Hunkenschroer & Luetge, Citation2022). In this context, Seeker supports screening with recommendations and facilitates the overall process from customer request entry to allocating individual consultants to assignments.

However, ethical challenges can emerge from the use of such systems; see, for example, Hunkenschroer and Luetge (Citation2022) for a review of possible ethical risks and ambiguities. Examples from global industries include Amazon’s recruitment algorithm, which was shut down after a public outcry due to its gender discrimination, and Deliveroo riders’ ranking algorithm, which was ruled illegal by an Italian court since it could not provide detailed explanations on how the ranking was calculated to prove that it did not break employment laws.

Thus, it is necessary to properly consider the ethical aspects when developing AI systems that support recruitment and task allocation processes.

3.3.4. Method and participants

The AI-assisted business problem considered in our case study was to perform an optimal allocation of a consultant by matching their skills and wishes to the needs of a task, project, and/or service coming from an existing or possible new customer. To gain a wider understanding of the allocation process, and particularly of participants’ views on Seeker AI recommendations, we included people with different roles in our organization who use Seeker on a daily or weekly basis. We also considered it relevant to include consultants, who could not access the tool but are a central part of the allocation process.

Given that we first focused on creating questions for the causability component, we conducted a small pilot study. An additional motivation for this study was that such a question set could also be used on its own. The pilot involved three participants (two tribal leads and one sales manager) and served the purpose of testing participants’ understanding of the questions. We then refined the questions and added the ones from the utility, deontology, and virtue ethics components. With the full set of questions, we organized a second round of interviews involving new participants. For this round, we split the interviews into two sessions, that is, (i) causability and (ii) utility, deontology, and virtue ethics, to avoid conducting long interviews that could affect participants’ attention and thus the quality of the data collected. In the second round, we included seven participants (two sales managers, three tribal leads, and two consultants).

In summary, the overall case study involved 10 participants: three sales managers, five tribal leads, and two consultants.

Before starting both the pilot and second round of interviews, sales managers and tribal leads were asked to think of a recent need for consultant allocation and to use Seeker to achieve this. Since all interviews were performed remotely, the participants were also asked to share their screens. In the second round, consultants were asked about their experience with the Siili allocation process and if they were aware of the Seeker tool. Here, we did not consider questions related to causability since they do not use the Seeker tool themselves and therefore do not see any recommendations. Similarly, one tribal lead involved in the second round did not participate in the causability interview session.

Table 1 summarizes the number of participants that answered each question set.

Table 1. Number of participants that answered each question set.

All interviews followed a semi-structured approach and additional prompts were asked when interesting issues arose. After the interview, participants were also asked about their opinions and reflections on the questions and interview process.

3.3.5. Data analysis

Considering the constructive design approach adopted in our study, we did not aim to conduct a thorough, qualitative investigation. Our goal was to identify improvement opportunities that could support the next iteration of the design and development of trustworthy AI solutions. Therefore, instead of systematically carrying out a thematic analysis including phases such as inter-coder reliability, we holistically explored and tagged the collected data to identify the underlying issues of our AI tool. Afterwards, we engaged in brainstorming workshops to ideate possible improvement opportunities, frame them within our organizational ethical principles, and generalize them to design principles. Although in different settings, exploratory analysis phases have been employed in recent studies of XAI systems, for example, see Schoonderwoerd et al. (Citation2021).

In the next section, we demonstrate how our proposed method supports the path from empirical issues to design principles.

4. Results

In the following sections, we briefly present the findings and outline the derived improvement opportunities, ethical considerations, and more generic design principles.

4.1. Causability

4.1.1. Findings

Answers to questions belonging to the causability component revealed that Seeker does not provide a clear link to the root causes of the ranking. Users can see the significant keywords that caused the recommendations, and they can also alter their weights. However, they could not fully understand why each consultant received a given ranking score or the original context of the keywords. For example, TL1 remarked that they did not understand how the weighting of keywords works or how the keywords are related to the consultants’ experience. This participant, along with SM1, expressed the need for information on how many years and in how many projects each skill has been used, to better understand the significance of the scoring. SM1 also mentioned that it would be relevant to indicate where a keyword comes from, for example, from project experience or a list of skills (e.g., by using different colors).

A reason for this is that the lineage to the original data source context was missing, and users could not see whether the matching keywords were related to consultants’ primary competences, skills, or mentioned as their contribution in past projects. How exactly the keyword relates to a consultant may vary, and this contextual understanding is critical for anyone to be able to evaluate the validity of the recommendations. Interestingly, TL5 outlined that this lack of information might lead to false positives, that is, consultants who have expressed interest in working with a skill but without any proper project experience. Therefore, TL5 must always verify consultants’ level of skills from their KnoMe profiles.

4.1.2. Improvement opportunities

These findings, and in particular the lack of contextual information, allowed us to identify the following improvement opportunities:

IO1: Seeker should provide a list of reasons (e.g., keywords) and also indicate the context from which these reasons were found (e.g., description, skills, projects) during the data collection and preparation phase.

IO2: Seeker should provide a list of reasons that were not considered (e.g., similar or dependent keywords) but might be contextually relevant.

For example, IO1 can be implemented in the form of “‘SQL Server’ | ‘skills’, ‘4 years’, ‘very interested’,” and IO2 as “‘SQL Server’ | ‘Microsoft SQL Server Management Studio’, ‘SSIS’, ‘SSAS’, ‘SSRS’.” This type of additional context metadata could help discern false positives among profiles that appear similar without additional metadata. A project manager involved in SQL Server projects is a completely different type of expert than a data engineer, with SQL Server listed as a primary skill and with 10 years of experience. Similar reasons not considered in matching could help identify false negatives, that is, potential skills and consultants that might have been omitted from the rankings.
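As a minimal sketch of how such context metadata might be represented, the following record type renders explanations in the two formats quoted above. The field names, the helper functions, and the zero-years heuristic for spotting potential false positives are illustrative assumptions, not part of Seeker or KnoMe.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class MatchReason:
    keyword: str
    context: str   # where the keyword was found, e.g. "skills" or "projects"
    years: int     # years of experience with the keyword (hypothetical field)
    interest: str  # self-reported interest label (hypothetical field)


def format_reason(reason: MatchReason) -> str:
    """IO1: show a matched keyword together with its source context."""
    return (
        f"'{reason.keyword}' | '{reason.context}', "
        f"'{reason.years} years', '{reason.interest}'"
    )


def format_related(keyword: str, related: list[str]) -> str:
    """IO2: show related keywords that were not considered in the matching."""
    return f"'{keyword}' | " + ", ".join(f"'{k}'" for k in related)


def is_potential_false_positive(reason: MatchReason) -> bool:
    """Assumed heuristic: a match with no recorded experience deserves a manual check."""
    return reason.years == 0


reason = MatchReason("SQL Server", "skills", 4, "very interested")
print(format_reason(reason))
print(format_related("SQL Server", ["SSIS", "SSAS", "SSRS"]))
```

Keeping the context as structured fields rather than as a preformatted string would let a user interface color-code keyword sources, as one participant suggested, without re-parsing the explanation text.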

4.1.3. Ethical considerations

Providing contextual information about keywords and searches, as included in IO1 and IO2, can help consultants. For example, they could recognize how they can modify their KnoMe profiles to obtain a better recommendation score or the skills on which they need more training to appear among recommendations. Implementing both improvement opportunities could empower consultants and help them obtain more meaningful projects that better align with their working passions. The same improvement opportunities would also help sales managers and tribal leads better understand the potential uncertainties of recommendations, thus preventing false positives and recognizing false negatives. As a result, implementing IO1 and IO2 can improve the quality of recommendations, consultants’ satisfaction, and project delivery. Interestingly, even though these topics came from a causability point of view, other perspectives offered complementary reasons for their implementation.

4.1.4. Generalized design principle

The two identified improvement opportunities can also be synthesized into a more generic design principle for incorporating causability aspects in future AI recommendation systems.

DP1: An AI solution should explain the reasons for making the decision, similar reasons that were not considered in the decision, and their contexts.

This design principle can be widely applied and recommended for AI use cases for two reasons. First, end users or data subjects often need to be aware of false positives and false negatives in AI decisions. Second, they may need to influence such results by optimizing them towards their goals. Simply understanding the key data points that caused the scoring is not enough, since this does not allow users to recognize what was left out or was missing from the considered input datasets. Users may also need to recognize contextual aspects that could have influenced the meaning and significance of the input data.

4.2. Utility

4.2.1. Findings

Considering utility, some participants pointed out that Seeker does not recommend changing consultants’ project allocation to more suited projects, for example, to accommodate consultants’ wishes to develop different skills. Current Seeker recommendations are based only on consultants’ availability and their existing skill keywords. In the short term, neglecting consultants’ wishes in assignments can lead to dissatisfaction and a suboptimal fit for customers’ needs. In the long term, consultants remaining in their current assignments can lead to competitiveness challenges when their skills are not renewed or diversified. This type of situation can lead to dead-end consultant careers and deteriorate the competitiveness of the consulting agency due to outdated expertise. These aspects were explicitly mentioned by C2, who referred to the fact that some colleagues left because they were placed on projects they did not like working on. This participant also suggested that the opportunity to change projects could benefit Siili customers.

4.2.2. Improvement opportunity

The above findings allowed us to identify the following utility-related improvement opportunity:

IO3: Seeker should recommend a project change when sufficient conditions suggest a better fit for the value.

In the project change case, conditions that could trigger such a consideration include consultants’ personal wishes to change customer assignments, a need to develop skills not utilized in the current assignment, or the length of time they have spent in a project. On the other hand, scoring should also consider whether there would be better combinations of consultants allocated to each project. In addition, this scoring would need to be handled carefully, because personnel changes always cause temporary distractions and costs. Thus, the benefits of a project change would need to outweigh the possible short-term negative outcomes.
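One way to read this trade-off is as a simple threshold rule, sketched below. The three trigger conditions come from the text, but the weights, caps, and the `switching_cost` threshold are invented for illustration and would need to be calibrated by the organization; nothing here reflects an existing Seeker feature.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Assignment:
    consultant: str
    wants_change: bool             # consultant has asked to change assignment
    months_in_project: int         # time spent in the current project
    unused_skills_to_develop: int  # skills the consultant wants to grow but cannot use here


def change_benefit(a: Assignment, max_months: int = 24, max_skills: int = 3) -> float:
    """Combine the trigger conditions into a benefit estimate in [0, 1] (hypothetical weights)."""
    tenure = min(a.months_in_project, max_months) / max_months
    skills = min(a.unused_skills_to_develop, max_skills) / max_skills
    return 0.5 * float(a.wants_change) + 0.3 * tenure + 0.2 * skills


def should_recommend_change(a: Assignment, switching_cost: float = 0.5) -> bool:
    """Recommend a change only when the estimated benefit outweighs the switching cost."""
    return change_benefit(a) > switching_cost


long_tenure = Assignment("C-A", wants_change=True, months_in_project=24, unused_skills_to_develop=3)
new_starter = Assignment("C-B", wants_change=False, months_in_project=6, unused_skills_to_develop=0)
print(should_recommend_change(long_tenure), should_recommend_change(new_starter))
```

This sketch scores each assignment in isolation; evaluating better combinations of consultants across several projects at once, as the text also suggests, would require a joint optimization that is beyond this example.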

4.2.3. Ethical considerations

Recommending a project change as outlined by IO3 could provide many benefits. Indeed, a project change between consultants could lead to a better fit with customers’ needs and rearranged consultant allocations, which might improve the delivery quality of several projects. A project change requested by consultants can also increase their work satisfaction in the long term and reduce the risk of them changing employer. This could also provide consultants with the opportunity to follow new passions. As a result, implementing IO3 could lead to a win-win situation for consultants, service providers, and several customers, which would compensate for the temporary challenges that are always caused by project changes in knowledge-work settings. Although many of the project change considerations were about consequences, benefits, and drawbacks regarding different stakeholders—especially consultants—they were also important from the virtue ethics perspective, as remarked by C2.

4.2.4. Generalized design principle

The identified improvement opportunity can be generalized to the following design principle to incorporate utilitarian aspects into future recommendation AI systems.

DP2: An AI solution should select allocation opportunities across a wider list of conditions and score them from the perspective of different stakeholders.

Widening the scope of source data from available consultants and unallocated customer assignments to already assigned consultants with project change wishes could help optimize long-term customer value, consultant career wishes, and service provider value. This type of design principle could prompt other AI use cases to evaluate their supported business cases in a wider context. Optimizing AI results to a single decision at a time might not be ideal when the business case actually consists of a large puzzle—including all customers and consultant competence portfolios—with many complex pieces, including all assignments and consultant competences, that can be matched together in numerous ways.

4.3. Deontology

4.3.1. Findings

Considering deontology, the findings revealed that potential biases could affect the allocation process, as mentioned by some participants. In addition, Siili Seeker does not provide statistics on how allocations and availabilities are distributed across potentially sensitive categories, such as age, well-being, relationships, preference, and business units or tribes. For example, TL5 and C2 referred to the fact that the allocation choices were vulnerable to biases regarding the aforementioned sensitive personal characteristics or the aim of reaching business unit targets (C2). At the same time, mathematically equal distribution should not be an absolute requirement or even a goal itself, since company values, such as the hedgehog concept, emphasize that customer demand, consultant competence, and individual passion should guide the selections. There is a large amount of qualitative complexity in customer requests, consultant competencies, and various relevant aspects in consultant allocation in consulting services.

4.3.2. Improvement opportunity

The above-described findings allowed us to identify the following improvement opportunity:

IO4: Seeker should implement distribution validations between sensitive attributes for AI recommendations, allocation decisions, and open availability.

In the consulting service business, long delays between allocations or a lack of suitable open availabilities are critical problems for both the company and consultants. Distribution statistics and alerts for anomalies can be used to recognize potential discrimination, career development issues, or business challenges in selling some type of expertise to customers. This type of information could help recognize relevant people to be directed for support services, for example, related to career development or personnel training.
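A distribution validation of this kind could start from something as simple as comparing per-group allocation rates with the overall rate, as in the following sketch. The record layout, the `tribe` attribute, and the `tolerance` value are assumptions for illustration; a flagged group is a prompt for human review, not evidence of discrimination in itself.

```python
from __future__ import annotations

from collections import defaultdict


def allocation_rates(records: list[dict], attribute: str) -> dict[str, float]:
    """Share of allocated consultants within each group of the given attribute."""
    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # group -> [allocated, total]
    for record in records:
        group = record[attribute]
        counts[group][0] += 1 if record["allocated"] else 0
        counts[group][1] += 1
    return {group: allocated / total for group, (allocated, total) in counts.items()}


def flag_anomalies(records: list[dict], attribute: str, tolerance: float = 0.2) -> list[str]:
    """Return groups whose allocation rate deviates from the overall rate by more than tolerance."""
    if not records:
        return []
    overall = sum(1 for r in records if r["allocated"]) / len(records)
    rates = allocation_rates(records, attribute)
    return sorted(g for g, rate in rates.items() if abs(rate - overall) > tolerance)


# Hypothetical data: 3 of 4 consultants allocated in one tribe, 1 of 4 in another.
records = (
    [{"tribe": "data", "allocated": True}] * 3
    + [{"tribe": "data", "allocated": False}]
    + [{"tribe": "design", "allocated": True}]
    + [{"tribe": "design", "allocated": False}] * 3
)
print(allocation_rates(records, "tribe"))
print(flag_anomalies(records, "tribe"))
```

The same functions could be applied to recommendations or open availability by swapping the `allocated` flag for the corresponding outcome, which is the kind of coverage across process phases that IO4 calls for.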

4.3.3. Ethical considerations

By implementing IO4, companies can proactively identify when exceptional patterns in availability duration, recommendations, and final allocation decisions emerge. Interestingly, the potential discrimination issues were discussed from both deontological and virtue ethical points of view. Indeed, although preventing discrimination and unfair treatment of employees is a legal obligation, the same improvement can also be seen from the virtue ethical stance, that is, in terms of consultants’ competence goals for self-improvement and personal passions such as working for ethically responsible companies. Regardless of legal obligations or business consequences, consultants are humans, and the Seeker tool should empower them to achieve their own goals. These improvements could lead to significant utilitarian benefits, and early problem identification and issue resolution could prevent consultants who feel that their career is not proceeding as desired from leaving the company. It is important to note that this particular scenario is common and costly for consultant rental companies.

4.3.4. Generalized design principle

Our identified improvement opportunity can be generalized to the following design principle to incorporate deontological aspects into AI recommendation systems:

DP3: An AI solution should implement distribution validations between sensitive attributes for AI recommendations, also covering relevant pre- and post-conditions.

The design principle emphasizes that it is not sufficient to consider the immediate distribution of decisions across sensitive attributes, but one should also monitor how distributions occur in the preceding and following process phases. It might be that AI recommendations seem to be technically fair and equal considering the immediate keywords, but discrimination occurs in the preceding skill development or following allocation decision-making phases.

4.4. Virtue ethics

4.4.1. Findings

Answers to our questions related to the virtue ethics factor revealed that Seeker does not consider consultants’ personal wishes in the recommendations, such as personal interests or distastes related to specific industries, customers, or skills. For example, C1 mentioned the desire to flag companies or fields that would be interesting to work with, as well as the preference between the B2C and B2B contexts. Interestingly, this aspect also emerges from the sales managers’ point of view, as SM3 mentioned that the visibility of desires can speed up the allocation process by reducing the need for personal communication with consultants.

This lack of preference data is unfortunate, since the underlying competence development system KnoMe already stores such data about consultants’ personal wishes in terms of skills they would be more eager to use or those that they would prefer to stop using. Moreover, the virtue of personal passion is one of the foundational ethical values of the Siili Solutions service vision. At the same time, the recommendations are based only on available consultants and their existing skill keywords. Seeker recommendations do not consider the personal wishes regarding industry. This is an important part of the allocation process in some cases, since in our organization, consultants have the right to refuse working for companies and industries for specific reasons, such as ethical reasons. Currently, personal wishes regarding career development and industry preferences can be handled only during personal discussions that occur at a later stage among consultants, sales managers, and tribal leads.

4.4.2. Improvement opportunity

These findings allowed us to identify the following improvement opportunity:

IO5: Seeker should weigh skills according to consultants’ self-expressed wishes and leave consultants out of industries that they have banned in their personal lists.

Consultants are the main resources and assets of digitalization service companies. Their personal wishes and desires are critical business issues in industries wherein highly skilled experts have a wide variety of employment opportunities. As pointed out in the previous section, personal wishes about skills and industry sectors are currently discussed personally by sales managers, supervisors, and consultants. Managing these in a more systematic manner could improve the efficiency of the sales process, delivery of customer assignments, and success of employee career management.

4.4.3. Ethical considerations

Considering consultants’ willingness to use specific skills or wishes to avoid specific industries within recommendations could support consultants’ career development and increase their work satisfaction. Implementing IO5 could also lessen the need for the additional managerial discussions that currently take place between sales managers, supervisors, and consultants. In this way, ethical values that are at the core of a company and its culture could also result in utilitarian benefits related to business process efficiency. Whether consultants’ personal wishes are considered to be utilitarian or ethical issues might differ between companies depending on their culture and leadership model.

4.4.4. Generalized design principle

Our identified improvement opportunity can be generalized to the following design principle to incorporate virtue ethical aspects into future AI systems.

DP4: An AI solution should consider personal wishes as weights or complete bans in the recommendation scoring.

This type of design principle could be useful in all AI cases where the results are ranked lists. Although there might exist many weighting variables in the AI system input data, reasoning models, and/or end-user UI, it could be useful to consider how the personal preferences of data subjects could be incorporated into ranking processes. This is especially important in situations wherein data subjects have or should have significant power in AI decisions and their consequences. In our specific case, even though consultants might not always have the immediate power to decline all assigned customer tasks, they have the ultimate power to resign from the company. Fully automating this type of job assignment task without considering personal wishes could lead to huge challenges for companies trying to enforce such systems.
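One way to read DP4 is sketched below: personal wishes act as multiplicative weights on skill match scores, and banned industries remove a person from the ranking altogether. This is a minimal sketch under stated assumptions and does not describe how Seeker computes its scores; the `Candidate` structure, the score scale, the weight convention, and the `rank` function are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    name: str
    # Skill keyword -> base match score (hypothetical 0..1 scale).
    skills: dict
    # Skill keyword -> wish weight; above 1 means eager to use the skill,
    # below 1 means the person would prefer to stop using it (assumed convention).
    skill_wishes: dict = field(default_factory=dict)
    # Industries the person has excluded on their personal list.
    banned_industries: set = field(default_factory=set)


def rank(candidates, required_skills, industry):
    """Rank candidates for a request; banned candidates are left out entirely."""
    ranked = []
    for c in candidates:
        if industry in c.banned_industries:
            continue  # complete ban: never recommended for this industry
        score = sum(
            c.skills.get(skill, 0.0) * c.skill_wishes.get(skill, 1.0)
            for skill in required_skills
        )
        ranked.append((c.name, score))
    # Highest wish-weighted score first.
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Hypothetical consultants and request.
sample_candidates = [
    Candidate("A", {"java": 0.9, "react": 0.5}, skill_wishes={"java": 0.5}),
    Candidate("B", {"java": 0.7, "react": 0.6}, skill_wishes={"react": 1.5}),
    Candidate("C", {"java": 1.0, "react": 1.0}, banned_industries={"gambling"}),
]

sample_ranking = rank(sample_candidates, ["java", "react"], "gambling")
```

Treating a ban as a hard filter rather than a very low weight reflects the distinction in DP4 between weights and complete bans: a weighted candidate can still appear lower in the list, whereas a banned candidate never appears for that industry.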

5. Discussion

The aim of the case study presented in this article was to illustrate how our assessment tool can be used in the design and development of trustworthy AI solutions. We described how our approach was used to identify improvement opportunities and more generalized design principles from causability, utilitarian, deontological, and virtue ethical perspectives. In the following sections, we reflect on the lessons learned from the development of our assessment method, its usage in a business setting, and how the insights it helps identify can be applied in the design and development of trustworthy AI solutions.

5.1. Developing a method for practical purposes

The goal of our research was to develop a method for designing and developing trustworthy AI solutions in a real business context. To do so, we recognized four different perspectives that we think are relevant to how people understand AI decisions in the context where the interaction takes place, that is, causability (Holzinger et al., Citation2019) and three perspectives of normative ethics (Dignum, Citation2019). The reason for focusing on causability is that it can help people better understand AI explanations (Chou et al., Citation2022), which, in turn, can increase the trustworthiness of an AI system and support the building of user trust toward it (Jacovi et al., Citation2021), as recent evidence has shown (Shin, Citation2021). Normative ethics, and in particular, the utility, deontology, and virtue ethics perspectives, can help reveal different factors that matter for people in their situated contexts, which are important when evaluating an AI system (van Berkel et al., Citation2022).

Our method is in line with recent work from the HCI literature that aims to offer practical ways to improve the transparency, and more widely the trustworthiness, of AI systems by outlining a clear evaluation method and step-by-step approach (e.g., Liao et al., Citation2021; Schoonderwoerd et al., Citation2021; Zicari et al., Citation2021). However, to the best of our knowledge, none of the previous XAI approaches have explicitly considered all four perspectives simultaneously.

Although many practical methods and toolkits exist for assessing AI systems from an ethical perspective (Crockett et al., Citation2021), many of these approaches are based on a predefined set of ethical principles and processes. In our experience as practitioners, applying large predefined frameworks can be a challenge, especially for small- and medium-sized businesses. Interestingly, this view has also been reported recently (Crockett et al., Citation2021). In developing our proposed method, we aimed to gain a wider and more open understanding of the situational and ethical considerations of end users. Therefore, we believe that our approach could point out improvement opportunities that would neither be considered within the scope of other practical toolkits nor be regarded as ethical issues by them. However, we believe that all AI development investment decisions include prioritization and ethical choices that impact someone’s benefit, are bounded by compliance risks, and are guided by ethical goals.

Regarding the identification of improvement opportunities, those described in previous papers (e.g., Zicari et al., Citation2021) seem to be at a more abstract level than those outlined in our case study, which we consider to be more concrete system development features, such as project change or additional metadata about the keywords’ context in the source system. Thus, we think that some of our outlined examples are much closer to systems development backlog items and have a higher possibility of being included in future development sprints than the recommendations outlined in other articles (e.g., Zicari et al., Citation2021). To the best of our knowledge, few prior case studies describe an actual empirical finding that has caused users to contest or work around the suggested AI results and then derive practical recommendations that can fix problematic situations.

In particular, the implementation of our identified improvement opportunities could strengthen the contracts that an AI system claims to follow and make them more visible, thus increasing perceived trustworthiness from causability and alternative contextual perspectives, that is, utilitarian benefits, deontological compliance, and virtue ethical values. According to Jacovi et al. (Citation2021) and van Berkel et al. (Citation2022), including these aspects in AI systems could contribute to improving their trustworthiness, thus supporting the building of users’ warranted trust toward them.

In contrast to prior work, our approach also aims to link practically actionable improvement opportunities to various ethical aspects and frame them in a real business case. As an AI solution rarely operates in isolation and is often part of a socio-organizational context (Ehsan et al., Citation2021; van Berkel et al., Citation2022), we believe that it is important for a method like ours to openly discover different aspects that may contribute to the ethical improvement of AI business operations. In doing so, we differ from approaches that aim to enforce a predefined set of external requirements. Instead, we wish to recognize the most important issues that should be changed to reduce distrust or improve trustworthiness through open discussions on people’s preferences and situational considerations.

Most importantly, we extended our approach to produce generalizable best practices in the form of shareable design principles. The reasoning for this is pragmatic: consulting companies and large AI user enterprises often develop their own design systems and guidelines to document and share best practices across use cases. Rather than just developing a single use case, empirical learning and design insights can be added to an enterprise knowledge base for wider benefits. There are other approaches that aim to produce generalizable design principles, such as those proposed by Schoonderwoerd et al. (Citation2021). However, they do not consider alignment with a wider set of considerations, such as legal and ethical issues. Instead, they limit the focus to utilitarian perspectives, such as the accuracy of decisions.

5.2. Applying the method in a business setting

To test the validity of our developed method, we selected an empirical business case with significant business value and ethical considerations. In our organization, we developed the Seeker system for managers and supervisors to search for and select consultants for customer requests.

If we had limited the evaluation focus to direct interaction with Seeker and immediate selection of a consultant from the recommended list of consultants, relevant issues might have been left out of the findings. One example is the personal preference aspects that normally emerge in subsequent discussions between consultants and their supervisors or sales managers. By considering the whole allocation process and interviewing a wider set of roles involved, including activities that precede or follow the interaction with Seeker and people who do not have access to the system, our assessment tool allowed us to obtain a wider and deeper understanding of the process and its underlying issues considering causability and normative ethics aspects. It is worth noting that participants mentioned how the questions made them reflect on some ethical topics that they had not considered previously, such as the laws that govern the allocation process or the responsibility of the main stakeholders involved.

Thus, our findings suggest that it is important to consider the overall business case when assessing the trustworthiness of the socio-technical AI systems involved, rather than explaining the immediate AI recommendation without a wider context. This is in line with Schiff et al. (Citation2021), who proposed that to bridge the gap between principles and practice, AI frameworks should widely and broadly consider the perspectives of different stakeholders and the impacts of an AI system, respectively.

Our method can be used in many phases across the AI development lifecycle, for example, with mock-ups, prototypes, or deployed systems, as long as there is a clear user interaction situation and decision to be made by the user. More importantly, we could formulate actionable improvement opportunities for Siili Seeker from the findings, which we then synthesized into more generic design principles for future recommendation AI systems. An illustration of the path from empirical consideration to design principles with clear examples is missing from many theoretical frameworks and method papers, as pointed out by Crockett et al. (Citation2021). Again, our approach is in line with Schiff et al. (Citation2021), who recommend that frameworks should support the operationalization of conceptual principles into specific strategies and be applicable iteratively across the development lifecycle.

In particular, for identified improvement opportunities to be implemented in the future, they should be viewed as valuable and worth the investment. To support this goal, we posit that each improvement opportunity should be grounded within an organization’s internal needs and cultural values and outline how it contributes to its business operations. As expected, the broad empirical findings provide us with additional arguments to motivate a single improvement opportunity from different points of view. Indeed, during the interviews, the same user needs often emerged from two or even three different perspectives. It is thus important to link empirical findings and solution improvement opportunities to wider ethical themes (hopefully grounded in well-accepted company values) to improve the trustworthiness of an AI solution.

6. Conclusion

It is widely believed that people and organizations adopt and use AI systems more eagerly if they trust them. In our work, we aimed to develop an approach to explore the issues that might affect users’ trust in AI systems and derive practical improvement opportunities that could be used to increase the trustworthiness of AI systems. Therefore, we developed four sets of questions to investigate how people understand and experience AI decisions as part of a wider socio-technical context in real business settings, considering end-user explainability and normative ethical aspects. Then, we applied our approach to investigate the AI solution we use in our organization to support the consultant allocation process. Our results showed that our qualitative assessment tool helps to identify a wide range of underlying issues that hinder the trustworthiness of an existing AI system from the perspective of its users. These empirical findings can be summarized as improvement opportunities and then synthesized into more generic design principles, as described in this article. By linking the suggested improvement opportunities to ethical principles, this approach can provide ethical frames for wider business improvements. Overall, our research illustrates how the developed method can be used to design and develop trustworthy AI solutions and support ethically motivated business improvement.

7. Limitations and future work