Abstract

What factors drive consumers to use artificial intelligence (AI)-powered chatbots? The current study examined the associations among AI-powered chatbots’ anthropomorphism (human-likeness, animacy, and intelligence), social presence, imagery processing, psychological ownership, and continuance intention in the context of Human-AI-Interaction. Results from a path analysis using LISREL 8.54 show that consumers’ perceived human-likeness of AI-powered chatbots is a positive predictor of social presence and imagery processing. Imagery processing is a positive predictor of psychological ownership of the products (fashion industry) and services (tourism industry) promoted by the chatbots. Most importantly, social presence and imagery processing are positive predictors of AI-chatbot continuance intention. These empirical findings entail practical implications for AI-powered chatbot developers and managerial implications for commercial brands such that (1) increasing anthropomorphism of chatbots and inducing the sense of being co-present with the chatbots are important factors AI-chatbot designers and developers need to consider and (2) inducing vivid visualization of the products endorsed by the chatbots is an important variable marketers need to understand.

1. Introduction

In light of advances in artificial intelligence (AI), there has been a recent surge of interest in human-chatbot-relationships in academia, industry, and public sectors (Aoki, Citation2020; Barnett et al., Citation2021; Chaves & Gerosa, Citation2021; Skjuve et al., Citation2021; Smutny & Schreiberova, Citation2020; Shumanov & Johnson, Citation2021). AI-powered chatbots increase customer engagement with brands and build relationships with customers by providing personalized interactions and continuous service (Prentice et al., Citation2020; Shumanov & Johnson, Citation2021; Walch, Citation2019; Youn & Jin, Citation2021).

What technological aspects of AI-interface and what social psychological factors during Human-AI-Interaction drive consumers to continue using AI-powered chatbots? The present study aims to answer this research question by proposing relevant predictors of consumers’ intention to continue using AI-chatbots. Put differently, the current study focuses on user experience (UX) with chatbots during Human-AI-Interaction and their intention to continue using AI-powered chatbots in the context of fashion marketing and tourism marketing. As specific research objectives, this study endeavors to examine the effect of the dimensions of anthropomorphic chatbots on social presence and imagery processing, which subsequently influence psychological ownership of products/services and chatbot continuance use intention.

Recent research shows that chatbots positively affect consumers of the online fashion sector (Tran et al., Citation2021), luxury fashion brands (Chung et al., Citation2020), and tourism/hospitality industry (Melian-Gonzalez et al., Citation2021). Chatbots can be an effective tool in prompting experiential consumption of products and services in the fashion industry and tourism sector. For example, chatbots can provide consumers with vivid descriptions of various products (e.g., sunglasses, jeans, and shoes) or services (e.g., hotel rooms, hotel spas, and room services) in real time and further answer consumers’ questions about the products/services they can’t readily experience. Thus, pre-consumption interaction with AI-chatbots can be particularly beneficial for consumers of fashion items and hospitality services. Retailers and marketing managers can also benefit from AI-chatbots since consumers’ positive pre-consumption experience with chatbots can lead to actual purchasing decisions and behavior. Furthermore, from the AI-chatbot developer’s perspective, it is crucial to understand relevant factors that can increase users’ retention. Therefore, the current research attempts to examine relevant technological and psychological predictors of AI-chatbot continuance intention, drawing from the literature on AI-chatbots and consumer psychology as well as theories of social presence.

2. Theoretical frameworks and research hypotheses

2.1. Anthropomorphism and social presence in human-AI-interaction

Human-like technological objects enable individuals to more easily access human congruence schemas (Belanche et al., Citation2021). A chatbot’s use of human language is theorized to produce attributions of human-likeness via the psychological process called anthropomorphism (Kiesler et al., Citation2008; Sheehan et al., Citation2020). There is a growing need for human-chatbot-interaction to resemble human conversational interaction styles; chatbots are thus expected to demonstrate social behaviors that are habitually shown in human-human-interaction and to be equipped with “social characteristics that cohere with users’ expectations” (Chaves & Gerosa, Citation2021, p. 729). In the current study, three dimensions of anthropomorphism were examined: human-likeness (as opposed to machine-likeness), animacy, and intelligence (Bartneck et al., Citation2009).

Understanding the role of emotion is important in human-chatbot interaction (Tasi et al., Citation2021). The current study particularly focuses on feelings of social presence as one of the emotional experiences in human-computer-interaction. Intelligent agents with a human-like appearance induce stronger feelings of social presence (Castro-Gonzalez et al., Citation2016; Nass & Moon, Citation2000). Chatbots’ human-like features including a human face, human language, and personality (S. A. Lee & Oh, Citation2021; MacInnis & Folkes, Citation2017) may increase consumers’ feelings of being co-present with a social actor (H1a).

Piaget formulated a theory of cognitive development and human intelligence, conceptualizing intelligence as a form of biological adaptation (Piaget, Citation1971). Piaget’s theory inspired many education models such as the learner-centered approach, discovery-based learning, collaborative learning, constructivism, and exploratory learning (Muthivhi, Citation2015). Piaget’s work also provides theoretical and practical implications for our understanding of AI, Artificial Life Neural Network (ALNN), and reinforcement learning (Bossens, Townsend, & Sobey, Citation2019; Parisi & Schlesinger, Citation2002). Parisi and Schlesinger (Citation2002) argue that “Artificial Life models of evolution and development offer a new set of theoretical and methodological tools for investigating Piaget’s ideas” (p. 1301). Piaget’s framework emphasizes the importance of movement and intentional behavior for the perception of animacy (Bartneck et al., Citation2009; Parisi & Schlesinger, Citation2002). The classic perception of life, which is often associated with animacy, is based on the “Piagetian framework centered on moving of one’s own accord” (Bartneck et al., Citation2009, p. 74). Thus, animacy can be proposed as an integral aspect of perceiving AI-chatbots as social actors that can induce users’ feelings of social presence (H1b).

“Regardless of their physical or virtual embodiment, artificial agents’ ability to have language-based communications with users serves as a critical human-like cue that evokes a sense of social presence in the users’ minds, making the users treat the artificial agent as they do other humans and respond to them socially” (Chattaraman et al., Citation2019, p. 316). Users’ cognition about and perception of AI-chatbots’ intelligence can be important antecedents to users’ feelings of social presence with the AI-chatbots (H1c). Chong et al. (Citation2021) presented a “3-level classification of AI-chatbot design” composed of “anthropomorphic role, appearance, and interactivity” (p. 1), thus suggesting the importance of chatbots’ socially defined roles (Wirtz et al., Citation2018), humanlike appearance (Nass & Moon, Citation2000), and ability to engage in two-way interaction (Miao et al., Citation2022). Synthesizing the three rationales elaborated so far, the three dimensions of chatbots’ anthropomorphism can be antecedents to consumers’ sense of social presence (H1).

H1: The (a) human-likeness (b) animacy and (c) intelligence dimensions of AI-chatbots’ anthropomorphism are positive predictors of consumers’ feelings of social presence.

2.2. Anthropomorphism and imagery processing in human-AI-interaction

Imagery processing is defined as a process by which “sensory information is represented in working memory” (MacInnis & Price, Citation1987, p. 473). Imagery processing is “very like picturing” (Fodor, Citation1981, p. 76) and forming pictures of stimuli in the mind’s eye (Aylwin, Citation1990; Schlosser, Citation2018). Imagery processing can be triggered through the use of pictures and concrete words (MacInnis & Price, Citation1987). Pictorial information is delivered through visual imagery processing, whereas textual descriptions are delivered through verbal discursive processing (Jin & Ryu, Citation2019). Greater consumption-imagery increases the persuasiveness of the communication (Aydınoğlu & Krishna, Citation2019). Following this premise, advertisers stimulate mental imagery by using pictures, words, sound effects, and instructions to imagine (Kim et al., Citation2014). The stronger presence of visual and pictorial information in consumer memory than its verbal and textual counterparts speaks to visuals’ superior power to evoke the use of imagery processing as opposed to discursive processing (Childers & Houston, Citation1984; Debevec & Romeo, Citation1992; Jin & Ryu, Citation2019).

Applying visual imagery processing to the tourism/hospitality industry, when tourists engage in visual imagery processing, they form concrete conceptions of a destination in their minds and may become more likely to consider visiting the destination/hotel (W. Lee & Gretzel, Citation2012). Likewise, applying this principle to the fashion industry, consumers can have vicarious consumption experiences during the pre-consumption stage through imagery processing that provides sensory stimulation (J. E. Lee & Shin, Citation2020). Thus, consumers’ pre-purchase interactions with anthropomorphic chatbots can function as an a priori avenue for imagery processing. Instructions provided by human-like, animate, and intelligent chatbots may increase the extent to which consumers imagine the possible products/services they could buy, visualize the consumption scenarios, and picture their own consumption behaviors. Therefore, the three dimensions of chatbots’ anthropomorphism can be antecedents to consumers’ imagery processing (H2).

H2: The (a) human-likeness (b) animacy and (c) intelligence dimensions of AI-chatbots’ anthropomorphism are positive predictors of consumers’ imagery processing.

“Stimulating consumers to imagine being present in a mediated environment and experiencing a product or service” can be a powerful marketing strategy (Ha et al., Citation2019, p. 42). Mental imagery, referring to this perception of being present in an imagined situation in the absence of appropriate direct contact (Argyriou, Citation2012; Ha et al., Citation2019), is related to feelings of presence. When media users experience feelings of presence, they feel transported to another place as though they are physically located inside the virtual or mediated environment (Jin, Citation2011, Citation2012). In the case of social presence, when consumers experience feelings of co-presence with an AI-powered chatbot, they would feel as if they were with and were interacting with an actual social actor. This sense of social presence can positively influence the extent to which consumers can visualize their consumption scenarios. Therefore, the current research proposes a novel relationship between consumers’ feelings of social presence during their interaction with an AI-powered chatbot and enhanced level of imagery processing (H3).

H3: Social presence is a positive predictor of imagery processing.

2.3. Psychological ownership in human-AI-interaction

Psychological ownership is defined as “a state in which individuals feel as though the target of ownership (or a piece of that target) is theirs (i.e., it is ‘MINE’)” (Pierce et al., Citation2003, p. 86). Psychological ownership of an object is the inference or feeling that the object is MINE (Belk, Citation1988; Morewedge, Citation2021; Pierce et al., Citation2001). People can experience psychological ownership over targets that are important to them, whether material or immaterial (Pierce et al., Citation2003). Hence, psychological ownership can occur both for material consumption of products like shopping shoes (fashion industry) (Loussaief et al., Citation2019) and experiential consumption of services like booking a hotel room for vacation (tourism industry) (Kumar & Nayak, Citation2019; S. Lee & Kim, Citation2020; S. Li et al., Citation2021). Research shows that giving a product a human name enhances psychological ownership of the product (Kirk et al., Citation2018; Stoner et al., Citation2018). Thus, consumers feel stronger emotional connections with their owned products and form stronger bonds with them when those products are humanized and anthropomorphized (Kim & Swaminathan, Citation2021).

Recently, psychological ownership has received increasing attention not only from information technology (IT) and emerging media scholars examining a wide variety of topics, including addiction to virtual online gaming (Wang et al., Citation2021), augmented reality in interactive marketing (Carrozzi et al., Citation2019), gamification of in-game advertising (Mishra & Malhotra, Citation2021), augmented reality in advertising (Yuan et al., Citation2021), and user engagement with robots in Human-Robot-Interaction (Delgosha & Hajiheydari, Citation2021), but also from business researchers examining tourism and hospitality management (Kumar & Nayak, Citation2019; S. Li et al., Citation2021). Yuan et al. (Citation2021) examined the formation of psychological ownership in the augmented reality (AR) marketing environment from the perspective of consumer flow experience, and showed that (1) consumers’ parasocial interaction is a positive predictor of their flow experience, and (2) flow, in turn, positively influences psychological ownership. To the best of our knowledge, there is a dearth of empirical research on users’ psychological ownership in the emerging context of Human-AI-Interaction. The present research aims to test the roles of social presence with AI-powered chatbots (H4a) and imagery processing during social interactions with chatbots (H4b) in forming consumers’ psychological ownership of the products/services promoted and endorsed by the chatbots.

Since psychological ownership can be developed through the sense of belonging (Asatryan & Oh, Citation2008; Mishra & Malhotra, Citation2021), it can be reasonably theorized that the extent to which consumers feel as if they were interacting with an actual social actor during virtual conversations with a chatbot as a salesperson (the degree of social presence) can be correlated with the extent to which they feel the products (for material consumption like fashion items) and services (for experiential consumption like hotels) promoted by the chatbot could be theirs (the degree of psychological ownership) (H4a). Given that imagery processing is associated with psychological ownership (Kamleitner & Feuchtl, Citation2015), it can be theorized that the extent to which consumers visually imagine the products/services they could buy and picture themselves actually using the products/services (the degree of imagery processing) can be correlated with the extent to which they feel the products/services could be theirs (the degree of psychological ownership) (H4b).

H4: Consumers’ (a) feelings of social presence and (b) imagery processing are positive predictors of psychological ownership of the products/services promoted by AI-chatbots.

2.4. Continuance intention in human-AI-interaction

Information technology (IT) continuance has emerged as a prominent area of IT research that aims to understand users and consumers’ post-adoption behavior (Jumaan et al., Citation2020; Karahanna et al., Citation1999). Continuance intention can be defined as “users’ long-term intention to use an innovation on a regular basis” (Jahanmir, Citation2020, p. 226). It is important to examine the long-term or sustained use of IT over time, since any IT cannot be considered successful if it is not sustained by users who expect to benefit from continuing to use it (Jumaan et al., Citation2020; Bhattacherjee Citation2001a). Continuance intention has been studied in a variety of information system and digital technology settings (Yan et al., Citation2021), including mobile apps (Wang et al., Citation2019), mobile branded apps (C.-Y. Li & Fang, Citation2019), online banking (Bhattacherjee, Citation2001b), massive open online courses (MOOCs) (Dai et al., Citation2020), virtual worlds (Hooi & Cho, Citation2017), wearable technology like smartwatches (Bolen, Citation2020; Chuah, Citation2019; Nascimento et al., Citation2018), social recommender systems (Yang, Citation2021), and mobile government (Wang et al., Citation2020), to name a few.

The current study examines chatbot continuance intention in the context of luxury brands because the chatbot e-service concept is well aligned with luxury brand values that offer superior service quality to consumers and bolster the quality of the relationship with consumers (Arthur, Citation2017; Chung et al., Citation2020). In the study of luxury fashion brands, Chung et al. (Citation2020) found that chatbot e-service agents providing marketing efforts enhance communication quality (accuracy and credibility) and consumer satisfaction with chatbots. Given that consumer satisfaction with chatbot services was found to account for continuance intention in prior studies (Ashfaq et al., Citation2020; Cheng & Jiang, Citation2020; M. Lee & Park, Citation2022), it is important to investigate the intention to continue using chatbots and its antecedents in the luxury brand context.

Prior research shows that the sense of presence increases users’ continuance intention to use social virtual worlds (Jung, Citation2011). Furthermore, a study on e-learning environments shows that e-learners’ imagery processing is a positive predictor of continuance intention (Rodriguez-Ardura & Meseguer-Artola, Citation2016). No prior research has examined feelings of social presence and imagery processing as variables correlated with continuance intention toward AI-chatbots in the fashion/tourism marketing context yet. It can be reasonably predicted that consumers would be more willing to continue using the AI-chatbot when they feel as if they were actually interacting with the AI-chatbot as a social actor. Furthermore, the level of vivid imagery processing of the products/services endorsed by the AI-chatbot can be a positive predictor of consumers’ intention to continue using the AI-chatbot. To test these untapped relationships in the novel context of Human-AI-Interaction-based marketing, the current research proposes social presence (H5a) and imagery processing (H5b) as antecedents to continuance intention.

H5: Consumers’ (a) feelings of social presence and (b) imagery processing are positive predictors of AI-chatbot continuance intention.

Continuance intention is an important factor to consider for both utilitarian and hedonic service consumption (Hepola et al., Citation2020). The most recent research on Human-Robot-Interaction shows that psychological ownership of a robot is positively related to consumers’ intention to explore the robot in a post-adoption context (Delgosha & Hajiheydari, Citation2021). Consumers’ psychological ownership significantly drives their active post-consumption engagement behaviors such as social influence engagement and knowledge-sharing engagement (S. Li et al., Citation2021). Tourism research also shows that the sense of psychological ownership towards the travel destination is a positive predictor of intentions to revisit and recommend the destination (Kumar & Nayak, Citation2019) as well as word-of-mouth communication, willingness to pay more, and relationship intention (Asatryan & Oh, Citation2008). These findings about the significant role played by the sense of psychological ownership in tourism marketing are particularly relevant to the current research. However, no prior research has examined psychological ownership in the context of tourism promotion and fashion marketing invoked by AI-chatbots. The current research attempts to test the underexplored association between consumers’ psychological ownership of the products/services promoted by AI-chatbots and intention to continue using the AI-chatbot in Human-AI-Interaction environments. An original hypothesis can be proposed such that psychological ownership consumers feel during their interaction with an AI-chatbot is positively associated with intentions to continue using the AI-chatbot (H6).

H6: Psychological ownership of the product/service endorsed by AI-chatbots is a positive predictor of AI-chatbot continuance intention.

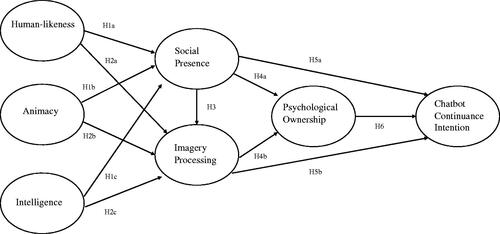

The individual hypotheses and the integrative conceptual model are presented in the accompanying figure.

3. Methods

3.1. Sample and procedure

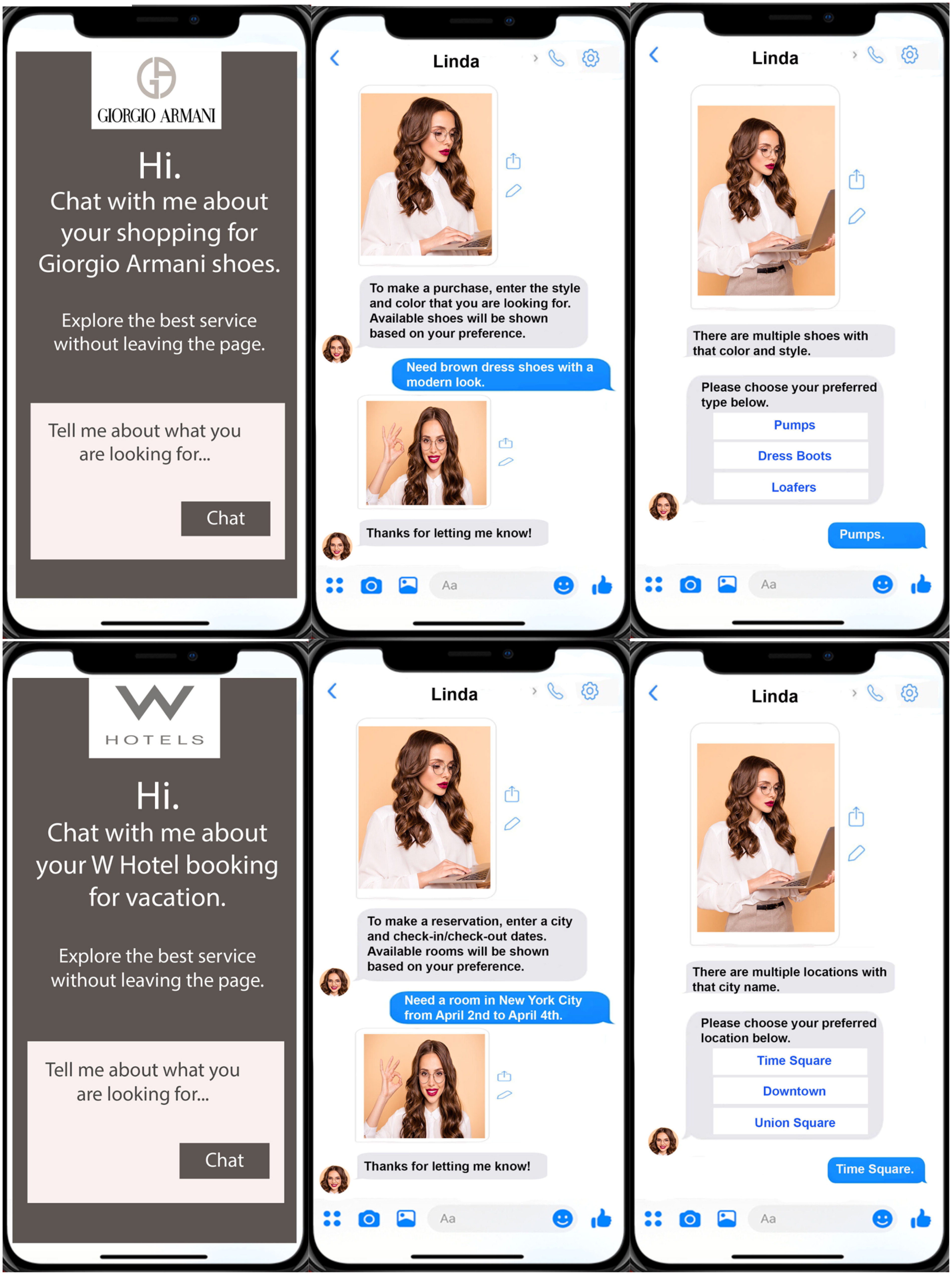

Data were collected from the Amazon MTurk online panel by using Qualtrics. A total of 284 U.S. participants completed an online survey questionnaire. Among them, 5 participants failed an attention check question. Thus, a total of 279 participants were included in the final data analysis (51.6% female [n = 144]; mean age = 38.92 years; 68.1% White). Before answering the questionnaire, participants were asked to read an instructional page and its corresponding chatbot messages that talked about one of the two offerings (shoes [fashion industry] or hotel room [tourism/hospitality industry]). Each participant was randomly exposed to one type of offering, either Giorgio Armani shoes or a W Hotel room, and filled out the questionnaire only once for the corresponding chatbot example seen. Specifically, a scenario-based instructional page asked participants to imagine that they were searching for information about a pair of shoes for their upcoming event or a hotel room for their future vacation and to read the chatbot messages generated by the company. Participants viewed the screenshots showing the interaction sequence of the conversations between a consumer and the chatbot (as presented in Appendix A). To create an authentic text-based chatbot message, this study crafted the conversational dialogue by adapting the best chatbot examples used in the industries (Keenan, Citation2022; Satisfi Labs, Citation2020). Fashion and hotel brands were chosen in our study because these brands use emerging technology such as AI-powered chatbots to offer personalized, engaging services for their targets (Fuentes-Moraleda et al., Citation2020; S. A. Lee & Oh, Citation2021; Morozova, Citation2018). The conversational messages between a consumer and the chatbot consist of a series of dialogues that typically take place when consumers interact with chatbots in the context of booking a hotel room (e.g., check-in/check-out dates) and/or shopping for a fashion item (e.g., style, color, type).

For both chatbot messages, this study used Photoshop to create a fictitious chatbot called Linda that delivers verbal and non-verbal communication cues (facial expression, body gesture, movement, and eye gaze). By doing so, we ensured that participants understood what an anthropomorphic, conversational chatbot message is. We took this procedural approach to increase both (1) internal validity with regard to people’s perception of chatbots, by providing actual Human-AI-Interaction examples, and (2) the generalizability of our findings, by covering two different types of products/services (fashion industry and tourism industry). It should be noted that the two chatbot messages were similar in terms of the level of anthropomorphism, as both featured human-like characters. After exposure to the conversational chatbot messages, participants were directed to the survey questionnaire page.

3.2. Pooled data across two product/service categories

The pooled data of the two products/services were analyzed to test the proposed conceptual model. Yet, it is important to inspect possible differences in the major constructs across the two offerings prior to merging the data sets, since recent research indicates that consumer sentiment and expectations differ across sectors (e.g., low involvement sectors like online fashion versus high involvement sectors like telecommunication) (Tran et al., Citation2021). Independent samples t-tests exhibited no significant differences in any of the constructs between the two product categories, thus making it possible to combine the two data sets for subsequent data analyses.

3.3. Measures

The items for measurement were borrowed from the scales validated in prior studies and modified to reflect the context of an AI-powered chatbot. Anthropomorphism of the chatbot was measured with three sub-constructs by using three items for each (Bartneck et al., Citation2009): human-likeness (α = 0.93), animacy (α = 0.89), and perceived intelligence (α = 0.90). Social presence (α = 0.93) was gauged with four items adapted from the scale used by K. M. Lee et al. (Citation2006) and Witmer and Singer (Citation1998). Imagery processing was rated with three items (α = 0.92), which were borrowed from Roy and Phau (Citation2014). To assess psychological ownership (α = 0.89), three items were chosen and modified from Van Dyne and Pierce’s (Citation2004) scale. Chatbot continuance intention (α = 0.95) was rated with three items adopted from Bhattacherjee’s (Citation2001b) study. All constructs were measured with a 7-point scale. Descriptive statistics, along with the item wordings, are reported in Table 1.
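The reliability coefficients (α) reported above are Cronbach's alpha values. As a minimal sketch of how such a coefficient is computed from raw item scores, assuming one list of scores per item with respondents in the same order, the function below implements the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the summed score). The function name and the response data are hypothetical and are not taken from the study's data set.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of k lists; items[j][i] is respondent i's score on item j.
    """
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("all items must have the same number of respondents")

    # Summed scale score for each respondent.
    totals = [sum(item[i] for item in items) for i in range(n)]

    # Sum of the individual item variances versus variance of the total score.
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance(totals)

    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical 7-point responses from six respondents on a three-item scale.
example_items = [
    [5, 6, 3, 7, 4, 2],
    [5, 7, 3, 6, 4, 2],
    [4, 6, 2, 7, 5, 3],
]
print(round(cronbach_alpha(example_items), 3))
```

Values close to 1 indicate that the items vary together, which is the pattern the coefficients of 0.89 to 0.95 above reflect.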

Table 1. Summary of measurement model statistics.

4. Results

4.1. Measurement model

Following a two-step approach (Anderson & Gerbing, Citation1988), we first tested the measurement model using a confirmatory factor analysis (CFA) and subsequently tested a structural model. We performed a CFA on 22 items, using LISREL 8.54 (Jöreskog & Sörbom, Citation1993), to examine whether the items exhibit appropriate psychometric qualities as indicators of their corresponding constructs. The CFA used the correlation matrix between individual item indicators as an input. The CFA findings showed a good fit of the model to the data: χ2 = 542.668, df = 188, p < 0.001, CFI = 0.986; NFI = 0.978; IFI = 0.986; RMSEA = 0.082 (Browne & Cudeck, Citation1993). All items represented their corresponding constructs well, with lambda-X estimates ranging from 0.814 to 0.953 (see Table 1). The average variance extracted (AVE) met the critical threshold of 0.500 for each construct (0.729–0.874) (see Table 2), establishing convergent validity of the constructs (Fornell & Larcker, Citation1981). As all AVEs were larger than the corresponding maximum shared variances (MSVs) and average shared variances (ASVs), satisfactory discriminant validity of the constructs was found (Fornell & Larcker, Citation1981). Composite reliabilities (CRs) ranged from 0.890 to 0.954, supporting construct reliability (Hair et al., Citation2006). All measurement features of each construct are presented in Tables 1 and 2.
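To make the validity criteria above concrete, the following is a minimal sketch of how AVE and composite reliability are conventionally derived from standardized factor loadings, together with the discriminant validity comparison of AVE against shared variance (the squared inter-construct correlation). The loadings and the correlation used here are hypothetical values chosen within the reported loading range; they are not the study's actual estimates, and the function names are illustrative.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of a construct."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    With standardized loadings, each item's error variance is 1 - loading^2.
    """
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error_variance)


def is_discriminant(ave, correlations_with_other_constructs):
    """AVE must exceed the largest shared variance (squared correlation)."""
    max_shared_variance = max(r ** 2 for r in correlations_with_other_constructs)
    return ave > max_shared_variance


# Hypothetical standardized loadings for a three-item construct.
loadings = [0.85, 0.90, 0.88]
ave = average_variance_extracted(loadings)
cr = composite_reliability(loadings)

print(f"AVE = {ave:.3f} (threshold 0.500)")
print(f"CR  = {cr:.3f}")
# Hypothetical correlations of this construct with two other constructs.
print("Discriminant validity:", is_discriminant(ave, [0.62, 0.71]))
```

Under this convention, convergent validity holds when AVE is at least 0.500, and discriminant validity holds when a construct's AVE exceeds its maximum and average shared variances with the other constructs.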

Table 2. Correlations, shared variance, CR, AVE, MSV, and ASV.

4.2. Structural model and hypotheses testing

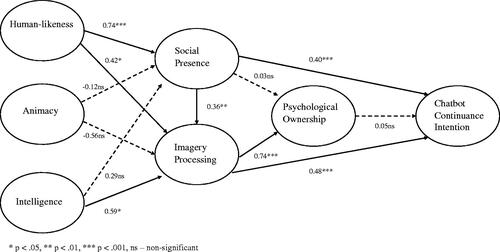

A path analysis was undertaken with individual item indicators and latent constructs by using LISREL 8.54 (Jöreskog & Sörbom, Citation1993) to evaluate the structural model testing the associations among constructs. The correlation matrix between individual item indicators was used as an input. The goodness-of-fit statistics exhibited a good fit of the model to the data: χ2 = 555.062, df = 194, p < 0.001, CFI = 0.986; NFI = 0.978; IFI = 0.986; RMSEA = 0.082 (Browne & Cudeck, Citation1993). Table 3 and the accompanying figure display the standardized path estimates in the structural model.
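The reported RMSEA values can be cross-checked against the reported chi-square statistics, degrees of freedom, and final sample size (N = 279). The sketch below assumes the commonly used point-estimate formula RMSEA = sqrt(max(χ2 − df, 0) / (df · (N − 1))); the exact computation inside LISREL may differ slightly, so this is an approximation for illustration rather than a reproduction of the software's output.

```python
import math


def rmsea(chi_square, df, n):
    """Point estimate of RMSEA from a model chi-square, its df, and sample size."""
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


N = 279  # final sample size reported in the Methods section

# Fit statistics as reported for the measurement (CFA) and structural models.
models = {
    "measurement model": (542.668, 188),
    "structural model": (555.062, 194),
}

for name, (chi_square, df) in models.items():
    print(f"{name}: RMSEA = {rmsea(chi_square, df, N):.3f}")
```

Both models round to 0.082 under this formula, which agrees with the values reported in the text.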

Table 3. Results of structural model.

It was expected that the three sub-dimensions of AI-chatbot’s anthropomorphism (human-likeness, animacy, and intelligence) would be positive predictors of consumers’ feelings of social presence (H1) and imagery processing (H2). The results confirmed our expectation for human-likeness (γ = 0.74, p < 0.001 for social presence; γ = 0.42, p < 0.05 for imagery processing). For intelligence, the results showed a positive relationship with imagery processing (γ = 0.59, p < 0.05), but not with social presence (γ = 0.29, p = ns). Animacy did not show a significant relationship with either social presence (γ = −0.12, p = ns) or imagery processing (γ = −0.56, p = ns). Thus, H1 and H2 received partial support (H1a, H2a, and H2c were supported, whereas H1b, H1c, and H2b were rejected). H3 expected social presence to be a positive predictor of imagery processing. The results supported this expectation with β = 0.36, p < 0.01.

H4 anticipated that consumers’ (a) feelings of social presence and (b) imagery processing would be positive predictors of psychological ownership. The analysis provided support for the path from imagery processing to psychological ownership (H4b: β = 0.74, p < 0.001), but not for the path from social presence to psychological ownership (H4a: β = 0.03, p = ns). In H5, consumers’ (a) feelings of social presence and (b) imagery processing were expected to predict AI-chatbot continuance intention positively. The results confirmed H5a and H5b with β = 0.40, p < 0.001 for the path from social presence to chatbot continuance intention and β = 0.48, p < 0.001 for the path from imagery processing to chatbot continuance intention. Finally, H6 expected psychological ownership to be a positive predictor of chatbot continuance intention. The findings did not support H6 (β = 0.05, p = ns).

5. Discussion

5.1. Key findings and theoretical contributions

The current study empirically demonstrates that (1) the human-likeness dimension of anthropomorphic AI-powered chatbots is a positive predictor of consumers’ social presence and imagery processing and the intelligence dimension of anthropomorphic chatbots has a positive influence on imagery processing; (2) consumers’ social presence is a positive predictor of imagery processing; (3) imagery processing is a positive predictor of psychological ownership of the product/service endorsed by the AI-powered chatbots, and (4) social presence and imagery processing are positive predictors of AI-chatbot continuance intention. Thus, the present data add original theoretical propositions about the association between and among anthropomorphic AI, social presence, and imagery processing to the Human-AI-Interaction literature, consumer psychology, and theories of social presence. In the domain of retailing and consumer services, AI-chatbots have been increasingly acknowledged as frontline agents that promise to shape service offerings from which both customers and retailers can benefit (Chong et al., Citation2021).

Furthermore, findings about imagery processing and its relationships with relevant constructs (anthropomorphic attributes of AI-chatbots, social presence, psychological ownership of the products/services endorsed by the AI-chatbots, and chatbot continuance intention) are novel in the Human-Computer-Interaction literature, since no prior research has provided empirical findings about the associations between and among those factors in the context of human-chatbot-interaction. It is important to discuss theoretical implications for the significant paths from social presence to chatbot continuance intention and from imagery processing to chatbot continuance intention, as these findings merit emphasis in light of their theoretical contributions. First, with regard to the association between social presence and chatbot continuance intention, this study adds chatbot continuance intention as a significant endogenous variable to the social presence literature. Second, regarding the relationship between imagery processing and continuance intention, our study provides the first theoretical proposition and empirical evidence about imagery processing as a variable predicting chatbot continuance intention. Third, it is important to note that imagery processing was significantly associated with all the key variables, including social presence, psychological ownership, and chatbot continuance intention. Furthermore, intelligence and social presence, both of which are measured with items about interacting with an intelligent being, influence imagery processing.

Unexpectedly, several paths were non-significant, and these findings are worthy of discussion for theoretical advancement. Alternative explanations for them are considered below. First, the non-significant path from animacy to social presence and the non-significant path from animacy to imagery processing can be attributed to the non-animated nature of the static chatbot images the participants were exposed to during the data collection. This limitation is further discussed in Section 5.3.

Second, the non-significant path from intelligence to social presence implies that the level of perceived intelligence of chatbots does not necessarily predict the level of co-presence with chatbots in HCI. This is an interesting finding since it implies that people may still experience a sense of social presence even when they are interacting with not-so-smart (“dumb”) chatbots. Relatedly, Hu (2019) extended the existing chatbot research by comparing the usability of a conversational “smart” chatbot with natural language processing (NLP) versus a menu-based “dumb” chatbot without any intelligent capabilities (p. 8). Our finding implies that the static image-based, non-animated chatbot to which the participants were exposed during the survey might have induced the participants to think of the chatbot as a menu-based “dumb” chatbot rather than a “smart” chatbot.

Third, the non-significant path from social presence to psychological ownership implies that the sense of social presence with a chatbot is not necessarily a predictor of the sense of owning the product endorsed by the chatbot. This study failed to provide empirical evidence about the transfer of feelings of social presence with chatbots in the HCI interface to feelings of ownership of the products endorsed by the chatbots in the marketing/retailing domain. Interestingly, an indirect effect of social presence on psychological ownership through imagery processing was found, while there was no direct effect of social presence on psychological ownership, which suggests the key role of imagery processing in sparking psychological ownership. Similarly, the non-significant path from psychological ownership to chatbot continuance intention implies that the sense of ownership of the products endorsed by the chatbot is not a significant predictor of chatbot continuance intention. Psychological ownership of products and services primarily drives consumers’ behaviors toward the offering (e.g., intention to continue to adopt the offerings promoted), but does not necessarily transfer further to consumers’ intention to continue using the chatbot, which is an “agent” or “tool” for promotion.
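The distinction between an indirect effect and a direct effect can be illustrated with the common product-of-coefficients logic: the indirect effect is the path from the predictor to the mediator multiplied by the path from the mediator to the outcome, while the direct effect is what remains for the predictor once the mediator is controlled. The sketch below is a hedged illustration on synthetic data using ordinary least squares in NumPy. It is not the authors' LISREL path analysis, and the sample size and the coefficients 0.5 and 0.6 are arbitrary values assumed for the example, not estimates from this study.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 2000  # arbitrary synthetic sample size

# Synthetic scores: social presence affects ownership ONLY through imagery.
# The generating coefficients are illustration values, not study estimates.
social_presence = rng.normal(size=n)
imagery = 0.5 * social_presence + rng.normal(size=n)
ownership = 0.6 * imagery + 0.0 * social_presence + rng.normal(size=n)


def ols_slopes(y, *predictors):
    """Least-squares slopes of y on the predictors (intercept fitted, then dropped)."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[1:]


# Path a: social presence -> imagery processing.
(a,) = ols_slopes(imagery, social_presence)
# Path b (imagery -> ownership) and direct path c' (social presence -> ownership).
b, c_direct = ols_slopes(ownership, imagery, social_presence)

indirect = a * b  # product-of-coefficients indirect effect
print(f"indirect effect (a*b): {indirect:.3f}")
print(f"direct effect (c'):    {c_direct:.3f}")
```

In this synthetic setup the indirect effect is clearly positive while the direct effect stays close to zero, which mirrors the qualitative pattern described above. A formal test of an indirect effect would additionally require a standard error or a bootstrap confidence interval.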

5.2. Practical and managerial implications

Our data report the unique positive effects of consumers’ social presence and imagery processing on consumers’ intention to continue using anthropomorphic AI-powered chatbots. Marketers increasingly create brand characters and endow them with emotions, faces, names, and other human characteristics in order to promote the anthropomorphization of a brand through a variety of information technology and digital media (van Esch et al., 2019). Our study provides encouraging findings for companies and brands since it provides empirical data supporting the efficacy of anthropomorphic chatbots in increasing social presence and imagery processing. Our data regarding consumers’ intention to continue using a company’s chatbot are timely and relevant since continuance intention is of increasing importance in an ever more competitive IT landscape (Nascimento et al., 2018) as well as in smart retailing (Nikhashemi et al., 2021). This research is one of the first empirical demonstrations of the significant paths from social presence and imagery processing to continuance intention towards chatbots representing a brand. These findings provide practical implications for AI-developers, suggesting the importance of creating anthropomorphic, human-like chatbots (even ones lacking animacy) that can induce users’ feelings of social presence and imagery processing. This finding can be applied to the novel context of anthropomorphic AI-based marketing communication about fashion products and tourism/hospitality services, thus providing managerial implications for marketers. More specifically, the current data provide practical recommendations for AI-developers and marketers: tourism marketing managers and fashion marketing managers need to harness socially interactive AI-chatbots that can prompt consumers to imagine and visualize their consumption scenarios and behaviors in the pre-purchase/consumption phase. Otherwise stated, (1) increasing anthropomorphism of chatbots and inducing the sense of being co-present with the chatbots are important factors AI-chatbot designers and developers need to consider and (2) inducing vivid visualization and increasing the sense of possessing the products endorsed by the chatbots are important variables marketers need to understand.

5.3. Limitations and conclusion

Several limitations of our research include (1) the non-significant paths from animacy to social presence and imagery processing (H1b and H2b rejected) due to the non-animated nature of the conversation snapshots shown to the participants prior to the survey; (2) absence of observation of consumers’ interaction with AI-chatbots in real time, in-depth focus group interviews with AI-user communities, and longitudinal studies of human-chatbot-relationships (Skjuve et al., 2021); (3) lack of examination of the moderating effects of psychological ownership (Ding et al., 2021; S. Lee & Kim, 2020; Mishra & Malhotra, 2021), product/service/consumption type (S. A. Lee & Oh, 2021), advertising intrusiveness (Mishra & Malhotra, 2021), brand attachment (MacInnis & Folkes, 2017), consumers’ uncertainty avoidance (Velasco et al., 2021), AI preference (Prentice et al., 2020), and so forth; and (4) lack of discussion about the dark side of AI-based chatbot technology and negative experiences consumers might encounter during their interaction with chatbots.

With regard to the first limitation, although participants were exposed to the AI-chatbot’s non-verbal communication cues (facial expression, body gesture, movement, and eye gaze), the current study setting was not technologically optimized to create animated chatbots and relied mostly on a text-based chatting function and static images of the chatbots’ non-verbal cues of animacy. Put differently, participants did not converse with the chatbot or experience a real-time interaction with it for shopping. To increase ecological isomorphism and external validity, this line of future research needs to utilize multiple modalities, including the auditory modality (e.g., voice, music, sound effects) and augmented visuals (e.g., 3D animation), beyond textual modality-based chatting and static image-based visualization. Utilization of augmented visuals will be an important addition to research on imagery processing in Human-AI-Interaction.

As for the second weakness, this study was a one-shot cross-sectional survey that mainly measured participants’ perception and evaluation of AI-chatbots. Data triangulation and replication through qualitative data collection, such as one-on-one in-depth interviews and focus group interviews regarding users’ feelings and hands-on experience, as well as longitudinal trend/panel surveys, will improve both the internal validity and the external validity of AI-chatbot research. An additional limitation concerns external validity. For data collection, this study used a scenario that asked the participants to imagine that they were searching for information about luxury products or services. Not all participants were necessarily luxury product purchasers, so the sample may not represent the relevant user group, namely consumers interested in and wealthy enough to buy luxury shoes or book luxury hotel rooms, which limits the external validity of the findings. Besides, our study examined luxury items only (Giorgio Armani shoes and a W Hotel room), which consumers may not shop for repeatedly, and they may therefore not use the brands’ chatbots continuously. This limitation raises some concern about the relevance of examining “chatbot continuance intention” for luxury brands. Unfortunately, these weaknesses cannot be fixed without conducting the study again with different populations and with significant modifications. In follow-up studies, it is worth replicating the findings by recruiting only those who have purchased luxury products or services and/or investigating “chatbot continuance intention” in the context of non-luxury products or services.

Regarding the third limitation, this study lacks analysis and discussion of relevant moderators and of the roles of users’ personality and individual difference factors in Human-AI-Interaction. Furthermore, this study examined only two product/service categories (fashion and tourism). The underexplored moderating role of different retail categories, such as low-involvement sectors (e.g., fast fashion items, routine grocery shopping, low-cost stationery items, etc.) versus high-involvement sectors (e.g., luxury fashion items, long-term telecommunication services, high-cost technology items, etc.), may provide differentiated practical recommendations for AI-chatbot developers, since differences in the level of consumers’ involvement with products/services lead to differential attitudes toward, perception of, evaluation of, and willingness to adopt innovative technologies like AI-chatbots (Tran et al., 2021). Follow-up studies need to address these limitations to provide a deeper understanding of user experience (UX) in chatbot-based marketing communication and brand management.

With regard to the fourth limitation, additional studies need to include such factors as creepiness, privacy concerns, and technology anxiety in the theoretical model (Rajaobelina et al., 2021; Youn & Jin, 2021) to provide a balanced view on AI-enabled chatbots and the dark side of user experiences (UX) in human-chatbot-interaction. Negative physiological responses, uncomfortable emotions, privacy concerns, and technology anxiety may decrease customer loyalty and continuance intention (Rajaobelina et al., 2021). Future research needs to propose an integrative model that includes both the positive and negative aspects of UX in human-AI-interaction.

Despite several caveats, we believe our study could serve as a stepping stone toward more provocative discussions and fruitful studies about constructive ways to harness AI for users’ wellbeing (Skjuve et al., 2021) and for the benefit of humanity (Feijóo et al., 2020) as well as “to create a sustainable future with AI” (Tussyadiah, 2020, p. 1), by elucidating novel predictors of chatbot continuance intention in human-centered AI interfaces.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

S. Venus Jin

S. Venus Jin is a Full Professor and Director of the Communication Program at Northwestern University in Qatar. Her research focuses on human-computer-interaction, artificial intelligence, and influencer marketing. Her publications appear in Journal of Communication, New Media & Society, Journal of Advertising, Telematics & Informatics, and Journal of Interactive Marketing, among others.

Seounmi Youn

Seounmi Youn is a Full Professor of marketing communication at Emerson College in Boston, MA. Her research focuses on new media technologies and their impact on consumer psychology. Her publications appear in Journal of Computer-Mediated Communication, Computers in Human Behavior, Telematics & Informatics, and Communication Research, among others.

References

- Anderson, J. C., & Gerbing, D. W. (1988). Structural equation modeling in practice: A review and recommended two-step approach. Psychological Bulletin, 103(3), 411–423. https://doi.org/10.1037/0033-2909.103.3.411

- Aoki, N. (2020). An experimental study of public trust in AI chatbots in the public sector. Government Information Quarterly, 37(4), 101490. https://doi.org/10.1016/j.giq.2020.101490

- Argyriou, E. (2012). Consumer intentions to revisit online retailers: A mental imagery account. Psychology & Marketing, 29(1), 25–35. https://doi.org/10.1002/mar.20405

- Arthur, R. (2017, December 8). Louis Vuitton becomes latest luxury brand to launch a chatbot. Forbes. https://www.forbes.com/sites/rachelarthur/2017/12/08/louis-vuitton-becomes-latest-luxury-brand-to-launch-a-chatbot/?sh=219b1b2efe10

- Asatryan, V. S., & Oh, H. (2008). Psychological ownership theory: An exploratory application in the restaurant industry. Journal of Hospitality & Tourism Research, 32(3), 363–386. https://doi.org/10.1177/1096348008317391

- Ashfaq, M., Yun, J., Yu, S., & Loureiro, S. M. (2020). I, Chatbot: Modeling the determinants of users’ satisfaction and continuance intention of AI-powered service agents. Telematics and Informatics, 54, 101473. https://doi.org/10.1016/j.tele.2020.101473

- Aydınoğlu, N. Z., & Krishna, A. (2019). The power of consumption-imagery in communicating retail-store deals. Journal of Retailing, 95(4), 116–127. https://doi.org/10.1016/j.jretai.2019.10.010

- Aylwin, S. (1990). Imagery and affect: Big questions, little answers. In P. J. Hampson, D. F. Marks, & J. E. Richardson (Eds.), Imagery: Current developments (pp. 247–267). Taylor & Francis/Routledge.

- Barnett, A., Savic, M., Pienaar, K., Carter, A., Warren, N., Sandral, E., Manning, V., & Lubman, D. I. (2021). Enacting ‘more-than-human’ care: Clients’ and counsellors’ views on the multiple affordances of chatbots in alcohol and other drug counselling. The International Journal on Drug Policy, 94, 102910. https://doi.org/10.1016/j.drugpo.2020.102910

- Bartneck, C., Kulić, D., Croft, E., & Zoghbi, S. (2009). Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. International Journal of Social Robotics, 1(1), 71–81. https://doi.org/10.1007/s12369-008-0001-3

- Belanche, D., Casaló, L. V., Schepers, J., & Flavián, C. (2021). Examining the effects of robots’ physical appearance, warmth, and competence in frontline services: The humanness-value-loyalty model. Psychology & Marketing, 38(12), 2357–2376. https://doi.org/10.1002/mar.21532

- Belk, R. W. (1988). Possessions and the extended self. Journal of Consumer Research, 15(2), 139–168. https://doi.org/10.1086/209154

- Bhattacherjee, A. (2001a). Understanding information systems continuance: An expectation-confirmation model. MIS Quarterly, 25(3), 351–370. https://doi.org/10.2307/3250921

- Bhattacherjee, A. (2001b). An empirical analysis of the antecedents of electronic commerce service continuance. Decision Support Systems, 32(2), 201–214. https://doi.org/10.1016/S0167-9236(01)00111-7

- Bolen, M. C. (2020). Exploring the determinants of users’ continuance intention in smartwatches. Technology in Society, 60, 101209. https://doi.org/10.1016/j.techsoc.2019.101209

- Bossens, D. M., Townsend, N. C., & Sobey, A. J. (2019). Learning to learn with active adaptive perception. Neural Networks, 115, 30–49. https://doi.org/10.1016/j.neunet.2019.03.006

- Browne, M. W., & Cudeck, R. (1993). Alternative ways of assessing model fit. In K. A. Bollen & J. S. Long (Eds.), Testing structural equation models (pp. 136–162). Sage.

- Carrozzi, A., Chylinski, M., Heller, J., Hilken, T., Keeling, D. I., & de Ruyter, K. (2019). What’s mine is a hologram? How shared augmented reality augments psychological ownership. Journal of Interactive Marketing, 48(1), 71–88. https://doi.org/10.1016/j.intmar.2019.05.004

- Castro-Gonzalez, A., Admoni, H., & Scassellati, B. (2016). Effects of form and motion on judgements of social robots’ animacy, likability, trustworthiness, and unpleasantness. International Journal of Human-Computer Studies, 90, 27–38. https://doi.org/10.1016/j.ijhcs.2016.02.004

- Chattaraman, V., Kwon, W. S., Gilbert, J. E., & Ross, K. (2019). Should AI-based, conversational digital assistants employ social- or task-oriented interaction style? A task-competency and reciprocity perspective for older adults. Computers in Human Behavior, 90, 315–330. https://doi.org/10.1016/j.chb.2018.08.048

- Chaves, A. P., & Gerosa, M. A. (2021). How should my chatbot interact? A survey on social characteristics in human-chatbot-interaction design. International Journal of Human–Computer Interaction, 37(8), 729–758. https://doi.org/10.1080/10447318.2020.1841438

- Cheng, Y., & Jiang, H. (2020). How do AI-driven chatbots impact user experience? Examining gratifications, perceived privacy risk, satisfaction, loyalty, and continued use. Journal of Broadcasting & Electronic Media, 64(4), 592–614. https://doi.org/10.1080/08838151.2020.1834296

- Childers, T., & Houston, M. J. (1984). Conditions for a picture-superiority effect on consumer memory. Journal of Consumer Research, 11(2), 643–654. https://doi.org/10.1086/209001

- Chong, T., Yu, T., Keeling, D. I., & Ruyter, K. (2021). AI-chatbots on the services frontline addressing the challenges and opportunities of agency. Journal of Retailing and Consumer Services, 63, 102735. https://doi.org/10.1016/j.jretconser.2021.102735

- Chuah, S. H.-W. (2019). You inspire me and make my life better: Investigating a multiple sequential mediation model of smartwatch continuance intention. Telematics and Informatics, 43, 101245. https://doi.org/10.1016/j.tele.2019.101245

- Chung, M., Ko, E., Joung, H., & Kim, S. J. (2020). Chatbot e-service and customer satisfaction regarding luxury brands. Journal of Business Research, 117, 587–595. https://doi.org/10.1016/j.jbusres.2018.10.004

- Dai, H. M., Teo, T., & Rappa, N. A. (2020). Understanding continuance intention among MOOC participants: The role of habit and MOOC performance. Computers in Human Behavior, 112, 106455. https://doi.org/10.1016/j.chb.2020.106455

- Debevec, K., & Romeo, J. B. (1992). Self-referent processing in perceptions of verbal and visual commercial information. Journal of Consumer Psychology, 1(1), 83–102. https://doi.org/10.1016/S1057-7408(08)80046-0

- Delgosha, M. S., & Hajiheydari, N. (2021). How human users engage with consumer robots? A dual model of psychological ownership and trust to explain post-adoption behaviours. Computers in Human Behavior, 117, 106660. https://doi.org/10.1016/j.chb.2020.106660

- Ding, Z., Sun, J., Wang, Y., Jiang, X., Liu, R., Sun, W., Mou, Y., Wang, D., & Liu, M. (2021). Research on the influence of anthropomorphic design on the consumers’ express packaging recycling willingness: The moderating effect of psychological ownership. Resources, Conservation, and Recycling, 168, 105269. https://doi.org/10.1016/j.resconrec.2020.105269

- Feijóo, C., Kwon, Y., Bauer, J. M., Bohlin, E., Howell, B., Jain, R., Potgieter, P., Vu, K., Whalley, J., & Xia, J. (2020). Harnessing artificial intelligence (AI) to increase wellbeing for all: The case for a new technology diplomacy. Telecommunications Policy, 44(6), 101988. https://doi.org/10.1016/j.telpol.2020.101988

- Fodor, J. A. (1981). Imagistic representation. In N. Block (Ed.), Imagery (pp. 63–86). MIT Press.

- Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39–50. https://doi.org/10.1177/002224378101800104

- Fuentes-Moraleda, L., Díaz-Pérez, P., Orea-Giner, A., Muñoz-Mazón, A., & Villacé-Molinero, T. (2020). Interaction between hotel service robots and humans: A hotel-specific service robot acceptance model (sRAM). Tourism Management Perspectives, 36, 100751. https://doi.org/10.1016/j.tmp.2020.100751

- Ha, S., Huang, R., & Park, J.-S. (2019). Persuasive brand messages in social media: A mental imagery processing perspective. Journal of Retailing and Consumer Services, 48, 41–49. https://doi.org/10.1016/j.jretconser.2019.01.006

- Hair, J. F., Black, W. C., & Babin, B. J. (2006). Multivariate data analysis (6th ed.). Prentice Hall.

- Hepola, J., Leppaniemi, M., & Karjaluoto, H. (2020). Is it all about consumer engagement? Explaining continuance intention for utilitarian and hedonic service consumption. Journal of Retailing and Consumer Services, 57, 102232. https://doi.org/10.1016/j.jretconser.2020.102232

- Hooi, R., & Cho, H. (2017). Virtual world continuance intention. Telematics and Informatics, 34(8), 1454–1464. https://doi.org/10.1016/j.tele.2017.06.009

- Hu, Y. (2019). Do people want to message chatbots? Developing and comparing the usability of a conversational vs. menu-based chatbot in context of new hire onboarding [Master’s thesis]. Aalto University. http://urn.fi/URN:NBN:fi:aalto-201910275838

- Jahanmir, S. F., Silva, G. M., Gomes, P. J., & Goncalves, H. M. (2020). Determinants of users’ continuance intention toward digital innovations: Are late adopters different? Journal of Business Research, 115, 225–233. https://doi.org/10.1016/j.jbusres.2019.11.010

- Jin, S. A. (2011). I feel present. Therefore, I experience flow: A structural equation modeling approach to flow and presence in video games. Journal of Broadcasting & Electronic Media, 55(1), 114–136. https://doi.org/10.1080/08838151.2011.546248

- Jin, S. A. (2012). Toward integrative models of flow: Effects of performance, skill, challenge, playfulness, and presence on flow in video games. Journal of Broadcasting & Electronic Media, 56(2), 169–186. https://doi.org/10.1080/08838151.2012.678516

- Jin, S. V., & Ryu, E. (2019). Instagram fashionistas, luxury visual image strategies, and vanity. Journal of Product & Brand Management, 29(3), 355–368. https://doi.org/10.1108/JPBM-08-2018-1987

- Jöreskog, K. G., & Sörbom, D. (1993). LISREL 8: Structural equation modeling with the SIMPLIS command language. Scientific Software International.

- Jumaan, I. A., Hashim, N. H., & Al-Ghazali, B. M. (2020). The role of cognitive absorption in predicting mobile Internet users’ continuance intention: An extension of the expectation-confirmation model. Technology in Society, 22, 101355. https://doi.org/10.1016/j.techsoc.2020.101355

- Jung, Y. (2011). Understanding the role of sense of presence and perceived autonomy in users’ continued use of social virtual worlds. Journal of Computer-Mediated Communication, 16(4), 492–510. https://doi.org/10.1111/j.1083-6101.2011.01540.x

- Kamleitner, B., & Feuchtl, S. (2015). “As if it were mine”: Imagery works by inducing psychological ownership. Journal of Marketing Theory and Practice, 23(2), 208–223. https://doi.org/10.1080/10696679.2015.1002337

- Karahanna, E., Straub, D. W., & Chervany, N. L. (1999). Information technology adoption across time: A cross-sectional comparison of pre-adoption and post-adoption beliefs. MIS Quarterly, 23(2), 183–213. https://doi.org/10.2307/249751

- Keenan, M. (2022). The 14 best chatbot examples in 2022 (and how to build your own). ManyChat. https://manychat.com/blog/chatbot-examples/.

- Kiesler, S., Powers, A., Fussell, S., & Torrey, C. (2008). Anthropomorphic interactions with a robot and robot-like agent. Social Cognition, 26(2), 169–181. https://doi.org/10.1521/soco.2008.26.2.169

- Kim, S. B., Kim, D.-Y., & Bolls, P. (2014). Tourist mental-imagery processing: Attention and arousal. Annals of Tourism Research, 45, 63–76. https://doi.org/10.1016/j.annals.2013.12.005

- Kim, J., & Swaminathan, S. (2021). Time to say goodbye: The impact of anthropomorphism on selling prices of used products. Journal of Business Research, 126, 78–87. https://doi.org/10.1016/j.jbusres.2020.12.046

- Kirk, C. P., Peck, J., & Swain, S. D. (2018). Property lines in the mind: Consumers’ psychological ownership and their territorial responses. Journal of Consumer Research, 45(1), 148–168. https://doi.org/10.1093/jcr/ucx111

- Kumar, J., & Nayak, J. K. (2019). Exploring destination psychological ownership among tourists: Antecedents and outcomes. Journal of Hospitality and Tourism Management, 13, 30–39. https://doi.org/10.1016/j.jhtm.2019.01.006

- Lee, W., & Gretzel, U. (2012). Designing persuasive destination websites: A mental imagery processing perspective. Tourism Management, 33(5), 1270–1280. https://doi.org/10.1016/j.tourman.2011.10.012

- Lee, S. A., & Oh, H. (2021). Anthropomorphism and its implications for advertising hotel brands. Journal of Business Research, 129, 455–464. https://doi.org/10.1016/j.jbusres.2019.09.053

- Lee, M., & Park, J. S. (2022). Do parasocial relationships and the quality of communication with AI shopping chatbots determine middle‐aged women consumers’ continuance usage Intentions? Journal of Consumer Behaviour, 21(4), 842–854. https://doi.org/10.1002/cb.2043

- Lee, K. M., Peng, W., Jin, S. A., & Yan, C. (2006). Can robots manifest personality?: An empirical test of personality recognition, social responses, and social presence in human–robot interaction. Journal of Communication, 56(4), 754–772. https://doi.org/10.1111/j.1460-2466.2006.00318.x

- Lee, S., & Kim, D.-Y. (2020). The BRAND tourism effect on loyal customer experiences in luxury hotel: The moderating role of psychological ownership. Tourism Management Perspectives, 35, 100725. https://doi.org/10.1016/j.tmp.2020.100725

- Lee, J. E., & Shin, E. (2020). The effects of apparel names and visual complexity of apparel design on consumers’ apparel product attitudes: A mental imagery perspective. Journal of Business Research, 120, 407–417. https://doi.org/10.1016/j.jbusres.2019.08.023

- Li, C.-Y., & Fang, Y.-H. (2019). Predicting continuance intention toward mobile branded apps through satisfaction and attachment. Telematics and Informatics, 43, 101248. https://doi.org/10.1016/j.tele.2019.101248

- Li, S., Qu, H., & Wei, M. (2021). Antecedents and consequences of hotel customers’ psychological ownership. International Journal of Hospitality Management, 93, 102773. https://doi.org/10.1016/j.ijhm.2020.102773

- Loussaief, L., Ulrich, I., & Damay, C. (2019). How does access to luxury fashion challenge self-identity? Exploring women’s practices of joint and non-ownership. Journal of Business Research, 102, 263–272. https://doi.org/10.1016/j.jbusres.2019.02.020

- MacInnis, D., & Folkes, V. (2017). Humanizing brands: When brands seem to like me, part of me, and in a relationship with me. Journal of Consumer Psychology, 27(3), 355–374. https://doi.org/10.1016/j.jcps.2016.12.003

- MacInnis, D. J., & Price, L. L. (1987). The role of imagery in information processing: Review and extensions. Journal of Consumer Research, 13(4), 473–491. https://doi.org/10.1086/209082

- Melian-Gonzalez, S., Gutierrez-Tano, D., & Bulchand-Gidumal, J. (2021). Predicting the intentions to use chatbots for travel and tourism. Current Issues in Tourism, 24(2), 192–210. https://doi.org/10.1080/13683500.2019.1706457

- Miao, F., Kozlenkova, I. V., Wang, H., Xie, T., & Palmatier, R. W. (2022). An emerging theory of avatar marketing. Journal of Marketing, 86(1), 67–90. https://doi.org/10.1177/0022242921996646

- Mishra, S., & Malhotra, G. (2021). The gamification of in-game advertising: Examining the role of psychological ownership and advertisement intrusiveness. International Journal of Information Management, 61, 102245. https://doi.org/10.1016/j.ijinfomgt.2020.102245

- Morewedge, C. K. (2021). Psychological ownership: Implicit and explicit. Current Opinion in Psychology, 39, 125–132. https://doi.org/10.1016/j.copsyc.2020.10.003

- Morozova, A. (2018, January 18). Burberry, Victoria’s Secret, Tommy Hilfiger: How major fashion retailers experiment with chatbots. https://jasoren.com/burberry-victorias-secret-tommy-hilfiger-how-major-fashion-retailers-experiment-with-chatbots/.

- Muthivhi, A. E. (2015). Piaget’s theory of human development and education. International Encyclopedia of the Social & Behavioral Sciences, 18, 125–132.

- Nascimento, B., Oliveira, T., & Tam, C. (2018). Wearable technology: What explains continuance intention in smartwatches? Journal of Retailing and Consumer Services, 43, 157–169. https://doi.org/10.1016/j.jretconser.2018.03.017

- Nass, C., & Moon, Y. (2000). Machines and mindlessness: Social responses to computers. Journal of Social Issues, 56(1), 81–103. https://doi.org/10.1111/0022-4537.00153

- Nikhashemi, S. R., Knight, H. H., Nusair, K., & Liat, C. B. (2021). Augmented reality in smart retailing: A(n) (A) symmetric approach to continuous intention to use retail brands’ mobile AR apps. Journal of Retailing and Consumer Services, 60, 102464. https://doi.org/10.1016/j.jretconser.2021.102464

- Parisi, D., & Schlesinger, M. (2002). Artificial life and Piaget. Cognitive Development, 17(3–4), 1301–1321. https://doi.org/10.1016/S0885-2014(02)00119-3

- Piaget, J. (1971). Biology and knowledge. An essay on the relations between organic regulations and cognitive processes. University of Chicago Press.

- Pierce, J. L., Kostova, T., & Dirks, K. T. (2001). Toward a theory of psychological ownership in organizations. The Academy of Management Review, 26(2), 298–310. https://doi.org/10.2307/259124

- Pierce, J. L., Kostova, T., & Dirks, K. T. (2003). The state of psychological ownership: Integrating and extending a century of research. Review of General Psychology, 7(1), 84–107. https://doi.org/10.1037/1089-2680.7.1.84

- Prentice, C., Weaven, S., & Wong, I. A. (2020). Linking AI quality performance and customer engagement: The moderating effect of AI preference. International Journal of Hospitality Management, 90, 102629. https://doi.org/10.1016/j.ijhm.2020.102629

- Rajaobelina, L., Tep, S. P., Arcand, M., & Ricard, L. (2021). Creepiness: Its antecedents and impact on loyalty when interacting with a chatbot. Psychology & Marketing, 38(12), 2339–2356. https://doi.org/10.1002/mar.21548

- Rodriguez-Ardura, I., & Meseguer-Artola, A. (2016). E-learning continuance: The impact of interactivity and the mediating role of imagery, presence, and flow. Information & Management, 53(4), 504–516. https://doi.org/10.1016/j.im.2015.11.005

- Roy, R., & Phau, I. (2014). Examining regulatory focus in the information processing of imagery and analytical advertisements. Journal of Advertising, 43(4), 371–381. https://doi.org/10.1080/00913367.2014.888323

- Satisfi Labs. (2020). Solutions: For hospitality. Retrieved January 16, 2020, from https://satisfilabs.com/hospitality/

- Schlosser, A. E. (2018). What are my chances? An imagery versus discursive processing approach to understanding ratio-bias effects. Organizational Behavior and Human Decision Processes, 144, 112–124. https://doi.org/10.1016/j.obhdp.2017.11.001

- Sheehan, B., Jin, H. S., & Gottlieb, U. (2020). Customer service chatbots: Anthropomorphism and adoption. Journal of Business Research, 115, 14–24. https://doi.org/10.1016/j.jbusres.2020.04.030

- Shumanov, M., & Johnson, L. (2021). Making conversations with chatbots more personalized. Computers in Human Behavior, 117, 106627. https://doi.org/10.1016/j.chb.2020.106627

- Skjuve, M., Folstad, A., Fostervold, K. I., & Brandtzaeg, P. B. (2021). My chatbot companion: A study of human-chatbot relationships. International Journal of Human-Computer Studies, 149, 102601. https://doi.org/10.1016/j.ijhcs.2021.102601

- Smutny, P., & Schreiberova, P. (2020). Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Computers & Education, 151, 103862. https://doi.org/10.1016/j.compedu.2020.103862

- Stoner, J. L., Loken, B., & Stadler-Blank, A. (2018). The name game: How naming products increases psychological ownership and subsequent consumer evaluations. Journal of Consumer Psychology, 28(1), 130–137. https://doi.org/10.1002/jcpy.1005

- Tran, A. D., Pallant, J. I., & Johnson, L. W. (2021). Exploring the impact of chatbots on consumer sentiment and expectations in retail. Journal of Retailing and Consumer Services, 63, 102718. https://doi.org/10.1016/j.jretconser.2021.102718

- Tsai, W.-H. S., Lun, D., Carcioppolo, N., & Chuan, C.-H. (2021). Human versus chatbot: Understanding the role of emotion in health marketing communication for vaccines. Psychology & Marketing, 38(12), 2377–2392. https://doi.org/10.1002/mar.21556

- Tussyadiah, I. (2020). A review of research into automation in tourism: Launching the annals of tourism research curated collection on artificial intelligence and robotics in tourism. Annals of Tourism Research, 81, 102883. https://doi.org/10.1016/j.annals.2020.102883

- Van Dyne, L., & Pierce, J. L. (2004). Psychological ownership and feelings of possession: Three field studies predicting employee attitudes and organizational citizenship behavior. Journal of Organizational Behavior, 25(4), 439–459. https://doi.org/10.1002/job.249

- van Esch, P., Arli, D., Gheshlaghi, M. H., Andonopoulos, V., von der Heidt, T., & Northey, G. (2019). Anthropomorphism and augmented reality in the retail environment. Journal of Retailing and Consumer Services, 49, 35–42. https://doi.org/10.1016/j.jretconser.2019.03.002

- Velasco, F., Yang, Z., & Janakiraman, N. (2021). A meta-analytic investigation of consumer response to anthropomorphic appeals: The roles of product type and uncertainty avoidance. Journal of Business Research, 131, 735–746. https://doi.org/10.1016/j.jbusres.2020.11.015

- Walch, K. (2019). AI’s increasing role in customer service. Cognitive World. https://www.forbes.com/sites/cognitiveworld/2019/07/02/ais-increasing-role-in-customer-service/?sh=3f03677a73fc#1fafeb2d73fc/

- Wang, C., Teo, T. S. H., & Liu, L. (2020). Perceived value and continuance intention in mobile government service in China. Telematics and Informatics, 48, 101348. https://doi.org/10.1016/j.tele.2020.101348

- Wang, X., Abdelhamid, M., & Sanders, G. L. (2021). Exploring the effects of psychological ownership, gaming motivations, and primary/secondary control on online game addiction. Decision Support Systems, 144(9), 113512. https://doi.org/10.1016/j.dss.2021.113512

- Wang, W. T., Ou, W. M., & Chen, W. Y. (2019). The impact of inertia and user satisfaction on the continuance intentions to use mobile communication applications: A mobile service quality perspective. International Journal of Information Management, 44, 178–193. https://doi.org/10.1016/j.ijinfomgt.2018.10.011

- Wirtz, J., Patterson, P. G., Kunz, W. H., Gruber, T., Lu, V. N., Paluch, S., & Martins, A. (2018). Brave new world: Service robots in the frontline. Journal of Service Management, 29(5), 907–931. https://doi.org/10.1108/JOSM-04-2018-0119

- Witmer, B. G., & Singer, M. J. (1998). Measuring presence in virtual environments: A presence questionnaire. Presence: Teleoperators and Virtual Environments, 7(3), 225–240. https://doi.org/10.1162/105474698565686

- Yan, M., Filieri, R., & Gorton, M. (2021). Continuance intention of online technologies: A systematic literature review. International Journal of Information Management, 58, 102315. https://doi.org/10.1016/j.ijinfomgt.2021.102315

- Yang, X. (2021). Determinants of consumers’ continuance intention to use social recommender systems: A self-regulation perspective. Technology in Society, 64, 101464. https://doi.org/10.1016/j.techsoc.2020.101464

- Youn, S., & Jin, S. V. (2021). In A.I. we trust? The effects of parasocial interaction and technopian versus luddite ideological views on chatbot-based customer relationship management in the emerging feeling economy. Computers in Human Behavior, 119, 106721. https://doi.org/10.1016/j.chb.2021.106721

- Yuan, C., Wang, S., Yu, X., Kim, K. H., & Moon, H. (2021). The influence of flow experience in the augmented reality context on psychological ownership. International Journal of Advertising, 40(6), 922–944. https://doi.org/10.1080/02650487.2020.1869387

Appendix A