Abstract

Internet of things (IoT) technology must provide users with benefits that outweigh the costs and risks for it to be widely accepted and adopted. New functionality is continuously added, and costs decrease with the increasing volumes and technological advances; however, the risks also increase as technology becomes increasingly integrated into human lives. This survey study of 251 respondents investigated how users and different user groups perceive IoT security and privacy in the home domain, their trust in the involved parties, and how different types of measures, as suggested by the literature, can affect user adoption. The results indicate that people are particularly concerned about security and privacy and are fearful of breaches and misuse. A large majority of the people do not believe the products to be secure. Less than a third of the users trust manufacturers, service providers, and governments to uphold their privacy; this remarkably hinders IoT adoption.

1. Introduction

The Internet of things (IoT) can facilitate communication among various devices and users to afford knowledge and control that can help improve human lives (Mohamed, Citation2019; Patel & Patel, Citation2016; Vermesan et al., Citation2009). IoT devices are known to be mostly autonomous in both operation and configuration, last a long time on their energy sources, have efficient communication that ensures the quality of service, and can interact with other devices or platforms through different networks; however, they are also notorious for their weak security and privacy (Mohamed, Citation2019). Securing IoT devices and services is a significant challenge (Ismagilova et al., Citation2019; Tawalbeh et al., Citation2020; Zhou et al., Citation2017) because their power and computational resources are limited, and they often use wireless communication. Therefore, controls are absent or limited, making devices vulnerable to attacks (Alladi et al., Citation2020).

Lu et al. (Citation2018) synthesized IoT applications in 14 service domains by categorizing them into four types according to their target and scope of adoption: the infrastructural level (e.g., smart home domain), organizational level (e.g., smart agriculture), individual level (e.g., wearables), and all-inclusive level (e.g., self-driving cars). While IoT security and privacy have been important challenges in all these application domains, the smart home domain needs particular attention, as it involves human daily life, and the users are engaged with IoT technologies in their daily use context and real-life settings (Ismagilova et al., Citation2020).

By 2025, 5G technology will enable more devices and features that will continue to drive growth, reaching between 20 and 43 billion devices (Popescu et al., Citation2021). The rapid development and spread of technology have resulted in an increasing number of threats affecting personal security and privacy, particularly in the smart home domain. In just two years, the number of IoT malware samples collected by Kaspersky Lab increased from just over 3000 to over 121,000, while only one in ten companies were confident in securing their IoT devices (Mehdipour, Citation2020). If these threats are not appropriately addressed and serious incidents continue to occur regularly, user trust and IoT adoption will be negatively affected, making security, privacy, and trust the key limitations of IoT (Popescu et al., Citation2021).

These problems make the current research in IoT as much focused on security issues as on new technology (Lu et al., Citation2018); however, IoT security areas are often overlooked by the developers because they prioritize bringing products to the market as fast as possible. This will be a significant problem because future development is dependent on users accepting and adopting IoT technology (Kim & Kim, Citation2016; Rekha et al., Citation2021).

Because a regular user is not an IoT or security expert and would not have the ability to evaluate risks or security and privacy measures, user trust will be critical for IoT development. Macedo et al. (Citation2019) found that most research related to IoT focuses on authentication techniques, whereas trust is the least studied aspect, with only a few papers addressing it. Their results highlight that it is unclear as to how to gain the users’ trust, which is fundamental for the future of IoT and needs to be studied further.

The impact of IoT innovations on daily human lives is both inevitable and undeniable. With advanced IoT technologies in society, particularly in people’s everyday living spaces (i.e., their homes), the social aspects of everyday life become intertwined with technical aspects (Mumford, Citation2006; Pilemalm et al., Citation2007). Therefore, people carefully consider the use and adoption of IoT technologies in their home environment, particularly because of the privacy and security threats that they might face (Lin & Bergmann, Citation2016). This may, in turn, affect the adoption rate of IoT solutions in the home domain (Neupane et al., Citation2021). Researchers have therefore called for further research on the effects of privacy and security on user adoption in the smart home domain (e.g., Alnahari & Quasim, Citation2021; Ismagilova et al., Citation2020). Despite this, even the few studies that have investigated the effects of privacy and security on IoT adoption in smart cities have mainly considered the whole city as the context of their study and taken a broader organizational perspective (Ismagilova et al., Citation2020; Tawalbeh et al., Citation2020). Consequently, there is a dearth of research that considers real or potential users’ perspectives to demonstrate the effects of perceived security and privacy issues on IoT adoption for smart home products; this area must be studied further.

Accordingly, this study focused on the security and privacy issues that users are most concerned with in the home domain, and what producers and service providers can do to gain user trust in their products and promote adoption. This study was guided by the following research questions:

What effect do the perceived security and privacy issues of smart products have on technology adoption among current and potential users in the home domain?

What commonly suggested measures should the industry implement to gain user trust to facilitate the adoption of IoT technology in the home domain?

To answer these research questions, a quantitative research methodology was employed: an online survey was distributed and collected 251 responses. The remainder of this article is structured as follows. The next section outlines the current state of the research, followed by the research methodology section, which explains the overall approach used to conduct this study. The results of this study are then described, and the collected data are analyzed. Finally, we discuss the findings, the contribution of the research to theory and practice, as well as limitations and future research.

2. Literature review

The home domain is a living area where users can access anything from a single device or system to a smart home. A smart home can be defined as “a residence equipped with a high-tech network, linking sensors and domestic devices, appliances, and features that can be remotely monitored, accessed or controlled, and provide services that respond to the needs of its inhabitants” (Balta-Ozkan et al., Citation2013, p. 364). This study aimed to explore what producers and service providers can do to gain trust in their products and the effect that different measures would have on user adoption.

While innovation adoption and diffusion concepts have been used interchangeably by some researchers, they differ in nature. Innovation adoption investigates individuals’ choice to accept or reject a particular innovation, whereas innovation diffusion describes how an innovation spreads through a population (Straub, Citation2009). Therefore, innovation adoption is more focused on the micro perspective (decision of the user at the individual level), whereas diffusion of innovation emphasizes the macro perspective at the organizational level (Frambach & Schillewaert, Citation2002; Hameed et al., Citation2012).

At the individual level, and from the users’ perspective, diffusion of innovation theory (DOI), and more specifically its innovation decision process (Rogers, Citation2003), explains the successful spread of technology over time using the following five steps: knowledge, where the user becomes aware of the existence of the technology and what it can do; persuasion, where the user can be easily influenced and forms an attitude toward the technology; decision, where the user adopts or rejects the technology; implementation, which is the first use; and confirmation, where the user tries to validate that it was the right decision. For technology to be viable, it must be commonly adopted and reach a critical mass (Padyab et al., Citation2019). Several studies have shown that security and privacy factors have a significant impact on IoT purchase intention, adoption, and usage (Chang et al., Citation2014; Johnson et al., Citation2020; Lee, Citation2019; Lu et al., Citation2018; Padyab et al., Citation2019); this implies that users’ perceptions of security and privacy affect several DOI steps.

Prior studies show that both current and potential users are highly concerned about home privacy and that their concerns affect the acceptance of home IoT services. Some of the concerns are how IoT data are transferred, managed, and stored in cloud-centric systems, where they are frequently attacked by cyber criminals, which can lead to data breaches where the attackers use the data for identity theft or extortion (Saura et al., Citation2021). Another concern is physical intrusion, where a malicious actor can gain physical control over devices such as a camera. A breach could also lead to physical damage if the attacker, for example, takes control of a smart door lock, or even fatalities in the case of an attack against an electric smart plug or a smart insulin pump (Lee, Citation2020). For IoT devices to be used in the home domain, people must feel that their privacy is not violated. Hence, apart from collecting the data necessary for technology to work, they need to feel safe that their personal information is well protected against malicious users (Ismagilova et al., Citation2020).

Because user acceptance of the technology is critical to its success, adoption should be the primary focus (Li et al., Citation2021). Actions must be taken to make the products privacy-friendly and minimize the risks of data leakage or misuse. Addressing users’ risk concerns and providing transparency and familiarity are crucial to gaining the trust that facilitates adoption (Li et al., Citation2021; Alraja et al., Citation2019). Trust is needed because it is difficult for a regular consumer to know if a device is secure enough; thus, privacy and trust are the main factors for users when deciding whether to use a system or not (Schomakers et al., Citation2021). The importance of trust increases with the development of the IoT (Lee, Citation2019), and lack of trust, privacy, and security issues are barriers that need to be addressed continuously throughout the product life cycle (Padyab et al., Citation2019).

If companies desire to sell more IoT products, they should not only aim to get the products on the market as soon as possible but also invest in safeguarding user privacy, informing the users about the risks, and educating them on how to manage their IoT products (Lu et al., Citation2018). Users must feel that they have the ability to easily control the behavior of their devices (Strous et al., Citation2021), control their data (Lu et al., Citation2018; Rau et al., Citation2015), and ensure that sensitive data are exchanged between trusted parties in an easy and protected way. The amount of collected data should be kept to a minimum, and users should be able to choose their level of privacy (Ogonji et al., Citation2020). At present, privacy and security concerns related to IoT solutions have become more apparent, as many of these IoT devices are cloud-based, meaning that the data are stored in the cloud, which is more vulnerable to attacks and data leakage (Zhou et al., Citation2017).

Customers’ privacy concerns can also be considerably lowered by showing compliance with regulations, such as GDPR (General Data Protection Regulation), which involves applying privacy by design, using trust badges for third-party companies, informing users about the risks, and having them influence the data collection and usage by providing them the option to opt in or out while making it clear why the data are collected and the consequences of their choices (Leroux & Pupion, Citation2022; Padyab et al., Citation2019). Saura et al. (Citation2022), who studied how government AI strategies can risk citizens’ privacy and affect collective behavior, pointed out that the choice of having the data transferred to a third party is not really a choice at present. Users often need to accept a privacy policy before using an application. If they decline, they would not be able to use the application. Saura et al. went so far as to call it blackmail and called for the government to regulate data management for user integrity. Both producers and service providers can be bound by a legal framework to achieve a reasonable level of privacy and choice (Li et al., Citation2021). However, the ethical regulations of data collection and management do not progress at the same pace as technology, thus negatively affecting user privacy (Saura et al., Citation2022). The use of a large amount of data to predict behavior can systematically violate the user’s privacy, without the government even realizing it, owing to a lack of awareness or understanding of the technology (Saura et al., Citation2022).

The GDPR addresses users’ privacy concerns by requiring privacy by design, privacy by default, transparency, and informed consent. User-centric privacy-preserving guidelines can be based on, and comply with, GDPR to ensure users’ privacy and control over their data (Kounoudes & Kapitsaki, Citation2020). Developers need to provide the end users with easy-to-use tools for configuring, enforcing, and monitoring security and privacy policies, and the industry needs to come up with standard protocols and certification frameworks where labels can be used to declare security and privacy levels. Overall, an end-to-end holistic approach needs to be used, and different responsibilities need to be clarified (Ziegler et al., Citation2019).

Privacy by design can be based on seven ground principles—privacy needs to be proactive; enabled by default; embedded in the design; not affecting the functionality negatively; protected everywhere in the system during the whole lifecycle; transparent to gain trust; and respectful of the users, giving them control over their data (Weinberg et al., Citation2015). To protect the data on all layers throughout the life cycle, security by design should be implemented for each component by having them work together to keep the entire unit secure because a breach in one part can affect the entire system (Macedo et al., Citation2019; Strous et al., Citation2021).

Security labeling has been shown to help consumers make informed choices by showing them what products they can trust. A study by Johnson et al. (Citation2020) showed that consumers were willing to pay 25%–38% more for four different types of smart products with security labels in comparison to the same products without labels. This suggests that there exists a significant economic incentive for producers to improve security in IoT products as long as the customers are informed about it and that the cost of improving security should not be a hindrance.

To protect the IoT system, security needs to be integrated into its lifecycle. The system should be constantly monitored so that threats can be detected in all security layers. If a device is attacked, actions should be taken to eliminate the threat and ensure that it does not spread while the system is self-healing (Chang & Hung, Citation2021; Iqbal et al., Citation2020; Mehdipour, Citation2020). Therefore, important general IoT security measures for producers would be to secure the network with regular patching, updates, antivirus, firewalls, and IDS/IPS with real-time and predictive analytics to monitor the system, implement two-factor authentication including biometrics, and secure the apps by integrating security in the design and encrypting the data. Producers should not launch new products until any security issues are appropriately managed, and they should be updated regarding the most recent threats (Omolara et al., Citation2022).

While research on security technology is progressing rapidly, Macedo et al. (Citation2019) argue that the user perception of IoT security and privacy that creates trust needs to be examined in detail, specifically, how that trust can increase and how it affects IoT adoption. One important missing aspect in the reviewed literature, as briefly stated in the Introduction, is the users’ perspective (particularly those who are not currently using IoT technologies). Although the importance of security and privacy in the adoption of IoT solutions has been acknowledged by researchers, the effects of privacy and security aspects on the adoption of IoT technologies have not been at the center of attention in the above-mentioned studies. This can be explained by the dominant organizational perspective regarding the adoption of innovations (Rogers, Citation2003), as well as the prioritization of the adoption rate among current users over the needs, motivations, and expectations of future or potential users (Habibipour, Citation2020).

Another aspect that should be considered is that most of those studies (e.g., Lee, Citation2020; Li et al., Citation2021) focus on technology acceptance, which involves less commitment than the actual adoption of technology by current or future users in their real-life setting. While technology acceptance mostly concerns the ability of a user or organization to accept and use the technology, adoption is associated with a more long-term commitment and the decision of users to adopt and use the technology in the long run (Hameed et al., Citation2012; Rogers, Citation2003).

3. Research methodology

We adopted a positivistic approach to quantitative descriptive survey research (Leedy & Ormrod, Citation2015). A quantitative approach is suitable for this explanatory research because the study examines the effects of privacy and security on IoT adoption in a social context (Powell, Citation2020). Additionally, a quantitative research approach is more suitable when the problem involves a limited set of variables (Bloomfield & Fisher, Citation2019). The literature review was used to find security and privacy concerns creating barriers to IoT adoption, together with possible measures that would lower users’ risk perception and facilitate adoption. A survey was then conducted to examine the degree of importance of such concerns, trust, and the significance of the suggested measures on IoT adoption. The study follows Punch’s (Citation2003) systematic research model for survey studies.

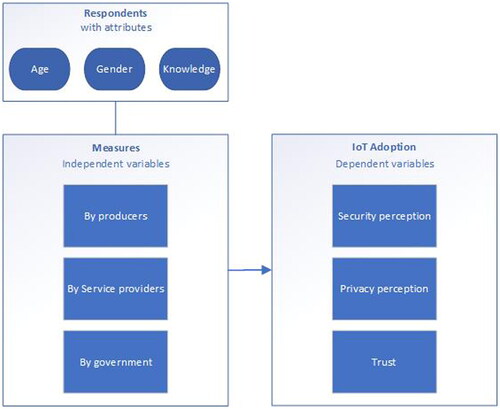

The initial list of influential factors on the adoption of IoT technologies in the home domain from the users’ perspective was deduced from the literature and then quantified and validated with empirical data from the survey. The results were also used to investigate the second research question, where respondents were asked about their attitude toward commonly suggested security- and privacy-related measures and the impact of such measures on adoption. The literature provides demographics of importance: age, gender, and familiarity (Lee, Citation2019; Citation2020; Leroux & Pupion, Citation2022; Li et al., Citation2021), which were added as attributes and considered in the results. This makes it possible to see differences between groups of users and whether measures should differ depending on the target group.

The aim was to obtain 250 responses from a random selection of respondents to draw general conclusions. Because it was important to obtain comparable data that were not dependent on culture, prices, laws, technology level, etc., the survey was limited to respondents from one country. Sweden was selected because it is a small, cohesive country and one of the most technologically advanced countries. Additionally, very few non-Swedish people know the language, which makes it easier to exclude people of other nationalities. Another restriction was that only people over 18 years of age were included, as this is the legal age to enter into agreements with, for example, service providers. Because the target group was potential users, the survey was published online via a large number of different social media groups with a request to spread it to other random groups. This made it possible to exclude people without access to an Internet connection while reaching something close to a random selection of respondents. The survey was open online for four days and closed after 251 responses. All respondents were anonymous, and no personal data that could reveal their identity were collected, to make the respondents more willing to answer and to do so truthfully. The respondents were not balanced by any factor because the approach did not make it possible to control who could answer the survey, and excluding respondents would have created selection problems and provided less data.

The theoretical framework was based on the model of Alraja et al. (Citation2019), who studied the effects of security, privacy, familiarity, and trust on users’ attitudes toward the use of IoT-based healthcare. This framework is suitable because it focuses on the three themes that this study aims to explore. However, there was a need to adjust the model because we wanted to investigate how different measures affect user adoption. The results were explored with regard to age, gender, and knowledge level.

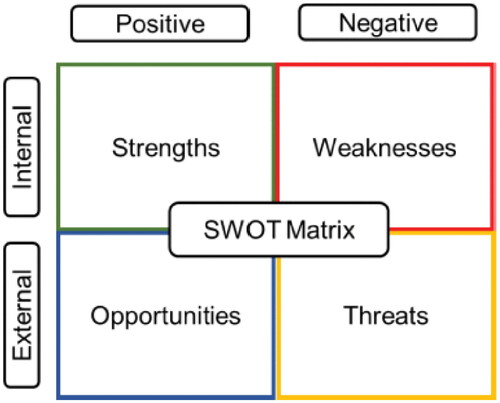

No hypotheses were created because the study explored the area from an unprejudiced standpoint. Hypothesis testing is also not well suited to social research problems derived from professional practice and organizational or institutional environments (Punch, Citation2005). The analysis consisted of three parts: correlation analysis; manual analysis; and strengths, weaknesses, opportunities and threats (SWOT) analysis.

In the first part, correlations between attributes were examined to determine relationships. The strength of each relationship was measured using a correlation coefficient (Kotz & Mari, Citation2001). The coefficient was also used with a threshold value selected to create a reasonable number of attribute groups, which would be used later to categorize the measures. In the second part, the results were aggregated into groups based on the attributes to rank issues and measures and to calculate percentages, which gives a clear picture of how the users view the issues, the results of the measures, and whether there are differences between the groups that need to be considered. In the third part, the SWOT analysis framework was used as an analytical lens to obtain a clear view of the strengths, weaknesses, opportunities, and threats of IoT adoption with regard to security, privacy, and trust (Kuzminykh et al., Citation2020).
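The first analysis step can be illustrated with a minimal sketch. It assumes a Pearson coefficient and a simple grouping rule in which two attributes fall in the same group when the absolute value of their coefficient reaches the threshold; the text does not specify which coefficient or grouping rule was used. The attribute names and the answers below are hypothetical and are not the study's data; the threshold of 0.62 is the value reported for the attribute categories.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equally long numeric lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def group_by_threshold(responses, threshold):
    """Group attributes that are linked by |r| >= threshold.

    Assumption: groups are the connected components of the graph whose
    edges are attribute pairs with a correlation at or above the threshold.
    """
    parent = {name: name for name in responses}

    def find(name):
        # Follow parent links to the representative of the group.
        while parent[name] != name:
            parent[name] = parent[parent[name]]
            name = parent[name]
        return name

    for a, b in combinations(responses, 2):
        if abs(pearson(responses[a], responses[b])) >= threshold:
            parent[find(a)] = find(b)  # merge the two groups

    groups = {}
    for name in responses:
        groups.setdefault(find(name), []).append(name)
    return sorted(sorted(members) for members in groups.values())


# Hypothetical 7-point answers from six respondents (not the study's data).
responses = {
    "encryption": [7, 6, 5, 7, 2, 3],
    "malware_protection": [7, 5, 5, 6, 1, 3],
    "certification_labels": [2, 7, 3, 6, 5, 4],
}
groups = group_by_threshold(responses, threshold=0.62)
print(groups)
```

With these illustrative answers, the two technical measures end up in one group and the labeling measure in another. A lower threshold would merge more attributes into fewer groups, which is why the threshold has to be chosen to yield a workable number of categories.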

4. Results and analysis

This section outlines the results of this study and provides an analysis of the raw data. The next section discusses these results in relation to the available literature, together with the findings of the SWOT analysis.

In total, 251 respondents answered the survey, of which 72% were men and 27% were women.

While the intention was to attract respondents of any gender, without any restrictions, men showed a greater interest in completing the survey. This might be partially explained by the field of our study, as it involves technical aspects (i.e., IoT solutions). Previous studies have shown that women are less interested in technology and are more interested in socially oriented areas (Rosser, Citation2009). The fact that women are less interested in technology than men may lead to slower adoption rates when it comes to innovation adoption (Liff et al., Citation2004).

The respondents were all between 18 and 82 years of age, with an average and median age of 44. The respondents were divided into two genders, five age groups, and five knowledge-based groups.

Table 1. Knowledge level per gender and age.

At least one smart product was in use in the homes of 85% of the respondents (not including smartphones or tablets). By gender, this applied to 91% of the men and 71% of the women. The percentage by age decreased from 92% in the 30–39 group down to 79% in the 50–59 and 60+ groups. However, the youngest group broke the trend with 82%.

Only 8% of the respondents agreed with the statement that smart home products have a high level of security, making it difficult for unauthorized persons to take control of their devices. The same percentage of respondents believed that smart products have a high level of privacy protection, making it difficult for unauthorized persons to access device data. While 33% trust large reputable manufacturers to secure their devices, 29% trust that large reputable service providers will protect user integrity, and 33% trust that government and authorities will do what they can to uphold user integrity and not misuse data. While 71% of the respondents wanted to own and use more smart home products, 35% considered security and privacy issues as general barriers to purchase and use.

Table 2. Perceived security, privacy, trust and attitude.

Respondents were asked about the importance of the different measures suggested in the literature. For privacy by default, which means that a manufacturer applies maximum privacy protection as standard, 85% considered it important, while only 3% did not. Security by design was described as a manufacturer’s security strategy for protecting the components of the product and the product as a whole during the entire product life cycle. While 80% of the respondents found the strategy important, only 4% did not. Only 2% of the respondents did not believe that it was important for the device not to send any data to a third party without the user’s active consent, compared to 91% who did. When asked if it is important that all communication to and from devices is encrypted, 84% agreed, while only 5% did not. While 67% of the respondents believed that it is important for devices to automatically download and install security patches, 13% did not. The use of two different authentication methods to obtain access to the device was deemed important by 61% of the respondents, while 17% disagreed. While 73% believed that it is important that a device has protection against malware and hackers (such as antivirus and firewalls), 11% disagreed. When asked if it is important that the system constantly searches for suspicious behavior that can be caused by a hacker or malware, 63% agreed and 14% did not. While 65% of the respondents considered “self-healing” important, meaning the device restores itself and continues to function as it should after an attack or malware, 13% did not agree. According to 84% of the respondents, devices must always ask the user for permission before collecting or using data; that is, it should be clear what data are being collected, why, and how, as well as the consequences of the users’ choice; only 6% disagreed. While 72% of the respondents considered a clear division of responsibilities between the manufacturer, service provider, and user to be important, 5% did not. For 69% of the respondents, it was important to introduce a certification where the devices would be equipped with labels for security and privacy levels, while 9% did not agree. To protect user privacy, 63% of the respondents believed it would be important for manufacturers to comply with a law based on the GDPR; however, 10% disagreed.

Table 3. The importance of different measures per gender, age and knowledge group.

Because 93% of the respondents stated that they understood the questions, 92% indicated their answers reflected their opinions and beliefs, and only 1% somewhat disagreed, we find that the respondents validate the survey design.
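The shares and averages reported for each statement could be derived from individual answers along the following lines. This is a sketch under stated assumptions: answers are taken to be on a 7-point scale, values above the midpoint are counted as agreement (or "important"), values below it as disagreement, and the midpoint as neutral. The text does not state the exact cut-offs, and the answers below are hypothetical rather than the study's data.

```python
def summarize_likert(answers, scale_max=7):
    """Return the mean score and the shares (in %) above, at, and below the midpoint.

    Assumption: answers above the midpoint count as agreement, answers
    below it as disagreement, and the midpoint itself as neutral.
    """
    midpoint = (scale_max + 1) / 2  # 4 on a 7-point scale
    n = len(answers)
    agree = sum(1 for a in answers if a > midpoint)
    disagree = sum(1 for a in answers if a < midpoint)
    neutral = n - agree - disagree
    return {
        "mean": round(sum(answers) / n, 2),
        "agree_pct": round(100 * agree / n),
        "neutral_pct": round(100 * neutral / n),
        "disagree_pct": round(100 * disagree / n),
    }


# Hypothetical answers from ten respondents (not the study's data).
answers = [7, 6, 6, 5, 5, 4, 4, 3, 2, 1]
summary = summarize_likert(answers)
print(summary)
```

Because neutral answers are counted separately, the agreeing and disagreeing shares for a statement need not sum to 100%, which matches the pattern in the percentages reported above.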

5. Discussion

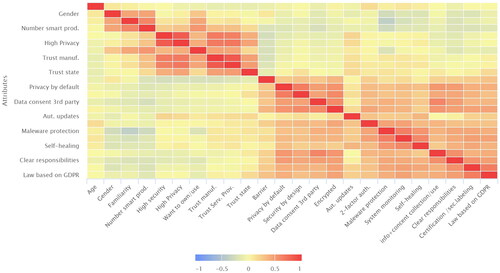

In this study, correlation analysis was applied to examine the strength of the relationships between selected attributes, and the results were printed as a correlation matrix.

It is clear that knowledge correlates with a positive attitude toward using more smart products, and, as expected, usage correlates with a higher level of knowledge, which is in line with Alraja et al. (Citation2019). The significant difference in knowledge levels between men and women can be a result of different interests or work areas. A smaller difference in usage, however, indicates that men may overstate their knowledge level or that women may understate theirs.

Table 4. Correlation between demographics and attitude.

The belief that products are secure and have a high level of privacy correlates with a high level of trust in manufacturers and service providers, which matches the results of Alraja et al. (Citation2019), who found that user trust increases with the perception of privacy. The findings of Lee (Citation2019) also support that trust in a service provider lowers the perceived privacy and security risk. The correlation coefficient suggests that trust affects attitude toward using smart products, which is consistent with the results of Alraja et al. (Citation2019) and Schomakers et al. (Citation2021), who found a clear connection between user trust and acceptance. The results indicate that if a product is not secure, the data are not secure.

Table 5. Correlation between perception, trust and attitude.

Privacy concerns also have a negative impact on the willingness to use more products, which is supported by Alraja et al. (Citation2019), who added that privacy is prioritized by manufacturers to gain user trust.

Table 6. Attribute categories by correlation threshold 0.62.

Our results reveal that respondents, regardless of the demographic group, did not perceive that smart products were secure or that privacy was upheld. As 0% of the respondents with no or little knowledge believed that the products were secure or that privacy was upheld, the common image of security and privacy is evidently even worse than reality, creating a considerable barrier for new users. Li et al. (Citation2021) agree that privacy and security are barriers to IoT adoption, Balta-Ozkan et al. (Citation2013) state that consumers will not use systems in which they lack confidence, and Lee (Citation2020) statistically confirms that privacy concerns lead to resistance against IoT in the home domain.

According to the results, over 60% might refrain from using or purchasing smart products for this reason. This conclusion is supported by both Lu et al. (Citation2018), who found that users are highly concerned about privacy and security, and Mehdipour (Citation2020), who stated that more than 70% are concerned about privacy and security in smart homes, which leads to users avoiding the products.

Trust was also low among all groups. No more than one-third of the respondents trusted manufacturers, service providers, or authorities to uphold security and privacy. The results are not in line with the work of Lee (Citation2019) on home IoT devices in South Korea, even though the concepts were similar in both surveys. While this study found the trust average to be 3.49 for service providers and 3.58 for the government (on a 7-point scale), Lee’s averages were 4.56 and 4.15, respectively. For users with little experience, the difference was much larger: 2.86 for this study and 4.23 for Lee’s. One plausible explanation for this is that culture is a significant trust factor. The high belief in professionals and authorities in Asia contrasts with the Western questioning and skeptical attitude, which needs to be considered when creating strategies for adoption. Lee (Citation2019) found that people with more experience had more trust in service providers and authorities. Our results indicate that this is the case. However, when dividing the users into five groups instead of two, trust in service providers showed no clear patterns. Fewer women (28%) than men (34%) trusted the government and authorities on the matter. This result was unexpected and contradicts the results of Lee (Citation2020), who found the opposite to be true.

Most respondents wanted to use smart products, and the positive attitude increased with knowledge, which is supported by Alraja et al. (2019), who found that people familiar with the technology were more likely to use and adopt IoT devices. Even though women and older users were less enthusiastic about smart products than others, the majority of those groups were still positive.

At the same time, the majority did not believe that the products were secure, or that their privacy was upheld. They did not trust any of the involved parties, and over a third did not want to buy or use IoT products for this reason. The findings are consistent with the work of Lee (2020), who found that users and potential users were truly concerned about privacy in their homes and that user vulnerability was the most important reason for not adopting the technology. This is also supported by Chang et al. (2014), who found that perceived security issues directly affect consumers’ intentions to purchase IoT products.

Women form a large user group, and while relatively few of them have IoT home products, many are interested, implying considerable potential demand for IoT. The youngest group, which is the next generation of users, considers security and privacy issues to be significant hindrances. These issues could potentially be a hindrance for the majority of the group. Because user adoption is critical for success (Li et al., 2021), the industry needs to take action to reach general adoption.

Overall, the security and privacy situation for smart products is critical, and the perceived picture is even worse than reality; thus, a lot of trust needs to be established to reach widespread adoption.

Respondents were asked about 13 possible measures. All were considered important by at least 60%. The results indicate that the perceived security and privacy levels are so low that users believe that almost any measure would make a significant difference. This conclusion is supported by the results of Alraja et al. (2019), who found that trust could be increased by providing potential users with information on the use of modern technology for privacy protection. The measures were divided into four categories using a correlation threshold of 0.62, named by type, and analyzed.
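The grouping step can be illustrated with a minimal sketch. The text does not describe the exact procedure, so the approach below is an assumption: measures are linked whenever their pairwise Pearson correlation reaches the 0.62 threshold, and each connected set of linked measures forms one category. The measure names and ratings are invented for illustration and are not the survey data.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def group_by_correlation(ratings, threshold=0.62):
    """Group measures linked by a pairwise correlation >= threshold.

    ratings maps a measure name to its list of per-respondent ratings.
    Groups are the connected components of the resulting graph.
    """
    parent = {name: name for name in ratings}

    def find(name):
        # Follow parent links to the group representative (with path halving).
        while parent[name] != name:
            parent[name] = parent[parent[name]]
            name = parent[name]
        return name

    for a, b in combinations(ratings, 2):
        if pearson(ratings[a], ratings[b]) >= threshold:
            parent[find(a)] = find(b)  # merge the two groups

    groups = {}
    for name in ratings:
        groups.setdefault(find(name), []).append(name)
    return sorted(sorted(members) for members in groups.values())


# Hypothetical ratings from six made-up respondents (not the survey data).
example_ratings = {
    "consent_to_share": [7, 6, 7, 5, 6, 7],
    "informed_approval": [7, 6, 6, 5, 6, 7],
    "encryption": [3, 7, 4, 6, 2, 5],
    "malware_protection": [4, 7, 4, 6, 3, 5],
}

groups = group_by_correlation(example_ratings)
print(groups)
```

With these invented ratings, the two consent-related measures fall into one group and the two technical measures into another. Other grouping methods, such as factor analysis or hierarchical clustering, could produce different categories from the same data.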

Strategies: Privacy by default and security by design were considered the 2nd and 5th most important measures. The result is consistent with the findings of Chang et al. (2014), where advanced security features embedded in the design made consumers feel more secure. Following an explicit comprehensive strategy must therefore be considered crucial.

User control: The results clearly show that users highly value control over their data, which is consistent with Balta-Ozkan et al. (2013), who found that users were afraid that third parties would violate their privacy, or that their data would fall into the wrong hands. A majority agreed that the most important measure was the device requiring consent to send any information to a third party. Making sure that the users are informed about the collection and storage of data and have the option to approve it or not came in fourth place. These results are supported by Alraja et al. (2019), who found that trust increased when users were assured that their data would not be misused. The results show that user control is considered to be at least as important as strategies and, therefore, critical.

Protection measures: Security updates, two-factor authentication, system monitoring, and self-healing capabilities are all in the second half of the chart. Only encryption (3rd place) and malware protection (6th place) ranked higher. One could argue that regular users do not have sufficient knowledge to determine the importance of protection measures. However, this is contradicted by the highly knowledgeable IoT experts, who did not rank them higher than the user control measures.

Regulations: The respondents ranked clear responsibilities higher than certification and product labeling for security and privacy. A law based on GDPR that manufacturers and service providers would have to comply with ranks even lower. Low support for the law is in line with the low trust in the authorities. The 81% in the study by Johnson et al. (2020) who would use such a label in their purchase decision is right between the 69% that find the measure important and the 89% that include the uncertain, indicating that the result is reasonable. IoT experts ranked regulations lower than other knowledge groups, and for respondents in general, regulations cannot compete with the high results of strategies and user control. However, approximately two-thirds still believe that regulations would be an important measure.

The difference that knowledge and experience make is evident. People with no knowledge exaggerated the risks and rated almost all measures as important. To attract new users and increase adoption, increasing familiarity is crucial (Alraja et al., 2019; Lee, 2020; Li et al., 2021). Alraja et al. (2019) and Chang et al. (2014) suggested increasing security and privacy in combination with general user awareness efforts. Lee (2020) believes that both service providers and the government should take precautions, including privacy regulations, to make users feel safe. However, it is a balancing act, because too many regulations could destroy the industry (Balta-Ozkan et al., 2013).

The results of this study support Alraja et al. (2019), who claim that the perception or assurance of privacy has a positive effect on user trust, while familiarity and trust have a positive effect on attitude, adoption, and use. The conclusion of Li et al. (2021) that privacy and security concerns are barriers to IoT adoption can also be confirmed. The results also agree with Balta-Ozkan et al. (2013), who stated that users highly value control over their data, are concerned about data breaches and misuse, and avoid systems that they do not trust.

The findings of this study are also in line with those of Lee (2019), who stated that trust in service providers lowers perceived privacy and security risks and that familiarity has a positive impact on trust in the authorities. However, the claim that the same would be true for trust in service providers is not supported when users are divided into several groups by knowledge level. A high level of trust in authorities and service providers was also not supported, and the statement that women have more trust in the government and authorities than men was not in accordance with the findings of the present study. These differences may, however, be explained by differences in culture.

The study also confirms the findings of Schomakers et al. (2021), who claimed that user trust and acceptance are connected, Lu et al. (2018), who stated that users are highly concerned about privacy and security, and Mehdipour (2020), who stated that a large majority of users are concerned about privacy and security in smart homes and that many are therefore avoiding the products. Finally, the study supports the findings of Chang et al. (2014) that advanced security features embedded in the design make consumers feel more secure, and the findings of Johnson et al. (2020) that a vast majority of users believe that security labeling would help them in their purchase decision.

It is worth mentioning that all presented correlations have a p-value reported as .000 (that is, p < .001) according to the Pearson significance test (Freedman et al., 2007), meaning that they are all statistically significant.
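A reported p-value of .000 is a rounded value rather than an exact zero, which a short calculation can illustrate. The sketch below computes the usual t statistic for a Pearson correlation and approximates the two-sided p-value with a normal distribution, which is only reasonable for large samples. It assumes that all 251 respondents answered both items and uses r = 0.62 purely as an example value; neither figure is taken from the study's correlation tables.

```python
from math import erfc, sqrt


def pearson_t_statistic(r, n):
    """t statistic for H0: the true correlation is zero (n - 2 degrees of freedom)."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)


def approx_two_sided_p(t):
    """Two-sided p-value from a normal approximation to the t distribution.

    Reasonable only for large samples; an exact test would use Student's t.
    """
    return erfc(abs(t) / sqrt(2))


n_respondents = 251  # sample size stated in the abstract (assumed complete)
r_example = 0.62     # example value only, borrowed from the grouping threshold

t_value = pearson_t_statistic(r_example, n_respondents)
p_value = approx_two_sided_p(t_value)

print(f"t = {t_value:.2f}")
print(f"p rounded to three decimals: {p_value:.3f}")
print(f"p < .001: {p_value < 0.001}")
```

Under these assumptions the p-value is tiny but still positive, so "p < .001" describes it more accurately than ".000".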

5.1. SWOT analysis

The results of this study and the collected data were also analyzed using the SWOT Matrix, as outlined in the methodology section.

Strengths: A rapidly increasing demand and number of products generate experience and knowledge, which leads to fewer security concerns and a positive effect on attitude and adoption. Technological development creates more functions that will benefit and attract more users and gradually introduces smart functions in regular products, creating experience among people who have not made an active decision to use smart products. Much research and many resources are focused on solving these issues and securing products.

Weaknesses: The perceived security and privacy levels are worse than the actual levels. Only a third of potential users trust manufacturers, service providers, and authorities, and more than a third consider security and privacy issues as barriers to using more smart products. These devices are very difficult to secure, and there is no international standard, certification, labeling, or regulation that could build trust.

Opportunities: Few women or young people own or use smart products. Making them feel secure could result in adoption leaps. Most suggested measures have a high perceived impact on attitude, and rapid technological development can deliver more and better measures. Security and privacy standards, certifications, and privacy laws can build trust and adoption.

Threats: If the significant increase in malicious software and attacks continues, it could negatively affect security, trust, and adoption. Increasing severity, such as physical destruction or casualties, could discourage those who have already adopted the technology. It is also difficult to reach a high level of adoption if the industry cannot agree on standards and certifications.

6. Conclusion

The results of this study indicate that people are particularly concerned about security and privacy, and they are afraid of breaches and misuse. A large majority do not believe that products are secure or uphold privacy. Less than a third of the potential users trust the manufacturer, service provider, or government regarding these issues. Therefore, security and privacy issues are significant barriers to IoT adoption.

6.1. Theoretical implications

This study contributes to innovation adoption and diffusion research by discussing the effects of information security and privacy on users’ trust in adopting IoT solutions in the home domain and from their perspective. More specifically, this study provides knowledge regarding trust in IoT technology and how different techniques can improve trust in particular. It extends prior studies by investigating how users and different user groups perceive IoT security and privacy, their trust in involved parties, and how different types of measures, as suggested by previous literature, can affect user adoption in the home domain, which has not been examined in previous studies. The contributions of this study can be summarized as follows.

It affords insight and an understanding of the perceived security and privacy issues of smart products, as well as trust in manufacturers, service providers, and the government.

It helps quantify the view of commonly suggested measures, leading to knowledge regarding the perceived importance of such measures and rankings.

6.2. Practical implications

It is clear that all suggested measures are considered important by the majority, and that the industry needs to apply a broad approach to facilitate user adoption. The most important measure is to improve the users’ control of their data while providing transparency, which requires active consent from the users and the possibility of opting in/out. The second most important measure is the implementation of comprehensive security strategies that include the principles of security by design and privacy by default. Third, a wide spectrum of protection measures covering all layers should be implemented, specifically, data encryption and malware protection. Finally, responsibilities should be clear, and common industry standards should be created, as well as a global certification and labeling system. To reach general and widespread adoption, the industry should also focus on providing basic knowledge to potential users with little or no familiarity because the lack of knowledge has a substantial negative impact.

Because a majority want to use more smart home products, and the willingness increases with familiarity and younger age, the market should have significant growth potential. To maximize adoption, the industry should focus on people with little knowledge, as they have not adopted the technology, have the most concerns, and are less likely to buy and use products because of security and privacy issues. This group includes women, as few of them are using smart products but a majority would like to, and security measures have a significant impact on their attitude. It also includes younger people, as few of them are using the products; while they are interested, they consider security and privacy issues a barrier to a larger extent than all other groups do, and they are the future users.

6.3. Limitations and future research

As the survey was conducted in a single country, the conclusions cannot be generalized globally. Even if previous research indicates that the examined principles of trust, acceptance, and adoption apply to several different countries around the world, some differences may still exist. Similar studies should be conducted in more countries with other cultures and conditions to determine whether the results are universal. This research indicates that users and potential users do not rate the possible impact of security or privacy breaches as equally severe for all types of smart home products. Therefore, the results may differ depending on the type of device used. Further research is required to investigate how security, privacy, and trust are perceived for different product categories and IoT application domains. Further, more work can be done in future research on the gender perspective, as our study was not balanced in terms of female/male participation. The low level of interest among women could be an interesting research opportunity in the future.

Acknowledgement

The authors would like to thank Mattias Pilheden for his contribution to the statistical analysis.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

All collected data were anonymized and will only be used for research in accordance with the information provided to the research participants.

Additional information

Notes on contributors

Frederik Schuster

Frederik Schuster is a Senior Research Engineer in Information Systems at Luleå University of Technology, Sweden. His research is focused on IoT technologies, information security and privacy. He is also a university lecturer in the field of information security, systems science, and digital service innovations at bachelor and master levels.

Abdolrasoul Habibipour

Abdolrasoul Habibipour is a senior lecturer in Information Systems and managing director of Botnia Living Lab at Luleå University of Technology, Sweden. His research is focused on participatory design, living labs and digital transformation. He has been involved in several international projects as project manager, project leader, and coordinator.

References

- Alladi, T., Chamola, V., Sikdar, B., & Choo, K.-K. R. (2020). Consumer IoT: Security vulnerability case studies and solutions. IEEE Consumer Electronics Magazine, 9(2), 17–25. https://doi.org/10.1109/MCE.2019.2953740

- Alnahari, W., & Quasim, M. T. (2021). Privacy concerns, IoT devices and attacks in smart cities. In 2021 International Congress of Advanced Technology and Engineering (ICOTEN) (pp. 1–5). https://doi.org/10.1109/ICOTEN52080.2021.9493559

- Alraja, M. N., Farooque, M. M. J., & Khashab, B. (2019). The effect of security, privacy, familiarity, and trust on user’s attitudes toward the use of the IoT-based healthcare: The mediation role of risk perception. IEEE Access, 7, 111341–111354. https://doi.org/10.1109/ACCESS.2019.2904006

- Balta-Ozkan, N., Davidson, R., Bicket, M., & Whitmarsh, L. (2013). Social barriers to the adoption of smart homes. Energy Policy, 63, 363–374. https://doi.org/10.1016/j.enpol.2013.08.043

- Bloomfield, J., & Fisher, M. J. (2019). Quantitative research design. Journal of the Australasian Rehabilitation Nurses’ Association, 22(2), 27–30. https://doi.org/10.33235/jarna.22.2.27-30

- Chang, Y., Dong, X., & Sun, W. (2014). Influence of characteristics of the internet of things on consumer purchase intention. Social Behavior and Personality, 42(2), 321–330. https://doi.org/10.2224/sbp.2014.42.2.321

- Chang, C.-W., & Hung, W.-H. (2021). Strengthening existing internet of things system security: Case study of improved security structure in smart health. Sensors and Materials, 33(4), 1257–1272. https://doi.org/10.18494/SAM.2021.3163

- Frambach, R. T., & Schillewaert, N. (2002). Organizational innovation adoption: A multi-level framework of determinants and opportunities for future research. Journal of Business Research, 55(2), 163–176. https://doi.org/10.1016/S0148-2963(00)00152-1

- Freedman, D., Pisani, R., & Purves, R. (2007). Statistics (International student edition, 4th ed.). W. W. Norton & Company.

- Habibipour, A. (2020). User engagement in Living Labs: Issues and concerns [Doctoral dissertation]. Luleå University of Technology. http://urn.kb.se/resolve?urn=urn:nbn:se:ltu:diva-80563

- Hameed, M. A., Counsell, S., & Swift, S. (2012). A conceptual model for the process of IT innovation adoption in organizations. Journal of Engineering and Technology Management, 29(3), 358–390. https://doi.org/10.1016/j.jengtecman.2012.03.007

- Iqbal, W., Abbas, H., Daneshmand, M., Rauf, B., & Bangash, Y. A. (2020). An in-depth analysis of IoT security requirements, challenges, and their countermeasures via software-defined security. IEEE Internet of Things Journal, 7(10), 10250–10276. https://doi.org/10.1109/JIOT.2020.2997651

- Ismagilova, E., Hughes, L., Dwivedi, Y. K., & Raman, K. R. (2019). Smart cities: Advances in research – An information systems perspective. International Journal of Information Management, 47, 88–100. https://doi.org/10.1016/j.ijinfomgt.2019.01.004

- Ismagilova, E., Hughes, L., Rana, N. P., & Dwivedi, Y. K. (2020). Security, privacy and risks within smart cities: Literature review and development of a smart city interaction framework. Information Systems Frontiers, 24(2), 393–414. https://doi.org/10.1007/s10796-020-10044-1

- Johnson, S. D., Blythe, J. M., Manning, M., & Wong, G. T. W. (2020). The impact of IoT security labelling on consumer product choice and willingness to pay. PLoS One, 15(1), e0227800–21. https://doi.org/10.1371/journal.pone.0227800

- Kim, S., & Kim, S. (2016). A multi-criteria approach toward discovering killer IoT application in Korea. Technological Forecasting and Social Change, 102, 143–155. https://doi.org/10.1016/j.techfore.2015.05.007

- Kotz, S., & Mari, D. D. (2001). Correlation and dependence. Imperial College Press.

- Kounoudes, A. D., & Kapitsaki, G. M. (2020). A mapping of IoT user-centric privacy preserving approaches to the GDPR. Internet of Things, 11, 100179. https://doi.org/10.1016/j.iot.2020.100179

- Kuzminykh, I., Yevdokymenko, M., & Ageyev, D. (2020). Analysis of encryption key management systems: Strengths, weaknesses, opportunities, threats. In 2020 IEEE International Conference on Problems of Infocommunications. Science and Technology (PIC S&T) (pp. 515–520). IEEE. https://doi.org/10.1109/PICST51311.2020.9467909

- Lee, H. (2020). Home IoT resistance: Extended privacy and vulnerability perspective. Telematics and Informatics, 49, 101377. https://doi.org/10.1016/j.tele.2020.101377

- Lee, M. (2019). An empirical study of home IoT services in South Korea: The moderating effect of the usage experience. International Journal of Human–Computer Interaction, 35(7), 535–547. https://doi.org/10.1080/10447318.2018.1480121

- Leedy, P. D., & Ormrod, J. E. (2015). Practical research: Planning and design (11th ed.). Pearson.

- Leroux, E., & Pupion, P.-C. (2022). Smart territories and IoT adoption by local authorities: A question of trust, efficiency, and relationship with the citizen-user-taxpayer. Technological Forecasting and Social Change, 174, 121195. https://doi.org/10.1016/j.techfore.2021.121195

- Li, W., Yigitcanlar, T., Erol, I., & Liu, A. (2021). Motivations, barriers and risks of smart home adoption: From systematic literature review to conceptual framework. Energy Research & Social Science, 80, 102211. https://doi.org/10.1016/j.erss.2021.102211

- Liff, S., Shepherd, A., Wajcman, J., Rice, R., & Hargittai, E. (2004). An evolving gender digital divide? (SSRN Scholarly Paper No. 1308492). https://doi.org/10.2139/ssrn.1308492

- Lin, H., & Bergmann, N. W. (2016). IoT privacy and security challenges for smart home environments. Information, 7(3), 44. https://doi.org/10.3390/info7030044

- Lu, Y., Papagiannidis, S., & Alamanos, E. (2018). Internet of things: A systematic review of the business literature from the user and organisational perspectives. Technological Forecasting and Social Change, 136, 285–297. https://doi.org/10.1016/j.techfore.2018.01.022

- Macedo, E. L. C., de Oliveira, E. A. R., Silva, F. H., Mello, R. R., Franca, F. M. G., Delicato, F. C., de Rezende, J. F., & de Moraes, L. F. M. (2019). On the security aspects of Internet of Things: A systematic literature review. Journal of Communications and Networks, 21(5), 444–457. https://doi.org/10.1109/JCN.2019.000048

- Mehdipour, F. (2020). A review of IoT security challenges and solutions. In 8th International Japan-Africa Conference on Electronics, Communications, and Computations (JAC-ECC) (pp. 1–6). https://doi.org/10.1109/JAC-ECC51597.2020.9355854

- Mohamed, K. S. (2019). The era of internet of things: Towards a smart world. Springer. https://doi.org/10.1007/978-3-030-18133-8

- Mumford, E. (2006). The story of socio-technical design: Reflections on its successes, failures and potential. Information Systems Journal, 16(4), 317–342. https://doi.org/10.1111/j.1365-2575.2006.00221.x

- Neupane, C., Wibowo, S., Grandhi, S., & Deng, H. (2021). A trust-based model for the adoption of smart city technologies in Australian Regional Cities. Sustainability, 13(16), 9316. https://doi.org/10.3390/su13169316

- Ogonji, M. M., Okeyo, G., & Wafula, J. M. (2020). A survey on privacy and security of Internet of Things. Computer Science Review, 38, 100312. https://doi.org/10.1016/j.cosrev.2020.100312

- Omolara, A. E., Alabdulatif, A., Abiodun, O. I., Alawida, M., Alabdulatif, A., Alshoura, W. H., & Arshad, H. (2022). The internet of things security: A survey encompassing unexplored areas and new insights. Computers & Security, 112, Article 102494. https://doi.org/10.1016/j.cose.2021.102494

- Padyab, A., Habibipour, A., Rizk, A., & Ståhlbröst, A. (2019). Adoption barriers of IoT in large scale pilots. Information, 11(1), 23. https://doi.org/10.3390/info11010023

- Patel, K. K., & Patel, S. M. (2016). Internet of things-IOT: Definition, characteristics, architecture, enabling technologies, application & future challenges. International Journal of Engineering Science and Computing, 6(5), 6122–6131.

- Pilemalm, S., Lindell, P.-O., Hallberg, N., & Eriksson, H. (2007). Integrating the Rational Unified Process and participatory design for development of socio-technical systems: A user participative approach. Design Studies, 28(3), 263–288. https://doi.org/10.1016/j.destud.2007.02.009

- Popescu, T., Popescu, A., & Prostean, G. (2021). IoT Security Risk Management Strategy Reference Model (IoTSRM2). Future Internet, 13(6), 148. https://doi.org/10.3390/fi13060148

- Powell, T. C. (2020). Can quantitative research solve social problems? Pragmatism and the ethics of social research. Journal of Business Ethics, 167(1), 41–48. https://doi.org/10.1007/s10551-019-04196-7

- Punch, K. (2003). Survey research: The basics. SAGE Publications.

- Punch, K. (2005). Introduction to Social Research – Quantitative & Qualitative Approaches. SAGE Publications.

- Rau, P.-L. P., Huang, E., Mao, M., Gao, Q., Feng, C., & Zhang, Y. (2015). Exploring interactive style and user experience design for social web of things of Chinese users: A case study in Beijing. International Journal of Human-Computer Studies, 80, 24–35. https://doi.org/10.1016/j.ijhcs.2015.02.007

- Rekha, S., Thirupathi, L., Renikunta, S., & Gangula, R. (2021). Study of security issues and solutions in Internet of Things (IoT). Materials Today: Proceedings, ISSN 2214-7853. https://doi.org/10.1016/j.matpr.2021.07.295

- Rogers, E. M. (2003). Diffusion of innovations (5th ed.). Simon and Schuster.

- Rosser, S. V. (2009). The gender gap in patenting: Is technology transfer a feminist issue? NWSA Journal, 21(2), 65–84. https://www.jstor.org/stable/20628174

- Saura, J. R., Ribeiro-Soriano, D., & Palacios-Marqués, D. (2021). Using data mining techniques to explore security issues in smart living environments in Twitter. Computer Communications, 179(C), 285–295. https://doi.org/10.1016/j.comcom.2021.08.021

- Saura, J. R., Ribeiro-Soriano, D., & Palacios-Marqués, D. (2022). Assessing behavioral data science privacy issues in government artificial intelligence deployment. Government Information Quarterly, 39(4), 101679. https://doi.org/10.1016/j.giq.2022.101679

- Schomakers, E.-M., Biermann, H., & Ziefle, M. (2021). Users’ preferences for smart home automation – Investigating aspects of privacy and trust. Telematics and Informatics, 64, 101689. https://doi.org/10.1016/j.tele.2021.101689

- Straub, E. T. (2009). Understanding technology adoption: Theory and future directions for informal learning. Review of Educational Research, 79(2), 625–649. https://doi.org/10.3102/0034654308325896

- Strous, L., von Solms, S., & Zúquete, A. (2021). Security and privacy of the Internet of Things. Computers & Security, 102, 102148. https://doi.org/10.1016/j.cose.2020.102148

- Tawalbeh, L., Muheidat, F., Tawalbeh, M., & Quwaider, M. (2020). IoT privacy and security: Challenges and solutions. Applied Sciences, 10(12), 4102. https://doi.org/10.3390/app10124102

- Vermesan, O., Friess, P., Guillemin, P., Gusmeroli, S., Sundmaeker, H., Bassi, A., Jubert, I. S., Mazura, M., Harrison, M., Eisenhauer, M., & Doody, P. (2009). Internet of things strategic research roadmap. Internet of Things, 1, 9–52. http://hdl.handle.net/11250/2430372

- Weinberg, B. D., Milne, G. R., Andonova, Y. G., & Hajjat, F. M. (2015). Internet of things: Convenience vs. privacy and secrecy. Business Horizons, 58(6), 615–624. https://doi.org/10.1016/j.bushor.2015.06.005

- Zhou, J., Cao, Z., Dong, X., & Vasilakos, A. V. (2017). Security and privacy for cloud-based IoT: Challenges. IEEE Communications Magazine, 55(1), 26–33. https://doi.org/10.1109/MCOM.2017.1600363CM

- Ziegler, S., Crettaz, C., Kim, E., Skarmeta, A., Bernabe, J. B., Trapero, R., & Bianchi, S. (2019). Privacy and security threats on the internet of things. In S. Ziegler (Ed.), Internet of things security and data protection. Internet of things (Technology, communications and computing) (pp. 9–43). Springer. https://doi.org/10.1007/978-3-030-04984-3_2