Abstract

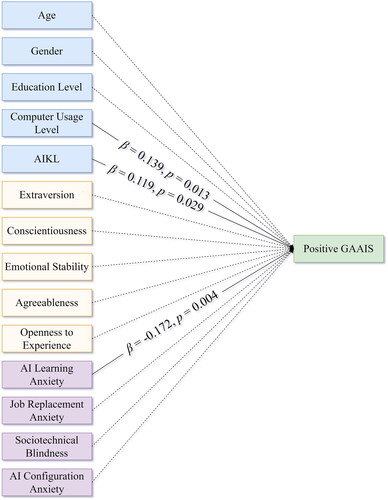

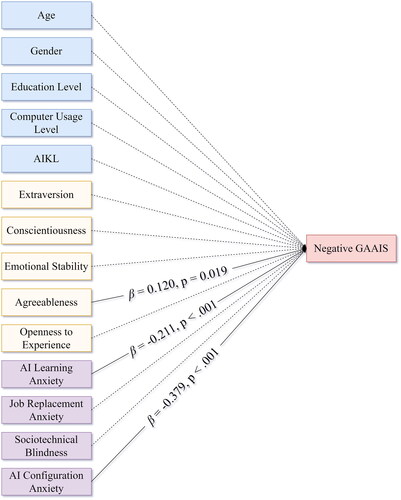

The present study adapted the General Attitudes toward Artificial Intelligence Scale (GAAIS) to Turkish and investigated the impact of personality traits, artificial intelligence anxiety, and demographics on attitudes toward artificial intelligence. The sample consisted of 259 female (74%) and 91 male (26%) individuals aged between 18 and 51 (mean age = 24.23). Measures included a demographic form, the Ten-Item Personality Inventory, the Artificial Intelligence Anxiety Scale, and the General Attitudes toward Artificial Intelligence Scale. The Turkish GAAIS showed good validity and reliability. Hierarchical multiple linear regression analyses showed that positive attitudes toward artificial intelligence were significantly predicted by the level of computer use (β = 0.139, p = 0.013), level of knowledge about artificial intelligence (β = 0.119, p = 0.029), and AI learning anxiety (β = −0.172, p = 0.004). Negative attitudes toward artificial intelligence were significantly predicted by agreeableness (β = 0.120, p = 0.019), AI configuration anxiety (β = −0.379, p < 0.001), and AI learning anxiety (β = −0.211, p < 0.001). Personality traits, AI anxiety, and demographics thus play important roles in attitudes toward AI. The results are discussed in light of previous research and theoretical explanations.

1. Introduction

In recent years, rapid improvements in artificial intelligence (AI) technologies have affected all social systems, including the economy, politics, science, and education (Luan et al., 2020; Stephanidis et al., 2019). Indeed, Reinhart (2018) reported that 85% of Americans used at least one AI-powered technology. However, people are often unaware of the presence of AI applications (Tai, 2020). With the rapid development of cybernetic technology, AI is now used in almost all areas of life. Some of its applications, however, are still regarded as futuristic, almost science-fiction technologies disconnected from the realities of everyday life.

Gansser and Reich (2021) describe AI simply as a technology developed to ease human life and help people in certain scenarios. Darko et al. (2020) note that AI is the key technology of the Fourth Industrial Revolution (Industry 4.0). Indeed, AI is used in many beneficial contexts, e.g., diagnosing diseases, preserving environmental resources, predicting natural disasters, improving education, preventing violent acts, and reducing risks at work (Brooks, 2019). Hartwig (2021) underlines that AI will enhance efficiency, create new opportunities, reduce human error, take on the responsibility of solving complex problems, and carry out tedious tasks. These benefits may free up time for people to learn, experiment, and discover, which may in turn enhance the creativity and quality of life of humankind. A report prepared by the Organization for Economic Co-operation and Development (OECD, 2019) sets out many projected future developments in AI, and AI technology promises advancements in many sectors, including the labor market, education, health, and national security (Zhang & Dafoe, 2019). These are positive aspects of AI, toward which we would expect people to show positive attitudes.

Although people have hopes regarding AI, they also have concerns about this technology (Rhee & Rhee, 2019). There has been extensive discussion of its potential ethical, sociopolitical, and economic risks (see, e.g., Neudert et al., 2020). The economic risks posed by AI are an important issue. Huang and Rust (2018) stated that AI threatens services provided by people. Frey and Osborne (2017) pointed out that 47% of American workers are at risk of losing their jobs in the coming years due to computerization, including AI and robotics. Similarly, Acemoglu and Restrepo (2017) emphasized that robots reduce costs and stated that the US economy is losing 360,000 to 670,000 jobs per year due to robots. They also underlined that if developments continue at the estimated pace, prospective total job losses will be much larger. Furthermore, Bossman (2016) stated that, because of job losses, profits will be shared among a smaller group of people, deepening inequality on a global scale. Alongside these economic risks, AI may also lead to various security problems. Concerns have been raised by high-profile uses of AI that violate human rights or are biased, discriminatory, manipulative, or illegal (Gillespie et al., 2021). It has also been emphasized that AI may induce social anxieties and raise ethical issues (OECD, 2019). Frequently highlighted ethical issues include racism arising from AI-powered decision systems (Lyons, 2020; Sanjay, 2020), potential data privacy violations that some have alleged against large technology companies, e.g., eavesdropping on clients using AI technologies (Nicolas, 2022), and discriminatory algorithmic biases that disregard human rights (Circiumaru, 2022).
Further widely discussed issues include security gaps originating from AI systems, legal and administrative problems arising from the use of these technologies, a lack of trust in AI at the societal level, and unrealistic expectations of AI (Dwivedi et al., 2021). These are negative aspects of AI, toward which people may show negative attitudes.

The concept of attitudes toward artificial intelligence has emerged from these discussions and has gained importance in recent years. There is growing interest in beliefs and attitudes toward AI and the factors affecting them (Schepman & Rodway, 2020, 2022). Neudert et al. (2020) conducted a comprehensive study involving 154,195 participants in 142 countries and found that many individuals feel anxious about the risks of using AI. Zhang and Dafoe (2019) surveyed 2,000 American adults and found that a substantial proportion of the participants (41%) supported AI, while 22% opposed the development of such technologies. A large-scale investigation of 27,901 participants in several European countries showed that most people hold positive attitudes toward robots and AI (European Commission & Directorate-General for Communications Networks, Content & Technology, 2017). It was also stressed that attitudes mostly stem from the level of knowledge: more internet use and higher education levels were associated with more positive attitudes toward AI. It was further found that male and younger participants had more positive opinions of AI than female and older participants, respectively. Thus, while some demographic predictors of AI attitudes have been documented in prior research, there is much scope for exploring them in different cultures, and our study addresses this gap by examining AI attitudes in Turkey.

AI technologies cover a wide range of domains, such as the economy (Belanche et al., 2019), education (Chocarro et al., 2021), health (Fan et al., 2020), law (Xu & Wang, 2021), transportation (Ribeiro et al., 2022), agriculture (Mohr & Kühl, 2021), and tourism (Go et al., 2020). Consequently, many factors could contribute to an individual’s tendency to use AI technology in specific fields of application. In a detailed study, Park and Woo (2022) found that (1) personality traits; (2) psychological factors, such as intrinsic motivation, self-efficacy, voluntariness, and performance expectancy; and (3) technological factors, such as perceived practicality, perceived ease of use, technology complexity, and relative advantage predicted the adoption of AI-powered applications. It was also revealed that acceptance of smart information technologies was significantly predicted by facilitating factors, such as user experience and cost; factors associated with personal values, such as optimism about science and technology, anthropocentrism, and ideology; and factors regarding risk perception, such as perceived risk, perceived benefit, positive views about technology, and trust in government. In addition, acceptance of AI technologies was affected by subjective norms (Belanche et al., 2019), culture (Sindermann et al., 2020, 2021), technology efficiency, perceived threat of job loss (Vu & Lim, 2022), confidence (Shin & Kweon, 2021), and hedonic factors (Rese et al., 2020). Further research among 6,054 participants in the USA, Australia, Canada, Germany, and the UK revealed that people have low levels of trust in AI and that trust plays a central role in its acceptance (Gillespie et al., 2021).
The causes and consequences of AI acceptance and of attitudes toward AI can therefore be seen as complex structures involving many elements. Investigating the factors that contribute to attitudes toward AI may thus enrich our knowledge of society’s reactions to these newly developed technologies, providing a broader perspective and a deeper understanding. Our aim in the current research was to examine the impact of selected personal factors, in part to examine the interface between broad and narrow factors in predicting attitudes toward AI.

1.1. The current study and development of the hypotheses

1.1.1. Personality traits

In recent years, researchers have been investigating the major personality variables that may affect individuals’ attitudes toward AI. Personality traits may be defined as relatively stable tendencies that determine people’s thoughts, emotions, and behaviors (Devaraj et al., 2008). One of the most widely accepted models of personality is the Big Five model proposed by Costa and McCrae (1992), which identifies five main traits, namely openness, agreeableness, extroversion, conscientiousness, and neuroticism. Evidence indicates that these traits may have biological and hereditary bases (McCrae & Costa, 1995; Grant & Langan-Fox, 2007; Power & Pluess, 2015). Openness encompasses open-mindedness, a liking for innovation, and intellectual curiosity. Extroversion refers to tendencies such as energy and intense social interaction. Agreeableness indicates an individual’s level of cooperation, tenderness, and courtesy, and conscientiousness comprises attentiveness, self-discipline, and organization. Neuroticism, on the other hand, is associated with negative emotions such as anxiety, insecurity, and depression, and includes emotional instability. Recent evidence suggests that the Big Five traits play a crucial role in attitudes (Gallego & Pardos-Prado, 2014), and a growing body of research indicates that personality traits might contribute to the tendency to adopt and accept various technologies (Dalvi-Esfahani et al., 2020; Devaraj et al., 2008; Özbek et al., 2014). Some attention has been drawn to the association between personality traits and attitudes toward AI (Park & Woo, 2022; Schepman & Rodway, 2022; Sindermann et al., 2020). However, the evidence in the literature is mixed, signaling a need for further investigation.
The variation in the predictive power of the Big Five traits across different strands of technology research constitutes a gap in our understanding, making it difficult to foresee how these traits relate to AI attitudes. The current study aims to address this gap.

1.1.2. AI anxiety

As discussed, AI technology has brought challenges to life, such as job losses, concerns about privacy and transparency, algorithmic biases, growing socio-economic inequalities, and unethical actions (Green, 2020). These challenges may create disturbances that manifest as anxiety. AI anxiety can be defined as excessive fear arising from problems caused by the changes that AI technologies bring to personal or social life. Wang and Wang (2022) divided AI anxiety into four dimensions: “job replacement anxiety”, the fear of the negative effects of AI on working life; “sociotechnical blindness”, anxiety arising from a lack of full understanding of AI’s dependence on humans; “AI configuration anxiety”, fear regarding humanoid AI; and “AI learning anxiety”, anxiety regarding learning AI technologies. Li and Huang (2020), on the other hand, added features such as privacy, transparency, bias, and ethics to the phenomenon of AI anxiety. AI anxiety is a relatively new concept, and there is a gap in the literature regarding how an individual’s AI anxiety is reflected in their attitudes toward AI and how this interfaces with the impact of broader personality traits. One of our study’s aims was to address this gap by examining to what extent AI anxiety predicts AI attitudes in the context of other factors, including personality traits.

1.1.3. Hypotheses

We formulated the following hypotheses, which were statistically assessed via hierarchical multiple linear regression analyses of survey data. Our measure of AI attitudes was the General Attitudes toward Artificial Intelligence Scale (GAAIS), validated in English by Schepman and Rodway (2020, 2022) and validated in Turkish in this study. The GAAIS has two subscales: positive and negative. The positive subscale captures positive attitudes toward the benefits of AI, with higher scores indicating more positive attitudes. The negative subscale is reverse-scored, so that higher scores on the negative GAAIS indicate more forgiving attitudes toward AI’s drawbacks.

Hypothesis 1 was that demographic characteristics (age, gender, education level, level of computer use, level of knowledge about AI) would predict attitudes toward AI. Based on the prior findings discussed above, male, younger, and more educated people, more frequent computer users, and people with higher levels of knowledge about AI were predicted to have more positive attitudes toward the positive aspects of AI (positive GAAIS) and more forgiving attitudes toward the negative aspects of AI (negative GAAIS).

Hypothesis 2 was that personality traits would predict attitudes toward AI. Following the reasoning, prior research, and findings set out in Schepman and Rodway (2022; see Table 6 therein for an overview), we predicted that higher openness to experience would predict higher scores on the positive GAAIS, that higher conscientiousness and agreeableness would predict higher scores on both GAAIS subscales, and that lower emotional stability would predict lower scores on the negative GAAIS (i.e., less forgiving attitudes toward the drawbacks of AI). Our hypothesis regarding the prediction of AI attitudes from extroversion was two-tailed, in light of the varying directions of associations between extroversion and technology in prior research (see Schepman & Rodway, 2022).

Hypothesis 3 was that high levels of AI anxiety (AI learning anxiety, job replacement anxiety, sociotechnical blindness, AI configuration anxiety) would predict more negative attitudes toward AI on both GAAIS subscales, because anxiogenic objects generally predict aversion toward the object (see, e.g., Beckers & Schmidt, 2001).

Hypothesis 4, which was two-tailed, pitted the broad Big Five personality traits against AI anxiety in their predictive power in the overall model, following theorizing regarding the breadth of constructs in personality-related behavioral predictions (e.g., Landers & Lounsbury, 2006, who studied internet use). The Big Five traits are broad and predict many behaviors and attitudes, but the greater specificity of AI anxiety may allow it to predict AI attitudes more precisely. Thus, Hypothesis 4 was that if AI attitudes were driven by broad character traits, personality traits would have stronger predictive power than AI anxiety, whereas if the dispositions driving AI attitudes were more specifically bound to AI anxiety, AI anxiety would have stronger predictive power.

2. Method

2.1. Sample

The sample of the present study consisted of 350 individuals: 259 (74%) females and 91 (26%) males. The mean age was 24.23 (SD = 6.10, range = 18–51) for the total sample, 23.83 (SD = 5.75, range = 18–51) for females, and 25.40 (SD = 6.91, range = 18–50) for males. Participants were recruited using convenience sampling, which enables researchers to collect data from groups of people who are economical to reach in terms of time, money, and accessibility (Creswell, 2014). Because participants were not randomly selected from the target population, convenience sampling has drawbacks, namely concerns about the generalizability of the results and the repeatability of the research (Ary et al., 2014). To mitigate these concerns, the demographic characteristics of the sample are detailed in Table 1, following recommendations by Gravetter and Forzano (2018). In addition, the data were compared with previous samples that used the same scales. Ethical approval was obtained from the University Ethical Committee at Ataturk University. Participants took part voluntarily.

Table 1. Sociodemographic characteristics of the participants.

2.2. Materials

2.2.1. The demographic information form

This form was developed by the researchers and consisted of questions regarding participants’ gender, age, education level, and level of knowledge about AI (1 = I have no knowledge at all, 2 = I have some knowledge, 3 = I have enough knowledge, and 4 = I have detailed knowledge), level of computer use (1 = I can hardly use the computer, 2 = I am a slightly below average computer user, 3 = I am an average computer user, and 4 = I am an expert computer user), and employment status.

2.2.2. The General Attitudes toward Artificial Intelligence Scale (GAAIS)

This scale was developed by Schepman and Rodway (2020) to measure individuals’ general attitudes toward AI. It has 20 items: 12 in the Positive GAAIS and 8 in the Negative GAAIS. Items are rated on a five-point Likert-type scale (1 = strongly disagree to 5 = strongly agree). The original scale showed good internal consistency, with α = 0.88 for the Positive GAAIS and α = 0.83 for the Negative GAAIS (Schepman & Rodway, 2020). The scale was adapted into Turkish for the current study. The items were translated into Turkish by four specialists and then examined by separate teams of experts in the English and Turkish languages. The validity and reliability findings for the Turkish version of the GAAIS are presented in the Results section.
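For readers less familiar with the coefficient, Cronbach’s alpha for a k-item scale can be computed from the item variances and the variance of the total score, α = k/(k − 1) · (1 − Σσᵢ²/σₜ²). The Python sketch below is only an illustration: the function name and the toy response matrix are hypothetical and are not the study’s data, which were analyzed in SPSS and JASP.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha; `responses` is a list of respondents, each a list of item scores."""
    k = len(responses[0])                                   # number of items
    items = list(zip(*responses))                           # transpose to item columns
    item_var_sum = sum(variance(col) for col in items)      # sum of item variances
    total_var = variance([sum(row) for row in responses])   # variance of total scores
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Hypothetical five-point responses from four people to a three-item scale
toy = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [3, 3, 2],
]
print(round(cronbach_alpha(toy), 3))
```

Sample variances are used throughout; because the same n − 1 divisor appears in the numerator and denominator, population variances would give the same alpha.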

2.2.3. The Ten-Item Personality Inventory

This scale was developed by Gosling et al. (2003) to measure five major personality traits, namely openness to experience, conscientiousness, extroversion, agreeableness, and emotional stability. The scale has a five-factor structure, and items are rated on a seven-point Likert-type scale (1 = strongly disagree to 7 = strongly agree). At validation, the internal consistency coefficients of the subscales were α = 0.68 for extroversion, α = 0.40 for agreeableness, α = 0.50 for conscientiousness, α = 0.73 for emotional stability, and α = 0.45 for openness to experience, and the test-retest reliability coefficients were r = 0.77 for extroversion, r = 0.71 for agreeableness, r = 0.76 for conscientiousness, r = 0.70 for emotional stability, and r = 0.62 for openness to experience (Gosling et al., 2003). The scale was adapted into Turkish by Atak (2013), who confirmed its factor structure in a Turkish sample (χ2/df = 2.20, CFI = .93, NNFI = .91, RMR = .04, RMSEA = .03), with internal consistency coefficients of α = 0.86 for extroversion, α = 0.81 for agreeableness, α = 0.84 for conscientiousness, α = 0.83 for emotional stability, and α = 0.83 for openness to experience. The test-retest reliability coefficients of the Turkish version were r = 0.88 for extroversion, r = 0.87 for agreeableness, r = 0.87 for conscientiousness, r = 0.89 for emotional stability, and r = 0.89 for openness to experience (Atak, 2013).

The Spearman-Brown coefficient has been recommended for estimating the reliability of scales consisting of two items (Eisinga et al., 2013). Since each TIPI subscale consists of two items, the Spearman-Brown coefficient was used in the present study to estimate the split-half reliability of each subscale. Unlike the high coefficients reported by Atak (2013), the current sample yielded values similar to those of the original study by Gosling et al. (2003): r = 0.683 for extroversion, r = 0.518 for agreeableness, r = 0.625 for conscientiousness, r = 0.604 for emotional stability, and r = 0.558 for openness to experience.
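For a two-item scale, the Spearman-Brown coefficient reduces to ρ = 2r / (1 + r), where r is the Pearson correlation between the two items. A minimal Python sketch follows; the function name and the input correlation are illustrative, not values from this study.

```python
def spearman_brown(r: float, factor: float = 2.0) -> float:
    """Spearman-Brown prophecy formula for a test lengthened by `factor`.

    With factor=2 and r the correlation between two halves (here, the two
    items of a TIPI subscale), this is the split-half reliability estimate.
    """
    return factor * r / (1 + (factor - 1) * r)

# Hypothetical inter-item correlation of 0.40 for a two-item subscale
print(round(spearman_brown(0.40), 3))  # 0.8 / 1.4 -> 0.571
```

Because the formula is monotonic in r, a subscale whose two items correlate only moderately still yields a coefficient noticeably higher than the raw inter-item correlation.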

2.2.4. The Artificial Intelligence Anxiety Scale

This scale was developed by Wang and Wang (2022) to measure individuals’ anxiety about AI. It consists of 21 items and has a four-factor structure: AI Learning Anxiety, Job Replacement Anxiety, Sociotechnical Blindness, and AI Configuration Anxiety. Items are rated on a seven-point Likert-type scale (1 = never to 7 = completely). The reliability coefficients of the original scale were α = 0.97 for the AI Learning Anxiety subscale, α = 0.92 for the Job Replacement Anxiety subscale, α = 0.92 for the Sociotechnical Blindness subscale, and α = 0.92 for the AI Configuration Anxiety subscale (Wang & Wang, 2022). The scale was adapted to Turkish culture by Terzi (2020), and the factor structure of the original scale was confirmed in the Turkish sample (χ2/df = 2.57, CFI = .9, SRMR = .07, RMSEA = .08). Reliability analysis revealed internal consistency coefficients of α = 0.89 for the learning dimension, α = 0.95 for the job replacement dimension, α = 0.89 for sociotechnical blindness, and α = 0.95 for AI configuration (Terzi, 2020).

2.3. Procedure

After the scale items were finalized, the measurement tools were compiled into an online questionnaire, which was completed by individuals who enrolled in the study voluntarily. The data were then transferred to SPSS and JASP for analysis.

2.4. Data analysis

In brief, the present study involved two separate analysis procedures: validating the Turkish GAAIS and testing the study hypotheses. The structural validity of the Turkish GAAIS was tested using confirmatory factor analysis (CFA) and its reliability using Cronbach’s alpha internal consistency coefficient, while the predictors of AI attitudes were tested using hierarchical multiple regression. Scales and subscales were scored in line with their published scoring methods, which included reverse-scoring all items of the negative GAAIS, such that higher scores indicate more positive or forgiving attitudes. Before the main analyses, the data were screened for the assumptions of CFA and regression analysis.
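On the GAAIS five-point format, reverse-scoring maps each response x to 6 − x, so that agreeing with a concern (a high raw score) becomes a low, less forgiving score. The sketch below assumes mean-based subscale scores; whether the authors averaged or summed items is not stated here, so the helper names and the averaging step should be read as assumptions.

```python
from statistics import mean

SCALE_MIN, SCALE_MAX = 1, 5  # GAAIS five-point response format

def reverse_score(x: int) -> int:
    """Flip a 1-5 response: 1<->5, 2<->4, 3 stays 3."""
    return SCALE_MIN + SCALE_MAX - x

def subscale_mean(item_scores, reverse=False):
    """Mean subscale score (assumed scoring); reverse=True flips every item first."""
    scores = [reverse_score(x) if reverse else x for x in item_scores]
    return mean(scores)

# Hypothetical respondent answering the eight negative-GAAIS items,
# mostly agreeing with the concerns they express
negative_items = [4, 5, 4, 3, 4, 5, 4, 4]
print(subscale_mean(negative_items, reverse=True))
```

After reversal this respondent scores low on the negative GAAIS, that is, they are relatively unforgiving of AI’s drawbacks, which matches the direction of interpretation used in the Results.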

The dataset was screened for missing data and outliers and examined for normality and collinearity (Field, 2013). Total scores were converted into standardized z scores to check for outliers; all z scores fell between −3 and +3, so the dataset was considered free of univariate outliers (Tabachnick & Fidell, 2014). Skewness and kurtosis values were then calculated to check normality. All values fell between −2 and +2, indicating that the data were approximately normally distributed (George & Mallery, 2019).
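The screening rules above (z scores within ±3; skewness and kurtosis within ±2) can be sketched in Python as follows. The skewness and kurtosis formulas are the bias-adjusted versions (G1 and excess kurtosis G2) reported by many statistics packages; which variant the authors’ software applied is an assumption, and the example scores are invented.

```python
from math import sqrt
from statistics import mean, stdev

def z_scores(values):
    """Standardize using the sample mean and sample standard deviation."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]

def skewness(values):
    """Adjusted Fisher-Pearson skewness (G1)."""
    n, m = len(values), mean(values)
    m2 = sum((v - m) ** 2 for v in values) / n
    m3 = sum((v - m) ** 3 for v in values) / n
    return sqrt(n * (n - 1)) / (n - 2) * (m3 / m2 ** 1.5)

def excess_kurtosis(values):
    """Bias-adjusted sample excess kurtosis (G2); 0 for a normal distribution."""
    n, m = len(values), mean(values)
    m2 = sum((v - m) ** 2 for v in values) / n
    m4 = sum((v - m) ** 4 for v in values) / n
    g2 = m4 / m2 ** 2 - 3
    return (n - 1) / ((n - 2) * (n - 3)) * ((n + 1) * g2 + 6)

def screen(values, z_cut=3.0, shape_cut=2.0):
    """Flag |z| > z_cut as outliers and check |skewness|, |kurtosis| <= shape_cut."""
    outliers = [i for i, z in enumerate(z_scores(values)) if abs(z) > z_cut]
    sk, ku = skewness(values), excess_kurtosis(values)
    return {
        "outliers": outliers,
        "skewness": sk,
        "kurtosis": ku,
        "approximately_normal": abs(sk) <= shape_cut and abs(ku) <= shape_cut,
    }

# Hypothetical total scores for ten respondents
totals = [38, 42, 45, 47, 50, 51, 53, 55, 58, 61]
print(screen(totals))
```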

After validating that the data were suitable for analysis, the construct validity of the GAAIS was investigated with Confirmatory Factor Analysis, and the internal consistency reliability coefficients and split-half correlations were calculated to test the reliability of all the tools. Then, two separate Hierarchical Multiple Linear Regression analyses were conducted for positive and negative attitudes toward AI to test the research hypotheses.

Some additional assumptions were checked before the regression analyses. First, the required sample size was calculated to confirm that the current sample was adequate, using the criterion n ≥ 50 + 8m suggested by Tabachnick and Fidell (2014), where m is the number of independent variables. With 14 independent variables in the present study, the sample of 350 individuals met the recommended criterion for regression analysis (350 ≥ 50 + 8 × 14 = 162).
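The criterion is simple arithmetic. The sketch below counts the 14 predictors entered across the three regression steps and checks the sample of 350 against 50 + 8m; the function name is illustrative.

```python
def min_sample_size(n_predictors: int) -> int:
    """Rule of thumb n >= 50 + 8m cited above (Tabachnick & Fidell)."""
    return 50 + 8 * n_predictors

# 5 demographic variables + 5 personality traits + 4 AI anxiety subscales
n_predictors = 5 + 5 + 4
required = min_sample_size(n_predictors)
print(required, 350 >= required)  # 162 True
```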

The Durbin-Watson statistic was used to test for autocorrelation of the residuals in the multiple regression. According to Durbin and Watson (1950), the test statistic should be between 1 and 3. In the present study, it was 1.168 for the positive attitudes toward AI model and 1.717 for the negative attitudes toward AI model, so this assumption was not violated. For the multicollinearity assumption, the correlations between the predictor variables, the variance inflation factor (VIF), and tolerance values were examined. Correlations between predictors should not exceed .90 (Field, 2013), VIF values should be <5, and tolerance values should be >.20 (Hair et al., 2011, 2019). In the present study, the highest correlation between predictors was 0.843, below the recommended threshold of .90. VIF values ranged between 1.143 and 4.387 across both models, all <5, and tolerance values ranged between 0.228 and 0.875, all >.20. These results indicate that the multicollinearity assumption was met. In addition, Cook’s D was examined to check for multivariate outliers, the last assumption of the multiple regression. Cook’s D values >1 should be considered outliers (Cook, 1977; Cook & Weisberg, 1982), and no Cook’s D value >1 was found in either model. Similarly, Field (2013) suggested that standardized DFBeta values should be <1; no value >1 was found in either model. The analyses were carried out after confirming that these assumptions were met.
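Both diagnostics reduce to short formulas. The Durbin-Watson statistic is Σ(eₜ − eₜ₋₁)² / Σeₜ² over the regression residuals, and each predictor’s VIF equals the corresponding diagonal element of the inverse of the predictor correlation matrix, with tolerance = 1/VIF. This reciprocal relation is why the reported VIF range (1.143 to 4.387) mirrors the tolerance range (0.875 to 0.228). The NumPy sketch below uses simulated data and hypothetical function names; it is not a reproduction of the authors’ SPSS output.

```python
import numpy as np

def durbin_watson(residuals):
    """Sum of squared successive residual differences over the residual sum of squares (0 to 4)."""
    e = np.asarray(residuals, dtype=float)
    return float(np.sum(np.diff(e) ** 2) / np.sum(e ** 2))

def vif_and_tolerance(X):
    """VIF_j = j-th diagonal element of the inverse predictor correlation matrix.

    X: array of shape (n_samples, n_predictors). Tolerance is 1 / VIF.
    """
    corr = np.corrcoef(np.asarray(X, dtype=float), rowvar=False)
    vif = np.diag(np.linalg.inv(corr))
    return vif, 1.0 / vif

# Simulated predictors: x2 is partly redundant with x1, x3 is independent
rng = np.random.default_rng(0)
x1 = rng.normal(size=500)
x2 = 0.8 * x1 + 0.6 * rng.normal(size=500)
x3 = rng.normal(size=500)
vif, tol = vif_and_tolerance(np.column_stack([x1, x2, x3]))
print(np.round(vif, 2), np.round(tol, 3))
print(durbin_watson([1.0, -1.0, 1.0, -1.0]))  # alternating residuals -> 3.0
```

Strongly alternating residuals push the Durbin-Watson statistic toward 4 and strongly trending ones toward 0, which is why values near 2 (and, by the looser rule cited above, between 1 and 3) are read as acceptable.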

3. Results

3.1. Validity and reliability of the Turkish GAAIS

First, the parameter estimates were examined. All paths in the CFA model of the Turkish version of the GAAIS were significant, with factor loadings between .40 and .71 for the Positive GAAIS and between .41 and .76 for the Negative GAAIS (see Table 2). After verifying the parameter estimates, the goodness of fit of the CFA model was evaluated. CFA confirmed the two-dimensional structure in the Turkish sample (χ2 = 557.01, df = 169, χ2/df = 3.30, CFI = 0.92, NNFI = 0.91, SRMR = 0.067, RMSEA = 0.081). Fit measures were also assessed using the DWLS (diagonally weighted least squares) estimator in JASP, in line with the ordinal nature of the data (Li, 2016; Schepman & Rodway, 2022). This yielded χ2 = 255.38, df = 169, χ2/df = 1.51, CFI = 0.974, NNFI = 0.971, SRMR = 0.066, RMSEA = 0.038, 90% CI [0.028, 0.048]. Following common practice, CFI and NFI > 0.90 and RMSEA and SRMR < 0.08 were taken as fit criteria (Byrne, 2010; Hu & Bentler, 1999; Kline, 2016; Schumacker & Lomax, 2010; Tabachnick & Fidell, 2014). For RMSEA, values <0.05 indicate good fit, values <0.08 acceptable fit, values between 0.08 and 0.10 moderate fit, and values >0.10 unacceptable fit (Brown, 2015). The internal consistency of the Turkish version was also satisfactory, with α = 0.82 for the Positive GAAIS and α = 0.84 for the Negative GAAIS, and the split-half reliability coefficients were r = 0.77 for the Positive GAAIS and r = 0.83 for the Negative GAAIS.
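The reported degrees of freedom and RMSEA values can be checked by hand. For 20 items loading on two correlated factors there are 20 × 21 / 2 = 210 unique variances and covariances and 41 free parameters (20 loadings, 20 residual variances, and 1 factor covariance, assuming factor variances are fixed to 1), giving df = 169. The Python sketch below assumes this standard identification; the function names are illustrative. Plugging the reported χ2 values and N = 350 into the usual point-estimate formula reproduces the reported RMSEA values to three decimals (software may divide by N rather than N − 1, which makes no difference at this precision).

```python
from math import sqrt

def cfa_df(n_items: int, n_factors: int) -> int:
    """df for a simple-structure CFA with correlated factors.

    Assumes each item loads on one factor, factor variances are fixed to 1,
    and all factor covariances and item residual variances are free.
    """
    moments = n_items * (n_items + 1) // 2        # unique variances and covariances
    free = n_items                                 # factor loadings
    free += n_items                                # residual variances
    free += n_factors * (n_factors - 1) // 2       # factor covariances
    return moments - free

def rmsea(chi2: float, df: int, n: int) -> float:
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))

df = cfa_df(20, 2)                                 # 12 positive + 8 negative GAAIS items
print(df)                                          # 169
print(round(557.01 / df, 2))                       # chi2/df, ML solution
print(round(rmsea(557.01, df, 350), 3))            # ML solution
print(round(rmsea(255.38, df, 350), 3))            # DWLS solution
```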

Table 2. Factor loadings of the Turkish Version of the GAAIS.

3.2. Correlations

Before the hypotheses were tested using hierarchical multiple regression, preliminary analyses were performed. Descriptive statistics and Pearson correlations were computed, and the results are presented in Table 3. Positive attitudes toward AI correlated significantly with negative attitudes toward AI (r = 0.327, p < .001); because the negative GAAIS is reverse-scored, this means that those who were more positive about the positive aspects of AI were also more forgiving about its negative aspects. Positive attitudes toward AI also correlated positively with the level of computer use (r = 0.238, p < .001), level of knowledge of AI (r = 0.256, p < .001), openness to experience (r = 0.228, p < .001), gender (females = 0, males = 1, r = 0.201, p < .001), and extroversion (r = 0.122, p = 0.022). Conversely, positive attitudes toward AI correlated significantly and negatively with AI learning anxiety (r = −0.294, p < .001), AI configuration anxiety (r = −0.250, p < .001), sociotechnical blindness (r = −0.138, p = 0.010), and job replacement anxiety (r = −0.122, p = 0.023). Correlations between positive attitudes toward AI and conscientiousness (r = 0.095, p = 0.077), emotional stability (r = 0.094, p = 0.079), agreeableness (r = 0.043, p = 0.428), education level (r = 0.038, p = 0.478), and age (r = −0.047, p = 0.377) were not statistically significant.
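The significance of each Pearson correlation follows from t = r√(n − 2)/√(1 − r²) with n − 2 degrees of freedom. With N = 350 the t distribution is very close to the normal, so the sketch below uses a normal approximation to stay within the Python standard library; it is an illustration, not the exact test performed by SPSS. Applied to the extroversion correlation (r = 0.122), it gives p ≈ 0.022, in line with the reported value.

```python
from math import erfc, sqrt

def correlation_t(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * sqrt((n - 2) / (1 - r ** 2))

def approx_two_tailed_p(t: float) -> float:
    """Normal approximation to the two-tailed p-value (adequate for large df)."""
    return erfc(abs(t) / sqrt(2))

# Extroversion and positive attitudes toward AI: r = 0.122, N = 350
t = correlation_t(0.122, 350)
print(round(t, 2), round(approx_two_tailed_p(t), 3))
```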

Table 3. Descriptive statistics and correlations between variables.

Preliminary analysis also revealed statistically significant positive correlations between negative attitudes toward AI and conscientiousness (r = 0.226, p < .001), emotional stability (r = 0.217, p < .001), agreeableness (r = 0.211, p < .001), extroversion (r = 0.159, p = 0.003), openness to experience (r = 0.134, p = 0.012), level of computer use (r = 0.121, p = 0.023), level of knowledge of AI (r = 0.118, p = 0.028), and education level (r = 0.108, p = 0.043). In addition, statistically significant negative correlations were found between negative attitudes toward AI and AI configuration anxiety (r = −0.459, p < .001), AI learning anxiety (r = −0.410, p < .001), sociotechnical blindness (r = −0.296, p < .001), and job replacement anxiety (r = −0.249, p < .001). Finally, negative attitudes toward AI were not significantly correlated with age (r = 0.072, p = 0.182) or gender (females = 0, males = 1, r = 0.037, p = 0.492).

3.3. Testing the hypotheses: Hierarchical multiple regressions

3.3.1. Predicting the positive GAAIS

A hierarchical multiple linear regression analysis was conducted to examine the predictive effects of age, gender, education level, level of computer use, level of knowledge of AI, personality traits, and AI anxiety subscales (AI learning anxiety, job replacement anxiety, sociotechnical blindness, AI configuration anxiety) on positive attitudes toward AI. The results are presented in Table 4.

Table 4. Hierarchical multiple linear regression analysis results on the predictive role of various variables on positive attitudes toward artificial intelligence.

In the first model, age, gender, education level, level of computer use, and knowledge of AI explained 12% of the variance in positive attitudes toward AI [F(5, 344) = 8.928, p < .001]. The level of computer use (β = 0.177, p = 0.002) and knowledge of AI (β = 0.172, p = 0.002) significantly predicted positive attitudes toward AI. Next, personality traits were added (Model 2), explaining a further 3% of the variance in positive attitudes toward AI [ΔR² = 0.037, F change (5, 339) = 2.962, p = 0.012]. Openness to experience was the only personality trait that significantly predicted positive attitudes toward AI in Model 2 (β = 0.149, p = 0.005). Lastly, with demographic factors and personality traits controlled, the AI anxiety subscales were added (Model 3), explaining an additional 4% of the variance in positive attitudes toward AI [ΔR² = 0.038, F change (4, 335) = 3.955, p = 0.004]. AI learning anxiety was also a significant predictor (β = −0.172, p = 0.004), and openness to experience became non-significant. These results are visualized in .
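The F change statistics follow from the R² values of the nested models. The minimal Python sketch below assumes N = 350 and that the reported change values (0.037 and 0.038) are increments in R². It recovers Model 1's R² from its overall F test and recomputes the two F change tests. The function names are illustrative only, and because the inputs are rounded to three decimals, the recomputed values (about 2.96 and 3.93) differ slightly from the reported 2.962 and 3.955.

```python
def r2_from_f(f, df1, df2):
    """Invert the overall F test F = (R^2 / df1) / ((1 - R^2) / df2) to recover R^2."""
    return f * df1 / (f * df1 + df2)


def f_change(r2_reduced, r2_full, k_added, n, k_full):
    """F test for the R^2 increment when k_added predictors enter a nested model.

    Returns (F, df1, df2) with df1 = k_added and df2 = n - k_full - 1,
    where k_full counts every predictor in the larger model.
    """
    df1 = k_added
    df2 = n - k_full - 1
    f = ((r2_full - r2_reduced) / df1) / ((1 - r2_full) / df2)
    return f, df1, df2


n = 350                            # 259 women + 91 men
r2_1 = r2_from_f(8.928, 5, 344)    # Model 1: 5 demographic predictors, R^2 about 0.115
r2_2 = r2_1 + 0.037                # Model 2: + 5 personality traits
r2_3 = r2_2 + 0.038                # Model 3: + 4 AI anxiety subscales

f2, df1_2, df2_2 = f_change(r2_1, r2_2, k_added=5, n=n, k_full=10)
f3, df1_3, df2_3 = f_change(r2_2, r2_3, k_added=4, n=n, k_full=14)
print(f"Model 2: F({df1_2}, {df2_2}) = {f2:.2f}")   # about 2.96 on (5, 339)
print(f"Model 3: F({df1_3}, {df2_3}) = {f3:.2f}")   # about 3.93 on (4, 335)
```

The denominator degrees of freedom depend on the total number of predictors in the larger model (10 and 14 here), which is why they are 339 and 335 rather than 344.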

3.3.2. Predicting the negative GAAIS

A separate hierarchical multiple linear regression analysis was conducted to examine the predictive effects of age, gender, education level, level of computer use, level of knowledge of AI, personality traits, and AI anxiety subscales on negative attitudes toward AI. The results are presented in Table 5 and visualized in Figure 2.

Figure 2. Predictors of the Negative General Attitudes toward Artificial Intelligence subscale, with predictors that were significant in the final model showing standardized coefficients (β) and p-values. AIKL: Artificial Intelligence Knowledge Level.

Table 5. Hierarchical multiple linear regression analysis results on the predictive role of various variables on negative attitudes toward artificial intelligence.

In the first model, age, gender, education level, level of computer use, and knowledge of AI explained 4% of the variance in negative attitudes toward AI [F(5, 344) = 2.661, p = 0.022]. In Model 2, adding the personality traits explained a further 8% of the variance in negative attitudes toward AI [ΔR² = 0.083, F change (5, 339) = 6.378, p < .001]. In this model, agreeableness (β = 0.141, p = 0.013) and emotional stability (β = 0.126, p = 0.027) were significant predictors of negative attitudes, although this changed in Model 3. Finally, the AI anxiety subscales were added. With demographic factors and personality traits controlled, Model 3 explained an additional 20% of the variance in negative attitudes toward AI [ΔR² = 0.198, F change (4, 335) = 24.259, p < .001]. AI learning anxiety (β = −0.211, p < .001) and AI configuration anxiety (β = −0.379, p < .001) were also significant predictors. Among the five personality traits, only agreeableness significantly predicted negative attitudes toward AI in Model 3 (β = 0.120, p = 0.019), and emotional stability became non-significant.
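As a rough arithmetic check on the negative GAAIS models, assuming N = 350 and treating the reported change values as increments in R², the statistics above imply the following. Here $\Delta R^2_m$ denotes the increment when Model m's predictors are added, and 14 is the number of predictors in Model 3. The R² values are reconstructed from rounded statistics, so the small discrepancy from the reported 24.259 reflects rounding.

$$
R^2_1 = \frac{df_1\,F}{df_1\,F + df_2} = \frac{5 \times 2.661}{5 \times 2.661 + 344} \approx 0.037
$$

$$
R^2_3 \approx R^2_1 + \Delta R^2_{2} + \Delta R^2_{3} = 0.037 + 0.083 + 0.198 = 0.318
$$

$$
F_{\text{change}}(4,\,335) = \frac{\Delta R^2_{3} / 4}{\left(1 - R^2_3\right) / (350 - 14 - 1)} \approx \frac{0.198 / 4}{0.682 / 335} \approx 24.3
$$

On this reconstruction, the final model accounts for roughly 32% of the variance in the negative GAAIS, most of it contributed by the AI anxiety subscales.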

4. Discussion

We briefly address the validation of the Turkish GAAIS before evaluating the results with respect to the hypotheses.

4.1. Validity and reliability of the Turkish GAAIS

The first aim of the present study was to adapt the GAAIS to Turkish and to investigate its validity and reliability in an adult sample living in Turkey. Confirmatory factor analysis (CFA) confirmed, in the present sample, the factor structure reported for the GAAIS in the original UK study.

Previous findings indicated satisfactory internal consistency for the GAAIS: Cronbach’s α was 0.88 for the positive GAAIS and 0.83 for the negative GAAIS (Schepman & Rodway, 2020), and these values were replicated as 0.88 and 0.82, respectively (Schepman & Rodway, 2022). The present study yielded similar results: Cronbach’s α was 0.82 for the positive GAAIS and 0.84 for the negative GAAIS in the Turkish sample. Split-half reliability was also examined and was satisfactory, with r = 0.77 for the positive GAAIS and r = 0.83 for the negative GAAIS. The literature suggests that values of 0.70 and above indicate adequate internal consistency (Hair et al., 2019; Nunnally & Bernstein, 1994), and that high split-half correlations indicate high split-half reliability (Field, 2013). Together, the CFA results and the internal consistency and split-half reliability assessments support the validity and reliability of the Turkish GAAIS. Further evidence for the need for two GAAIS subscales comes from the pattern of predictors, which differed substantially between the positive and negative GAAIS.
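The two reliability indices can be made concrete. The minimal Python sketch below assumes a respondents-by-items score matrix and an odd-even split of the items; the study does not state which split it used, and the function names and data are hypothetical. It computes Cronbach’s α, a split-half correlation, and the standard Spearman-Brown step-up. Whether the reported split-half values of 0.77 and 0.83 were stepped up is not stated in the text.

```python
from math import sqrt
from statistics import mean, pvariance


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents x items matrix given as a list of rows.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score)).
    Population variances are used; the ratio is identical with sample variances.
    """
    k = len(rows[0])
    item_var_sum = sum(pvariance(col) for col in zip(*rows))
    total_var = pvariance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_var_sum / total_var)


def split_half_r(rows):
    """Pearson correlation between odd-item and even-item sum scores (odd-even split)."""
    odd = [sum(row[0::2]) for row in rows]
    even = [sum(row[1::2]) for row in rows]
    mo, me = mean(odd), mean(even)
    cov = sum((a - mo) * (b - me) for a, b in zip(odd, even))
    return cov / sqrt(sum((a - mo) ** 2 for a in odd) * sum((b - me) ** 2 for b in even))


def spearman_brown(r_half):
    """Step a half-test correlation up to the reliability of the full-length test."""
    return 2 * r_half / (1 + r_half)


# Hypothetical 4-item responses on an assumed 1-5 scale (not study data).
scores = [[4, 4, 5, 4], [2, 3, 2, 2], [3, 3, 4, 3], [5, 4, 5, 5], [1, 2, 1, 2]]
print(round(cronbach_alpha(scores), 3))
print(round(split_half_r(scores), 3))
print(round(spearman_brown(0.77), 2))   # a raw split-half r of 0.77 steps up to 0.87
```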

A further aspect of the measurement model concerns the factor loadings of items. In the original study, Exploratory Factor Analysis (EFA) revealed factor loadings between 0.47 and 0.78 for the positive GAAIS and between 0.41 and 0.75 for the negative GAAIS (Schepman & Rodway, 2020). In another study conducted with a UK sample, CFA was used to investigate the structural validity of the GAAIS, and the factor loadings were between 0.42 and 0.79 for the positive GAAIS and between 0.37 and 0.80 for the negative GAAIS (Schepman & Rodway, 2022). The factor loadings of the Turkish version of the GAAIS are consistent with this previous evidence, ranging between 0.40 and 0.71 for the positive GAAIS and between 0.41 and 0.72 for the negative GAAIS. There is no consensus on the cutoff value for factor loadings. For instance, Field (2013), Hair et al. (2011), and Stevens (2002) recommend a heuristic value of 0.40. Following Comrey and Lee (1992), Tabachnick and Fidell (2014) suggest cutoff values of 0.32 (poor), 0.45 (fair), 0.55 (good), 0.63 (very good), and 0.71 (excellent). Some scholars advocate making cutoff values dependent on the sample size (e.g., a cutoff of 0.30 for samples of N = 350, such as ours; Hair et al., 1998). Others suggest adopting factor loadings between 0.30 and 0.40 as the minimum level (Hair et al., 2019). The observation that some factor loadings in the Turkish (and English) GAAIS were somewhat low may reflect the considerable breadth of the attitude construct. Factor loadings need to be interpreted in relation to item semantics, with items contributing important elements of meaning to the overall construct (see Schepman & Rodway, 2022). Factor loadings also need to be interpreted in relation to fit indices, and these indicated a strong fit. Thus, eliminating items with lower factor loadings (e.g., 0.40) might reduce the GAAIS’s ability to capture the full breadth of attitudes toward AI by narrowing its semantic coverage, and the overall fit data provide no justification for doing so. All these considerations led us to adopt the full Turkish GAAIS as a valid tool to measure positive and negative attitudes toward artificial intelligence for the purpose of hypothesis testing.
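The competing heuristics for factor loadings can be compared directly on the Turkish loading ranges. The minimal Python sketch below labels absolute loadings with the Tabachnick and Fidell (2014) bands cited in this section and checks them against the 0.40 rule of thumb. It assumes that a loading exactly at a threshold falls into the higher category, which the text does not specify, and the helper names are hypothetical.

```python
# Bands attributed in the text to Tabachnick and Fidell (2014), following Comrey and Lee (1992).
BANDS = [(0.71, "excellent"), (0.63, "very good"), (0.55, "good"), (0.45, "fair"), (0.32, "poor")]
HEURISTIC_CUTOFF = 0.40  # rule of thumb attributed to Field, Hair et al., and Stevens


def label_loading(loading):
    """Return the band label for a factor loading (sign ignored; ties go to the higher band)."""
    size = abs(loading)
    for threshold, label in BANDS:
        if size >= threshold:
            return label
    return "below 0.32"


def meets_heuristic(loading, cutoff=HEURISTIC_CUTOFF):
    """True if the absolute loading reaches the single-value heuristic cutoff."""
    return abs(loading) >= cutoff


# Lowest and highest loadings reported for the Turkish GAAIS subscales.
ranges = {"positive": (0.40, 0.71), "negative": (0.41, 0.72)}
for subscale, (low, high) in ranges.items():
    print(subscale, label_loading(low), label_loading(high), meets_heuristic(low))
# positive poor excellent True
# negative poor excellent True
```

On these bands, the weakest Turkish items fall in the "poor" band yet still meet the 0.40 heuristic, which is the tension the section resolves by appeal to item semantics and overall model fit.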

Finally, the Turkish GAAIS showed means (positive: M = 3.59, SD = 0.52; negative: M = 3.06, SD = 0.69) very similar to those observed in the English validation studies, where means had been 3.60, 3.60, and 3.61 for the positive GAAIS and 2.93, 3.10, and 3.14 for the negative GAAIS (Schepman & Rodway, 2020; 2022, Studies 1 and 2, respectively). This suggests that the different gender balance and cultural context of the present sample did not produce major shifts in mean attitudes.

4.2. Hypothesis 1: Demographic characteristics

The first-step models, which included only demographic factors, predicted significant amounts of variance in both the positive and negative GAAIS, partially supporting Hypothesis 1. However, not all demographic factors were significant predictors in the final models. We consider each in turn.

4.2.1. Gender

Gender did not predict attitudes toward AI, though it correlated with the positive GAAIS. This contrasts with several studies indicating that males have more positive attitudes toward AI technologies than females (European Commission & Directorate-General for Communications Networks, Content & Technology, 2017; Fietta et al., 2022; Figueiredo, 2019; Pinto Dos Santos et al., 2019; Schepman & Rodway, 2022; Sindermann et al., 2020, 2021; Zhang & Dafoe, 2019). Similarly, research often emphasizes that males are more interested in technological advancements than females and, problematically, are more likely to become addicted to them (Broos, 2005; Su et al., 2019). Because the significant correlation did not carry through to a significant regression coefficient, gender may have been statistically overshadowed by the stronger predictors of computer use and AI knowledge, which significantly predicted the positive GAAIS in Model 1.

4.2.2. Age

Our data revealed that age did not predict attitudes toward AI, replicating some previous research, e.g., Chocarro et al. (2021), who investigated the factors affecting teachers’ adoption of chatbots and found that the intention to use technology did not increase with age. In contrast, Park et al. (2022) reported that older people exhibit higher acceptance of smart information technologies powered by AI and adopt new technologies as they get older to stay up to date. However, a large body of literature suggests that younger individuals have more positive attitudes toward AI (European Commission & Directorate-General for Communications Networks, Content & Technology, 2017; Gillespie et al., 2021). Thus, findings concerning the association between age and attitudes toward AI technology are conflicting. This may indicate a need to interpret age effects in the context of other demographic factors. For example, well-educated older people might react to AI technologies more positively than their counterparts with lower levels of education. Further research is needed to explore this in more detail.

4.2.3. Education levels

Our study showed that education level did not significantly predict attitudes toward AI. Previous research found that a higher level of education increased the likelihood of positive attitudes toward AI in general (Gnambs & Appel, 2019; Zhang & Dafoe, 2019). For instance, using a single-item question answered on behalf of firms, Masayuki (2016) found much more positive attitudes toward AI in companies whose employees had higher levels of education than in companies whose employees had lower levels of education. In our sample, the participants with the lowest educational level were undergraduate students (50.86%), 40.86% had a graduate degree, and 8.28% had a master’s or doctoral degree. This narrow educational range may have limited the extent to which education level could predict attitudes toward AI in our study. Future studies with a wider educational range could investigate this link further.

4.2.4. Computer usage

We found that computer use predicted attitudes toward AI on the positive GAAIS only. Many studies show that people who use the internet and smartphone apps powered by AI develop more positive attitudes toward AI (European Commission & Directorate-General for Communications Networks, Content & Technology, 2017; Martin et al., 2020). For instance, Zhang and Dafoe (2019) found that individuals experienced in computer science or programming express more support for the development of AI than those who are not. Similarly, Vu and Lim (2022) emphasized that individuals who believe that they have sufficient digital skills are more likely to adopt AI technologies. This may be because people who know more about technology and believe in their own technological efficacy trust AI more and fear it less (Pinto Dos Santos et al., 2019). However, this did not translate into more forgiving attitudes on the negative GAAIS.

4.2.5. Self-rated AI knowledge

The level of self-rated knowledge about AI predicted positive attitudes toward AI. Individuals who are more familiar with technological innovations may have first-hand access to such technologies, be more aware of their use scenarios and practical value, and therefore have a more positive stance toward AI (Belanche et al., 2019; Mantello et al., 2021). On the other hand, individuals who are less familiar with AI technologies may be more affected by subjective norms (i.e., others’ views) because their information is vaguer and more indirect. Our study adds further evidence that as people accept technological advancements and have beneficial experiences with them, they develop more positive attitudes. In addition, they may be more likely to adopt these technologies and may even become enthusiastic about their use (European Commission & Directorate-General for Communications Networks, Content & Technology, 2017; Fietta et al., 2022; Gillespie et al., 2021; Kim & Lee, 2020; Park et al., 2022). However, it is also important to note that self-rated familiarity with AI technologies may not always reflect an individual’s actual knowledge. People may consider themselves knowledgeable without knowing all the features, applications, and use cases of AI technologies. More specifically, they might not be aware of the dark side of AI technologies (e.g., privacy violations, manipulation, and algorithmic biases), which may create discontent (Bakir, 2020; Hanemaayer, 2022). Knowing the potential harms of AI might not be as satisfying as knowing its benefits. Moreover, being aware of the risks may not necessarily lead individuals to have a more negative attitude toward AI or to stop using applications powered by it, as we observed in our data. Therefore, measuring individuals’ actual familiarity with AI technologies, taking both benefits and risks into account, might provide a better understanding of the role of familiarity in general attitudes toward AI in future research.

In sum, demographic factors had modest predictive power in the first-step models, with self-rated knowledge and computer use being the most prominent individual factors. It is possible that the somewhat narrow sampling suppressed other demographic effects. It is also possible that demographic factors suppressed one another, given that some significant correlates did not translate into significant predictors.

4.3. Hypothesis 2: Personality and General Attitudes toward AI

Hypothesis 2 concerned the prediction of the GAAIS from the Big Five personality traits. Correlations showed significant but modest positive associations between all five traits and the negative GAAIS, whereas only openness to experience and extroversion correlated significantly with the positive GAAIS. In the multiple regression analyses, once AI anxiety was included, agreeableness was the only significant personality predictor, and only of the negative GAAIS. Before the AI anxiety measures were added (Model 2), openness to experience significantly predicted the positive GAAIS, and emotional stability, together with agreeableness, significantly predicted the negative GAAIS. Thus, Hypothesis 2 received some, albeit modest, support. The personality scale used in the present study differed from those used in other studies (e.g., Schepman & Rodway, 2022). Because of its small number of items, item brevity, and use of summary labels (e.g., “Extroverted, enthusiastic”) rather than more traditional descriptions of the behavioral manifestations of latent constructs, the Ten-Item Personality Inventory may have limited the strength of the relationships with the GAAIS, despite its high internal consistency reliability in the Turkish sample. We next discuss each personality trait in turn.

4.3.1. Agreeableness

The significant positive prediction of the negative GAAIS from agreeableness indicates that more agreeable people have more tolerant attitudes toward the negative aspects of AI (recall that the negative GAAIS is reverse-coded). Agreeable individuals tend to be warm, pleasant, and kind to others, which enables them to get along more easily with the people around them and to be more accommodating (Gosling et al., 2003; McCarthy et al., 2017). These features might activate a cognitive set that facilitates better adjustment to changes in daily life arising from technological innovations such as AI. Previous research also found a significant association between agreeableness and negative attitudes toward technology and AI (Barnett et al., 2015; Charness et al., 2018; Schepman & Rodway, 2022), and a nonsignificant association with positive attitudes toward AI (Park & Woo, 2022; Schepman & Rodway, 2022). Thus, our results replicate similar prior work.

4.3.2. Extroversion

Although extroversion showed weak positive correlations with both GAAIS subscales, it did not significantly predict either. There is prior evidence that extroversion has no significant association with either positive or negative attitudes toward AI (Park & Woo, 2022; Sindermann et al., 2020), although many studies suggest that extroversion may boost individuals’ acceptance of technology (Devaraj et al., 2008), with evidence indicating that extroversion is one of the key traits reinforcing behavioral intentions toward, and actual use of, technology (Barnett et al., 2015; Svendsen et al., 2013; Wang et al., 2012; Zhou & Lu, 2011). In contrast, Schepman and Rodway (2022) found a negative association between extroversion and attitudes toward AI, indicating that the more introverted individuals are, the more positive their attitudes toward AI. One way to reconcile these contrasting findings is that the relationship between extroversion and technology acceptance may depend on the technology domain. Technologies that facilitate social interaction may appeal to extroverts, whereas AI technologies can reduce social interaction (Schepman & Rodway, 2022; Yuan et al., 2022), which may benefit introverts. The contrast between the findings of Schepman and Rodway (2022) and our present results may be due to the different personality scales used in the two studies.

4.3.3. Conscientiousness

Conscientiousness did not significantly predict either positive or negative attitudes toward AI, though it showed a weak yet significant positive correlation with the negative GAAIS. This finding replicates earlier studies (Park & Woo, 2022; Sindermann et al., 2020), though Schepman and Rodway (2022) observed that conscientiousness positively predicted the negative GAAIS in their UK sample. Broader prior evidence suggests that conscientious individuals are aware of the negative aspects of technology use and are better able to inhibit negative technology-related behaviors (Barnett et al., 2015; Buckner et al., 2012; Dalvi-Esfahani et al., 2020; Hawi & Samaha, 2019; Rivers, 2021; Svendsen et al., 2013; Wang et al., 2012). For example, Buckner et al. (2012) found negative associations between conscientiousness and employees’ problematic and pathological internet use and text messaging. Similarly, conscientiousness increased learning technology use (Rivers, 2021) and had a mitigating effect on internet and social media addiction (Hawi & Samaha, 2019), suggesting that it can promote positive behaviors. However, all these behaviors may be linked to an underlying work ethic, which may play little role in attitudes toward AI, possibly explaining the lack of a robust association in the current study. Once more, discrepancies between the current data and the observations of Schepman and Rodway (2022) may be due to the different personality scales used.

4.3.4. Openness to experience

Openness to experience was not a significant predictor of positive or negative AI attitudes in the final models, though it was significant in Model 2 of the positive GAAIS before becoming non-significant once AI anxiety was added. This replicates previous findings (Charness et al., 2018; Devaraj et al., 2008; Park & Woo, 2022; Schepman & Rodway, 2022). There are, however, conflicting findings: Sindermann et al. (2020) found that openness to experience enabled people to think and act positively toward AI. More broadly, some researchers suggest that openness to experience may increase the perceived practicality and ease of use of technology, including smartphones, personal computers, AI-powered applications, and the internet (Hawi & Samaha, 2019; McElroy et al., 2007; Na et al., 2022; Özbek et al., 2014; Svendsen et al., 2013; Zhou & Lu, 2011). Openness to experience has been associated with innovativeness (Park & Woo, 2022), a personal disposition linked to a greater tendency to adopt innovations (Agarwal & Prasad, 1998; Ahn et al., 2016), and research suggests that innovativeness increases positive attitudes toward AI technologies (Gansser & Reich, 2021; Lewis et al., 2003; Mohr & Kühl, 2021; Park et al., 2022; Zhang, 2020). Although openness to experience and innovativeness may share mental substructures that make people less resistant to change and more likely to perceive new technology as simple to use (Hampel et al., 2021; He & Veronesi, 2017; Lee et al., 2009; Nov & Ye, 2008), prior observations of links between openness and AI or technology attitudes did not appear to generalize to general attitudes toward AI in this instance. Factors influencing this outcome may include the sample demographics, the personality scale used, and the more specific predictive power of AI Anxiety.

4.3.5. Emotional stability

Emotional stability, the tendency to be calm and unworried, was not significantly predictive of AI attitudes in the final models, despite a weak yet significant correlation with the negative GAAIS and its significance as a predictor in Model 2 of the negative GAAIS. Other studies also found nonsignificant associations between emotional stability and attitudes toward AI and technology adoption (Park & Woo, 2022; Schepman & Rodway, 2022). In contrast, other research found that emotionally unstable people have less forgiving attitudes toward technology (Barnett et al., 2015; Svendsen et al., 2013), while emotional stability may mitigate individuals’ worries about autonomous vehicles and enhance their acceptance of autonomous transport (Charness et al., 2018). Neuroticism, the low pole of emotional stability, may also influence the perceived practicality of technologies (Devaraj et al., 2008; Zhou & Lu, 2011). Studies also report that emotional stability alleviates problematic and pathological use of the internet and social media (Hawi & Samaha, 2019). However, in our study, these effects may have been overshadowed by more specific predictors.

4.4. Hypothesis 3: AI Anxiety and General Attitudes toward AI

Hypothesis 3 proposed that AI Anxiety would negatively predict attitudes toward AI. All four AI Anxiety subscales correlated significantly and negatively with both GAAIS subscales. In the hierarchical models, adding the AI Anxiety subscales to Model 2 improved the prediction of attitudes toward AI, particularly for the negative GAAIS: people with higher AI anxiety were less forgiving toward the drawbacks of AI, and AI anxiety added 20% to the explained variance, compared with 4% for the positive GAAIS. However, not all AI Anxiety subscales were significant predictors in Model 3. We now discuss each subscale in turn.

4.4.1. AI learning anxiety

AI learning anxiety significantly predicted both positive and negative attitudes toward AI. AI learning anxiety refers to a fear of being unable to acquire specific knowledge and skills about AI (Terzi, 2020; Wang & Wang, 2022). People who fear that they lack the personal resources to acquire AI-related knowledge and skills may avoid AI-powered technologies, to their potential detriment. Our data suggest that such people hold less positive attitudes toward the beneficial aspects of AI and less tolerant attitudes toward its negative aspects. Thus, addressing AI learning anxiety may be an important factor in fostering more positive views of AI in the general population.

4.4.2. AI configuration anxiety

AI configuration anxiety predicted less forgiving attitudes toward the negative aspects of AI in our study. AI configuration anxiety refers to fear of humanoid AI (Wang & Wang, 2022). People may be afraid of human-like robots such as those depicted in the movie “I, Robot” (Mark et al., 2004). Rosanda and Istenič (2021) reported that pre-service teachers were not willing to work with humanoid robots in their future classrooms. Yuan et al. (2022) demonstrated that social anxiety moderates the interaction between individuals’ perceptions of humanoid assistants and their values, which may affect their attitudes toward AI-powered assistants. They also found that anxiety was associated with negative attitudes toward interaction with humanoid robots. However, humanoid AI robots are not currently widespread in Turkey, so participants’ ideas about humanoid robots may have been formed through other channels, such as the media.

4.4.3. Job replacement anxiety

Our study showed that anxiety about job losses caused by the development of AI technology did not significantly predict positive or negative attitudes toward AI. Research indicates that technology employees may find it challenging to keep up with job requirements and may consequently experience reduced well-being (Synard & Gazzola, 2018), suggesting that such fears have realistic grounds and that safeguarding a job may be difficult. Similarly, Erebak and Turgut (2021) reported that people may suffer from job insecurity and anxiety due to the speed of technological change, and Vatan and Dogan (2021) found that hotel employees working in Turkey had negative emotions toward AI robots and believed that service robots may lead to increased job losses in the future. However, only a small proportion of our sample worked in the technology industry; the majority of participants were university students or civil servants. Students and civil servants may be under somewhat less pressure than private-sector employees to update their technological skills, and they may feel that their jobs are less easily replaced by AI. This may have limited the predictive power of job replacement anxiety with regard to attitudes toward AI in the current sample.

4.4.4. Sociotechnical blindness

Our study revealed that sociotechnical blindness was not a significant predictor of attitudes toward AI. Sociotechnical blindness refers to anxiety arising from a failure “to recognize that AI is a system and always and only operates in combination with people and social institutions” (Johnson & Verdicchio, 2017, p. 2). In Turkey, as in the rest of the world, there is an increasing trend toward adopting AI technology in the workplace. However, most of the workforce in ordinary jobs still relies on human effort (Ermağan, 2021). Therefore, limited awareness of AI technology and its role in working life may make most people in our sample less likely to see AI as a self-sustaining entity, like a newly evolved independent species. This may explain why sociotechnical blindness did not predict AI attitudes in this sample.

In line with our data, previous research suggests that AI anxiety may impede technology adoption, usage, or acceptance, and may lead people to overlook the advantages of AI technology, underestimate its practical benefits, and fail to acknowledge its ease of use (Alenezi & Karim, 2010; Çalisir et al., 2014; Dönmez-Turan & Kır, 2019; Hsu et al., 2009; Lee et al., 2009; Nov & Ye, 2008). Major concerns include the inability to control personal data and the violation of privacy (Forsythe & Shi, 2003). When people mistrust technology, they may magnify their expectations of harmful future experiences, perceive a greater number of risks, and consequently feel more anxious and have a less positive attitude toward AI (Chuang et al., 2016; Haqqi & Suzianti, 2020; Park et al., 2022; Schepman & Rodway, 2022; Siau & Wang, 2018; Thanabordeekij et al., 2020). Such anxieties may be alleviated, and replaced with more positive attitudes toward AI, by discussing people’s thoughts about AI with them and helping them reduce their worries about the consequences of technology.

4.5. Hypothesis 4: Narrow or Broad Predictors?

Hypothesis 4 concerned the relative predictive power of broad personality traits versus the narrower construct of AI Anxiety. For the positive GAAIS, each of these two sets of factors added relatively modest incremental prediction: the Big Five traits added a modest but significant 3% of explained variance in Model 2, and AI Anxiety added a further modest but significant 4% in Model 3, displacing openness as a significant predictor. For the negative GAAIS, the Big Five traits added a significant 8% of explained variance to Model 1, and in Model 2 both agreeableness and emotional stability were significant predictors. When AI Anxiety was added in Model 3, a further 20% of the variance was explained, and emotional stability became a non-significant predictor, displaced by AI learning anxiety and AI configuration anxiety. Thus, there was evidence that the narrow construct of AI Anxiety added a large amount of explained variance, especially to the negative GAAIS model, and also displaced broader constructs. The displacement of openness to experience by AI learning anxiety may mean that general open-mindedness does not necessarily transfer to the technology domain. For emotional stability, the displacement suggests that anxiety or emotional concern about AI is predicted more strongly by specific AI anxiety than by a general personality trait. As also argued by Schepman and Rodway (2022), broader anxiety may be more readily directed at everyday concerns than at AI, and the current data are consistent with this.

4.6. Cultural and social dimensions

AI profoundly impacts societies, but the impact may depend on the culture of the specific society. We briefly consider these important social and cultural dimensions.

AI-powered personal assistants, home management systems, self-driving cars, security software and hardware, marketing systems, personal computers, and smartphones are becoming part of daily life. These technological advancements reshape our lives. For instance, as window winders in cars are replaced by voice commands, the hand gesture used to signal lowering a car window will lose its meaning. Similarly, phubbing (Chotpitayasunondh & Douglas, 2016), that is, snubbing the people one is with by attending to one’s smartphone instead, has emerged. Not all developments are negative. Technology can also be used to promote better behaviors, social-emotional learning, and character development among children in classroom settings (Williamson, 2017). In the future, dynamic relationships between humans and AI devices may emerge; these are already being studied in the laboratory (Hidalgo et al., 2021), and the need for new research tools will grow as this work progresses.

Like other characteristics, attitudes toward AI are shaped in specific cultural and social contexts, and differing cultural and social backgrounds may give rise to diverse responses to technology. In a recent study, Ho et al. (Citation2022) investigated attitudes toward emotional AI among Generation Z. They found that Muslim and Christian participants were more sensitive than Buddhist participants to the collection of non-conscious emotional data by AI technologies. They also emphasized that cultural and demographic differences may predict attitudes toward AI. In the present study, most personality traits, some AI-related anxieties, and some demographic factors were nonsignificant predictors of attitudes toward AI. This may be because the general population in Turkey may not be aware of the various uses of AI technologies, despite having adopted many applications that use them. This, in turn, may be because AI technologies are seamlessly embedded in software and applications, making it difficult for the general population, who are not technology specialists, to notice the underlying mechanisms. Another possible explanation for the nonsignificant findings is that religion might restrain individuals' positive attitudes toward AI, as suggested by Ho et al. (Citation2022). It is important to note that no data on religion were collected in the present study. However, the majority of Turkey's population is Muslim, and this may lead to specific associations between AI attitudes and other traits.

In the Islamic faith, privacy is one of the cornerstones of human rights, and violating it is frowned upon (Hayat, Citation2007). Just as others are expected to respect one's right to privacy, believers are also required by God to guard their own privacy, for example, by not talking about others in their absence, not revealing others' personal information and shortcomings, not sharing private information about themselves and their family, and covering the private parts of the body from others (also called proper clothing). A strict emphasis on privacy may therefore influence attitudes toward AI technologies that collect personal data. However, Turkey is not a country governed by Islamic law. It is a modern, developing country spanning Europe and Asia, and its culture includes both individualistic and collectivistic values. It would be valuable to examine cultural dimensions related to AI attitudes more extensively in future studies, particularly with reference to privacy concerns.

4.7. Future directions and recommendations

Based on our findings, we make three recommendations. First, because AI anxieties may inhibit adoption by potential users, policymakers should reassure the public that these technologies are regulated to ensure their safety. This may lead to more positive public opinions of AI and may enhance AI acceptance. This, in turn, may boost the economic benefits that AI may offer in sectors such as education, health, and tourism.

Second, education about AI may be beneficial. AI technology could be introduced to students, and basic computer programming and technical knowledge could be integrated into Information Technology courses at every level of education. The knowledge and experience gained may lead to more positive attitudes toward AI, and anxieties may be replaced by a greater understanding of AI. To increase general knowledge in wider society, particularly in communities where AI is not well known or widely used, local administrations may offer learning opportunities through lifelong learning institutions. This may increase public awareness of the issues and make citizens less concerned about using AI technologies in their lives.

Third, government support is needed. Funding for researchers investigating the factors behind potential reluctance to accept AI would be beneficial. Governments also need to guard against violations of rights by AI-powered applications and against their predatory use in society.

In sum, governments should fulfill educational, legislative, and safety-related duties to promote better use of AI in people's daily lives.

5. Conclusions

We validated the Turkish General Attitudes toward Artificial Intelligence Scale (Turkish GAAIS), which showed close comparability to the English GAAIS (Schepman & Rodway, Citation2020, Citation2022). In our Turkish sample, attitudes toward positive aspects of AI were significantly positively predicted by subjective knowledge of AI and computer use, and negatively by AI learning anxiety. Forgiving attitudes toward AI drawbacks were positively predicted by agreeableness, and negatively by AI learning anxiety and AI configuration anxiety. The broader traits of openness to experience and emotional stability were statistically overshadowed by more specific AI anxiety predictors. Knowledge, experience, and AI anxiety are potentially modifiable, and effective interventions targeting them may enhance AI attitudes in populations.

Ethical approval

All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975, as revised in 2000. Informed consent was obtained from all participants recruited in the study. Ethical approval was given for the study by the Social and Human Sciences Ethics Committee at Ataturk University with the approval number E-88656144-000-2200099542.

Supplemental Material

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Feridun Kaya

Feridun Kaya is an assistant professor in Psychology at Atatürk University, Turkey, with an educational background in Counselling Psychology. His research areas include forgiveness, resilience, educational stress, academic life satisfaction, subjective well-being, personality, psychological distress, emotional intelligence, and Artificial Intelligence.

Fatih Aydin

Fatih Aydin is a research assistant in Counselling and Guidance at Sivas Cumhuriyet University, Turkey, with an educational background in Counselling Psychology. His current interests include the psychological consequences of the COVID-19 pandemic in adults, adolescents and school-aged children, and factors affecting the adoption of smart technologies including Artificial Intelligence.

Astrid Schepman

Astrid Schepman is a senior lecturer in Psychology at the University of Chester, UK, with a multidisciplinary educational background in Experimental Psychology and Linguistics. Her interests in technology include public perceptions of Artificial Intelligence, technology and emotion, and technology’s interfaces with language.

Paul Rodway

Paul Rodway is a senior lecturer in Psychology at the University of Chester, UK, with an educational background in Experimental Psychology and Intelligent Systems. His technology interests include applications of Artificial Intelligence and their links to Individual Differences and Cognitive Science.

Okan Yetişensoy

Okan Yetişensoy is a research assistant in Social Studies Teaching at Bayburt University, Turkey, with an educational background in Social Studies Teaching. He is currently a doctoral student at Anadolu University. His interests include disaster awareness, academic success, anxiety, attitudes and Artificial Intelligence.

Meva Demir Kaya

Meva Demir Kaya is an assistant professor in Psychology at Atatürk University, Turkey, with an educational background in Developmental Psychology. Her research areas include identity, personality, traumatic experience, emotion regulation, emotional intelligence, Artificial Intelligence, attachment, and mental health.

References

- Acemoglu, D., & Restrepo, P. (2017, April 10). Robots and jobs: Evidence from US labor markets. Voxeu. https://voxeu.org/article/robots-and-jobs-evidence-us

- Agarwal, R., & Prasad, J. (1998). A conceptual and operational definition of personal innovativeness in the domain of information technology. Information Systems Research, 9(2), 204–215. https://doi.org/10.1287/isre.9.2.204

- Ahn, M., Kang, J., & Hustvedt, G. (2016). A model of sustainable household technology acceptance. International Journal of Consumer Studies, 40(1), 83–91. https://doi.org/10.1111/ijcs.12217

- Alenezi, A. R., & Karim, A. (2010). An empirical investigation into the role of enjoyment, computer anxiety, computer self-efficacy and internet experience in influencing the students’ intention to use e-learning: A case study from Saudi Arabian governmental universities. Turkish Online Journal of Educational Technology, 9(4), 22–34.

- Ary, D., Jacobs, L. C., Sorensen, C. K., & Walker, D. A. (2014). Introduction to research in education (9th ed.). Wadsworth.

- Atak, H. (2013). Turkish adaptation of the Ten-Item Personality Inventory. Noro Psikiyatri Arsivi, 50(4), 312–319.

- Bakir, V. (2020). Psychological operations in digital political campaigns: Assessing Cambridge Analytica’s psychographic profiling and targeting. Frontiers in Communication, 5, 67. https://doi.org/10.3389/fcomm.2020.00067

- Barnett, T., Pearson, A. W., Pearson, R., & Kellermanns, F. W. (2015). Five-factor model personality traits as predictors of perceived and actual usage of technology. European Journal of Information Systems, 24(4), 374–390. https://doi.org/10.1057/ejis.2014.10

- Beckers, J. J., & Schmidt, H. G. (2001). The structure of computer anxiety: A six-factor model. Computers in Human Behavior, 17(1), 35–49. https://doi.org/10.1016/S0747-5632(00)00036-4

- Belanche, D., Casaló, L. V., & Flavián, C. (2019). Artificial intelligence in FinTech: Understanding robo-advisors adoption among customers. Industrial Management & Data Systems, 119(7), 1411–1430. https://doi.org/10.1108/IMDS-08-2018-0368

- Bossman, J. (2016, October 21). Top 9 ethical issues in artificial intelligence. World Economic Forum. https://www.weforum.org/agenda/2016/10/top-10-ethical-issues-in-artificial-intelligence/

- Brooks, A. (2019, April 11). The benefits of AI: 6 societal advantages of automation. Rasmussen University. https://www.rasmussen.edu/degrees/technology/blog/benefits-of-ai/

- Broos, A. (2005). Gender and information and communication technologies (ICT) anxiety: Male self-assurance and female hesitation. Cyberpsychology & Behavior, 8(1), 21–31. https://doi.org/10.1089/cpb.2005.8.21

- Brown, T. A. (2015). Confirmatory factor analysis for applied research. Guilford Publications.

- Buckner, V. J. E., Castille, C. M., & Sheets, T. L. (2012). The Five Factor Model of personality and employees’ excessive use of technology. Computers in Human Behavior, 28(5), 1947–1953. https://doi.org/10.1016/j.chb.2012.05.014

- Byrne, B. M. (2010). Structural equation modeling with AMOS: Basic concepts, applications and programming (2nd ed.). Routledge.

- Charness, N., Yoon, J. S., Souders, D., Stothart, C., & Yehnert, C. (2018). Predictors of attitudes toward autonomous vehicles: The roles of age, gender, prior knowledge, and personality. Frontiers in Psychology, 9, 2589. https://doi.org/10.3389/fpsyg.2018.02589

- Chocarro, R., Cortiñas, M., & Marcos-Matás, G. (2021). Teachers’ attitudes towards chatbots in education: A technology acceptance model approach considering the effect of social language, bot proactiveness, and users’ characteristics. Educational Studies, 1–19. https://doi.org/10.1080/03055698.2020.1850426

- Chotpitayasunondh, V., & Douglas, K. M. (2016). How “phubbing” becomes the norm: The antecedents and consequences of snubbing via smartphone. Computers in Human Behavior, 63, 9–18. https://doi.org/10.1016/j.chb.2016.05.018

- Chuang, L.-M., Liu, C.-C., & Kao, H.-K. (2016). The adoption of fintech service: TAM perspective. International Journal of Management and Administrative Sciences, 3(7), 1–15.

- Circiumaru, A. (2022). Futureproofing EU law: The case of algorithmic discrimination [Unpublished master's thesis]. University of Oxford.

- Comrey, A. L., & Lee, H. B. (1992). A first course in factor analysis (2nd ed.). Lawrence Erlbaum Associates.

- Cook, R. D. (1977). Detection of influential observations in linear regression. Technometrics, 19(1), 15–18. https://doi.org/10.1080/00401706.1977.10489493

- Cook, R. D., & Weisberg, S. (1982). Residuals and influence in regression. Chapman & Hall.

- Costa, P. T., & McCrae, R. R. (1992). Revised NEO Personality Inventory and NEO Five-Factor Inventory: Professional manual. Psychological Assessment Resources.

- Creswell, J. W. (2014). Research design: Qualitative, quantitative, and mixed methods approaches. Sage.

- Çalisir, F., Altin Gumussoy, C., Bayraktaroglu, A. E., & Karaali, D. (2014). Predicting the intention to use a web-based learning system: Perceived content quality, anxiety, perceived system quality, image, and the technology acceptance model. Human Factors and Ergonomics in Manufacturing & Service Industries, 24(5), 515–531. https://doi.org/10.1002/hfm.20548

- Dalvi-Esfahani, M., Alaedini, Z., Nilashi, M., Samad, S., Asadi, S., & Mohammadi, M. (2020). Students’ green information technology behavior: Beliefs and personality traits. Journal of Cleaner Production, 257, 120406. https://doi.org/10.1016/j.jclepro.2020.120406

- Darko, A., Chan, A. P., Adabre, M. A., Edwards, D. J., Hosseini, M. R., & Ameyaw, E. E. (2020). Artificial intelligence in the AEC industry: Scientometric analysis and visualization of research activities. Automation in Construction, 112, 103081. https://doi.org/10.1016/j.autcon.2020.103081

- Devaraj, S., Easley, R. F., & Crant, J. M. (2008). Research note: How does personality matter? Relating the five-factor model to technology acceptance and use. Information Systems Research, 19(1), 93–105. https://doi.org/10.1287/isre.1070.0153

- Dönmez-Turan, A., & Kır, M. (2019). User anxiety as an external variable of technology acceptance model: A meta-analytic study. Procedia Computer Science, 158, 715–724. https://doi.org/10.1016/j.procs.2019.09.107

- Durbin, J., & Watson, G. S. (1950). Testing for serial correlation in least squares regression: I. Biometrika, 37(3–4), 409–428.