Abstract

Widespread adoption of artificial intelligence (AI) technologies is substantially affecting the human condition in ways that are not yet well understood. Negative unintended consequences abound, including the perpetuation and exacerbation of societal inequalities and divisions via algorithmic decision making. We present six grand challenges for the scientific community to create AI technologies that are human-centered, that is, ethical and fair, and that enhance the human condition. These grand challenges are the result of an international collaboration across academia, industry and government and represent the consensus views of a group of 26 experts in the field of human-centered artificial intelligence (HCAI). In essence, these challenges advocate for a human-centered approach to AI that (1) is centered in human well-being, (2) is designed responsibly, (3) respects privacy, (4) follows human-centered design principles, (5) is subject to appropriate governance and oversight, and (6) interacts with individuals while respecting human cognitive capacities. We hope that these challenges and their associated research directions serve as a call for action to conduct research and development in AI that serves as a force multiplier towards more fair, equitable and sustainable societies.

1. Introduction

The time of reckoning for Artificial Intelligence is now. Artificial Intelligence, or AI, started as the quest to not just understand what intelligence is, but to build intelligent entities (Dietrich & Fields, 1989). Since the 1950s, AI developed as a field that combined increasing multitasking abilities, computational power, and memory with progressively larger datasets, allowing for computer-based inference and problem-solving (Komal, 2014). The subfields of machine learning (ML), deep learning, and reinforcement learning were developed later; they leverage AI algorithms (computational units that transform given inputs into desired outputs) to provide predictions and classifications based on available data. Currently, the digitalization of most aspects of human activity has produced massive amounts of data for training algorithms. This data, coupled with the exponential increase in computational power, is propelling AI techniques to become widespread across all industries (Le et al., 2020). Although the ultimate goal of building fully intelligent entities remains elusive, the age of AI is already impacting humanity in ways that are substantial yet not well understood. In the recent past, various scientific disciplines including physics and chemistry had to reckon with the societal consequences of their scientific advances when these advancements migrated from conference discussion or a laboratory experiment into wide adoption by industry. In a similar manner, now is the time for the scientific community to grapple with the societal consequences and potential changes to the human condition resulting from the adoption of current AI systems.

AI has permeated many industries and aspects of human life. For example, in healthcare, while AI has improved diagnosis and treatment and lowered the cost and time to discover and develop drugs, it has also introduced biases in automated decision making. These biases are detrimental to demographic minorities because of the disproportionate over-representation of Caucasian and higher income patients in electronic health record databases (West & Allen, 2020, July 28). In criminal justice, the Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) is a decision making AI deployed by the US criminal justice system to assess the likelihood of a criminal defendant’s recidivism. COMPAS has shown significant bias against African American defendants (Chouldechova, 2017). Similarly, predictive AI-assisted policing affects minorities disproportionately (Spielkamp, 2017). Algorithmic biases also exist in AI systems used in the education sector. For example, bias has been demonstrated in algorithmic decision making for university admissions and in predictive analytics for identifying at-risk students (Williams et al., 2018). In the technology sector, studies show that search engine targeted advertising shows high-paying jobs significantly more frequently to males than females (Lambrecht & Tucker, 2019). In the financial sector, “color-blind” automated underwriting systems recommend higher denial rates for minority racial/ethnic groups (Bhutta et al., 2021). Algorithms that curate social media content for engagement maximization provide personalized content that lacks diversity of opinions and information. Studies show that AI curation risks creating silos of opinions and echo chambers that eventually lead to deep divisions in society (Section 2.2.3).
In our view, technology companies, admission officers, hiring officers, banking executives and other decision makers could obtain better results by adopting an all-encompassing human-centered approach to AI-driven curation, moderation, and prediction instead of a purely technological one. Indeed, many firms that famously adopted purely technological processes have found it necessary to reintroduce humans.

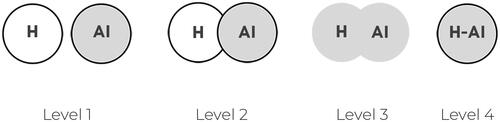

Throughout previous industrial evolutions, mechanization eclipsed human abilities to perform physical work, while humans maintained cognitive superiority. In the current age, many have warned of AI exceeding human intelligence leading to job loss, dependence, and far-reaching societal effects (Anderson et al., 2018). However, human and artificial intelligence are not equivalent. While AI performs well at multitasking, computation, and memory, humans excel in logical reasoning, language processing, creativity and emotion, among other areas (Komal, 2014). Although some have envisioned a future in which AI eclipses human intelligence (Grace et al., 2018), this group argues for a future in which advances in AI augment rather than replace humans and improve their environment. Ultimately, AI should support the wide-reaching goals of increasing equality, reducing poverty, improving medical outcomes, expanding and individualizing education, ending epidemics, providing more efficient commerce and safer transportation, promoting sustainable communities, and improving the environment (United Nations Department of Economic & Social Affairs, 2018, Apr 20).

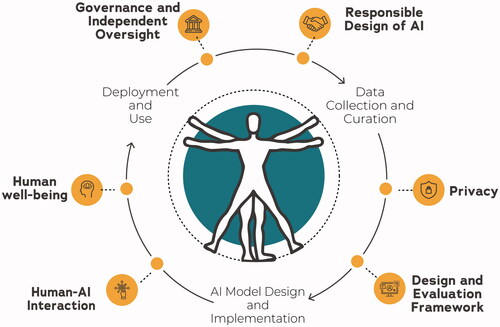

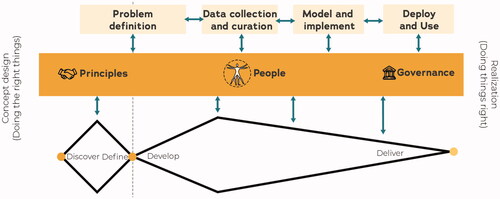

Given the impact that the human-computer interaction (HCI) field has had on expanding the capabilities and widespread use of computers, HCI is in a unique position to expand the usefulness of AI and ensure that future applications are human-centered. While HCI has previously focused on the human and how technological artifacts can be better designed to meet the user’s needs, in the age of AI, HCI can lead the way in providing a much-needed human-centered approach to AI. While traditional software systems follow a set of rules that guide the generation of a result, AI systems evolve and adapt over time. For instance, ML has three main design stages: (1) data collection and curation, (2) algorithm design and experimentation, and (3) deployment and use. As a result, the AI design cycle is a continuous process and requires perpetual oversight as part of the design framework to preserve alignment of system goals and objectives with user values and goals. Furthermore, human values, goals and context also change over time. Recognizing these changes and adapting system behavior to ensure value alignment is essential for human-centered design. Moreover, unlike humans and human teams, AI systems presently have no rights or responsibilities and are not accountable for their actions and decisions, though this could change in the future (see Section 6, Governance and Independent Oversight). People are responsible and accountable for the system design and behavior, which requires them to comprehend AI well enough to anticipate potentially problematic system behavior (Hoffman et al., 2018). This requires new design methods, practices, verification and validation methods, and frameworks to detect and incorporate ever-evolving human goals and values from user research into the AI system’s objectives (Gibbons, 1998) and build responsible, transparent, and interpretable AI systems.

In contrast to the problematic AI systems mentioned above that have been developed and deployed to date, human-centered AI (HCAI) seeks to re-position humans at the center of the AI lifecycle (Bond et al., 2019; Riedl, 2019; Xu, 2019) and improve human performance in ways that are reliable, safe, and trustworthy by augmenting rather than replacing human capabilities (Shneiderman, 2020a). Such an approach considers individual human differences, demands, values, expectations, and preferences rather than algorithmic capabilities, resulting in systems that are accessible, understandable, and trustworthy (Sarakiotis, 2020), allowing for high levels of human control and automation to occur simultaneously (Shneiderman et al., 2020b). This approach will encompass establishing frameworks for design, implementation, evaluation, operation, maintenance, decommissioning, and governance of AI systems that will strive to create technologies that are compatible with human values, protect human safety, and assure human agency. HCAI is beginning to make impacts on education (Renz & Vladova, 2021) and medicine (Gong et al., 2021), but widespread adoption remains forthcoming. Given the current trajectory of AI research and industrial adoption, we can easily envision a future in which AI is even more ingrained in society and its impact more salient. It is in this context that this group proposes six grand challenges in HCAI along with a human-centered research agenda for the responsible study, development, deployment, and operation of AI systems that will guide and promote research that is responsible, sustainable, ethical, and in essence, human-centric.
Implementing the HCAI vision is of necessity highly interdisciplinary, requiring the integration of expertise across traditional disciplines such as HCI, ML, and software engineering, but given the increasing reach and worldwide impacts of AI technology, complementary fields such as sociology, ethics, law, bioengineering, and policy will also be required (Bond et al., 2019).

This article presents the results of a collaboration among 26 international experts from North America, Europe, and Asia with broad interest in the field of HCAI across academia, industry, and government. Participation was voluntary and recruiting was done through professional networks. Expertise was sought across disciplines in the social and computational sciences as well as industry. Consideration was given to increasing participation by members of under-represented groups when announcements and invitations were sent. The group had various educational backgrounds, with a majority holding Ph.D.s. Disciplines included Computer Science, Psychology, Engineering, and Medicine, with different specializations within the represented disciplines.

The group’s collaboration started in early 2021, with the identification, through an online questionnaire and discussion, of major challenges facing the adoption and application of HCAI principles at present, scientific challenges and opportunities for HCAI in the future, and recommendations for future research directions. Authors of this article collaborated synchronously and asynchronously to concept-map (Falk-Krzesinski et al., 2011) the collected opinions, summarize them into major areas, and rank them through an online questionnaire.

Results were presented to the broader research community at the HCI International 2021 conference on July 26, 2021. Major areas included: trustworthy AI for a human-centered future; AI, decision making, and the impact on humans; human-AI collaboration; and exploring a human-centered future for AI (Garibay & Winslow, 2021). Announcements about the session were sent to the research community interested in HCAI. Those who accepted were invited to a one-day closed meeting held on July 27, 2021, at which the major challenges were analyzed and discussed and a condensed set of six challenges was produced. Team members then developed written descriptions of the challenges, the rationale for including them, the main research challenges, and emerging requirements. Breakout groups were formed for smaller discussions, and online documents, editable by all participants, were used for information collection. The overall session and breakouts were facilitated by members of the author team. Given that this was done in an online and synchronous interaction platform, host facilitation ensured that communication was managed and open among participants.

The six grand challenges of building human-centered artificial intelligence systems and technologies are identified as developing AI that (1) is human well-being oriented, (2) is responsible, (3) respects privacy, (4) incorporates human-centered design and evaluation frameworks, (5) is governance and oversight enabled, and (6) respects human cognitive processes at the human-AI interaction frontier. The first major challenge represents the overall purpose of HCAI, human well-being: ensuring that AI systems are centered on improving people’s lives and experiences. The second and third challenge areas represent principles that ensure responsible AI system design and development and protect human privacy. The fourth and fifth challenges represent processes to develop and provide a comprehensive HCAI design, evaluation, governance and oversight framework for appropriate guidance and human control over the AI life cycle. The final challenge area represents the ultimate product of HCAI, the vision of future human-AI interaction. Since the identified challenges are interrelated, the discussions that follow are interconnected, e.g., a comprehensive HCAI design, implementation, and evaluation framework encompasses aspects of responsibility and privacy. We describe these six challenges and their associated research directions and recommendations (see Sections 8.1 and 8.2). These serve as a call for action to conduct research and development in AI that accelerates the movement towards more fair, equitable and sustainable societies.

2. Human well-being (challenge 1)

2.1. Definitions and rationale

2.1.1. Human well-being

The concept of human well-being is hard to define. Two general perspectives have emerged over the years: the hedonic approach, which focuses on positive emotions such as happiness, positive affect, low negative affect, and satisfaction with life (Diener et al., 2009); and the eudemonic approach, which focuses on meaning and self-realization, viewing well-being in terms of the degree to which a person is fully functioning (Ryff & Singer, 2008). Today, the common understanding is that well-being is a multi-dimensional construct involving both a hedonic and a eudemonic dimension. For example, Positive Psychology (Seligman, 2012) supports the concept of human flourishing, intended as “positive” mental health (Keyes, 2002).

Towards these aims, there is a need to elaborate and explore what is understood about well-being and to apply that understanding to AI, ultimately translating these higher-level concepts into at least two veins of effort: (1) discovering implementation opportunities for AI to benefit human well-being and (2) making specific design considerations to support user well-being when interacting with AI.

2.1.2. Unique features of AI technology that may impact well-being

In its various conceptions, AI, or technologies that replicate, exceed, or augment human capabilities, has characteristics that may uniquely impact human well-being. By design, AI offers a higher level of automation and self-direction, requiring less human input. However, it lacks a concept of human values, common sense, or ethics (Allen et al., 2005; Han et al., 2022), and thus may perform its tasks or influence decisions from a basis that is not human-oriented or may cause direct harms. In many cases AI is trained on data derived from human behaviors and thus may adopt inclinations or behaviors that result from implicit bias in that data (Ntoutsi et al., 2020). When ML algorithms are trained to optimize a narrowly defined outcome, this may be at the expense of other desired (alternative) outcomes representing values that were not included in the model. AI may influence what people believe to be true or important in a decision making task, but do so spuriously with potential negative consequences (Araujo et al., 2020). Because underlying methods are generally not transparent, users of AI may overtrust or undertrust the system, leading to errors (Okamura & Yamada, 2020). Explainability methods can be complex for end users and have a similar result of overtrust (Ghassemi et al., 2021; He et al., 2022). Learning systems can be dynamic, such that performance may not be consistent. This may result in an inappropriate shift in responsibility where end users are expected to evaluate a tool’s performance without a desire or realistic capability of doing so (Amershi et al., 2014; Groce et al., 2014). Finally, AI is an added accelerant for technology development (Wu et al., 2020). Any degree of mastery in conceptualizing and creating human-centered AI could have early, broad, and ultimately persistent impacts.

As AI becomes increasingly integrated (Poquet & Laat, 2021) in work and life, it is essential that we can consider, observe, evaluate, articulate, and act upon the human experience with AI, both individual and collective. A well-being orientation is mindful of these concerns. It approaches AI from a perspective of human impacts, individual and collective, with the aim of generally increasing eudaimonia and flourishing for those who either interact with or are impacted by AI.

In this challenge we consider the unique impacts of AI as a technology and the types of considerations that might be made as human-AI interactions become a common part of the human experience. Social media is discussed as a representative case study.

2.2. Main research issues and state of the art

2.2.1. Foundational and actionable characteristics of well-being-oriented AI

As already mentioned, AI systems are considered as potentially capable of enhancing human well-being, since they offer technical solutions for monitoring and reasoning about human needs and activities, as well as for making decisions about how to intervene in support of humans. In this context, however, it is important to answer the question of which characteristics AI systems must have to achieve the above objectives.

In this respect, the quality of experience becomes the guiding principle in the design and development of new technologies, as well as a primary metric for the evaluation of their applications. “Positive technology” as a general term (Riva et al., 2012), and “Positive computing” more specifically (Calvo & Peters, 2014), suggest that it is possible to use technology to influence specific features of human experience that serve to promote adaptive behaviors and positive functioning, such as affective quality, engagement, and connectedness.

Targeted well-being factors include positive emotions, self-awareness, mindfulness, empathy, and compassion, and technology has been proposed to support these factors (Calvo & Peters, 2014).

In order to enhance human well-being, emerging AI technologies should be inclusive, avoid bias, and be transparent and accountable (Section 3); respect human resources, especially time and data (Section 4); adopt simplicity in design (Section 5); prevent negative side effects (Section 6); and respect, support and expand human cognitive capacities and adapt to humans (Section 7) (Wellbeing AI Research Institute, 2022).

As a research foundation, a very robust and potentially useful framework is Self-Determination Theory (SDT), which is well aligned with eudaimonia concepts and offers an evidence-based approach for increasing motivation and well-being (Ryan & Deci, 2017). SDT is “deliberate in its embracing of empirical methods, and statistical inferences, as central and meaningful to its epistemological strategy” and has been investigated in thousands of studies across a variety of domains including the workplace, schools and learning, sports and exercise, healthcare, psychotherapy, cultural and religious settings, and even virtual worlds (Ryan & Deci, 2017). SDT finds that autonomy, competence, and relatedness are the psychological nutrients essential for individuals’ motivation, well-being, and growth. As an approach that enhances both well-being and intrinsic motivation, SDT could be an especially useful resource in designing AI tools that support eudaimonia while also creating engagement towards business goals or other imperatives (Peters et al., 2018).

2.2.2. Opportunities for AI that support well-being

As it does with other ambitions, AI offers many opportunities towards the pursuit of well-being. For example, a discussion is ongoing among the scientific and the global community regarding how technology can support the UN Sustainable Development Goals (SDGs) (United Nations Department of Economic & Social Affairs, 2018, Apr 20) for 2030, which also include good health and well-being for everybody. AI has been identified as a supporting technology in the achievement of 128 targets across all SDGs (Vinuesa et al., 2020). The AI for Good initiative (AI for Good, 2021) aims to identify practical applications of AI to advance the UN SDGs.

Mental health is a domain where several efforts have leveraged AI towards improving well-being. In this context, AI is often used in the development of prediction, detection, and treatment solutions for mental health care. AI has been incorporated into digital interventions, particularly web and smartphone apps, to enhance user experience and optimize personalized mental health care (Neary & Schueller, 2018), and has been used to develop prediction/detection models for mental health conditions (Carr, 2020). Smart environments including monitoring infrastructures as well as AI-based reasoning components have been proposed to address common well-being issues relevant for large parts of the population, such as emotion regulation (Fernandez-Caballero et al., 2016), stress management (Winslow et al., 2016, 2022), sleep hygiene (Leonidis et al., 2021), and independent living and everyday activities of people with disability and the aging population (Burzagli et al., 2022). A common issue for this type of system is assessing its impact on well-being reliably, using effective instruments.

Another exemplary area of effort involves the development of interactive technologies to support universal accessibility (see Section 5.2.3 Ensuring universal accessibility). These tools aim to provide technological solutions that are accessible by all and support the independent living and everyday activities of people with disabilities and people of advancing age (Stephanidis, 2021). In this context, AI has the potential to provide the reasoning means to make decisions about the type of support needed in an individual and context-dependent way (Burzagli et al., 2022).

2.2.3. Unintended AI impacts on general well-being

The question of harms is crucial to the design community, especially those harms that are incidental, that arise from choices in the design of AI rather than the specific intent of an AI tool. Certainly, the malevolent use or weaponization of AI is to be safeguarded against (Winfield et al., 2019), but a response to those issues may need less advocacy than the issue of less apparent harms from AI that arise from poor design.

Perhaps the most insidious risk with AI is that its applications will have mixed results, with enthusiastic deployments accompanied by harms that are never corrected or evaluated. This might be the case with a tool that increases capability but adds risk, stress, or administrative burdens. A tool might benefit people generally while exhibiting bias or marginalizing subgroups of people. For example, a tool may improve the efficiency of processes such as academic admissions (Vinichenko et al., 2020), but result in marginalization or unequal distribution of resources (see Section 1). An application may leverage data in useful ways, but also impact privacy inappropriately (see Section 4). Decision support tools may be generally superior to humans but lack the common sense or values to address outlier cases in a humane way. As discussed in other challenges, AI may exacerbate inequalities, result in job disruption, and cause worker deskilling (Ernst et al., 2019). In another example, social media algorithms confer both benefits and harms not only to individual users but also to society at large (Balaji et al., 2021). Beyond individuals and groups, there are potential impacts of AI on the natural world (Ryan, 2022), with which human flourishing is inextricably enmeshed.

The UN targets previously discussed also face the issue of mixed benefits and harms. While AI could support 128 targets across the previously mentioned UN SDGs, it may also inhibit 58 targets. For example, while the SDGs are founded on the values of equity, inclusion, and global solidarity, the advent of AI could further exacerbate existing patterns of health inequities if the benefits of AI primarily support populations in high-income countries, or privilege the wealthiest within countries (Murphy et al., 2021).

The underlying aim of AI for well-being is to support “inclusive flourishing” so that as many as possible can experience autonomy, competence, and relatedness, and are enabled to grow and pursue their purpose or goals in life (Calvo et al., 2020). While much of this has been discussed in terms of AI as a force for change, it will also become an increasingly common interactional experience for individuals. It is crucial to begin to understand the human-AI interaction itself, which is a poorly explored but potentially impactful experience that will become increasingly persistent in our work and lives.

2.2.4. Understand user impacts of augmented decision making

Decision making is of particular interest to human well-being. With the presence of risk and the possibility of loss, even the most rational decision making process has emotional components (Croskerry et al., 2013). A decision making process can result in satisfaction or result in decisional stress, decisional conflict, lack of closure, or regret (Becerra-Perez et al., 2016; Joseph-Williams et al., 2011). Adding the uncertainty and complexities of AI and AI explanations may compound these decisional stresses in ways that are not yet understood.

In domains such as law, healthcare, defense, finance, and admissions, there are longstanding processes to support trustworthy decisions and support the well-being of decision makers by providing an explainable and defensible framework for their outputs. AI can potentially disrupt these processes, offering irresistible capabilities coupled with non-intuitive methods that can fail disastrously and indefensibly. Without effective design strategies, these realities will leave users with the stress of being responsible for AI without having full understanding or control (see also Section 3.2.1 Accountability and liability – moral and legal).

The question of responsibility highlights the important issue of task and risk-shifting that can occur with AI in decision making or other tasks. Ultimately, people are responsible for actions performed by AI or decisions made with AI support. However, the reasons that AI delivers a particular result are difficult for users to explain or verify on a variety of levels (Wing, 2021). Explainability methods can be useful but are also complicated (T. Miller, 2019) and potentially fallible themselves, making them incomplete or inappropriate solutions in some cases (Rudin & Radin, 2019). Also, the challenge of verification is ongoing, as outputs can change given the dynamic “learning” of these tools.

In some cases, it may fall to the user to prevent mistakes made by AI, but they may neither desire this nor have the capabilities or time to do so. In one anecdote (Szalavitz et al., 2021), a patient was unable to receive adequate pain treatment due to an AI algorithm which suggested that the patient had a high risk of overdose. With little ability to evaluate the AI and a desire to prevent overdose, the physicians generally accepted its recommendation. It was left to the patient to confront the AI company, ultimately discovering that the algorithm had been skewed by prescriptions made for the patient’s dog. In this case, both the risk of AI harm and the work of remediating it were shifted to the patient, paradoxically the person with the least agency to do so. These issues in decision support are possible with loan approvals, school applications, or any similar situation where black-box AI-generated information impacts human benefits.

Automated technologies present similar challenges. From self-driving cars to self-regulating factories, power-grids, financial processes, and robots, there is a need to consider task and risk-shifting such that when it occurs it is intentional and acceptable to the involved stakeholders. Furthermore, tools must provide appropriate feedback and controls so that if users are assigned to bear the risks that AI contributes, they can do so comfortably and capably (Cheatham et al., 2019). In short, AI interfaces should result in both peace and power, and it is not simply algorithm design, but ultimately excellence in interface designs and collaborative decisions about risk management that bear this responsibility. Table 1 proposes six principles and questions toward HCAI design for human well-being.

Table 1. Six principles that promote well-being in HCAI

2.2.5. Case study: Ethical use of AI in social media

The ethical use of AI in social media is an important component of promoting human well-being. The SDT concept of relatedness implies social connectivity, or the sense of belonging to a community as a source of happiness. Relatedness can be promoted or distorted by user engagement with social media platforms that rely on automated, algorithmic curation of information, resulting in constructive or destructive effects on human well-being.

From life-saving health information to democratic decision making, access to accurate and truthful information is essential for human and societal well-being. With social media, AI has become an invisible but ubiquitous mediator of our social fabric, determining what information reaches whom and for what purpose, raising important questions about its effects on the well-being of our society. Currently, most online users consume news through social networks (Shearer & Mitchell, 2021) where their news feed is under the control of the social network platforms. Yet, social media platforms acknowledge only a limited role in moderating and curating the information in their systems. This, along with a new ability to publish content easily and cheaply, has created an explosion of information coupled with a vacuum of oversight wherein misinformation and disinformation have flourished.

The AI mediation of this information has resulted in a distorted and manipulated information environment with varied impacts ranging from misinformed users to the extreme polarization and radicalization of users. These impacts can result from an admittedly blurry spectrum ranging from misinformation (where false or out-of-context information is presented as fact whether or not the intention to deceive is explicit) to disinformation (with an explicit intent to deceive).

The design objective of social networks themselves centers on the following four activities: View, Like, Comment, and Subscribe/Follow. The success of the platform is determined by the count of these activities, and the AI algorithm, known as the social recommender system (Guy, Citation2015), curates the content provided to the individual user to maximize these activities. The recommender system is essential to the success of the social media website, which raises questions regarding the privacy of monitored individuals and other ethical challenges (Milano et al., Citation2020). The appropriate use of AI for mediating or “recommending” news also raises the question of how much power the design of such platforms and their AI algorithms have over the “individual-level visibility of news and political content on social media” (Thorson et al., Citation2021). Perhaps the most notable study is the Facebook emotional contagion study (Kramer et al., Citation2014), in which Facebook altered the news feeds of individual users to manipulate their emotional state. Using only photographs as input data (Chen et al., Citation2017), social network platforms were able to reliably identify the user’s mental state. Young people are particularly vulnerable to social media effects. For instance, researchers reported increased consumption of alcohol and rising levels of stress (Oliva et al., Citation2018), depression, and loneliness (Park et al., Citation2015) among young people using Facebook.

Perhaps the most serious ethical breaches involve the use of social media for social control (Engelmann et al., Citation2019) and disinformation campaigns (Lazer et al., Citation2018). The most visible example of a social control campaign is the “social credit system” implemented by the Chinese government, in which the “state surveillance infrastructure” (Liang et al., Citation2018) punishes failures to conform to the choices of the regime (Creemers, Citation2018). The system is heavily dependent on AI, which monitors public events through video cameras installed around the country, using facial recognition systems to identify individuals. While some governments champion such systems as a method to fight crime, the use of social control is not without controversy, since it raises serious “legal and ethical challenges relating to privacy” (Završnik, Citation2017). Disinformation campaigns are viewed as sinister state actions since they block independent information and muddle the facts in order to create a narrative interpretation of events that only benefits the disinformation producer. The utilization of AI bots along with trolls to create an alternative stream of internet news keeps the mis/disinformation actor visible. Such strategies, known as “flooding the zone” (Ramsay & Robertshaw, Citation2019) or just “flooding” (Roberts, Citation2018), are heavily used by nation states (Pomerantsev, Citation2019). Bots along with trolls are also used by state actors to “start arguments, upset people, and sow confusion among users” (Iyengar & Massey, Citation2019) in countries that the state actors consider their adversaries (Bessi & Ferrara, Citation2016).

In terms of the collective impacts of social media, there is a need to guarantee individuals fair access to knowledge regarding the source of information and the reason they are being exposed to this information. Towards this aim, the concept of Communication Platform Neutrality (CPN) is proposed to promote well-being for users of social media. CPN is a set of principles and a research agenda focusing on how to achieve fair, equitable, and unbiased information creation, communication, and consumption for the benefit of society. Many researchers have argued that social platforms are inherently non-neutral (Berghel, Citation2017; Chander & Krishnamurthy, Citation2018). In proposing CPN, platform neutrality is adopted with a focus on principles that tackle the issue of information consumption by individuals. This work contextualizes principles that guarantee individuals fair access to knowledge regarding the source of information and the reason they are being exposed to this information. These principles seek to counterbalance the overarching control that companies like Meta and Twitter currently have over information consumption by individuals, by giving control to the user. Table 2 details the principles of Communication Platform Neutrality along with its foremost research questions.

Table 2. Principles and research questions for communication platform neutrality.

2.3. Summary of emerging requirements

At the heart of this first challenge, expanding into the other 5 challenges, is a question of HCI evolution: How might we apply current knowledge and expand future knowledge such that we can systematically study, design, evaluate, and improve AI-enhanced technologies with primary attention to their human benefits and harms? To achieve HCAI (Shneiderman, Citation2022), efforts must go beyond optimizing algorithms to designing interactions that satisfy both technical and humanistic concerns (Cai, Reif, et al., Citation2019).

2.3.1. Redefining “usability”

Developing the capabilities for avoiding harms and enhancing experiences with AI requires a range of technical efforts. At the individual level, the scientific understanding of “usability” must expand significantly to address the unique features and impacts of AI and the special issues of undue influence, dynamic reliability, and errors of automation. In the face of tools that can appear intelligent, there is an urgent need for human controls, feedback, and affordances that allow users to calibrate their trust in AI outputs and exert control over its actions. AI also exhibits adaptive or learning behaviors which, while incredibly useful, also mean that its performance can change over time and that it can react unpredictably to new inputs or stimuli. Designers must provide users with the ability to manage this with increasing capability, confidence, and comfort.

2.3.2. Human-centered evaluation and remediation

Perhaps the most urgent technical requirement involves evaluation and remediation. Given the potential harms along with the relative “newness” of these tools, there must be an ability to evaluate them from a human-centered standpoint, to consider the impacts on individuals as well as the collective, both short- and long-term, and to be able to correct them. The cost and difficulty of redesigning errant AI must be reduced significantly, and the cycles of improvement shortened. Naturally, the keystone of all these efforts is the ability to elevate their importance to designers, engineers, consumers, and regulators such that HCAI is both in high demand and insisted upon by all stakeholders. All stakeholders should be able to, at some basic level, evaluate and challenge the appropriateness of the AI tools they experience. Along with that must be a well described pathway towards satisfying this demand via human-centered, research-based design principles, processes, and evaluations.

2.3.3. Disambiguating accountability

While industries typically have existing standards and regulations that must be adhered to, these will require refinement to address the unique features and impacts of AI. As discussed earlier with regard to risk shifting, there can be ambiguity about which person or group is accountable, along with a lack of tools to successfully bear that accountability. It is crucial that designers explicitly consider these accountabilities, and that purchasers and users learn to expect clarity and fairness in terms of risks and responsibilities (see Section 6).

There is a need for researchers, designers, regulators, purchasers, business leaders, and users alike to develop a shared, clearly articulated vision of what “responsible” AI (see 3. Responsible Design of AI) is along with standards for security, privacy, interaction design, governance, all of which might be cohesively held within an overarching framework.

3. Responsible design of AI (challenge 2)

3.1. Definitions and rationale

Responsible design of AI is an umbrella term for various efforts to investigate legal, ethical and moral standpoints when using AI applications. Responsible design of AI entails the systematic adoption of several AI principles (Barredo Arrieta et al., Citation2020), and the term is also often used to describe a governance framework documenting how a specific organization is addressing potential negative externalities around AI. In this case responsible AI is seen as a practice of designing, developing, and deploying AI with good intention, allowing companies to engender trust among employees, businesses, customers and society. The goal of a responsible AI framework can be to establish a governance structure to ensure a responsible use of AI technology but also to run an expanded marketing strategy to positively influence the corporate image, or a mixture of both.

Efforts in the area of responsible design of AI are increasing, as processes become more and more automated. The introduction of advanced ML methods leads to a moral wiggle room when it comes to questions concerning accountability and pivotality. Actions become increasingly unattributable to a single entity or person. In other words, the ultimate responsibilities for actions of AI implementations become increasingly opaque with the growing use of advanced ML methods. Due to this development, not only the technical responsibility but also the interaction in the legal and ethical context must be considered in order to ensure a responsible use of the technology. The technology must therefore be considered not only in terms of its efficiency but also in the context of its usage. With the introduction of advanced ML methods, it becomes increasingly important to understand how a decision was made and who is responsible for it. This broadening of the evaluation spectrum represents a new approach to the development and use of ML methods, prompting the need for a more strategic view on legal, ethical and behavioral topics defined and developed within a responsible AI design framework.

3.1.1. Subcomponents of responsible design of AI

Responsible design of AI has coalesced around a set of different concepts covered by the term. Core concepts for responsible design of AI are summarized graphically in the accompanying figure. While the concepts may go by different names, the key principles are the same. The guidelines behind responsible design of AI establish that explainability, fairness, accountability and privacy should be considered when using AI models. Trustworthiness, data protection, reliability, security, and human-centeredness are other terms that are frequently mentioned when it comes to responsible design of AI (Barredo Arrieta et al., Citation2020).

3.1.2. Summary of AI initiatives and standards

A growing number of non-profit organizations focused on governance (Responsible Artificial Intelligence Institute, Citation2022), technology companies developing and promoting tools and processes for responsible design of AI (Google AI, Citation2022; Microsoft, Citation2022), individual countries setting fiscal and research goals (Dumon et al., Citation2021; Roberts, Citation2018; Stanton & Jensen, Citation2021), as well as larger regions (Martin, Citation2021), and global organizations (Organisation for Economic Co-operation & Development, Citation2021; United Nations Educational Scientific and Cultural Organization (UNESCO), Citation2021) have recommended procedures for ensuring responsible design of AI. The most common principle recommended by these organizations is ensuring AI explainability, such that stakeholders including citizens, regulators, domain experts, or developers are able to understand AI predictions (Arya et al., Citation2020). Fairness, including accounting for potential bias (Mehrabi et al., Citation2021) and ensuring privacy through appropriate cybersecurity safeguards as prerequisites are also frequently recommended. Finally, ensuring that a thorough ethical analysis is performed throughout the AI development life cycle is also commonly recommended by these groups. The HCAI community has an opportunity to lead in the standardization of these recommendations and processes by coordinating across non-profit organizations, technology companies, countries, regions, and global organizations to ensure AI is responsibly developed for the world.

3.2. Main research issues and state of the art

3.2.1. Accountability and liability – moral and legal

During the last decade, a discussion concerning the possible accountability and liability of AI driven autonomous systems has gained momentum in the realms of the legal and philosophical literature. Legal accounts on this topic naturally concentrate on questions of possible liability gaps and how to ensure the compensation of victims following AI offenses. Scholars of philosophy and psychology meanwhile point out that responsibility and retribution gaps are likely to open up, if AI errs and causes harm, even if a human is found liable for an offense and victims are compensated.

The question of assigning legal liability in the event of a failure of AI is complex. The legal system distinguishes between private and criminal law. In private law, entities other than natural persons can be considered an actor and found liable. Therefore, private law can integrate the liability of AI more easily than criminal law (Gless et al., Citation2016). In recent years, a broad discussion has developed on whether AI can even be granted the legal status of a person under certain circumstances. This would equip AI also with rights, such as freedom of speech and freedom of religion, which might already be possible under current U.S. law (Bayern, Citation2016). In criminal law the liability of nonhuman agents is internationally contested (Gless et al., Citation2016). U.S. law allows for the prosecution of nonhuman agents such as corporations (Wellner, Citation2005). Cases in which an AI’s involvement in an offense may be considered under U.S. criminal law (Hallevy, Citation2010) include (1) an AI as an innocent agent of a perpetrator, (2) an AI committing an offense because a negligent human failed to act on the foreseeable consequences of its use, and (3) an AI itself being liable. However, whether it will ever really be possible to ascribe the criminal intent that is a prerequisite for criminal liability to an AI system is still contested (Osmani, Citation2020). Until now, robots are, despite certain degrees of freedom in their attributes (e.g., in communication, knowledge and creativity), frequently recognized as a product at law and are thus considered under product liability (Bertolini, Citation2013; Hubbard, Citation2014). Practical law lacks precedents of cases that involve highly sophisticated AI systems, and as such, many of these disputes have stayed theoretical for now. 
Corporations that create sophisticated AI systems are normally keen to settle cases stemming from accidents involving those systems (e.g., autonomous vehicles or advanced driver assistance systems) outside the courtroom (Wigger, Citation2020).

Ethicists and psychologists focus their debate concerning the consequences of AI offenses on the possibility of responsibility and retribution gaps that might follow, or even exceed, liability gaps resulting from the delegation of decision making authority to AI systems. From a psychological perspective, when confronted with perceived injustice, people tend to want to identify the perpetrator of the harm they suffered and punish his or her wrongdoing (Carlsmith & Darley, Citation2008). The question arises whether a proclaimed liability and a compensation of some sort may satisfy the retributive needs of victims if the entity they experience as the perpetrator is an AI system. The human victim will most likely not accept the AI system as a suitable recipient of retributive blame or legal punishment due to an AI-system’s lack of self-awareness and moral consciousness. However, it is questionable whether the punishment of an entity responsible for the creation of the machine can compensate victims for the lack of direct retributive punishment, especially if the responsibility for the actions of the AI system on the part of its creators is diffused. One important factor in the diffusion of responsibility with respect to AI systems is the self-learning nature of modern systems and the creation of “black boxes.” In addition, technical goods are produced in the context of highly diversified transnational supply chains, which complicates the assignment of responsibility. From an ethical and psychological perspective, this creates a problematic disconnect between modern reality and the human urge to retaliate (Danaher, Citation2016).

3.2.2. Explainable AI

As AI increasingly shapes our view of the world, it influences individuals and social groups in their introspection and perception of others by: (1) providing information for human-human interactions, which is processed, aggregated and evaluated; and (2) interacting directly in human-machine interactions. Thus, explainable AI has – for good reason – become a prominent keyword in technology ethics (Mittelstadt et al., Citation2016; Wachter et al., Citation2017). Two main drivers of the opacity of AI-generated results are the so-called “black box” of the self-learning algorithm and the influence of human subjectivity on the design process. HCAI will have to be designed in a way that takes all human factors of its different stakeholders into account. For instance, a socio-technical approach in which the technical AI development and the understanding of subconscious and implicit human factors evolve together has been proposed to incorporate the “who” in a system’s design (Ehsan & Riedl, Citation2020). The authors concentrate on “who” the user of an AI is and the social factors which surround the AI once it is deployed. An explainable HCAI will also have to incorporate “who” influenced the system prior to its deployment, since an AI is already inherently value-laden in its design phase (Mittelstadt et al., Citation2016). Thus, as with any processed data, the information provided by an AI has to be interpretable in later life cycle stages through the lens of the influences it was exposed to in earlier stages.

Human-AI interactions will yield results that exceed human performance, and thereby reach the expected added value, if AI is well calibrated to balance human trust and attentiveness in the interaction. HCAI will have to be designed so that users do not blindly trust the machine and carelessly ignore important information on the system’s performance and on how the output is possibly biased or distorted by statistical variance. Humans must be enabled to critically question an AI’s output. On the other hand, human nature also requires that certain information not be disclosed openly. Behavioral sciences document the tendency to ignore information if this helps to preserve a certain self-image (Grossman & van der Weele, Citation2017). In many cases the possibility of strategic ignorance might be just as important for the well-being of the user. Hence, for HCAI, transparency cannot be promoted without any limitations, since complete openness and explainability may repel people in some applications.

A distinction should be made between the transparency and explainability needs of end users and model developers. For example, while model developers are interested in in-depth explanations of the underlying model attributes, end users are interested in more general explanations. Satisfying the needs of both user groups in a single system is a major challenge, and most interfaces are tailored either to model developers (Gunning & Aha, Citation2019) or to end users (Mitchell et al., Citation2019). The development of systems that serve both groups is still in its infancy.

Without doubt, a high human need for explainability in AI applications based on interpretable transparency arises in the event of a failure of the system, as well as for AI applications that make ethically relevant decisions, as in the event of ethical dilemmas (e.g., a distribution of scarce resources) between different human parties. Here, affected people will want to be able to convince themselves that certain mistakes are not repeated in the former case. In the latter case, they will want to ensure that distribution outcomes are based on decision making mechanisms that are judged to be fair, legal and ethical in society. The importance of explainability in cases of ethically relevant decisions through AI becomes especially clear in light of possible responsibility gaps (see section 3.2.1 Accountability and liability – moral and legal). If AI systems fail and the need for explainability is left unanswered this may not just diminish trust in AI systems, but also in the institutions behind the AI (López-González, Citation2021). In other words, people’s societal trust may be challenged. To counteract such developments, institutions should be held as accountable for the actions of algorithms they deploy, or use, as they would be for the same events, if caused manually. This will set incentives to strive for explainability already in the development process of HCAI, which corresponds to the core concept of such applications.

3.2.3. Fairness

Fairness is defined as “the quality of treating people equally or in a way that is right or reasonable” (Cambridge University Press, Citation2022). In the legal domain, fairness is defined in two main areas: (1) disparate treatment (Zimmer, Citation1995) is a direct discrimination that happens when individuals are intentionally treated differently; and (2) disparate impact (Rutherglen, Citation1987) is an indirect discrimination that happens when individuals are unintentionally treated differently under a neutral policy. Researchers categorize biases that create unfair AI deployment cases into three categories: data, model, and evaluation biases. Data biases comprise biases in the dataset due to unrepresentative data collection, defective sampling, and/or wrong data cleaning. The most common examples of data bias are historical bias, selection bias, representation bias, measurement bias, and self-report bias. Model biases, on the other hand, are observed when an AI algorithm does not neutrally extract or transform the data, regardless of the biases in the dataset. Model biases have several sources (Danks & London, Citation2017): AI algorithms might be inherently biased towards a differential use of information to maximize accuracy rather than focusing on morally relevant and sensitive judgments, which leads to the use of a statistically biased estimator; the use of transfer learning techniques in which AI is trained on a specific context but employed outside of its context without considering the new context’s feature space; and aggregation bias – wrongly assuming that the trends seen in aggregated data also apply to individual data points, algorithmic bias – wrongly extracting or transforming the data, and group attribution bias – assuming that a person’s traits always follow the ideologies of a group (Ho & Beyan, Citation2020). 
Further biases are the hot-hand fallacy – the tendency to believe that something that has worked in the past is more likely to be successful again in the future even if there is no correlation, and the bandwagon bias – the tendency for people to adopt certain behaviors, styles, or attitudes simply because others are doing so. Lastly, evaluation biases can also arise when a thorough evaluation is not carried out or an inappropriate performance metric is chosen for the evaluation. The most common examples are rescue bias – selectively finding faults and discounting data, deployment bias – a system being interpreted improperly during deployment, and Simpson’s paradox – a biased analysis of heterogeneous data that assumes associations or characteristics observed in underlying subgroups are similar from one subgroup to another.
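Simpson’s paradox is easiest to see with numbers. The following minimal Python sketch uses hypothetical counts (the system names, subgroup names, and figures are invented for illustration and do not come from any cited study) to show how an evaluation that only looks at aggregated data can reverse the conclusion supported by every subgroup.

```python
# Hypothetical counts: (favorable outcomes, total cases) per system and subgroup.
# All names and numbers are illustrative assumptions, not data from the literature.
outcomes = {
    "system_X": {"subgroup_1": (90, 100), "subgroup_2": (300, 1000)},
    "system_Y": {"subgroup_1": (800, 1000), "subgroup_2": (20, 100)},
}


def subgroup_rates(table):
    """Favorable-outcome rate of each system within each subgroup."""
    return {
        system: {sg: fav / total for sg, (fav, total) in subgroups.items()}
        for system, subgroups in table.items()
    }


def aggregate_rates(table):
    """Favorable-outcome rate of each system after pooling all subgroups."""
    pooled = {}
    for system, subgroups in table.items():
        favorable = sum(fav for fav, _ in subgroups.values())
        total = sum(tot for _, tot in subgroups.values())
        pooled[system] = favorable / total
    return pooled


by_subgroup = subgroup_rates(outcomes)
overall = aggregate_rates(outcomes)

for system in outcomes:
    print(system, by_subgroup[system], "pooled:", round(overall[system], 3))
```

In this toy table system_X has the higher rate in both subgroups, yet system_Y has the higher pooled rate, because the two systems were applied to very different mixes of subgroups. An evaluation that reports only the pooled figure would therefore rank the systems in the opposite order from a stratified one.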

The most common measures of algorithmic fairness in AI tasks are disparate impact, demographic parity, and equalized odds. Among these, disparate impact and demographic parity aim to quantify the legal notion of disparate impact by comparing the rates of positive predictions for different groups (Calders & Verwer, Citation2010; Feldman et al., Citation2015). Equalized odds, on the other hand, is proposed to quantify differences between predictions for different groups by considering both the false-positive rates and the true-positive rates of the two groups (Hardt et al., Citation2016).
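As an illustration of how these measures are typically computed for a binary classifier, the sketch below derives them from per-group rates. It is a minimal example under stated assumptions: the function names, the toy labels, and the choice of group "a" as the reference group are all hypothetical, and the formulas follow the common textbook formalization (selection-rate ratio for disparate impact, selection-rate difference for demographic parity, true- and false-positive-rate gaps for equalized odds) rather than any specific implementation from the cited works.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true-positive rate and false-positive rate.

    Assumes binary 0/1 labels and that every group contains at least one
    positive and one negative example (otherwise a division by zero occurs).
    """
    counts = defaultdict(lambda: {"n": 0, "sel": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for yt, yp, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["sel"] += yp
        if yt == 1:
            c["pos"] += 1
            c["tp"] += yp
        else:
            c["neg"] += 1
            c["fp"] += yp
    return {
        g: {
            "selection_rate": c["sel"] / c["n"],
            "tpr": c["tp"] / c["pos"],
            "fpr": c["fp"] / c["neg"],
        }
        for g, c in counts.items()
    }


def fairness_report(y_true, y_pred, groups, protected, reference):
    """Compare a protected group against a reference group."""
    rates = group_rates(y_true, y_pred, groups)
    p, r = rates[protected], rates[reference]
    return {
        "disparate_impact_ratio": p["selection_rate"] / r["selection_rate"],
        "demographic_parity_difference": p["selection_rate"] - r["selection_rate"],
        "equalized_odds_tpr_gap": abs(p["tpr"] - r["tpr"]),
        "equalized_odds_fpr_gap": abs(p["fpr"] - r["fpr"]),
    }


# Toy data (hypothetical): eight individuals in each of two groups.
groups = ["a"] * 8 + ["b"] * 8
y_true = [1, 1, 1, 1, 0, 0, 0, 0] * 2
y_pred = [1, 1, 1, 0, 1, 0, 0, 0] + [1, 1, 0, 0, 0, 0, 0, 0]

report = fairness_report(y_true, y_pred, groups, protected="b", reference="a")
print(report)
```

On this toy data group "b" is selected half as often as group "a", so the disparate impact ratio is 0.5, and both equalized-odds gaps are 0.25. A ratio of 1 and gaps of 0 would indicate parity under these particular measures.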

Since unfairness in the deployment of AI algorithms may stem from a bias in the data preparation, modelling, and/or evaluation stages, different pre-processing, in-processing, and post-processing strategies have been proposed to enhance fairness at each stage, respectively. In pre-processing fairness-enhancing strategies, data is manipulated before training the AI algorithm to make it fairer. These preliminary manipulations can be done either by completely changing (Kamiran & Calders, Citation2012) or partially reweighing (Luong et al., Citation2011) the labels of training data which are closer to the decision boundaries to reduce the discrimination, or by modifying the feature representations rather than the labels (Calmon et al., Citation2017). Recently, more sophisticated techniques such as generative adversarial networks have been used to produce synthetic data that augments the original dataset to improve demographic parity (Rajabi & Garibay, Citation2021). In in-processing fairness-enhancing strategies, fairness is enforced during training; thus, these strategies require modifying the architecture or objective of the AI algorithm itself. In this context, one of the most intuitive strategies is to add a regularization term to the objective function that penalizes the mutual information between sensitive variables and estimates (Kamishima et al., Citation2012). Similarly, numerous studies have integrated fairness measures as constraints in the optimization functions of logistic regression models (Zemel et al., Citation2013), variational autoencoder models (Louizos et al., Citation2015), and kernel density estimation models (Cho et al., Citation2020), or have introduced a stability-focused regularization term in different AI algorithms to tackle the fairness-accuracy trade-off (Huang et al., Citation2019). 
Another recent study demonstrated that quantum-like techniques show promise for preventing unfair decision making by improving the accuracy of extant AI algorithms, especially under uncertainty (Mutlu & Garibay, Citation2021). In post-processing fairness-enhancing strategies, modifications are made after running the AI algorithm by assigning different thresholds to change the decision boundaries of algorithms (Corbett-Davies et al., Citation2017).
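The post-processing idea can be sketched in a few lines of Python. The example below is a simplified illustration, not the procedure of any cited study: the scores, group labels, and threshold values are hypothetical and hand-picked, and the helper name `selection_rates` is an assumption made for this sketch. It shows how replacing a single decision threshold with group-specific thresholds changes each group’s selection rate without retraining the model.

```python
def selection_rates(scores, groups, thresholds):
    """Fraction of each group whose score meets that group's threshold.

    `thresholds` maps each group label to its decision threshold; passing the
    same value for every group reproduces an ordinary single-threshold rule.
    """
    selected, totals = {}, {}
    for score, group in zip(scores, groups):
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(score >= thresholds[group])
    return {g: selected[g] / totals[g] for g in totals}


# Hypothetical model scores for four individuals in each of two groups.
scores = [0.9, 0.8, 0.6, 0.4, 0.7, 0.5, 0.45, 0.2]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

# One shared threshold: the groups end up with different selection rates.
single = selection_rates(scores, groups, {"a": 0.55, "b": 0.55})

# Group-specific thresholds (hand-picked here) equalize the selection rates.
adjusted = selection_rates(scores, groups, {"a": 0.55, "b": 0.42})

print("single threshold:  ", single)
print("adjusted thresholds:", adjusted)
```

With the shared threshold, group "a" is selected at a rate of 0.75 and group "b" at 0.25; lowering the threshold for group "b" brings both to 0.75. In practice the thresholds would be chosen by an optimization procedure against a stated fairness criterion and accuracy cost rather than by hand, and whether group-specific thresholds are legally or ethically acceptable depends on the application.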

3.2.4. Ethical AI

To discuss artificial morality with a focus on responsible design of AI, there is a need to differentiate between two extremes of human-centeredness: the AI in an autonomous system (i.e., by definition an AI acting without direct human control) and recommender systems, which keep the human in the loop by asking the human to make a final decision or action (see Section 7.2.2. Human-AI system interactions at work). As discussed earlier (see Section 3.2.1. Accountability and liability – moral and legal), the deployment of HCAI should encompass the attribution of responsibility to human stakeholders of the AI, since an AI system does not offer any opportunity for meaningful retributive actions. While it may seem most concerning from an ethical, as well as psychological, view that under certain legal models an AI may itself be considered liable (Hallevy, Citation2010), even ascribing responsibility to human users of recommender systems may prove challenging, if not legally so, then at least in a moral sense. First, users who act upon the advice of a recommender system might themselves feel less responsible for the outcome of their actions. Individual human deciders can diffuse responsibility in a decision process with other people. This psychological response to a collaborative decision may prove to be even stronger when people rely on recommender systems than when they rely on human advisors. Human decision makers seem to adhere more to algorithmic advice (Logg et al., Citation2019) and are reluctant to acknowledge how strongly the machine’s advice influences them (Krügel et al., Citation2022). Secondly, society might encounter barriers to viewing human deciders who follow the suggestion of a recommender system as being as responsible as a non-advised or merely human-advised decider (Braun et al., Citation2021; Nissenbaum, Citation1996).

The toughest challenge to responsibility ascription is, however, the deployment of autonomous systems. For instance, while some industries have specific regulations for safety critical devices (e.g., medical and pharmaceutical industry) the technology itself is not yet regulated, opening a moral wiggle room when it comes to responsibility attributions. Here, the “problem of many hands” becomes especially evident, when the acting entity is an AI, in which the prerequisites for its ethically relevant decisions are embedded a priori to the application by designers, engineers and adopters along its manufacturing process. Research has shown that when it comes to shared decision making, the type of partner with whom the decision is made affects the perceived responsibility for the decision, the perception of the choice, and the choice itself (Kirchkamp & Strobel, Citation2019). Therefore, it is advisable for HCAI to ensure that the responsibility for ethical decisions is clearly attributed to the production chain of the AI. While ML-based applications are not yet regulated, a first step is to clearly assign responsibilities and liabilities for the technology.

For the actual programming of moral capacities into AI systems, two approaches are mainly considered: a top-down and a bottom-up approach (Wallach & Allen, Citation2008). Bottom-up approaches, on the one hand, are based on the self-learning capacities of the AI, but may be strongly contested because of their unpredictability (Misselhorn, Citation2018) and the challenge to trace a morally relevant decision back to a human decision maker or programmer. A top-down approach, on the other hand, formulates moral principles, which are then implemented into the system. Discussions of which moral principles may be of relevance revolve mostly around the principles of deontological and utilitarian ethics, as well as Asimov’s laws of robotics (Misselhorn, Citation2018). A special interest lies in the decisions of autonomous systems in moral dilemmas. These have been discussed in the realm of autonomous vehicles during past years (Awad et al., Citation2020). Several scholars have argued that these kinds of dilemmas, in which an autonomous vehicle must decide whom to save and whom to harm in accident situations may not be technically applicable or at the forefront of autonomous vehicle ethics (De Freitas et al., Citation2020; Himmelreich, Citation2018). The COVID-19 pandemic has sadly shown that such life-and-death decisions might not be restricted to accident situations in civilian life (Bodenschatz et al., Citation2021; Truog et al., Citation2020); they may also arise for medical AI systems at some point, if these are to distribute scarce resources (Hao, Citation2020).

3.3. Summary of emerging requirements

3.3.1. Policy requirements

Implementing responsible design of AI is a growing concern of great societal importance, and scholars from different disciplines are trying to identify what it entails and how it may serve to elevate human trust in AI systems and people’s ability to reap the benefits of AI without feeling absolved of their own responsibility. Responsible AI itself is an umbrella term that encompasses many concepts; its boundaries are blurry, and concepts are often interchangeably labeled and used as semantic synonyms. It is therefore difficult to find a unique definition that covers the concepts of responsible design of AI in decision making. Establishing an appropriate definition of responsible design of AI and the concepts it encompasses, however, becomes increasingly important, as concepts must be defined before the negative impacts of AI applications can be mitigated. Therefore, a better-considered taxonomy for a responsible AI definition is one of the most significant milestones in advancing towards HCAI and in informing the development of policy guidelines.

Policymakers must also address the following issues, which are becoming increasingly important. Semantics is critical not only in defining a responsible AI framework; as applications become more autonomous, the wording used to advertise them must also make clear the extent to which the human user can delegate their own responsibility to the system. It is necessary to examine, for applications at different levels of automation, which terms lay people intuitively associate with what the system actually does. For example, there is an ongoing legal discussion about whether Tesla’s “Autopilot” may be labelled this way, given the expectations of the technical system that this word evokes, or whether this designation should be reserved for higher levels of automation (Taylor, 2020, Jul 14).

In addition, urgent policy implications can be derived from a discussion of the concept of ethicality within the responsible design of AI framework. Policymakers need to address societal concerns about moral dilemmas that AI systems may have to resolve in the near future. Especially in democracies, societal unrest may follow if possible life-and-death decisions are not addressed by elected representatives but seemingly left to individuals and corporations. Although responses to simplified dilemma situations and behavioral experiments should not be the basis for legal or ethical regulations, they may serve to gain insights into common intuitions on these matters (Awad et al., 2020; Bodenschatz et al., 2021; De Freitas & Cikara, 2021).

3.3.2. Technical requirements

Human nature makes it a challenge to encourage stakeholders along the AI life cycle to own their responsibility for their influence on the algorithms and for the decisions they make under the influence of an AI system. For this reason, it is of utmost importance that certain technical requirements are fulfilled. These include requirements about (a) the UX design of AI interfaces, (b) proper technical definitions of the fairness, explainability, and liability indicators, and (c) a holistic approach embedded into the system to make sure that explainability requirements are met.

More specifically, emphasis within a responsible design of AI framework should be on the interface for AI applications to meet the requirements of HCAI. The requirements need to revolve around physical and psychological human boundaries. Thus, they need to be defined by interdisciplinary consortia. The composition of the interface needs to interactively mitigate human biases and heuristics. A special emphasis should be on the mitigation of those biases that lead to a diffusion of responsibility with the autonomous system or between the human entities which the system connects.

Furthermore, especially for autonomous systems without human oversight, determining the appropriate measures and fairness indicators is of utmost importance. Since AI algorithms are trained to be fair with respect to a specific fairness measure, the choice of measure may affect the resulting disparity to a significant extent. By analogy with the trade-off between sensitivity and specificity in statistics, it is critical to determine whether one should aim to maximize the equal probability of benefit or to minimize the harm for the least advantaged populations. Additionally, understanding potential sources of unfairness is a key milestone in providing fair solutions in the deployment of AI; once this first challenge is overcome, an effective strategy can be employed to mitigate unfair AI estimates for different subpopulations and individuals. Lastly, because AI and ML algorithms often rely on large amounts of data, it is important to have fairness-aware datasets available to train these algorithms and benchmark datasets to test whether AI systems produce fair and equitable outputs (Quy et al., 2021). There are efforts in government and private institutions to create these types of datasets and make them available for training and testing AI systems that impact various aspects of life such as finance, justice, health care, education and society in general (Brennan, 2021, Oct 8).
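To illustrate why the choice of fairness measure matters, the following minimal Python sketch compares two commonly used group-fairness indicators on the same set of predictions. It is an illustration under stated assumptions, not a method proposed in this article: the two indicators (the gap in selection rates, often called demographic parity, and the gap in true positive rates, often called equal opportunity), the function names, and the toy data for the hypothetical groups A and B are all introduced here for illustration only.

```python
from typing import Sequence


def selection_rate(preds: Sequence[int]) -> float:
    """Share of individuals who receive the beneficial outcome (prediction 1)."""
    return sum(preds) / len(preds)


def true_positive_rate(labels: Sequence[int], preds: Sequence[int]) -> float:
    """Share of truly qualified individuals (label 1) who receive the beneficial outcome."""
    qualified = [p for y, p in zip(labels, preds) if y == 1]
    return sum(qualified) / len(qualified)


def demographic_parity_gap(preds_a: Sequence[int], preds_b: Sequence[int]) -> float:
    """Absolute difference in selection rates between two groups."""
    return abs(selection_rate(preds_a) - selection_rate(preds_b))


def equal_opportunity_gap(labels_a, preds_a, labels_b, preds_b) -> float:
    """Absolute difference in true positive rates between two groups."""
    return abs(true_positive_rate(labels_a, preds_a)
               - true_positive_rate(labels_b, preds_b))


# Hypothetical toy data (made up for illustration): 1 = qualified / selected.
group_a_labels = [1, 1, 1, 1, 0, 0, 0, 0]
group_a_preds = [1, 1, 0, 0, 1, 1, 0, 0]
group_b_labels = [1, 1, 0, 0, 0, 0, 0, 0]
group_b_preds = [1, 1, 1, 1, 0, 0, 0, 0]

dp_gap = demographic_parity_gap(group_a_preds, group_b_preds)
eo_gap = equal_opportunity_gap(group_a_labels, group_a_preds,
                               group_b_labels, group_b_preds)

print(f"Demographic parity gap: {dp_gap:.2f}")
print(f"Equal opportunity gap:  {eo_gap:.2f}")
```

In this toy example both groups are selected at the same rate, so the selection-rate indicator reports no disparity, while qualified members of group A are selected only half as often as qualified members of group B. The same system can therefore appear fair under one indicator and unfair under another, which is why the measure needs to be chosen deliberately.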

We acknowledge that humans are prone to bias and that algorithms designed by and using data collected by humans can preserve and exacerbate these biases. An important emergent requirement is to conduct research on how diverse teams of individuals can achieve designs that are as unbiased as possible or at least aware of the potential biases introduced.

Explainability must be ensured where users demand it. AI must be easily accessible and comprehensible. For this, integration of explanation mechanisms into the direct user experience is indispensable. The integration into the UX design of an AI application must be intuitive, not only in its operation but also in its wording. It must be possible for the human user to bypass this information in certain applications (see Section 3.2.2 Explainable AI). The biggest challenge for an explanatory capability in HCAI applications will be to calibrate the trade-off between harmless individual system adaptations and harmful interventions promoting human weaknesses (e.g., the human tendency to diffuse responsibility).

In summary, the requirements emerging from a responsible design of AI framework call for an interdisciplinary approach towards the technical design of HCAI. The systems of the future need to directly evolve from a framework that centers around considerations of human limitations. These limitations influence the way people make use of these systems. A framework for HCAI implementations also needs to take into consideration which social upheavals these systems’ deployment might entail if the human responsibility behind the AI is blurred. A taxonomy for a responsible design of AI definition needs to be established and semantics around HCAI need to be adjusted to speak to human intuitions concerning the right interpretation of a machine’s capabilities. Social concerns need to be addressed in legal and ethical regulations. Legislative regulations for external audits of AI applications need to move from vague to concrete and applicable requirements and need to address the use of advanced and adaptive systems. They also need to ensure that methodological safeguards for the appropriate measures and fairness indicators are always met.

4. Privacy (challenge 3)

4.1. Definitions and rationale

The crucial foundation of a functioning AI is the data on which it is based. While AI may have broad and varying definitions, the applications that are most exciting for their capabilities in automation, enhancing human knowledge, and supplementing human actors, are those which draw most deeply on robust and representative datasets. This holds for the broad spectrum of AI applications – those embodied and non-embodied; for the practical or impractical; for life-saving measures or video games. There are few aspects of human life to which AI cannot or will not be applied, fuelled by the “big data” boom of the last decade.

Data is an abstraction of the fundamental building blocks that make up the way we perceive the world: the colors we detect, shapes we recognize, distances we travel. Narrow AI systems are trained, tested, and produced to act upon various data inputs – to “speak” to a child, assess optimal solar panel direction, or make tea. While the data does of course exist outside of the world of AI, it must be captured in order to train, test and produce the AI. Types of data are theoretically innumerable, and while many may be largely independent of direct human interaction, such as the distance and travel of interplanetary bodies, the majority of AI applications are based around humans and their interactions. This is for multiple reasons: replacement and supplementation of labor; creation of new markets and products; and the sheer variety of human interaction. In any case where an AI is intended to interact with a human – be it physically, economically, or digitally – the AI must be trained on information regarding humans and human behavior. Human data comes, axiomatically, from humans.

HCAI presents the proposition of harnessing the potential power of AI in a way that benefits humanity, drawing on tools that “amplify human abilities, empowering people in remarkable ways,” calling for reliable, safe, and trustworthy design (Shneiderman, 2022). As evidenced by the other writings herewith, this is no simple task: ethical and sustainable collection, implementation, and use each present unique challenges and pitfalls. This is no less true for the data upon which AI depend: data about humans fundamentally affects both the humans about whom the data is collected and the humans of the system in which the AI will be deployed. This produces two major categories of impact: those relating to accuracy and robustness of data – bias and discrimination (see Section 3.2.3: Fairness); and those relating to data subjects – privacy.

Conceptions of privacy vary from individual to individual, as well as by technical and legal definition, and have been summarized as six conceptions: the right to be left alone; the right to limit access to the self, including the ability to shield oneself from unwanted access by others; the right to secrecy, including the concealment of certain matters from others; the right to control over personal information, including the ability to exercise control over the information about oneself; the right to personhood, including the protection of one’s personality, individuality, and dignity; and the right to intimacy, including the control over, and limited access to, one’s intimate relationships or aspects of life (Solove, 2002). Given the potentially broad characteristics of data for AI, each of these may be drawn upon: individuals or groups may not wish to have their data collected, nor others’ data used in relation to them. They may wish to conceal certain data, or to control its dissemination and audience. They may feel they are reduced to a set of data or a series of numbers by being incorporated into a larger system of data, or simply that they do not wish the multitudinous aspects of their life, including relationships, to be exposed to largely corporate entities to exploit. Most worryingly, individuals or groups may have a limited conception of what data is held about them, either siloed or in combination, and how that data might impact them. While all of these concerns are valid, for the purposes of HCAI, this discussion will focus on how data is used by AI, and how it may be used against individuals and groups. These hinge on: the consent to collection and use; understanding of the types and extent of impact by both the data subjects and the data holders; and how the data can be used, for what purpose, and for how long.

4.2. Main research issues and state of the art

4.2.1. Case study: social robots

How, then, could these concerns play out in practice? This of course depends on the application of the AI. While the harms of digital AI are as important as those of its embodied counterparts, their effects on privacy are best addressed in the context of their specific use and broader effects: for example, social media algorithms have been much discussed in the context of psychological harms relating to addiction and self-esteem (Błachnio et al., 2016) alongside such concerns as political radicalization (Van Raemdonck, 2019) and sex trafficking (Latonero, 2011). However, there is also considerable concern about the physicalization of AI – its placement with and among humans. Beyond merely tripping over the Roomba, social robots provide an interesting case study for privacy.

Social robots are those AI-enabled digital counterparts with whom humans interact. This naturally encompasses a broad range of applications. Humanity’s proclivity to delegate labor is second perhaps only to its desire for companionship: beyond even the domestication of animals, humans anthropomorphize and are affectionate towards inanimate objects (Tahiroglu & Taylor, 2019), and have been since long before robots – one would be hard-pressed, for example, to find an unnamed boat docked at a marina, even though each boat may also carry an identifier similar to a license plate. Social robots appeal to many, either for their intended function or for a fostered sense of affection.

Social robots are not, however, created equal. Depending on their intended purpose, social robots will have different methods of data gathering, processing, and storage, and even these can vary. For example, an AI lawn mower might have a complex machine-learning model to interpret a camera feed to precisely mow a new or previously mapped lawn. Conversely, it might require a set of electrical boundary fences to “bump” into in order to turn. While a lawn-mowing AI may raise few privacy concerns, particularly as there would be no reason to give it audio recording capabilities, robots in the home have this same variation but with far more potential information to gather.