Abstract

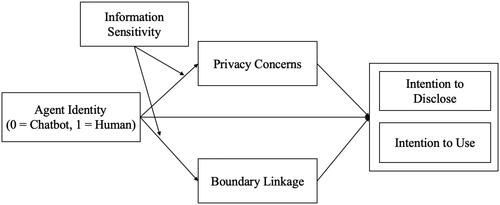

Chatbots have been widely adopted to support online customer service and supplement human agents. However, online data transmission may involve privacy issues and arouse users’ privacy concerns. In order to understand the privacy management mechanism when interacting with chatbots and human agents, we designed a cross-national comparative study and conducted online experiments in China and the United States based on Communication Privacy Management (CPM) theory. The results show that privacy concerns and boundary linkage played different mediation roles between agent identity and the intention to disclose as well as the intention to use the service. Information sensitivity had a significant moderating effect on the mechanism. Our research contributes to a better understanding of personal boundary management in the context of human-machine interaction.

1. Introduction

The rapid development of artificial intelligence (AI) technology has led to a broad application of AI customer service across the globe (Gartner, Citation2019). When it is used effectively, AI can improve the customer experience in many areas, including data collection, speech recognition, and message response time (Forbes, Citation2021). As one of the most popular AI applications, task-oriented chatbots, which are created for a specific task and are designed to have short conversations, have played an important role in increasing sales and enhancing the service quality of e-commerce, financial agencies, and healthcare. A market research report (Grand View Research, Citation2021) estimates that in 2021 the global chatbot market was worth USD 525.7 million. About 23% of customer service corporations used AI chatbots in 2022 (ServiceBell, Citation2022).

There are usually two options for users to start the conversation: chatbots or human agents (Majstorovic, Citation2023). Since chatbots cannot replace humans in terms of performing complicated and personalized tasks, human agents still co-exist with chatbots. Sectors that initiate customer service are able to collect, analyze and utilize personal information in a variety of ways, whether through human agents or automated services. As such, privacy in interactions with human agents or chatbots has become a prominent consumer concern. Users are concerned about the security of the chatbot platforms or the agent service system, for example in the face of hacking and cyberattacks. In addition, there is a lack of transparency in how chatbot data are collected, stored and accessed, leading people to be reluctant to utilize chatbots and to mistrust them (Akshay & Labdhi, Citation2023). Unintentional disclosure of sensitive information while talking to chatbots can also be a cause for concern to users. These concerns may affect the extent to which people open privacy boundaries. In line with the Computers are Social Actors (CASA) paradigm, people may treat chatbots as humans and interact with chatbots based on rules of interpersonal communication (Nass et al., Citation1994). Nevertheless, some studies have indicated that users’ privacy perceptions regarding human agents and chatbots may be different (e.g., Sundar et al., Citation2016; Tsai et al., Citation2021). Although prior work has reported competing results on how chatbots and humans are perceived, how users’ privacy management differs between countries and how it affects behavioral intentions have not yet been well illustrated. Hence, this paper focuses on exploring the differences in the privacy rules of users when facing different agents in two countries.

In addition to the discrepancies in users’ privacy management mechanisms, privacy-related perceptions may also be dependent on the degree of sensitivity of disclosed information (Ha et al., Citation2021; Mothersbaugh et al., Citation2012). When people are using virtual customer services, they are likely to be requested to share personal information that may be private and even sensitive. Such disclosure may be helpful for users to receive a better service from the companies (Viejo & Sánchez, Citation2014). However, it could raise privacy concerns. Past literature has explored the influence of information sensitivity on user participation or their refusal to disclose information due to privacy concerns in certain conditions (Bansal et al., Citation2010; Ha et al., Citation2021). Since information sensitivity is highly situational and subjective (Sheehan & Hoy, Citation2000), people’s discomfort with disclosing sensitive information may prevent them from using the chatbots. In this regard, this study also focuses on the specific effects of information sensitivity on privacy perceptions when people use online customer services.

Past studies suggested that people in different countries may value information privacy differently (Bellman et al., Citation2004; LaBrie et al., Citation2018; Lu et al., Citation2017; Milberg et al., Citation2000). Generally speaking, the decision to disclose or conceal privacy relies on the basic values regarding privacy management in a society, which are referred to as the socialization of pre-existing privacy rules (Petronio, Citation2002; Petronio & Child, Citation2020). In this study, we specifically focus on how users’ privacy management mechanisms in China and the US mediate the relationship between agent identity and behavioral intentions. These two nations were selected because they are the dominant powers in terms of developing and promoting AI applications in the world. Legislators and government organizations in China and the US have been working hard to support AI innovations with their laws and policies (Xing et al., Citation2023). The two nations are currently leading the world in research on and the commercialization of AI technology. According to Lee (Citation2018), the US and China will control almost 70% of the anticipated $15.7 trillion in wealth that AI will create globally by 2030. In addition, they represent typical eastern culture and western culture (Wang et al., Citation2020). Previous studies have demonstrated that people in western cultures are more likely to expose their private information than people in eastern cultures (Litt, Citation2013). Therefore, China and the US provide suitable contexts to examine how users’ privacy management may differ between two countries with different cultural backgrounds.

Moreover, the level of tolerance for the discomfort brought by an unknown future is reflected in the degree of uncertainty avoidance (Hofstede, Citation2001), which is a perspective of cultural differences among regions. Statistics show that China avoids uncertainty to a lesser extent than the US (Hofstede Insights, Citation2022). Due to the unknown privacy risks associated with interacting with agents, uncertainty avoidance might elicit distinct privacy management rules of users from the two countries.

The past literature sketched different vantage points about people’s privacy perceptions in regard to human agents and chatbots (e.g., Sundar et al., Citation2016; Tsai et al., Citation2021). As for users’ privacy management mechanisms, the level of information sensitivity may influence their feelings of privacy (Ha et al., Citation2021; Mothersbaugh et al., Citation2012). Meanwhile, past studies regarding privacy perceptions on chatbots and human agents were often conducted in a single region (e.g., Büchi et al., Citation2023; Song et al., Citation2022; Tsai et al., Citation2021), but little is known about cross-country conclusions. In order to fill these gaps, this study aims to explore the mechanism of self-disclosure and usage intention towards chatbots and human agents in online customer service based on Communication Privacy Management (CPM) theory (Petronio, Citation2002). In addition, three privacy rules (i.e., privacy concerns, boundary linkage and information sensitivity) will be examined to understand how people manage different privacy boundaries when they interact with chatbots or human agents. The research questions of this study are as follows: (1) How do two privacy management mechanisms (i.e., privacy concerns and boundary linkage) mediate the influence of service agent identity on usage intention and disclosure intention? (2) How do such mechanisms vary at different levels of information sensitivity? (3) What are the differences in the privacy rules mentioned above between Chinese users and American users?

2. Literature review

2.1. Communication privacy management theory

CPM theory is used to depict a series of privacy rules when people share personal information, which means that individuals regard themselves as the owners of their private information. They choose the co-owners of their information through privacy boundaries (Petronio, Citation2002). Although the theory was initially developed for understanding privacy management in interpersonal communication, it has been verified in the context of human-AI interaction such as in contact-tracing technology (Hong & Cho, Citation2023), smart speakers (Kang & Oh, Citation2023; Xu et al., Citation2022) and chatbots (Sannon et al., Citation2020). CPM theory provides a useful lens for observing people’s privacy management when interacting with machines. This is because individuals and the agent enter into a relationship of information co-ownership. People in this relationship probably have implicit privacy rules and standards for how the agent should manage their disclosures and information (Sannon et al., Citation2020). In addition, the different dynamics between interactions with chatbot agents and interactions with human agents exert varying degrees of perceptions of privacy and decisions to disclose private information, leading to the significance of creation and maintenance of these communication boundaries (Ha et al., Citation2021).

This theory provides three privacy rules for managing the boundary coordination process: ownership rules, permeability rules, and linkage rules (Child et al., Citation2009; Petronio, Citation2010). People can adopt different privacy rules to define different boundaries, which fall into two categories: personal privacy boundaries and collective privacy boundaries (Petronio, Citation2010). A personal privacy boundary implies that individuals have control over the level of disclosure. In other words, people can decide how much of their personal information is available to others across the boundaries. It is determined by the permeability rule (Petronio, Citation2002). When an individual is unwilling to share too much private information, his or her permeability is low and the privacy boundary is thick (Son & Kim, Citation2008). Conversely, when the permeability is high, individuals have a thin privacy boundary and are more willing to disclose private information. When people have greater privacy concerns, they are more likely to adopt the low-permeability privacy rule. Privacy concerns describe users’ worries about the undesirable usage of their private information by organizations (Osatuyi et al., Citation2018; Xu et al., Citation2011), and they have been viewed as an indicator of the formation of an individual boundary. Thus, the first privacy rule, namely, the permeability rule, is operationalized as privacy concerns because it determines the extent to which information is permeable to others (Osatuyi et al., Citation2018).

A collective privacy boundary emerges when private information has already been disclosed. Such a boundary is created jointly by the information owner and co-owners who are ensured the legitimacy of access (Petronio, Citation2002). It is subject to the second privacy rule, namely, the linkage rule, which determines who should be included in the collective boundary (Kang & Oh, Citation2023). The boundary linkage rule is rooted in the concept of reciprocity because individuals expect to gain benefits from the linkage (Osatuyi et al., Citation2018). More specifically, boundary linkage refers to a shared agreement related to the privacy rules shaping and deciding information access (Hosek & Thompson, Citation2009). It indicates users’ acceptance of including others within the collective boundary. Generally speaking, greater linkages mean that co-owners share more personal information (Petronio, Citation2002), and are potentially less worried about disclosure. For example, if people share personal information with someone, they might expect to receive the same amount or type of information in return (Petronio, Citation2010). In the context of customer service, linkages may involve a large number of co-owners, such as supporting platforms, IT developers or operators. When individuals interact with different agents, the extent of the linkage may differ significantly between users and affect subsequent disclosure intention. Therefore, we regard the boundary linkage as the extent to which the user allows the agent’s proprietary company (i.e., an insurance company) and third parties to access the information, which embodies the linkage rule when deciding a collective privacy boundary.

A third type of privacy rule, the ownership rule, implies that an individual controls the extent of disclosure by managing both the personal and collective boundaries (Petronio, Citation2002). Individuals prefer to control privacy because disclosure could be risky. The scale of control depends on the level of vulnerability that they are willing to endure. The sensitivity of disclosed information is a critical factor in determining the vulnerability (Ha et al., Citation2021; Osatuyi et al., Citation2018). It is relevant to the context of online customer service because customers could be requested to provide sensitive personal information during a conversation. Therefore, the ownership (control) rule in this study is conceptualized with regard to how information sensitivity adjusts the privacy management mechanism.

2.2. Agent identity and privacy boundary rules

Both chatbots and human agents can provide online customer services. Supported by AI, chatbots can play a communicator role rather than only act as a channel in communication (Guzman & Lewis, Citation2020). Sundar (Citation2008) pointed out that young people may search for information from a news aggregator like Google News rather than human beings. The perception regarding the information source will affect the perceived credibility of information. Previous studies have shown that users could be affected by product recommendations by chatbots (e.g., Ischen et al., Citation2020; Rhee & Choi, Citation2020). These findings suggest that agent identity plays an important role in forming individuals’ attitudes and behaviors.

Contradictory views can be found in the literature regarding the influence of agent identity on privacy perceptions. Some scholars imply that there is no substantial difference in an individual’s perception of humans and chatbots (e.g., Beattie et al., Citation2020; Ho et al., Citation2018). This type of argument is mainly based on the Computers Are Social Actors (CASA) paradigm, which suggests that people may treat technology as social actors when interacting with it (Nass et al., Citation1994). The media equation theory further elaborates on the idea that technology could be equated with humans by users (Reeves & Nass, Citation1996). This is because when people have no intention to carefully check the source of information, they tend to unconsciously perceive and interact with computers, just as they do with humans. CASA has been widely adopted in human-machine communication research to explain why and how people perceive intelligent devices to be human beings, such as smartphones (Carolus et al., Citation2018), robots (Mou et al., Citation2023) and health advice chatbots (Liu & Sundar, Citation2018). According to this view, when communicating with a task-oriented chatbot, people may follow the same social norms as they do when communicating with human agents, even if they have to disclose private information.

In contrast to CASA and the media equation theory, some scholars have demonstrated discrepant outcomes in privacy-related perceptions between human agents and chatbots (e.g., Sundar & Kim, Citation2019; Tsai et al., Citation2021). According to cognitive heuristics (Tversky & Kahneman, Citation1974), people may make decisions about disclosure based on heuristic cues rather than a careful comparison between the risks and benefits of such disclosure. This could relate to the concept of the machine heuristic, which refers to the mental shortcut by which people attribute machine features or machine-like actions to an agent when evaluating the outcome of communicating with a machine. Such a mental shortcut involves a number of common stereotypes regarding machines, such as being mechanical, objective and ideologically neutral (Sundar & Kim, Citation2019). Compared to human agents, chatbots might reduce the need for impression management, especially when users are asked sensitive questions. As mentioned above, our study focuses on the operationalization of privacy rules for two kinds of privacy boundaries, namely, privacy concerns and boundary linkage. Researchers have demonstrated that chatbots may trigger people’s concerns since they are not able to display how the AI program is making the decision, namely, the issue of an “algorithmic black box” (Ishii, Citation2019; Sun & Sundar, Citation2022). Nevertheless, others have indicated that people tend to trust chatbots more because of machine heuristics (Sundar & Kim, Citation2019). These findings lead to contrasting hypotheses as listed below:

H1a: A human agent will elicit higher privacy concerns of the user.

H1b: A chatbot agent will elicit higher privacy concerns of the user.

Similarly, the linkage rule indicates that privacy management strategies may differ between humans and chatbots. In other words, the extent of the boundary linkage can vary significantly depending on the agent’s identity. Therefore, we propose the following competing hypotheses:

H2a: A human agent will elicit a higher boundary linkage of the user.

H2b: A chatbot agent will elicit a higher boundary linkage of the user.

2.3. Privacy management and behavioral intentions of customer service

When people perceive privacy threats from the service agents, they are more reluctant to use the services (Kala Kamdjoug et al., Citation2021; Liu et al., Citation2021). In this study, usage intention refers to the individual’s tendency to use the agent service (Go & Sundar, Citation2019). Past works have demonstrated that privacy perceptions are crucial factors influencing usage intentions in customer service, whether for chatbots (Sannon et al., Citation2020) or human agents (Song et al., Citation2022). Meanwhile, disclosure intention has been viewed as a crucial downstream effect of people’s privacy management strategies (e.g., Sharma & Crossler, Citation2014; Waters & Ackerman, Citation2011). To personalize the service, agents require users to share information about themselves (Lappeman et al., Citation2023; Widener & Lim, Citation2020). Nevertheless, very few extant studies have thoroughly revealed how people disclose personal information to the two types of agents under different privacy rules.

Privacy concerns could lead to a greater desire to control instead of sharing personal information (Bawack et al., Citation2021; Yun et al., Citation2019). Previous research has indicated that they can significantly influence self-disclosure (Hong & Cho, Citation2023; Wirth et al., Citation2022). Moreover, privacy concerns also reduce the willingness to adopt online services. For example, de Cosmo et al. (Citation2021) have shown that privacy concerns towards the Internet can moderate the relationship between people’s attitudes towards chatbot services and usage intention. Since privacy concerns are closely associated with the opacity of data collection, sharing and usage, we propose the following hypotheses:

H3(a/b): While using the agent service, the greater the privacy concerns that the user has, (a) the lower the intention to disclose, and (b) the lower the intention to use.

The linkage rule determines who should be the co-owners of the private information (Petronio & Child, Citation2020), which clarifies that the information owner selects others to be involved in privacy management. A greater boundary linkage means that users’ personal information is held by more co-owners, which could lead to greater privacy risk. In our case, people may be concerned about unwelcome boundary linkage, such as data leaks or hacking, which increases the number of co-owners. Consequently, these people will be less likely to use customer service and disclose information to the agents. By contrast, if people have fewer concerns about privacy issues related to data co-ownership, they will be willing to share their data with more partners such as the company of the service provider. Such a positive attitude towards boundary linkage could increase the disclosure and usage intention of customer service. Thus, we propose the following hypotheses:

H4(a/b): While using the agent service, the greater the boundary linkage the user has, (a) the higher the intention to disclose, and (b) the higher the intention to use.

2.4. Information sensitivity

Information sensitivity can be viewed as an indicator of control rules because it implies the degree of risk that addresses the sense of control for privacy management. This concept reflects the perceived discomfort that people feel when they disclose personal information (Dinev et al., Citation2013) to customer service chatbots or human agents, which largely relies on the types of information requested by the channel or platform (Malhotra et al., Citation2004). Chatbots are already being applied in a large number of areas where sensitive information is needed, such as financial care and healthcare (Przegalinska et al., Citation2019). Users may be asked to provide medical records, personally identifiable information or financial information during the conversation, which may cause physical, financial, or psychological harm (Bickmore & Cassell, Citation2001). Since the context criteria play a vital role in formulating individuals’ privacy rules, we contend that when chatting with a customer agent, the classification of sensitive information by individuals reflects a clear identification of the ownership rule.

Information sensitivity can influence people’s perceptions and behaviors related to privacy. For example, on social media platforms, bloggers disclose less sensitive information more frequently as opposed to highly sensitive information (Li et al., Citation2015). In a high-sensitivity condition, people tend to perceive a greater privacy risk (Dinev et al., Citation2013). As a consequence, they may be more concerned about their privacy. They may also worry about the undesirable use of their personal data by external agents. According to the CPM theory, such worries will increase the perceived boundary linkage of the users (Petronio, Citation1991). Given that disclosing sensitive information to service agents could be harmful, people may be reluctant to use the service. Moreover, when being requested to share sensitive information, people may be motivated to adopt a conservative disclosure strategy and adjust privacy boundaries. They may choose to control instead of disclose information, leading to a decrease in disclosure intention.

By contrast, people will have less privacy risk in a low-sensitivity condition. Thus, they will be less concerned about privacy issues such as boundary linkage. Without these concerns, they may be more willing to use the customer service and share personal information with the service agents. In view of the previous literature, we assume that information sensitivity could moderate the relationships between agent identity and the two privacy rules. Thus, we propose the following hypotheses and build the theoretical framework ():

H5(a/b): The information sensitivity will moderate the influence of agent identity on (a) privacy concerns, (b) boundary linkage, which will further influence the intention to disclose.

H6(a/b): The information sensitivity will moderate the influence of agent identity on (a) privacy concerns, (b) boundary linkage, which will further influence the intention to use.
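One common way to write down the structure implied by H5 and H6 is a first-stage moderated mediation model. The specification below is only an illustrative sketch: the symbols, the dummy coding, and the choice of a linear model are assumptions made for exposition and are not taken from this section. Here $X$ denotes agent identity (assumed coding: 0 = human, 1 = chatbot), $W$ information sensitivity (assumed coding: 0 = low, 1 = high), $M$ one of the two mediators (privacy concerns or boundary linkage), and $Y$ one of the two outcomes (intention to disclose or intention to use).

```latex
\begin{align}
M &= a_0 + a_1 X + a_2 W + a_3 XW + \varepsilon_M \\
Y &= b_0 + c' X + b_1 M + \varepsilon_Y \\
\text{Conditional indirect effect of } X \text{ on } Y &= (a_1 + a_3 W)\, b_1 \\
\text{Index of moderated mediation} &= a_3 b_1
\end{align}
```

Under this sketch, H3 and H4 concern the sign of $b_1$, H1 and H2 concern $a_1$, and H5 and H6 correspond to a non-zero $a_3 b_1$, meaning that the indirect effect of agent identity through the mediator differs between the low- and high-sensitivity conditions.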

2.5. China and US user differences in the privacy mechanism

Privacy rules develop not only based on individuals, but also based on different cultural backgrounds of different countries (Child & Petronio, Citation2011). This is because individuals are educated regarding appropriate social behaviors by cultural expectations, which ultimately regulates boundary accessibility. These expectations are grounded by the underlying value of privacy in forming the boundary rules (Petronio, Citation2002). To understand whether users from different cultural backgrounds will adopt different privacy management strategies when interacting with chatbots and human agents, we compare users in China and the US. Lowry et al. (Citation2011) found that when using online self-disclosure technology—instant messaging (IM), Chinese participants exhibited a weaker attitude toward the intention to use and the actual use of instant messaging, but a higher demand for internet awareness compared to the US participants. Furthermore, compared with users in China, the disposition to value privacy and the perceived privacy risks of users in the US have a greater impact on self-disclosure when they use social networking sites (Liu & Wang, Citation2018). Another distinction is related to cultural dimensions. In particular, uncertainty avoidance reflects the degree of tolerance for anxiety caused by an uncertain future (Hofstede, Citation2001). It may influence privacy management because interaction with chatbots and human agents could involve unpredictable privacy risk. Previous studies have suggested that privacy risk is more critical for people with high uncertainty avoidance than for those with low uncertainty avoidance (Trepte et al., Citation2017; Wang & Liu, Citation2019). According to statistics, China has a lower level of uncertainty avoidance than the US (Hofstede Insights, Citation2022). Considering the above distinctions, we propose the following research question:

RQ: What are the differences between users in China and the US regarding the privacy mechanism of disclosure intention and usage intention towards the agent?

3. Method

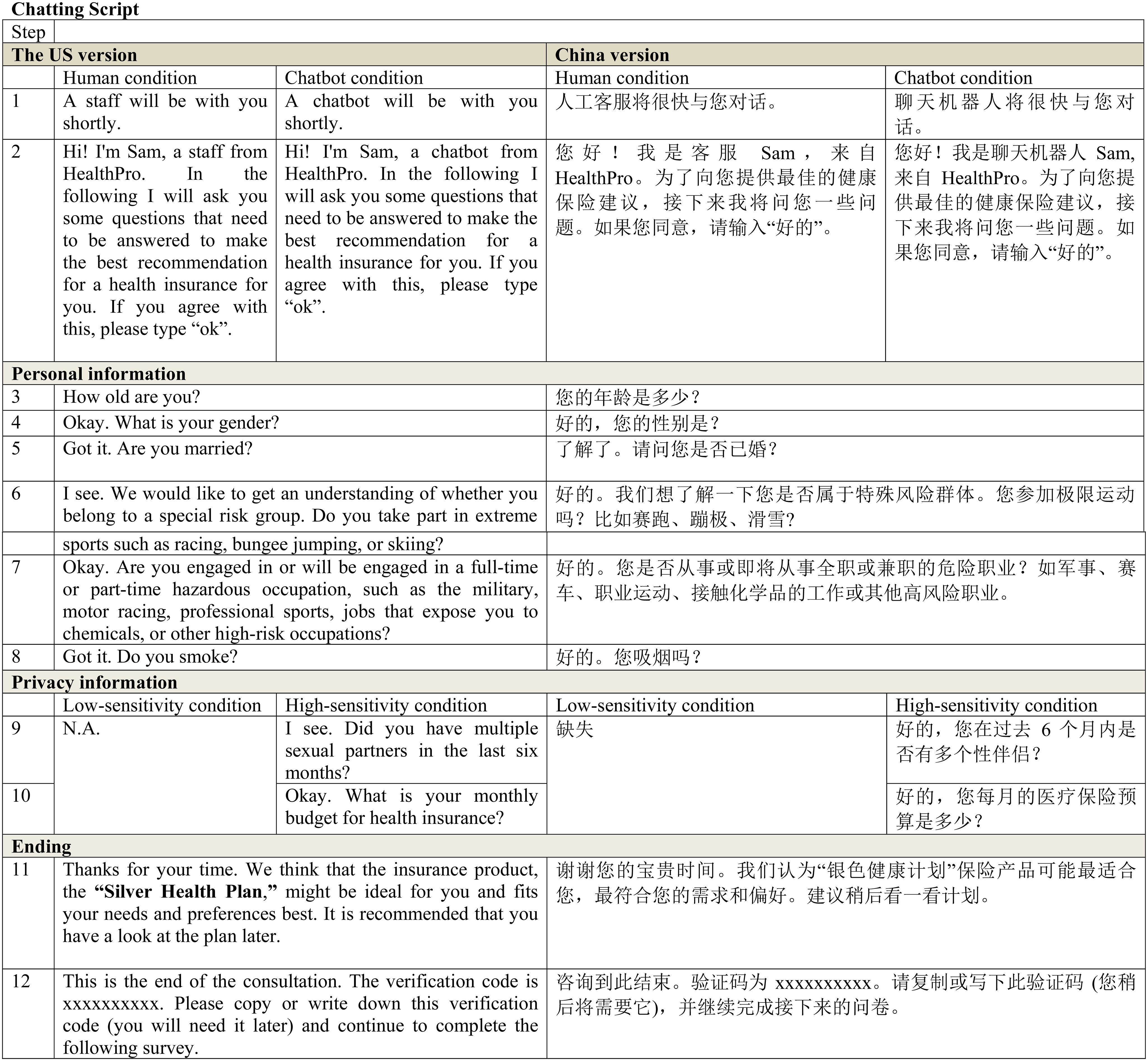

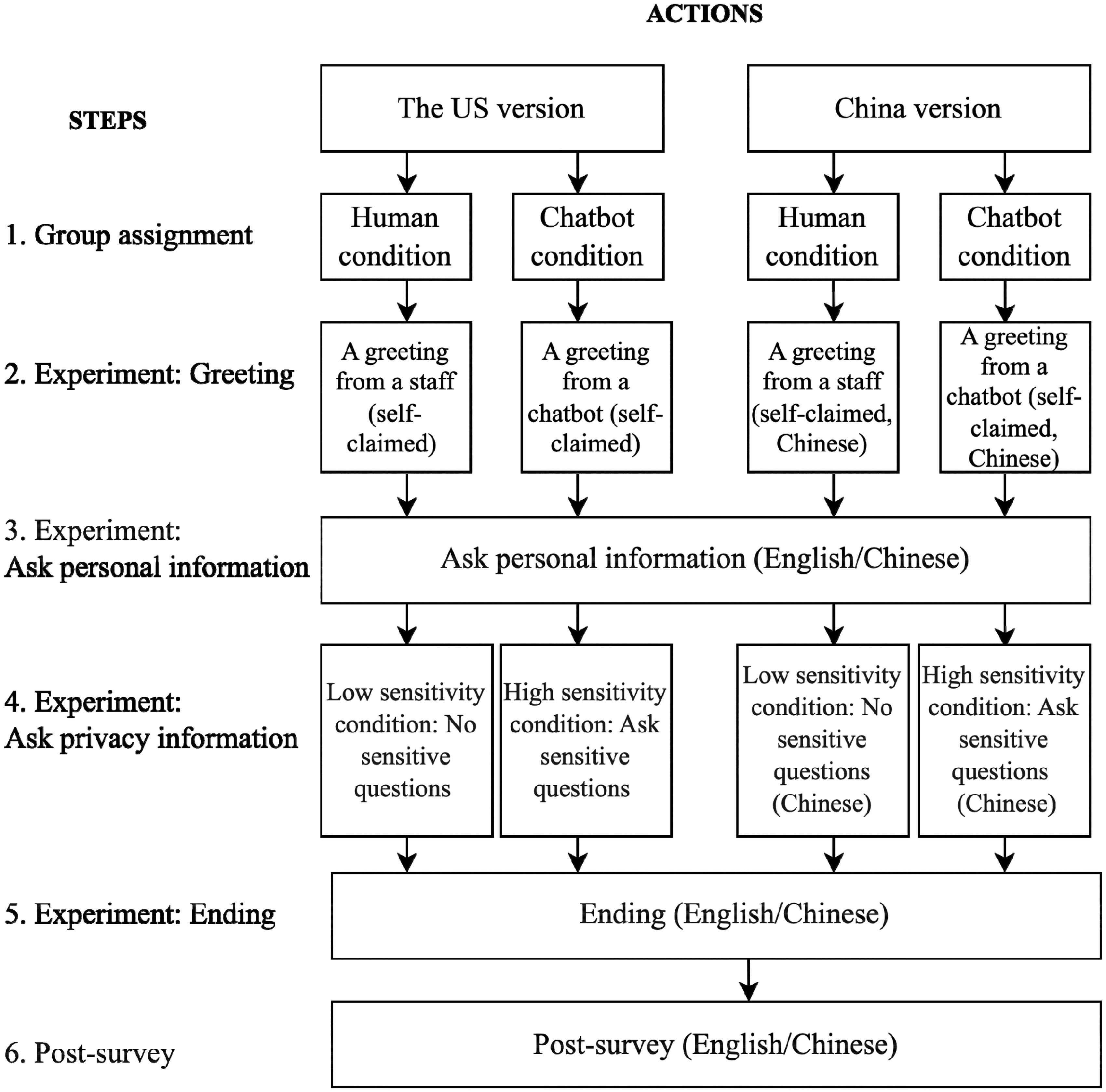

We conducted an online experiment with a 2 (agent identity: chatbot vs. human) by 2 (information sensitivity: low vs. high) between-subject factorial design in China and the US, respectively. Asking the agent to recommend a health insurance product was selected as the research context. The experiment’s procedure and materials remained consistent between the two countries, with the language (Chinese or English) as the only difference. Participants were randomly assigned to one of the four conditions.
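The assignment step of such a 2 × 2 between-subject design can be sketched as follows. This is a minimal illustration that assumes simple (unblocked) randomization; the section does not state which randomization scheme the survey platform applied, and the names `CONDITIONS` and `assign_condition` as well as the seed are hypothetical.

```python
import itertools
import random

# The two manipulated factors described in the design.
AGENT_IDENTITY = ("chatbot", "human")
INFORMATION_SENSITIVITY = ("low", "high")

# Crossing the factors yields the four experimental cells.
CONDITIONS = list(itertools.product(AGENT_IDENTITY, INFORMATION_SENSITIVITY))


def assign_condition(rng: random.Random) -> tuple:
    """Assumed scheme: each participant independently draws one of the four cells."""
    return rng.choice(CONDITIONS)


# A fixed seed is used only so that this sketch is reproducible.
rng = random.Random(2022)
assignments = [assign_condition(rng) for _ in range(200)]
```

Simple randomization does not guarantee equal cell sizes; a blocked scheme would be needed if balanced cells were required.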

3.1. Pre-tests

A pre-test was conducted to identify the most sensitive personal information, which could be used as the stimulus material for the high-sensitivity situations during the formal experiment. After referring to the previous literature and talking to real health insurance consultants, we listed 8 types of personal information that are commonly requested when purchasing health insurance (Ischen et al., Citation2020). In March 2022, we published a recruitment advertisement via Amazon Mechanical Turk to recruit US participants and posted an advertisement through a WeChat official account platform called “marketing research group” (similar to Mechanical Turk) to recruit participants in China. In total, 55 participants from China and 56 participants from the US completed an online survey. The participants were asked to indicate the degree of perceived sensitivity for the 8 types of personal information. The results showed that information about whether people have had multiple sexual partners in the last six months and their monthly budget for health insurance were the most sensitive.

3.2. Participants

Before collecting the data, a power analysis was conducted to determine the sample size using G*Power. The effect size f was estimated as 0.25. The results indicated that 210 participants were required to achieve 95% power at an alpha-error level of 5%. During the formal data collection, we recruited 211 participants (106 male and 105 female) from China and 224 participants (108 male and 116 female) from the US through Qualtrics from March to April in 2022. The experiments in the two countries were conducted simultaneously. The average age was 43.40 (SD = 13.43) for participants from China and 45.45 (SD = 17.63) for the US. Most of the participants in China (63.5%) had received a bachelor’s degree, followed by those with a college degree/vocational education (22.3%) and high school or lower (14.2%). The largest share of the US participants (44.2%) had received a bachelor’s degree, followed by a high school education or lower (31.7%) and college degree/vocational education (24.1%). In China, 39.4% of participants had a monthly income of 10,000 RMB or less. In the US, 70.1% of participants had a monthly income of 10,000 USD or less. The majority of participants (China: 70.6%, US: 53.6%) had not used chatbots before joining the experiment.
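The reported sample-size requirement can be checked approximately with a short power computation. The sketch below assumes that the G*Power test family was a fixed-effects ANOVA for main effects and interactions with four groups and one numerator degree of freedom, and that the noncentrality parameter is f² × N; the section does not state these settings, so they are assumptions. The functions are written with the standard library only and are illustrative, not G*Power's own routines.

```python
import math


def _betacf(a, b, x, max_iter=300, eps=1e-14, tiny=1e-300):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        even = m * (b - m) * x / ((qam + m2) * (a + m2))
        odd = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        for aa in (even, odd):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            delta = d * c
            h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def betainc(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_cdf(x, df1, df2, ncp=0.0, terms=100):
    """CDF of the (non)central F distribution as a Poisson mixture of beta CDFs."""
    y = df1 * x / (df1 * x + df2)
    weight = math.exp(-ncp / 2.0)
    total = 0.0
    for j in range(terms):
        total += weight * betainc(df1 / 2.0 + j, df2 / 2.0, y)
        weight *= (ncp / 2.0) / (j + 1)
        if weight == 0.0:  # central case: only the first term contributes
            break
    return total


def f_critical(alpha, df1, df2):
    """Upper-alpha critical value of the central F distribution, found by bisection."""
    lo, hi = 0.0, 1000.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if f_cdf(mid, df1, df2) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def anova_power(n_total, effect_f=0.25, alpha=0.05, n_groups=4, df_num=1):
    """Power of the F test under the assumed G*Power-style settings."""
    df_den = n_total - n_groups
    ncp = effect_f ** 2 * n_total  # assumed convention: lambda = f^2 * N
    return 1.0 - f_cdf(f_critical(alpha, df_num, df_den), df_num, df_den, ncp)
```

Calling `anova_power(210)` under these assumptions should give a value close to the 95% target reported above; different assumptions about the test family or degrees of freedom would change the required sample size.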

3.3. Procedure

The experiment was embedded in an online survey. The complete procedure is shown in . Before participation, the participants expressed their consent through an informed consent form. After that, they were randomly assigned to one of the four experimental situations (). They were directed to read a scenario (Appendix B), which stated that they were looking for a health insurance plan. They were asked to provide some personal information to a service agent in order to receive a recommendation.

Table 1. Sample distribution.

After reading the scenario, participants were asked to interact with the agent through an online conversational program built by our research team using Microsoft Azure. We referred to real customer services when designing the conversational program. The whole interaction with the agent lasted about 10–15 minutes. Once the participants started the conversation, the agent provided a short introduction and then started to ask questions about personal information. The agent asked one question at a time, and the participants were asked to respond to each question. After receiving the response, the agent asked the next question. If participants preferred not to answer certain questions, they could skip those questions by replying “skip.” At the end of the conversation, the agent recommended a product to the participants and provided them with a verification code. When designing the conversation program, we used a linear conversation flow to ensure that only those participants who answered carefully and properly during the experiment could get the code for the questionnaire. They were asked to paste the code on the next page to get access to the follow-up survey.
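The linear conversation flow described above can be sketched as a simple loop. This is an illustrative reconstruction, not the Azure-based program used in the study: the question texts are hypothetical placeholders (the real list is in Appendix B), the rule that an empty reply triggers a re-prompt is an assumption about what "answering properly" means, and the code format is invented for the example.

```python
import secrets

# Hypothetical placeholder questions; the actual items are listed in Appendix B.
QUESTIONS = [
    "Placeholder question 1",
    "Placeholder question 2",
    "Placeholder question 3",
]


def run_conversation(questions, get_reply, send):
    """Ask one question at a time in a fixed order and return (answers, code).

    get_reply: callable returning the participant's next message.
    send: callable that delivers an agent message to the participant.
    """
    answers = {}
    for question in questions:
        send(question)
        reply = get_reply().strip()
        # Assumed validation rule: an empty message does not count as a response.
        while not reply:
            send("Please reply, or type 'skip' to skip this question.")
            reply = get_reply().strip()
        # Replying "skip" records the question as unanswered and moves on.
        answers[question] = None if reply.lower() == "skip" else reply
    send("Based on your answers, here is a recommended plan.")
    # The verification code is only issued once every question has been handled.
    code = secrets.token_hex(4)
    send(f"Your verification code is {code}")
    return answers, code
```

Because the code is generated only after the final question, a participant who abandons the conversation midway never receives it, which mirrors the gating role the verification code plays in the procedure.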

The survey consisted of three sections. First, the participants were asked to finish the manipulation check questions. Participants who failed to recognize the identity (a human or a chatbot) of the agent were excluded. Then they were asked to answer questions that measured the variables in the research model. Lastly, they were asked to provide demographic information and indicate their chatbot usage experiences.

3.4. Stimulus material

The complete question list in the interaction of the experiment is shown in Appendix B. The conversational agent could respond to participants by recognizing keywords in their inputs. Health insurance was selected as the target product because purchasing a health insurance policy is common in both China and the US (Ma et al., Citation2015). Moreover, people who wanted to buy health insurance needed to provide certain personal information that helped determine a proper insurance plan and price.

3.5. Manipulation of agent identity

We manipulated the source identity following previous studies (e.g., Go & Sundar, Citation2019; Meng & Dai, Citation2021). Participants in the chatbot conditions were told in the scenario description that they were consulting a customer service chatbot. After they initiated the conversation, the screen showed “A chatbot will be with you shortly.” Then, a greeting was sent to the participants: “I’m Sam, a chatbot from HealthPro.” Participants in the human conditions were told in the scenario description that they were consulting a customer service staff member. After they initiated the conversation, the screen showed “A staff member will be with you shortly.” Then, a greeting was sent to the participants: “I’m Sam, a staff member from HealthPro.” Moreover, to enhance the manipulation, the screen showed “dot dot dot” in the human conditions, which suggested that the staff member was typing. Given that chatbots reply faster than humans, we set the response time from the staff to be 5 seconds longer than that from the chatbot (Meng & Dai, Citation2021).
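The identity cues can be summarized as a small configuration sketch. The class and function names are hypothetical, and the 1-second chatbot baseline delay is an assumption. The paper reports only the 5-second difference between conditions.

```python
from dataclasses import dataclass

HUMAN_EXTRA_DELAY_S = 5.0  # staff replies were set 5 seconds slower than chatbot replies


@dataclass(frozen=True)
class AgentCondition:
    waiting_notice: str
    greeting: str
    show_typing_indicator: bool
    response_delay_s: float


def build_condition(identity, chatbot_delay_s=1.0):
    """Build the cues for one identity condition.

    chatbot_delay_s is an assumed baseline, not a value from the paper.
    """
    if identity == "chatbot":
        return AgentCondition(
            waiting_notice="A chatbot will be with you shortly.",
            greeting="I'm Sam, a chatbot from HealthPro.",
            show_typing_indicator=False,
            response_delay_s=chatbot_delay_s,
        )
    if identity == "human":
        return AgentCondition(
            waiting_notice="A staff member will be with you shortly.",
            greeting="I'm Sam, a staff member from HealthPro.",
            show_typing_indicator=True,  # the "dot dot dot" cue
            response_delay_s=chatbot_delay_s + HUMAN_EXTRA_DELAY_S,
        )
    raise ValueError(f"unknown identity: {identity!r}")
```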

3.6. Manipulation of information sensitivity

The manipulation of information sensitivity was based on the pre-test and the design used in the existing literature (e.g., Ischen et al., Citation2020). In the low-sensitivity condition, participants were asked to provide six types of information. In the high-sensitivity condition, besides the six types, participants were asked to provide two additional types of information that were sensitive (i.e., sexual partners and monthly budget).

3.7. Measures

We assessed all the measures on a 7-point Likert scale, with the exception of the manipulation check question for the source identity.

3.7.1. Manipulation check

For the identity manipulation check, participants were asked to indicate which type of agent they had been chatting with. There were two choices: “a customer service chatbot” and “a customer service representative” (Go & Sundar, Citation2019). Participants were expected to choose the option that matched their assigned condition. To check the manipulation of information sensitivity, participants were asked to indicate the degree of sensitivity of the information that they were asked to provide in the conversation (1 = not at all, 7 = very sensitive) (Ha et al., Citation2021).

3.7.2. Outcome variables

We assessed all the measures of the outcome variables on a 7-point Likert scale from “1 = strongly disagree” to “7 = strongly agree.” Privacy concerns were measured by four items (Xu et al., Citation2011). Participants were asked to indicate the degree to which they agreed with the following items: “I am concerned that the information I submitted to this chatbot/staff could be misused,” “I am concerned that others might find private information about me from this chatbot/staff,” “I am concerned about providing personal information to this chatbot/staff, because of what others might do with it,” and “I am concerned about providing personal information to this chatbot/staff, because it could be used in a way I did not foresee” (China: α = 0.95; US: α = 0.97).

Boundary linkage was measured by three items (Kang & Oh, Citation2023). Participants were asked to indicate the degree to which they agreed with the following items: “I allow this insurance company to share my information with marketers for personalized advertising,” “I let this insurance company access my information to enjoy a personalized experience,” and “I allow this insurance company to share my information with other parties (e.g., governments) for better convenience” (China: α = 0.87; US: α = 0.87).

Disclosure intention was measured by three items (Ha et al., Citation2021). Participants were asked to indicate the degree to which they agreed with the following items: “I am willing to disclose the information that I just provided to this chatbot/staff,” “I am likely to disclose the same personal information that I just provided to this chatbot/staff in the future,” and “I am comfortable allowing this chatbot/staff to have my personal information” (China: α = 0.87; US: α = 0.87).

Usage intention was measured by three items (Go & Sundar, Citation2019). Participants were asked to indicate the degree to which they agreed with the following items: “I would like to use this chatbot/staff consultation service again next time I inquire about an insurance product,” “If there is a chance, I will make enquiries using this chatbot/staff consultation service in the future,” and “If possible, I will visit the chatbot/staff consultation service again in the future” (China: α = 0.83; US: α = 0.93).
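The reliability coefficients (α) reported for each scale are Cronbach's alpha values. A minimal sketch of the standard formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores), is given below. The responses are made up for illustration and are not the study's data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of per-participant item scores.

    responses: list of rows, one row per participant, one score per item.
    """
    k = len(responses[0])                       # number of items in the scale
    item_columns = list(zip(*responses))        # transpose rows into item columns
    item_variance_sum = sum(variance(column) for column in item_columns)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Made-up 7-point responses from five participants on a three-item scale.
demo = [[7, 6, 7], [5, 5, 6], [3, 4, 3], [6, 6, 5], [2, 3, 2]]
alpha = cronbach_alpha(demo)
```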

4. Results

4.1. Manipulation check

In both the Chinese and US samples, the retained participants answered the manipulation check regarding the source identity correctly. An independent samples t-test was then conducted to examine mean differences in perceived information sensitivity between the high and low groups. The results showed that participants in the high condition perceived significantly higher information sensitivity (China: M = 4.29, SD = 1.89; US: M = 3.51, SD = 1.83) than did those in the low condition (China: M = 3.47, SD = 1.86, t = −3.17, p < 0.01; US: M = 2.80, SD = 1.52, t = −3.12, p < 0.01). Thus, the manipulations of both source identity and information sensitivity were successful.
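For readers who want to see how such a t statistic arises, here is a minimal sketch of a pooled-variance (Student's) independent samples t-test. Whether the study used the pooled or the Welch variant is not stated, so the pooled form is an assumption, and the ratings are made up. A negative t, as reported above, is what results when the high-condition mean is subtracted from the low-condition mean.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(group_a, group_b):
    """Student's t for two independent samples (equal variances assumed).

    Returns (t, degrees_of_freedom); t is negative when mean(group_a) < mean(group_b).
    """
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / standard_error, df


low = [2, 3, 4, 3, 2, 4]    # made-up sensitivity ratings, low condition
high = [4, 5, 6, 5, 4, 6]   # made-up sensitivity ratings, high condition
t_value, df = pooled_t(low, high)
```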

4.2. Hypothesis testing

The means and standard deviations of the variables were calculated (Table 2). To test H1 and H2, a series of one-way ANOVAs was conducted for the two samples. For Chinese users, there was a significant difference between the chatbot condition and human condition in terms of privacy concerns, F(1, 209) = 7.579, p < 0.01 (Table 3). More specifically, a chatbot agent (M = 4.03, SD = 1.77) elicited higher privacy concerns than a human agent (M = 3.37, SD = 1.71). As for the US, we found no significant difference between the chatbot (M = 3.17, SD = 1.69) and human conditions (M = 2.92, SD = 1.79) in terms of privacy concerns. Thus, H1b was partially supported, while H1a was rejected. For participants from China, there was no significant difference in boundary linkage between the chatbot group (M = 4.94, SD = 1.45) and human group (M = 5.01, SD = 1.42). Similar findings emerged in the US sample: no significant difference in boundary linkage was found between the chatbot (M = 3.68, SD = 1.52) and human (M = 3.34, SD = 1.68) conditions. Thus, both H2a and H2b were rejected (Table 3).

Table 2. Means and standard deviations (in parentheses) of the variables.

Table 3. One-way ANOVA results in terms of privacy concerns and boundary linkage.
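The F statistics from the one-way ANOVAs can be illustrated with a short sketch that partitions variance into between-group and within-group components. The data are made up. In the reported F(1, 209), the first degree of freedom is the number of groups minus one and the second is the number of participants minus the number of groups.

```python
from statistics import mean


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    all_values = [value for group in groups for value in group]
    grand_mean = mean(all_values)
    # Between-group sum of squares: group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: observations around their own group mean.
    ss_within = sum((value - mean(g)) ** 2 for g in groups for value in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


chatbot = [4, 5, 6, 5, 4, 6]  # made-up privacy concern scores
human = [2, 3, 4, 3, 2, 4]
f_value, df_between, df_within = one_way_anova(chatbot, human)
```

With only two groups, F equals the square of the pooled-variance t statistic, so the ANOVA and the t-test lead to the same conclusion.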

To further examine the moderating effect of information sensitivity on the mediating effects of privacy concerns and boundary linkage, we conducted two moderated mediation analyses (Hayes, Citation2013), one for each dependent variable (i.e., the intention to disclose and the intention to use), using the PROCESS macro (Model 7) in SPSS. In both analyses, agent identity (0 = chatbot, 1 = human) was set as the predictor and information sensitivity (0 = low sensitivity, 1 = high sensitivity) as the moderator. We also included age, gender and usage experience as covariates. Aligned with the results, we found a significant interaction effect between agent identity and information sensitivity on privacy concerns (China: b = −0.95, SE = 0.48, p < 0.05, 95%CI [−1.90, −0.01]; US: b = −0.95, SE = 0.45, p < 0.05, 95%CI [−1.85, −0.06]), and on boundary linkage (China: b = −1.27, SE = 0.38, p < 0.01, 95%CI [−2.03, −0.51]; US: b = −0.83, SE = 0.41, p < 0.05, 95%CI [−1.64, −0.02]) in both samples. With regard to the effects of the control variables, we found that in the Chinese sample, only usage experience had a significant negative effect on boundary linkage (b = −0.63, SE = 0.22, p < 0.01). In the US sample, however, gender (0 = male, 1 = female) significantly influenced privacy concerns (b = 0.47, SE = 0.23, p < 0.05) and disclosure intention (b = 0.44, SE = 0.15, p < 0.01). Age significantly influenced boundary linkage (b = −0.02, SE = 0.01, p < 0.001) and disclosure intention (b = 0.01, SE = 0.004, p < 0.01). Usage experience significantly influenced boundary linkage (b = −0.47, SE = 0.21, p < 0.05).
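For orientation, a first-stage moderated mediation model of this kind (PROCESS Model 7) is conventionally written as two regression equations. The notation below follows common usage and is an assumption rather than the paper's own: X is agent identity, W is information sensitivity, M is a mediator (privacy concerns or boundary linkage), and Y is a behavioral intention.

```latex
\begin{aligned}
M &= i_M + a_1 X + a_2 W + a_3 XW + \text{covariates} + e_M \\
Y &= i_Y + c' X + b M + \text{covariates} + e_Y \\
\omega(W) &= (a_1 + a_3 W)\, b \\
\text{index of moderated mediation} &= a_3 b = \omega(1) - \omega(0)
\end{aligned}
```

Here ω(W) is the conditional indirect effect of X on Y through M at a given level of W. Because W is coded 0 or 1, the index equals the difference between the indirect effects in the high- and low-sensitivity conditions. The interaction coefficients reported above correspond to a₃.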

Further analysis was conducted to examine the direct and indirect relationships between the constructs (Table 4). In the Chinese sample, boundary linkage significantly influenced the intention to disclose, whereas privacy concerns did not. Both privacy concerns and boundary linkage significantly influenced the intention to use. In the US sample, both privacy concerns and boundary linkage had a significant impact on disclosure intention, and both significantly influenced usage intention. H3a was partially supported, while H3b, H4a, and H4b were supported.

Table 4. Direct and indirect path analysis.

We further investigated the conditional effect of information sensitivity on the indirect path via privacy concerns between agent identity and disclosure/usage intention. As for the disclosure intention, the moderated mediation index was not significant in the Chinese sample (Index = 0.03, SE = 0.04, 95%CI [−0.04, 0.12]). However, such an index was significant in the US sample (Index = 0.24, SE = 0.12, 95%CI [0.01, 0.48]). The conditional indirect effect on disclosure intention was only significant in the high-sensitivity condition but not in the low-sensitivity condition. Moreover, in the Chinese sample, the conditional indirect effects of agent identity on usage intention through privacy concerns were different depending on the information sensitivity (Index = 0.14, SE = 0.08, 95%CI [0.002, 0.300]). Such an indirect effect was only significant in the high-sensitivity condition but not in the low-sensitivity condition. As for the US, such a moderated mediation index was also significant (Index = 0.18, SE = 0.11, 95%CI [0.01, 0.41]). The indirect effect was significant in the high-sensitivity condition but not in the low-sensitivity condition. In line with these results, privacy concerns were the mediator between agent identity and intention to use in the high-sensitivity condition.
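The sketch below shows how conditional indirect effects and the moderated mediation index relate to the underlying path coefficients, and how a confidence interval is read for significance. It assumes the standard first-stage formulation, in which the indirect effect at moderator level w is (a1 + a3·w)·b and the index is a3·b. The interaction coefficient a3 = −0.95 is taken from the results above, whereas a1 and b are hypothetical values chosen for illustration.

```python
def conditional_indirect_effect(a1, a3, b, w):
    """Indirect effect of the predictor through the mediator at moderator level w."""
    return (a1 + a3 * w) * b


def index_of_moderated_mediation(a3, b):
    """Change in the indirect effect per one-unit change in the moderator."""
    return a3 * b


def ci_excludes_zero(lower, upper):
    """An effect is treated as significant when its confidence interval excludes zero."""
    return lower > 0 or upper < 0


a3 = -0.95            # reported identity x sensitivity interaction on privacy concerns
a1, b = 0.10, -0.15   # hypothetical path coefficients, NOT reported in the paper

low = conditional_indirect_effect(a1, a3, b, w=0)    # low-sensitivity condition
high = conditional_indirect_effect(a1, a3, b, w=1)   # high-sensitivity condition
index = index_of_moderated_mediation(a3, b)

# Reading the reported intervals for disclosure intention via privacy concerns:
china_significant = ci_excludes_zero(-0.04, 0.12)
us_significant = ci_excludes_zero(0.01, 0.48)
```

With a binary moderator, `high - low` equals `index`, which is why an index whose interval excludes zero implies that the indirect effects differ between the two sensitivity conditions.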

We then examined the conditional effect of information sensitivity on the indirect path via boundary linkage between agent identity and disclosure/usage intention. In the Chinese sample, the conditional indirect effects of agent identity on the intention to disclose through boundary linkage were significantly different depending on information sensitivity (Index = −0.87, SE = 0.28, 95%CI [−1.44, −0.36]). Such an indirect effect was only significant in the high-sensitivity condition but not in the low-sensitivity condition. As for the US, the conditional indirect effects on disclosure intention were significantly different depending on information sensitivity (Index = −0.43, SE = 0.22, 95%CI [−0.88, −0.02]). The indirect effect via boundary linkage was significant in the high-sensitivity condition but not in the low-sensitivity condition. Furthermore, the conditional indirect effect via boundary linkage on usage intention was significantly different depending on information sensitivity (Index = −0.44, SE = 0.14, 95%CI [−0.72, −0.18]) in the Chinese sample. The indirect effect was significant in the high-sensitivity condition but not in the low-sensitivity condition. In the US sample, the conditional indirect effects were significantly different depending on information sensitivity (Index = −0.33, SE = 0.17, 95%CI [−0.71, −0.01]). The indirect effect was significant in the high-sensitivity condition but not in the low-sensitivity condition. Based on these results, boundary linkage was the significant mediator that mediated the relationship between agent identity and the intention to use and disclose in the high-sensitivity condition. That is, when sensitive information was required, participants had higher boundary linkage towards the chatbot agent, thereby subsequently increasing their behavioral intentions.

For both samples, participants with higher privacy concerns tended not to use the service when they were asked to share sensitive information. People with greater boundary linkage tended to use the service and disclose information to the agents in the high-sensitivity condition. Specifically, H5a was supported in the US sample but not in the Chinese sample. H5b, H6a, and H6b were supported in both the US and Chinese samples.

5. Discussion

Based on a cross-country research design, we conducted online experiments in China and the United States, respectively, and provided some illuminating insights into personal and collective boundary management in the context of human-machine interaction.

The findings highlight the importance of information sensitivity in forming personal privacy boundaries, which is consistent with the findings in previous research regarding mobile applications (Balapour et al., Citation2020). The results have shown that the impact of agent identity on privacy perception and behavioral intention may vary at different levels of information sensitivity. Information sensitivity is a situational factor (Malhotra et al., Citation2004), which can further influence individuals’ cognitive processing. When the information users need to disclose is less sensitive, it is possible that people may fundamentally attach less importance to the facilitators and consequences of the disclosure behaviors. In this case, it is conceivable that they will not care whether the agent is a human or a chatbot. Conversely, when users perceive the information to be highly sensitive, they will tend to place more emphasis on evaluating the trade-off process (Hu et al., Citation2023) and may pay more attention to the potential outcomes of the disclosure (Balapour et al., Citation2020). As they become more cautious and sensitive, the human agent and chatbot agent may induce different levels of users’ perceptions regarding the privacy management. The moderation effect of sensitivity reveals the fact that people have started to consider information ownership towards human agents and chatbots in a more subtle way. This finding indicates that people tend to be more thoughtful when establishing privacy boundaries in a more sensitive conversation.

The results indicated that participants showed higher privacy concerns for chatbot agents than human agents under the condition of high information sensitivity, which was consistent between the two countries. This finding is inconsistent with the CASA paradigm and media equation theory, which suggest that users’ disclosure processes are consistent regardless of whether the communicating partner is a human or a chatbot (Ho et al., Citation2018; Reeves & Nass, Citation1996). Several explanations are possible. First, the differences in people’s privacy perceptions may be attributed to the social rules that people follow when interacting with different communicators. Previous research has implied a number of reasons for the unsuccessful appropriation of social rules from human-to-human interactions to human-to-machine interactions. Human agents are expected to uphold social norms and moral integrity (Giroux et al., Citation2022). These are social rules related to professionalism and moral responsibility. However, it is difficult to apply such rules to chatbots (Marche, Citation2021; Medeiros, Citation2017). Thus, people tend to place more trust in human agents than chatbots. This finding is consistent with one of the tenets of CPM theory, which is that people use different privacy rules to determine their privacy boundaries and privacy perceptions towards different partners (Petronio & Caughlin, Citation2006). Second, the emergence of security vulnerabilities of AI may have exacerbated privacy concerns towards chatbots. In some cases, personal data is leaked due to technical accidents or external attacks (Kelly, Citation2020). Furthermore, AI anxiety can also explain the privacy concerns towards chatbots (Hong & Cho, Citation2023). People who experience AI anxiety may be apprehensive or nervous regarding the use of chatbots due to privacy concerns. In sum, the divergence in social rules and the risk of data insecurity may have contributed to the difference in permeability rules towards chatbots and human agents.

We also found that participants had higher boundary linkage to chatbots than to human agents under the condition of high information sensitivity in both China and the US. To the best of our knowledge, no previous study has compared people’s linkage rules between chatbot agents and human agents. On the one hand, this may be explained by the principle of machine heuristics (Sundar & Kim, Citation2019). People believe that machines can share information with third parties in a more objective and secure way. Therefore, there is little pressure when sharing highly sensitive information, such as one’s financial status and the number of sexual partners, with third parties via chatbot agents. On the other hand, sharing data with the chatbot may also benefit users by providing personalized services. When users feel that they can receive those benefits by sharing private information, they are more likely to do so. In this regard, users expect that their interactions with the chatbots will follow the principle of reciprocity, which is useful in establishing a collective privacy boundary (Petronio, Citation2010).

The results indicate that privacy concerns and boundary linkage play important mediating roles in the influence of agent identity on users’ subsequent behavioral intentions under certain circumstances. When people interact with chatbot agents or human agents and provide sensitive information, privacy concerns and boundary linkage are vital antecedents that capture individuals’ considerations behind their decisions related to information disclosure. In other words, when users have fewer worries and accept others as co-owners of their sensitive information, they are more willing to disclose information and reuse the service system. This result reveals the close connection between people’s permeability rules, linkage rules and behavioral intentions in the context of human-agent interactions, providing new evidence to corroborate CPM theory. These privacy rules from the theory successfully explain how users perceive and evaluate their interactions with humans and chatbots, which lays the foundations for their further actions.

This study also reveals different mediation effects of privacy management rules between China and the US, which may be explained by the different levels of uncertainty avoidance in the two countries. In the high-sensitivity condition, we found that privacy concerns significantly mediated the relationship between the agent identity and disclosure intention in the US sample but not the Chinese sample. Uncertainty avoidance reflects the level of tolerance for anxiety brought about by an unknown future (Hofstede, Citation2001) and the level of uncertainty avoidance between China and the US is different (Hofstede Insights, Citation2022). Thus, it is conceivable that people from a culture with a low level of uncertainty avoidance may be less sensitive to privacy issues in their decision-making. Indeed, there is evidence showing that uncertainty avoidance could affect the impact of privacy concerns (Krasnova et al., Citation2012). Thus, compared with users in the US, the disclosure intention of users in China is less likely to be affected by concerns over privacy. In line with CPM theory, the collective boundary is more important than the personal boundary when managing privacy in customer service for Chinese users. As for the US users, both the personal boundary and collective boundary are critical rules to follow in order to protect privacy. This finding also reveals that the level of permeability may not be a key factor in the disclosure intention of Chinese users. In other words, the security threat or concern brought by disclosure is not a major consideration for Chinese users. Lowry et al. (Citation2011) pointed out that, compared with other countries, the obedient and cooperative nature of Chinese people in a collectivist culture results in their having less privacy awareness. This may explain our finding that the mediating role of privacy concerns for disclosure intention differs between the two countries. People with less privacy awareness tend to worry less about privacy issues. Accordingly, this indicates that privacy concerns may be less salient for Chinese users in the process of information disclosure to different service agents. This finding illustrates that the creation of personal boundaries via users’ permeability rules, namely, the degrees of privacy concerns, is more significant in regard to the US users’ disclosure intentions compared to the users in China. Countries with a low level of uncertainty avoidance may place less emphasis on the permeability rule in their privacy management. In conclusion, the permeability rule is an important indicator for distinguishing privacy management mechanisms in different countries.

This study has demonstrated that privacy concerns and boundary linkage significantly mediate the relationship between the agent identity and intention to use for both Chinese and American users in the high-sensitivity condition. This similarity may arise because China and the US are both AI superpowers (Apoorva, Citation2021), and the antecedents of AI technology acceptance tend to converge. It is thus reasonable to surmise that the mechanism of usage intention is similar between users from the two nations. Meanwhile, we found that the extent of the collective boundaries (i.e., boundary linkage) is extremely important to the disclosure and usage intentions in the two countries.

6. Implications and conclusions

The theoretical contributions of this study are threefold. This study extends the lines of research about CPM theory and information privacy by comparing agents with different identities. The three factors conceptualized by CPM theory (i.e., privacy concerns, boundary linkage and information sensitivity) can be used to identify different privacy boundaries when interacting with chatbots compared to interacting with human agents. The results show that privacy concerns and boundary linkage play different mediating roles between agent identity and usage intention as well as disclosure intention in a high-sensitivity condition, which provides new evidence for CPM theory in the context of human-machine interaction.

This study also contributes to the literature on human-machine communication by demonstrating that information sensitivity is a boundary condition of the CASA. The findings imply the important role of information sensitivity in establishing boundaries when managing personal privacy. We found no substantial differences between humans and chatbots in a low-sensitivity condition, which is consistent with the CASA. However, there is a significant difference between the two types of agents in a high-sensitivity condition. Moreover, our research design in manipulating the information sensitivity could provide methodological implications for scholars to conduct further research on this topic.

In addition, this study contributes to the literature by exploring the distinct degrees of boundary permeability between China and the US. The findings show that the thickness of the privacy boundary differs significantly. That is, privacy concerns can significantly affect the disclosure intention of American participants but have no significant effect on that of Chinese users. This supports Petronio’s (Citation2002) view of the privacy management process, which proposes that different countries attach different degrees of importance to managing private information. In this regard, the influence of privacy concerns in China may not be as strong as in the US.

This study has important practical implications for practitioners. In general, companies can utilize either chatbots or human agents for customer services that do not require users to divulge sensitive information. However, service providers can consider the use of chatbots if their service involves the disclosure of sensitive information from the users. In China, companies could use chatbots if customers are required to provide sensitive information and to use the service only on one occasion. If customers have to use the service for a long time, companies in both countries should consider not only reducing their privacy concerns but also encouraging boundary linkage when employing the agents. The companies can provide options for users to let them decide which agent to use. Lastly, in considering the significant role of boundary linkage in the two countries, chatbot providers could specify the third party who hosts information regarding their privacy policy.

To conclude, this study investigates how different privacy management mechanisms influence the intention to use and intention to disclose between customer service chatbots and human agents. Based on CPM theory, the results provided further evidence with regard to the influences of privacy concerns and boundary linkage on two behavioral intentions (i.e., the intention to use and intention to disclose). More specifically, in the Chinese sample, privacy concerns could not significantly influence the participants’ intention to disclose, but could significantly influence their intention to use. Boundary linkage could significantly influence both behavioral intentions of Chinese participants. In the US sample, both privacy concerns and boundary linkage had significant impacts on the disclosure and usage intention. In addition, our results indicate the moderating role of information sensitivity in the indirect relationships between agent identity and usage/disclosure intention via privacy concerns and boundary linkage. Only in the condition of high information sensitivity did participants have significantly greater privacy concerns and higher boundary linkage from the interaction with chatbot agents than the interaction with human agents; privacy concerns and boundary linkage further mediated the indirect relationships between agent identity and usage intention in both countries, while privacy concerns could only mediate the influence of agent identity on disclosure intention in the US sample. In short, through comparing agents with two identities and three privacy rules (i.e., privacy concerns, boundary linkage and information sensitivity), this work enriches the literature with regard to the CPM theory and information privacy. That is, when interacting with chatbots as opposed to human agents, the three variables outlined by CPM theory (privacy concerns, boundary linkage and information sensitivity) can be used to identify different privacy boundaries. 
In addition, this study contributes to the research on human-machine communication by illuminating the fact that information sensitivity is a significant boundary condition, adding discussion to studies on CASA. These findings have practical implications for practitioners in both China and the US.

7. Limitations and suggestions for future research

In this study, we have only examined the use of task-oriented service agents in the context of insurance consultation. As chatbot agents are used in various contexts, we suggest future studies explore this topic in other scenarios. Moreover, besides China and the US, it would be meaningful to further explore this topic in other cultures. In addition, our study relies on self-reported data, which may not reflect actual privacy management behaviors in a real-world setting. Future research could use actual privacy settings and data logs to measure those behaviors more accurately. Researchers can also explore the motivation for privacy protection and the underpinning psychological mechanisms when interacting with service agents.

Lastly, we referred to the uncertainty avoidance proposed by Hofstede to explain the results from the perspective of cultural differences between China and the United States. Aligned with previous studies (Widjaja et al., Citation2019; Wu et al., Citation2012), we assumed that the cultural dimensions in Hofstede’s theory are constructs at a national level. Besides Hofstede’s cultural theory, CPM theory also argues that the nation-level cultural differences may lead to differences in users’ psychological mechanisms related to privacy issues (Petronio, Citation2002). Given this, we did not measure cultural constructs to capture cultural dimensions at the individual level in this research. We suggest that future studies could consider individual cultural constructs to further deepen the knowledge on people’s privacy-related decisions.

Ethical approval

The study was approved by the Human Subjects Ethics Sub-Committee of City University of Hong Kong (Application No.: H002620).

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

Notes on contributors

Yu-li Liu

Yu-li Liu is Distinguished Professor of School of Journalism and Communication at Shanghai University. Her research interests include communications law and policy, AI ethics and governance, etc. Currently, she is an associate editor of Telecommunications Policy and member of the editorial board of several notable journals.

Wenjia Yan

Wenjia Yan is a PhD student in the Department of Media and Communication at City University of Hong Kong. Wenjia’s work focuses on information privacy and human-machine communication.

Bo Hu

Bo Hu is a PhD student in the Department of Media and Communication at City University of Hong Kong. His research interests include human-AI interaction and computer-mediated communication.

Zhi Lin

Zhi Lin is a PhD student in the School of Journalism and Media at the University of Texas at Austin. Her research interests include computer-mediated communication and health communication.

Yunya Song

Yunya Song is a Professor in the School of Communication and Director of AI and Media Research Lab, Hong Kong Baptist University. Her research on digital media and political communication has appeared in, among other journals, New Media & Society, International Journal of Press/Politics, and Computers in Human Behavior.

References

- Akshay, G., & Labdhi, J. (2023, June 7). Privacy concerns over AI-based chatbots. https://ciso.economictimes.indiatimes.com/news/privacy-concerns-over-ai-based-chatbots/97432534

- Apoorva, K. (2021, February 19). China will become the AI superpower surpassing U.S. How long? https://www.analyticsinsight.net/china-will-become-the-ai-superpower-surpassing-u-s-how-long/

- Balapour, A., Nikkhah, H. R., & Sabherwal, R. (2020). Mobile application security: Role of perceived privacy as the predictor of security perceptions. International Journal of Information Management, 52, 102063. https://doi.org/10.1016/j.ijinfomgt.2019.102063

- Bansal, G., Zahedi, F., & Gefen, D. (2010). The impact of personal dispositions on information sensitivity, privacy concern and trust in disclosing health information online. Decision Support Systems, 49(2), 138–150. https://doi.org/10.1016/j.dss.2010.01.010

- Bawack, R. E., Wamba, S. F., & Carillo, K. D. A. (2021). Exploring the role of personality, trust, and privacy in customer experience performance during voice shopping: Evidence from SEM and fuzzy set qualitative comparative analysis. International Journal of Information Management, 58, 102309. https://doi.org/10.1016/j.ijinfomgt.2021.102309

- Beattie, A., Edwards, A. P., & Edwards, C. (2020). A bot and a smile: Interpersonal impressions of chatbots and humans using emoji in computer-mediated communication. Communication Studies, 71(3), 409–427. https://doi.org/10.1080/10510974.2020.1725082

- Bellman, S., Johnson, E. J., Kobrin, S. J., & Lohse, G. L. (2004). International differences in information privacy concerns: A global survey of consumers. The Information Society, 20(5), 313–324. https://doi.org/10.1080/01972240490507956

- Bickmore, T., & Cassell, J. (2001). Relational agents: A model and implementation of building user trust. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 396–403). ACM Press. https://doi.org/10.1145/365024.365304

- Büchi, M., Fosch-Villaronga, E., Lutz, C., Tamò-Larrieux, A., & Velidi, S. (2023). Making sense of algorithmic profiling: User perceptions on Facebook. Information, Communication & Society, 26(4), 809–825. https://doi.org/10.1080/1369118X.2021.1989011

- Carolus, A., Schmidt, C., Muench, R., Mayer, L., & Schneider, F. (2018). Pink stinks—at least for men. In M. Kurosu (Ed.), Human-computer interaction. Interaction in context. HCI 2018. Lecture notes in computer science (Vol. 10902). Springer. https://doi.org/10.1007/978-3-319-91244-8_40

- Child, J. T., Pearson, J. C., & Petronio, S. (2009). Blogging, communication, and privacy management: Development of the blogging privacy management measure. Journal of the American Society for Information Science and Technology, 60(10), 2079–2094. https://doi.org/10.1002/asi.21122

- Child, J. T., & Petronio, S. (2011). Unpacking the paradoxes of privacy in CMC relationships: The challenges of blogging and relational communication on the Internet. In K. B. Wright & L. M. Webb (Eds.), Computer-mediated communication in personal relationships (pp. 21–40). Peter Lang.

- de Cosmo, L. M., Piper, L., & Di Vittorio, A. (2021). The role of attitude toward chatbots and privacy concern on the relationship between attitude toward mobile advertising and behavioral intent to use chatbots. Italian Journal of Marketing, 2021(1–2), 83–102. https://doi.org/10.1007/s43039-021-00020-1

- Dinev, T., Xu, H., Smith, J. H., & Hart, P. (2013). Information privacy and correlates: An empirical attempt to bridge and distinguish privacy-related concepts. European Journal of Information Systems, 22(3), 295–316. https://doi.org/10.1057/ejis.2012.23

- Forbes. (2021, November 5). Council post: 15 Ways to leverage AI in customer service. https://www.forbes.com/sites/forbesbusinesscouncil/2021/07/22/15-ways-to-leverage-ai-in-customer-service/

- Gartner. (2019, November 5). Gartner predicts the future of AI technologies. Gartner. https://www.gartner.com/smarterwithgartner/gartner-predicts-the-future-of-ai-technologies

- Giroux, M., Kim, J., Lee, J. C., & Park, J. (2022). Artificial intelligence and declined guilt: Retailing morality comparison between human and AI. Journal of Business Ethics, 178(4), 1027–1041. https://doi.org/10.1007/s10551-022-05056-7

- Go, E., & Sundar, S. S. (2019). Humanizing chatbots: The effects of visual, identity and conversational cues on humanness perceptions. Computers in Human Behavior, 97, 304–316. https://doi.org/10.1016/j.chb.2019.01.020

- Grand View Research. (2021). Chatbot market size, share & growth report, 2022–2030. https://www.grandviewresearch.com/industry-analysis/chatbot-market

- Guzman, A. L., & Lewis, S. C. (2020). Artificial intelligence and communication: A human–machine communication research agenda. New Media & Society, 22(1), 70–86. https://doi.org/10.1177/1461444819858691

- Ha, Q.-A., Chen, J. V., Uy, H. U., & Capistrano, E. P. (2021). Exploring the privacy concerns in using intelligent virtual assistants under perspectives of information sensitivity and anthropomorphism. International Journal of Human–Computer Interaction, 37(6), 512–527. https://doi.org/10.1080/10447318.2020.1834728

- Hayes, A. F. (2013). Introduction to mediation, moderation, and conditional process analysis: A regression-based approach (1st ed.). The Guilford Press.

- Ho, A., Hancock, J., & Miner, A. S. (2018). Psychological, relational, and emotional effects of self-disclosure after conversations with a chatbot. Journal of Communication, 68(4), 712–733. https://doi.org/10.1093/joc/jqy026

- Hofstede Insights. (2022). Country comparison. https://www.hofstede-insights.com/country-comparison/

- Hofstede, G. (2001). Culture’s consequences: Comparing values, behaviors, institutions, and organizations across nations. Sage.

- Hong, S. J., & Cho, H. (2023). Privacy management and health information sharing via contact tracing during the COVID-19 pandemic: A hypothetical study on AI-based technologies. Health Communication, 38(5), 913–924. https://doi.org/10.1080/10410236.2021.1981565

- Hosek, A. M., & Thompson, J. (2009). Communication privacy management and college instruction: Exploring the rules and boundaries that frame instructor private disclosures. Communication Education, 58(3), 327–349. https://doi.org/10.1080/03634520902777585

- Hu, B., Liu, Y. L., & Yan, W. (2023). Should I scan my face? The influence of perceived value and trust on Chinese users’ intention to use facial recognition payment. Telematics and Informatics, 78, 101951. https://doi.org/10.1016/j.tele.2023.101951

- Ischen, C., Araujo, T., van Noort, G., Voorveld, H., & Smit, E. (2020). “I am here to assist you today”: The role of entity, interactivity and experiential perceptions in chatbot persuasion. Journal of Broadcasting & Electronic Media, 64(4), 615–639. https://doi.org/10.1080/08838151.2020.1834297

- Ishii, K. (2019). Comparative legal study on privacy and personal data protection for robots equipped with artificial intelligence: Looking at functional and technological aspects. AI & Society, 34(3), 509–533. https://doi.org/10.1007/s00146-017-0758-8

- Kala Kamdjoug, J. R., Wamba-Taguimdje, S.-L., Wamba, S. F., & Kake, I. B. (2021). Determining factors and impacts of the intention to adopt mobile banking app in Cameroon: Case of SARA by Afriland First Bank. Journal of Retailing and Consumer Services, 61, 102509. https://doi.org/10.1016/j.jretconser.2021.102509

- Kang, H., & Oh, J. (2023). Communication privacy management for smart speaker use: Integrating the role of privacy self-efficacy and the multidimensional view. New Media & Society, 25(5), 1153–1175. https://doi.org/10.1177/14614448211026611

- Kelly, R. (2020, November 13). Ticketmaster fined £1.25 million over 2018 chatbot data breach. Digit. https://www.digit.fyi/ticketmaster-fined-over-2018-chatbot-data-breach/

- Krasnova, H., Veltri, N. F., & Günther, O. (2012). Self-disclosure and privacy calculus on social networking sites: The role of culture: Intercultural dynamics of privacy calculus. Wirtschaftsinformatik, 54(3), 123–133. https://doi.org/10.1007/s11576-012-0323-5

- LaBrie, R. C., Steinke, G. H., Li, X., & Cazier, J. A. (2018). Big data analytics sentiment: US-China reaction to data collection by business and government. Technological Forecasting and Social Change, 130, 45–55. https://doi.org/10.1016/j.techfore.2017.06.029

- Lappeman, J., Marlie, S., Johnson, T., & Poggenpoel, S. (2023). Trust and digital privacy: Willingness to disclose personal information to banking chatbot services. Journal of Financial Services Marketing, 28(2), 337–357. https://doi.org/10.1057/s41264-022-00154-z

- Lee, K.-F. (2018, September 18). AI has far-reaching consequences for emerging markets. South China Morning Post. https://www.scmp.com/tech/innovation/article/2164597/opinion-ai-has-far-reaching-consequences-emerging-markets

- Li, K., Lin, Z., & Wang, X. (2015). An empirical analysis of users’ privacy disclosure behaviors on social network sites. Information & Management, 52(7), 882–891. https://doi.org/10.1016/j.im.2015.07.006

- Litt, E. (2013). Understanding social network site users’ privacy tool use. Computers in Human Behavior, 29(4), 1649–1656. https://doi.org/10.1016/j.chb.2013.01.049

- Liu, B., & Sundar, S. S. (2018). Should machines express sympathy and empathy? Experiments with a health advice chatbot. Cyberpsychology, Behavior, and Social Networking, 21(10), 625–636. https://doi.org/10.1089/cyber.2018.0110

- Liu, Z., & Wang, X. (2018). How to regulate individuals’ privacy boundaries on social network sites: A cross-cultural comparison. Information & Management, 55(8), 1005–1023. https://doi.org/10.1016/j.im.2018.05.006

- Liu, Y., Yan, W., & Hu, B. (2021). Resistance to facial recognition payment in China: The influence of privacy-related factors. Telecommunications Policy, 45(5), 102155. https://doi.org/10.1016/j.telpol.2021.102155

- Lowry, P. B., Cao, J., & Everard, A. (2011). Privacy concerns versus desire for interpersonal awareness in driving the use of self-disclosure technologies: The case of instant messaging in two cultures. Journal of Management Information Systems, 27(4), 163–200. https://doi.org/10.2753/MIS0742-1222270406

- Lu, J., Yu, C., Liu, C., & Wei, J. (2017). Comparison of mobile shopping continuance intention between China and USA from an espoused cultural perspective. Computers in Human Behavior, 75, 130–146. https://doi.org/10.1016/j.chb.2017.05.002

- Ma, R., Huang, L., Zhao, D., & Xu, L. (2015). Commercial health insurance – a new power to push China healthcare reform forward? Value in Health, 18(3), A103. https://doi.org/10.1016/j.jval.2015.03.601

- Majstorovic, M. (2023, February 16). Conversational AI and the future of customer service. https://frontlogix.com/conversational-ai-and-the-future-of-customer-service/

- Malhotra, N. K., Kim, S. S., & Agarwal, J. (2004). Internet users’ information privacy concerns (IUIPC): The construct, the scale, and a causal model. Information Systems Research, 15(4), 336–355. https://doi.org/10.1287/isre.1040.0032

- Marche, S. (2021, July 23). The chatbot problem. The New Yorker. https://www.newyorker.com/culture/cultural-comment/the-chatbot-problem

- Medeiros, M. (2017, October 3). Chatbots gone wild? Some ethical considerations. Social Media Law Bulletin. https://www.socialmedialawbulletin.com/2017/10/chatbots-gone-wild-ethical-considerations/

- Meng, J., & Dai, Y. (2021). Emotional support from AI chatbots: Should a supportive partner self-disclose or not? Journal of Computer-Mediated Communication, 26(4), 207–222. https://doi.org/10.1093/jcmc/zmab005

- Milberg, S. J., Smith, H. J., & Burke, S. J. (2000). Information privacy: Corporate management and national regulation. Organization Science, 11(1), 35–57. https://doi.org/10.1287/orsc.11.1.35.12567

- Mothersbaugh, D. L., Foxx, W. K., Beatty, S. E., & Wang, S. (2012). Disclosure antecedents in an online service context: The role of sensitivity of information. Journal of Service Research, 15(1), 76–98. https://doi.org/10.1177/1094670511424924

- Mou, Y., Zhang, L., Wu, Y., Pan, S., & Ye, X. (2023). Does self-disclosing to a robot induce liking for the robot? Testing the disclosure and liking hypotheses in human–robot interaction. International Journal of Human–Computer Interaction, 1–12. https://doi.org/10.1080/10447318.2022.2163350

- Nass, C., Steuer, J., & Tauber, E. R. (1994). Computers are social actors. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 72–78). https://doi.org/10.1145/191666.191703

- Osatuyi, B., Passerini, K., Ravarini, A., & Grandhi, S. A. (2018). “Fool me once, shame on you … then, I learn.” An examination of information disclosure in social networking sites. Computers in Human Behavior, 83, 73–86. https://doi.org/10.1016/j.chb.2018.01.018

- Petronio, S. (1991). Communication boundary management: A theoretical model of managing disclosure of private information between marital couples. Communication Theory, 1(4), 311–335. https://doi.org/10.1111/j.1468-2885.1991.tb00023.x

- Petronio, S. (2002). Boundaries of privacy: Dialectics of disclosure. SUNY Press.

- Petronio, S. (2010). Communication privacy management theory: What do we know about family privacy regulation? Journal of Family Theory & Review, 2(3), 175–196. https://doi.org/10.1111/j.1756-2589.2010.00052.x

- Petronio, S., & Caughlin, J. P. (2006). Communication privacy management theory: Understanding families. In D. O. Braithwaite & L. A. Baxter (Eds.), Engaging theories in family communication: Multiple perspectives (pp. 35–49). Sage Publications, Inc. https://doi.org/10.4135/9781452204420.n3

- Petronio, S., & Child, J. T. (2020). Conceptualization and operationalization: Utility of communication privacy management theory. Current Opinion in Psychology, 31, 76–82. https://doi.org/10.1016/j.copsyc.2019.08.009

- Przegalinska, A., Ciechanowski, L., Stroz, A., Gloor, P., & Mazurek, G. (2019). In bot we trust: A new methodology of chatbot performance measures. Business Horizons, 62(6), 785–797. https://doi.org/10.1016/j.bushor.2019.08.005

- Reeves, B., & Nass, C. (1996). The media equation: How people treat computers, television, and new media like real people and places. Cambridge University Press.

- Rhee, C. E., & Choi, J. (2020). Effects of personalization and social role in voice shopping: An experimental study on product recommendation by a conversational voice agent. Computers in Human Behavior, 109, 106359. https://doi.org/10.1016/j.chb.2020.106359

- Sannon, S., Stoll, B., DiFranzo, D., Jung, M. F., & Bazarova, N. N. (2020). I just shared your responses. Extending communication privacy management theory to interactions with conversational agents. Proceedings of the ACM on Human-Computer Interaction, 4(GROUP), 1–18. https://doi.org/10.1145/3375188

- ServiceBell. (2022). 53 Chatbot statistics for 2022: Usage, demographics, trends. https://getservicebell.com/post/chatbot-statistics

- Sharma, S., & Crossler, R. E. (2014). Disclosing too much? Situational factors affecting information disclosure in social commerce environment. Electronic Commerce Research and Applications, 13(5), 305–319. https://doi.org/10.1016/j.elerap.2014.06.007

- Sheehan, K. B., & Hoy, M. G. (2000). Dimensions of privacy concern among online consumers. Journal of Public Policy & Marketing, 19(1), 62–73. https://doi.org/10.1509/jppm.19.1.62.16949

- Son, J.-Y., & Kim, S. S. (2008). Internet users’ information privacy-protective responses: A taxonomy and a nomological model. MIS Quarterly, 32(3), 503–529. https://doi.org/10.2307/25148854

- Song, M., Xing, X., Duan, Y., Cohen, J., & Mou, J. (2022). Will artificial intelligence replace human customer service? The impact of communication quality and privacy risks on adoption intention. Journal of Retailing and Consumer Services, 66, 102900. https://doi.org/10.1016/j.jretconser.2021.102900